We will start with the PRISM average monthly minimum-temperature raster dataset for 2016 with coverage for the continental United States. The raster is provided by the PRISM Climate Group at Oregon State University, with additional rasters available at http://www.prism.oregonstate.edu/mtd/.

On the command line, navigate to the PRISM directory as follows:

> cd C:\postgis_cookbook\data\chap05\PRISM

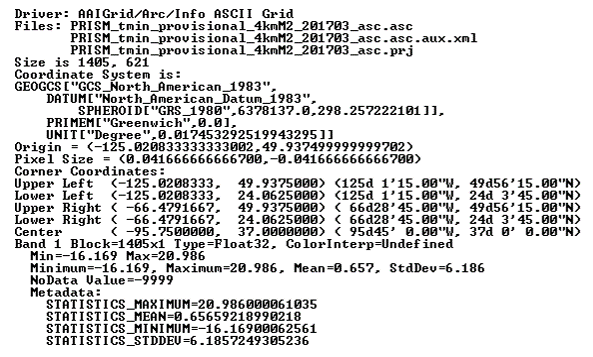

Let us spot check one of the PRISM rasters with the GDAL utility gdalinfo. It is always a good practice to inspect at least one raster to get an idea of the metadata and ensure that the raster does not have any issues. This can be done using the following command:

> gdalinfo PRISM_tmin_provisional_4kmM2_201703_asc.asc

The gdalinfo output is as follows:

The gdalinfo output reveals that the raster has no issues, as evidenced by the Corner Coordinates, Pixel Size, Band, and Coordinate System being unempty.

Looking through the metadata, we find that the metadata about the spatial reference system indicates that raster uses the NAD83 coordinate system. We can double-check this by searching for the details of NAD83 in the spatial_ref_sys table:

SELECT srid, auth_name, auth_srid, srtext, proj4text

FROM spatial_ref_sys WHERE proj4text LIKE '%NAD83%'

Comparing the text of srtext to the PRISM raster's metadata spatial attributes, we find that the raster is in EPSG (SRID 4269).

You can load the PRISM rasters into the chp05.prism table with raster2pgsql, which will import the raster files to the database in a similar manner as the shp2pgsql command:

> raster2pgsql -s 4269 -t 100x100 -F -I -C -Y .\PRISM_tmin_provisional_4kmM2_*_asc.asc

chp05.prism | psql -d postgis_cookbook -U me

The raster2pgsql command is called with the following flags:

- -s: This flag assigns SRID4269 to the imported rasters.

- -t: This flag denotes the tile size. It chunks the imported rasters into smaller and more manageable pieces; each record added to the table will be, at most, 100 x 100 pixels.

- -F: This flag adds a column to the table and fills it with the raster's filename.

- -I: This flag creates a GIST spatial index on the table's raster column.

- -C: This flag applies the standard set of constraints on the table. The standard set of constraints includes checks for dimension, scale, skew, upper-left coordinate, and SRID.

- -Y: This flag instructs raster2pgsql to use COPY statements instead of INSERT statements. COPY is typically faster than INSERT.

There is a reason why we passed -F to raster2pgsql. If you look at the filenames of the PRISM rasters, you'll note the year and month. So, let's convert the value in the filename column to a date in the table:

ALTER TABLE chp05.prism ADD COLUMN month_year DATE; UPDATE chp05.prism SET month_year = ( SUBSTRING(split_part(filename, '_', 5), 0, 5) || '-' || SUBSTRING(split_part(filename, '_', 5), 5, 4) || '-01' ) :: DATE;

This is all that needs to be done with the PRISM rasters for now.

Now, let's import a Shuttle Radar Topography Mission (SRTM) raster. The SRTM raster is from the SRTM that was conducted by the NASA Jet Propulsion Laboratory in February, 2000. This raster and others like it are available at: http://dds.cr.usgs.gov/srtm/version2_1/SRTM1/.

Change the current directory to the SRTM directory:

> cd C:\postgis_cookbook\data\chap05\SRTM

Make sure you spot check the SRTM raster with gdalinfo to ensure that it is valid and has a value for Coordinate System. Once checked, import the SRTM raster into the chp05.srtm table:

> raster2pgsql -s 4326 -t 100x100 -F -I -C -Y N37W123.hgt chp05.srtm | psql -d postgis_cookbook

We use the same raster2pgsql flags for the SRTM raster as those for the PRISM rasters.

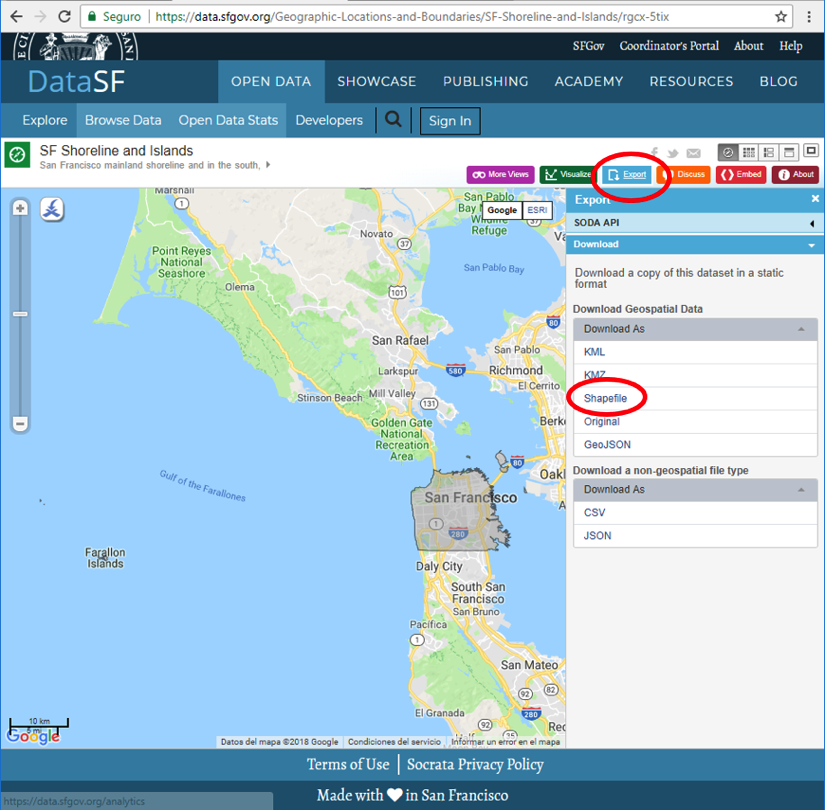

We also need to import a shapefile of San Francisco provided by the City and County of San Francisco, available with the book's dataset files, or the one found on the following link, after exporting the data to a shapefile:

https://data.sfgov.org/Geographic-Locations-and-Boundaries/SF-Shoreline-and-Islands/rgcx-5tix

The San Francisco's boundaries from the book's files will be used in many of the follow-up recipes, and it must be loaded to the database as follows:

> cd C:\postgis_cookbook\data\chap05\SFPoly > shp2pgsql -s 4326 -I sfpoly.shp chp05.sfpoly | psql -d postgis_cookbook -U me