At the moment, our API container can communicate with our Elasticsearch container because, under the hood, they're connected to the same network, on the same host machine:

    $ docker network ls
    NETWORK ID          NAME                DRIVER              SCOPE
    d764e66872cf        bridge              bridge              local
    61bc0ca692fc        host                host                local
    bdbbf199a4fe        none                null                local

    $ docker network inspect bridge --format='{{range $index, $container := .Containers}}{{.Name}} {{end}}'
    elasticsearch hobnob

However, if these containers are deployed on separate machines, using different networks, how can they communicate with each other?

Our API container must obtain network information about the Elasticsearch container so that it can send requests to it. One way to do this is by using a service discovery tool.

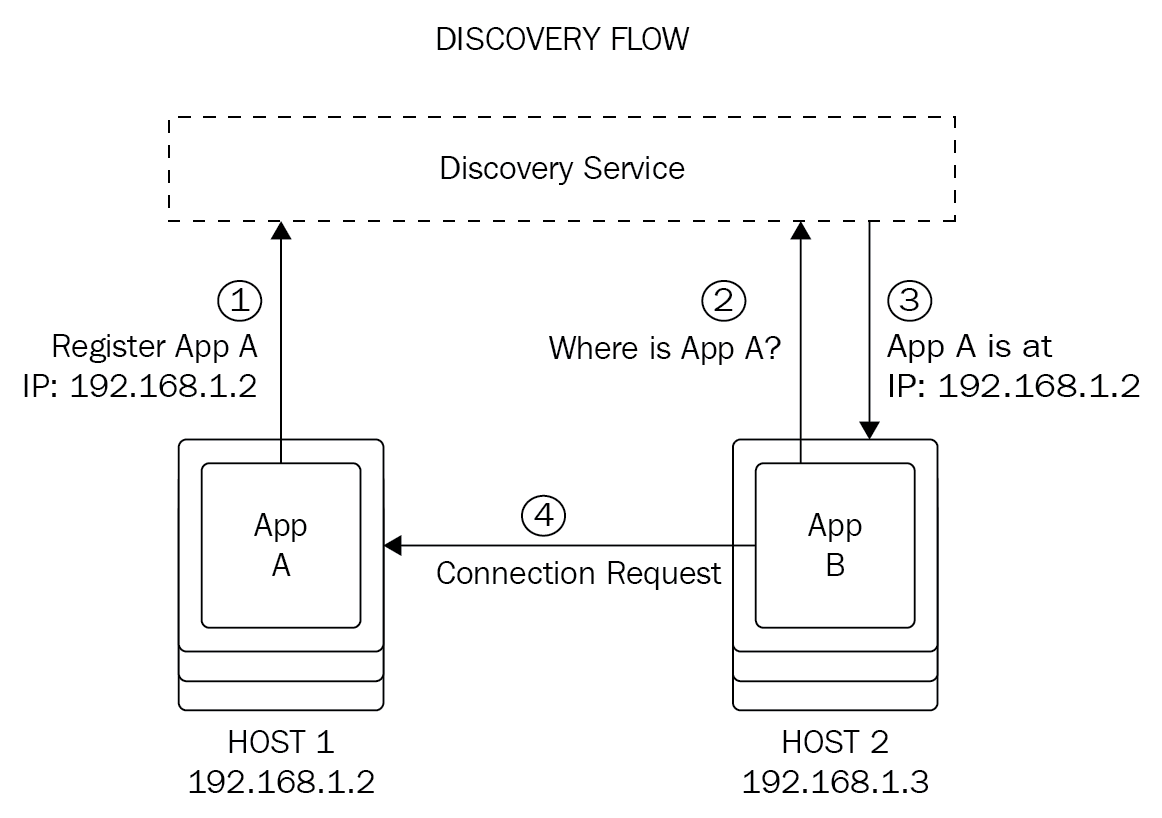

With service discovery, whenever a new container (running a service) starts, it registers itself with the Discovery Service, supplying details about itself such as its IP address. The Discovery Service then stores this information in a simple key-value store.

Each service should also report its status to the Discovery Service at regular intervals, so that the Discovery Service always holds an up-to-date view of that service.
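The registration and status-update steps can be sketched with a toy in-memory registry. This is only an illustration, not how any particular tool implements it: the class name `DiscoveryRegistry`, its methods, the time-to-live (TTL) expiry rule, and the address `10.0.0.5` are all assumptions made for the example.

```python
import time


class DiscoveryRegistry:
    """Toy in-memory discovery service (hypothetical, for illustration only)."""

    def __init__(self, ttl_seconds=10.0, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock   # injectable so expiry can be exercised in tests
        self._store = {}      # key-value store: name -> (address, last_seen)

    def register(self, name, address):
        # A new container announces itself, including its IP address.
        self._store[name] = (address, self._clock())

    def heartbeat(self, name):
        # A running service refreshes its entry to signal it is still up.
        if name not in self._store:
            raise KeyError(f"{name} is not registered")
        address, _ = self._store[name]
        self._store[name] = (address, self._clock())

    def is_alive(self, name):
        # An entry counts as stale once no heartbeat arrived within the TTL.
        entry = self._store.get(name)
        if entry is None:
            return False
        _, last_seen = entry
        return self._clock() - last_seen <= self._ttl
```

A service would call `register("elasticsearch", "10.0.0.5")` once at startup and then `heartbeat("elasticsearch")` on a timer. If the heartbeats stop, `is_alive` starts returning `False` after the TTL elapses, which is one simple way for a registry to avoid handing out stale addresses.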

When a new service starts, it queries the Discovery Service for information about the services it needs to connect to, such as their IP addresses. The Discovery Service retrieves this information from its key-value store and returns it to the new service.
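From the consuming service's side, the lookup amounts to reading a key and building a connection URL from the result. The sketch below uses a plain dictionary to stand in for the key-value store. The `services/<name>` key layout, the `resolve` helper, the stored `port` field, and the address `10.0.0.5` are hypothetical choices for illustration; real tools define their own key schemes and client APIs.

```python
def resolve(store, service_name):
    """Return the base URL for a registered service, or raise LookupError."""
    key = f"services/{service_name}"   # assumed key layout
    entry = store.get(key)
    if entry is None:
        raise LookupError(f"no registration found for {service_name!r}")
    return f"http://{entry['ip']}:{entry['port']}"


# Hypothetical contents of the discovery service's key-value store.
store = {
    "services/elasticsearch": {"ip": "10.0.0.5", "port": 9200},
}

# The API service asks where Elasticsearch lives before sending requests.
elasticsearch_url = resolve(store, "elasticsearch")
```

Raising an error for an unregistered name, rather than returning a default, makes a missing dependency visible at startup instead of surfacing later as a failed request.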

Therefore, when we deploy our application as a cluster, we can employ a service discovery tool to facilitate our API's communication with our Elasticsearch service.

Popular service discovery tools include the following:

- etcd, by CoreOS (https://github.com/coreos/etcd)

- Consul, by HashiCorp (https://www.consul.io/)

- ZooKeeper, originally developed at Yahoo, now an Apache Software Foundation project (https://zookeeper.apache.org/)