We have discussed many topics on increasing the performance of our application. Now, let's discuss its scalability and availability. With time, the traffic on our application can increase to thousands of users at a time. If our application runs on a single server, performance will be badly affected. It is also not a good idea to keep the application running at a single point, because if this server goes down, the complete application goes down with it.

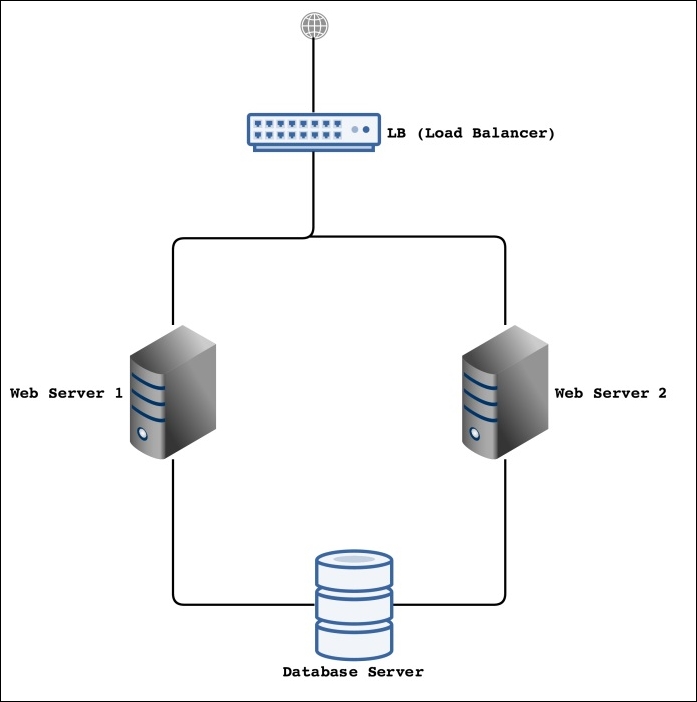

To make our application more scalable and better in availability, we can use an infrastructure setup in which we can host our application on multiple servers. Also, we can host different parts of the application on different servers. To better understand, take a look at the following diagram:

This is a very basic design for the infrastructure. Let's talk about its different parts and what operations will be performed by each part and server.

Note

It is possible that only the Load Balancer (LB) is connected to the public Internet, while the remaining parts are connected to each other through a private network in a rack. If a rack is available, this is a very good setup, because all communication between the servers stays on a private network and is therefore better protected.

In the preceding diagram, we have two web servers. There can be as many web servers as needed, and they can easily be connected to the LB. The web servers host our actual application, which runs on NGINX or Apache and PHP 7. All the performance tunings covered in this chapter can be used on these web servers. These servers do not need to listen at port 80. It is better for the web servers to listen at another port so that they are not reached directly from browsers.

The database server is mainly used for the database, where MySQL or Percona Server can be installed. However, one of the problems in a multiserver setup is storing session data in a single place, so that every web server sees the same sessions. For this purpose, we can also install the Redis server on the database server, which will handle our application's session data.

The preceding infrastructure design is not a final or perfect design. It is only meant to give an idea of multiserver application hosting. It has room for a lot of improvement, such as adding another load balancer, more web servers, and servers for a database cluster.

The first part is the load balancer (LB). The purpose of the load balancer is to divide the traffic among the web servers according to the load on each web server.

For the load balancer, we can use HAProxy, which is widely used for this purpose. HAProxy also checks the health of each web server, and if a web server is down, it automatically redirects that server's traffic to the other available web servers. In this setup, only the LB listens at port 80.

We don't want to burden our web servers (in our case, two) with encrypting and decrypting SSL traffic, so we will terminate SSL at the HAProxy server. When the LB receives an SSL request, it terminates SSL and sends a plain HTTP request to one of the web servers. When it receives the response, HAProxy encrypts it and sends it back to the client. This way, instead of both web servers handling SSL encryption/decryption, only the single LB server does.

In the preceding infrastructure, we placed a load balancer in front of our web servers, which balances the load across the servers, checks the health of each server, and terminates SSL. We will now install HAProxy and configure it to do all of this.

We will install HAProxy on Debian/Ubuntu. As of writing this book, HAProxy 1.6 is the latest stable version available. Perform the following steps to install HAProxy:

- First, update the system cache by issuing the following command in the terminal:

sudo apt-get update

- Next, install HAProxy by entering the following command in the terminal:

sudo apt-get install haproxy

This will install HAProxy on the system.

- Now, confirm the HAProxy installation by issuing the following command in the terminal:

haproxy -v

If the output is as in the preceding screenshot, then congratulations! HAProxy is installed successfully.

Now, it's time to use HAProxy. For this purpose, we have the following three servers:

- The first is a load balancer server on which HAProxy is installed. We will call it LB. For this book's purpose, the IP of the LB server is 10.211.55.1. This server will listen at port 80, and all HTTP requests will come to this server. This server also acts as a frontend server as all the requests to our application will come to this server.

- The second is a web server, which we will call Web1. NGINX, PHP 7, and MySQL or Percona Server are installed on it. The IP of this server is 10.211.55.2. This server can listen at port 80 or at any other port. We will set it to listen at port 8080.

- The third is a second web server, which we will call Web2, with the IP 10.211.55.3. It has the same setup as the Web1 server and will also listen at port 8080.

The Web1 and Web2 servers are also called backend servers. First, let's configure the LB or frontend server to listen at port 80.

Open the haproxy.cfg file located at /etc/haproxy/ and add the following lines at the end of the file:

    frontend http
        bind *:80
        mode http
        default_backend web-backend

In the preceding code, we set HAProxy to listen at the HTTP port 80 on any IP address, either the local loopback IP 127.0.0.1 or the public IP. Then, we set the default backend.

Now, we will add two backend servers. In the same file, at the end, place the following code:

    backend web-backend
        mode http
        balance roundrobin
        option forwardfor
        server web1 10.211.55.2:8080 check
        server web2 10.211.55.3:8080 check

In the preceding configuration, we added two servers to the web backend. The reference name for the backend is web-backend, which must match the name given to default_backend in the frontend configuration. Both our web servers listen at port 8080, so we specified this port in the definition of each web server. We also added check at the end of each definition, which tells HAProxy to check the server's health.
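Because every backend entry follows the same pattern, the server lines can be generated rather than typed by hand when more web servers are added. The following is only a minimal sketch: the function name backend_lines is hypothetical, the web1, web2 naming simply continues this chapter's convention, and the addresses are the example IPs used here.

```shell
# backend_lines PORT IP... : print one HAProxy "server" line per address.
# Hypothetical helper for illustration; servers are numbered web1, web2, ...
backend_lines() {
    port=$1
    shift
    n=1
    for ip in "$@"; do
        printf '    server web%d %s:%s check\n' "$n" "$ip" "$port"
        n=$((n + 1))
    done
}

# Print the two server lines used in this chapter.
backend_lines 8080 10.211.55.2 10.211.55.3
```

The output can be pasted into the backend block. Adding a third web server then only means adding one more address to the call.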

Now, restart HAProxy by issuing the following command in the terminal:

sudo service haproxy restart
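A typo in haproxy.cfg can prevent HAProxy from starting, so it may be worth validating the file before restarting. The sketch below assumes HAProxy's check mode (the -c option together with -f for the file), which parses the configuration without starting the proxy. The function name check_and_restart is hypothetical.

```shell
# check_and_restart CFG: restart HAProxy only if CFG passes the syntax check.
# Hypothetical helper; "haproxy -c -f FILE" validates a configuration file
# without starting the proxy.
check_and_restart() {
    cfg=$1
    if sudo haproxy -c -f "$cfg"; then
        sudo service haproxy restart
    else
        echo "Configuration check failed; HAProxy was not restarted" >&2
        return 1
    fi
}

# Usage (run on the LB server):
# check_and_restart /etc/haproxy/haproxy.cfg
```

If the check fails, the running HAProxy instance is left untouched and keeps serving traffic with its previous configuration.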

Now, enter the IP or hostname of the LB server in the browser, and our web application page will be displayed either from Web1 or Web2.

Now, disable one of the web servers and reload the page. The application will still work fine, because HAProxy automatically detects that one of the web servers is down and redirects the traffic to the other web server.
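The behavior described above can be pictured with a small simulation. This is a toy model only, not HAProxy's actual implementation: it rotates through the servers that are marked as up and skips the ones marked as down. The function name route_requests and the name:state input format are made up for this sketch.

```shell
# route_requests COUNT NAME:STATE... : toy model of round-robin balancing.
# STATE is "up" or "down" (a made-up input format for this sketch).
# This only illustrates the idea; it is not how HAProxy is implemented.
route_requests() {
    count=$1
    shift
    healthy=""
    for s in "$@"; do
        case $s in
            *:up) healthy="$healthy ${s%%:*}" ;;  # keep only servers marked up
        esac
    done
    # Replace the argument list with the healthy server names.
    set -- $healthy
    if [ $# -eq 0 ]; then
        echo "no healthy servers" >&2
        return 1
    fi
    i=0
    while [ "$i" -lt "$count" ]; do
        # Use the first server, then rotate it to the back of the list.
        current=$1
        shift
        set -- "$@" "$current"
        i=$((i + 1))
        echo "request $i -> $current"
    done
}

echo "Both servers up:"
route_requests 4 web1:up web2:up
echo "Web2 stopped:"
route_requests 4 web1:up web2:down
```

With both servers up, the requests alternate between web1 and web2. With web2 marked as down, every request goes to web1.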

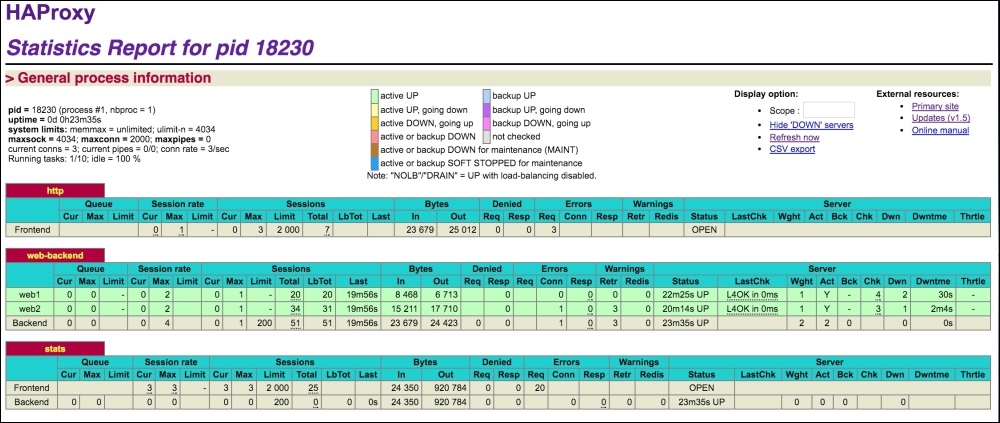

HAProxy also provides a browser-based stats page. It provides complete monitoring information about the LB and all the backends. To enable stats, open haproxy.cfg and place the following code at the end of the file:

    listen stats
        bind *:1434
        stats enable
        stats uri /haproxy-stats
        stats auth phpuser:packtPassword

The stats are enabled at port 1434, which can be changed to any free port. The path of the stats page is set by stats uri and can be any path. The stats auth line sets the username and password for basic HTTP authentication. Save the file and restart HAProxy. Now, open the browser and enter the URL, such as 10.211.55.1:1434/haproxy-stats. The stats page will be displayed as follows:

In the preceding screenshot, each backend web server can be seen, including frontend information.

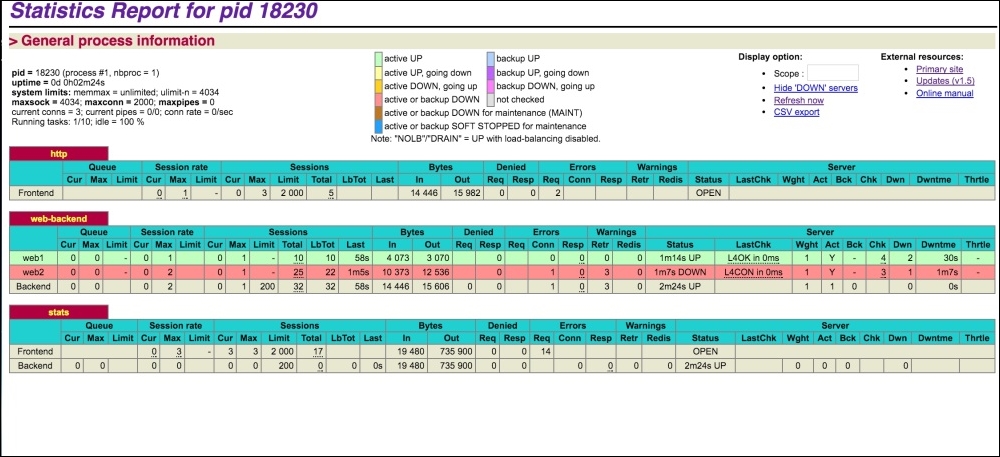

Also, if a web server is down, HAProxy stats will highlight the row for this web server, as can be seen in the following screenshot:

For our test, we stopped NGINX at our Web2 server and refreshed the stats page, and the Web2 server row in the backend section was highlighted.
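The same information can also be read from a script, because HAProxy can serve the stats page as CSV when ;csv is appended to the stats URI. The following sketch assumes the documented column order, in which the status is the 18th field; this should be checked against the HAProxy version in use. The function name server_status is hypothetical.

```shell
# server_status: read HAProxy stats CSV on stdin, print proxy, server, and status.
# Assumes the documented column order, where status is the 18th field.
server_status() {
    # Lines starting with "#" are the CSV header and are skipped.
    awk -F, '!/^#/ && NF >= 18 { print $1, $2, $18 }'
}

# Usage with this chapter's example address and credentials:
# curl -s -u phpuser:packtPassword 'http://10.211.55.1:1434/haproxy-stats;csv' | server_status
```

Each output line holds the proxy name, the server name, and its status, so a stopped web server can be spotted without opening the browser.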

Terminating SSL using HAProxy is pretty simple. We just add a binding for the SSL port 443 along with the location of the SSL certificate file. Open the haproxy.cfg file, edit the frontend block, and add the bind *:443 line, as in the following block:

    frontend http
        bind *:80
        bind *:443 ssl crt /etc/ssl/www.domain.crt
        mode http
        default_backend web-backend

Now, HAProxy also listens at port 443. When an SSL request arrives, HAProxy processes and terminates it there, so no HTTPS requests are sent to the backend servers. This way, the load of SSL encryption/decryption is removed from the web servers and is handled by the HAProxy server only. As SSL is terminated at the HAProxy server, the web servers do not need to listen at port 443, because HAProxy sends them regular HTTP requests.
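HAProxy expects the file given to crt to contain both the certificate and its private key in PEM format. If they are stored in separate files, they can be concatenated into one. The following is a minimal sketch; the function name make_haproxy_pem and the file names in the usage comment are placeholders.

```shell
# make_haproxy_pem CERT KEY OUT: combine certificate and private key into OUT.
# Hypothetical helper; all three paths are supplied by the caller.
make_haproxy_pem() {
    cert=$1
    key=$2
    out=$3
    # A newly created OUT gets owner-only permissions because it holds the key.
    ( umask 077 && cat "$cert" "$key" > "$out" )
}

# Usage (file names are placeholders):
# make_haproxy_pem cert.pem key.pem combined.pem
```

The crt option in the frontend block should then point to the combined file.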