Copyright © 2018 Packt Publishing

All rights reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, without the prior written permission of the publisher, except in the case of brief quotations embedded in critical articles or reviews.

Every effort has been made in the preparation of this book to ensure the accuracy of the information presented. However, the information contained in this book is sold without warranty, either express or implied. Neither the authors, nor Packt Publishing or its dealers and distributors, will be held liable for any damages caused or alleged to have been caused directly or indirectly by this book.

Packt Publishing has endeavored to provide trademark information about all of the companies and products mentioned in this book by the appropriate use of capitals. However, Packt Publishing cannot guarantee the accuracy of this information.

Commissioning Editor: Amey Varangaonkar

Acquisition Editor: Prachi Bisht

Content Development Editor: Eisha Dsouza

Technical Editor: Nirbhaya Shaji

Copy Editors: Laxmi Subramanian and Safis Editing

Project Coordinator: Kinjal Bari

Proofreader: Safis Editing

Indexer: Tejal Daruwale Soni

Graphics: Jisha Chirayil

Production Coordinator: Aparna Bhagat

First published: March 2018

Production reference: 1270318

Published by Packt Publishing Ltd.

Livery Place

35 Livery Street

Birmingham

B3 2PB, UK.

ISBN 978-1-78728-886-7

Mapt is an online digital library that gives you full access to over 5,000 books and videos, as well as industry leading tools to help you plan your personal development and advance your career. For more information, please visit our website.

Spend less time learning and more time coding with practical eBooks and Videos from over 4,000 industry professionals

Improve your learning with Skill Plans built especially for you

Get a free eBook or video every month

Mapt is fully searchable

Copy and paste, print, and bookmark content

Did you know that Packt offers eBook versions of every book published, with PDF and ePub files available? You can upgrade to the eBook version at www.PacktPub.com and as a print book customer, you are entitled to a discount on the eBook copy. Get in touch with us at service@packtpub.com for more details.

At www.PacktPub.com, you can also read a collection of free technical articles, sign up for a range of free newsletters, and receive exclusive discounts and offers on Packt books and eBooks.

Aaron Ploetz is the NoSQL Engineering Lead for Target, where his DevOps team supports Cassandra, MongoDB, Redis, and Neo4j. He has been named a DataStax MVP for Apache Cassandra three times, and has presented at multiple events, including the DataStax Summit and Data Day Texas. He earned a BS in Management/Computer Systems from the University of Wisconsin-Whitewater, and a MS in Software Engineering from Regis University. He and his wife, Coriene, live with their three children in the Twin Cities area.

Devram Kandhare has 4 years of experience of working with the SQL database—MySql and NoSql databases—MongoDB and DynamoDB. He has worked as database designer and developer. He has developed various projects using the Agile development model. He is experienced in building web-based applications and REST API.

Sudarshan Kadambi has a background in distributed systems and database design. He has been a user and contributor to various NoSQL databases and is passionate about solving large-scale data management challenges.

Xun (Brian) Wu has more than 15 years of experience in web/mobile development, big data analytics, cloud computing, blockchain, and IT architecture. He holds a master's degree in computer science from NJIT. He is always enthusiastic about exploring new ideas, technologies, and opportunities that arise. He has previously reviewed more than 40 books from Packt Publishing.

If you're interested in becoming an author for Packt, please visit authors.packtpub.com and apply today. We have worked with thousands of developers and tech professionals, just like you, to help them share their insight with the global tech community. You can make a general application, apply for a specific hot topic that we are recruiting an author for, or submit your own idea.

The book will help you understand the fundamentals of each database, and understand how their functionalities differ, while still giving you a common result – a database solution with speed, high performance, and accuracy.

If you are a budding DBA or a developer who wants to get started with the fundamentals of NoSQL databases, this book is for you. Relational DBAs who want to get insights into the various offerings of the popular NoSQL databases will also find this book to be very useful.

Chapter 1, Introduction to NoSQL Databases, introduces the topic of NoSQL and distributed databases. The design principles and trade-offs involved in NoSQL database design are described. These design principles provide context around why individual databases covered in the following chapters are designed in a particular way and what constraints they are trying to optimize for.

Chapter 2, MongoDB, covers installation and basic CRUD operations. High-level concepts such as indexing allow you to speed up database operations, sharding, and replication. Also, it covers data models, which help us with application database design.

Chapter 3, Neo4j, introduces the Neo4j graph database. It discusses Neo4j's architecture, use cases, administration, and application development.

Chapter 4, Redis, discusses the Redis data store. Redis’ unique architecture and behavior will be discussed, as well as installation, application development, and server-side scripting with Lua.

Chapter 5, Cassandra, introduces the Cassandra database. Cassandra’s highly-available, eventually consistent design will be discussed along with the appropriate use cases. Known anti-patterns will also be presented, as well as production-level configuration, administration, and application development.

Chapter 6, HBase, introduces HBase, that is, the Hadoop Database. Inspired by Google's Bigtable, HBase is a widely deployed key-value store today. This chapter covers HBase's architectural internals, data model, and API.

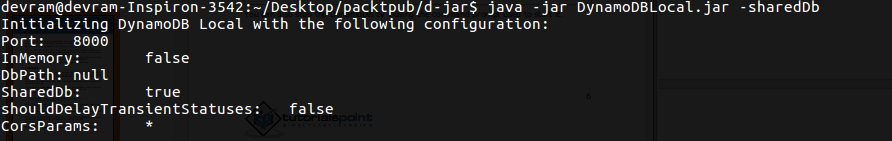

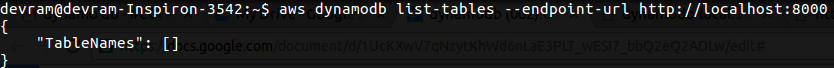

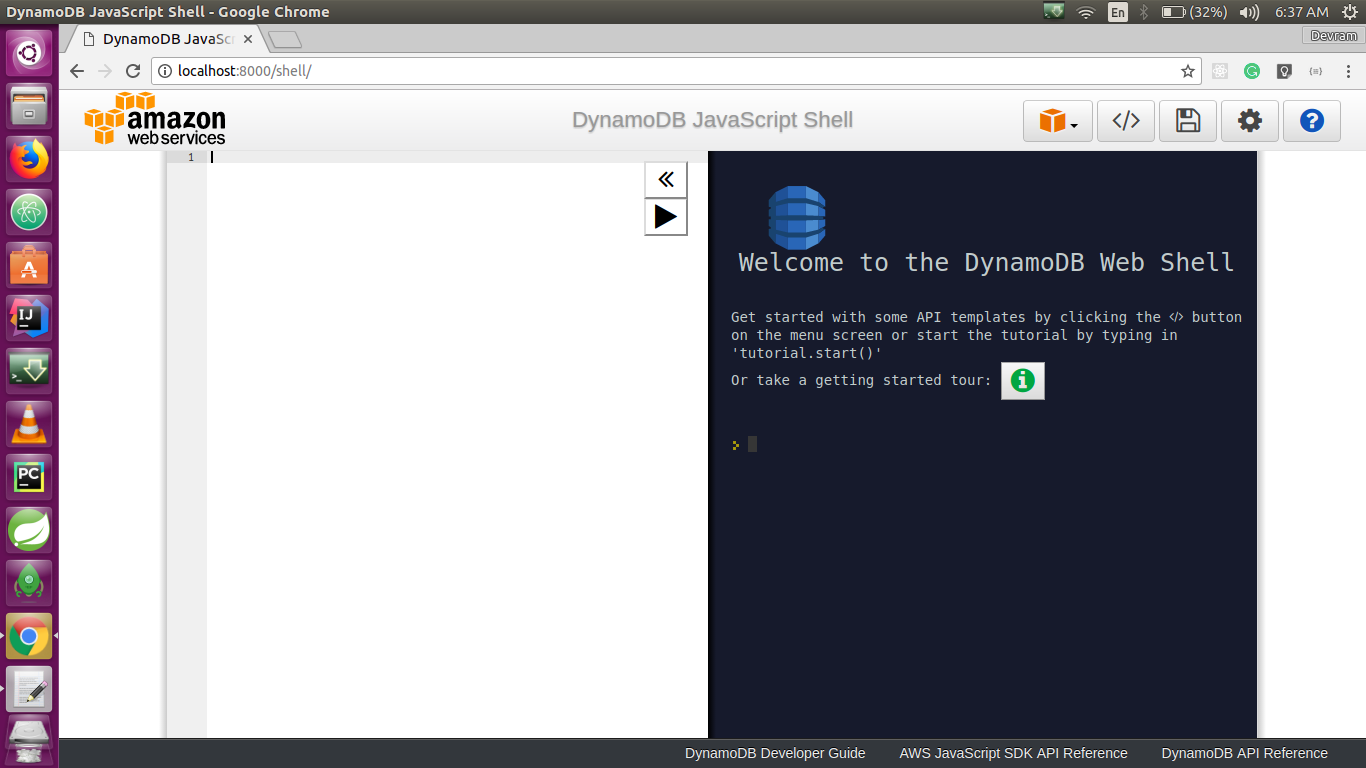

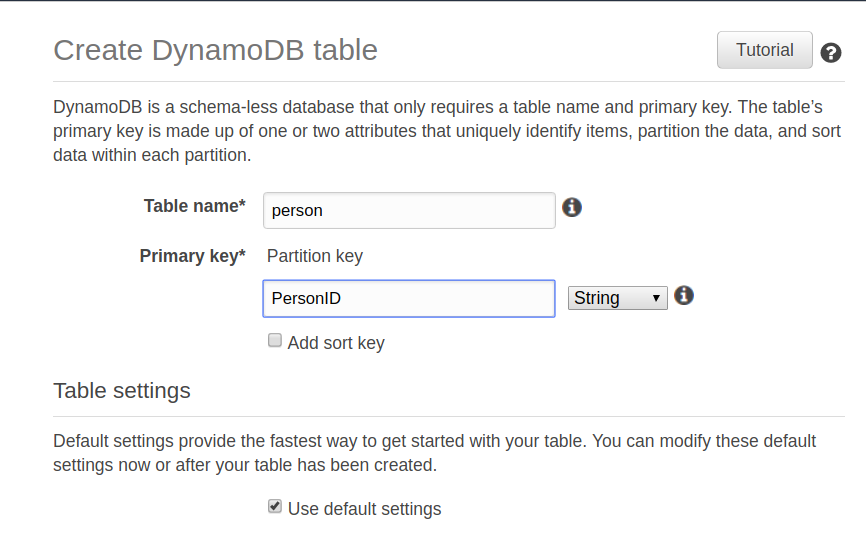

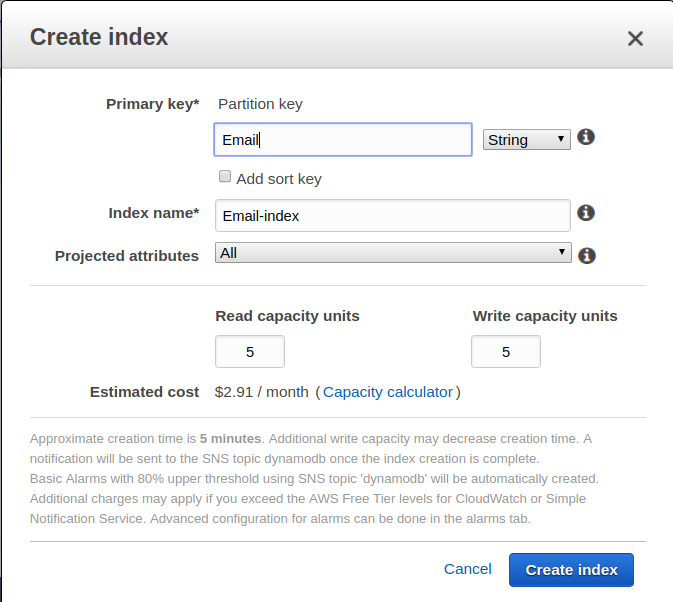

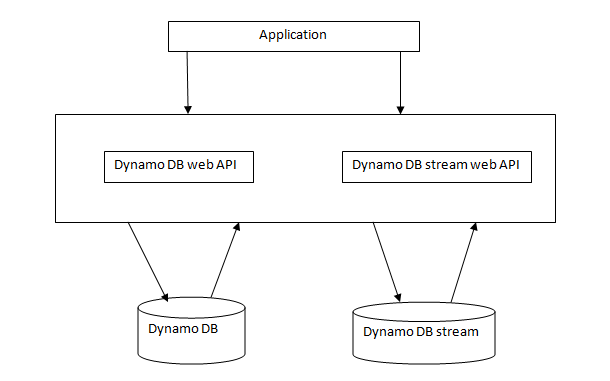

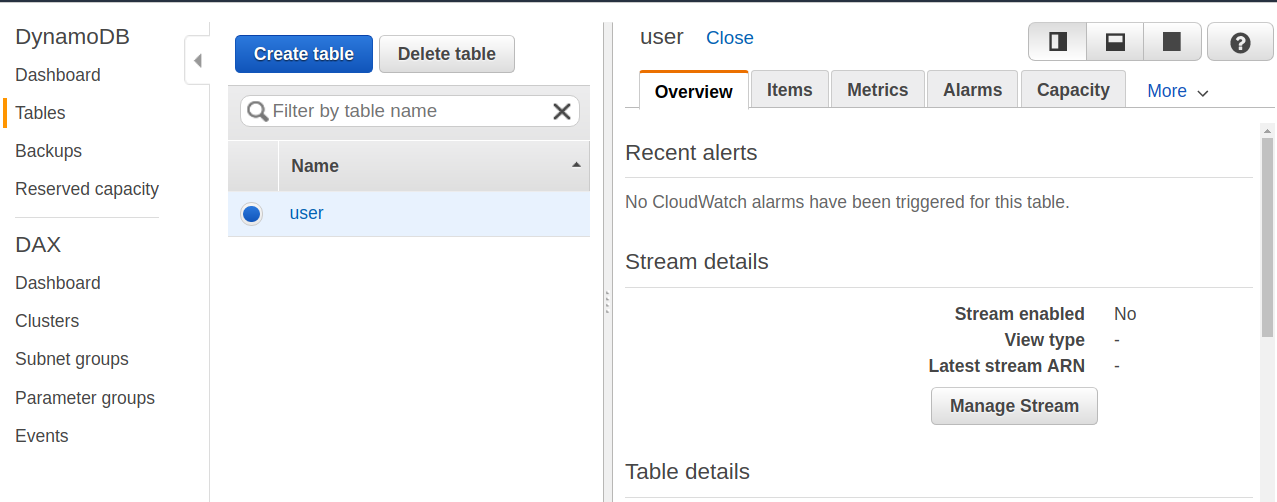

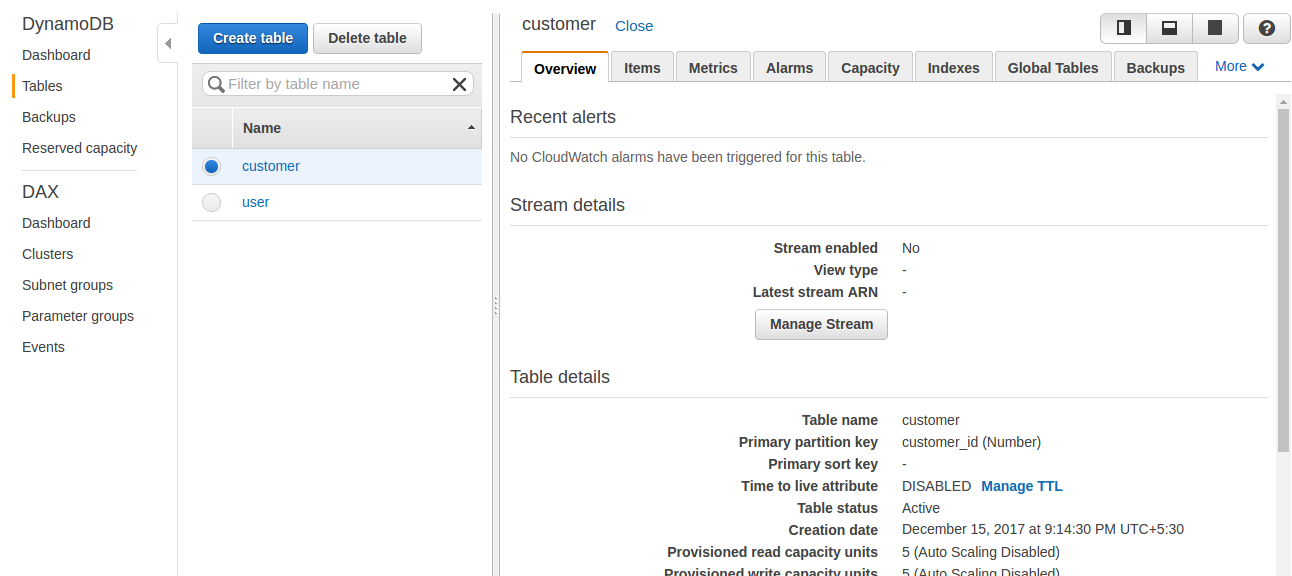

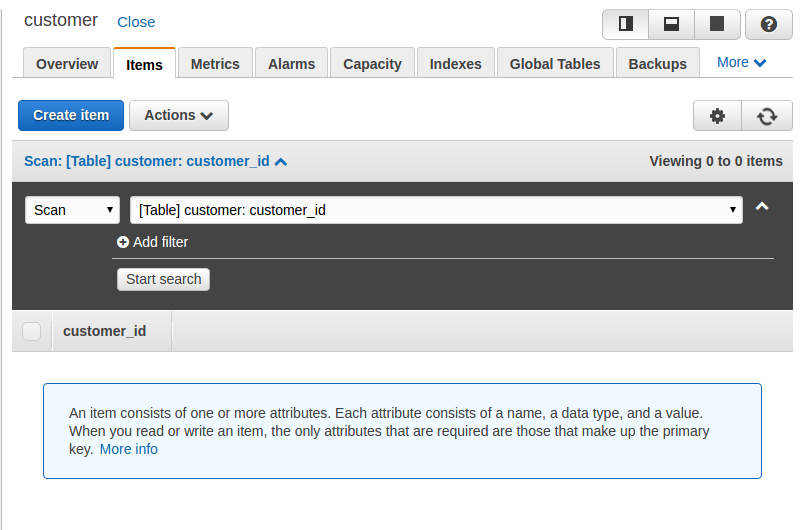

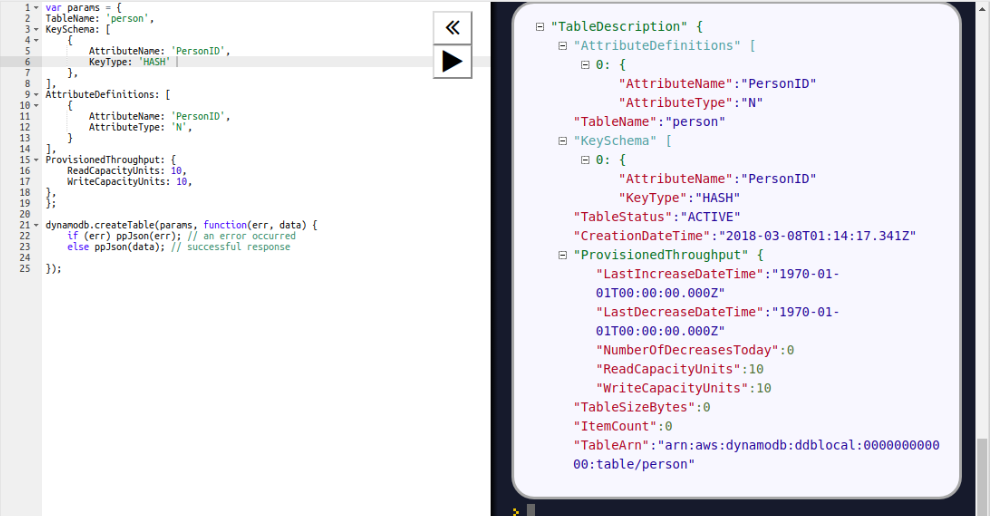

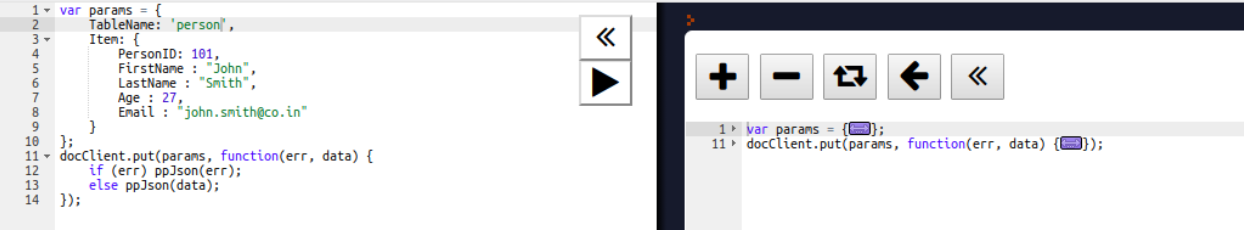

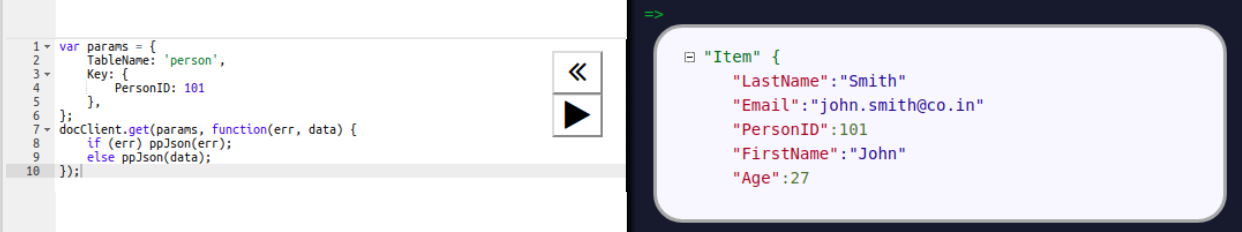

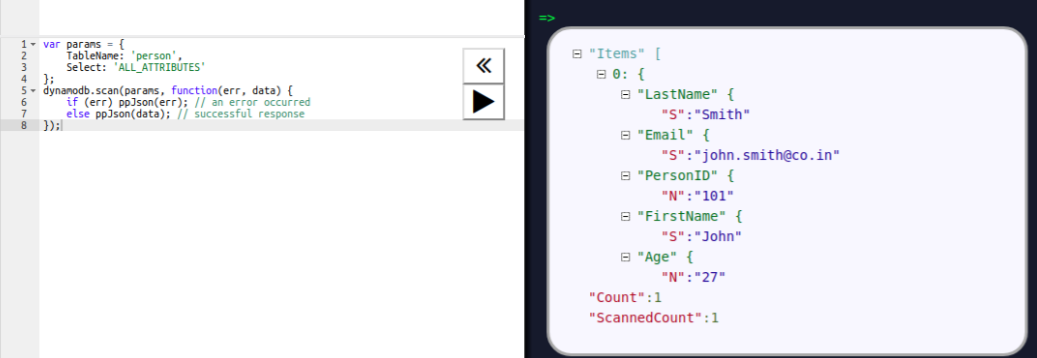

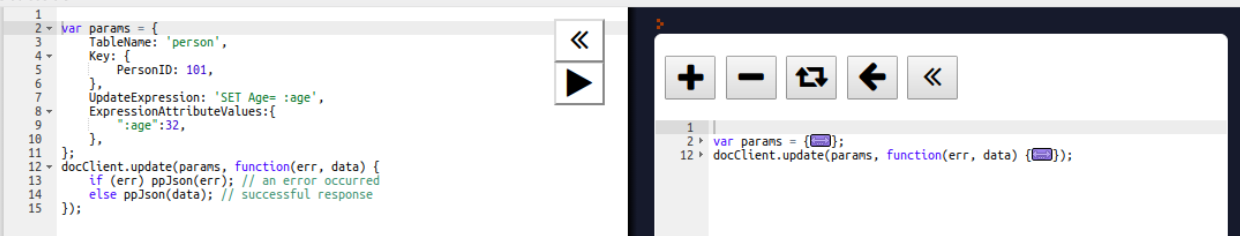

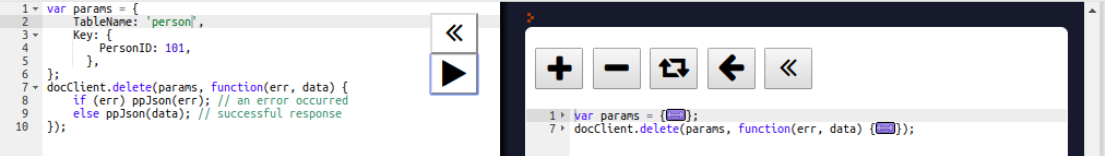

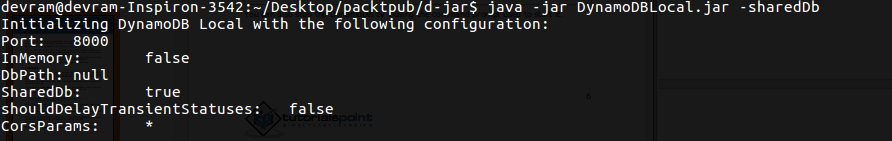

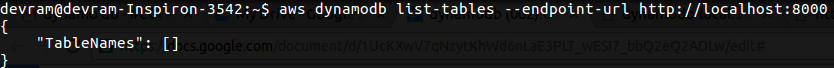

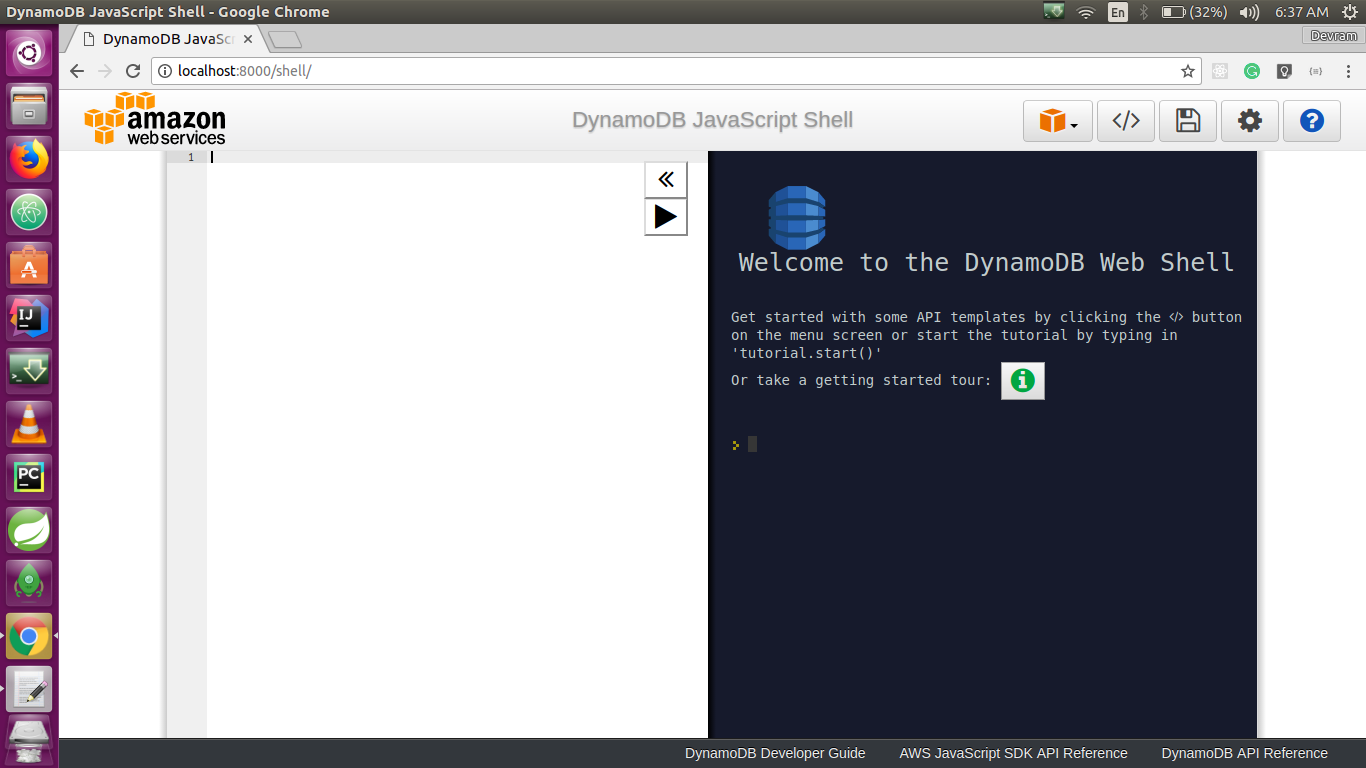

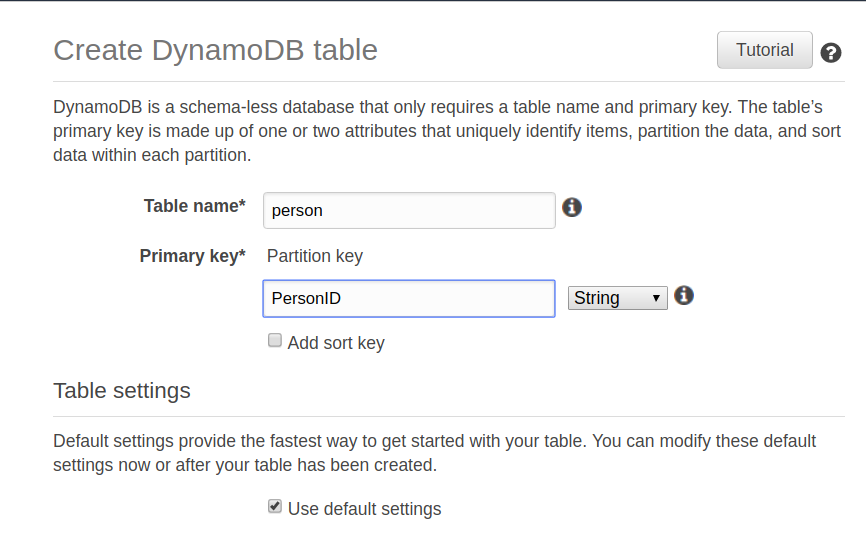

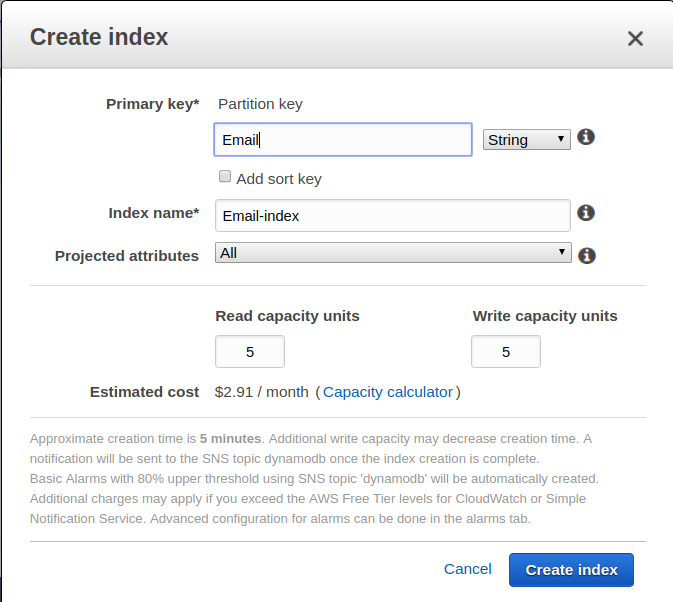

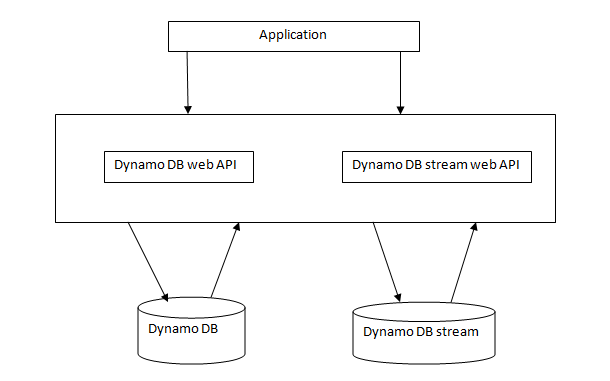

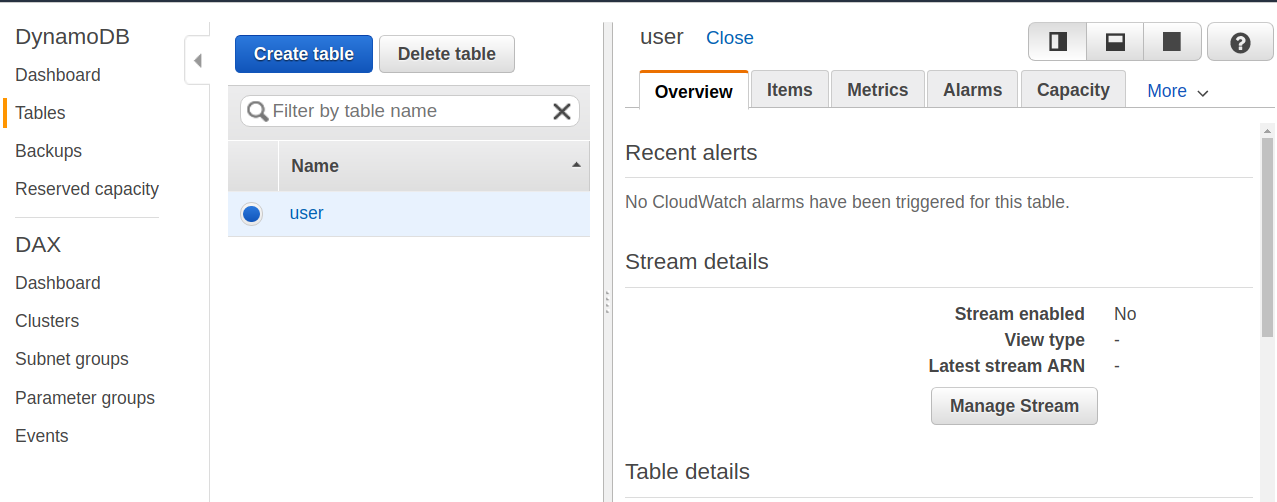

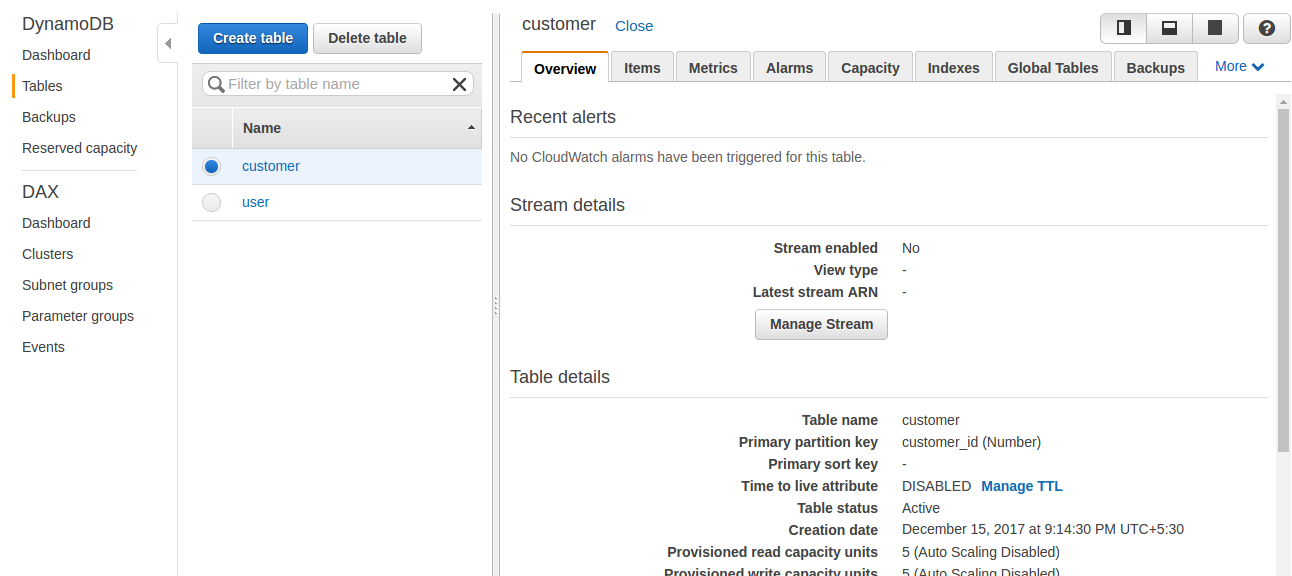

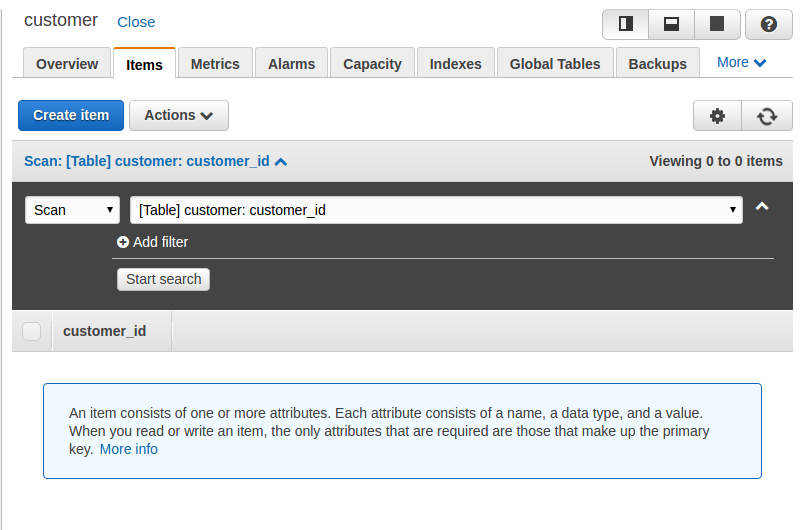

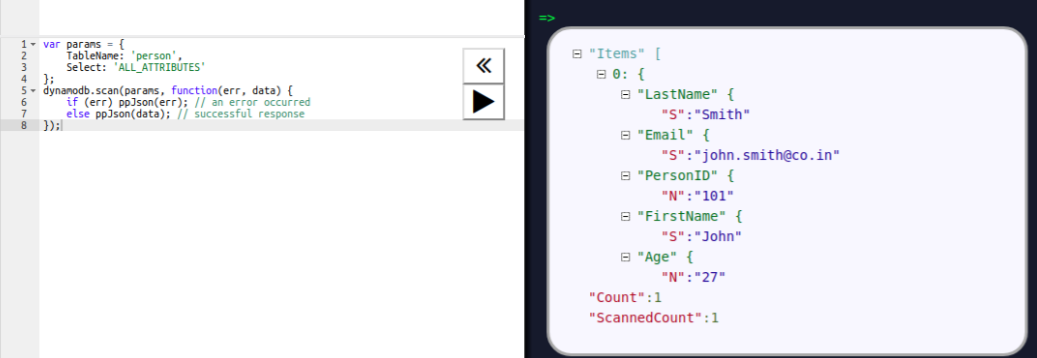

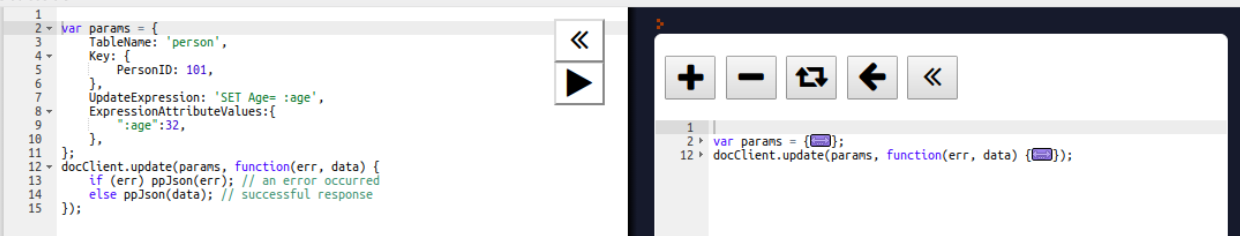

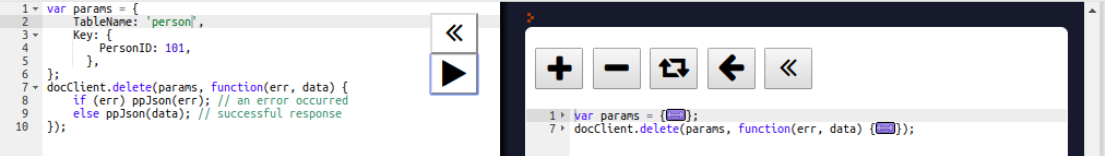

Chapter 7, DynamoDB, covers how to set up a local and AWS DynamoDB service and perform CRUD operations. It also covers how to deal with partition keys, sort keys, and secondary indexes. It covers various advantages and disadvantages of DynamoDB over other databases, which makes it easy for developers to choose a database for an application.

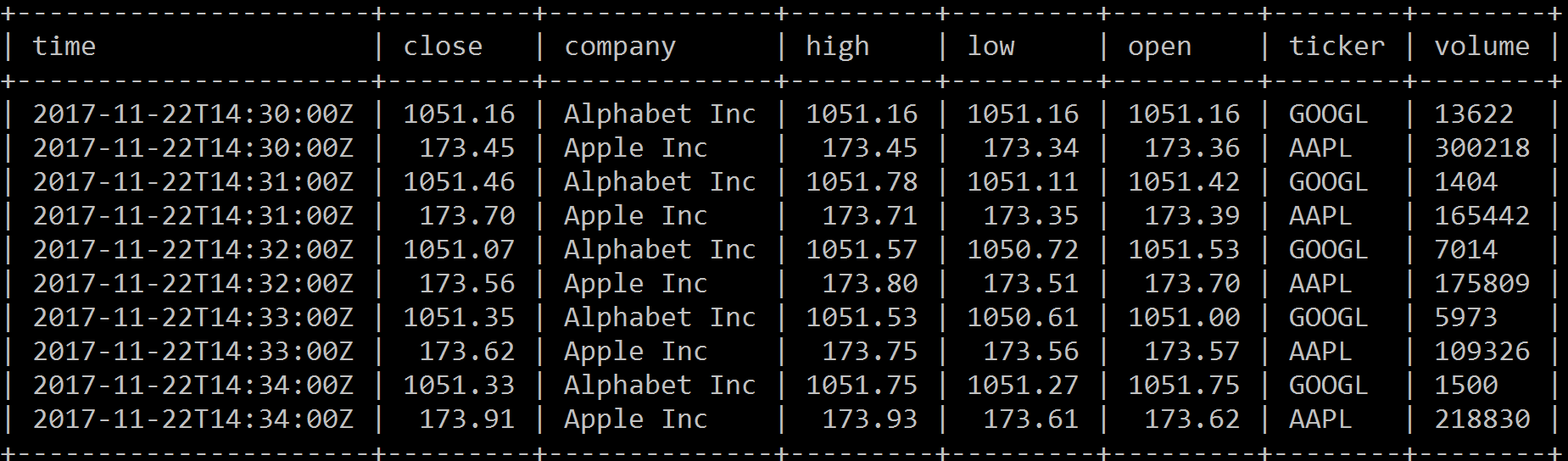

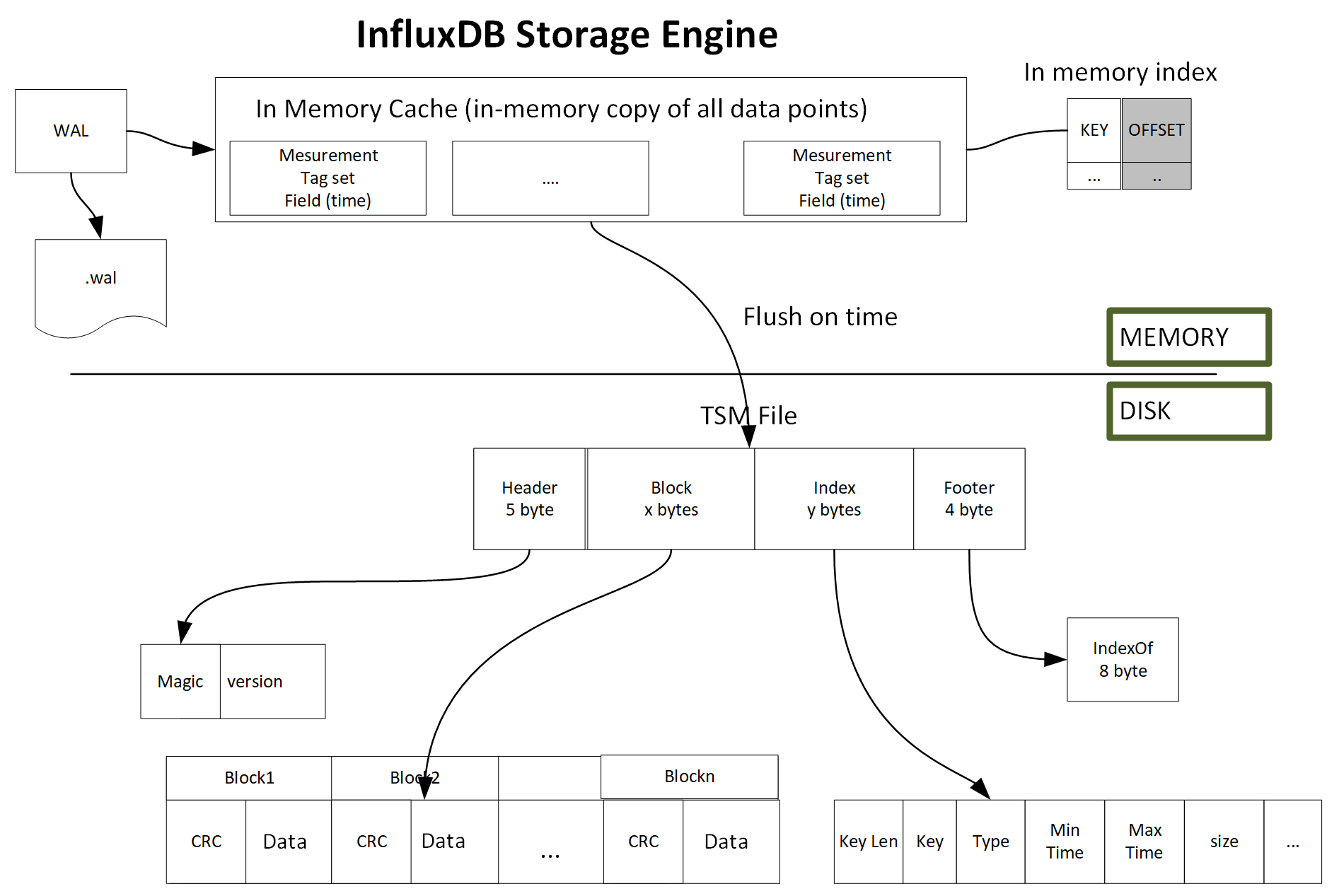

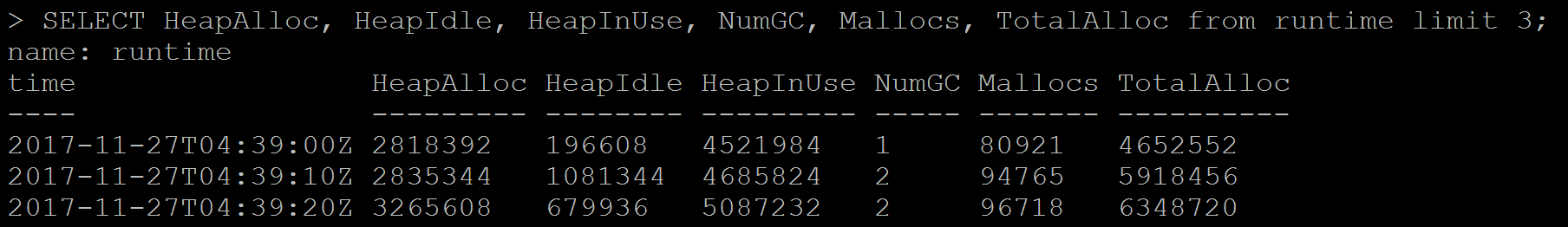

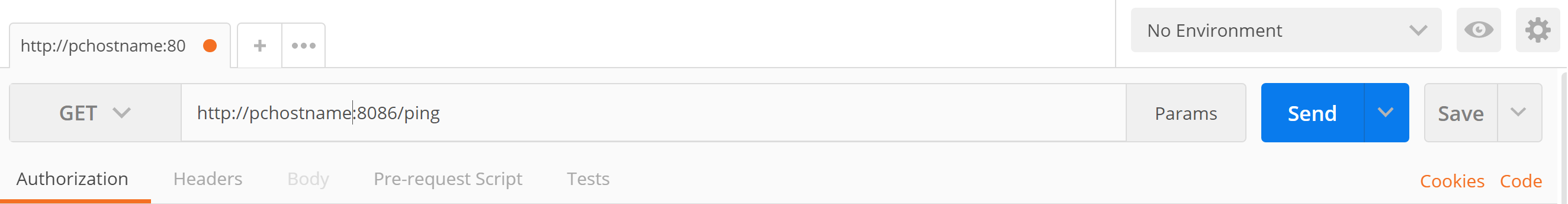

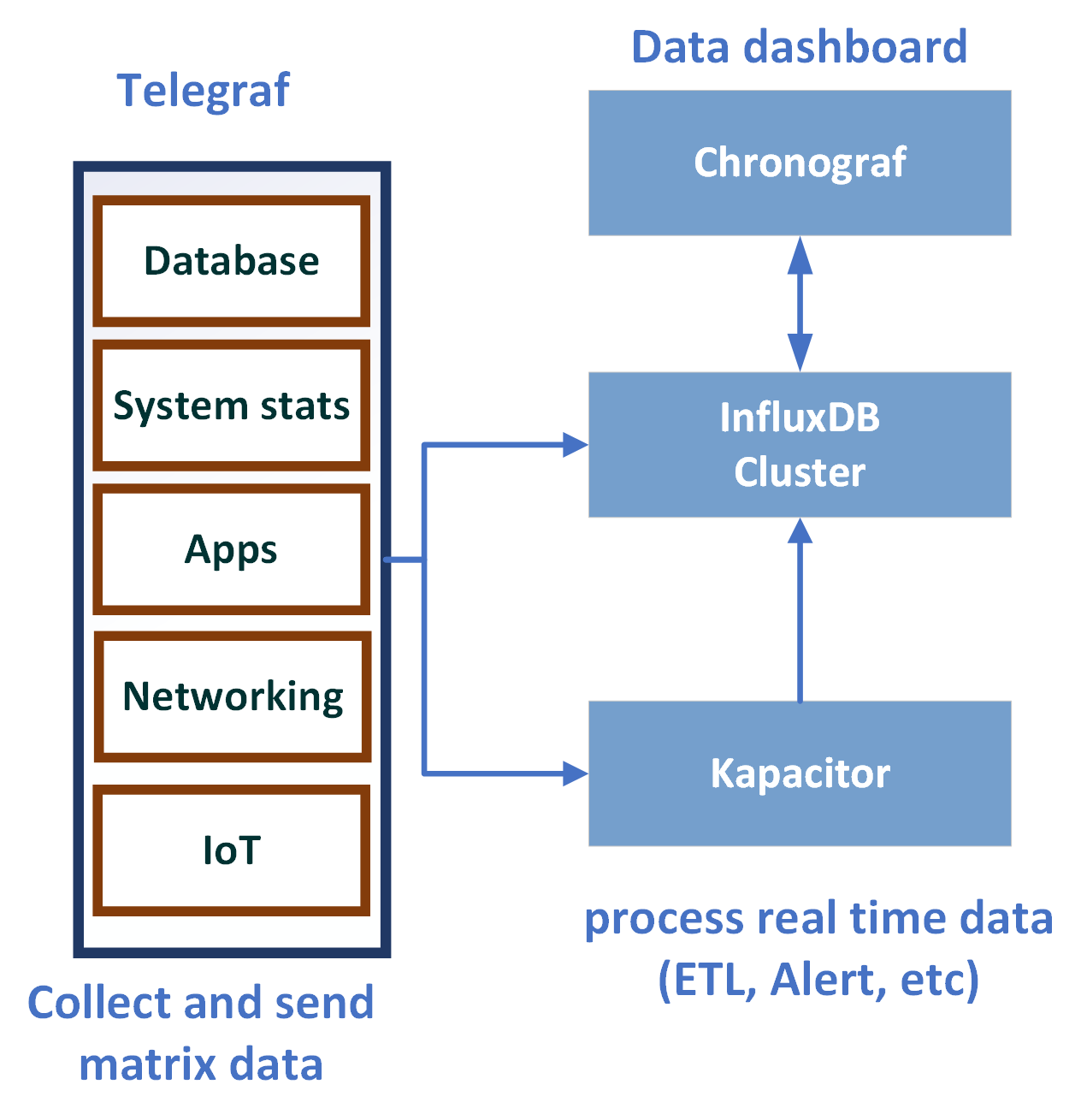

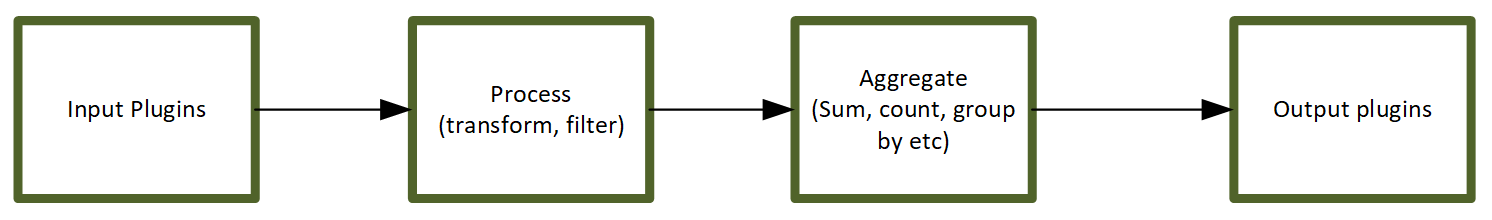

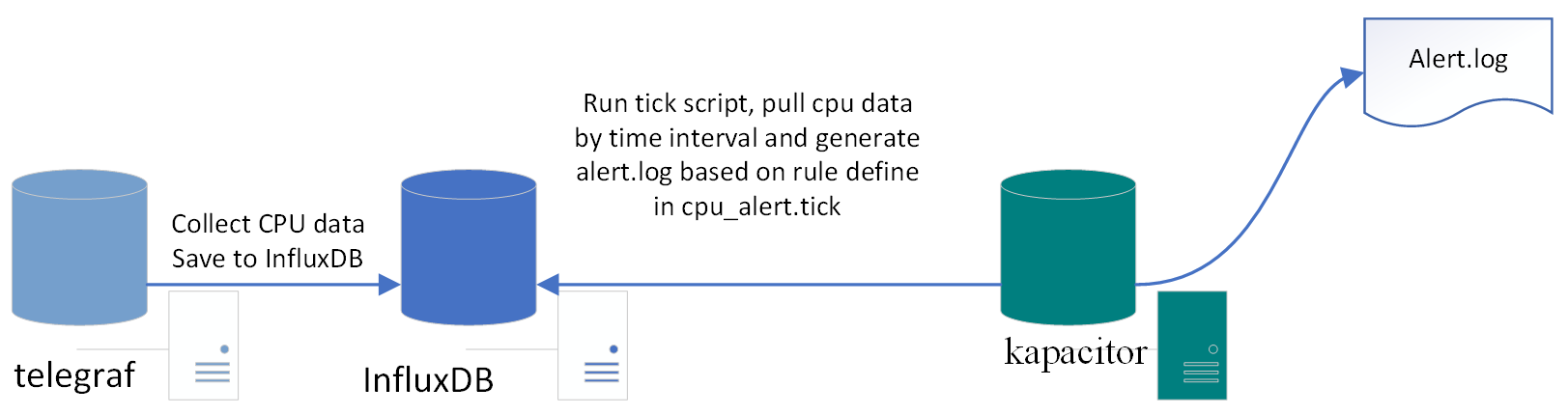

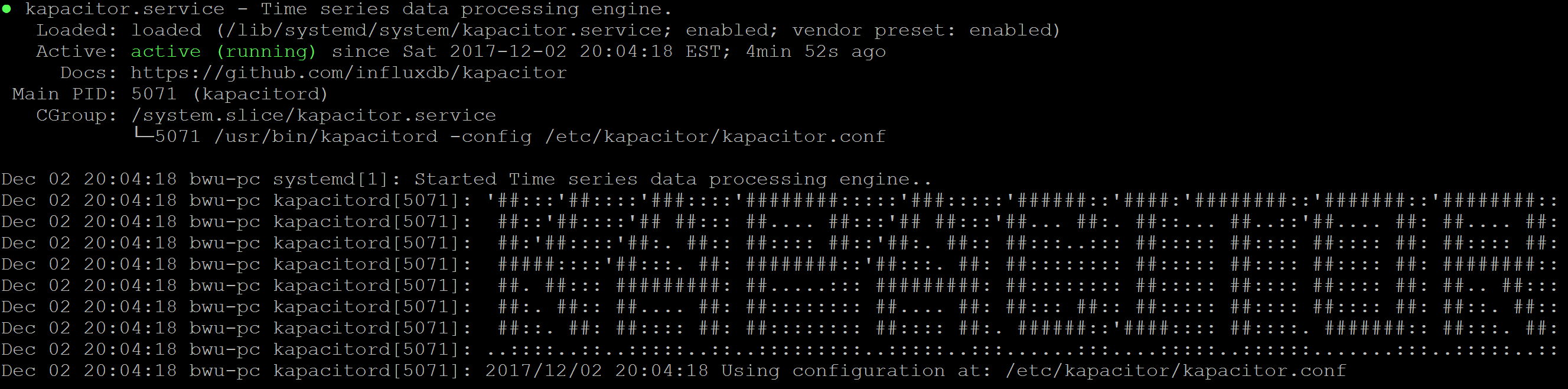

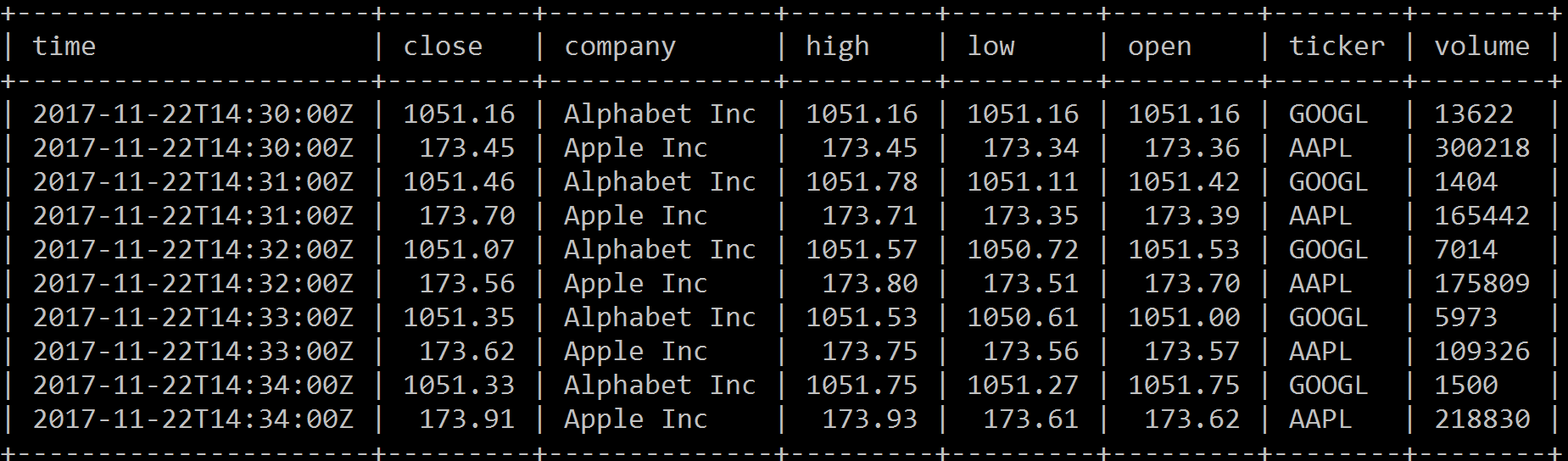

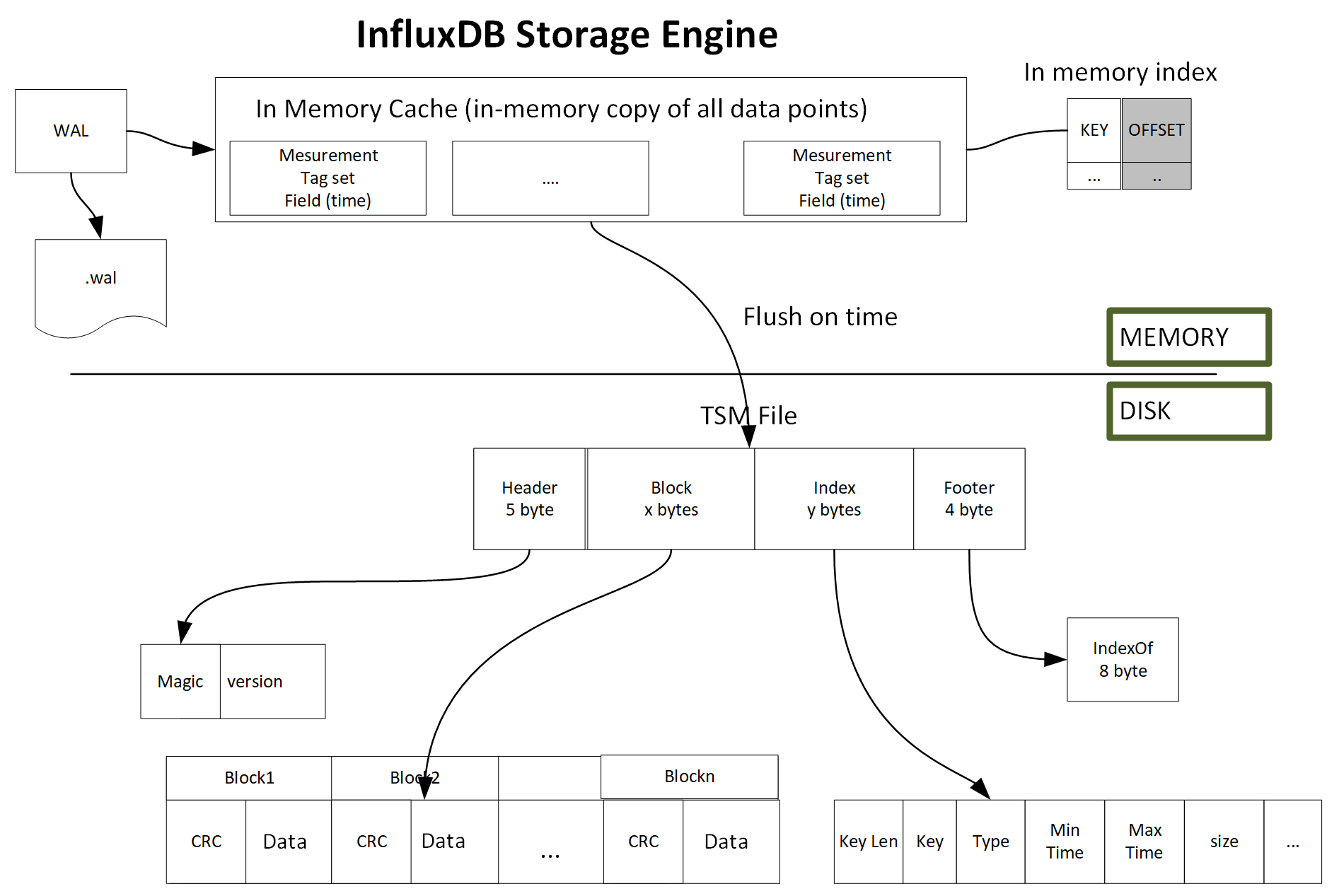

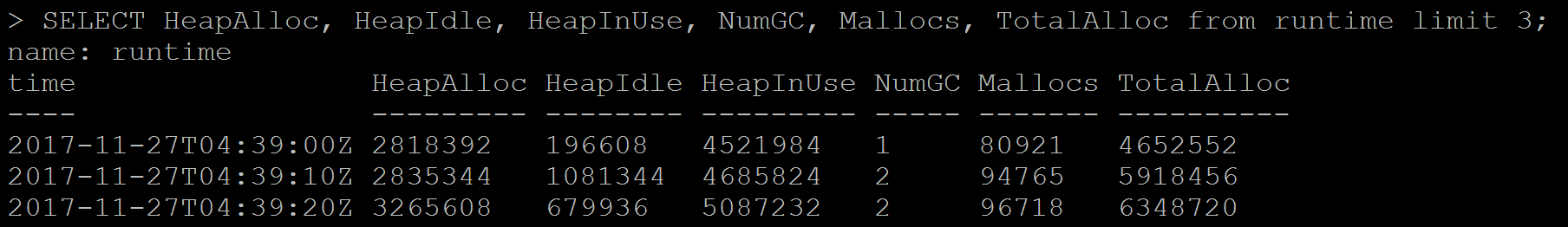

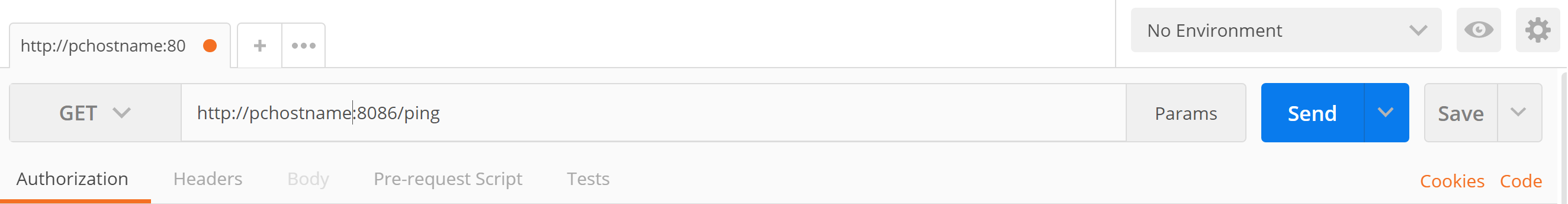

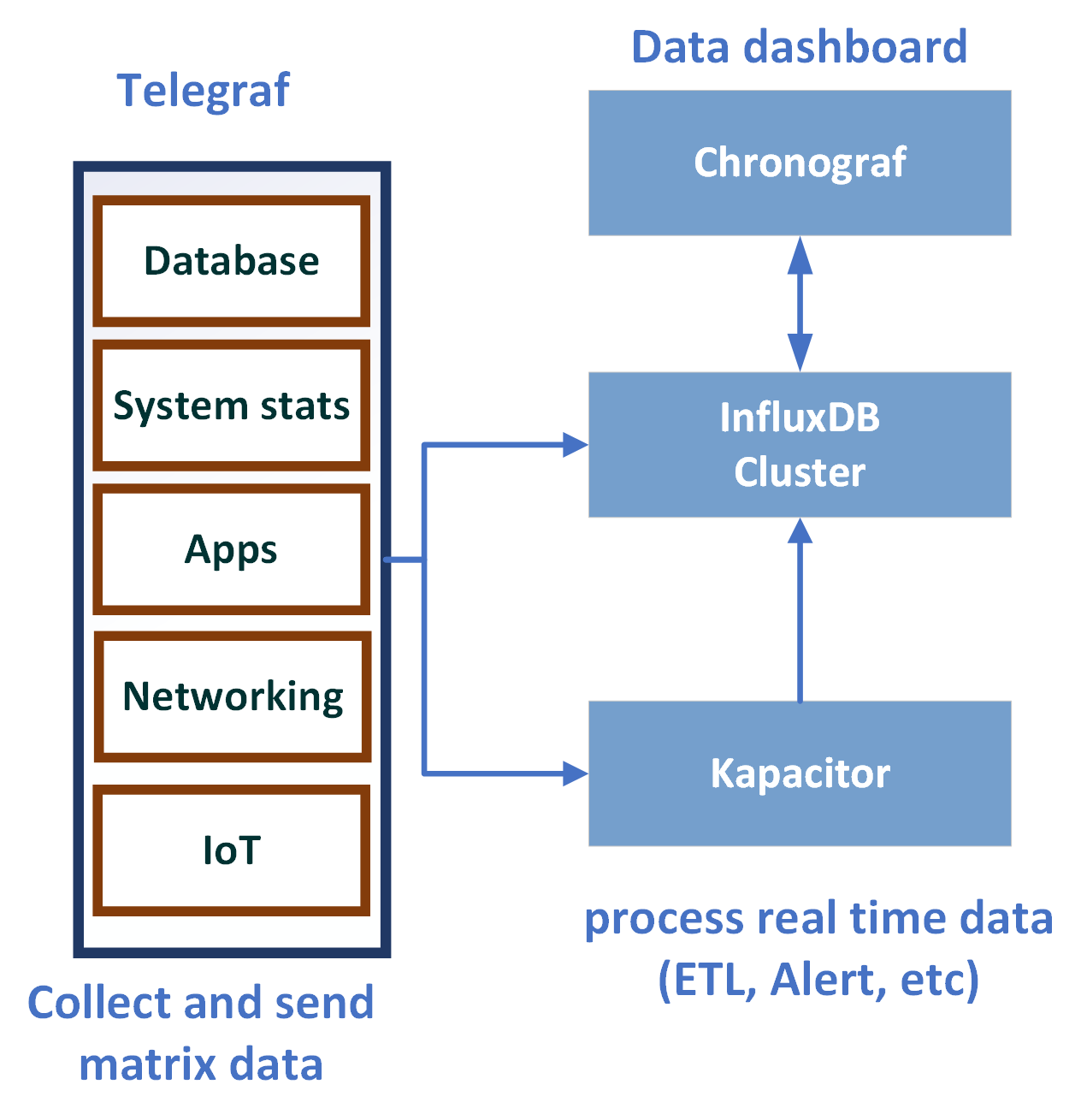

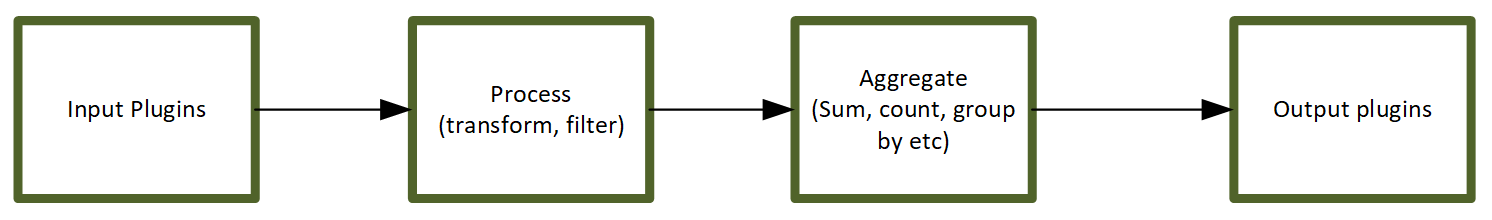

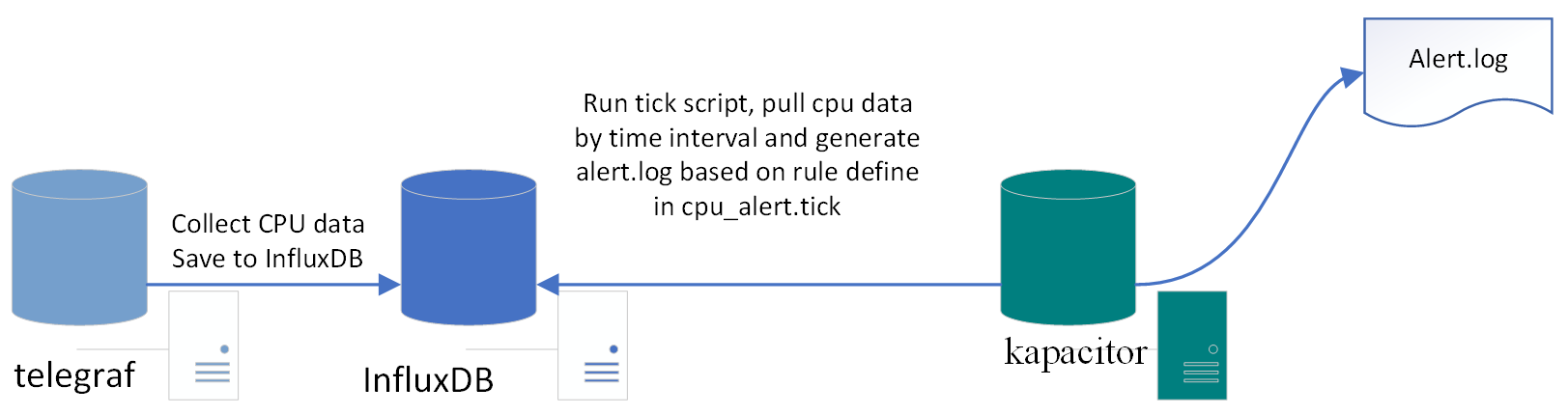

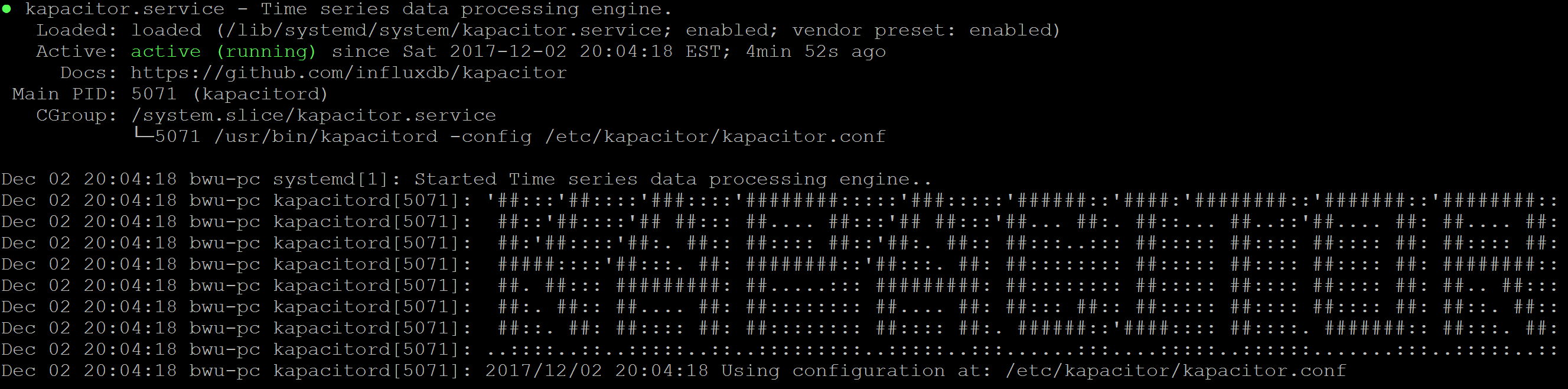

Chapter 8, InfluxDB, describes InfluxDB and its key concepts and terms. It also covers InfluxDB installation and configuration. It explores the query language and APIs. It helps you set up Telegraf and Kapacitor as an InfluxDB ecosystem's key components to collect and process data. At the end of the chapter, you will also find information about InfluxDB operations.

This book assumes that you have access to hardware on which you can install, configure, and code against a database instance. Having elevated admin or sudo privileges on the aforementioned machine will be essential to carrying out some of the tasks described.

Some of the NoSQL databases discussed will only run on a Linux-based operating system. Therefore, prior familiarity with Linux is recommended. As OS-specific system administration is not within the scope of this book, readers who are new to Linux may find value in seeking out a separate tutorial prior to attempting some of the examples.

The Java Virtual Machine (JVM)-based NoSQL databases will require a Java Runtime Environment (JRE) to be installed. Do note that some of them may require a specific version of the JRE to function properly. This will necessitate updating or installing a new JRE, depending on the database.

The Java coding examples will be easier to do from within an Integrated Developer Envorinment (IDE), with Maven installed for dependency management. You may need to look up additional resources to ensure that these components are configured properly.

In Chapter 6, HBase, you can install the Hortonworks sandbox to get a small HBase cluster set up on your laptop. The sandbox can be installed for free from https://hortonworks.com/products/sandbox/.

In Chapter 8, InfluxDB, to run the examples you will need to install InfluxDB in a UNIX or Linux environment. In order to run different InfluxDB API client examples, you also need to install a programming language environment and related InfluxDB client packages:

You can download the example code files for this book from your account at www.packtpub.com. If you purchased this book elsewhere, you can visit www.packtpub.com/support and register to have the files emailed directly to you.

You can download the code files by following these steps:

Once the file is downloaded, please make sure that you unzip or extract the folder using the latest version of:

The code bundle for the book is also hosted on GitHub at https://github.com/PacktPublishing/Seven-NoSQL-Databases-in-a-Week. In case there's an update to the code, it will be updated on the existing GitHub repository.

We also have other code bundles from our rich catalog of books and videos available at https://github.com/PacktPublishing/. Check them out!

We also provide a PDF file that has color images of the screenshots/diagrams used in this book. You can download it here: http://www.packtpub.com/sites/default/files/downloads/SevenNoSQLDatabasesinaWeek_ColorImages.pdf.

There are a number of text conventions used throughout this book.

CodeInText: Indicates code words in text, database table names, folder names, filenames, file extensions, pathnames, dummy URLs, user input, and Twitter handles. Here is an example: "Now is also a good time to change the initial password. Neo4j installs with a single default admin username and password of neo4j/neo4j."

A block of code is set as follows:

# Paths of directories in the installation.

#dbms.directories.data=data

#dbms.directories.plugins=plugins

#dbms.directories.certificates=certificates

#dbms.directories.logs=logs

#dbms.directories.lib=lib

#dbms.directories.run=run

When we wish to draw your attention to a particular part of a code block, the relevant lines or items are set in bold:

# Paths of directories in the installation.

#dbms.directories.data=data

#dbms.directories.plugins=plugins

#dbms.directories.certificates=certificates

#dbms.directories.logs=logs

#dbms.directories.lib=lib

#dbms.directories.run=run

Any command-line input or output is written as follows:

sudo mkdir /local

sudo chown $USER:$USER /local

cd /local

mv ~/Downloads/neo4j-community-3.3.3-unix.tar.gz .

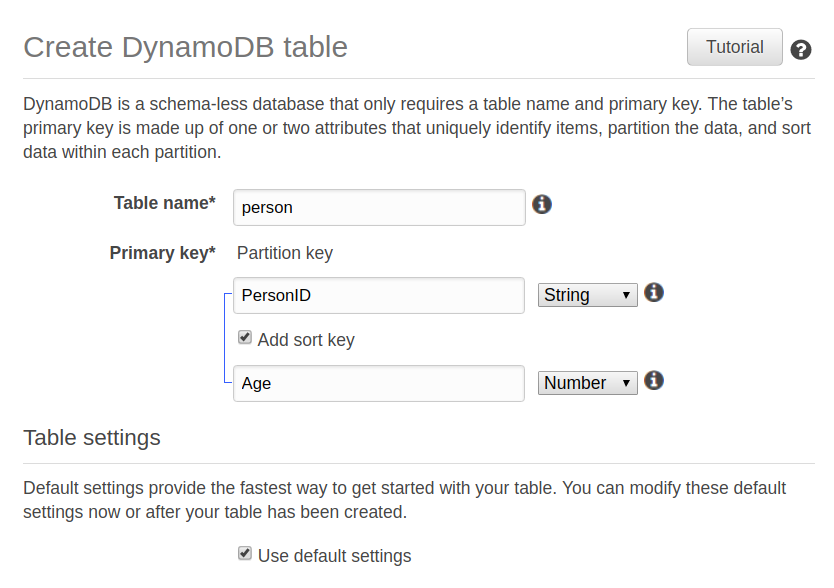

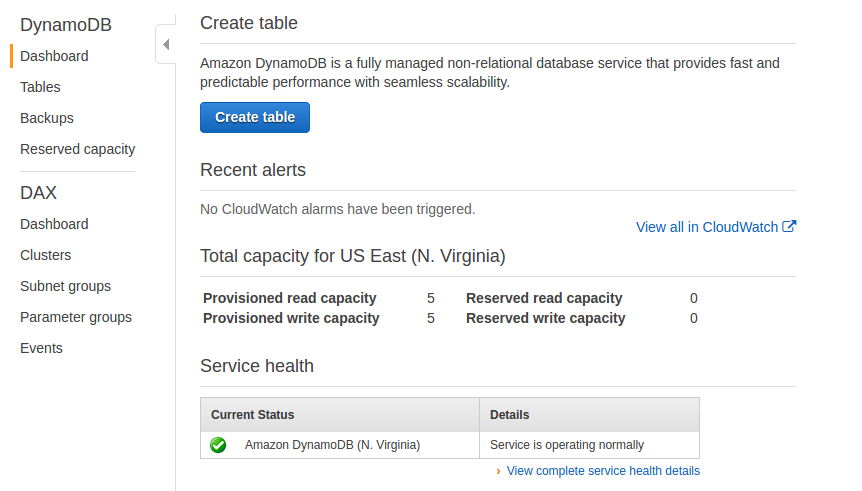

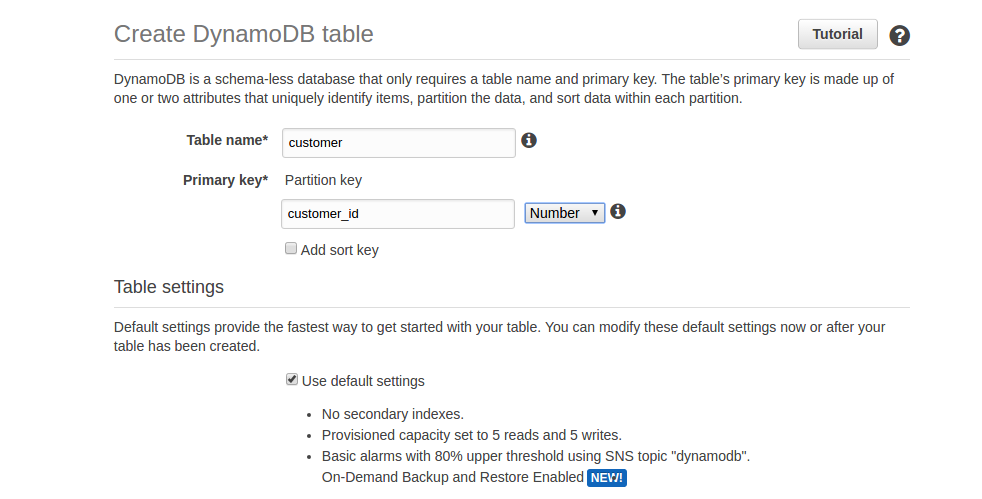

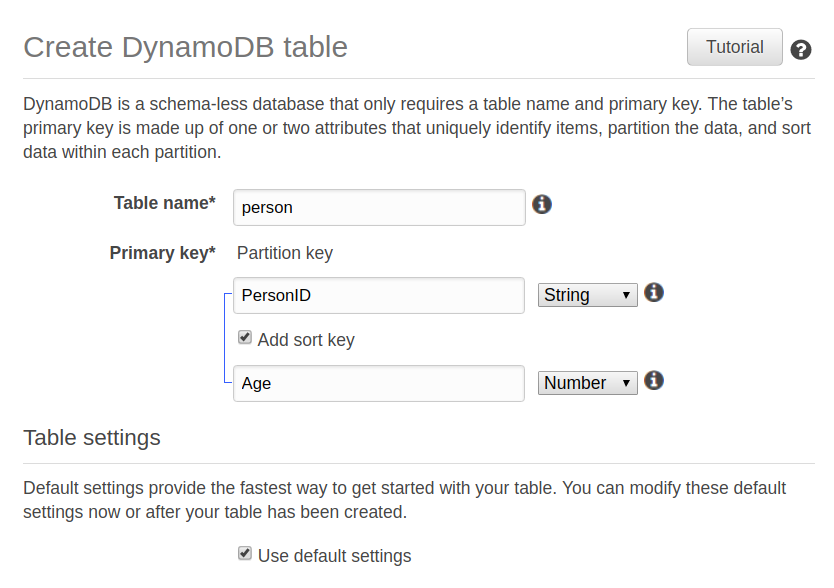

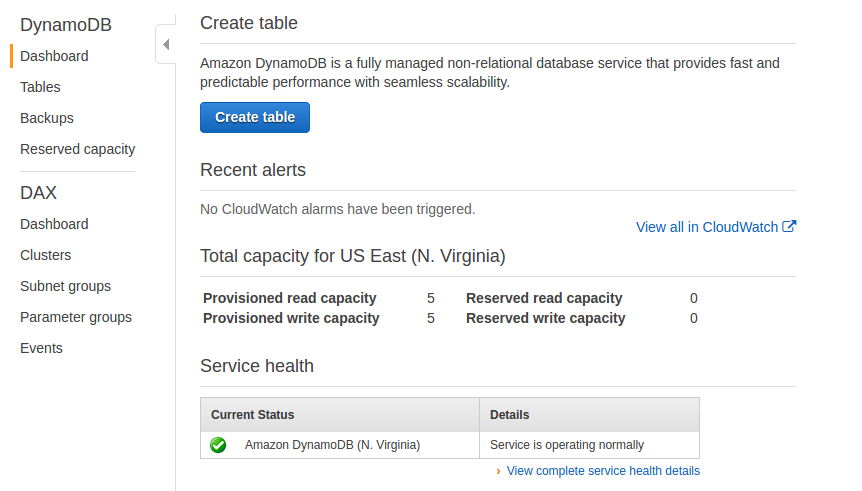

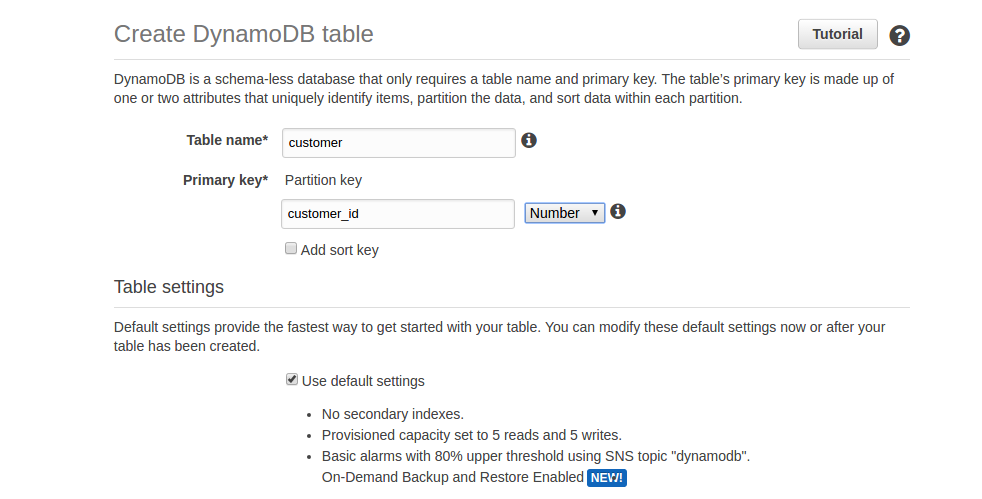

Bold: Indicates a new term, an important word, or words that you see onscreen. For example, words in menus or dialog boxes appear in the text like this. Here is an example: "To create a table, click on the Create table button. This will take you to the Create table screen."

Feedback from our readers is always welcome.

General feedback: Email feedback@packtpub.com and mention the book title in the subject of your message. If you have questions about any aspect of this book, please email us at questions@packtpub.com.

Errata: Although we have taken every care to ensure the accuracy of our content, mistakes do happen. If you have found a mistake in this book, we would be grateful if you would report this to us. Please visit www.packtpub.com/submit-errata, selecting your book, clicking on the Errata Submission Form link, and entering the details.

Piracy: If you come across any illegal copies of our works in any form on the Internet, we would be grateful if you would provide us with the location address or website name. Please contact us at copyright@packtpub.com with a link to the material.

If you are interested in becoming an author: If there is a topic that you have expertise in and you are interested in either writing or contributing to a book, please visit authors.packtpub.com.

Please leave a review. Once you have read and used this book, why not leave a review on the site that you purchased it from? Potential readers can then see and use your unbiased opinion to make purchase decisions, we at Packt can understand what you think about our products, and our authors can see your feedback on their book. Thank you!

For more information about Packt, please visit packtpub.com.

Over the last decade, the volume and velocity with which data is generated within organizations has grown exponentially. Consequently, there has been an explosion of database technologies that have been developed to address these growing data needs. These databases have typically had distributed implementations, since the volume of data being managed far exceeds the storage capacity of a single node. In order to support the massive scale of data, these databases have provided fewer of the features that we've come to expect from relational databases.

The first generation of these so-called NoSQL databases only provided rudimentary key-value get/put APIs. They were largely schema-free and didn't require well-defined types to be associated with the values being stored in the database. Over the last decade, however, a number of features that we've come to expect from standard databases—such as type systems and SQL, secondary indices, materialized views, and some kind of concept of transactions—have come to be incorporated and overlaid over those rudimentary key-value interfaces.

Today, there are hundreds of NoSQL databases available in the world, with a few popular ones, such as MongoDB, HBase, and Cassandra, having the lion's share of the market, followed by a long list of other, less popular databases.

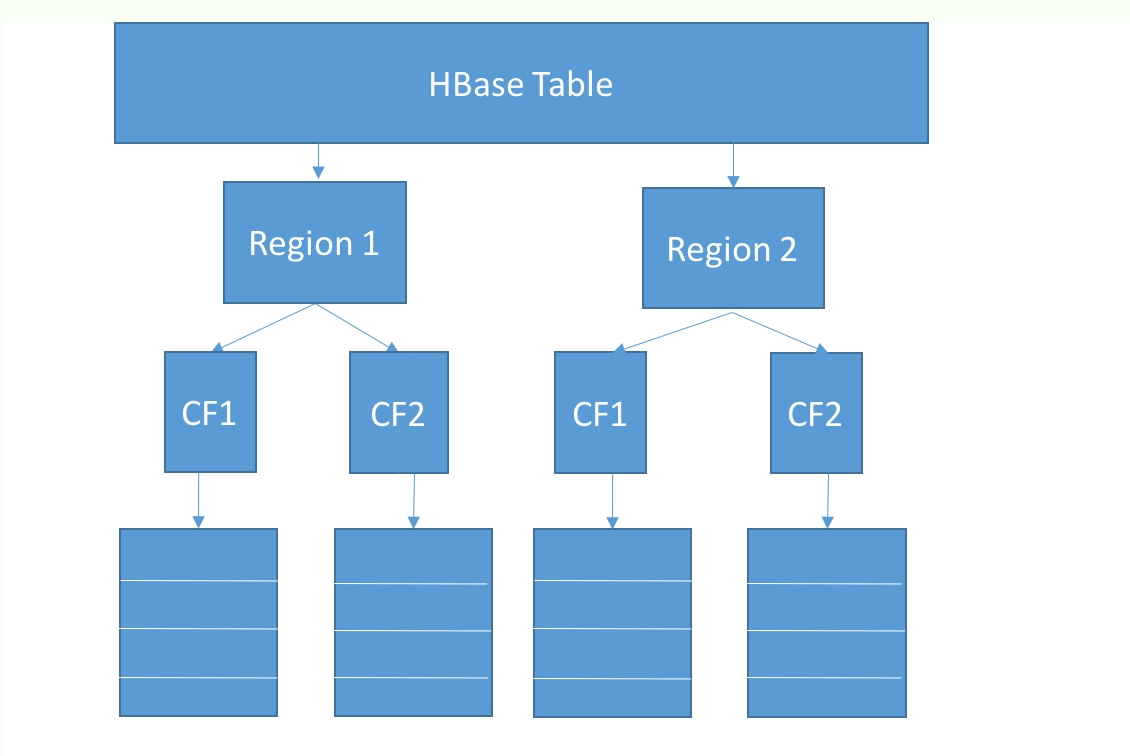

These databases have different data models, ranging from the document model of MongoDB, to the column-family model of HBase and Cassandra, to the columnar model of Kudu. These databases are widely deployed in hundreds of organizations and at this point are considered mainstream and commonplace.

This book covers some of the most popular and widely deployed NoSQL databases. Each chapter covers a different NoSQL database, how it is architected, how to model your data, and how to interact with the database. Before we jump into each of the NoSQL databases covered in this book, let's look at some of the design choices that should be considered when one is setting out to build a distributed database.

Knowing about some of these database principles will give us insight into why different databases have been designed with different architectural choices in mind, based on the use cases and workloads they were originally designed for.

A database's consistency refers to the reliability of its functions' performance. A consistent system is one in which reads return the value of the last write, and reads at a given time epoch return the same value regardless of where they were initiated.

NoSQL databases support a range of consistency models, such as the following:

A database's availability refers to the system's ability to complete a certain operation. Like consistency, availability is a spectrum. A system can be unavailable for writes while being available for reads. A system can be unavailable for admin operations while being available for data operations.

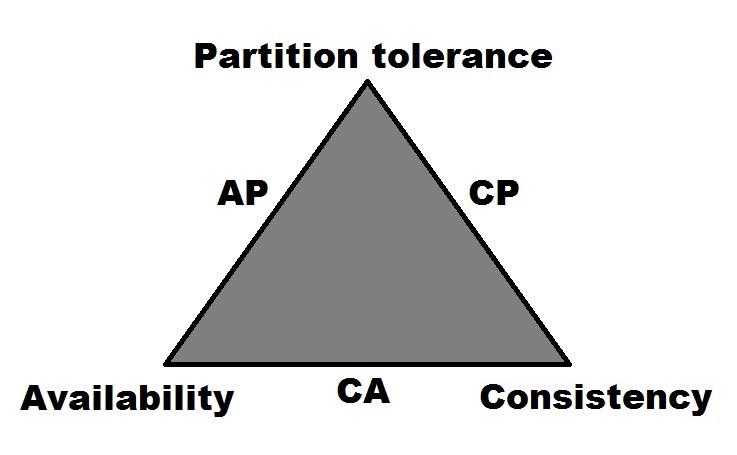

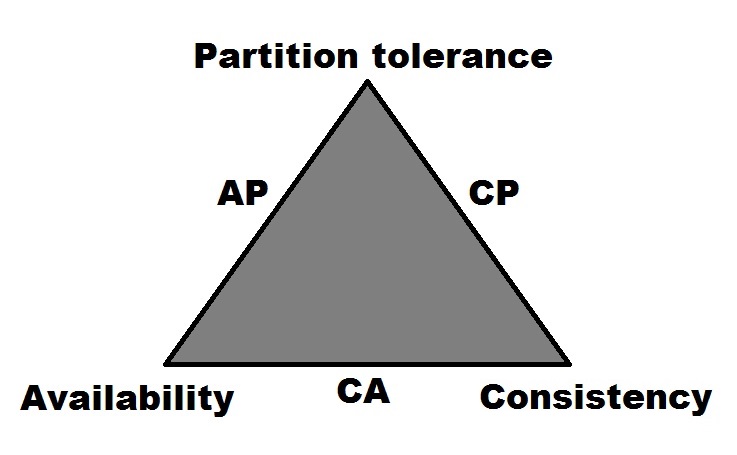

As is well known at this point, there's tension between consistency and availability. A system that is highly available needs to allow operations to succeed even if some nodes in the system are unreachable (either dead or partitioned off by the network). However, since it is unknown as to whether those nodes are still alive and are reachable by some clients or are dead and reachable by no one, there are no guarantees about whether those operations left the system in a consistent state or not.

So, a system that guarantees consistency must make sure that all of the nodes that contain data for a given key must be reachable and participate in the operation. The degenerate case is that a single node is responsible for operations on a given key. Since there is just a single node, there is no chance of inconsistency of the sort we've been discussing. The downside is that when a node goes down, there is a complete loss of availability for operations on that key.

Relational databases have provided the traditional properties of ACID: atomicity, consistency, isolation, and durability:

NoSQL databases vary widely in their support for these guarantees, with most of them not approaching the level of strong guarantees provided by relational databases (since these are hard to support in a distributed setting).

Once you've decided to distribute data, how should the data be distributed?

Firstly, data needs to be distributed using a partitioning key in the data. The partitioning key can be the primary key or any other unique key. Once you've identified the partitioning key, we need to decide how to assign a key to a given shard.

One way to do this would be to take a key and apply a hash function. Based on the hash bucket and the number of shards to map keys into, the key would be assigned to a shard. There's a bit of nuance here in the sense that an assignment scheme based on a modulo by the number of nodes currently in the cluster will result in a lot of data movement when nodes join or leave the cluster (since all of the assignments need to be recalculated). This is addressed by something called consistent hashing, a detailed description of which is outside the scope of this chapter.

Another way to do assignments would be to take the entire keyspace and break it up into a set of ranges. Each range corresponds to a shard and is assigned to a given node in the cluster. Given a key, you would then do a binary search to find out the node it is meant to be assigned to. A range partition doesn't have the churn issue that a naive hashing scheme would have. When a node joins, shards from existing nodes will migrate onto the new node. When a node leaves, the shards on the node will migrate to one or more of the existing nodes.

What impact do the hash and range partitions have on the system design? A hash-based assignment can be built in a decentralized manner, where all nodes are peers of each other and there are no special master-slave relationships between nodes. Ceph and Cassandra both do hash-based partition assignment.

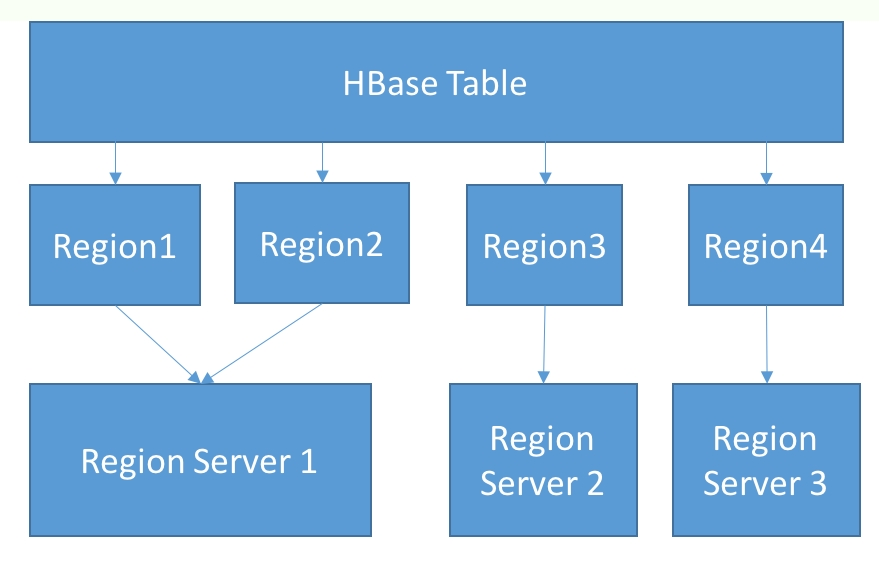

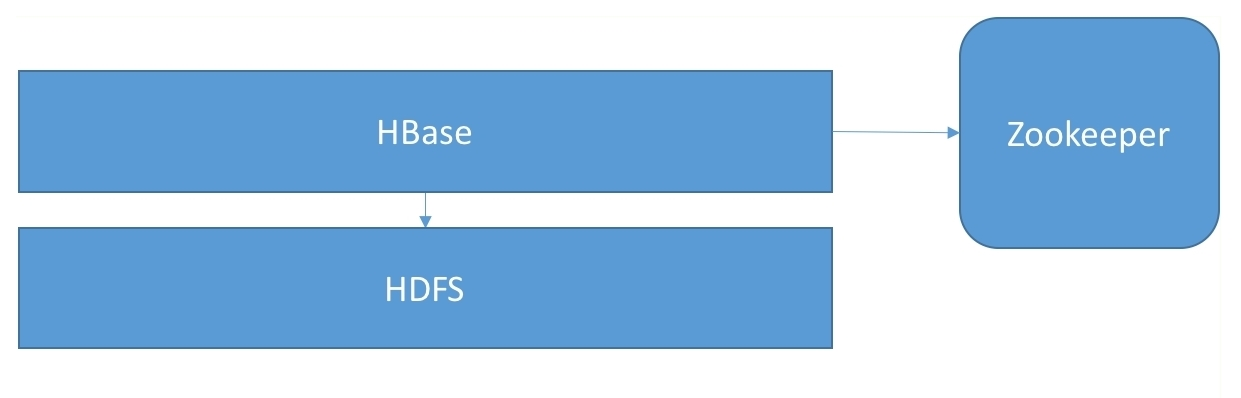

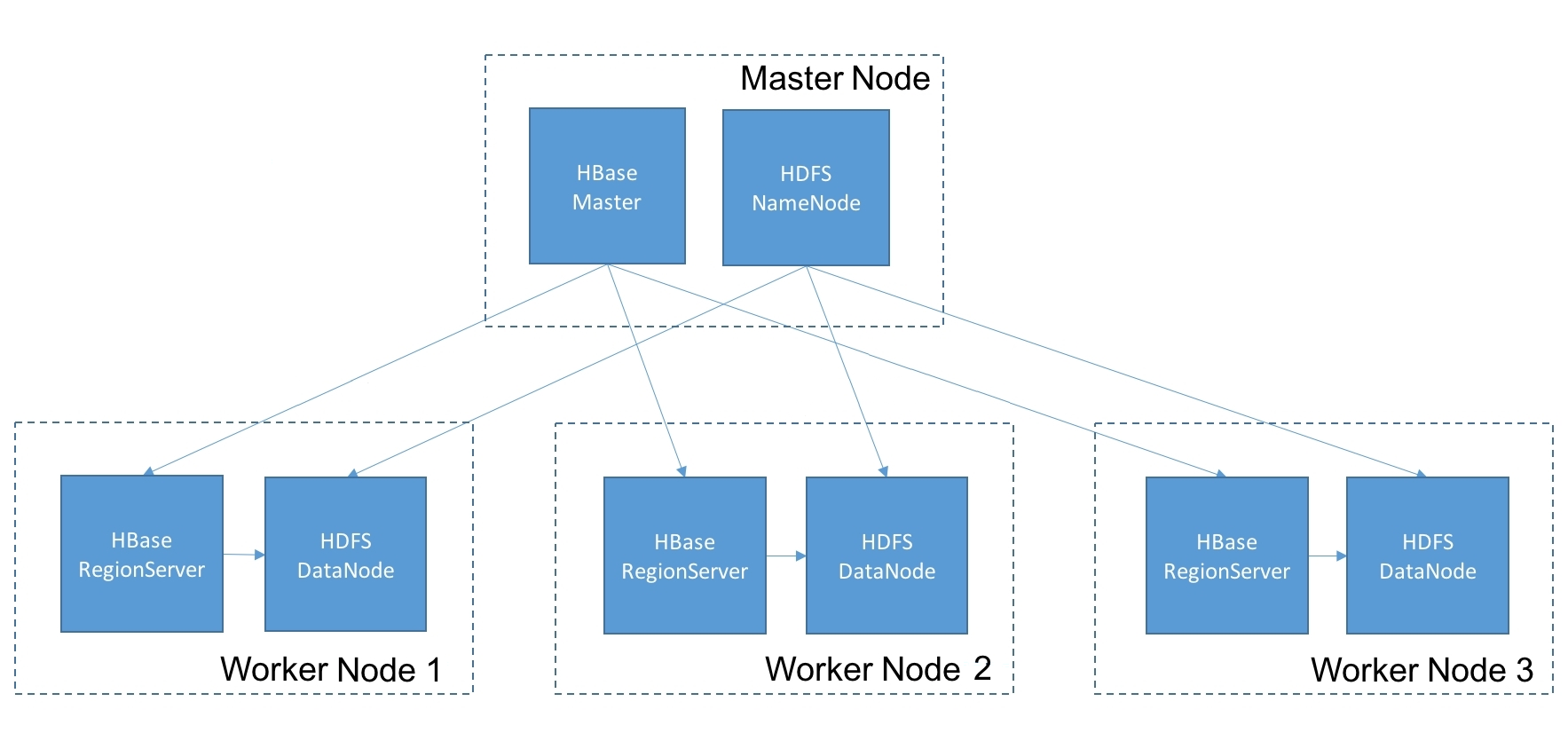

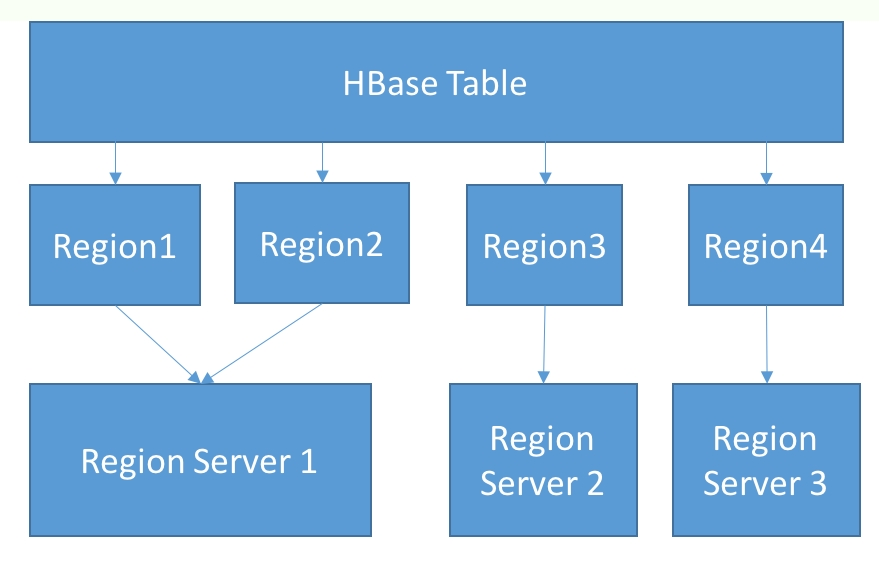

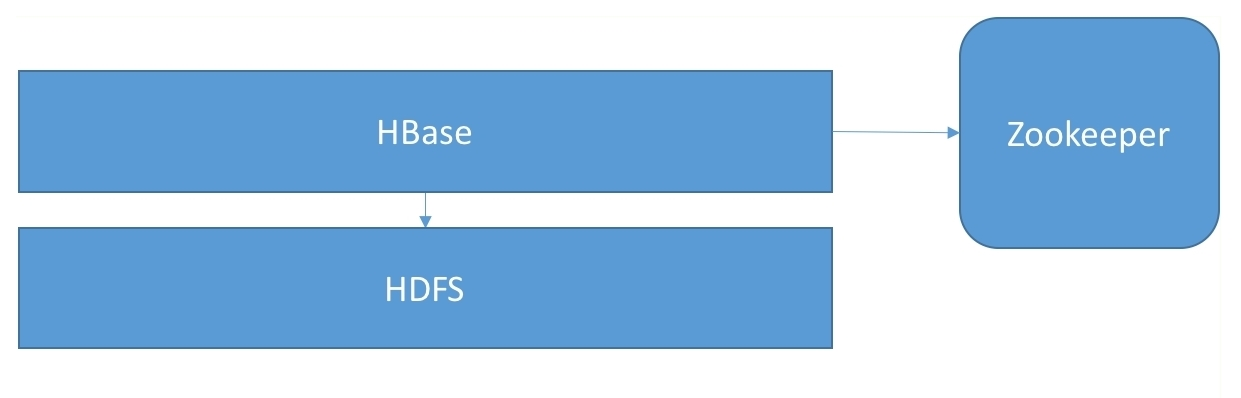

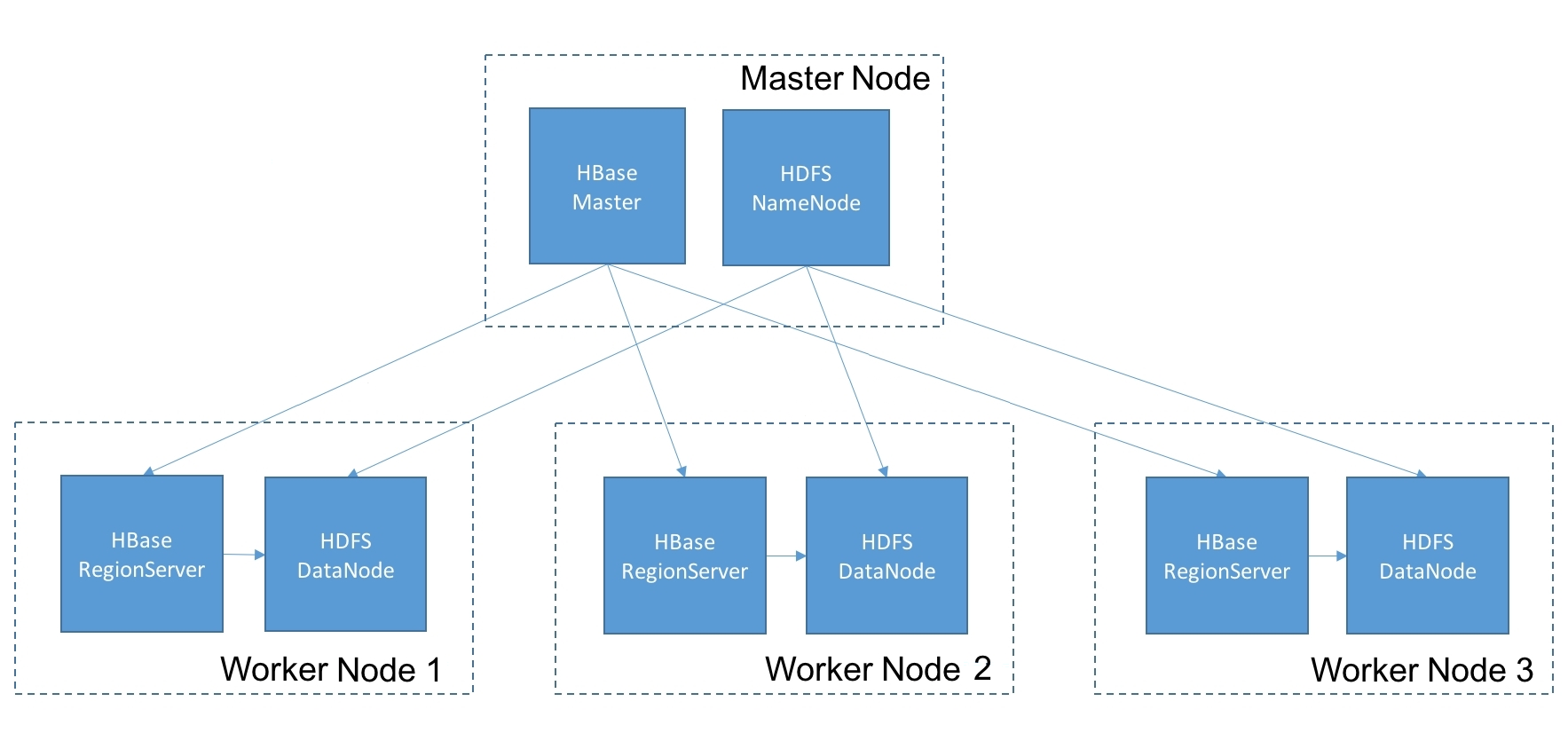

On the other hand, a range-based partitioning scheme requires that range assignments be kept in some special service. Hence, databases that do range-based partitioning, such as Bigtable and HBase, tend to be centralized and peer to peer, but instead have nodes with special roles and responsibilities.

Another key difference between database systems is how they handle updates to the physical records stored on disk.

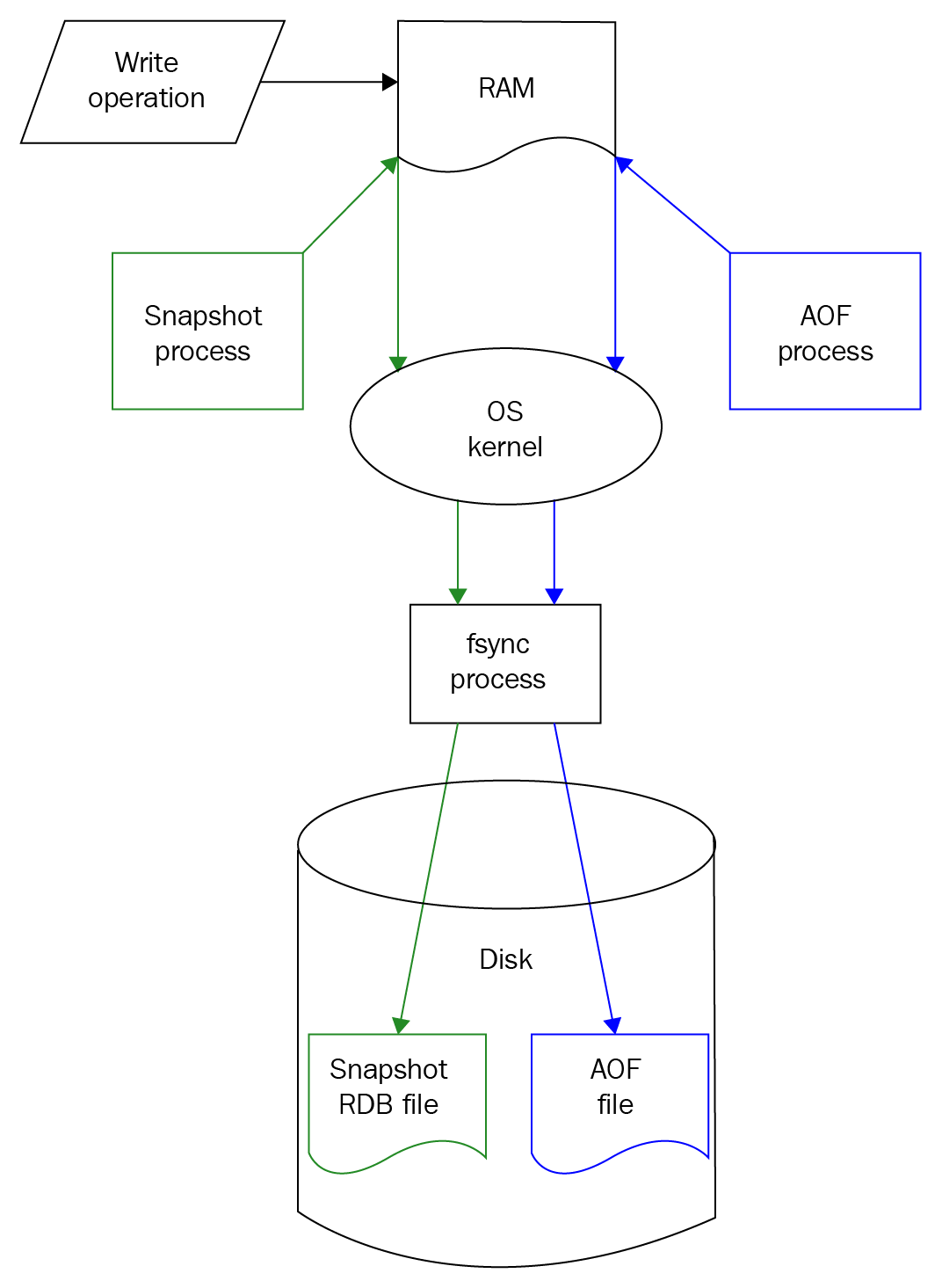

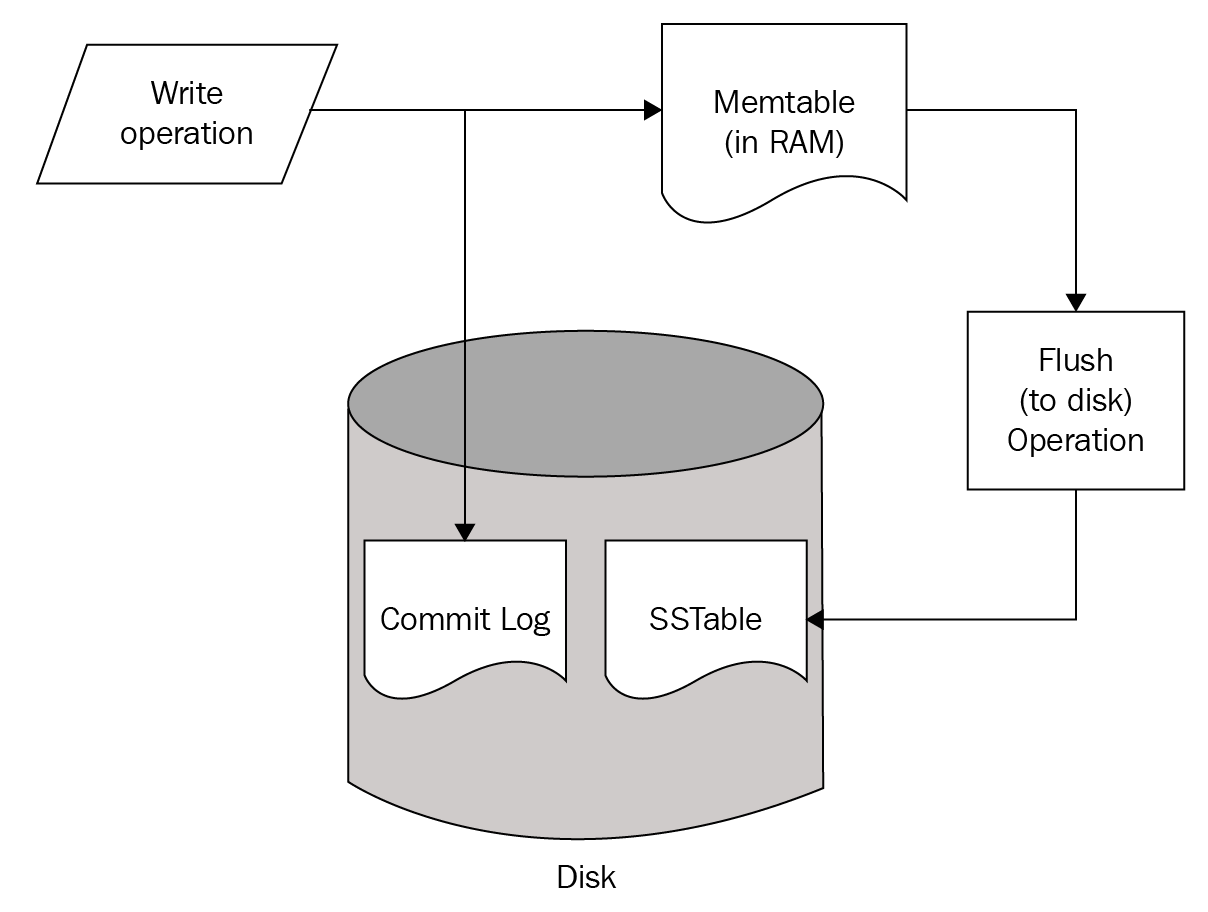

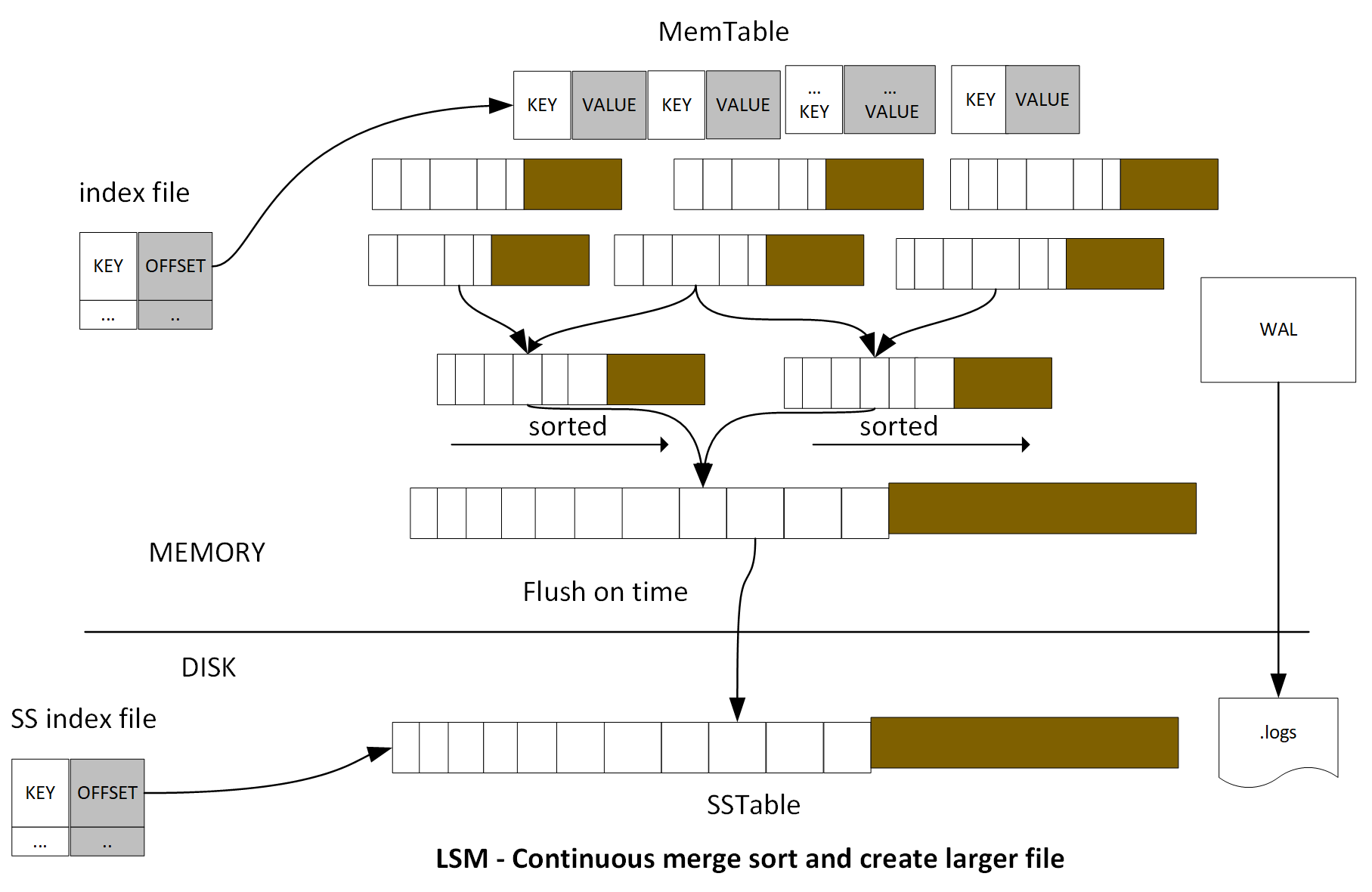

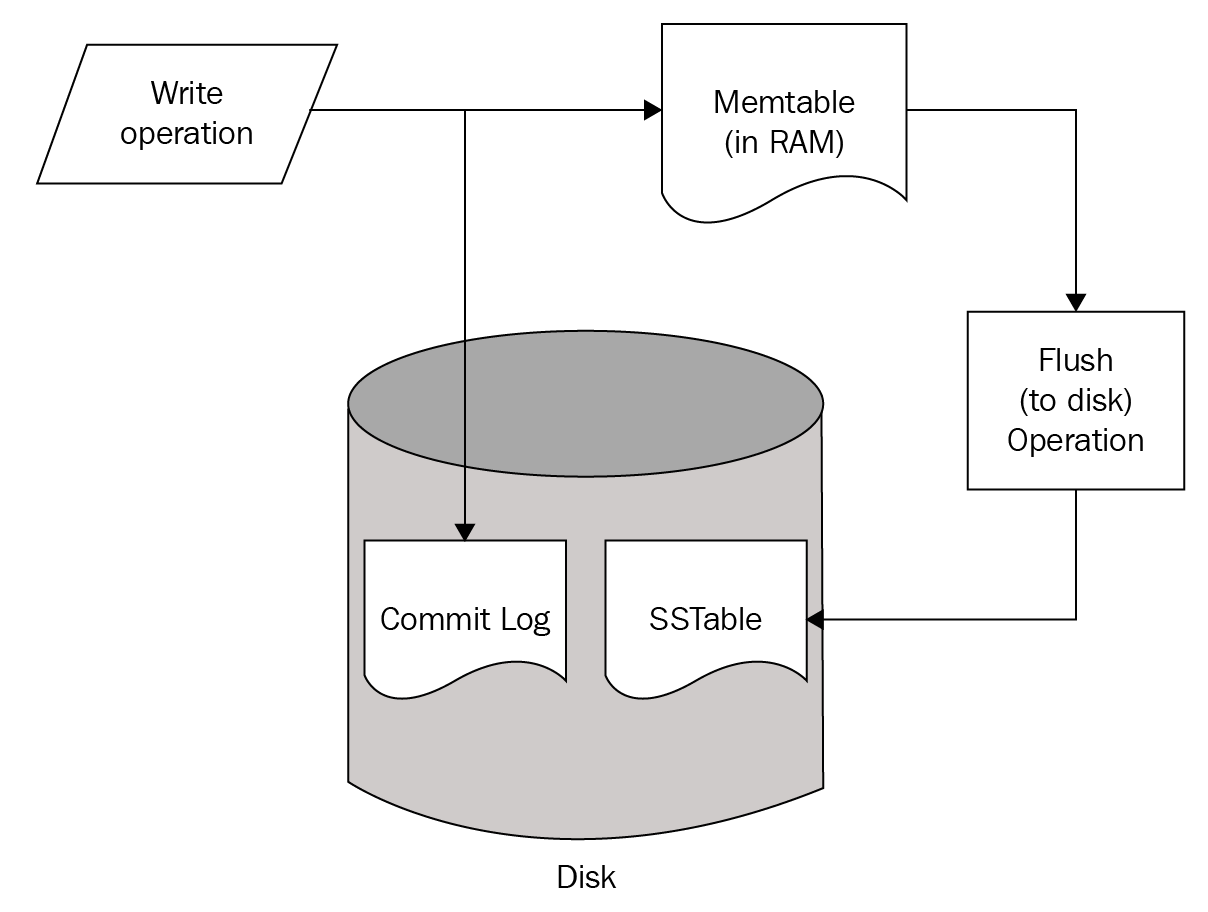

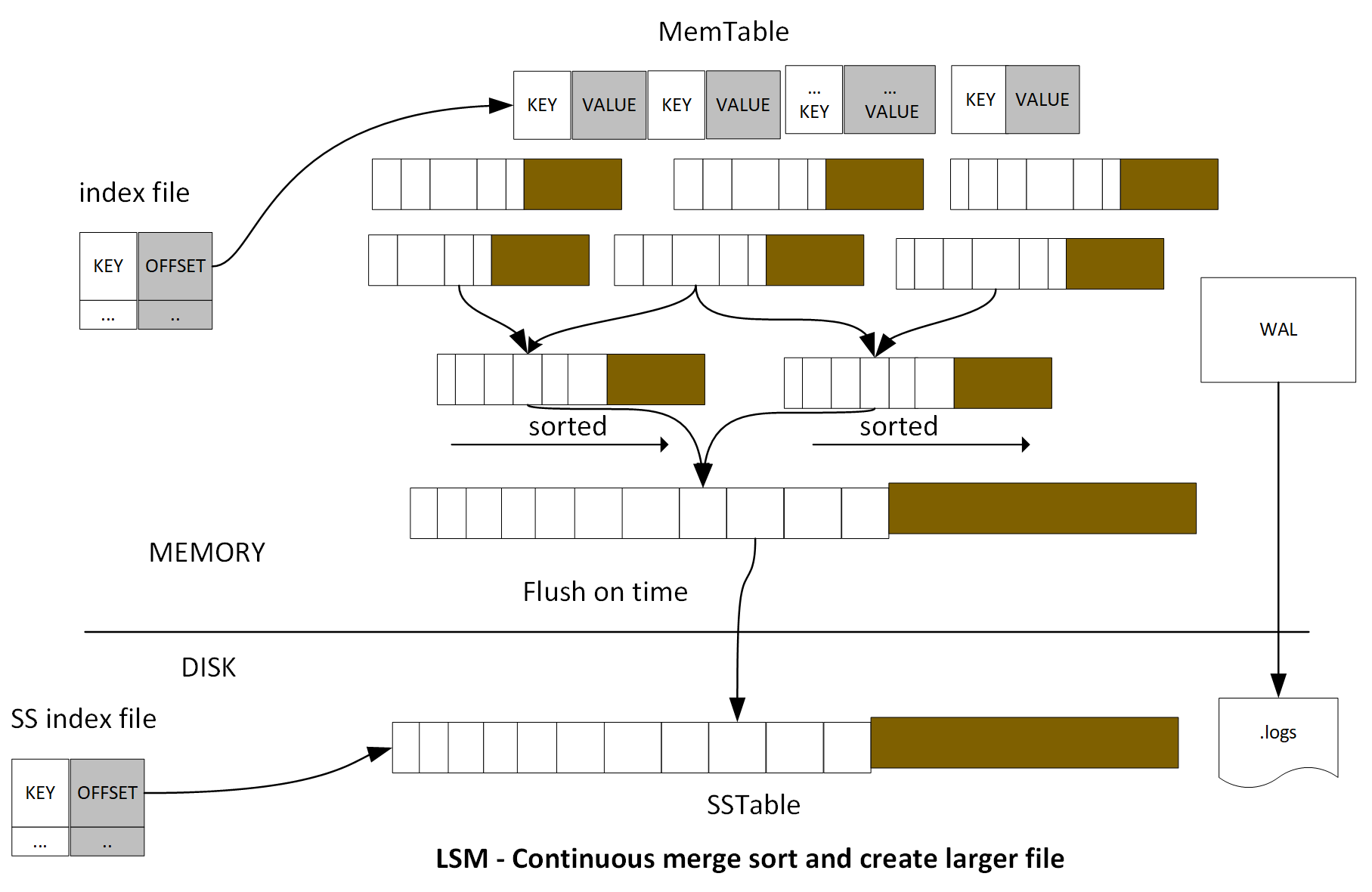

Relational databases, such as MySQL, maintain a variety of structures in both the memory and disk, where writes from in-flight transactions and writes from completed transactions are persisted. Once the transaction has been committed, the physical record on disk for a given key is updated to reflect that. On the other hand, many NoSQL databases, such as HBase and Cassandra, are variants of what is called a log-structured merge (LSM) database.

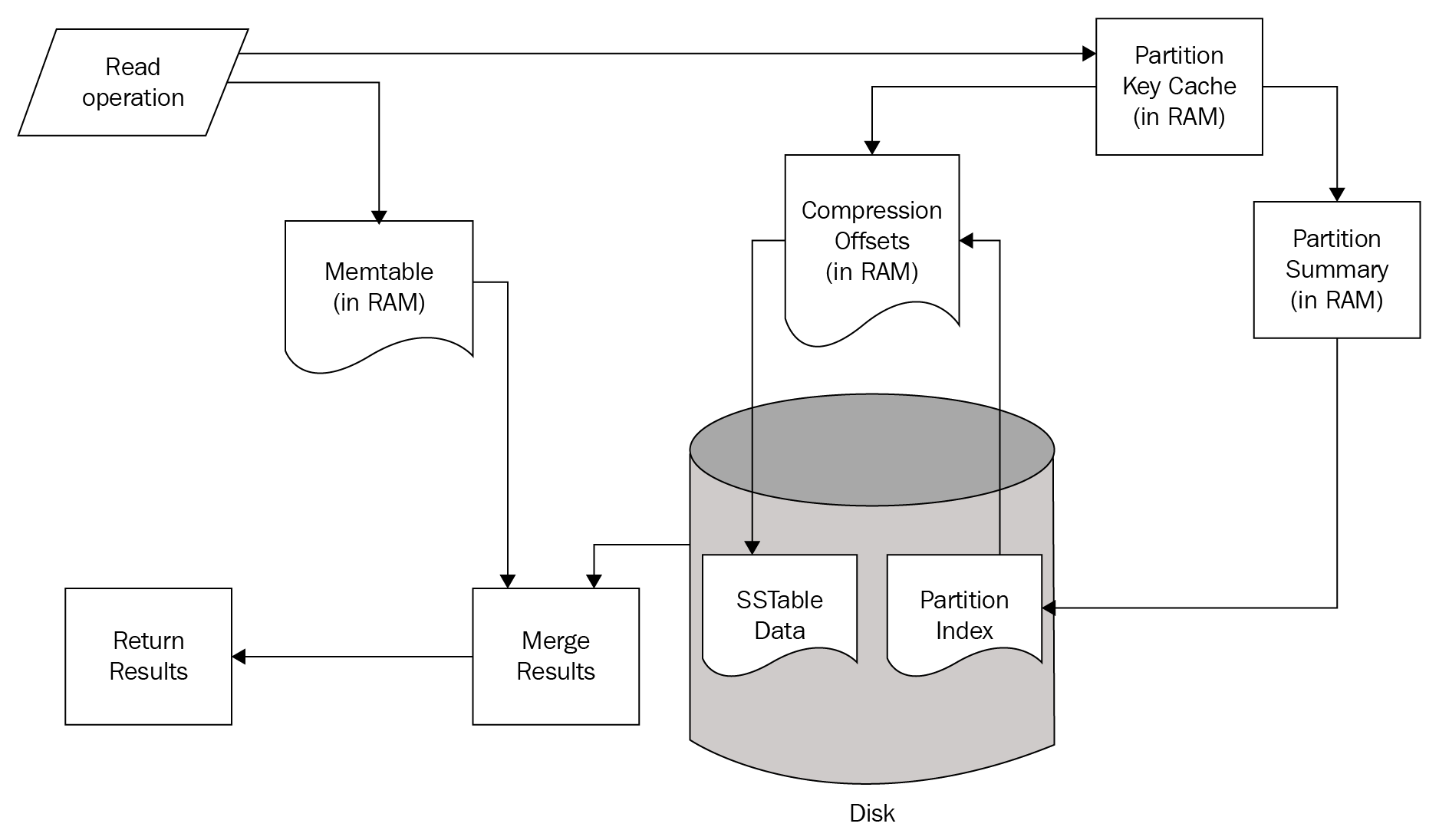

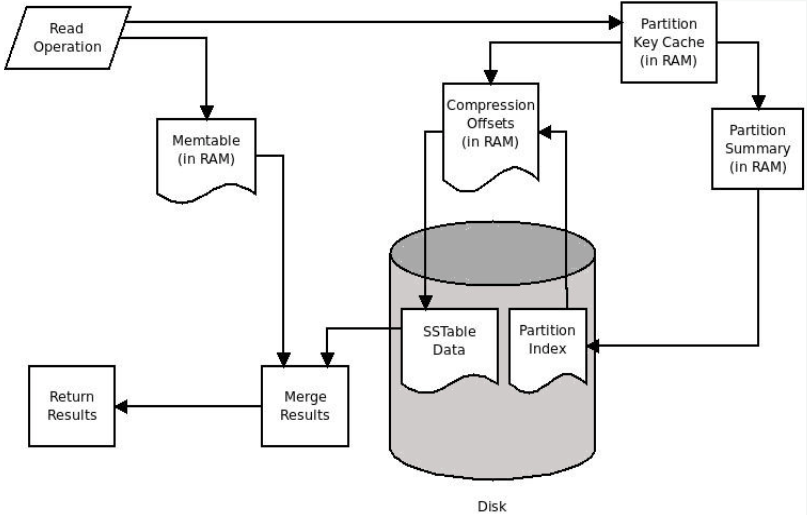

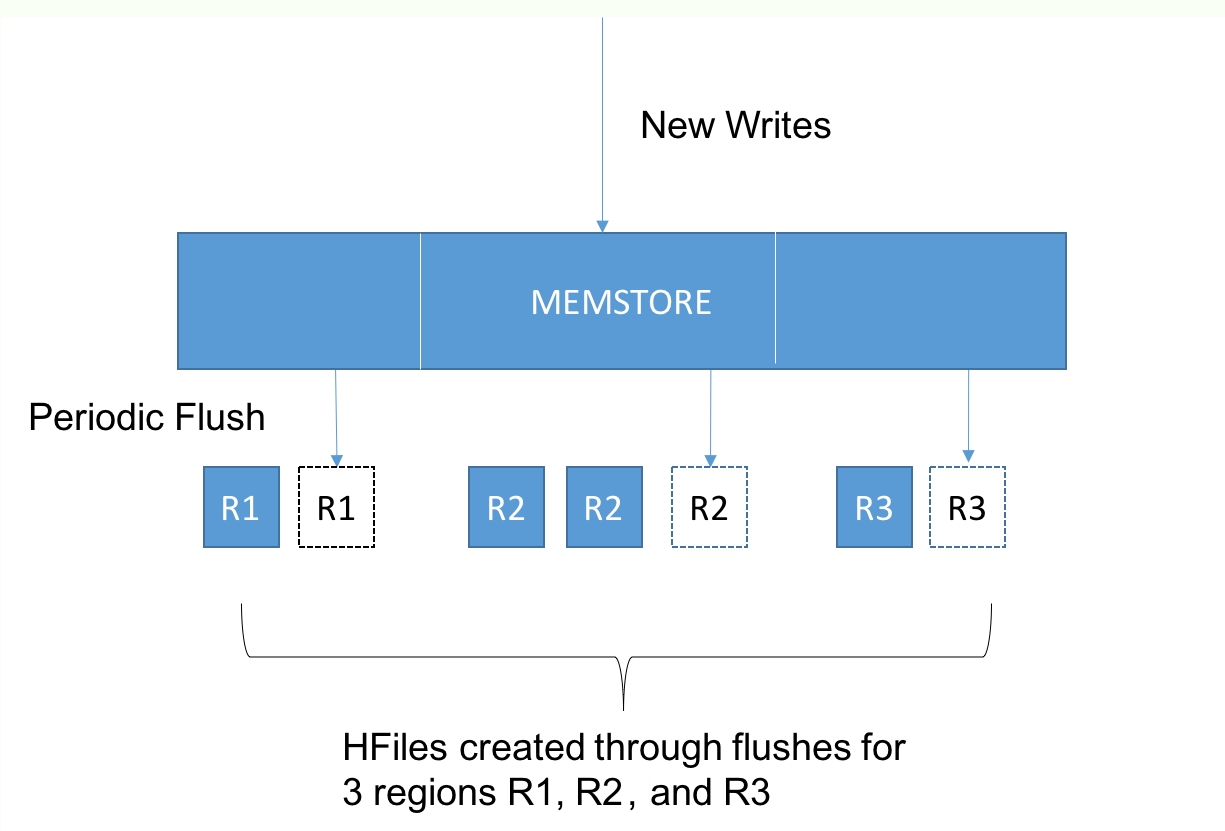

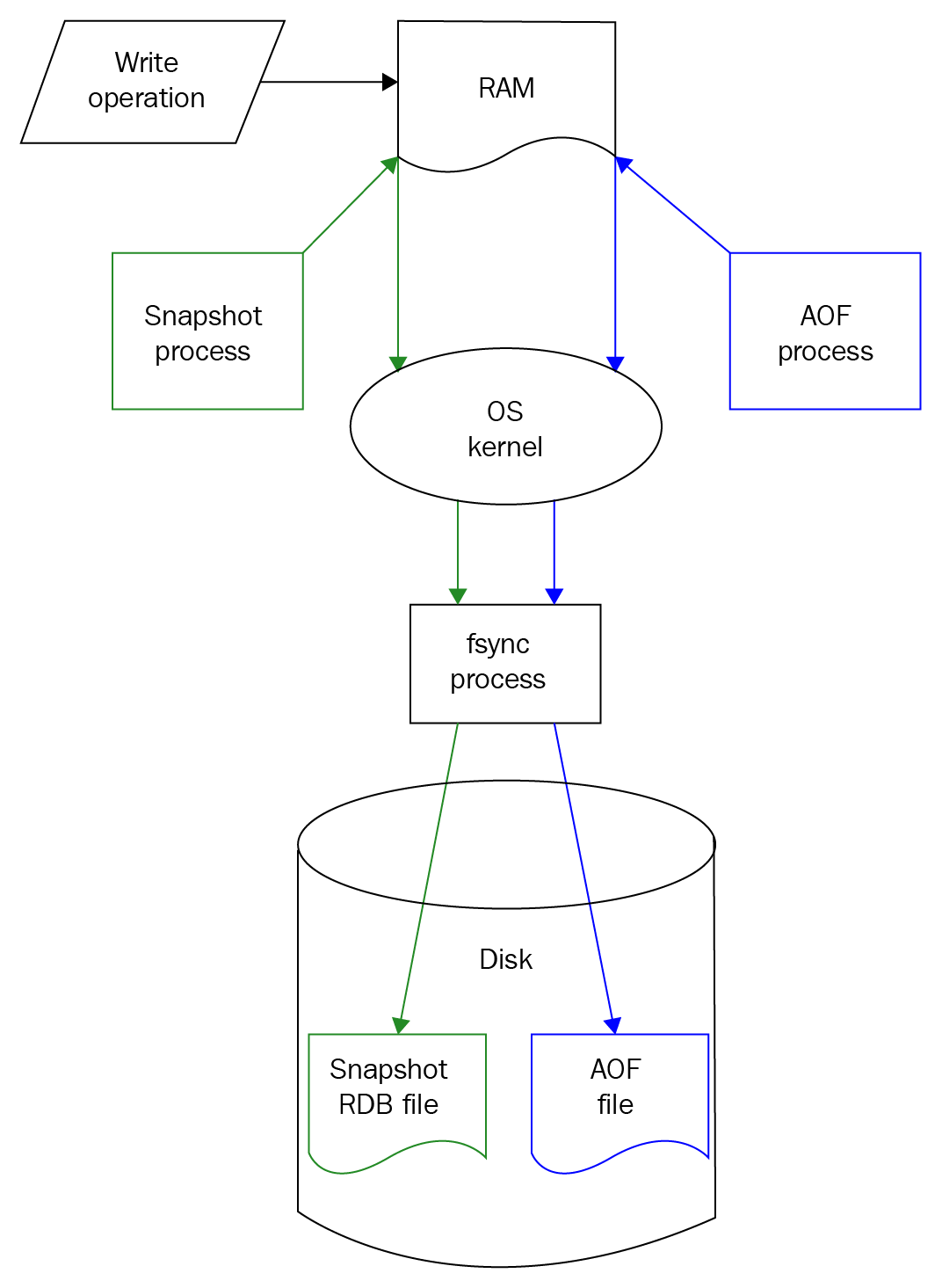

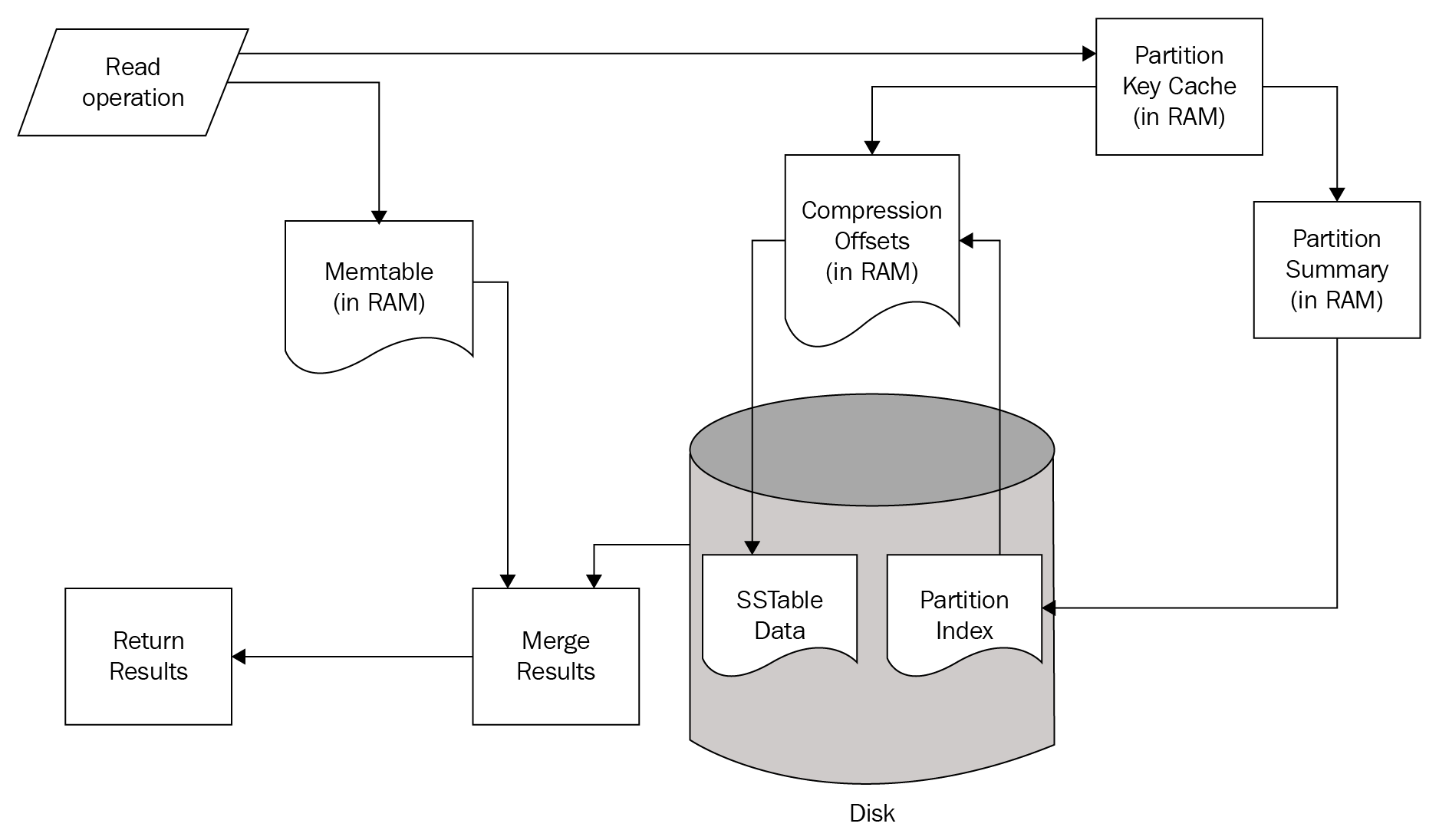

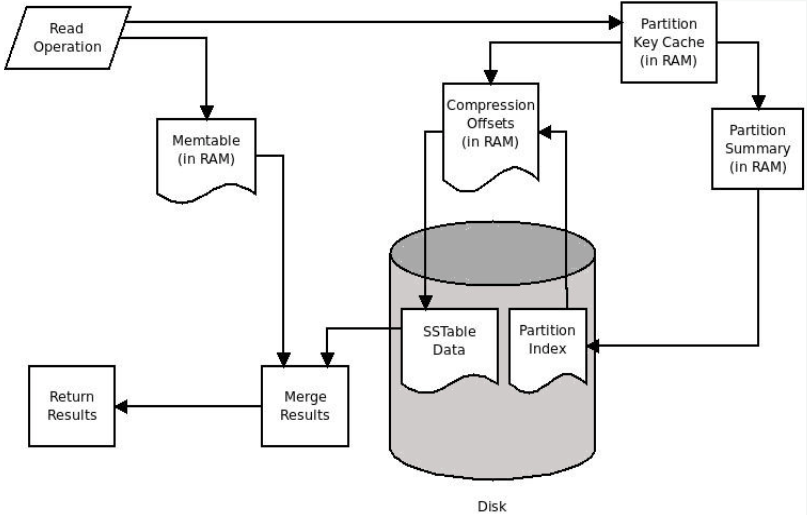

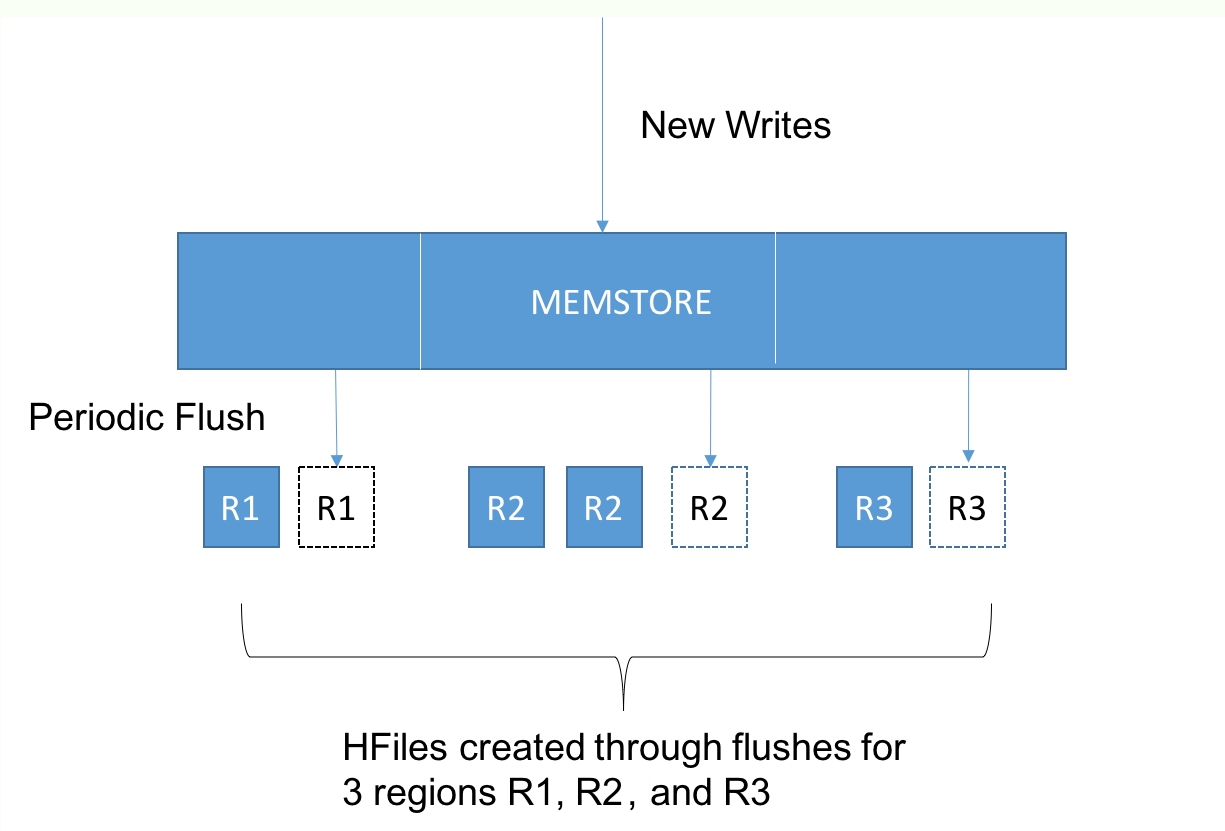

In such an LSM database, updates aren't applied to the record at transaction commit. Instead, updates are applied in memory. Once the memory structure gets full, the contents of the memory are flushed to the disk. This means that updates to a single record can be fragmented and located within separate flush files that are created over time. This means that when there is a read for that record, you need to read in fragments of the record from the different flush files and merge the fragments in reverse time order in order to construct the latest snapshot of the given record. We will discuss the mechanics of how an LSM database works in the Chapter 6, HBase.

When you have a logical table with a bunch of rows and columns, there are multiple ways in which they can be stored physically on a disk.

You can store the contents of entire rows together so that all of the columns of a given row would be stored together. This works really well if the access pattern accesses a lot of the columns for a given set of rows. MySQL uses such a row-oriented storage model.

On the other hand, you could store the contents of entire columns together. In this scheme, all of the values from all of the rows for a given column can be stored together. This is really optimized for analytic use cases where you might need to scan through the entire table for a small set of columns. Storing data as column vectors allows for better compression (since there is less entropy between values within a column than there is between the values across a column). Also, these column vectors can be retrieved from a disk and processed quickly in a vectorized fashion through the SIMD capabilities of modern processors. SIMD processing on column vectors can approach throughputs of a billion data points/sec on a personal laptop.

Hybrid schemes are possible as well. Rather than storing an entire column vector together, it is possible to first break up all of the rows in a table into distinct row groups, and then, within a row group, you could store all of the column vectors together. Parquet and ORC use such a data placement strategy.

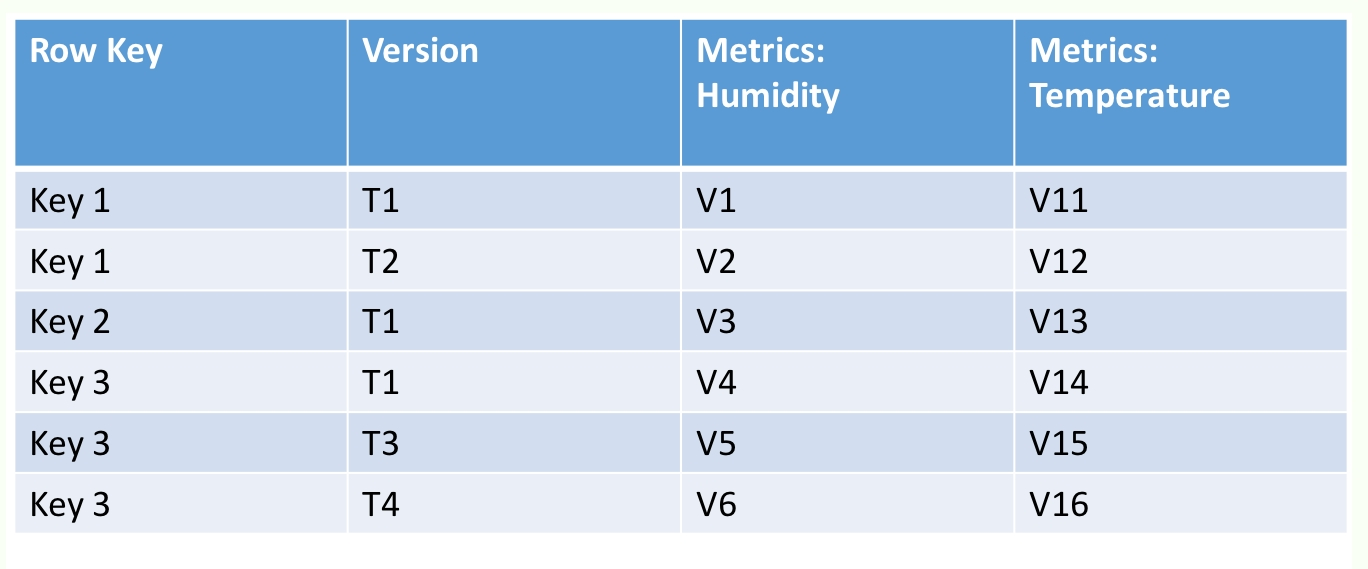

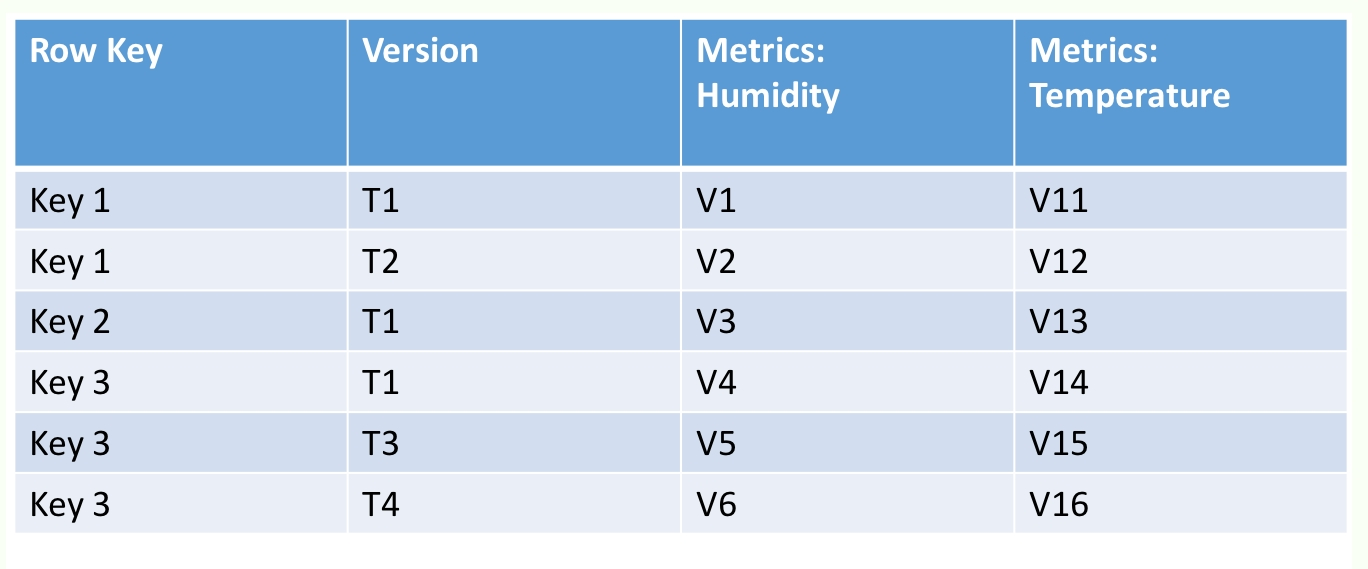

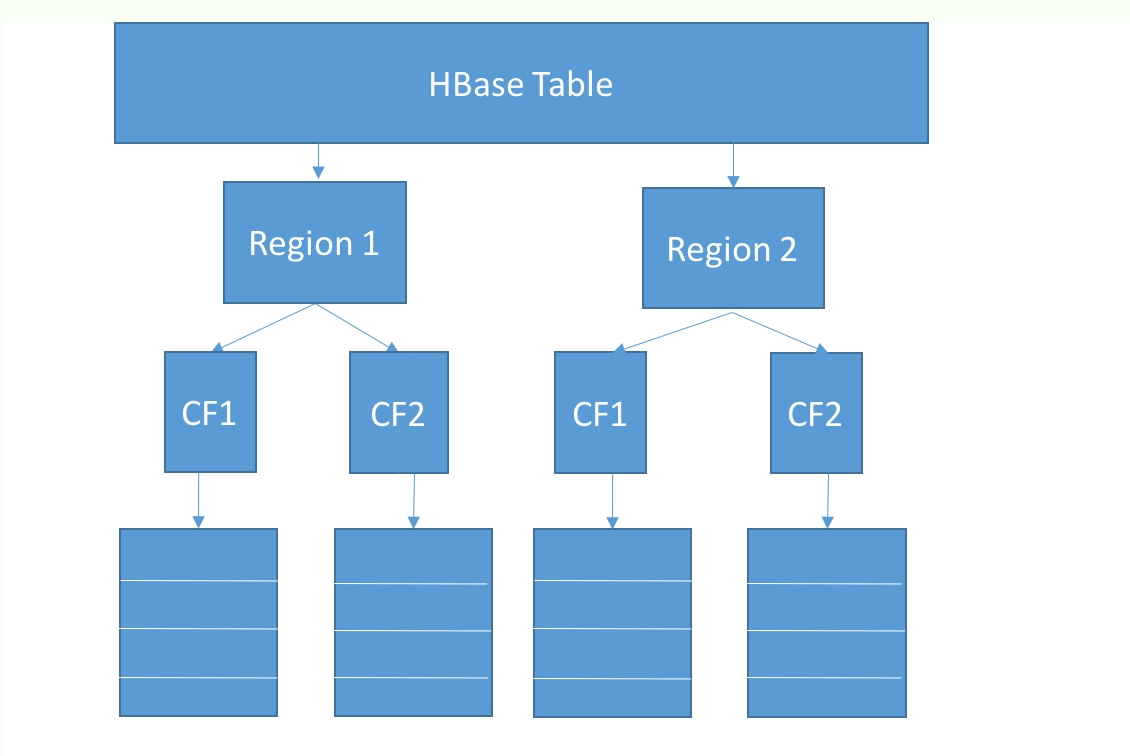

Another variant is that data is stored row-wise, but the rows are divided into row groups such that a row group is assigned to a shard. Within a row group, groups of columns that are often queried together, called column families, are then stored physically together on the disk. This storage model is used by HBase and is discussed in more detail in Chapter 6, HBase.

Databases can decide up-front how prescriptive they want to be about specifying a schema for the data.

When NoSQL databases came to the fore a decade ago, a key point was that they didn't require a schema. The schema could be encoded and enforced in the application rather than in the database. It was thought that schemas were a hindrance in dealing with all of the semi structured data that was getting produced in modern enterprise. So because the early NoSQL systems didn't have a type system, they didn't enforce the standard that all rows in the table have the same structure, they didn't enforce a whole lot.

However, today, most of these NoSQL databases have acquired an SQL interface. Most of them have acquired a rich type system. One of the reasons for this has been the realization that SQL is widely known and reduces the on-board friction in working with a new database. Getting started is easier with an SQL interface than it is with an obscure key-value API. More importantly, having a type system frees application developers from having to remember how a particular value was encoded and to decode it appropriately.

Hence, Cassandra deprecated the Thrift API and made CQL the default. HBase still doesn't support SQL access natively, but use of HBase is increasingly pivoting towards SQL interfaces over HBase, such as Phoenix.

In this chapter, we introduced the notion of a NoSQL database and considered some of the principles that go into the design of such a database. We now understand that there are many trade-offs to be considered in database design based on the specific use cases and types of workloads the database is being designed for. In the following chapters, we are going to be looking in detail at seven popular NoSQL databases. We will look at their architecture, data, and query models, as well as some practical tips on how you can get started using these databases, if they are a fit for your use case.

MongoDB is an open source, document-oriented, and cross-platform database. It is primarily written in C++. It is also the leading NoSQL database and tied with the SQL database in fifth position after PostgreSQL. It provides high performance, high availability, and easy scalability. MongoDB uses JSON-like documents with schema. MongoDB, developed by MongoDB Inc., is free to use. It is published under a combination of the GNU Affero General Public License and the Apache License.

Let's go through the MongoDB features:

You can download the latest version of MongoDB here: https://www.mongodb.com/download-center#community. Follow the setup instructions to install it.

Once MongoDB is installed on your Windows PC, you have to create the following directory:

Data directory C:\data\db

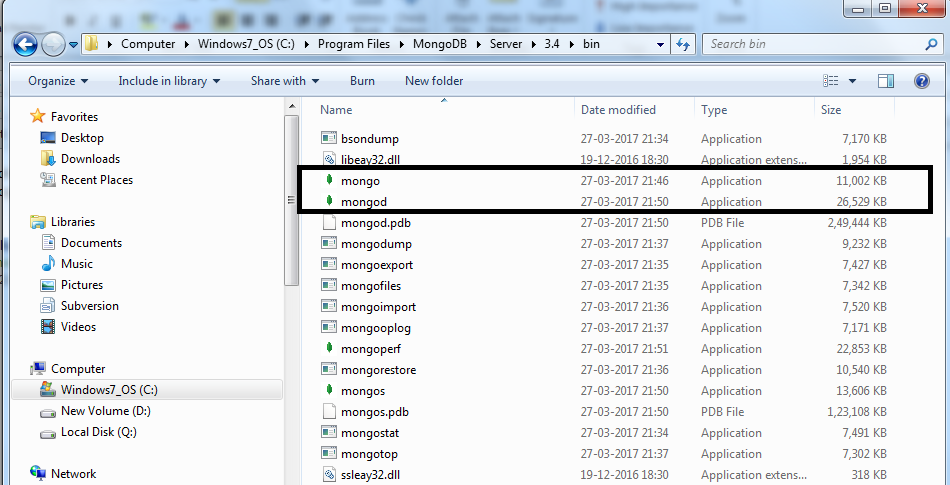

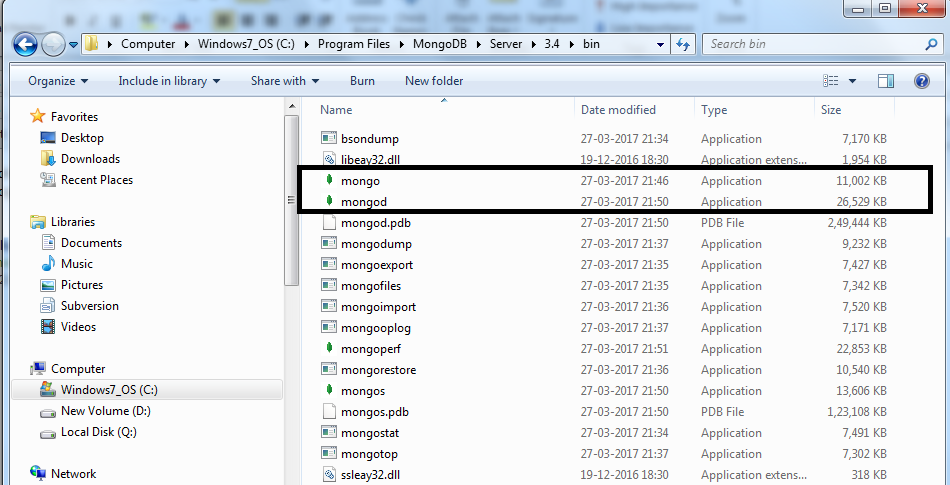

Once you have successfully installed MongoDB, you will be able to see the following executable:

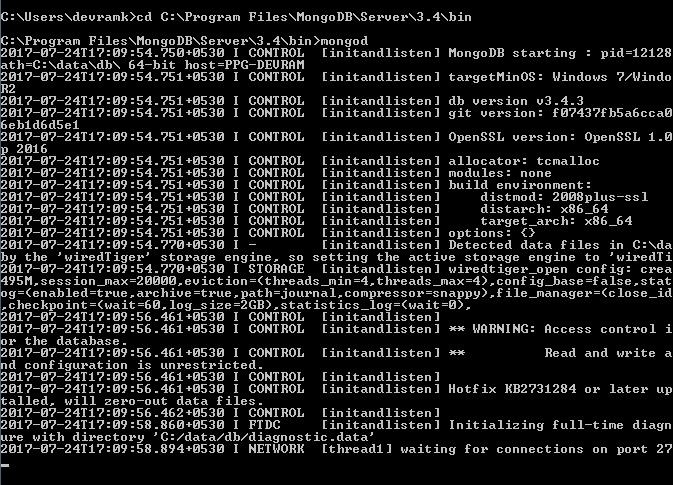

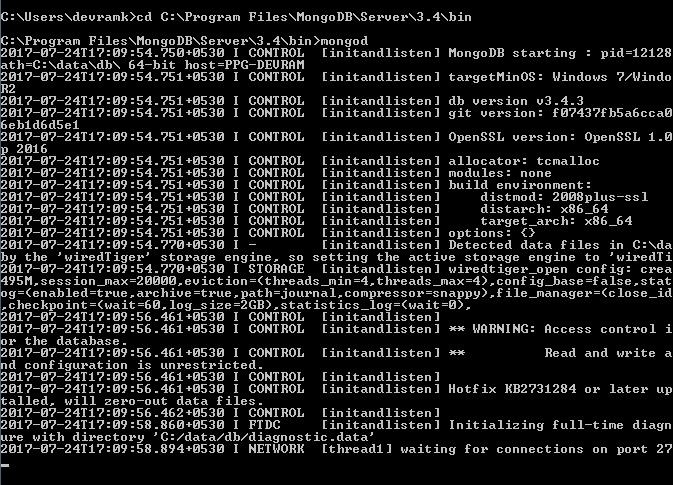

We have to start the mongod instances to begin working with MongoDB. To start the mongod instance, execute it from the command prompt, as shown in the following screenshot:

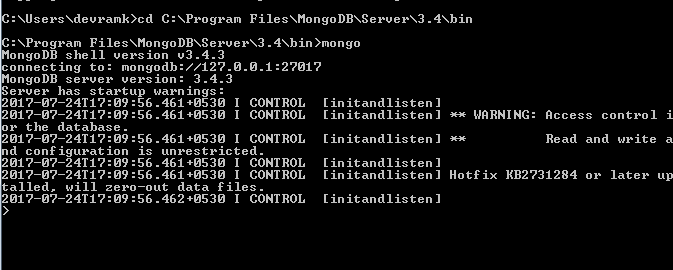

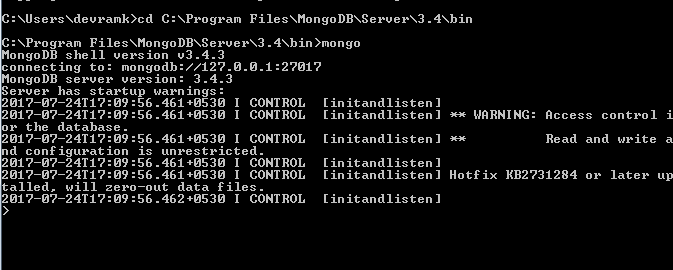

Once mongod has started, we have to connect this instance using the mongo client with the mongo executable:

Once we are connected to the database, we can start working on the database operations.

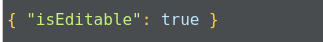

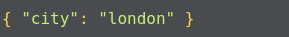

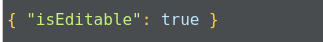

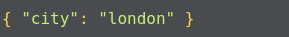

Documents in MongoDB are JSON-like objects. JSON is a simple representation of data. It supports the following data types:

Data is stored in a database in the form of collections. It is a container for collection, just like in SQL databases where the database is a container for tables.

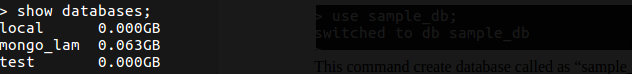

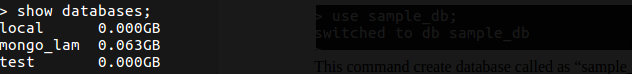

To create a database in MongoDB, we use the following command:

This command creates a database called sample_db, which can be used as a container for storing collections.

The default database for mongo is test. If we do not specify a database before storing our collection, MongoDB will store the collection in the test database.

Each database has its own set of files on the filesystem. A MongoDB server can have multiple databases. We can see the list of all the databases using the following command:

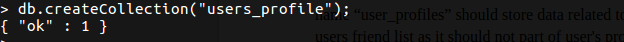

The collection is a container for MongoDB documents. It is equivalent to SQL tables, which store the data in rows. The collection should only store related documents. For example, the user_profiles collection should only store data related to user profiles. It should not contain a user's friend list as this should not be a part of a user's profile; instead, this should fall under the users_friend collection.

To create a new collection, you can use the following command:

Here, db represents the database in which we are storing a collection and users_profile is the new collection we are creating.

Documents in a collection should have a similar or related purpose. A database cannot have multiple collections with the same name, they are unique in the given database.

Collections do not force the user to define a schema and are thus known as schemaless. Documents within the collection have different fields. For example, in one document, we can have user_address, but in another document, it is not mandatory to have the user_address field.

This is suitable for an agile approach.

Data in MongoDB is actually stored in the form of documents. The document is a collection of key-value pairs. The key is also known as an attribute.

Documents have a dynamic schema and documents in the same collection may vary in field set.

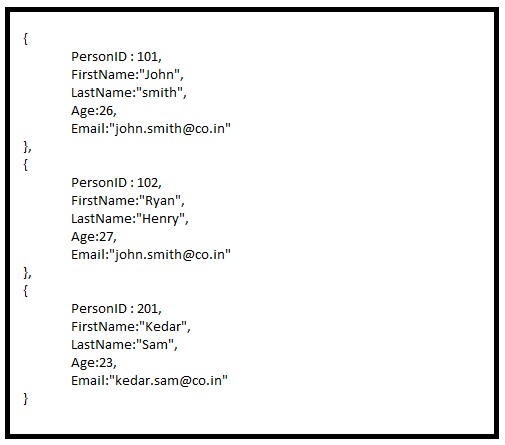

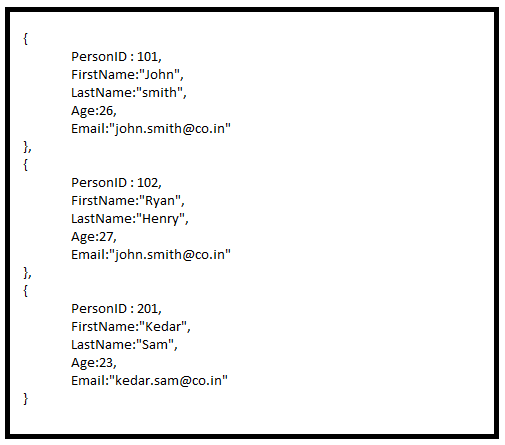

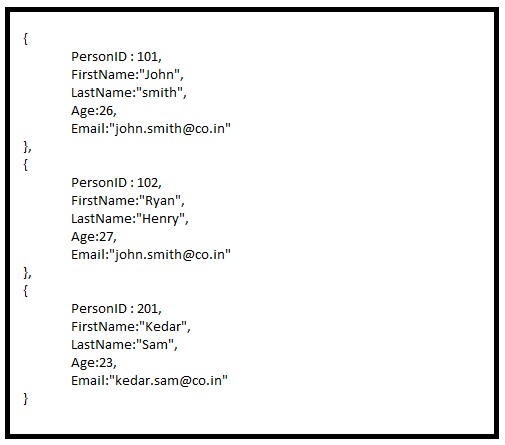

Here is the structure of a MongoDB document:

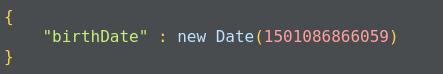

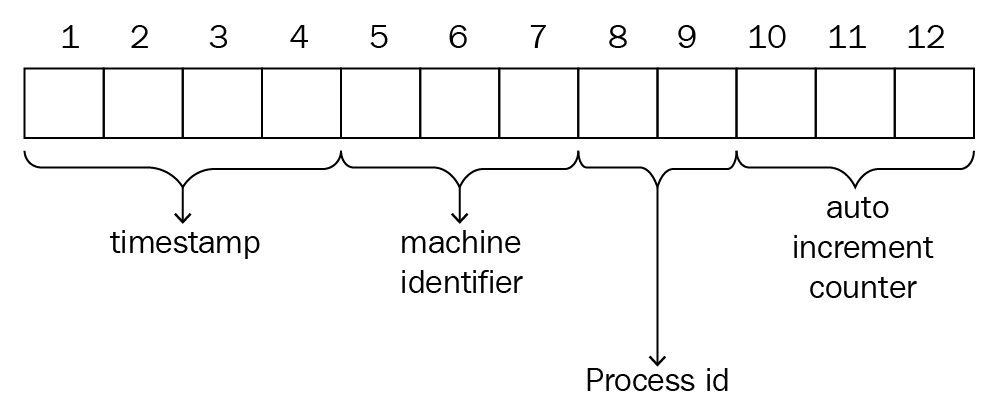

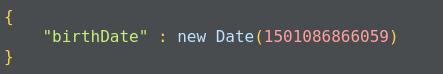

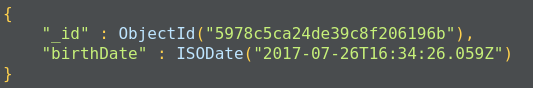

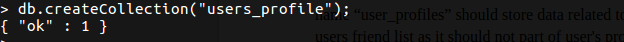

MongoDB documents have a special field called _id._id is a 12-byte hexadecimal number that ensures the uniqueness of the document. It is generated by MongoDB if not provided by the developer.

Of these 12 bytes, the first 4 bytes represent the current time stamp, the next 3 bytes represent the machine ID, the next 2 bytes represent the process ID on the MongoDB server, and the remaining 3 bytes are simple auto-increment values, as shown in the following diagram:

_id also represents the primary key for MongoDB documents.

Let's look at a quick comparison between SQL and MongoDB:

|

SQL |

MongoDB |

|

Database |

Database |

|

Table |

Collection |

|

Row |

Document |

|

Column |

Field |

Here are some of MongoDB's advantages over RDBMS:

The following are the uses of MongoDB:

The applications of MongoDB are:

The limitations of MongoDB are:

Now we will go through the MongoDB CRUD operations.

The create operation inserts the new document into the collection. If a collection does not exist, MongoDB will create a new collection and insert a document in it. MongoDB provides the following methods to insert a document into the database:

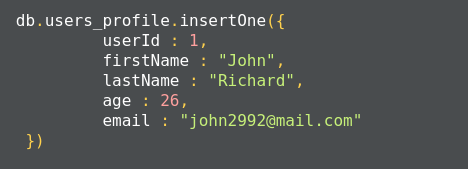

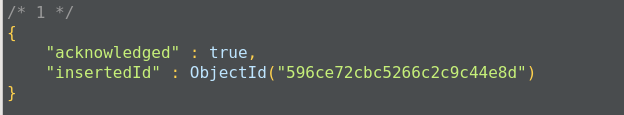

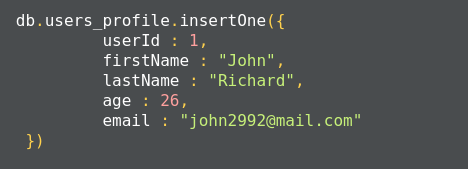

The MongoDB insert operation will target single collections. Also, mongo preserves atomicity at the document level:

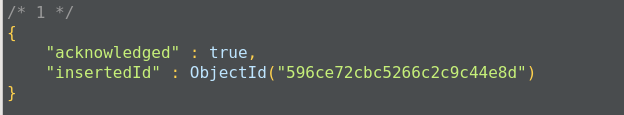

This method returns a document that contains the newly added documents _id value:

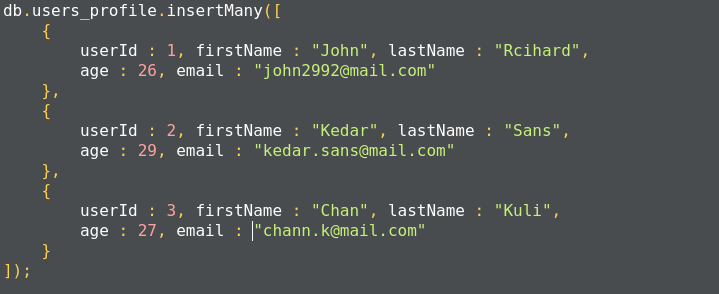

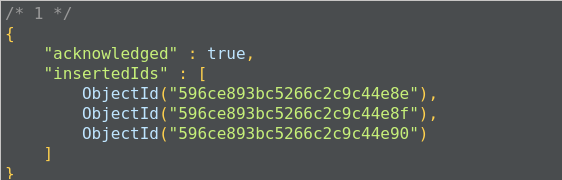

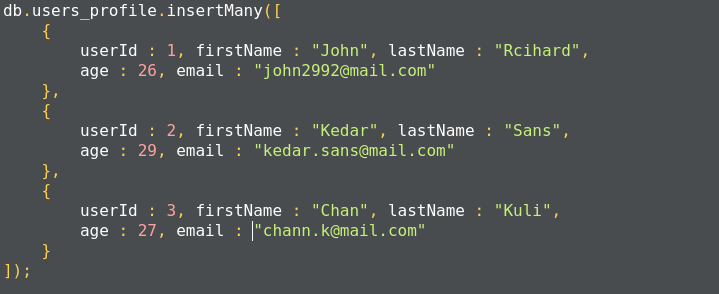

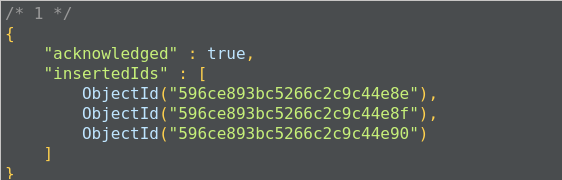

The method insertMany(), can insert multiple documents into the collection at a time. We have to pass an array of documents to the method:

In MongoDB, each document requires an _id field that uniquely identifies the document that acts as a primary key. If a user does not insert the _id field during the insert operation, MongoDB will automatically generate and insert an ID for each document:

The following is the list of methods that can also be used to insert documents into the collection:

The read operation retrieves documents or data from documents in the collection. To retrieve all documents from a given collection, pass an empty document as a filter. We have to pass the query filter parameter in order to apply our criteria for the selection of documents:

The preceding code returns all the documents in the given collection:

This operation will return all the documents from the user_profiles collection. It is also equivalent to the following SQL operation, where user_profiles is the table, and the query will return all the rows from the table:

SELECT * FROM user_profiles;

To apply equal conditions, we use the following filter expression:

<field>:<value>

For example, to select the user profile with John as the user's first name, we have to apply the query as:

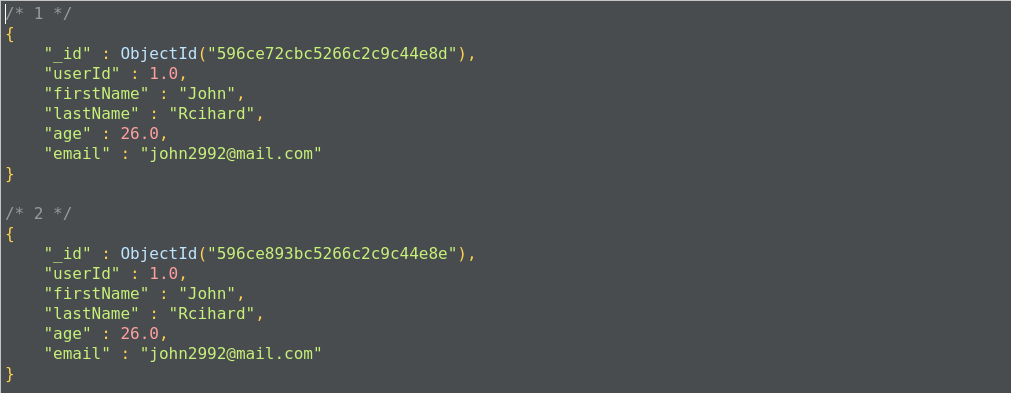

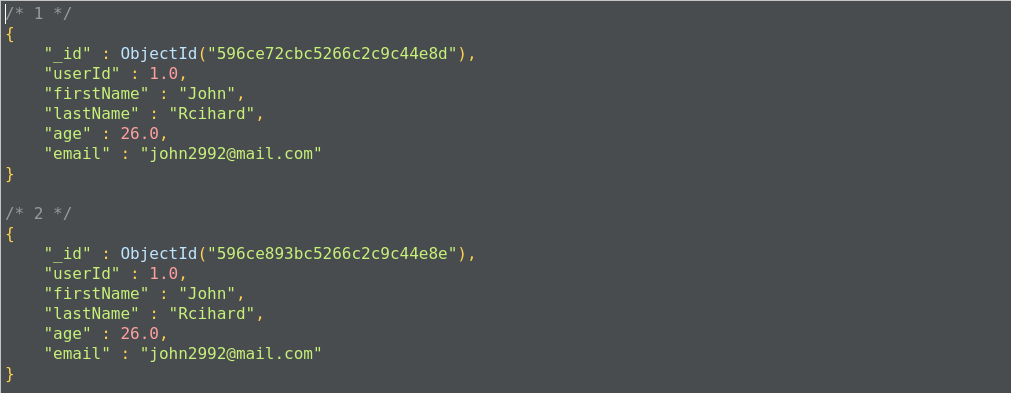

This gives us all the documents where we have the firstName as John:

This query will return all the documents where the first name is John. This operation is equivalent to the following SQL operation:

SELECT * FROM user_profiles WHERE firstName='John';

We can apply conditional parameters while retrieving data from collections, such as IN, AND, and OR with less than and greater than conditions:

{<field 1> : {<operator 1> : <value 1>},....}

The following query will return all the documents from user_profiles where the first name is John or Kedar:

Here, we get all the documents with a firstName of either John or Kedar:

The preceding operation corresponds to the following SQL operation query:

SELECT * FROM user_profiles WHERE firstName in('John', 'Kedar');

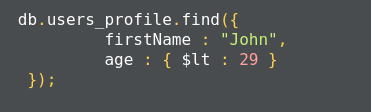

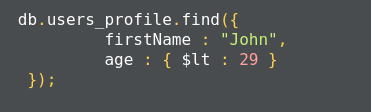

For example, the following query returns the result where the firstname matches John and the age of the user is less than 29:

This corresponds to the following SQL query:

SELECT * FROM user_profiles WHERE firstName='John' AND age<30;

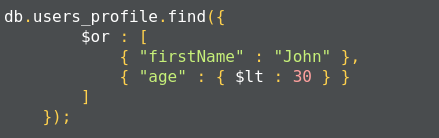

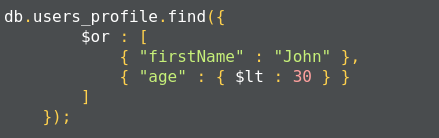

This corresponds to the following SQL query:

SELECT * FROM user_profiles WHERE firstName='John' or age<30;

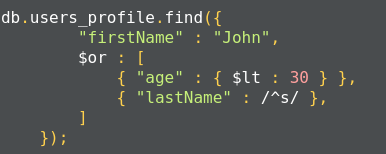

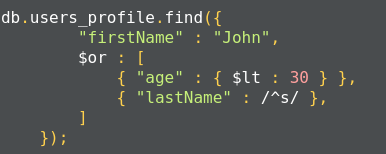

This operation corresponds to the following SQL operation:

SELECT * FROM user_profiles WHERE firstName='John'

AND age<30 OR lastName like 's%';

MongoDB also supports $regex to perform string-pattern matching. Here is a list of the comparison query operators:

|

Operator |

Description |

|

$eq |

Matches values that equals a specified value |

|

$gt |

Matches values that are greater than a specified value |

|

$gte |

Matches values that are greater than or equal to a specified value |

|

$lt |

Matches values that are less than a specified value |

|

$lte |

Matches values that are less than or equal to a specified value |

|

$ne |

Matches values that are not equal to a specified value |

|

$in |

Matches values that are specified in a set of values |

|

$nin |

Matches values that are not specified in a set of values |

Here is a list of logical operators:

|

Operator |

Description |

|

$or |

Joins query clauses with the OR operator and returns all the documents that match any condition of the clause. |

|

$and |

Joins query clauses with the AND operator and returns documents that match all the conditions of all the clauses. |

|

$not |

Inverts the effects of the query expression and returns all the documents that do not match the given criteria. |

|

$nor |

Joins query clauses with the logical NOR operator and returns all the documents that match the criteria specified in the clauses. |

MongoDB also uses the findOne method to retrieve documents from the mongo collection. It internally calls the find method with a limit of 1. findOne matches all the documents with the filter criteria and returns the first document from the result set.

The following is a list of methods MongoDB uses to update the document information:

* db.collection.updateOne(<filter>, <update>, <options>);

* db.collection.updateMany(<filter>, <update>, <options>);

* db.collection.replaceOne(<filter>, <update>, <options>);

If the update operation increases the size of the document while updating, the update operation relocates the document on the disk:

Here, the query uses the $set operator to update the value of the age field, where the userId matches 1:

The following example updates the age of all users between the ages of 30 to 35:

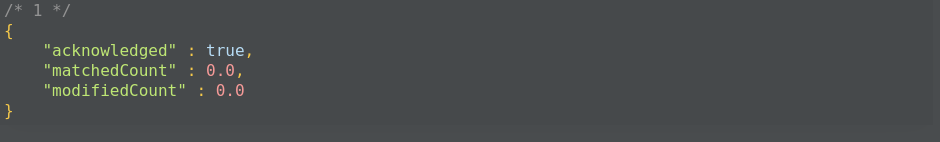

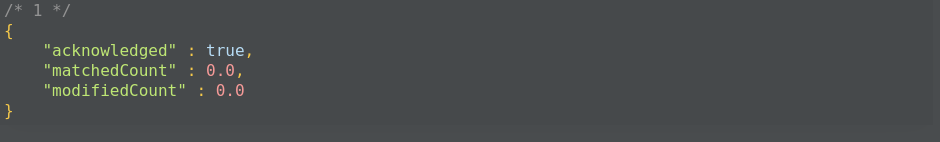

The output gives us acknowledgement of how many documents have been updated:

The following are additional methods that can be used for the update operation:

MongoDB provides the following methods to remove documents from the collection:

The db.collection.deleteMany() method is used to delete all the documents that match the given criteria. If you want to delete all the documents, you can pass an empty document criterion:

This query will delete all the documents contained in the user_profiles collection.

To delete the single most document which matched the given criteria, we use the db.collection.deleteOne() method. The following query deletes the record where the userId is equal to 1:

The following methods can also delete documents from the collection:

The MongoDB collection does not enforce structure on the document. This allows the document to map to objects or entities easily. Each document can match a data field of the entity. In practice, documents in collections share the same structure.

When deciding on data modeling, we have to consider the requirements of the application, the performance characteristics of the database's design, and data retrieval patterns. When designing data models, we have to focus on the application's usage of data and the inherent structure of the data.

While deciding the data model, we have to consider the structure of the document and how documents relate to each other. There are two key data models that show these relationships:

In this model, the relationship is maintained using links between documents. References from one document is stored in another document. This process is also called normalization; it establishes the relationship between different collections and defines collections for a more specific purpose:

We use the normalized data approach in the following scenarios:

In this document model, the relationships between data are maintained by storing data in a single document. Here, we do not create a separate document to define a relationship. We can embed the document structure in a field or array within the document. These documents are denormalized. This allows us to retrieve data in a single operation, but it unnecessarily increases the size of the document.

The embedded document structure allows us to store related pieces of data in the same document. This also allows us to update a single document without worrying about data consistency.

The embedded document structure is used in two cases:

The embedded structure provides better performance for read operations, requests and retrieves related data in a single database operation, and updates the data in a single atomic operation. But this approach can lead to an increase in the size of the document, and MongoDB will store such documents in a fragment, which leads to poor write performance.

Modeling the application data for MongoDB depends on the data as well as the characteristics of MongoDB itself. When creating data models for applications, analyze all of the read and write operations with the following operations and MongoDB features:

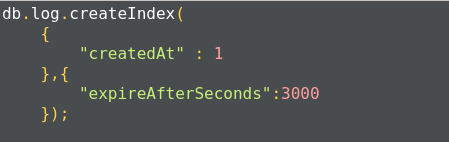

Indexes allow efficient execution of MongoDB queries. If we don't have indexes, MongoDB has to scan all the documents in the collection to select those documents that match the criteria. If proper indexing is used, MongoDB can limit the scanning of documents and select documents efficiently. Indexes are a special data structure that store some field values of documents in an easy-to-traverse way.

Indexes store the values of specific fields or sets of fields, ordered by the values of fields. The ordering of field values allows us to apply effective algorithms of traversing, such as the mid-search algorithm, and also supports range-based operations effectively. In addition, MongoDB can return sorted results easily.

Indexes in MongoDB are the same as indexes in other database systems. MongoDB defines indexes at the collection level and supports indexes on fields and sub-fields of documents.

MongoDB creates the default _id index when creating a document. The _id index prevents users from inserting two documents with the same _id value. You cannot drop an index on an _id field.

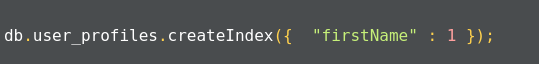

The following syntax is used to create an index in MongoDB:

>db.collection.createIndex(<key and index type specification>, <options>);

The preceding method creates an index only if an index with the same specification does not exist. MongoDB indexes use the B-tree data structure.

The following are the different types of indexes:

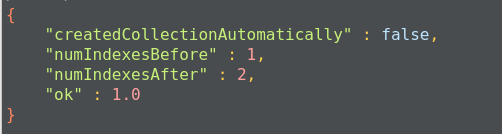

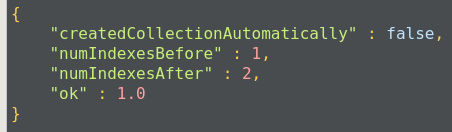

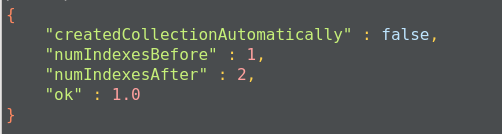

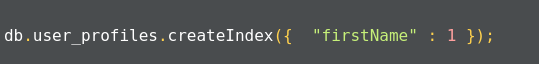

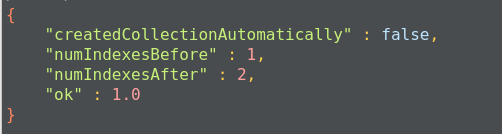

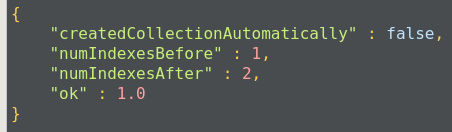

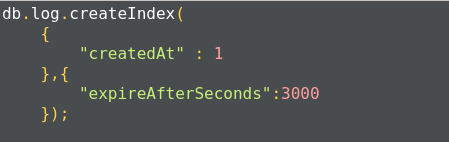

The query gives acknowledgment after creating the index:

This will create an ascending index on the firstName field. To create a descending index, we have to provide -1 instead of 1.

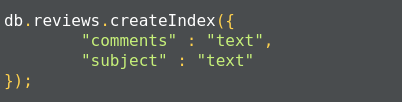

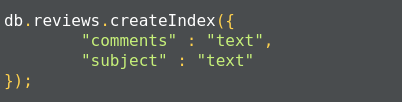

You can also create text indexes on multiple fields, for example:

Once the index is created, we get an acknowledgment:

Compound indexes can be used with text indexes to define an ascending or descending order of the index.

Indexes have the following properties:

Once the index is created, we get an acknowledgment message:

The limitations of indexes:

The limitation on data:

A replica set is a group of MongoDB instances that store the same set of data. Replicas are basically used in production to ensure a high availability of data.

Redundancy and data availability: because of replication, we have redundant data across the MongoDB instances. We are using replication to provide a high availability of data to the application. If one instance of MongoDB is unavailable, we can serve data from another instance. Replication also increases the read capacity of applications as reading operations can be sent to different servers and retrieve data faster. By maintaining data on different servers, we can increase the locality of data and increase the availability of data for distributed applications. We can use the replica copy for backup, reporting, as well as disaster recovery.

A replica set is a group of MongoDB instances that have the same dataset. A replica set has one arbiter node and multiple data-bearing nodes. In data-bearing nodes, one node is considered the primary node while the other nodes are considered the secondary nodes.

All write operations happen at the primary node. Once a write occurs at the primary node, the data is replicated across the secondary nodes internally to make copies of the data available to all nodes and to avoid data inconsistency.

If a primary node is not available for the operation, secondary nodes use election algorithms to select one of their nodes as a primary node.

A special node, called an arbiter node, is added in the replica set. This arbiter node does not store any data. The arbiter is used to maintain a quorum in the replica set by responding to a heartbeat and election request sent by the secondary nodes in replica sets. As an arbiter does not store data, it is a cost-effective resource used in the election process. If votes in the election process are even, the arbiter adds a voice to choose a primary node. The arbiter node is always the arbiter, it will not change its behavior, unlike a primary or secondary node. The primary node can step down and work as secondary node, while secondary nodes can be elected to perform as primary nodes.

Secondary nodes apply read/write operations from a primary node to secondary nodes asynchronously.

Primary nodes always communicate with other members every 10 seconds. If it fails to communicate with the others in 10 seconds, other eligible secondary nodes hold an election to choose a primary-acting node among them. The first secondary node that holds the election and receives the majority of votes is elected as a primary node. If there is an arbiter node, its vote is taken into consideration while choosing primary nodes.

Basically, the read operation happens at the primary node only, but we can specify the read operation to be carried out from secondary nodes also. A read from a secondary node does not affect data at the primary node. Reading from secondary nodes can also give inconsistent data.

Sharding is a methodology to distribute data across multiple machines. Sharding is basically used for deployment with a large dataset and high throughput operations. The single database cannot handle a database with large datasets as it requires larger storage, and bulk query operations can use most of the CPU cycles, which slows down processing. For such scenarios, we need more powerful systems.

One approach is to add more capacity to a single server, such as adding more memory and processing units or adding more RAM on the single server, this is also called vertical scaling. Another approach is to divide a large dataset across multiple systems and serve a data application to query data from multiple servers. This approach is called horizontal scaling. MongoDB handles horizontal scaling through sharding.

MongoDB's sharding consists of the following components:

Here are some of the advantages of sharding:

MongoDB is document-based database, data is stored in JSON and XML documents. MongoDB has a document size limit of 16 MB. If the size of a JSON document exceeds 16 MB, instead of storing data as a single file, MongoDB divides the file into chunks and each chunk is stored as a document in the system. MongoDB creates a chunk of 255 KB to divide files and only the last chuck can have less than 255 KB.

MongoDB uses two collections to work with gridfs. One collection is used to store the chunk data and another collection is used to store the metadata. When you query MongoDB for the operation of the gridfs file, MongoDB uses the metadata collection to perform the query and collect data from different chunks. GridFS stores data in two collections:

In this chapter, we learned about MongoDB, which is one of the most popular NoSQL databases. It is widely used in projects where requirements change frequently and is suitable for agile projects. It is a highly fault-tolerant and robust database.

Some application use cases or data models may place as much (or more) importance on the relationships between entities as the entities themselves. When this is the case, a graph database may be the optimal choice for data storage. In this chapter, we will look at Neo4j, one of the most commonly used graph databases.

Over the course of this chapter, we will discuss several aspects of Neo4j:

Once you have completed this chapter, you will begin to understand the significance of graph databases. You will have worked through installing and configuring Neo4j as you build up your own server. You will have employed simple scripts and code to interact with and utilize Neo4j, allowing you to further explore ideas around modeling interconnected data.

We'll start with a quick introduction to Neo4j. From there, we will move on to the appropriate graph database use cases, and begin to learn more about the types of problems that can be solved with Neo4j.

Neo4j is an open source, distributed data store used to model graph problems. It was released in 2007 and is sponsored by Neo4j, Inc., which also offers enterprise licensing and support for Neo4j. It departs from the traditional nomenclature of database technologies, in which entities are stored in schema-less, entity-like structures called nodes. Nodes are connected to other nodes via relationships or edges. Nodes can also be grouped together with optional structures called labels.

This relationship-centric approach to data modeling is known as the property graph model. Under the property graph model, both nodes and edges can have properties to store values. Neo4j embraces this approach. It is designed to ensure that nodes and edges are stored efficiently, and that nodes can share any number or type of relationships without sacrificing performance.[8]

Neo4j stores nodes, edges, and properties on disk in stores that are specific to each type—for example, nodes are stored in the node store.[5, s.11] They are also stored in two types of caches—the file system (FS) and the node/relationship caches. The FS cache is divided into regions for each type of store, and data is evicted on a least-frequently-used (LFU) policy.

Data is written in transactions assembled from commands and sorted to obtain a predictable update order. Commands are sorted at the time of creation, with the aim of preserving consistency. Writes are added to the transaction log and either marked as committed or rolled back (in the event of a failure). Changes are then applied (in sorted order) to the store files on disk.

It is important to note that transactions in Neo4j dictate the state and are therefore idempotent by nature.[5, s.34] They do not directly modify the data. Reapplying transactions for a recovery event simply replays the transactions as of a given safe point.

In a high-availability (HA), clustered scenario, Neo4j embraces a master/slave architecture. Transaction logs are then shared between all Neo4j instances, regardless of their current role. Unlike most master/slave implementations, slave nodes can handle both reads and writes.[5, s.37] On a write transaction, the slave coordinates a lock with the master and buffers the transaction while it is applied to the master. Once complete, the buffered transaction is then applied to the slave.

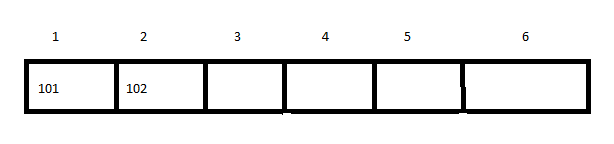

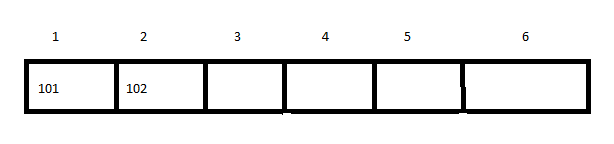

Another important aspect of Neo4j's HA architecture is that each node/edge has its own unique identifier (ID). To accomplish this, the master instance allocates the IDs for each slave instance in blocks. The blocks are then sent to each instance so that IDs for new nodes/edges can be applied locally, preserving consistency, as shown in the following diagram:

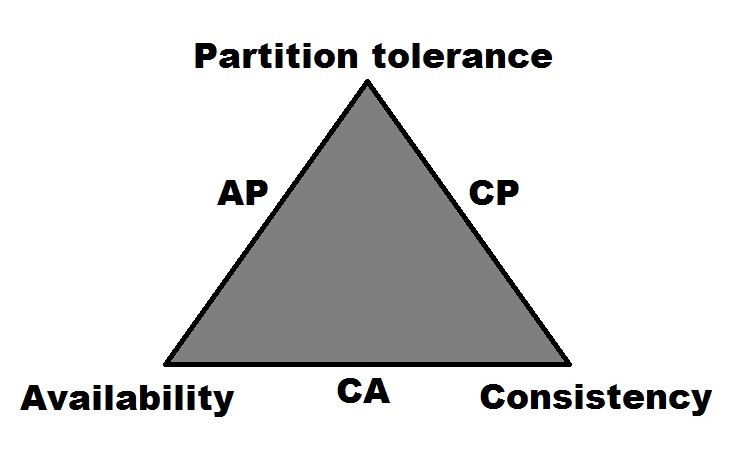

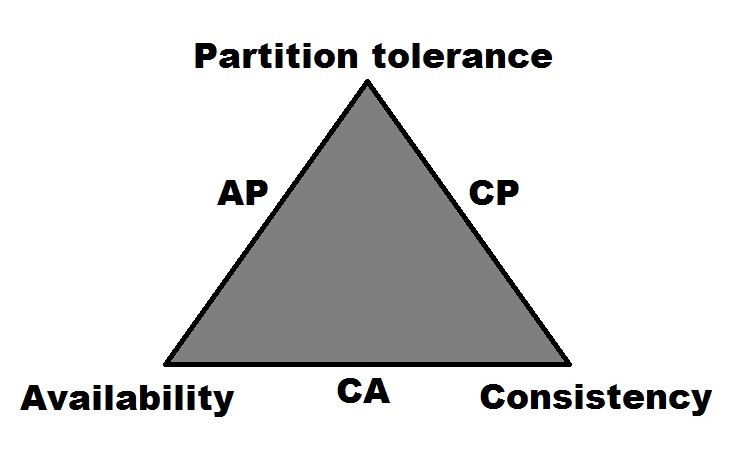

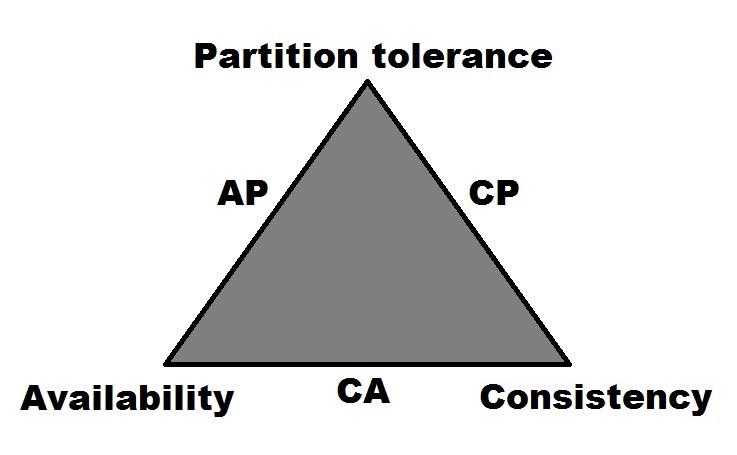

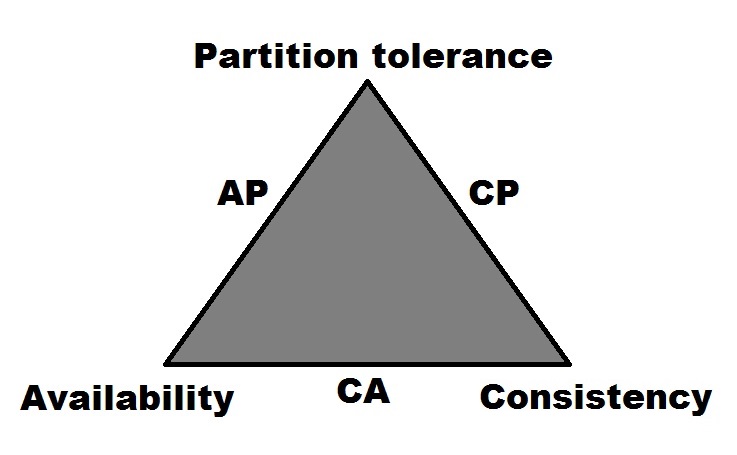

When looking at Neo4j within the context of Brewer's CAP theorem (formerly known as both Brewer's CAP principle and Brewer's CAP conjecture), its designation would be as a CP system.[3, p.1] It earns this designation because of its use of locking mechanisms to support Consistency (C) over multiple, horizontally-scaled instances in a cluster. Its support for clustering multiple nodes together indicates that Neo4j is also Partition tolerant (P).

While the Enterprise Edition of Neo4j does offer high-availability clustering as an option, there are a limited number of nodes that can accept write operations. Despite the name, this configuration limits its ability to be considered highly-available within the bounds of the CAP theorem.

Aside from its support of the property graph model, Neo4j has several other features that make it a desirable data store. Here, we will examine some of those features and discuss how they can be utilized in a successful Neo4j cluster.

Enterprise Neo4j offers horizontal scaling through two types of clustering. The first is the typical high-availability clustering, in which several slave servers process data overseen by an elected master. In the event that one of the instances should fail, a new master is chosen.

The second type of clustering is known as causal clustering. This option provides additional features, such as disposable read replicas and built-in load balancing, that help abstract the distributed nature of the clustered database from the developer. It also supports causal consistency, which aims to support Atomicity Consistency Isolation and Durability (ACID) compliant consistency in use cases where eventual consistency becomes problematic. Essentially, causal consistency is delivered with a distributed transaction algorithm that ensures that a user will be able to immediately read their own write, regardless of which instance handles the request.

Neo4j ships with Neo4j Browser, a web-based application that can be used for database management, operations, and the execution of Cypher queries. In addition to, monitoring the instance on which it runs, Neo4j Browser also comes with a few built-in learning tools designed to help new users acclimate themselves to Neo4j and graph databases. Neo4j Browser is a huge step up from the command-line tools that dominate the NoSQL landscape.

In most clustered Neo4j configurations, a single instance contains a complete copy of the data. At the moment, true sharding is not available, but Neo4j does have a feature known as cache sharding. This feature involves directing queries to instances that only have certain parts of the cache preloaded, so that read requests for extremely large data sets can be adequately served.

One of the things that Neo4j does better than most NoSQL data stores is the amount of documentation and tutorials that it has made available for new users. The Neo4j website provides a few links to get started with in-person or online training, as well as meetups and conferences to become acclimated to the community. The Neo4j documentation is very well-done and kept up to date, complete with well-written manuals on development, operations, and data modeling. The blogs and videos by the Neo4j, Inc. engineers are also quite helpful in getting beginners started on the right path.

Additionally, when first connecting to your instance/cluster with Neo4j Browser, the first thing that is shown is a list of links directed at beginners. These links direct the user to information about the Neo4j product, graph modeling and use cases, and interactive examples. In fact, executing the play movies command brings up a tutorial that loads a database of movies. This database consists of various nodes and edges that are designed to illustrate the relationships between actors and their roles in various films.

Because of Neo4j's focus on node/edge traversal, it is a good fit for use cases requiring analysis and examination of relationships. The property graph model helps to define those relationships in meaningful ways, enabling the user to make informed decisions. Bearing that in mind, there are several use cases for Neo4j (and other graph databases) that seem to fit naturally.

Social networks seem to be a natural fit for graph databases. Individuals have friends, attend events, check in to geographical locations, create posts, and send messages. All of these different aspects can be tracked and managed with a graph database such as Neo4j.

Who can see a certain person's posts? Friends? Friends of friends? Who will be attending a certain event? How is a person connected to others attending the same event? In small numbers, these problems could be solved with a number of data stores. But what about an event with several thousand people attending, where each person has a network of 500 friends? Neo4j can help to solve a multitude of problems in this domain, and appropriately scale to meet increasing levels of operational complexity.

Like social networks, Neo4j is also a good fit for solving problems presented by matchmaking or dating sites. In this way, a person's interests, goals, and other properties can be traversed and matched to profiles that share certain levels of equality. Additionally, the underlying model can also be applied to prevent certain matches or block specific contacts, which can be useful for this type of application.

Working with an enterprise-grade network can be quite complicated. Devices are typically broken up into different domains, sometimes have physical and logical layers, and tend to share a delicate relationship of dependencies with each other. In addition, networks might be very dynamic because of hardware failure/replacement, organization, and personnel changes.

The property graph model can be applied to adequately work with the complexity of such networks. In a use case study with Enterprise Management Associates (EMA), this type of problem was reported as an excellent format for capturing and modeling the inter dependencies that can help to diagnose failures.[4]

For instance, if a particular device needs to be shut down for maintenance, you would need to be aware of other devices and domains that are dependent on it, in a multitude of directions. Neo4j allows you to capture that easily and naturally without having to define a whole mess of linear relationships between each device.[4] The path of relationships can then be easily traversed at query time to provide the necessary results.

Many scalable data store technologies are not particularly suitable for business analysis or online analytical processing (OLAP) uses. When working with large amounts of data, coalescing desired data can be tricky with relational database management systems (RDBMS). Some enterprises will even duplicate their RDBMS into a separate system for OLAP so as not to interfere with their online transaction processing (OLTP) workloads.

Neo4j can scale to present meaningful data about relationships between different enterprise-marketing entities. In his graduate thesis titled GraphAware: Towards Online Analytical Processing in Graph Databases, researcher Michal Bachman illustrates this difference in a simple comparison of traversing relationships in both RDBMS and graph database management systems (GDBMS). Bachman observes that What might be a straightforward shallow breadth-first search in a GDBMS (hence considered OLTP) could be a very expensive multi-join operation in RDBMS (thus qualifying as OLAP).[2]

However, Bachman also urges caution with analytical workloads on graph databases, stating that graph databases lack native OLAP support.[2] This implies that additional tools may need to be built to suit specific business analysis needs.

Many brick-and-mortar and online retailers collect data about their customers' shopping habits. However, many of them fail to properly utilize this data to their advantage. Graph databases, such as Neo4j, can help assemble the bigger picture of customer habits for searching and purchasing, and even take trends in geographic areas into consideration.

For example, purchasing data may contain patterns indicating that certain customers tend to buy certain beverages on Friday evenings. Based on the relationships of other customers to products in that area, the engine could also suggest things such as cups, mugs, or glassware. Is the customer also a male in his thirties from a sports-obsessed area? Perhaps suggesting a mug supporting the local football team may spark an additional sale. An engine backed by Neo4j may be able to help a retailer uncover these small troves of insight.

Relative to other NoSQL databases, Neo4j does not have a lot of anti-patterns. However, there are some common troubles that seem to befall new users, and we will try to detail them here.

Using relational modeling techniques can lead to trouble with almost every NoSQL database, and Neo4j is no exception to that rule. Similar to other NoSQL databases, building efficient models in Neo4j involves appropriately modeling the required queries. Relational modeling requires you to focus on how your data is stored, and not as much on how it is queried or returned.

Whereas modeling for Neo4j requires you to focus on what your nodes are, and how they are related to each other. Additionally, the relationships should be dependent on the types of questions (queries) that your model will be answering. Failure to apply the proper amount of focus on your data model can lead to performance and operational troubles later.

In a talk at GraphConnect, San Francisco, Stefan Armbruster (field engineer for Neo4j, Inc.) described this as one of the main ways new users can get into trouble. Starting with a mission-critical use case for your first Neo4j project will probably not end well. Developers new to Neo4j need to make sure that they have an appropriate level of experience with it before attempting to build something important and complicated and move it into production. The best idea is to start with something small and expand your Neo4j footprint over time.

One possible way to avoid this pitfall is to make sure that you have someone on your team with graph database experience. Failing to recruit someone with that experience and knowledge, avoiding training, and ignoring the graph learning curve are surefire ways to make sure you really mess up your project on the very first day.[1] The bottom line is that there is no substitute for experience, if you want to get your graph database project done right.

While it might seem like a good idea to define entities as properties inside other entities, this can quickly get out of control. Remember that each property on an entity should directly relate to that entity, otherwise you will find your nodes getting big and queries becoming limited.

For example, it might be tempting to store things, such as drivers and cars, together, storing attributes about the car as properties on the driver. Querying will become difficult to solve if new requirements to query properties about the cars are introduced. But querying will become near impossible once the system needs to account for drivers with multiple cars. The bottom line is that if you need to store properties for an entity, it should have its own node and there should be a relationship to it.

Be sure to build your edges with meaningful relationship types. Do not use a single, general type such as CONNECTED_TO for different types of relationships.[1] Be specific. On the other end of that spectrum, it is also important not to make every relationship unique. Neo4j caps the number of relationship types at 65,000, and your model should not approach that number. Following either of those extremes will create trouble later with queries that traverse relationships. Problems with relationship type usage usually stem from a lack of understanding of the property graph model.

While Neo4j does offer primitive storage types, resist the urge to store binary large object (BLOB) data. BLOB data storage leads to large property values. Neo4j stores property values in a single file.[1] Large property values take a while to read, which can slow down all queries seeking property data (not just the BLOBs). To suit projects with requirements to store BLOB data, a more appropriate data store should be selected.

Indexes can be a helpful tool to improve the performance of certain queries. The current release of Neo4j offers two types of indexes: schema indexes and legacy indexes. Schema indexes should be used as your go-to index for new development, but legacy indexes are still required for things such as full-text indexing.[1] However, beware of creating indexes for every property of a node or label. This can cause your disk footprint to increase by a few orders of magnitude because of the extra write activity.

Building your Neo4j instance(s) with the right hardware is essential to running a successful cluster. Neo4j runs best when there is plenty of RAM at its disposal.

One aspect to consider is that Neo4j runs on a Java virtual machine (JVM). This means that you need to have at least enough random-access memory (RAM) to hold the JVM heap, plus extra for other operating system processes. While Neo4j can be made to run on as little as 2 GB of RAM, a memory size of 32 GB of RAM (or more) is recommended for production workloads. This will allow you to configure your instances to map as much data into memory as possible, leading to optimal performance.

Neo4j supports both x86 and OpenPOWER architectures. It requires at least an Intel Core i3, while an Intel Core i7 or IBM POWER8 is recommended for production.

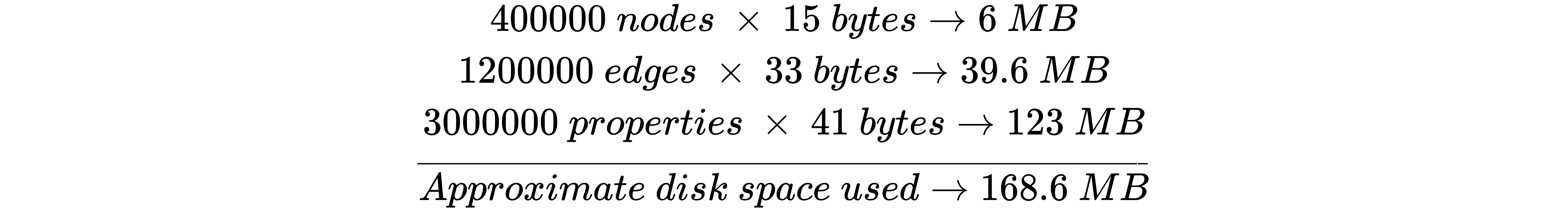

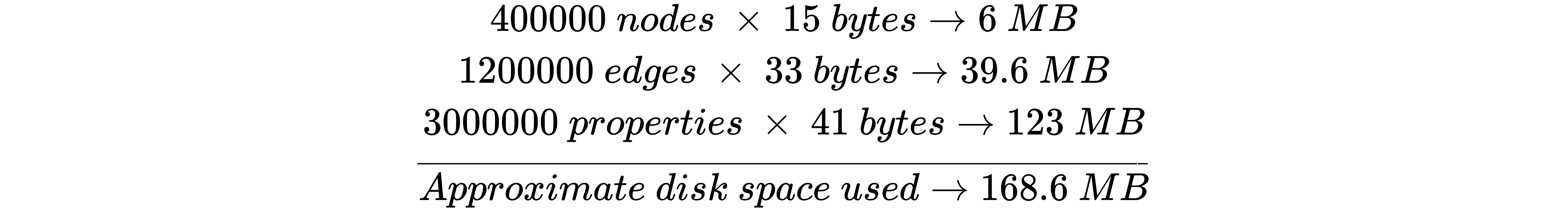

As with most data store technologies, disk I/O is a potential performance bottleneck. Therefore, it is recommended to use solid-state drives with either the ZFS or ext4 file systems. To get an idea of the amount of required disk space, the Neo4j documentation offers the following approximations (Neo4j Team 2017d):

For example, assume that my data model consists of 400,000 nodes, 1.2 million relationships, and 3 million properties. I can then calculate my estimated disk usage with Neo4j as:

For development, Neo4j should run fine on Windows and most flavors of Linux or BSD. A DMG installer is also available for OS X. When going to production, however, Linux is the best choice for a successful cluster. With Neo4j version 2.1 and earlier, one of the cache layers was off heap in the Linux releases, but on heap for Windows. This essentially made it impossible to cache a large amount of graph data while running on Windows.[1]

On Linux, Neo4j requires more than the default number of maximum open file handles. Increase that to 40,000 by adjusting the following line in the /etc/security/limits.conf file:

* - nofile 40000

Neo4j requires the following (TCP) ports to be accessible:

Here, we will install a single Neo4j instance running on one server. We will start by downloading the latest edition of the Neo4j Community Edition from https://neo4j.com/download/other-releases/#releases. There are downloads with nice, GUI-based installers available for most operating systems. We will select the Linux tarball install and download it. Then, we will copy the tarball to the directory from which we intend to run it. For this example, we will use the Neo4j 3.3.3 Community Edition:

sudo mkdir /local

sudo chown $USER:$USER /local

cd /local

mv ~/Downloads/neo4j-community-3.3.3-unix.tar.gz .

Now we can untar it, and it should create a directory and expand the files into it:

tar -zxvf neo4j-community-3.3.3-unix.tar.gz

Many people find it more convenient to rename this directory:

mv neo4j-community-3.3.3/ neo4j/

Be sure to use the most recent version of Java 8 from either Oracle or OpenJDK with Neo4j. Note that at the time of writing, Java 9 is not yet compatible with Neo4j.

For the purposes of the following examples, no additional configuration is necessary. But for deploying a production Neo4j server, there are settings within the conf/neo4j.conf file that are desirable or necessary to be altered.

The underlying database filename can be changed from its default setting of graph.db:

# The name of the database to mount

dbms.active_database=graph.db

The section after the database name configuration is where things such as the data, certificates, and log directories can be defined. Remember that all locations defined here are relative, so make sure to explicitly define any paths that may be required to be located somewhere other than the Neo4j home directory:

# Paths of directories in the installation.

#dbms.directories.data=data

#dbms.directories.plugins=plugins

#dbms.directories.certificates=certificates

#dbms.directories.logs=logs

#dbms.directories.lib=lib

#dbms.directories.run=run

For instance, if we wanted to put the data or log location on a different directory or mount point (data0 off of root and /var/log, respectively), we could define them like this:

dbms.directories.data=/data0

dbms.directories.logs=/var/log/neo4j

Our instance should be able to accept connections from machines other than the localhost (127.0.0.1), so we'll also set the default_listen_address and default_advertised_address. These settings can be modified in the network connector configuration section of the neo4j.conf file:

dbms.connectors.default_listen_address=192.168.0.100

dbms.connectors.default_advertised_address=192.168.0.100

The default connector ports can be changed as well. Client connections will need the Bolt protocol enabled. By default, it is defined to connect on port 7687:

#Bolt connector

dbms.connector.bolt.enabled=true

dbms.connector.bolt.listen_address=:7687

Note that if you disable the bolt connector, then client applications will only be able to connect to Neo4j using the REST API. The web management interface and REST API use HTTP/HTTPS, so they connect (by default) on ports 7474 and 7473, respectively. These ports can also be altered:

# HTTP Connector. There must be exactly one HTTP connector.

dbms.connector.http.enabled=true

dbms.connector.http.listen_address=:7474

# HTTPS Connector. There can be zero or one HTTPS connectors.

dbms.connector.https.enabled=true

dbms.connector.https.listen_address=:7473

Now is also a good time to change the initial password. Neo4j installs with a single default admin username and password of neo4j/neo4j. To change the password, we can use the following command:

bin/neo4j-admin set-initial-password flynnLives

By default, Neo4j installs with the Usage Data Collector (UDC) enabled.[7] The UDC collects data concerning your usage and installation, tars it up and sends it to udc.neo4j.org. A full description of the data collected can be found at http://neo4j.com/docs/operations-manual/current/configuration/usage-data-collector/.

To disable this feature, simply add this line to the bottom of your neo4j.conf file:

dbms.udc.enabled=false

While the default JVM settings should be fine for most single-instance Neo4j clusters, larger operational workloads may require the tuning of various settings. Some bulk-load operations may not complete successfully if the JVM stack size is too small. To adjust it (say, to 1 MB), add the following line to the end of the neo4j.conf file:

dbms.jvm.additional=-Xss1M

The default garbage collector for Neo4j is the Concurrent Mark and Sweep (CMS) garbage collector. As Neo4j typically recommends larger heap sizes for production (20 GB+), performance can be improved by using the garbage-first garbage collector (G1GC). For example, to enable G1GC with a 24 GB heap and a target pause time of 200 ms, add the following settings to your neo4j.conf file:

dbms.memory.heap.max_size=24G

dbms.memory.heap.initial_size=24G

dbms.jvm.additional=-XX:+UseG1GC

dbms.jvm.additional=-XX:MaxGCPauseMillis=200

Note that regardless of the choice of the garbage collector and its settings, Neo4j should be tested under the production load to ensure that performance is within desirable levels. Be sure to keep track of GC behaviors and trends, and make adjustments as necessary.

Neo4j supports high-availability (HA) master/slave clustering. To set up an HA Neo4j cluster, build the desired number of instances as if they were single-server installs. Then, adjust or add the following properties in the conf/neo4j.conf file:

ha.server_id=1

ha.initial_hosts=192.168.0.100:5001,192.168.0.101:5001,192.168.0.102:5001

dbms.mode=HA

Each Neo4j instance in your cluster must have a unique ha.server_id. It must be a positive number. List each IP address with its HA port (5001 by default) in the ha.initial_hosts property. Host names can also be used here. The property defaults to single, and should be set to HA for an HA cluster.

Each instance of Neo4j can now be started in any order.

Neo4j 3.1 introduced support for a new type of clustering known as causal clustering. With causal clustering, a few core servers will be configured, along with many read replicas. The configuration for causal clustering is similar to HA clustering:

causal_clustering.initial_discovery_members=192.168.0.100:5000,192.168.0.101:5000,192.168.0.102:5000

causal_clustering.expected_core_cluster_size=3

dbms.mode=CORE

This will define the 192.168.0.100-102 instances as core servers. Note that dbms.mode is set to CORE. The ha.initial_hosts property is replaced with the causal_clustering.initial_discovery_members property. The expected number of core servers is defined with the causal_clustering.expected_core_cluster_size property.

Configuring a read replica requires the same three lines in the configuration file, except that the dbms.mode is specified differently:

dbms.mode=READ_REPLICA

You should now be able to start your Neo4j server process in the foreground:

bin/neo4j console

This yields the following output:

Active database: graph.db

Directories in use:

home: /local/neo4j

config: /local/neo4j/conf

logs: /local/neo4j/logs

plugins: /local/neo4j/plugins

import: /local/neo4j/import

data: /local/neo4j/data

certificates: /local/neo4j/certificates

run: /local/neo4j/run

Starting Neo4j.

2017-07-09 17:10:05.300+0000 INFO ======== Neo4j 3.2.2 ========

2017-07-09 17:10:05.342+0000 INFO Starting...

2017-07-09 17:10:06.464+0000 INFO Bolt enabled on 192.168.0.100:7687.

2017-07-09 17:10:09.576+0000 INFO Started.

2017-07-09 17:10:10.982+0000 INFO Remote interface available at http://192.168.0.100:7474/

Alternatively, Neo4j can be started with the start command (instead of console) to run the process in the background. For this, current logs for the server process can be obtained by tailing the log/debug.log file:

tail -f /local/neo4j/log/debug.log

If Neo4j is running as a service, it will respond to the service start/stop commands as well:

sudo service neo4j start

Similarly, the Neo4j process can be stopped in the following ways:

When the Neo4j process is started, it returns output describing the on-disk locations of various server components. This information is helpful for operators to apply changes to the configuration quickly. It also clearly lists the addresses and ports that it is listening on for Bolt and HTTP connections.

To run Neo4j Browser, navigate to the URL mentioned in your server output (or in your neo4j.log file) as the Remote interface:

INFO Remote interface available at http://192.168.0.100:7474/

If you did not set the initial password from the preceding command line, Neo4j Browser will prompt you to do so immediately.

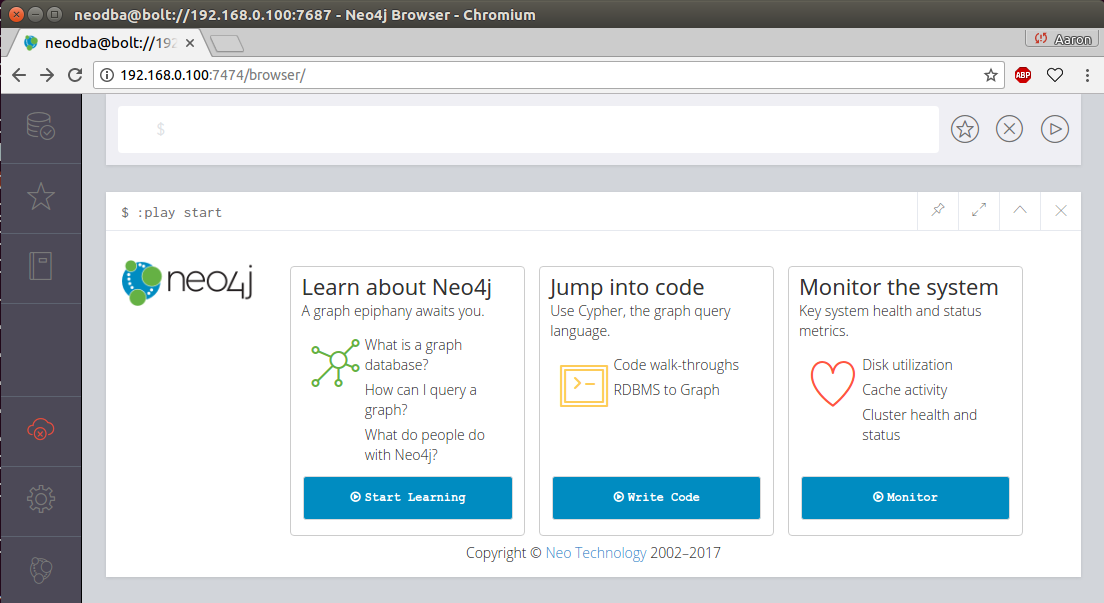

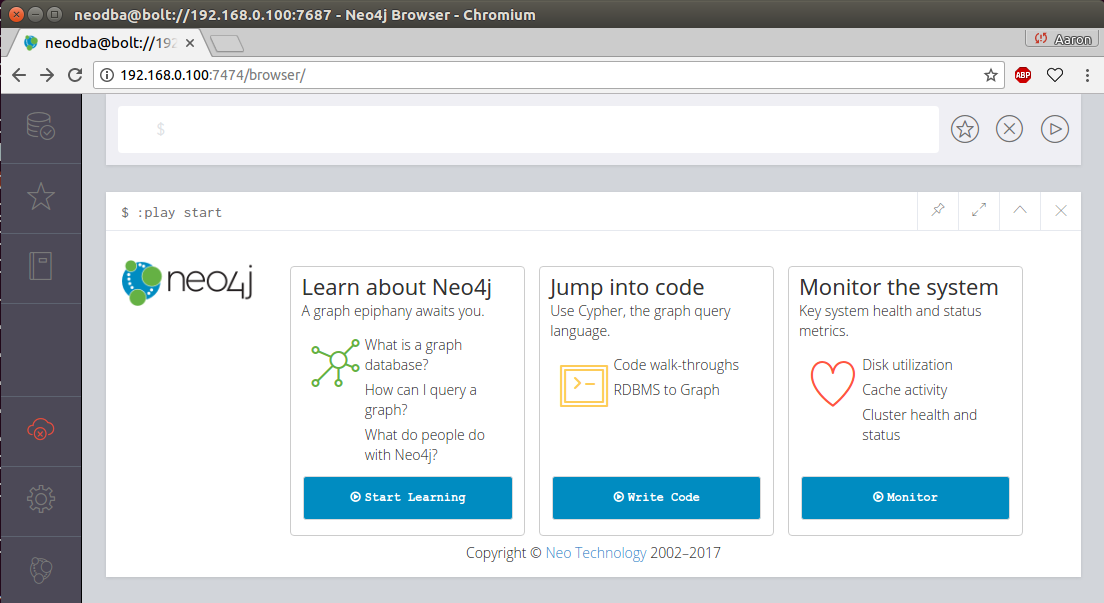

Once you have successfully logged in, you should see a screen similar to this:

This initial screen gives you several links to get started with, including an introduction to Neo4j, walkthroughs with the code, and ways to monitor the current server's resources.

The underlying query language used in Neo4j is called Cypher. It comes with an intuitive set of pattern-matching tools to allow you to model and query nodes and relationships. The top section, located above the play start section in the previous screenshot, is a command panel that accepts Cypher queries.

Let's click the command panel and create a new node:

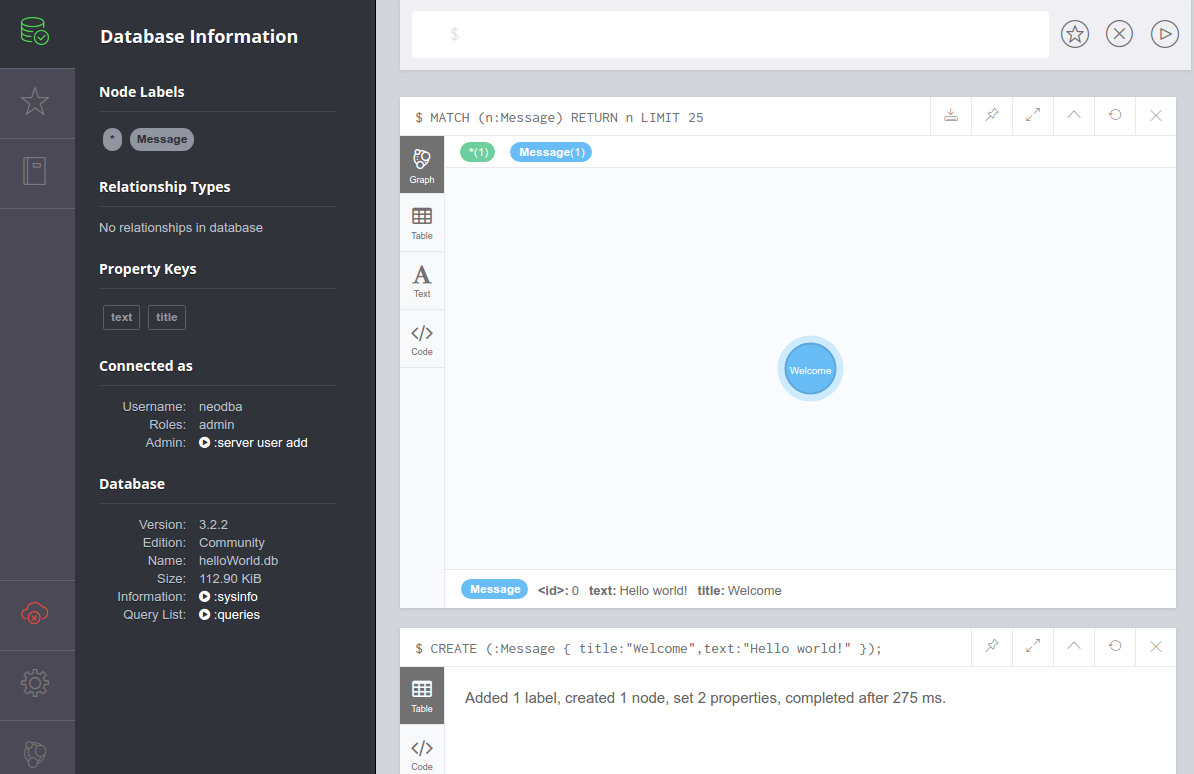

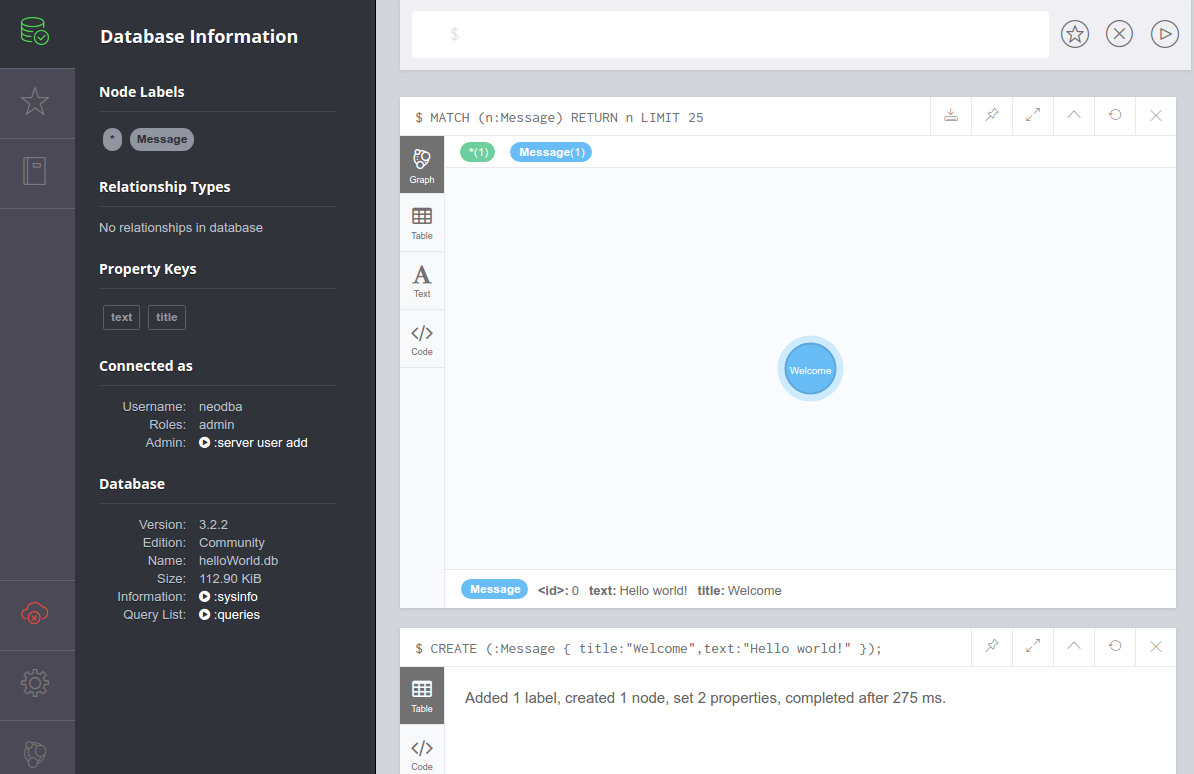

CREATE (:Message { title:"Welcome",text:"Hello world!" });

With the preceding command, I have created a new node of the Message type. The Message node has two properties, title and text. The title property has the value of Welcome, and the text property has the value of Hello world!.

In the following screenshot, in the left-hand portion of Neo4j Browser, I can see that I now have a node label named Message. Click the Message label:

Once you do that, Neo4j Browser sends the server process a Cypher query of its own:

MATCH (n:Message) RETURN n LIMIT 25;

The results of this query appear in a new pane, and the default view is one that shows a graphical representation of your node, which right now is only a blue Message node, as shown in the previous screenshot. If you hover over the Welcome message node, you should see its properties displayed at the bottom of the pane:

<id>: 0 text: Hello world! Title: Welcome

Of course, this lone node doesn't really help us do very much. So let's create a new node representing the new query language that we're learning, Cypher:

CREATE (:Language { name:"Cypher",version:"Cypher w/ Neo4j 3.2.2" });

Now, our node labels section contains types for both Message and Language. Feel free to click around for the different node types. But this still doesn't give us much that we can be productive with.

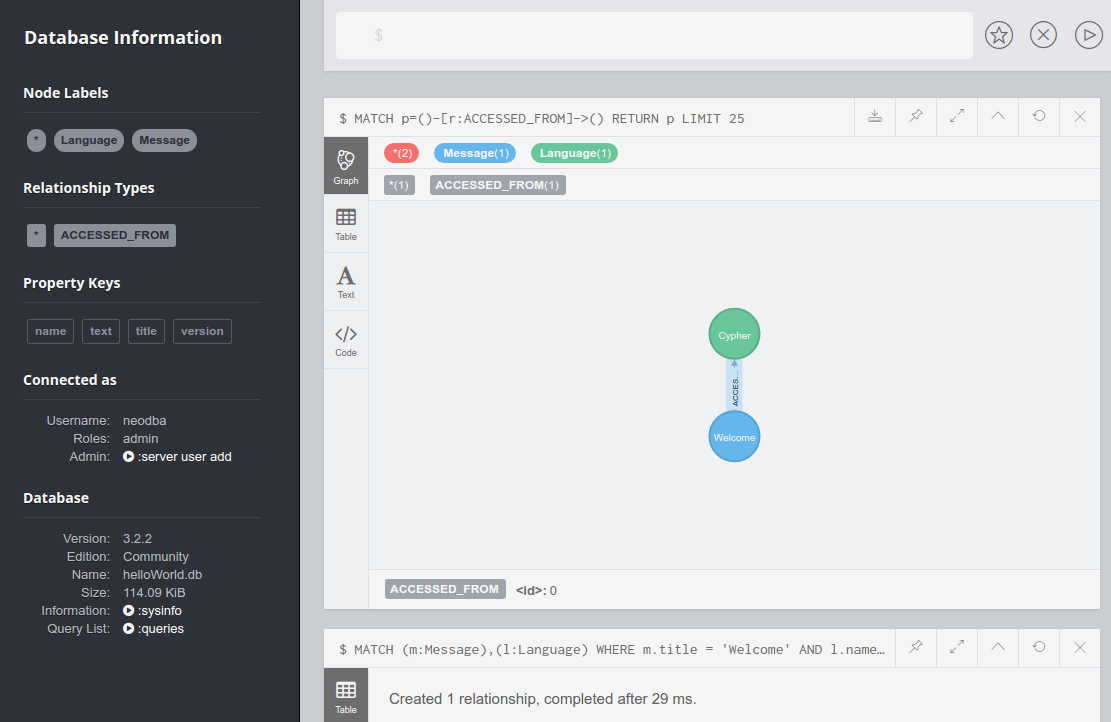

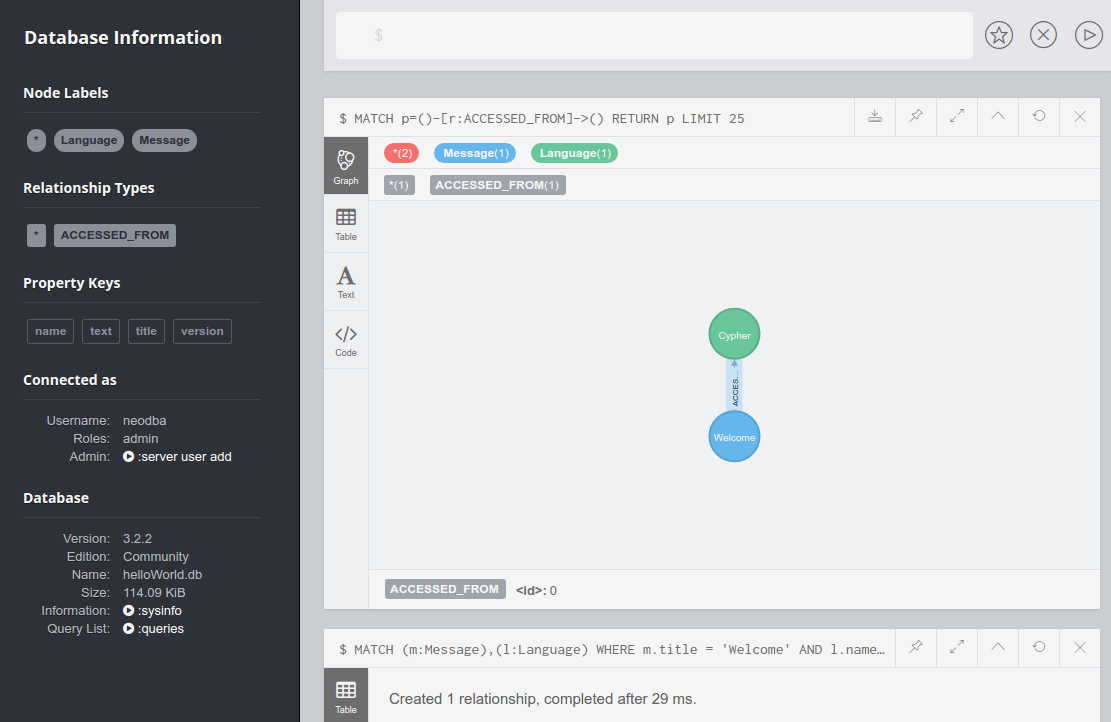

Next, we'll create a relationship or edge. Edges can be one-way or two-way, but we'll just create a one-way edge (from Message to Language) for now:

MATCH (m:Message),(c:Language)

WHERE m.title = 'Welcome' AND c.name = 'Cypher'

CREATE (m)-[:ACCESSED_FROM]->(c);

We now have an entry in the left-hand navigation menu under Relationships Types, as shown in the following screenshot. Click the ACCESSED_FROM relationship to see a graphical view of the Welcome message and the Cypher language connected by the new edge:

Next, we will load a file of NASA astronaut data[6] into our local Neo4j instance. Download the following files (or clone the repository) from https://github.com/aploetz/packt/:

If it is not present, install the Neo4j Python driver:

pip install neo4j-driver

The neoCmdFile.py is a Python script designed to load files that consist of Cypher commands. The astronaut_data.neo file is a Cypher command file that will build a series of nodes and edges for the following examples. Run the neoCmdFile.py script to load the astronaut_data.neo file:

python neoCmdFile.py 192.168.0.100 neo4j flynnLives astronaut_data.neo Data from astronaut_data.neo loaded!

Note that if you get a TransientError exception informing you that your JVM stack size is too small, try increasing it to 2 MBs (or more) by adding the following line to the end of your neo4j.conf file (and bouncing your Neo4j server):

dbms.jvm.additional=-Xss2M

With the astronaut data now loaded, we can return to Neo4j Browser and run Cypher queries against it. Run this query for NASA astronauts who were recruited in group 1:

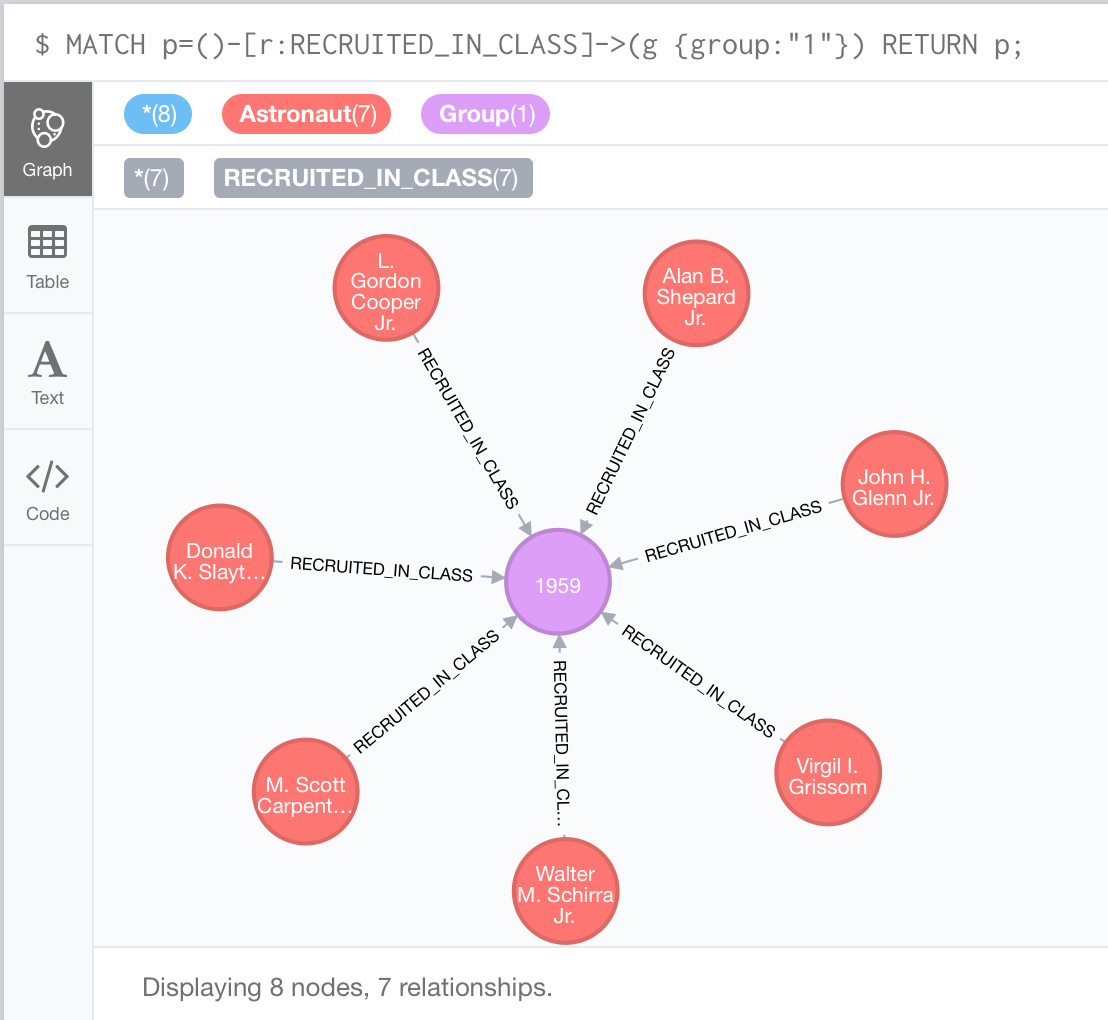

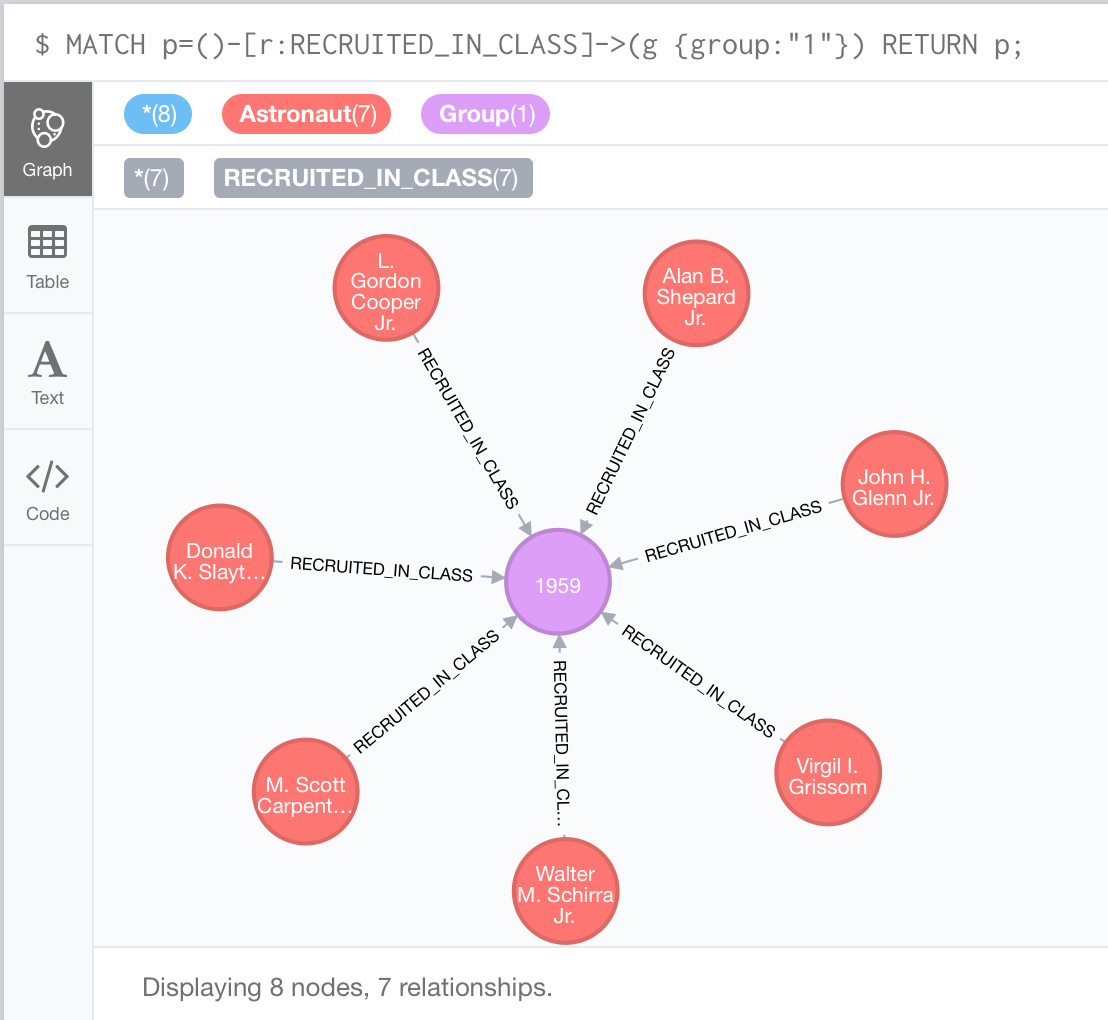

MATCH p=()-[r:RECRUITED_IN_CLASS]->(g {group:"1"}) RETURN p;

The output for the preceding query would look like this:

This particular query, in the preceding screenshot, returns data for the famous Mercury Seven astronauts.

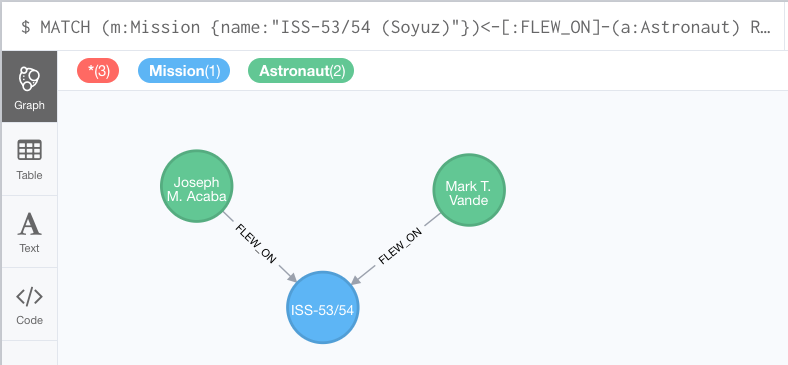

In addition to querying astronauts by group, we can also query by other things, such as a specific mission. If we wanted data for the astronauts who flew on the successful failure of Apollo 13, the query would look like this:

MATCH (a:Astronaut)-[:FLEW_ON]->(m:Mission {name:'Apollo 13'})

RETURN a, m;

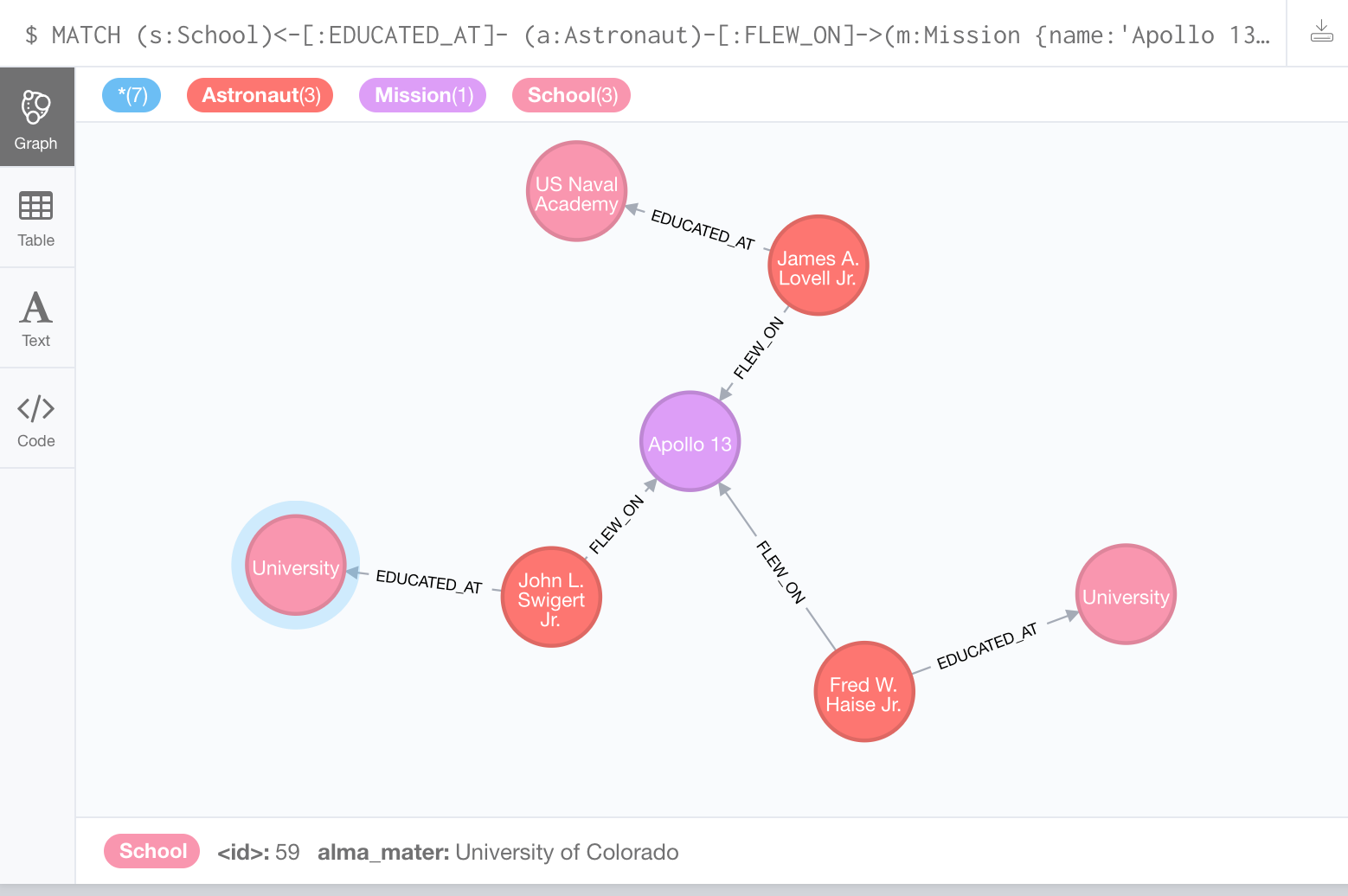

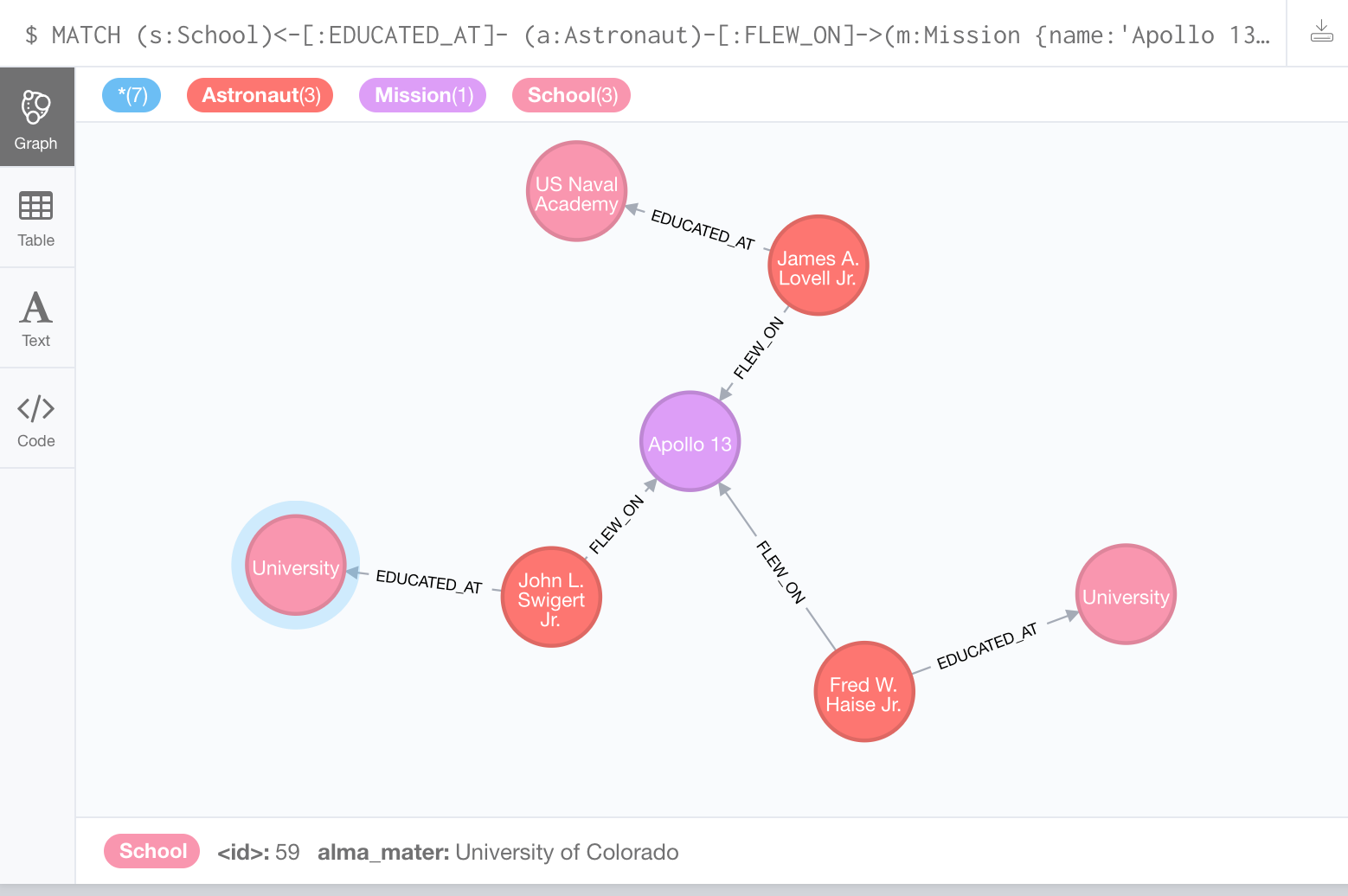

If we view astronaut as the criterion, we can easily modify the query to return data for other entities based on relationships. Here, we can prepend an edge of EDUCATED_AT to the School node on the previous query:

MATCH (s:School)<-[:EDUCATED_AT]-

(a:Astronaut)-[:FLEW_ON]->(m:Mission {name:'Apollo 13'})

RETURN a, m, s;

The output for the preceding query would look like this:

In addition to the entities for the Apollo 13 mission and the three astronauts, we can also see where each of the astronauts received their education. Feel free to further explore and experiment with the astronaut data. In doing so, we will begin to realize the potential of graph database modeling and the problems that it can help us to solve.

Now let's try using Neo4j with Python. If it wasn't installed before, make sure to install the (officially supported) Neo4j Python driver:

sudo pip install neo4j-driver

Now we will write a simple Python script (named neo4jHelloWorld.py) to accomplish three tasks:

We will start with our imports and our system arguments to handle hostname, username, and password from the command line:

from neo4j.v1 import GraphDatabase, basic_auth import sys hostname=sys.argv[1] username=sys.argv[2] password=sys.argv[3]

Next, we will connect to our local Neo4j instance using the bolt protocol:

driver=GraphDatabase.driver("bolt://" + hostname +

":7687",auth=basic_auth(username,password))

session=driver.session()

With our session established, we will create the Python language node:

createLanguage="CREATE (:Language {name:{name},version:{ver}});"

session.run(createLanguage, {"name":"Python","ver":"2.7.13"})

Next, we will create the ACCESSED_FROM edge:

createRelationship="""MATCH (m:Message),(l:Language) WHERE m.title='Welcome' AND l.name='Python' CREATE (m)-[:ACCESSED_FROM]->(l);""" session.run(createRelationship)

Then, we will query for the Python node via the ACCESSED_FROM edge to the Welcome message and process the result set as output:

queryRelationship="""MATCH (m:Message)-[:ACCESSED_FROM]->

(l:Language {name:'Python'})

RETURN m,l;"""

resultSet = session.run(queryRelationship)

for result in resultSet:

print("%s from %s" % (result["m"]["text"], result["l"]["name"]))

Finally, we will close our connection to Neo4j:

session.close()

Running this script from the command line yields the following output:

python neo4jHelloWorld.py 192.168.0.100 neo4j flynnLives Hello world! from Python

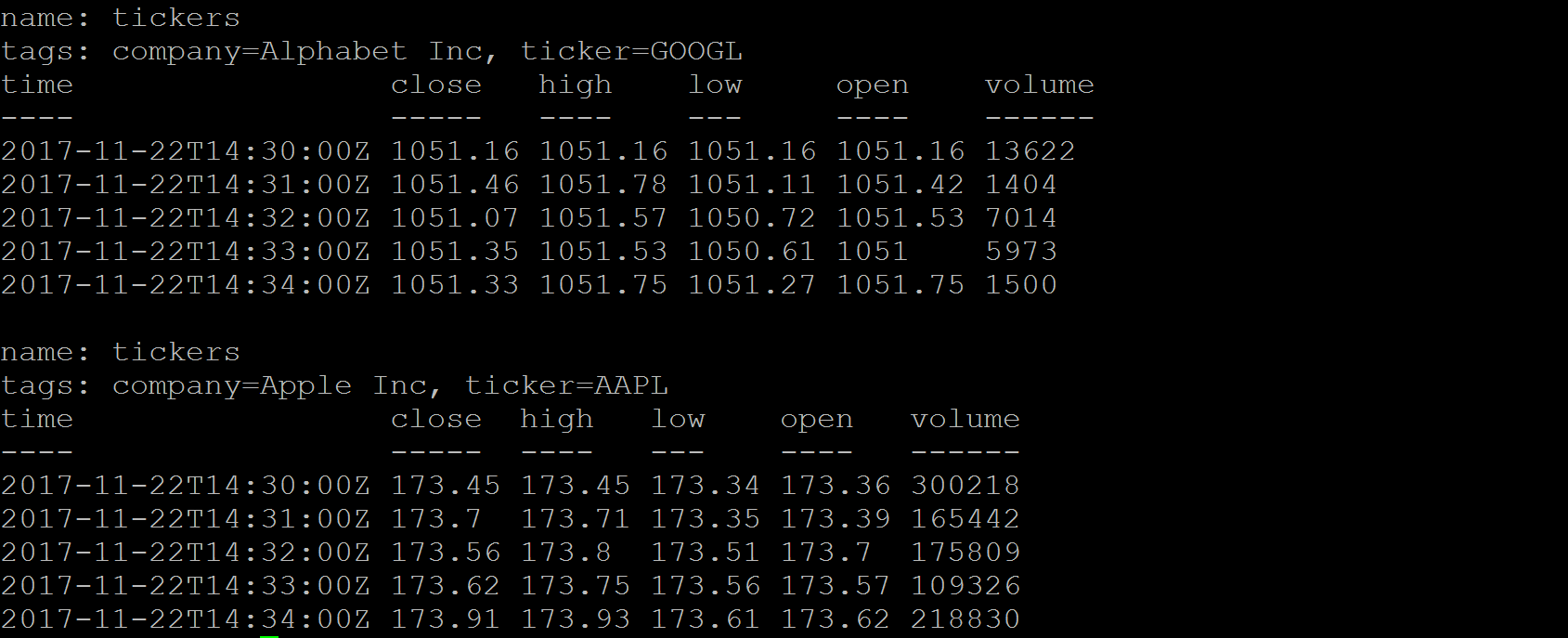

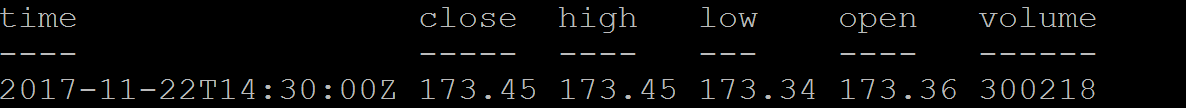

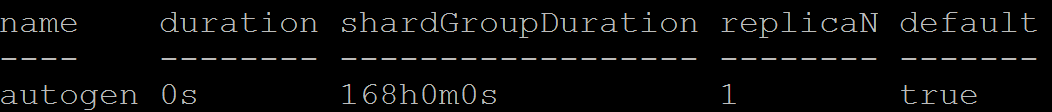

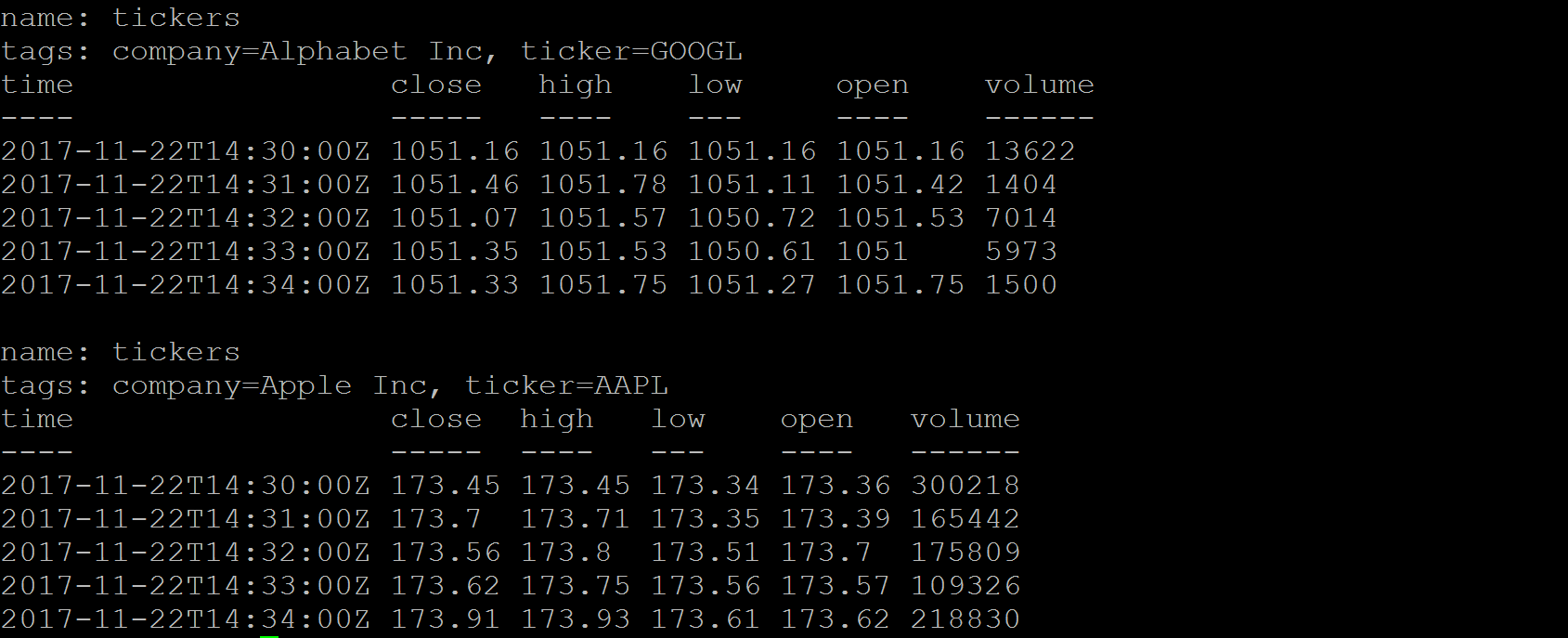

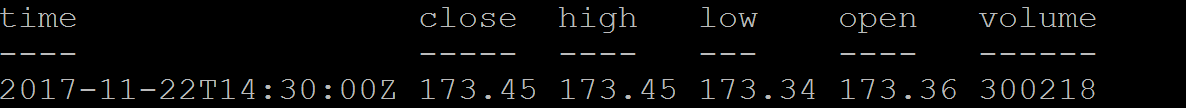

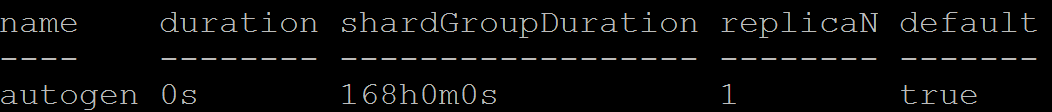

Going back to Neo4j Browser, if we click the ACCESSED_FROM edge, as shown in the screenshot provided previously in the chapter, two language nodes should now be connected to the Welcome message.