Finally, we test the model to confirm that our machine learning solution can crack the CAPTCHA. We start with the imports:

```python
from keras.models import load_model
from helpers import resize_to_fit
from imutils import paths
import numpy as np
import imutils
import cv2
import pickle
```

Next, we load the label mapping saved during training, which turns the network's outputs back into letters, and the trained neural network, so that we can test whether the model reads CAPTCHAs correctly:

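```python
MODEL = "captcha.hdf5"
MODEL_LABELS = "labels.dat"
CAPTCHA_IMAGE = "generated_captcha_images"

# Load the label mapping used to translate predictions back into letters
with open(MODEL_LABELS, "rb") as f:
    labb = pickle.load(f)

# Load the trained neural network
model = load_model(MODEL)
```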

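We pick 10 random CAPTCHA images from the `generated_captcha_images` folder to see whether the model works. For each image, we convert it to grayscale, add a 20-pixel border, threshold it to black and white, and find the outlines (contours) of the letters: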

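```python
captcha_image_files = list(paths.list_images(CAPTCHA_IMAGE))
captcha_image_files = np.random.choice(captcha_image_files, size=(10,), replace=False)

for image_file in captcha_image_files:
    # Load the image and convert it to grayscale
    image = cv2.imread(image_file)
    image = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

    # Add extra padding so letters touching the edge stay intact and the
    # 2-pixel crop margin used later stays inside the image
    image = cv2.copyMakeBorder(image, 20, 20, 20, 20, cv2.BORDER_REPLICATE)

    # Threshold to pure black and white; with THRESH_OTSU the threshold
    # argument (0 here) is ignored and chosen automatically
    thresh = cv2.threshold(image, 0, 255, cv2.THRESH_BINARY_INV | cv2.THRESH_OTSU)[1]

    # Find the outer contours (continuous blobs of pixels)
    contours = cv2.findContours(thresh.copy(), cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

    # findContours returns (contours, hierarchy) in OpenCV 2.4 and 4.x,
    # but (image, contours, hierarchy) in OpenCV 3.x
    contours = contours[0] if len(contours) == 2 else contours[1]

    letter_image_regions = []
```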
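With `cv2.THRESH_OTSU`, OpenCV ignores the threshold argument and picks one automatically. It returns that value as the first element of the result, which is why the code keeps only `[1]`, the image. To illustrate the idea, here is a minimal pure-Python sketch of Otsu's method on a flat list of 8-bit grayscale values. The helpers `otsu_threshold` and `binarize_inv` are hypothetical. This is not OpenCV's implementation, only the same criterion: choose the threshold that maximizes the between-class variance of the two pixel groups.

```python
def otsu_threshold(pixels):
    """Return the threshold t (0-255) that best separates pixels <= t from pixels > t."""
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * hist[i] for i in range(256))

    best_t, best_var = 0, -1.0
    weight_bg = 0  # number of pixels <= t
    sum_bg = 0     # sum of intensities of pixels <= t
    for t in range(256):
        weight_bg += hist[t]
        if weight_bg == 0:
            continue
        weight_fg = total - weight_bg
        if weight_fg == 0:
            break
        sum_bg += t * hist[t]
        mean_bg = sum_bg / weight_bg
        mean_fg = (sum_all - sum_bg) / weight_fg
        # Between-class variance using raw counts (same argmax as with probabilities)
        between_var = weight_bg * weight_fg * (mean_bg - mean_fg) ** 2
        if between_var > best_var:
            best_var, best_t = between_var, t
    return best_t


def binarize_inv(pixels, t, max_value=255):
    # Like THRESH_BINARY_INV: pixels above t become 0, the rest become max_value
    return [0 if p > t else max_value for p in pixels]


if __name__ == "__main__":
    pixels = [10, 12, 11, 200, 210, 205]  # made-up dark letters on a light background
    t = otsu_threshold(pixels)
    print(t, binarize_inv(pixels, t))  # prints: 12 [255, 255, 255, 0, 0, 0]
```

The inverted threshold turns the dark letters white, and `cv2.findContours` treats white as the foreground.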

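We then loop through the contours and record a bounding box for each letter. A contour much wider than it is tall (width/height above 1.25) probably holds two touching letters, so we split it down the middle into two regions: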

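```python
    # (still inside the loop over CAPTCHA images)
    for contour in contours:
        # Get the rectangle that contains the contour
        (x, y, w, h) = cv2.boundingRect(contour)

        if w / h > 1.25:
            # Too wide for one letter: split it into two halves
            half_width = int(w / 2)
            letter_image_regions.append((x, y, half_width, h))
            letter_image_regions.append((x + half_width, y, half_width, h))
        else:
            # A single letter
            letter_image_regions.append((x, y, w, h))
```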
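The splitting rule is easy to check in isolation. This sketch pulls it into a hypothetical helper, `split_wide_regions`, that takes plain `(x, y, w, h)` tuples instead of OpenCV contours, so it runs without OpenCV:

```python
def split_wide_regions(boxes, max_ratio=1.25):
    """Split (x, y, w, h) boxes that look like two joined letters into two halves."""
    regions = []
    for (x, y, w, h) in boxes:
        if w / h > max_ratio:
            # Wider than max_ratio: assume two letters and cut down the middle
            half_width = int(w / 2)
            regions.append((x, y, half_width, h))
            regions.append((x + half_width, y, half_width, h))
        else:
            regions.append((x, y, w, h))
    return regions


if __name__ == "__main__":
    # Made-up boxes: one 30x10 blob (two letters) and one 10x12 letter
    print(split_wide_regions([(0, 0, 30, 10), (40, 0, 10, 12)]))
    # prints: [(0, 0, 15, 10), (15, 0, 15, 10), (40, 0, 10, 12)]
```

Because `int(w / 2)` truncates, a box with an odd width loses its last pixel column when it is split.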

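If we did not find exactly four letter regions, letter extraction failed for this image and we skip it. Otherwise, we sort the regions from left to right so the letters are read in order. We then crop each letter with a 2-pixel margin, resize it to 20x20 pixels, and ask the network to classify it, collecting the predicted letters in a list:

```python
    # Skip images where we did not find exactly four letters
    if len(letter_image_regions) != 4:
        continue

    # Sort the detected letter regions from left to right
    letter_image_regions = sorted(letter_image_regions, key=lambda x: x[0])

    # Color copy of the grayscale image to draw the results on
    output = cv2.merge([image] * 3)
    predictions = []

    for letter_bounding_box in letter_image_regions:
        x, y, w, h = letter_bounding_box

        # Crop the letter with a 2-pixel margin around its bounding box
        letter_image = image[y - 2:y + h + 2, x - 2:x + w + 2]

        # Resize to 20x20 pixels, the input size the network expects
        letter_image = resize_to_fit(letter_image, 20, 20)

        # Add channel and batch dimensions: shape (1, 20, 20, 1)
        letter_image = np.expand_dims(letter_image, axis=2)
        letter_image = np.expand_dims(letter_image, axis=0)

        # If the training images were scaled (e.g. divided by 255),
        # apply the same scaling here before predicting
        prediction = model.predict(letter_image)

        # Convert the network's scores back into a letter
        letter = labb.inverse_transform(prediction)[0]
        predictions.append(letter)
```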
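Assume `labels.dat` holds a scikit-learn `LabelBinarizer`, as the `inverse_transform` call suggests. In that case, `inverse_transform` on a multiclass prediction returns the class with the highest score. The hypothetical helper `decode_captcha` below reproduces the count check, left-to-right ordering, and decoding in plain Python. Made-up scores and a made-up four-letter class list stand in for the network output:

```python
def decode_captcha(boxes, predictions, classes):
    """Order per-letter predictions left to right and decode them to text.

    boxes: list of (x, y, w, h); predictions: one score list per box, in the
    same order as boxes; classes: the label for each score position.
    Returns None when the number of boxes is not four.
    """
    if len(boxes) != 4:
        return None  # letter extraction failed; skip this image
    # Sort (box, scores) pairs by the box's x coordinate
    ordered = sorted(zip(boxes, predictions), key=lambda pair: pair[0][0])
    letters = []
    for _, scores in ordered:
        # argmax: index of the highest score
        best = max(range(len(scores)), key=lambda i: scores[i])
        letters.append(classes[best])
    return "".join(letters)


if __name__ == "__main__":
    classes = ["A", "B", "C", "D"]  # hypothetical label set
    boxes = [(30, 0, 5, 5), (0, 0, 5, 5), (20, 0, 5, 5), (10, 0, 5, 5)]
    scores = [[0.1, 0.0, 0.0, 0.9], [0.8, 0.1, 0.1, 0.0],
              [0.0, 0.2, 0.7, 0.1], [0.1, 0.6, 0.2, 0.1]]
    print(decode_captcha(boxes, scores, classes))  # prints: ABCD
```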

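Still inside the per-letter loop, we draw a green box and the predicted letter on the output image. Once every letter has been classified, we join the predictions into the CAPTCHA text, print it, and show the annotated image so the predictions can be compared with the actual letters: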

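```python
        # (still inside the per-letter loop) draw the prediction on the output image
        cv2.rectangle(output, (x - 2, y - 2), (x + w + 4, y + h + 4), (0, 255, 0), 1)
        cv2.putText(output, letter, (x - 5, y - 5), cv2.FONT_HERSHEY_SIMPLEX, 0.55, (0, 255, 0), 2)

    # Back in the loop over images: join the letters into the CAPTCHA text
    captcha_text = "".join(predictions)
    print("CAPTCHA text is: {}".format(captcha_text))

    # Show the annotated image and wait for a key press
    cv2.imshow("Output", output)
    cv2.waitKey()
```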
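The script relies on looking at each annotated image. To measure accuracy over many images, the predicted text can be compared with the true text automatically. The sketch below assumes, hypothetically, that each generated CAPTCHA file is named after its solution (for example `2A2X.png`). `score_predictions` is an illustrative helper, not part of the original code. It reports both whole-CAPTCHA accuracy and per-letter accuracy:

```python
import os


def score_predictions(results):
    """Return (captcha_accuracy, letter_accuracy) for (image_path, predicted_text) pairs.

    Assumes (hypothetically) that each file name, minus its extension, is the
    CAPTCHA's true text.
    """
    if not results:
        return 0.0, 0.0
    solved = 0
    correct_letters = 0
    total_letters = 0
    for path, predicted in results:
        actual = os.path.splitext(os.path.basename(path))[0]
        if predicted == actual:
            solved += 1
        # Count letters that match position by position
        correct_letters += sum(a == p for a, p in zip(actual, predicted))
        total_letters += len(actual)
    return solved / len(results), correct_letters / total_letters


if __name__ == "__main__":
    # Made-up predictions for two made-up files
    results = [("generated_captcha_images/2A2X.png", "2A2X"),
               ("generated_captcha_images/3B4C.png", "3B4X")]
    print(score_predictions(results))  # prints: (0.5, 0.875)
```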

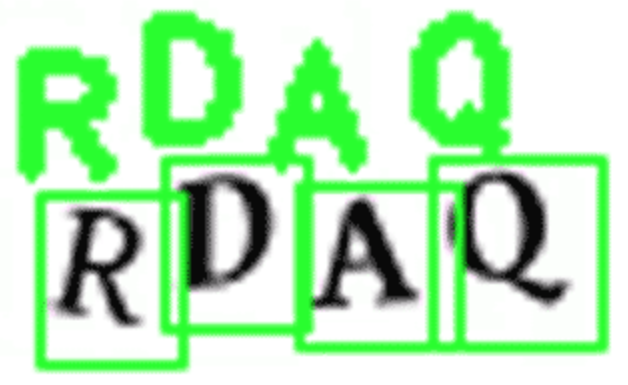

This is the output: