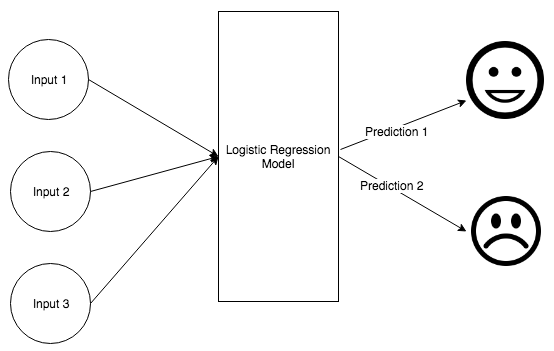

Logistic regression is a model that classifies data using a logistic function. It typically models a binary dependent variable and estimates the probability of each of its two outcomes, as the accompanying diagram shows.
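As a minimal sketch of the logistic function itself, the following snippet maps a few raw scores to probabilities and then to class labels. The function name logistic, the sample scores, and the 0.5 decision threshold are assumptions chosen for illustration:

```python
import numpy as np


def logistic(z):
    """Map any real-valued score to a probability in the range (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


# Illustrative scores: strongly negative, neutral, strongly positive
scores = np.array([-4.0, 0.0, 4.0])
probabilities = logistic(scores)

# Assumed decision rule: predict class 1 when the probability is at least 0.5
predicted_class = (probabilities >= 0.5).astype(int)
print(probabilities)
print(predicted_class)
```

A score of zero sits exactly on the decision boundary, which is why it maps to a probability of 0.5.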

To start with our use case, we first import the required packages using the following code:

import pandas as pd
import numpy as np
import random
import pickle
from sklearn.model_selection import train_test_split
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression

Each URL needs some cleansing before we can use it. We tokenize it by splitting on slashes, hyphens, and dots, and then drop the "com" token, as shown in the following code. We do this because logistic regression needs numeric input, and these tokens are the terms from which the numeric features are built:

def url_cleanse(web_url):
    web_url = web_url.lower()
    dot_slash = []
    # Split the URL into segments on '/'
    slash = str(web_url).split('/')
    for i in slash:
        # Split each segment on '-', then split those pieces on '.'
        r1 = str(i).split('-')
        token_slash = []
        for j in range(0, len(r1)):
            r2 = str(r1[j]).split('.')
            token_slash = token_slash + r2
        dot_slash = dot_slash + r1 + token_slash
    # Remove duplicate tokens
    urltoken = list(set(dot_slash))
    # Drop the 'com' token
    if 'com' in urltoken:
        urltoken.remove('com')
    return urltoken
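To see what the tokenizer produces, the following sketch restates the same splitting logic in a more compact form and runs it on a made-up URL. The name url_tokens and the sample URL are illustrative assumptions, not part of the original code:

```python
def url_tokens(web_url):
    """Compact restatement of the tokenizer above (hypothetical helper)."""
    tokens = set()
    for segment in str(web_url).lower().split('/'):
        for piece in segment.split('-'):
            tokens.add(piece)                # keep the hyphen-level piece
            tokens.update(piece.split('.'))  # and its dot-level parts
    tokens.discard('com')                    # drop the 'com' token
    return list(tokens)


sample = "www.Example.com/login-page.html"
print(sorted(url_tokens(sample)))
# ['example', 'html', 'login', 'page', 'page.html', 'www', 'www.example.com']
```

Note that pieces containing dots, such as page.html, are kept alongside their dot-separated parts, because both the hyphen-level and dot-level splits are added to the token set.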

We then ingest the data, convert it to a dataframe and a NumPy array, shuffle the rows, and separate the labels from the URLs using the following code:

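input_url = '~/data.csv'
# on_bad_lines='skip' drops malformed rows; it replaces
# error_bad_lines=False, which was removed in pandas 2.0
data_csv = pd.read_csv(input_url, sep=',', on_bad_lines='skip')
data_df = pd.DataFrame(data_csv)
url_df = np.array(data_df)
# Shuffle the rows in place; random.shuffle is unreliable on 2-D NumPy arrays
np.random.shuffle(url_df)
# Column 0 holds the URL and column 1 holds its label
y = [d[1] for d in url_df]
inputurls = [d[0] for d in url_df]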
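The ingestion step can be tried without the real file. The sketch below assumes a two-column layout (URL first, label second) and uses invented rows held in memory, one of them deliberately malformed, to show that bad rows are skipped and that shuffling keeps each URL paired with its label. All names and values here are hypothetical:

```python
import io

import numpy as np
import pandas as pd

# Invented stand-in for data.csv; the last row has one field too many
toy_csv = io.StringIO(
    "url,label\n"
    "example.com/index.html,good\n"
    "example.org/login-update.php,bad\n"
    "example.net/docs/readme,good\n"
    "example.net/broken,bad,unexpected-extra-field\n"
)

# on_bad_lines='skip' (pandas 1.3 or later) drops the malformed row
toy_df = pd.read_csv(toy_csv, sep=",", on_bad_lines="skip")

# permutation() returns a copy with the rows shuffled along the first axis
rng = np.random.default_rng(seed=0)
rows = rng.permutation(toy_df.to_numpy())

toy_urls = [row[0] for row in rows]
toy_labels = [row[1] for row in rows]
print(list(zip(toy_urls, toy_labels)))
```

Shuffling whole rows, rather than the URL and label lists separately, is what keeps every URL aligned with its own label.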

We now need to generate the term frequency–inverse document frequency (TF-IDF) from the URLs.
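One way this step might look is sketched below, using scikit-learn's TfidfVectorizer with a custom tokenizer. The tokenizer split_url and the three sample URLs are simplified, hypothetical stand-ins, so treat this as an illustration of the idea rather than the exact code used in this use case:

```python
import re

from sklearn.feature_extraction.text import TfidfVectorizer


def split_url(url):
    """Hypothetical tokenizer: split on '/', '.', and '-', dropping empties."""
    return [token for token in re.split(r"[/.\-]", url) if token]


# Invented sample URLs for illustration only
toy_urls = [
    "example.com/index.html",
    "example.org/login-update.php",
    "example.net/docs/readme",
]

# token_pattern=None signals that the custom tokenizer replaces the default pattern
vectorizer = TfidfVectorizer(tokenizer=split_url, token_pattern=None)
tfidf_matrix = vectorizer.fit_transform(toy_urls)

# One row per URL, one column per distinct token
print(tfidf_matrix.shape)
print(sorted(vectorizer.vocabulary_))
```

Each row of the resulting sparse matrix is the numeric representation of one URL, which is the form a logistic regression model can train on.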