Naive Bayes is a classification technique based on Bayes' theorem. Its basic ("naive") assumption is that the predictor variables are independent of each other given the class.

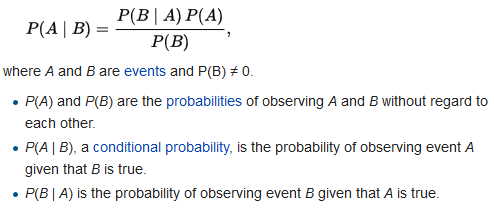

Bayes' theorem is mathematically expressed as follows:

$$P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B)}$$

It essentially gives us a way to calculate conditional probabilities in situations where measuring them directly wouldn't be feasible. For instance, to estimate someone's chance of having cancer given their age, instead of performing a nationwide study, you can take existing statistics about age distribution and cancer (how common cancer is overall, how common each age group is, and how ages are distributed among cancer patients) and plug them into Bayes' theorem.
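The arithmetic itself is a single line. The minimal sketch below applies the formula directly; the function name `bayes` and the figures in the usage line are hypothetical, chosen only to illustrate the calculation, not real cancer statistics.

```python
def bayes(p_b_given_a: float, p_a: float, p_b: float) -> float:
    """Return P(A|B) = P(B|A) * P(A) / P(B)."""
    if p_b == 0:
        raise ValueError("P(B) must be non-zero")
    return p_b_given_a * p_a / p_b


# Hypothetical figures: P(age group | cancer) = 0.5, P(cancer) = 0.01,
# P(age group) = 0.1, so P(cancer | age group) = 0.5 * 0.01 / 0.1
print(bayes(0.5, 0.01, 0.1))
```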

If this doesn't fully make sense yet, it is worth coming back to it later, because failing to understand Bayes' theorem is the root of many logical fallacies.

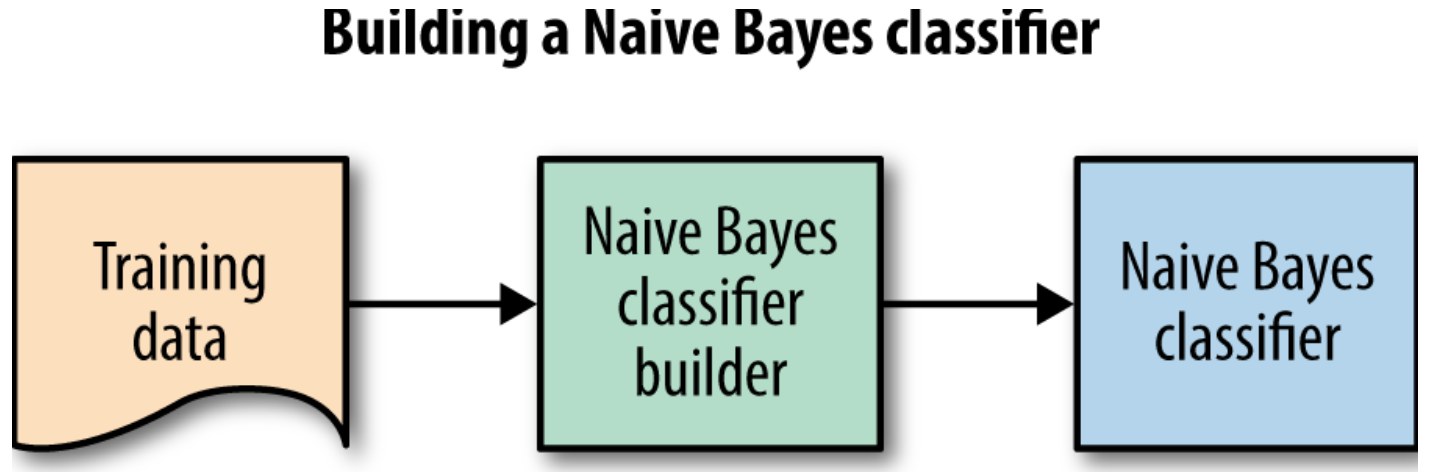

For our problem, we can let A be the event that the email is spam and B be the contents of the email. If P(A|B) > P(¬A|B), we classify the email as spam; otherwise, we classify it as not spam. Since Bayes' theorem divides both posteriors by the same (positive) P(B), we can drop it from the comparison, which leaves P(A)*P(B|A) > P(¬A)*P(B|¬A). Calculating P(A) and P(¬A) is trivial: they are simply the fractions of the training set that are spam and not spam, respectively. The following block diagram shows how to build a Naive Bayes classifier:

The following code computes these priors from the training data:

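```python
# runs once on training data
# assumes trainData (labelled emails) and the SPAM label are defined elsewhere
def train():
    global pA, pNotA  # priors used later by the classifier
    total = 0
    numSpam = 0
    for email in trainData:
        if email.label == SPAM:
            numSpam += 1
        total += 1
    pA = numSpam / total               # P(A): fraction of spam emails
    pNotA = (total - numSpam) / total  # P(¬A): fraction of non-spam emails
```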
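Once the priors are known, the decision rule from the spam example can be sketched on its own. The function names and numbers below are hypothetical; the second function computes P(B) with the law of total probability and divides both sides by it, to show that doing so never changes which side of the comparison is larger.

```python
def is_spam(p_a: float, p_b_given_a: float,
            p_not_a: float, p_b_given_not_a: float) -> bool:
    """Classify as spam when P(A) * P(B|A) > P(¬A) * P(B|¬A)."""
    return p_a * p_b_given_a > p_not_a * p_b_given_not_a


def is_spam_with_evidence(p_a: float, p_b_given_a: float,
                          p_not_a: float, p_b_given_not_a: float) -> bool:
    """Same decision, but comparing the full posteriors P(A|B) and P(¬A|B)."""
    # P(B) by the law of total probability; it is the same on both sides
    p_b = p_a * p_b_given_a + p_not_a * p_b_given_not_a
    return p_a * p_b_given_a / p_b > p_not_a * p_b_given_not_a / p_b


# Hypothetical numbers: 40% of training emails are spam
print(is_spam(0.4, 0.002, 0.6, 0.0005))                # True
print(is_spam_with_evidence(0.4, 0.002, 0.6, 0.0005))  # True
```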

The most difficult part is calculating P(B|A) and P(B|¬A). To calculate these, we are going to use the bag-of-words model. This simple model treats a piece of text as a bag of individual words and ignores their order. For each word, we calculate how often it shows up in spam emails and how often it shows up in non-spam emails. We call this probability P(B_i|A_x), where B_i is the i-th word and A_x is either A (spam) or ¬A (not spam). For example, to calculate P(free | spam), we count the number of times the word free occurs across all of the spam emails combined and divide this by the total number of words in all of the spam emails combined. Since these are static values, we can calculate them in the training phase, as shown in the following code:

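```python
# runs once on training data
# assumes trainData (emails with .body as a list of words and .label)
# and the SPAM label are defined elsewhere
trainPositive = {}  # word -> count in spam emails
trainNegative = {}  # word -> count in non-spam emails
positiveTotal = 0   # total number of words in spam emails
negativeTotal = 0   # total number of words in non-spam emails

def train():
    global pA, pNotA
    total = 0
    numSpam = 0
    for email in trainData:
        if email.label == SPAM:
            numSpam += 1
        total += 1
        processEmail(email.body, email.label)
    pA = numSpam / total
    pNotA = (total - numSpam) / total

# counts the words in a specific email
def processEmail(body, label):
    global positiveTotal, negativeTotal
    for word in body:
        if label == SPAM:
            trainPositive[word] = trainPositive.get(word, 0) + 1
            positiveTotal += 1
        else:
            trainNegative[word] = trainNegative.get(word, 0) + 1
            negativeTotal += 1

# gives the conditional probability p(B_i | A_x)
def conditionalWord(word, spam):
    # .get avoids a KeyError for unseen words, but they still get probability 0
    if spam:
        return trainPositive.get(word, 0) / positiveTotal
    return trainNegative.get(word, 0) / negativeTotal
```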
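To make the bag-of-words estimate concrete, the self-contained sketch below computes P(word | spam) with `collections.Counter`. The toy emails, the lowercase-and-split tokenization, and the helper name `word_probabilities` are assumptions for illustration.

```python
from collections import Counter


def word_probabilities(emails: list[str]) -> dict[str, float]:
    """Estimate P(word | class) from all emails of one class (bag of words)."""
    counts = Counter()
    for body in emails:
        # Word order is ignored: each email contributes only its word counts
        counts.update(body.lower().split())
    total = sum(counts.values())
    return {word: n / total for word, n in counts.items()}


# Hypothetical spam emails for illustration
spam_emails = ["free money now", "claim your free prize"]
p_word_given_spam = word_probabilities(spam_emails)
print(p_word_given_spam["free"])  # 2 occurrences out of 7 words
```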

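To get P(B|A_x) for an entire email, we take the product of the P(B_i|A_x) values for every word i in the email. This is where the naive independence assumption comes in: treating the words as independent given the class is what lets us simply multiply their probabilities. Note that this is done at classification time, not during training: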

```python
# gives the conditional probability p(B | A_x)
def conditionalEmail(body, spam):
    result = 1.0
    for word in body:
        # naive independence assumption: multiply the per-word probabilities
        result *= conditionalWord(word, spam)
    return result
```
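Written out, the product in `conditionalEmail` is where the naive independence assumption enters. For an email whose words are B_1 through B_n, the likelihood and the spam decision become:

$$
P(B \mid A_x) \approx \prod_{i=1}^{n} P(B_i \mid A_x),
\qquad
\text{spam} \iff P(A)\prod_{i=1}^{n} P(B_i \mid A) > P(\neg A)\prod_{i=1}^{n} P(B_i \mid \neg A)
$$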

We now have all of the components we need. The final piece is the classifier, which is called for every new email and uses the previous functions to classify it:

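```python
# classifies a new email as spam or not spam
# assumes the email has a .body (list of words), like the training emails
def classify(email):
    # P(B) is dropped, so these scores are proportional to the posteriors
    isSpam = pA * conditionalEmail(email.body, True)      # ∝ P(A | B)
    notSpam = pNotA * conditionalEmail(email.body, False)  # ∝ P(¬A | B)
    return isSpam > notSpam
```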
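For experimenting, the sketch below restates the same steps as one self-contained class without global variables. The class name `NaiveBayesSpam`, the `(words, is_spam)` training format, and the toy data are hypothetical; like the code above, it applies no smoothing, so a word unseen in a class contributes a factor of zero.

```python
from collections import Counter


class NaiveBayesSpam:
    """Bag-of-words Naive Bayes spam filter (no smoothing, as in the text)."""

    def train(self, data: list[tuple[list[str], bool]]) -> None:
        # data: (words, is_spam) pairs
        self.counts = {True: Counter(), False: Counter()}
        num_spam = 0
        for words, is_spam in data:
            if is_spam:
                num_spam += 1
            self.counts[is_spam].update(words)
        # Priors P(A) and P(¬A)
        self.prior = {True: num_spam / len(data),
                      False: (len(data) - num_spam) / len(data)}
        # Total word counts per class
        self.totals = {c: sum(self.counts[c].values()) for c in (True, False)}

    def conditional_word(self, word: str, spam: bool) -> float:
        # P(B_i | A_x); Counter returns 0 for words never seen in this class
        return self.counts[spam][word] / self.totals[spam]

    def conditional_email(self, words: list[str], spam: bool) -> float:
        # P(B | A_x) under the naive independence assumption
        result = 1.0
        for word in words:
            result *= self.conditional_word(word, spam)
        return result

    def classify(self, words: list[str]) -> bool:
        is_spam = self.prior[True] * self.conditional_email(words, True)
        not_spam = self.prior[False] * self.conditional_email(words, False)
        return is_spam > not_spam


# Hypothetical toy training set
data = [
    ("free money now".split(), True),
    ("free prize claim now".split(), True),
    ("meeting notes for monday".split(), False),
    ("lunch on monday".split(), False),
]
model = NaiveBayesSpam()
model.train(data)
print(model.classify("free money".split()))      # True
print(model.classify("monday meeting".split()))  # False
```

Here `model.classify("free monday".split())` scores exactly 0 for both classes and so returns False, a small instance of why the plain product needs adjusting in practice.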

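However, this code still needs some changes to work reliably. For example, a word that never appeared in one class's training emails gives that class a per-word probability of zero (or, with a plain dictionary lookup, raises a KeyError), which zeroes out the whole product; and multiplying many small probabilities together can underflow to zero.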
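Underflow in the plain product is easy to reproduce. The sketch below uses hypothetical per-word probabilities for a long email; summing logarithms, shown for comparison, is one common remedy, offered here as an assumption rather than as the specific change the text has in mind.

```python
import math

# Hypothetical long email: 400 words, each with P(B_i | A_x) = 0.001
word_probs = [0.001] * 400

product = 1.0
for p in word_probs:
    product *= p  # true value is 1e-1200, far below the smallest float

# Log-space score: log of the product, computed as a sum of logs
log_score = sum(math.log(p) for p in word_probs)

print(product)    # 0.0 (underflow)
print(log_score)  # about -2763.1, still comparable between classes
```

Because the logarithm is monotonic, comparing log P(A) plus the summed log-likelihoods for each class gives the same decision as comparing the products, without underflowing.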