Some websites restrict access or display different content based on the browser or device you're using to view them. For example, a website may show a mobile-oriented theme to users browsing from an iPhone, or display a warning to users running an old and vulnerable version of Internet Explorer. These alternative versions can be a good place to look for vulnerabilities, because they may have been tested less rigorously than the main site, or even forgotten about by the developers.

In this recipe, we will show you how to spoof your user agent so that you appear to the website to be using a different device, in an attempt to uncover alternative content:

```python
import hashlib

import requests

# Friendly name -> User-Agent string to send
user_agents = {
    'Chrome on Windows 8.1': 'Mozilla/5.0 (Windows NT 6.3; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/40.0.2214.115 Safari/537.36',
    'Safari on iOS': 'Mozilla/5.0 (iPhone; CPU iPhone OS 8_1_3 like Mac OS X) AppleWebKit/600.1.4 (KHTML, like Gecko) Version/8.0 Mobile/12B466 Safari/600.1.4',
    'IE6 on Windows XP': 'Mozilla/5.0 (Windows; U; MSIE 6.0; Windows NT 5.1; SV1; .NET CLR 2.0.50727)',
    'Googlebot': 'Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)',
}

# Request the page once per user agent
responses = {}
for name, agent in user_agents.items():
    headers = {'User-Agent': agent}
    req = requests.get('http://packtpub.com', headers=headers)
    responses[name] = req

# Hash each response body so the versions can be compared easily
md5s = {}
for name, response in responses.items():
    md5s[name] = hashlib.md5(response.text.encode('utf-8')).hexdigest()

# Compare every response against the Chrome baseline
for name, md5 in md5s.items():
    if name != 'Chrome on Windows 8.1':
        if md5 != md5s['Chrome on Windows 8.1']:
            print(name, 'differs from baseline')
        else:
            print('No alternative site found via User-Agent spoofing:', md5)
```

We first set up a dictionary of user agents, using a friendly name as each key:

```python
user_agents = {
    'Chrome on Windows 8.1': 'Mozilla/5.0 (Windows NT 6.3; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/40.0.2214.115 Safari/537.36',
    'Safari on iOS': 'Mozilla/5.0 (iPhone; CPU iPhone OS 8_1_3 like Mac OS X) AppleWebKit/600.1.4 (KHTML, like Gecko) Version/8.0 Mobile/12B466 Safari/600.1.4',
    'IE6 on Windows XP': 'Mozilla/5.0 (Windows; U; MSIE 6.0; Windows NT 5.1; SV1; .NET CLR 2.0.50727)',
    'Googlebot': 'Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)',
}
```

There are four user agents here: Chrome on Windows 8.1, Safari on iOS, Internet Explorer 6 on Windows XP, and finally Googlebot. Together they cover a wide range of browsers, and each one might plausibly be served different content. The last entry, Googlebot, is the crawler Google sends out when spidering pages for its search engine.
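These four strings are likely to trigger different responses for a reason, and a sketch of the kind of User-Agent sniffing a server might perform makes that reason clearer. The function `choose_variant` and its variant names are hypothetical and exist only for illustration. Real sites apply their own rules, which are often much messier.

```python
# Hypothetical server-side logic: pick a page variant from the User-Agent.
# The rules and variant names are illustrative assumptions, not any real site's.

def choose_variant(user_agent: str) -> str:
    ua = user_agent.lower()
    if "googlebot" in ua:
        return "crawler"          # e.g. a pre-rendered page for search engines
    if "msie 6.0" in ua:
        return "legacy-warning"   # e.g. an "upgrade your browser" banner
    if "iphone" in ua or "mobile" in ua:
        return "mobile"           # e.g. a mobile-oriented theme
    return "desktop"


user_agents = {
    'Chrome on Windows 8.1': 'Mozilla/5.0 (Windows NT 6.3; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/40.0.2214.115 Safari/537.36',
    'Safari on iOS': 'Mozilla/5.0 (iPhone; CPU iPhone OS 8_1_3 like Mac OS X) AppleWebKit/600.1.4 (KHTML, like Gecko) Version/8.0 Mobile/12B466 Safari/600.1.4',
    'IE6 on Windows XP': 'Mozilla/5.0 (Windows; U; MSIE 6.0; Windows NT 5.1; SV1; .NET CLR 2.0.50727)',
    'Googlebot': 'Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)',
}

for name, agent in user_agents.items():
    print(f"{name}: {choose_variant(agent)}")
# Chrome on Windows 8.1: desktop
# Safari on iOS: mobile
# IE6 on Windows XP: legacy-warning
# Googlebot: crawler
```

The recipe works from the client side against rules like these. It cannot see the server's logic, but any branch that changes the HTML will show up as a different hash.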

The next part loops through each of the user agents and sets the User-Agent header in the request:

```python
responses = {}
for name, agent in user_agents.items():
    headers = {'User-Agent': agent}
```

The next section sends the HTTP request using the familiar requests library. It stores each response in the responses dictionary, using the friendly name as the key:

```python
    req = requests.get('http://packtpub.com', headers=headers)
    responses[name] = req
```

The next part of the code creates an md5s dictionary and then iterates through the responses, taking the body of each one from response.text. It generates an MD5 hash of that content and stores it in the md5s dictionary:

```python
md5s = {}
for name, response in responses.items():
    md5s[name] = hashlib.md5(response.text.encode('utf-8')).hexdigest()
```

The final part of the code iterates through the md5s dictionary and compares each digest with the baseline request. In this recipe, the baseline is Chrome on Windows 8.1:

```python
for name, md5 in md5s.items():
    if name != 'Chrome on Windows 8.1':
        if md5 != md5s['Chrome on Windows 8.1']:
            print(name, 'differs from baseline')
        else:
            print('No alternative site found via User-Agent spoofing:', md5)
```

We hash the response text so that each response is reduced to a short, fixed-length digest (32 hexadecimal characters for MD5). This keeps the md5s dictionary small and turns each comparison into a simple string check. You could compare the response bodies directly instead. The outcome would be the same, but the digests are more convenient to store, print, and compare.
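There is one caveat. Many pages embed values that change on every request, such as CSRF tokens, timestamps, or session IDs. Two requests with the same User-Agent can therefore still produce different MD5 digests. A minimal sketch of one workaround is to normalize the body before hashing it. The regular expression and the sample HTML below are illustrative assumptions, and you would need to adapt the pattern to whatever volatile values the target actually emits.

```python
import hashlib
import re

# Assumed pattern for a volatile value (a hidden CSRF token); adjust per target.
VOLATILE = re.compile(r'name="csrf_token" value="[^"]*"')


def body_digest(text: str) -> str:
    """MD5 of the body after blanking out values that change per request."""
    normalized = VOLATILE.sub('name="csrf_token" value=""', text)
    return hashlib.md5(normalized.encode('utf-8')).hexdigest()


# Two fetches of the same desktop page that differ only in their token...
desktop_1 = '<form><input name="csrf_token" value="a1b2"></form><p>Desktop</p>'
desktop_2 = '<form><input name="csrf_token" value="z9y8"></form><p>Desktop</p>'
# ...and a genuinely different mobile variant.
mobile = '<form><input name="csrf_token" value="q7w6"></form><p>Mobile</p>'

print(body_digest(desktop_1) == body_digest(desktop_2))  # True: only the token differed
print(body_digest(desktop_1) == body_digest(mobile))     # False: real content difference
```

You can also run a simple sanity check before trusting any "differs from baseline" result. Request the baseline user agent twice and confirm that its two digests match.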

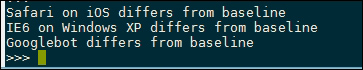

This script will print out the user agent friendly name if the response from the web server is different from the Chrome on Windows 8.1 baseline response, as seen in the following screenshot:

This recipe relies on being able to manipulate the headers of HTTP requests. Check out the Header-based Cross-site scripting and Shellshock checking sections in Chapter 3, Vulnerability Identification, for more examples of data that can be passed in headers.
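You can try the technique without sending traffic to a third-party site. The following sketch starts a throwaway local server that varies its response by User-Agent, then runs the same hash-and-compare logic against it. It uses only the standard library, with `http.server` for the server and `urllib.request` in place of requests. The server class `SniffingHandler` and its branching rules are hypothetical and were invented for this demonstration.

```python
import hashlib
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class SniffingHandler(BaseHTTPRequestHandler):
    """Hypothetical test server that serves different HTML by User-Agent."""

    def do_GET(self):
        ua = self.headers.get('User-Agent', '')
        if 'iPhone' in ua:
            body = b'<p>Mobile theme</p>'
        elif 'MSIE 6.0' in ua:
            body = b'<p>Please upgrade your browser</p>'
        else:
            body = b'<p>Desktop theme</p>'
        self.send_response(200)
        self.send_header('Content-Type', 'text/html; charset=utf-8')
        self.send_header('Content-Length', str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo output quiet


def fetch_md5(url, agent):
    """Fetch url with a spoofed User-Agent and return the MD5 of the raw body."""
    req = urllib.request.Request(url, headers={'User-Agent': agent})
    with urllib.request.urlopen(req, timeout=5) as resp:
        return hashlib.md5(resp.read()).hexdigest()


user_agents = {
    'Chrome on Windows 8.1': 'Mozilla/5.0 (Windows NT 6.3; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/40.0.2214.115 Safari/537.36',
    'Safari on iOS': 'Mozilla/5.0 (iPhone; CPU iPhone OS 8_1_3 like Mac OS X) AppleWebKit/600.1.4 (KHTML, like Gecko) Version/8.0 Mobile/12B466 Safari/600.1.4',
    'IE6 on Windows XP': 'Mozilla/5.0 (Windows; U; MSIE 6.0; Windows NT 5.1; SV1; .NET CLR 2.0.50727)',
    'Googlebot': 'Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)',
}

# Port 0 asks the OS for any free port; serve requests on a background thread.
server = HTTPServer(('127.0.0.1', 0), SniffingHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()
url = 'http://127.0.0.1:%d/' % server.server_address[1]

md5s = {name: fetch_md5(url, agent) for name, agent in user_agents.items()}
server.shutdown()
server.server_close()

baseline = 'Chrome on Windows 8.1'
differs = [name for name, md5 in md5s.items()
           if name != baseline and md5 != md5s[baseline]]
for name in differs:
    print(name, 'differs from baseline')
# Safari on iOS differs from baseline
# IE6 on Windows XP differs from baseline
```

Googlebot falls through to the desktop branch of this hypothetical server, so it matches the baseline and is not reported.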