Description

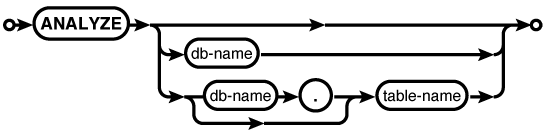

The ANALYZE command computes and records

statistical data about database indexes. If available, this data

is used by the query optimizer to compute the most efficient

query plan.

If no parameters are given, statistics will be computed for all indexes in all attached databases. You can also limit analysis to just those indexes in a specific database, or just those indexes associated with a specific table.

The statistical data is not automatically updated as the index values change. If the contents or distribution of an index changes significantly, it would be wise to reanalyze the appropriate database or table. Another option would be to simply delete the statistical data, as no data is usually better than incorrect data.

Data generated by ANALYZE is stored in one or more

tables named sqlite_stat,

starting with #sqlite_stat1.

These tables cannot be manually dropped, but the data inside can

be altered with standard SQL commands. Generally, this is not

recommended, except to delete any ANALYZE data that is no longer valid or

desired.

By default, the ANALYZE command generates data

on the number of entries in an index, as well as the ratio of

unique values to total values. This ratio is computed by

dividing the total number of entries by the number of unique

values, rounding up to the nearest integer. This data is used to

compute the cost difference between a full-table scan and an

indexed lookup.

If SQLite is compiled with the

SQLITE_ENABLE_STAT2

directive, then ANALYZE will

also generate an sqlite_stat2

table that contains a histogram of the index distribution. This

is used to compute the cost of targeting a range of

values.

There is one known issue with the

ANALYZE command. When

generating the sqlite_stat1

table, ANALYZE must calculate

the number of unique values in an index. To accomplish this, the

ANALYZE command uses

the standard SQL test for equivalence between index values. This

means that if a single-column index contains multiple NULL

entries, they will each be considered a nonequivalent, unique

value (since NULL != NULL).

As a result, if an index contains a large number of NULL values,

the ANALYZE data will

incorrectly consider the index to have more uniqueness than it

actually does. This can incorrectly influence the optimizer to

pick an index-based lookup when a full-table scan would be less

expensive. Due to this behavior, if an index contains a

noticeable percentage of NULL entries (say, 10 to 15% or more)

and it is common to ask for all of the NULL (or non-NULL) rows,

it is recommended that the ANALYZE data for that index is

discarded.