Practical Techniques for Improving JVM Application Performance

Copyright © 2018 Benjamin J. Evans, James Gough, and Chris Newland. All rights reserved.

Printed in the United States of America.

Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472.

O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (http://oreilly.com/safari). For more information, contact our corporate/institutional sales department: 800-998-9938 or corporate@oreilly.com.

See http://oreilly.com/catalog/errata.csp?isbn=9781492025795 for release details.

The O’Reilly logo is a registered trademark of O’Reilly Media, Inc. Optimizing Java, the cover image, and related trade dress are trademarks of O’Reilly Media, Inc.

While the publisher and the authors have used good faith efforts to ensure that the information and instructions contained in this work are accurate, the publisher and the authors disclaim all responsibility for errors or omissions, including without limitation responsibility for damages resulting from the use of or reliance on this work. Use of the information and instructions contained in this work is at your own risk. If any code samples or other technology this work contains or describes is subject to open source licenses or the intellectual property rights of others, it is your responsibility to ensure that your use thereof complies with such licenses and/or rights.

978-1-492-02579-5

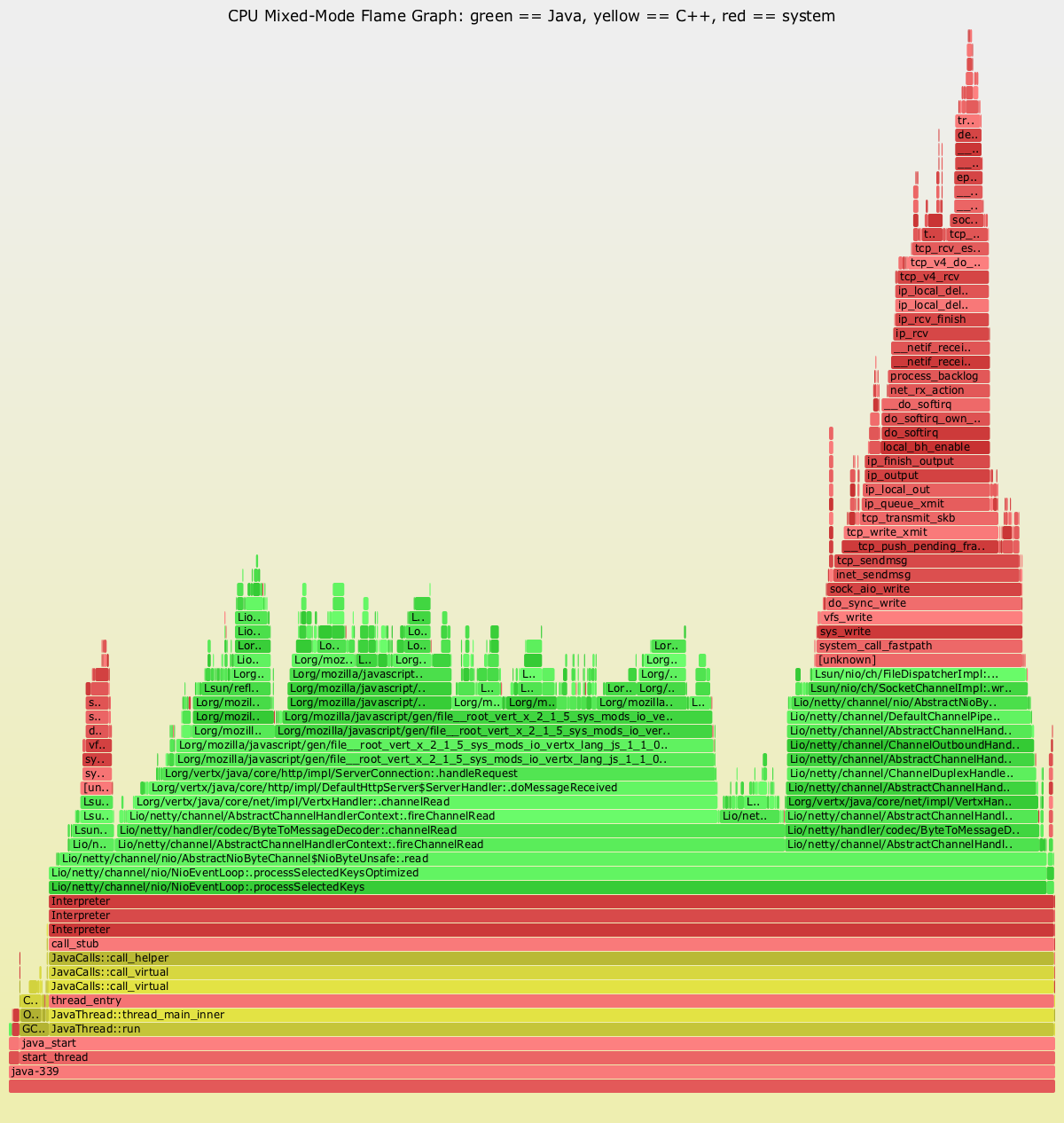

[LSI]

This book is dedicated to my wife, Anna, who not only illustrated it beautifully, but also helped edit portions and, crucially, was often the first person I bounced ideas off.

—Benjamin J. Evans

This book is dedicated to my incredible family Megan, Emily, and Anna. Writing would not have been possible without their help and support. I’d also like to thank my parents, Heather and Paul, for encouraging me to learn and their constant support.

I’d also like to thank Benjamin Evans for his guidance and friendship—it’s been a pleasure working together again.

—James Gough

This book is dedicated to my wife, Reena, who supported and encouraged my efforts and to my sons, Joshua and Hugo, may they grow up with inquisitive minds.

—Chris Newland

How do you define performance?

Most developers, when asked about the performance of their application, will assume some measure of speed is requested. Something like transactions per second, or gigabytes of data processed…getting a lot of work done in the shortest amount of time possible. If you’re an application architect, you may measure performance in broader metrics. You may be more concerned about resource utilization than straight-line execution. You might pay more attention to the performance of connections between services than of the services themselves. If you make business decisions for your company, application performance will probably not be measured in time as often as it is measured in dollars. You may argue with developers and architects about resource allocation, weighing the cost of devops against the time it takes to do the company’s work.

And regardless of which role you identify with, all these metrics are important.

I started out developing Java applications in 1996. I had just moved from my first job writing AppleScript CGIs for the University of Minnesota’s business school to maintaining server-side Perl applications for the web development team. Java was very new then—the first stable version, 1.0.2, was released earlier that year—and I was tasked with finding something useful to build.

Back in those days, the best way to get performance out of a Java application was to write it in some other language. This was before Java had a Just-in-Time (JIT) compiler, before parallel and concurrent garbage collectors, and long before the server side would become dominated by Java technology. But many of us wanted to use Java, and we developed all sorts of tricks to make our code run well. We wrote gigantic methods to avoid method dispatch overhead. We pooled and reused objects because garbage collection was slow and disruptive. We used lots of global state and static methods. We wrote truly awful Java code, but it worked…for a little while.

In 1999, things started to change.

After years struggling to use Java for anything demanding speed, JIT technologies started to reach us. With compilers that could inline methods, the number of method calls became less important than breaking up our giant monoliths into smaller pieces. We gleefully embraced object-oriented design, splitting our methods into tiny chunks and wrapping interfaces around everything. We marveled at how every release of Java would run things just a little bit better, because we were writing good Java code and the JIT compiler loved it. Java soared past other technologies on the server, leading us to build larger and more complex apps with richer abstractions.

At the same time, garbage collectors were rapidly improving. Now the overhead of pooling would very frequently overshadow the cost of allocation. Many garbage collectors offered multithreaded operation, and we started to see low-pause, nearly concurrent GCs that stayed out of our applications’ way. The standard practice moved toward a carefree creating and throwing away of objects with the promise that a sufficiently smart GC would eventually make it all OK. And it worked…for a little while.

The problem with technology is that it always invalidates itself. As JIT and GC technologies have improved, the paths to application performance have become tricky to navigate. Even though JVMs can optimize our code and make objects almost free, the demands of applications and users continue to grow.

Some of the time, maybe even most of the time, the “good” coding patterns prevail: small methods inline properly, interface and type checks become inexpensive, native code produced by the JIT compiler is compact and efficient. But other times we need to hand-craft our code, dial back abstractions and architecture in deference to the limitations of the compiler and CPU. Some of the time, objects really are free and we can ignore the fact that we’re consuming memory bandwidth and GC cycles. Other times we’re dealing with terabyte-scale (or larger) datasets that put stress on even the best garbage collectors and memory subsystems.

The answer to the performance question these days is to know your tools. And frequently, that means knowing not just how Java the language works, but also how JVM libraries, memory, the compiler, GCs, and the hardware your apps run on are interacting. In my work on the JRuby project, I’ve learned an immutable truth about the JVM: there’s no single solution for all performance problems, but for all performance problems there are solutions. The trick is finding those solutions and piecing together the ones that meet your needs best. Now you have a secret weapon in these performance battles: the book you are about to read.

Turn the page, friends, and discover the wealth of tools and techniques available to you. Learn how to balance application design with available resources. Learn how to monitor and tune the JVM. Learn how to make use of the latest Java technologies that are more efficient than old libraries and patterns. Learn how to make Java fly.

It’s an exciting time to be a Java developer, and there have never been so many opportunities to build efficient and responsive applications on the Java platform. Let’s get started.

The following typographical conventions are used in this book:

Italic: Indicates new terms, URLs, email addresses, filenames, and file extensions.

Constant width: Used for program listings, as well as within paragraphs to refer to program elements such as variable or function names, databases, data types, environment variables, statements, and keywords.

<constant width> in angle brackets: Shows text that should be replaced with user-supplied values or by values determined by context.

This element signifies a tip or suggestion.

This element signifies a general note.

This element indicates a warning or caution.

Supplemental material (code examples, exercises, etc.) is available for download at http://bit.ly/optimizing-java-1e-code-examples.

This book is here to help you get your job done. In general, if example code is offered with this book, you may use it in your programs and documentation. You do not need to contact us for permission unless you’re reproducing a significant portion of the code. For example, writing a program that uses several chunks of code from this book does not require permission. Selling or distributing a CD-ROM of examples from O’Reilly books does require permission. Answering a question by citing this book and quoting example code does not require permission. Incorporating a significant amount of example code from this book into your product’s documentation does require permission.

We appreciate, but do not require, attribution. An attribution usually includes the title, author, publisher, and ISBN. For example: “Optimizing Java by Benjamin J. Evans, James Gough, and Chris Newland (O’Reilly). Copyright 2018 Benjamin J. Evans, James Gough, and Chris Newland, 978-1-492-02579-5.”

If you feel your use of code examples falls outside fair use or the permission given above, feel free to contact us at permissions@oreilly.com.

Safari (formerly Safari Books Online) is a membership-based training and reference platform for enterprise, government, educators, and individuals.

Members have access to thousands of books, training videos, Learning Paths, interactive tutorials, and curated playlists from over 250 publishers, including O’Reilly Media, Harvard Business Review, Prentice Hall Professional, Addison-Wesley Professional, Microsoft Press, Sams, Que, Peachpit Press, Adobe, Focal Press, Cisco Press, John Wiley & Sons, Syngress, Morgan Kaufmann, IBM Redbooks, Packt, Adobe Press, FT Press, Apress, Manning, New Riders, McGraw-Hill, Jones & Bartlett, and Course Technology, among others.

For more information, please visit http://oreilly.com/safari.

Please address comments and questions concerning this book to the publisher: O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472.

We have a web page for this book, where we list errata, examples, and any additional information. You can access this page at http://bit.ly/optimizing-java.

To comment or ask technical questions about this book, send email to bookquestions@oreilly.com.

For more information about our books, courses, conferences, and news, see our website at http://www.oreilly.com.

Find us on Facebook: http://facebook.com/oreilly

Follow us on Twitter: http://twitter.com/oreillymedia

Watch us on YouTube: http://www.youtube.com/oreillymedia

The authors would like to thank a large number of people for their invaluable assistance.

For writing the foreword:

Charlie Nutter

For providing highly specialist technical help, including information and knowledge not available anywhere else:

Christine Flood

Chris Seaton

Kirk Pepperdine

Simon Ritter

Monica Beckwith

David Jones

Richard Warburton

Stephen Connolly

Jaroslav Tulach

For general detail, encouragement, advice and introductions:

George Ball

Steve Poole

Richard Pollock

Andrew Binstock

Our technical reviewers:

Michael Hsu

Dmitry Vyazelenko

Julian Templeman

Alex Blewitt

The O’Reilly Team:

Virginia Wilson

Susan Conant

Colleen Cole

Rachel Monaghan

Nan Barber

Brian Foster

Lindsay Ventimiglia

Maureen Spencer

Heather Scherer

Optimizing the performance of Java (or any other sort of code) is often seen as a Dark Art. There’s a mystique about performance analysis—it’s commonly viewed as a craft practiced by the “lone hacker, who is tortured and deep thinking” (one of Hollywood’s favorite tropes about computers and the people who operate them). The image is one of a single individual who can see deeply into a system and come up with a magic solution that makes the system work faster.

This image is often coupled with the unfortunate (but all-too-common) situation where performance is a second-class concern of the software teams. This sets up a scenario where analysis is only done once the system is already in trouble, and so needs a performance “hero” to save it. The reality, however, is a little different.

The truth is that performance analysis is a weird blend of hard empiricism and squishy human psychology. What matters is, at one and the same time, the absolute numbers of observable metrics and how the end users and stakeholders feel about them. The resolution of this apparent paradox is the subject of the rest of this book.

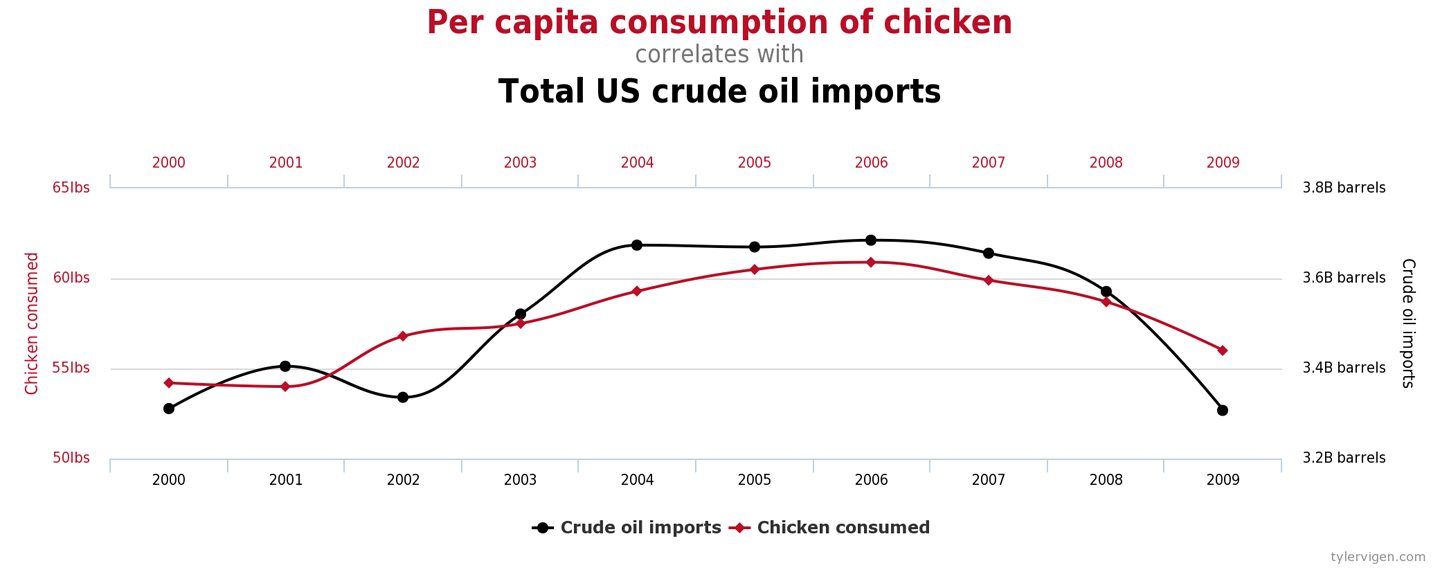

For many years, one of the top three hits on Google for “Java performance tuning” was an article from 1997–8, which had been ingested into the index very early in Google’s history. The page had presumably stayed close to the top because its initial ranking served to actively drive traffic to it, creating a feedback loop.

The page housed advice that was completely out of date, no longer true, and in many cases detrimental to applications. However, its favored position in the search engine results caused many, many developers to be exposed to terrible advice.

For example, very early versions of Java had terrible method dispatch performance. As a workaround, some Java developers advocated avoiding writing small methods and instead writing monolithic methods. Of course, over time, the performance of virtual dispatch greatly improved. Not only that, but with modern Java Virtual Machines (JVMs) and especially automatic managed inlining, virtual dispatch has now been eliminated at the majority of call sites. Code that followed the “lump everything into one method” advice is now at a substantial disadvantage, as it is very unfriendly to modern Just-in-Time (JIT) compilers.

There’s no way of knowing how much damage was done to the performance of applications that were subjected to the bad advice, but this case neatly demonstrates the dangers of not using a quantitative and verifiable approach to performance. It also provides another excellent example of why you shouldn’t believe everything you read on the internet.

The execution speed of Java code is highly dynamic and fundamentally depends on the underlying Java Virtual Machine. An old piece of Java code may well execute faster on a more recent JVM, even without recompiling the Java source code.

As you might imagine, for this reason (and others we will discuss later) this book is not a cookbook of performance tips to apply to your code. Instead, we focus on a range of aspects that come together to produce good performance engineering:

Performance methodology within the overall software lifecycle

Theory of testing as applied to performance

Measurement, statistics, and tooling

Analysis skills (both systems and data)

Underlying technology and mechanisms

Later in the book, we will introduce some heuristics and code-level techniques for optimization, but these all come with caveats and tradeoffs that the developer should be aware of before using them.

Please do not skip ahead to those sections and start applying the techniques detailed without properly understanding the context in which the advice is given. All of these techniques are capable of doing more harm than good if you lack a proper understanding of how they should be applied.

In general, there are:

No magic “go faster” switches for the JVM

No “tips and tricks” to make Java run faster

No secret algorithms that have been hidden from you

As we explore our subject, we will discuss these misconceptions in more detail, along with some other common mistakes that developers often make when approaching Java performance analysis and related issues. Still here? Good. Then let’s talk about performance.

To understand why Java performance is the way that it is, let’s start by considering a classic quote from James Gosling, the creator of Java:

Java is a blue collar language. It’s not PhD thesis material but a language for a job.

That is, Java has always been an extremely practical language. Its attitude to performance was initially that as long as the environment was fast enough, then raw performance could be sacrificed if developer productivity benefited. It was therefore not until relatively recently, with the increasing maturity and sophistication of JVMs such as HotSpot, that the Java environment became suitable for high-performance computing applications.

This practicality manifests itself in many ways in the Java platform, but one of the most obvious is the use of managed subsystems. The idea is that the developer gives up some aspects of low-level control in exchange for not having to worry about some of the details of the capability under management.

The most obvious example of this is, of course, memory management. The JVM provides automatic memory management in the form of a pluggable garbage collection subsystem, so that memory does not have to be manually tracked by the programmer.

Managed subsystems occur throughout the JVM and their existence introduces extra complexity into the runtime behavior of JVM applications.

As we will discuss in the next section, the complex runtime behavior of JVM applications requires us to treat our applications as experiments under test. This leads us to think about the statistics of observed measurements, and here we make an unfortunate discovery.

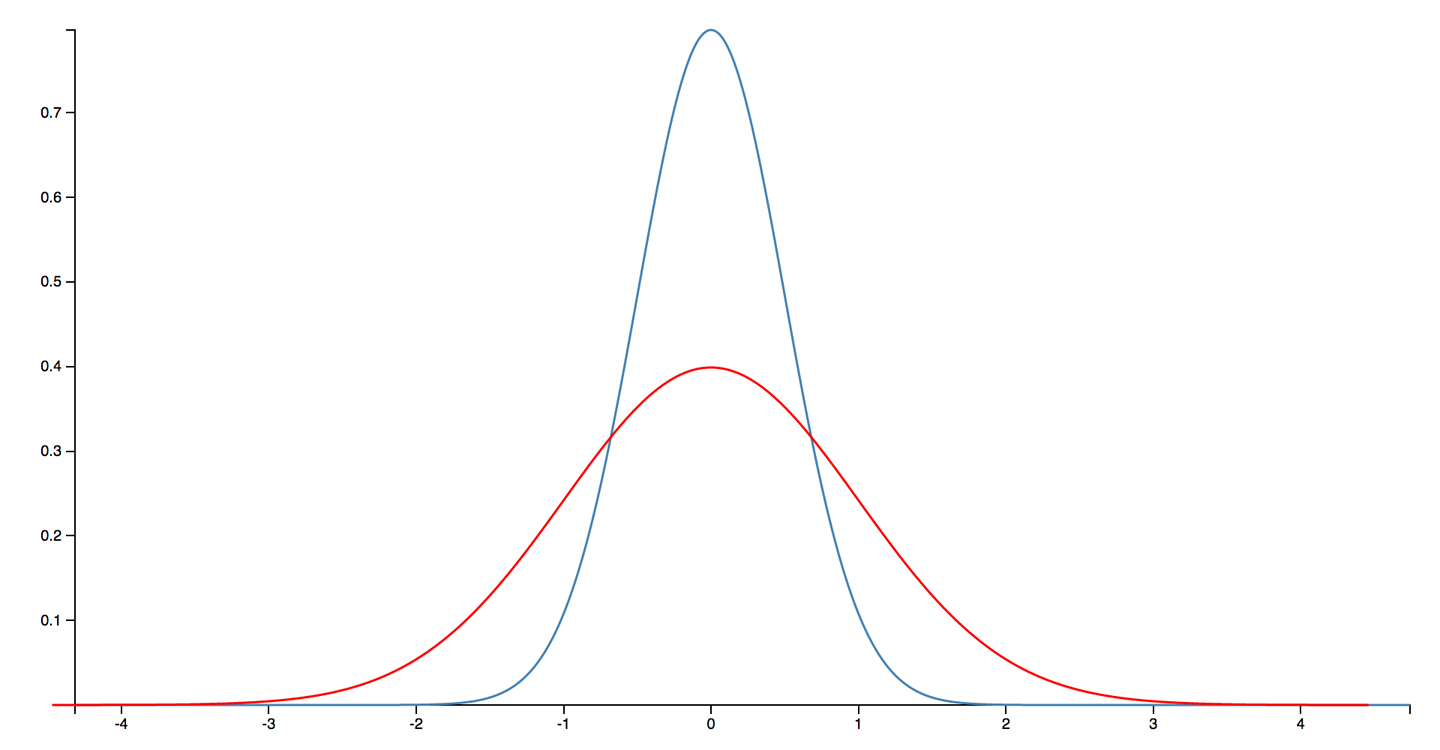

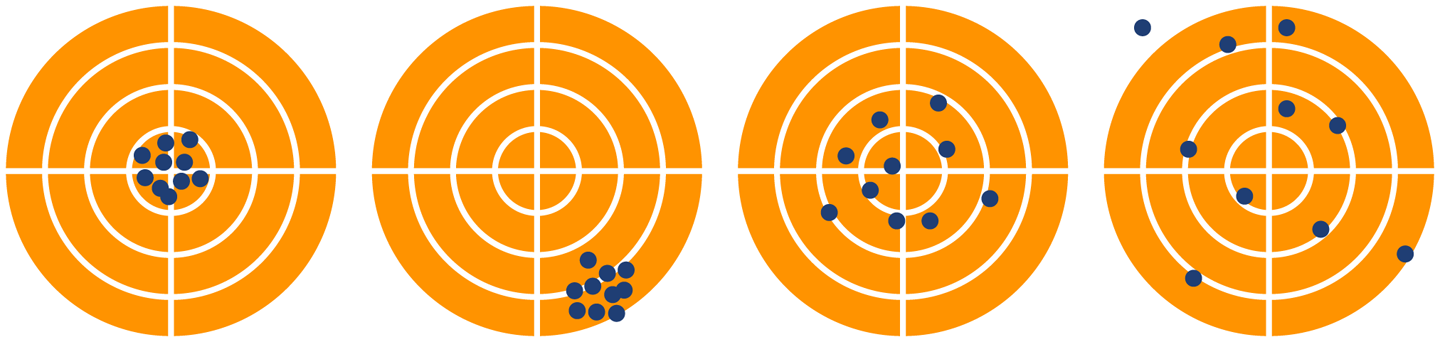

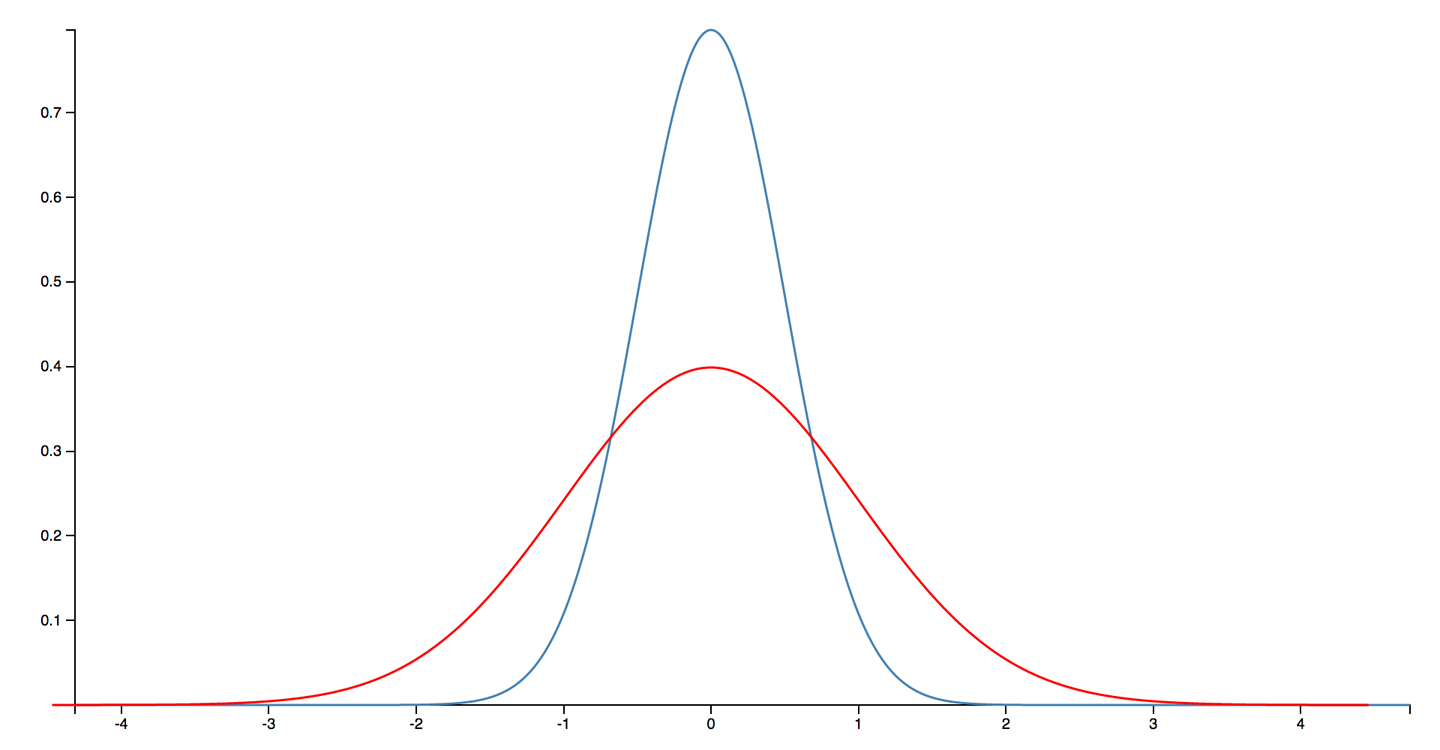

The observed performance measurements of JVM applications are very often not normally distributed. This means that elementary statistical techniques (e.g., standard deviation and variance) are ill-suited for handling results from JVM applications. This is because many basic statistics methods contain an implicit assumption about the normality of results distributions.

One way to understand this is that for JVM applications outliers can be very significant (in a low-latency trading application, for example). This means that sampling of measurements is also problematic, as it can easily miss the exact events that have the most importance.
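To make the point about outliers concrete, here is a minimal sketch that summarizes a set of latency samples by both mean and percentile. The class name `LatencySummary`, the nearest-rank percentile method, and the sample values are all illustrative assumptions rather than anything prescribed by this book.

```java
import java.util.Arrays;

// Illustrative sketch: compares the mean with percentiles for a skewed sample.
final class LatencySummary {

    /** Arithmetic mean of the samples. */
    static double mean(long[] samples) {
        return Arrays.stream(samples).average().orElse(Double.NaN);
    }

    /** Nearest-rank percentile of the samples, for p in (0, 100]. */
    static long percentile(long[] samples, double p) {
        long[] sorted = samples.clone();
        Arrays.sort(sorted);
        int rank = (int) Math.ceil(p * sorted.length / 100.0);
        return sorted[Math.max(rank, 1) - 1];
    }

    public static void main(String[] args) {
        // Hypothetical latencies in microseconds: 98 fast requests, 2 very slow ones
        long[] samples = new long[100];
        Arrays.fill(samples, 100L);
        samples[98] = 50_000L;
        samples[99] = 80_000L;

        System.out.println("mean = " + mean(samples));
        System.out.println("p50  = " + percentile(samples, 50));
        System.out.println("p99  = " + percentile(samples, 99));
        System.out.println("max  = " + percentile(samples, 100));
    }
}
```

With these made-up numbers the mean is 1,398 microseconds, even though 98 of the 100 requests took 100 microseconds. The median describes the typical request and the high percentiles describe the outliers; the mean describes neither.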

Finally, a word of caution. It is very easy to be misled by Java performance measurements. The complexity of the environment means that it is very hard to isolate individual aspects of the system.

Measurement also has an overhead, and frequent sampling (or recording every result) can have an observable impact on the performance numbers being recorded. The nature of Java performance numbers requires a certain amount of statistical sophistication, and naive techniques frequently produce incorrect results when applied to Java/JVM applications.

Java/JVM software stacks are, like most modern software systems, very complex. In fact, due to the highly optimizing and adaptive nature of the JVM, production systems built on top of the JVM can have some incredibly subtle and intricate performance behavior. This complexity has been made possible by Moore’s Law and the unprecedented growth in hardware capability that it represents.

The most amazing achievement of the computer software industry is its continuing cancellation of the steady and staggering gains made by the computer hardware industry.

Henry Petroski

While some software systems have squandered the historical gains of the industry, the JVM represents something of an engineering triumph. Since its inception in the late 1990s the JVM has developed into a very high-performance, general-purpose execution environment that puts those gains to very good use. The tradeoff, however, is that like any complex, high-performance system, the JVM requires a measure of skill and experience to get the absolute best out of it.

A measurement not clearly defined is worse than useless.

Eli Goldratt

JVM performance tuning is therefore a synthesis of technology, methodology, measurable quantities, and tools. Its aim is to effect measurable outputs in a manner desired by the owners or users of a system. In other words, performance is an experimental science that achieves a desired result by:

Defining the desired outcome

Measuring the existing system

Determining what is to be done to achieve the requirement

Undertaking an improvement exercise

Retesting

Determining whether the goal has been achieved

The process of defining and determining desired performance outcomes builds a set of quantitative objectives. It is important to establish what should be measured and record the objectives, which then form part of the project’s artifacts and deliverables. From this, we can see that performance analysis is based upon defining, and then achieving, nonfunctional requirements.

As suggested earlier, this process is not one of reading chicken entrails or any other method of divination. Instead, we rely upon statistics and an appropriate handling of results. In Chapter 5 we will introduce a primer on the basic statistical techniques that are required for accurate handling of data generated from a JVM performance analysis project.

For many real-world projects, a more sophisticated understanding of data and statistics will undoubtedly be required. You are encouraged to view the statistical techniques found in this book as a starting point, rather than a definitive statement.

In this section, we introduce some basic performance metrics. These provide a vocabulary for performance analysis and will allow you to frame the objectives of a tuning project in quantitative terms. These objectives are the nonfunctional requirements that define performance goals. One common basic set of performance metrics is:

Throughput

Latency

Capacity

Utilization

Efficiency

Scalability

Degradation

We will briefly discuss each in turn. Note that for most performance projects, not every metric will be optimized simultaneously. It is far more common to improve only a few metrics in a single performance iteration, and this may be as many as can be tuned at once. In real-world projects, it may well be the case that optimizing one metric comes at the detriment of another metric or group of metrics.

Throughput is a metric that represents the rate of work a system or subsystem can perform. This is usually expressed as number of units of work in some time period. For example, we might be interested in how many transactions per second a system can execute.

For the throughput number to be meaningful in a real performance exercise, it should include a description of the reference platform it was obtained on. For example, the hardware spec, OS, and software stack are all relevant to throughput, as is whether the system under test is a single server or a cluster. In addition, transactions (or units of work) should be the same between tests. Essentially, we should seek to ensure that the workload for throughput tests is kept consistent between runs.
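As a rough illustration of throughput as "units of work per time period," the following sketch times a fixed number of invocations of a task and converts the result to units per second. The class and method names are hypothetical, and the harness is deliberately naive: it does no warmup and takes no account of the measurement pitfalls discussed elsewhere in this book, so it should not be read as a recommended benchmarking technique.

```java
// Illustrative sketch: a naive throughput calculation (not a real benchmark harness).
final class ThroughputMeter {

    /** Units of work per second, given a count and the elapsed time in nanoseconds. */
    static double perSecond(long units, long elapsedNanos) {
        if (elapsedNanos <= 0) {
            throw new IllegalArgumentException("elapsedNanos must be positive");
        }
        return units * 1_000_000_000.0 / elapsedNanos;
    }

    /** Runs the task a fixed number of times and reports the observed throughput. */
    static double measure(Runnable task, int iterations) {
        long start = System.nanoTime();
        for (int i = 0; i < iterations; i++) {
            task.run();
        }
        // Guard against a zero reading on very coarse clocks
        long elapsed = Math.max(System.nanoTime() - start, 1L);
        return perSecond(iterations, elapsed);
    }

    public static void main(String[] args) {
        // Hypothetical unit of work: building and discarding a small string
        double rate = measure(() -> String.valueOf(System.nanoTime()).hashCode(), 1_000_000);
        System.out.printf("approx. %.0f units of work per second%n", rate);
    }
}
```

A number produced this way only means something alongside the hardware, OS, JVM version, and workload definition it was obtained with.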

Performance metrics are sometimes explained via metaphors that evoke plumbing. If a water pipe can produce 100 liters per second, then the volume produced in 1 second (100 liters) is the throughput. In this metaphor, the latency is effectively the length of the pipe. That is, it’s the time taken to process a single transaction and see a result at the other end of the pipe.

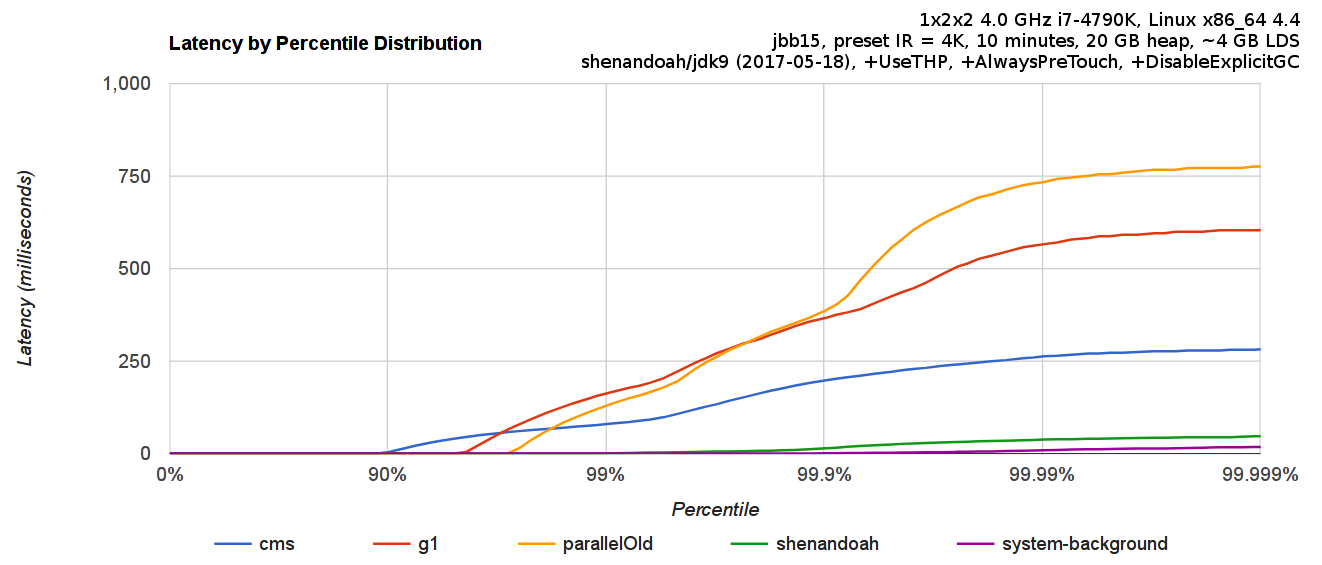

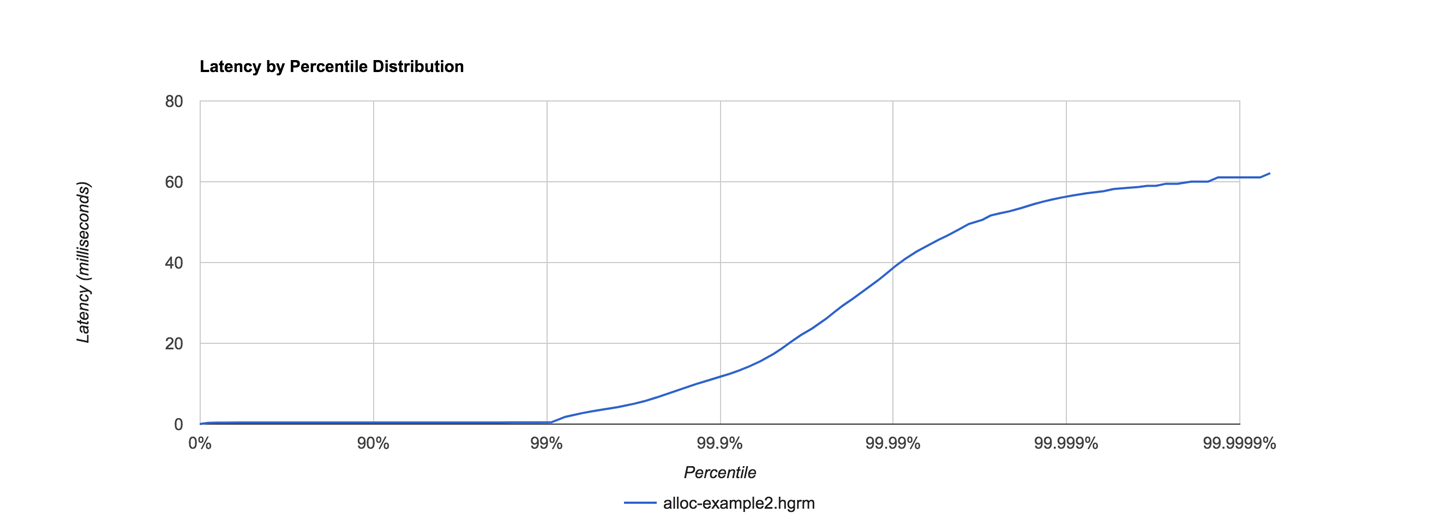

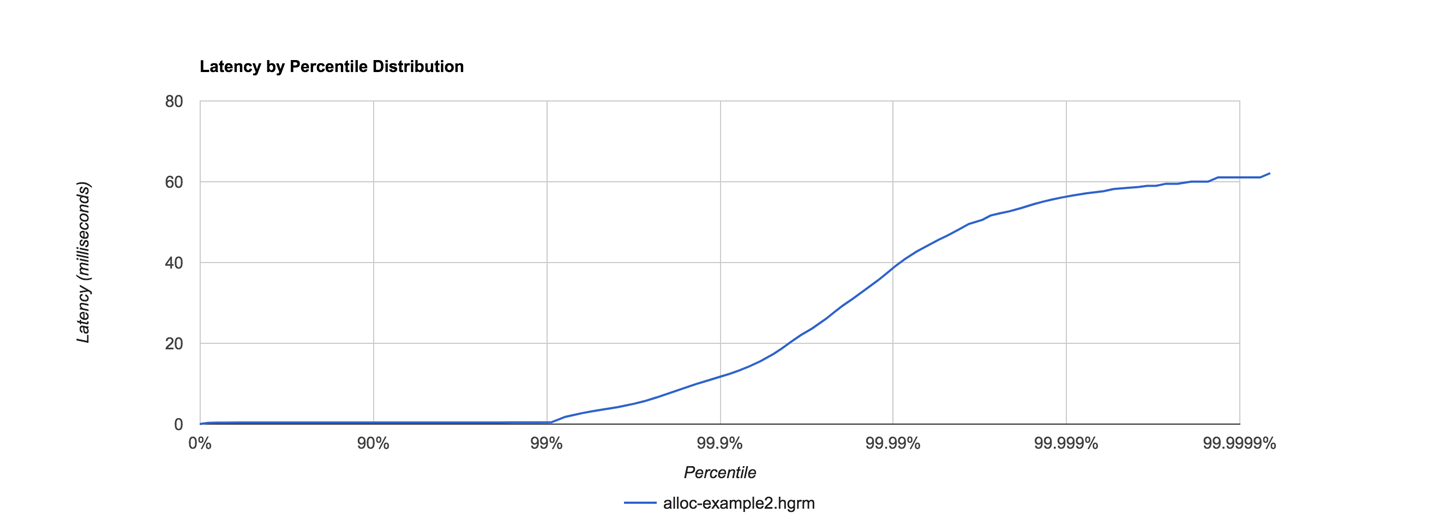

Latency is normally quoted as an end-to-end time. It is dependent on workload, so a common approach is to produce a graph showing latency as a function of increasing workload. We will see an example of this type of graph in “Reading Performance Graphs”.

The capacity is the amount of work parallelism a system possesses—that is, the number of units of work (e.g., transactions) that can be simultaneously ongoing in the system.

Capacity is obviously related to throughput, and we should expect that as the concurrent load on a system increases, throughput (and latency) will be affected. For this reason, capacity is usually quoted as the processing available at a given value of latency or throughput.

One of the most common performance analysis tasks is to achieve efficient use of a system’s resources. Ideally, CPUs should be used for handling units of work, rather than being idle (or spending time handling OS or other housekeeping tasks).

Depending on the workload, there can be a huge difference between the utilization levels of different resources. For example, a computation-intensive workload (such as graphics processing or encryption) may be running at close to 100% CPU but only be using a small percentage of available memory.

Dividing the throughput of a system by the utilized resources gives a measure of the overall efficiency of the system. Intuitively, this makes sense, as requiring more resources to produce the same throughput is one useful definition of being less efficient.

It is also possible, when one is dealing with larger systems, to use a form of cost accounting to measure efficiency. If solution A has a total dollar cost of ownership (TCO) twice that of solution B for the same throughput then it is, clearly, half as efficient.
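The cost-accounting view of efficiency reduces to simple arithmetic. The sketch below uses invented figures (1,000 transactions per second, and TCOs of $200,000 and $100,000) purely for illustration; the class and method names are assumptions.

```java
// Illustrative sketch: efficiency as throughput divided by the resources consumed.
final class Efficiency {

    /** Throughput achieved per unit of resource (CPU cores, dollars of TCO, and so on). */
    static double perUnit(double throughput, double resources) {
        if (resources <= 0.0) {
            throw new IllegalArgumentException("resources must be positive");
        }
        return throughput / resources;
    }

    public static void main(String[] args) {
        // Hypothetical figures: same throughput, solution A costs twice as much as B
        double a = perUnit(1_000.0, 200_000.0);
        double b = perUnit(1_000.0, 100_000.0);
        System.out.println("A relative to B: " + (a / b)); // half as efficient
    }
}
```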

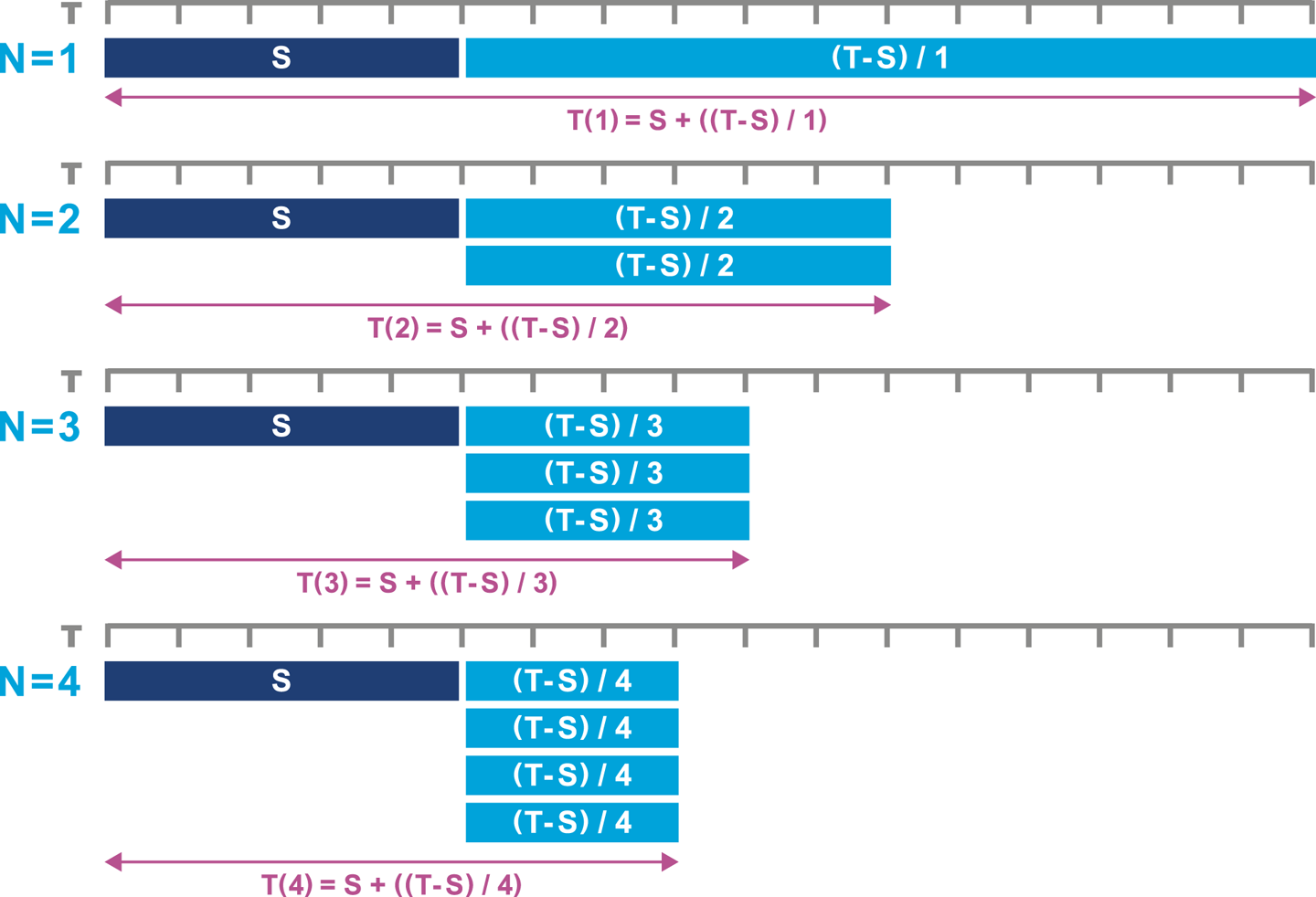

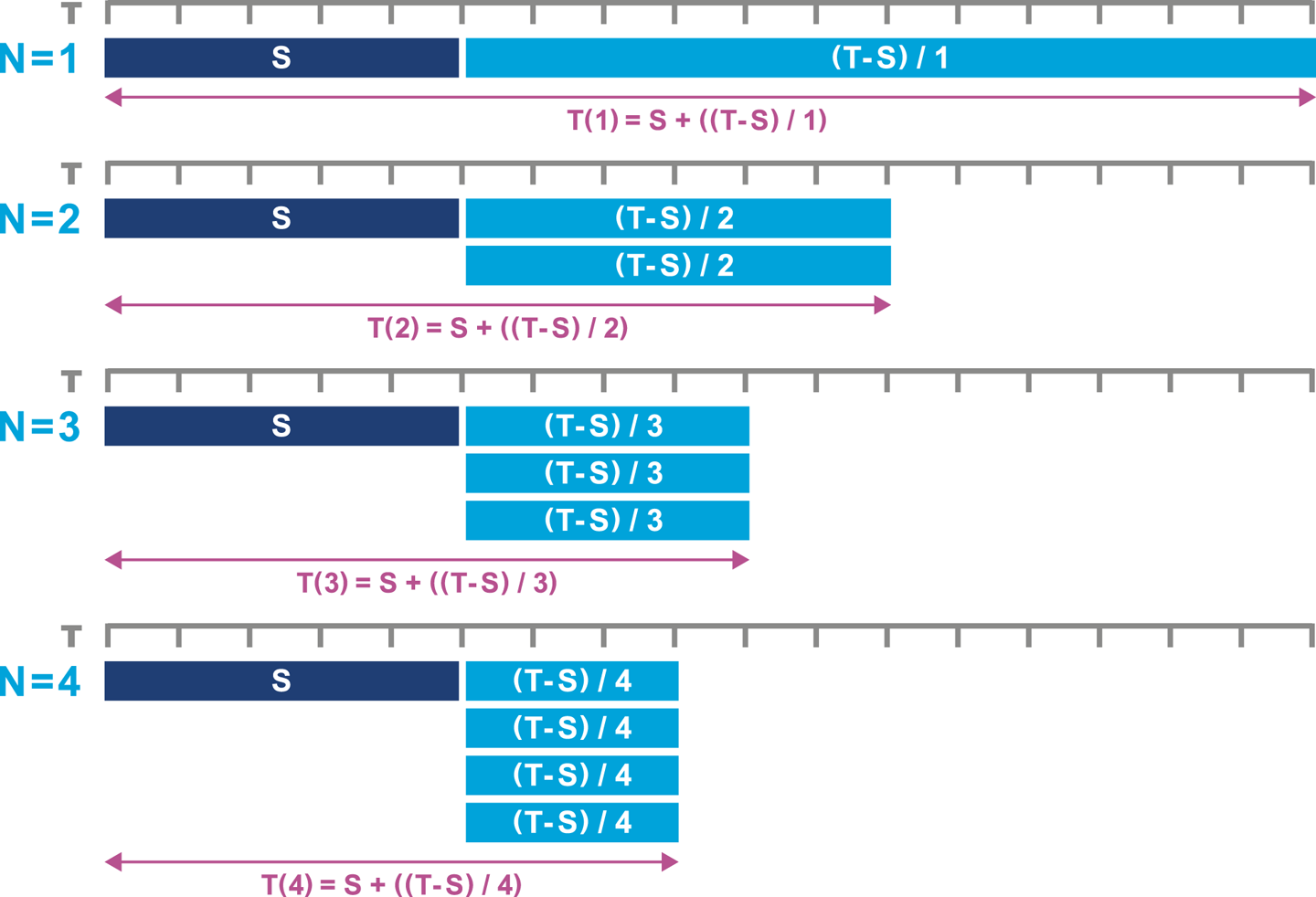

The throughput or capacity of a system depends upon the resources available for processing. The change in throughput as resources are added is one measure of the scalability of a system or application. The holy grail of system scalability is to have throughput change exactly in step with resources.

Consider a system based on a cluster of servers. If the cluster is expanded, for example, by doubling in size, then what throughput can be achieved? If the new cluster can handle twice the volume of transactions, then the system is exhibiting “perfect linear scaling.” This is very difficult to achieve in practice, especially over a wide range of possible loads.

System scalability is dependent upon a number of factors, and is not normally a simple constant factor. It is very common for a system to scale close to linearly for some range of resources, but then at higher loads to encounter some limitation that prevents perfect scaling.
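One simple way to quantify how close a system comes to linear scaling is to compare the ratio of throughputs with the ratio of resources. The "scaling efficiency" name and the numbers below are assumptions made for this sketch; a value of 1.0 would correspond to perfect linear scaling.

```java
// Illustrative sketch: how much of the added resource shows up as added throughput.
final class Scaling {

    /**
     * Ratio of throughput growth to resource growth.
     * 1.0 means perfect linear scaling; lower values mean diminishing returns.
     */
    static double efficiency(double baseThroughput, int baseServers,
                             double newThroughput, int newServers) {
        double throughputRatio = newThroughput / baseThroughput;
        double resourceRatio = (double) newServers / baseServers;
        return throughputRatio / resourceRatio;
    }

    public static void main(String[] args) {
        // Hypothetical cluster: doubling from 4 to 8 servers
        System.out.println(efficiency(1_000.0, 4, 2_000.0, 8)); // perfect linear scaling
        System.out.println(efficiency(1_000.0, 4, 1_700.0, 8)); // less than linear
    }
}
```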

If we increase the load on a system, either by increasing the number of requests (or clients) or by increasing the rate at which requests arrive, then we may see a change in the observed latency and/or throughput.

Note that this change is dependent on utilization. If the system is underutilized, then there should be some slack before observables change, but if resources are fully utilized then we would expect to see throughput stop increasing, or latency increase. These changes are usually called the degradation of the system under additional load.

The behavior of the various performance observables is usually connected in some manner. The details of this connection will depend upon whether the system is running at peak utility. For example, in general, the utilization will change as the load on a system increases. However, if the system is underutilized, then increasing load may not appreciably increase utilization. Conversely, if the system is already stressed, then the effect of increasing load may be felt in another observable.

As another example, scalability and degradation both represent the change in behavior of a system as more load is added. For scalability, as the load is increased, so are available resources, and the central question is whether the system can make use of them. On the other hand, if load is added but additional resources are not provided, degradation of some performance observable (e.g., latency) is the expected outcome.

In rare cases, additional load can cause counterintuitive results. For example, if the change in load causes some part of the system to switch to a more resource-intensive but higher-performance mode, then the overall effect can be to reduce latency, even though more requests are being received.

To take one example, in Chapter 9 we will discuss HotSpot’s JIT compiler in detail. To be considered eligible for JIT compilation, a method has to be executed in interpreted mode “sufficiently frequently.” So it is possible at low load to have key methods stuck in interpreted mode, but for those to become eligible for compilation at higher loads due to increased calling frequency on the methods. This causes later calls to the same method to run much, much faster than earlier executions.
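The effect of call frequency on compilation can often be glimpsed with a crude experiment like the one sketched below, which times successive batches of calls to the same method. Everything here (the class name, the arithmetic in `work`, the batch sizes) is an arbitrary choice for illustration, and the timings will vary by JVM, hardware, and flags. On a typical HotSpot JVM the early batches tend to be slower than the later ones, but that outcome is not guaranteed.

```java
// Illustrative sketch: times repeated batches of calls to the same small method.
final class WarmupDemo {

    // Written to on every batch so the JIT cannot discard the work as dead code
    static volatile long sink;

    /** An arbitrary small computation standing in for a "key method". */
    static long work(int n) {
        long sum = 0;
        for (int i = 0; i < n; i++) {
            sum += ((long) i * i) % 7;
        }
        return sum;
    }

    /** Calls work() the given number of times and returns the elapsed nanoseconds. */
    static long timeBatch(int calls) {
        long start = System.nanoTime();
        long total = 0;
        for (int i = 0; i < calls; i++) {
            total += work(1_000);
        }
        sink = total;
        return System.nanoTime() - start;
    }

    public static void main(String[] args) {
        for (int batch = 0; batch < 5; batch++) {
            System.out.printf("batch %d: %d ns%n", batch, timeBatch(10_000));
        }
    }
}
```

Running the same class with HotSpot's `-Xint` flag, which forces interpreted mode, gives a point of comparison in which the batches should show no such speedup.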

Different workloads can have very different characteristics. For example, a trade on the financial markets, viewed end to end, may have an execution time (i.e., latency) of hours or even days. However, millions of them may be in progress at a major bank at any given time. Thus, the capacity of the system is very large, but the latency is also large.

However, let’s consider only a single subsystem within the bank. The matching of a buyer and a seller (which is essentially the parties agreeing on a price) is known as order matching. This individual subsystem may have only hundreds of pending orders at any given time, but the latency from order acceptance to completed match may be as little as 1 millisecond (or even less in the case of “low-latency” trading).

In this section we have met the most frequently encountered performance observables. Slightly different definitions, or even different metrics, are occasionally used, but in most cases these are the basic system numbers used to guide performance tuning, and they act as a taxonomy for discussing the performance of systems of interest.

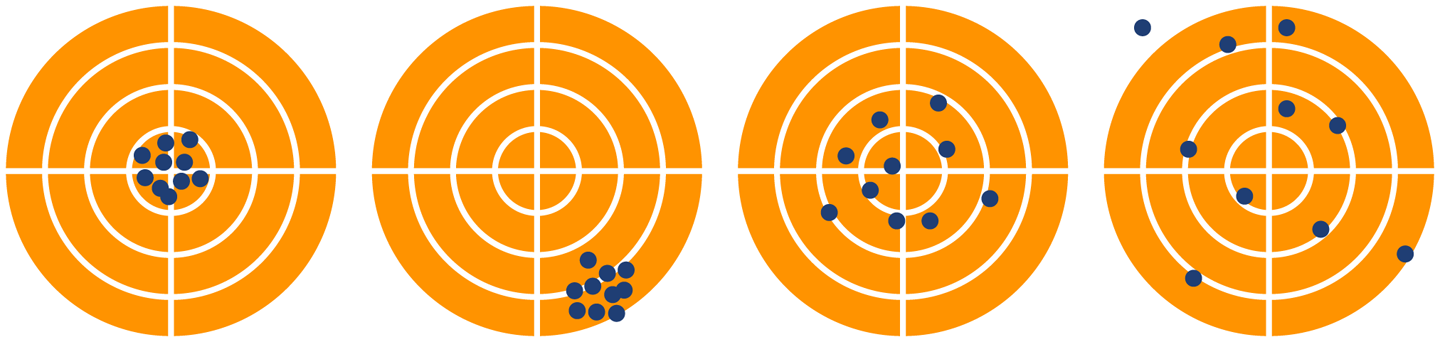

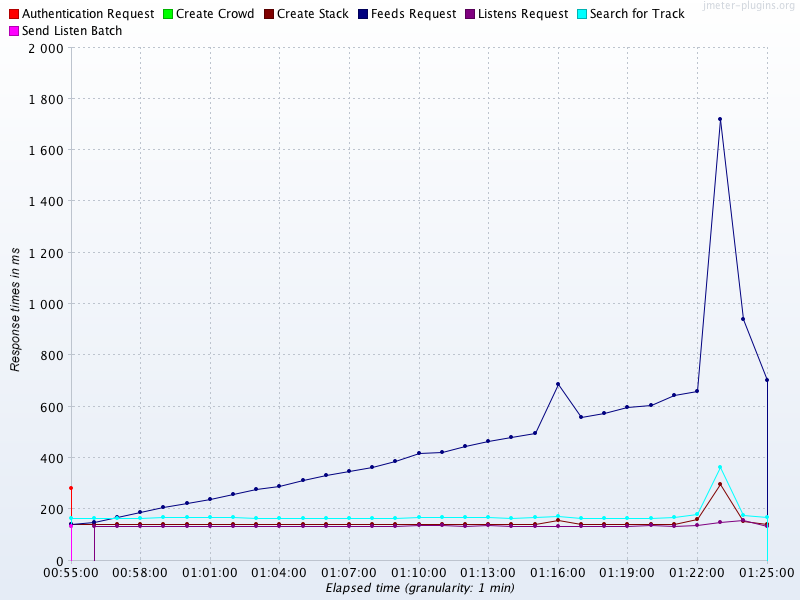

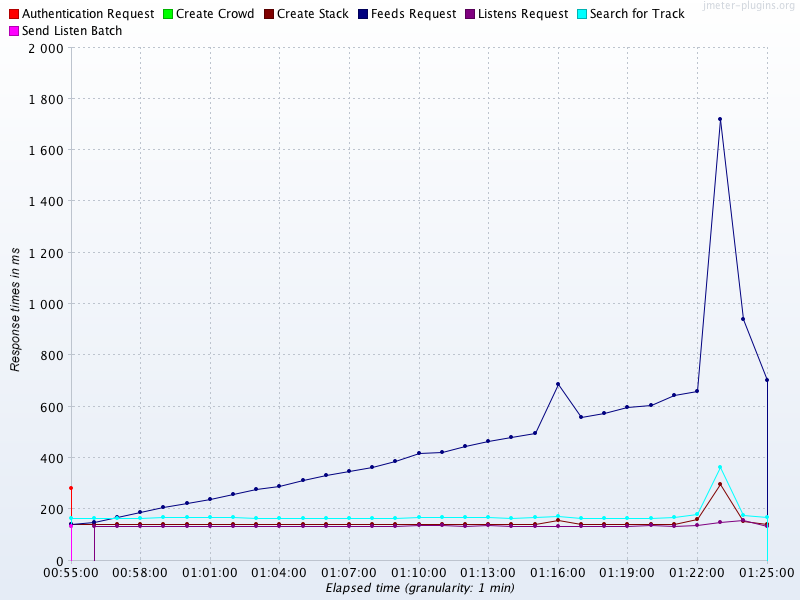

To conclude this chapter, let’s look at some common patterns of behavior that occur in performance tests. We will explore these by looking at graphs of real observables, and we will encounter many other examples of graphs of our data as we proceed.

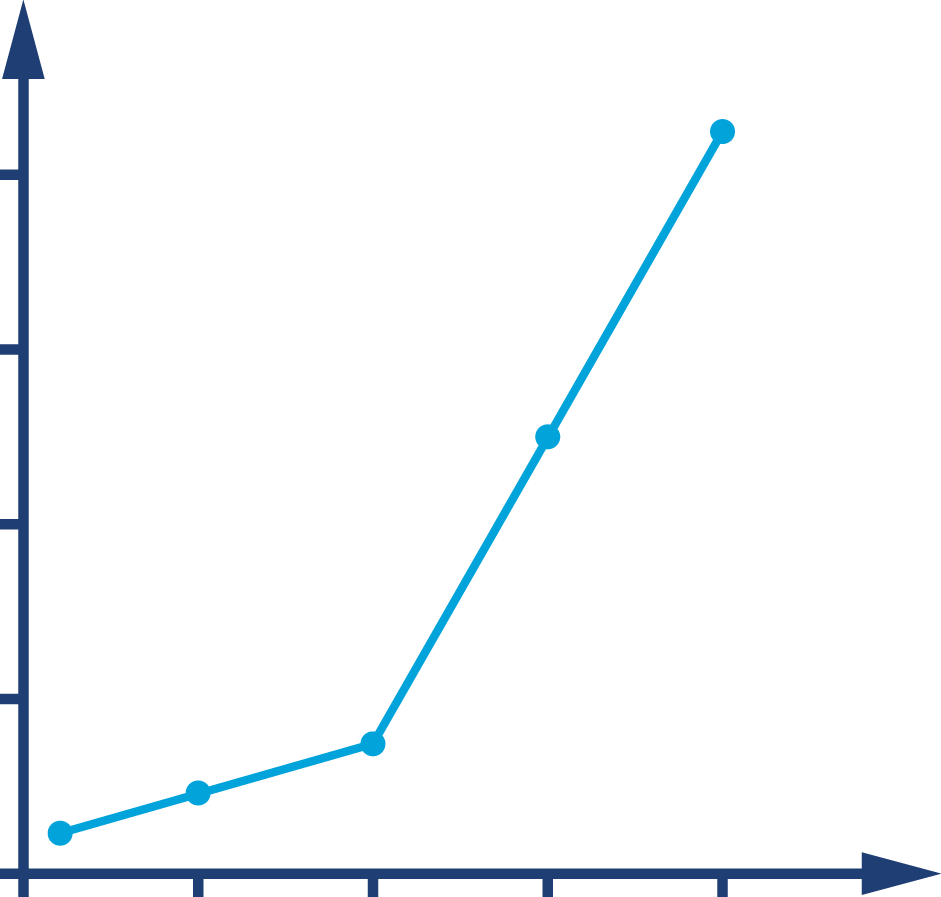

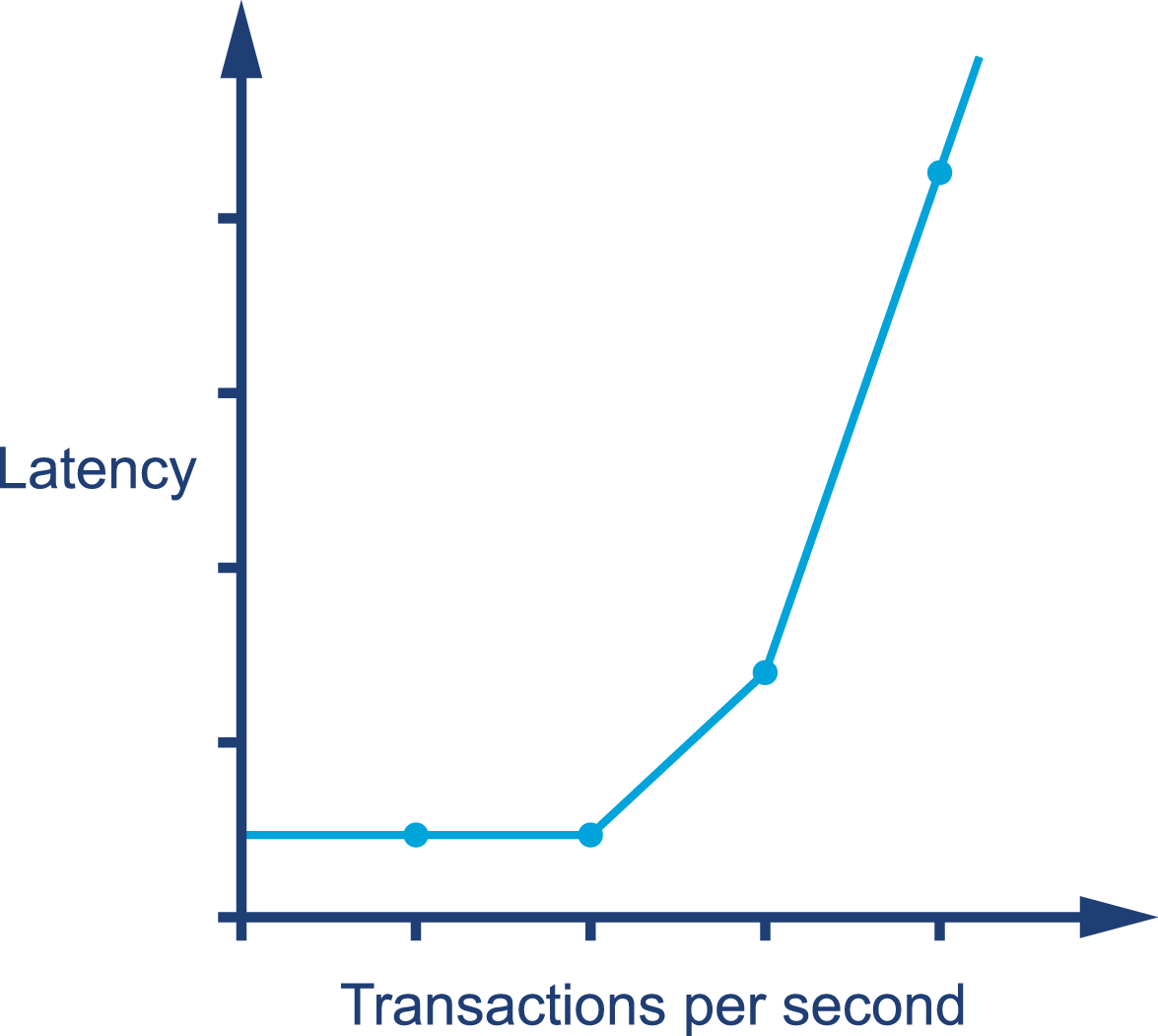

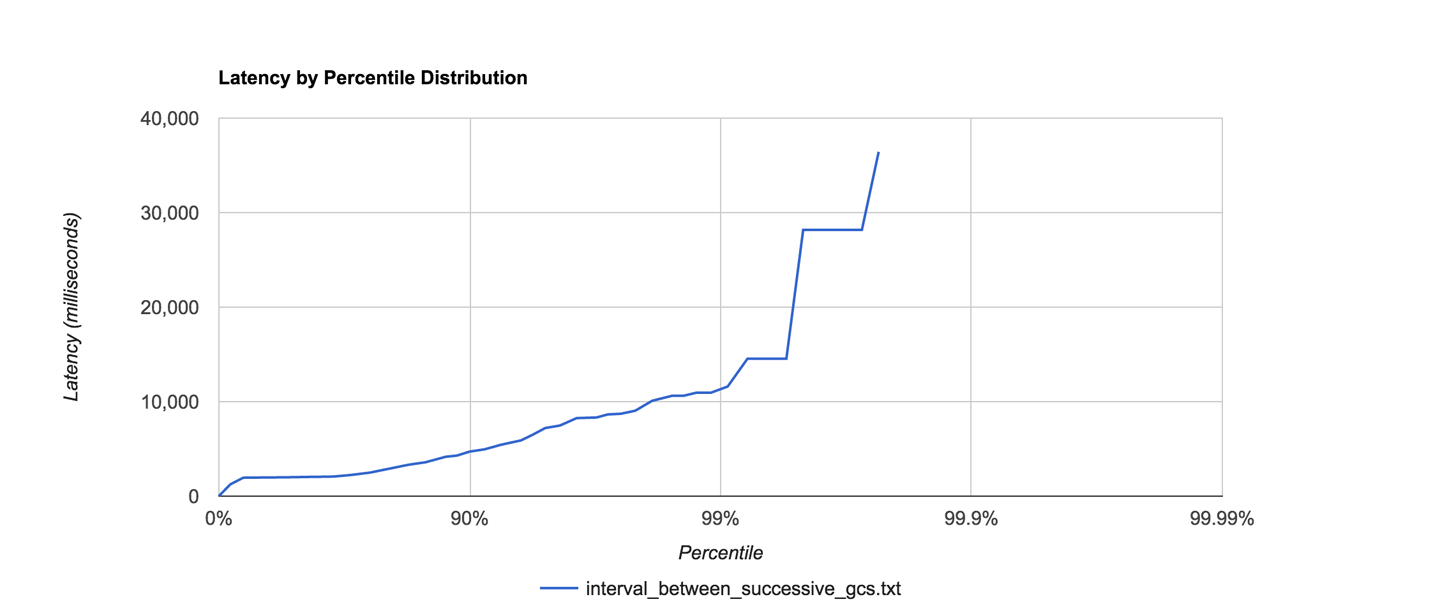

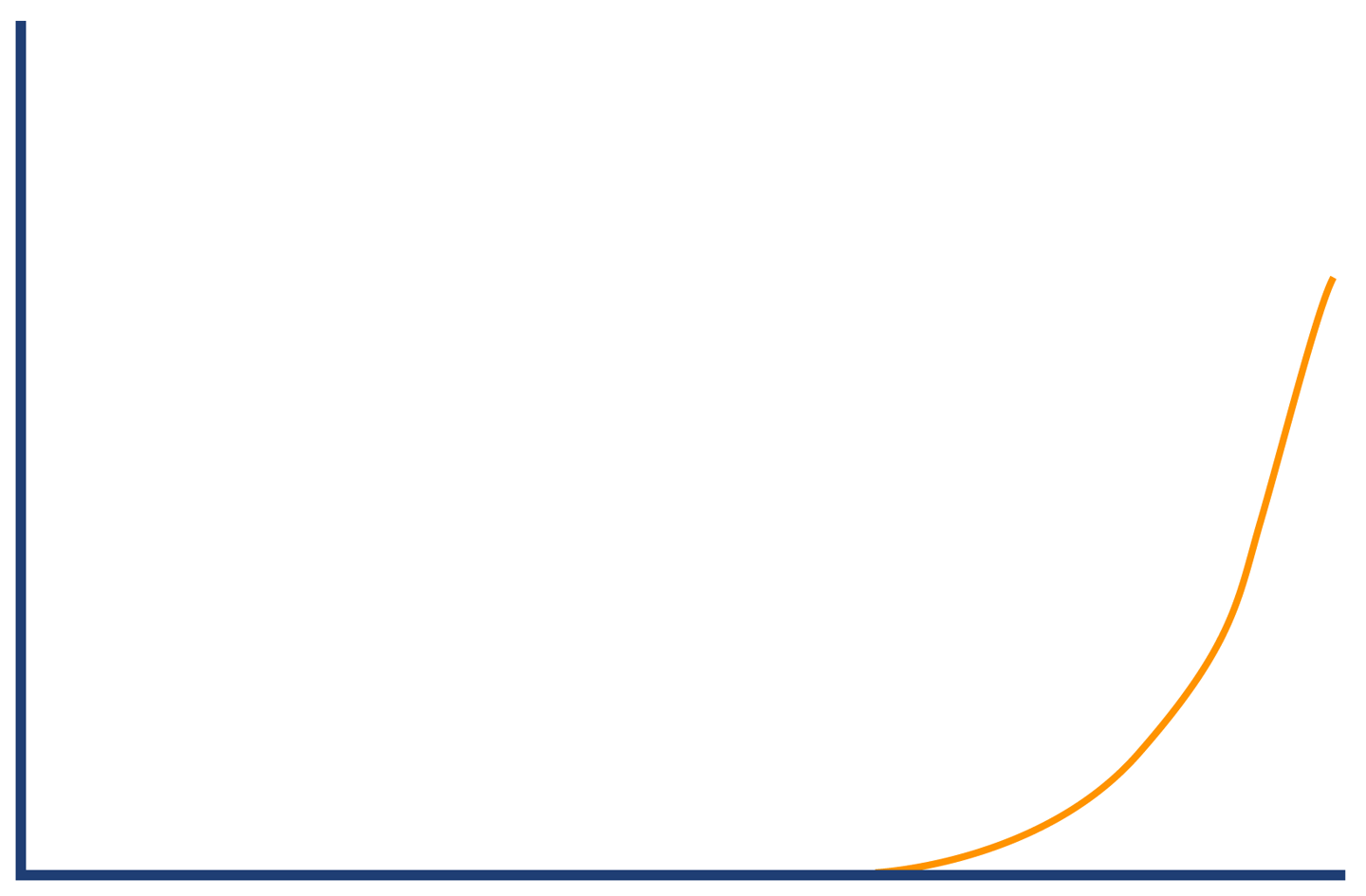

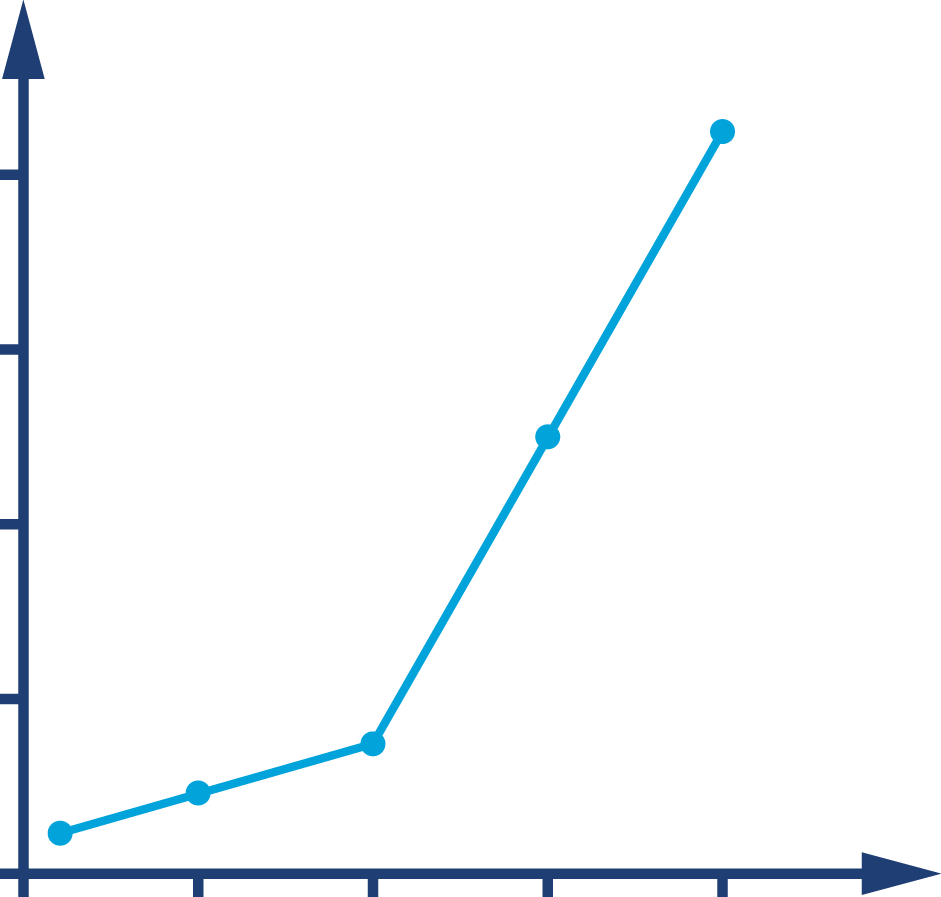

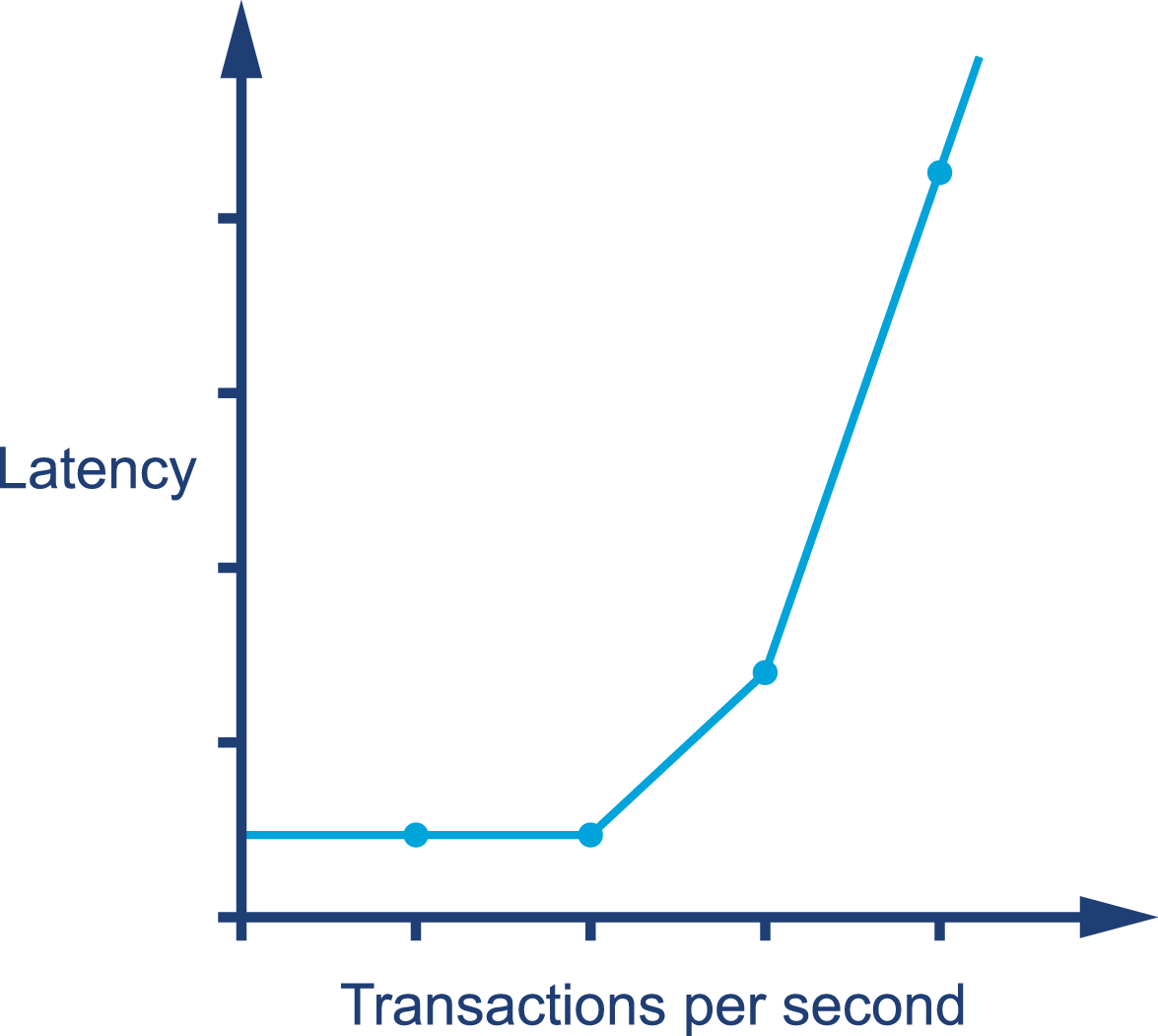

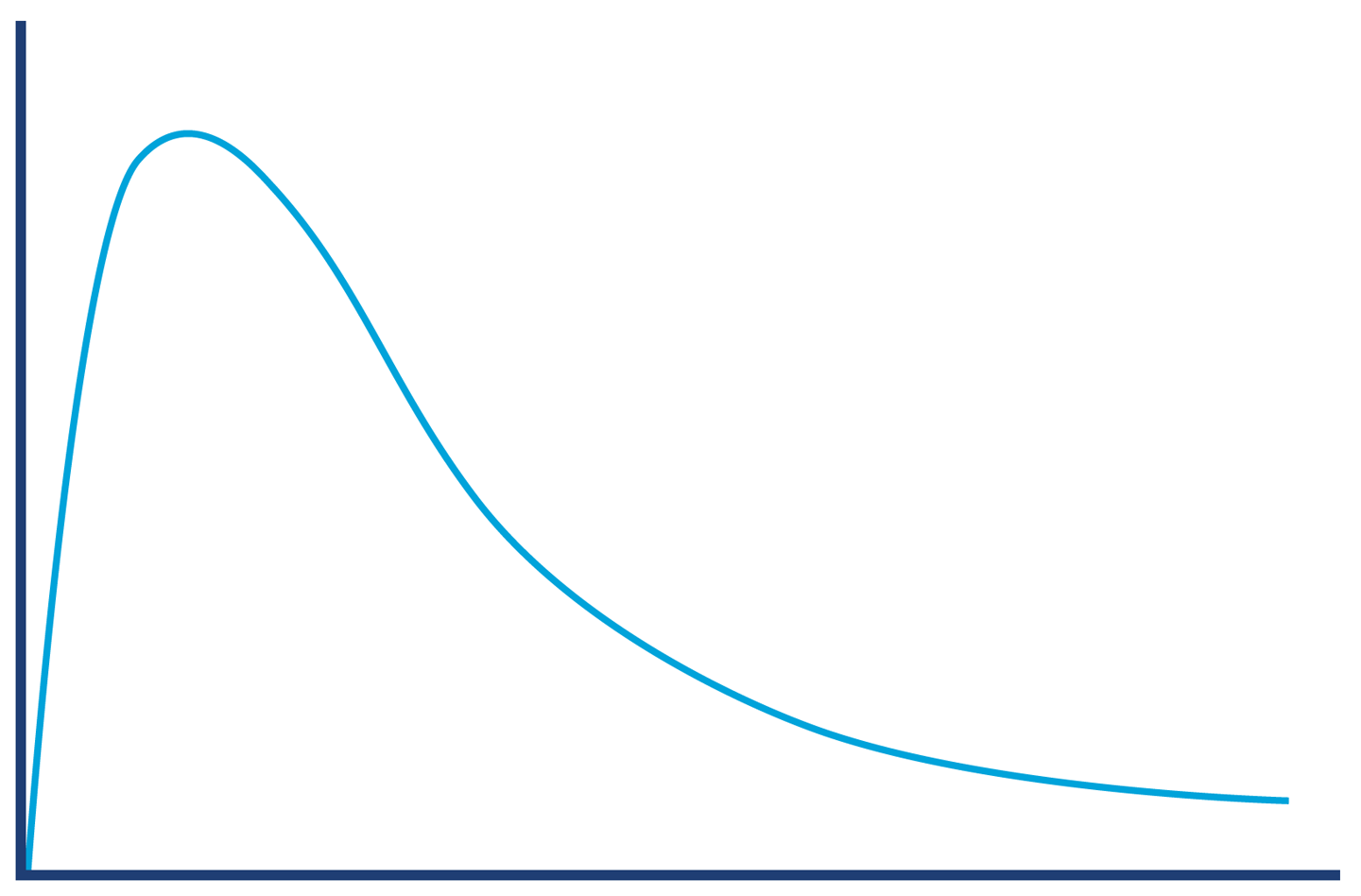

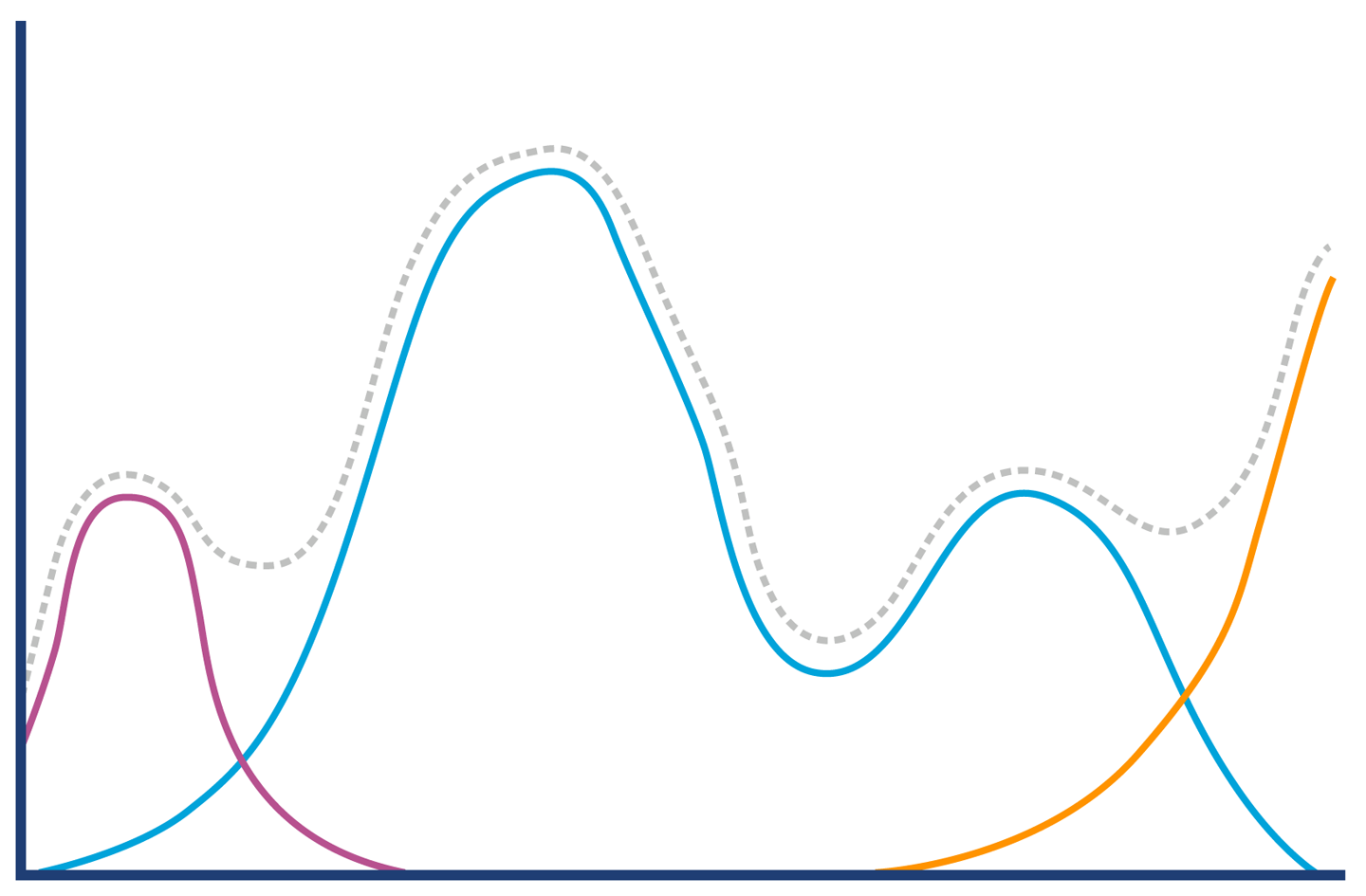

The graph in Figure 1-1 shows sudden, unexpected degradation of performance (in this case, latency) under increasing load—commonly called a performance elbow.

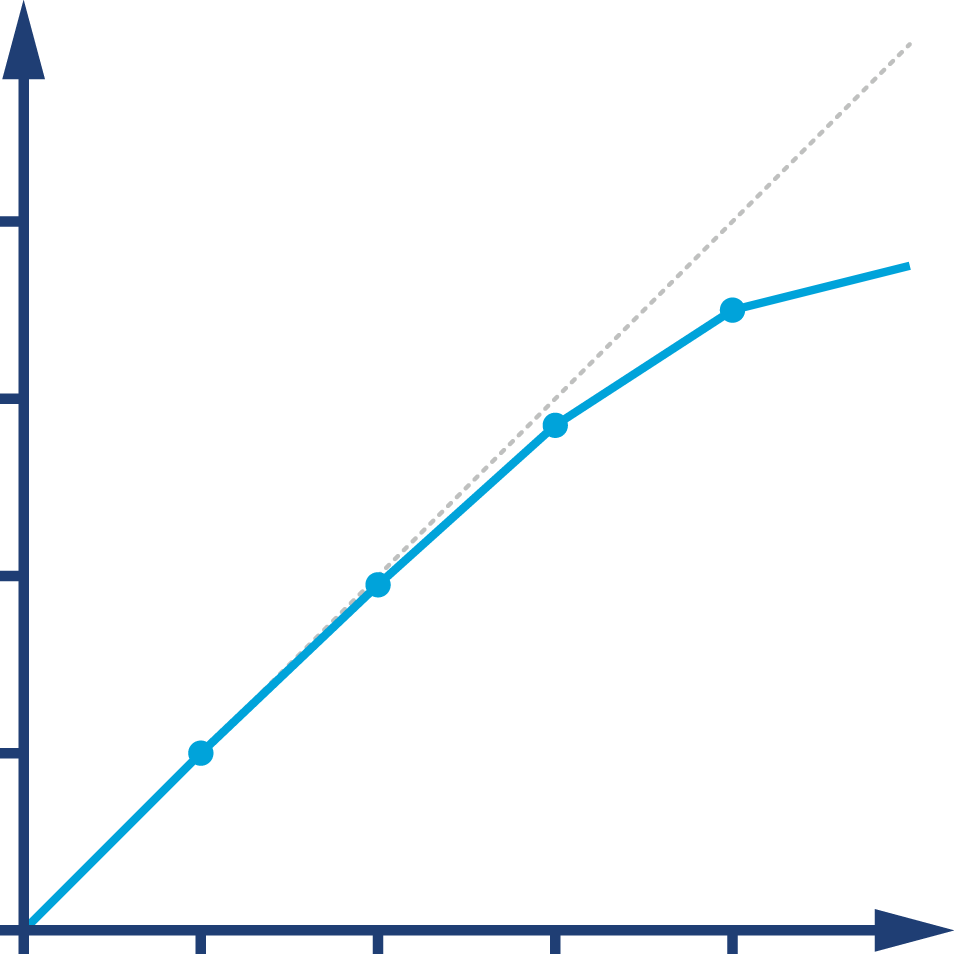

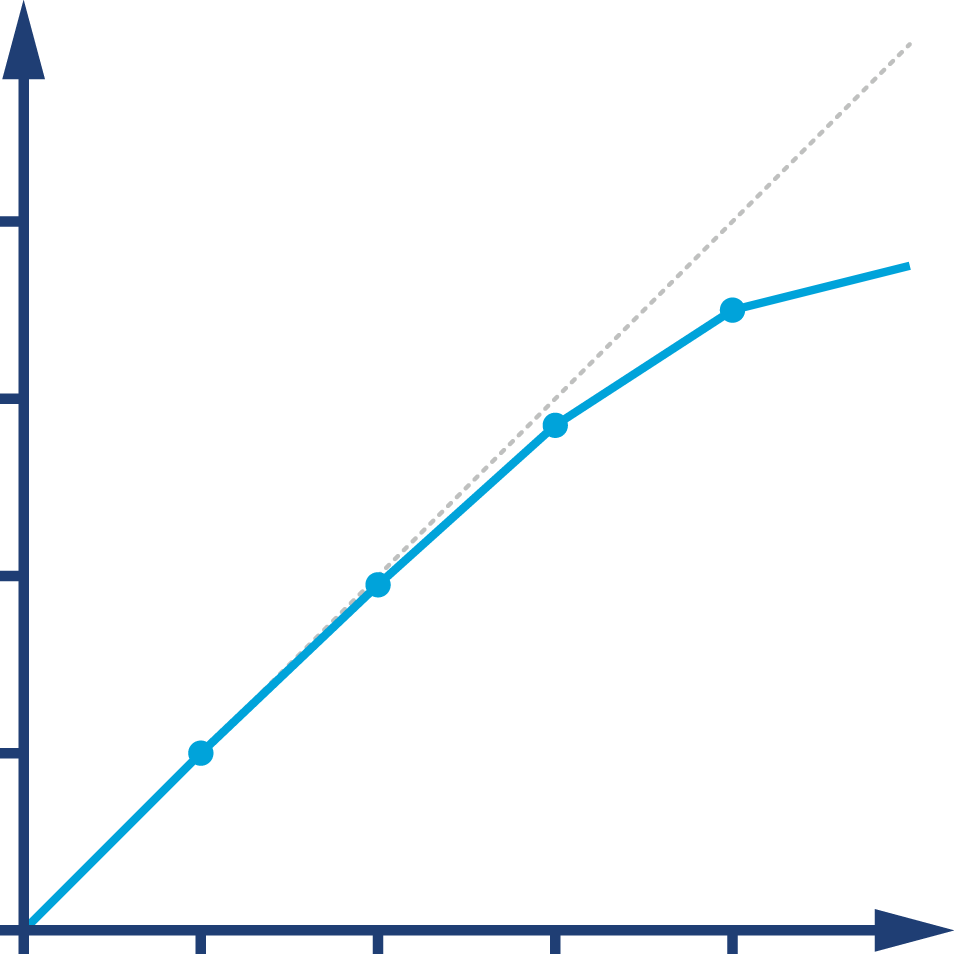

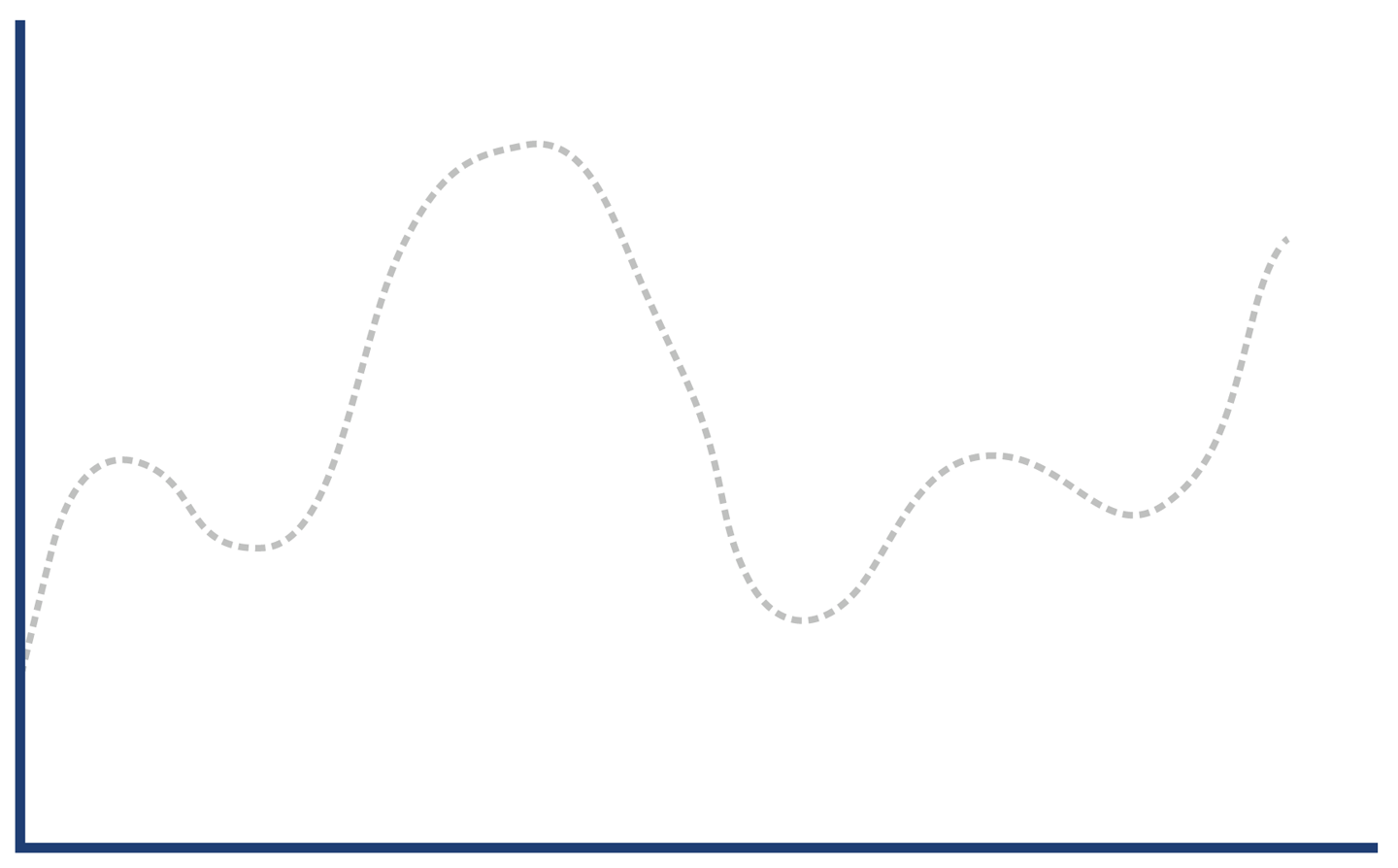

By contrast, Figure 1-2 shows the much happier case of throughput scaling almost linearly as machines are added to a cluster. This is close to ideal behavior, and is only likely to be achieved in extremely favorable circumstances—e.g., scaling a stateless protocol with no need for session affinity with a single server.

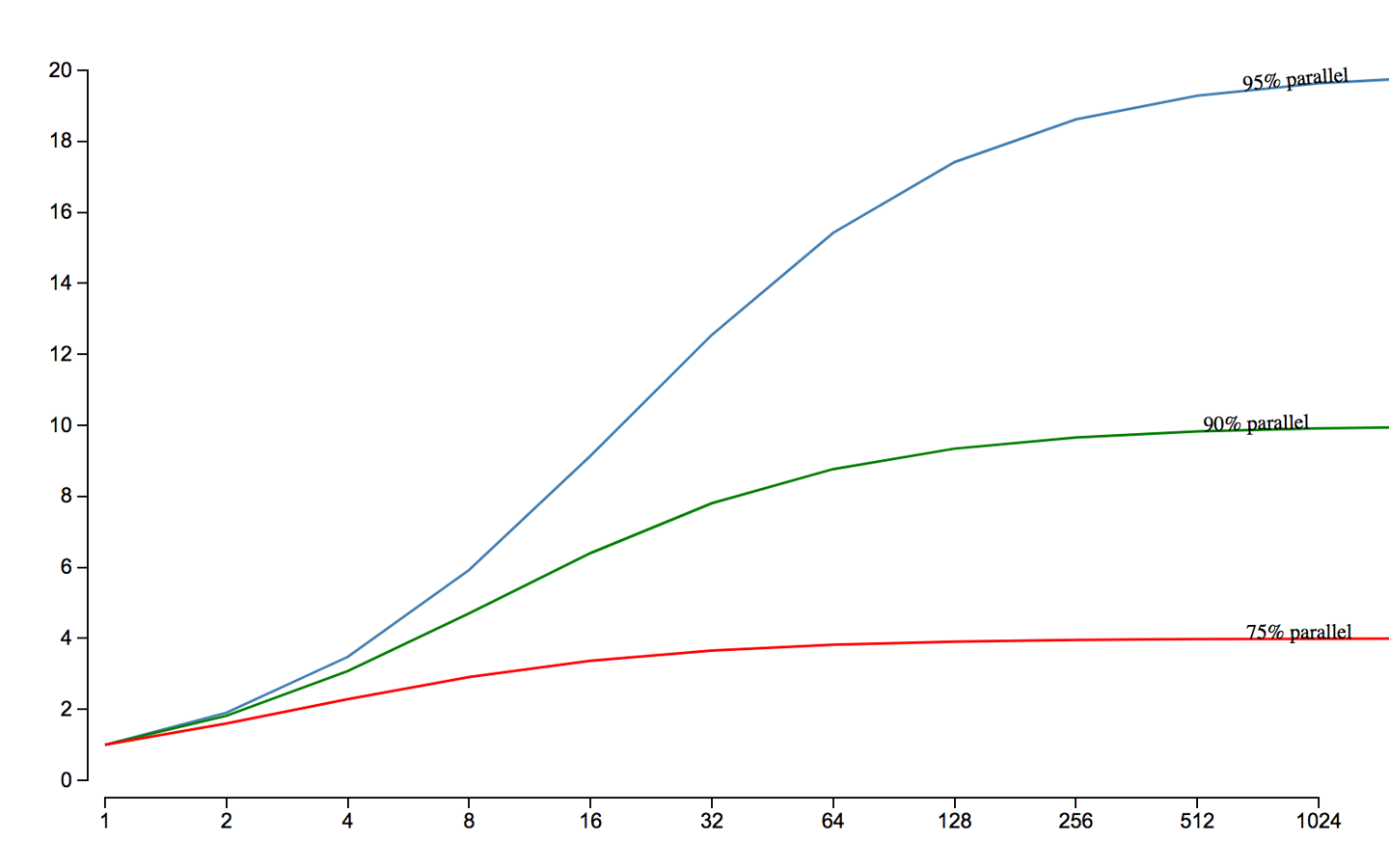

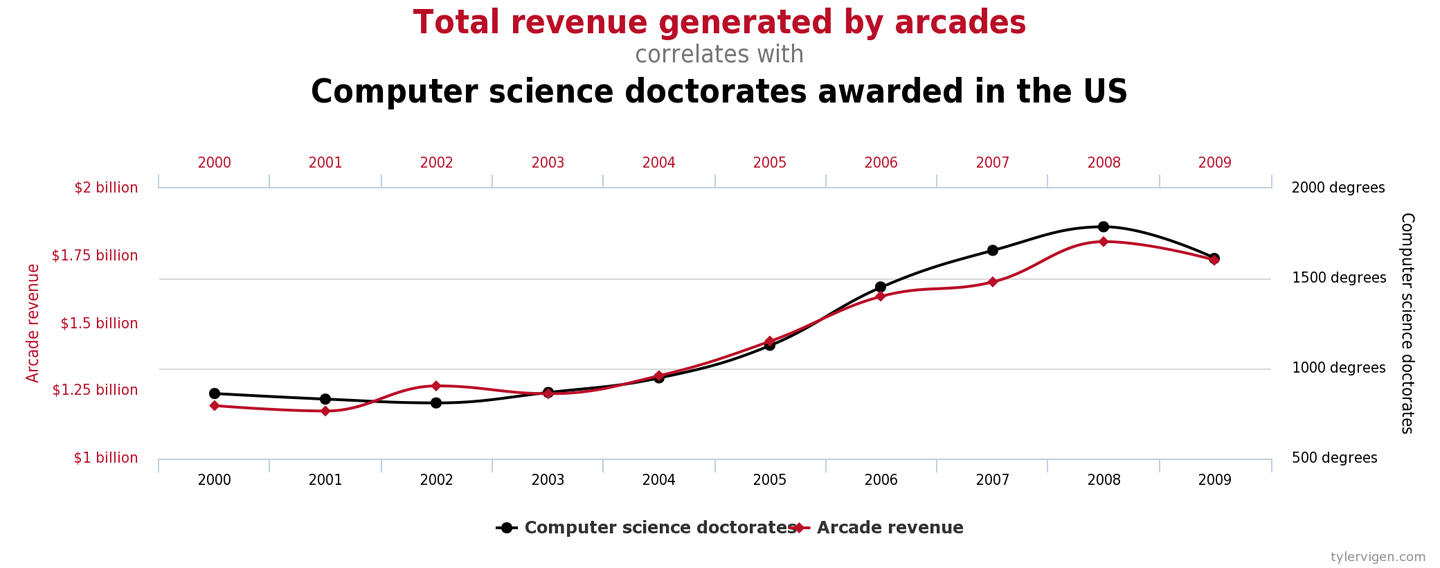

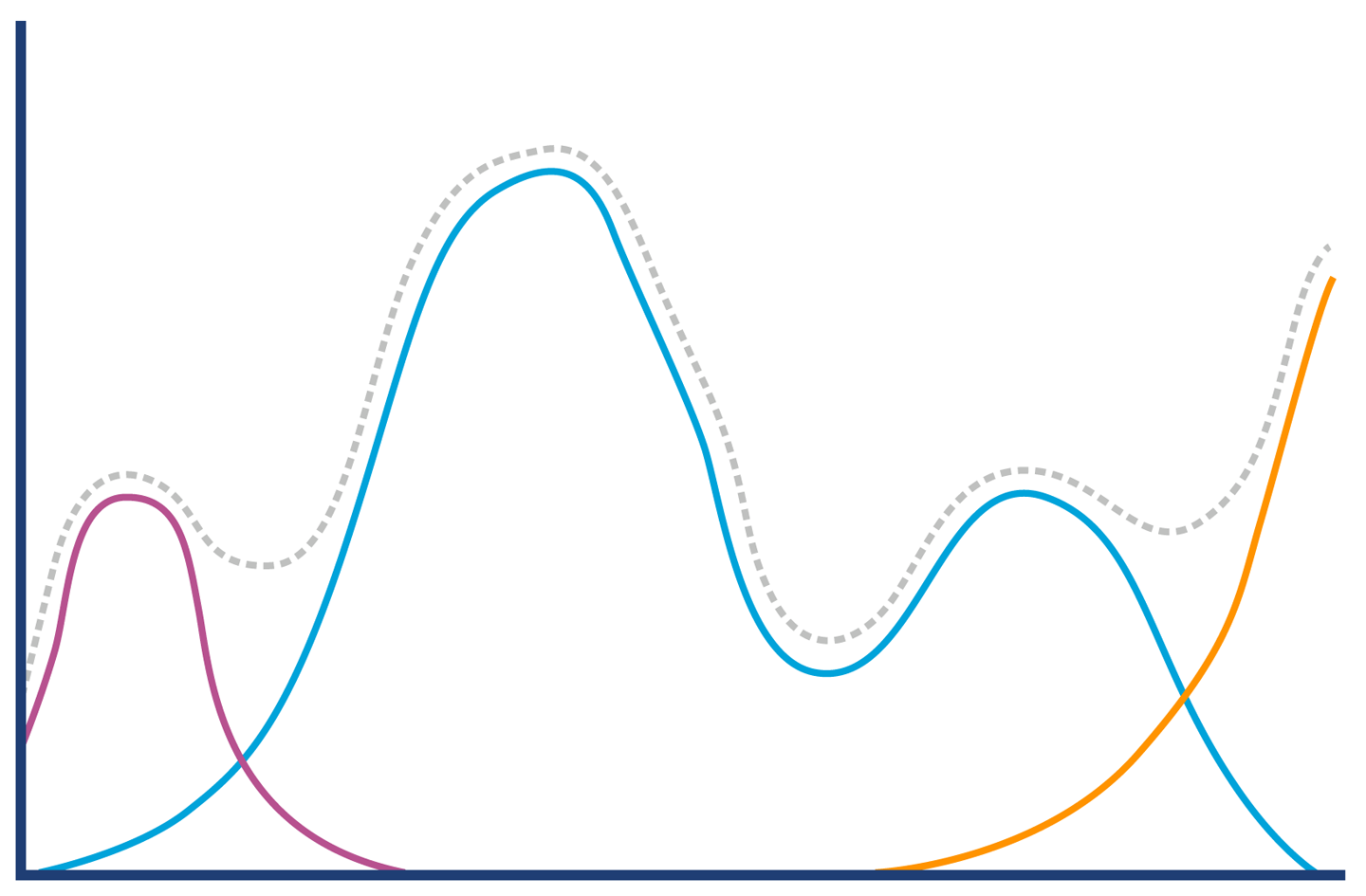

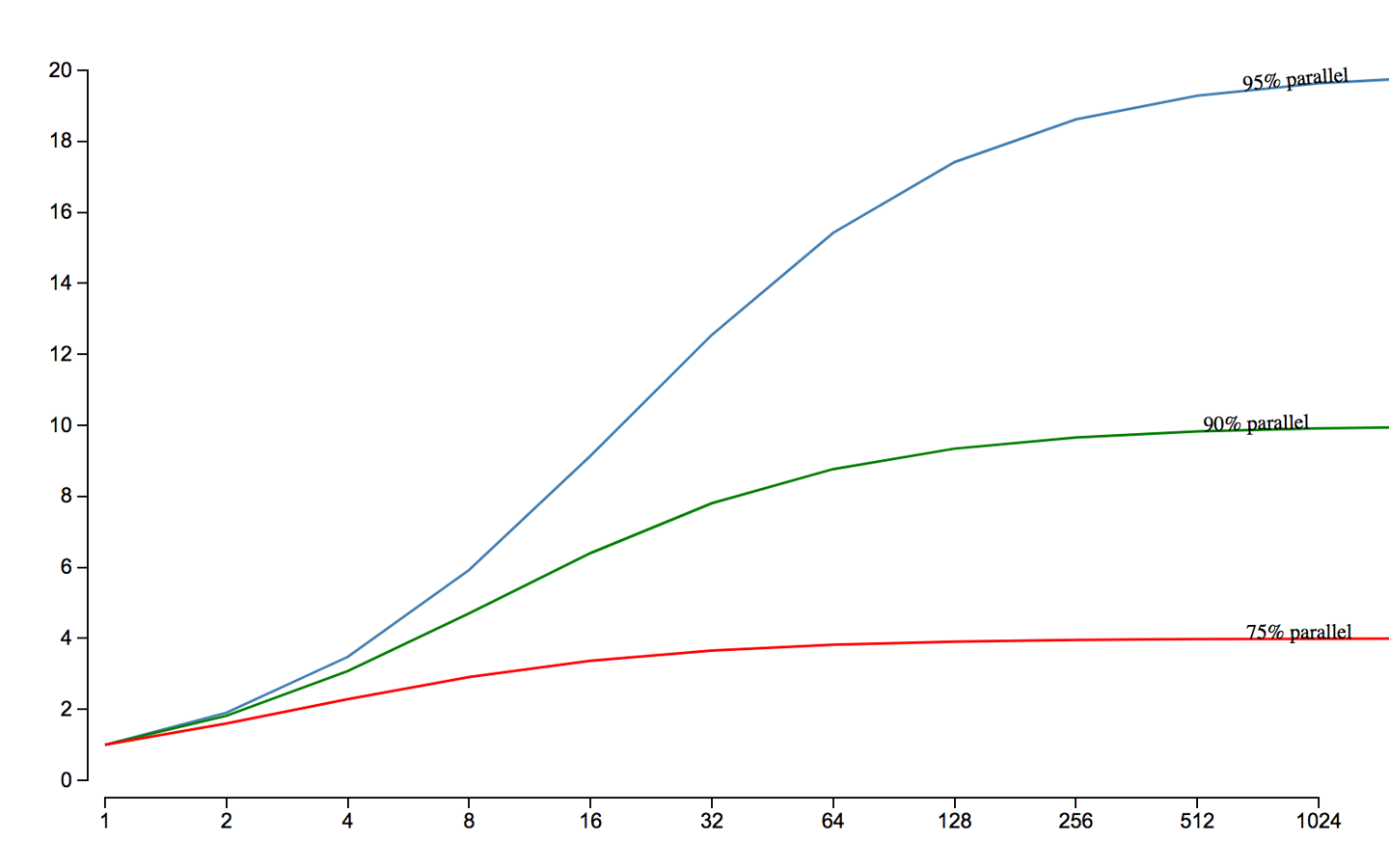

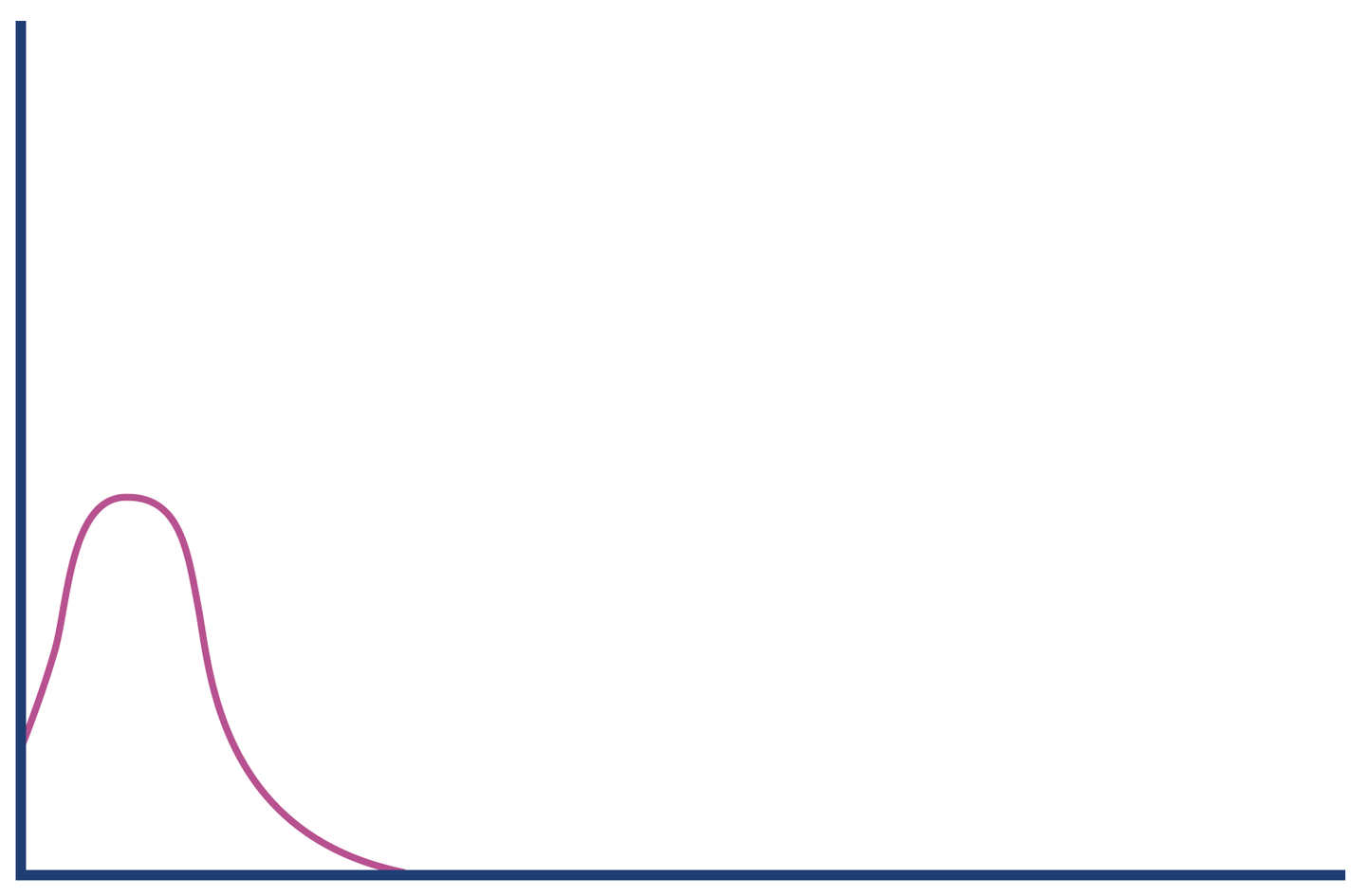

In Chapter 12 we will meet Amdahl’s Law, named for the famous computer scientist (and “father of the mainframe”) Gene Amdahl of IBM. Figure 1-3 shows a graphical representation of his fundamental constraint on scalability; it shows the maximum possible speedup as a function of the number of processors devoted to the task.

We display three cases: where the underlying task is 75%, 90%, and 95% parallelizable. This clearly shows that whenever the workload has any piece at all that must be performed serially, linear scalability is impossible, and there are strict limits on how much scalability can be achieved. This justifies the commentary around Figure 1-2—even in the best cases linear scalability is all but impossible to achieve.

The limits imposed by Amdahl’s Law are surprisingly restrictive. Note in particular that the x-axis of the graph is logarithmic, and so even with an algorithm that is (only) 5% serial, 32 processors are needed for a factor-of-12 speedup. Even worse, no matter how many cores are used, the maximum speedup is only a factor of 20 for that algorithm. In practice, many algorithms are far more than 5% serial, and so have a more constrained maximum possible speedup.
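The figures quoted above follow directly from the usual statement of Amdahl's Law: for a task whose parallelizable fraction is p, the speedup on n processors is 1 / ((1 - p) + p / n), which approaches 1 / (1 - p) as n grows. The sketch below simply evaluates that formula; the class and method names are illustrative.

```java
// Illustrative sketch: evaluates Amdahl's Law for a given parallel fraction.
final class Amdahl {

    /** Maximum speedup on the given number of processors. */
    static double speedup(double parallelFraction, int processors) {
        if (parallelFraction < 0.0 || parallelFraction > 1.0 || processors < 1) {
            throw new IllegalArgumentException("need 0 <= p <= 1 and processors >= 1");
        }
        double serialFraction = 1.0 - parallelFraction;
        return 1.0 / (serialFraction + parallelFraction / processors);
    }

    /** Limit of the speedup as the processor count grows without bound. */
    static double maxSpeedup(double parallelFraction) {
        return 1.0 / (1.0 - parallelFraction);
    }

    public static void main(String[] args) {
        double[] fractions = {0.75, 0.90, 0.95};
        for (double p : fractions) {
            System.out.println("parallel fraction " + p + ", limit " + maxSpeedup(p));
            // Powers of two, matching a logarithmic x-axis
            for (int n = 1; n <= 1024; n *= 2) {
                System.out.println("  " + n + " processors -> " + speedup(p, n));
            }
        }
    }
}
```

For the 95% parallelizable case, 32 processors give a speedup of roughly 12.5, and the limit is a factor of 20 however many processors are added.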

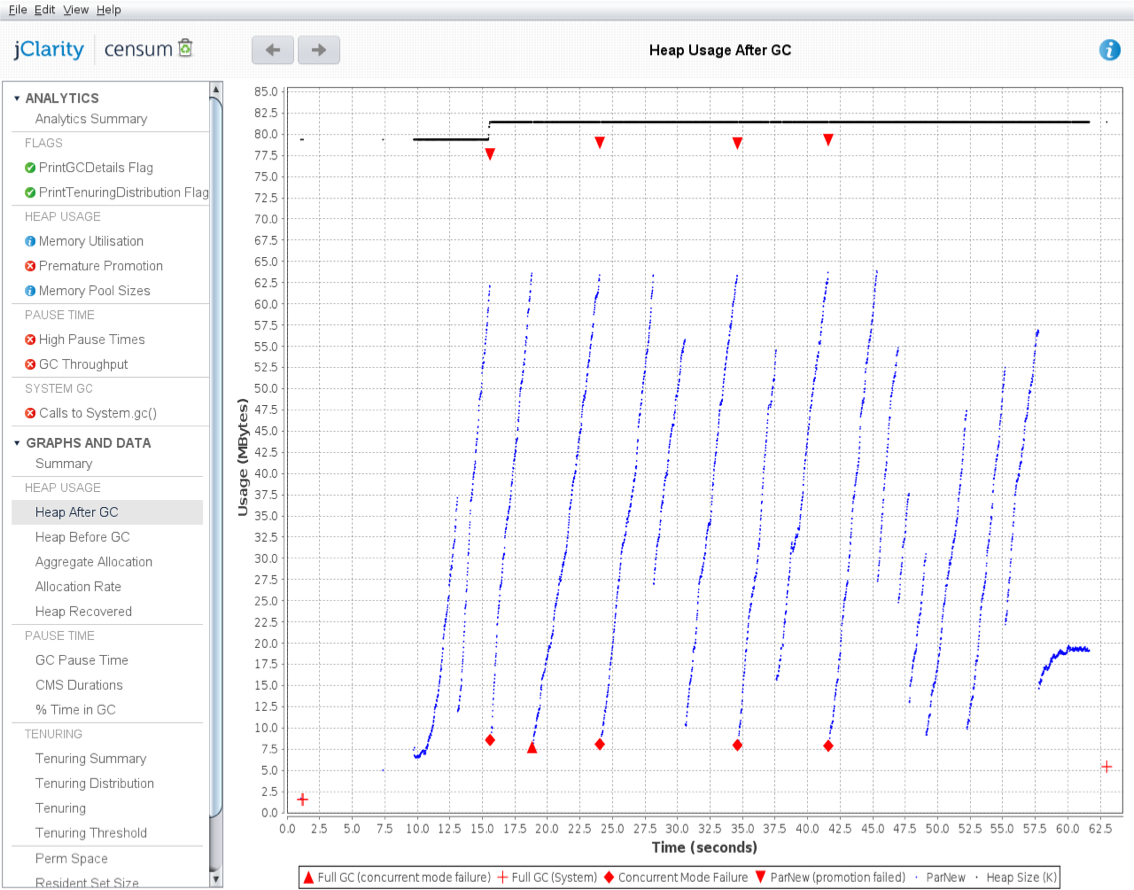

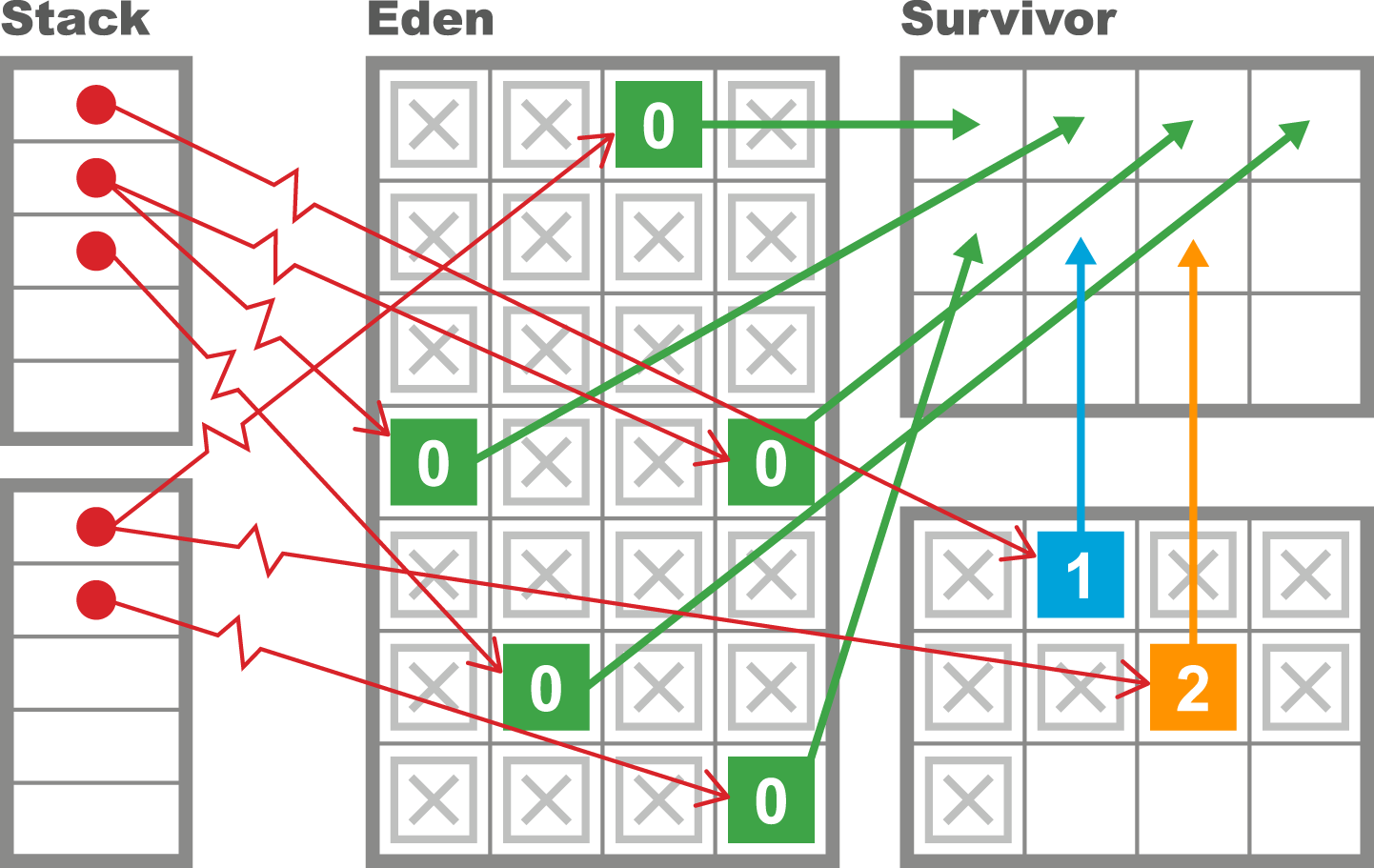

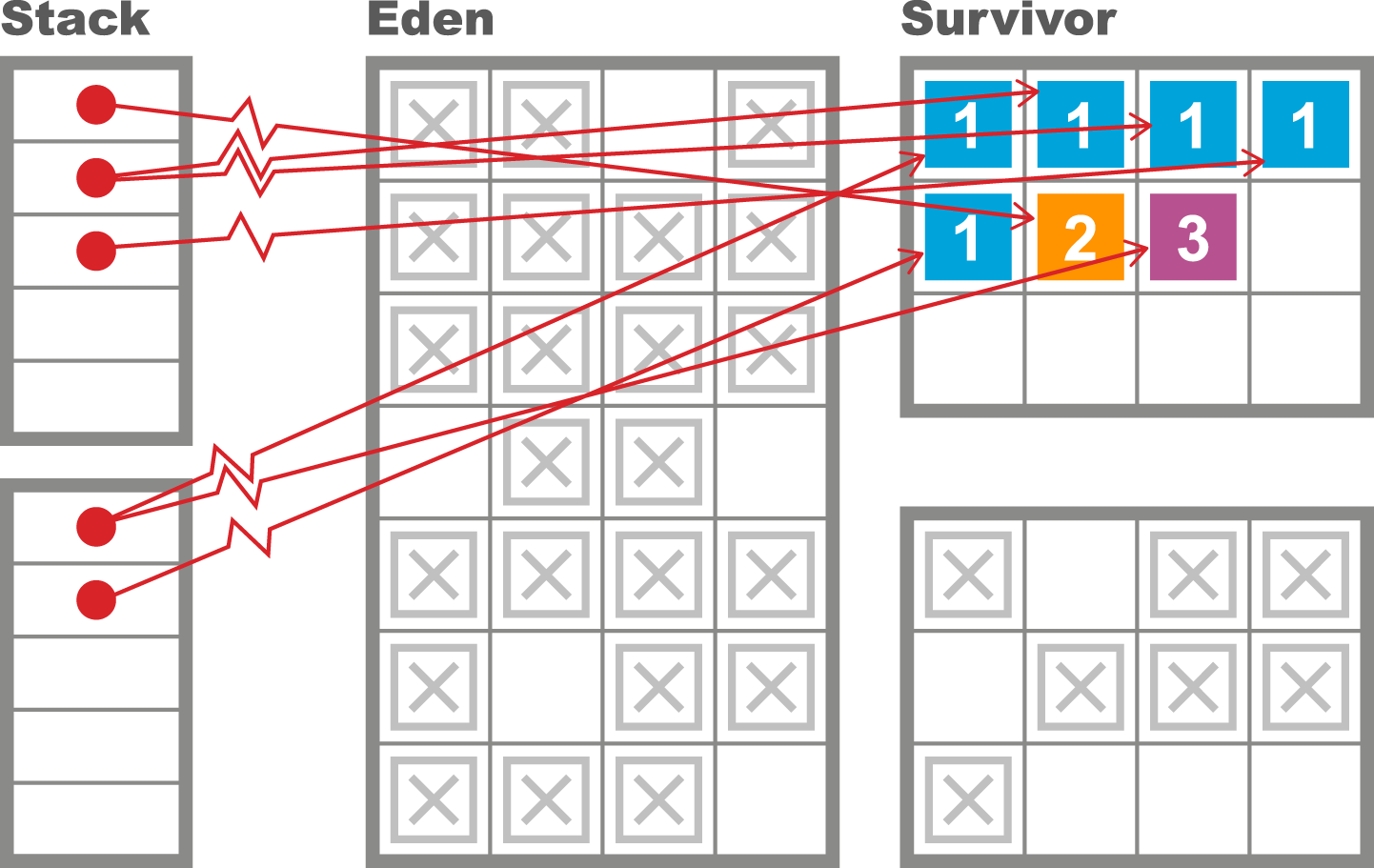

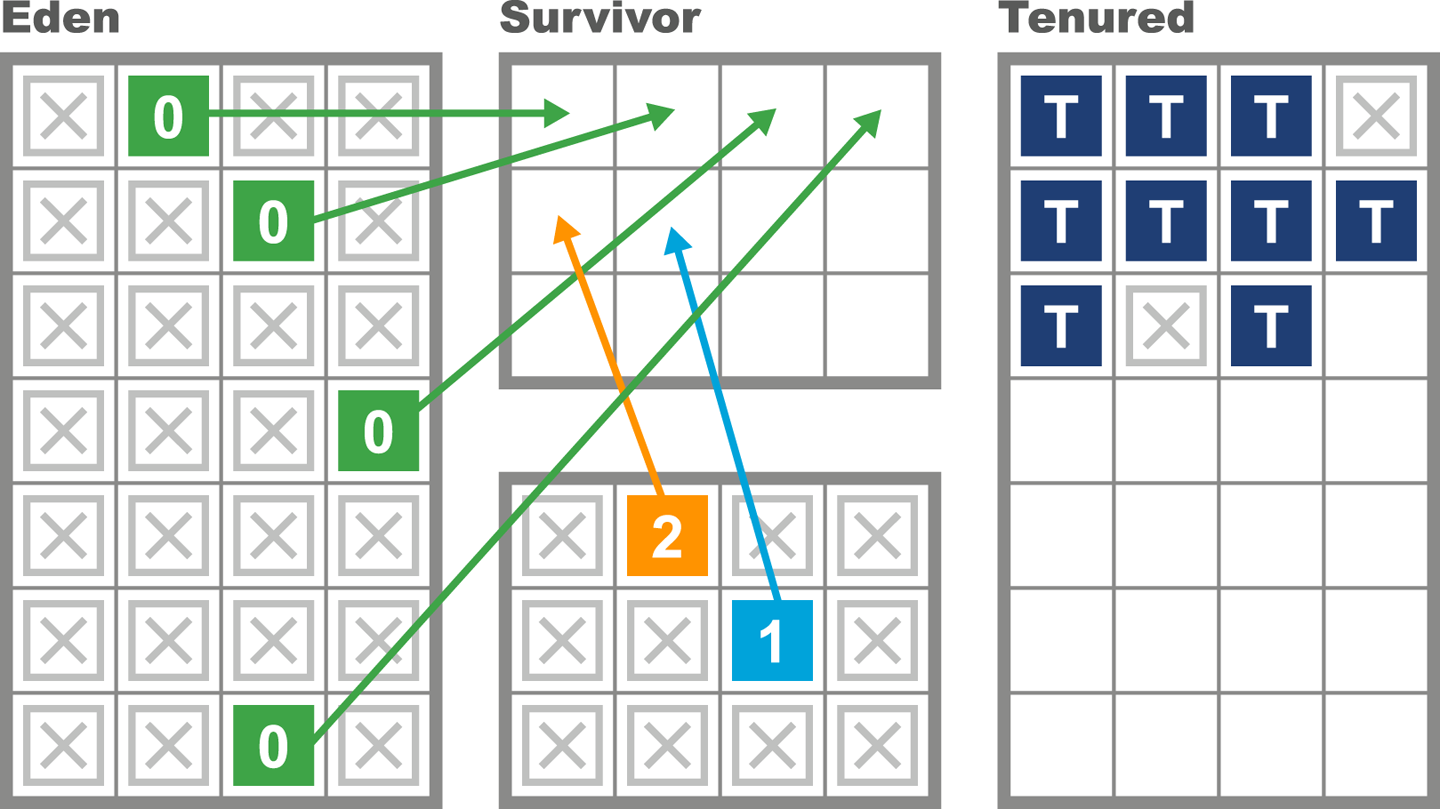

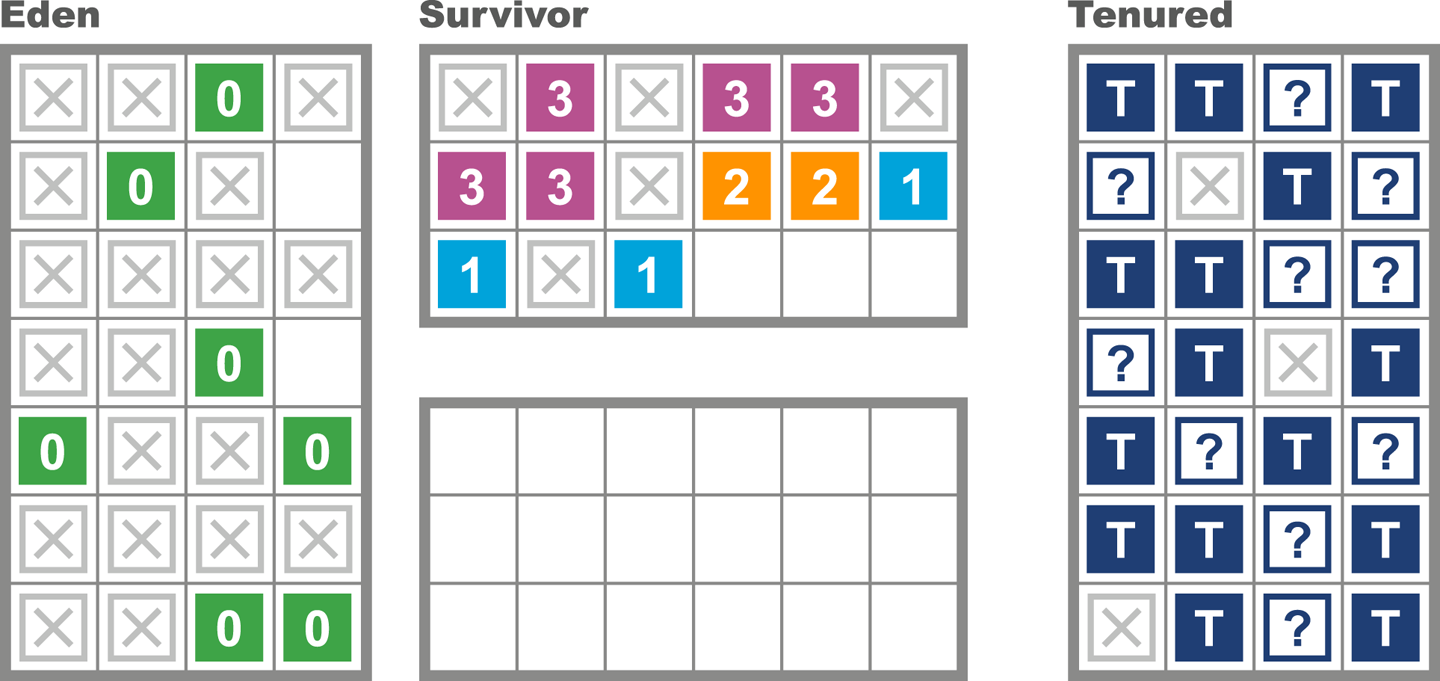

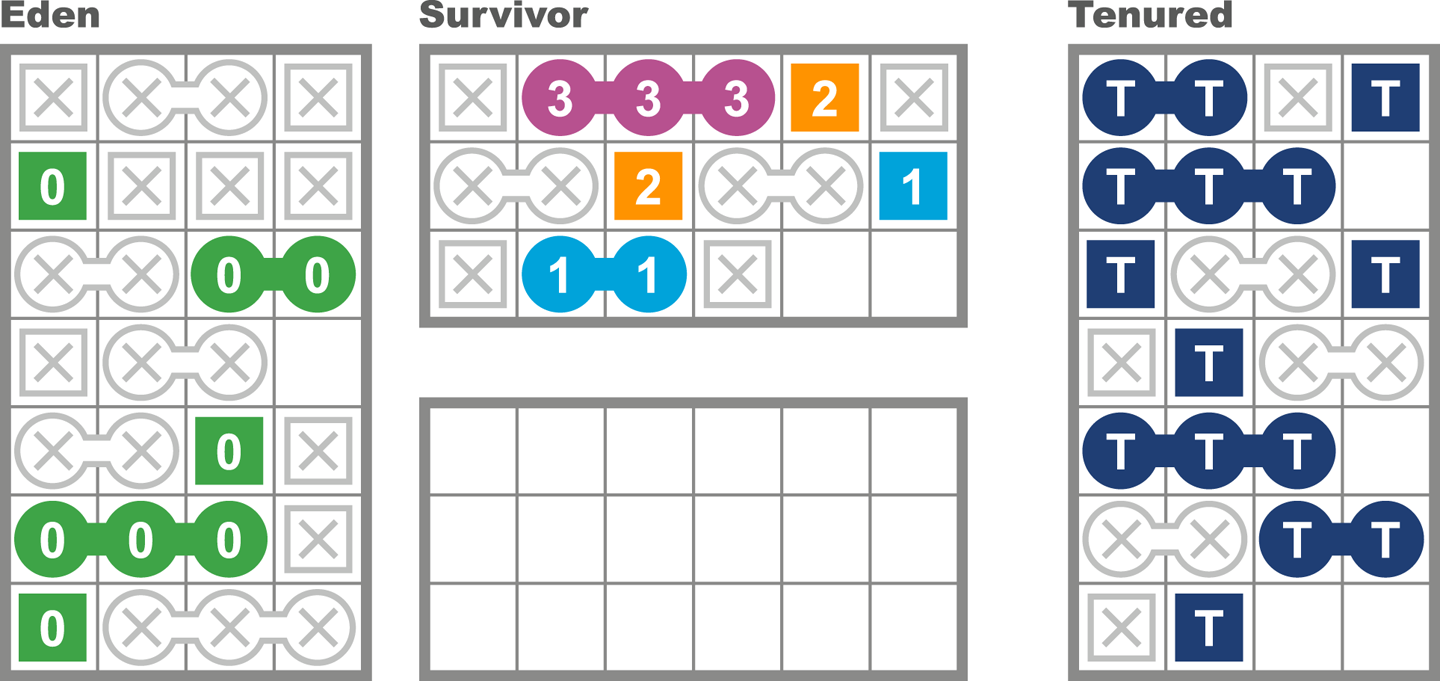

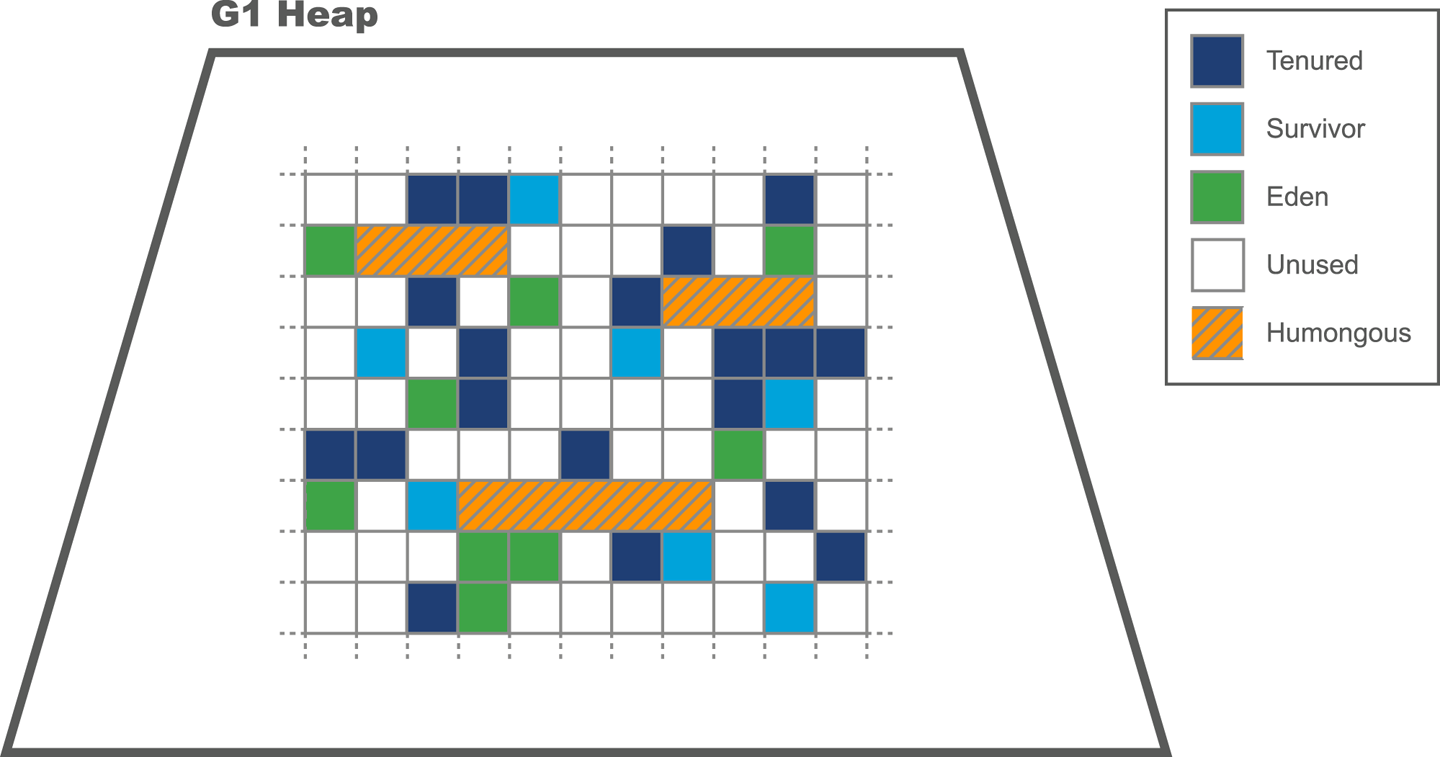

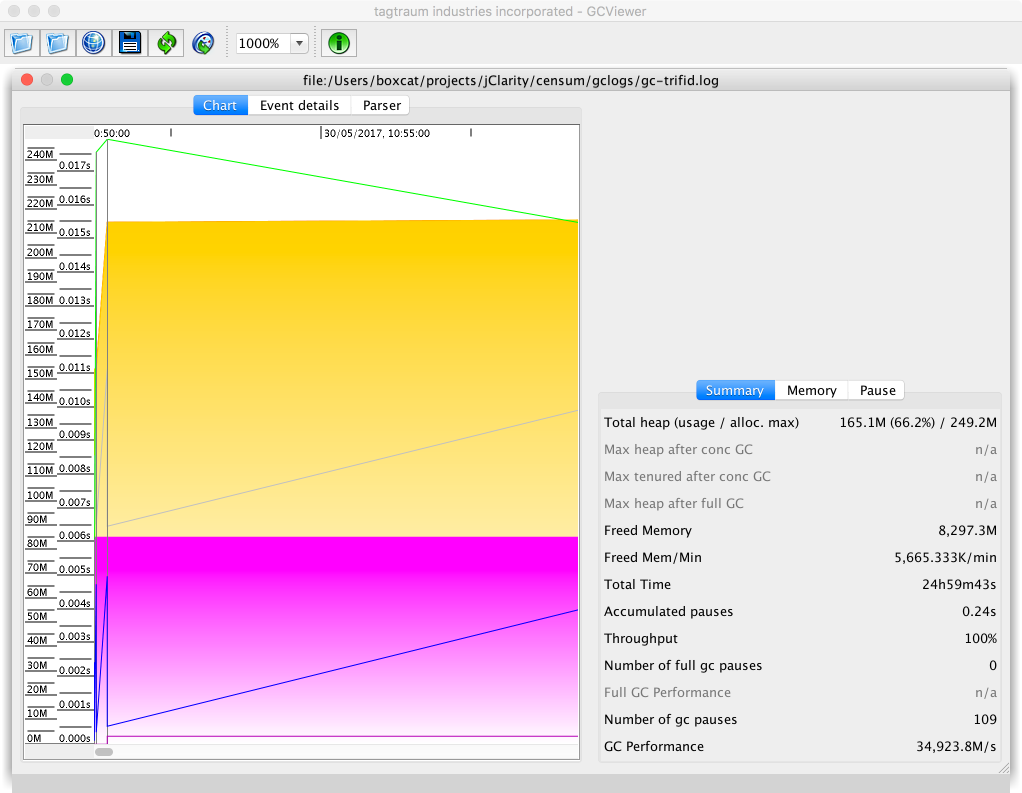

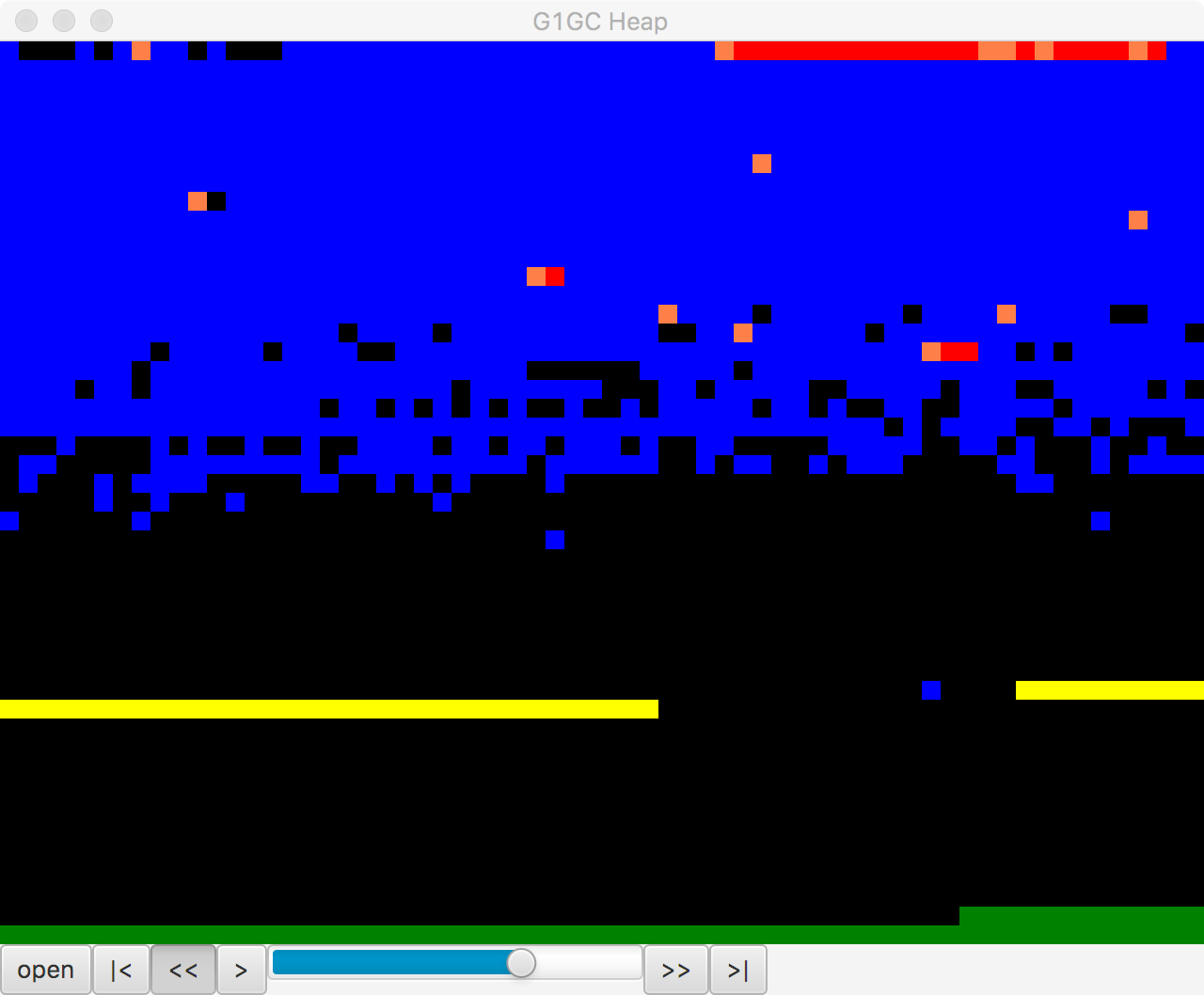

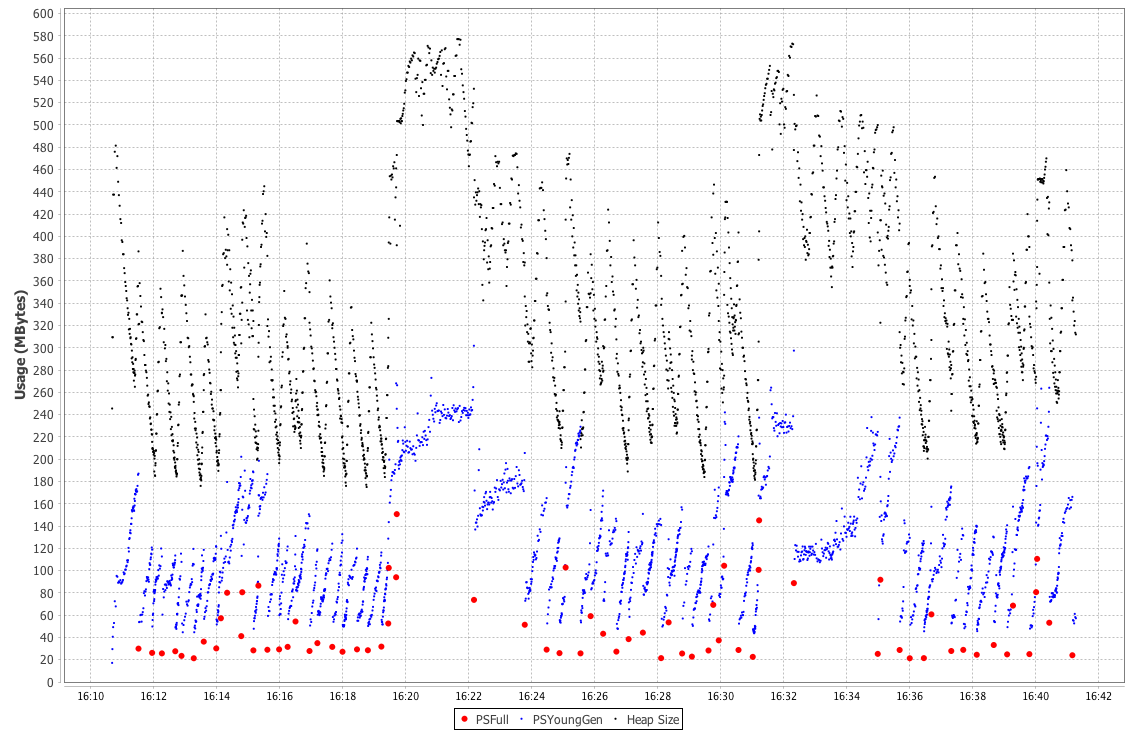

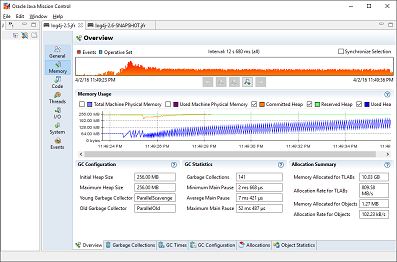

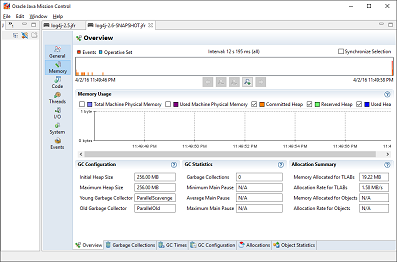

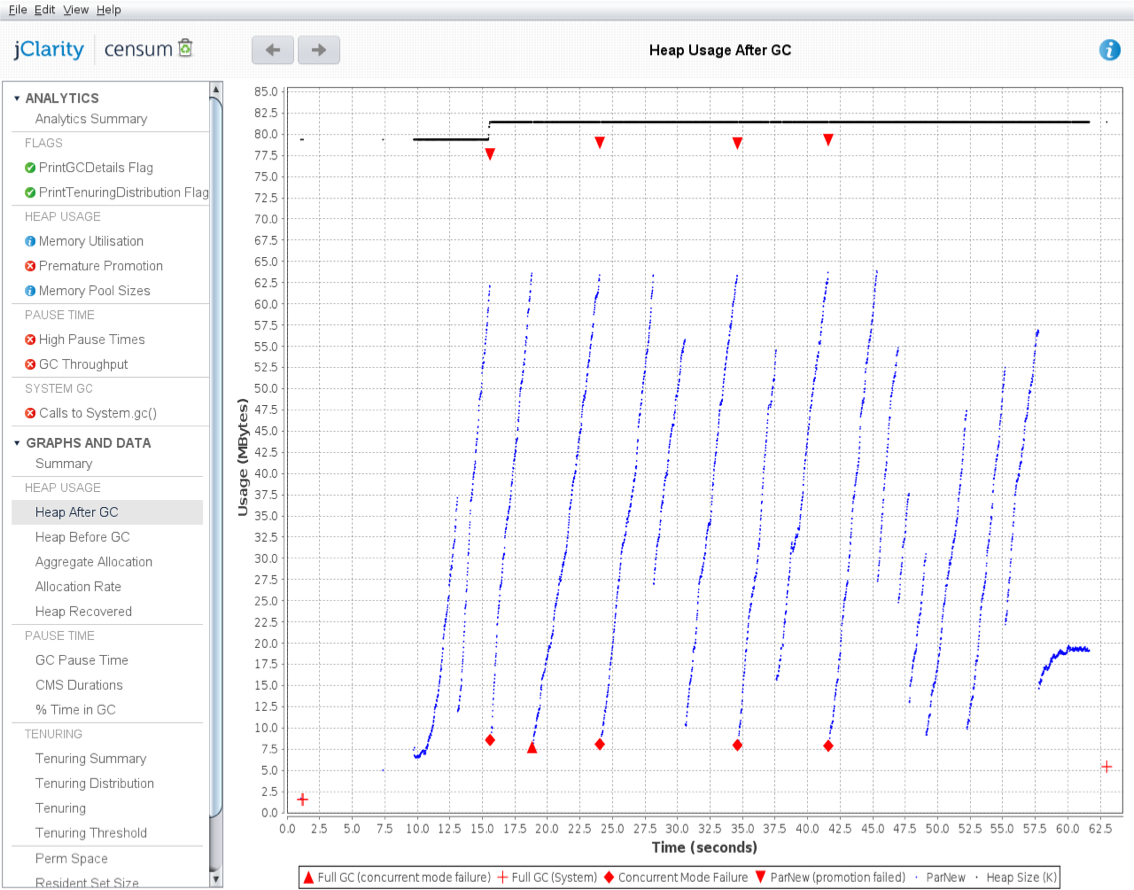

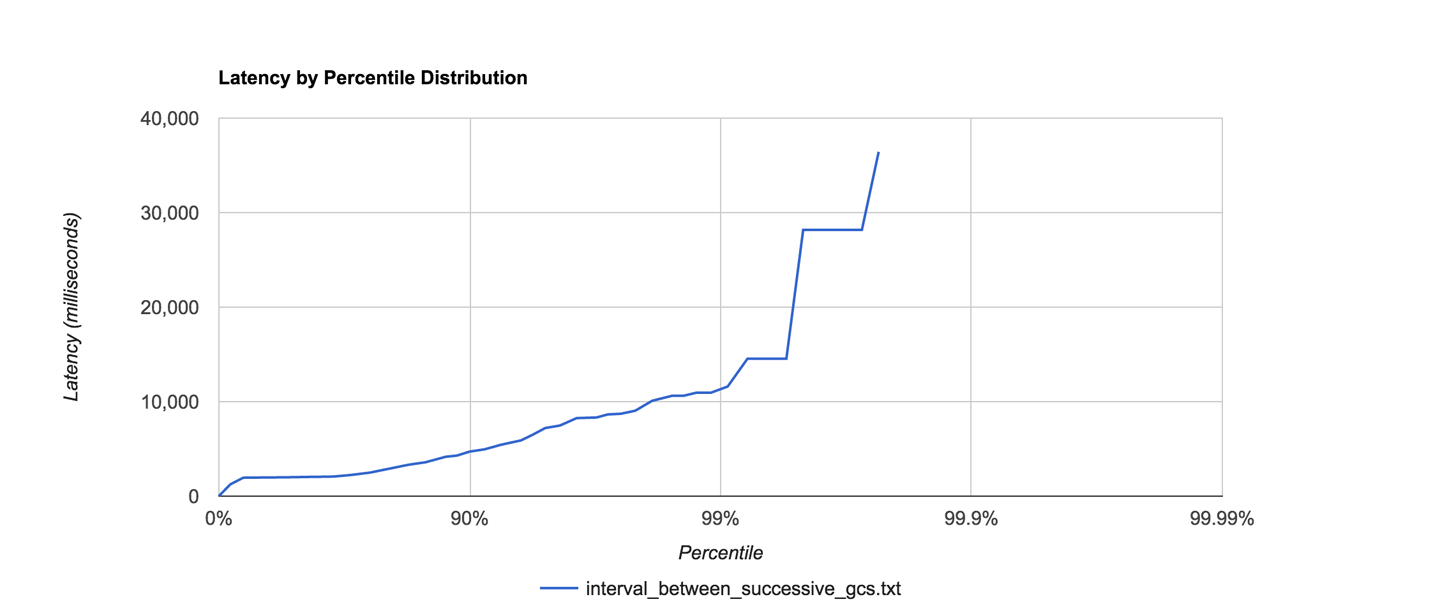

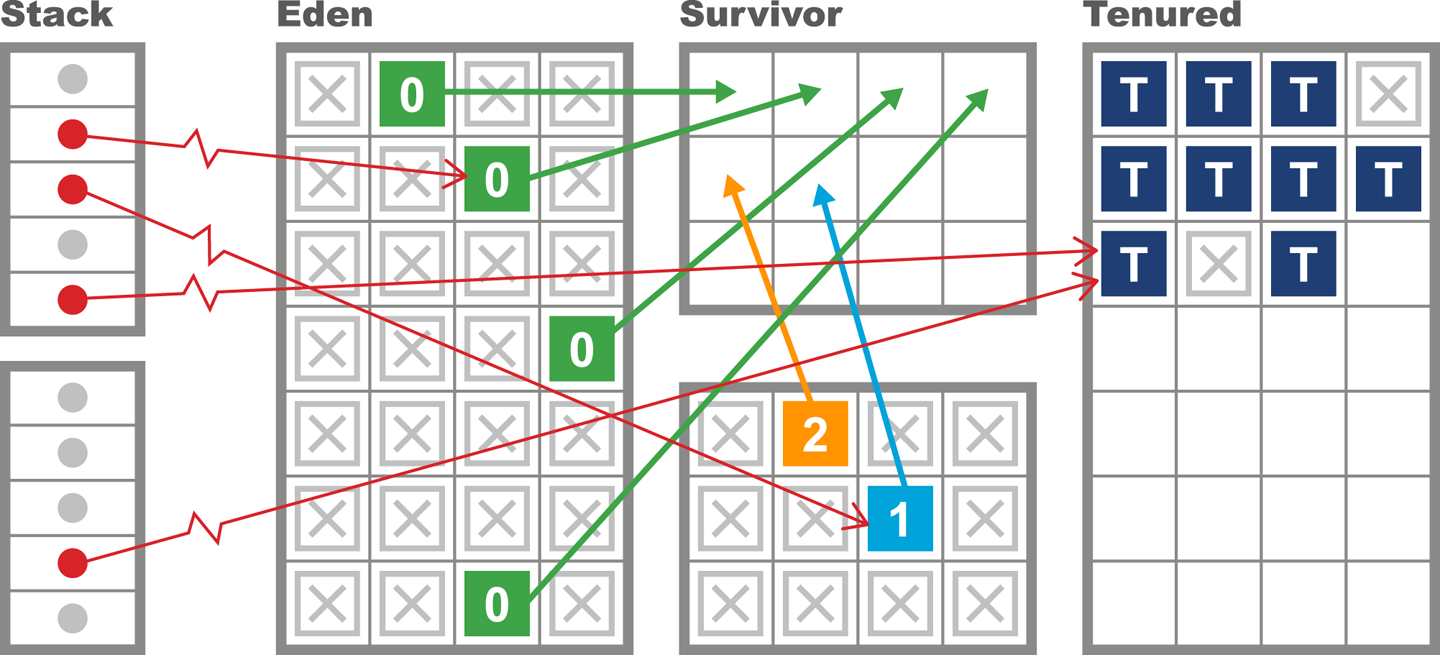

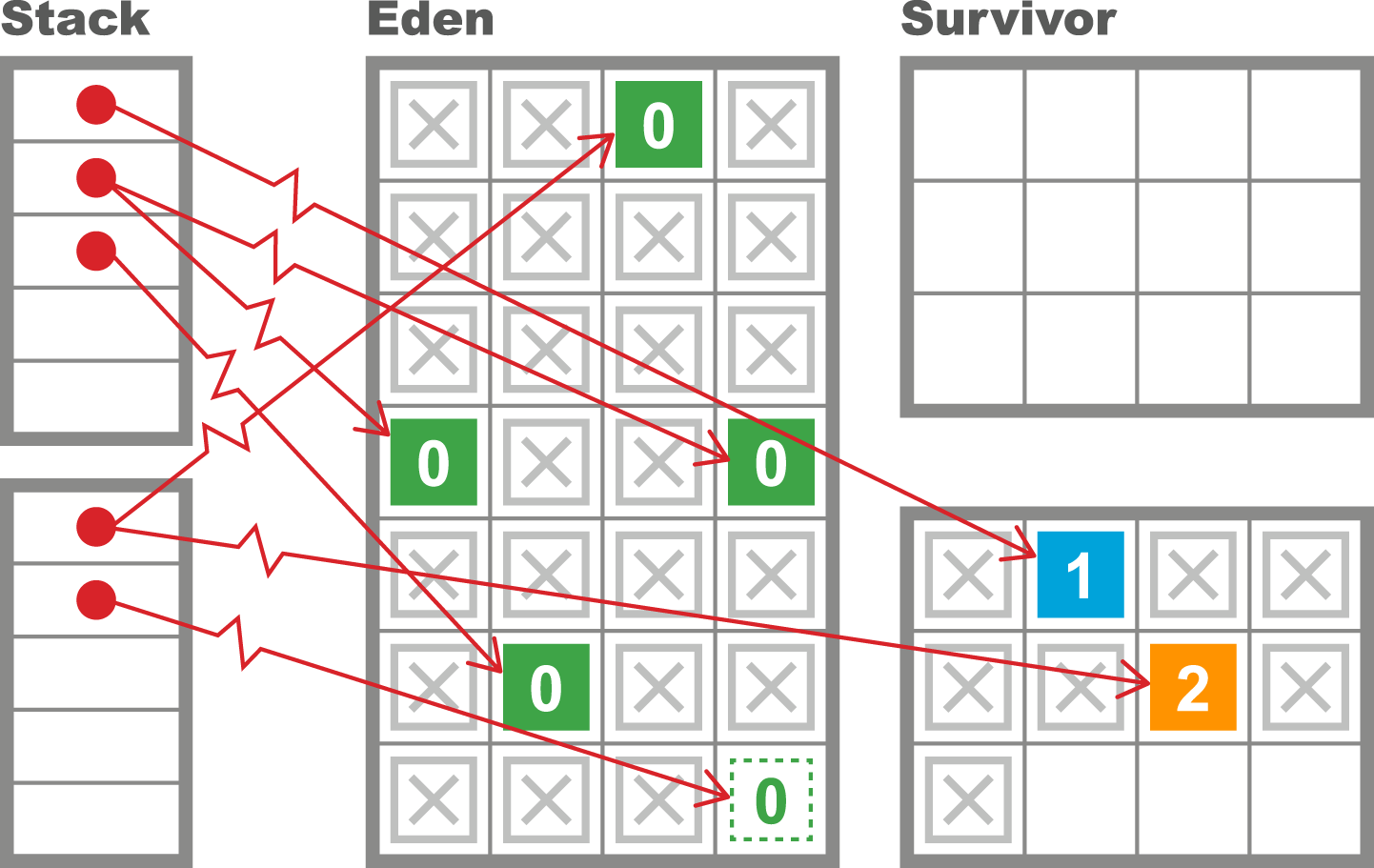

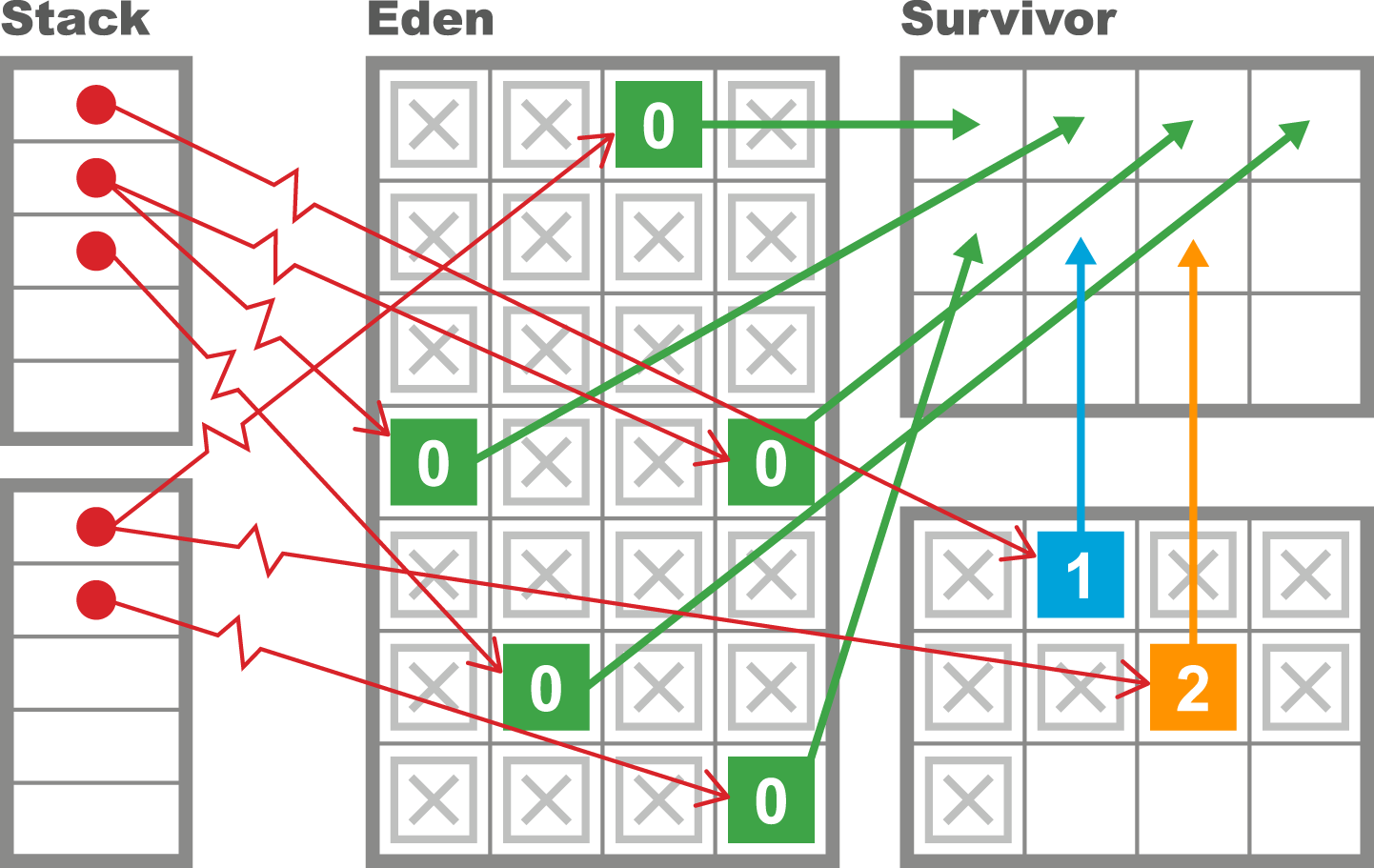

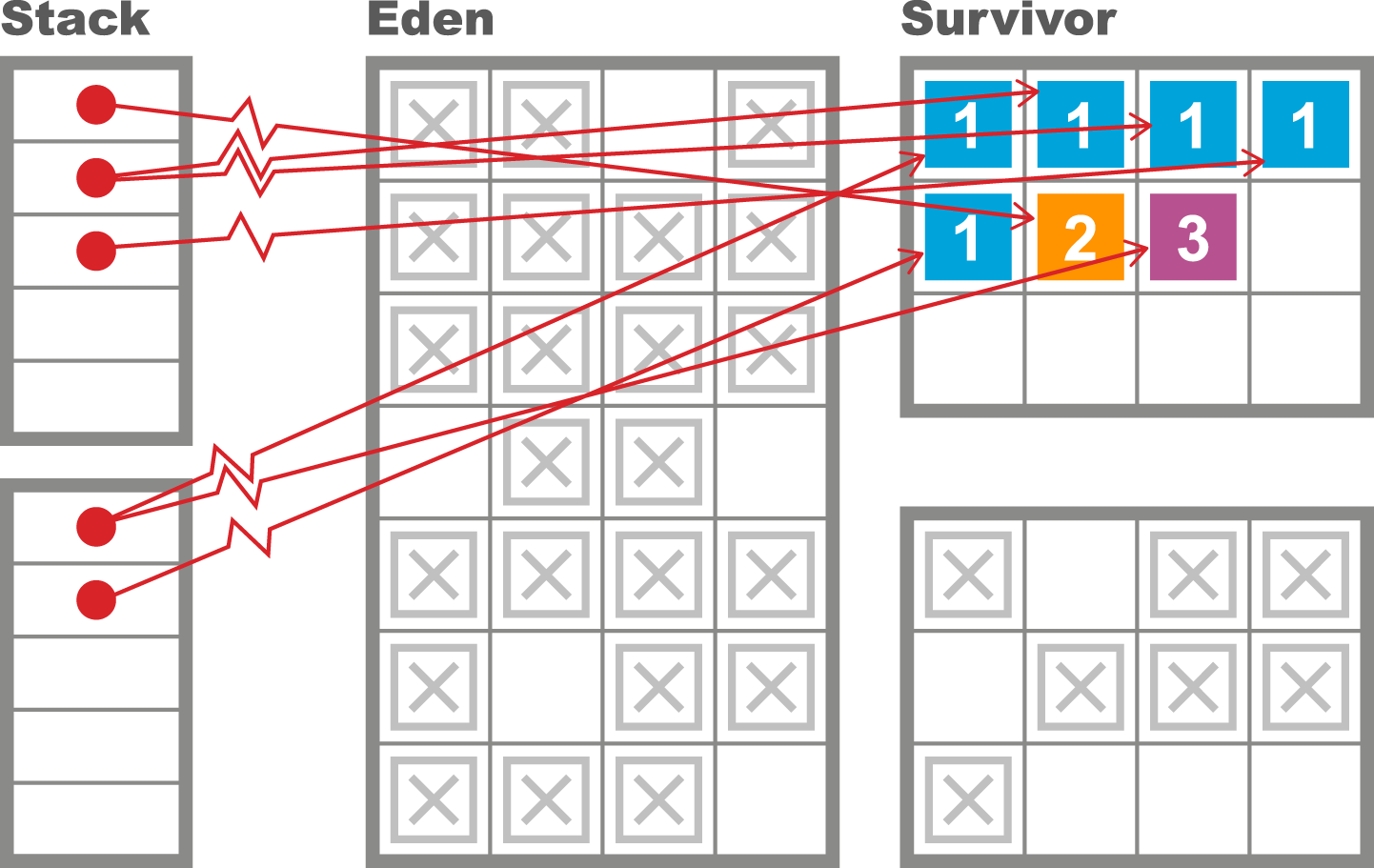

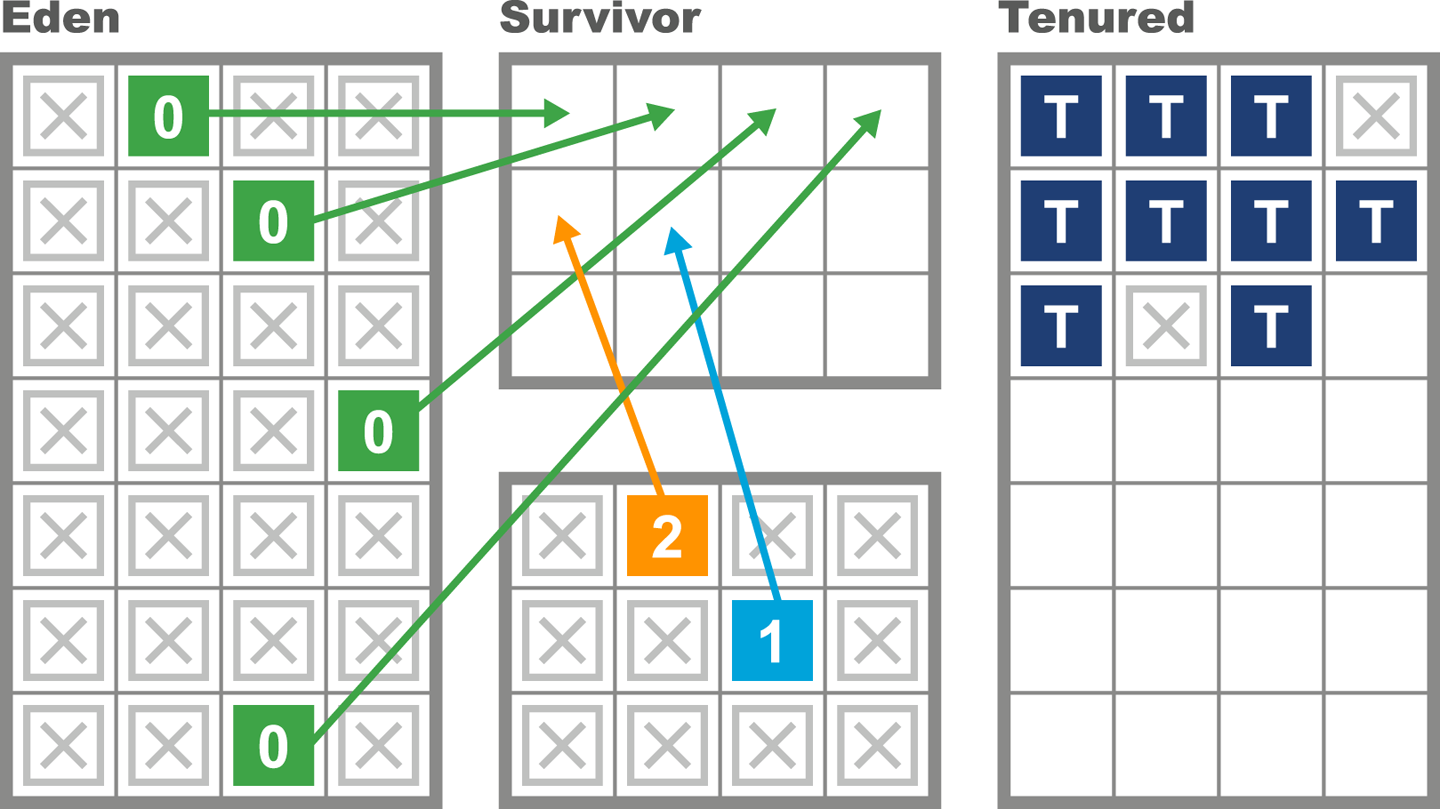

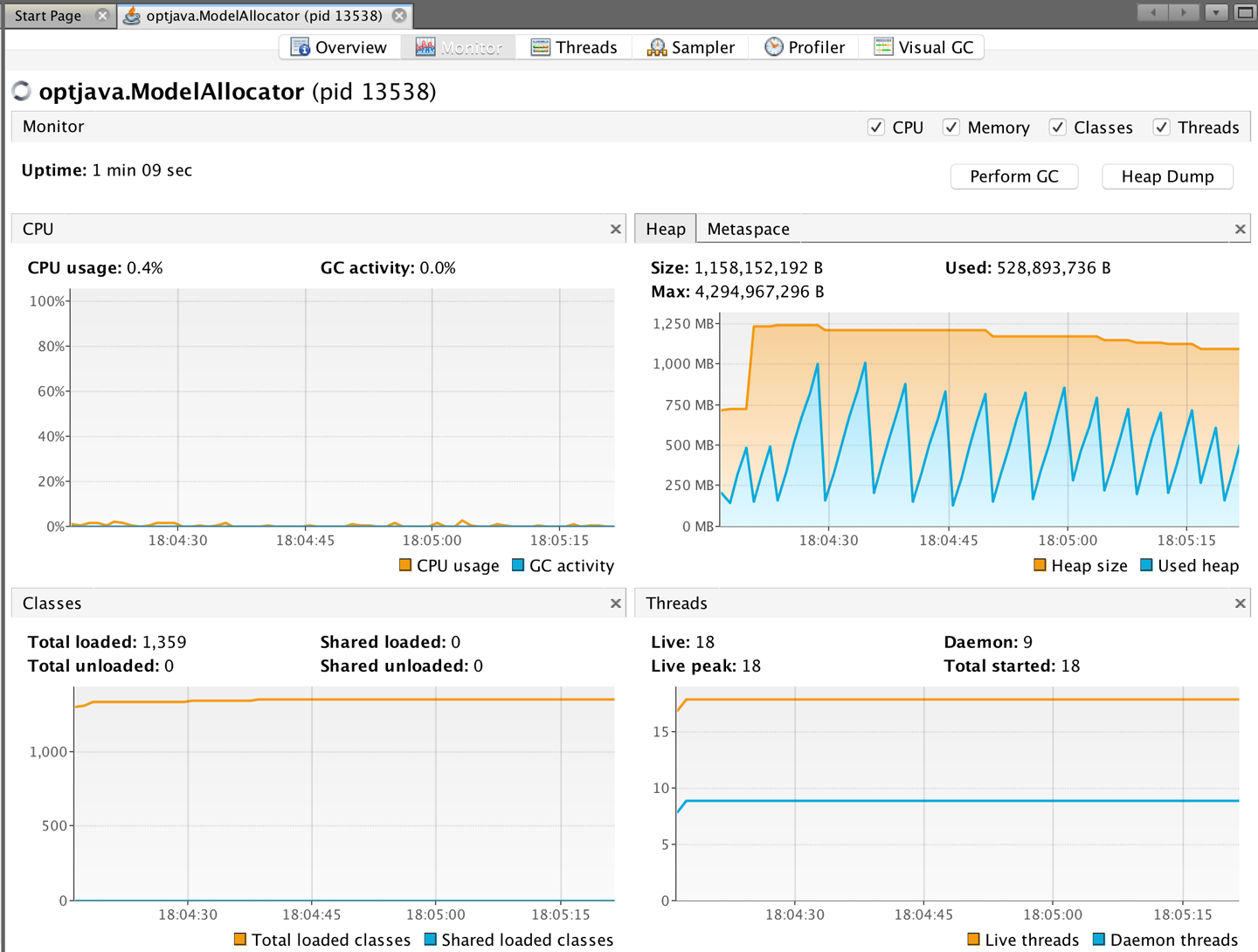

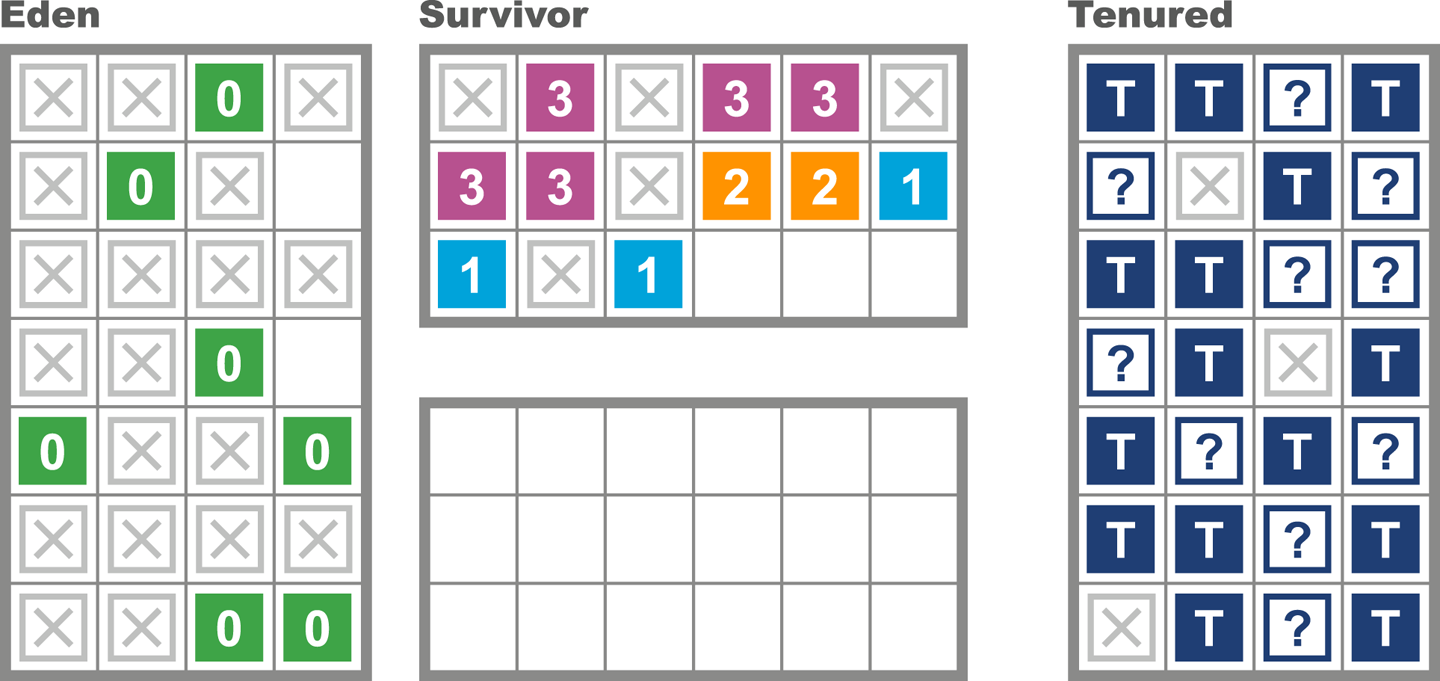

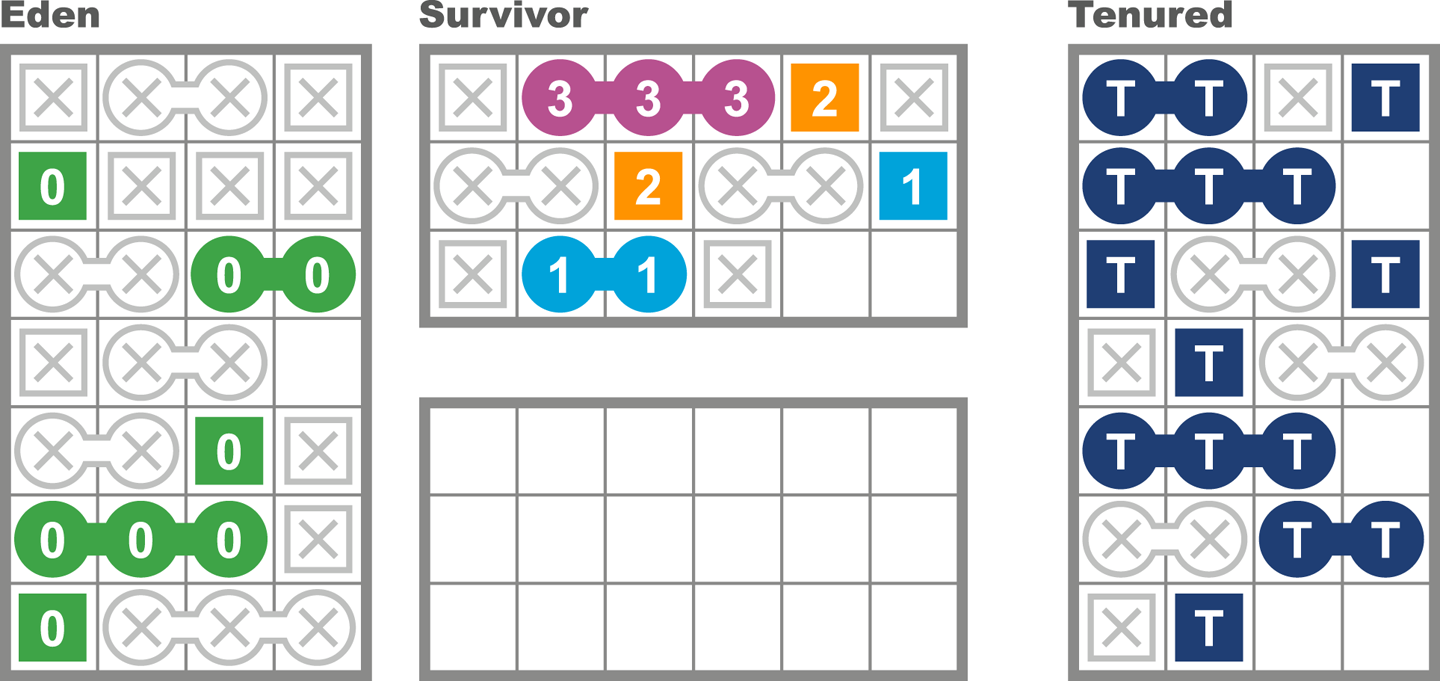

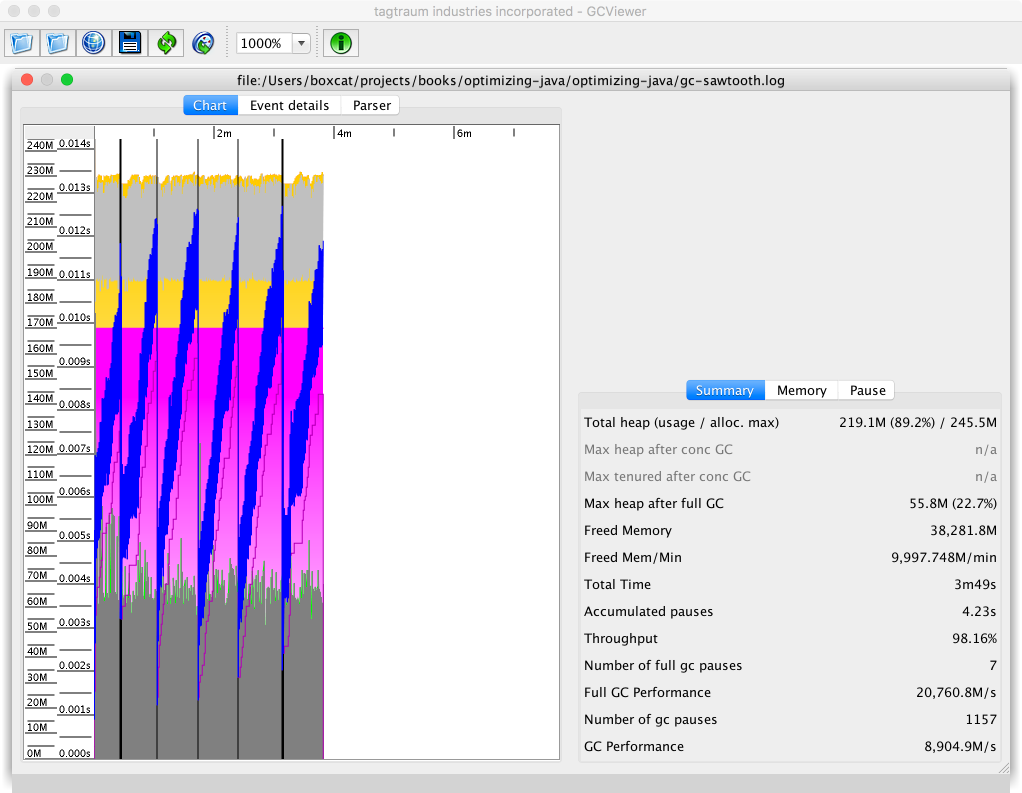

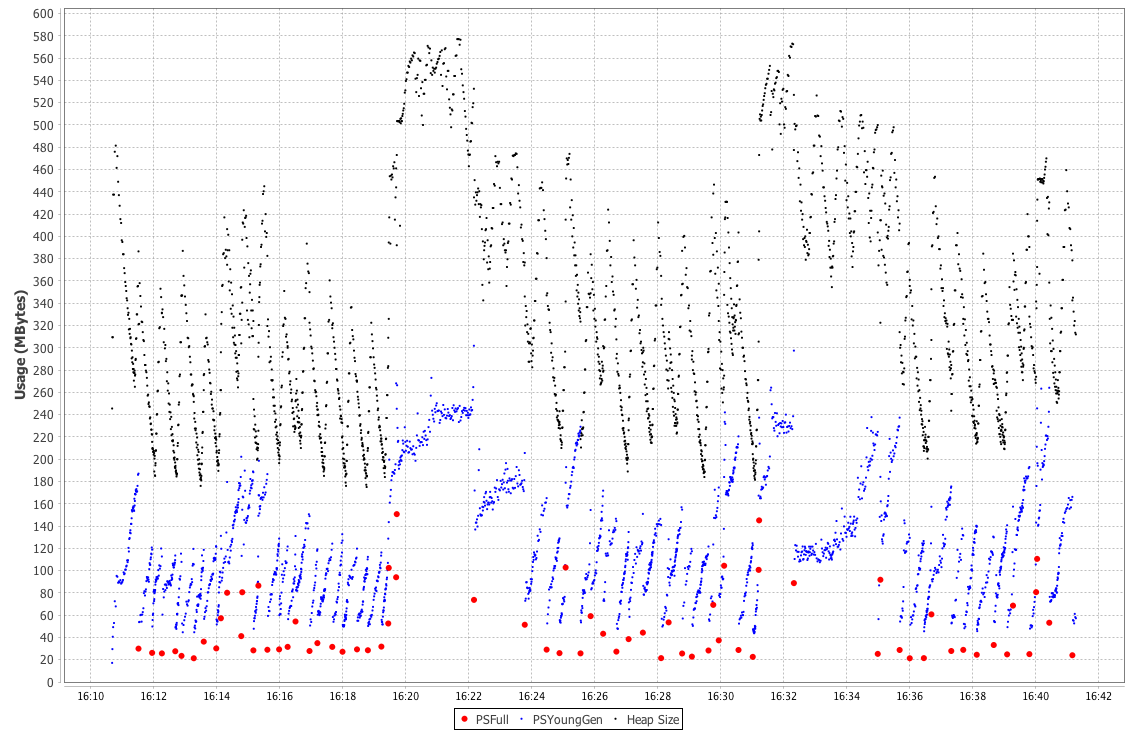

As we will see in Chapter 6, the underlying technology in the JVM’s garbage collection subsystem naturally gives rise to a “sawtooth” pattern of memory used for healthy applications that aren’t under stress. We can see an example in Figure 1-4.

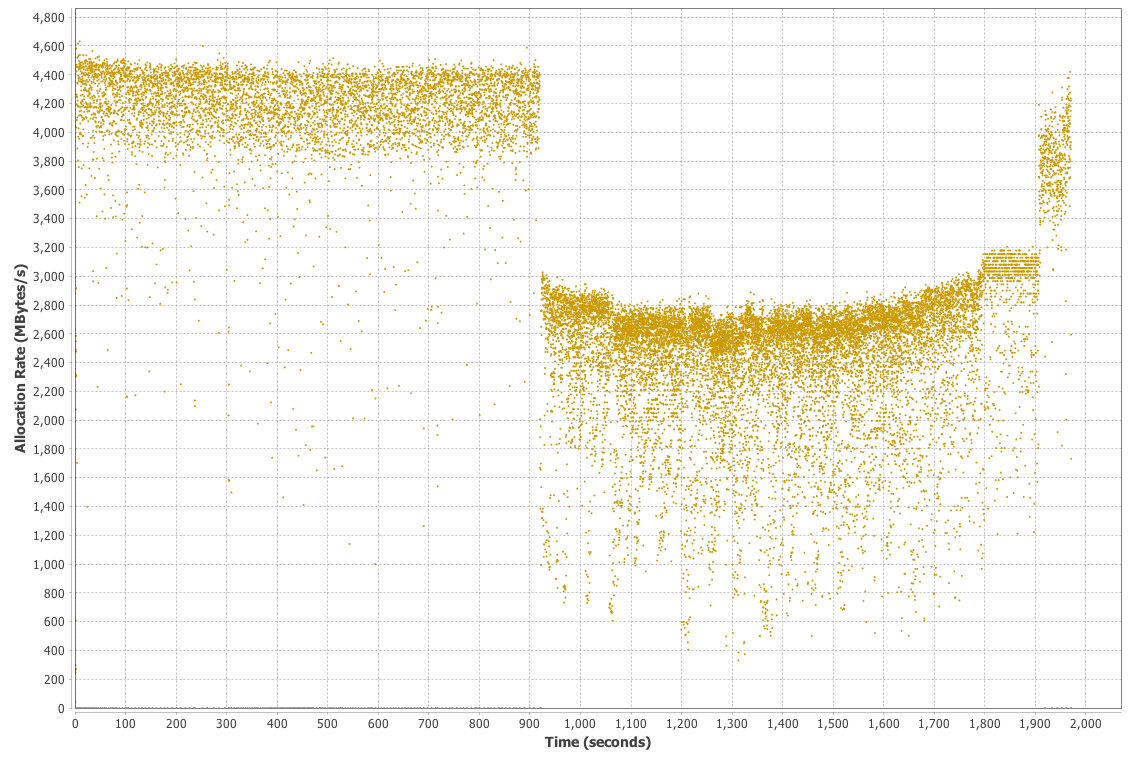

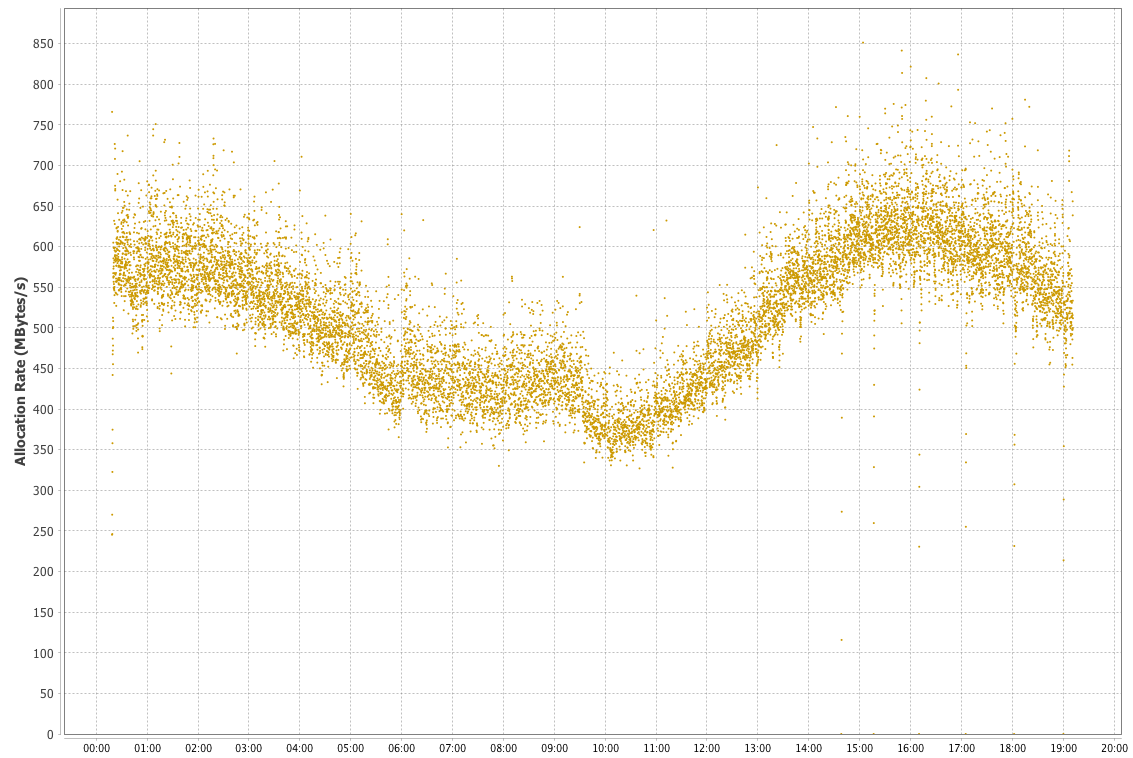

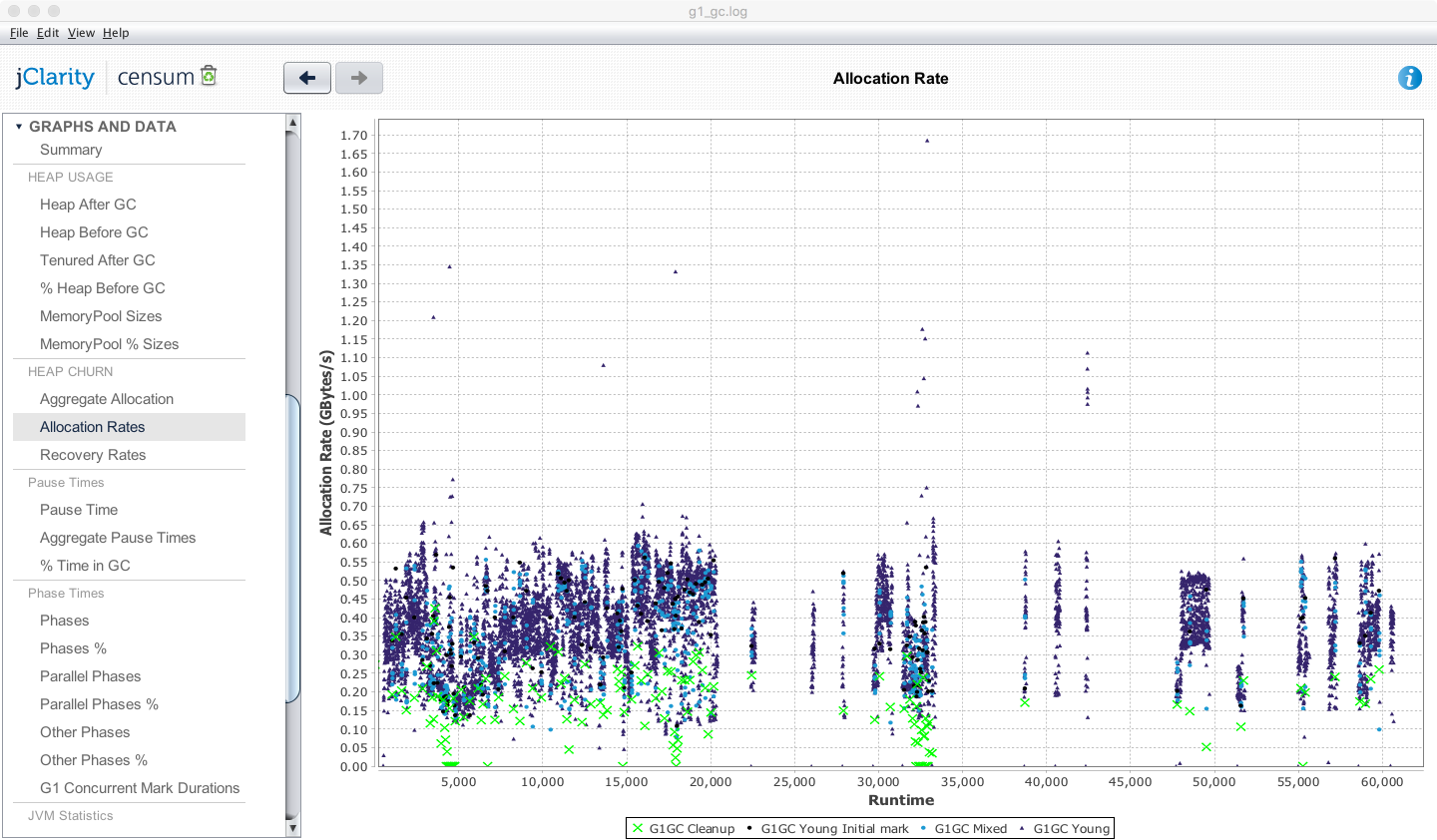

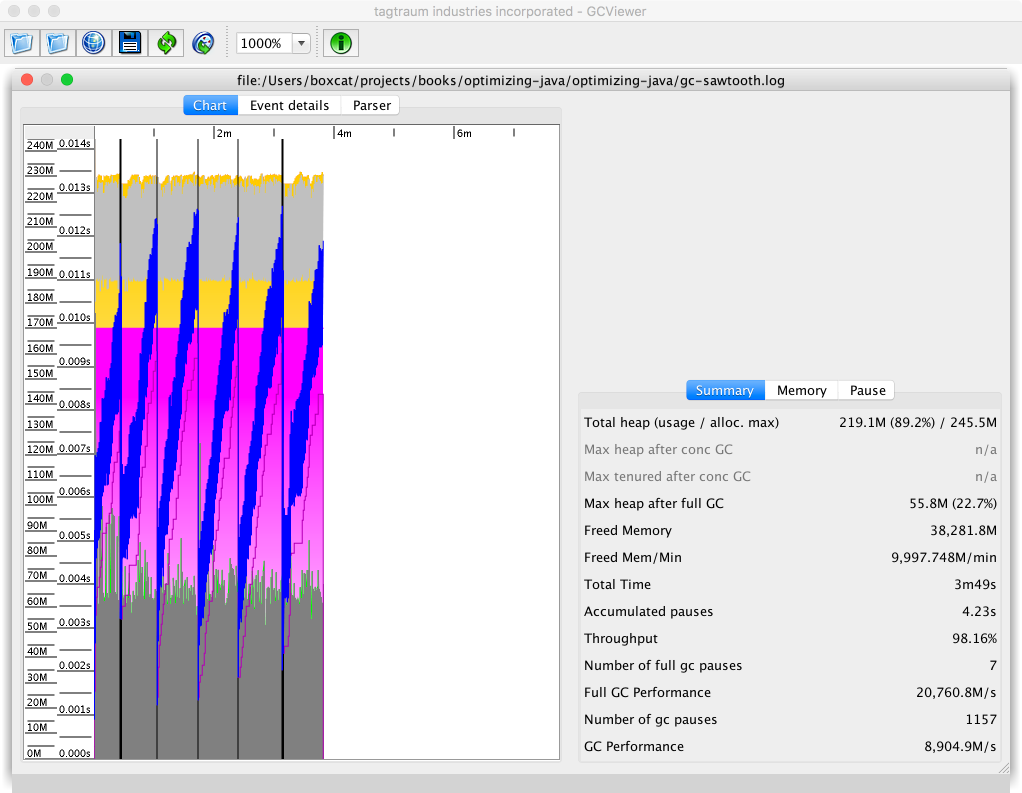

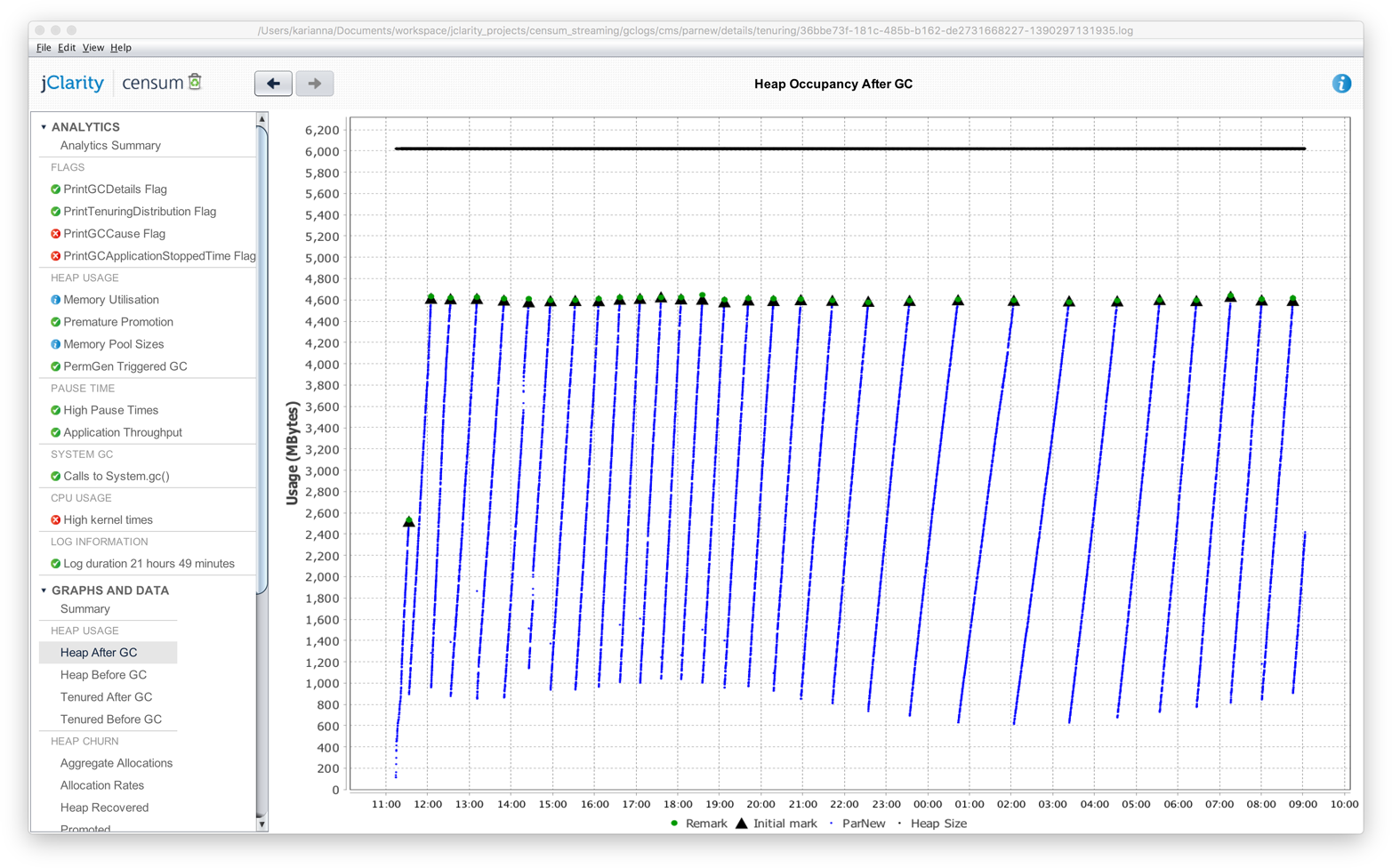

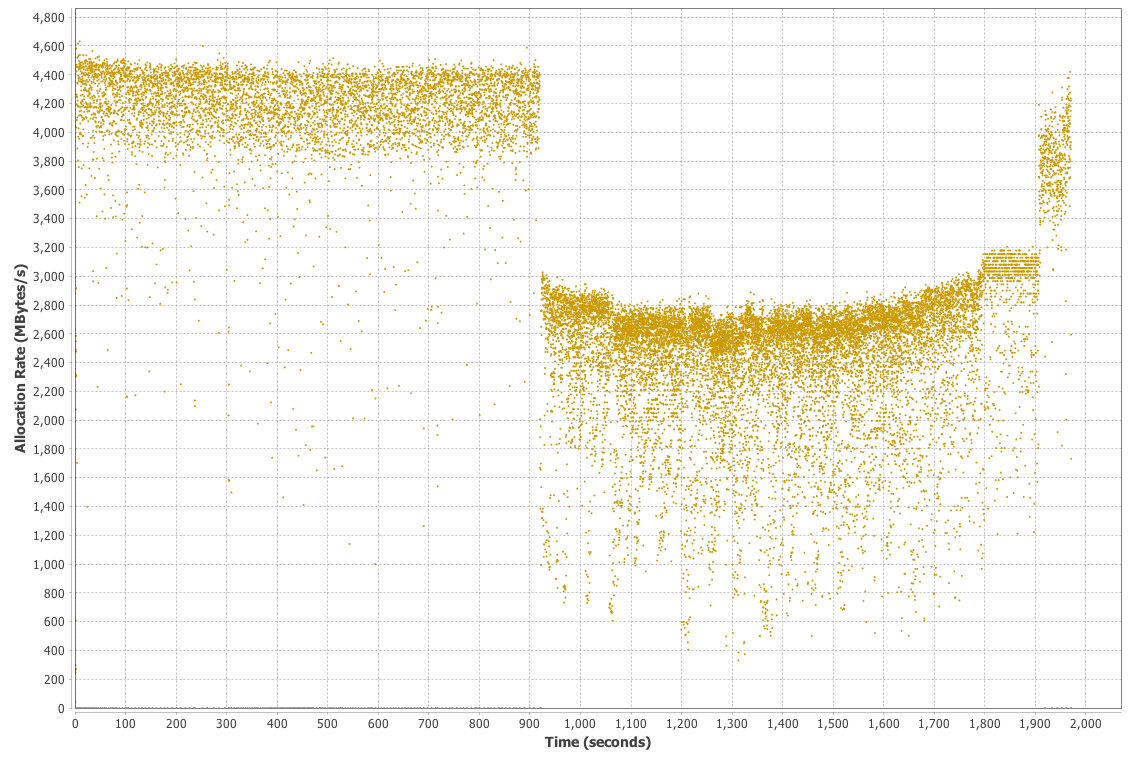

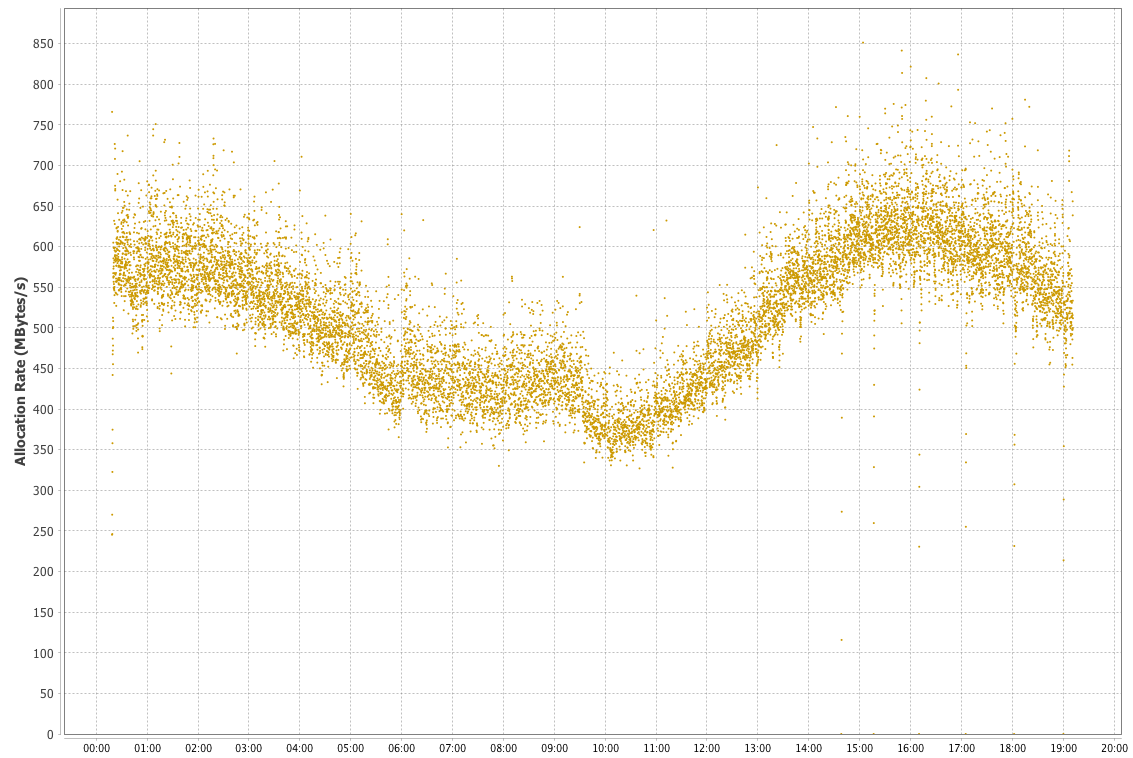

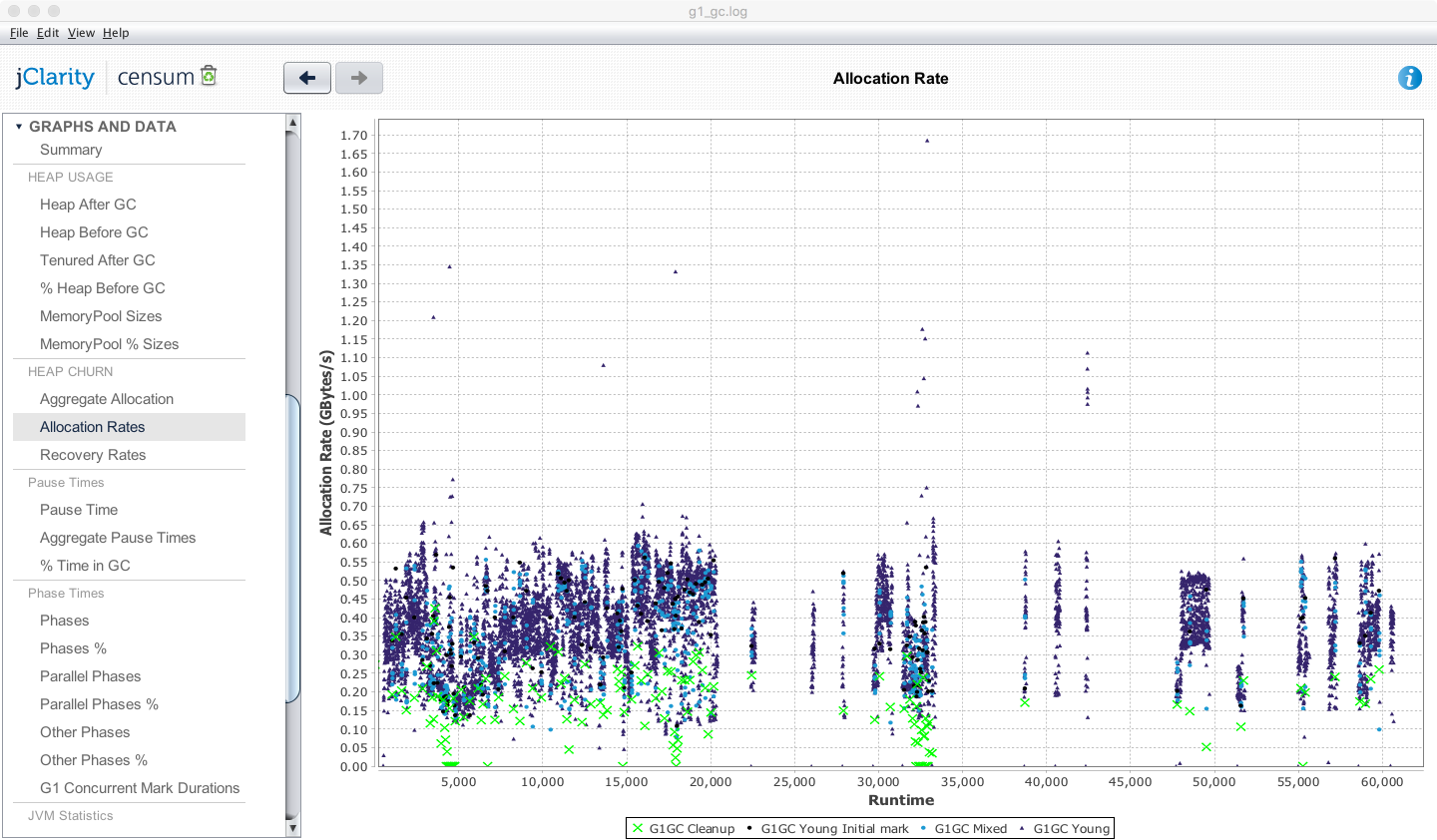

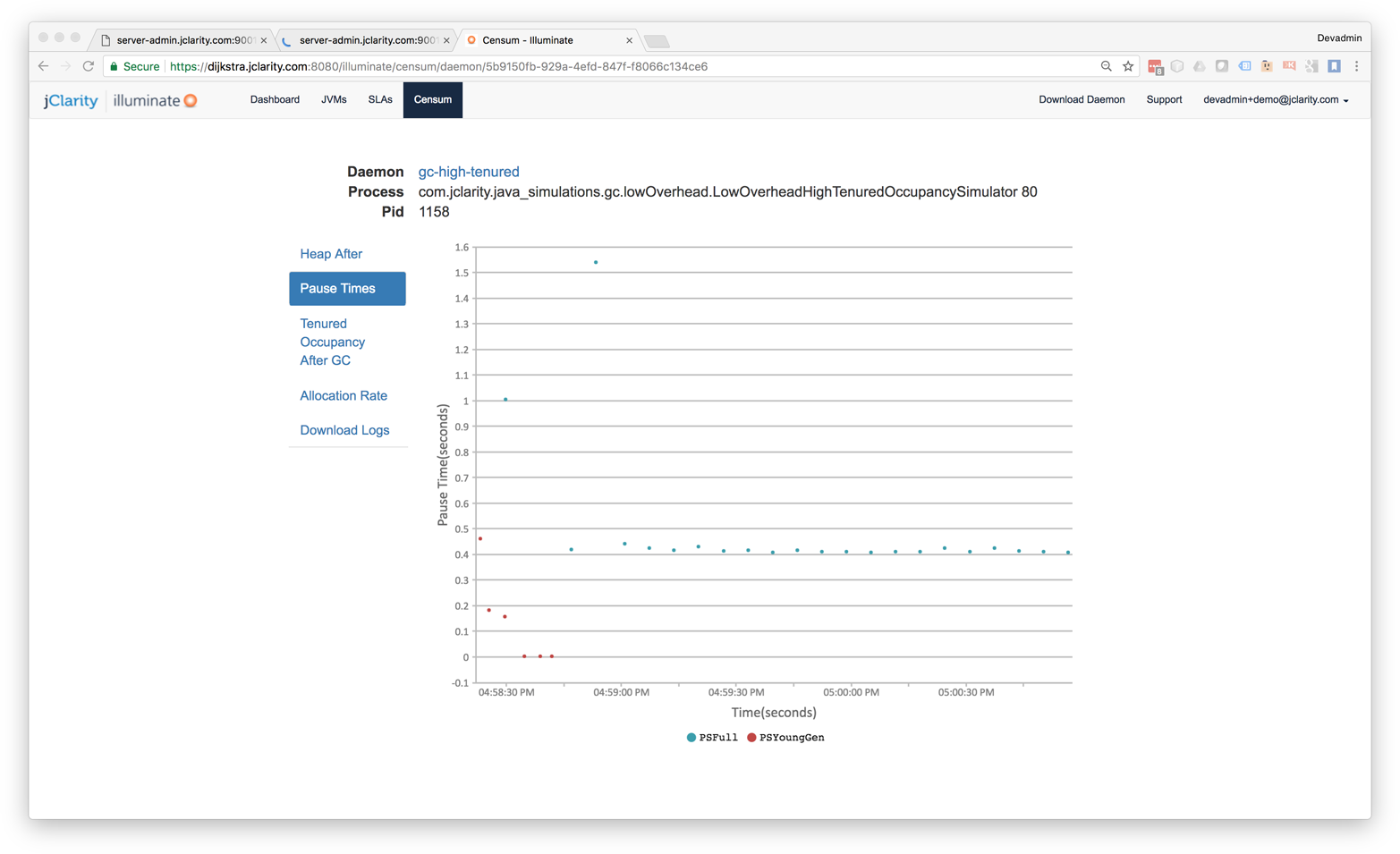

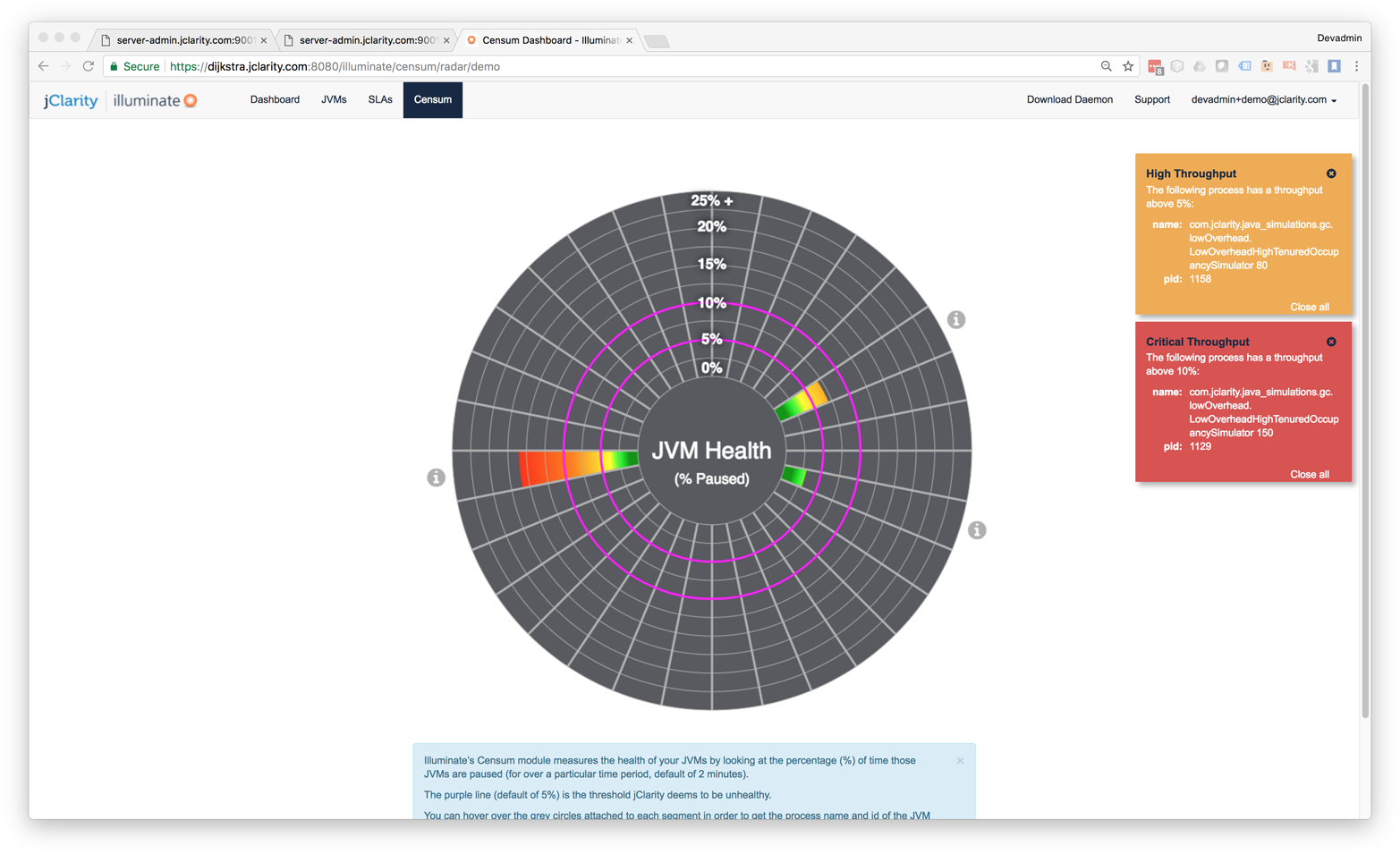

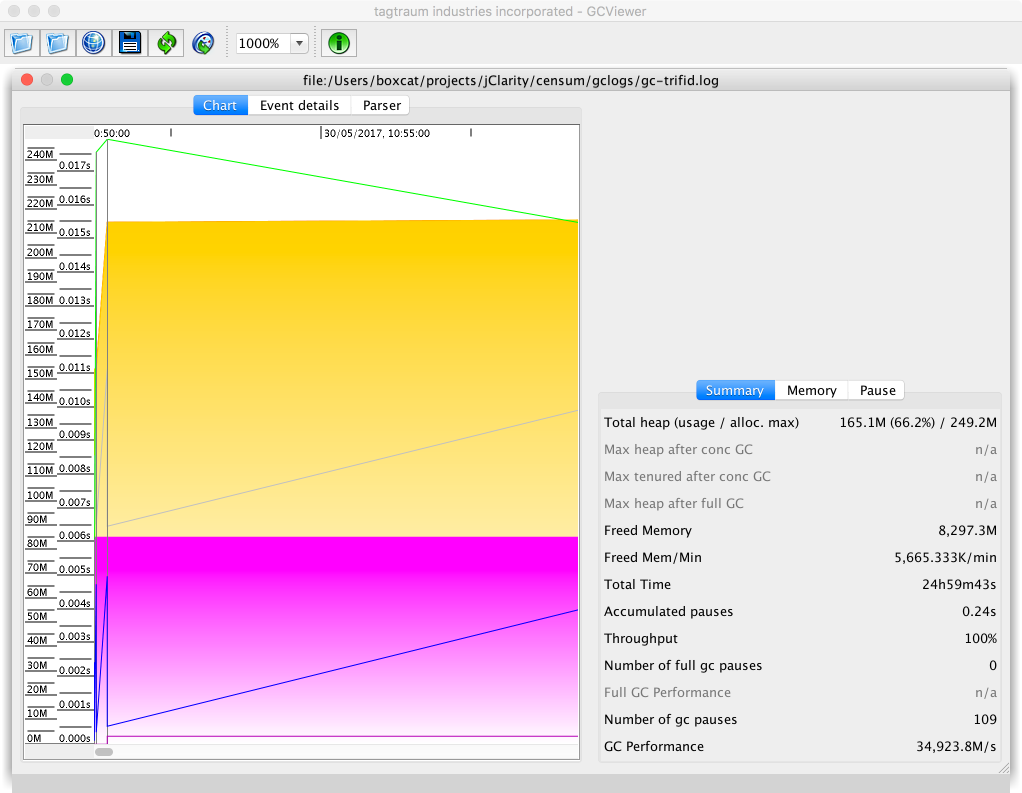

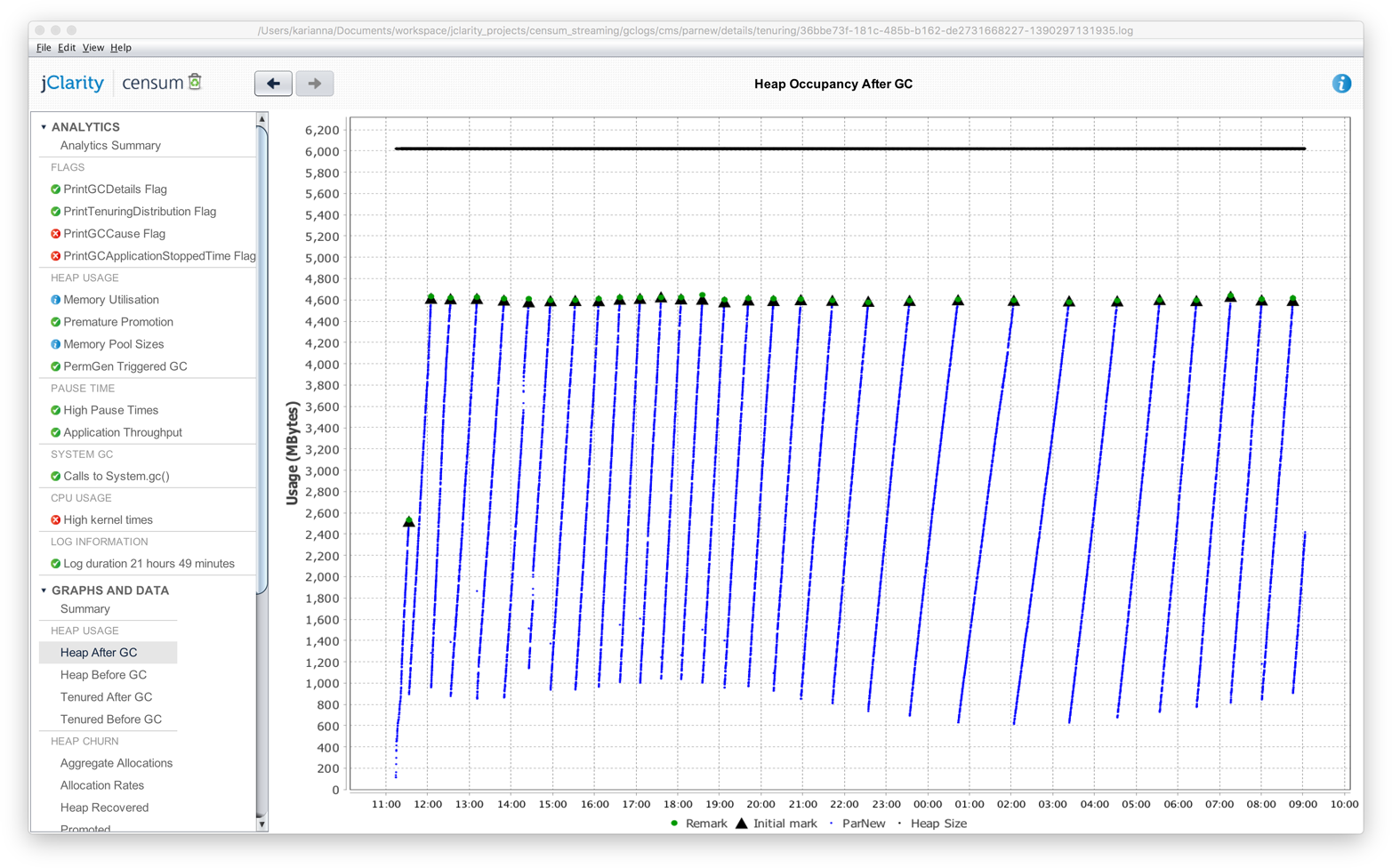

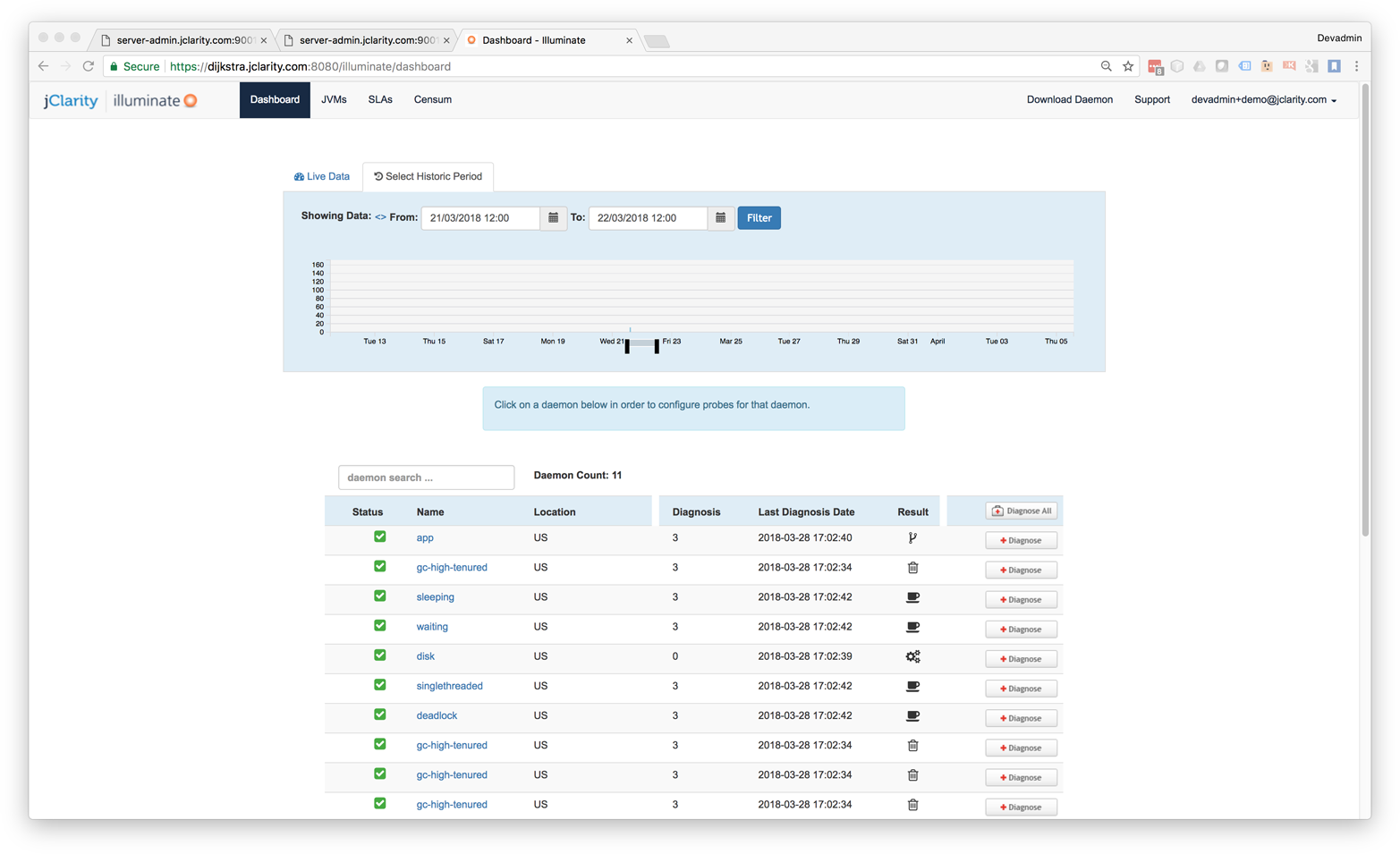

In Figure 1-5, we show another memory graph that can be of great importance when performance tuning an application’s memory allocation rate. This short example shows a sample application (calculating Fibonacci numbers). It clearly displays a sharp drop in the allocation rate at around the 90-second mark.

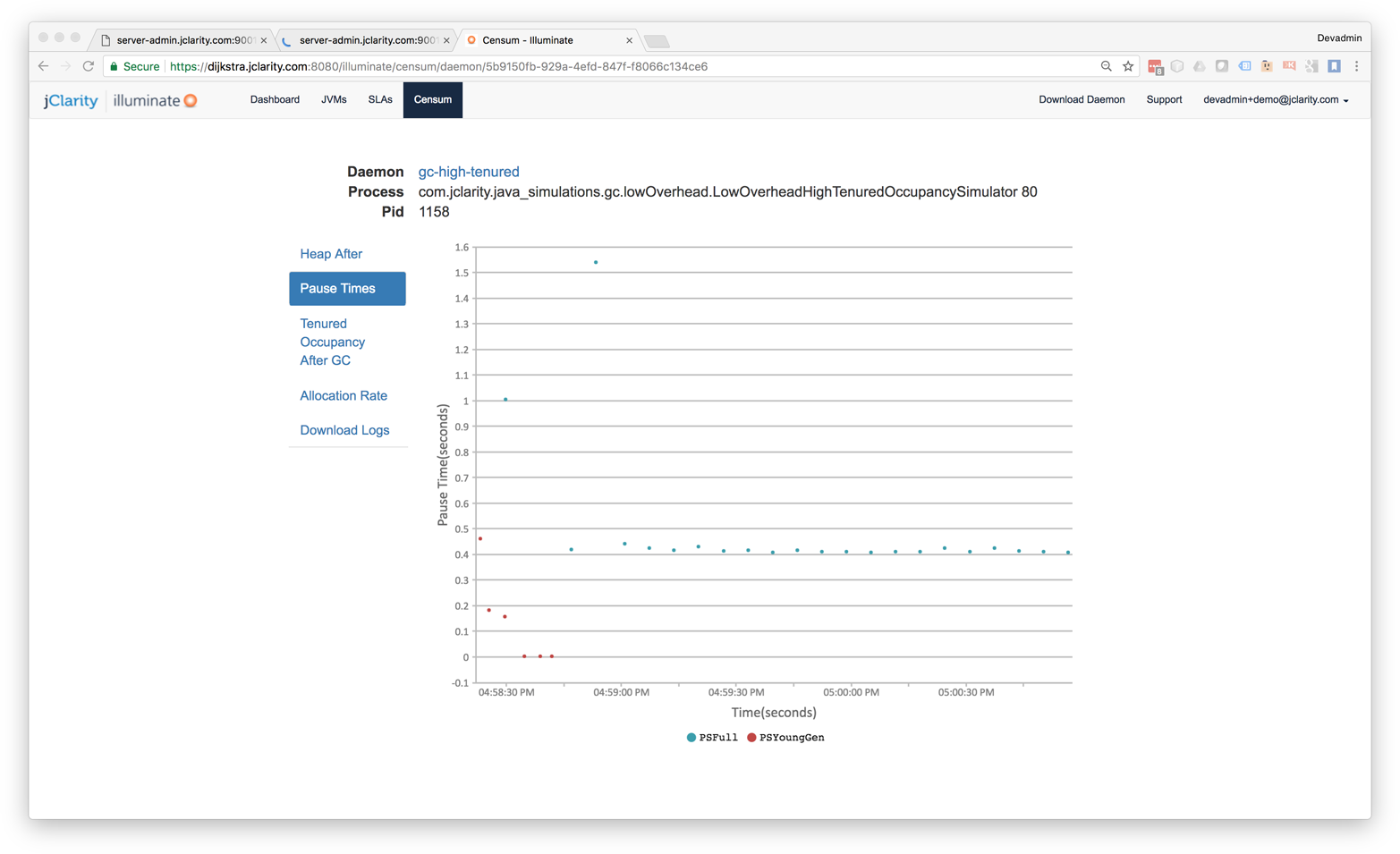

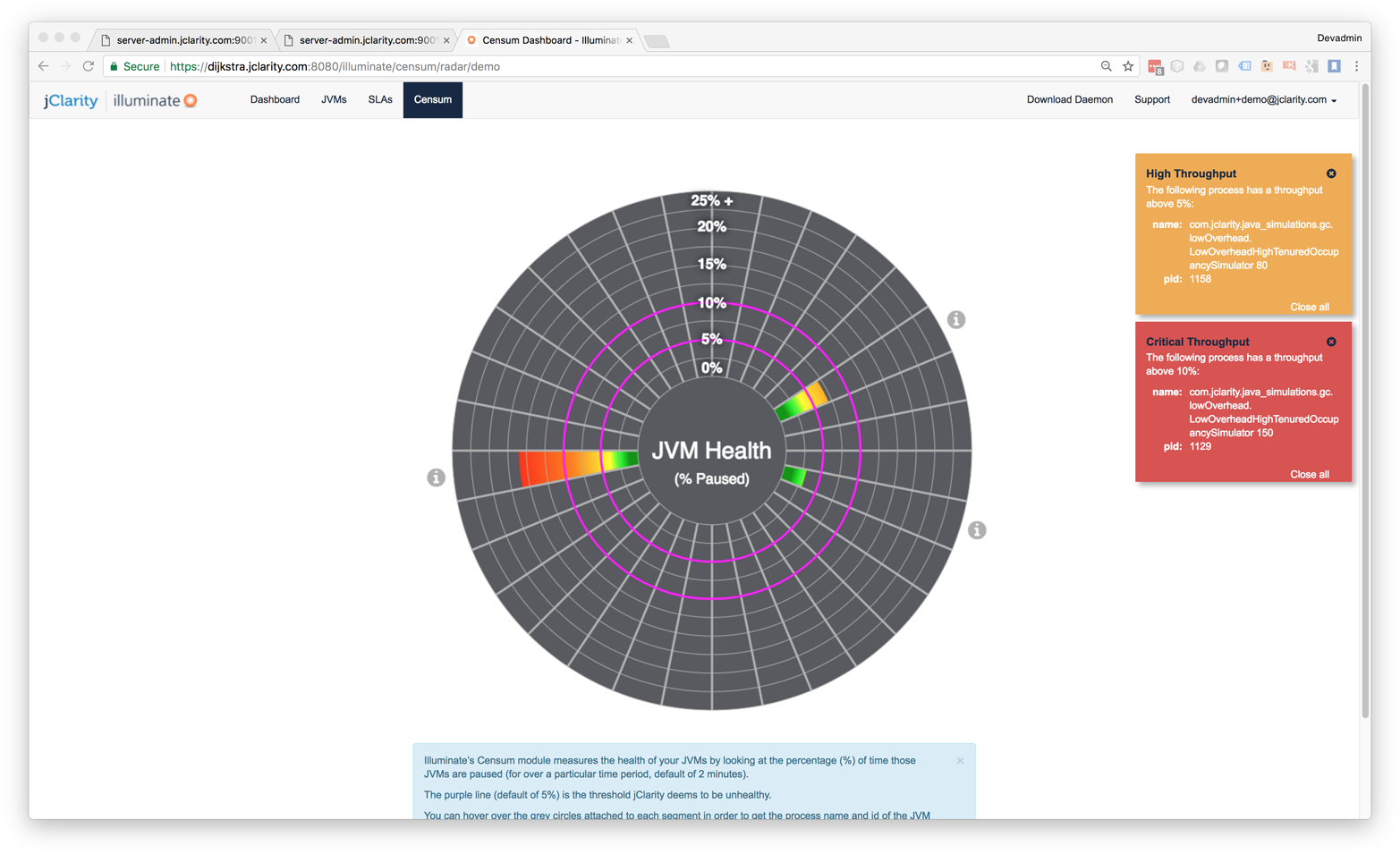

Other graphs from the same tool (jClarity Censum) show that the application starts to suffer from major garbage collection problems at this time, and so the application is unable to allocate sufficient memory due to CPU contention from the garbage collection threads.

We can also spot that the allocation subsystem is running hot—allocating over 4 GB per second. This is well above the recommended maximum capacity of most modern systems (including server class hardware). We will have much more to say about the subject of allocation when we discuss garbage collection in Chapter 6.

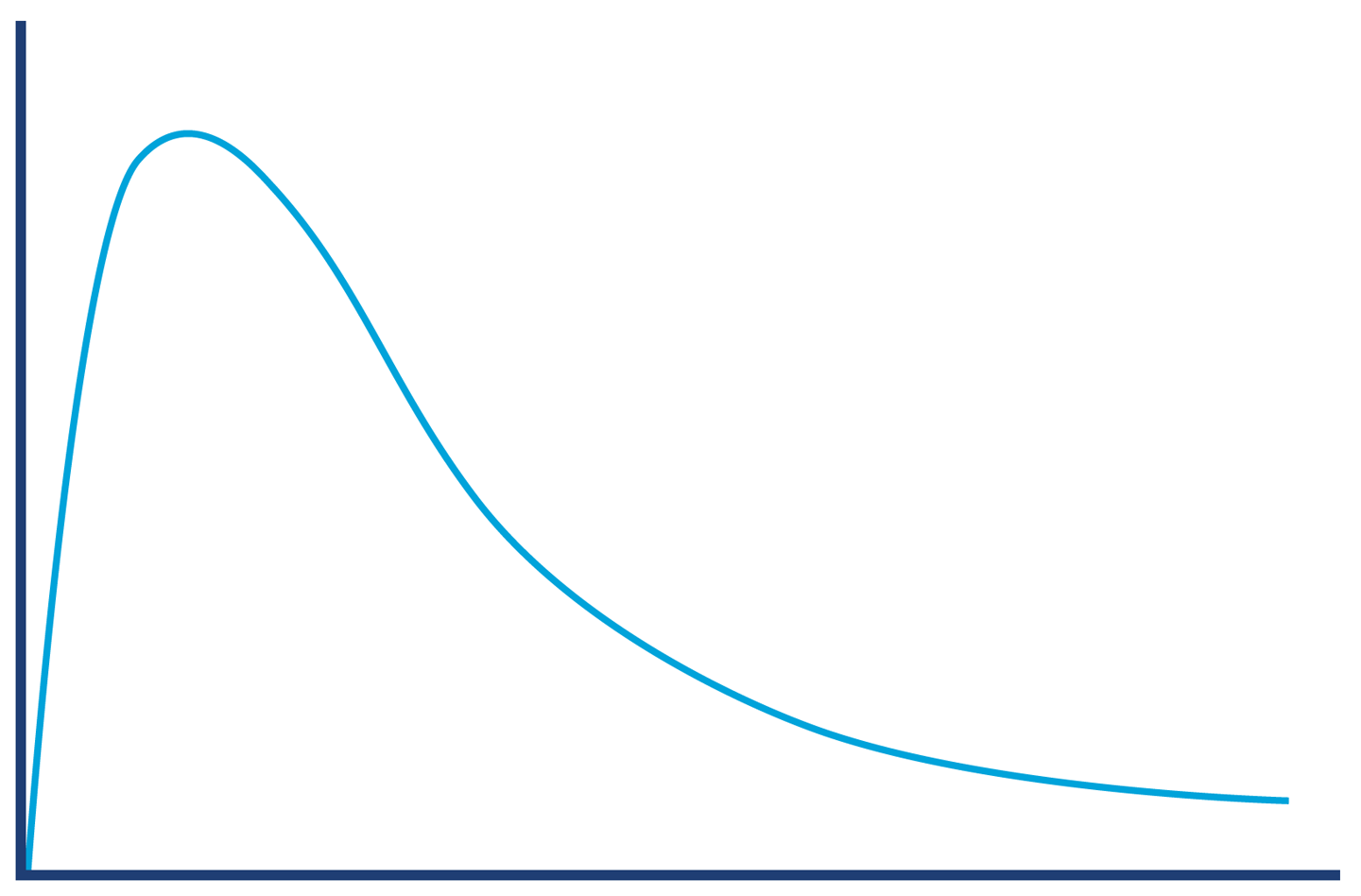

In the case where a system has a resource leak, it is far more common for it to manifest in a manner like that shown in Figure 1-6, where an observable (in this case latency) slowly degrades as the load is ramped up, before hitting an inflection point where the system rapidly degrades.

In this chapter we have started to discuss what Java performance is and is not. We have introduced the fundamental topics of empirical science and measurement, and the basic vocabulary and observables that a good performance exercise will use. Finally, we have introduced some common cases that are often seen within the results obtained from performance tests. Let’s move on and begin our discussion of some of the major aspects of the JVM, and set the scene for understanding what makes JVM-based performance optimization a particularly complex problem.

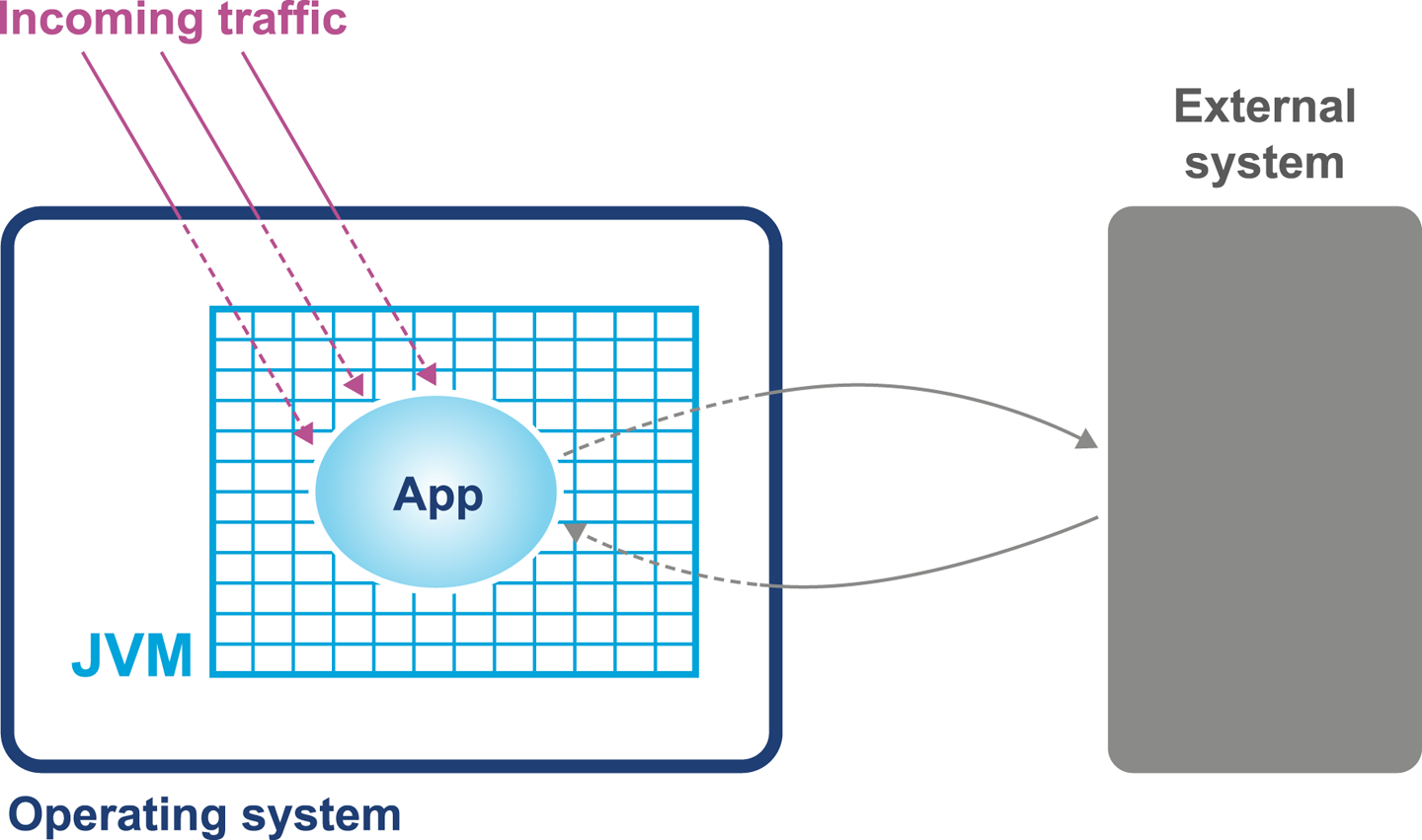

There is no doubt that Java is one of the largest technology platforms on the planet, boasting roughly 9–10 million developers (according to Oracle). By design, many developers do not need to know about the low-level intricacies of the platform they work with. This leads to a situation where developers only meet these aspects when a customer complains about performance for the first time.

For developers interested in performance, however, it is important to understand the basics of the JVM technology stack. Understanding JVM technology enables developers to write better software and provides the theoretical background required for investigating performance-related issues.

This chapter introduces how the JVM executes Java in order to provide a basis for deeper exploration of these topics later in the book. In particular, Chapter 9 has an in-depth treatment of bytecode. One strategy for the reader could be to read this chapter now, and then reread it in conjunction with Chapter 9, once some of the other topics have been understood.

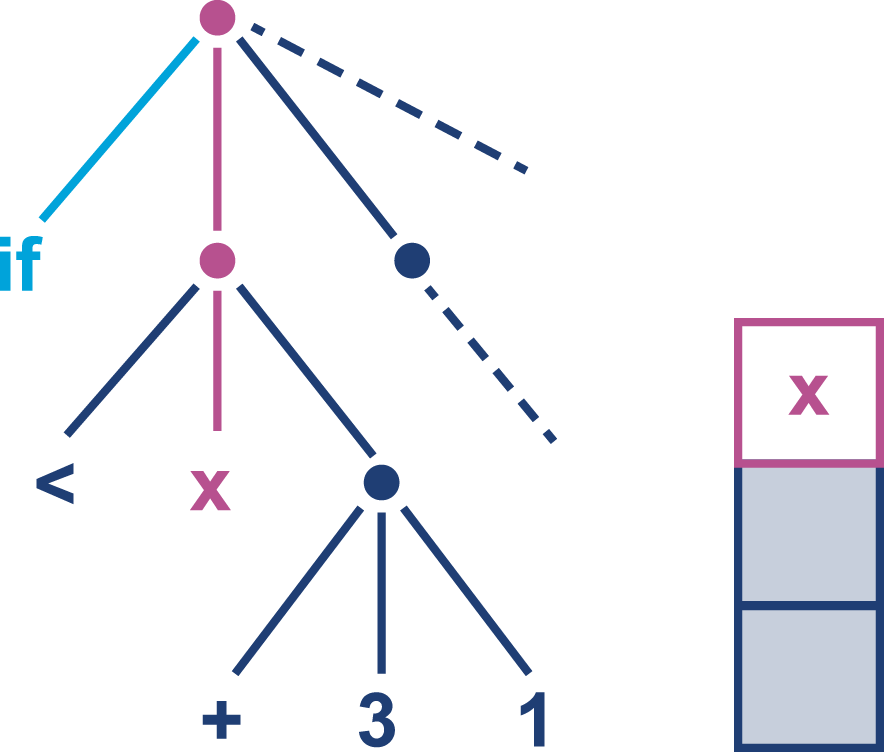

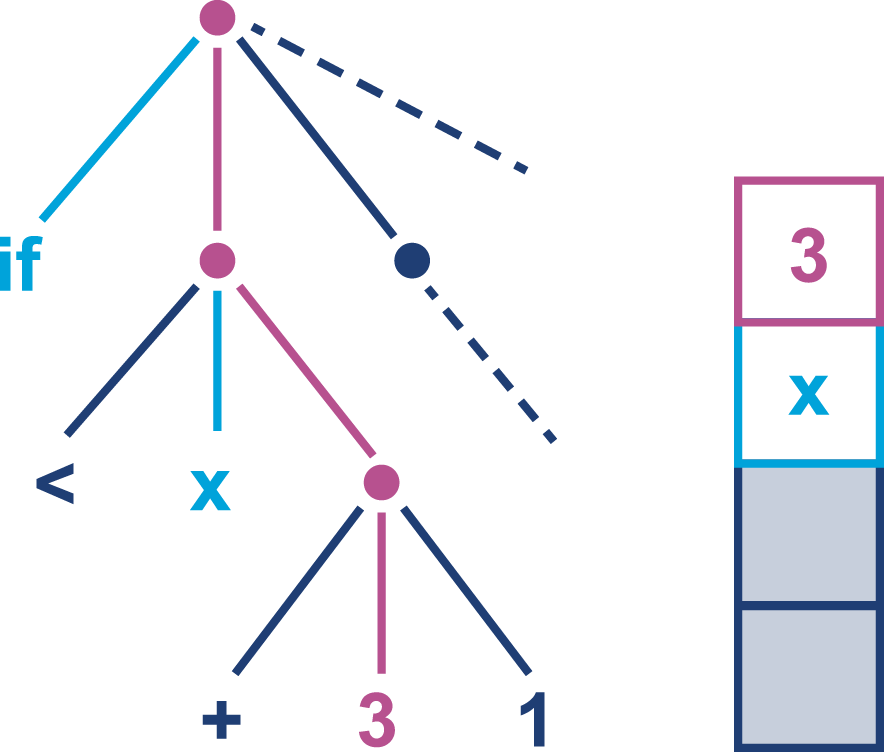

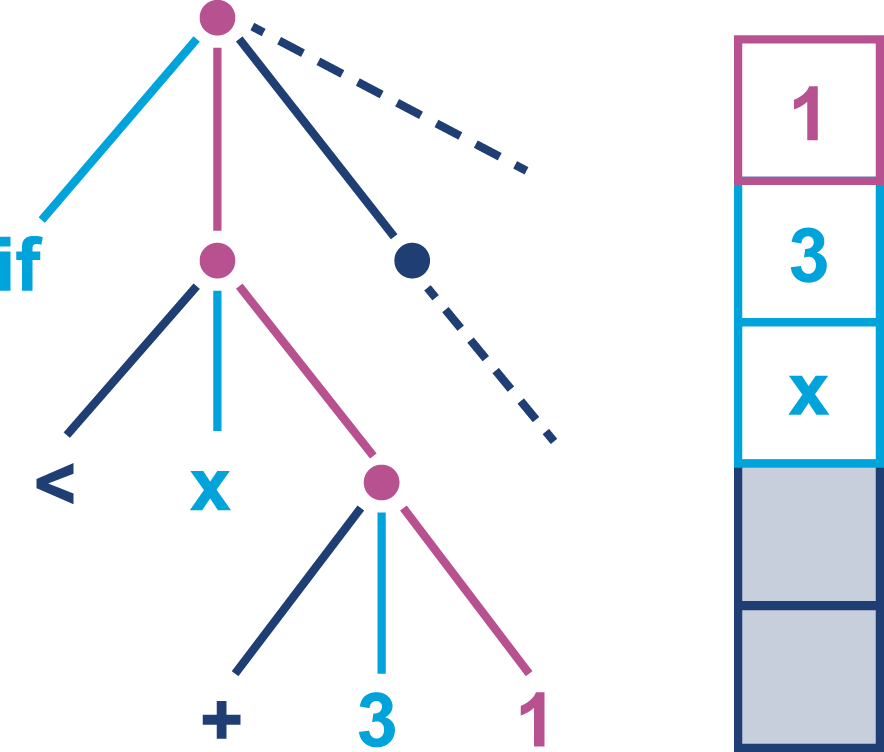

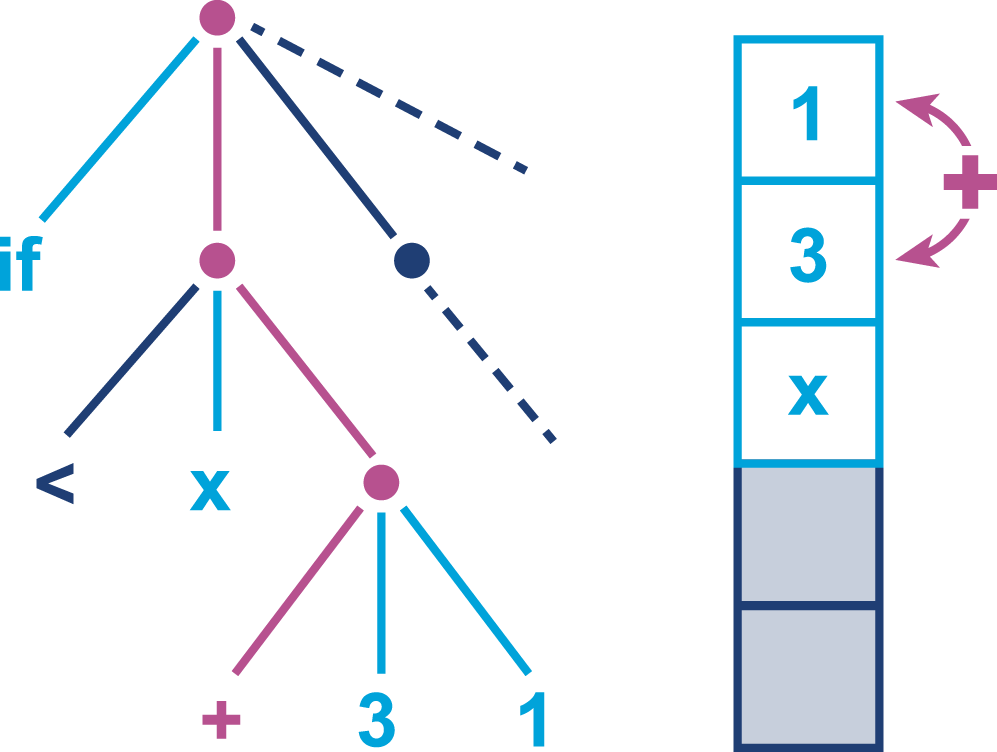

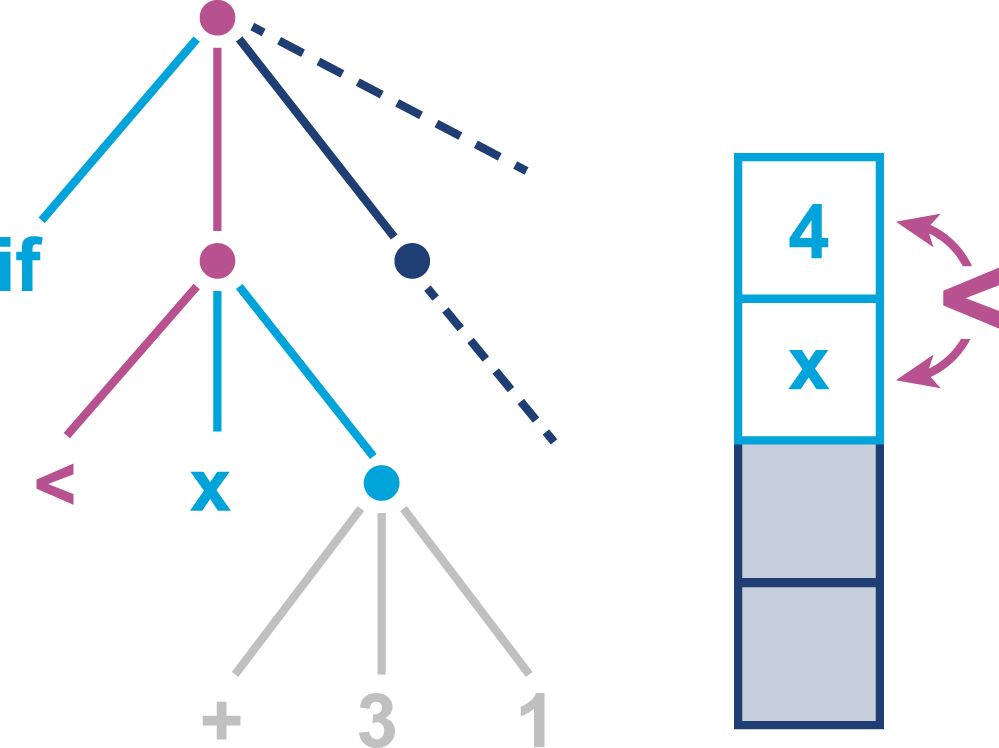

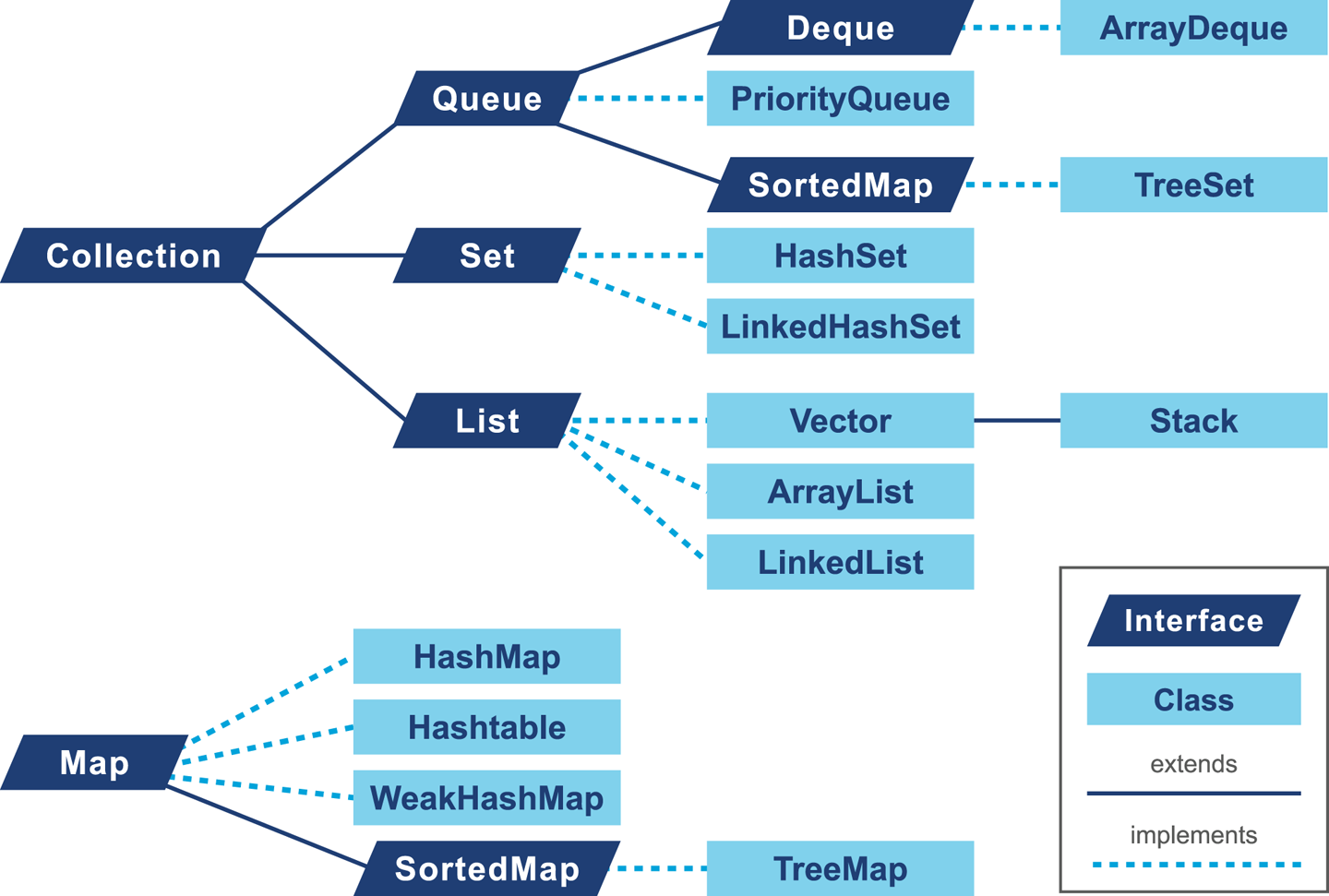

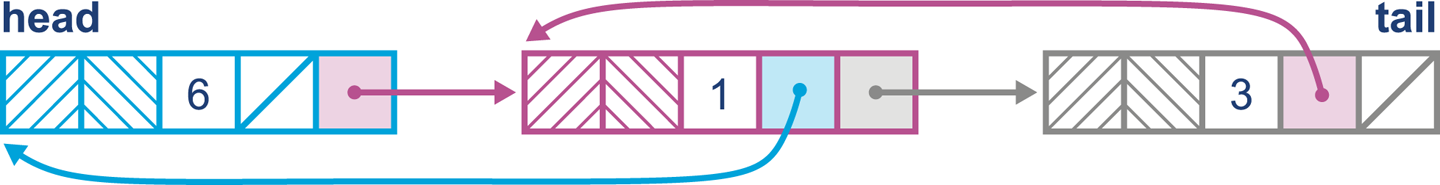

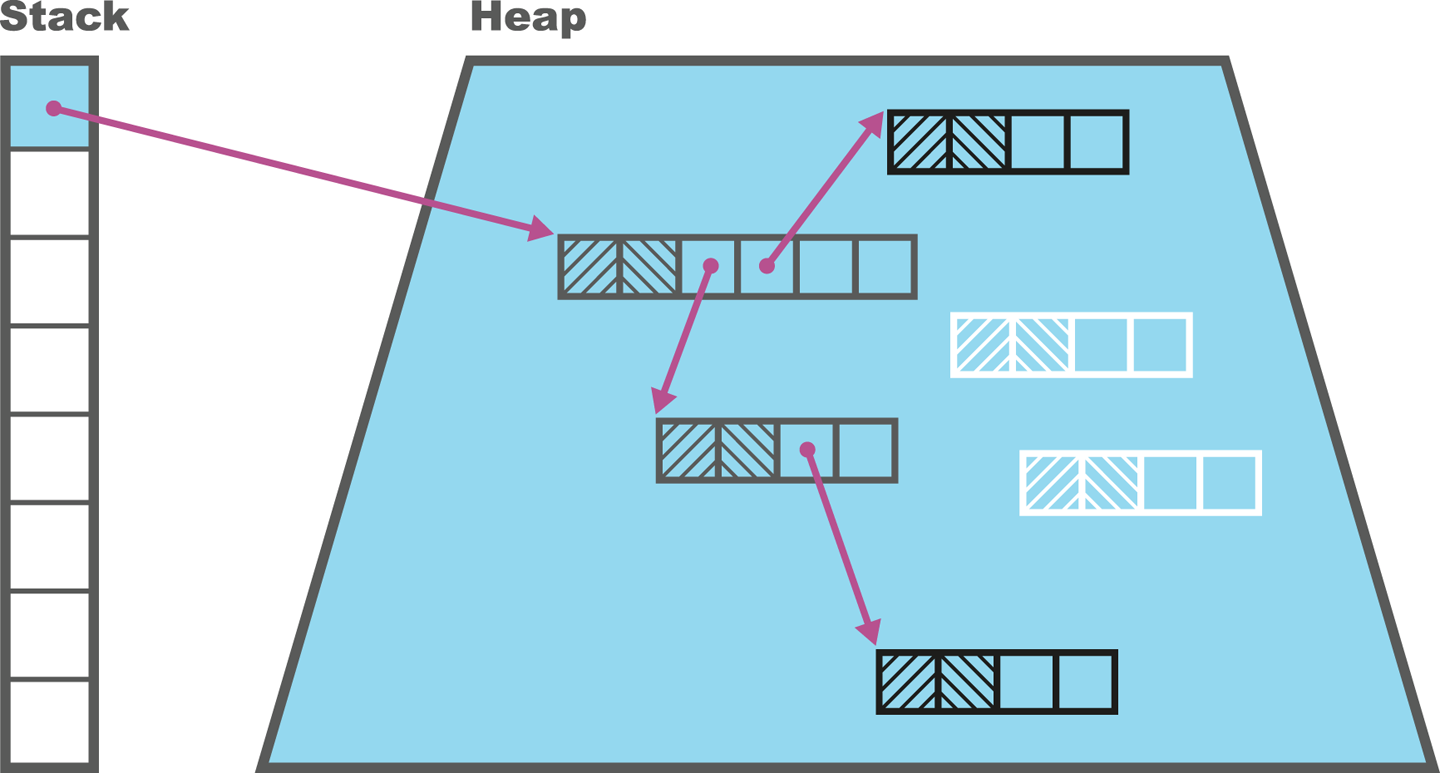

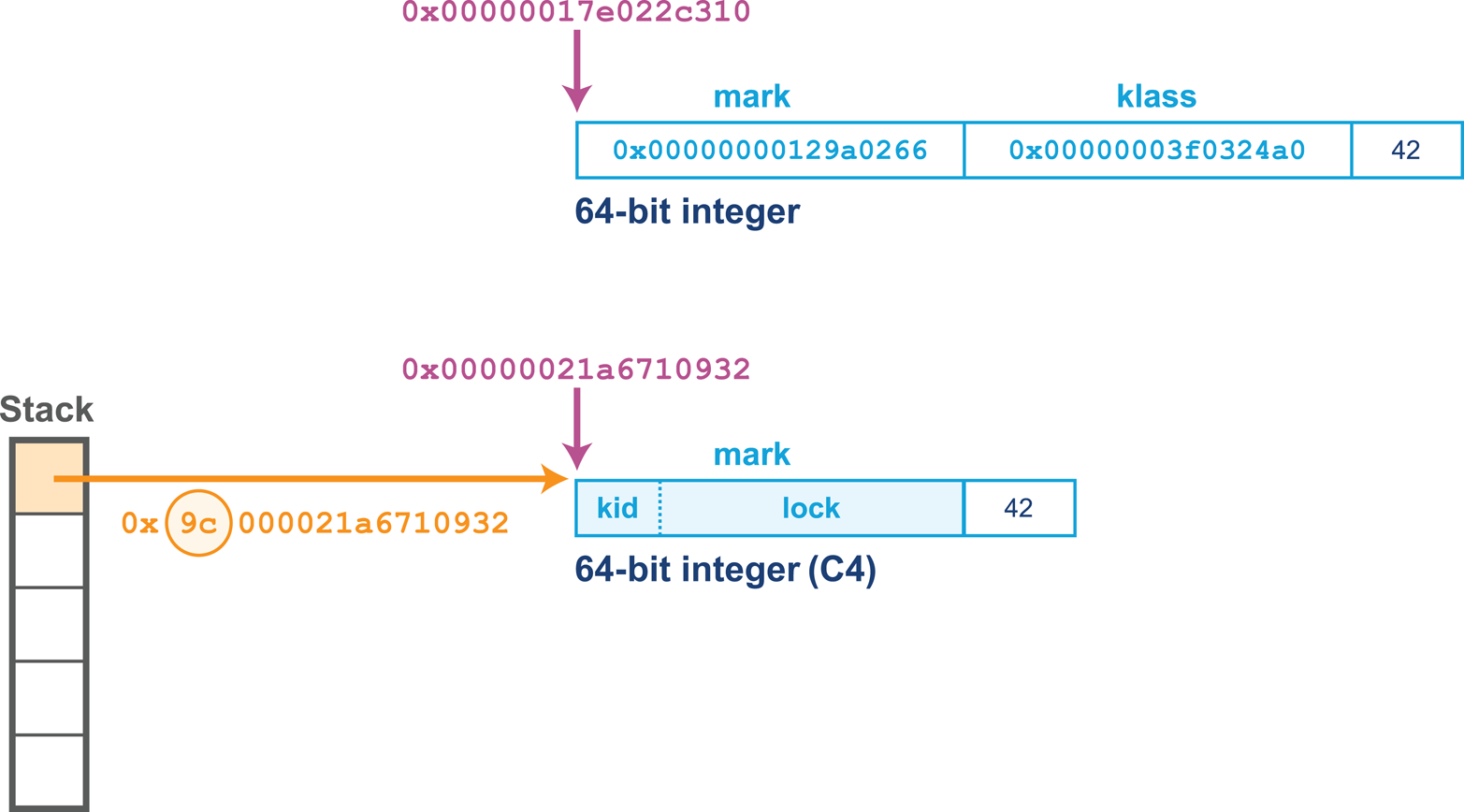

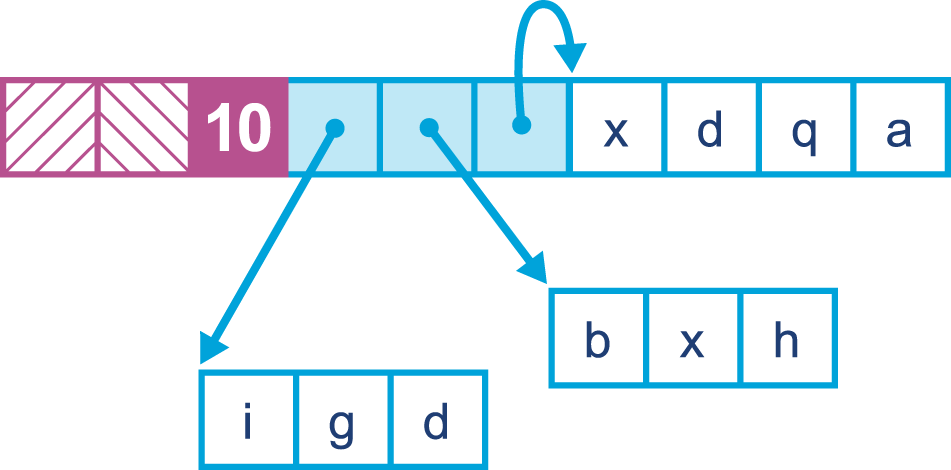

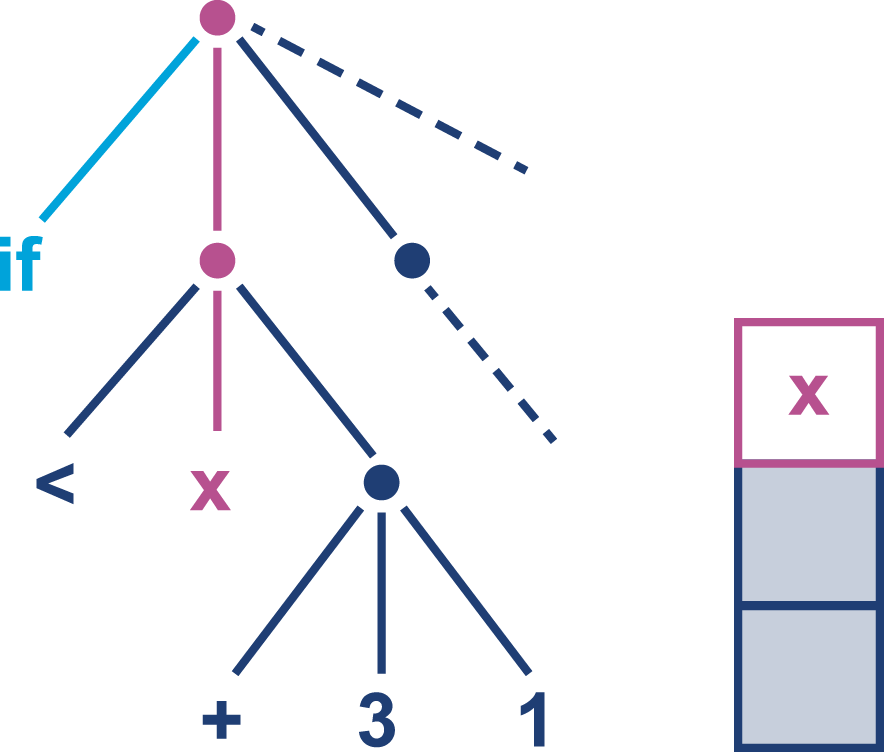

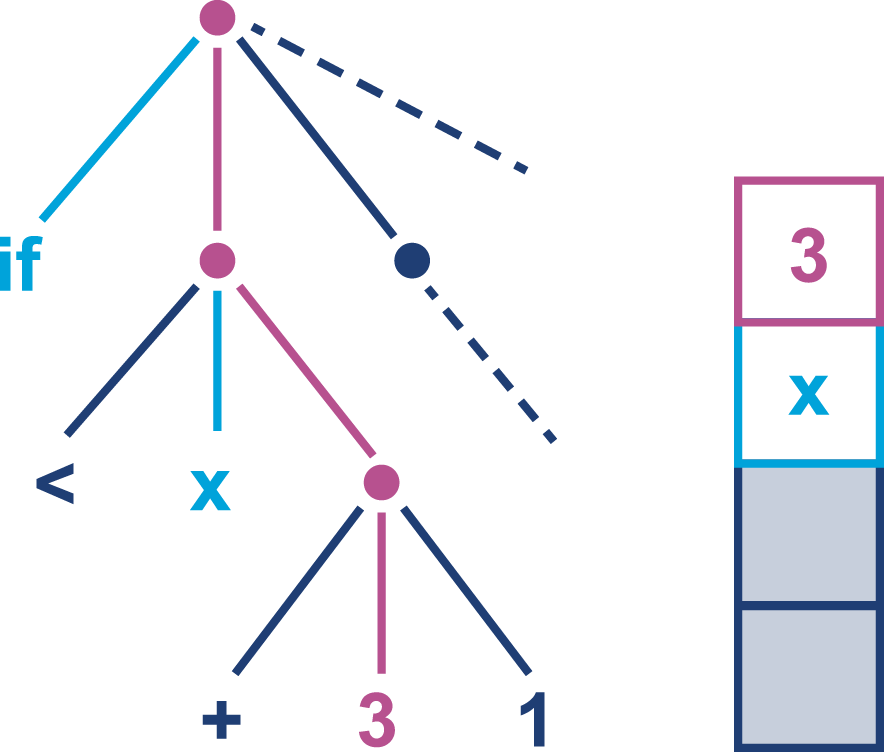

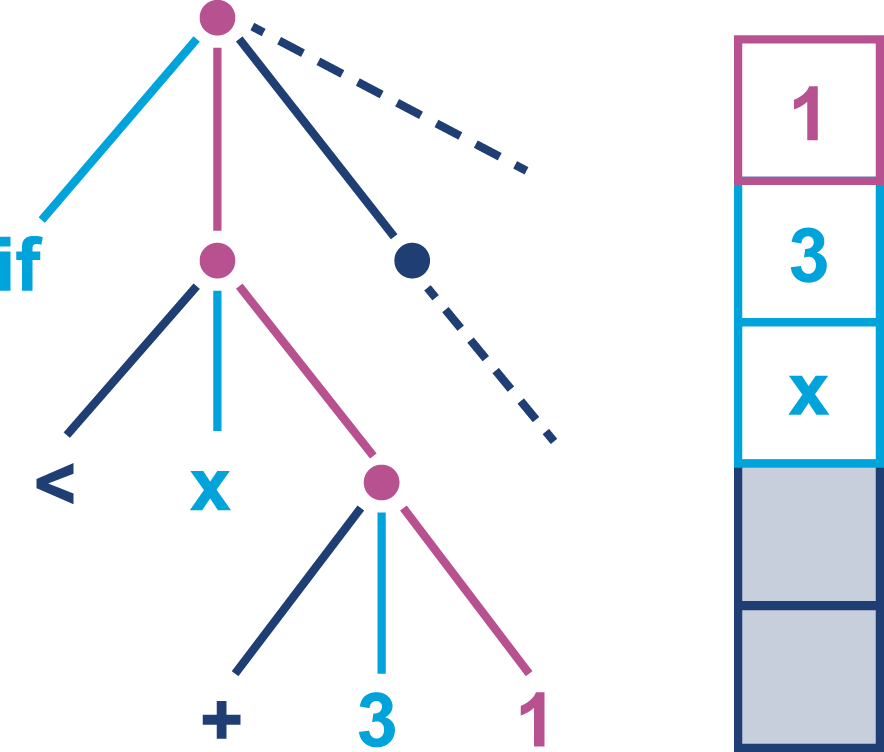

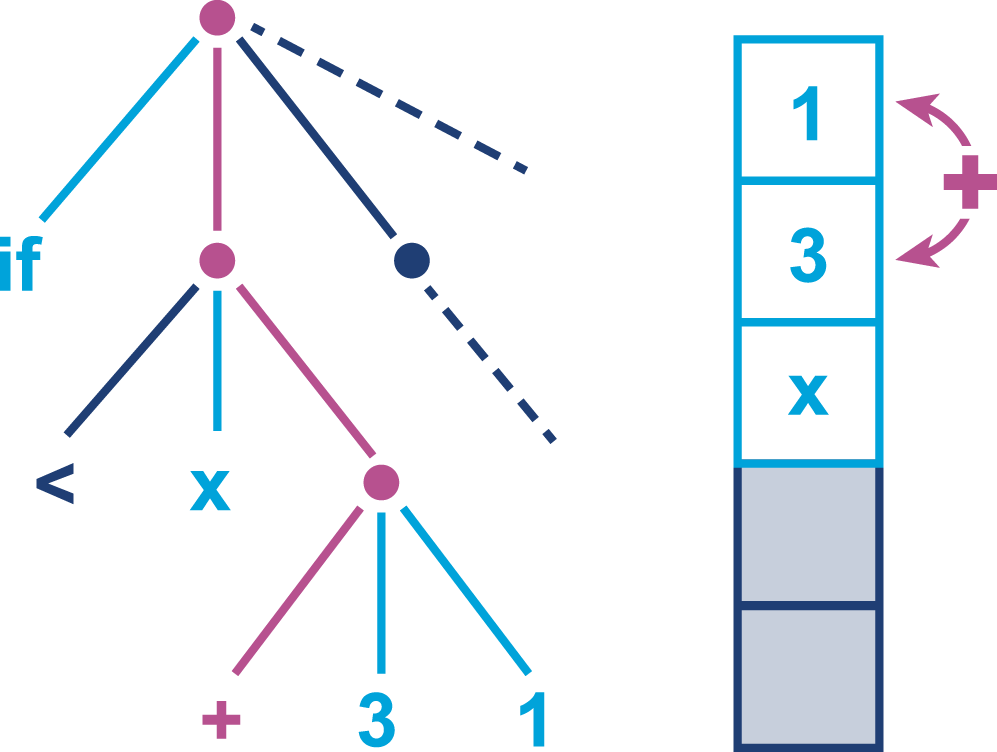

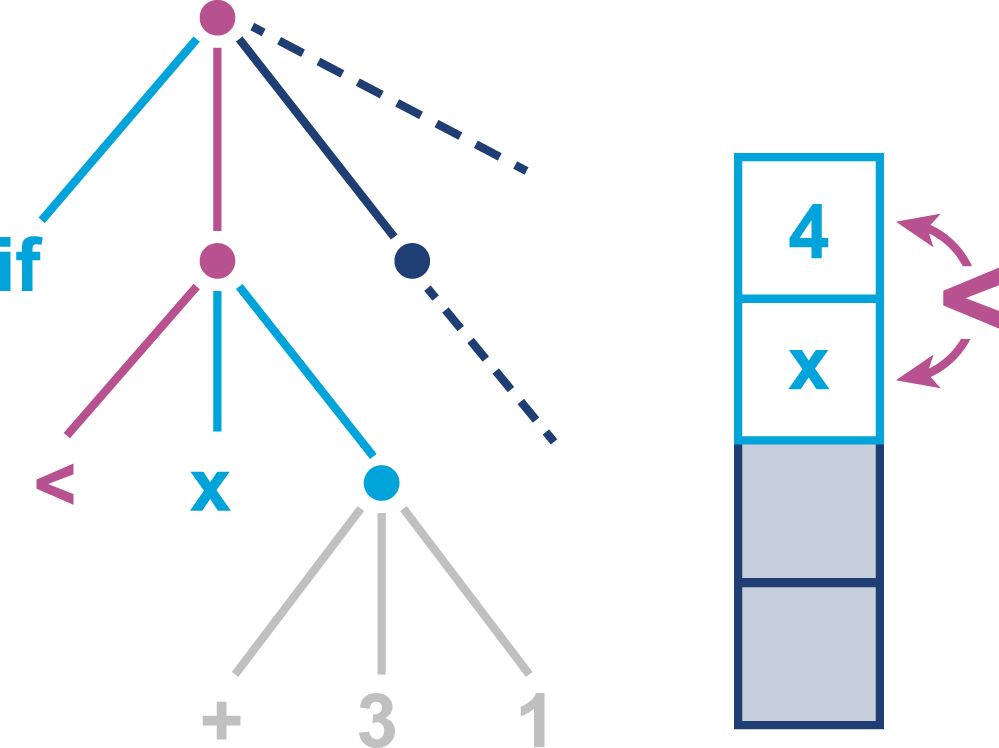

According to the specification that defines the Java Virtual Machine (usually called the VM Spec), the JVM is a stack-based interpreted machine. This means that rather than having registers (like a physical hardware CPU), it uses an execution stack of partial results and performs calculations by operating on the top value (or values) of that stack.

The basic behavior of the JVM interpreter can be thought of as essentially “a switch inside a while loop”—processing each opcode of the program independently of the last, using the evaluation stack to hold intermediate values.

As we will see when we delve into the internals of the Oracle/OpenJDK VM (HotSpot), the situation for real production-grade Java interpreters can be more complex, but switch-inside-while is an acceptable mental model for the moment.
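To make the switch-inside-while model concrete, here is a toy sketch of a stack-based interpreter. It is not how HotSpot is implemented, and the four opcodes and their numeric values are invented for this illustration; they do not correspond to real JVM opcodes.

```java
import java.util.ArrayDeque;
import java.util.Deque;

public class ToyInterpreter {

    // Hypothetical opcodes for illustration only (not real JVM opcode values).
    static final int PUSH = 0; // push the int operand that follows the opcode
    static final int ADD  = 1; // pop two values, push their sum
    static final int MUL  = 2; // pop two values, push their product
    static final int HALT = 3; // stop and return the top of the stack

    static int run(int[] code) {
        Deque<Integer> stack = new ArrayDeque<>(); // the evaluation stack
        int pc = 0;                                // index of the next opcode
        while (true) {
            int opcode = code[pc++];
            switch (opcode) {
                case PUSH:
                    stack.push(code[pc++]);
                    break;
                case ADD:
                    stack.push(stack.pop() + stack.pop());
                    break;
                case MUL:
                    stack.push(stack.pop() * stack.pop());
                    break;
                case HALT:
                    return stack.pop();
                default:
                    throw new IllegalStateException("Unknown opcode " + opcode);
            }
        }
    }

    public static void main(String[] args) {
        // Encodes (2 + 3) * 4 for the toy machine.
        int[] program = {PUSH, 2, PUSH, 3, ADD, PUSH, 4, MUL, HALT};
        System.out.println(run(program)); // prints 20
    }
}
```

Each opcode is handled independently of the previous one, and all intermediate values live on the stack rather than in named registers.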

When we launch our application using the java HelloWorld command, the operating system starts the virtual machine process (the java binary). This sets up the Java virtual environment and initializes the stack machine that will actually execute the user code in the HelloWorld class file.

The entry point into the application will be the main() method of HelloWorld.class. In order to hand over control to this class, it must be loaded by the virtual machine before execution can begin.

To achieve this, the Java classloading mechanism is used. When a new Java process is initializing, a chain of classloaders is used. The initial loader is known as the Bootstrap classloader and contains classes in the core Java runtime. In versions of Java up to and including 8, these are loaded from rt.jar. In version 9 and later, the runtime has been modularized and the concepts of classloading are somewhat different.

The main point of the Bootstrap classloader is to get a minimal set of classes (which includes essentials such as java.lang.Object, Class, and ClassLoader) loaded to allow other classloaders to bring up the rest of the system.

Java models classloaders as objects within its own runtime and type system, so there needs to be some way to bring an initial set of classes into existence. Otherwise, there would be a circularity problem in defining what a classloader is.

The Extension classloader is created next; it defines its parent to be the Bootstrap classloader and will delegate to its parent if needed. Extensions are not widely used, but can supply overrides and native code for specific operating systems and platforms. Notably, the Nashorn JavaScript runtime introduced in Java 8 is loaded by the Extension loader.

Finally, the Application classloader is created; it is responsible for loading in user classes from the defined classpath. Some texts unfortunately refer to this as the “System” classloader. This term should be avoided, for the simple reason that it doesn’t load the system classes (the Bootstrap classloader does). The Application classloader is encountered extremely frequently, and it has the Extension loader as its parent.

Java loads in dependencies on new classes when they are first encountered during the execution of the program. When a classloader is asked for a class, the usual behavior is to delegate the lookup to its parent first and to attempt the load itself only if the parent cannot find the class. If no classloader in the chain, from the Bootstrap classloader down to the one that received the request, can find the class, a ClassNotFoundException will be thrown. It is important that developers use a build process that effectively compiles with the exact same classpath that will be used in production, as this helps to mitigate this potential issue.

Under normal circumstances Java only loads a class once and a Class object is created to represent the class in the runtime environment. However, it is important to realize that the same class can potentially be loaded twice by different classloaders. As a result, a class in the system is identified by the classloader used to load it as well as the fully qualified class name (which includes the package name).
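The chain of classloaders can be inspected from ordinary Java code. The sketch below walks the parent links using the standard ClassLoader.getParent() method. The class name LoaderChain and the label "bootstrap" are assumptions made for this illustration; the API itself represents the Bootstrap classloader as null. The concrete loader class names printed will differ between Java 8 and later versions.

```java
import java.util.ArrayList;
import java.util.List;

public class LoaderChain {

    // Returns the class names of the loaders from the given loader up to the
    // top of the chain, ending with a label for the Bootstrap classloader.
    static List<String> chain(ClassLoader loader) {
        List<String> names = new ArrayList<>();
        for (ClassLoader cl = loader; cl != null; cl = cl.getParent()) {
            names.add(cl.getClass().getName());
        }
        names.add("bootstrap"); // the API reports the Bootstrap loader as null
        return names;
    }

    public static void main(String[] args) {
        // Loader chain for a user class, typically starting at the Application classloader.
        System.out.println(chain(LoaderChain.class.getClassLoader()));
        // Core classes such as String are loaded by the Bootstrap classloader.
        System.out.println(String.class.getClassLoader() == null);
    }
}
```

Note that the standard API method for obtaining the Application classloader is named ClassLoader.getSystemClassLoader(), which is one source of the "System" terminology mentioned earlier.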

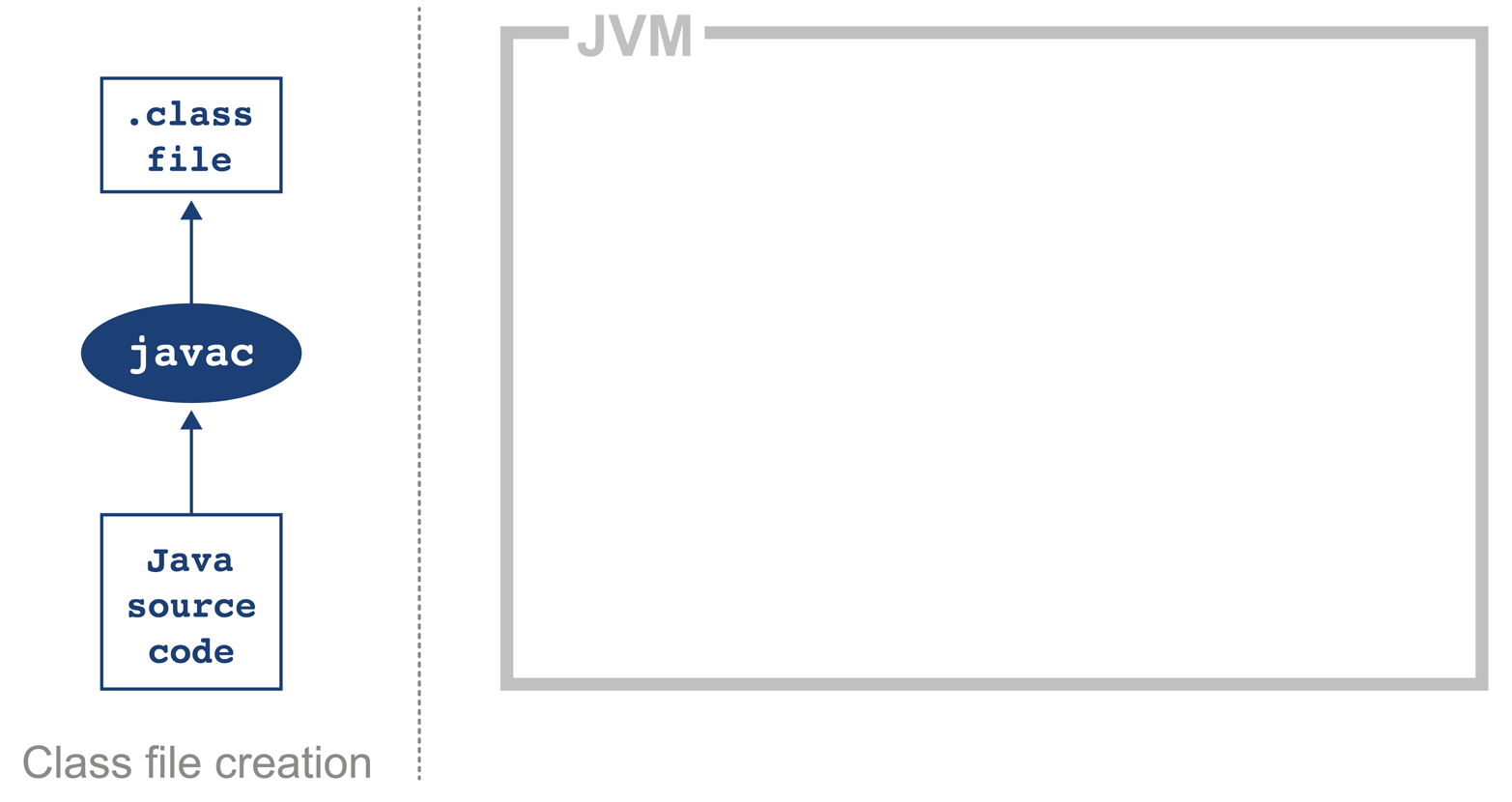

It is important to appreciate that Java source code goes through a significant number of transformations before execution. The first is the compilation step using the Java compiler javac, often invoked as part of a larger build process.

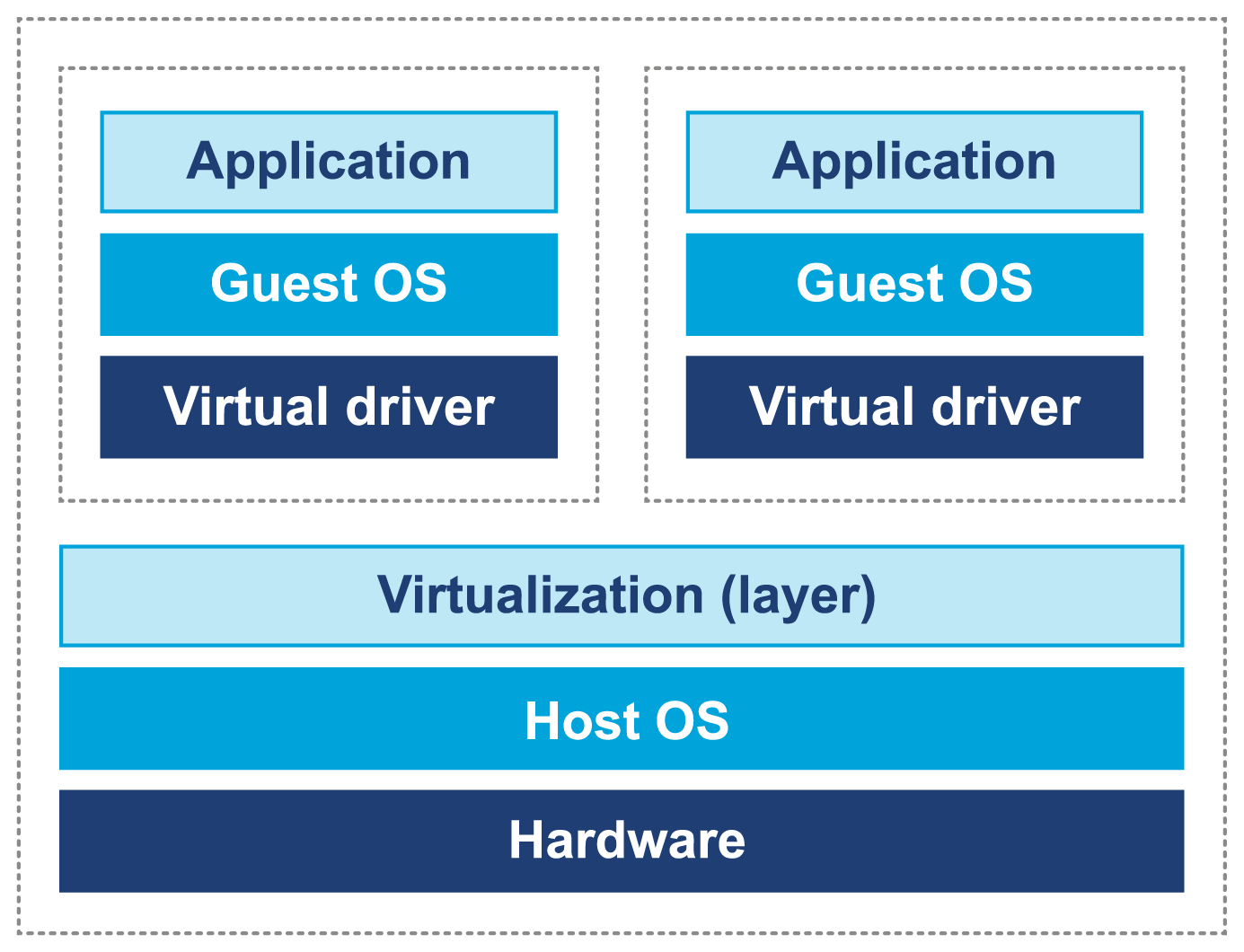

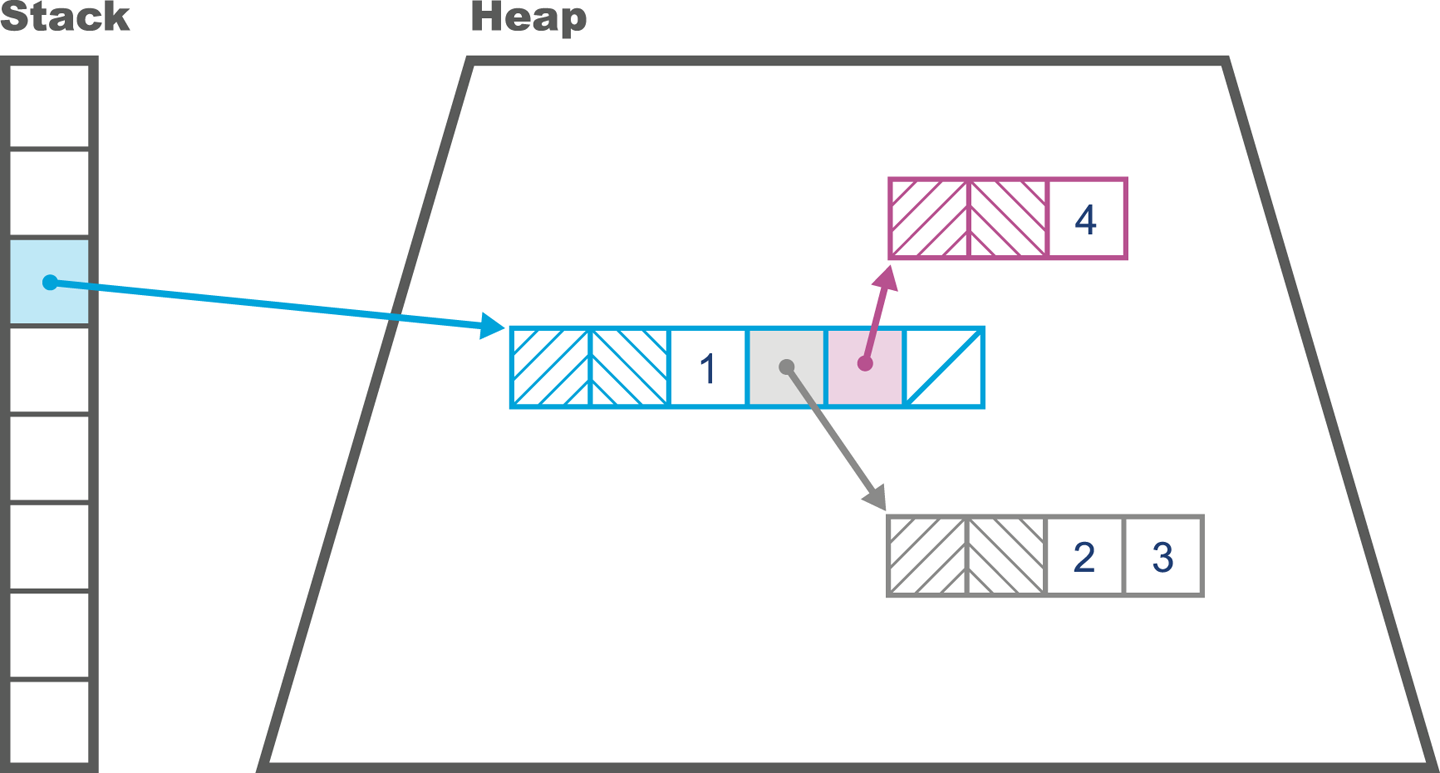

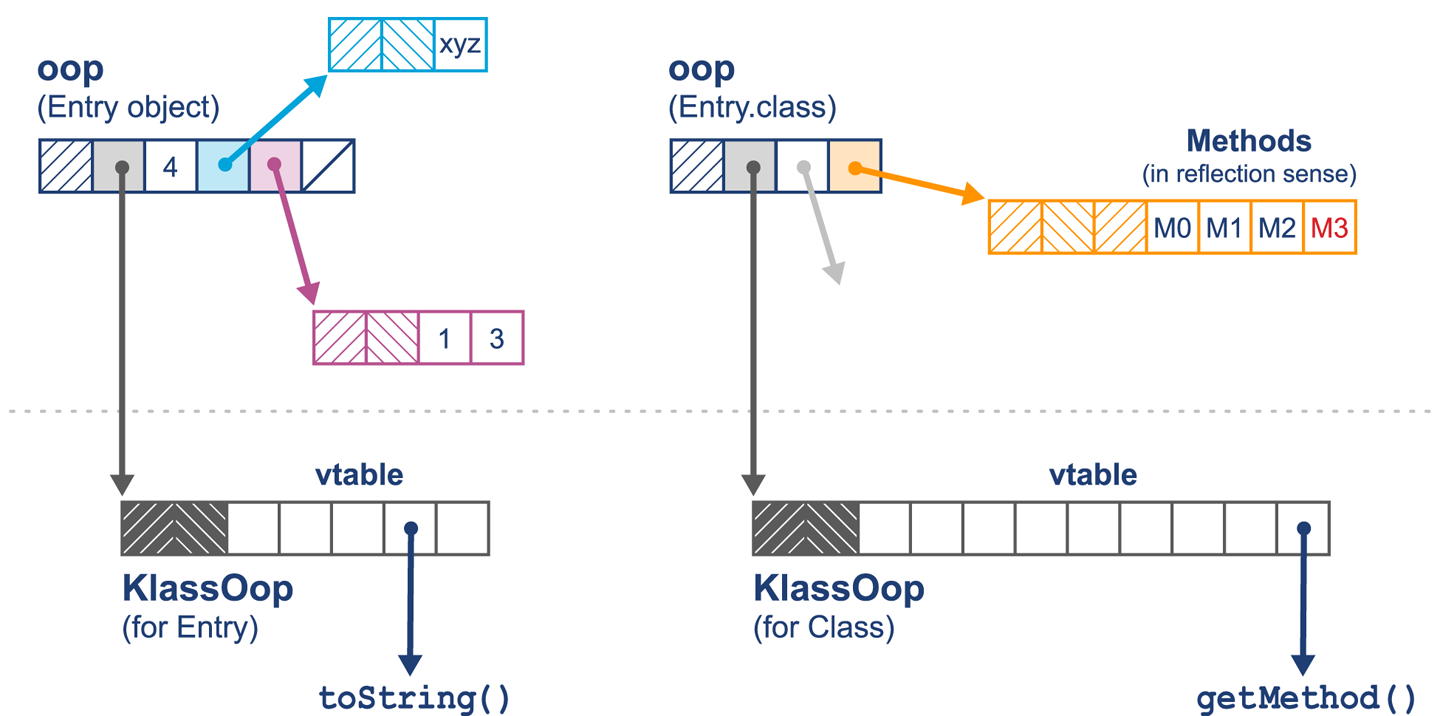

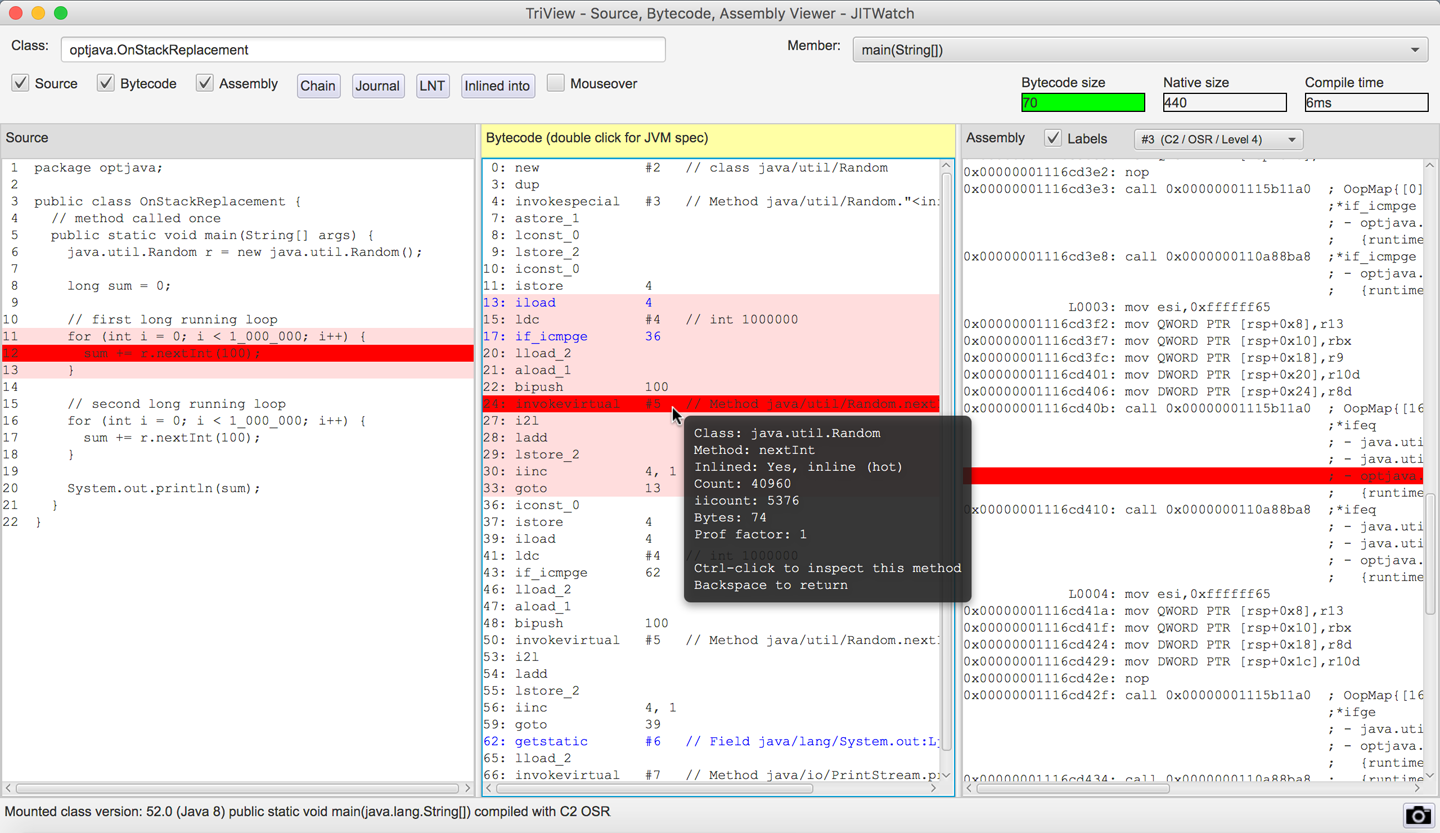

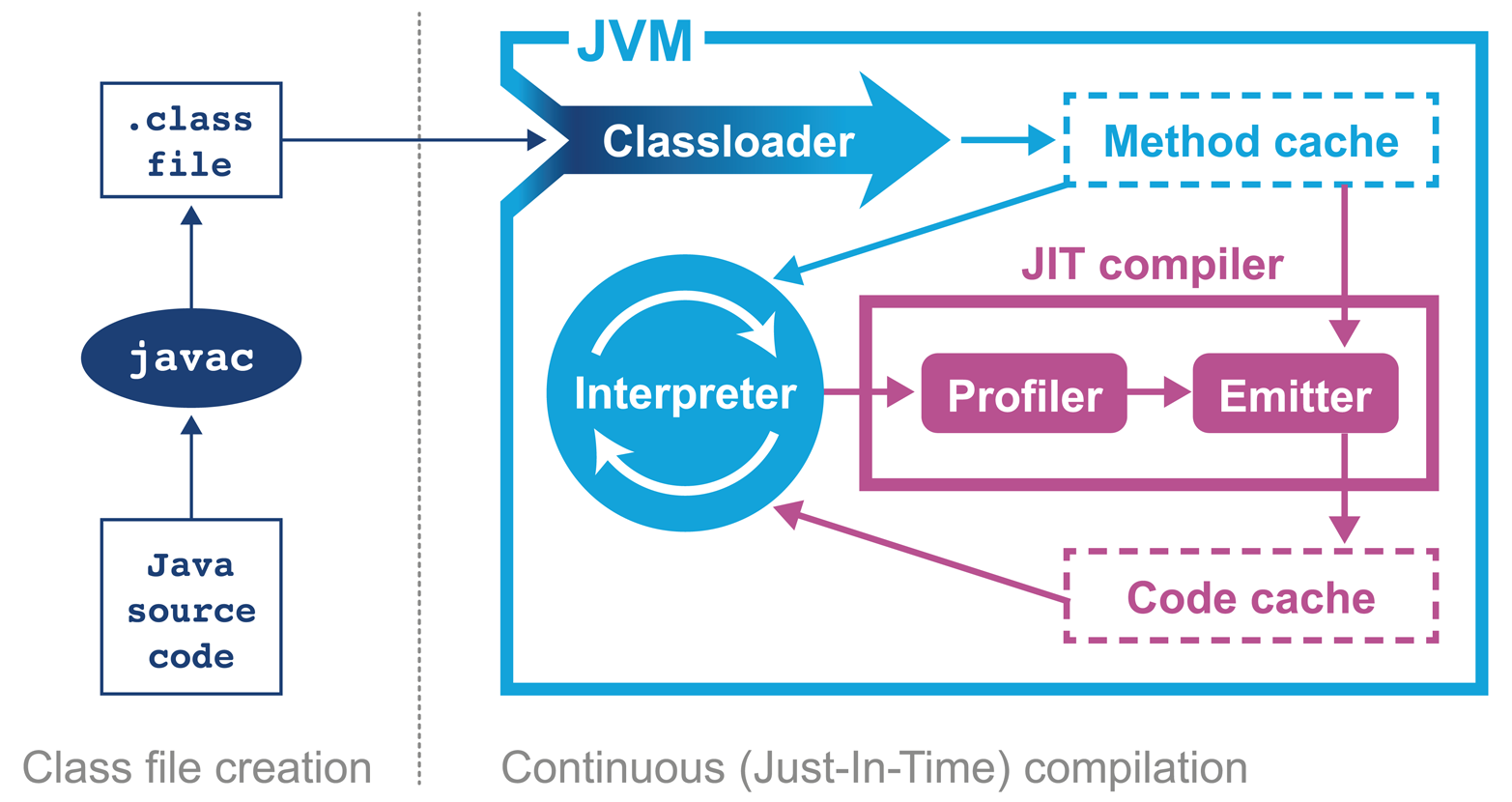

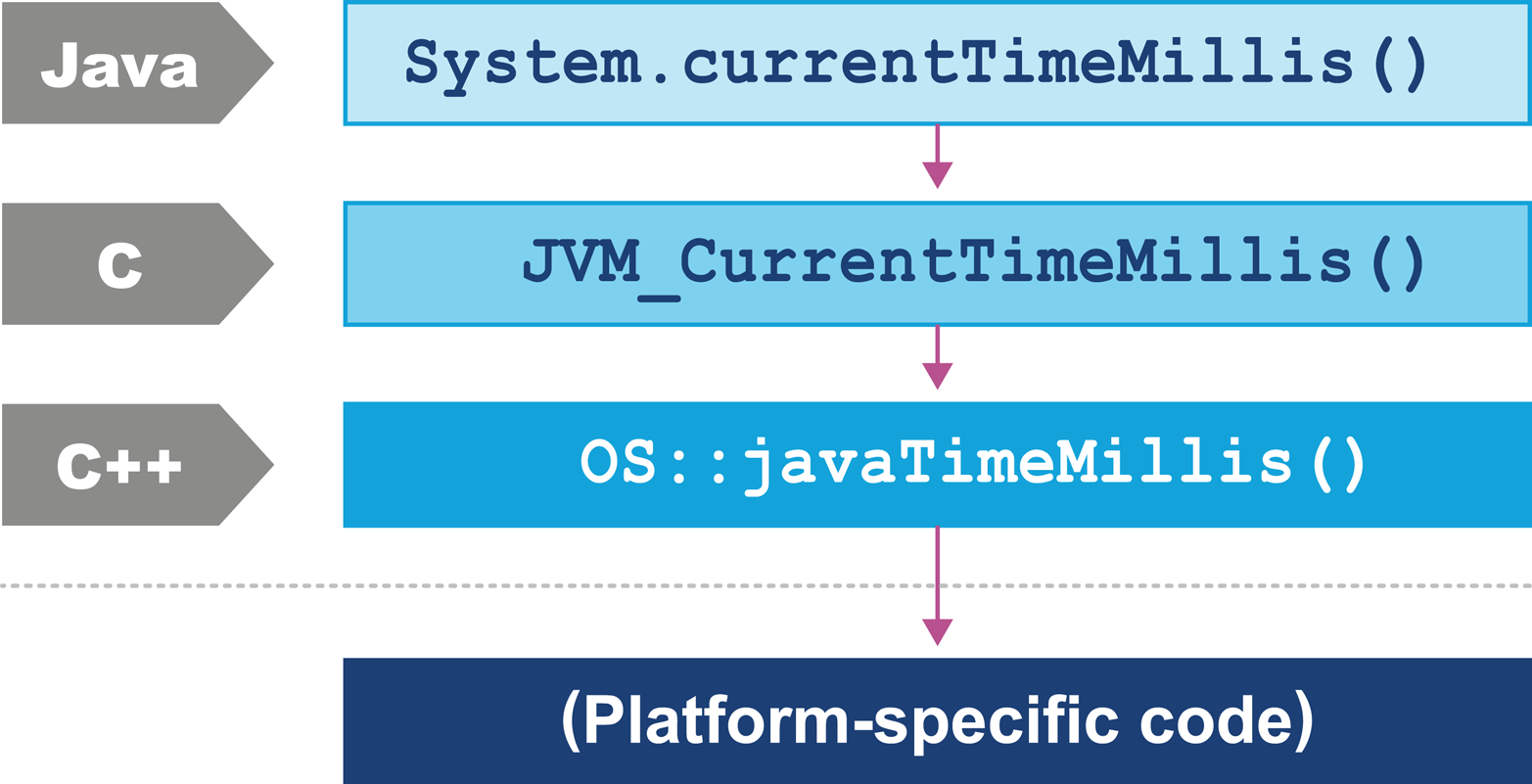

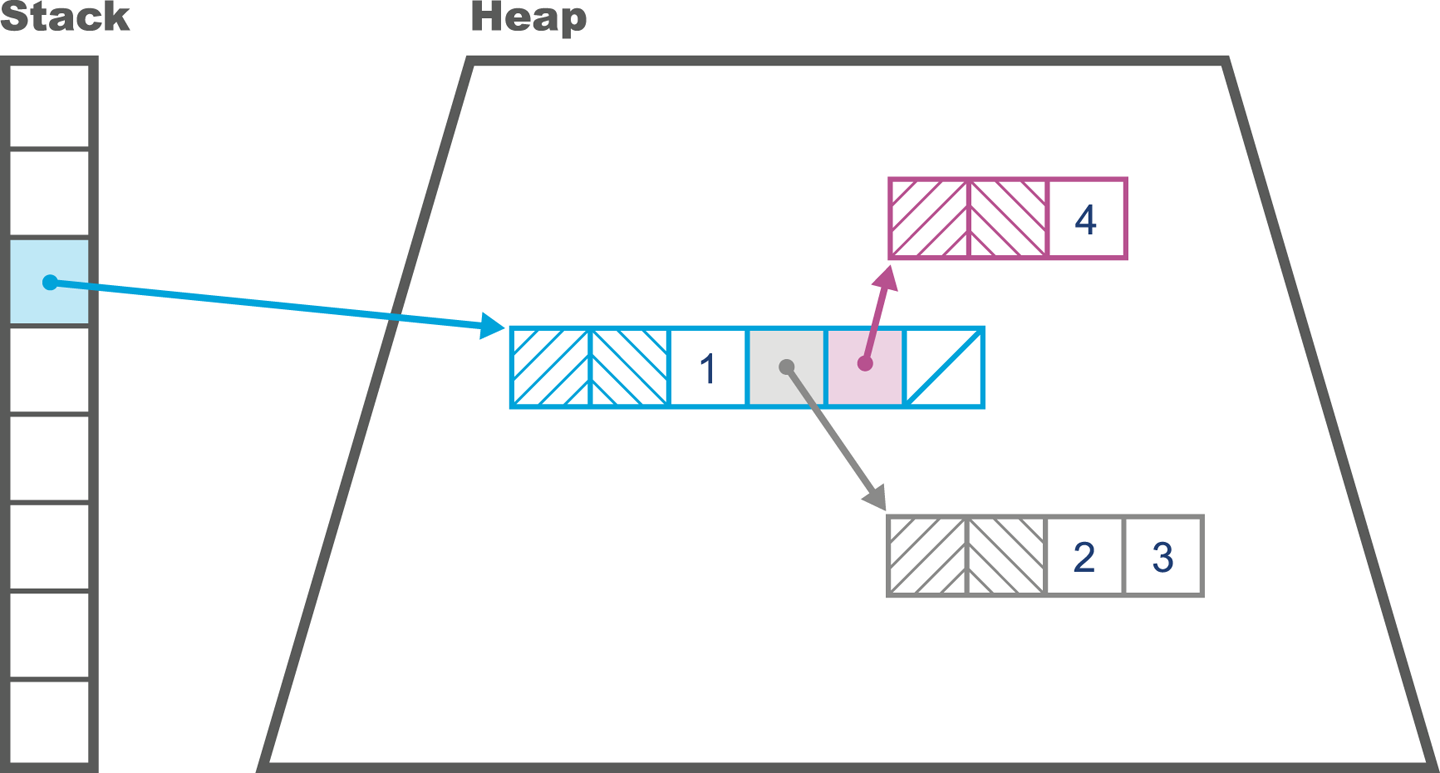

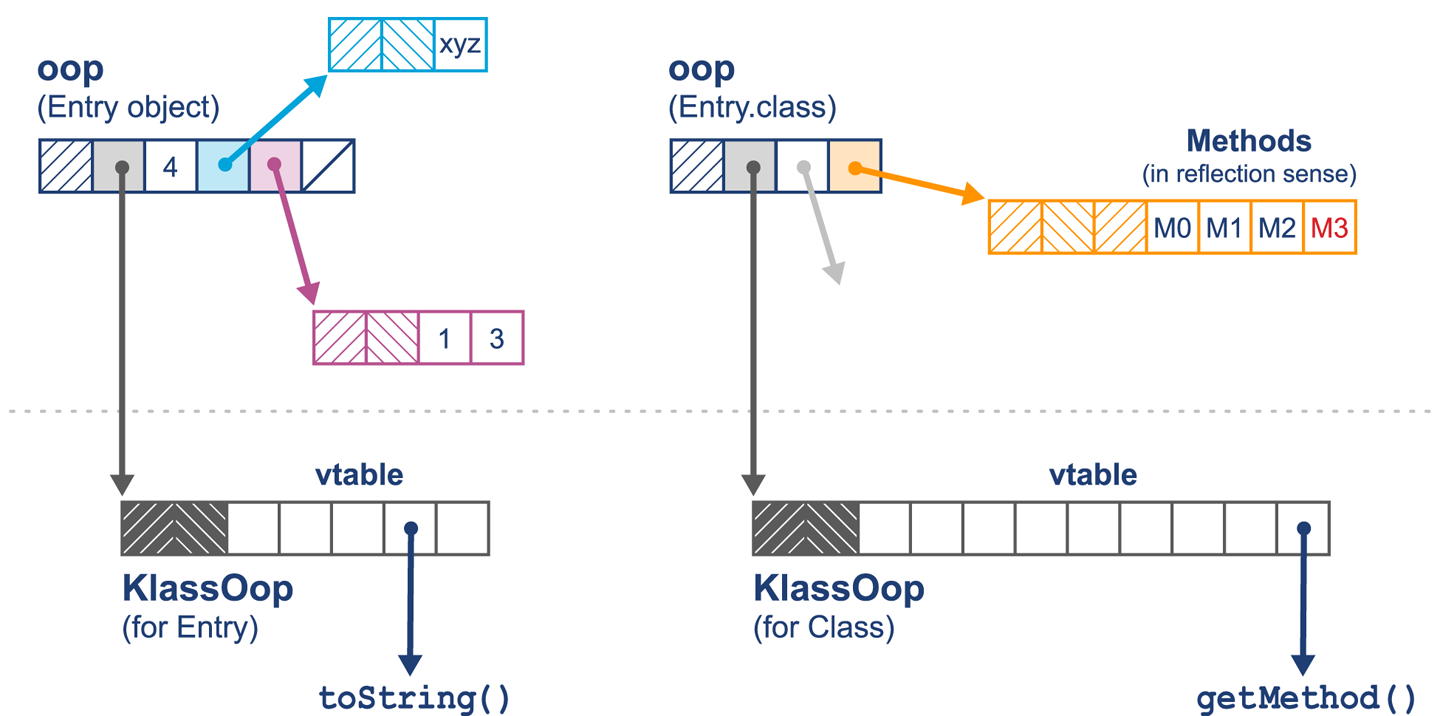

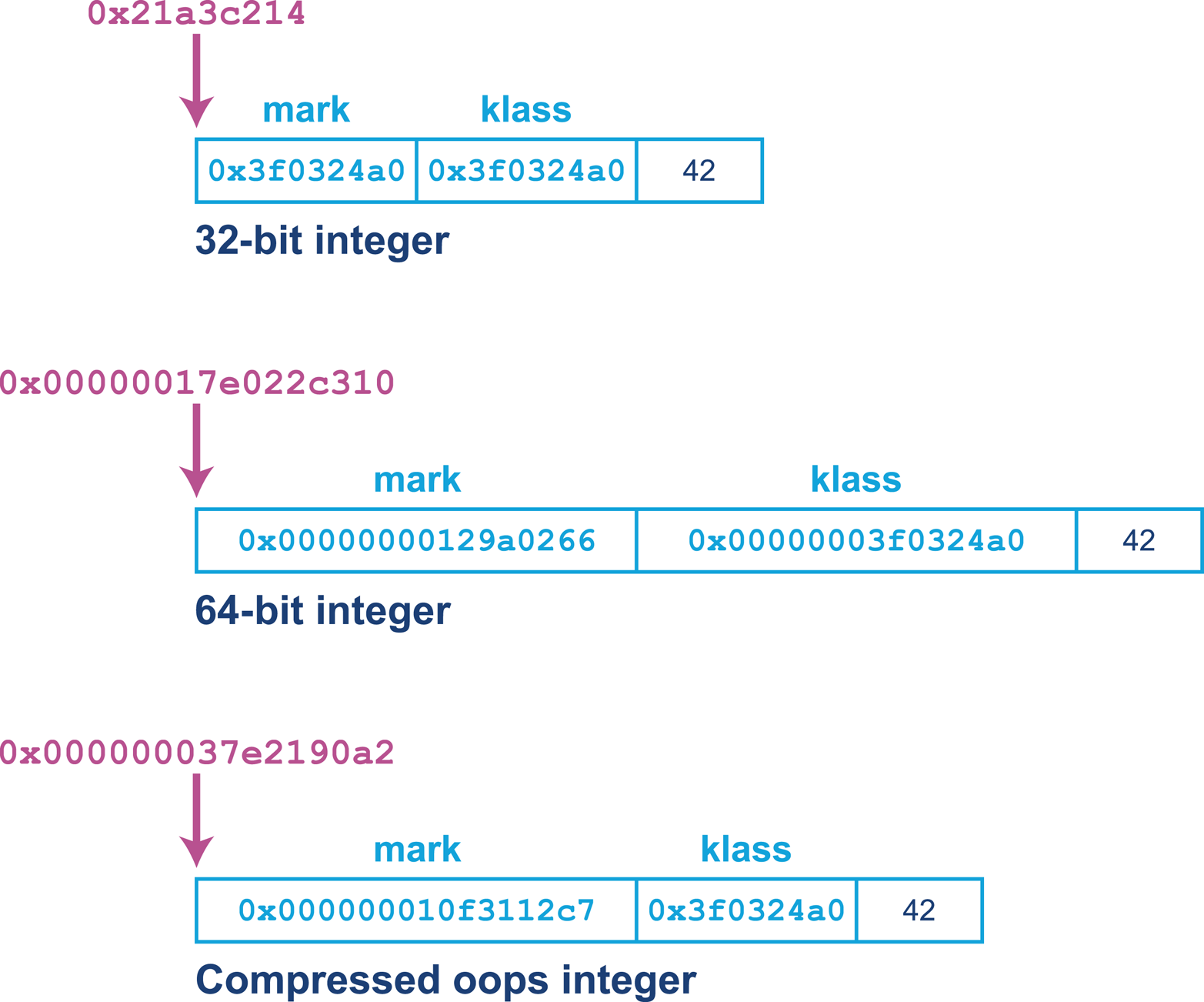

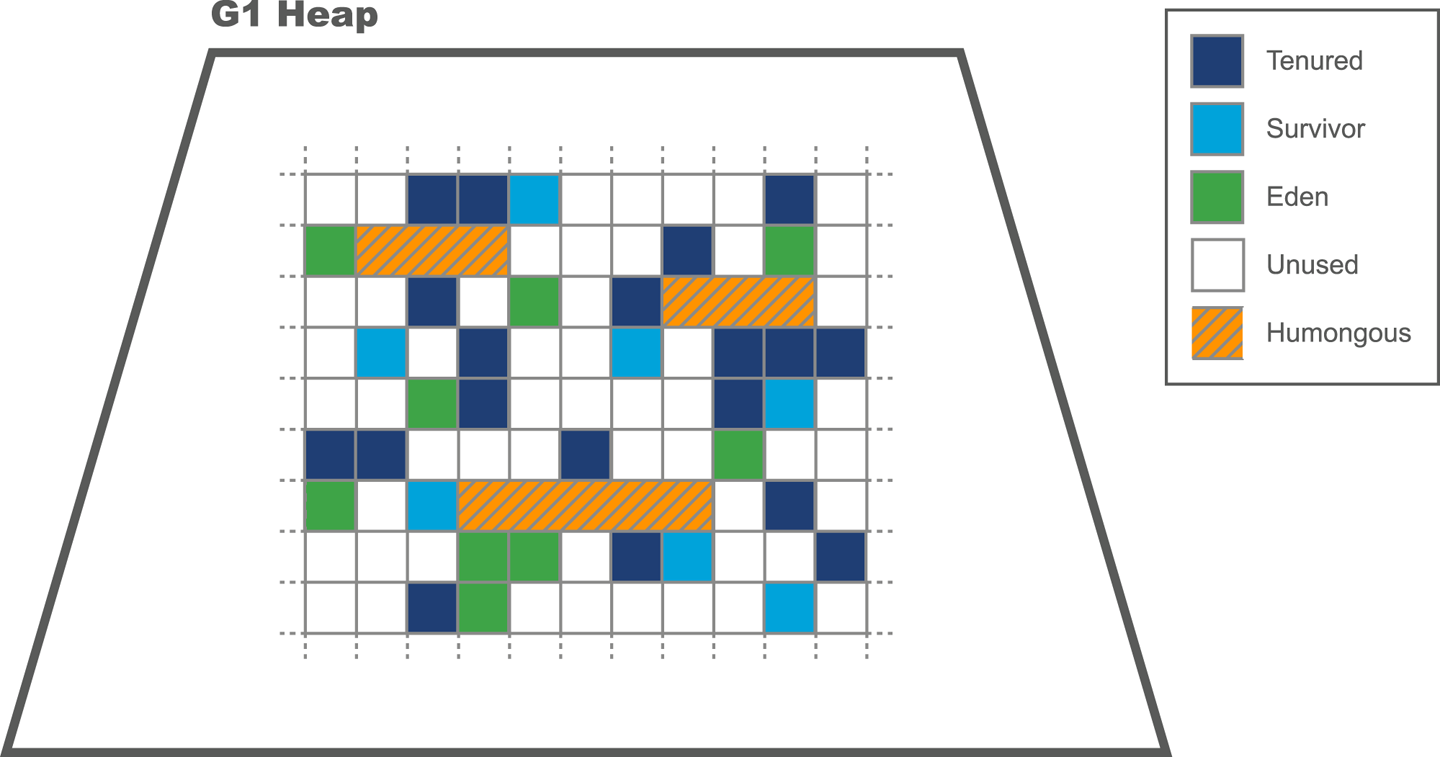

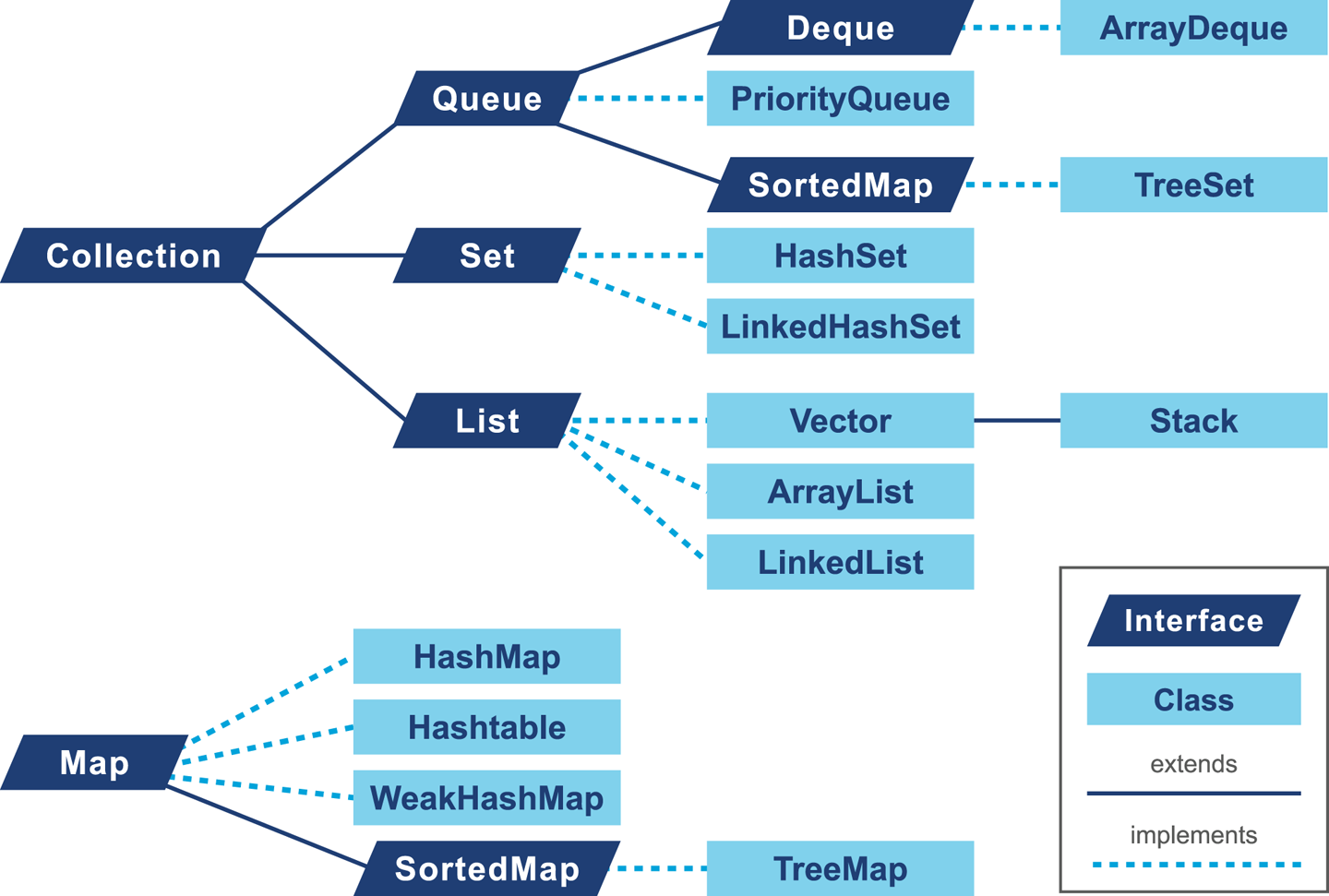

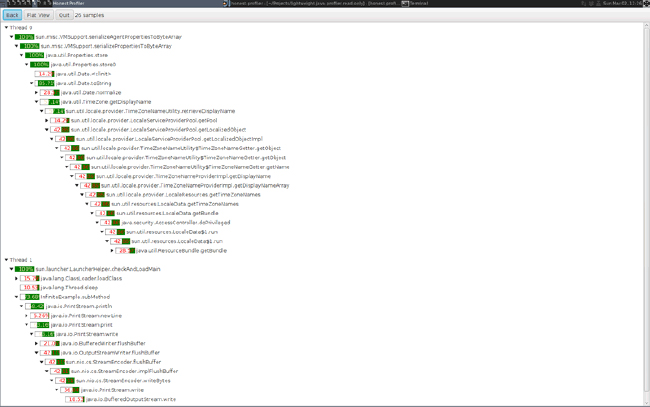

The job of javac is to convert Java code into .class files that contain bytecode. It achieves this by doing a fairly straightforward translation of the Java source code, as shown in Figure 2-1. Very few optimizations are done during compilation by javac, and the resulting bytecode is still quite readable and recognizable as Java code when viewed in a disassembly tool, such as the standard javap.

Bytecode is an intermediate representation that is not tied to a specific machine architecture. Decoupling from the machine architecture provides portability, meaning that already developed (or compiled) software can run on any platform supported by the JVM. It also provides an abstraction from the Java language. This provides our first important insight into the way the JVM executes code.

The Java language and the Java Virtual Machine are now to a degree independent, and so the J in JVM is potentially a little misleading, as the JVM can execute any JVM language that can produce a valid class file. In fact, Figure 2-1 could just as easily show the Scala compiler scalac generating bytecode for execution on the JVM.

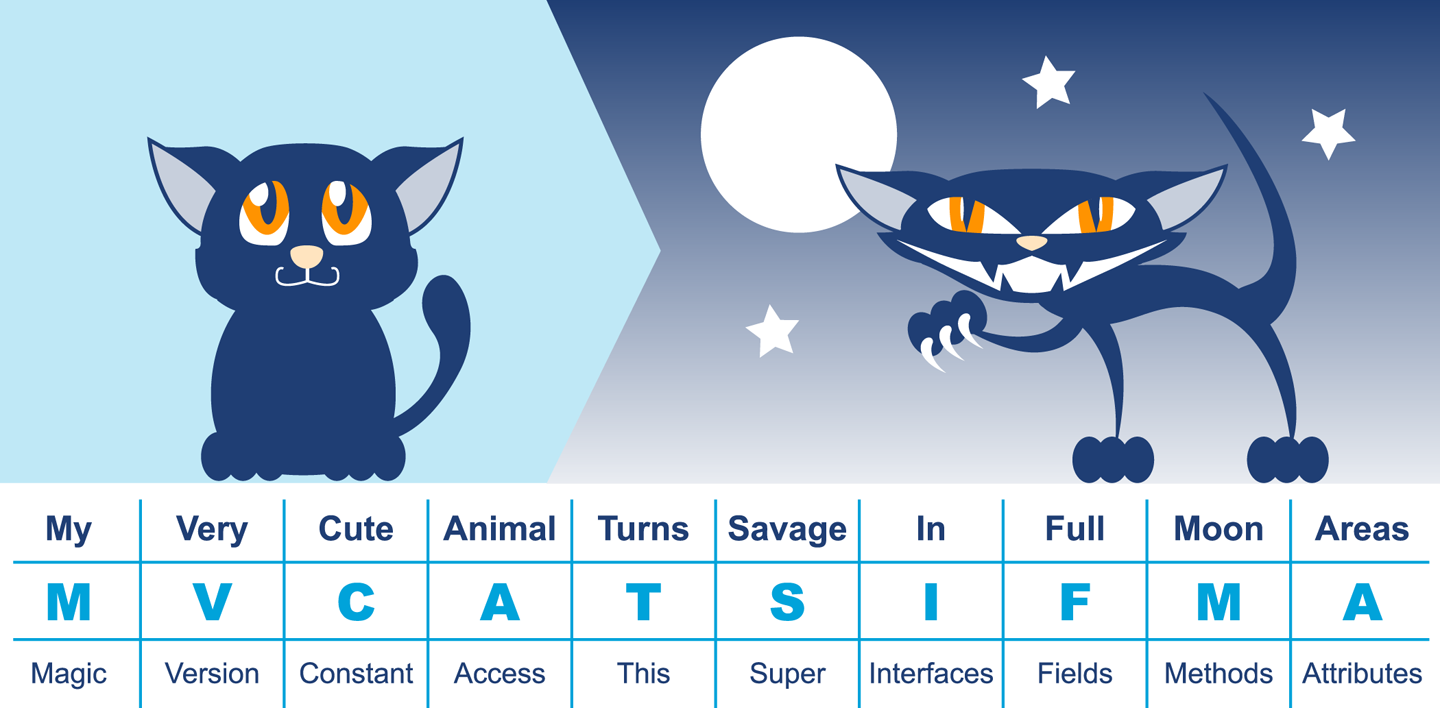

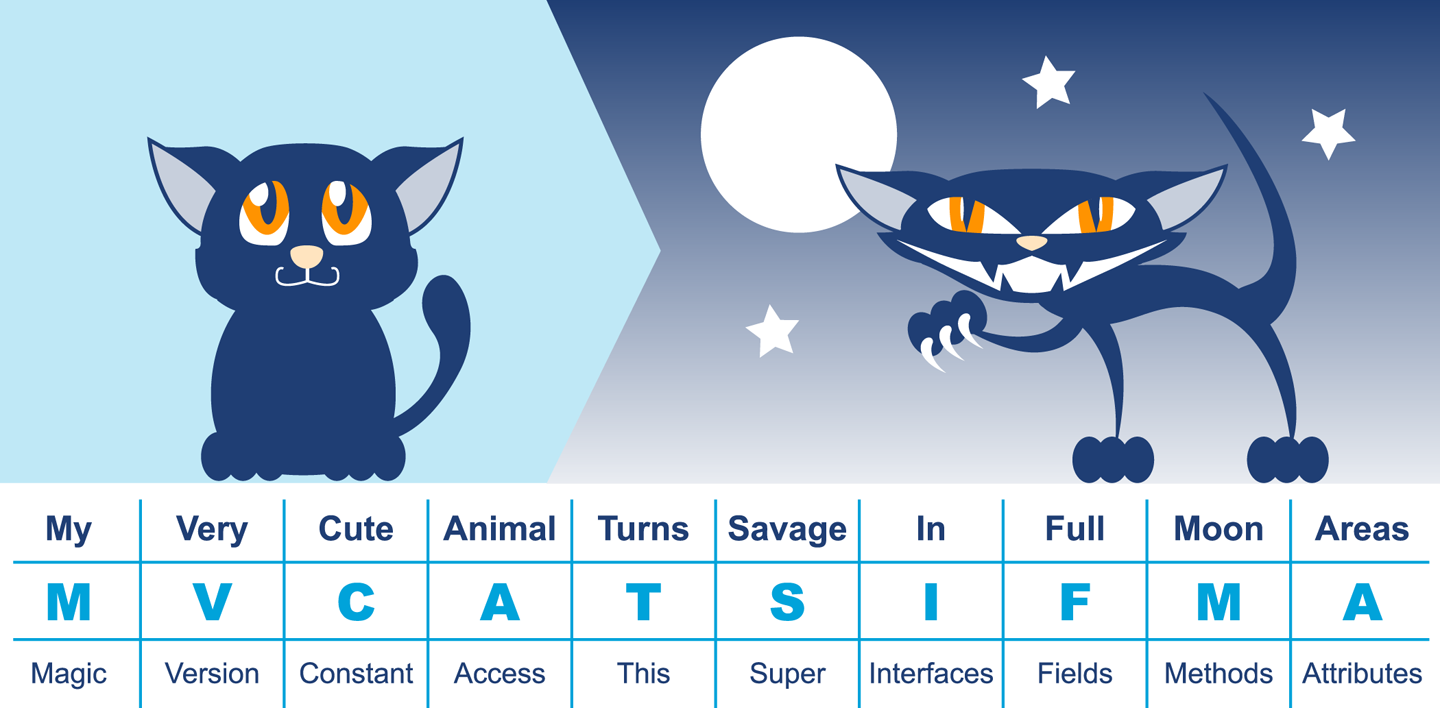

Regardless of the source code compiler used, the resulting class file has a very well-defined structure specified by the VM specification (Table 2-1). Any class that is loaded by the JVM will be verified to conform to the expected format before being allowed to run.

| Component | Description |
|---|---|
| Magic number | 0xCAFEBABE |
| Version of class file format | The minor and major versions of the class file |
| Constant pool | The pool of constants for the class |
| Access flags | Whether the class is abstract, static, and so on |
| This class | The name of the current class |
| Superclass | The name of the superclass |
| Interfaces | Any interfaces in the class |
| Fields | Any fields in the class |
| Methods | Any methods in the class |
| Attributes | Any attributes of the class (e.g., name of the source file, etc.) |

Every class file starts with the magic number 0xCAFEBABE, the first 4 bytes in hexadecimal serving to denote conformance to the class file format. The following 4 bytes represent the minor and major versions used to compile the class file. The classloader checks these to ensure that the target JVM is not of a lower version than the one used to compile the class file; if it is, an UnsupportedClassVersionError will be thrown at runtime.
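The first 8 bytes of a class file are simple enough to decode by hand. The following is a minimal sketch that reads the magic number and the two version fields from a byte array. The class and method names are hypothetical, and the sample header in main() is hand-built for illustration (major version 52 is the class file version produced by Java 8 compilers) rather than read from a real file.

```java
import java.nio.ByteBuffer;

public class ClassFileHeader {

    // Returns {magic, minorVersion, majorVersion} from the start of a class file.
    static int[] readHeader(byte[] classBytes) {
        ByteBuffer buf = ByteBuffer.wrap(classBytes); // big-endian by default
        int magic = buf.getInt();                     // 4 bytes
        int minor = buf.getShort() & 0xFFFF;          // 2 bytes, unsigned
        int major = buf.getShort() & 0xFFFF;          // 2 bytes, unsigned
        return new int[] {magic, minor, major};
    }

    public static void main(String[] args) {
        // Hand-built header: magic number, minor version 0, major version 52.
        byte[] header = {(byte) 0xCA, (byte) 0xFE, (byte) 0xBA, (byte) 0xBE, 0, 0, 0, 52};
        int[] h = readHeader(header);
        System.out.printf("magic=0x%08X minor=%d major=%d%n", h[0], h[1], h[2]);
    }
}
```

In practice the same method could be given the contents of any .class file, for example the bytes of HelloWorld.class read from disk.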

Magic numbers provide a way for Unix environments to identify the type of a file (whereas Windows will typically use the file extension). For this reason, they are difficult to change once decided upon. Unfortunately, this means that Java is stuck using the rather embarrassing and sexist 0xCAFEBABE for the foreseeable future, although Java 9 introduces the magic number 0xCAFEDADA for module files.

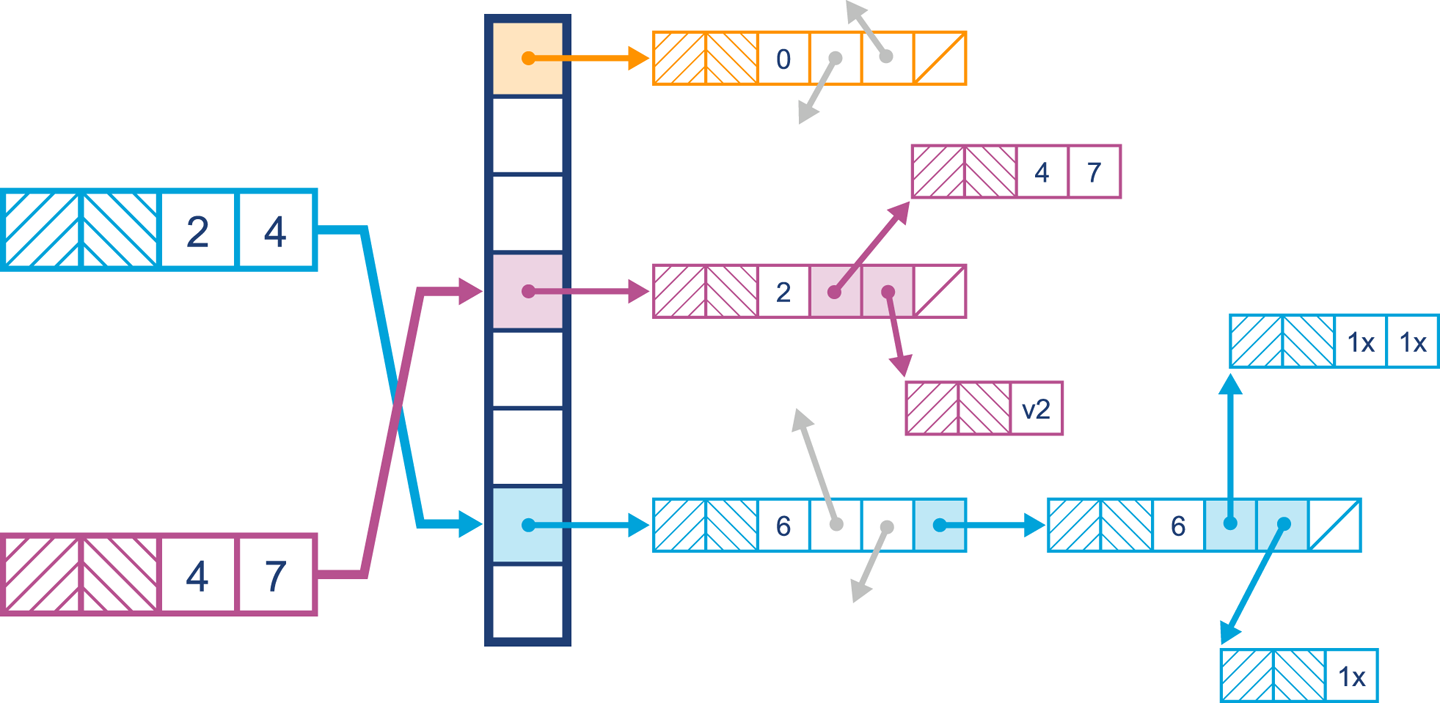

The constant pool holds constant values in code: for example, names of classes, interfaces, and fields. When the JVM executes code, the constant pool table is used to refer to values rather than having to rely on the layout of memory at runtime.

Access flags are used to determine the modifiers applied to the class. The first part of the flag identifies general properties, such as whether a class is public, followed by whether it is final and cannot be subclassed. The flag also determines whether the class file represents an interface or an abstract class. The final part of the flag indicates whether the class file represents a synthetic class that is not present in source code, an annotation type, or an enum.
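As a rough illustration of how the class-level access flags are packed into a bit mask, the sketch below decodes a flags value into names. The constant values are those given for class access flags in the VM Spec; the class name, method name, and the choice to return lowercase labels are assumptions made for this example.

```java
import java.util.ArrayList;
import java.util.List;

public class AccessFlags {

    // Class-level access flag bits as defined by the VM Spec.
    static final int ACC_PUBLIC     = 0x0001;
    static final int ACC_FINAL      = 0x0010;
    static final int ACC_SUPER      = 0x0020;
    static final int ACC_INTERFACE  = 0x0200;
    static final int ACC_ABSTRACT   = 0x0400;
    static final int ACC_SYNTHETIC  = 0x1000;
    static final int ACC_ANNOTATION = 0x2000;
    static final int ACC_ENUM       = 0x4000;

    // Returns a label for every flag bit that is set in the given mask.
    static List<String> describe(int flags) {
        List<String> names = new ArrayList<>();
        if ((flags & ACC_PUBLIC) != 0)     names.add("public");
        if ((flags & ACC_FINAL) != 0)      names.add("final");
        if ((flags & ACC_SUPER) != 0)      names.add("super");
        if ((flags & ACC_INTERFACE) != 0)  names.add("interface");
        if ((flags & ACC_ABSTRACT) != 0)   names.add("abstract");
        if ((flags & ACC_SYNTHETIC) != 0)  names.add("synthetic");
        if ((flags & ACC_ANNOTATION) != 0) names.add("annotation");
        if ((flags & ACC_ENUM) != 0)       names.add("enum");
        return names;
    }

    public static void main(String[] args) {
        // 0x0021 is the value javac typically emits for a plain public class.
        System.out.println(describe(0x0021)); // prints [public, super]
    }
}
```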

The this class, superclass, and interface entries are indexes into the constant pool to identify the type hierarchy belonging to the class. Fields and methods define a signature-like structure, including the modifiers that apply to the field or method. A set of attributes is then used to represent structured items for more complicated and non-fixed-size structures. For example, methods make use of the Code attribute to represent the bytecode associated with that particular method.

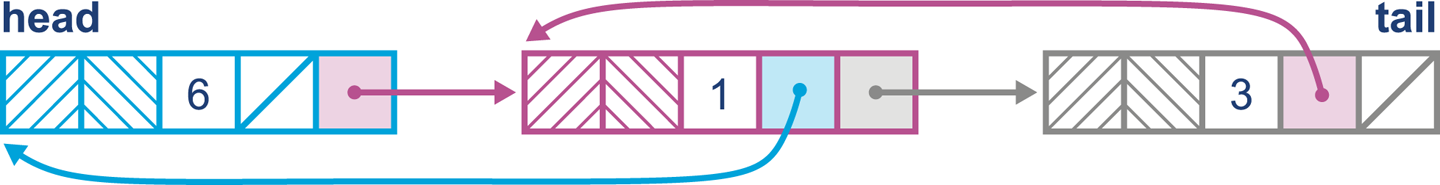

Figure 2-2 provides a mnemonic for remembering the structure.

In this very simple code example, it is possible to observe the effect of running javac:

    public class HelloWorld {
        public static void main(String[] args) {
            for (int i = 0; i < 10; i++) {
                System.out.println("Hello World");
            }
        }
    }

Java ships with a class file disassembler called javap, allowing inspection of .class files. Taking the HelloWorld class file and running javap -c HelloWorld gives the following output:

    public class HelloWorld {
      public HelloWorld();
        Code:
           0: aload_0
           1: invokespecial #1    // Method java/lang/Object."<init>":()V
           4: return

      public static void main(java.lang.String[]);
        Code:
           0: iconst_0
           1: istore_1
           2: iload_1
           3: bipush        10
           5: if_icmpge     22
           8: getstatic     #2    // Field java/lang/System.out ...
          11: ldc           #3    // String Hello World
          13: invokevirtual #4    // Method java/io/PrintStream.println ...
          16: iinc          1, 1
          19: goto          2
          22: return
    }

This layout describes the bytecode for the file HelloWorld.class. For more detail javap also has a -v option that provides the full class file header information and constant pool details. The class file contains two methods, although only the single main() method was supplied in the source file; this is the result of javac automatically adding a default constructor to the class.

The first instruction executed in the constructor is aload_0, which places the this reference onto the first position in the stack. The invokespecial command is then called, which invokes an instance method that has specific handling for calling superconstructors and creating objects. In the default constructor, the invoke matches the default constructor for Object, as an override was not supplied.

Opcodes in the JVM are concise and represent the type, the operation, and the interaction between local variables, the constant pool, and the stack.

Moving on to the main() method, iconst_0 pushes the integer constant 0 onto the evaluation stack. istore_1 stores this constant value into the local variable at offset 1 (represented as i in the loop). Local variable offsets start at 0; for instance methods the 0th entry is always this, whereas in a static method such as main() slot 0 holds the first parameter (here, args), which is why i lives at offset 1. The variable at offset 1 is then loaded back onto the stack and the constant 10 is pushed for comparison using if_icmpge (“if integer compare greater or equal”). The test only succeeds if the current integer is >= 10.

For the first few iterations, this comparison test fails and so we continue to instruction 8. Here the static field System.out is resolved, followed by the loading of the “Hello World” string from the constant pool. The next invoke, invokevirtual, invokes an instance method (here println()) selected according to the runtime class of the receiver object. The integer is then incremented and goto is called to loop back to instruction 2.

This process continues until the if_icmpge comparison eventually succeeds (when the loop variable is >= 10); on that iteration of the loop, control passes to instruction 22 and the method returns.

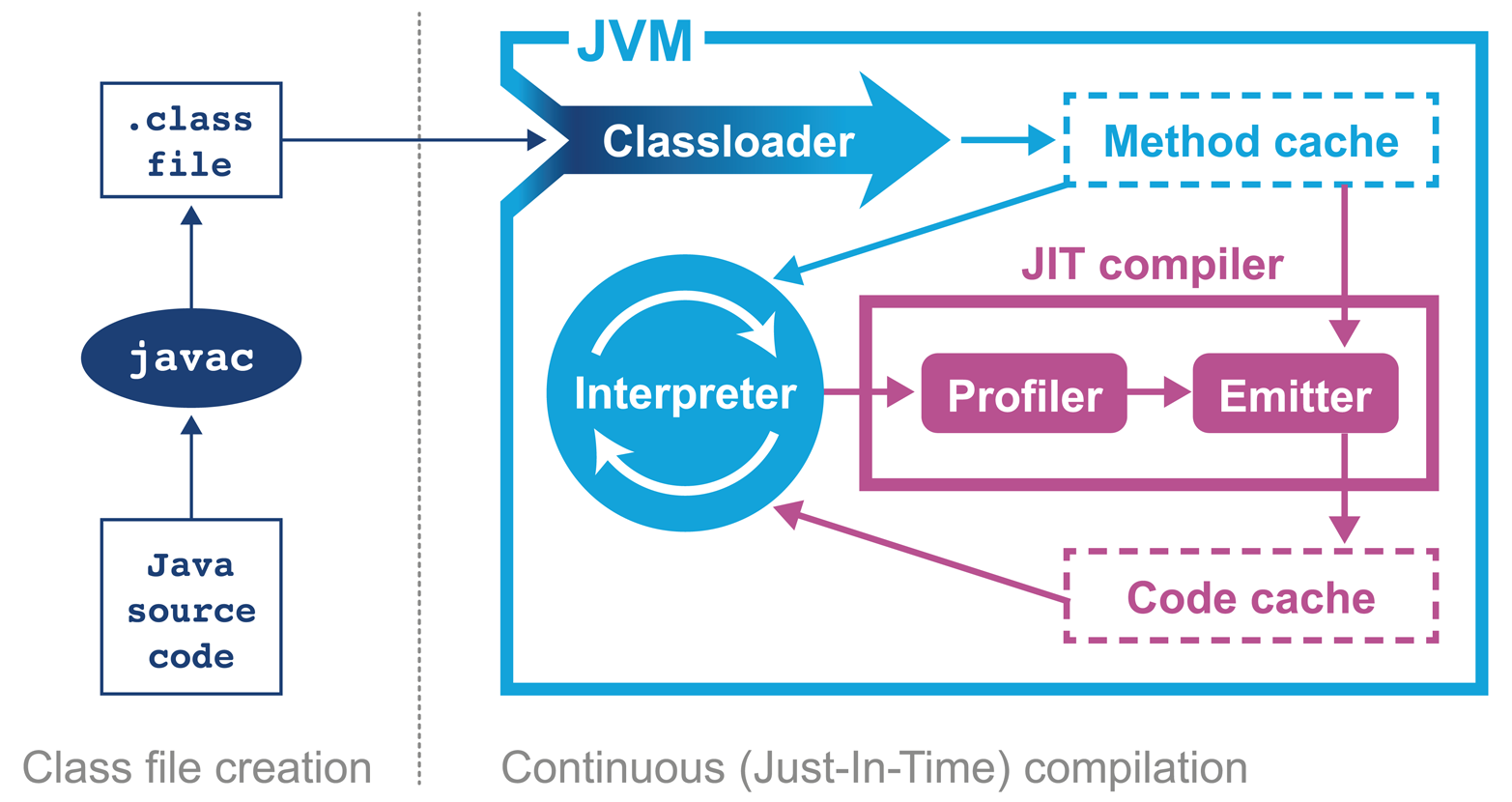

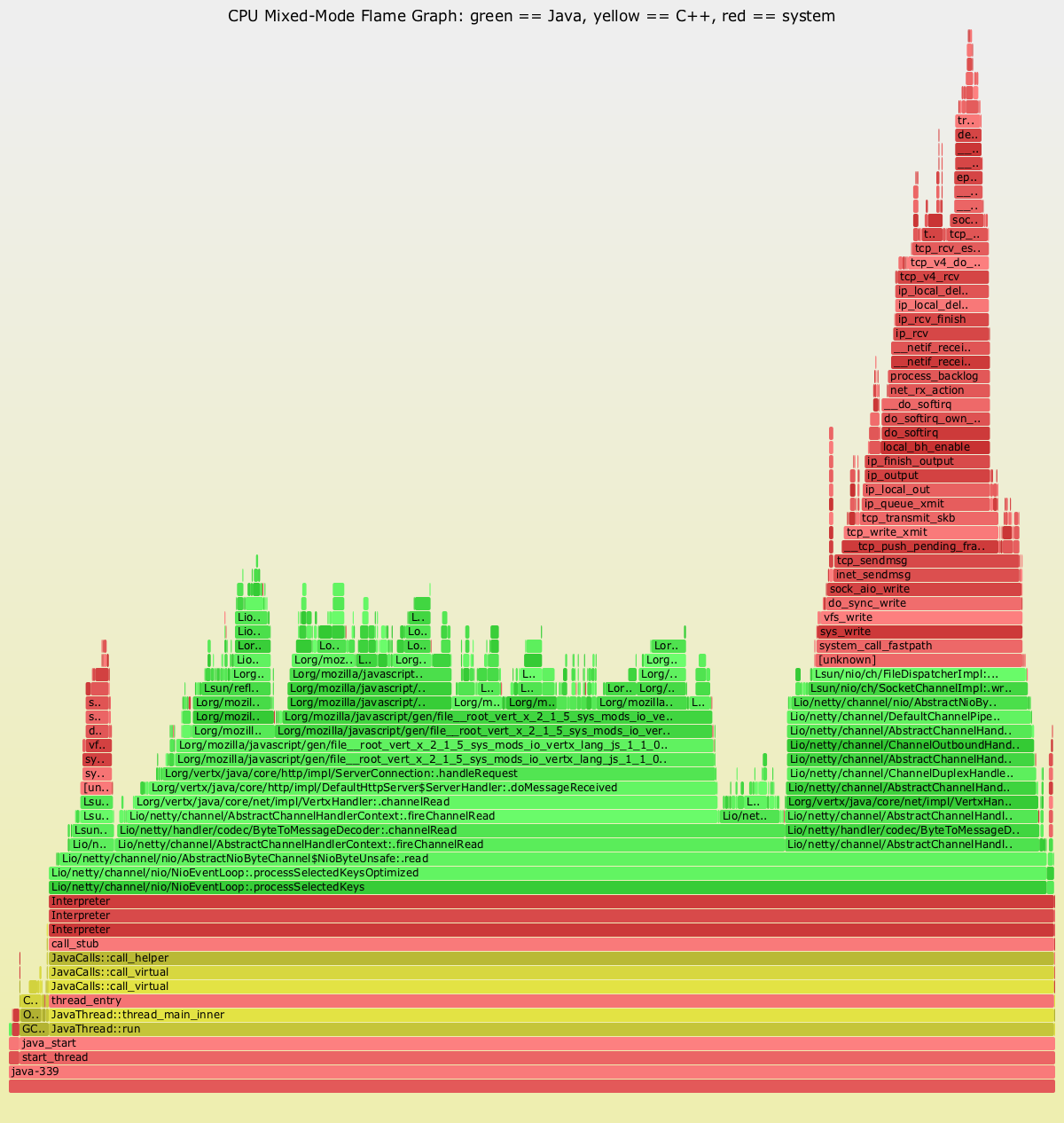

In April 1999 Sun introduced one of the biggest changes to Java in terms of performance. The HotSpot virtual machine is a key feature of Java that has evolved to enable performance that is comparable to (or better than) languages such as C and C++ (see Figure 2-3). To explain how this is possible, let’s delve a little deeper into the design of languages intended for application development.

Language and platform design frequently involves making decisions and tradeoffs between desired capabilities. In this case, the division is between languages that stay “close to the metal” and rely on ideas such as “zero-cost abstractions,” and languages that favor developer productivity and “getting things done” over strict low-level control.

C++ implementations obey the zero-overhead principle: What you don’t use, you don’t pay for. And further: What you do use, you couldn’t hand code any better.

Bjarne Stroustrup

The zero-overhead principle sounds great in theory, but it requires all users of the language to deal with the low-level reality of how operating systems and computers actually work. This is a significant extra cognitive burden that is placed upon developers who may not care about raw performance as a primary goal.

Not only that, but it also requires the source code to be compiled to platform-specific machine code at build time, usually called Ahead-of-Time (AOT) compilation. This is because alternative execution models such as interpreters, virtual machines, and portability layers all are most definitely not zero-overhead.

The principle also hides a can of worms in the phrase “what you do use, you couldn’t hand code any better.” This presupposes a number of things, not least that the developer is able to produce better code than an automated system. This is not a safe assumption at all. Very few people want to code in assembly language anymore, so the use of automated systems (such as compilers) to produce code is clearly of some benefit to most programmers.

Java has never subscribed to the zero-overhead abstraction philosophy. Instead, the approach taken by the HotSpot virtual machine is to analyze the runtime behavior of your program and intelligently apply optimizations where they will benefit performance the most. The goal of the HotSpot VM is to allow you to write idiomatic Java and follow good design principles rather than contort your program to fit the VM.

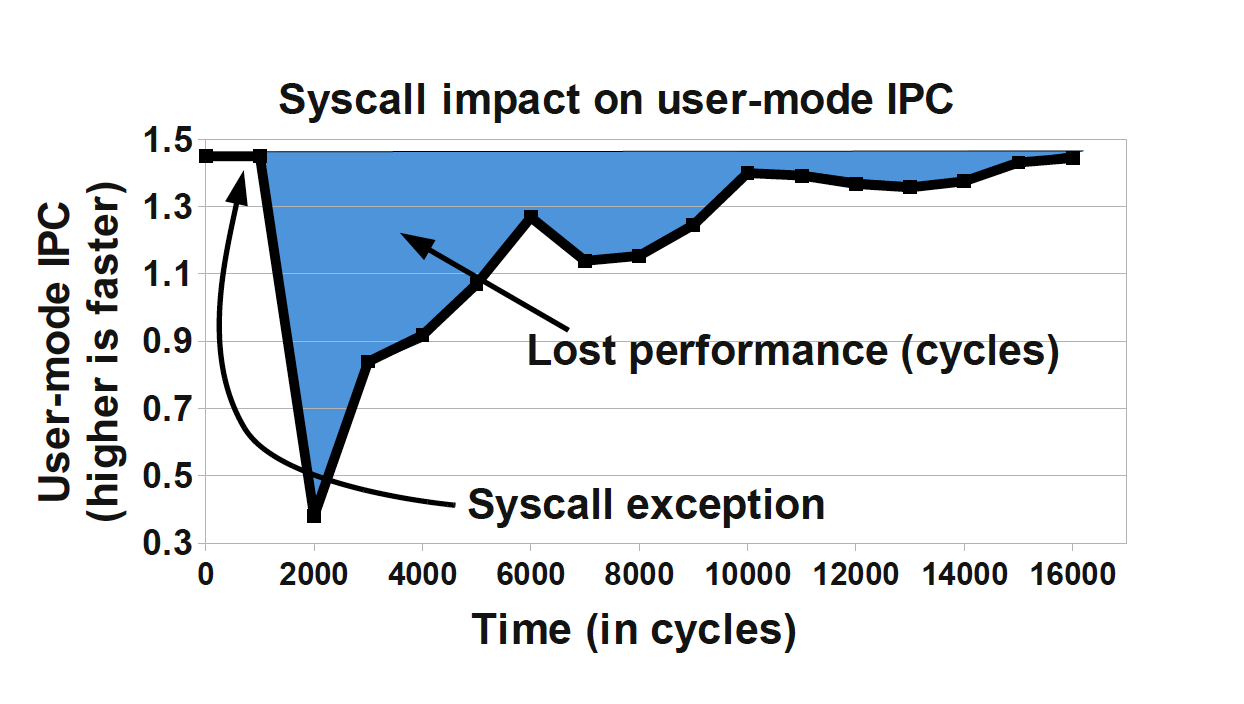

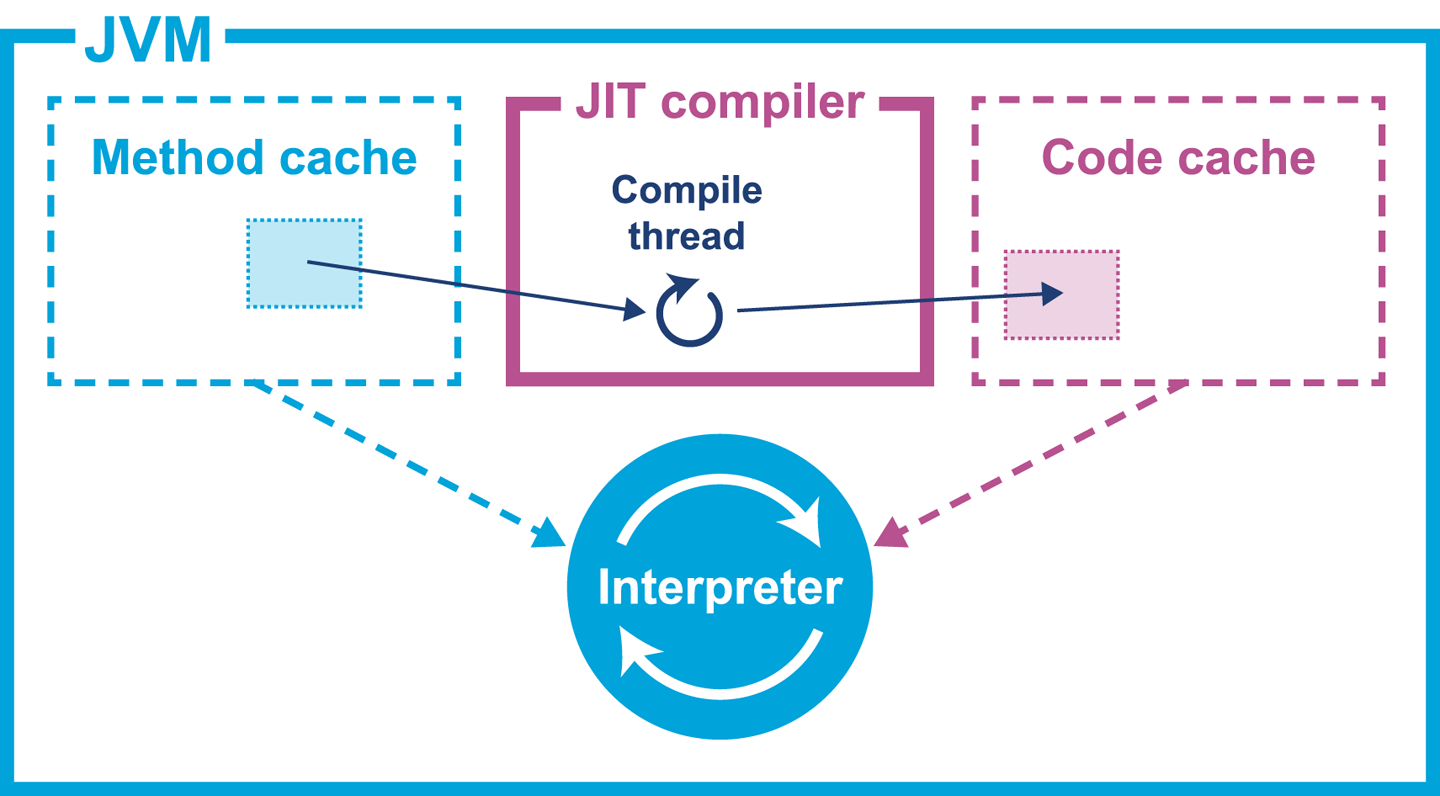

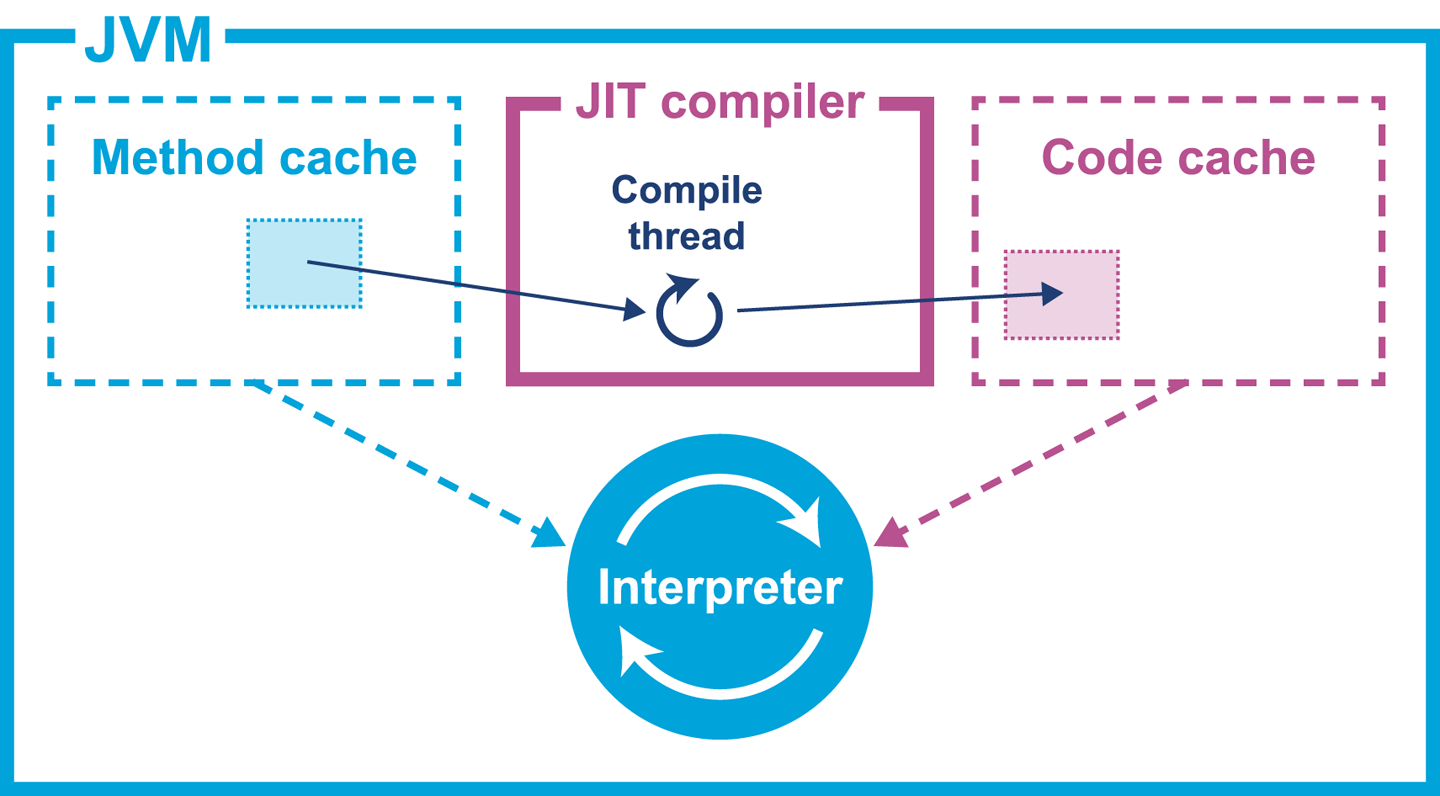

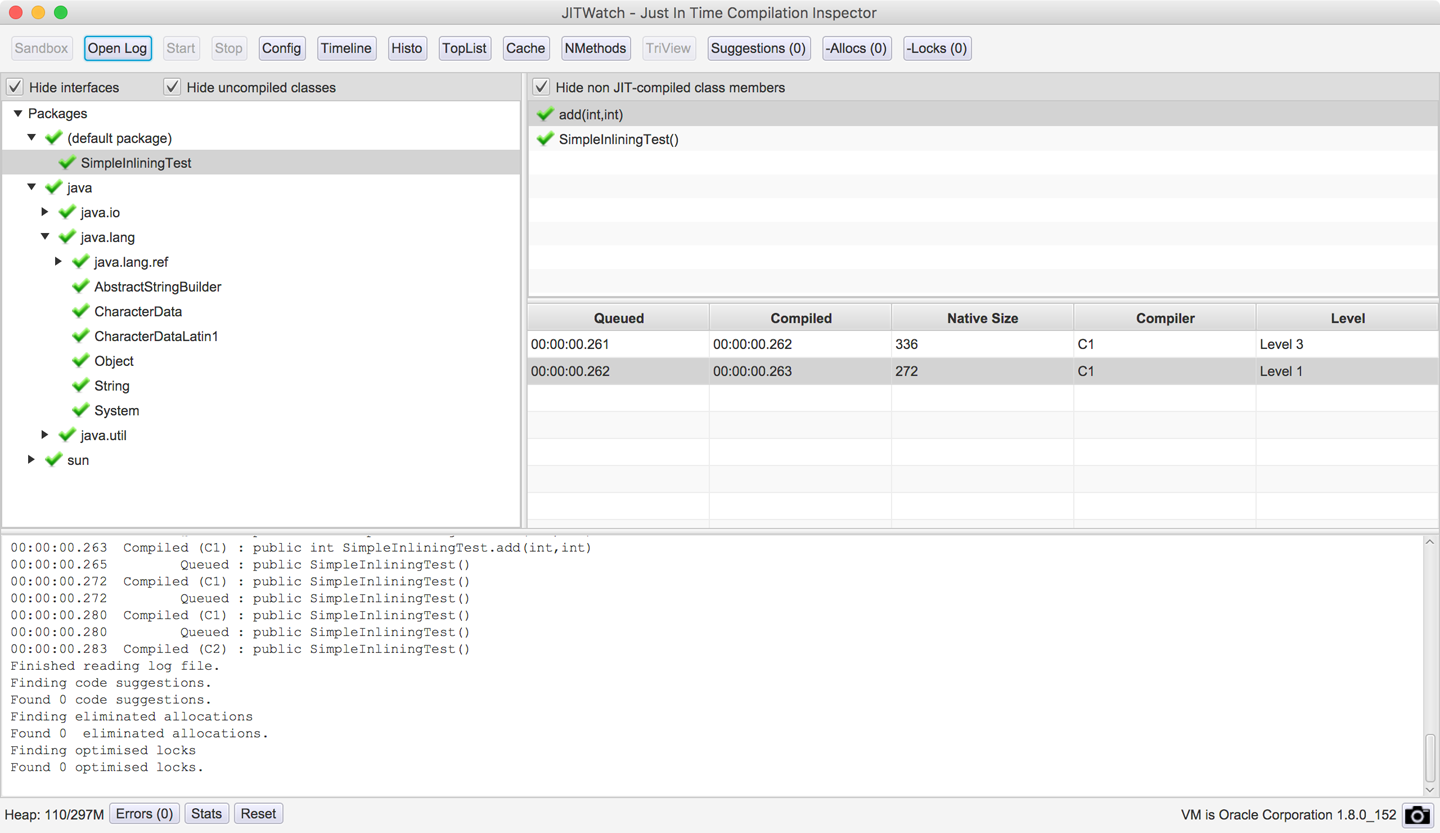

Java programs begin their execution in the bytecode interpreter, where instructions are performed on a virtualized stack machine. This abstraction from the CPU gives the benefit of class file portability, but to get maximum performance your program must execute directly on the CPU, making use of its native features.

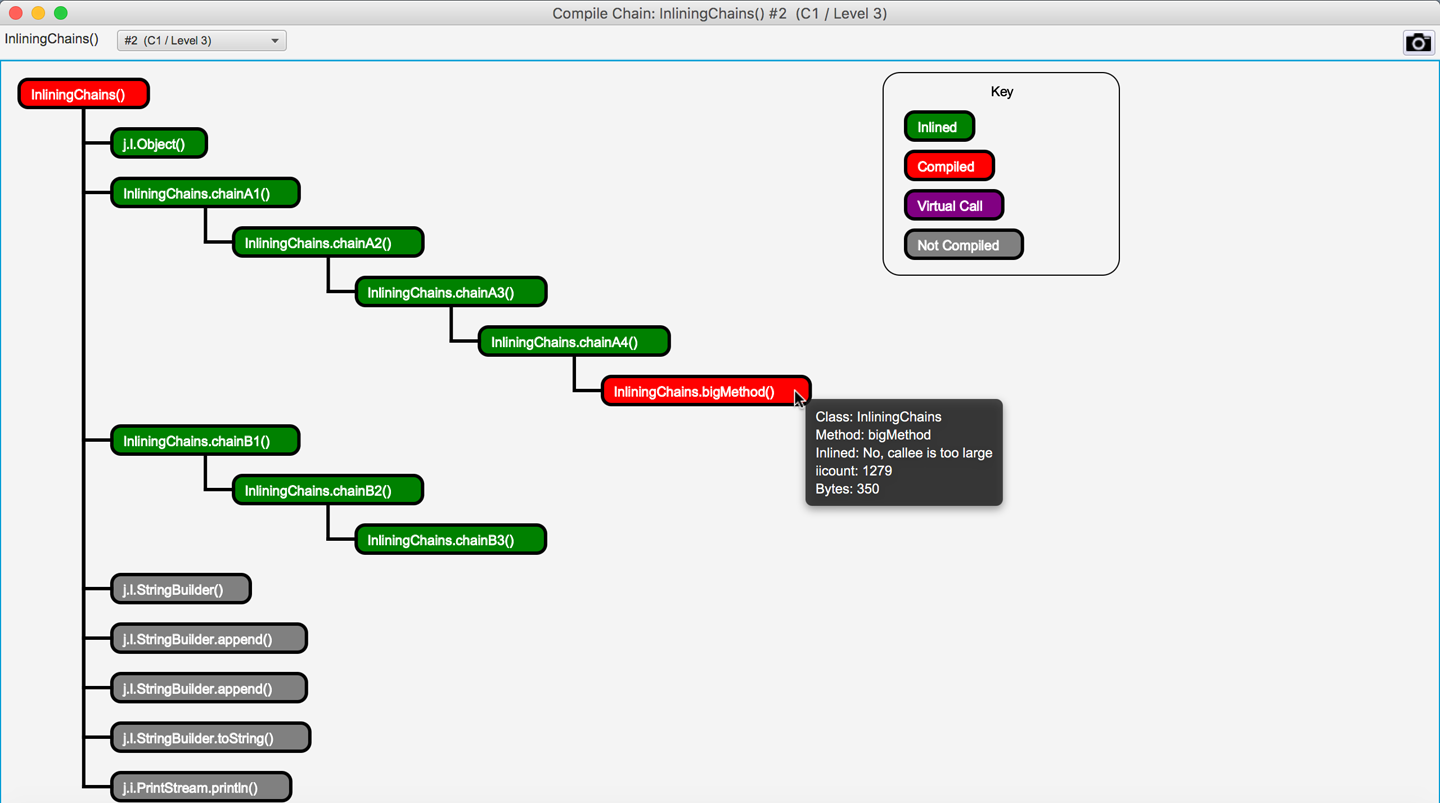

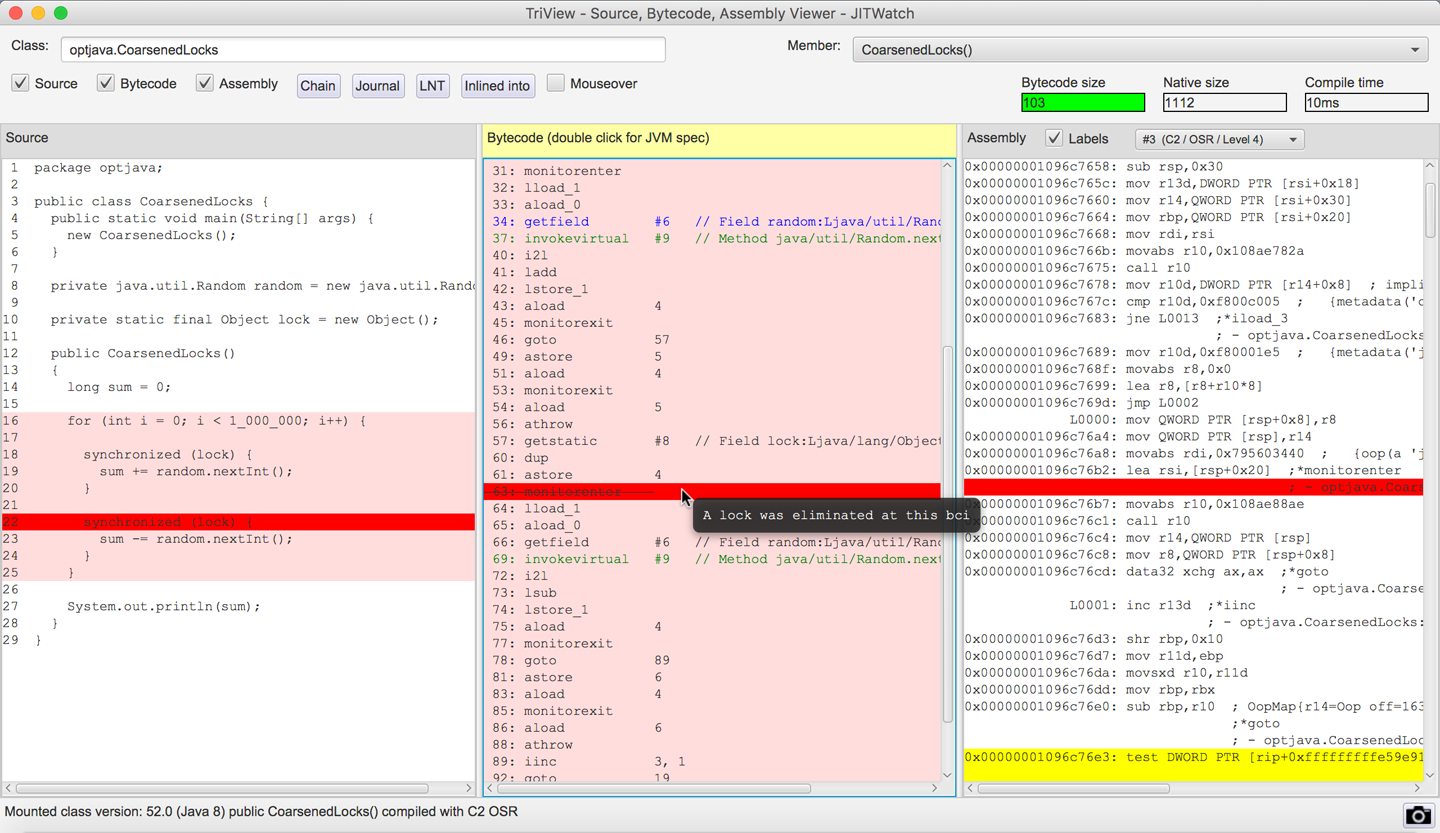

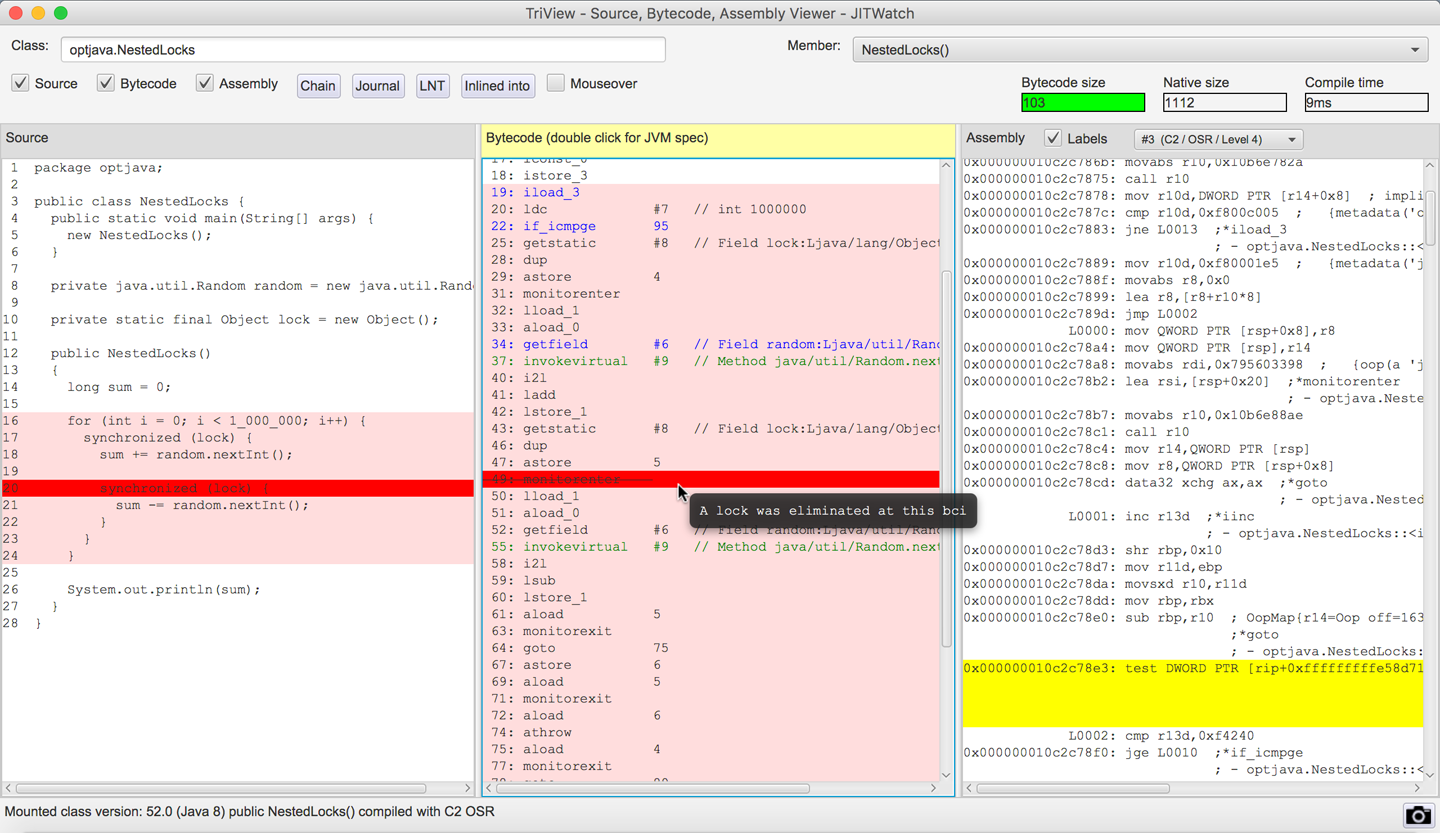

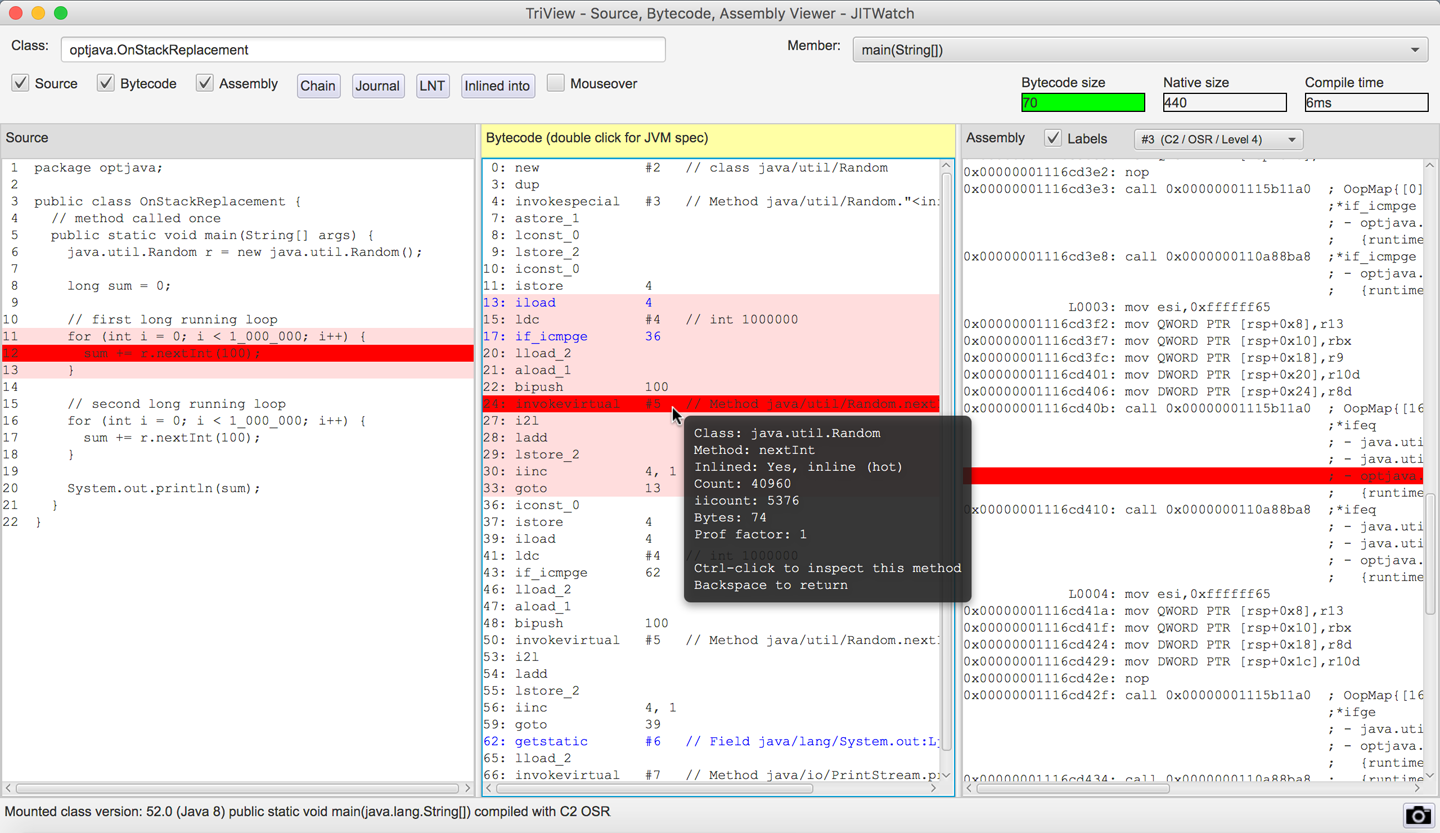

HotSpot achieves this by compiling units of your program from interpreted bytecode into native code. The units of compilation in the HotSpot VM are the method and the loop. This is known as Just-in-Time (JIT) compilation.

JIT compilation works by monitoring the application while it is running in interpreted mode and observing the parts of code that are most frequently executed. During this analysis process, programmatic trace information is captured that allows for more sophisticated optimization. Once execution of a particular method passes a threshold, the profiler will look to compile and optimize that particular section of code.
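One informal way to watch this happen is to run a small program with a frequently called method and ask HotSpot to log its compilation activity using the -XX:+PrintCompilation flag. The class below is a hypothetical example written for this purpose; the iteration counts are arbitrary, and the point at which the method is compiled depends on the JVM version and its settings.

```java
public class HotLoop {

    // A small method that is called often enough to become a JIT candidate.
    static long sumOfSquares(int n) {
        long sum = 0;
        for (int i = 1; i <= n; i++) {
            sum += (long) i * i;
        }
        return sum;
    }

    public static void main(String[] args) {
        long total = 0;
        // Call the method repeatedly so that the JVM's profiling sees it as hot.
        for (int i = 0; i < 20_000; i++) {
            total += sumOfSquares(1_000);
        }
        // Print the result so the work cannot be discarded as unused.
        System.out.println(total);
    }
}
```

Running this with java -XX:+PrintCompilation HotLoop would typically produce log lines mentioning HotLoop::sumOfSquares once the method has been selected for compilation, although the exact output format is not guaranteed.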

There are many advantages to the JIT approach to compilation, but one of the main ones is that it bases compiler optimization decisions on trace information that is collected during the interpreted phase, enabling HotSpot to make more informed optimizations.

Not only that, but HotSpot has had hundreds of engineering years (or more) of development attributed to it and new optimizations and benefits are added with almost every new release. This means that any Java application that runs on top of a new release of HotSpot will be able to take advantage of new performance optimizations present in the VM, without even needing to be recompiled.

After being translated from Java source to bytecode and now going through another step of (JIT) compilation, the code actually being executed has changed very significantly from the source code as written. This is a key insight, and it will drive our approach to dealing with performance-related investigations. JIT-compiled code executing on the JVM may well look nothing like the original Java source code.

The general picture is that languages like C++ (and the up-and-coming Rust) tend to have more predictable performance, but at the cost of forcing a lot of low-level complexity onto the user.

Note that “more predictable” does not necessarily mean “better.” AOT compilers produce code that may have to run across a broad class of processors, and may not be able to assume that specific processor features are available.

Environments that use profile-guided optimization (PGO), such as Java, have the potential to use runtime information in ways that are simply impossible to most AOT platforms. This can offer improvements to performance, such as dynamic inlining and optimizing away virtual calls. HotSpot can even detect the precise CPU type it is running on at VM startup, and can use this information to enable optimizations designed for specific processor features if available.

The technique of detecting precise processor capabilities is known as JVM intrinsics, and is not to be confused with the intrinsic locks introduced by the synchronized keyword.

A full discussion of PGO and JIT compilation can be found in Chapters 9 and 10.

The sophisticated approach that HotSpot takes is a great benefit to the majority of ordinary developers, but this tradeoff (to abandon zero-overhead abstractions) means that in the specific case of high-performance Java applications, the developer must be very careful to avoid “common sense” reasoning and overly simplistic mental models of how Java applications actually execute.

Analyzing the performance of small sections of Java code (microbenchmarks) is usually actually harder than analyzing entire applications, and is a very specialized task that the majority of developers should not undertake. We will return to this subject in Chapter 5.

HotSpot’s compilation subsystem is one of the two most important subsystems that the virtual machine provides. The other is automatic memory management, which was one of the major selling points of Java in the early years.

In languages such as C, C++, and Objective-C the programmer is responsible for managing the allocation and release of memory. The benefits of managing memory and lifetime of objects yourself are more deterministic performance and the ability to tie resource lifetime to the creation and deletion of objects. But these benefits come at a huge cost—for correctness, developers must be able to accurately account for memory.

Unfortunately, decades of practical experience showed that many developers have a poor understanding of idioms and patterns for memory management. Later versions of C++ and Objective-C have improved this using smart pointer idioms in the standard library. However, at the time Java was created poor memory management was a major cause of application errors. This led to concern among developers and managers about the amount of time spent dealing with language features rather than delivering value for the business.

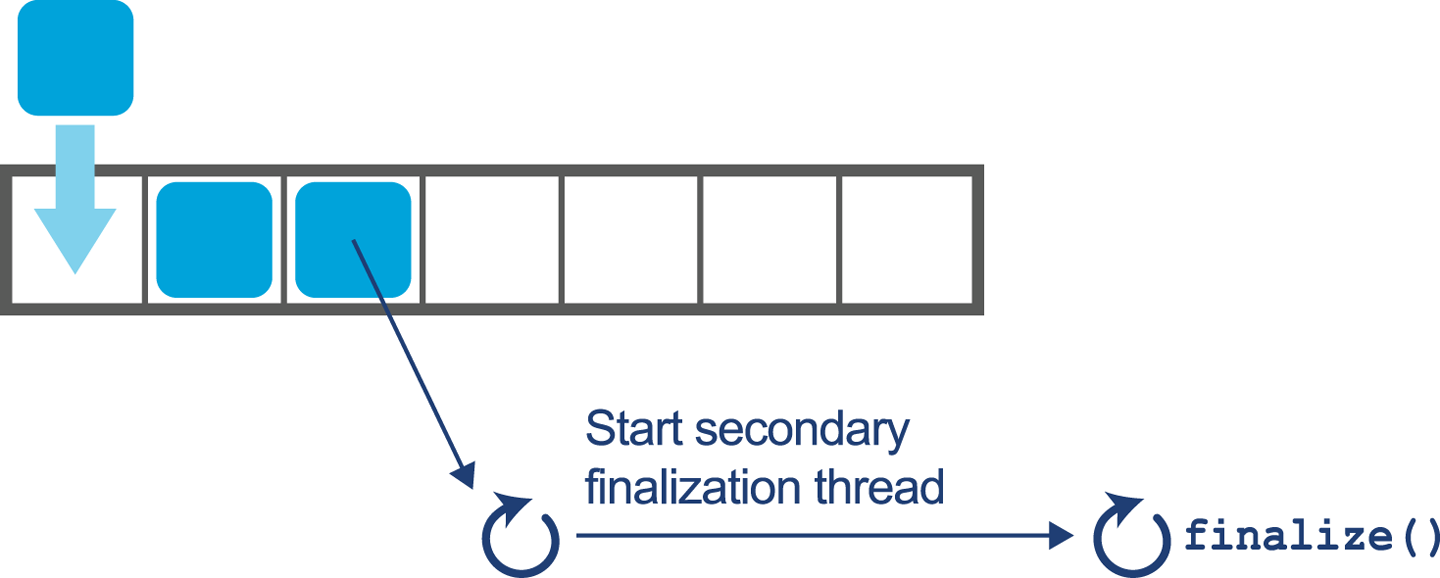

Java looked to help resolve the problem by introducing automatically managed heap memory using a process known as garbage collection (GC). Simply put, garbage collection is a nondeterministic process that triggers to recover and reuse no-longer-needed memory when the JVM requires more memory for allocation.

However, the story behind GC is not quite so simple, and various algorithms for garbage collection have been developed and applied over the course of Java’s history. GC comes at a cost: when it runs, it often stops the world, which means while GC is in progress the application pauses. Usually these pause times are designed to be incredibly small, but as an application is put under pressure they can increase.

Garbage collection is a major topic within Java performance optimization, so we will devote Chapters 6, 7, and 8 to the details of Java GC.

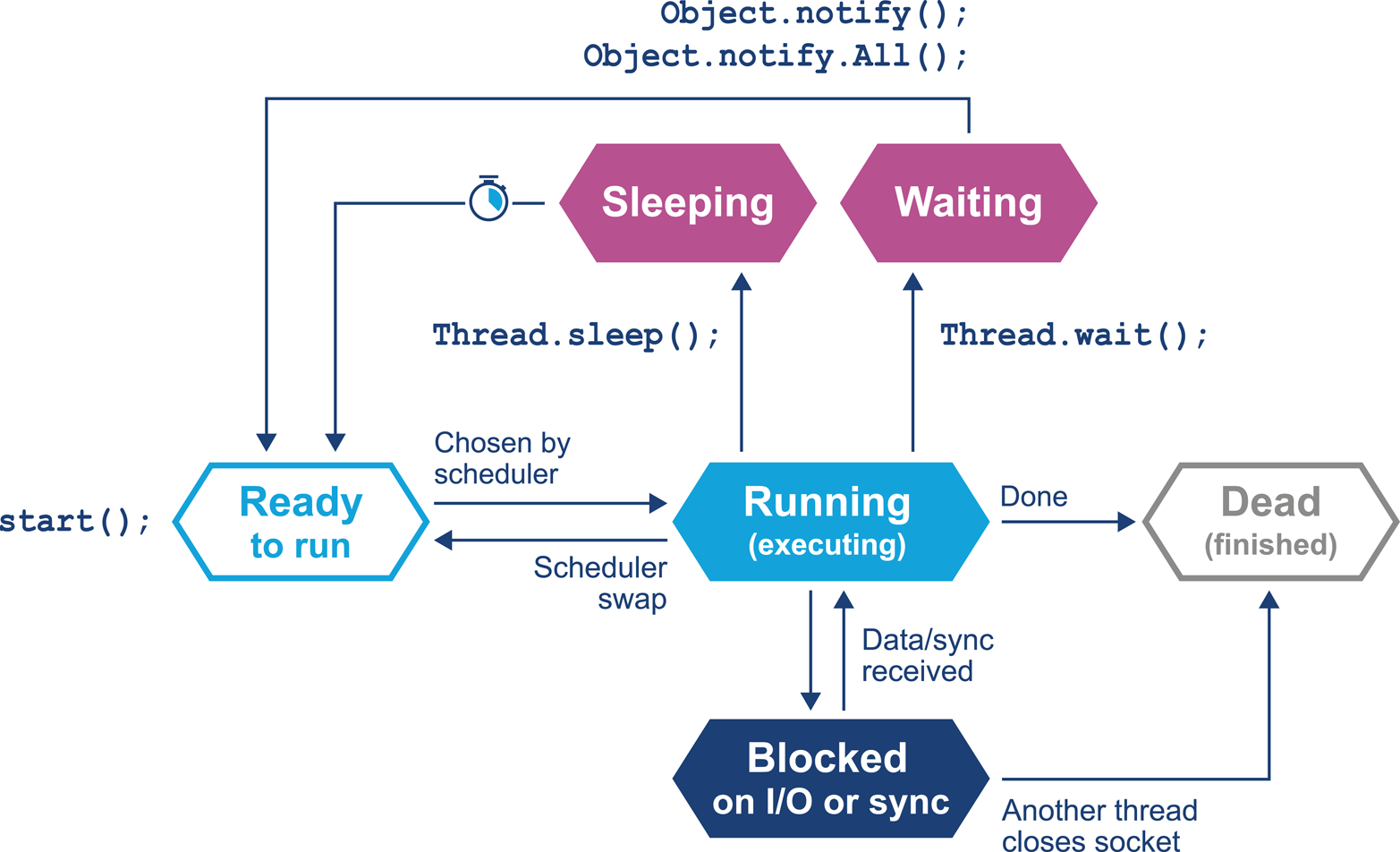

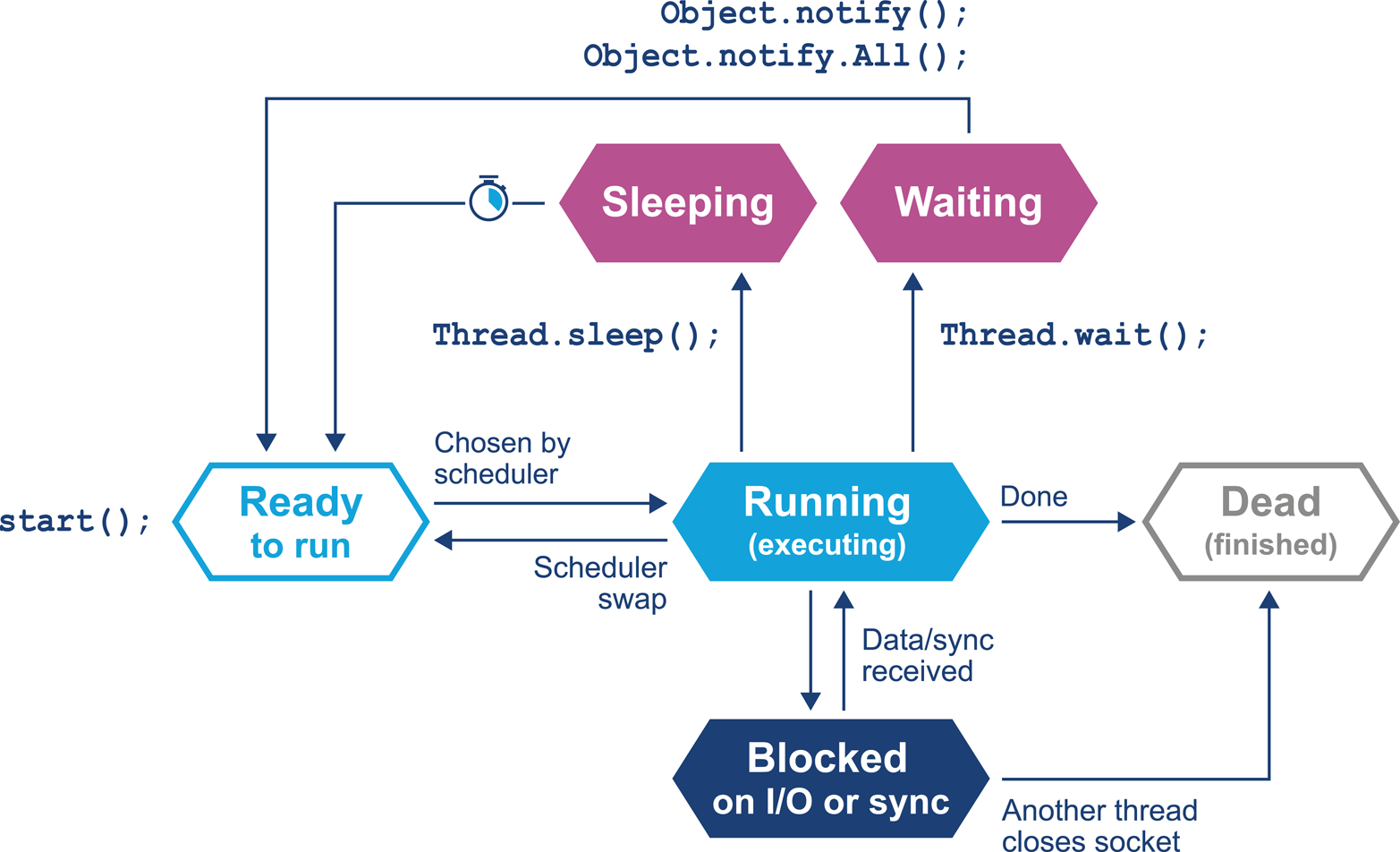

One of the major advances that Java brought in with its first version was built-in support for multithreaded programming. The Java platform allows the developer to create new threads of execution. For example, in Java 8 syntax:

    Thread t = new Thread(() -> { System.out.println("Hello World!"); });
    t.start();

Not only that, but the Java environment is inherently multithreaded, as is the JVM. This produces additional, irreducible complexity in the behavior of Java programs, and makes the work of the performance analyst even harder.

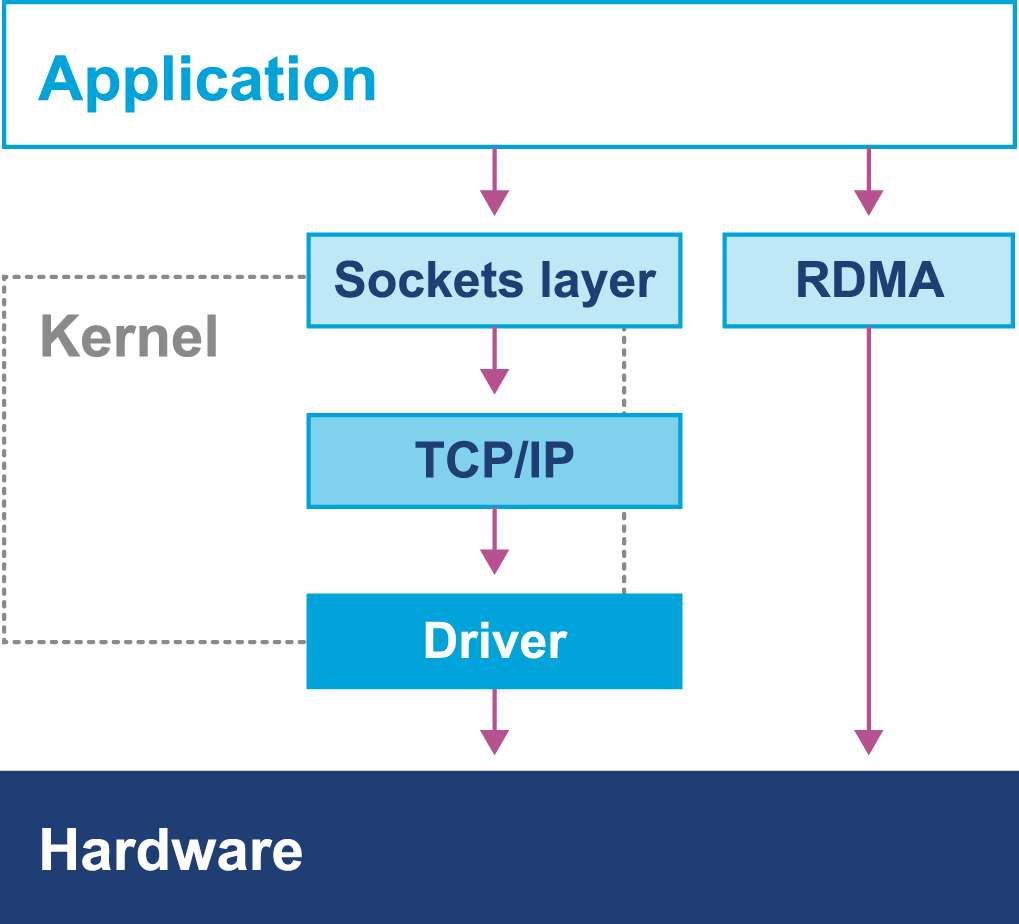

In most mainstream JVM implementations, each Java application thread corresponds precisely to a dedicated operating system thread. The alternative, using a shared pool of threads to execute all Java application threads (an approach known as green threads), proved not to provide an acceptable performance profile and added needless complexity.

It is safe to assume that every JVM application thread is backed by a unique OS thread that is created when the start() method is called on the corresponding Thread object.

Java’s approach to multithreading dates from the late 1990s and has these fundamental design principles:

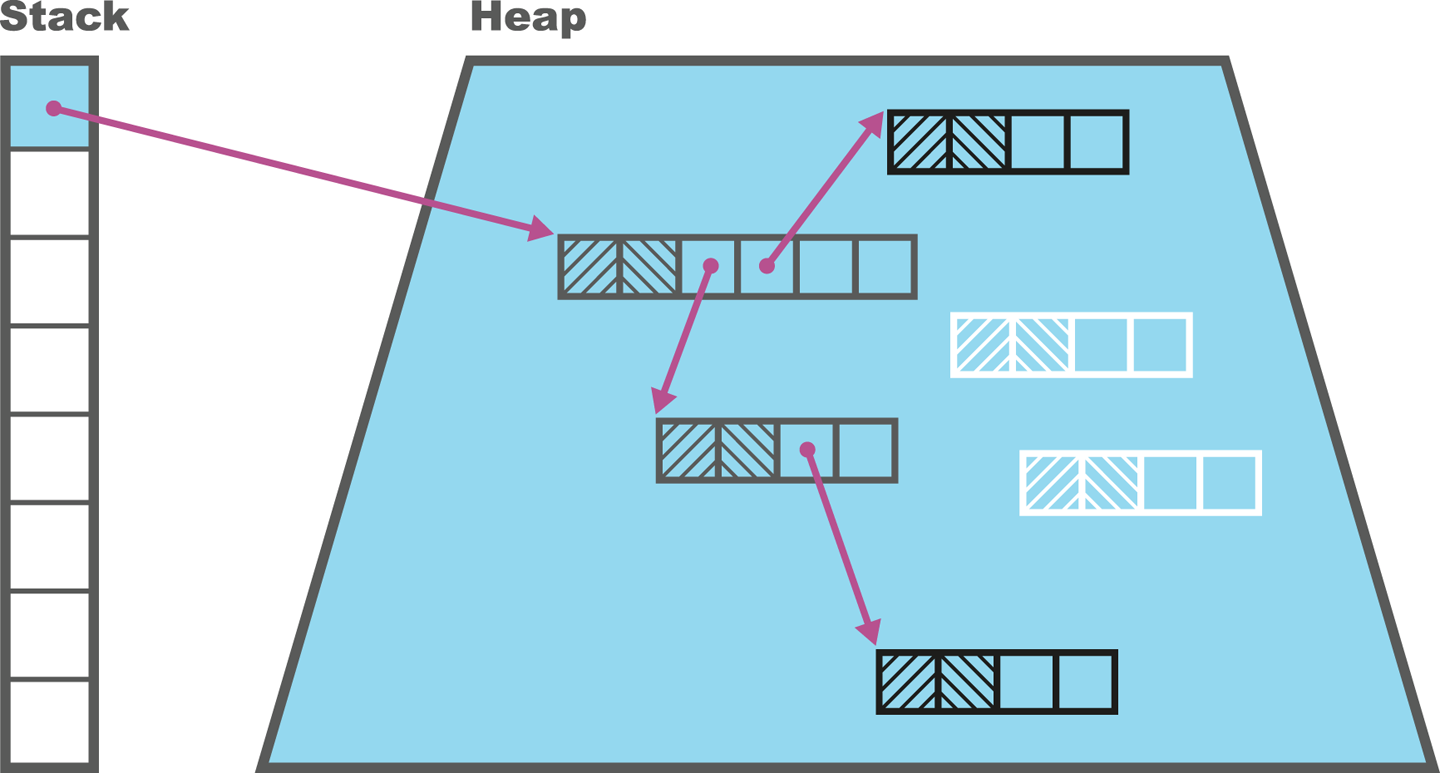

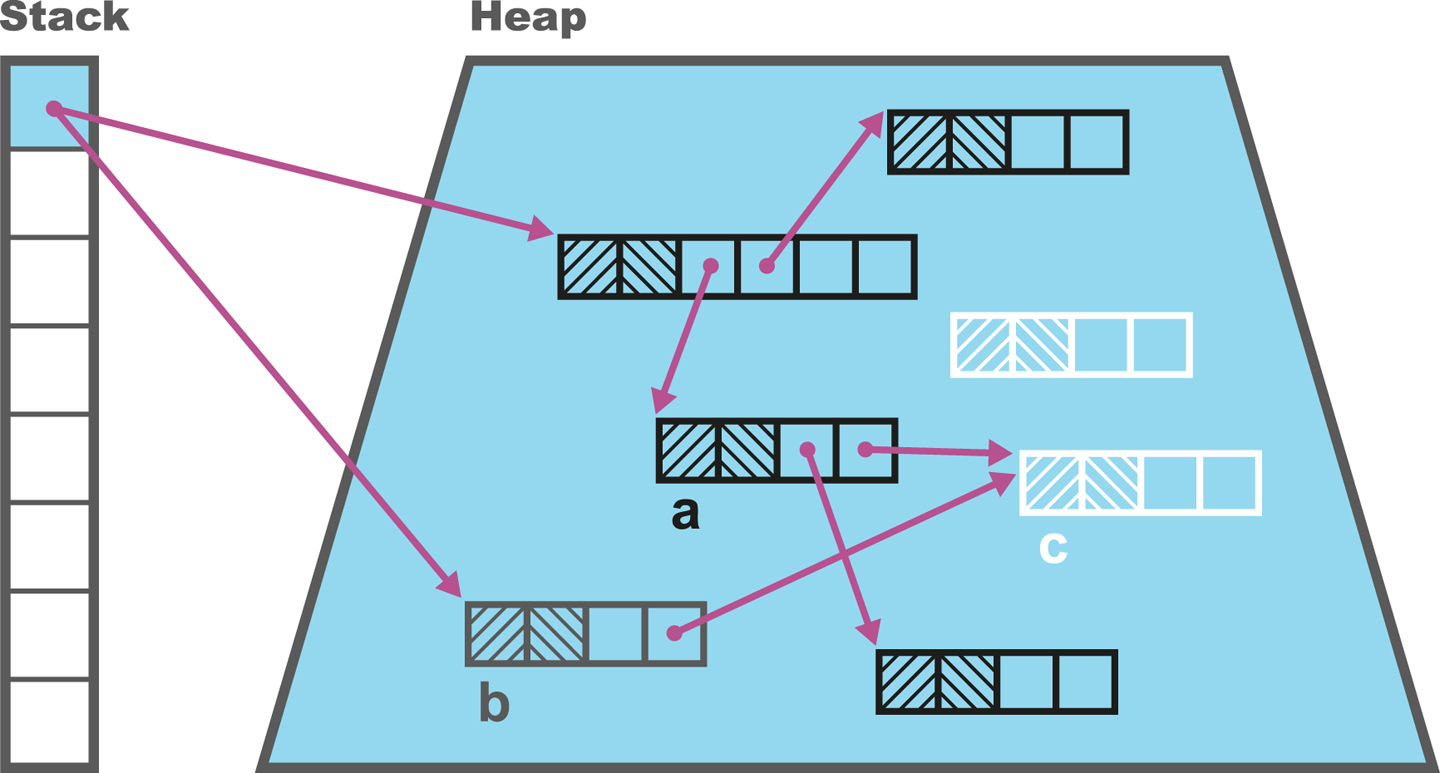

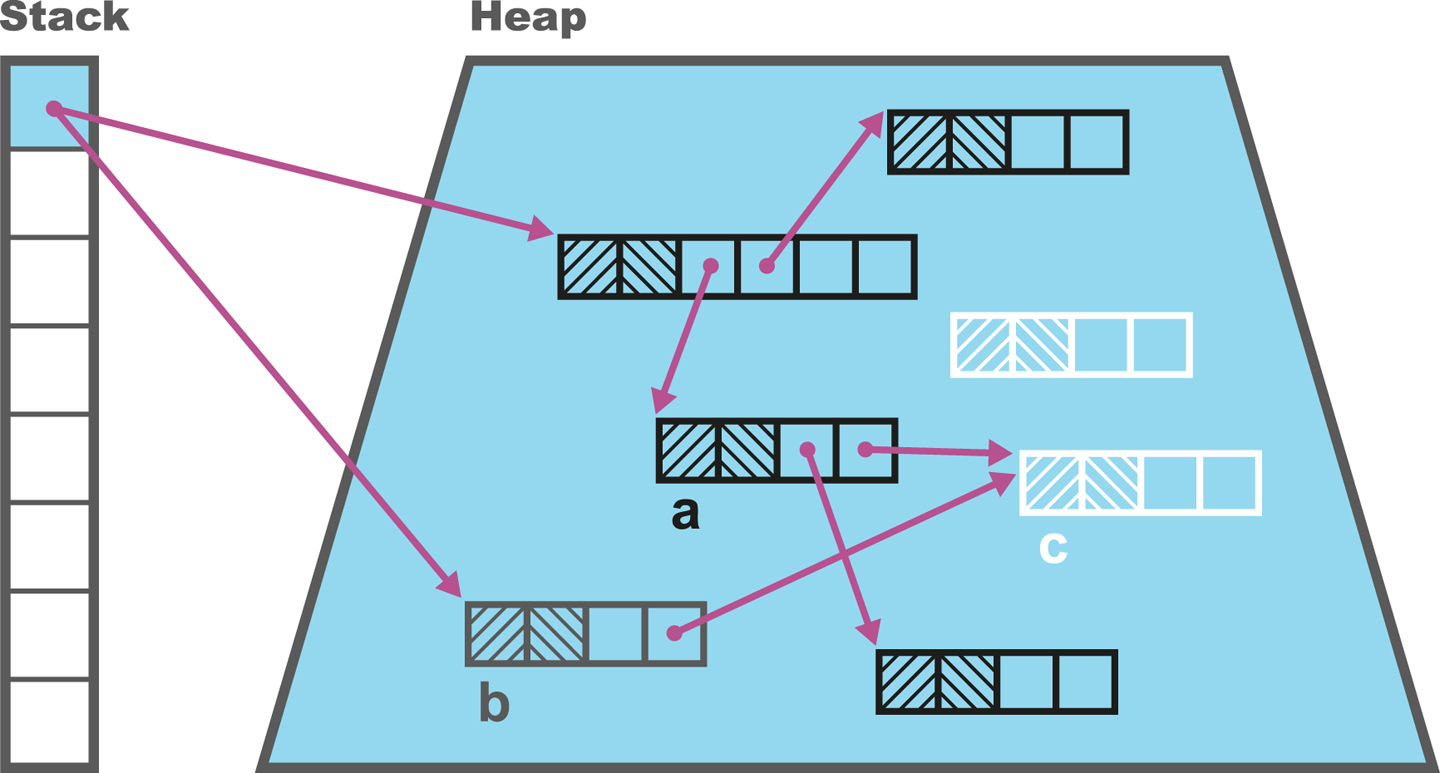

- All threads in a Java process share a single, common garbage-collected heap.
- Any object created by one thread can be accessed by any other thread that has a reference to the object.
- Objects are mutable by default; that is, the values held in object fields can be changed unless the programmer explicitly uses the final keyword to mark them as immutable.

The Java Memory Model (JMM) is a formal model of memory that explains how different threads of execution see the changing values held in objects.

That is, if threads A and B both have references to object obj, and thread A alters it, what happens to the value observed in thread B?

This seemingly simple question is actually more complicated than it seems, because the operating system scheduler (which we will meet in Chapter 3) can forcibly evict threads from CPU cores. This can lead to another thread starting to execute and accessing an object before the original thread had finished processing it, and potentially seeing the object in a damaged or invalid state.

The only defense the core of Java provides against this potential object damage during concurrent code execution is the mutual exclusion lock, and this can be very complex to use in real applications. Chapter 12 contains a detailed look at how the JMM works, and the practicalities of working with threads and locks.
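As a small illustration of the mutual exclusion lock in use, the sketch below has two threads incrementing a shared counter whose methods are marked synchronized, so that each read-modify-write of the field happens while holding the object's lock. The class and method names are hypothetical and the example is deliberately simplistic.

```java
public class SharedCounter {

    private int count = 0;

    // synchronized acquires the lock on this object for the duration of the call.
    public synchronized void increment() {
        count++;
    }

    public synchronized int get() {
        return count;
    }

    // Runs two threads that each increment a shared counter perThread times.
    static int runTwoThreads(int perThread) {
        SharedCounter counter = new SharedCounter();
        Runnable task = () -> {
            for (int i = 0; i < perThread; i++) {
                counter.increment();
            }
        };
        Thread a = new Thread(task);
        Thread b = new Thread(task);
        a.start();
        b.start();
        try {
            a.join(); // wait for both threads to finish before reading the result
            b.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted while waiting", e);
        }
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println(runTwoThreads(100_000)); // prints 200000
    }
}
```

If the synchronized keyword were removed from increment(), the two threads could interleave their updates and the final value might be lower than expected.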

Many developers may only be immediately familiar with the Java implementation produced by Oracle. We have already met the virtual machine that comes from the Oracle implementation, HotSpot. However, there are several other implementations that we will discuss in this book, to varying degrees of depth:

OpenJDK is an interesting special case. It is an open source (GPL) project that provides the reference implementation of Java. The project is led and supported by Oracle and provides the basis of its Java releases.

Oracle’s Java is the most widely known implementation. It is based on OpenJDK, but relicensed under Oracle’s proprietary license. Almost all changes to Oracle Java start off as commits to an OpenJDK public repository (with the exception of security fixes that have not yet been publicly disclosed).

Zulu is a free (GPL-licensed) OpenJDK implementation that is fully Java-certified and provided by Azul Systems. It is unencumbered by proprietary licenses and is freely redistributable. Azul is one of the few vendors to provide paid support for OpenJDK.

Red Hat was the first non-Oracle vendor to produce a fully certified Java implementation based on OpenJDK. IcedTea is fully certified and redistributable.

Zing is a high-performance proprietary JVM. It is a fully certified implementation of Java and is produced by Azul Systems. It is 64-bit Linux only, and is designed for server-class systems with large heaps (tens to hundreds of GB) and a lot of CPU.

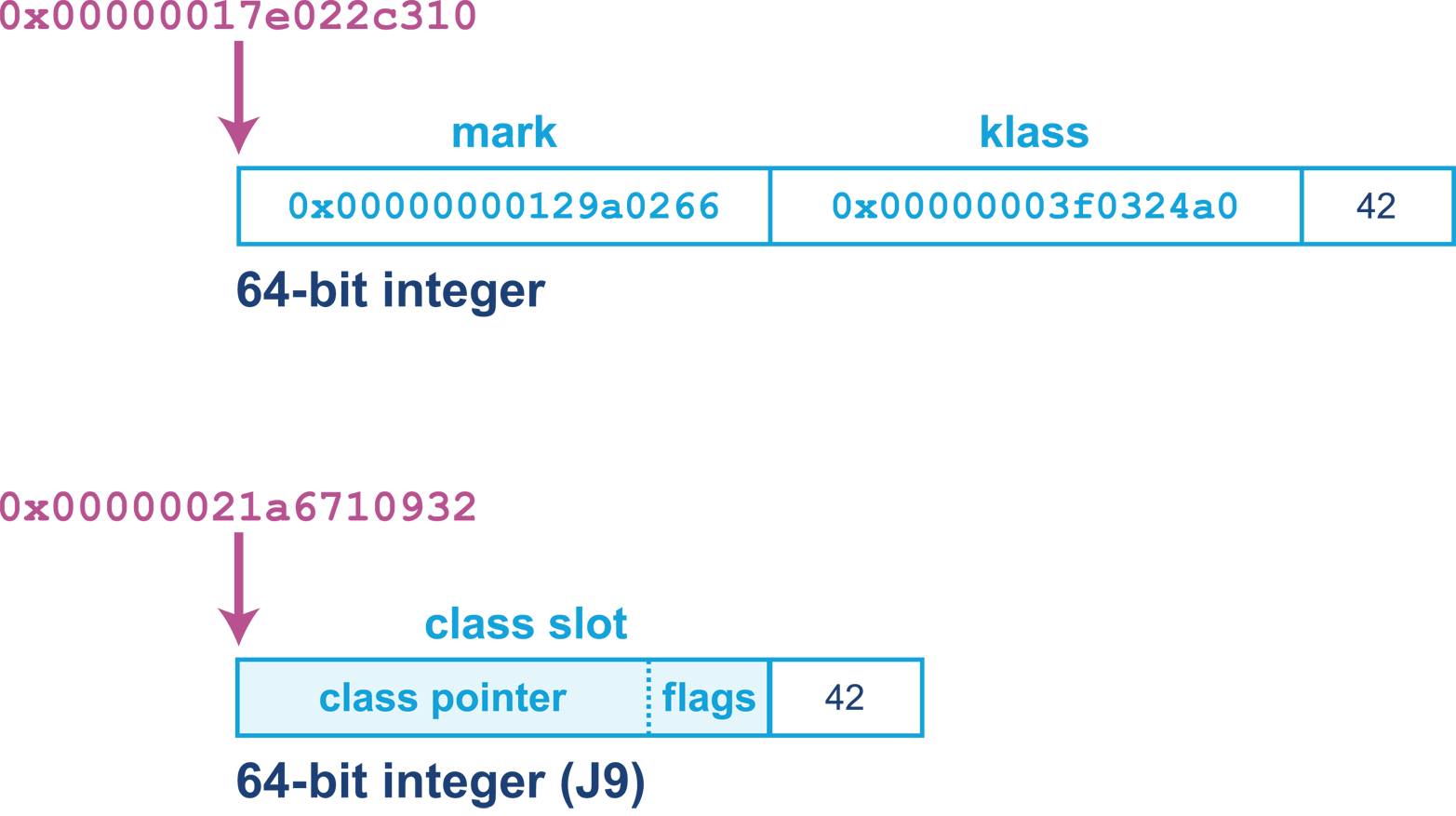

IBM’s J9 started life as a proprietary JVM but was open-sourced partway through its life (just like HotSpot). It is now built on top of an Eclipse open runtime project (OMR), and forms the basis of IBM’s proprietary product. It is fully compliant with Java certification.

The Avian implementation is not 100% Java conformant in terms of certification. It is included in this list because it is an interesting open source project and a great learning tool for developers interested in understanding the details of how a JVM works, rather than as a 100% production-ready solution.

Google’s Android project is sometimes thought of as being “based on Java.” However, the picture is actually a little more complicated. Android originally used a different implementation of Java’s class libraries (from the clean-room Harmony project) and a cross compiler to convert to a different (.dex) file format for a non-JVM virtual machine.

Of these implementations, the great majority of the book focuses on HotSpot. This material applies equally to Oracle Java, Azul Zulu, Red Hat IcedTea, and all other OpenJDK-derived JVMs.

There are essentially no performance-related differences between the various HotSpot-based implementations, when comparing like-for-like versions.

We also include some material related to IBM J9 and Azul Zing. This is intended to provide an awareness of these alternatives rather than a definitive guide. Some readers may wish to explore these technologies more deeply, and they are encouraged to proceed by setting performance goals, and then measuring and comparing, in the usual manner.

Android is moving to use the OpenJDK 8 class libraries with direct support in the Android runtime. As this technology stack is so far from the other examples, we won’t consider Android any further in this book.

Almost all of the JVMs we will discuss are open source, and in fact, most of them are derived from the GPL-licensed HotSpot. The exceptions are IBM’s OpenJ9, which is Eclipse-licensed, and Azul Zing, which is commercial (although Azul’s Zulu product is GPL).

The situation with Oracle Java (as of Java 9) is slightly more complex. Despite being derived from the OpenJDK code base, it is proprietary, and is not open source software. Oracle achieves this by having all contributors to OpenJDK sign a license agreement that permits dual licensing of their contribution to both the GPL of OpenJDK and Oracle’s proprietary license.

Each update release to Oracle Java is taken as a branch off the OpenJDK mainline, which is not then patched on-branch for future releases. This prevents divergence of Oracle and OpenJDK, and accounts for the lack of meaningful difference between Oracle JDK and an OpenJDK binary based on the same source.

This means that the only real difference between Oracle JDK and OpenJDK is the license. This may seem an irrelevance, but the Oracle license contains a few clauses that developers should be aware of:

- Oracle does not grant the right to redistribute its binaries outside of your own organization (e.g., as a Docker image).
- You are not permitted to apply a binary patch to an Oracle binary without Oracle’s agreement (which will usually mean a support contract).

There are also several other commercial features and tools that Oracle makes available that will only work with Oracle’s JDK, and within the terms of its license. This situation will be changing with future releases of Java from Oracle, however, as we will discuss in Chapter 15.

When planning a new greenfield deployment, developers and architects should consider carefully their choice of JVM vendor. Some large organizations, notably Twitter and Alibaba, even maintain their own private builds of OpenJDK, although the engineering effort required for this is beyond the reach of many companies.

The JVM is a mature execution platform, and it provides a number of technology alternatives for instrumentation, monitoring, and observability of running applications. The main technologies available for these types of tools for JVM applications are:

Java Management Extensions (JMX)

Java agents

The JVM Tool Interface (JVMTI)

The Serviceability Agent (SA)

JMX is a powerful, general-purpose technology for controlling and monitoring JVMs and the applications running on them. It provides the ability to change parameters and call methods in a general way from a client application. A full treatment is, unfortunately, outside the scope of this book. However, JMX (and its associated network transport, RMI) is a fundamental aspect of the management capabilities of the JVM.
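As a small illustration of the "general way" in which JMX exposes JVM state, the sketch below reads a few values from the platform MBean server of the current process. The class name `JmxPeek` and its helper methods are hypothetical names chosen for this example; the MXBean object names and attributes are the standard `java.lang` ones. A remote client would go through a JMX connector rather than the in-process server, which is not shown here.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryUsage;
import javax.management.MBeanServer;
import javax.management.ObjectName;

// Hypothetical example class: reads JVM telemetry via JMX from inside the process.
public class JmxPeek {

    /** Reads the used heap (in bytes) through the typed Memory MXBean. */
    public static long usedHeapBytes() {
        MemoryUsage heap = ManagementFactory.getMemoryMXBean().getHeapMemoryUsage();
        return heap.getUsed();
    }

    /** Reads an attribute by object name and attribute name, the way a generic JMX client does. */
    public static Object readAttribute(String objectName, String attribute) {
        try {
            MBeanServer server = ManagementFactory.getPlatformMBeanServer();
            return server.getAttribute(new ObjectName(objectName), attribute);
        } catch (Exception e) {
            // Wrap the checked JMX exceptions to keep the sketch short.
            throw new IllegalStateException("Could not read " + objectName + "/" + attribute, e);
        }
    }

    public static void main(String[] args) {
        System.out.println("Used heap:    " + usedHeapBytes() + " bytes");
        System.out.println("VM name:      " + readAttribute("java.lang:type=Runtime", "VmName"));
        System.out.println("Thread count: " + readAttribute("java.lang:type=Threading", "ThreadCount"));
    }
}
```

The typed MXBean accessors are convenient in-process; the name-based `getAttribute` call is closer to what a monitoring console does when it browses an arbitrary JVM.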

A Java agent is a tooling component, written in Java (hence the name), that makes use of the interfaces in java.lang.instrument to modify the bytecode of methods.

To install an agent, provide a startup flag to the JVM:

-javaagent:<path-to-agent-jar>=<options>

The agent JAR must contain a manifest and include the attribute Premain-Class.

This attribute contains the name of the agent class, which must implement a public static premain() method that acts as the registration hook for the Java agent.
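A minimal sketch of such an agent class is shown here. The class name `CountingAgent` is a hypothetical choice for illustration, and the JAR manifest is assumed to contain the line `Premain-Class: CountingAgent`. This transformer only counts the classes it is offered and returns `null`, which tells the JVM to leave the bytecode unchanged; a real agent would typically rewrite `classfileBuffer` using a bytecode library.

```java
import java.lang.instrument.ClassFileTransformer;
import java.lang.instrument.Instrumentation;
import java.security.ProtectionDomain;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical agent: counts classes passed to the transformer, modifies nothing.
public class CountingAgent {

    /** Number of classes offered to the transformer so far. */
    public static final AtomicLong CLASSES_SEEN = new AtomicLong();

    public static final class CountingTransformer implements ClassFileTransformer {
        @Override
        public byte[] transform(ClassLoader loader,
                                String className,
                                Class<?> classBeingRedefined,
                                ProtectionDomain protectionDomain,
                                byte[] classfileBuffer) {
            CLASSES_SEEN.incrementAndGet();
            return null; // null means "no transformation performed"
        }
    }

    /** Registration hook: the JVM calls this before the application's main(). */
    public static void premain(String agentArgs, Instrumentation inst) {
        inst.addTransformer(new CountingTransformer());
        // Report the total when the JVM exits.
        Runtime.getRuntime().addShutdownHook(new Thread(() ->
                System.err.println("Classes seen by agent: " + CLASSES_SEEN.get())));
    }
}
```

Packaged into a JAR with the manifest attribute above, the agent would be attached with the `-javaagent` flag described earlier; the `<options>` string arrives in `agentArgs`.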

If the Java instrumentation API is not sufficient, then the JVMTI may be used instead. This is a native interface of the JVM, so agents that make use of it must be written in a native compiled language—essentially, C or C++. It can be thought of as a communication interface that allows a native agent to monitor and be informed of events by the JVM. To install a native agent, provide a slightly different flag:

-agentlib:<agent-lib-name>=<options>

or:

-agentpath:<path-to-agent>=<options>

The requirement that JVMTI agents be written in native code means that it is much easier to write code that can damage running applications and even crash the JVM.

Where possible, it is usually preferable to write a Java agent over JVMTI code. Agents are much easier to write, but some information is not available through the Java API, and to access that data JVMTI may be the only possibility available.

The final approach is the Serviceability Agent. This is a set of APIs and tools that can expose both Java objects and HotSpot data structures.

The SA does not require any code to be run in the target VM. Instead, the HotSpot SA uses primitives like symbol lookup and reading of process memory to implement debugging capability. The SA has the ability to debug live Java processes as well as core files (also called crash dump files).

The JDK ships with a number of useful additional tools along with the well-known binaries such as javac and java.

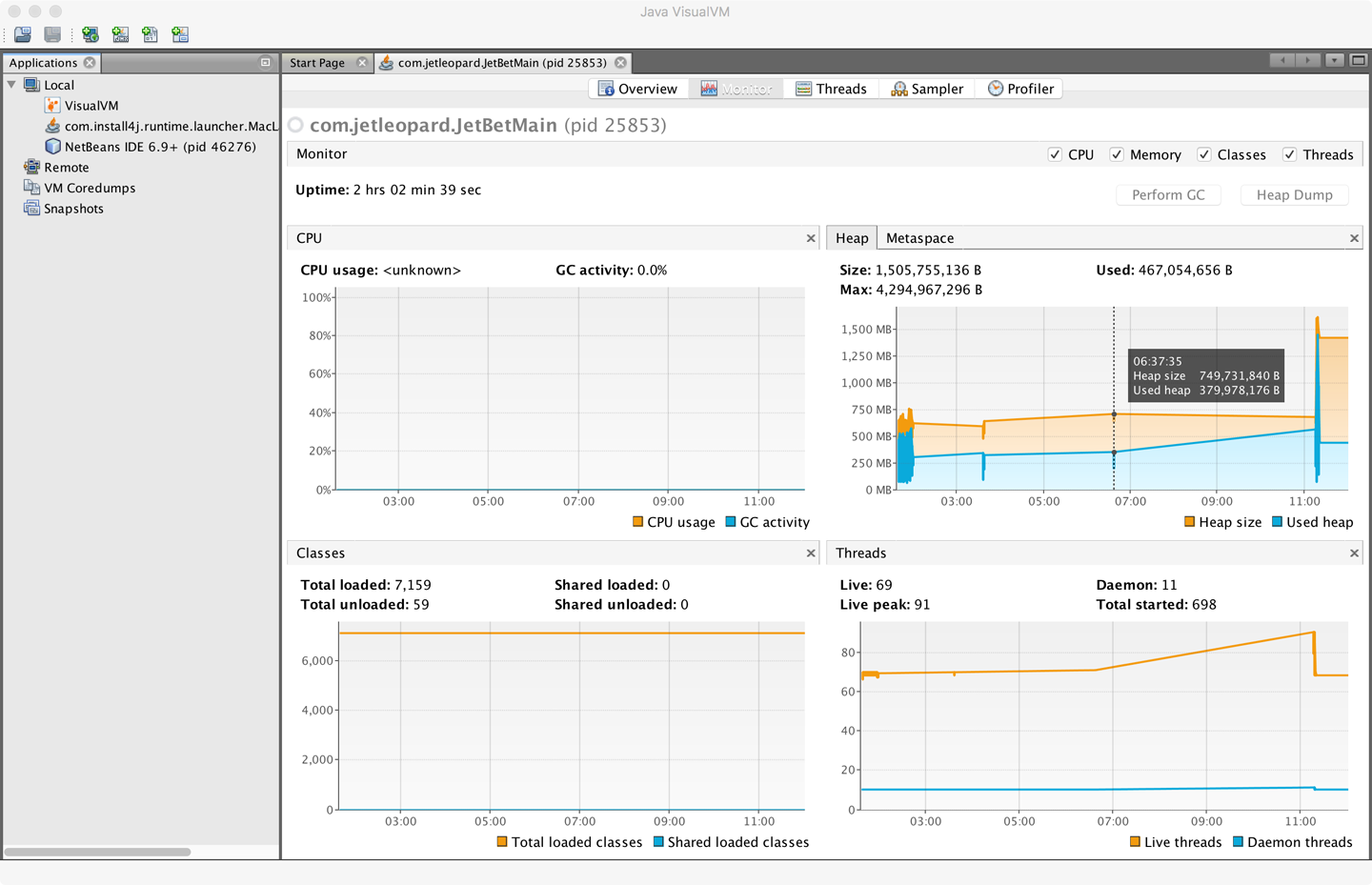

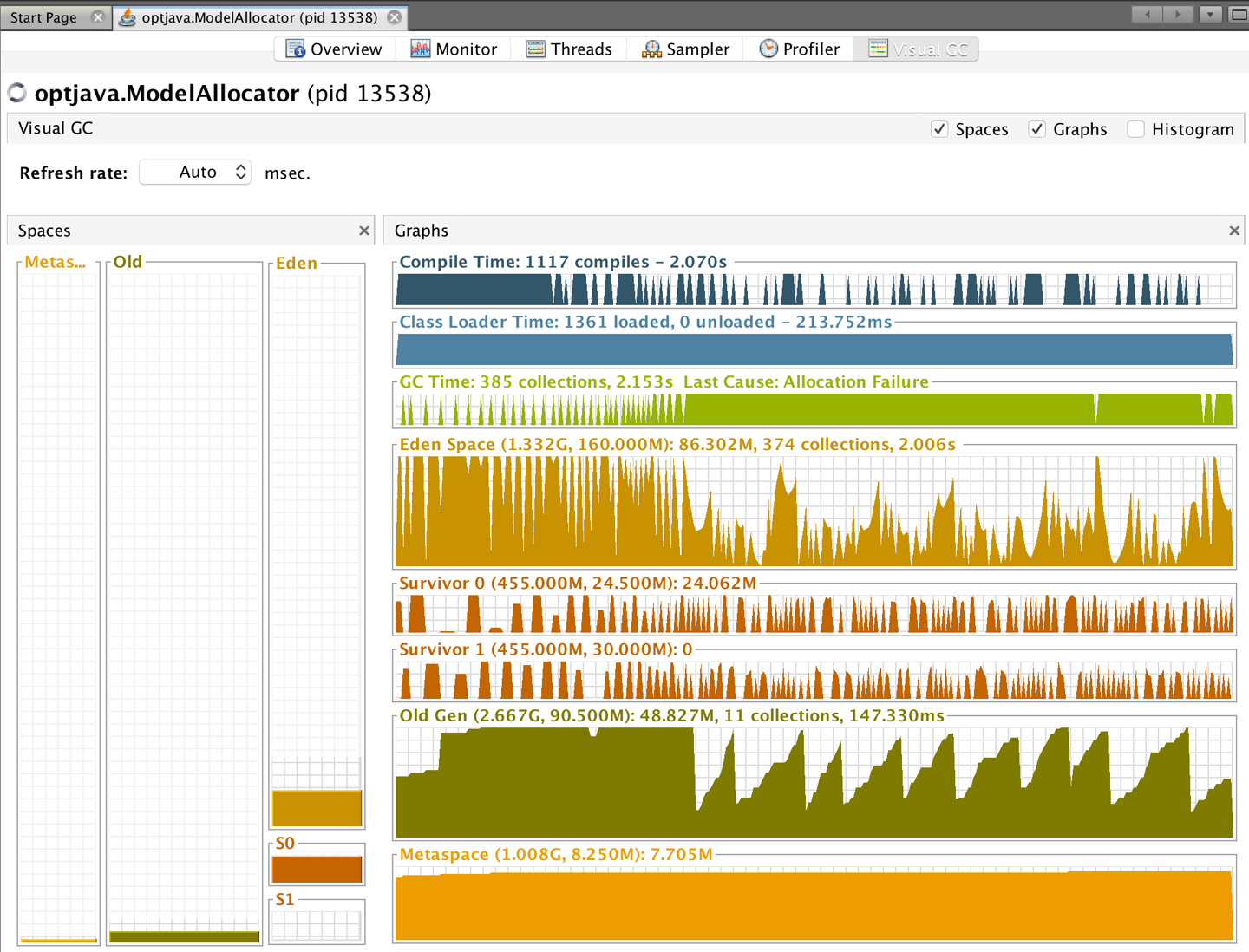

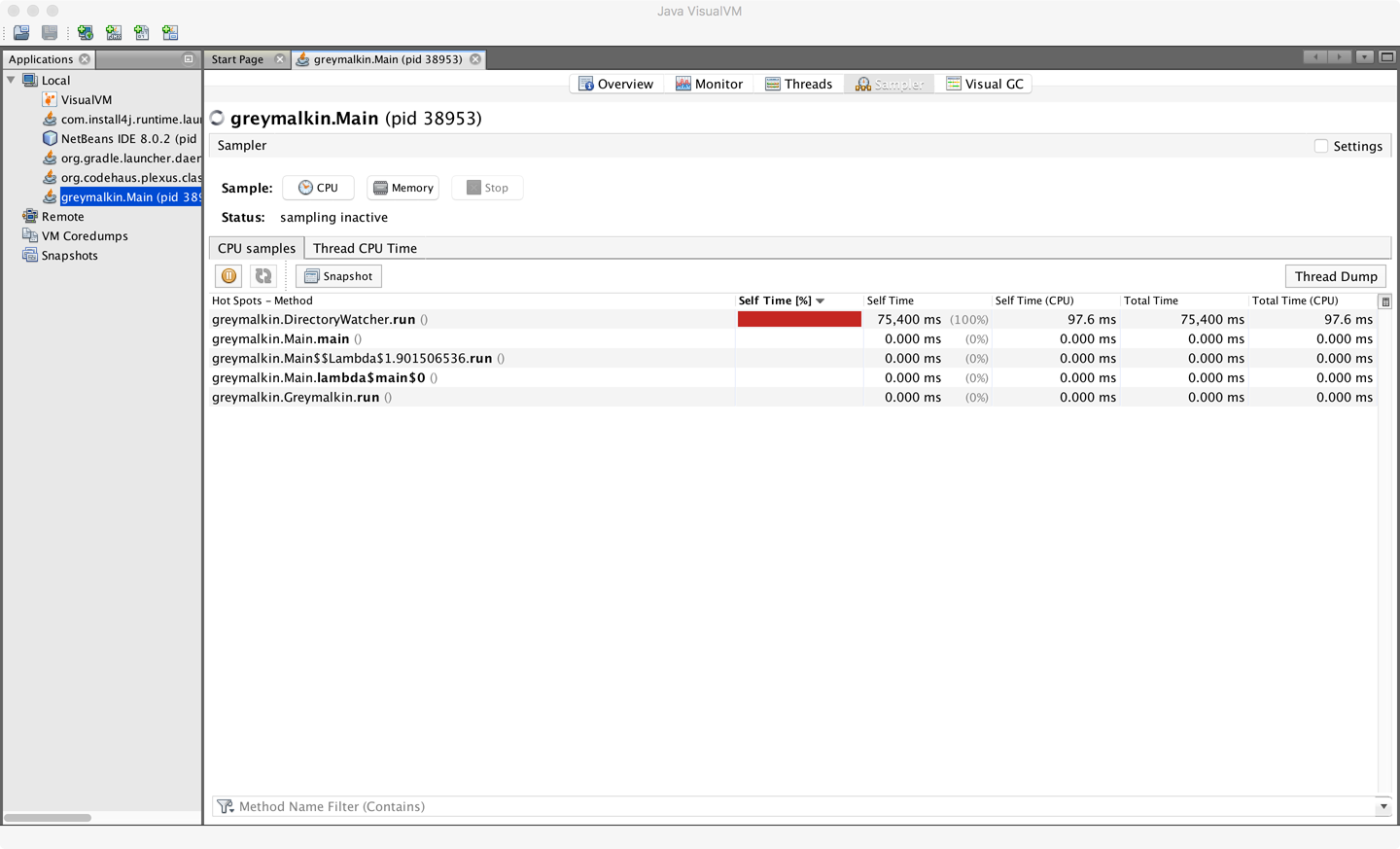

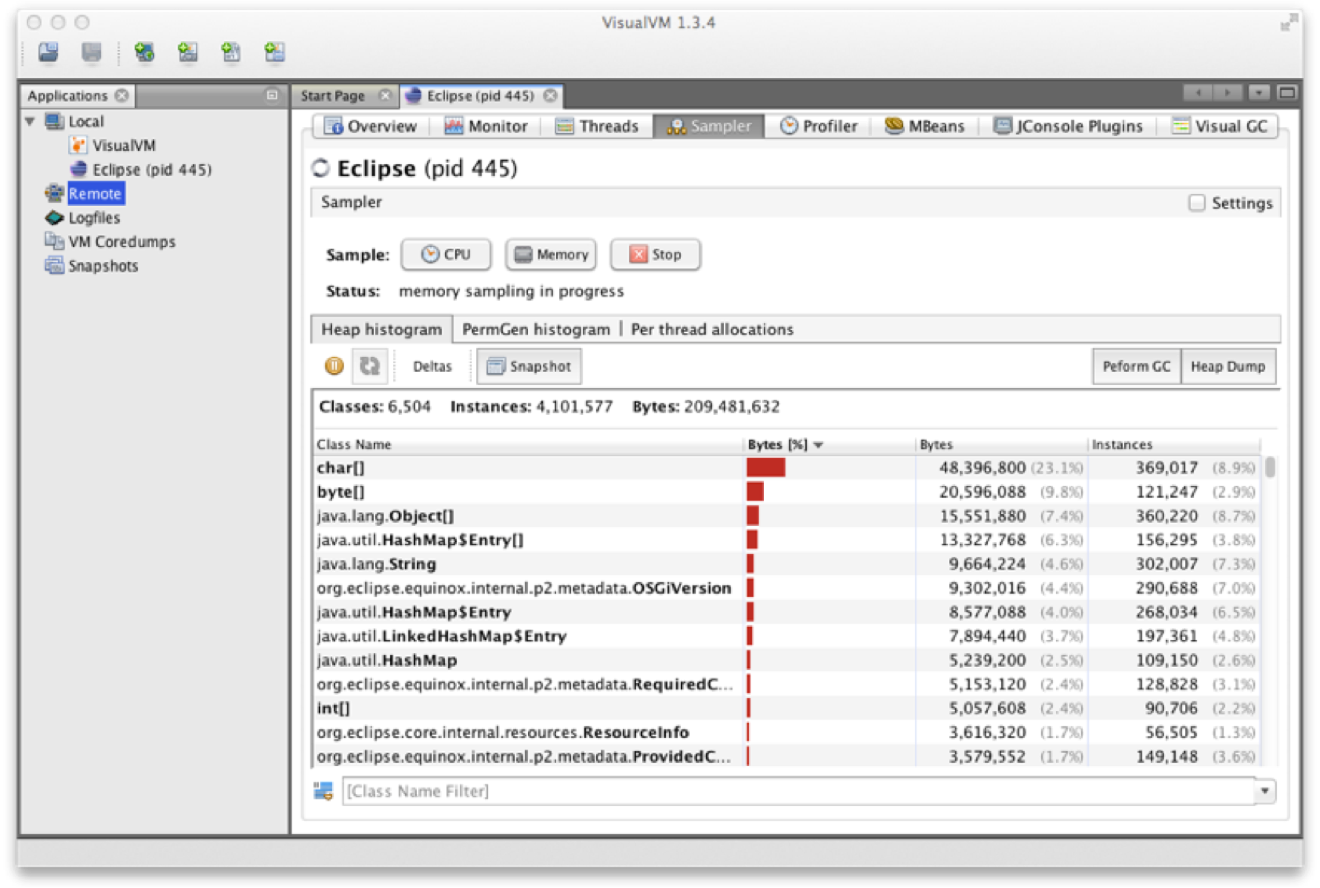

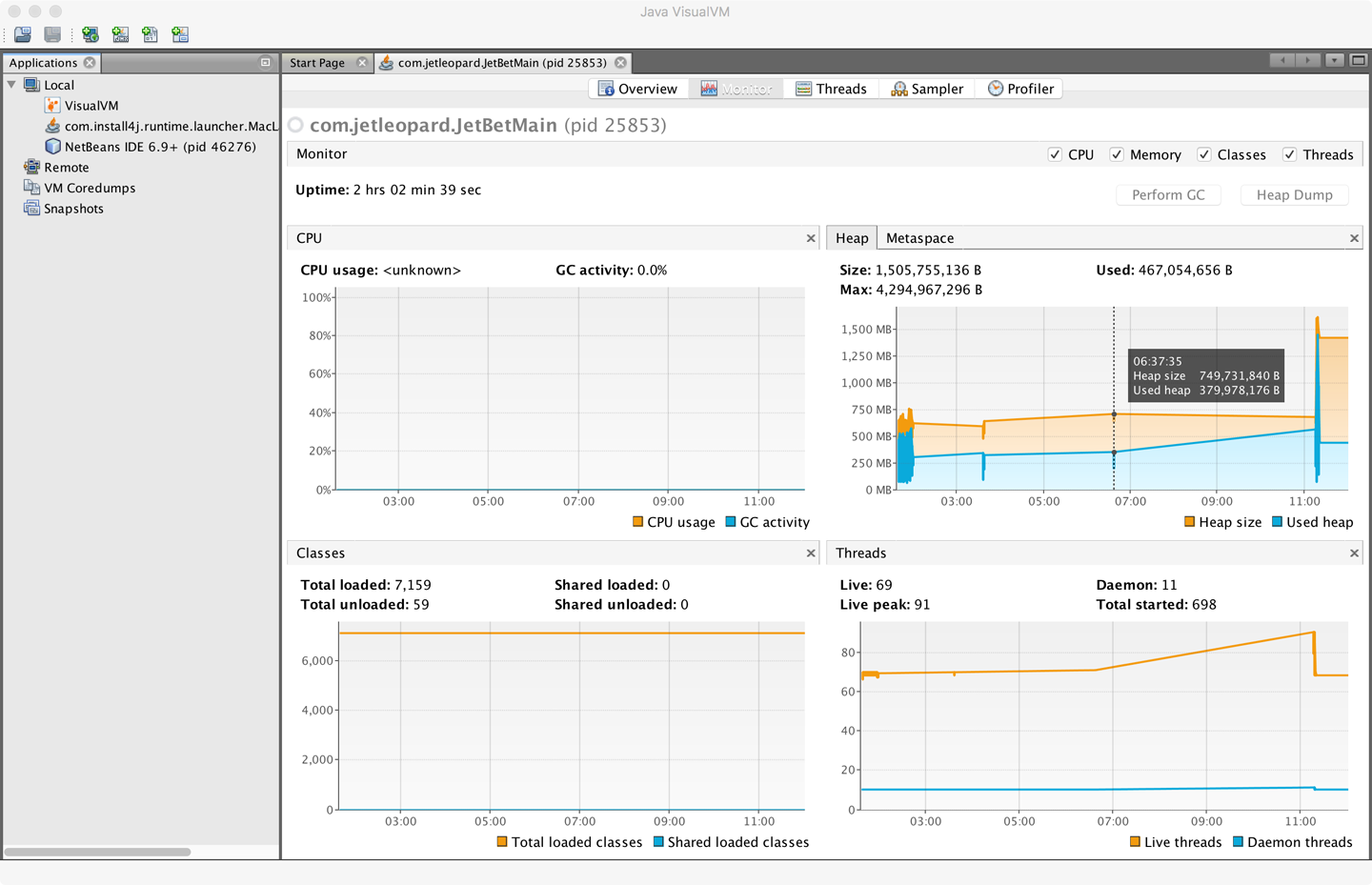

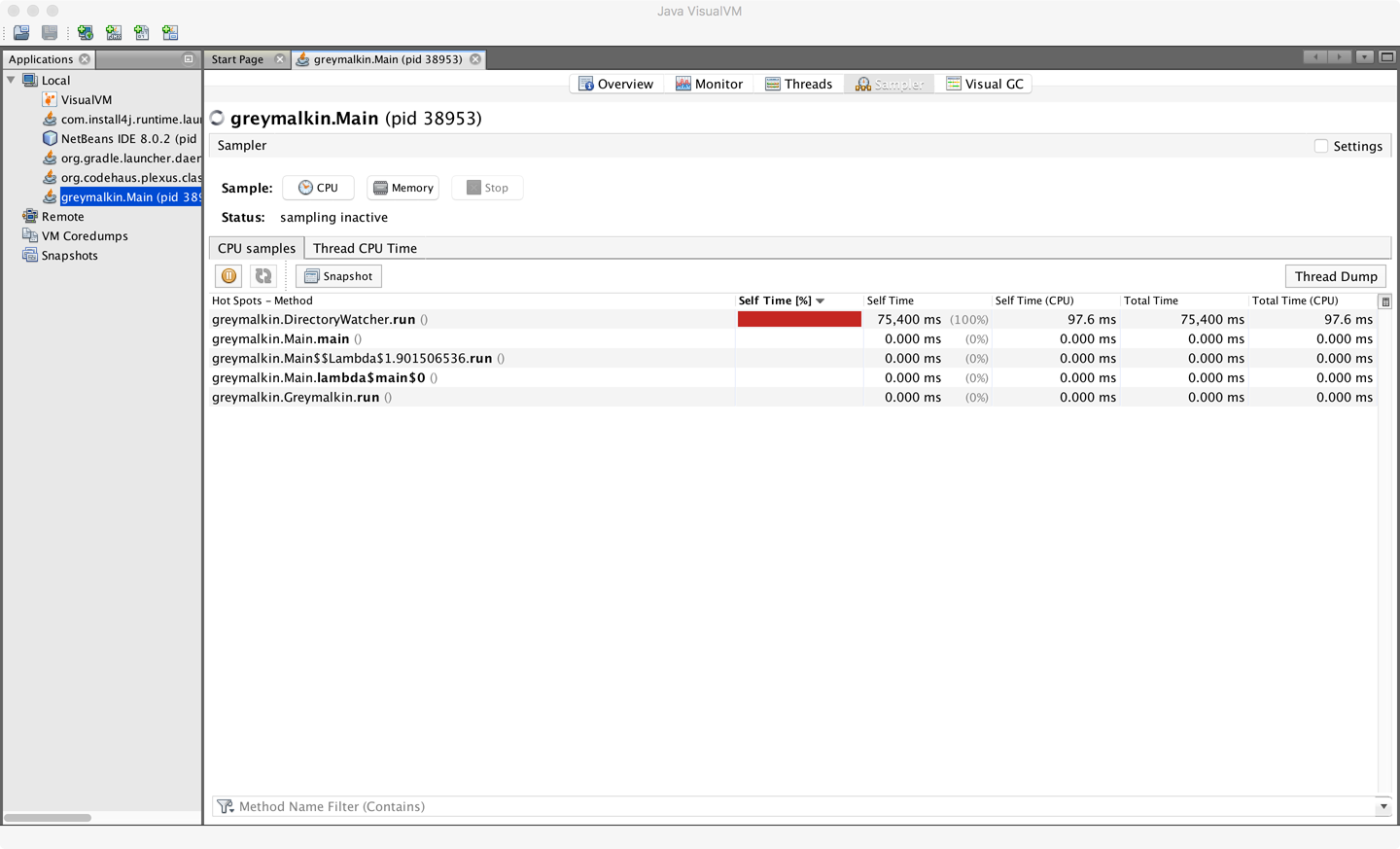

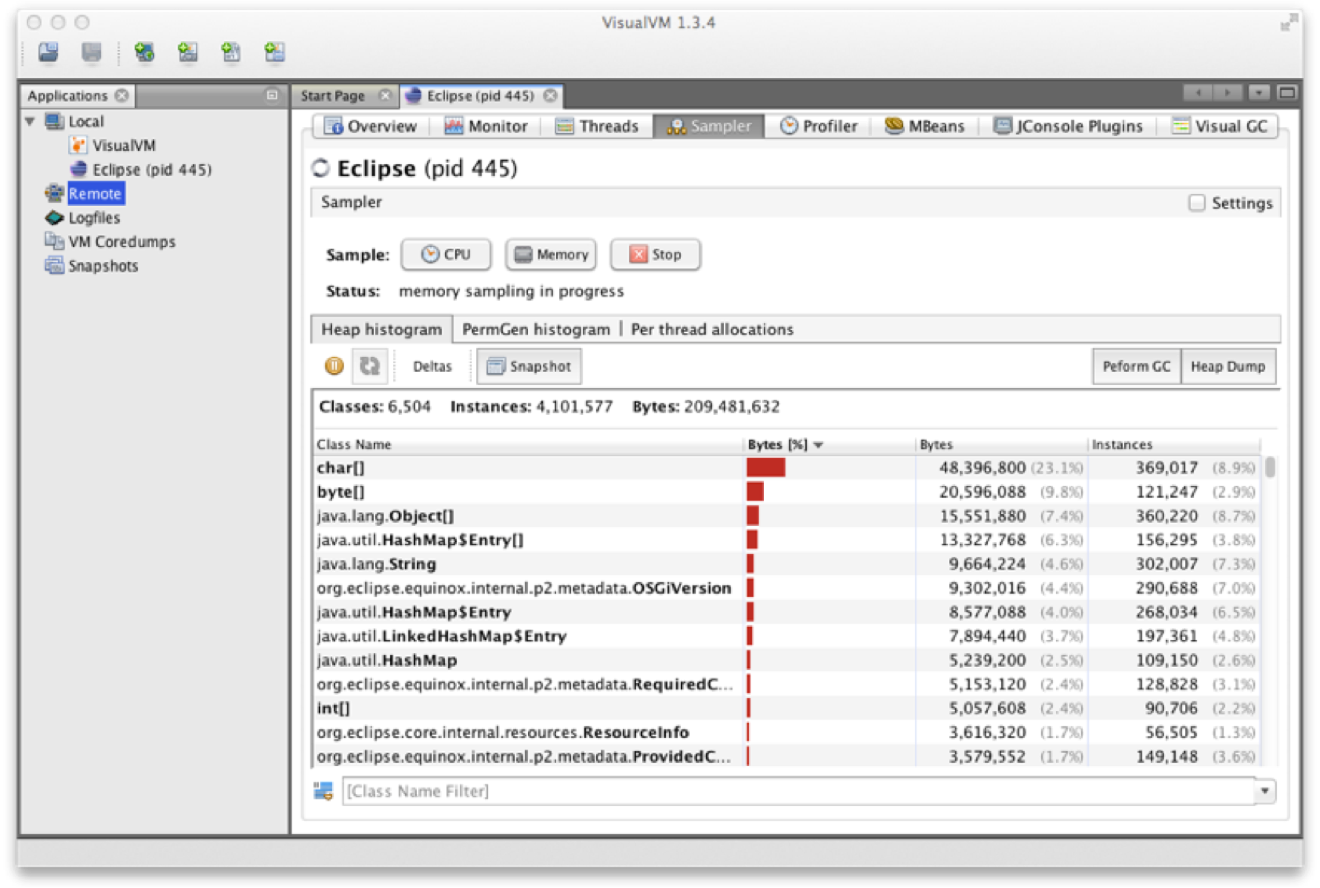

One tool that is often overlooked is VisualVM, which is a graphical tool based on the NetBeans platform.

jvisualvm is a replacement for the now obsolete jconsole tool from earlier Java versions.

If you are still using jconsole, you should move to VisualVM (there is a compatibility plug-in to allow jconsole plug-ins to run inside VisualVM).

Recent versions of Java have shipped solid versions of VisualVM, and the version present in the JDK is now usually sufficient.

However, if you need to use a more recent version, you can download the latest version from http://visualvm.java.net/.

After downloading, you will have to ensure that the visualvm binary is added to your path or you’ll get the JRE default binary.

From Java 9 onward, VisualVM is being removed from the main distribution, so developers will have to download the binary separately.

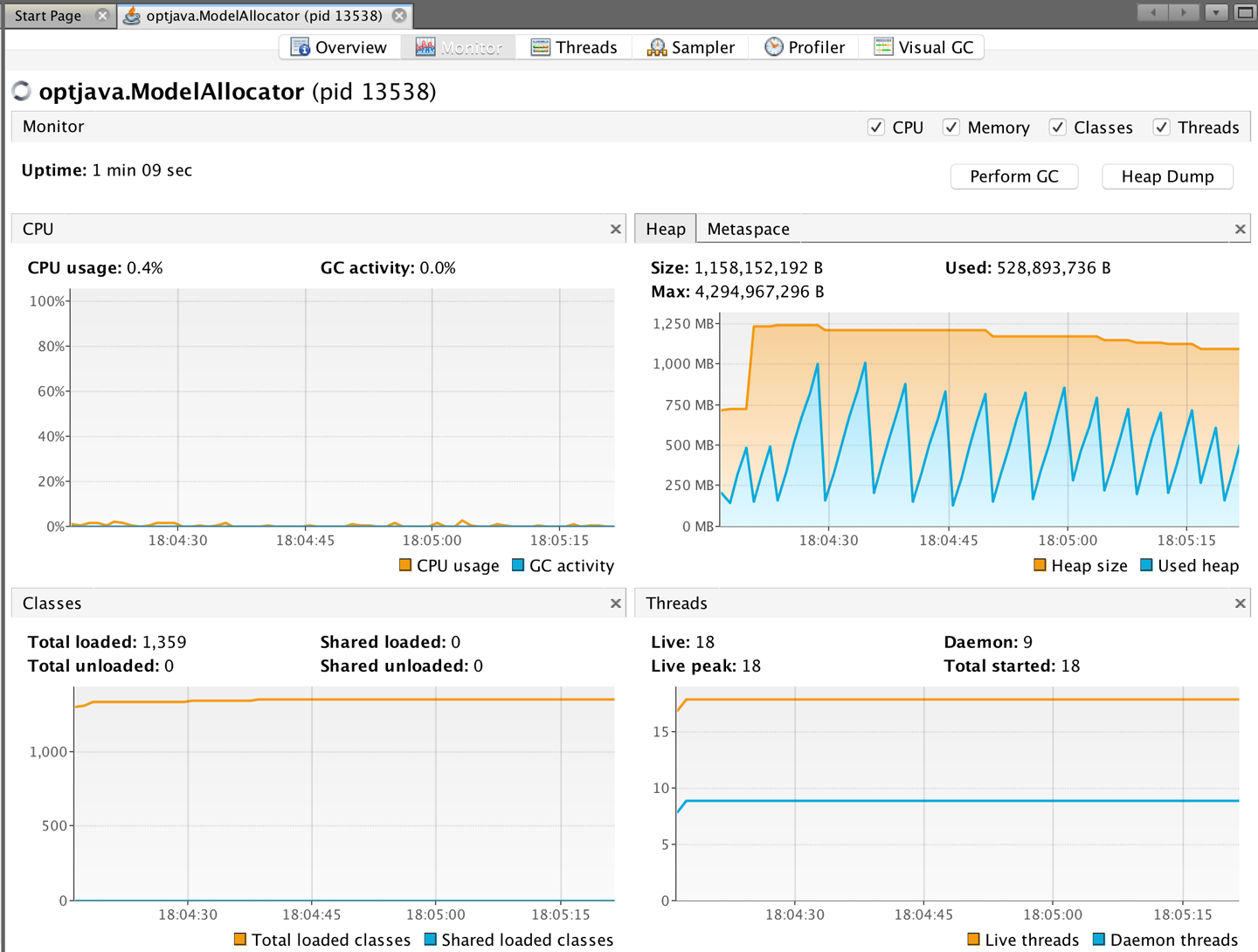

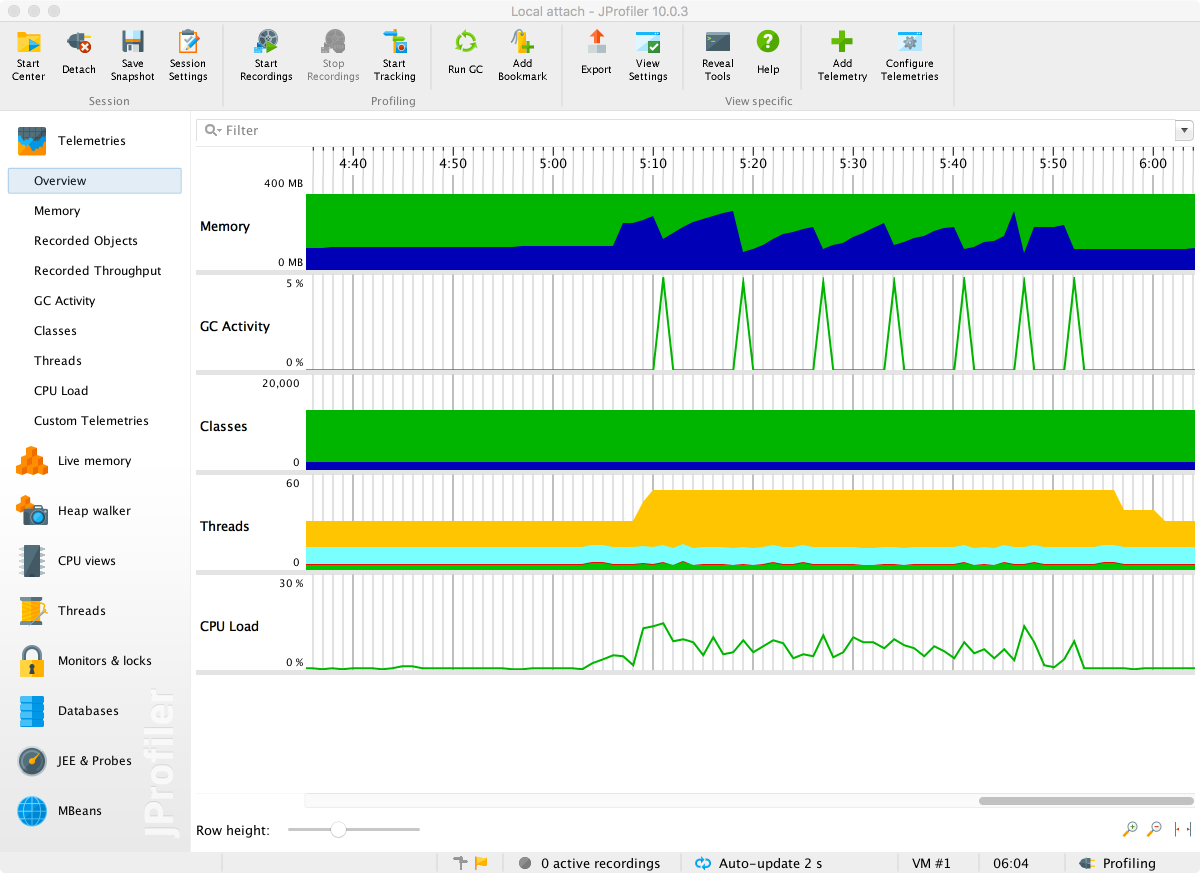

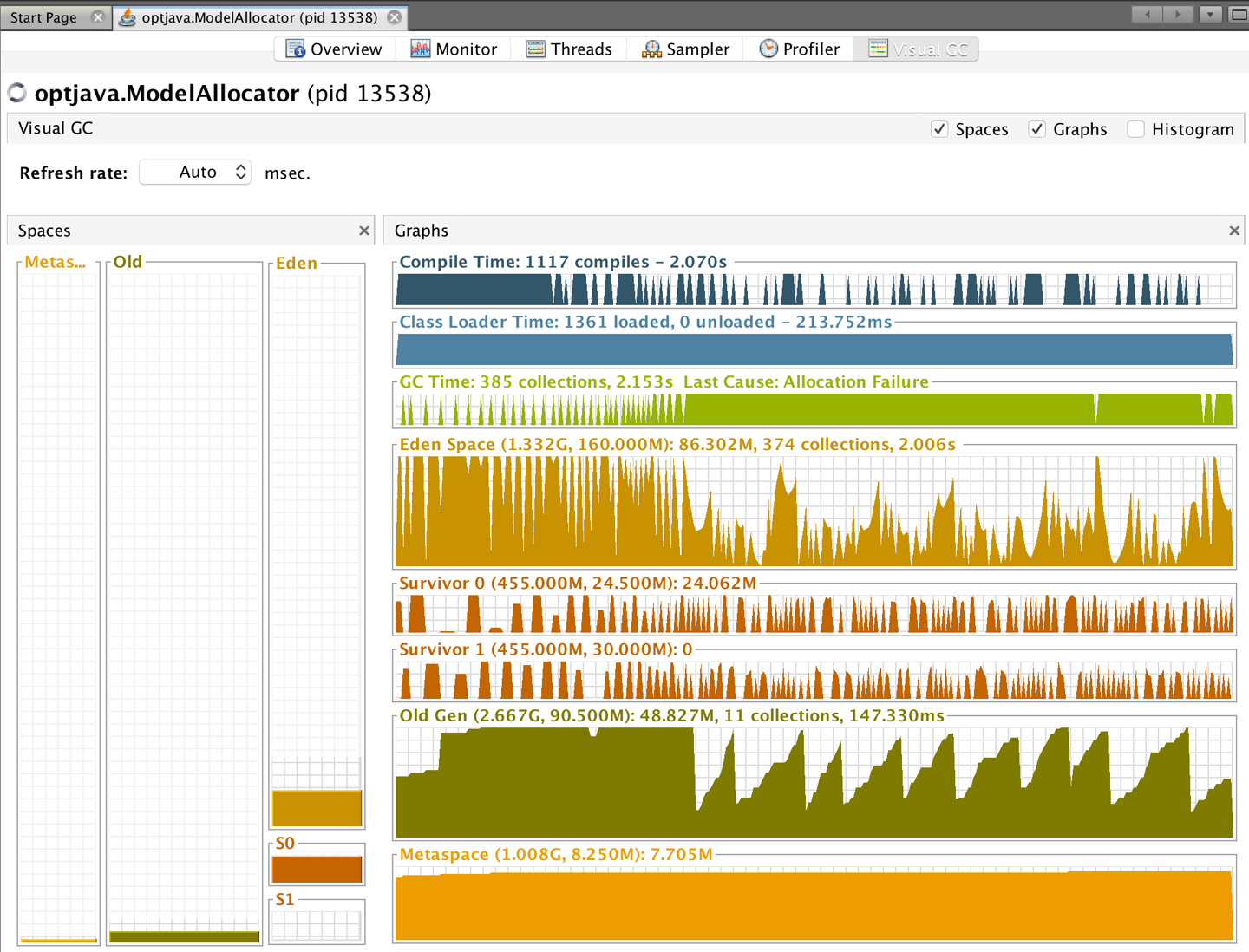

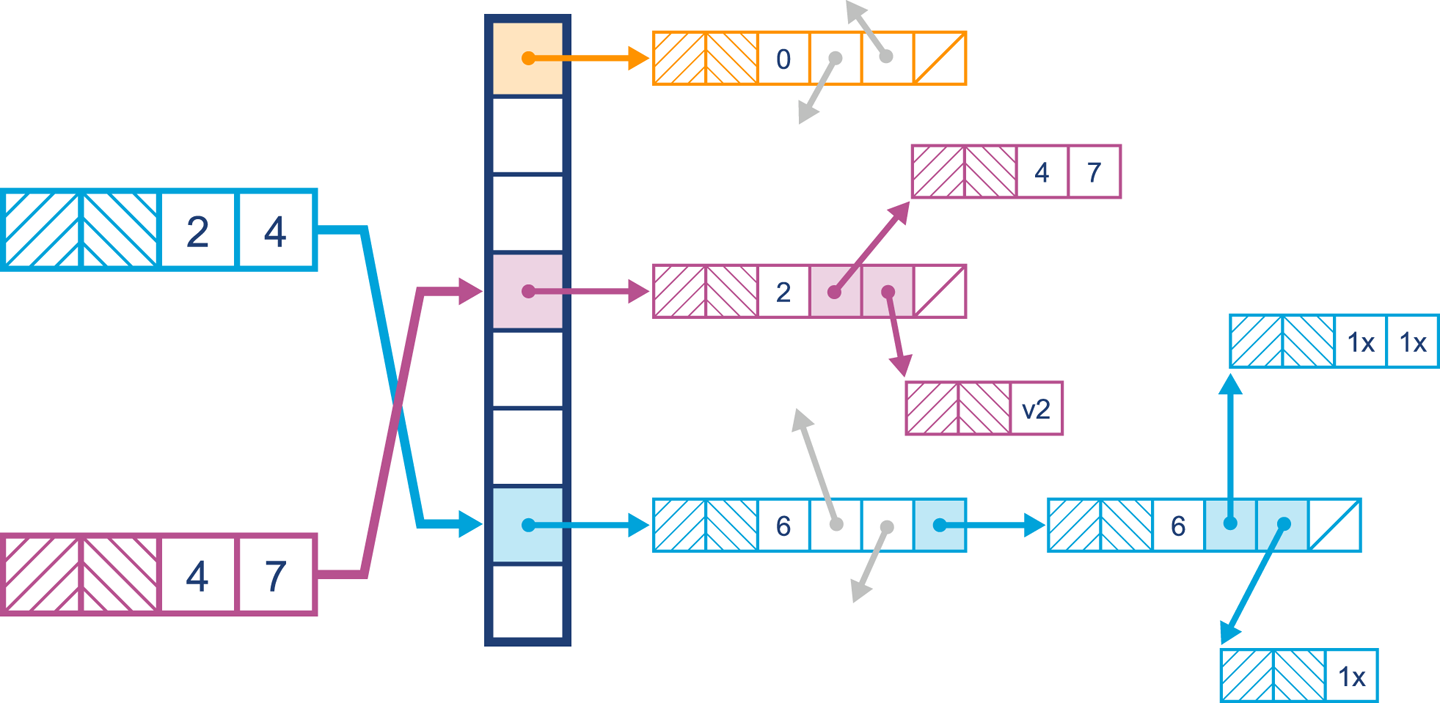

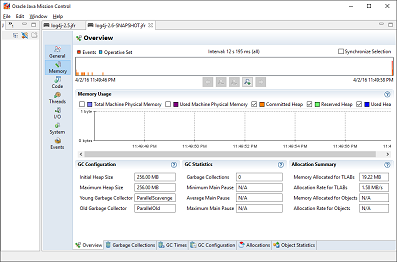

When VisualVM is started for the first time it will calibrate the machine it is running on, so there should be no other applications running that might affect the performance calibration. After calibration, VisualVM will finish starting up and show a splash screen. The most familiar view of VisualVM is the Monitor screen, which is similar to that shown in Figure 2-4.

VisualVM is used for live monitoring of a running process, and it uses the JVM’s attach mechanism. This works slightly differently depending on whether the process is local or remote.

Local processes are fairly straightforward. VisualVM lists them down the lefthand side of the screen. Double-clicking on one of them causes it to appear as a new tab in the righthand pane.

To connect to a remote process, the remote side must accept inbound connections (over JMX). For standard Java processes, this means jstatd must be running on the remote host (see the manual page for jstatd for more details).

Many application servers and execution containers provide an equivalent capability to jstatd directly in the server. Such processes do not need a separate jstatd process.

To connect to a remote process, enter the hostname and a display name that will be used on the tab. The default port to connect to is 1099, but this can be changed easily.

Out of the box, VisualVM presents the user with five tabs:

Overview: Provides a summary of information about your Java process. This includes the full flags that were passed in and all system properties. It also displays the exact Java version executing.

Monitor: This is the tab that is the most similar to the legacy JConsole view. It shows high-level telemetry for the JVM, including CPU and heap usage. It also shows the number of classes loaded and unloaded, and an overview of the numbers of threads running.

Threads: Each thread in the running application is displayed with a timeline. This includes both application threads and VM threads. The state of each thread can be seen, with a small amount of history. Thread dumps can also be generated if needed.

Sampler and Profiler: In these views, simplified sampling of CPU and memory utilization can be accessed. This will be discussed more fully in Chapter 13.

The plug-in architecture of VisualVM allows additional tools to be easily added to the core platform to augment the core functionality. These include plug-ins that allow interaction with JMX consoles and bridging to legacy JConsole, and a very useful garbage collection plug-in, VisualGC.

In this chapter we have taken a quick tour through the overall anatomy of the JVM. It has only been possible to touch on some of the most important subjects, and virtually every topic mentioned here has a rich, full story behind it that will reward further investigation.

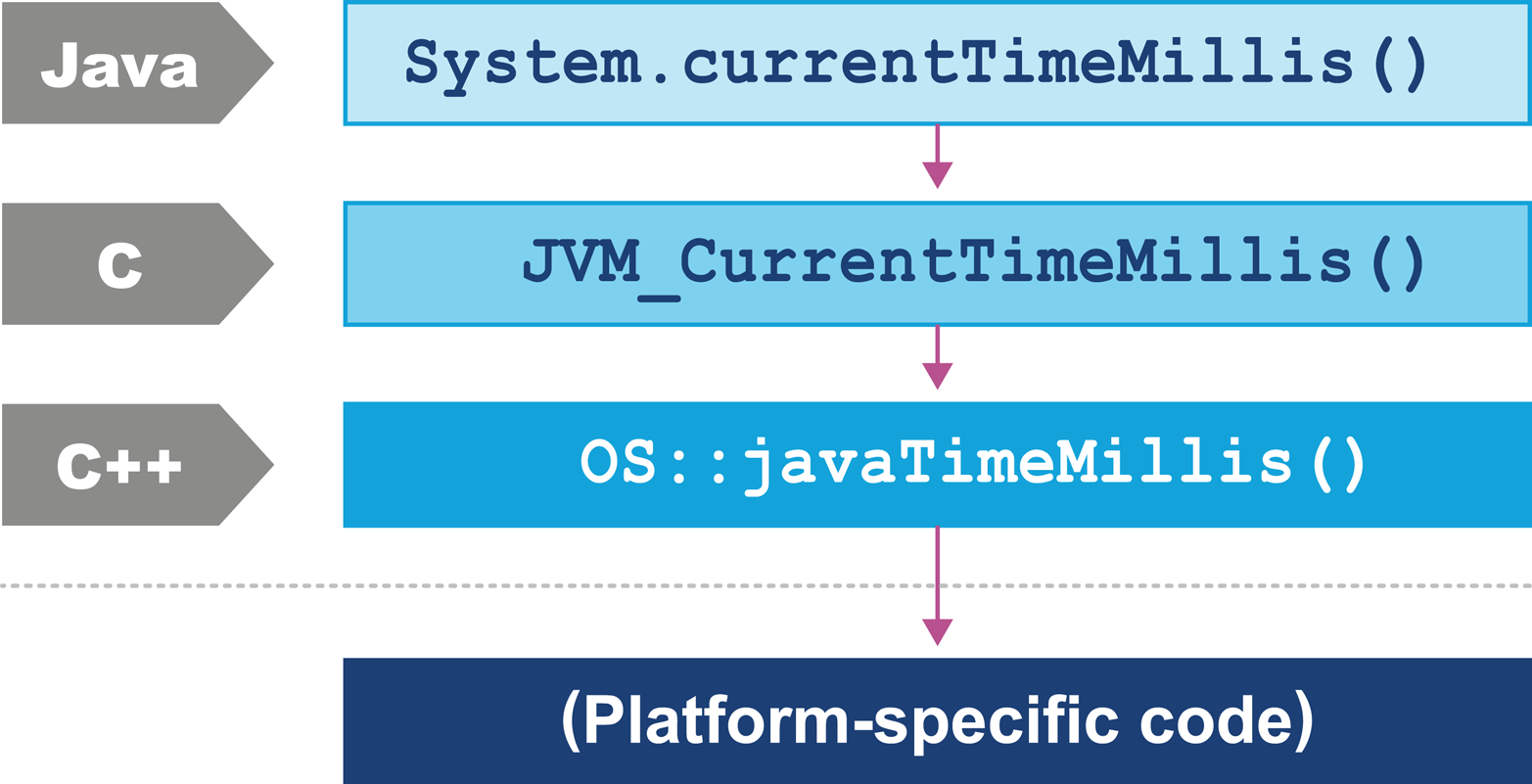

In Chapter 3 we will discuss some details of how operating systems and hardware work. This is to provide necessary background for the Java performance analyst to understand observed results. We will also look at the timing subsystem in more detail, as a complete example of how the VM and native subsystems interact.

Why should Java developers care about hardware?

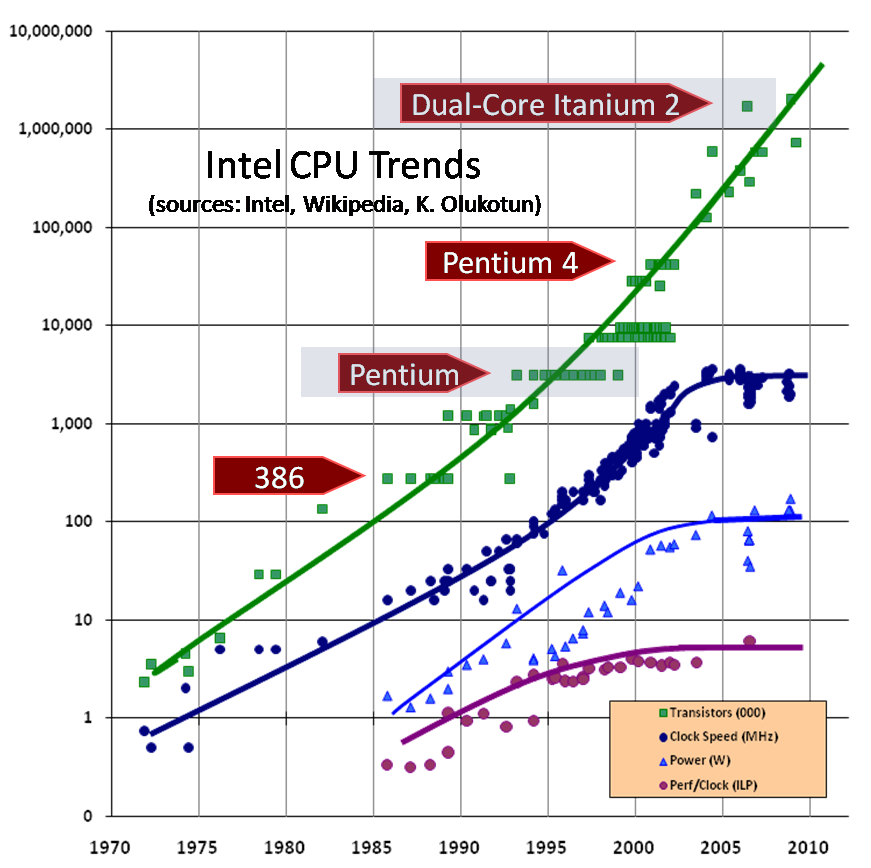

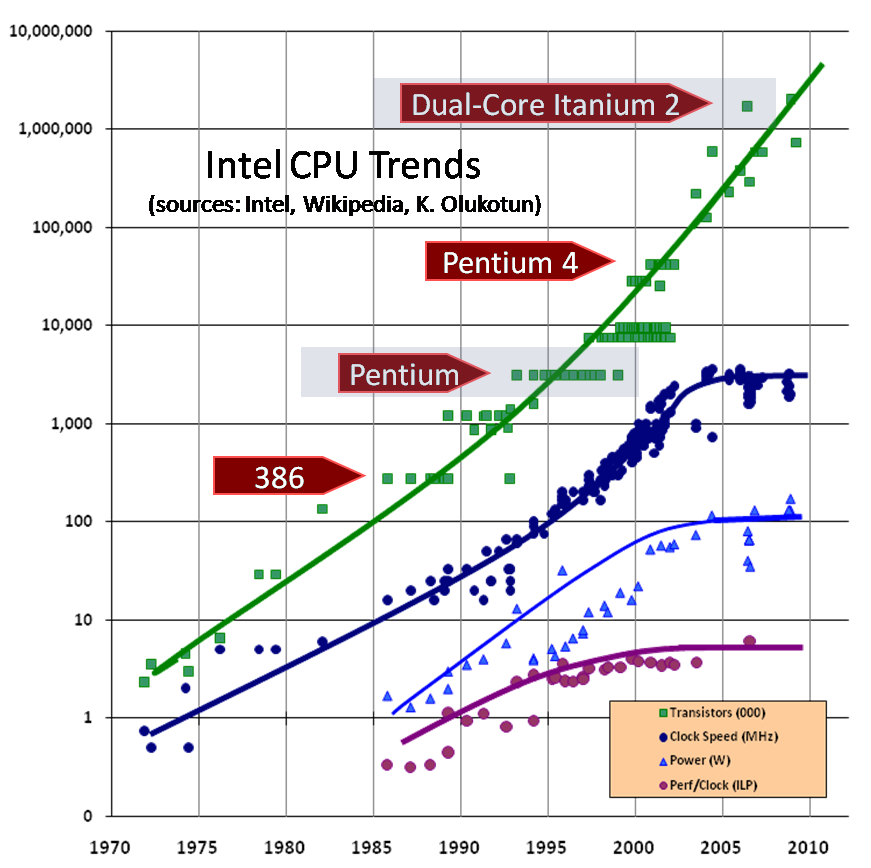

For many years the computer industry has been driven by Moore’s Law, a hypothesis made by Intel cofounder Gordon Moore about long-term trends in processor capability. The law (really an observation or extrapolation) can be framed in a variety of ways, but one of the most common is:

The number of transistors on a mass-produced chip roughly doubles every 18 months.

This phenomenon represents an exponential increase in computer power over time. It was originally cited in 1965, so it represents an incredible long-term trend, almost unparalleled in the history of human development. The effects of Moore’s Law have been transformative in many (if not most) areas of the modern world.

The death of Moore’s Law has been repeatedly proclaimed for decades now. However, there are very good reasons to suppose that, for all practical purposes, this incredible progress in chip technology has (finally) come to an end.

Hardware has become increasingly complex in order to make good use of the “transistor budget” available in modern computers. The software platforms that run on that hardware have also increased in complexity to exploit the new capabilities, so while software has far more power at its disposal it has come to rely on complex underpinnings to access that performance increase.

The net result of this huge increase in the performance available to the ordinary application developer has been the blossoming of complex software. Software applications now pervade every aspect of global society.

Or, to put it another way:

Software is eating the world.

Marc Andreessen

As we will see, Java has been a beneficiary of the increasing amount of computer power. The design of the language and runtime has been well suited (or lucky) to make use of this trend in processor capability. However, the truly performance-conscious Java programmer needs to understand the principles and technology that underpin the platform in order to make best use of the available resources.

In later chapters, we will explore the software architecture of modern JVMs and techniques for optimizing Java applications at the platform and code levels. But before turning to those subjects, let’s take a quick look at modern hardware and operating systems, as an understanding of those subjects will help with everything that follows.

Many university courses on hardware architectures still teach a simple-to-understand, classical view of hardware. This “motherhood and apple pie” view of hardware focuses on a simple view of a register-based machine, with arithmetic, logic, and load and store operations. As a result, it overemphasizes C programming as the source of truth as compared to what a CPU actually does. This is, simply, a factually incorrect worldview in modern times.

Since the 1990s the world of the application developer has, to a large extent, revolved around the Intel x86/x64 architecture. This is an area of technology that has undergone radical change, and many advanced features now form important parts of the landscape. The simple mental model of a processor’s operation is now completely incorrect, and intuitive reasoning based on it is liable to lead to utterly wrong conclusions.

To help address this, in this chapter, we will discuss several of these advances in CPU technology. We will start with the behavior of memory, as this is by far the most important to a modern Java developer.

As Moore’s Law advanced, the exponentially increasing number of transistors was initially used for faster and faster clock speed. The reasons for this are obvious: faster clock speed means more instructions completed per second. Accordingly, the speed of processors has advanced hugely, and the 2+ GHz processors that we have today are hundreds of times faster than the original 4.77 MHz chips found in the first IBM PC.

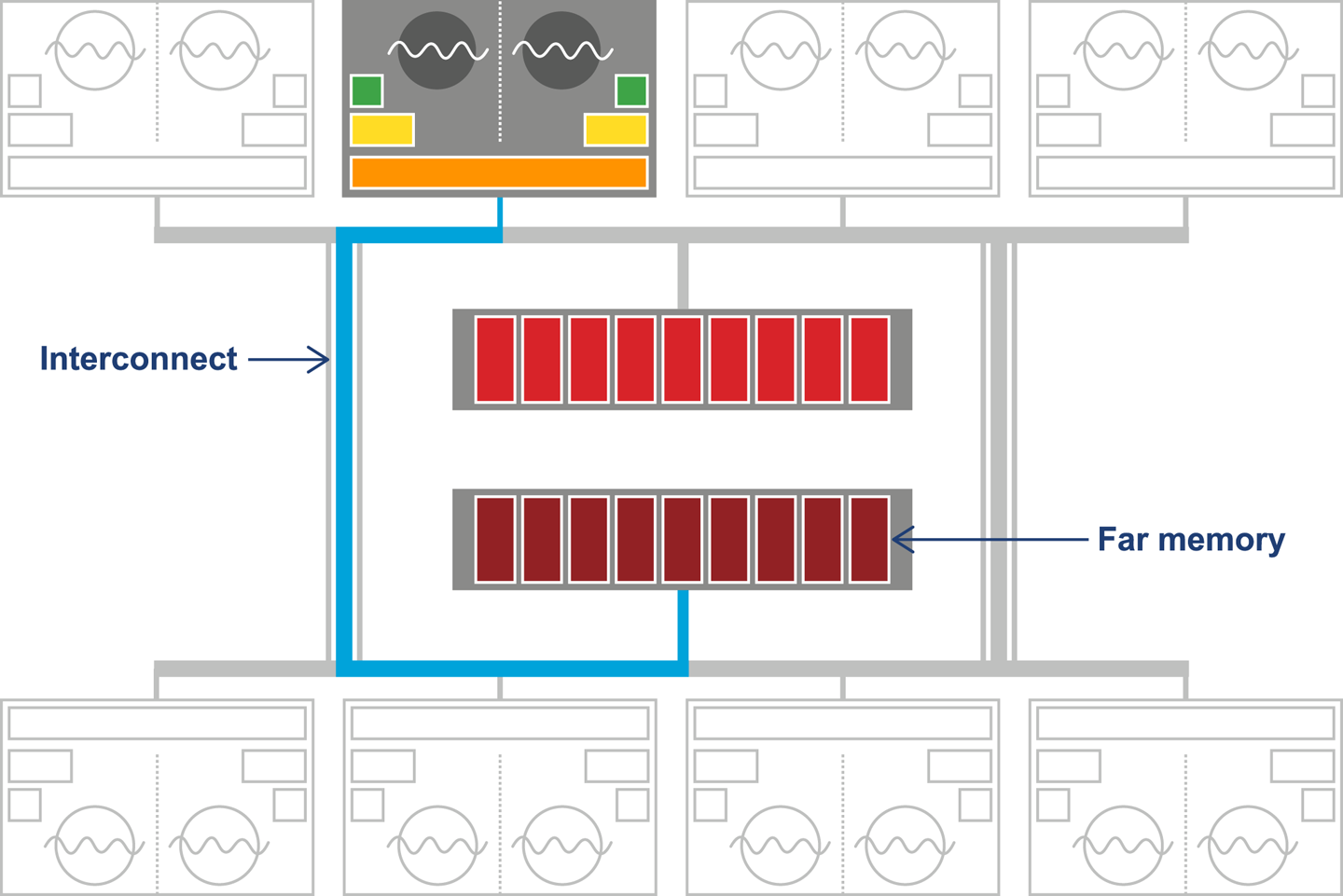

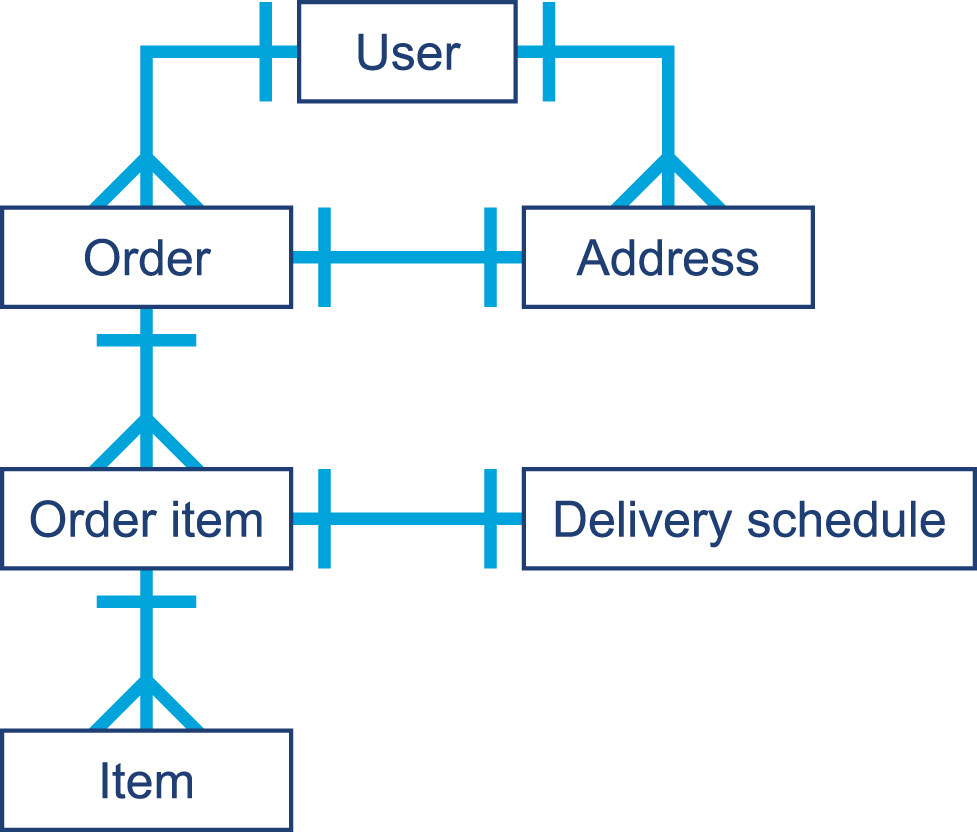

However, the increasing clock speeds uncovered another problem. Faster chips require a faster stream of data to act upon. As Figure 3-1 shows, over time main memory could not keep up with the demands of the processor core for fresh data.

This results in a problem: if the CPU is waiting for data, then faster cycles don’t help, as the CPU will just have to idle until the required data arrives.

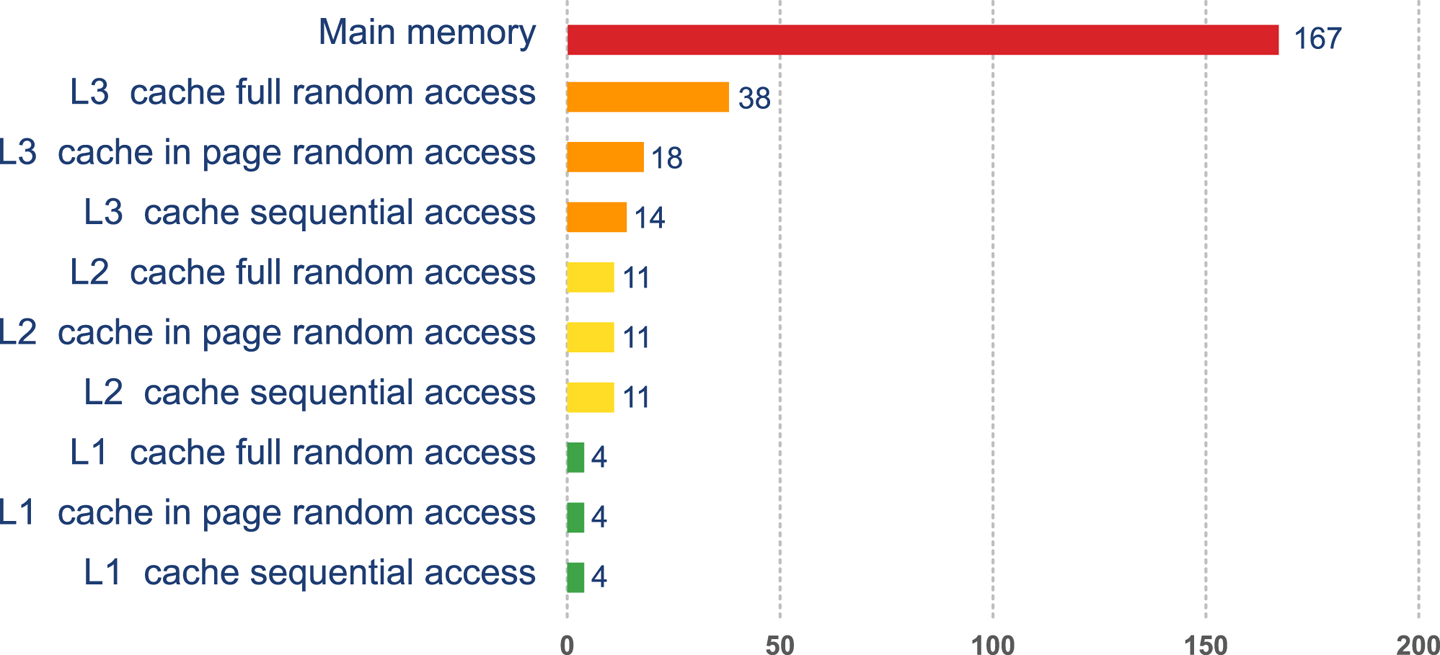

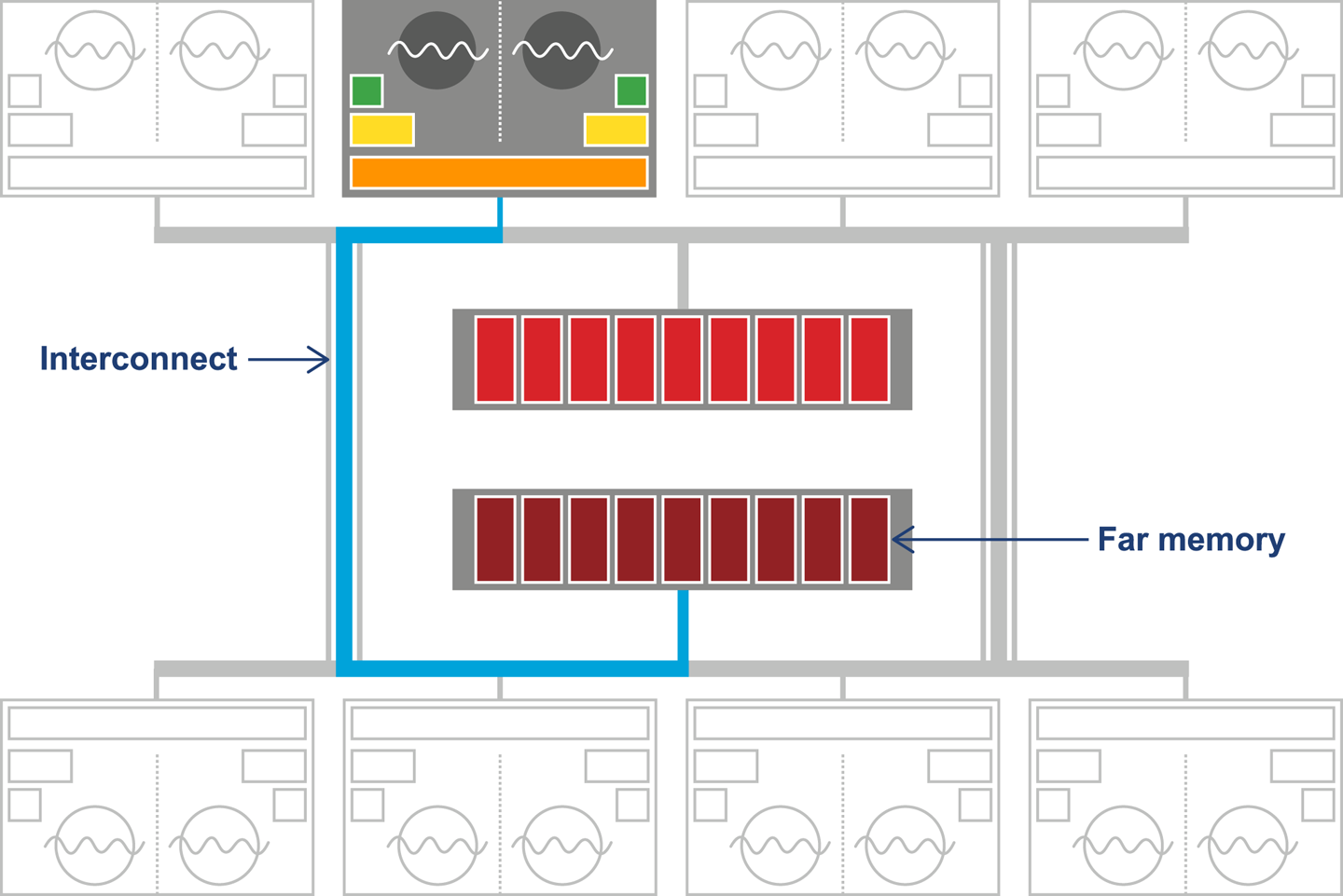

To solve this problem, CPU caches were introduced. These are memory areas on the CPU that are slower than CPU registers, but faster than main memory. The idea is for the CPU to fill the cache with copies of often-accessed memory locations rather than constantly having to re-address main memory.

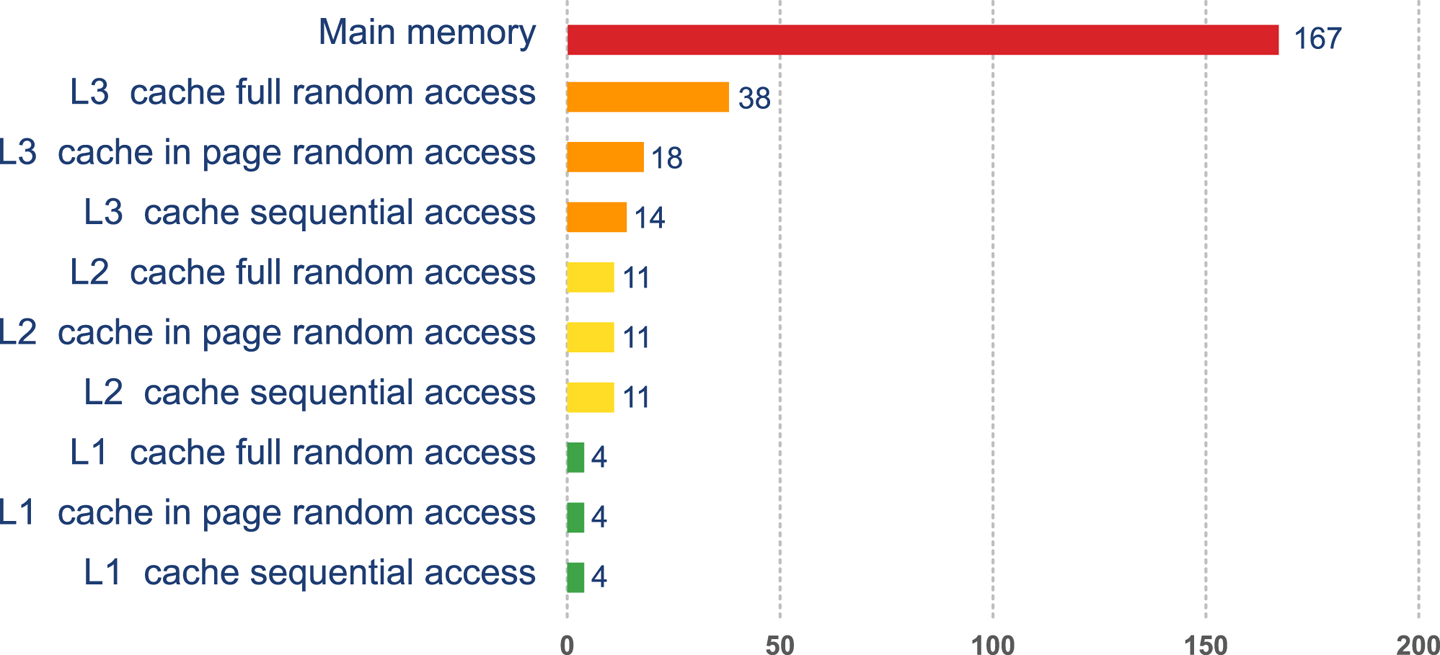

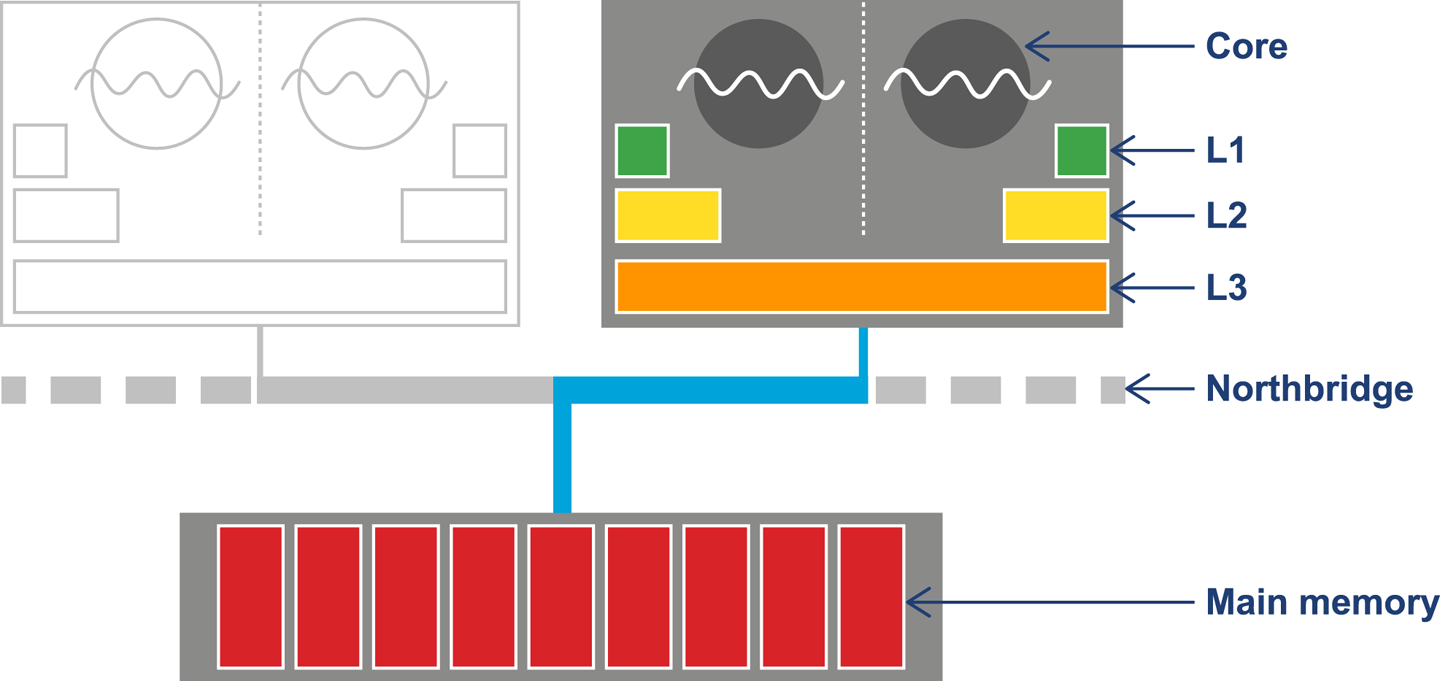

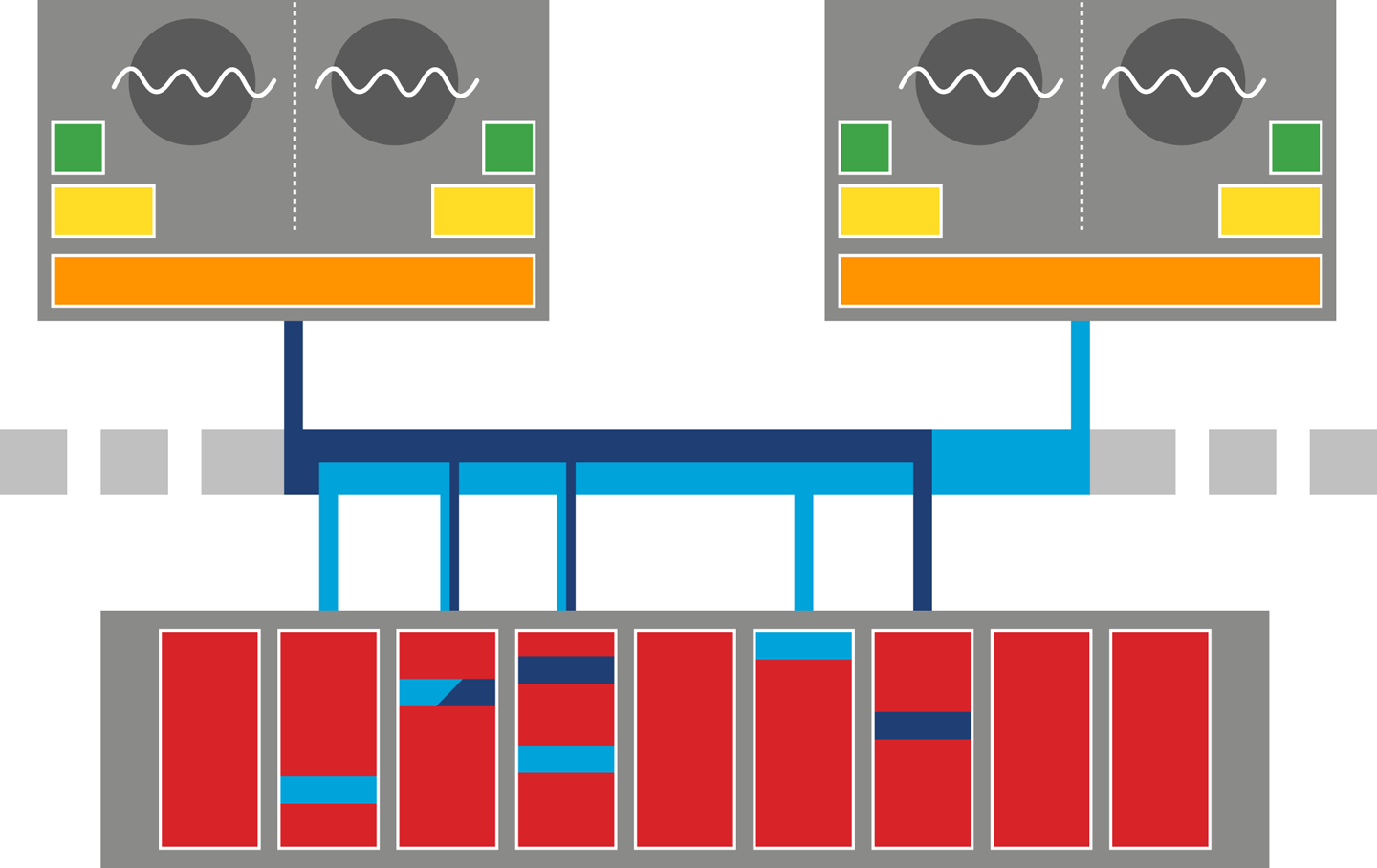

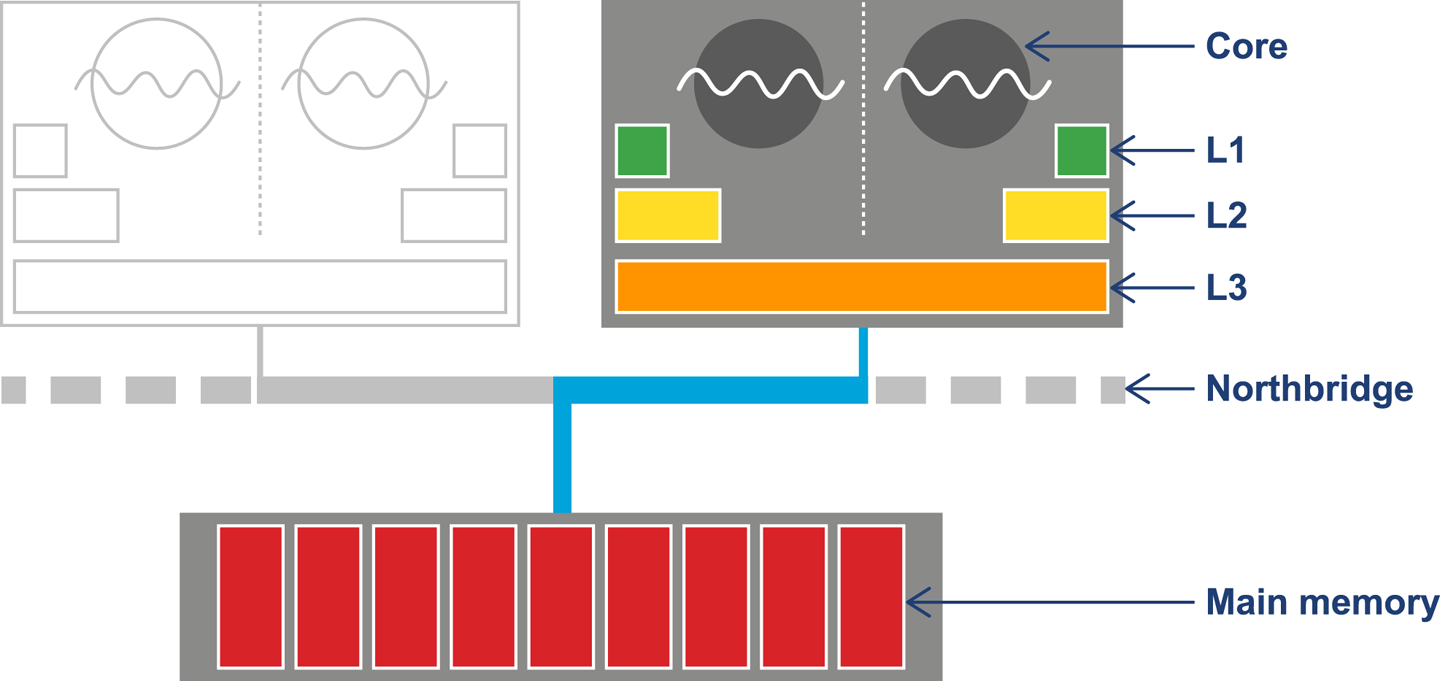

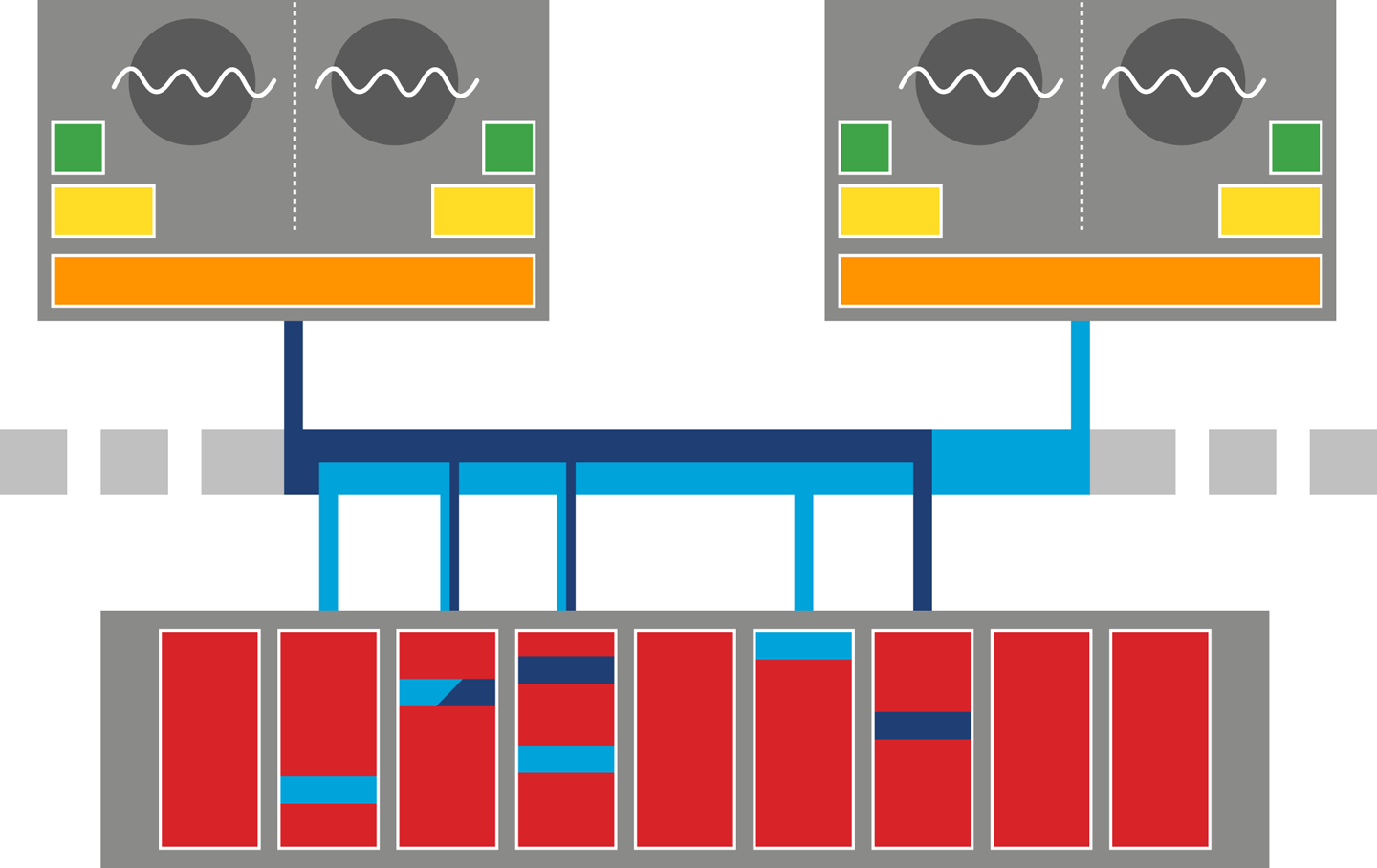

Modern CPUs have several layers of cache, with the most-often-accessed caches being located close to the processing core. The cache closest to the CPU is usually called L1 (for “level 1 cache”), with the next being referred to as L2, and so on. Different processor architectures have a varying number and configuration of caches, but a common choice is for each execution core to have a dedicated, private L1 and L2 cache, and an L3 cache that is shared across some or all of the cores. The effect of these caches in speeding up access times is shown in Figure 3-2.

This approach to cache architecture improves access times and helps keep the core fully stocked with data to operate on. Due to the clock speed versus access time gap, more transistor budget is devoted to caches on a modern CPU.

The resulting design can be seen in Figure 3-3. This shows the L1 and L2 caches (private to each CPU core) and a shared L3 cache that is common to all cores on the CPU. Main memory is accessed over the Northbridge component, and it is traversing this bus that causes the large drop-off in access time to main memory.

Although the addition of a caching architecture hugely improves processor throughput, it introduces a new set of problems. These problems include determining how memory is fetched into and written back from the cache. The solutions to these problems are usually referred to as cache consistency protocols.

There are other problems that crop up when this type of caching is applied in a parallel processing environment, as we will see later in this book.

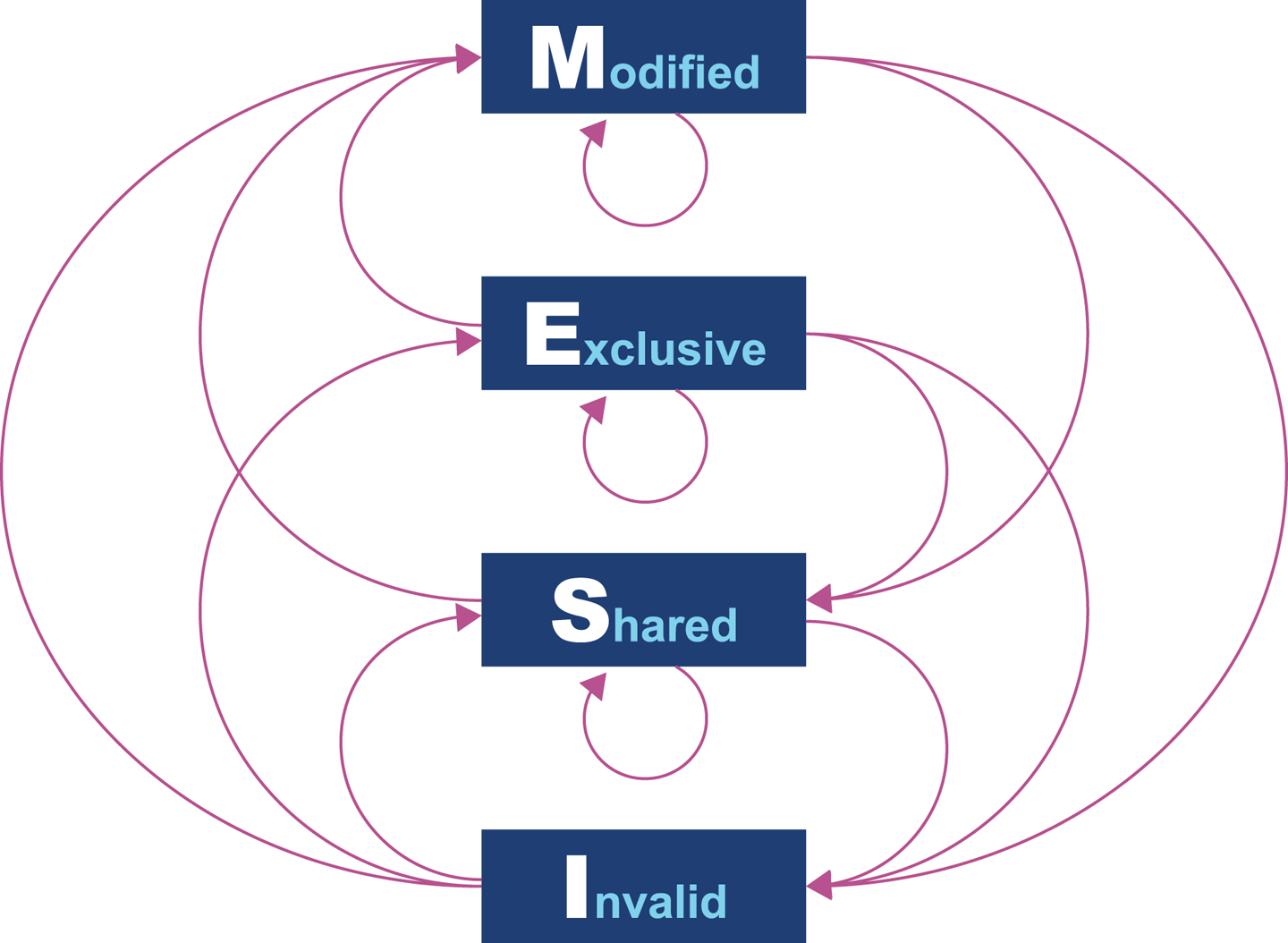

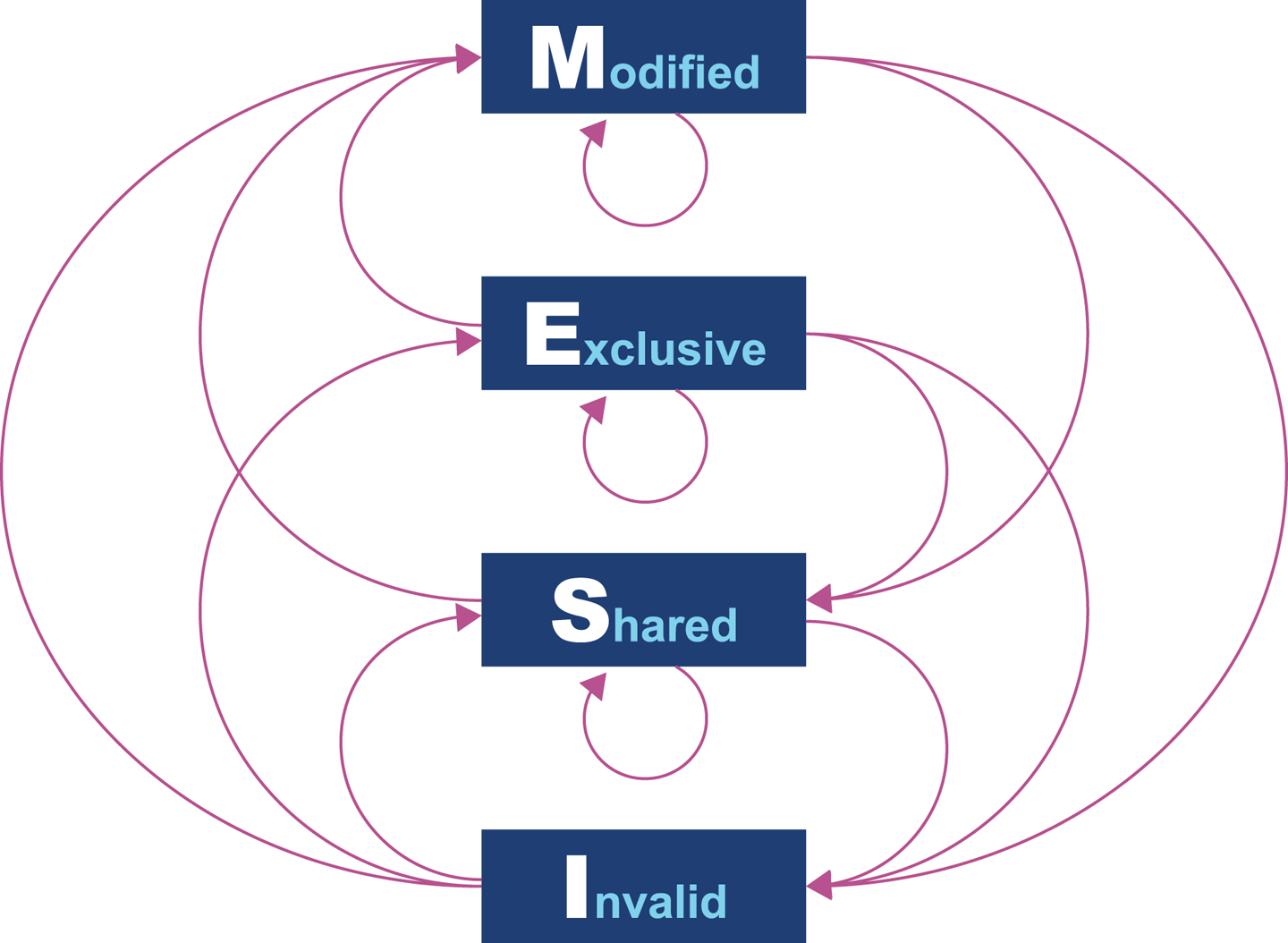

At the lowest level, a protocol called MESI (and its variants) is commonly found on a wide range of processors. It defines four states for any line in a cache. Each line (usually 64 bytes) is either:

Modified (but not yet flushed to main memory)

Exclusive (present only in this cache, but does match main memory)

Shared (may also be present in other caches; matches main memory)

Invalid (may not be used; will be dropped as soon as practical)

The idea of the protocol is that multiple processors can simultaneously be in the Shared state. However, if a processor transitions to any of the other valid states (Exclusive or Modified), then this will force all the other processors into the Invalid state. This is shown in Table 3-1.

| | M | E | S | I |
|---|---|---|---|---|
| M | - | - | - | Y |
| E | - | - | - | Y |
| S | - | - | Y | Y |
| I | Y | Y | Y | Y |
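The allowable state pairs in Table 3-1 can also be written down as a small predicate. The sketch below is only an illustration of the table, not of any real hardware interface; the class `MesiStates` and the method `mayCoexistWith` are hypothetical names. The rule it encodes is the one described above: Invalid is compatible with anything, and otherwise only Shared may coexist with Shared.

```java
// Hypothetical model of the MESI compatibility table for one cache line
// held in two different processors' caches.
public class MesiStates {

    public enum State {
        MODIFIED, EXCLUSIVE, SHARED, INVALID;

        /** True if one cache may hold the line in this state while another holds it in 'other'. */
        public boolean mayCoexistWith(State other) {
            if (this == INVALID || other == INVALID) {
                return true; // an invalid copy never conflicts
            }
            return this == SHARED && other == SHARED; // M and E demand exclusivity
        }
    }

    public static void main(String[] args) {
        // Print one row per state, using the same Y / - notation as the table.
        for (State a : State.values()) {
            StringBuilder row = new StringBuilder(a.name().substring(0, 1));
            for (State b : State.values()) {
                row.append(' ').append(a.mayCoexistWith(b) ? 'Y' : '-');
            }
            System.out.println(row);
        }
    }
}
```

Running `main` prints the four rows in the order M, E, S, I, which makes it easy to compare the predicate against the table by eye.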

The protocol works by broadcasting the intention of a processor to change state. An electrical signal is sent across the shared memory bus, and the other processors are made aware. The full logic for the state transitions is shown in Figure 3-4.

Originally, processors wrote every cache operation directly into main memory. This was called write-through behavior, but it was and is very inefficient, and required a large amount of bandwidth to memory. More recent processors also implement write-back behavior, where traffic back to main memory is significantly reduced by processors writing only modified (dirty) cache blocks to memory when the cache blocks are replaced.
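To make the difference in memory traffic concrete, here is a toy model that counts writes to main memory under each policy. It is a deliberately simplified sketch: the cache is assumed to be direct-mapped, only stores are modeled, addresses are given as non-negative cache-line numbers, and the class and method names (`WritePolicyDemo` and so on) are hypothetical. Real processors are considerably more sophisticated.

```java
import java.util.Arrays;

// Toy model: counts main-memory writes for a sequence of stores to cache lines.
public class WritePolicyDemo {

    /** Write-through: every store is forwarded to main memory immediately. */
    public static long writeThroughMemoryWrites(int[] storedLines) {
        return storedLines.length;
    }

    /**
     * Write-back: a store only marks the cached line dirty. Memory is written
     * when a dirty line is replaced, plus a final flush of the lines still dirty.
     */
    public static long writeBackMemoryWrites(int[] storedLines, int cacheSlots) {
        int[] cachedLine = new int[cacheSlots];
        boolean[] dirty = new boolean[cacheSlots];
        Arrays.fill(cachedLine, -1);             // -1 marks an empty slot
        long memoryWrites = 0;
        for (int line : storedLines) {
            int slot = line % cacheSlots;        // direct-mapped placement (assumption)
            if (cachedLine[slot] != line) {      // miss: the slot's current line is replaced
                if (dirty[slot]) {
                    memoryWrites++;              // write the old dirty line back first
                }
                cachedLine[slot] = line;
            }
            dirty[slot] = true;                  // the store dirties the cached line
        }
        for (boolean d : dirty) {
            if (d) {
                memoryWrites++;                  // final flush
            }
        }
        return memoryWrites;
    }

    public static void main(String[] args) {
        int[] stores = {0, 0, 0, 1, 1, 0, 4, 0}; // lines 0 and 4 collide in a 4-slot cache
        System.out.println("write-through: " + writeThroughMemoryWrites(stores));
        System.out.println("write-back:    " + writeBackMemoryWrites(stores, 4));
    }
}
```

Repeated stores to the same line cost nothing extra under write-back until that line is replaced, which is where the reduction in traffic comes from.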

The overall effect of caching technology is to greatly increase the speed at which data can be written to, or read from, memory. This is expressed in terms of the bandwidth to memory. The burst rate, or theoretical maximum, is based on several factors:

Clock frequency of memory

Width of the memory bus (usually 64 bits)

Number of interfaces (usually two in modern machines)

The product of these factors is multiplied by two in the case of DDR RAM (DDR stands for “double data rate,” as it communicates on both edges of a clock signal). Applying the formula to 2015 commodity hardware gives a theoretical maximum write speed of 8–12 GB/s. In practice, of course, this could be limited by many other factors in the system. Even so, the figure is a useful yardstick for seeing how close the hardware and software can get to the theoretical limit.
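The burst-rate formula can be checked with a few lines of arithmetic. In the sketch below, the 333 MHz memory clock is an assumed, illustrative figure chosen to land inside the quoted range, not a measurement of any particular machine; the 64-bit bus and two interfaces are the "usual" values listed above. The class and method names are hypothetical.

```java
// Worked example of: clock frequency x bus width x interfaces (x 2 for DDR).
public class BurstRate {

    /** Theoretical peak transfer rate in bytes per second. */
    public static double bytesPerSecond(double memoryClockHz,
                                        int busWidthBits,
                                        int interfaces,
                                        boolean doubleDataRate) {
        double transfersPerSecond = memoryClockHz * (doubleDataRate ? 2 : 1);
        double bytesPerTransfer = busWidthBits / 8.0;
        return transfersPerSecond * bytesPerTransfer * interfaces;
    }

    public static void main(String[] args) {
        double assumedClockHz = 333e6; // illustrative assumption, see lead-in
        double rate = bytesPerSecond(assumedClockHz, 64, 2, true);
        System.out.printf("Theoretical burst rate: %.2f GB/s%n", rate / 1e9);
    }
}
```

With these inputs the calculation is 333 MHz × 2 × 8 bytes × 2 interfaces, or roughly 10.7 GB/s, which sits within the 8–12 GB/s range given above.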

Let’s write some simple code to exercise the cache hardware, as seen in Example 3-1.

    public class Caching {
        private final int ARR_SIZE = 2 * 1024 * 1024;
        private final int[] testData = new int[ARR_SIZE];

        private void run() {
            System.err.println("Start: " + System.currentTimeMillis());
            // Warm up so that both methods are JIT-compiled before timing
            for (int i = 0; i < 15_000; i++) {
                touchEveryLine();
                touchEveryItem();
            }
            System.err.println("Warmup finished: " + System.currentTimeMillis());
            System.err.println("Item Line");
            for (int i = 0; i < 100; i++) {
                long t0 = System.nanoTime();
                touchEveryLine();
                long t1 = System.nanoTime();
                touchEveryItem();
                long t2 = System.nanoTime();
                long elItem = t2 - t1;
                long elLine = t1 - t0;
                double diff = elItem - elLine;
                System.err.println(elItem + " " + elLine + " " + (100 * diff / elLine));
            }
        }

        // Updates every int in the array
        private void touchEveryItem() {
            for (int i = 0; i < testData.length; i++)
                testData[i]++;
        }

        // Updates one int in every 16 (one per 64-byte cache line)
        private void touchEveryLine() {
            for (int i = 0; i < testData.length; i += 16)
                testData[i]++;
        }

        public static void main(String[] args) {
            Caching c = new Caching();
            c.run();
        }
    }

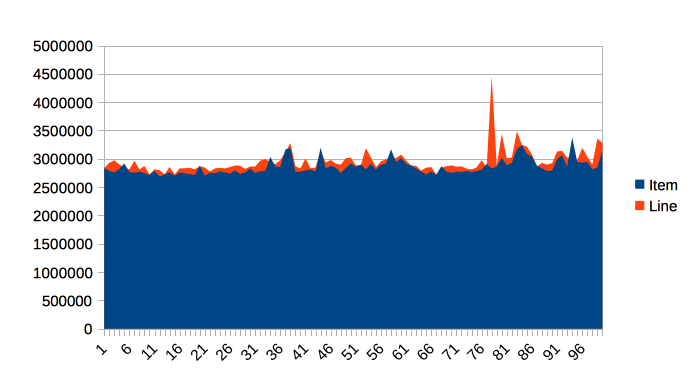

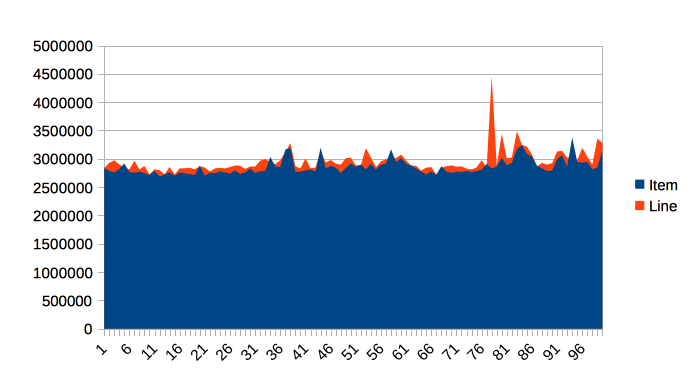

Intuitively, touchEveryItem() does 16 times as much work as touchEveryLine(), as 16 times as many data items must be updated. However, the point of this simple example is to show how badly intuition can lead us astray when dealing with JVM performance. Let’s look at some sample output from the Caching class, as shown in Figure 3-5.