Implementing SSL/TLS Using Cryptography and PKI

Published by

Wiley Publishing, Inc.

10475 Crosspoint Boulevard

Indianapolis, IN 46256

Copyright © 2011 by Wiley Publishing, Inc., Indianapolis, Indiana

Published simultaneously in Canada

ISBN: 978-0-470-92041-1

ISBN: 978-1-118-03875-8 (ebk)

ISBN: 978-1-118-03876-5 (ebk)

ISBN: 978-1-118-03877-2 (ebk)

Manufactured in the United States of America

10 9 8 7 6 5 4 3 2 1

No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, scanning or otherwise, except as permitted under Sections 107 or 108 of the 1976 United States Copyright Act, without either the prior written permission of the Publisher, or authorization through payment of the appropriate per-copy fee to the Copyright Clearance Center, 222 Rosewood Drive, Danvers, MA 01923, (978) 750-8400, fax (978) 646-8600. Requests to the Publisher for permission should be addressed to the Permissions Department, John Wiley & Sons, Inc., 111 River Street, Hoboken, NJ 07030, (201) 748-6011, fax (201) 748-6008, or online at http://www.wiley.com/go/permissions.

Limit of Liability/Disclaimer of Warranty: The publisher and the author make no representations or warranties with respect to the accuracy or completeness of the contents of this work and specifically disclaim all warranties, including without limitation warranties of fitness for a particular purpose. No warranty may be created or extended by sales or promotional materials. The advice and strategies contained herein may not be suitable for every situation. This work is sold with the understanding that the publisher is not engaged in rendering legal, accounting, or other professional services. If professional assistance is required, the services of a competent professional person should be sought. Neither the publisher nor the author shall be liable for damages arising herefrom. The fact that an organization or Web site is referred to in this work as a citation and/or a potential source of further information does not mean that the author or the publisher endorses the information the organization or website may provide or recommendations it may make. Further, readers should be aware that Internet websites listed in this work may have changed or disappeared between when this work was written and when it is read.

For general information on our other products and services please contact our Customer Care Department within the United States at (877) 762-2974, outside the United States at (317) 572-3993 or fax (317) 572-4002.

Wiley also publishes its books in a variety of electronic formats. Some content that appears in print may not be available in electronic books.

Library of Congress Control Number: 2010942196

Trademarks: Wiley and the Wiley logo are trademarks or registered trademarks of John Wiley & Sons, Inc. and/or its affiliates, in the United States and other countries, and may not be used without written permission. All other trademarks are the property of their respective owners. Wiley Publishing, Inc. is not associated with any product or vendor mentioned in this book.

Statements of Copyright: This book refers to and incorporates portions of the Internet Engineering Task Force (IETF's) Request For Comments (RFCs). All RFC are protected by the following copyright. Copyright (C) The Internet Society (1999). All Rights Reserved.

This document and translations of it may be copied and furnished to others, and derivative works that comment on or otherwise explain it or assist in its implementation may be prepared, copied, published and distributed, in whole or in part, without restriction of any kind, provided that the above copyright notice and this paragraph are included on all such copies and derivative works. However, this document itself may not be modified in any way, such as by removing the copyright notice or references to the Internet Society or other Internet organizations, except as needed for the purpose of developing Internet standards in which case the procedures for copyrights defined in the Internet Standards process must be followed, or as required to translate it into languages other than English. The limited permissions granted above are perpetual and will not be revoked by the Internet Society or its successors or assigns. This document and the information contained herein is provided on an "AS IS" basis and THE INTERNET SOCIETY AND THE INTERNET ENGINEERING TASK FORCE DISCLAIMS ALL WARRANTIES, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO ANY WARRANTY THAT THE USE OF THE INFORMATION HEREIN WILL NOT INFRINGE ANY RIGHTS OR ANY IMPLIED WARRANTIES OF MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE.

This book incorporates several examples making use of the OpenSSL software suite. OpenSSL is trademarked and copyrighted. OpenSSL is based on the excellent SSLeay library developed by Eric A. Young and Tim J. Hudson. The OpenSSL toolkit is licensed under an Apache-style licence which basically means that you are free to get and use it for commercial and non-commercial purposes.

This book describes in some detail several open standards maintained and owned by FIPS, the ITU, PKCS, and SECG. The maintainers and authors of these standards documents are attributed throughout the text.

Verisign is used as an example of a Certificate Authority in Chapters 3 and 4. VeriSign and other trademarks are the registered or unregistered trademarks of VeriSign, Inc. and its subsidiaries.

Joshua Davies has been hacking on computers since his father brought home the family's first computer, a TI-99/4A, in 1982. He holds a Bachelor's degree in computer science from Valdosta State University and a Masters degree in computer science from the University of Texas at Arlington. He has been programming professionally since 1990 and as a result has never had to do any real work. He is currently responsible for security architecture at Travelocity.com and previously worked internationally as a consultant for One, Inc. whose client list included AT&T, Nieman Marcus, Intermedia, and the Mexican telecommunications giant Pegaso. He prefers to work in C or assembler, but often codes in Java since it pays the bills. He currently resides in Dallas, Texas with his wife and two children.

David Chapa is a Senior Analyst with the Enterprise Strategy Group covering the Data Protection segment with a focus on Disaster Recovery, Backup/Recovery as a Service, and Availability Solutions. David has invested over 25 years in the computer industry, focusing specifically on data protection, data disaster recovery, and business resumption practices. He has held several senior level technical positions with companies such as Cheyenne Software, OpenVision, ADIC, Quantum, and NetApp. Prior to joining ESG, as Director of Data Protection Strategy and Global Alliances for NetApp, David continued to evangelize "Recovery and Backup," his mantra for over a decade now, and the benefits of integrating software solutions with disk-based backup. In his role with ESG, David will bring all of this expertise, knowledge, and passion to raise a greater holistic awareness around data protection. David is an energetic and dynamic speaker who brings a great deal of experiential knowledge, humor, and keen insight to his audience. He has been a featured speaker at VERITAS Vision, CA World, SNW, Chicago Software Association, and CAMP/IT Conferences, and has served as panelist on various discussions related to disaster recovery, compliance, and the use of disk, tape, and cloud for recovery and backup strategies.

David has written several articles and blogs over the years. In addition, he is the co-author of Implementing Backup and Recovery, the Technical Editor of Cloud Security, Security 2020, and Web Commerce Security Design and Development with Wiley and Sons, and is recognized worldwide as an authority on the subject of backup and recovery. David is also a member of SNIA's Data Protection and Capacity Optimization (DPCO) Committee, whose mission is to foster the growth and success of the storage market in the areas of data protection and capacity optimization technologies.

| Executive Editor

Carol Long |

Production Manager

Tim Tate |

| Project Editor

Maureen Spears |

Vice President and Executive Group Publisher

Richard Swadley |

| Technical Editor

David A. Chapa |

Vice President and Executive Publisher

Barry Pruett |

| Production Editor

Kathleen Wisor |

Associate Publisher

Jim Minatel |

| Copy Editor

Charlotte Kughen |

Project Coordinator, Cover

Katie Crocker |

| Editorial Director

Robyn B. Siesky |

Proofreader

Nancy Bell |

| Editorial Manager

Mary Beth Wakefield |

Indexer

Robert Swanson |

| Freelancer Editorial Manager

Rosemarie Graham |

Cover Designer

Ryan Sneed |

| Marketing Manager

Ashley Zurcher |

My name is the name on the cover of this book, but I can't possibly take all of the credit for the finished product. I can't thank the staff at John Wiley and Sons enough for their hard work and dedication in bringing this book to print — Charlotte Kughen for tirelessly correcting my overly casual use of the English language, David Chapa for his encouragement and gentle feedback, Maureen Spears for her infinite patience with me every time I asked to make last-minute changes long after the time for last-minute changes had passed (I'm sure some day you'll look back on this and laugh) and finally to Carol Long for understanding what I was trying to accomplish and expending so much effort to get the green light for this project in the first place.

Thanks to the OpenSSL development team for their excellent software, which I made such heavy use of while developing and testing the code in this book, and to Thomas Hruska of Shining Light Productions for his feedback as well. Many thanks to the IETF TLS working group who volunteer their time to generate free, openly accessibly specifications for no compensation beyond the satisfaction that they are making the world a better, safer place. I've enjoyed debating and discussing the finer points of TLS with all of you while I was lurking on the mailing list over the past three years. This book is in no small part the culmination of the understanding I've achieved from listening to all of you.

I must, of course, acknowledge the support and encouragement I received from my university professors long after graduation — especially to Dr. Roger Lamprey, Dr. Gergely Zaruba, and Dr. Farhad Kamangar. I have no idea what they're paying you, but I'm sure it's far less than you deserve.

A special thank you goes to Troy Magennis of Travelocity, who encouraged me to take the leap from thinking about writing a book to finally sitting down and making it happen. Your example and inspiration were invaluable.

Thank you to my parents and my brother and sisters who are five of the most different, unique, and interesting people on the planet. It's amazing that we're all related, but somehow we pull it off. Finally, thank you to my family for their support as I wrote this book. This took far longer and much more effort than I ever anticipated. For putting up with my long absences and lost evenings and weekends as I wrote, re-wrote, and re-re-wrote: Lupita, Dylan, and Isabelle — you are my purpose on this earth and my reason for being — I hope I can always make you proud.

And, of course, thanks to Tornado the cat for keeping my lap warm night after night as I wrote after everybody else had gone to bed.

Chapter 1 Understanding Internet Security

Chapter 2 Protecting Against Eavesdroppers with Symmetric Cryptography

Chapter 3 Secure Key Exchange over an Insecure Medium with Public Key Cryptography

Chapter 4 Authenticating Communications Using Digital Signatures

Chapter 5 Creating a Network of Trust Using X.509 Certificates

Chapter 6 A Usable, Secure Communications Protocol: Client-Side TLS

Chapter 7 Adding Server-Side TLS 1.0 Support

Chapter 9 Adding TLS 1.2 Support to Your TLS Library

Chapter 10 Other Applications of SSL

Appendix A Binary Representation of Integers: A Primer

Appendix B Installing TCPDump and OpenSSL

Chapter 1 Understanding Internet Security

"Insecure" Communications: Understanding the HTTP Protocol

Adding Support for HTTP Proxies

Reliable Transmission of Binary Data with Base64 Encoding

Roadmap for the Rest of This Book

Chapter 2 Protecting Against Eavesdroppers with Symmetric Cryptography

Understanding Block Cipher Cryptography Algorithms

Implementing the Data Encryption Standard (DES) Algorithm

Padding and Chaining in Block Cipher Algorithms

Using the Triple-DES Encryption Algorithm to Increase Key Length

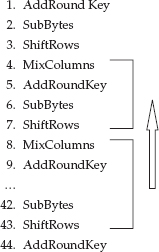

Faster Encryption with the Advanced Encryption Standard (AES) Algorithm

Understanding Stream Cipher Algorithms

Understanding and Implementing the RC4 Algorithm

Converting a Block Cipher to a Stream Cipher: The OFB and COUNTER Block-Chaining Modes

Chapter 3 Secure Key Exchange over an Insecure Medium with Public Key Cryptography

Understanding the Theory Behind the RSA Algorithm

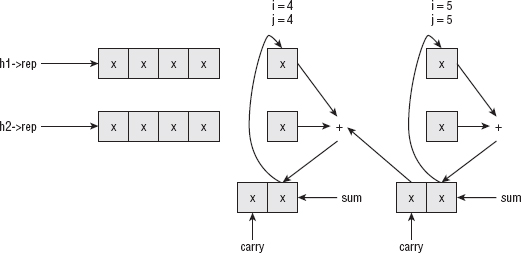

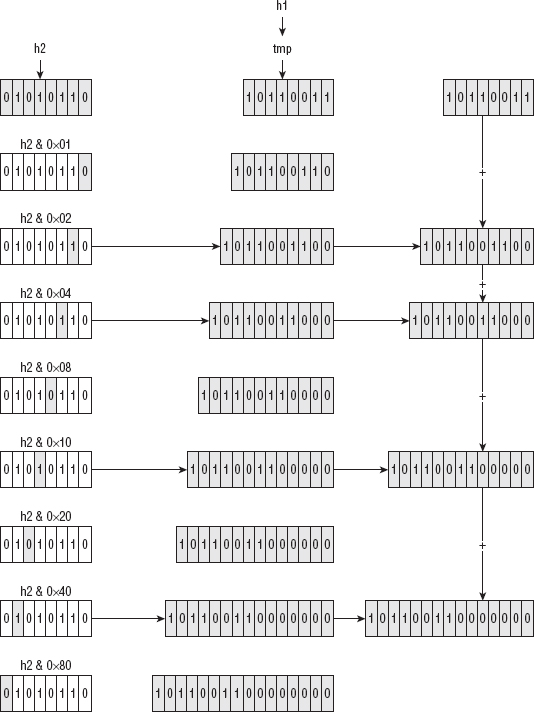

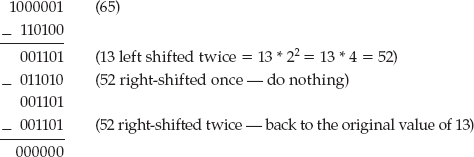

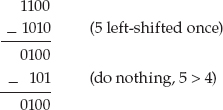

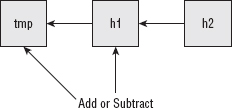

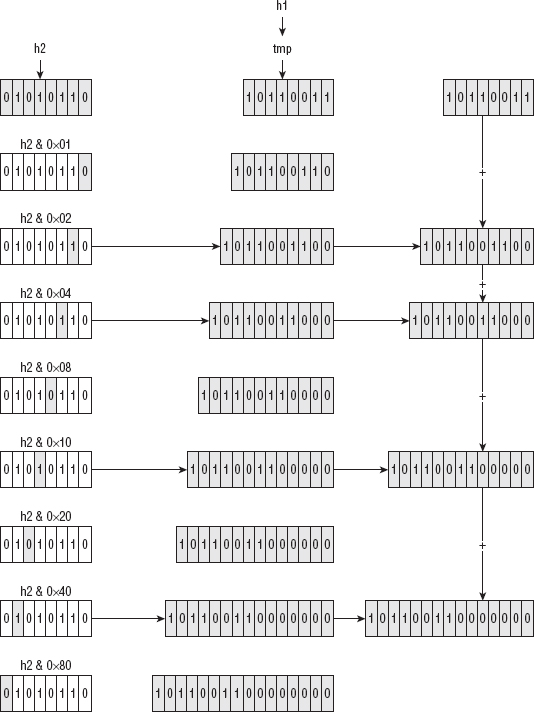

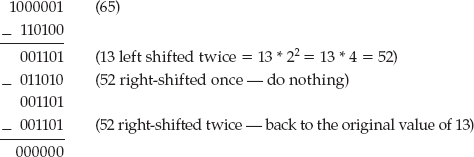

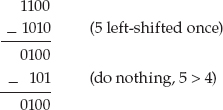

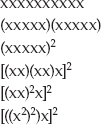

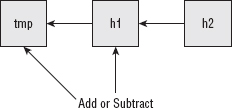

Performing Arbitrary Precision Binary Math to Implement Public-Key Cryptography

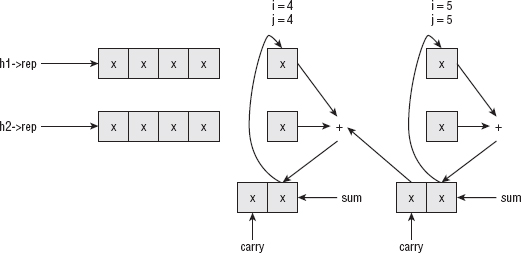

Implementing Large-Number Addition

Implementing Large-Number Subtraction

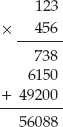

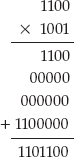

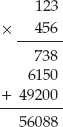

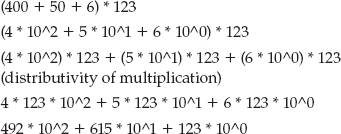

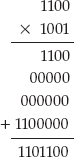

Implementing Large-Number Multiplication

Implementing Large-Number Division

Optimizing for Modulo Arithmetic

Using Modulus Operations to Efficiently Compute Discrete Logarithms in a Finite Field

Encryption and Decryption with RSA

Encrypting a Plaintext Message

Decrypting an RSA-Encrypted Message

Testing RSA Encryption and Decryption

Achieving Perfect Forward Secrecy with Diffie-Hellman Key Exchange

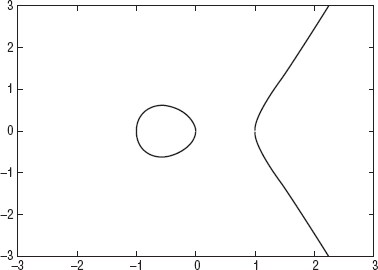

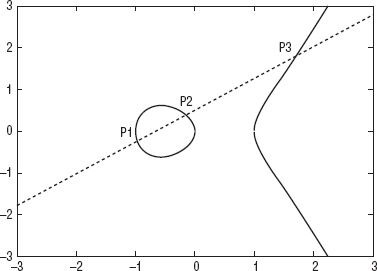

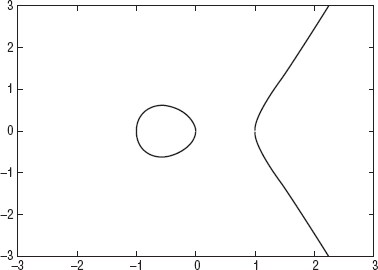

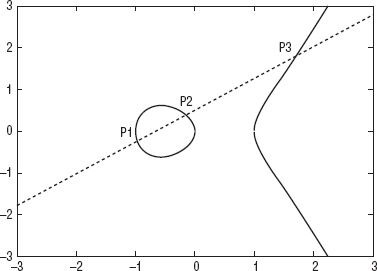

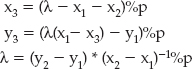

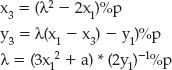

Getting More Security per Key Bit: Elliptic Curve Cryptography

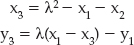

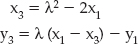

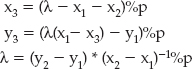

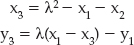

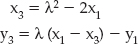

How Elliptic Curve Cryptography Relies on Modular Inversions

Using the Euclidean Algorithm to compute Greatest Common Denominators

Computing Modular Inversions with the Extended Euclidean Algorithm

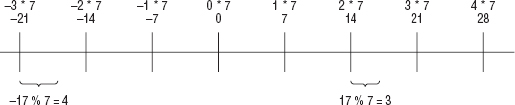

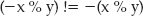

Adding Negative Number Support to the Huge Number Library

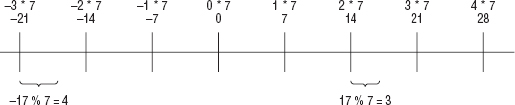

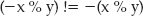

Supporting Negative Remainders

Making ECC Work with Whole Integers: Elliptic-Curve Cryptography over Fp

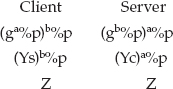

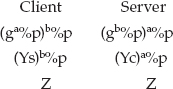

Reimplementing Diffie-Hellman to Use ECC Primitives

Why Elliptic-Curve Cryptography?

Chapter 4 Authenticating Communications Using Digital Signatures

Using Message Digests to Create Secure Document Surrogates

Implementing the MD5 Digest Algorithm

Securely Hashing a Single Block of Data

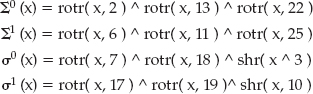

Increasing Collision Resistance with the SHA-1 Digest Algorithm

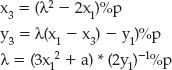

Understanding SHA-1 Block Computation

Understanding the SHA-1 Input Processing Function

Understanding SHA-1 Finalization

Even More Collision Resistance with the SHA-256 Digest Algorithm

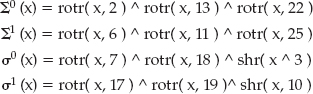

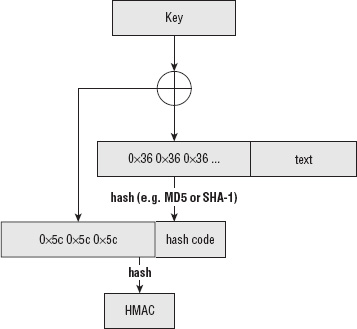

Preventing Replay Attacks with the HMAC Keyed-Hash Algorithm

Implementing a Secure HMAC Algorithm

Creating Updateable Hash Functions

Appending the Length to the Last Block

Computing the MD5 Hash of an Entire File

Where Does All of This Fit into SSL?

Understanding Digital Signature Algorithm (DSA) Signatures

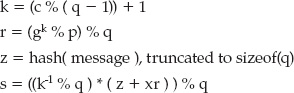

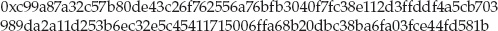

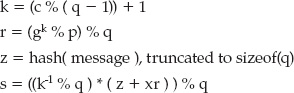

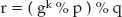

Implementing Sender-Side DSA Signature Generation

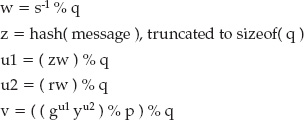

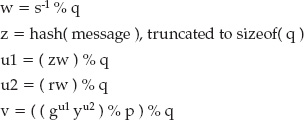

Implementing Receiver-Side DSA Signature Verification

Getting More Security per Bit: Elliptic Curve DSA

Rewriting the Elliptic-Curve Math Functions to Support Large Numbers

Chapter 5 Creating a Network of Trust Using X.509 Certificates

Putting It Together: The Secure Channel Protocol

Understanding Signed Certificate Structure

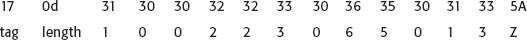

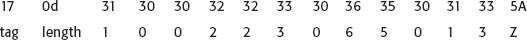

Transmitting Certificates with ASN.1 Distinguished Encoding Rules (DER)

Sequences and Sets: Grouping and Nesting ASN.1 Values

A Real-World Certificate Example

Using OpenSSL to Generate an RSA KeyPair and Certificate

Using OpenSSL to Generate a DSA KeyPair and Certificate

Converting a Byte Stream into an ASN.1 Structure

Turning a Parsed ASN.1 Structure into X.509 Certificate Components

Joining the X.509 Components into a Completed X.509 Certificate Structure

Parsing Object Identifiers (OIDs)

Parsing Certificate Extensions

Validating PKCS #7-Formatted RSA Signatures

Verifying a Self-Signed Certificate

Adding DSA Support to the Certificate Parser

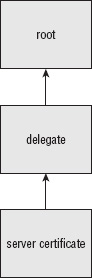

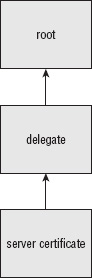

How Authorities Handle Certificate Signing Requests (CSRs)

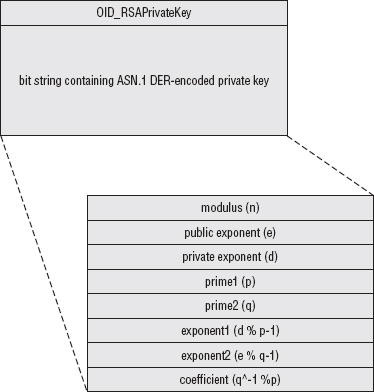

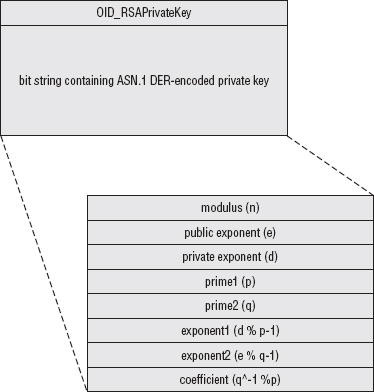

Correlating Public and Private Keys Using PKCS #12 Formatting

Blacklisting Compromised Certificates Using Certificate Revocation Lists (CRLs)

Keeping Certificate Blacklists Up-to-Date with the Online Certificate Status Protocol (OCSP)

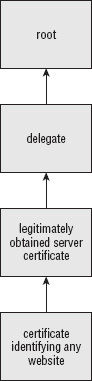

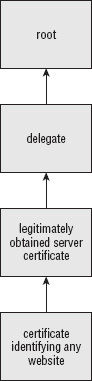

Other Problems with Certificates

Chapter 6 A Usable, Secure Communications Protocol: Client-Side TLS

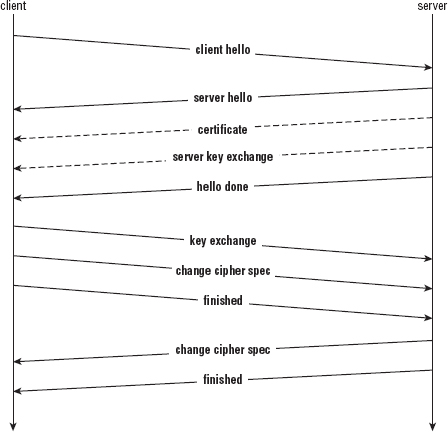

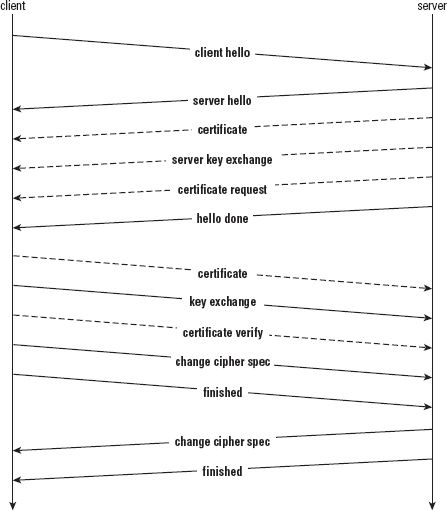

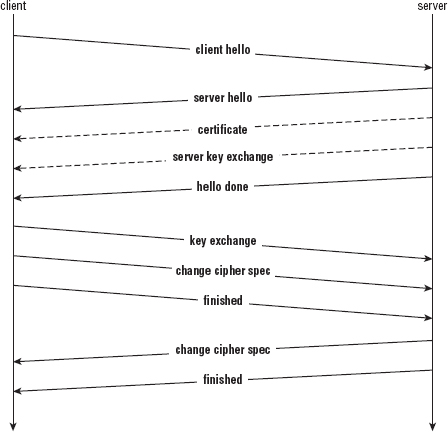

Implementing the TLS 1.0 Handshake (Client Perspective)

Adding TLS Support to the HTTP Client

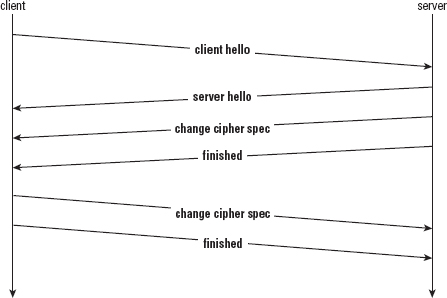

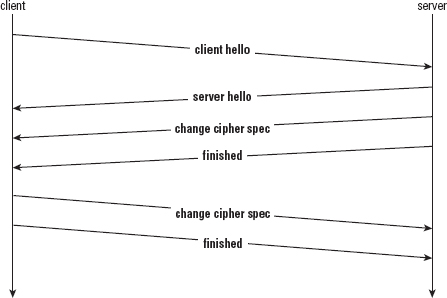

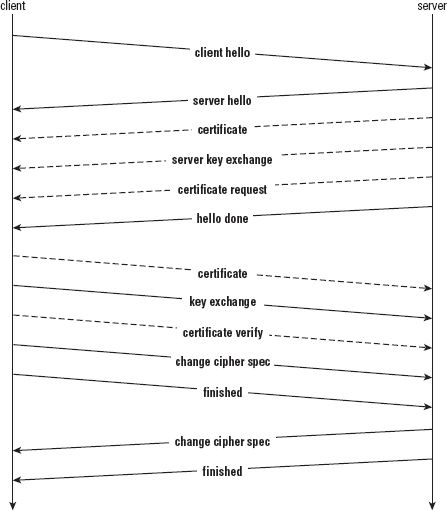

Understanding the TLS Handshake Procedure

Tracking the Handshake State in the TLSParameters Structure

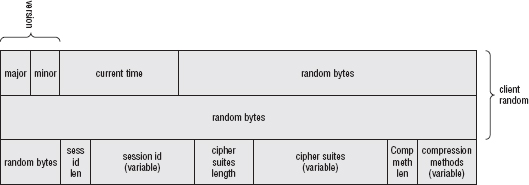

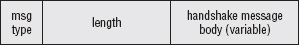

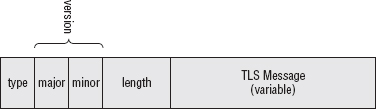

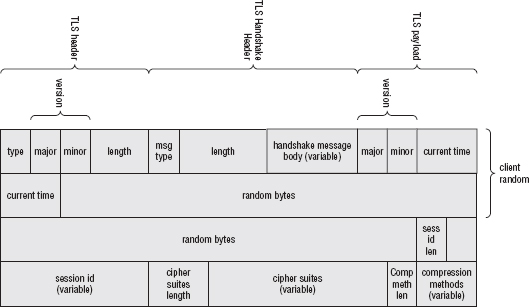

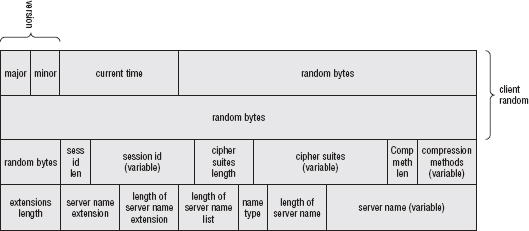

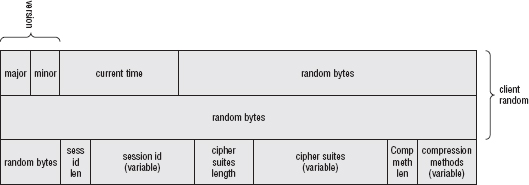

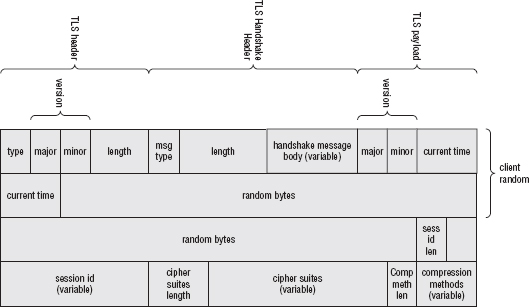

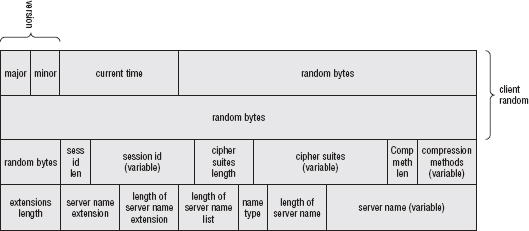

Flattening and Sending the Client Hello Structure

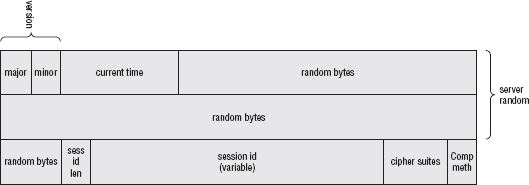

Parsing the Server Hello Structure

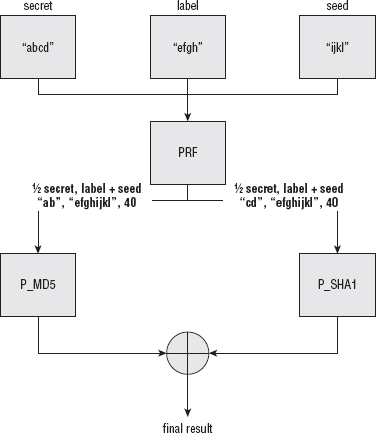

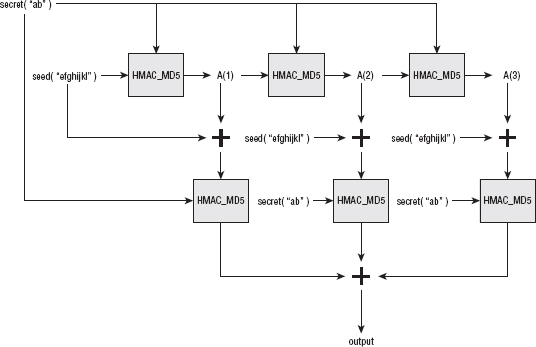

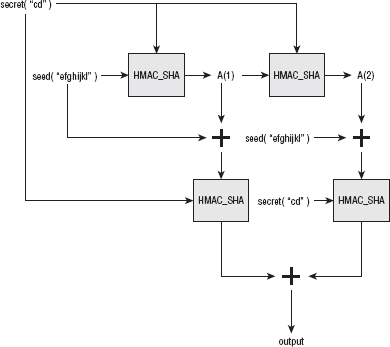

Sharing Secrets Using TLS PRF (Pseudo-Random Function)

Creating Reproducible, Unpredictable Symmetric Keys with Master Secret Computation

Correctly Receiving the Finished Message

Supporting Outgoing Encryption

Adding Support for Stream Ciphers

Updating Each Invocation of send_message

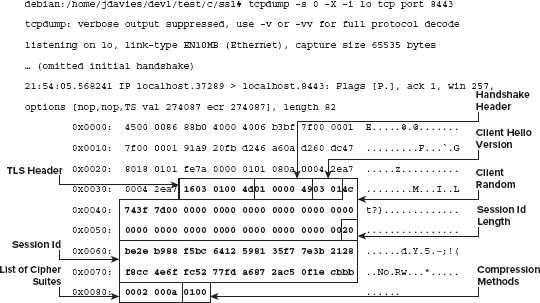

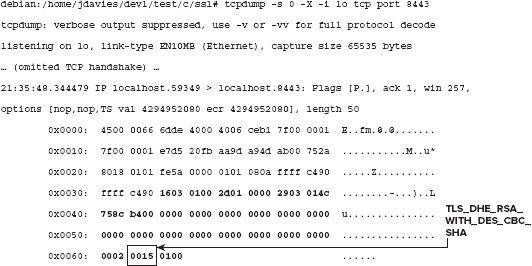

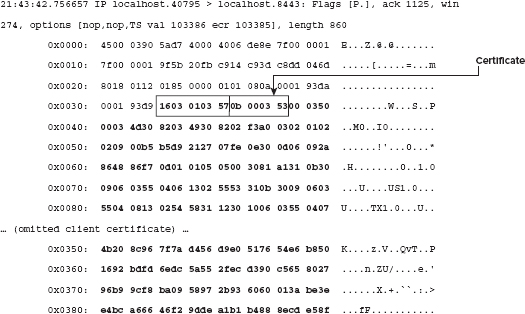

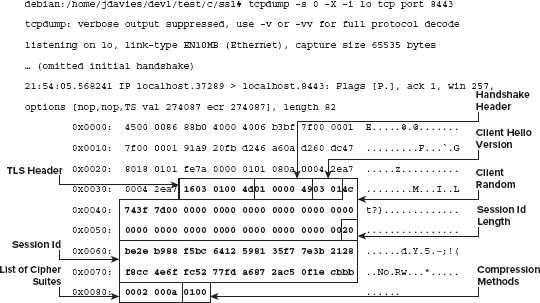

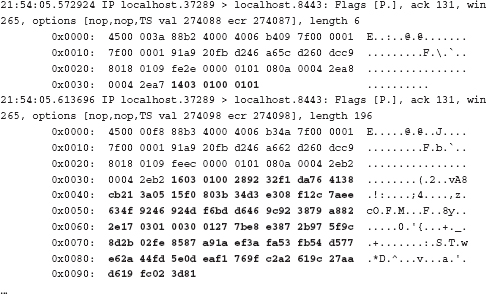

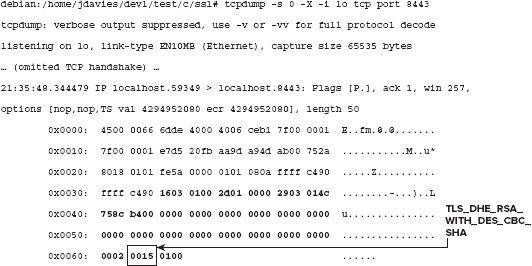

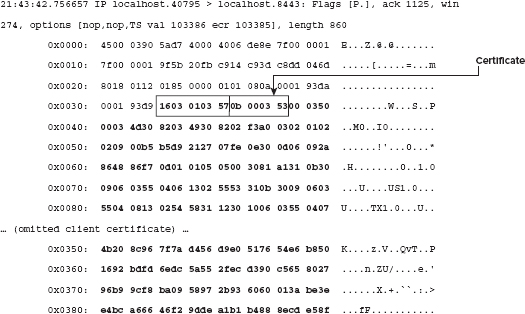

Examining HTTPS End-to-end Examples (TLS 1.0)

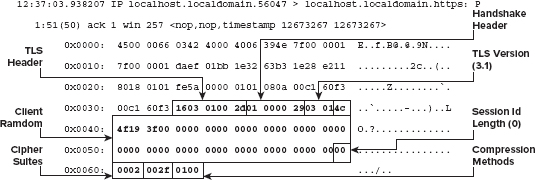

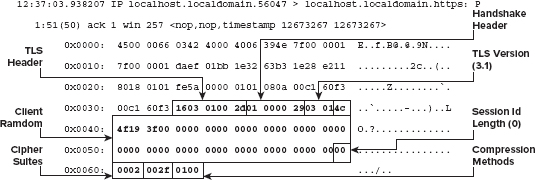

Dissecting the Client Hello Request

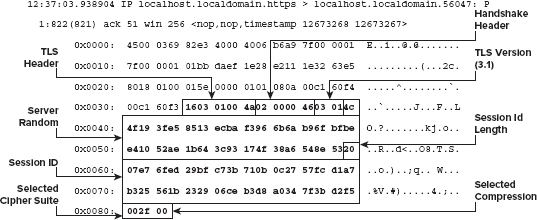

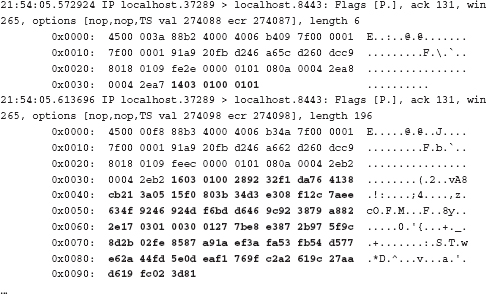

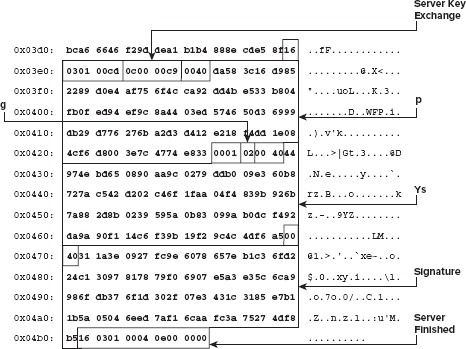

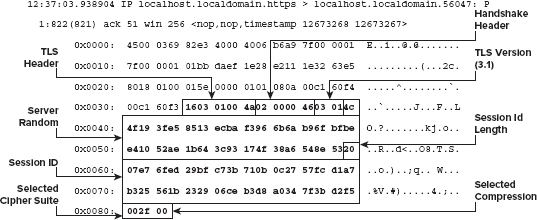

Dissecting the Server Response Messages

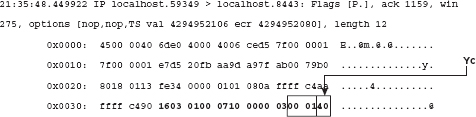

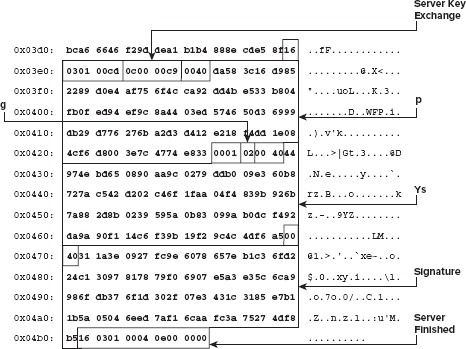

Dissecting the Key Exchange Message

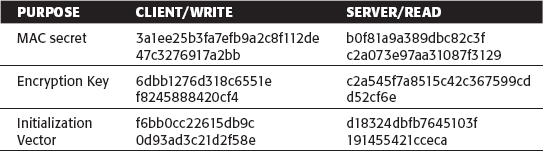

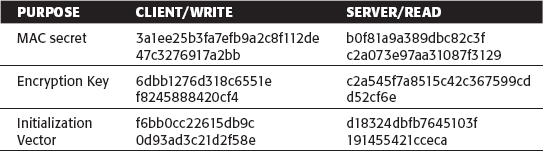

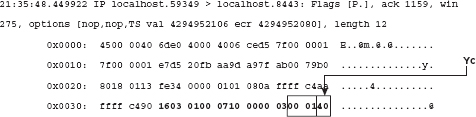

Decrypting the Encrypted Exchange

Differences Between SSL 3.0 and TLS 1.0

Differences Between TLS 1.0 and TLS 1.1

Chapter 7 Adding Server-Side TLS 1.0 Support

Implementing the TLS 1.0 Handshake from the Server's Perspective

RSA Key Exchange and Private Key Location

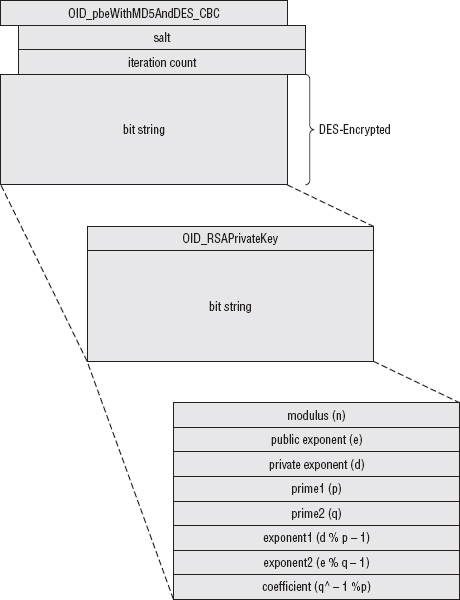

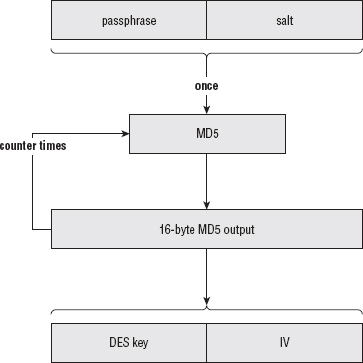

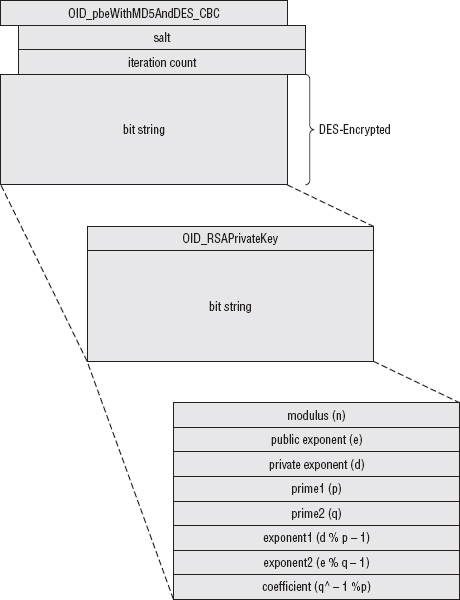

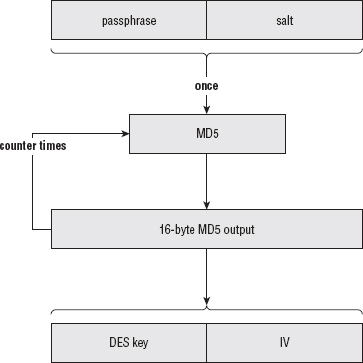

Supporting Encrypted Private Key Files

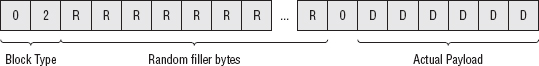

Checking That Decryption was Successful

Avoiding Common Pitfalls When Adding HTTPS Support to a Server

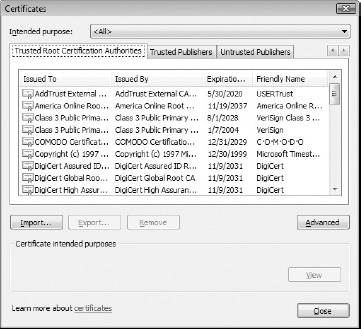

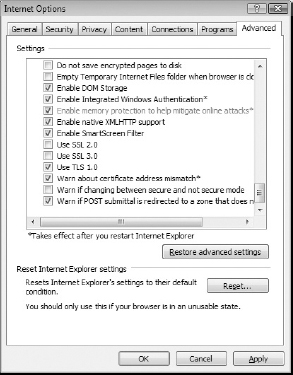

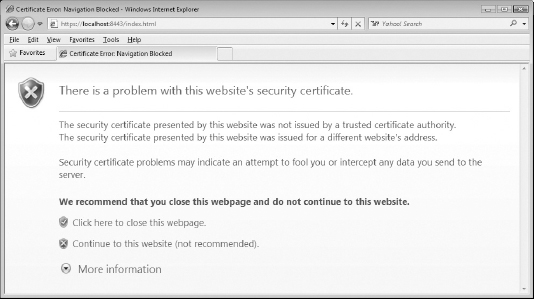

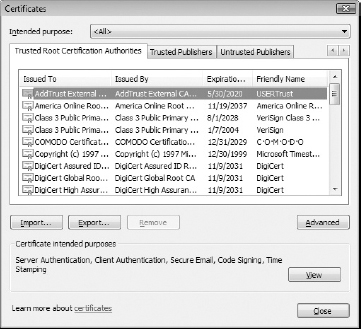

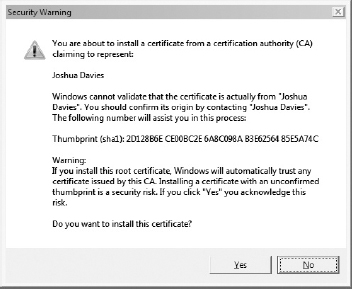

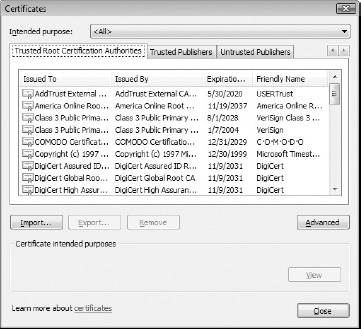

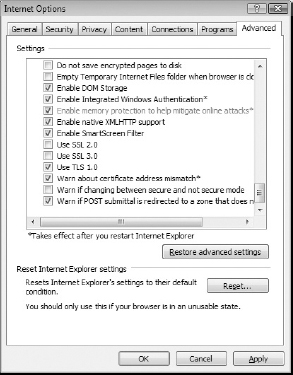

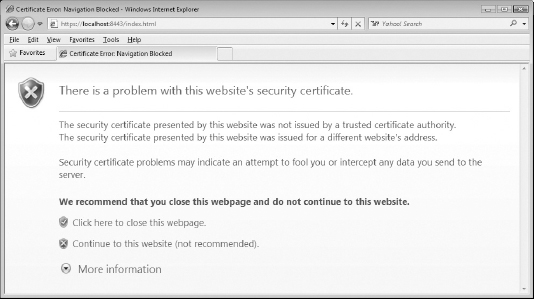

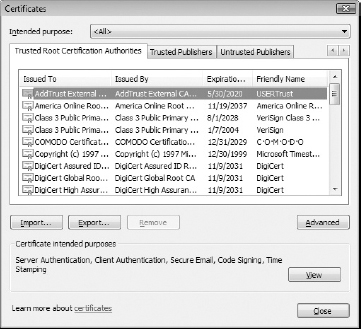

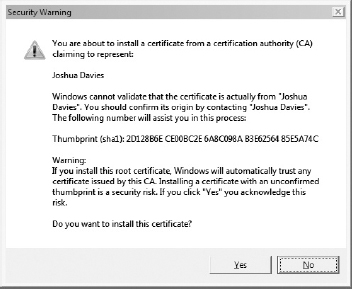

When a Browser Displays Errors: Browser Trust Issues

Passing Additional Information with Client Hello Extensions

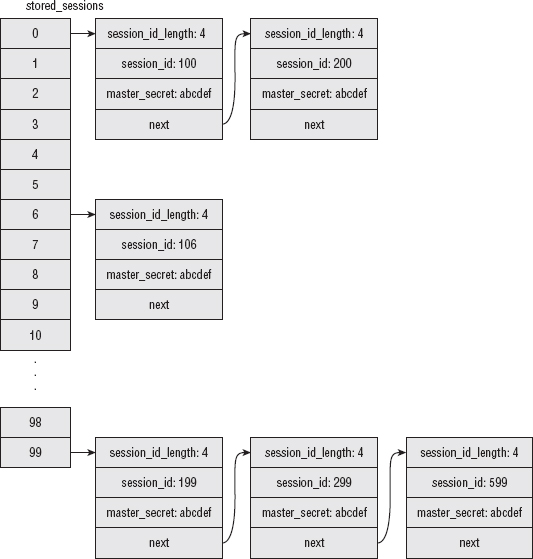

Safely Reusing Key Material with Session Resumption

Adding Session Resumption on the Client Side

Adding Session Resumption Logic to the Client

Restoring the Previous Session's Master Secret

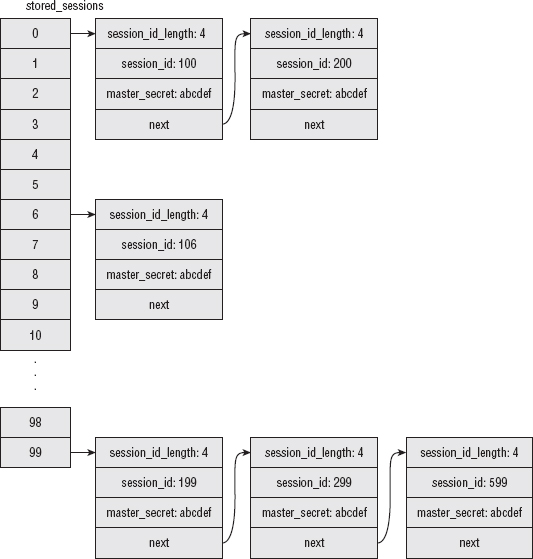

Adding Session Resumption on the Server Side

Assigning a Unique Session ID to Each Session

Modifying parse_client_hello to Recognize Session Resumption Requests

Drawbacks of This Implementation

Avoiding Fixed Parameters with Ephemeral Key Exchange

Supporting the TLS Server Key Exchange Message

Authenticating the Server Key Exchange Message

Examining an Ephemeral Key Exchange Handshake

Verifying Identity with Client Authentication

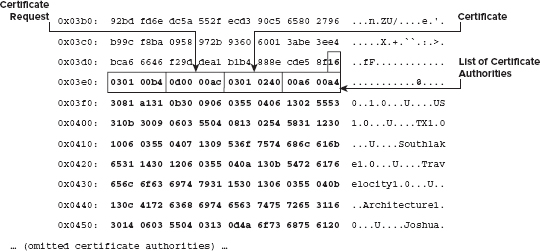

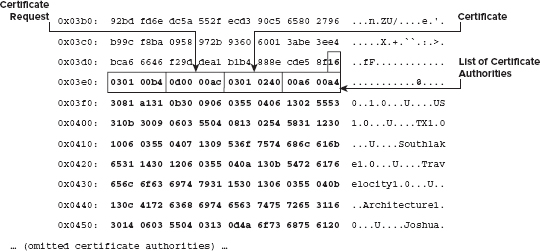

Supporting the CertificateRequest Message

Adding Certificate Request Parsing Capability for the Client

Handling the Certificate Request

Supporting the Certificate Verify Message

Refactoring rsa_encrypt to Support Signing

Viewing a Mutually-Authenticated TLS Handshake

Dealing with Legacy Implementations: Exportable Ciphers

Discarding Key Material Through Session Renegotiation

Renegotiation Pitfalls and the Client Hello Extension 0xFF01

Defending Against the Renegotiation Attack

Implementing Secure Renegotiation

Chapter 9 Adding TLS 1.2 Support to Your TLS Library

Supporting TLS 1.2 When You Use RSA for the Key Exchange

TLS 1.2 Modifications to the PRF

TLS 1.2 Modifications to the Finished Messages Verify Data

Impact to Diffie-Hellman Key Exchange

Adding Support for AEAD Mode Ciphers

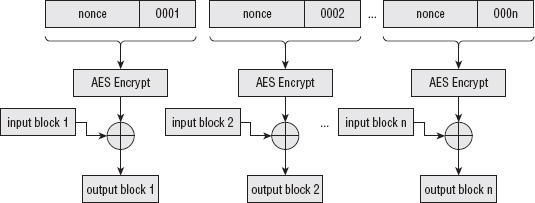

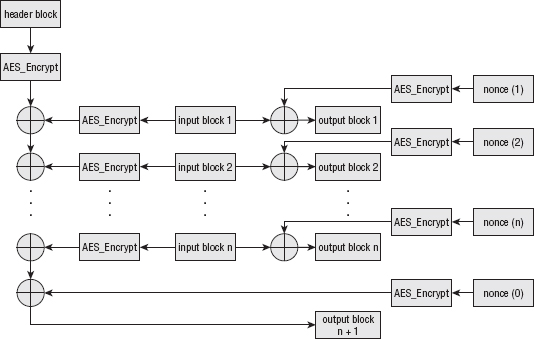

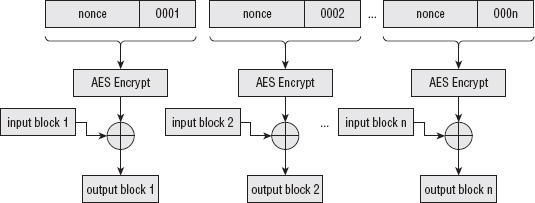

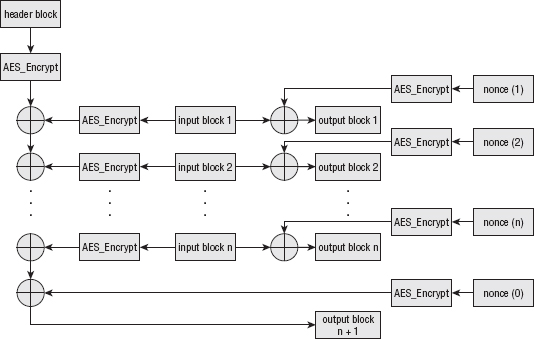

Maximizing Throughput with Counter Mode

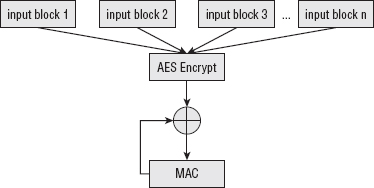

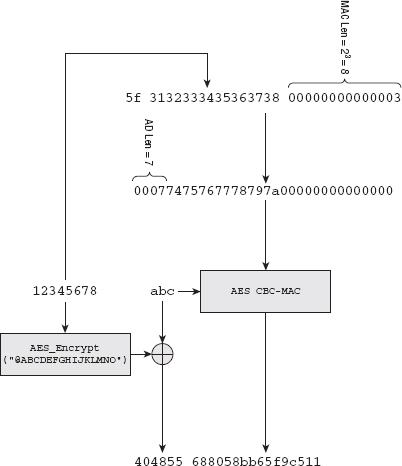

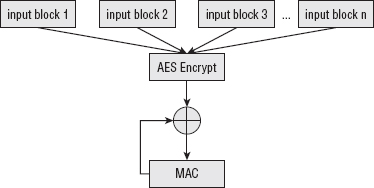

Reusing Existing Functionality for Secure Hashes with CBC-MAC

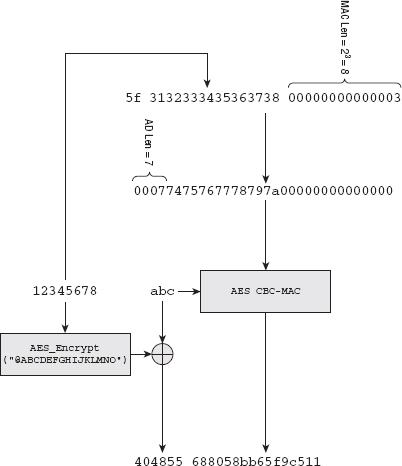

Combining CTR and CBC-MAC into AES-CCM

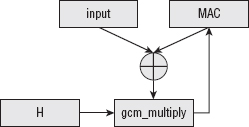

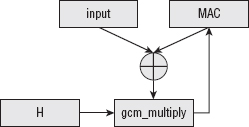

Maximizing MAC Throughput with Galois-Field Authentication

Combining CTR and Galois-Field Authentication with AES-GCM

Authentication with Associated Data

Incorporating AEAD Ciphers into TLS 1.2

Working ECC Extensions into the TLS Library

Chapter 10 Other Applications of SSL

Adding the NTTPS Extension to the NTTP Algorithm

Implementing "Multi-hop" SMTP over TLS and Protecting Email Content with S/MIME

Multipurpose Internet Mail Extensions (MIME)

Protecting Email from Eavesdroppers with S/MIME

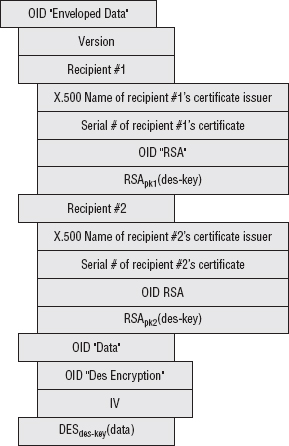

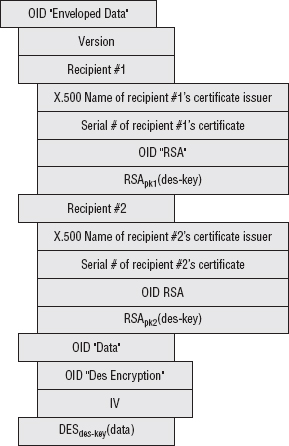

Securing Email When There Are Multiple Recipients

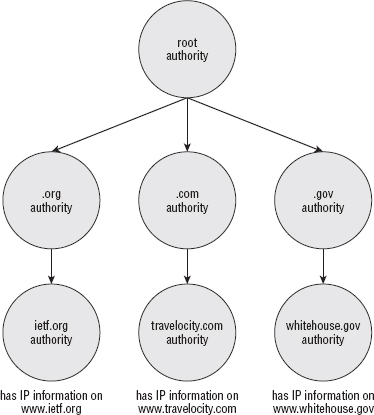

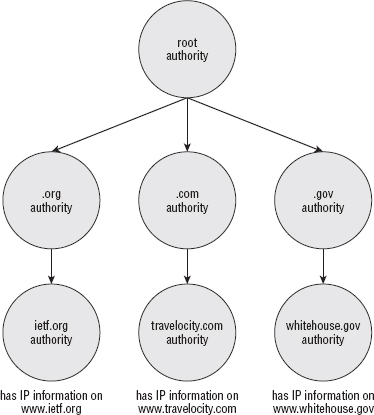

Securing the Domain Name System

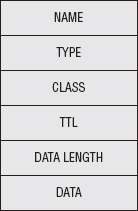

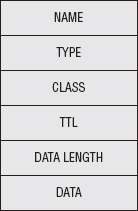

Using the DNS Protocol to Query the Database

Disadvantages of the DNS Query

Preventing DNS Cache Poisoning with DNSSEC

Supporting SSL When Proxies Are Involved

Possible Solutions to the Proxy Problem

Adding Proxy Support Using Tunneling

Appendix A Binary Representation of Integers: A Primer

The Decimal and Binary Numbering Systems

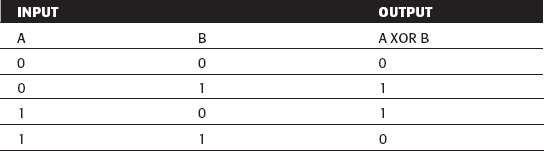

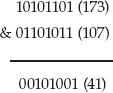

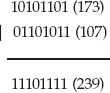

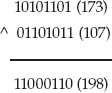

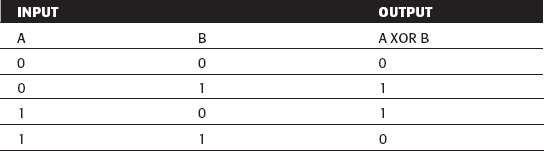

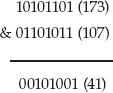

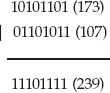

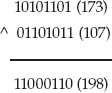

Understanding Binary Logical Operations

Position Shifting of Binary Numbers

Two's-Complement Representation of Negative Numbers

Big-Endian versus Little-Endian Number Formats

Appendix B Installing TCPDump and OpenSSL

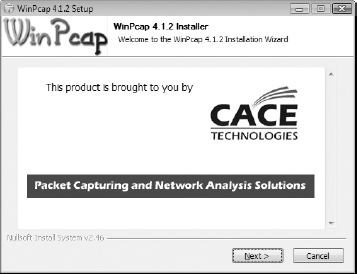

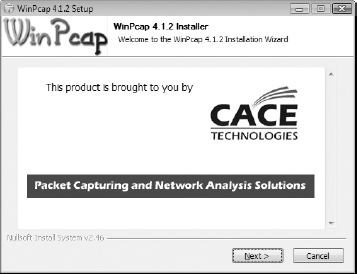

Installing TCPDump on a Windows System

Installing TCPDump on a Linux System

Installing OpenSSL on a Windows System

Installing OpenSSL on a Linux system

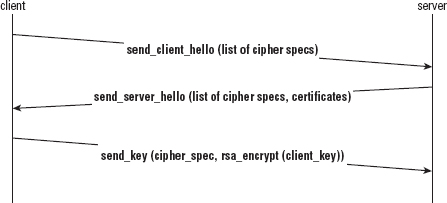

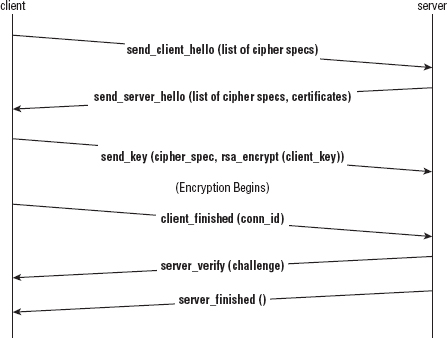

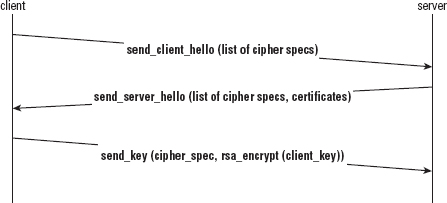

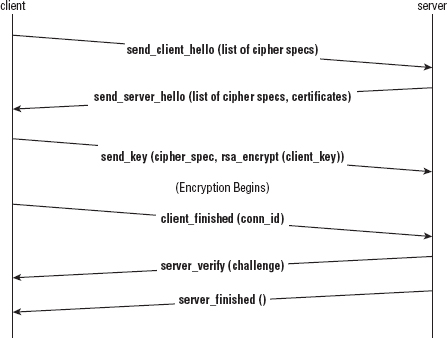

Appendix C Understanding the Pitfalls of SSLv2

Implementing the SSL Handshake

Examining an HTTPS End-to-End Example

This book examines the Secure Sockets Layer (SSL) and Transport Layer Security (TLS) protocols in detail, taking a bottom-up approach. SSL/TLS is a standardized, widely implemented, peer-reviewed protocol for applying cryptographic primitives to arbitrary networked communications. It provides privacy, integrity, and some measure of authenticity to otherwise inherently untrustworthy network connections. Rather than just present the details of the protocol itself, this book develops, incrementally, a relatively complete SSL/TLS library. First, all of the relevant cryptographic protocols are examined and developed, and then the library itself is built piece by piece.

All of the code developed in this book is C (not C++) code. It's been tested on both Windows and Linux systems and should run as advertised on either. Although this is a code-heavy book, non-C programmers — or even non-programmers in general — will still be able to get quite a bit from it. All of the protocols and examples are presented in general form as well as in source code form so that if you're interested in a higher-level overview, you can skip the code examples and the book should still make sense.

I chose C instead of C++ (or Java or Python or Perl, and so on) as a good "least-common-denominator" language. If you can program in any other procedural language, you can program in C; and if you can understand an implementation in C, you can understand an implementation in any other language. This book takes full advantage of the C programming language, though. I use pointer syntax in particular throughout the book. If you plan on following along with the code samples, make sure you're comfortable with C and pointers. I do my best to avoid the sort of excessive macro-ization and gratuitous typedef-ing that make professional C code easy to maintain but hard to read.

You might be wondering, though, why I present the source code of yet another (partially incomplete) implementation when there are so many good, tried-and-tested open-source implementations of SSL available. Effectively, production-grade libraries have (at least) five primary concerns regarding their source code:

When a higher-numbered concern conflicts with a lower-numbered concern, the lower-numbered concern wins. This must be the case for code that's actually used by real people to perform real tasks. The upshot is that the code is not always pretty, nor is it particularly readable, when security/speed/modularity take precedence. The priorities for the code in this book are

Note that security, speed, and modularity aren't concerns. In fact, the code presented in this book (somewhat ironically) is not particularly secure. For example, when the algorithms call for random bytes, the code in this book just returns sequentially numbered bytes, which is the exact opposite of the random bytes that the algorithm calls for. This is done to simplify the code as well as to make sure that what you see if you try it out yourself matches what you see in the book.

There isn't any bounds-checking on buffers or verification that the input matches what's expected, which are things that a proper library ought to be doing. I've omitted these things to keep this book's (already long) page count under control, as well as to avoid obscuring the purpose of the example code with hundreds of lines of error checking. At various times throughout the book, you'll see code comments such as // TODO make this random or // TODO check the length before using. I've placed these comments in the code to draw your attention to the functionality that was intentionally omitted.

Of course, if you're coding in a hostile environment — that is, if you're working with any production quality code — you should prefer a well-established library such as OpenSSL, GnuTLS, or NSS over home-grown code any day. This book, however, should help you understand the internals of these libraries so that, when it comes time to use one, you know exactly what's going on at all stages.

Every aspect of the Internet itself — including SSL/TLS — is described by a series of documents written and maintained by the Internet Engineering Task Force (IETF). These documents are referred to (somewhat confusingly) as Requests for Comments or, more commonly, just RFCs. Each such RFC describes, authoritatively, some aspect of some protocol related to the Internet. And at the time of this writing, there are over 5000 such documents. Although I doubt that anybody, anywhere, has actually read all of them, you'll need to be familiar with quite a few in order to do any serious Internet programming. As such, I'll refer to these RFCs by number throughout the book. Rather than provide a link to each inline, I'll just refer to them as, e.g., RFC 2246. If you want to see RFC 2246 (the authoritative document that describes TLS 1.0 itself), you can visit the IETF's website at www.ietf.org. Each RFC is stored in a document under http://www.ietf.org/rfc/rfcnnnn.txt, where nnnn is the RFC number itself.

In addition, SSL/TLS borrows heavily from a couple of related standards bodies — the International Telecommuncation Union (ITU) "X series" of documents and RSA laboratories' Public Key Cryptography Standards (PKCS). The ITU standards can be found at http://www.itu.int/rec/T-REC-X/en and the PKCS standards can be found at http://www.rsa.com/rsalabs/node.asp?id=2124. I'll refer to RFC's, X-series documents, and PKCS standards throughout the book. You may want to bookmark these locations in a browser for quick reference, if you'd like to compare the text to the official standards documents. All of the standards documents referenced in this book are freely available and downloadable, so I don't make any great effort to repeat them. Instead, I try to explain the background information that the standards documents always seem to take for granted. I'm assuming that, if you're interested in the low-level details, you can always refer to the standards document itself.

I've been around and reading technical documentation since before there was an Internet, or even CD-ROM drives. Back in my day, readers of code-heavy books such as this one couldn't just download the samples from a companion website or an included CD-ROM. If you wanted to see the code samples in action, you had to type them in by hand. Although typing code can be tedious at times, I've found that it's also the best way to completely absorb the material. So, Luddite that I am, I tend to eschew code downloads when I read technical material.

This book has been designed so that somebody who wants to follow along can do so. However, I also recognize that not every reader is a dinosaur like myself — er, I mean not everyone is quite so meticulous. Changes to code presented previously are listed in boldface, so it's easy to see what's been modified and what's been left unchanged.

The companion website at http://www.wiley.com/go/implementingssl has two download files — one for GCC for those following along on a Linux platform and one for Visual Studio for those following along on Windows. Each download is split into two sections: one that includes the finished code for each chapter and another for somebody who might want to follow along. I urge you to download at least the code for following along because it includes Makefiles and headers that aren't specifically reproduced in this book. This book's code is heavily self-referential — especially in the second half — so you want to be sure to build correctly. The downloadable Makefiles ensure that you can.

Because this book is about SSL, I try my best not to get too hung up on unrelated implementation details. However, the code presented here does work and is somewhat nontrivial, so some "implementation asides" are unavoidable.

Chapter 1, "Understanding Internet Security," examines the basics of Internet communication and what is and is not vulnerable to attackers. To motivate the remainder of the book, a basic working HTTP example is developed here. Later chapters incrementally add security features to this beginning HTTP example.

Chapter 2, "Protecting Against Eavesdroppers with Symmetric Cryptography," examines the aspect of communications security that most people think of first, which is scrambling data in flight so that it can't be intercepted or read by unauthorized parties. There are many internationally recognized standard algorithms in this space, which SSL/TLS rely heavily on. Chapter 2 examines three of these standards in detail: DES, AES and RC4. The code developed here will be reused in Chapter 6 when the actual TLS library is built.

Chapter 3, "Secure Key Exchange over an Insecure Medium with Public Key Cryptography," looks at the problem of exchanging keys when the underlying communications channel can't be trusted. The thorny problem of how to take an unencrypted link and turn it into an encrypted one is examined here. There are also several standards in this area — RSA, Diffie-Hellman, and Elliptic-Curve Cryptography are examined in detail in this chapter.

Chapter 4, "Authenticating Communications Using Digital Signatures," examines a less prominent, but equally as important, aspect of secure communications. While cryptography protects data from eavesdroppers, authentication protects data against forgers. The standards MD-5, SHA-1, SHA-256, HMAC, DSA, and ECDSA are all examined in detail in this chapter. Each of these plays a key role in TLS as discussed further in Chapter 6.

Chapter 5, "Creating a Network of Trust Using X.509 Certificates," discusses the final piece of the PKI puzzle that the previous two chapters began, digital certificates. Digital certificates and the Public-Key Infrastructure that support them are required to guard against active attacks. TLS depends greatly on certificates, so this chapter develops an ASN.1 parser and an X.509 certificate reader, which is used in the next chapter to authenticate web sites securely.

Chapter 6, "A Usable, Secure Communications Protocol: Client-Side TLS," ties together all of the concepts from the previous four chapters into a working TLS implementation. This chapter looks at TLS from the perspective of the client and ends with a working HTTPS implementation.

Chapter 7, "Adding Server-Side TLS 1.0 Support," takes the foundation of TLS from Chapter 6 and expands it to the web server example from Chapter 1, developing an SSL-enabled mini–web server. Since the server needs to store private keys, which are, by their nature, especially sensitive, Chapter 7 also examines the topic of using password to securely encrypt data at rest.

Chapter 8, "Advanced SSL Topics," covers the rest of TLS 1.0 — there are several optional elements that a compliant implementation ought to support, but which are not as widespread as the most common case covered in Chapters 6 and 7. Client authentication, server name identification, export grade cryptography, session resumption, and session renegotiation are all explored in depth here.

Chapter 9, "Adding TLS 1.2 Support to Your TLS Library," implements the latest version of the TLS protocol, 1.2, on top of the TLS 1.0 implementation that Chapters 6–8 developed. Here you see elliptic curve cryptography put to use. Additionally, AEAD-mode ciphers are examined, since TLS 1.2 is the first version of TLS to permit this mode.

Chapter 10, "Other Applications of SSL," takes a look at the non-HTTP uses that SSL/TLS has been put to. The STARTTLS extension and DTLS are examined here. Also, S/MIME and DNSSEC — not strictly TLS, but related — are covered in this chapter. Finally, Chapter 10 ends by looking at how HTTPS supports HTTP proxies, which is, overall, an interesting compromise.

This book was written to be read cover to cover. Additionally, if you have some background in C programming, you will want to read through, and probably compile and run, the code samples. If you're not a programmer, or not particularly comfortable with the C programming language, you can skip over the code samples and just read the text descriptions of the relevant protocols — the book was written to make sense when read this way. The benefit of the code samples is that it's impossible to omit any detail — accidentally or intentionally — when writing code, so if you can understand the code, it will cement your understanding of the text preceding it. I've made every effort to ensure that the text and diagrams describe the protocols exactly. If, however, in spite of my best efforts, my descriptions are for any reason unclear, you can always step through the code to see exactly what's going on.

Although this is a book about SSL/TLS, the first half of the book just sets the stage for SSL/TLS by presenting all of the protocols and standards they rely on. If you're just looking for a description of TLS, and have a reasonable understanding of cryptography and PKI in general, you should be able to safely skip ahead to Chapter 6 and start there with the overview of TLS itself. However, at some point, you should jump back and read Chapters 2–5, since there are a lot of implementation details that can bite you in surprising ways when using cryptographic libraries. My primary motivation in writing this book was to present, in detail, the interplay between the SSL and TLS protocols and the cryptographic routines that they rely on.

How secure is the data that you transmit on the Internet? How vulnerable is your personal data to hackers? Even computer-literate, experienced programmers find it's hard to answer these questions with certainty. You probably know that standard encryption algorithms are used to protect data — you've likely heard of public-key algorithms such as RSA and DSA — and you may know that the U.S. government's Data Encryption Standard has been replaced by an Advanced Encryption Standard. Everybody knows about the lock icon in their browsers that indicates that the session is protected by HTTPS. You've most likely heard of PGP for e-mail security (even if you gave up on it after failing to convince your friends to use it).

In all likelihood, though, you've also heard of man in the middle attacks, timing attacks, side-channel attacks, and various other attacks that aim to compromise privacy and security. Anybody with a web browser has been presented with the ominous warning message that "This site's security cannot be trusted — either the certificate has expired, or it was issued by a certificate authority you have chosen not to trust." Every week, you can read about some new zero-day exploit uncovered by security researchers that requires a round of frantic patching. As a professional programmer, you may feel you ought to know exactly what that means — yet trying to decipher these messages and determine whether you should really be worried or not takes you down the rabbit hole of IETF, PKCS, FIPS, NIST, ITU, and ASN. You may have tried to go straight to the source and read RFC 2246, which describes TLS, but you may have discovered, to your chagrin, that RFC 2246 presumes a background in symmetric cryptography, public-key cryptography, digital signature algorithms, and X.509 certificates. It's unclear where to even begin. Although there are a handful of books that describe SSL and "Internet Security," none are targeted at the technically inclined reader who wants, or needs, to know the details.

A mantra among security professionals is that the average programmer doesn't understand security and should not be trusted with it until he verses himself in it. This is good, but ultimately unhelpful, advice. Where does one begin? What the security professionals are really trying to tell you is that, as a practitioner rather than a casual user, it's not enough to treat security as a black box or a binary property; you need to know what the security is doing and how it's doing it so that you know what you are and aren't protected against. This book was written for you — the professional programmer who understands the basics of security but wants to uncover the details without reading thousands of pages of dry technical specifications (only some of which are relevant).

This book begins by examining sockets and socket programming in brief. Afterward, it moves on to a detailed examination of cryptographic concepts and finally applies them to SSL/TLS, the current standard for Internet security. You examine what SSL/TLS does, what it doesn't do, and how it does it. After completing this book, you'll know exactly how and where SSL fits into an overall security strategy and you'll know what steps yet need to be taken, if any, to achieve additional security.

The Internet is a packet-switching network. This means that, for two hosts to communicate, they must packetize their data and submit it to a router with the destination address prepended to each packet. The router then analyzes the destination address and routes the packet either to the target host, or to a router that it believes is closer to the target host. The Internet Protocol (IP), outlined in RFC 971, describes the standard for how this packetization is performed and how addresses are attached to packets in headers.

A packet can and probably will pass through many routers between the sender and the receiver. If the contents of the data in that packet are sensitive — a password, a credit card, a tax identification number — the sender would probably like to ensure that only the receiver can read the packet, rather than the packet being readable by any router along the way. Even if the sender trusts the routers and their operators, routers can be compromised by malicious individuals, called attackers in security terminology, and tricked into forwarding traffic that's meant for one destination to another, as shown in http://www.securesphere.net/download/papers/dnsspoof.htm. If you'd like to get an idea just how many different hosts a packet passes through between you and a server, you can use the traceroute facility that comes with every Internet-capable computer to print a list of the hops between you and any server on the Internet.

An example of a traceroute output is shown below:

[jdavies@localhost]:~$ traceroute www.travelocity.com traceroute to www.travelocity.com (151.193.224.81), 30 hops max, 40 byte packets 1 192.168.0.1 (192.168.0.1) 0.174 ms 0.159 ms 0.123 ms 2 * * * 3 172.216.125.53 (172.216.125.53) 8.052 ms 7.978 ms 9.699 ms 4 10.208.164.65 (10.208.164.65) 10.731 ms 9.895 ms 9.489 ms 5 gig8-2.dllatxarl-t-rtr1.tx.rr.com (70.125.217.92) 12.593 ms 10.952 ms 13.003 ms 6 gig0-1-0.dllatxl3-rtr1.texas.rr.com (72.179.205.72) 69.604 ms 37.540 ms 14.015 ms 7 ae-4-0.cr0.dfw10.tbone.rr.com (66.109.6.88) 13.434 ms 13.696 ms 15.259 ms 8 ae-1-0.pr0.dfw10.tbone.rr.com (66.109.6.179) 15.498 ms 15.948 ms 15.555 ms 9 xe-7-0-0.edge4.Dallas3.Level3.net (4.59.32.17) 18.653 ms 22.451 ms 16.034 ms 10 ae-11-60.car1.Dallas1.Level3.net (4.69.145.3) 19.759 ms ae-21-70.car1.Dallas1.Level3.net (4.69.145.67) 17.455 ms ae-41-90.car1.Dallas1.Level3.net (4.69.145.195) 16.469 ms 11 EDS.car1.Dallas1.Level3.net (4.59.113.86) 28.853 ms 25.672 ms 26.337 ms 12 151.193.129.61 (151.193.129.61) 24.763 ms 26.032 ms 25.481 ms 13 151.193.129.99 (151.193.129.99) 28.727 ms 25.441 ms 26.507 ms 14 151.193.129.173 (151.193.129.173) 26.642 ms 23.995 ms 28.462 ms 15 * * *

Here, I've submitted a traceroute to www.travelocity.com. Each router along the way is supposed to respond with a special packet called an ICMP timeout packet, as described in RFC 793, with its own address. The routers that cannot or will not do so are represented with * * * in the preceding code. Typically the routers don't respond because they're behind a firewall that's configured not to forward ICMP diagnostic packets. As you can see, there are quite a few hops between my home router and Travelocity's main web server.

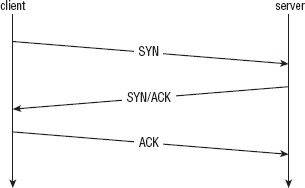

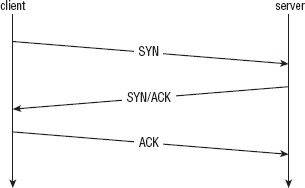

In network programming parlance, the tenuous connection between a sender and a receiver is referred to as a socket. When one host — the client—is ready to establish a connection with another — the server— it sends a synchronize (SYN) packet to the server. If the server is willing to accept the connection, it responds with a SYN and acknowledge packet. Finally, the client acknowledges the acknowledgment and both sides have agreed on a connection. This three-packet exchange is referred to as the TCP handshake and is illustrated in Figure 1-1. The connection is associated with a pair of numbers: the source port and the destination port, which are attached to each subsequent packet in the communication. Because the server is sitting around, always listening for connections, it must advertise its destination port ahead of time. How this is done is protocol-specific; some protocols are lucky enough to have "magic numbers" associated with them that are well-known (in other words, you, the programmer are supposed to know them). This is the Transport Control Protocol (TCP); RFC 793 describes exactly how this works and how both sides agree on a source and destination port and how they sequence these and subsequent packets.

Figure 1-1: TCP three-way handshake

TCP and IP are usually implemented together and called TCP/IP. A socket refers to an established TCP connection; both sides, client and server, have a socket after the three-way handshake described above has been completed. If either side transmits data over this socket, TCP guarantees, to the best of its ability, that the other side sees this data in the order it was sent. As is required by IP, however, any intermediate router along the way also sees this data.

SSL stands for Secure Sockets Layer and was originally developed by Netscape as a way to allow the then-new browser technology to be used for e-commerce. The original specification proposal can be found in http://www.mozilla.org/projects/security/pki/nss/ssl/draft02.html. Although it has since been standardized and renamed Transport Layer Security (TLS), the name SSL is much more recognizable and in some ways describes better what it does and what it's for. After a socket has been established between the client and the server, SSL defines a second handshake that can be performed to establish a secure channel over the inherently insecure TCP layer.

HTTP, or Hypertext Transport Protocol, which is officially described in RFC 2616, is the standard protocol for web communication. Web clients, typically referred to as browsers, establish sockets with web servers. HTTP has a well-known destination port of 80. After the socket has been established, the web browser begins following the rules set forth by the HTTP protocol to request documents. HTTP started out as a fairly simple protocol in which the client issued a GET command and a description of what it would like to get, to which the server responded with either what the client requested in document form or an error indicating why it could not or did not give the client that document. Either way, the socket would be closed after this. If the client wanted another document, it would create another socket and request another document. Over the years, HTTP has been refined quite a bit and optimized for bandwidth, speed, and security features.

HTTP was also the primary motivator for SSL. Originally, SSL didn't stand on its own; it was designed as an add-on to HTTP, called HTTPS. Although SSL was subsequently decoupled from HTTP, some of its features were optimized for HTTP, leaving it to be a bit of a square peg in a round hole in some other contexts. Because HTTP and SSL go so well together, in this book I motivate SSL by developing an HTTP client and adding security features to it incrementally, finally arriving at a working HTTP/SSL implementation.

Web browsers are complex because they need to parse and render HTML — and, in most cases, render images, run Javascript, Flash, Java Applets and leave room for new, as-yet-uninvented add-ons. However, a web client that only retrieves a document from a server, such as the wget utility that comes standard with most Unix distributions, is actually pretty simple. Most of the complexity is in the socket handling itself — establishing the socket and sending and receiving data over it.

Start with all of the includes that go along with socket communication — as you can see, there are quite a few, shown in Listing 1-1.

Listing 1-1: "http.c" header includes

/** * This test utility does simple (non-encrypted) HTTP. */ #include <stdio.h> #include <stdlib.h> #include <errno.h> #include <string.h> #include <sys/types.h> #ifdef WIN32 #include <winsock2.h> #include <windows.h> #else #include <netdb.h> #include <sys/socket.h> #include <netinet/in.h> #include <unistd.h> #endif

The main routine is invoked with a URL of the form http://www.server.com/path/to/document.html. You need to separate the host and the path using a utility routine parse_url, shown in Listing 1-2.

Listing 1-2: "http.c" parse_url

/**

* Accept a well-formed URL (e.g. http://www.company.com/index.html) and return

* pointers to the host part and the path part. Note that this function

* modifies the uri itself as well. It returns 0 on success, −1 if the URL is

* found to be malformed in any way.

*/

int parse_url( char *uri, char **host, char **path )

{

char *pos;

pos = strstr( uri, "//" );

if ( !pos )

{

return −1;

}

*host = pos + 2;

pos = strchr( *host, '/' );

if ( !pos )

{

*path = NULL;

}

else

{

*pos = '\0';

*path = pos + 1;

}

return 0;

}

You scan through the URL, looking for the delimiters // and / and replace them with null-terminators so that the caller can treat them as C strings. Notice that the calling function passes in two pointers to pointers; these should be null when the function starts and will be modified to point into the uri string, which came from argv.

The main routine that coordinates all of this is shown in Listing 1-3.

*/ int main( int argc, char *argv[ ] ) { int client_connection; char *host, *path; struct hostent *host_name; struct sockaddr_in host_address; #ifdef WIN32 WSADATA wsaData; #endif if ( argc < 2 ) { fprintf( stderr, "Usage: %s: <URL>\n", argv[ 0 ] ); return 1; } if ( parse_url( argv[ 1 ], &host, &path ) == −1 ) { fprintf( stderr, "Error - malformed URL '%s'.\n", argv[ 1 ] ); return 1; } printf( "Connecting to host '%s'\n", host );

After the URL has been parsed and the host is known, you must establish a socket to it. In order to do this, convert it from a human-readable host name, such as www.server.com, to a dotted-decimal IP address, such as 100.218.64.2. You call the standard gethostbyname library function to do this, and connect to the server. This is shown in Listing 1-4.

Listing 1-4: "http.c" main (continued)

// Step 1: open a socket connection on http port with the destination host.

#ifdef WIN32

if ( WSAStartup( MAKEWORD( 2, 2 ), &wsaData ) != NO_ERROR )

{

fprintf( stderr, "Error, unable to initialize winsock.\n" );

return 2;

}

#endif

client_connection = socket( PF_INET, SOCK_STREAM, 0 );

if ( !client_connection )

{

perror( "Unable to create local socket" );

return 2;

}

host_name = gethostbyname( host );

if ( !host_name )

{ perror( "Error in name resolution" ); return 3; } host_address.sin_family = AF_INET; host_address.sin_port = htons( HTTP_PORT ); memcpy( &host_address.sin_addr, host_name->h_addr_list[ 0 ], sizeof( struct in_addr ) ); if ( connect( client_connection, ( struct sockaddr * ) &host_address, sizeof( host_address ) ) == −1 ) { perror( "Unable to connect to host" ); return 4; } printf( "Retrieving document: '%s'\n", path );

Assuming nothing went wrong — the socket structure could be created, the hostname could be resolved to an IP address, the IP address was reachable, and the server accepted your connection on the well-known port 80 — you now have a usable (cleartext) socket with which to exchange data with the web server. Issue a GET command, display the result, and close the socket, as shown in Listing 1-5.

Listing 1-5: "http.c" main (continued)

http_get( client_connection, path, host );

display_result( client_connection );

printf( "Shutting down.\n" );

#ifdef WIN32

if ( closesocket( client_connection ) == −1 )

#else

if ( close( client_connection ) == −1 )

#endif

{

perror( "Error closing client connection" );

return 5;

}

#ifdef WIN32

WSACleanup();

#endif

return 0;

}

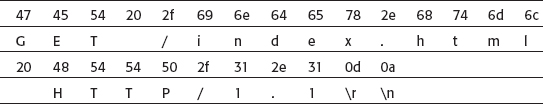

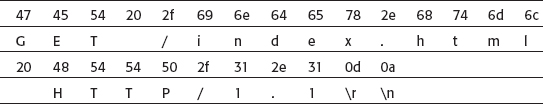

An HTTP GET command is a simple, plaintext command. It starts with the three ASCII-encoded letters GET, all in uppercase (HTTP is case sensitive), a space, the path to the document to be retrieved, another space, and the token HTTP/1.0 or HTTP/1.1 depending on which version of the HTTP protocol the client understands.

NOTE At the time of this writing, there are only two versions of HTTP; the differences are immaterial to this book.

The GET command itself is followed by a carriage-return/line-feed pair (0x0A 0x0D) and a colon-separated, CRLF-delimited list of headers that describe how the client wants the response to be returned. Only one header is required — the Host header, which is required to support virtual hosting, the situation where several hosts share one IP address or vice-versa. The Connection header is not required, but in general you should send it to indicate to the client whether you want it to Keep-Alive the connection — if you plan on requesting more documents on this same socket — or Close it. If you omit the Connection: Close header line, the server keeps the socket open until the client closes it. If you're just sending a single request and getting back a single response, it's easier to let the server just close the connection when it's done sending. The header list is terminated by an empty CRLF pair.

A minimal HTTP GET command looks like this:

GET /index.html HTTP/1.1 Host: www.server.com Connection: close

The code to format and submit a GET command over an established socket is shown in Listing 1-6. Note that the input is the socket itself — the connection argument — the path of the document being requested, and the host (to build the host header).

Listing 1-6: "http.c" http_get

#define MAX_GET_COMMAND 255

/**

* Format and send an HTTP get command. The return value will be 0

* on success, −1 on failure, with errno set appropriately. The caller

* must then retrieve the response.

*/

int http_get( int connection, const char *path, const char *host )

{

static char get_command[ MAX_GET_COMMAND ];

sprintf( get_command, "GET /%s HTTP/1.1\r\n", path );

if ( send( connection, get_command, strlen( get_command ), 0 ) == −1 )

{

return −1;

}

sprintf( get_command, "Host: %s\r\n", host );

if ( send( connection, get_command, strlen( get_command ), 0 ) == −1 )

{

return −1; } sprintf( get_command, "Connection: close\r\n\r\n" ); if ( send( connection, get_command, strlen( get_command ), 0 ) == −1 ) { return −1; } return 0; }

Finally, output the response from the server. To keep things simple, just dump the contents of the response on stdout. An HTTP response has a standard format, just like an HTTP request. The response is the token HTTP/1.0 or HTTP/1.1 depending on which version the server understands (which does not necessarily have to match the client's version), followed by a space, followed by a numeric code indicating the status of the request — errored, rejected, processed, and so on — followed by a space, followed by a textual, human-readable, description of the meaning of the status code.

Some of the more common status codes are shown in Table 1-1.

Table 1-1: Common status codes

| STATUS | MEANING |

| 200 | Everything was OK, requested document follows. |

| 302 | Requested document exists, but has been moved — new location follows. |

| 403 | Forbidden: Requested document exists, but you are not authorized to view it. |

| 404 | Requested document not found. |

| 500 | Internal Server Error. |

There are quite a few more status codes, as described in RFC 2616. The response status line is followed, again, by a CRLF, and a series of colon-separated, CRLF-delimited headers, a standalone CRLF/blank line end-of-headers marker, and the document itself. Here's an example HTTP response:

HTTP/1.1 200 OK Date: Tue, 13 Oct 2009 19:34:51 GMT Server: Apache Last-Modified: Fri, 27 Oct 2006 01:53:57 GMT ETag: "1876a-ff-316f5740" Accept-Ranges: bytes

Content-Length: 255 Vary: Accept-Encoding Connection: close Content-Type: text/html; charset=ISO-8859-1 <html> <head> <TITLE>Welcome to the server</TITLE> </head> <BODY BGCOLOR=ffffff> This is the server's homepage </BODY> </html>

Here's an example of a 404 "not found" error:

HTTP/1.1 404 Not Found Date: Tue, 13 Oct 2009 19:40:53 GMT Server: Apache Last-Modified: Fri, 27 Oct 2006 01:53:58 GMT ETag: "1875d-c5-317e9980" Accept-Ranges: bytes Content-Length: 197 Vary: Accept-Encoding Connection: close Content-Type: text/html; charset=ISO-8859-1 <!DOCTYPE HTML PUBLIC "-//IETF//DTD HTML 2.0//EN"> <html><head> <title>404 Not Found</title> </head><body> <h1>Not Found</h1> <p>The requested URL was not found on this server.</p> </body></html>

Even though the document requested was not found, a document was returned, which can be displayed in a browser to remind the user that something has gone wrong.

For testing purposes, you don't care about the response itself, as long as you get one. Therefore, don't make any efforts to parse these responses — just dump their contents, verbatim, on stdout as shown in Listing 1-7.

Listing 1-7: "http.c" display_result

#define BUFFER_SIZE 255

/**

* Receive all data available on a connection and dump it to stdout

*/

void display_result( int connection )

{

int received = 0;

static char recv_buf[ BUFFER_SIZE + 1 ]; while ( ( received = recv( connection, recv_buf, BUFFER_SIZE, 0 ) ) > 0 ) { recv_buf[ received ] = '\0'; printf( "%s", recv_buf ); } printf( "\n" ); }

This is all that's required to implement a bare-bones web client. Note, however, that because the socket created was a cleartext socket, everything that's transmitted between the client and the server is observable, in plaintext, to every host in between. In general, if you want to protect the transmission from eavesdroppers, you establish an SSL context — that is, secure the line — prior to sending the GET command.

One important topic related to HTTP is the HTTP proxy. Proxies are a bit tricky for SSL. Notice in Listing 1-4 that a socket had to be created from the client to the server before a document could be requested. This means that the client had to be able to construct a SYN packet, hand that off to a router, which hands it off to another router, and so on until it's received by the server. The server then constructs its own SYN/ACK packet, hands it off, and so on until it's received by the client. However, in corporate intranet environments, packets from outside the corporate domain are not allowed in and vice versa. In effect, there is no route from the client to the server with which it wants to connect.

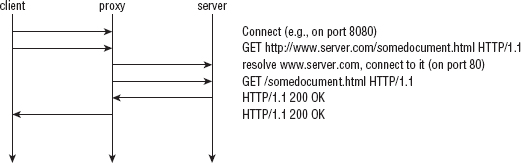

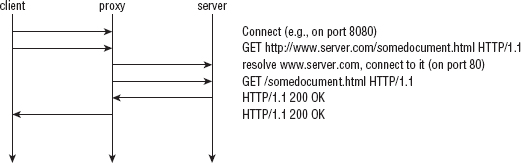

In this scenario, it's typical to set up a proxy server that can connect to the outside world, and have the client funnel its requests through the proxy. This changes the dynamics a bit; the client establishes a socket connection with the proxy server first, and issues a GET request to it as shown in Figure 1-2. After the proxy receives the GET request, the proxy examines the request to determine the host name, resolves the IP address, connects to that IP address on behalf of the client, re-issues the GET request, and forwards the response back to the client. This subtly changes the dynamics of HTTP. What's important to notice is that the client establishes a socket with the proxy server, and the GET request now includes the full URL.

Because you may well be reading this behind such a firewalled environment, and because proxies present some unique challenges for SSL, go ahead and add proxy support to the minimal HTTP client developed in the preceding section.

First of all, you need to modify the main routine to accept an optional proxy specification parameter. A proxy specification includes, of course, the hostname of the proxy server itself, but it also typically allows a username and password to be passed in, as most HTTP proxies are, or at least can be, authenticating. The standard format for a proxy specification is

http://[username:password@]hostname[:port]/

where hostname is the only part that's required. Modify your main routine as shown in Listing 1-8 to accept an optional proxy parameter, preceded by -p.

Listing 1-8: "http.c" main (with proxy support)

int main( int argc, char *argv[ ] )

{

int client_connection;

char *proxy_host, *proxy_user, *proxy_password;

int proxy_port;

char *host, *path;

struct hostent *host_name;

struct sockaddr_in host_address;

int ind;

#ifdef WIN32

WSADATA wsaData;

#endif

if ( argc < 2 )

{

fprintf( stderr,

"Usage: %s: [-p http://[username:password@]proxy-host:proxy-port]\

<URL>\n",

argv[ 0 ] );

return 1;

}

proxy_host = proxy_user = proxy_password = host = path = NULL;

ind = 1;

if ( !strcmp( "-p", argv[ ind ] ) )

{

if ( !parse_proxy_param( argv[ ++ind ], &proxy_host, &proxy_port,

&proxy_user, &proxy_password ) ) { fprintf( stderr, "Error - malformed proxy parameter '%s'.\n", argv[ 2 ] ); return 2; } ind++; } if ( parse_url( argv[ ind ], &host, &path ) == −1 )

If the first argument is -p, take the second argument to be a proxy specification in the canonical form and parse it. Either way, the last argument is still a URL.

If parse_proxy_param succeeds, proxy_host is a non-null pointer to the hostname of the proxy server. You need to make a few changes to your connection logic to support this correctly, as shown in Listing 1-9. First you need to establish a socket connection to the proxy host rather than the actual target HTTP host.

Listing 1-9: "http.c" main (with proxy support) (continued)

if ( proxy_host )

{

printf( "Connecting to host '%s'\n", proxy_host );

host_name = gethostbyname( proxy_host );

}

else

{

printf( "Connecting to host '%s'\n", host );

host_name = gethostbyname( host );

}

host_address.sin_family = AF_INET;

host_address.sin_port = htons( proxy_host ? proxy_port : HTTP_PORT );

memcpy( &host_address.sin_addr, host_name->h_addr_list[ 0 ],

sizeof( struct in_addr ) );

...

http_get( client_connection, path, host, proxy_host,

proxy_user, proxy_password );

Finally, pass the proxy host, user, and password to http_get. The new parse_proxy_param function works similarly to the parse_url function in Listing 1-2: pass in a pointer to the argv string, insert nulls at strategic places, and set char * pointers to the appropriate places within the argv string to represent the individual pieces, as shown in Listing 1-10.

Listing 1-10: "http.c" parse_proxy_param

int parse_proxy_param( char *proxy_spec,

char **proxy_host,

int *proxy_port,

char **proxy_user,

char **proxy_password )

{

char *login_sep, *colon_sep, *trailer_sep; // Technically, the user should start the proxy spec with // "http://". But, be forgiving if he didn't. if ( !strncmp( "http://", proxy_spec, 7 ) ) { proxy_spec += 7; }

In Listing 1-11, check to see if an authentication string has been supplied. If the @ symbol appears in the proxy_spec, it must be preceded by a "username:password" pair. If it is, parse those out; if it isn't, there's no error because the username and password are not strictly required.

Listing 1-11: "http.c" parse_proxy_param (continued)

login_sep = strchr( proxy_spec, '@' );

if ( login_sep )

{

colon_sep = strchr( proxy_spec, ':' );

if ( !colon_sep || ( colon_sep > login_sep ) )

{

// Error - if username supplied, password must be supplied.

fprintf( stderr, "Expected password in '%s'\n", proxy_spec );

return 0;

}

*colon_sep = '\0';

*proxy_user = proxy_spec;

*login_sep = '\0';

*proxy_password = colon_sep + 1;

proxy_spec = login_sep + 1;

}

Notice that, if a username and password are supplied, you modify the proxy_spec parameter to point to the character after the @. This way, proxy_spec now points to the proxy host whether an authentication string was supplied or not.

Listing 1-12 shows the rest of the proxy parameter parsing — the user can supply a port number if the proxy is listening on a non-standard port.

Listing 1-12: "http.c" parse_proxy_param (continued)

// If the user added a "/" on the end (as they sometimes do),

// just ignore it.

trailer_sep = strchr( proxy_spec, '/' );

if ( trailer_sep )

{

*trailer_sep = '\0';

}

colon_sep = strchr( proxy_spec, ':' );

if ( colon_sep )

{ // non-standard proxy port *colon_sep = '\0'; *proxy_host = proxy_spec; *proxy_port = atoi( colon_sep + 1 ); if ( *proxy_port == 0 ) { // 0 is not a valid port; this is an error, whether // it was mistyped or specified as 0. return 0; } } else { *proxy_port = HTTP_PORT; *proxy_host = proxy_spec; } return 1; }

The port number is also optional. If there's a : character before the end of the proxy specification, it denotes a port; otherwise, assume the standard HTTP port 80.

At this point, you have all the pieces you need for HTTP proxy support except for the changes to the actual http_get routine. Remember that, in ordinary, "proxy-less" HTTP, you start by establishing a connection to the target HTTP host and then send in a GET /path HTTP/1.0 request line. However, when connecting to a proxy, you need to send a whole hostname because the socket itself has just been established between the client and the proxy. The request line becomes GET http://host/path HTTP/1.0. Change http_get as shown in Listing 1-13 to recognize this case and send a proxy-friendly GET command if a proxy host parameter was supplied.

Listing 1-13: http_get (modified for proxy support)

int http_get( int connection,

const char *path,

const char *host,

const char *proxy_host,

const char *proxy_user,

const char *proxy_password )

{

static char get_command[ MAX_GET_COMMAND ];

if ( proxy_host )

{

sprintf( get_command, "GET http://%s/%s HTTP/1.1\r\n", host, path );

}

else

{

If the proxy is non-authenticating, this is all you need to do. If the proxy is an authenticating proxy, as most are, you need to supply an additional HTTP header line including the proxy authorization string.

Proxy-Authorization: [METHOD] [connection string]

[METHOD], according to RFC 2617, is one of BASIC or DIGEST. It's also common to see the non-standard NTLM in Microsoft environments. BASIC is, clearly, the simplest of the three, and the only one you'll support — hopefully, if you're behind a proxy, your proxy does, too. The format of connection string varies depending on the METHOD. For BASIC, it's base64_encode('username:password').

You may be somewhat familiar with Base 64 encoding, or at least be familiar with the term. In early modem-based communication systems, such as e-mail relay or UUCP systems, an unexpected byte value outside of the printable ASCII range 32–126 could cause all sorts of problems. Early modems interpreted byte code 6 as an acknowledgment, for example, wherever it occurred in the stream. This created problems when trying to transmit binary data such as compressed images or executable files. Various (incompatible) encoding methods were developed to map binary data into the range of printable ASCII characters; one of the most popular was Base64.

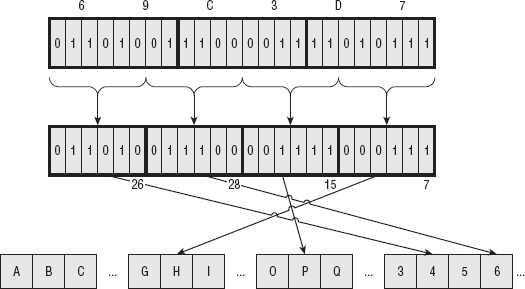

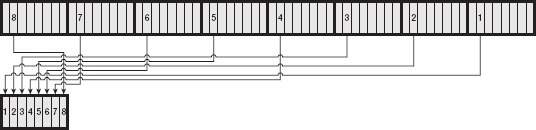

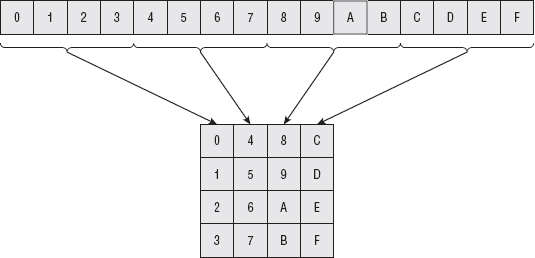

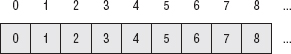

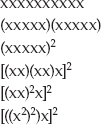

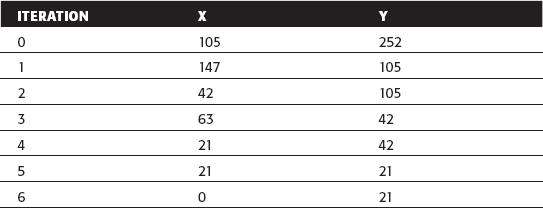

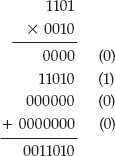

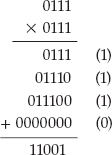

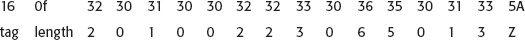

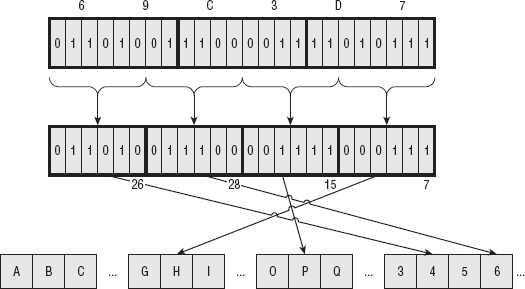

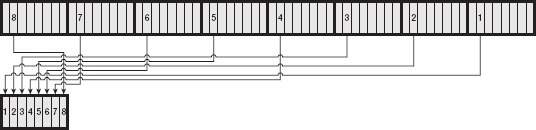

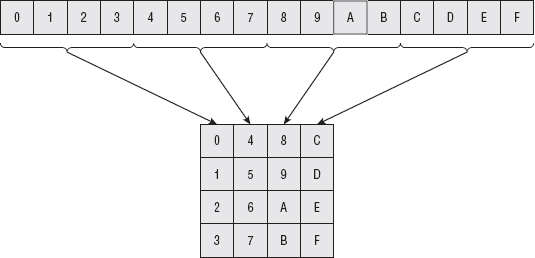

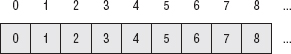

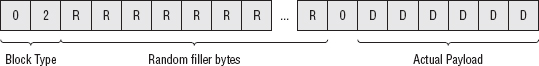

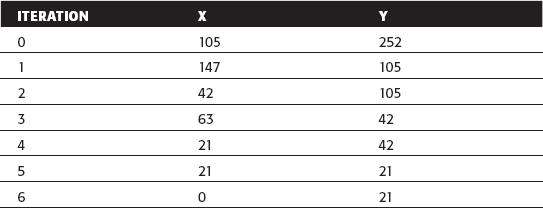

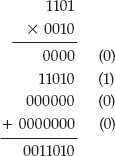

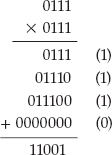

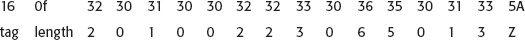

Base64 divides the input into 6-bit chunks — hence the name Base64 because 26=64 — and maps each 6-bit input into one of the printable ASCII characters. The first 52 combinations map to the upper- and lowercase alphabetic characters A–Z and a–z; the next 10 map to the numerals 0–9. That leaves two combinations left over to map. There's been some historical contention on exactly what these characters should be, but compatible implementations map them, arbitrarily, to the characters + and /. An example of a Base64 encoding is shown in Figure 1-3.

Because the input stream is, obviously, a multiple of 8 bits, dividing it into 6-bit chunks creates a minor problem. Because 24 is the least-common-multiple of 6 and 8, the input must be padded to a multiple of 24 bits (three bytes). Although Base64 could just mandate that the encoding routine add padding bytes to ensure alignment, that would complicate the decoding process. Instead the encoder adds two = characters if the last chunk is one byte long, one = character if the last chunk is two bytes long, and no = characters if the input is an even multiple of three bytes. This 6:8 ratio also means that the output is one third bigger than the input.

As you see in Listing 1-14, Base64 encoding is pretty simple to implement after you understand it; most of the complexity deals with non-aligned input:

Listing 1-14: "base64.c" base64_encode

static char *base64 =

"ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";

void base64_encode( const unsigned char *input, int len, unsigned char *output )

{

do

{

*output++ = base64[ ( input[ 0 ] & 0xFC ) >> 2 ];

if ( len == 1 )

{

*output++ = base64[ ( ( input[ 0 ] & 0x03 ) << 4 ) ];

*output++ = '=';

*output++ = '=';

break;

}

*output++ = base64[

( ( input[ 0 ] & 0x03 ) << 4 ) | ( ( input[ 1 ] & 0xF0 ) >> 4 ) ];

if ( len == 2 )

{

*output++ = base64[ ( ( input[ 1 ] & 0x0F ) << 2 ) ];

*output++ = '=';

break;

}

*output++ = base64[

( ( input[ 1 ] & 0x0F ) << 2 ) | ( ( input[ 2 ] & 0xC0 ) >> 6 ) ]; *output++ = base64[ ( input[ 2 ] & 0x3F ) ]; input += 3; } while ( len -= 3 ); *output = '\0'; }

Here, the output array is already assumed to have been allocated as 4/3 * len. The input masks select 6 bits of the input at a time and process the input in 3-byte chunks.

Base64 decoding is just as easy. Almost. Each input byte corresponds back to six possible output bits. This mapping is the exact inverse of the encoding mapping. However, when decoding, you have to be aware of the possibility that you can receive invalid data. Remember that the input is given in 8-bit bytes, but not every possible 8-bit combination is a legitimate Base64 character — this is, in fact, the point of Base64. You must also reject non-aligned input here; if the input is not a multiple of four, it didn't come from a conformant Base64 encoding routine. For these reasons, there's a bit more error-checking that you need to build into a Base64 decoding routine; when encoding, you can safely accept anything, but when decoding, you must ensure that the input actually came from a real Base64 encoder. Such a Base64 decoder is shown in Listing 1-15.

Listing 1-15: "base64.c" base64_decode

static int unbase64[] =

{

−1, −1, −1, −1, −1, −1, −1, −1, −1, −1, −1, −1, −1, −1, −1, −1, −1,

−1, −1, −1, −1, −1, −1, −1, −1, −1, −1, −1, −1, −1, −1, −1, −1,

−1, −1, −1, −1, −1, −1, −1, −1, −1, −1, 62, −1, −1, −1, 63, 52,

53, 54, 55, 56, 57, 58, 59, 60, 61, −1, −1, −1, 0, −1, −1, −1,

0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15,

16, 17, 18, 19, 20, 21, 22, 23, 24, 25, −1, −1, −1, −1, −1, −1,

26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41,

42, 43, 44, 45, 46, 47, 48, 49, 50, 51, −1, −1, −1, −1, −1, −1

};

int base64_decode( const unsigned char *input, int len, unsigned char *output )

{

int out_len = 0, i;

assert( !( len & 0x03 ) ); // Is an even multiple of 4

do

{

for ( i = 0; i <= 3; i++ )

{

// Check for illegal base64 characters

if ( input[ i ] > 128 || unbase64[ input[ i ] ] == −1 )

{ fprintf( stderr, "invalid character for base64 encoding: %c\n", input[ i ] ); return −1; } } *output++ = unbase64[ input[ 0 ] ] << 2 | ( unbase64[ input[ 1 ] ] & 0x30 ) >> 4; out_len++; if ( input[ 2 ] != '=' ) { *output++ = ( unbase64[ input[ 1 ] ] & 0x0F ) << 4 | ( unbase64[ input[ 2 ] ] & 0x3C ) >> 2; out_len++; } if ( input[ 3 ] != '=' ) { *output++ = ( unbase64[ input[ 2 ] ] & 0x03 ) << 6 | unbase64[ input[ 3 ] ]; out_len++; } input += 4; } while ( len -= 4 ); return out_len; }

Notice that unbase64 was declared as a static array. Technically you could have computed this from base64, but because this never changes, it makes sense to compute this once and hardcode it into the source. The –1 entries are non-base64 characters. If you encounter one in the decoding input, halt.

What does all of this Base64 stuff have to do with proxy authorization? Well, BASIC authorization has the client pass a username and a password to the proxy to identify itself. In a minor nod to security, HTTP requires that this username and password be Base64 encoded before being transmitted. This provides some safeguard (but not much) against accidental password leakage. Of course, even a lazy attacker with access to a packet sniffer could easily Base64 decode the proxy authorization line. In fact, the open-source Wireshark packet sniffer decodes it for you! Still, it's required by the specification, so you have to support it.

To support proxy authorization, add the following to http_get as shown in Listing 1-16.

Listing 1-16: "http.c" http_get (with proxy support) (continued)

sprintf( get_command, "Host: %s\r\n", host );

if ( send( connection, get_command, strlen( get_command ), 0 ) == −1 )

{

return −1; } if ( proxy_user ) { int credentials_len = strlen( proxy_user ) + strlen( proxy_password ) + 1; char *proxy_credentials = malloc( credentials_len ); char *auth_string = malloc( ( ( credentials_len * 4 ) / 3 ) + 1 ); sprintf( proxy_credentials, "%s:%s", proxy_user, proxy_password ); base64_encode( proxy_credentials, credentials_len, auth_string ); sprintf( get_command, "Proxy-Authorization: BASIC %s\r\n", auth_string ); if ( send( connection, get_command, strlen( get_command ), 0 ) == −1 ) { free( proxy_credentials ); free( auth_string ); return −1; } free( proxy_credentials ); free( auth_string ); } sprintf( get_command, "Connection: close\r\n\r\n" );

Now, if you invoke your http main routine with just a URL, it tries to connect directly to the target host; if you invoke it with parameters:

./http -p http://user:password@proxy-host:80/ http://some.server.com/path

You connect through an authenticating proxy and request the same page.

Because you probably also want to examine server-side SSL, develop a server-side HTTP application — what is usually referred to as a web server — and add SSL support to it, as well. The operation of a web server is pretty straightforward. It starts by establishing a socket on which to listen for new requests. By default, it listens on port 80, the standard HTTP port. When a new request is received, it reads an HTTP request, as described earlier, from the client, forms an HTTP response that either satisfies the request or describes an error condition, and either closes the connection (in the case of HTTP 1.0) or looks for another request (in the case of HTTP 1.1+).

The main routine in Listing 1-17 illustrates the outer shell of an HTTP server — or any other internet protocol server, for that matter.

int listen_sock; int connect_sock; int on = 1; struct sockaddr_in local_addr; struct sockaddr_in client_addr; int client_addr_len = sizeof( client_addr ); #ifdef WIN32 WSADATA wsaData; if ( WSAStartup( MAKEWORD( 2, 2 ), &wsaData ) != NO_ERROR ) { perror( "Unable to initialize winsock" ); exit( 0 ); } #endif if ( ( listen_sock = socket( PF_INET, SOCK_STREAM, 0 ) ) == −1 ) { perror( "Unable to create listening socket" ); exit( 0 ); } if ( setsockopt( listen_sock, SOL_SOCKET, SO_REUSEADDR, &on, sizeof( on ) ) == −1 ) { perror( "Setting socket option" ); exit( 0 ); } local_addr.sin_family = AF_INET; local_addr.sin_port = htons( HTTP_PORT ); local_addr.sin_addr.s_addr = htonl( INADDR_LOOPBACK ); //local_addr.sin_addr.s_addr = htonl( INADDR_ANY ); if ( bind( listen_sock, ( struct sockaddr * ) &local_addr, sizeof( local_addr ) ) == −1 ) { perror( "Unable to bind to local address" ); exit( 0 ); } if ( listen( listen_sock, 5 ) == −1 ) { perror( "Unable to set socket backlog" ); exit( 0 ); } while ( ( connect_sock = accept( listen_sock,

( struct sockaddr * ) &client_addr, &client_addr_len ) ) != −1 ) { // TODO: ideally, this would spawn a new thread. process_http_request( connect_sock ); } if ( connect_sock == −1 ) { perror( "Unable to accept socket" ); } return 0; }

This code is standard sockets fare. It issues the four required system calls that are required for a process to act as a TCP protocol server: socket, bind, listen, and accept. The accept call will block — that is, not return — until a client somewhere on the Internet calls connect with its IP and port number. The inside of this while loop handles the request. Note that there's nothing HTTP specific about this loop yet; this could just as easily be an e-mail server, an ftp server, an IRC server, and so on. If anything goes wrong, these calls return –1, perror prints out a description of what happened, and the process terminates.

There are two points to note about this routine:

Listing 1-18: "webserver.c" remote connection exclusion code

local_addr.sin_family = AF_INET; local_addr.sin_port = htons( HTTP_PORT ); local_addr.sin_addr.s_addr = htonl( INADDR_LOOPBACK ); //local_addr.sin_addr.s_addr = htonl( INADDR_ANY ); if ( bind( listen_sock, ( struct sockaddr * ) &local_addr, sizeof( local_addr ) ) == −1 )

If you uncomment the line below (INADDR_ANY), or just omit the setting of local_addr.sin_addr.s_addr entirely, you accept connections from any available interface, including the one connected to the public Internet. In this case, as a minor security precaution, disable this and only listen on the loopback interface. If you have local firewall software running, this is unnecessary, but just in case you don't, you should be aware of the security implications.

Now for the HTTP-specific parts of this server. Call process_http_request for each received connection. Technically, you ought to spawn a new thread here so that the main thread can cycle back around and accept new connections; however, for the current purpose, this bare-bones single-threaded server is good enough.

Processing an HTTP request involves first reading the request line that should be of the format

GET <path> HTTP/1.x

Of course, HTTP supports additional commands such as POST, HEAD, PUT, DELETE, and OPTIONS, but you won't bother with any of those — GET is good enough. If a client asks for any other functionality, return an error code 501: Not Implemented. Otherwise, ignore the path requested and return a canned HTML response as shown in Listing 1-19.

Listing 1-19: "webserver.c" process_http_request

static void process_http_request( int connection )

{

char *request_line;

request_line = read_line( connection );

if ( strncmp( request_line, "GET", 3 ) )

{

// Only supports "GET" requests

build_error_response( connection, 501 );

}

else

{

// Skip over all header lines, don't care

while ( strcmp( read_line( connection ), "" ) );

build_success_response( connection );

}

#ifdef WIN32

if ( closesocket( connection ) == −1 ) #else if ( close( connection ) == −1 ) #endif { perror( "Unable to close connection" ); } }

Because HTTP is line-oriented — that is, clients are expected to pass in multiple CRLF-delimited lines that describe a request — you need a way to read a line from the connection. fgets is a standard way to read a line of text from a file descriptor, including a socket, but it requires that you specify a maximum line-length up front. Instead, develop a simple (and simplistic) routine that autoincrements an internal buffer until it's read the entire line and returns it as shown in Listing 1-20.

Listing 1-20: "webserver.c" read_line

#define DEFAULT_LINE_LEN 255

char *read_line( int connection )

{

static int line_len = DEFAULT_LINE_LEN;

static char *line = NULL;

int size;

char c; // must be c, not int

int pos = 0;

if ( !line )

{

line = malloc( line_len );

}

while ( ( size = recv( connection, &c, 1, 0 ) ) > 0 )

{

if ( ( c == '\n' ) && ( line[ pos - 1 ] == '\r' ) )

{

line[ pos - 1 ] = '\0';

break;

}

line[ pos++ ] = c;

if ( pos > line_len )

{

line_len *= 2;

line = realloc( line, line_len );

}

}

return line;

}