From Concept to Implementation to Real-World Solutions

Copyright © 2016 Jason Strimpel, Maxime Najim. All rights reserved.

Printed in the United States of America.

Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472.

O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (http://safaribooksonline.com). For more information, contact our corporate/institutional sales department: 800-998-9938 or corporate@oreilly.com.

See http://oreilly.com/catalog/errata.csp?isbn=9781491932933 for release details.

The O’Reilly logo is a registered trademark of O’Reilly Media, Inc. Building Isomorphic JavaScript Apps, the cover image, and related trade dress are trademarks of O’Reilly Media, Inc.

While the publisher and the authors have used good faith efforts to ensure that the information and instructions contained in this work are accurate, the publisher and the authors disclaim all responsibility for errors or omissions, including without limitation responsibility for damages resulting from the use of or reliance on this work. Use of the information and instructions contained in this work is at your own risk. If any code samples or other technology this work contains or describes is subject to open source licenses or the intellectual property rights of others, it is your responsibility to ensure that your use thereof complies with such licenses and/or rights.

978-1-491-93293-3

[LSI]

I began my web development career many years ago, when I was hired as an administrative assistant at the University of California, San Diego. One of my job duties was to maintain the department website. It was the time of webmasters, table-based layouts, and CGI-BIN(s), and Netscape Navigator was still a major player in the browser market. As for my level of technical expertise, I think the following anecdote paints a clearer picture of my inexperience than Bob Ross’s desire for a happy little tree. I can clearly remember being concerned that if I sent an email with misspellings, the recipient would see my mistakes underlined in red the same way I saw them. Fortunately, I had a patient supervisor who assured me this was not the case and then handed me the keys to the website!

Fast-forward 15 years, and now I am an industry “expert” speaking at conferences, managing open source projects, coauthoring books, etc. I ask myself, “Well… How did I get here?” The answer to that question is not by letting the days go by—at least, not entirely so.

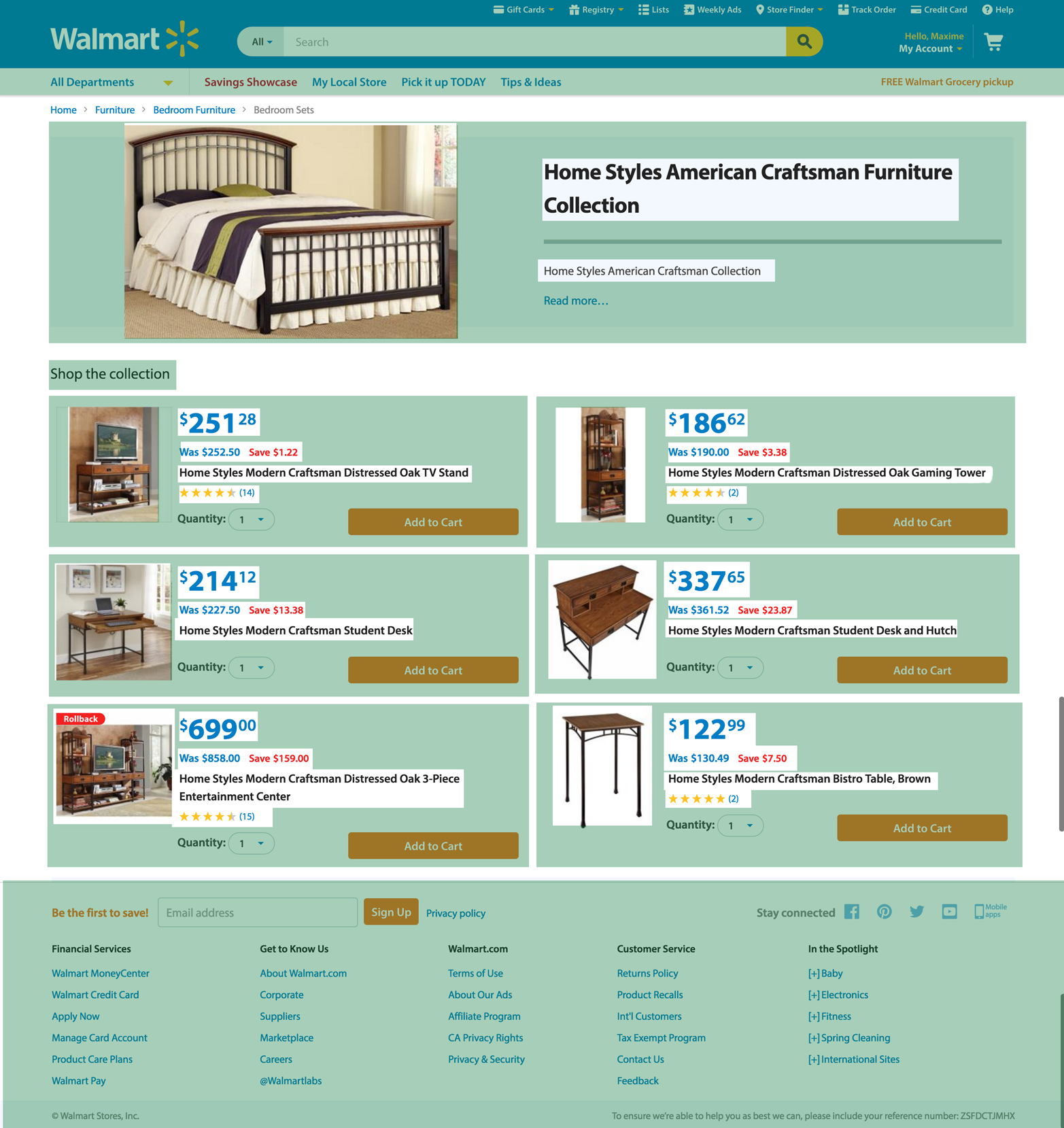

The latest chapter in my web journey is working on the Platform team at WalmartLabs, and it is here that my adventures in isomorphic JavaScript began. When I started at WalmartLabs I was placed on a team that was tasked with creating a new web framework from scratch that could power large, public-facing websites. In addition to meeting the minimum requirements for a public-facing website (SEO support and an optimized page load), it was important to our team to keep UI engineers, myself included, happy and productive. The obvious choice for appeasing UI engineers would have been to extend an existing single-page application (SPA) solution. The problem with the SPA model is that it doesn’t support the minimum requirements out of the box (see “The Perfect Storm: An All-Too-Common Story” and “Single-page web application” for more details), so we decided to take the isomorphic path. Here is why:

It uses a single code base for the UI with a common rendering lifecycle. This means no duplication of efforts, which reduces the UI development and maintenance cost, allowing teams to ship features faster.

It renders the initial response HTML on the server for a faster perceived page load, because the user doesn’t have to wait for the application to bootstrap and fetch data before the page is rendered in the browser. The improved perceived page load is even more important in areas with high network latency.

It supports SEO because it uses qualified URLs (no fragments) and gracefully degrades to server rendering (for subsequent page requests) for clients that don’t support the History API.

It uses distributed rendering of the SPA model for subsequent page requests for clients that support the History API, which lessens application server loads.

It gives UI engineers full control of the UI, be it on the server or the client, and provides clear lines of separation between the back- and frontends, which helps reduce operating costs.
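The graceful degradation described in the list above can be sketched roughly as follows. This is a minimal illustration, not the framework the team built: the function names and the `renderOnClient` callback are hypothetical, and `win` stands in for the browser's `window` object so the logic can be exercised outside a browser.

```javascript
// Returns true when the window-like object exposes the History API.
function supportsHistoryApi(win) {
  return Boolean(win.history && typeof win.history.pushState === 'function');
}

// Navigates to `url`. Clients with the History API update the address bar
// and render in the browser; other clients fall back to a full page request,
// which the server answers with rendered HTML.
// `renderOnClient` is a hypothetical callback supplied by the application.
function navigate(win, url, renderOnClient) {
  if (supportsHistoryApi(win)) {
    win.history.pushState({}, '', url); // qualified URL, no fragment
    renderOnClient(url);
    return 'client';
  }
  win.location.assign(url); // full document request, rendered on the server
  return 'server';
}
```

In a browser this would be called as `navigate(window, '/products/42', render)`. Because both branches use the same qualified URL, a crawler or an older browser requesting that URL directly still receives a complete page.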

Those are the primary reasons our team went down the isomorphic JavaScript path. Before we get into the details of isomorphic JavaScript, though, it is a good idea to provide some background about the environment in which we find ourselves today.

It is hard to imagine, but the Web of today did not always exist. It did not always have JavaScript or CSS, either. They were introduced to browsers to provide an interaction model and a separation of concerns. Remember the numerous articles advocating the separation of structure, style, and behavior? Even with the addition of these technologies, the architecture of applications changed very little. A document was requested using a URI, parsed by the browser, and rendered. The only difference was the UI was a bit richer thanks to JavaScript. Then Microsoft introduced a technology that would be the catalyst for transforming the Web into an application platform: XMLHttpRequest.

As much disdain as frontend engineers have for Microsoft and Internet Explorer, they should also harbor an even greater sense of gratitude. If it weren’t for Microsoft, frontend engineers would likely have much less fulfilling careers. Without the advent of XMLHttpRequest there would not be Ajax; without Ajax we wouldn’t have such a great need to modify documents; without the need to modify documents we wouldn’t have the need for jQuery. You see where this is going? We would not have the rich landscape of frontend MV* libraries and the single-page application pattern, which gave way to the History API. So next time you are battling Internet Explorer, make sure to balance your hatred with gratitude for Microsoft. After all, they changed the course of history and laid the groundwork that provides you with a playground in which to exercise your mind.

As influential as Ajax was in shaping the web platform, it also left behind a path of destruction in the form of technical debt. Ajax blurred the lines of what had previously been a clearly defined model, where the browser requests, receives, and parses a full document response when a user navigates to a new page or submits form data. This all changed when Ajax made its way into mainstream web development. An engineer could now respond to a user’s request for more data or another view without the overhead of requesting a new document from the server. This allowed an application to update regions of a page. This ability drastically optimized both the client and the server and vastly improved the user experience. Unfortunately, client application architecture was virtually nonexistent, and those tasked with working in the view layer did not have the background required to properly support this change of paradigm. These factors compounded with time and turned applications into maintenance nightmares. The lines had been blurred, and a period of painful growth was about to begin.

Imagine you are on a team that maintains the code responsible for rendering the product page for an ecommerce application. In the righthand gutter of the product page is a carousel of consumer reviews that a user can paginate through. When a user clicks on a review pagination link, the client updates the URI and makes a request to the server to fetch the product page. As an engineer, this inefficiency bothers you greatly. You shouldn’t need to reload the entire page and make the data calls required to fully rerender the page. All you really need to do is GET the HTML for the next page of reviews. Fortunately you keep up on technical advances in the industry and you’ve recently learned of Ajax, which you have been itching to try out. You put together a proof of concept (POC) and pitch it to your manager. You look like a wizard. The POC is moved into production like all other POCs.

You are satisfied until you hear of a new data-interchange format called JSON being espoused by the unofficial spokesman of JavaScript, Douglas Crockford. You are immediately dissatisfied with your current implementation. The next day you put together a new POC using something you read about called micro-templating. You show the new POC to your manager. It is well-received and moved into production. You are a god among mortal engineers. Then there is a bug in the reviews code. Your manager looks to you to resolve the bug because you implemented this magic. You review the code and assure your manager that the bug is in the server-side rendering. You then get to have the joyful conversation about why there are two different rendering implementations. After you finish explaining why Java will not run in the browser, you assure your manager that the duplication was worth the cost because it greatly improved the user experience. The bug is then passed around like a hot potato until eventually it is fixed.

Despite the DRY (don’t repeat yourself) violations, you are hailed as an expert. Slowly the pattern you implemented is copied throughout the code base. But as your pattern permeates the code base, the unthinkable happens. The bug count begins to rise, and developers are afraid to make code changes out of fear of causing a regression. The technical debt is now larger than the national deficit, engineering managers are getting pushback from developers, and product people are getting pushback from engineering managers. The application is brittle and the company is unable to react quickly enough to changes in the market. You feel an overwhelming sense of guilt. Luckily, you have been reading about this new pattern called single-page application…

Recently you have been reading articles about people’s frustration with the lack of architecture in the frontend. Often these people blame jQuery, even though it was only intended to be a façade for the DOM. Fortunately, others in the industry have already faced the exact problem you are facing and did not stop at the uninformed critiques of others. One of them was Jeremy Ashkenas, the author of Backbone.

You take a look at Backbone and read some articles. You are sold. It separates application logic from data retrieval, consolidates UI code to a single language and runtime, and significantly reduces the impact on the servers. “Eureka!” you shout triumphantly in your head. This will solve all our problems. You put together another POC, and so it goes.

You are soon known as the savior. Your newest SPA pattern is adopted company-wide. Bug counts begin to drop and engineering confidence returns. The fear once associated with shipping code has practically vanished. Then a product person comes knocking at your door and informs you that site visits have plummeted since the SPA model was implemented. Welcome to the joys of the hash fragment hack. After some exhaustive research, you determine that search engines do not take into account the window.location.hash portion of the URI that the Backbone.Router uses to create linkable, bookmarkable, shareable page views. So when a search engine crawls the application, there is not any content to index. Now you are in an even worse place than you were before. This is having a direct impact on sales. So you begin a cycle of research and development once again. It turns out you have two options. The first option is to spin up new servers, emulate the DOM to run your client application, and redirect search engines to these servers. The second option is to pay another company to solve the problem for you. Both options have a cost, which is in addition to the drop in revenues that the SPA implementation cost the company.
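One way to see why hash-based routes leave the server with nothing to render is to compare how a fragment URL and a path URL break down. The sketch below uses the standard `URL` class; the example addresses and the `requestTarget` helper are hypothetical. It relies on the fact that the fragment is kept by the client and is not part of the HTTP request.

```javascript
// Two hypothetical addresses for the same product view.
const hashRoute = new URL('http://example.com/#/products/42');
const pathRoute = new URL('http://example.com/products/42');

// The part of the URL that is sent to the server in the request line.
// The fragment (url.hash) is deliberately absent: it never leaves the client.
function requestTarget(url) {
  return url.pathname + url.search;
}

console.log(requestTarget(hashRoute)); // "/"
console.log(hashRoute.hash);           // "#/products/42"
console.log(requestTarget(pathRoute)); // "/products/42"
```

With the hash route, every view of the application looks like a request for `/` from the server's point of view, so only client-side code can tell the views apart.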

The previous story was spun together from personal experience and stories that I have read or heard from other engineers. If you have ever worked on a particular web application for long enough, then I am sure you have similar stories and experiences. Some of these issues are from days of yore and some of them still exist today. Some potential issues were not even highlighted—e.g., poorly optimized page loads and perceived rendering. Consolidating the route response/rendering lifecycle to a common code base that runs on the client and server could potentially solve these problems and others. This is what isomorphic JavaScript is all about. It is about taking the best from two different architectures to create easier-to-maintain applications that provide better user experiences.

The primary goal of this book is to provide the foundational knowledge required to implement and understand existing isomorphic JavaScript solutions available in the industry today. The intent is to provide you with the information required to make an informed decision as to whether isomorphic JavaScript is a viable solution for your use case, and then allow some of the brightest minds in the industry to share their solutions so that you do not have to reinvent the wheel.

Part I provides an introduction to the subject, beginning with a detailed examination of the different kinds of web application architectures. It covers the rationale and use cases for isomorphic JavaScript, such as SEO support and improving perceived page loads. It then outlines the different types of isomorphic JavaScript applications, such as real-time and SPA-like applications. It also covers the different pieces that make up isomorphic solutions, like implementations that provide environment shims/abstractions and implementations that are truly environment agnostic. The section concludes by laying the code foundation for Part II.

Part II breaks the topic down into the key concepts that are common in most isomorphic JavaScript solutions. Each concept is implemented without the aid of existing libraries such as React, Backbone, or Ember. This is done so that the concept is not obfuscated by a particular implementation.

In Part III, industry experts weigh in on the topic with their solutions.

The following typographical conventions are used in this book:

Italic: Indicates new terms, URLs, email addresses, filenames, and file extensions.

Constant width: Used for program listings, as well as within paragraphs to refer to program elements such as variable or function names, class, data types, statements, and keywords. Also used for module and package names and for commands and command-line output.

Constant width bold: Shows commands or other text that should be typed literally by the user.

Constant width italic: Shows text that should be replaced with user-supplied values or by values determined by context.

This element signifies a tip or suggestion.

This element signifies a general note.

This element indicates a warning or caution.

Supplemental material (code examples, exercises, etc.) is available for download at https://github.com/isomorphic-javascript-book.

This book is here to help you get your job done. In general, if example code is offered with this book, you may use it in your programs and documentation. You do not need to contact us for permission unless you’re reproducing a significant portion of the code. For example, writing a program that uses several chunks of code from this book does not require permission. Selling or distributing a CD-ROM of examples from O’Reilly books does require permission. Answering a question by citing this book and quoting example code does not require permission. Incorporating a significant amount of example code from this book into your product’s documentation does require permission.

We appreciate, but do not require, attribution. An attribution usually includes the title, author, publisher, and ISBN. For example: “Building Isomorphic JavaScript Apps by Jason Strimpel and Maxime Najim (O’Reilly). Copyright 2016 Jason Strimpel and Maxime Najim, 978-1-491-93293-3.”

If you feel your use of code examples falls outside fair use or the permission given above, feel free to contact us at permissions@oreilly.com.

Safari Books Online is an on-demand digital library that delivers expert content in both book and video form from the world’s leading authors in technology and business.

Technology professionals, software developers, web designers, and business and creative professionals use Safari Books Online as their primary resource for research, problem solving, learning, and certification training.

Safari Books Online offers a range of plans and pricing for enterprise, government, education, and individuals.

Members have access to thousands of books, training videos, and prepublication manuscripts in one fully searchable database from publishers like O’Reilly Media, Prentice Hall Professional, Addison-Wesley Professional, Microsoft Press, Sams, Que, Peachpit Press, Focal Press, Cisco Press, John Wiley & Sons, Syngress, Morgan Kaufmann, IBM Redbooks, Packt, Adobe Press, FT Press, Apress, Manning, New Riders, McGraw-Hill, Jones & Bartlett, Course Technology, and hundreds more. For more information about Safari Books Online, please visit us online.

Please address comments and questions concerning this book to the publisher:

O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472

We have a web page for this book, where we list errata, examples, and any additional information. You can access this page at http://bit.ly/building-isomorphic-javascript-apps.

To comment or ask technical questions about this book, send email to bookquestions@oreilly.com.

For more information about our books, courses, conferences, and news, see our website at http://www.oreilly.com.

Find us on Facebook: http://facebook.com/oreilly

Follow us on Twitter: http://twitter.com/oreillymedia

Watch us on YouTube: http://www.youtube.com/oreillymedia

First, I have to thank my wife Lasca for her patience and support. Every day I am grateful for your intellect, your humor, your compassion, and your love. I feel privileged and humbled that you chose to go through life with me. You make me an infinitely better person. Thanks for loving me.

Next, I would like to thank my coauthor and colleague Max. Without your passion, knowledge, ideas, and expertise my efforts would have fallen short. Your insights into software architecture and observations inspire me daily. Thank you.

I would also like to thank my editor Ally. Your ideas, questions, and edits make me appear to be a much better writer than I actually am. Thank you.

Finally, I would like to thank all contributors to Part III who have graciously given their time and chosen to share their stories with the reader. Your chapters help showcase the depth of isomorphic JavaScript and the actual diversity of solutions. Your unique solutions illustrate the true ingenuity at the heart of every engineer. Thank you.

Foremost, I’d like to thank my family—my wife Nicole and our children Tatiana and Alexandra—for their support and encouragement throughout the writing and publishing of this book.

I’m also eternally grateful to Jason for asking me to coauthor this book with him. I felt privileged and honored to write and collaborate with you throughout this book. This was a once-in-a-lifetime opportunity, and it was your ingenuity, knowledge, and hard work that made this opportunity into a reality. I can’t thank you enough. Likewise, a big thanks goes to our editor Allyson for her invaluable support and consultation throughout this process.

And finally, a big special thanks to the contributors to Part III who took time from their busy schedules to share their stories and experiences with us all. Thank you!

Ever since the term “Golden Age” originated with the early Greek and Roman poets, the phrase has been used to denote periods of time following certain technological advancements or innovations. In the Golden Ages of Radio and Television in the 20th century, writers and artists applied their skills to new mediums to create something fresh and compelling. Perhaps we are now in the Golden Age of JavaScript, although only time will tell. Beyond a doubt, JavaScript has paved the road toward a new age of desktop-like applications running in the browser.

In the past decade, we’ve seen the Web evolve as a platform for building rich and highly interactive applications. The web browser is no longer simply a document renderer, nor is the Web simply a bunch of documents linked together. Websites have evolved into web apps. This means more and more of the web app logic is running in the browser instead of on the server. Yet, in the past decade, we’ve equally seen user expectations evolve. The initial page load has become more critical than ever before. According to a Radware report, in 1999, the average user was willing to wait 8 seconds for a page to load. By 2010, 57% of online shoppers said that they would abandon a page after 3 seconds if nothing was shown. And here lies the problem of the Golden Age of JavaScript: the client-side JavaScript that makes the pages richer and more interactive also increases the page load times, creating a poor initial user experience. Page load times ultimately impact a company’s “bottom line.” Both Amazon.com and Walmart.com have reported that for every 100 milliseconds of improvement in their page load times, they were able to grow incremental revenue by up to 1%.

In Part I of this book we will discuss the concepts of isomorphic JavaScript and how isomorphic rendering can dramatically improve the user experience. We’ll also discuss isomorphic JavaScript as a spectrum, looking at different categories of isomorphic code. Finally, we’ll look beyond server-side rendering at how isomorphic JavaScript can help in creating complex, live-updating, and collaborative real-time applications.

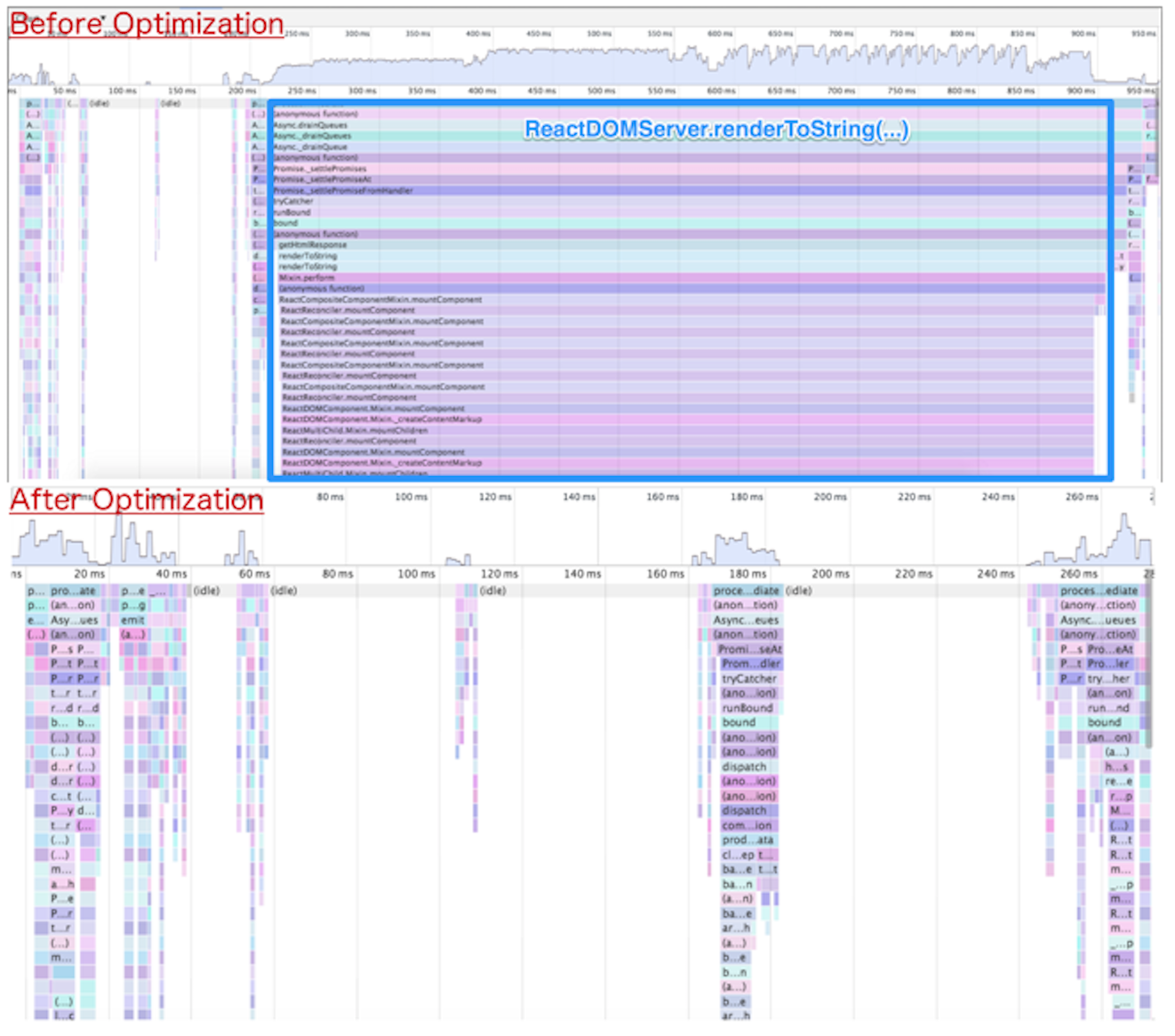

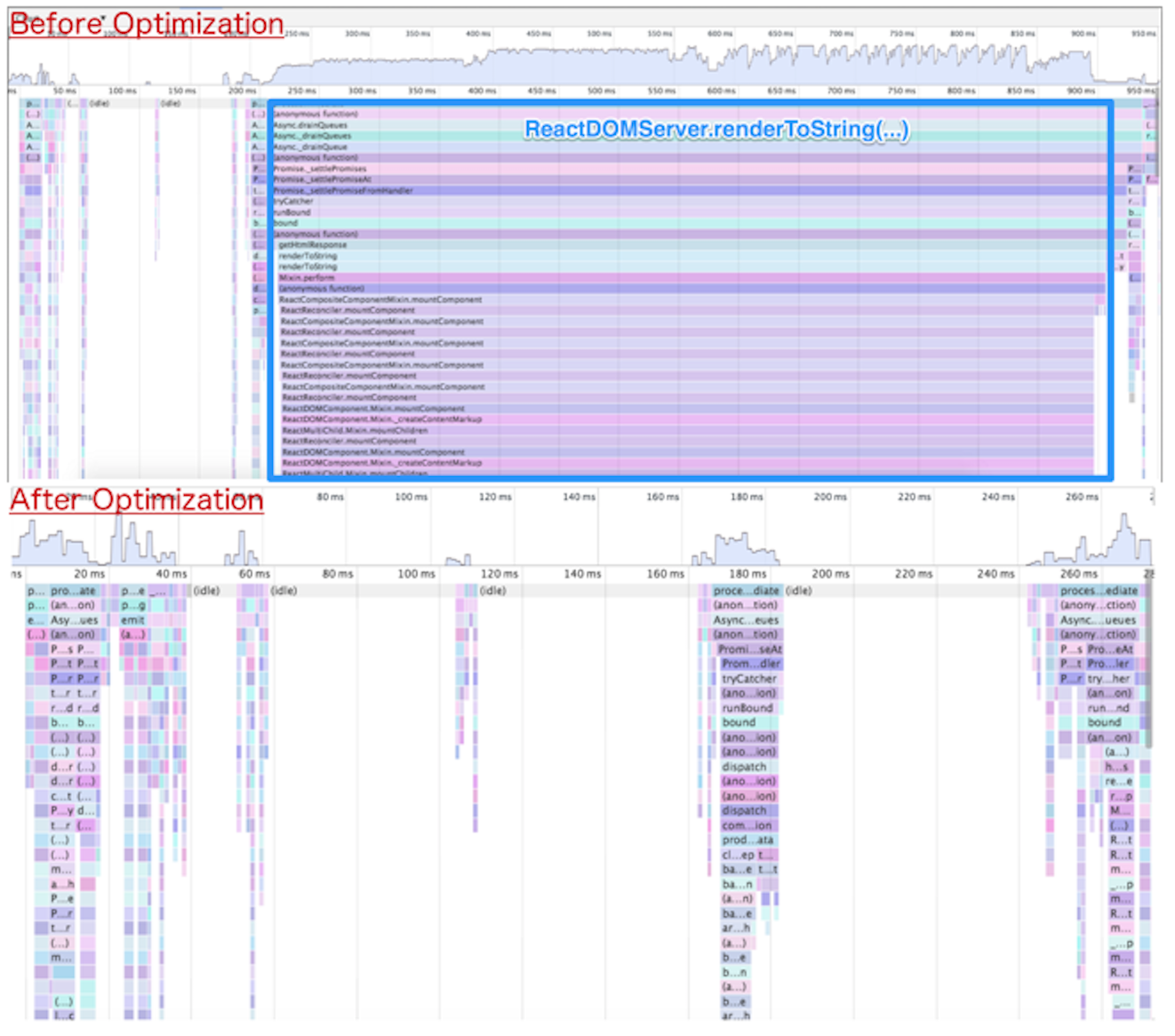

In 2010, Twitter released a new, rearchitected version of its site. This “#NewTwitter” pushed the UI rendering and logic to the JavaScript running in the user’s browser. For its time, this architecture was groundbreaking. However, within two years, Twitter had released a re-rearchitected version of its site that moved the rendering back to the server. This allowed Twitter to drop the initial page load times to one-fifth of what they were previously. Twitter’s move back to server-side rendering caused quite a stir in the JavaScript community. What its developers and many others soon realized was that client-side rendering has a very noticeable impact on performance.

The biggest weakness in building client-side web apps is the expensive initial download of large JavaScript files. TCP (Transmission Control Protocol), the prevailing transport of the Internet, has a congestion control mechanism called slow start, which means data is sent in an incrementally growing number of segments. Ilya Grigorik, in his book High Performance Browser Networking (O’Reilly), explains how it takes “four roundtrips… and hundreds of milliseconds of latency, to reach 64 KB of throughput between the client and server.” Clearly, the first few KB of data sent to the user are essential to a great user experience and page responsiveness.
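The arithmetic behind the quoted figure can be sketched with a simplified model of slow start. This is not a TCP implementation: it assumes the congestion window doubles on every roundtrip, an initial window of 4 segments, and a segment size of 1,460 bytes. Those values and the function name are assumptions chosen for illustration.

```javascript
// Counts the roundtrips needed for the congestion window to grow large
// enough to cover `targetWindowBytes`, assuming it doubles each roundtrip.
function slowStartRoundTrips(targetWindowBytes, initialCwndSegments, segmentBytes) {
  const targetSegments = Math.ceil(targetWindowBytes / segmentBytes);
  let cwnd = initialCwndSegments;
  let roundTrips = 0;
  while (cwnd < targetSegments) {
    cwnd *= 2; // exponential growth phase
    roundTrips += 1;
  }
  return roundTrips;
}

// 65,535 bytes is about 45 segments; the window grows 4 -> 8 -> 16 -> 32 -> 64.
console.log(slowStartRoundTrips(65535, 4, 1460)); // 4
```

Under these assumptions, a connection with 100 ms of roundtrip latency would spend roughly 400 ms ramping up before it could send 64 KB in a single window, which is why the size of the initial response matters so much.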

The rise of client-side JavaScript applications that consist of no markup other than a <script> tag and an empty <body> has created a broken Web of slow initial page loads, hashbang (#!) URL hacks (more on that later), and poor crawlability for search engines. Isomorphic JavaScript is about fixing this brokenness by consolidating the code base that runs on the client and the server. It’s about providing the best from two different architectures and creating applications that are easier to maintain and provide better user experiences.

Isomorphic JavaScript applications are simply applications that share the same JavaScript code between the browser client and the web application server. Such applications are isomorphic in the sense that they take on equal (iso) form or shape (morphosis) regardless of which environment they are running on, be it the client or the server. Isomorphic JavaScript is the next evolutionary step in the advancement of JavaScript. But advancements in software development often seem like a pendulum, accelerating toward an equilibrium position but always oscillating, swinging back and forth. If you’ve done software development for some time, you’ve likely seen design approaches come and go and come back again. It seems in some cases we’re never able to find the right balance, a harmonious equilibrium between two opposite approaches.
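As a rough sketch of what sharing code between environments can look like, the function below produces the same markup wherever it runs; only the last step, deciding what to do with the result, depends on the environment. The function names and the product shape are hypothetical.

```javascript
// Escapes characters that have special meaning in HTML.
function escapeHtml(value) {
  const entities = { '&': '&amp;', '<': '&lt;', '>': '&gt;', '"': '&quot;', "'": '&#39;' };
  return String(value).replace(/[&<>"']/g, (ch) => entities[ch]);
}

// Shared rendering logic: no reference to browser or server APIs.
function renderProductTitle(product) {
  return '<h1 class="product-title">' + escapeHtml(product.name) + '</h1>';
}

const html = renderProductTitle({ name: 'Tea & Biscuits' });

if (typeof document === 'undefined') {
  // Server: the markup would be written into the HTTP response.
  console.log(html);
} else {
  // Browser: the same markup updates the current document.
  document.body.innerHTML = html;
}
```

Keeping the environment check at the edge, rather than inside `renderProductTitle`, is one design choice that lets the rendering function stay identical on both sides.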

This is most true of approaches to web applications over the last two decades. We’ve seen the Web evolve from its humble roots of blue hyperlink text on a static page to rich user experiences that resemble full-blown native applications. This was made possible by a major swing in the web client–server model, moving rapidly from a fat-server, thin-client approach to a thin-server, fat-client approach. But this shift in approaches has created plenty of issues that we will discuss in greater detail later in this chapter. For now, suffice it to say there is a need for a harmonious equilibrium of a shared fat-client, fat-server approach. But in order to truly understand the significance of this equilibrium, we must take a step back and look at how web applications have evolved over the last few decades.

In order to understand why isomorphic JavaScript solutions came to be, we must first understand the climate from which the solutions arose. The first step is identifying the primary use case.

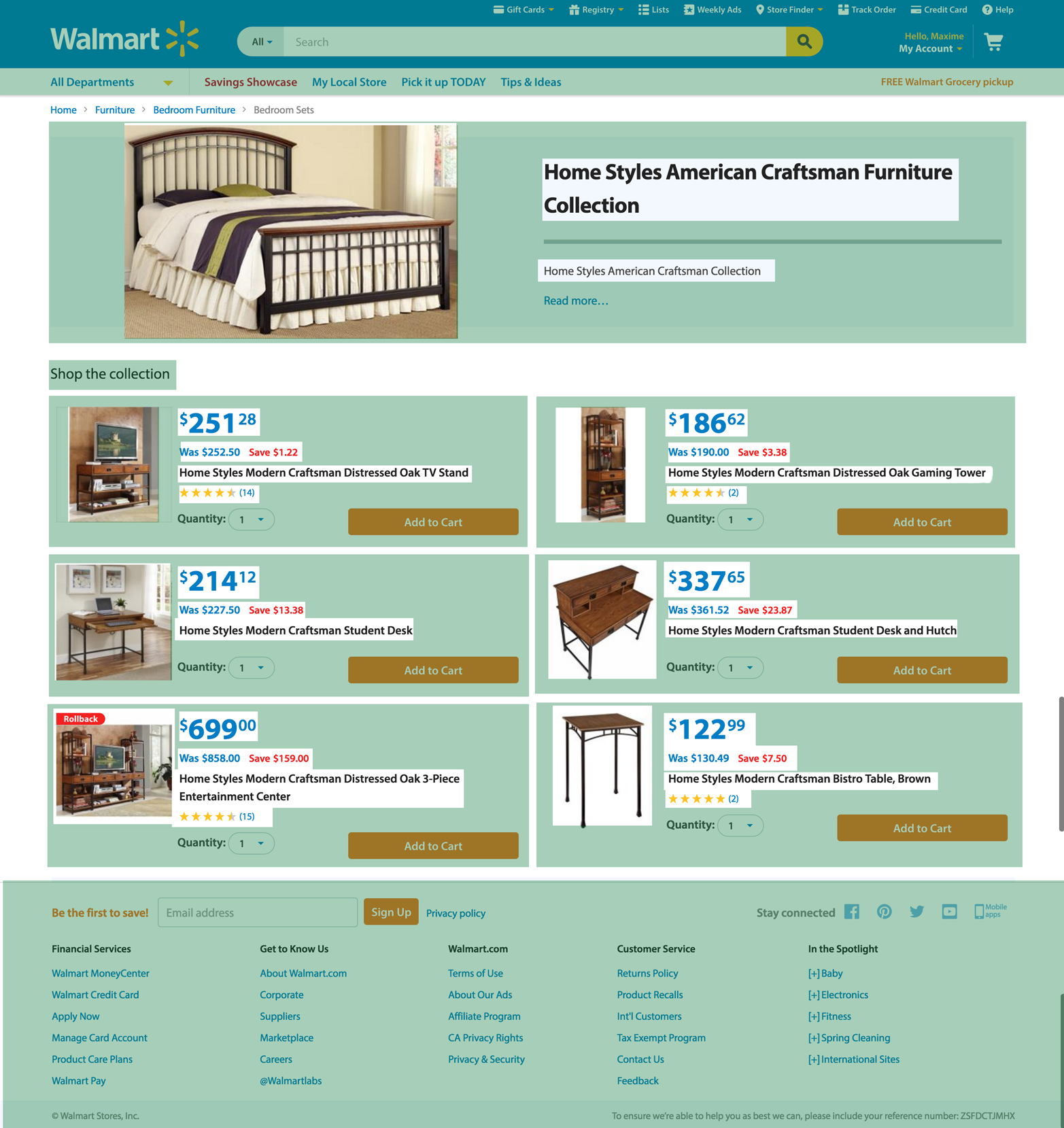

Chapter 2 introduces two different types of isomorphic JavaScript applications and examines their architectures. The primary type of isomorphic JavaScript application explored in this book is the ecommerce web application.

The creation of the World Wide Web is attributed to Tim Berners-Lee, who, while working for a nuclear research organization on a project known as “Enquire,” experimented with the concept of hyperlinks. In 1989, Tim applied the concept of hyperlinks and put a proposal together for a centralized database that contained links to other documents. Over the course of time, this database has morphed into something much larger and has had a huge impact on our daily lives (e.g., through social media) and business (ecommerce). We are all teenagers stuck in a virtual mall. The variety of content and shopping options empowers us to make informed decisions and purchases. Businesses realize the plethora of choices we have as consumers, and are greatly concerned with ensuring that we can find and view their content and products, with the ultimate goal of achieving conversions (buying stuff), so much so that there are search engine optimization (SEO) experts whose only job is to make content and products appear higher in search results. However, that is not where the battle for conversions ends. Once consumers can find the products, the pages must load quickly and be responsive to user interactions, or else the businesses might lose the consumers to competitors. This is where we, engineers, enter the picture, and we have our own set of concerns in addition to the business’s concerns.

As engineers, we have a number of concerns, but for the most part these concerns fall into the main categories of maintainability and efficiency. That is not to say that we do not consider business concerns when weighing technical decisions. As a matter of fact, good engineers do exactly the opposite: they find the optimal engineering solution by contemplating the short- and long-term pros and cons of each possibility within the context of the business problem at hand.

Taking into account the primary business use case, an ecommerce application, we are going to examine a couple of different architectures within the context of history. Before we take a look at the architectures, we should first identify some key acceptance criteria, so we can fairly evaluate the different architectures. In order of importance:

The application should be able to be indexed by search engines.

The application’s first page load should be optimized—i.e., the critical rendering path should be part of the initial response.

The application should be responsive to user interactions (e.g., optimized page transitions).

The critical rendering path is the content that is related to the primary action a user wants to take on the page. In the case of an ecommerce application it would be a product description. In the case of a news site it would be an article’s content.

These business criteria will also be weighed against the primary engineering concerns, maintainability and efficiency, throughout the evaluation process.

As mentioned in the previous section, the Web was designed and created to share information. Since the premise of the World Wide Web was the work done for the Enquire project, it is no surprise that when the Web first started, web pages were simply multipage text documents that linked to other text documents. In the early 1990s, most of the Web was rendered as complete HTML pages. The mechanisms that supported (and continue to support) it are HTML, URIs, and HTTP. HTML (Hypertext Markup Language) is the specification for the markup that is translated into the document object model by browsers when the markup is parsed. The URI (uniform resource identifier) is the name that identifies a resource; in the case of a URL, it also identifies the server that should respond to a request. HTTP (Hypertext Transfer Protocol) is the protocol used to transfer resources between client and server, connecting everything together. These three mechanisms power the Web and shaped the architecture of the classic web application.

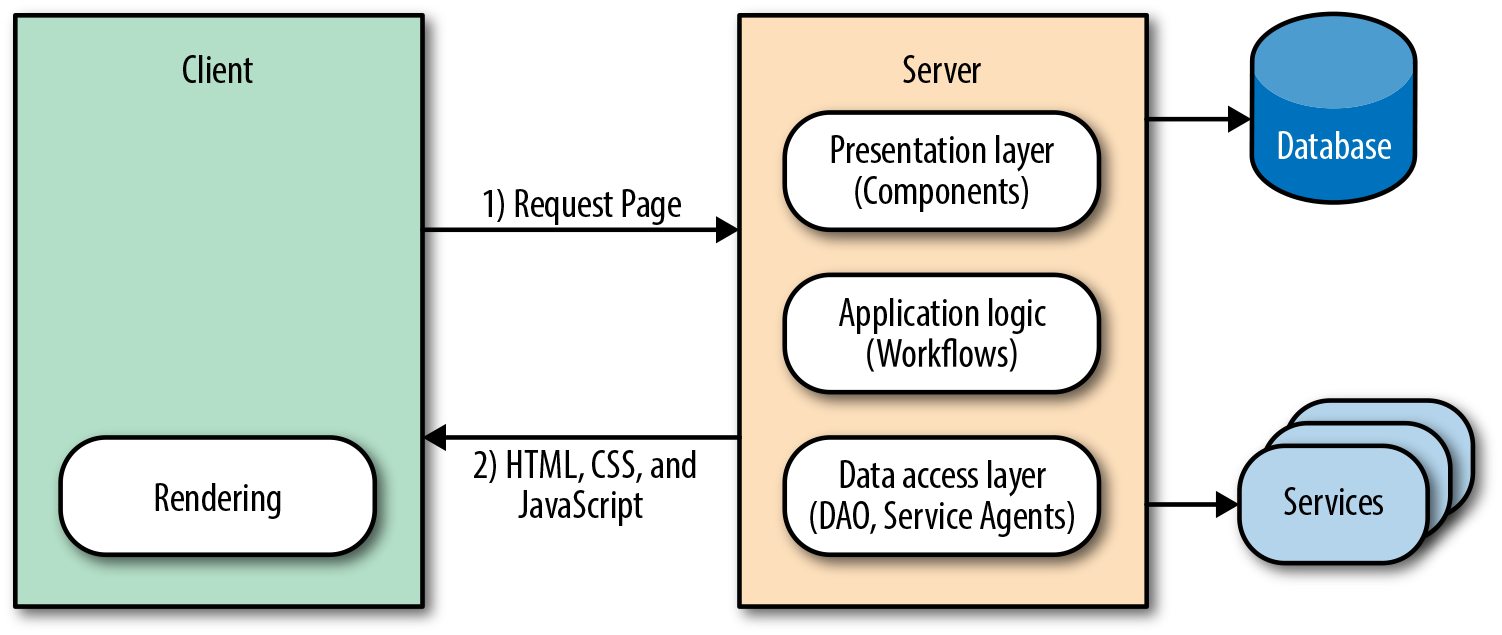

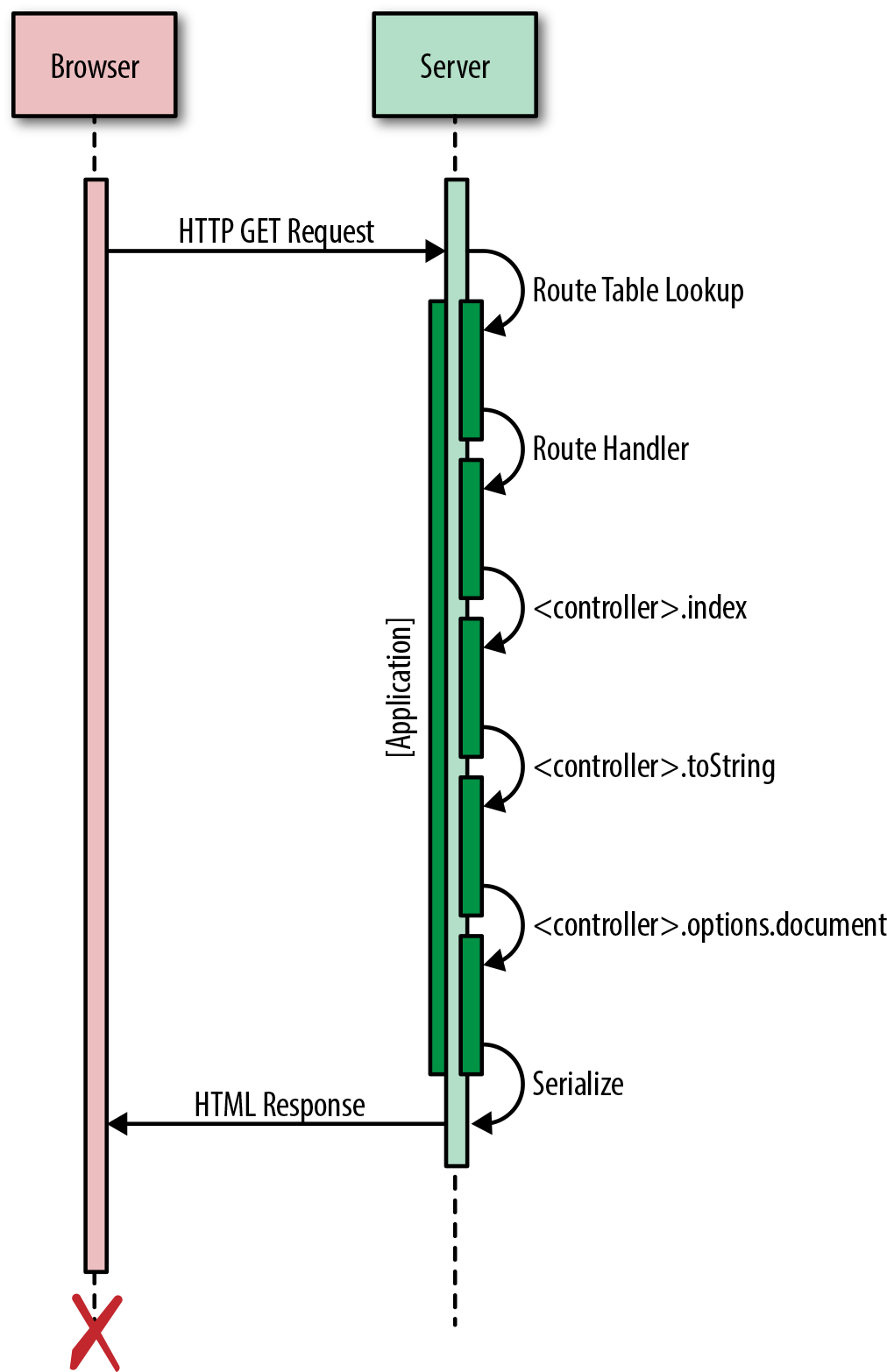

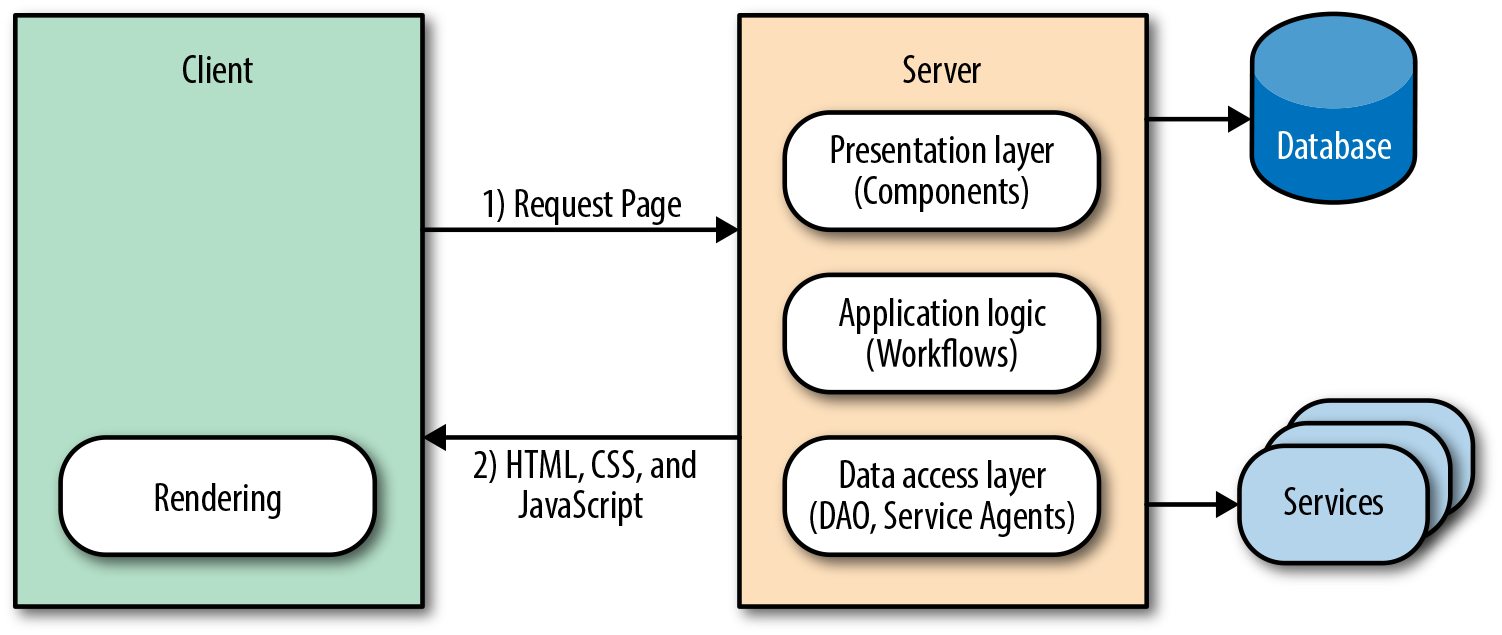

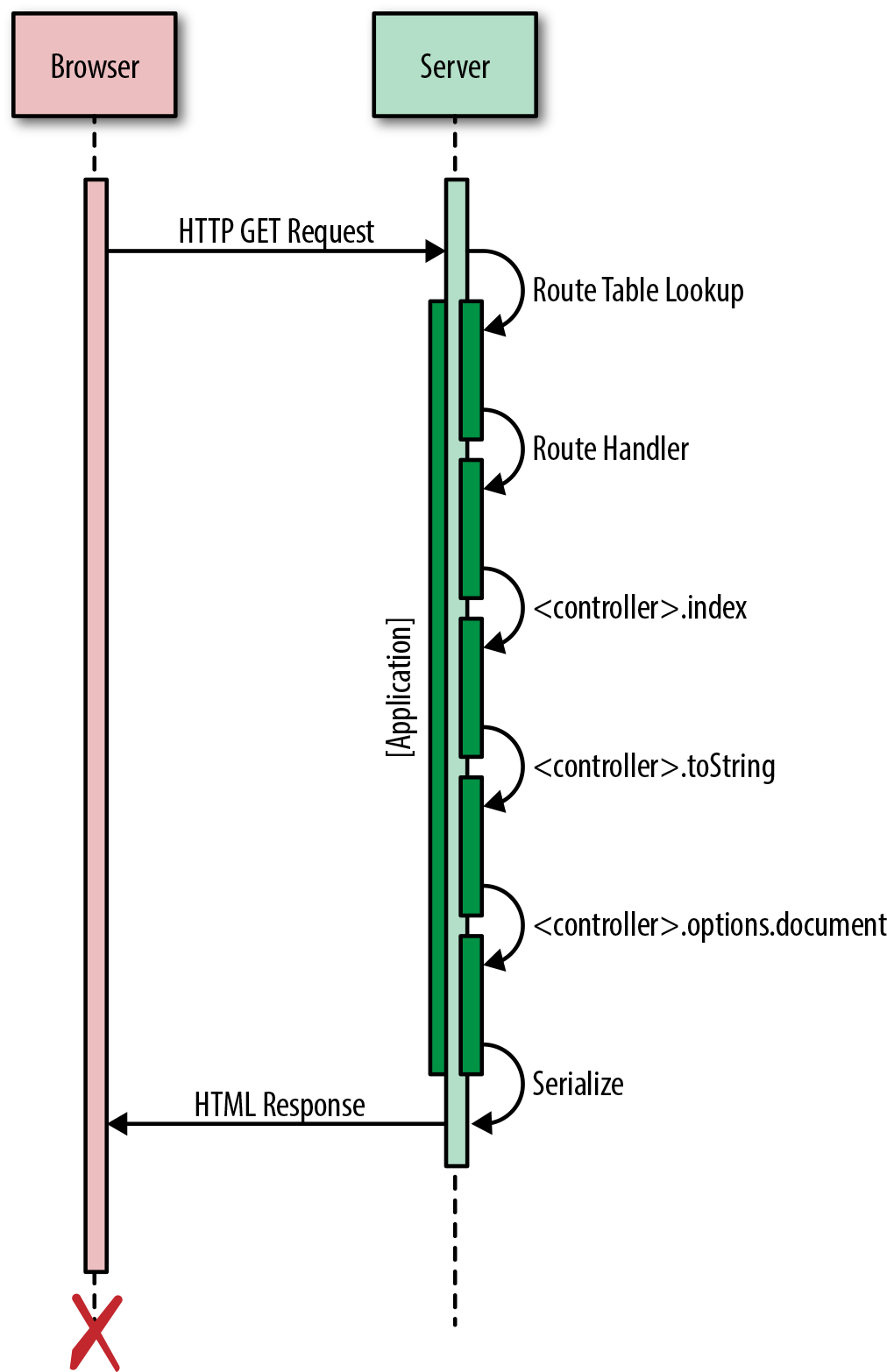

A classic web application is one in which all the markup—or, at a minimum, the critical rendering path markup—is rendered by the server using a server-side language such as PHP, Ruby, Java, and so on (Figure 1-1). Then JavaScript is initialized when the browser parses the document, enriching the user experience.
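To make the classic flow concrete, here is a minimal sketch of a server that renders the complete document for each request. It is written with Node's built-in `http` module only to match the language used in the rest of this book; the classic applications described above would typically use PHP, Ruby, Java, or similar. The product data, route, and function names are hypothetical.

```javascript
const http = require('http');

// Hypothetical product data; a real application would query a data store.
const products = {
  42: { name: 'Happy Little Tree', description: 'A tree for your landscape.' }
};

function escapeHtml(value) {
  const entities = { '&': '&amp;', '<': '&lt;', '>': '&gt;', '"': '&quot;', "'": '&#39;' };
  return String(value).replace(/[&<>"']/g, (ch) => entities[ch]);
}

// The server produces the whole page, including the critical rendering path.
function renderProductPage(product) {
  return '<!DOCTYPE html><html><head><title>' + escapeHtml(product.name) +
    '</title></head><body><h1>' + escapeHtml(product.name) + '</h1><p>' +
    escapeHtml(product.description) + '</p>' +
    // JavaScript only enriches the page after the document has been parsed.
    '<script src="/enhance.js"></script></body></html>';
}

function handleRequest(req, res) {
  const match = /^\/products\/(\w+)$/.exec(req.url);
  const product = match && products[match[1]];
  if (!product) {
    res.writeHead(404, { 'Content-Type': 'text/plain' });
    res.end('Not found');
    return;
  }
  res.writeHead(200, { 'Content-Type': 'text/html; charset=utf-8' });
  res.end(renderProductPage(product));
}

const server = http.createServer(handleRequest);
// server.listen(3000); // uncomment to serve requests locally
```

Every navigation repeats this whole cycle: a new request, a full document response, and a fresh parse in the browser.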

In a nutshell, that is the classic web application architecture. Let’s see how it stacks up against our acceptance criteria and engineering concerns.

Firstly, it is easily indexed by search engines because all of the content is available when the crawlers traverse the application, so consumers can find the application’s content. Secondly, the page load is optimized because the critical rendering path markup is rendered by the server, which improves the perceived rendering speed, so users are less likely to bounce from the application. However, two out of three is as good as it gets for the classic web application.

What do we mean by “perceived” rendering speed? In High Performance Browser Networking, Grigorik explains it this way: “Time is measured objectively but perceived subjectively, and experiences can be engineered to improve perceived performance.”

In the classic web application, navigation and transfer of data work as the Web was originally designed. The browser requests, receives, and parses a full document response when a user navigates to a new page or submits form data, even if only some of the page information has changed. This is extremely effective at meeting the first two criteria, but the setup and teardown of this full-page lifecycle are extremely costly, so it is a suboptimal solution in terms of responsiveness. Since we are privileged enough to live in the time of Ajax, we already know that there is a more efficient method than a full page reload—but it comes at a cost, which we will explore in the next section. However, before we transition to the next section we should take a look at Ajax within the context of the classic web application architecture.

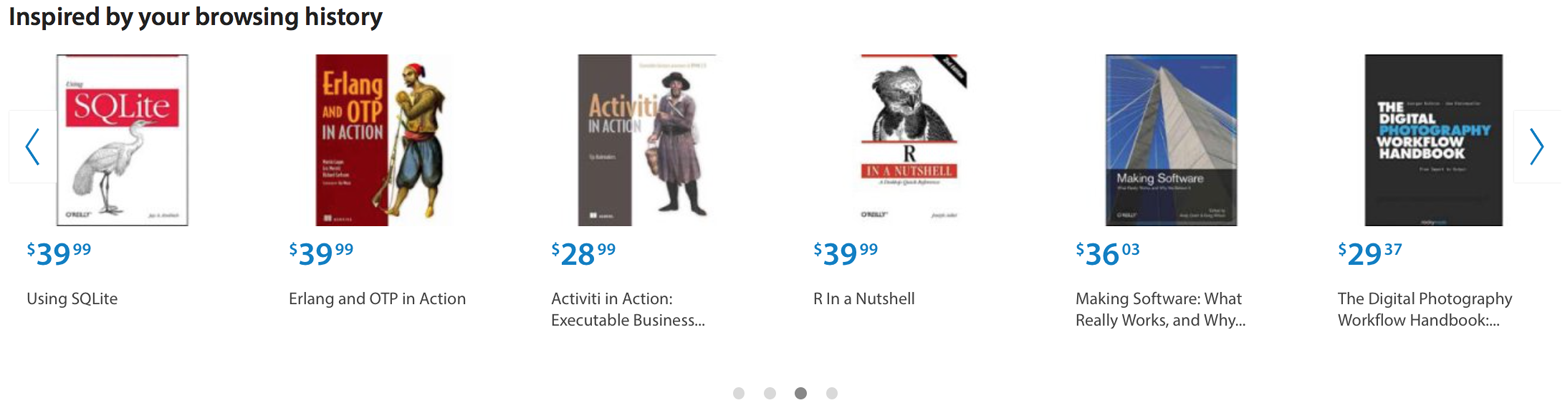

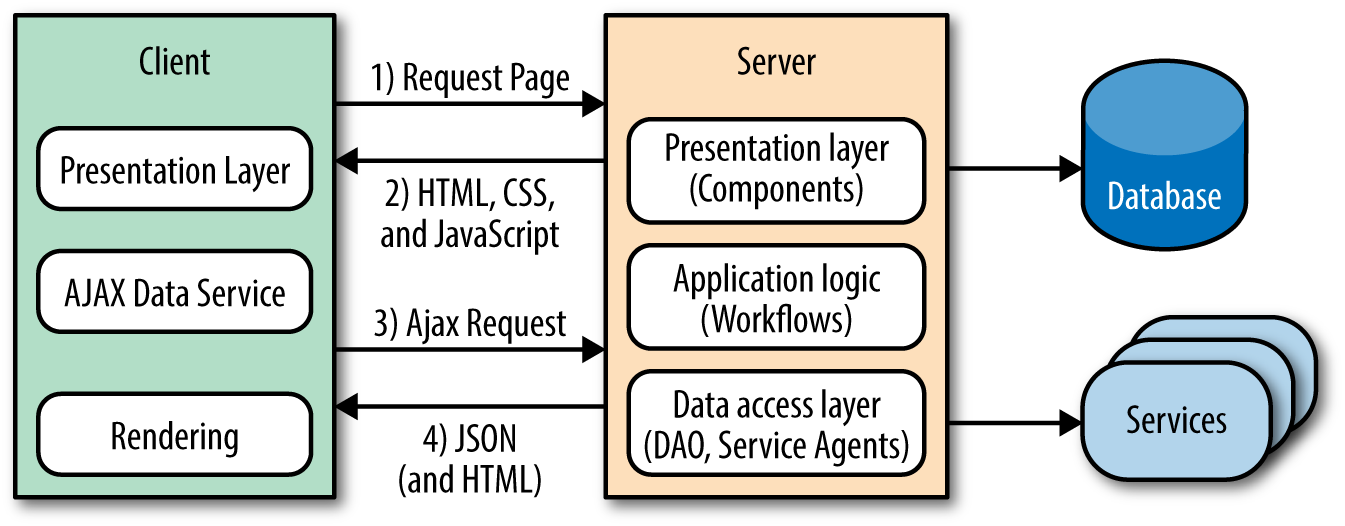

The XMLHttpRequest object is the spark that ignited the web platform fire. However, its integration into classic web applications has been less impressive. This was not due to the design or technology itself, but rather to the inexperience of those who integrated the technology into classic web applications. In most cases they were designers who began to specialize in the view layer. I myself was an administrative assistant turned designer and developer. I was abysmal at both. Needless to say, I wreaked havoc on my share of applications over the years (but I see this as my contribution to the evolution of a platform!). Unfortunately, all the applications I touched and all the other applications that those of us without the proper training and guidance touched suffered during this evolutionary period. The applications suffered because processes were duplicated and concerns were muddled. A good example that highlights these issues is a related products carousel (Figure 1-2).

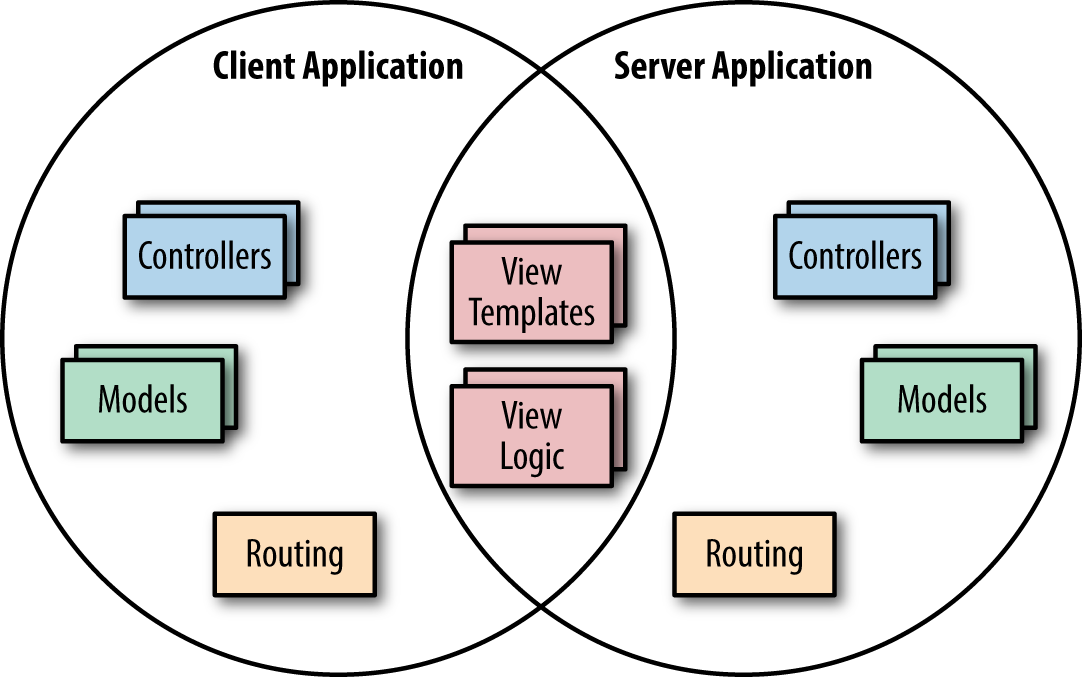

A (related) products carousel paginates through products. Sometimes all the products are preloaded, and in other cases there are too many to preload. In those cases a network request is made to paginate to the next set of products. Refreshing the entire page is extremely inefficient, so the typical solution is to use Ajax to fetch the product page sets when paginating. The next optimization would be to only get the data required to render the page set, which would require duplicating templates, models, assets, and rendering on the client (Figure 1-3). This also necessitates more unit tests. This is a very simple example, but if you take the concept and extrapolate it over a large application, it makes the application difficult to follow and maintain—one cannot easily derive how an application ended up in a given state. Additionally, the duplication is a waste of resources and it opens up an application to the possibility of bugs being introduced across two UI code bases when a feature is added or modified.

This division and replication of the UI/View layer, enabled by Ajax and coupled with the best of intentions, is what turned seemingly well-constructed applications into brittle, regression-prone piles of rubble and frustrated numerous engineers. Fortunately, frustrated engineers are usually the most innovative. It was this frustration-fueled innovation, combined with solid engineering skills, that led us to the next application architecture.

Everything moves in cycles. When the Web began it was a thin client, and likely the inspiration for the Sun Microsystems NetWork Terminal (NeWT). But by 2011 web applications had started to eschew the thin client model, and transition to a fat client model like their operating system counterparts had done long ago. The monolith had surfaced. It was the dawn of the single-page application (SPA) architecture.

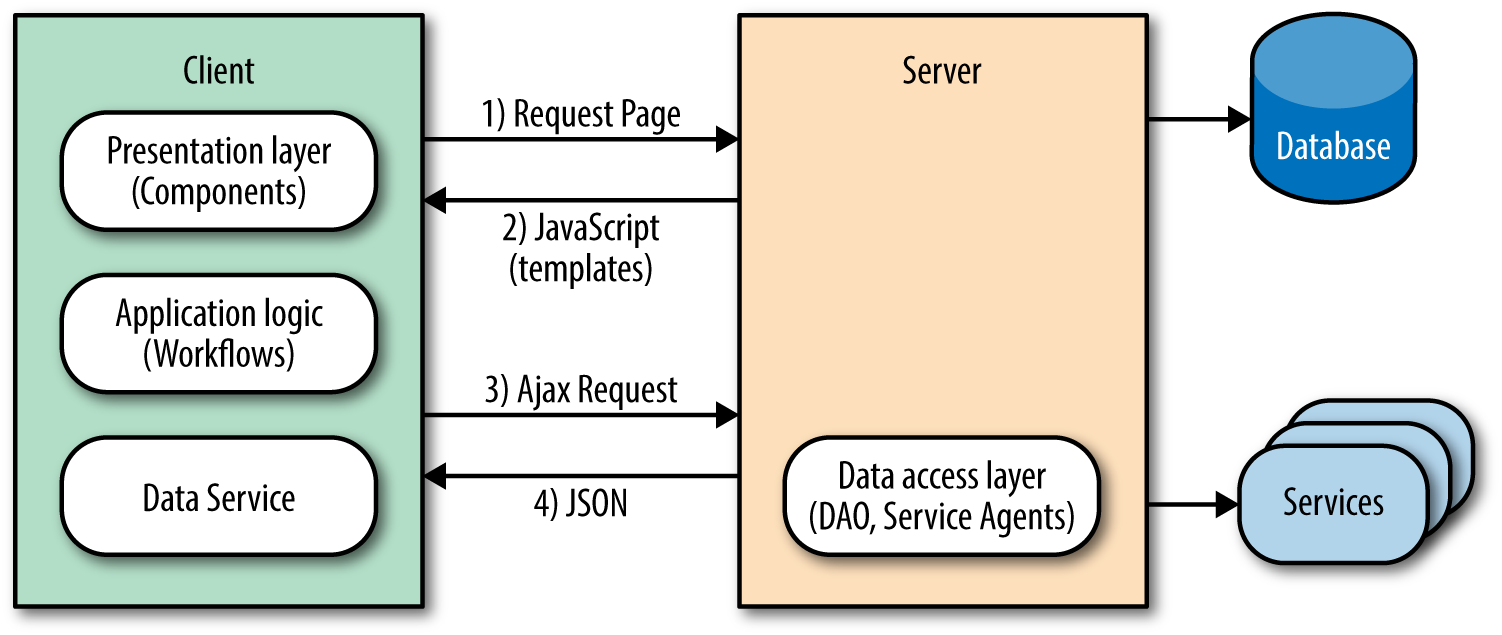

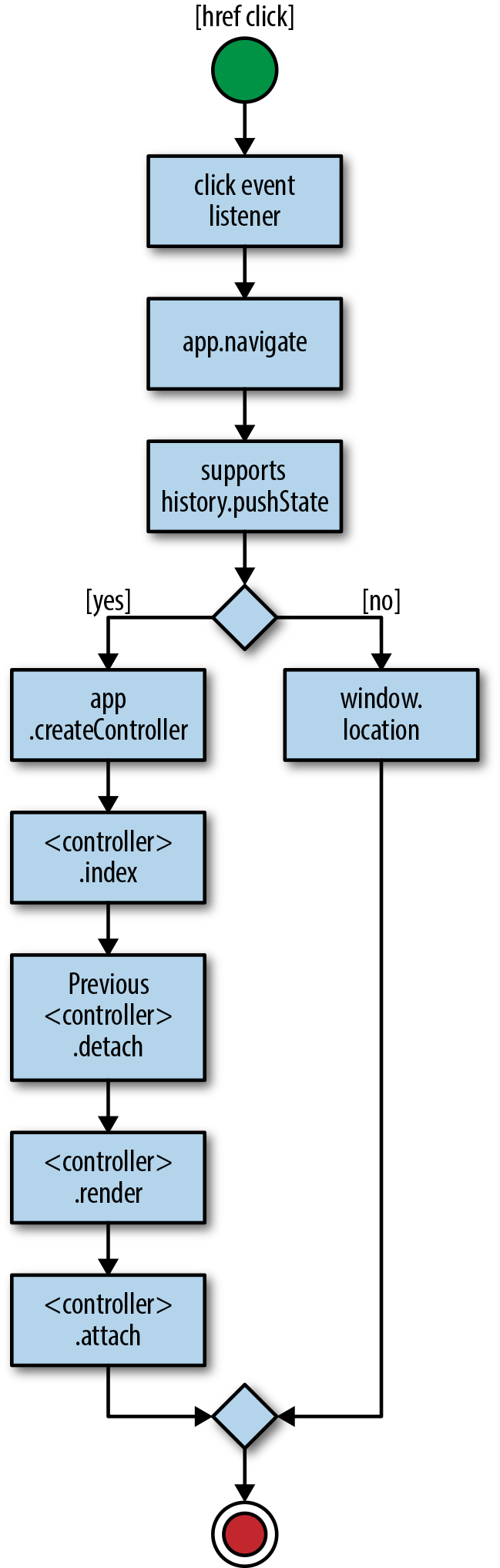

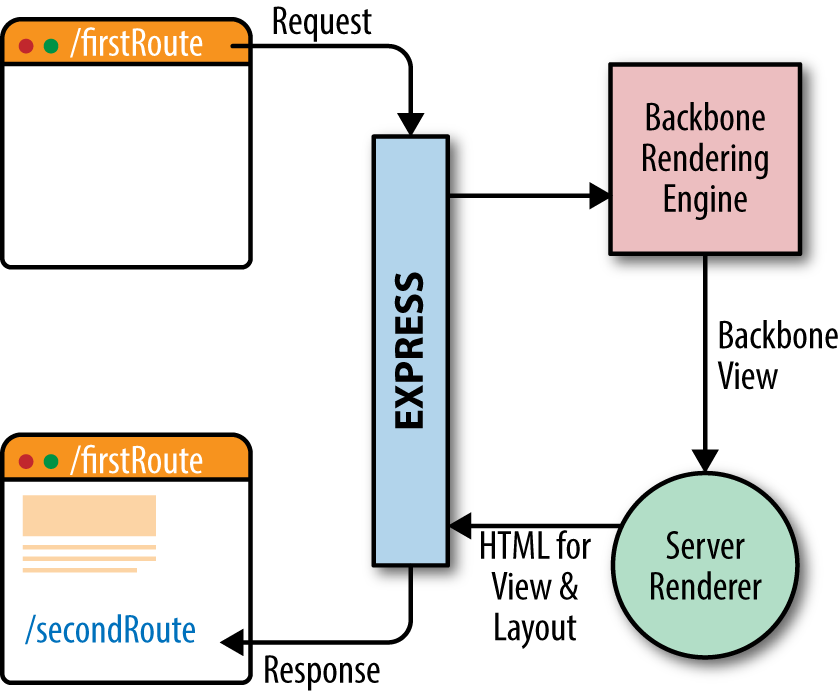

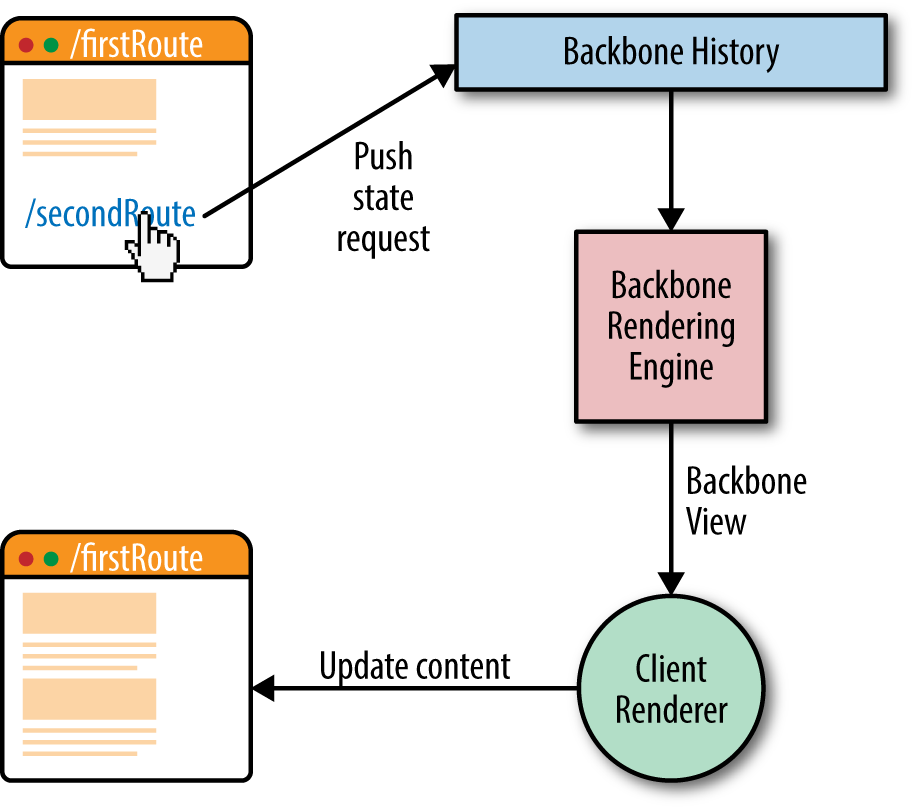

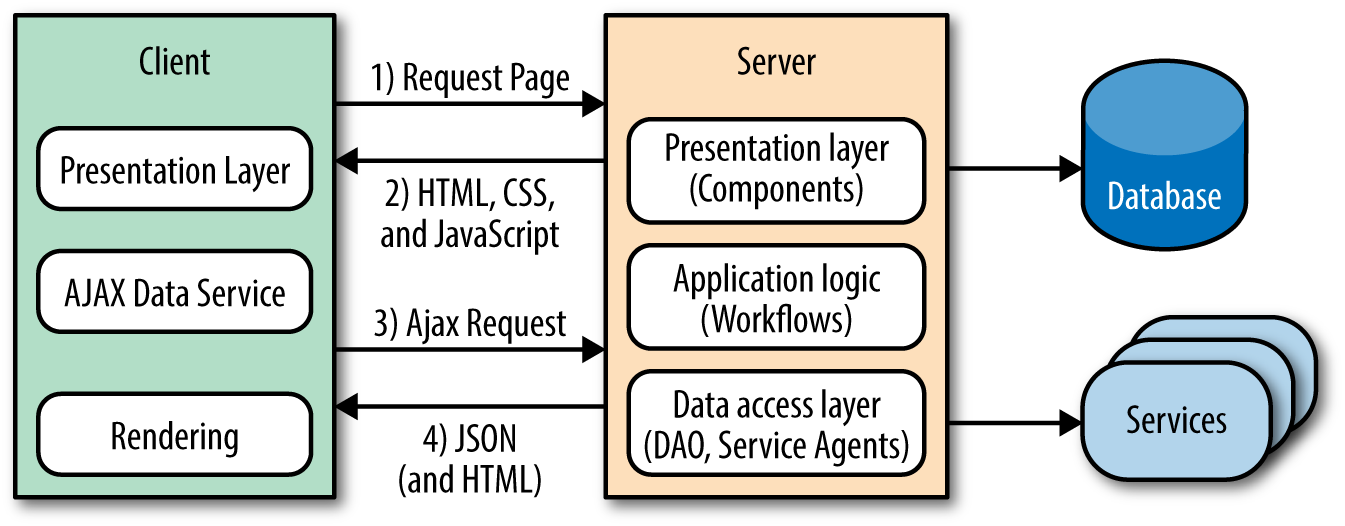

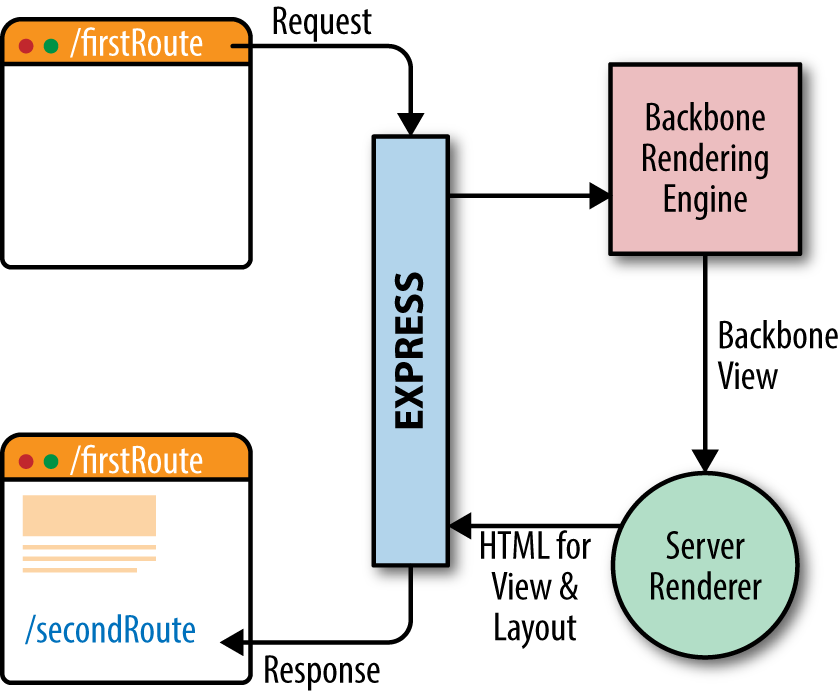

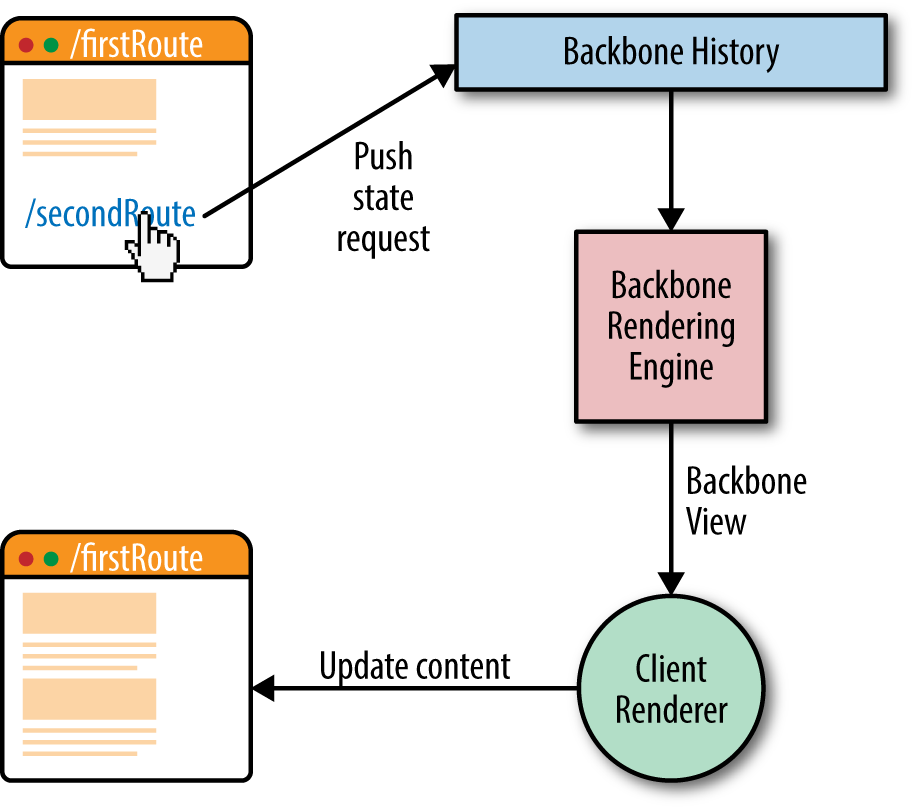

The SPA eliminates the issues that plague classic web applications by shifting the responsibility of rendering entirely to the client. This model separates application logic from data retrieval, consolidates UI code to a single language and runtime, and significantly reduces the impact on the servers (Figure 1-4).

It accomplishes this reduction because the server sends a payload of assets, JavaScript, and templates to the client. From there, the client takes over, fetching only the data it needs to render pages/views. This behavior significantly improves the rendering of pages because it does not require the overhead of fetching and parsing an entire document when a user requests a new page or submits data. In addition to the performance gains, this model also solves the engineering concerns that Ajax introduced to the classic web application.

Going back to the product carousel example, the first page of the (related) products carousel was rendered by the application server. Upon pagination, subsequent requests were then rendered by the client. The blurring of the lines of responsibility and duplication of efforts evidenced here are the primary problems of the classic web application in the modern web platform. These issues do not exist in an SPA.

In an SPA there is a clear line of separation between the responsibilities of the server and client. The API server responds to data requests, the application server supplies the static resources, and the client runs the show. In the case of the products carousel, an empty document that contains a payload of JavaScript and template resources would be sent by the application server to the browser. The client application would then initialize in the browser and request the data required to render the view that contains the products carousel. After receiving the data, the client application would render the first set of items for the carousel. Upon pagination, the data fetching and rendering lifecycle would repeat, following the same code path. This is an outstanding engineering solution. Unfortunately, it is not always the best user experience.

In an SPA the initial page load can appear extremely sluggish to the end users, because they have to wait for the data to be fetched before the page can be rendered. So instead of seeing content immediately when the page loads, they get an animated loading indicator, at best. A common approach to mitigate this delayed rendering is to serve the data for the initial page. However, this requires application server logic, so it begins to blur the lines of responsibility once again and adds another layer of code to maintain.

The next issue SPAs face is both a user experience and a business issue. They are not SEO-friendly by default, which means that users will not be able to find an application’s content by searching. The problem stems from the fact that SPAs leverage the hash fragment for routing. Before we examine why this impacts SEO, let’s take a look at the mechanics of SPA routing.

SPAs rely on the fragment to map faux URI paths to a route handler that renders a view in response. For example, in a classic web application an “about us” page URI might look like http://domain.com/about, but in an SPA it would look like http://domain.com/#about. The SPA uses a hash mark and a fragment identifier at the end of the URL. The reason the SPA router uses the fragment is because the browser does not make a network request when the fragment changes, unlike when there are changes to the URI. This is important because the whole premise of the SPA is that it only requests the data required to render a view/page, as opposed to fetching and parsing a new document for each page.
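As a minimal sketch of the mechanics just described, a fragment-based router can be reduced to a lookup from fragment to route handler. The names `createHashRouter`, `routes`, and `resolve` are hypothetical and are not taken from any particular SPA library; the handlers return strings here only to keep the sketch runnable outside a browser.

```javascript
'use strict';

// Hypothetical sketch: maps a URL fragment to a route handler.
function createHashRouter(routes) {
    return {
        // `hash` is the raw fragment, e.g. '#about'
        resolve: function (hash) {
            var name = String(hash || '').replace(/^#/, '');
            var known = Object.prototype.hasOwnProperty.call(routes, name);
            // Fall back to a "not found" view when no route matches
            return known ? routes[name]() : 'not found';
        }
    };
}

var router = createHashRouter({
    about: function () { return 'about view'; },
    faqs: function () { return 'faqs view'; }
});

console.log(router.resolve('#about'));
```

In a browser, `resolve` would typically be called with `window.location.hash` from a `hashchange` event listener, so that changing the fragment renders a new view without any network request for a document.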

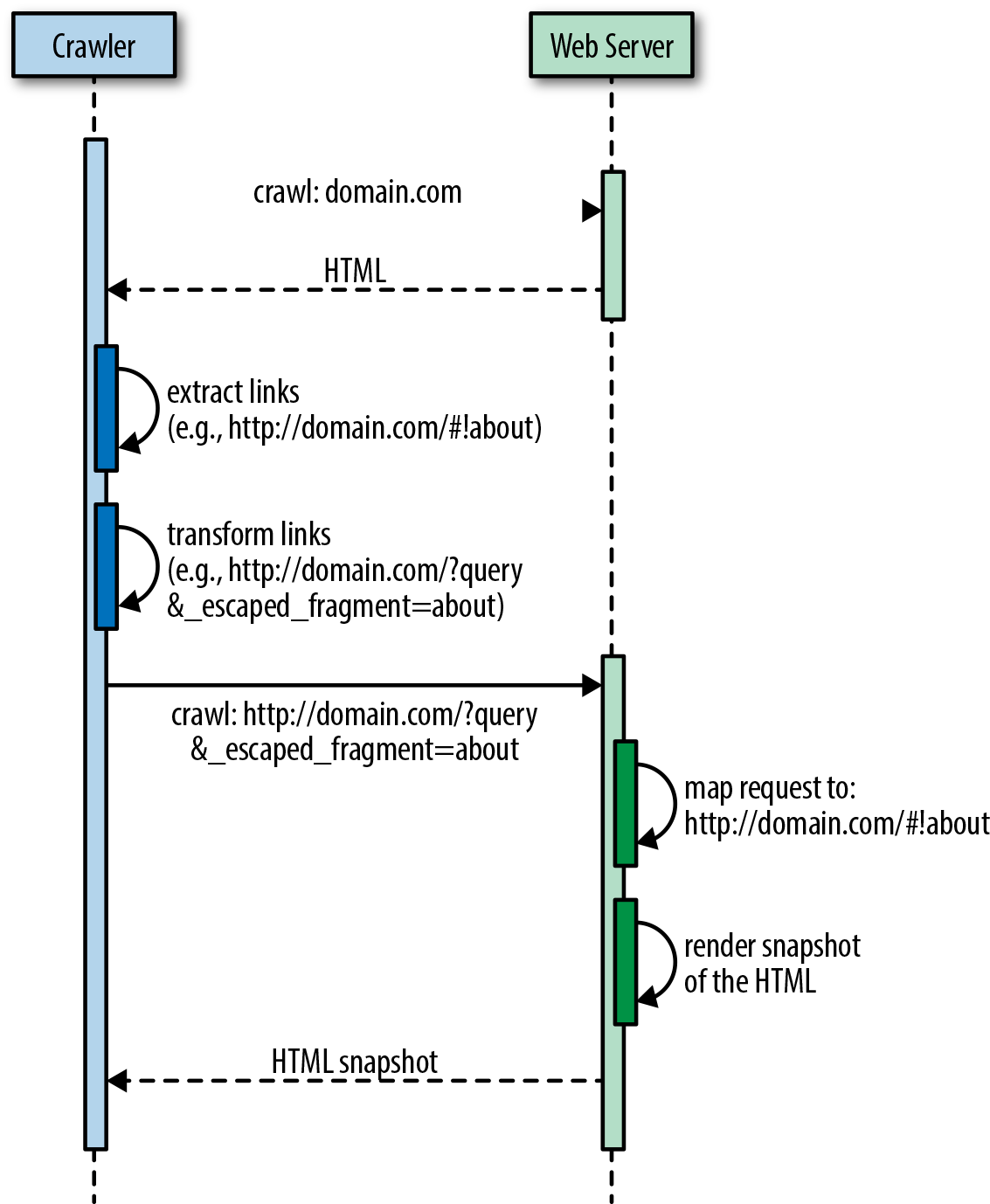

SPA fragments are not SEO-compatible because hash fragments are never sent to the server as part of the HTTP request (per the specification). As far as a web crawler is concerned, http://domain.com/#about and http://domain.com/#faqs are the same page. Fortunately, Google has implemented a workaround to provide SEO support for fragments: the hashbang (#!).

Most SPA libraries now support the History API, and recently Google crawlers have gotten better at indexing JavaScript applications—previously, JavaScript was not even executed by web crawlers.

The basic premise is to replace the # in an SPA fragment’s route with a #!, so http://domain.com/#about would become http://domain.com/#!about. This allows the Google crawler to identify content to be indexed from simple anchors.

An anchor tag is used to create links to the content within the body of a document.

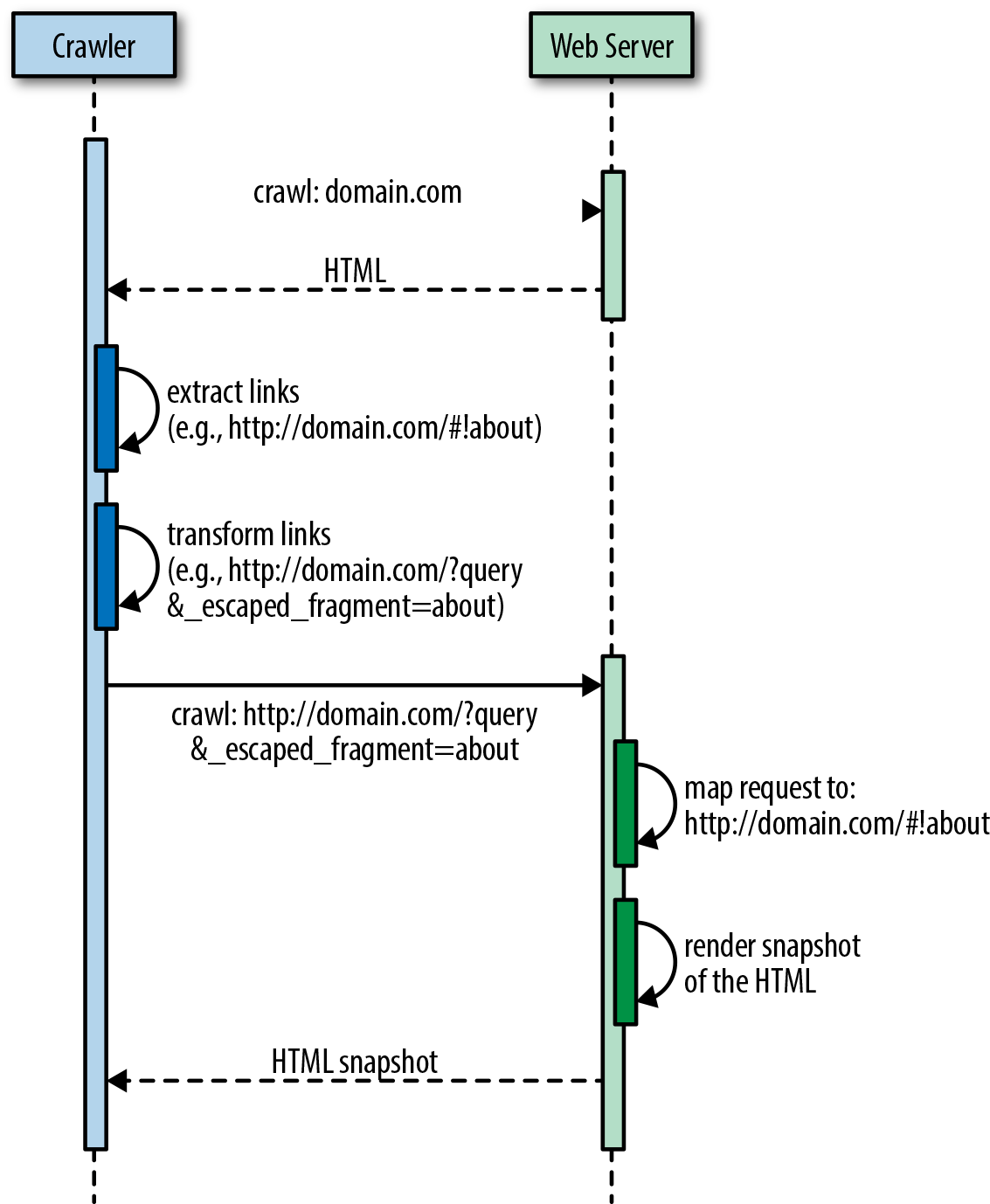

The crawler then transforms the links into fully qualified URI versions, so http://domain.com/#!about becomes http://domain.com/?query&_escaped_fragment_=about. At that point it is the responsibility of the server that hosts the SPA to serve a snapshot of the HTML that represents http://domain.com/#!about to the crawler in response to the URI http://domain.com/?query&_escaped_fragment_=about. Figure 1-5 illustrates this process.
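The rewrite the crawler performs can be sketched as a small pure function. This is an illustration only: `toEscapedFragmentUrl` is a hypothetical name, the `_escaped_fragment_` parameter name is assumed from Google's AJAX crawling scheme, and the use of `encodeURIComponent` is a simplification of that scheme's escaping rules.

```javascript
'use strict';

// Hypothetical helper: rewrites a hashbang URL into its crawler-facing form.
function toEscapedFragmentUrl(url) {
    var index = url.indexOf('#!');
    if (index === -1) {
        return url; // no hashbang, nothing to rewrite
    }
    var base = url.slice(0, index);
    var fragment = url.slice(index + 2);
    // Append to an existing query string with '&', otherwise start one with '?'
    var separator = base.indexOf('?') === -1 ? '?' : '&';
    return base + separator + '_escaped_fragment_=' + encodeURIComponent(fragment);
}

console.log(toEscapedFragmentUrl('http://domain.com/#!about'));
// → http://domain.com/?_escaped_fragment_=about
```

A server hosting the SPA would look for this query parameter on incoming requests and respond with the prerendered HTML snapshot instead of the empty application shell.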

At this point, the value proposition of the SPA begins to decline even more. From an engineering perspective, one is left with two options:

Spin up the server with a headless browser, such as PhantomJS, to run the SPA on the server to handle crawler requests.

Outsource the problem to a third-party provider, such as BromBone.

Both potential SEO fixes come at a cost, and this is in addition to the suboptimal first page rendering mentioned earlier. Fortunately, engineers love to solve problems. So just as the SPA was an improvement over the classic web application, so was born the next architecture, isomorphic JavaScript.

Isomorphic JavaScript applications are the perfect union of the classic web application and single-page application architectures. They offer:

SEO support using fully qualified URIs by default—no more #! workaround required—via the History API, and graceful fallbacks to server rendering for clients that don’t support the History API when navigating.

Distributed rendering of the SPA model for subsequent page requests for clients that support the History API. This approach also lessens server loads.

A single code base for the UI with a common rendering lifecycle. No duplication of efforts or blurring of the lines. This reduces the UI development costs, lowers bug counts, and allows you to ship features faster.

Optimized page load by rendering the first page on the server. No more waiting for network calls and displaying loading indicators before the first page renders.

A single JavaScript stack, which means that the UI application code can be maintained by frontend engineers alone rather than by both frontend and backend engineers. Clear separation of concerns and responsibilities means that experts contribute code only to their respective areas.

The isomorphic JavaScript architecture meets all three of the key acceptance criteria outlined at the beginning of this section. Isomorphic JavaScript applications are easily indexed by all search engines, have an optimized page load, and have optimized page transitions (in modern browsers that support the History API; this gracefully degrades in legacy browsers with no impact on application architecture).

Companies like Yahoo!, Facebook, Netflix, and Airbnb, to name a few, have embraced isomorphic JavaScript. However, isomorphic JavaScript architecture might suit some applications more than others. As we’ll explore in this book, isomorphic JavaScript apps require additional architectural considerations and implementation complexity. For single-page applications that are not performance-critical and do not have SEO requirements (like applications behind a login), isomorphic JavaScript might seem like more trouble than it’s worth.

Likewise, many companies and organizations might not be in a situation in which they are prepared to operate and maintain a JavaScript execution engine on the server side. For example, Java-, Ruby-, Python-, or PHP-heavy shops might lack the know-how for monitoring and troubleshooting JavaScript application servers (e.g., Node.js) in production. In such cases, isomorphic JavaScript might present an additional operational cost that is not easily taken on.

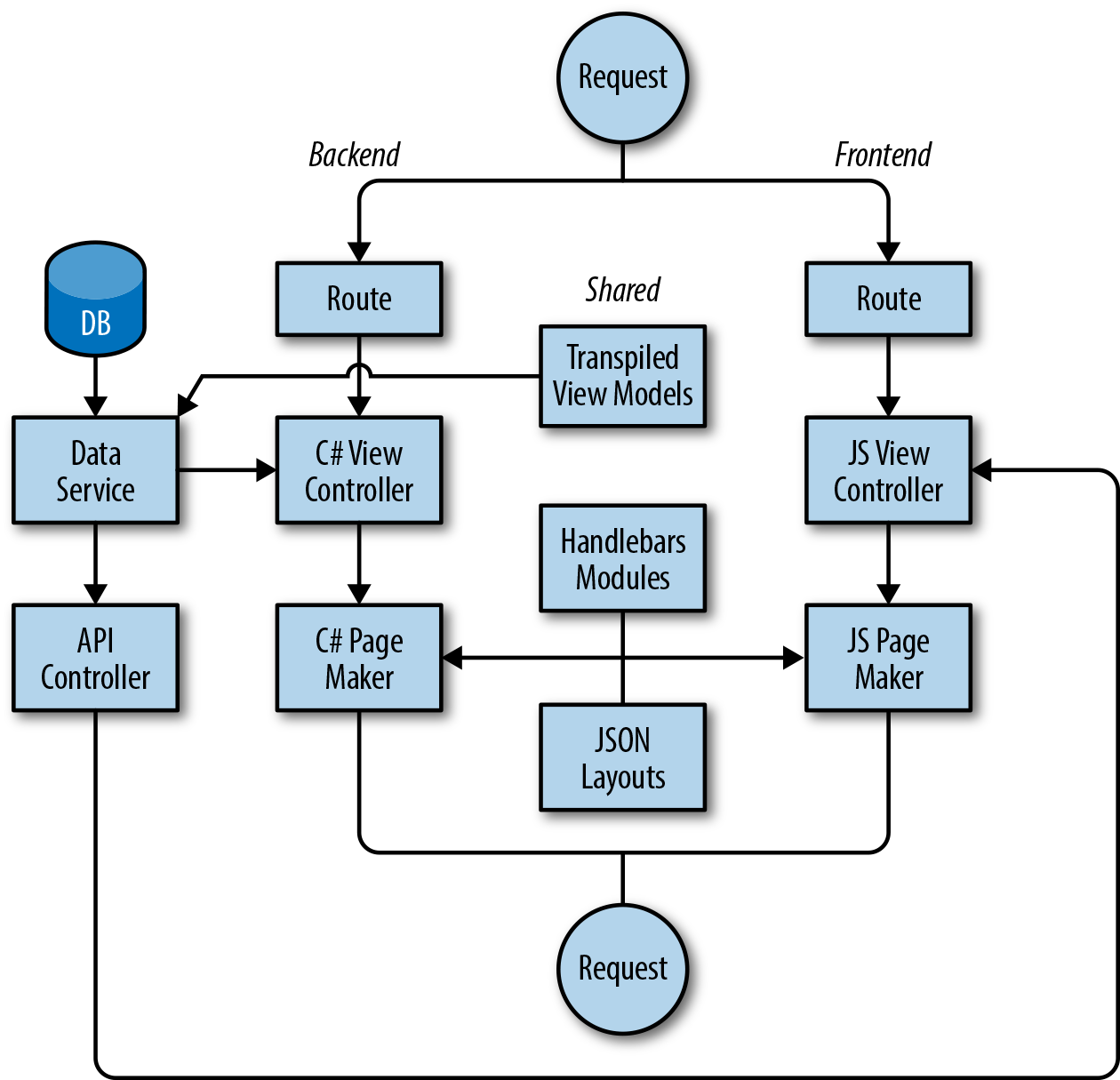

Node.js provides a remarkable server-side JavaScript runtime. For servers using Java, Ruby, Python, or PHP, there are two main alternative options: 1) run a Node.js process alongside the normal server as a local or remote “render service,” or 2) use an embedded JavaScript runtime (e.g., Nashorn, which comes packaged with Java 8). However, there are clear downsides to these approaches. Running Node.js as a render service adds an additional overhead cost of serializing data over a communication socket. Likewise, using an embedded JavaScript runtime in languages that are traditionally not optimized for JavaScript execution can offer additional performance challenges (although this may improve over time).

If your project or company does not require what isomorphic JavaScript architecture offers (as outlined in this chapter), then by all means, use the right tool for the job. However, when server-side rendering is no longer optional and initial page load performance and search engine optimization do become concerns for you, don’t worry; this book will be right here, waiting for you.

In this chapter we defined isomorphic JavaScript applications—applications that share the same JavaScript code for both the browser client and the web application server—and identified the primary type of isomorphic JavaScript app that we’ll be covering in this book: the ecommerce web application. We then took a stroll back through history and saw how other architectures evolved, weighing the architectures against the key acceptance criteria of SEO support, optimized first page load, and optimized page transitions. We saw that the architectures that preceded isomorphic JavaScript did not meet all of these acceptance criteria. We ended the chapter with the merging of two architectures, the classic web application and the single-page application, which resulted in the isomorphic JavaScript architecture.

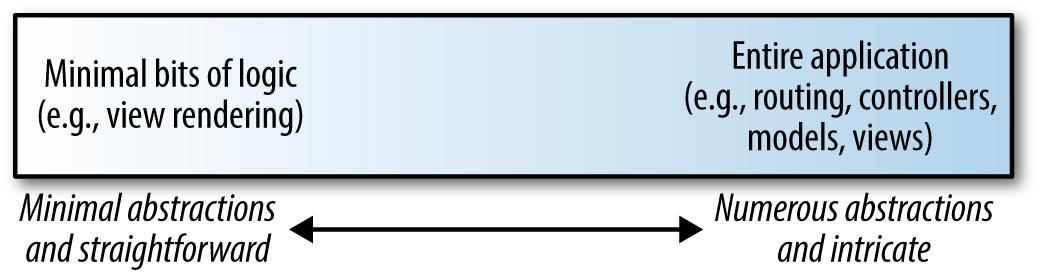

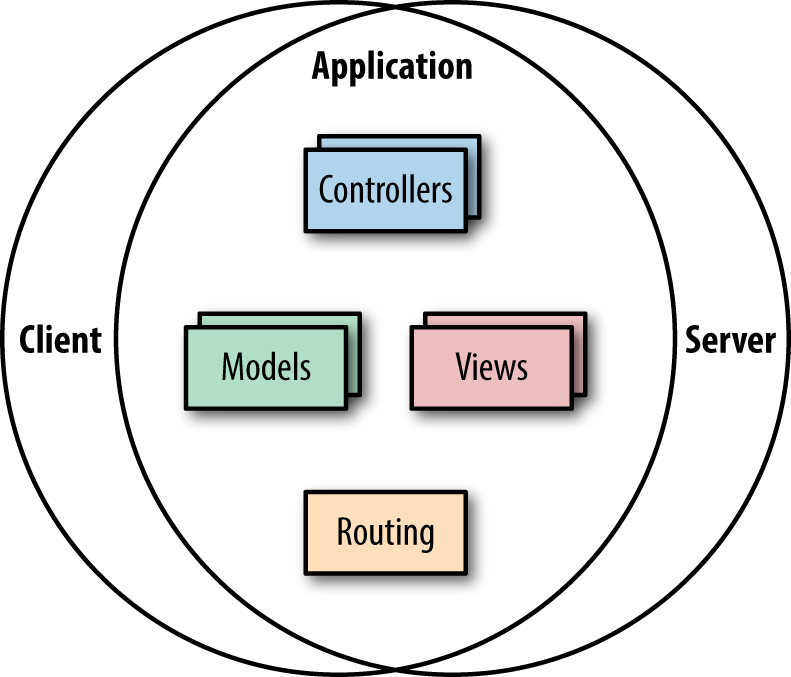

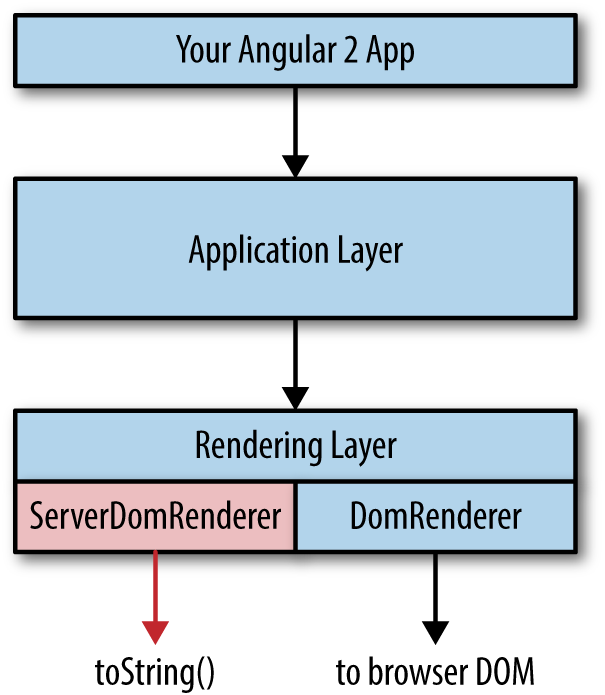

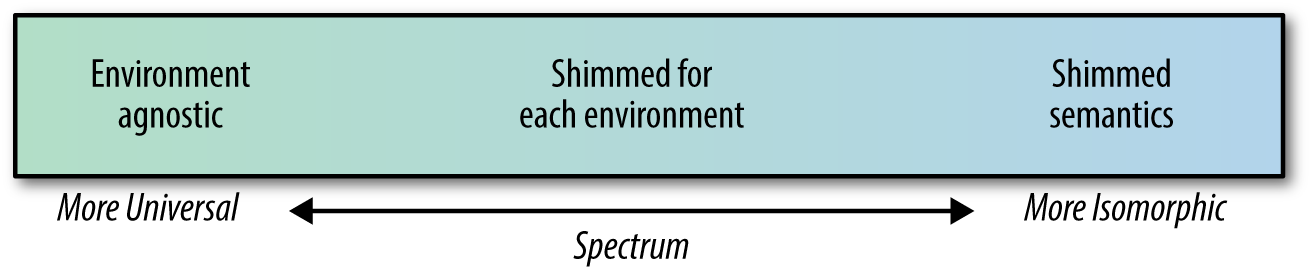

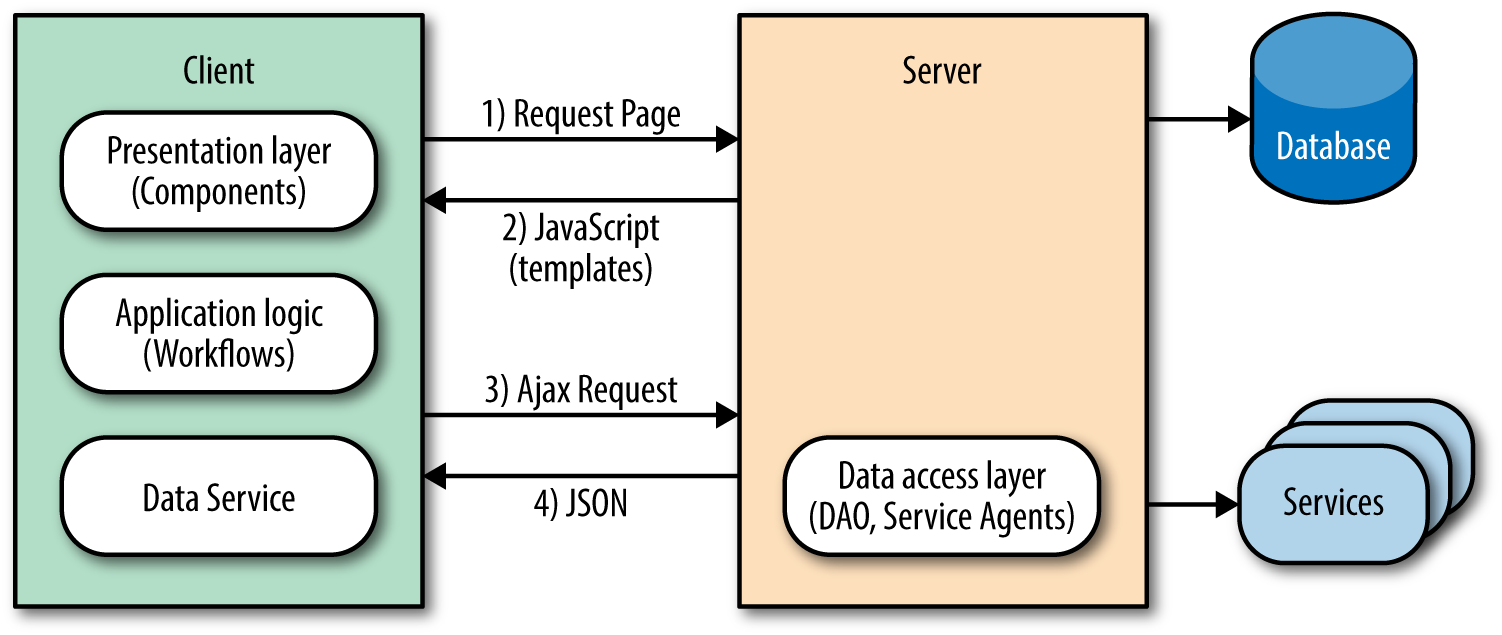

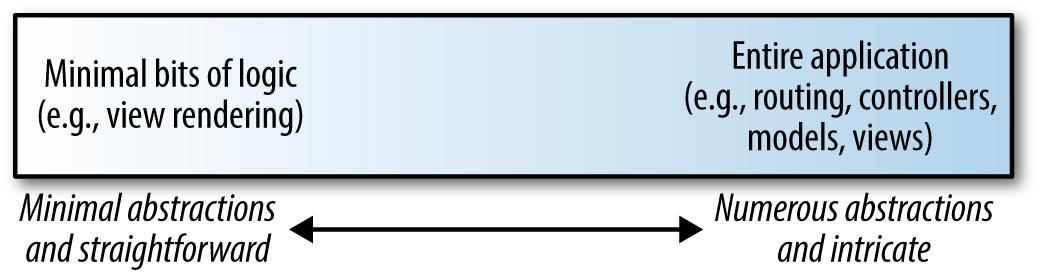

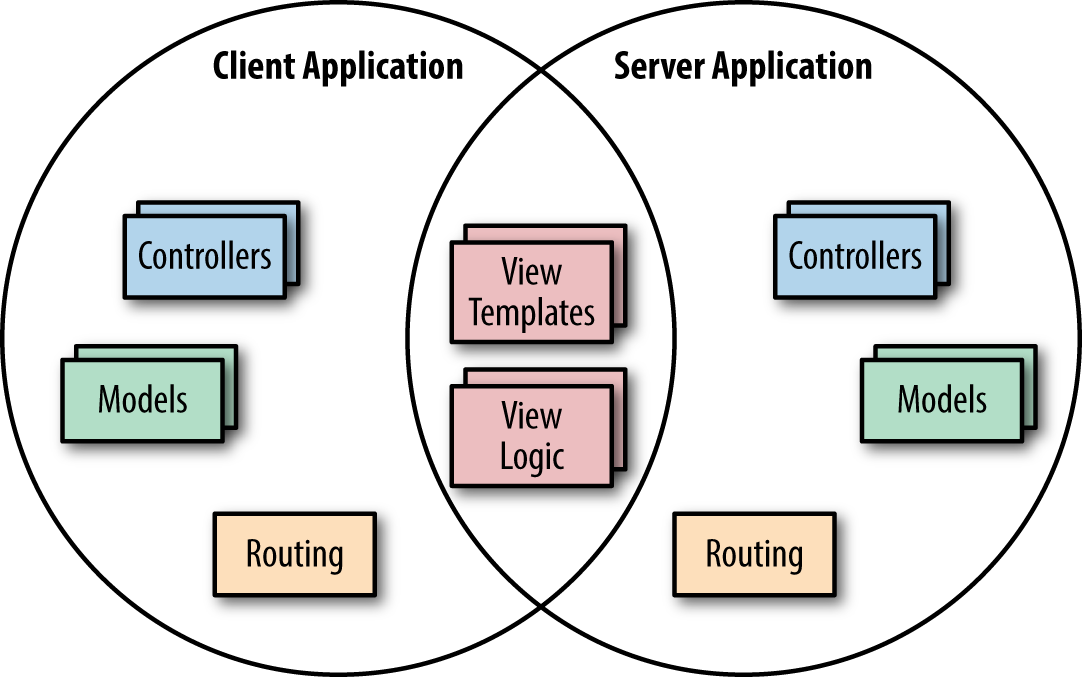

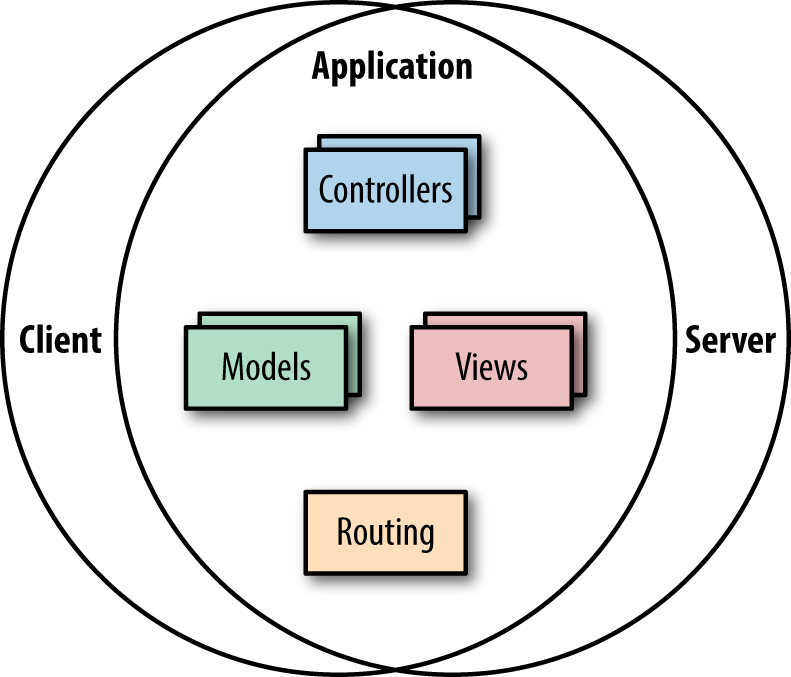

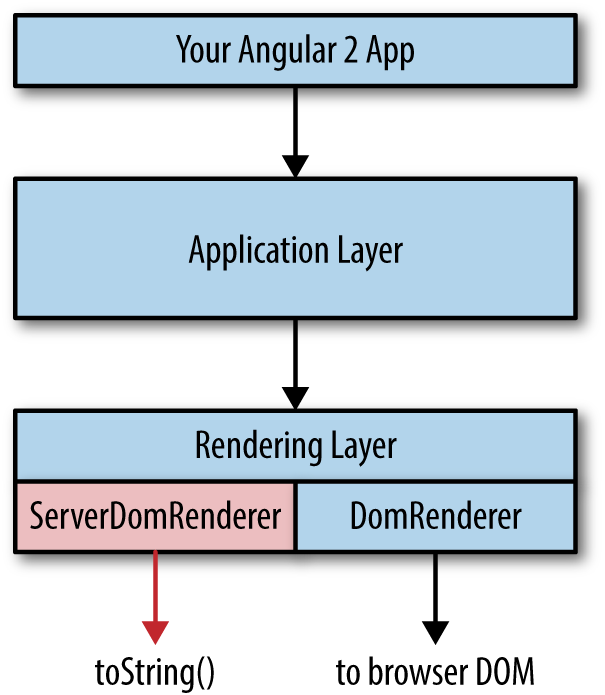

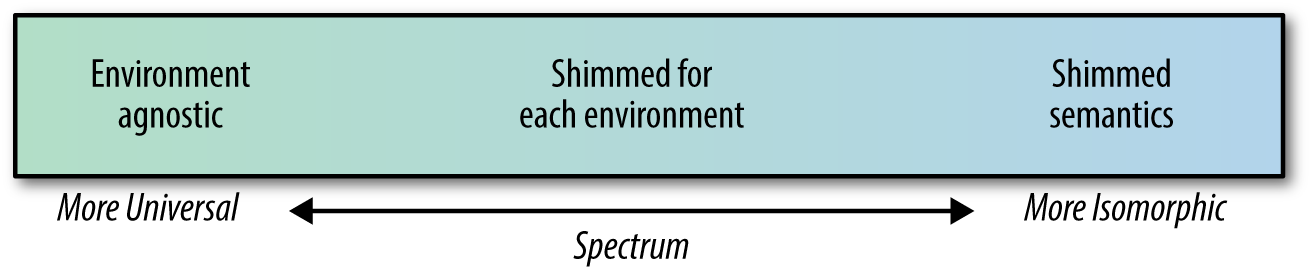

Isomorphic JavaScript is a spectrum (Figure 2-1). On one side of the spectrum, the client and server share minimal bits of view rendering (like Handlebars.js templates); some name, date, or URL formatting code; or some parts of the application logic. At this end of the spectrum we mostly find a shared client and server view layer with shared templates and helper functions (Figure 2-2). These applications require fewer abstractions, since many useful utilities found in popular JavaScript libraries like Underscore.js or Lodash.js can be shared between the client and the server.

On the other side of this spectrum, the client and server share the entire application (Figure 2-3). This includes sharing the entire view layer, application flows, user access constraints, form validations, routing logic, models, and states. These applications require more abstractions since the client code is executing in the context of the DOM (document object model) and window, whereas the server works in the context of a request/response object.

In Part II of this book we will dive into the mechanics of sharing code between the client and server. However, as a form of introduction, this chapter will briefly explore the two ends of the spectrum by looking at various functional application pieces that can be shared between the client and server (we will also point out some of the abstractions required to allow these functional pieces to work isomorphically).

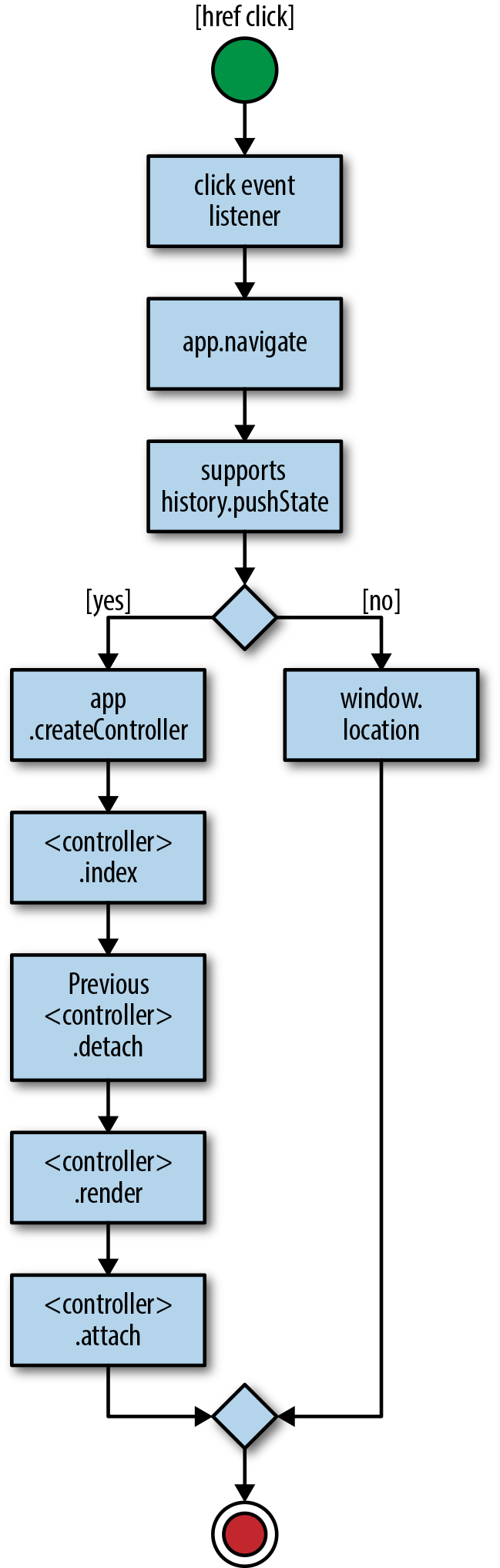

Single-page applications (SPAs) provide users with a more fluid experience by reducing the total number of full page reloads that they must experience as they navigate from one page to another. Instead, SPAs partially render parts of a page as a result of user interaction. SPAs utilize client-side template engines for taking a template (with simple placeholders) and executing it against a model object to output HTML that can be attached to the DOM. Client-side templates help separate the view markup from the view logic to create more maintainable code. Sharing views isomorphically means sharing both the template and the view logic associated with those templates.

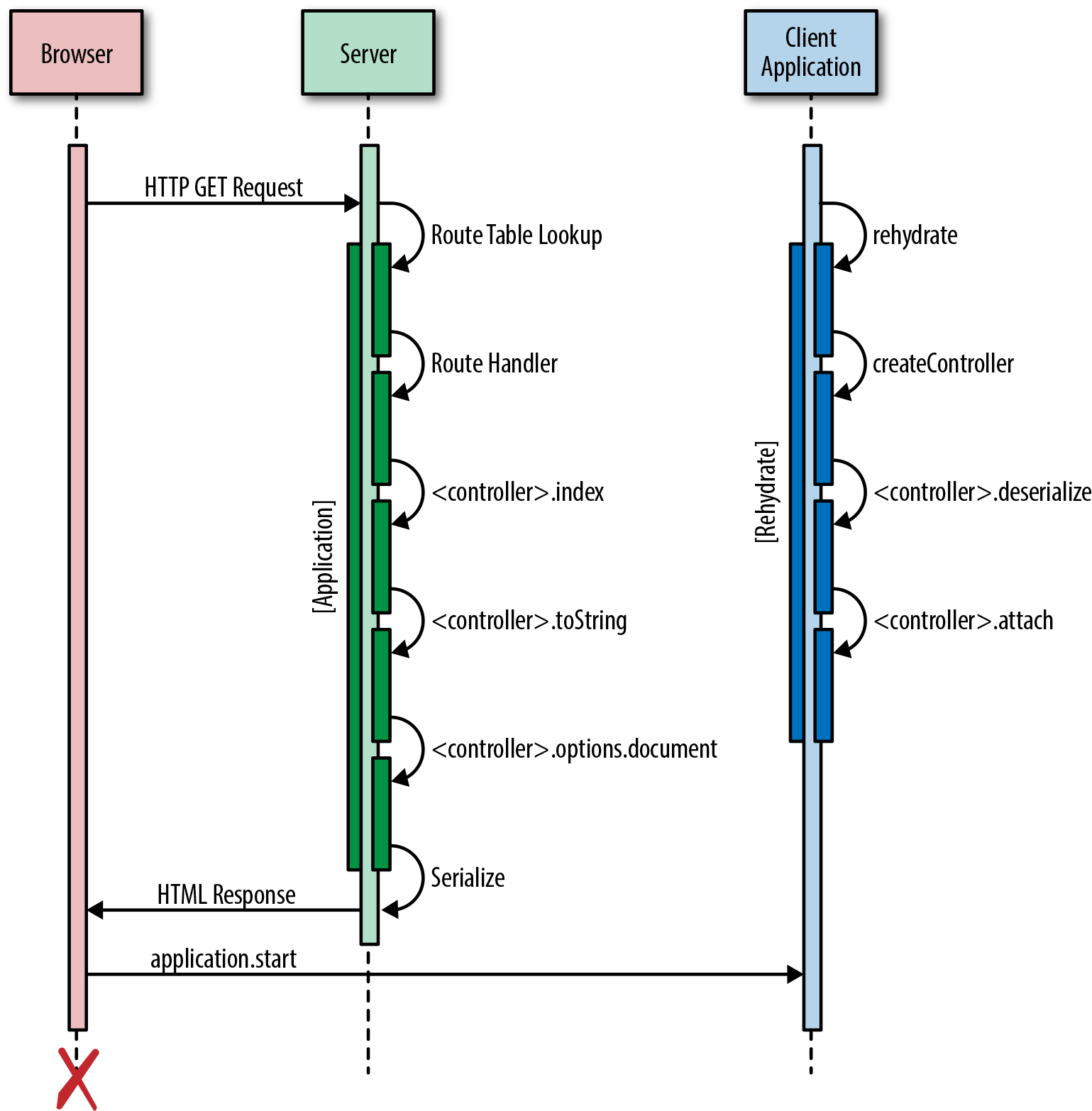

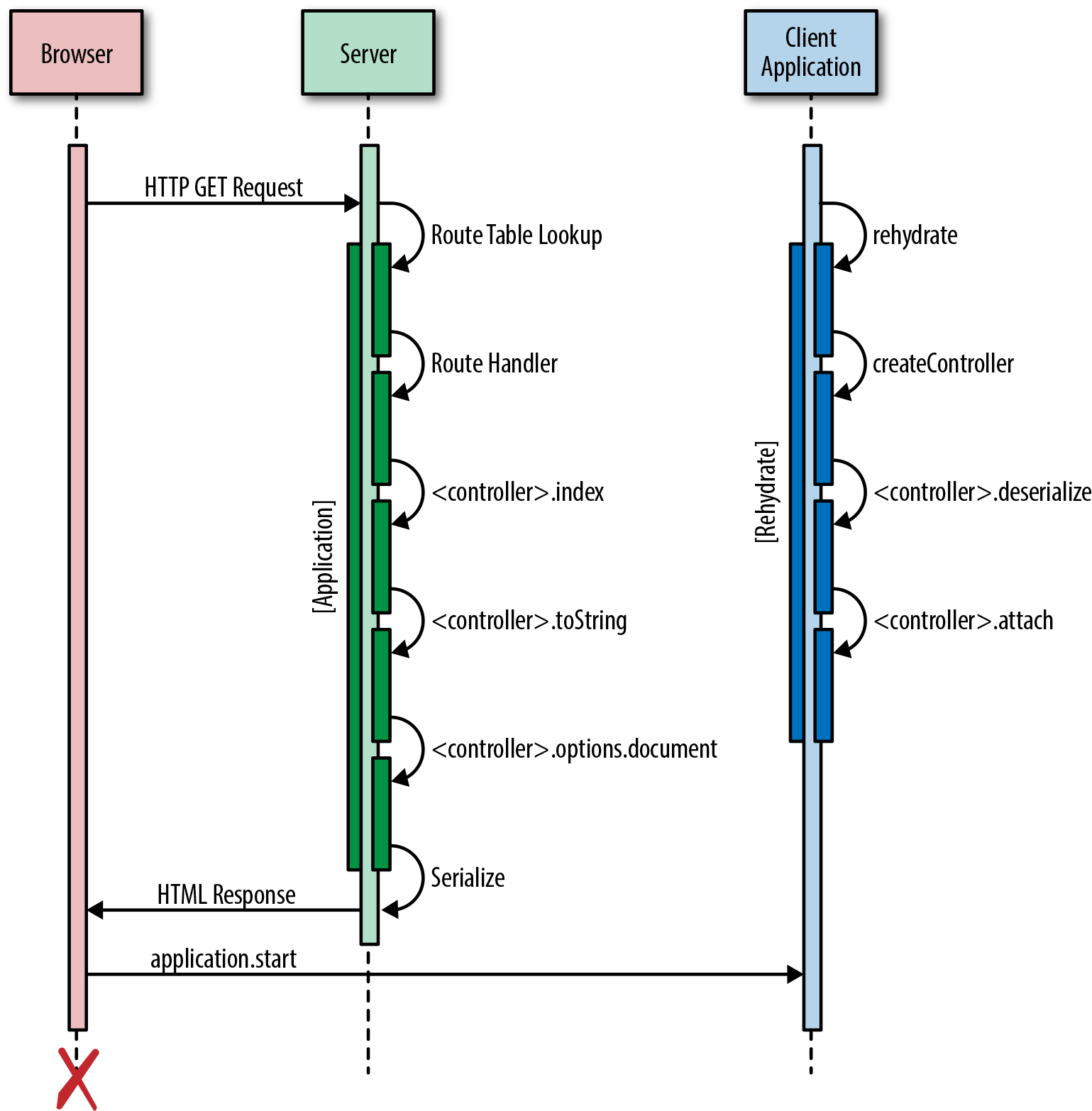

In order to achieve faster (perceived) performance and proper search engine indexing, we want to be able to render any view on the server as well as the client. On the client, template rendering is as simple as evaluating a template and attaching the output to a DOM element. On the server, the same template is rendered as a string and returned in the response. The tricky part of isomorphic view rendering is that the client has to pick up wherever the server left off. This is often called the client/server transition; that is, the client should properly transition and not “destroy” the DOM generated by the server after the application is loaded in the browser. The server needs to “dehydrate” the state by sending it to the client, and the client will “rehydrate” (or reanimate) the view and initialize it to the same state it was in on the server.

Example 2-1 illustrates a typical response from the server, which has the rendered markup in the body of the page and the serialized state within a <script> tag. The server puts the serialized state in the rendered view, and the client will deserialize the state and attach it to the prerendered markup.

    <html>
      <body>
        <div>[[server_side_rendered_markup]]</div>
        <script>window.__state__=[[serialized_state]]</script>
        ...
      </body>
    </html>
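One way a server might assemble such a response, and a client might pick the state back up, is sketched below. The function names `renderPage` and `rehydrate` are hypothetical, and a real application would then hand the recovered state to its view layer rather than simply returning it.

```javascript
'use strict';

// Server side: "dehydrate" the state into the page next to the rendered markup.
function renderPage(markup, state) {
    // Escape '<' so a value containing "</script>" cannot close the tag early
    var serialized = JSON.stringify(state).replace(/</g, '\\u003c');
    return '<html><body><div>' + markup + '</div>' +
        '<script>window.__state__=' + serialized + '</script></body></html>';
}

// Client side: "rehydrate" by reading the state the server left behind.
// `globalObject` stands in for `window` so the sketch also runs under Node.
function rehydrate(globalObject) {
    return globalObject.__state__ || {};
}

var html = renderPage('<p>Hello</p>', { user: 'jane', page: 2 });
console.log(html);
```

In the browser, the client application would call `rehydrate(window)` during startup and initialize its views from the result, attaching to the existing markup instead of rendering it again.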

Template helpers are objects, like numbers, strings, or hash objects, and are typically easy to share. For shared formatting, such as dates, many formatting libraries work both on the server and on the client. Moment.js, for example, can parse, validate, manipulate, and display dates in JavaScript both on the server and on the client. URL formatting, on the other hand, differs by environment: on the server it requires prepending the host and port to the path, whereas on the client we can simply use the relative URL.
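The URL formatting difference can be hidden behind a single shared helper. The following is a minimal sketch; `formatUrl` and its `origin` parameter are hypothetical names chosen for illustration.

```javascript
'use strict';

// Hypothetical helper: `origin` is supplied on the server (host and port)
// and omitted on the client, where relative URLs resolve against the page.
function formatUrl(path, origin) {
    var normalized = path.charAt(0) === '/' ? path : '/' + path;
    return origin ? origin.replace(/\/+$/, '') + normalized : normalized;
}

console.log(formatUrl('/products/42', 'http://domain.com:8080')); // server
console.log(formatUrl('/products/42'));                           // client
```

Templates can then call the same helper in both environments, with only the caller deciding whether an origin is available.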

Most modern SPA frameworks support the concept of a router, which tracks the user’s state as she navigates from one view or page to another. In an SPA, routing is the main mechanism needed for handling navigation events, changing the state and view of the page, and updating the browser’s navigation history. In an isomorphic application, we also need a set of routing configurations (i.e., a map of URI patterns to route handlers) that are easily shared between the server and the client. The challenge of sharing routes is found in the route handlers themselves, since they often need access to environment APIs that require accessing URL information, HTTP headers, and cookies. On the server this information is accessed via the request object’s APIs, whereas on the client the browser’s APIs are used instead.
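A shared routing configuration might look like the sketch below. Everything here is illustrative: the `routes` table, `matchPattern`, `dispatch`, and the shape of the `environment` object are assumptions, not the API of any specific router.

```javascript
'use strict';

// Shared route table: a map of URI patterns to route handlers.
var routes = {
    '/about': function (context) { return 'about for ' + context.userAgent; },
    '/products/:id': function (context) { return 'product ' + context.params.id; }
};

// Matches '/products/42' against '/products/:id' and extracts { id: '42' }.
function matchPattern(pattern, path) {
    var patternParts = pattern.split('/');
    var pathParts = path.split('/');
    if (patternParts.length !== pathParts.length) {
        return null;
    }
    var params = {};
    for (var i = 0; i < patternParts.length; i++) {
        if (patternParts[i].charAt(0) === ':') {
            params[patternParts[i].slice(1)] = decodeURIComponent(pathParts[i]);
        } else if (patternParts[i] !== pathParts[i]) {
            return null;
        }
    }
    return params;
}

// `environment` is the only piece that differs between server and client:
// the server would build it from the request object, the client from browser APIs.
function dispatch(environment) {
    var patterns = Object.keys(routes);
    for (var i = 0; i < patterns.length; i++) {
        var params = matchPattern(patterns[i], environment.path);
        if (params) {
            return routes[patterns[i]]({
                params: params,
                userAgent: environment.userAgent
            });
        }
    }
    return null; // no route matched
}

console.log(dispatch({ path: '/products/42', userAgent: 'test-agent' }));
```

The handlers never touch `req` or `window` directly; they only see the normalized context, which is what allows the same route table to be loaded on both sides.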

Models are often referred to as business/domain objects or entities. Models establish an abstraction for data by removing state storage and retrieval from the DOM. In the simplest approach, an isomorphic application initializes the client in the exact same state the application was in on the server before the initial page response was sent back. At the extreme end of the isomorphic JavaScript spectrum, sharing the state and specification of the models includes two-way synchronization of state between the server and the client (please see Chapter 4 for a further exploration of this approach).

Applications can differ in their position on the isomorphic JavaScript spectrum. The amount of code shared between the server and the client can vary, starting from sharing templates, to sharing the application’s entire view layer, all the way up to sharing the majority of the application’s logic. As applications progress along the isomorphic spectrum, more abstractions need to be created. In the next chapter we will discuss the different categories of isomorphic JavaScript and dive deeper into these abstractions.

Charlie Robbins is commonly credited with coining the term “isomorphic JavaScript” in a 2011 blog post entitled “Scaling Isomorphic Javascript Code”. The term was later popularized by Spike Brehm in a 2013 blog post entitled “Isomorphic JavaScript: The Future of Web Apps” along with subsequent articles and conference talks. However, there has been some contention over the word “isomorphic” in the JavaScript community. Michael Jackson, a React.js trainer and coauthor of the react-router project, has suggested the term “universal” JavaScript. Jackson argues that the term “universal” highlights JavaScript code that can run “not only on servers and browsers, but on native devices and embedded architectures as well.”

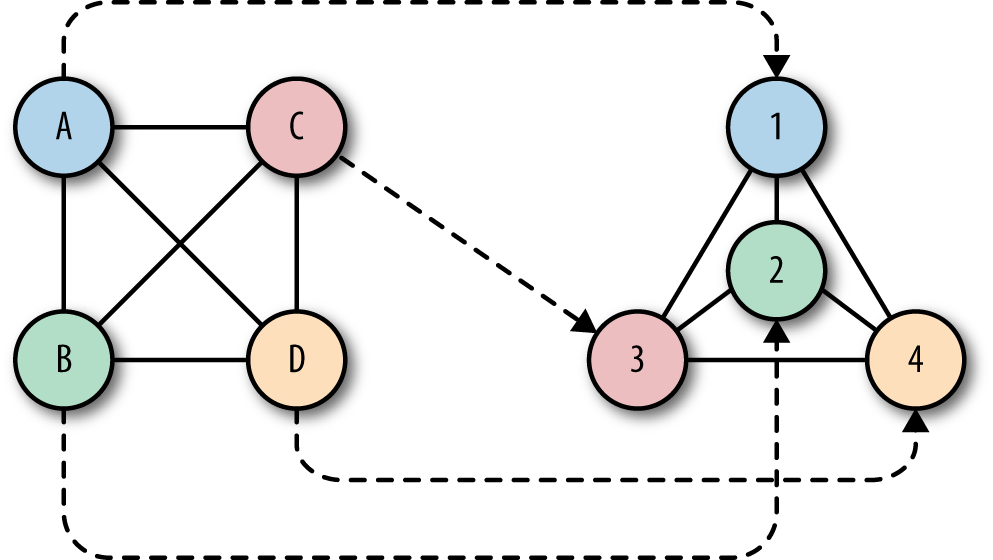

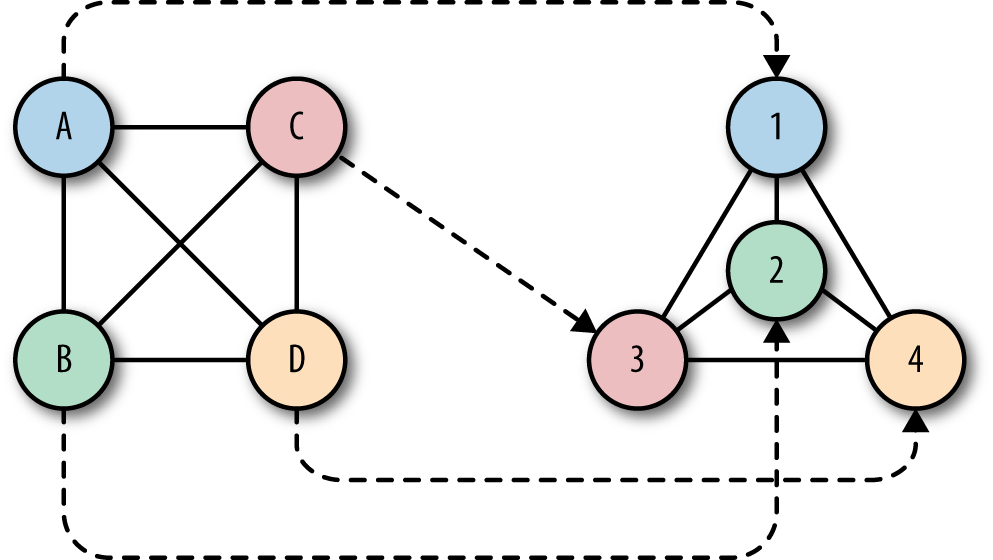

“Isomorphism,” on the other hand, is a mathematical term, which captures the notion of two mathematical objects that have corresponding or similar forms when we simply ignore their individual distinctions. When applying this mathematical concept to graph theory, it becomes easy to visualize. Take for example the two graphs in Figure 3-1.

These graphs are isomorphic, even though they look very different. The two graphs have the same number of nodes, with each node having the same number of edges. But what makes them isomorphic is that there exists a mapping for each node from the first graph to a corresponding node in the second graph while maintaining certain properties. For example, the node A can be mapped to node 1 while maintaining its adjacency in the second graph. In fact, all nodes in the first graph have an exact one-to-one correspondence to nodes in the second graph while maintaining adjacency.
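The idea of a mapping that maintains adjacency can be checked mechanically. The sketch below uses two small, made-up graphs (not the graphs from the figure) and a hypothetical `preservesAdjacency` function; it assumes simple undirected graphs with no duplicate edges and no isolated nodes.

```javascript
'use strict';

// Edge lists for two small, made-up graphs.
var lettered = [['A', 'B'], ['B', 'C'], ['C', 'D'], ['D', 'A']];
var numbered = [[1, 3], [3, 2], [2, 4], [4, 1]];

// Normalizes an undirected edge so that [a, b] and [b, a] produce the same key.
function edgeKey(a, b) {
    return [String(a), String(b)].sort().join('-');
}

// Returns true when `mapping` is one-to-one and carries every edge of `g`
// onto an edge of `h`, with both graphs having the same number of edges.
function preservesAdjacency(g, h, mapping) {
    var images = Object.keys(mapping).map(function (node) {
        return String(mapping[node]);
    });
    var distinct = images.filter(function (value, index) {
        return images.indexOf(value) === index;
    });
    if (distinct.length !== images.length || g.length !== h.length) {
        return false;
    }
    var target = h.map(function (edge) { return edgeKey(edge[0], edge[1]); });
    return g.every(function (edge) {
        return target.indexOf(edgeKey(mapping[edge[0]], mapping[edge[1]])) !== -1;
    });
}

console.log(preservesAdjacency(lettered, numbered, { A: 1, B: 3, C: 2, D: 4 }));
```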

This is what is nice about the “isomorphic” analogy. In order for JavaScript code to run in both the client and server environments, these environments have to be isomorphic; that is, there should exist a mapping of the client environment’s functionality to the server environment, and vice versa. Just as the two isomorphic graphs shown in Figure 3-1 have a mapping, so do isomorphic JavaScript environments.

JavaScript code that does not depend on environment-specific features—for example, code that avoids using the window or request objects—can easily run on both sides of the wire. But for JavaScript code that accesses environment-specific properties—e.g., req.path or window.location.pathname—a mapping (sometimes referred to as a “shim”) needs to be provided to abstract or “fill in” a given environment-specific property. This leads us to two general categories of isomorphic JavaScript: 1) environment agnostic and 2) shimmed for each environment.
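A shim for the path example can be as small as the following sketch. The name `getPath` is hypothetical, and the `req.path` property is assumed to come from an Express-style request object.

```javascript
'use strict';

// Hypothetical shim: returns the current path in either environment.
// `req` is only passed on the server; in the browser the global `window` is used.
function getPath(req) {
    if (req && typeof req.path === 'string') {
        return req.path; // server (Express-style request)
    }
    if (typeof window !== 'undefined') {
        return window.location.pathname; // browser
    }
    throw new Error('No request object or window available');
}

console.log(getPath({ path: '/about' }));
```

Application code that calls `getPath(..)` no longer needs to know which environment it is running in; only the shim does.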

Environment-agnostic Node modules use only pure JavaScript functionality, and no environment-specific APIs or properties like window (for the client) and process (for the server). Examples are Lodash.js, Async.js, Moment.js, Numeral.js, Math.js, and Handlebars.js, to name a few. Many modules fall into this category, and they simply work out of the box in an isomorphic application.

The only thing we need to address with these kinds of Node modules is that they use Node’s require(module_id) module loader. Browsers don’t support the Node require(..) method. To deal with this, we need to use a build tool that will compile the Node modules for the browser. There are two main build tools that do just that: Browserify and Webpack.

In Example 3-1, we use Moment.js to define a date formatter that will run on both the server and the client.

    'use strict';

    var moment = require('moment'); // Node-specific require statement

    var formatDate = function (date) {
        return moment(date).format('MMMM Do YYYY, h:mm:ss a');
    };

    module.exports = formatDate;

We also have a simple main.js that will call the formatDate(..) function to format the current time:

    var formatDate = require('./dateFormatter.js');

    console.log(formatDate(Date.now()));

When we run main.js on the server (using Node.js), we get output like the following:

    $ node main.js
    July 25th 2015, 11:27:27 pm

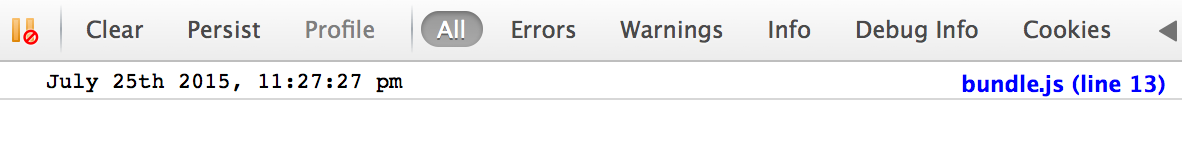

Browserify is a tool for compiling CommonJS modules by bundling up all the required Node modules for the browser. Using Browserify, we can output a bundled JavaScript file that is browser-friendly:

    $ browserify main.js > bundle.js

When we open the bundle.js file in a browser, we can see the same date message in the browser’s console (Figure 3-2).

    <script src="bundle.js"></script>

Pause for a second and think about what just happened. This is a simple example, but you can see the astonishing ramifications. With a simple build tool, we can easily share logic between the server and the client with very little effort. This opens many possibilities that we’ll dive deeper into in Part II of this book.

There are many differences between client- and server-side JavaScript. On the client there are global objects like window and different APIs like localStorage, the History API, and WebGL. On the server we are working in the context of a request/response lifecycle and the server has its own global objects.

Running the following code in the browser returns the current URL location of the browser. Changing the value of this property will redirect the page:

    console.log(window.location.href);

    window.location.href = 'http://www.oreilly.com';

Running that same code on the server returns an error:

    > console.log(window.location.href);
    ReferenceError: window is not defined

This makes sense since window is not a global object on the server. In order to do the same redirect on the server we must write a header to the response object with a status code to indicate a URL redirection (e.g., 302) and the location that the client will navigate to:

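    var http = require('http');

    http.createServer(function (req, res) {
        // Node's plain http request exposes the requested path as req.url
        console.log(req.url);
        // Respond with a 302 status and the location to navigate to
        res.writeHead(302, {'Location': 'http://www.oreilly.com'});
        res.end();
    }).listen(1337, '127.0.0.1');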

As we can see, the server code looks much different from the client code. So, then, how do we run the same code on both sides of the wire?

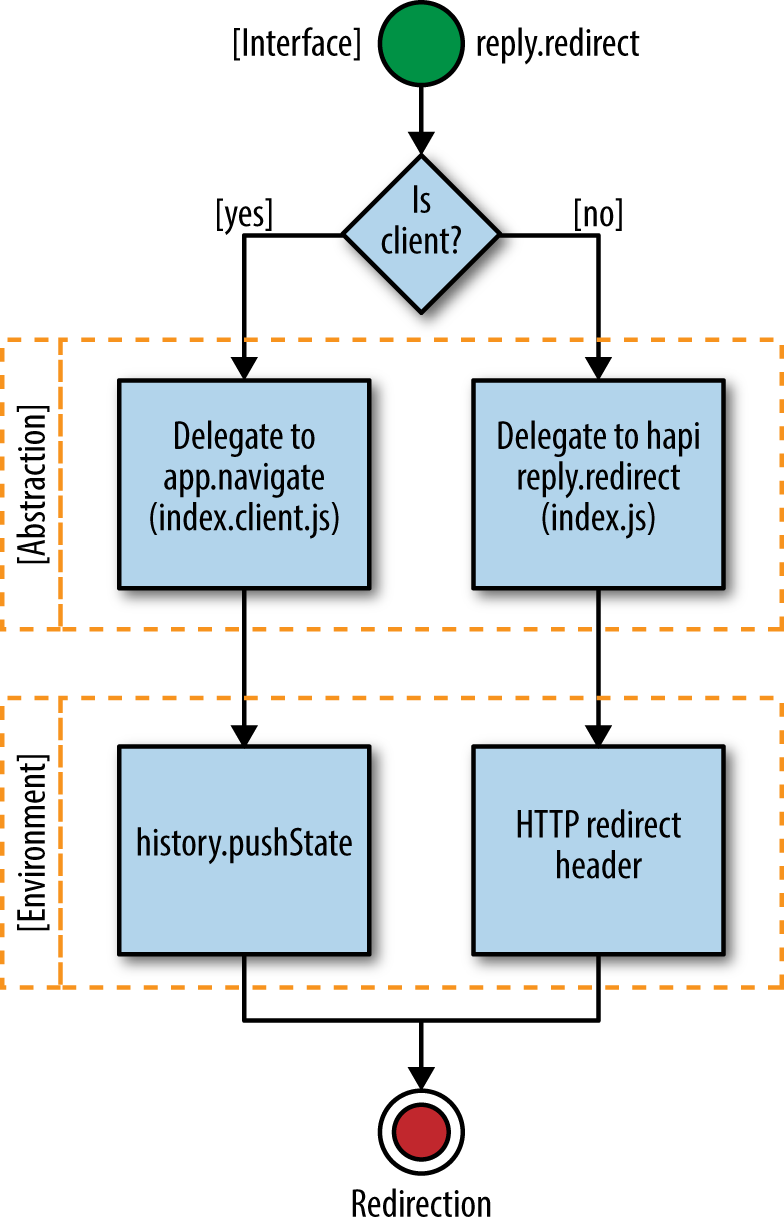

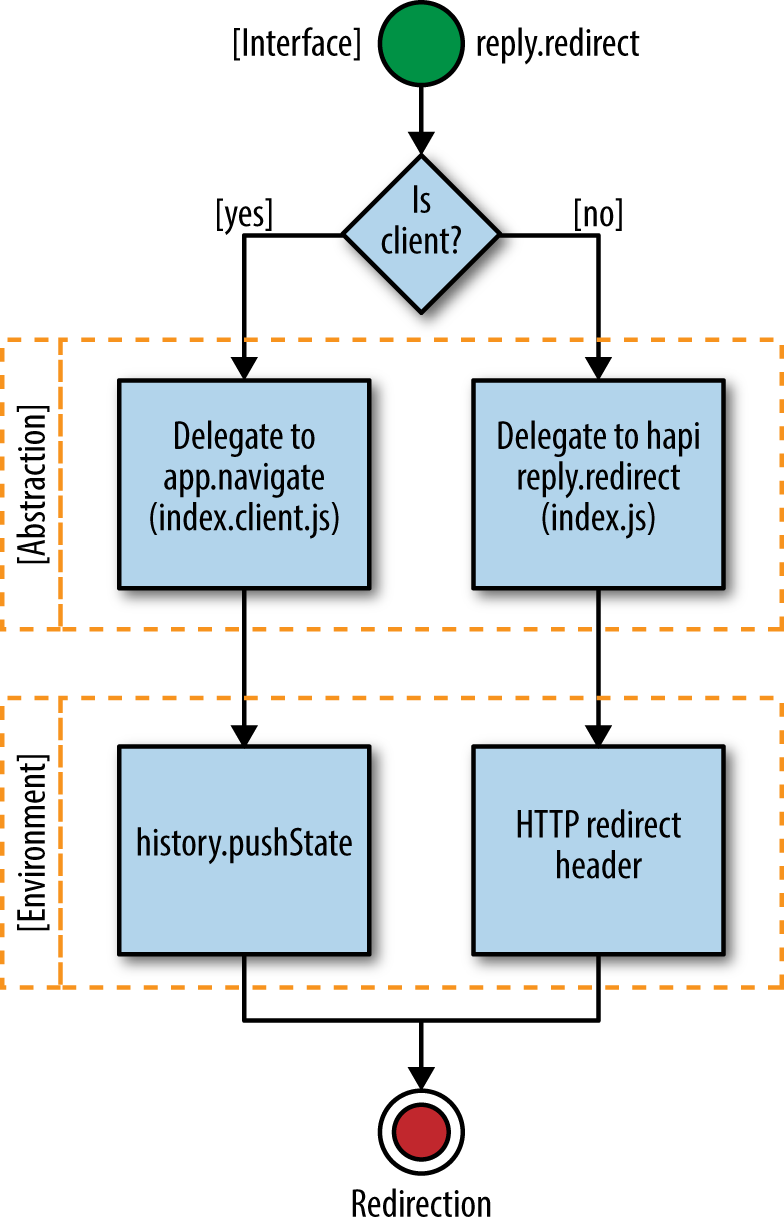

We have two options. The first option is to extract the redirect logic into a separate module that is aware of which environment it is running in. The rest of the application code simply calls this module, being completely agnostic to the environment-specific implementation:

    var redirect = require('shared-redirect');

    // Do some interesting application logic that decides if a redirect is required
    if (isRedirectRequired) {
        redirect('http://www.oreilly.com');
    }

    // Continue with interesting application logic

With this approach the application logic becomes environment agnostic and can run on both the client and the server. The redirect(..) function implementation needs to account for the environment-specific implementations, but this is self-contained and does not bleed into the rest of the application logic. Here is a possible implementation of the redirect(..) function:

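    // typeof avoids a ReferenceError on the server, where window is not declared
    if (typeof window !== 'undefined') {
        window.location.href = 'http://www.oreilly.com';
    } else {
        this._res.writeHead(302, {'Location': 'http://www.oreilly.com'});
    }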

Notice that this function must be aware of the window implementation and must use it accordingly.

The alternative approach is to simply use the server’s response interface on the client, but shimmed to use the window property instead. This way, the application code always calls res.writeHead(..), but in the browser this will be shimmed to call the window.location.href property. We will look into this approach in more detail in Part II of this book.
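To make the second option concrete, here is a minimal sketch of what a client-side stand-in for the response object could look like. `createClientResponse` is a hypothetical name, and the `win` parameter stands in for the browser's `window` so that the sketch can be exercised outside a browser; this is not the implementation developed later in the book.

```javascript
'use strict';

// Hypothetical client-side stand-in for the server's response object.
function createClientResponse(win) {
    return {
        writeHead: function (statusCode, headers) {
            var isRedirect = statusCode >= 300 && statusCode < 400;
            if (isRedirect && headers && headers.Location) {
                // In the browser a redirect is just a navigation
                win.location.href = headers.Location;
            }
        },
        end: function () {} // nothing to flush in the browser
    };
}

// Application code stays identical to the server version.
var fakeWindow = { location: { href: 'http://domain.com/' } };
var res = createClientResponse(fakeWindow);
res.writeHead(302, { 'Location': 'http://www.oreilly.com' });
res.end();

console.log(fakeWindow.location.href);
```

In a real browser bundle the shim would be created with the actual `window` object, and the build tool would substitute it for the server's response wherever the shared code expects one.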

In this chapter, we looked at two different categories of isomorphic JavaScript code. We saw how easy it is to simply port environment-agnostic Node modules to the browser using a tool like Browserify. We also saw how environment-specific implementations can be shimmed for each environment to allow code to be reused on the client and the server. It’s now time to take it to the next level. In the next chapter we’ll go beyond server-side rendering and look at how isomorphic JavaScript can be used for different solutions. We’ll explore innovative, forward-looking application architectures that use isomorphic JavaScript to accomplish novel things.

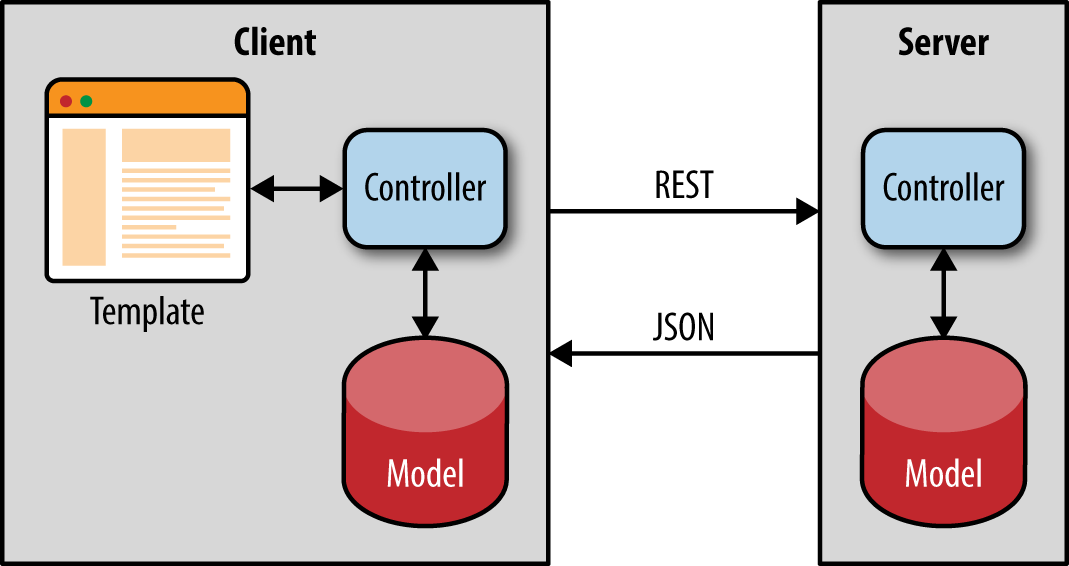

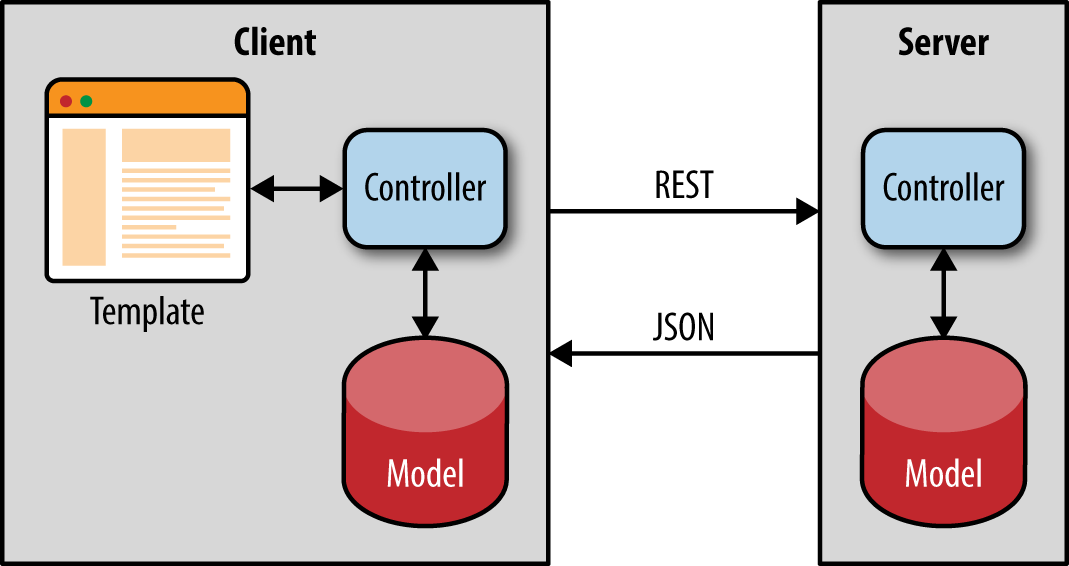

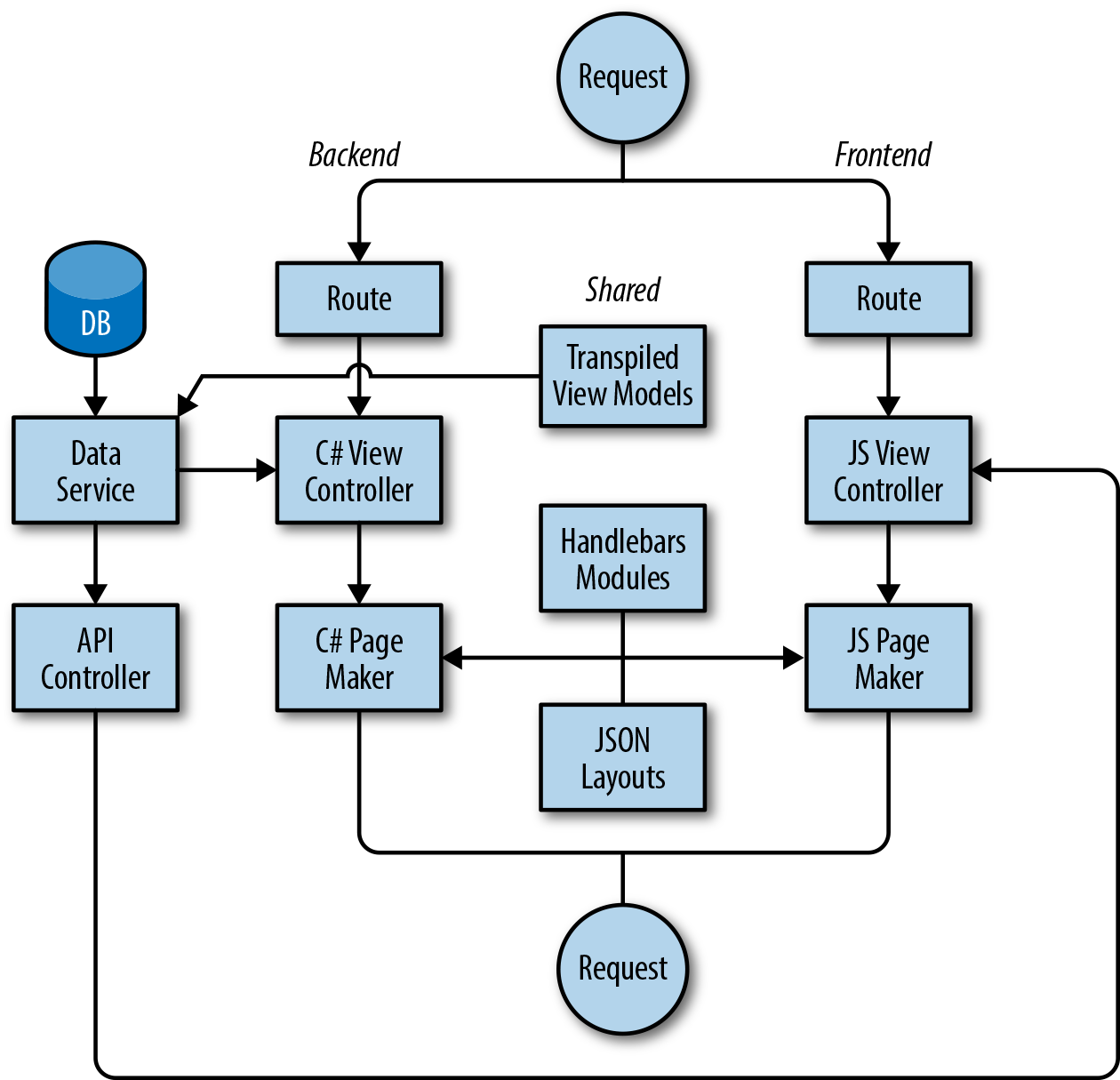

Applications come in different shapes and sizes. In the introductory chapters we focused mainly on single-page applications that can also be rendered on the server. The server renders the initial page to improve perceived page load time and for search engine optimization (SEO). That discussion was focused around the classic application architecture, in which the client initiates a REST call and is routed to one of the stateless backend servers, which in turn queries the database and returns the data to the client (Figure 4-1).

This approach is great for classic ecommerce web applications. But there is another class of applications, often referred to as “real-time” applications. In fact, we can say that there are two classes of isomorphic JavaScript applications: single-page apps that can be server-rendered and apps that use isomorphic JavaScript for real-time, offline, and data-syncing capabilities.

Matt Debergalis has described real-time applications as a natural evolutionary step in the rich history of application architectures. He believes that changes in application architecture are driven by new eras of cheap CPUs, the Internet, and the emergence of mobile. Each of these changes resulted in the development of new application architectures. However, even though we are seeing more complex, live-updating, and collaborative real-time applications, the majority of applications remain single-page apps that can benefit from server-side rendering. Nonetheless, we feel this subject is important to the future of application architecture and very relevant to our discussion of isomorphic JavaScript applications.

Real-time applications have a rich interactive interface and a collaborative element that allows users to share data with other users. Think of a chat application like Slack; a shared document application like Google Docs; or a ride-share application like Uber, which shows all the available drivers and their locations in real time to all the users. For these kinds of applications we end up designing and implementing ways to push data from the server to the client to show other users’ changes as they happen. We also need a way to reactively update the screen on each client once that data comes from the server. Most of these real-time applications have similar functional pieces. These applications must have some mechanism for watching a database for changes, some kind of protocol that runs on top of a push technology like WebSockets to push data to the client (or emulate server data pushes using long polling—i.e., where the server holds the request open until new data is available and has been sent), and some kind of cache on the client to avoid the round-trips to the server when redrawing the screen.

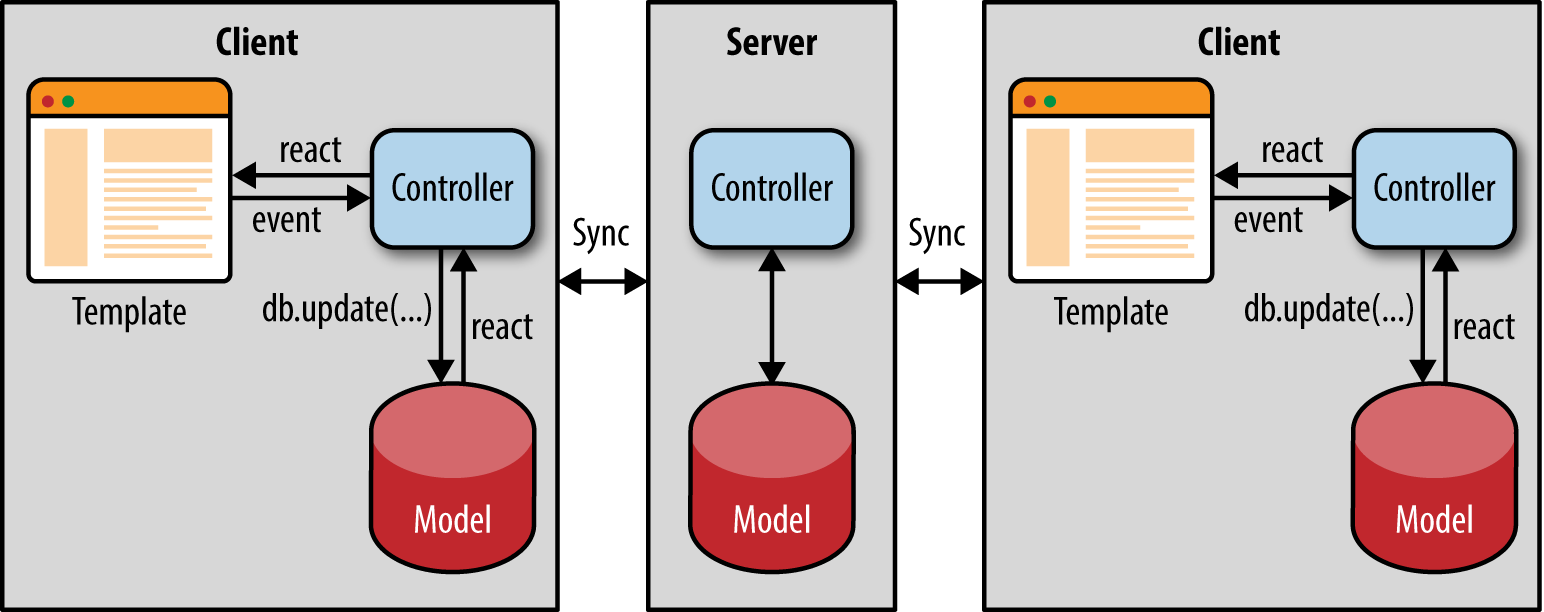

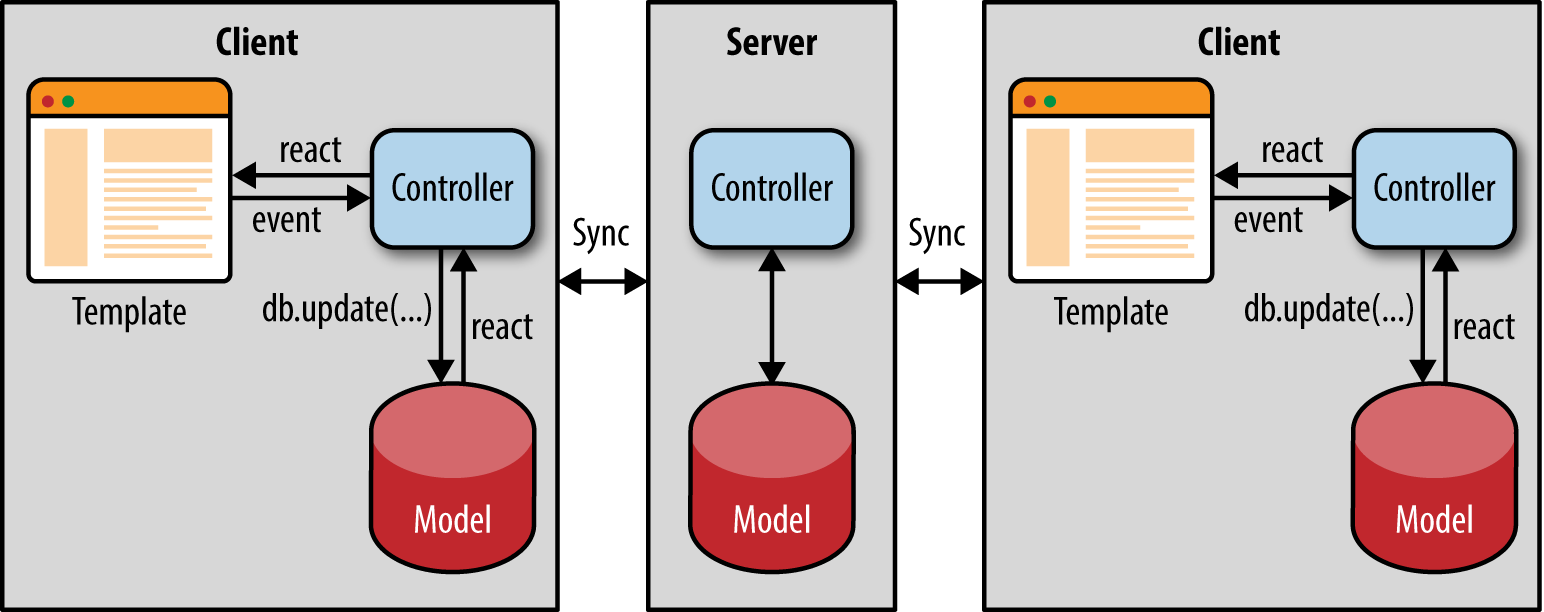

In Figure 4-2 we can see how data flows from the user’s interaction with the view. We can also see how changes from other clients propagate to all users and how the view rerenders when data changes are sent from the server. There are three interesting isomorphic concepts that come up in this kind of architecture: isomorphic APIs, bidirectional data synchronization, and client simulation on the server.

In an isomorphic real-time application, the client interacts with its local data cache similarly to how the server interacts with the backing database. The server code is executing a statement against a database. That same statement is executed on the client to fetch data out of an in-memory cache using the same database API. This symmetry between client and server APIs is often referred to as isomorphic APIs. Isomorphic APIs relieve the developer from having to juggle different strategies for accessing data. But more importantly, isomorphic APIs make it possible to run an application’s core business logic (especially across the model layer) and rendering logic on both the client and the server. Having an isomorphic API for accessing data allows us to share server code and the client application code for validating the updates to the data, accessing and storing the data, and transforming data. By using a consistent API, we remove the friction of having to write different variations of doing the same thing, having to test it in different ways, and having to update code twice when we need to change the data model. Isomorphic APIs are about being intelligent by following the DRY (Don’t Repeat Yourself) principle.
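The symmetry can be illustrated with a small sketch in which shared logic is written once against a collection interface and the backing store is swapped per environment. All names here (`createMemoryStore`, `createCollection`, `availableDrivers`) are hypothetical, and only the in-memory store is shown; a server build would supply a store backed by a real database driver with the same `all` method.

```javascript
'use strict';

// Client-side store: an in-memory cache of records.
function createMemoryStore(records) {
    return {
        all: function () { return records.slice(); }
    };
}

// The collection exposes the same query signature wherever the code runs.
function createCollection(store) {
    return {
        find: function (criteria) {
            return store.all().filter(function (record) {
                return Object.keys(criteria).every(function (key) {
                    return record[key] === criteria[key];
                });
            });
        }
    };
}

// Shared business logic, written once against the collection API.
function availableDrivers(collection) {
    return collection.find({ available: true }).map(function (driver) {
        return driver.name;
    });
}

var drivers = createCollection(createMemoryStore([
    { name: 'Ana', available: true },
    { name: 'Ben', available: false },
    { name: 'Cy', available: true }
]));

console.log(availableDrivers(drivers)); // [ 'Ana', 'Cy' ]
```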

Another important aspect of real-time applications is the synchronization between the server’s database and the client’s local cache. Updates that a client sends to the server should be reflected in that client’s local cache, and updates made on the server should flow back to the cache. Meteor.js is a good example of a real-time isomorphic framework that lets developers write JavaScript that runs on both the server and the client. Meteor has a “Database Everywhere” principle. Both the client and the server in Meteor use the same isomorphic APIs to access the database. Meteor also uses database abstractions, like minimongo, on the client and observable collections over DDP (Distributed Data Protocol) to keep the data in sync between the server and the client. It is the client database (not the server database) that drives the UI. The client database does lazy data synchronizing to keep the server database up to date. This allows the client to be offline and to still process user data changes locally. A write on the client can optionally be a speculative write to the client’s local cache before hearing a confirmation back from the server. Meteor has a built-in latency compensation mechanism for refreshing the speculative cache if any writes to the server’s database fail or are overwritten by another client.
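The speculative write described above can be sketched as a cache that keeps pending writes separate from server-confirmed state. This is not Meteor's API or its actual mechanism; `OptimisticCache` and its methods are hypothetical and only illustrate the general idea of applying a write locally and rolling it back if the server rejects it.

```javascript
// Sketch of speculative (optimistic) writes with rollback.
// This is not Meteor's API; OptimisticCache and its methods are hypothetical.
class OptimisticCache {
  constructor() {
    this.confirmed = new Map(); // state the server has acknowledged
    this.pending = new Map();   // speculative writes awaiting an answer
  }
  // Apply the write locally right away so the UI can update immediately.
  write(key, value) {
    this.pending.set(key, value);
  }
  // What the UI reads: pending writes shadow confirmed values.
  read(key) {
    return this.pending.has(key) ? this.pending.get(key) : this.confirmed.get(key);
  }
  // The server accepted the write. It may report a different final value,
  // for example if another client's write won.
  confirm(key, serverValue) {
    this.confirmed.set(key, serverValue);
    this.pending.delete(key);
  }
  // The server rejected the write: drop the speculation and fall back
  // to the last confirmed state.
  reject(key) {
    this.pending.delete(key);
  }
}

const cache = new OptimisticCache();
cache.confirm('title', 'Draft');   // initial state from the server
cache.write('title', 'Final');     // user edits; the UI sees it at once
const whileWaiting = cache.read('title');
cache.reject('title');             // server refused the write
const afterReject = cache.read('title');
console.log(whileWaiting, afterReject);
```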

Taking isomorphic JavaScript to the extreme, real-time isomorphic applications may run separate processes on the server for each client session. This allows the server to look at the data that the application loads and proactively send data to the client, essentially simulating the UI on the server. This technique has been famously used by the Asana application. Asana is a collaborative project management tool built on an in-house, closed-source framework called Luna. Luna is tailored for writing real-time web applications and is similar to Meteor.js in providing a common isomorphic API for accessing data on the client and the server. However, what makes Luna unique is that it runs a complete copy of the application on the server. Luna simulates the client on the server by executing the same JavaScript code on the server that is running in the client. As a user clicks around in the Asana UI, the JavaScript events in the client are synchronized with the server. The server maintains an exact copy of the user state by executing all the views and events, but simply throws away the HTML.
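To make the idea of simulating the client on the server more concrete, here is a small sketch in which the server replays a client's UI events through the same state function the client uses. Luna is closed source, so this is not its implementation; `applyEvent`, `createSessionMirror`, and the event shapes are hypothetical.

```javascript
// Sketch of mirroring client state on the server by replaying UI events.
// Not Luna's implementation; every name here is hypothetical.

// Shared code: the same function would run in the browser and on the server.
function applyEvent(state, event) {
  switch (event.type) {
    case 'open-project':
      return { project: event.id, task: null };
    case 'open-task':
      return { project: state.project, task: event.id };
    default:
      return state;
  }
}

// Server side: one mirror per client session, fed by that client's events.
function createSessionMirror() {
  let state = { project: null, task: null };
  return {
    receive(event) {
      state = applyEvent(state, event);
    },
    // Because the server knows what the user is looking at, it can decide
    // which data to send proactively.
    dataToPreload() {
      if (state.task !== null) return ['task:' + state.task + ':comments'];
      if (state.project !== null) return ['project:' + state.project + ':tasks'];
      return [];
    }
  };
}

const mirror = createSessionMirror();
mirror.receive({ type: 'open-project', id: 7 });
const afterProject = mirror.dataToPreload();
mirror.receive({ type: 'open-task', id: 42 });
const afterTask = mirror.dataToPreload();
console.log(afterProject, afterTask);
```

The sketch also hints at the cost: the server has to hold and update one such mirror for every connected session.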

A recent post on Asana’s engineering blog indicates that Asana is moving away from this kind of client/server simulation, though. Performance is an issue, especially when the server has to simulate the UI in multiple states so that it can anticipate and preload data on the client for immediate availability. The post also cites versioning as an issue for mobile clients that may run older versions of the code, which makes simulation tricky since the client and the server are not running exactly the same code.

Isomorphic JavaScript is an attempt to share an application on both sides of the wire. By looking at real-time isomorphic frameworks, we have seen different solutions to sharing application logic. These frameworks take a more novel approach than simply taking a single-page application and rendering it on the server. There has been a lot of discussion around these concepts, and we hope this has provided a good introduction to the many facets of isomorphic JavaScript. In the next part of the book, we will build on these key concepts and create our first isomorphic application.

Knowing what, when, and where to abstract is key to good software design. If you abstract too much and too soon, then you add layers of complexity that add little value, if any. If you do not abstract enough and in the right places, then you end up with a solution that is brittle and won’t scale. When you have the perfect balance, it is truly beautiful. This is the art of software design, and engineers appreciate it like an art critic appreciates a good Picasso or Rembrandt.

In this part of the book we will attempt to create something of beauty. Many others have blazed the path ahead of us. Some have gotten it right and some have gotten it wrong. I have had experiences in both categories, with many more failures than successes, but with each failure I have learned a lesson or two. Armed with the knowledge gained from these lessons I will lead us through the precarious process of designing and implementing a lightweight isomorphic JavaScript application framework.

This will not be an easy process—most people get the form, structure, and abstractions wrong—but I am up for the challenge if you are. In the end, we may not have achieved perfection, but we will have learned lessons that we can apply to our future isomorphic JavaScript endeavors and we will have a nice base from which we can expand. After all, this is just a step in the evolution of software and us. Are you with me? Well then, let’s push forward and blaze our own path from the ground up.

Now that we have a solid understanding of isomorphic JavaScript, it’s time to go from theory to practice! In this chapter we lay the foundation that we’ll progressively build upon until we have a fully functioning isomorphic application. This foundation will consist of the following main technologies:

Node.js will be the server runtime for our application. It is a platform built on Chrome’s JavaScript runtime for easily building fast, scalable network applications. Node.js uses an event-driven, nonblocking I/O model that makes it lightweight and efficient, perfect for data-intensive real-time applications that run across distributed devices.

Hapi.js will be used to power the HTTP application server portion of our application. It is a rich framework for building applications and services. Hapi.js enables developers to focus on writing reusable application logic rather than spending time building infrastructure.

Gulp.js will be used to compile our JavaScript (ES6 to ES5), create bundles for the browser, and manage our development workflow. It is a streaming build system based on Node streams: file manipulation is all done in memory, and it doesn’t write any files until you tell it to do so.

Babel.js will allow us to begin leveraging ES6 syntax and features now by compiling our code to an ES5-compatible distributable. It is the compiler for writing next-generation JavaScript.
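The event-driven, nonblocking model mentioned for Node.js above can be seen in a few lines. In this sketch, `fakeRead` is a hypothetical helper that uses `setTimeout` to stand in for a real I/O call; the point is only that the program continues running while the "read" is pending.

```javascript
// Sketch of the event-driven, nonblocking style: work is scheduled with a
// callback, and the program keeps going instead of waiting for the result.
const order = [];

function fakeRead(name, callback) {
  // setTimeout stands in for an I/O operation such as a file or network read.
  setTimeout(function () {
    callback(null, 'contents of ' + name);
  }, 0);
}

order.push('request sent');
fakeRead('config.json', function (err, data) {
  // Runs later, once the event loop picks up the completed "read".
  order.push('response handled: ' + data);
  console.log(order);
});
order.push('still free to do other work');
```

The callback runs only after the current code has finished, so the third entry is recorded before the response is handled.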

If you are already comfortable with using Node, npm, and Gulp then feel free to skip this chapter and install the project that is the end result by running npm install thaumoctopus-mimicus@"0.1.x".

Installing Node is very easy. Node will run on Linux, Mac OS, Windows, and Unix. You can install it from source via the terminal, using a package manager (e.g., yum, homebrew, apt-get, etc.), or by using one of the installers for Mac OS or Windows.

This section outlines how to install Node from source. I highly recommend using one of the installers or a package manager, but if you are one of those rare birds who enjoy installing software from source, then this is the section for you. Otherwise, jump ahead to “Interacting with the Node REPL”.

The first step is to download the source from Nodejs.org:

$ wget http://nodejs.org/dist/v0.12.15/node-v0.12.15.tar.gz

node-v0.12.15.tar.gz was the latest stable version at the time of writing. Check https://nodejs.org/download/release/ for a more recent version and replace v0.12.15 in the URL of the wget example with the latest stable version.

Next, you’ll need to extract the source in the downloaded file:

$ tar zxvf node-v0.12.15.tar.gz

This command unzips and extracts the Node source. For more information on the tar command options, execute man tar in your terminal.

Now that you have the source code on your computer, you need to run the configuration script in the source code directory. This script finds the required Node dependencies on your system and informs you if any are missing on your computer:

$ cd node-v0.12.15
$ ./configure

Once all dependencies have been found, the source code can then be compiled to a binary. This is done using the make command:

$ make

The last step is to run the make install command. This installs Node globally and requires sudo privileges:

$ sudo make install

If everything went smoothly, you should see output like the following when you check the Node.js version:

$ node -v
v0.12.15

Node is a runtime environment with a REPL (read–eval–print loop), which is a JavaScript shell that allows one to write JavaScript code and have it evaluated upon pressing Enter. It is like having the console from the Chrome developer tools in your terminal. You can try it out by entering a few basic commands:

$ node
> (new Date()).getTime();
1436887590047
>
(^C again to quit)
>
$

This is useful for testing out code snippets and debugging.

npm is the package manager for Node. It allows developers to easily reuse their code across projects and share their code with other developers. npm comes packaged with Node, so when you installed Node you also installed npm:

$ npm -v
2.7.0

There are numerous tutorials and extensive documentation on the Web for npm, and a full treatment is beyond the scope of this book. In the examples in the book we will primarily be working with package.json files, which contain metadata for the project, and the init and install commands. We’ll use our application project as a working example for learning how to leverage npm.

Aside from source control, having a way to package, share, and deploy your code is one of the most important aspects of managing a software project. In this section, we will be using npm to set up our project.

The npm CLI (command-line interface) is a terminal program that allows you to quickly execute commands that help you manage your project/package. One of these commands is init.

If you are already familiar with npm init or if you prefer to just take a look at the source code, then feel free to skip ahead to “Installing the Application Server”.

init is an interactive command that will ask you a series of questions and create a package.json file for your project. This file contains the metadata for your project (package name, version, dependencies, etc.). This metadata is used to publish your package to the npm repository and to install your package from the repository. Let’s take a walk through the command:

$ npm init
This utility will walk you through creating a package.json file.
It only covers the most common items, and tries to guess sane defaults.

See `npm help json` for definitive documentation on these fields
and exactly what they do.

Use `npm install <pkg> --save` afterwards to install a package and
save it as a dependency in the package.json file.

Press ^C at any time to quit.

The computer name, directory path, and username (fantastic-planet:thaumoctopus-mimicus jstrimpel $) in the terminal code examples have been omitted for brevity. All terminal commands from this point forward are executed from the project directory.

The first prompt you’ll see is for the package name. This will default to the current directory name, which in our case is thaumoctopus-mimicus.

name: (thaumoctopus-mimicus)

“thaumoctopus-mimicus” is the name of the project that we will be building throughout Part II. Each chapter will be pinned to a minor version. For example, this chapter will be 0.1.x.

Press enter to continue. The next prompt is for the version:

version: (0.0.0)

0.0.0 will do fine for now since we are just getting started and there will not be anything of significance to publish for some time. The next prompt is for a description of your project:

description:

Enter “Isomorphic JavaScript application example”. Next, you’ll be asked for the entry point of your project:

entry point: (index.js)

The entry point is the file that is loaded when a user includes your package in their source code. index.js will suffice for now. The next prompt is for the test command. Leave it blank (we will not be covering testing because it is outside the scope of this book):

test command:

Next, you’ll be prompted for the project’s GitHub repository. The default provided here is https://github.com/isomorphic-javascript-book/thaumoctopus-mimicus.git, which is this project’s repository. Yours will likely be blank.

git repository: (https://github.com/isomorphic-javascript-book/thaumoctopus-mimicus.git)

The next prompt is for keywords for the project:

keywords:

Enter “isomorphic javascript”. Then you’ll be asked for the author’s name:

author:

Enter your name here. The final prompt is for the license. Depending on the npm version, the default shown will be (ISC) MIT or (ISC); MIT is what we want:

license: (ISC) MIT

If you navigate to the project directory you will now see a package.json file with the contents shown in Example 5-1.

{
  "name": "thaumoctopus-mimicus",
  "version": "0.0.0",
  "description": "Isomorphic JavaScript application example",
  "main": "index.js",
  "scripts": {
    "test": "echo \"Error: no test specified\" && exit 1"
  },
  "repository": {
    "type": "git",
    "url": "https://github.com/isomorphic-javascript-book/thaumoctopus-mimicus.git"
  },
  "keywords": [
    "isomorphic",
    "javascript"
  ],
  "author": "Jason Strimpel",
  "license": "MIT",
  "bugs": {
    "url": "https://github.com/isomorphic-javascript-book/thaumoctopus-mimicus/issues"
  },
  "homepage": "https://github.com/isomorphic-javascript-book/thaumoctopus-mimicus"
}

In the previous section, we initialized our project and created a package.json file that contains the metadata for our project. While this is a necessary process, it does not provide any functionality for our project, nor is it very exciting—so let’s get on with the show and have some fun!

All web applications, including isomorphic JavaScript applications, require an application server of sorts. Whether it is simply serving static files or assembling HTML document responses based on service requests and business logic, it is a necessary part and a good place to begin our coding journey together. For our application server we will be using hapi. Installing hapi is a very easy process:

$ npm install hapi --save

This command not only installs hapi, but also adds a dependency entry to the project’s package.json file. This is so that when someone (including you) installs your project, all the dependencies required to run the project are installed as well.

Now that we have hapi installed, we can write our first application server. The goal of this first example is to respond with “hello world”. In your index.js file, enter the code shown in Example 5-2.

var Hapi = require('hapi');

// Create a server with a host and port
var server = new Hapi.Server();
server.connection({
    host: 'localhost',
    port: 8000
});

// Add the route
server.route({
    method: 'GET',
    path: '/hello',
    handler: function (request, reply) {
        reply('hello world');
    }
});

// Start the server
server.start();

To start the application execute node . in your terminal, and open your browser to http://localhost:8000/hello. If you see “hello world”, congratulations! If not, review the previous steps and see if there is anything that you or I might have missed. If all else fails you can try copying this gist into your index.js file.
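For comparison, it can help to see roughly what the same route looks like when written directly against Node's built-in http module, since this is the kind of plumbing an application server framework takes care of. The sketch below is not part of the book's project; `handleRequest` and `startServer` are hypothetical names, and the matching is deliberately naive (for example, it does not handle query strings).

```javascript
// Sketch: the /hello route using only Node's built-in http module.
// Not from the book's project; handleRequest and startServer are hypothetical.
function handleRequest(request, response) {
  if (request.method === 'GET' && request.url === '/hello') {
    response.writeHead(200, { 'Content-Type': 'text/plain' });
    response.end('hello world');
  } else {
    response.writeHead(404, { 'Content-Type': 'text/plain' });
    response.end('not found');
  }
}

function startServer(port) {
  // Loaded lazily so that handleRequest can be exercised on its own.
  const http = require('http');
  return http.createServer(handleRequest).listen(port, 'localhost');
}

// To try it, call startServer(8000) and open http://localhost:8000/hello
```

Even in this tiny case the method check, path match, and fallback response are all written by hand, which is the sort of infrastructure the route configuration object in Example 5-2 expresses declaratively.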

ECMAScript 6, or ES6, is the latest version of JavaScript, which adds quite a few new features to the language. The specification was approved and published on June 17, 2015. People have mixed opinions about some features, such as the introduction of classes, but overall ES6 has been well received and at the time of writing is being widely adopted by many companies.

ES6 classes are just syntactic sugar on top of prototypal inheritance, like many other nonnative implementations. They were likely added to make the language more appealing to a larger audience by providing a common frame of reference to those coming from classical inheritance languages. We will be utilizing classes throughout the book.
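To see what "syntactic sugar" means here, the sketch below writes the same type twice: once with an ES5 constructor function and prototype method, and once with the ES6 class keyword. The names `AnimalES5` and `AnimalES6` are made up for this illustration.

```javascript
// ES5 style: a constructor function plus a method on its prototype.
function AnimalES5(name) {
  this.name = name;
}
AnimalES5.prototype.speak = function () {
  return this.name + ' makes a sound';
};

// ES6 style: the class keyword sets up the same kind of prototype chain.
class AnimalES6 {
  constructor(name) {
    this.name = name;
  }
  speak() {
    return this.name + ' makes a sound';
  }
}

const a = new AnimalES5('Octopus');
const b = new AnimalES6('Octopus');

console.log(a.speak() === b.speak());                          // true
console.log(typeof AnimalES6);                                 // "function"
console.log(Object.getPrototypeOf(b) === AnimalES6.prototype); // true
```

The two forms are not identical in every detail (for instance, a class constructor cannot be called without `new`), but the underlying mechanism is still prototypal inheritance.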