3

Overview of Regular Expression Features and Flavors

Now that you have a feel for regular expressions and a few diverse tools that use them, you might think we’re ready to dive into using them wherever they’re found. But even a simple comparison among the egrep versions of the first chapter and the Perl and Java in the previous chapter shows that regular expressions and the way they’re used can vary wildly from tool to tool.

When looking at regular expressions in the context of their host language or tool, there are three broad issues to consider:

• What metacharacters are supported, and their meaning. Often called the regex “flavor.”

• How regular expressions “interface” with the language or tool, such as how to specify regular-expression operations, what operations are allowed, and what text they operate on.

• How the regular-expression engine actually goes about applying a regular expression to some text. The method that the language or tool designer uses to implement the regular-expression engine has a strong influence on the results one might expect from any given regular expression.

Regular Expressions and Cars

The considerations just listed parallel the way one might think while shopping for a car. With regular expressions, the metacharacters are the first thing you notice, just as with a car it’s the body shape, shine, and nifty features like a CD player and leather seats. These are the types of things you’ll find splashed across the pages of a glossy brochure, and a list of metacharacters like the one on page 32 is the regular-expression equivalent. It’s important information, but only part of the story.

How regular expressions interface with their host program is also important. The interface is partly cosmetic, as in the syntax of how to actually provide a regular expression to the program. Other parts of the interface are more functional, defining what operations are supported, and how convenient they are to use. In our car comparison, this would be how the car “interfaces” with us and our lives. Some issues might be cosmetic, such as what side of the car you put gas in, or whether the windows are powered. Others might be a bit more important, such as if it has an automatic or manual transmission. Still others deal with functionality: can you fit the thing in your garage? Can you transport a king-size mattress? Skis? Five adults? (And how easy is it for those five adults to get in and out of the car—easier with four doors than with two.) Many of these issues are also mentioned in the glossy brochure, although you might have to read the small print in the back to get all the details.

The final concern is about the engine, and how it goes about its work to turn the wheels. Here is where the analogy ends, because with cars, people tend to understand at least the minimum required about an engine to use it well: if it’s a gasoline engine, they won’t put diesel fuel into it. And if it has a manual transmission, they won’t forget to use the clutch. But, in the regular-expression world, even the most minute details about how the regex engine goes about its work, and how that influences how expressions should be crafted and used, are usually absent from the documentation. However, these details are so important to the practical use of regular expressions that the entire next chapter is devoted to them.

In This Chapter

As the title might suggest, this chapter provides an overview of regular expression features and flavors. It looks at the types of metacharacters commonly available, and some of the ways regular expressions interface with the tools they’re part of. These are the first two points mentioned at the chapter’s opening. The third point —how a regex engine goes about its work, and what that means to us in a practical sense—is covered in the next few chapters.

One thing I should say about this chapter is that it does not try to provide a reference for any particular tool’s regex features, nor does it teach how to use regexes in any of the various tools and languages mentioned as examples. Rather, it attempts to provide a global perspective on regular expressions and the tools that implement them. If you lived in a cave using only one particular tool, you could live your life without caring about how other tools (or other versions of the same tool) might act differently. Since that’s not the case, knowing something about your utility’s computational pedigree adds interesting and valuable insight.

A Casual Stroll Across the Regex Landscape

I’d like to start with the story about the evolution of some regular expression flavors and their associated programs. So, grab a hot cup (or frosty mug) of your favorite brewed beverage and relax as we look at the sometimes wacky history behind the regular expressions we have today. The idea is to add color to our regex understanding, and to develop a feeling as to why “the way things are” are the way things are. There are some footnotes for those that are interested, but for the most part, this should be read as a light story for enjoyment.

The Origins of Regular Expressions

The seeds of regular expressions were planted in the early 1940s by two neuro-physiologists, Warren McCulloch and Walter Pitts, who developed models of how they believed the nervous system worked at the neuron level.† Regular expressions became a reality several years later when mathematician Stephen Kleene formally described these models in an algebra he called regular sets. He devised a simple notation to express these regular sets, and called them regular expressions.

Through the 1950s and 1960s, regular expressions enjoyed a rich study in theoretical mathematics circles. Robert Constable has written a good summary‡ for the mathematically inclined.

Although there is evidence of earlier work, the first published computational use of regular expressions I have actually been able to find is Ken Thompson’s 1968 article Regular Expression Search Algorithm§ in which he describes a regular-expression compiler that produced IBM 7094 object code. This led to his work on qed, an editor that formed the basis for the Unix editor ed.

ed’s regular expressions were not as advanced as those in qed, but they were the first to gain widespread use in non-technical fields. ed had a command to display lines of the edited file that matched a given regular expression. The command, “g/Regular Expression/p”, was read  This particular function was so useful that it was made into its own utility, grep (after which egrep—extended grep—was later modeled).

This particular function was so useful that it was made into its own utility, grep (after which egrep—extended grep—was later modeled).

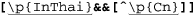

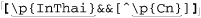

Grep’s metacharacters

The regular expressions supported by grep and other early tools were quite limited when compared to egrep’s. The metacharacter * was supported, but + and ? were not (the latter’s absence being a particularly strong drawback). grep’s capturing metacharacters were \(...\), with unescaped parentheses representing literal text.† grep supported line anchors, but in a limited way. If ^ appeared at the beginning of the regex, it was a metacharacter matching the beginning of the line. Otherwise, it wasn’t a metacharacter at all and just matched a literal circumflex (also called a “caret”). Similarly, $ was the end-of-line metacharacter only at the end of the regex. The upshot was that you couldn’t do something like ⌈end$ | ^start⌋. But that’s OK, since alternation wasn’t supported either!

The way metacharacters interact is also important. For example, perhaps grep’s largest shortcoming was that star could not be applied to a parenthesized expression, but only to a literal character, a character class, or dot. So, in grep, parentheses were useful only for capturing matched text, and not for general grouping. In fact, some early versions of grep didn’t even allow nested parentheses.

Grep evolves

Although many systems have grep today, you’ll note that I’ve been using past tense. The past tense refers to the flavor of the old versions, now upwards of 30 years old. Over time, as technology advances, older programs are sometimes retrofitted with additional features, and grep has been no exception.

Along the way, AT&T Bell Labs added some new features, such as incorporating the \{min, max\) notation from the program lex. They also fixed the -y option, which in early versions was supposed to allow case-insensitive matches but worked only sporadically. Around the same time, people at Berkeley added start-and end-of-word metacharacters and renamed -y to -i. Unfortunately, you still couldn’t apply star or the other quantifiers to a parenthesized expression.

Egrep evolves

By this time, Alfred Aho (also at AT&T Bell Labs) had written egrep, which provided most of the richer set of metacharacters described in Chapter 1. More importantly, he implemented them in a completely different (and generally better) way. Not only were ⌈+⌋ and ⌈?⌋ added, but they could be applied to parenthesized expressions, greatly increasing egrep expressive power.

Alternation was added as well, and the line anchors were upgraded to “first-class” status so that you could use them almost anywhere in your regex. However, egrep had problems as well—sometimes it would find a match but not display the result, and it didn’t have some useful features that are now popular. Nevertheless, it was a vastly more useful tool.

Other species evolve

At the same time, other programs such as awk, lex, and sed, were growing and changing at their own pace. Often, developers who liked a feature from one program tried to add it to another. Sometimes, the result wasn’t pretty. For example, if support for plus was added to grep, + by itself couldn’t be used because grep had a long history of a raw ‘+’ not being a metacharacter, and suddenly making it one would have surprised users. Since ‘\+’ was probably not something a grep user would have otherwise normally typed, it could safely be subsumed as the “one or more” metacharacter.

Sometimes new bugs were introduced as features were added. Other times, added features were later removed. There was little to no documentation for the many subtle points that round out a tool’s flavor, so new tools either made up their own style, or attempted to mimic “what seemed to work” with other tools.

Multiply that by the passage of time and numerous programmers, and the result is general confusion (particularly when you try to deal with everything at once).†

POSIX—An attempt at standardization

POSIX, short for Portable Operating System Interface, is a wide-ranging standard put forth in 1986 to ensure portability across operating systems. Several parts of this standard deal with regular expressions and the traditional tools that use them, so it’s of some interest to us. None of the flavors covered in this book, however, strictly adhere to all the relevant parts. In an effort to reorganize the mess that regular expressions had become, POSIX distills the various common flavors into just two classes of regex flavor, Basic Regular Expressions (BREs), and Extended Regular Expressions (EREs). POSIX programs then support one flavor or the other. Table 3-1 on the next page summarizes the metacharacters in the two flavors.

One important feature of the POSIX standard is the notion of a locale, a collection of settings that describe language and cultural conventions for such things as the display of dates, times, and monetary values, the interpretation of characters in the active encoding, and so on. Locales aim to allow programs to be internationalized. They are not a regex-specific concept, although they can affect regular-expression use. For example, when working with a locale that describes the Latin-1 encoding (also called “ISO-8859-1”), à and À (characters with ordinal values 224 and 160, respectively) are considered “letters,” and any application of a regex that ignores capitalization would know to treat them as identical.

Table 3-1: Overview of POSIX Regex Flavors

Regex feature |

BERs |

EREs |

dot, |

|

|

“any number” quantifier |

* |

* |

|

|

+ ? |

range quantifier |

|

{min, max} |

grouping |

\(...\) |

(...) |

can apply quantifiers to parentheses |

|

|

backreferences |

|

|

alternation |

|

|

Another example is \w, commonly provided as a shorthand for a “word-constituent character” (ostensibly, the same as [a-zA-Z0-9_] in many flavors). This feature is not required by POSIX, but it is allowed. If supported, \w would know to allow all letters and digits defined in the locale, not just those in ASCII.

Note, however, that the need for this aspect of locales is mostly alleviated when working with tools that support Unicode. Unicode is discussed further beginning on page 106.

Henry Spencer’s regex package

Also first appearing in 1986, and perhaps of more importance, was the release by Henry Spencer of a regex package, written in C, which could be freely incorporated by others into their own programs — a first at the time. Every program that used Henry’s package — and there were many — provided the same consistent regex flavor unless the program’s author went to the explicit trouble to change it.

Perl evolves

At about the same time, Larry Wall started developing a tool that would later become the language Perl. He had already greatly enhanced distributed software development with his patch program, but Perl was destined to have a truly monumental impact.

Larry released Perl Version 1 in December 1987. Perl was an immediate hit because it blended so many useful features of other languages, and combined them with the explicit goal of being, in a day-to-day practical sense, useful.

One immediately notable feature was a set of regular expression operators in the tradition of the specialty tools sed and awk—a first for a general scripting language. For the regular expression engine, Larry borrowed code from an earlier project, his news reader rn (which based its regular expression code on that in James Gosling’s Emacs).† The regex flavor was considered powerful by the day’s standards, but was not nearly as full-featured as it is today. Its major drawbacks were that it supported at most nine sets of parentheses, and at most nine alternatives with ⌈|⌋, and worst of all, ⌈|⌋ was not allowed within parentheses. It did not support case-insensitive matching, nor allow \w within a class (it didn’t support \s or \d anywhere). It didn’t support the {min, max} range quantifier.

Perl 2 was released in June 1988. Larry had replaced the regex code entirely, this time using a greatly enhanced version of the Henry Spencer package mentioned in the previous section. You could still have at most nine sets of parentheses, but now you could use ⌈|⌋ inside them. Support for \d and \s was added, and support for \w was changed to include an underscore, since then it would match what characters were allowed in a Perl variable name. Furthermore, these metacharacters were now allowed inside classes. (Their opposites, \D, \W, and \S, were also newly supported, but weren’t allowed within a class, and in any case sometimes didn’t work correctly.) Importantly, the /i modifier was added, so you could now do case-insensitive matching.

Perl 3 came out more than a year later, in October 1989. It added the /e modifier, which greatly increased the power of the replacement operator, and fixed some backreference-related bugs from the previous version. It added the ⌈{min, max}⌋ range quantifiers, although unfortunately, they didn’t always work quite right. Worse still, with Version 3, the regular expression engine couldn’t always work with 8-bit data, yielding unpredictable results with non-ASCII input.

Perl 4 was released a year and a half later, in March 1991, and over the next two years, it was improved until its last update in February 1993. By this time, the bugs were fixed and restrictions expanded (you could use \D and such within character classes, and a regular expression could have virtually unlimited sets of parentheses). Work also went into optimizing how the regex engine went about its task, but the real breakthrough wouldn’t happen until 1994.

Perl 5 was officially released in October 1994. Overall, Perl had undergone a massive overhaul, and the result was a vastly superior language in every respect. On the regular-expression side, it had more internal optimizations, and a few metacharacters were added (including \G, which increased the power of iterative matches  130), non-capturing parentheses (

130), non-capturing parentheses ( 45), lazy quantifiers (

45), lazy quantifiers ( 141), look-ahead (

141), look-ahead ( 60), and the

60), and the /x modifier† ( 72).

72).

More important than just for their raw functionality, these “outside the box” modifications made it clear that regular expressions could really be a powerful programming language unto themselves, and were still ripe for further development.

The newly-added non-capturing parentheses and lookahead constructs required a way to be expressed. None of the grouping pairs—(...), [...], <...>, or {...} — were available to be used for these new features, so Larry came up with the various ‘(?’ notations we use today. He chose this unsightly sequence because it previously would have been an illegal combination in a Perl regex, so he was free to give it meaning. One important consideration Larry had the foresight to recognize was that there would likely be additional functionality in the future, so by restricting what was allowed after the ‘(?’ sequences, he was able to reserve them for future enhancements.

Subsequent versions of Perl grew more robust, with fewer bugs, more internal optimizations, and new features. I like to believe that the first edition of this book played some small part in this, for as I researched and tested regex-related features, I would send my results to Larry and the Perl Porters group, which helped give some direction as to where improvements might be made.

New regex features added over the years include limited lookbehind ( 60), “atomic” grouping (

60), “atomic” grouping ( 139), and Unicode support. Regular expressions were brought to a new level by the addition of conditional constructs (

139), and Unicode support. Regular expressions were brought to a new level by the addition of conditional constructs ( 140), allowing you to make if-then-else decisions right there within the regular expression. And if that wasn’t enough, there are now constructs that allow you to intermingle Perl code within a regular expression, which takes things full circle (

140), allowing you to make if-then-else decisions right there within the regular expression. And if that wasn’t enough, there are now constructs that allow you to intermingle Perl code within a regular expression, which takes things full circle ( 327). The version of Perl covered in this book is 5.8.8.

327). The version of Perl covered in this book is 5.8.8.

A partial consolidation of flavors

The advances seen in Perl 5 were perfectly timed for the World Wide Web revolution. Perl was built for text processing, and the building of web pages is just that, so Perl quickly became the language for web development. Perl became vastly more popular, and with it, its powerful regular expression flavor did as well.

Developers of other languages were not blind to this power, and eventually regular expression packages that were “Perl compatible” to one extent or another were created. Among these were packages for Tcl, Python, Microsoft’s .NET suite of languages, Ruby, PHP, C/C++, and many packages for Java.

Another form of consolidation began in 1997 (coincidentally, the year the first edition of this book was published) when Philip Hazel developed PCRE, his library for Perl Compatible Regular Expressions, a high-quality regular-expression engine that faithfully mimics the syntax and semantics of Perl regular expressions. Other developers could then integrate PCRE into their own tools and languages, thereby easily providing a rich and expressive (and well-known) regex functionality to their users. PCRE is now used in popular software such as PHP, Apache Version 2, Exim, Postfix, and Nmap.†

Versions as of this book

Table 3-2 shows a few of the version numbers for programs and libraries that I talk about in the book. Older versions may well have fewer features and more bugs, while newer versions may have additional features and bug fixes (and new bugs of their own).

Table 3-2: Versions of Some Tools Mentioned in This Book

GNU awk 3.1 |

|

Procmail 3.22 |

GNU egrep/grep 2.5.1 |

.NET Framework 2.0 |

Python 2.3.5 |

GNU Emacs 21.3.1 |

PCRE 6.6 |

Ruby 1.8.4 |

flex 2.5.31 |

Perl 5.8.8 |

GNU sed 4.0.7 |

MySQL 5.1 |

PHP (preg routines) 5.1.4 / 4.4.3 |

Tcl 8.4 |

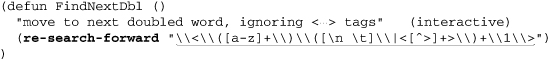

At a Glance

A chart showing just a few aspects of some common tools gives a good clue to how different things still are. Table 3-3 provides a very superficial look at a few aspects of the regex flavors of a few tools.

A chart like Table 3-3 is often found in other books to show the differences among tools. But, this chart is only the tip of the iceberg — for every feature shown, there are a dozen important issues that are overlooked.

Foremost is that programs change over time. For example, Tcl didn’t used to support backreferences and word boundaries, but now does. It first supported word boundaries with the ungainly-looking [:<:] and [:>:], and still does, although such use is deprecated in favor of its more-recently supported \m, \M, and \y (start of word boundary, end of word boundary, or either).

Along the same lines, programs such as grep and egrep, which aren’t from a single provider but rather can be provided by anyone who wants to create them, can have whatever flavor the individual author of the program wishes. Human nature being what is, each tends to have its own features and peculiarities. (The GNU versions of many common tools, for example, are often more powerful and robust than other versions.)

Table 3-3: A (Very) Superficial Look at the Flavor of a Few Common Tools

Feature |

Modern grep |

Modern egrep |

GNU Emacs |

Tcl |

Perl |

.NET |

Sun’s Java package |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

grouping |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

word boundary |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

backreferences |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Perhaps as important as the easily visible features are the many subtle (and some not-so-subtle) differences among flavors. Looking at the table, one might think that regular expressions are exactly the same in Perl, .NET, and Java, which is certainly not true. Just a few of the questions one might ask when looking at something like Table 3-3 are:

• Are star and friends allowed to quantify something wrapped in parentheses?

• Does dot match a newline? Do negated character classes match it? Do either match the NUL character?

• Are the line anchors really line anchors (i.e., do they recognize newlines that might be embedded within the target string)? Are they first-class metacharacters, or are they valid only in certain parts of the regex?

• Are escapes recognized in character classes? What else is or isn’t allowed within character classes?

• Are parentheses allowed to be nested? If so, how deeply (and how many parentheses are even allowed in the first place)?

• If backreferences are allowed, when a case-insensitive match is requested, do backreferences match appropriately? Do backreferences “behave” reasonably in fringe situations?

• Are octal escapes such as \123 allowed? If so, how do they reconcile the syntactic conflict with backreferences? What about hexadecimal escapes? Is it really the regex engine that supports octal and hexadecimal escapes, or is it some other part of the utility?

• Does \w match only alphanumerics, or additional characters as well? (Among the programs shown supporting \w in Table 3-3, there are several different interpretations). Does \w agree with the various word-boundary metacharacters on what does and doesn’t constitute a “word character”? Do they respect the locale, or understand Unicode?

Many issues must be kept in mind, even with a tidy little summary like Table 3-3 as a superficial guide. If you realize that there’s a lot of dirty laundry behind that nice façade, it’s not too difficult to keep your wits about you and deal with it.

As mentioned at the start of the chapter, much of this is just superficial syntax, but many issues go deeper. For example, once you understand that something such as ⌈(Jul|July)⌋ in egrep needs to be written as ⌈\(Jul\July)⌋ for GNU Emacs, you might think that everything is the same from there, but that’s not always the case. The differences in the semantics of how a match is attempted (or, at least, how it appears to be attempted) is an extremely important issue that is often overlooked, yet it explains why these two apparently identical examples would actually end up matching differently: one always matches ‘Jul’, even when applied to ‘July’. Those very same semantics also explain why the opposite, ⌈(July|Jul)⌋ and ⌈\(July\|Jul\)⌋, do match the same text. Again, the entire next chapter is devoted to understanding this.

Of course, what a tool can do with a regular expression is often more important than the flavor of its regular expressions. For example, even if Perl’s expressions were less powerful than egrep’s, Perl’s flexible use of regexes provides for more raw usefulness. We’ll look at a lot of individual features in this chapter, and in depth at a few languages in later chapters.

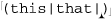

Care and Handling of Regular Expressions

The second concern outlined at the start of the chapter is the syntactic packaging that tells an application “Hey, here’s a regex, and this is what I want you to do with it.” egrep is a simple example because the regular expression is expected as an argument on the command line. Any extra syntactic sugar, such as the single quotes I used throughout the first chapter, are needed only to satisfy the command shell, not egrep. Complex systems, such as regular expressions in programming languages, require more complex packaging to inform the system exactly what the regex is and how it should be used.

The next step, then, is to look at what you can do with the results of a match. Again, egrep is simple in that it pretty much always does the same thing (displays lines that contain a match), but as the previous chapter began to show, the real power is in doing much more interesting things. The two basic actions behind those interesting things are match (to check if a regex matches in a string, and to perhaps pluck information from the string), and search and replace, to modify a string based upon a match. There are many variations of these actions, and many variations on how individual languages let you perform them.

In general, a programming language can take one of three approaches to regular expressions: integrated, procedural, and object-oriented. With the first, regular expression operators are built directly into the language, as with Perl. In the other two, regular expressions are not part of the low-level syntax of the language. Rather, normal strings are passed as arguments to normal functions, which then interpret the strings as regular expressions. Depending on the function, one or more regex-related actions are then performed. One derivative or another of this style is use by most (non-Perl) languages, including Java, the .NET languages, Tcl, Python, PHP, Emacs lisp, and Ruby.

Integrated Handling

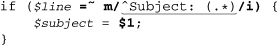

We’ve already seen a bit of Perl’s integrated approach, such as this example from page 55:

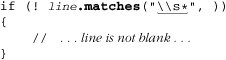

Here, for clarity, variable names I’ve chosen are in italic, while the regex-related items are bold, and the regular expression itself is underlined. We know that Perl applies the regular expression ⌈^Subject: • (.*)⌋ to the text held in $line, and if a match is found, executes the block of code that follows. In that block, the variable $1 represents the text matched within the regular expression’s parentheses, and this gets assigned to the variable $subject.

Another example of an integrated approach is when regular expressions are part of a configuration file, such as for procmail (a Unix mail-processing utility.) In the configuration file, regular expressions are used to route mail messages to the sections that actually process them. It’s even simpler than with Perl, since the operands (the mail messages) are implicit.

What goes on behind the scenes is quite a bit more complex than these examples show. An integrated approach simplifies things to the programmer because it hides in the background some of the mechanics of preparing the regular expression, setting up the match, applying the regular expression, and deriving results from that application. Hiding these steps makes the normal case very easy to work with, but as we’ll see later, it can make some cases less efficient or clumsier to work with.

But, before getting into those details, let’s uncover the hidden steps by looking at the other methods.

Procedural and Object-Oriented Handling

Procedural and object-oriented handling are fairly similar. In either case, regex functionality is provided not by built-in regular-expression operators, but by normal functions (procedural) or constructors and methods (object-oriented). In this case, there are no true regular-expression operands, but rather normal string arguments that the functions, constructors, or methods choose to interpret as regular expressions.

The next sections show examples in Java, VB.NET, PHP, and Python.

Regex handling in Java

Let’s look at the equivalent of the “Subject” example in Java, using Sun’s java.util.regex package. (Java is covered in depth in Chapter 8.)

Variable names I’ve chosen are again in italic, the regex-related items are bold, and the regular expression itself is underlined. Well, to be precise, what’s underlined is a normal string literal to be interpreted as a regular expression.

This example shows an object-oriented approach with regex functionality supplied by two classes in Sun’s java.util.regex package: Pattern and Matcher. The actions performed are:

Inspect the regular expression and compile it into an internal form that matches in a case-insensitive manner, yielding a “

Inspect the regular expression and compile it into an internal form that matches in a case-insensitive manner, yielding a “Pattern” object.

Associate it with some text to be inspected, yielding a “

Associate it with some text to be inspected, yielding a “Matcher” object.

Actually apply the regex to see if there is a match in the previously-associated text, and let us know the result.

Actually apply the regex to see if there is a match in the previously-associated text, and let us know the result.

If there is a match, make available the text matched within the first set of capturing parentheses.

If there is a match, make available the text matched within the first set of capturing parentheses.

Actions similar to these are required, explicitly or implicitly, by any program wishing to use regular expressions. Perl hides most of these details, and this Java implementation usually exposes them.

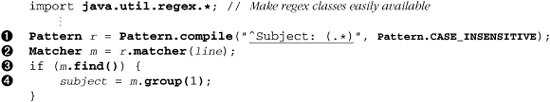

A procedural example. Java does, however, provide a few procedural-approach “convenience functions” that hide much of the work. Instead of you having to first create a regex object and then use that object’s methods to apply it, these static functions create a temporary object for you, throwing it away once done.

Here’s an example showing the Pattern.matches (...) function:

This function wraps an implicit ⌈^...$⌋ around the regex, and returns a Boolean indicating whether it can match the input string. It’s common for a package to provide both procedural and object-oriented interfaces, just as Sun did here. The differences between them often involve convenience (a procedural interface can be easier to work with for simple tasks, but more cumbersome for complex tasks), functionality (procedural interfaces generally have less functionality and options than their object-oriented counterparts), and efficiency (in any given situation, one is likely to be more efficient than the other — a subject covered in detail in Chapter 6).

Sun has occasionally integrated regular expressions into other parts of the language. For example, the previous example can be written using the string class’s matches method:

Again, this is not as efficient as a properly-applied object-oriented approach, and so is not appropriate for use in a time-critical loop, but it’s quite convenient for “casual” use.

Regex handling in VB and other .NET languages

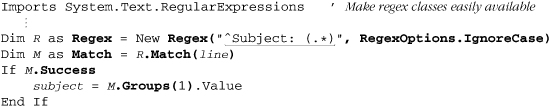

Although all regex engines perform essentially the same basic tasks, they differ in how those tasks and services are exposed to the programmer, even among implementations sharing the same approach. Here’s the “Subject” example in VB.NET (.NET is covered in detail in Chapter 9):

Overall, this is generally similar to the Java example, except that .NET combines steps  and

and  , and requires an extra

, and requires an extra Value in  . Why the differences? One is not inherently better or worse — each was just chosen by the developers who thought it was the best approach at the time. (More on this in a bit.)

. Why the differences? One is not inherently better or worse — each was just chosen by the developers who thought it was the best approach at the time. (More on this in a bit.)

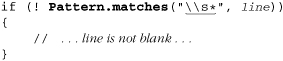

.NET also provides a few procedural-approach functions. Here’s one to check for a blank line:

If Not Regex.IsMatch(Line, "^\s*$") Then

' ... line is not blank ...

End If

Unlike Sun’s Pattern.matches function, which adds an implicit ⌈^...$⌋ around the regex, Microsoft chose to offer this more general function. It’s just a simple wrapper around the core objects, but it involves less typing and variable corralling for the programmer, at only a small efficiency expense.

Regex handling in PHP

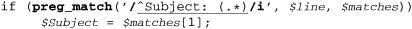

Here’s the ⌈Subject⌋ example with PHP’s preg suite of regex functions, which take a strictly procedural approach. (PHP is covered in detail in Chapter 10.)

Regex handling in Python

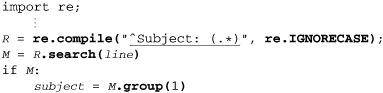

As a final example, let’s look at the ⌈Subject⌋ example in Python, which uses an object-oriented approach:

Again, this looks very similar to what we’ve seen before.

Why do approaches differ?

Why does one language do it one way, and another language another? There may be language-specific reasons, but it mostly depends on the whim and skills of the engineers that develop each package. There are, for example, many different regex packages for Java, each written by someone who wanted the functionality that Sun didn’t originally provide. Each has its own strengths and weaknesses, but it’s interesting to note that they all provide their functionality in quite different ways from each other, and from what Sun eventually decided to implement themselves.

Another clear example of the differences is PHP, which includes three wholly unrelated regex engines, each utilized by its own suite of functions. At different times, PHP developers, dissatisfied with the original functionality, updated the PHP core by adding a new package and a corresponding suite of interface functions. (The “preg” suite is generally considered superior overall, and is what this book covers.)

A Search-and-Replace Example

The “Subject” example is simple, so the various approaches really don’t have an opportunity to show how different they really are. In this section, we’ll look at a somewhat more complex example, further highlighting the different designs.

In the previous chapter ( 73), we saw this Perl search and replace to “linkize” an email address:

73), we saw this Perl search and replace to “linkize” an email address:

$text =~ s{

\b

# Capture the address to $1 ...

(

\w[-.\w]* # username

@

[-\w]+(\.[-\w]+)*\.(com|edu|info) # hostname

)

\b

}{<a href="mailto:$1">$1</a>}gix;

Perl’s search-and-replace operator works on a string “in place,” meaning that the variable being searched is modified when a replacement is done. Most other languages do replacements on a copy of the text being searched. This is quite convenient if you don’t want to modify the original, but you must assign the result back to the same variable if you want an in-place update. Some examples follow.

Search and replace in Java

Here’s the search-and-replace example with Sun’s java.util.regex package:

import java.util.regex.*; // Make regex classes easily available

.

.

.

Pattern r = Pattern.compile(

"\\b \n"+

"# Capture the address to $1 ... \n"+

"( \n"+

" \\w[-.\\w]* # username \n"+

" @ \n"+

" [-\\w]+(\\.[-\\w]+)*\\.(com|edu|info) # hostname \n"+

") \n"+

"\\b \n",

Pattern.CASE_INSENSITIVE|Pattern.COMMENTS);

Matcher m = r.matcher(text);

text = m.replaceAll("<a href=\"mailto:$1\">$1</a>");

Note that each ‘\’ wanted in a string’s value requires ‘\\’ in the string literal, so if you’re providing regular expressions via string literals as we are here, ⌈\w⌋ requires ‘\\w’. For debugging, System.out.println(r.pattern()) can be useful to display the regular expression as the regex function actually received it. One reason that I include newlines in the regex is so that it displays nicely when printed this way. Another reason is that each ‘#’ introduces a comment that goes until the next newline; so, at least some of the newlines are required to restrain the comments.

Perl uses notations like /g, /i, and /x to signify special conditions (these are the modifiers for replace all, case-insensitivity, and free formatting modes  135), but

135), but java.util.regex uses either different functions (replaceAll versus replace) or flag arguments passed to the function (e.g., Pattern.CASE_INSENSITIVE and Pattern.COMMENTS).

Search and replace in VB.NET

The general approach in VB.NET is similar:

Dim R As Regex = New Regex _

("\b " & _

"(?# Capture the address to $1 ... ) " & _

"( " & _

" \w[-.\w]* (?# username) " & _

" @ " & _

" [-\w]+(\.[-\w]+)*\.(com|edu|info) (?# hostname) " & _

") " & _

"\b ", _

RegexOptions.IgnoreCase Or RegexOptions.IgnorePatternWhitespace)

text = R.Replace(text, "<a href=""mailto:${1}" ">${1}</a>")

Due to the inflexibility of VB.NET string literals (they can’t span lines, and it’s difficult to get newline characters into them), longer regular expressions are not as convenient to work with as in some other languages. On the other hand, because ‘\’ is not a string metacharacter in VB.NET, the expression can be less visually cluttered. A double quote is a metacharacter in VB.NET string literals: to get one double quote into the string’s value, you need two double quotes in the string literal.

Search and replace in PHP

Here’s the search-and-replace example in PHP:

$text = preg_replace('{

\b

# Capture the address to $1 ...

(

\w[-.\w]* # username

@

[-\w]+(\.[-\w]+)*\.(com|edu|info) # hostname

)

\b

}ix' ,

'<a href="mailto:$1">$1</a>', # replacement string

$text);

As in Java and VB.NET, the result of the search-and-replace action must be assigned back into $text, but otherwise this looks quite similar to the Perl example.

Search and Replace in Other Languages

Let’s quickly look at a few examples from other traditional tools and languages.

Awk

Awk uses an integrated approach, /regex/, to perform a match on the current input line, and uses “var ~ ...” to perform a match on other data. You can see where Perl got its notation for matching. (Perl’s substitution operator, however, is modeled after sed’s.) The early versions of awk didn’t support a regex substitution, but modern versions have the sub(...) operator:

sub(/mizpel/, "misspell")

This applies the regex ⌈mizpel⌋ to the current line, replacing the first match with misspell. Note how this compares to Perl’s (and sed’s) s/mizpel/misspell/.

To replace all matches within the line, awk does not use any kind of /g modifier, but a different operator altogether: gsub(/mizpel/, "misspell").

Tcl

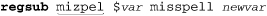

Tcl takes a procedural approach that might look confusing if you’re not familiar with Tcl’s quoting conventions. To correct our misspellings with Tcl, we might use:

This checks the string in the variable var, and replaces the first match of ⌈mizpel⌋ with misspell, putting the now possibly-changed version of the original string into the variable newvar (which is not written with a dollar sign in this case). Tcl expects the regular expression first, the target string to look at second, the replacement string third, and the name of the target variable fourth. Tcl also allows optional flags to its regsub, such as -all to replace all occurrences of the match instead of just the first:

Also, the -nocase option causes the regex engine to ignore the difference between uppercase and lowercase characters (just like egrep’s -i flag, or Perl’s /i modifier).

GNU Emacs

The powerful text editor GNU Emacs (just “Emacs” from here on) supports elisp (Emacs lisp) as a built-in programming language. It provides a procedural regex interface with numerous functions providing various services. One of the main ones is re-search-forward, which accepts a normal string as an argument and interprets it as a regular expression. It then searches the text starting from the “current position,” stopping at the first match, or aborting if no match is found.

(re-search-forward is what’s executed when one invokes a “regexp search” while using the editor.)

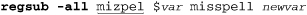

As Table 3-3 ( 92) shows, Emacs’ flavor of regular expressions is heavily laden with backslashes. For example,

92) shows, Emacs’ flavor of regular expressions is heavily laden with backslashes. For example, ⌈\<\([a-z]+\)\([\n•\t]\|<[^>]+>\)+\i\>⌋ is an expression for finding doubled words, similar to the problem in the first chapter. We couldn’t use this regex directly, however, because the Emacs regex engine doesn’t understand \t and \n. Emacs double-quoted strings, however, do, and convert them to the tab and newline values we desire before the regex engine ever sees them. This is a notable benefit of using normal strings to provide regular expressions. One drawback, particularly with elisps regex flavor’s propensity for backslashes, is that regular expressions can end up looking like a row of scattered toothpicks. Here’s a small function for finding the next doubled word:

Combine that with (define-key global-map "\C-x\C-d" 'FindNextDbl) and you can use the “Control-x Control-d" sequence to quickly search for doubled words.

Care and Handling: Summary

As you can see, there’s a wide range of functionalities and mechanics for achieving them. If you are new to these languages, it might be quite confusing at this point. But, never fear! When trying to learn any one particular tool, it is a simple matter to learn its mechanisms.

Strings, Character Encodings, and Modes

Before getting into the various type of metacharacters generally available, there are a number of global issues to understand: regular expressions as strings, character encodings, and match modes.

These are simple concepts, in theory, and in practice, some indeed are. With most, though, the small details, subtleties, and inconsistencies among the various implementations sometimes makes it hard to pin down exactly how they work in practice. The next sections cover some of the common and sometimes complex issues you’ll face.

Strings as Regular Expressions

The concept is simple: in most languages except Perl, awk, and sed, the regex engine accepts regular expressions as normal strings — strings that are often provided as string literals like "^From: (.*) “. What confuses many, especially early on, is the need to deal with the language’s own string-literal metacharacters when composing a string to be used as a regular expression.

Each language’s string literals have their own set of metacharacters, and some languages even have more than one type of string literal, so there’s no one rule that works everywhere, but the concepts are all the same. Many languages’ string literals recognize escape sequences like \t, \\, and \x2A, which are interpreted while the string’s value is being composed. The most common regex-related aspect of this is that each backslash in a regex requires two backslashes in the corresponding string literal. For example, "\\n" is required to get the regex ⌈\n⌋.

If you forget the extra backslash for the string literal and use "\n", with many languages you’d then get ⌈ ⌋, which just happens to do exactly the same thing as ⌈

⌋, which just happens to do exactly the same thing as ⌈\n⌋. Well, actually, if the regex is in an /x type of free-spacing mode, ⌈ ⌋ becomes empty, while ⌈

⌋ becomes empty, while ⌈\n⌋ remains a regex to match a newline. So, you can get bitten if you forget. Table 3-4 below shows a few examples involving \t and \x2A (2A is the ASCII code for ‘*’.) The second pair of examples in the table show the unintended results when the string-literal metacharacters aren’t taken into account.

Table 3-4: A Few String-Literal Examples

String literal |

|

|

|

|

String value |

|

|

|

|

As regex |

|

⌈ |

⌈ |

⌈ |

Matches |

tab or star |

tab or star |

any number tabs |

tab followed by star |

In |

tab or star |

tab or star |

error |

tab followed by star |

Every language’s string literals are different, but some are quite different in that ‘\’ is not a metacharacter. For example. VB.NET’s string literals have only one metacharacter, a double quote. The next sections look at the details of several common languages’ string literals. Whatever the individual string-literal rules, the question on your mind when using them should be “what will the regular expression engine see after the language’s string processing is done?”

Strings in Java

Java string literals are like those presented in the introduction, in that they are delimited by double quotes, and backslash is a metacharacter. Common combinations such as '\t' (tab), '\n' (newline), ‘\\’ (literal backslash), etc. are supported. Using a backslash in a sequence not explicitly supported by literal strings results in an error.

Strings in VB.NET

String literals in VB.NET are also delimited by double quotes, but otherwise are quite different from Java’s. VB.NET strings recognize only one metasequence: a pair of double quotes in the string literal add one double quote into the string’s value. For example, "he said ""hi""\." results in ⌈he said "hi"\.⌋

Strings in C#

Although all the languages of Microsoft’s .NET Framework share the same regular expression engine internally, each has its own rules about the strings used to create the regular-expression arguments. We just saw Visual Basic’s simple string literals. In contrast, Microsoft’s C# language has two types of string literals.

C# supports the common double-quoted string similar to the kind discussed in this section’s introduction, except that “” rather than \” adds a double quote into the string’s value. However, C# also supports “verbatim strings,” which look like @”···“. Verbatim strings recognize no backslash sequences, but instead, just one special sequence: a pair of double quotes inserts one double quote into the target value. This means that you can use "\\t\\x2A" or @"\t\x2A" to create the ⌈\t\x2A⌋ example. Because of this simpler interface, one would tend to use these @“···” verbatim strings for most regular expressions.

Strings in PHP

PHP also offers two types of strings, yet both differ from either of C#’s types. With PHP’s double-quoted strings, you get the common backslash sequences like '\n', but you also get variable interpolation as we’ve seen with Perl ( 77), and also the special sequence

77), and also the special sequence {...} which inserts into the string the result of executing the code between the braces.

These extra features of PHP double-quoted strings mean that you’ll tend to insert extra backslashes into regular expressions, but there’s one additional feature that helps mitigate that need. With Java and C# string literals, a backslash sequence that isn’t explicitly recognized as special within strings results in an error, but with PHP double-quoted strings, such sequences are simply passed through to the string’s value. PHP strings recognize \t, so you still need "\\t" to get ! \t", but if you use "\w", you’ll get ⌈\w⌋ because \w is not among the sequences that PHP double-quoted strings recognize. This extra feature, while handy at times, does add yet another level of complexity to PHP double-quoted strings, so PHP also offers its simpler single-quoted strings.

PHP single-quoted strings offer uncluttered strings on the order of VB.NET’s strings, or C#’s @”...” strings, but in a slightly different way. Within a PHP single-quoted string, the sequence \’ includes one single quote in the target value, and \\ includes a backslash. Any other character (including any other backslash) is not considered special, and is copied to the target value verbatim. This means that ‘\t\x2A' creates ⌈\t\x2A⌋. Because of this simplicity, single-quoted strings are the most convenient for PHP regular expressions.

PHP single-quoted strings are discussed further in Chapter 10 ( 445).

445).

Strings in Python

Python offers a number of string-literal types. You can use either single quotes or double quotes to create strings, but unlike PHP, there is no difference between the two. Python also offers “triple-quoted” strings of the form ‘ ’ ‘...’ ‘ ’ and ““”...”””, which are different in that they may contain unescaped newlines. All four types offer the common backslash sequences such as \n, but have the same twist that PHP has in that unrecognized sequences are left in the string verbatim. Contrast this with Java and C# strings, for which unrecognized sequences cause an error.

Like PHP and C#, Python offers a more literal type of string, its “raw string.” Similar to C#’s @”...” notation, Python uses an 'r' before the opening quote of any of the four quote types. For example, r"\t\x2A" yields ⌈\t\x2A⌋. Unlike the other languages, though, with Python’s raw strings, all backslashes are kept in the string, including those that escape a double quote (so that the double quote can be included within the string): r"he said \"hi\"\." results in ⌈he said \"hi\"\.⌋. This isn’t really a problem when using strings for regular expressions, since Python’s regex flavor treats ⌈\”⌋ as ⌈"⌋, but if you like, you can bypass the issue by using one of the other types of raw quoting: r'he said "hi"\.’

Strings in Tcl

Tcl is different from anything else in that it doesn’t really have string literals at all. Rather, command lines are broken into “words,” which Tcl commands can then consider as strings, variable names, regular expressions, or anything else as appropriate to the command. While a line is being parsed into words, common backslash sequences like \n are recognized and converted, and backslashes in unknown combinations are simply dropped. You can put double quotes around the word if you like, but they aren’t required unless the word has whitespace in it.

Tcl also has a raw literal type of quoting similar to Python’s raw strings, but Tcl uses braces, {...}, instead of r'---'. Within the braces, everything except a back-slash-newline combination is kept as-is, so you can use {\t\x2A} to get ⌈\t\x2A⌋.

Within the braces, you can have additional sets of braces so long as they nest. Those that don’t nest must be escaped with a backslash, although the backslash does remain in the string’s value.

Regex literals in Perl

In the Perl examples we’ve seen so far in this book, regular expressions have been provided as literals (“regular-expression literals”). As it turns out, you can also provide them as strings. For example:

$str =~ m/(\w+)/;

can also be written as:

$regex = '(\w+)';

$str =~ $regex;

or perhaps:

$regex = "(\\w+)";

$str =~ $regex;

(Note that using a string can be much less efficient  242, 348.)

242, 348.)

When a regex is provided as a literal, Perl provides extra features that the regular-expression engine itself does not, including:

• The interpolation of variables (incorporating the contents of a variable as part of the regular expression).

• Support for a literal-text mode via ⌈\Q...\E⌋ ( 113).

113).

• Optional support for a \N{name} construct, which allows you to specify characters via their official Unicode names. For example, you can match ‘¡Hola!’ with ⌈\N{INVERTED EXCLAMATION MARK}Hola!⌋.

In Perl, a regex literal is parsed like a very special kind of string. In fact, these features are also available with Perl double-quoted strings. The point to be aware of is that these features are not provided by the regular-expression engine. Since the vast majority of regular expressions used within Perl are as regex literals, most think that ⌈\Q...\E⌋ is part of Perl’s regex language, but if you ever use regular expressions read from a configuration file (or from the command line, etc.), it’s important to know exactly what features are provided by which aspect of the language.

More details are available in Chapter 7, starting on page 288.

Character-Encoding Issues

A character encoding is merely an explicit agreement on how bytes with various values should be interpreted. A byte with the decimal value 110 is interpreted as the character 'n' with the ASCII encoding, but as ‘>’ with EBCDIC. Why? Because that’s what someone decided — there’s nothing intrinsic about those values and characters that makes one encoding better than the other. The byte is the same; only the interpretation changes.

ASCII defines characters for only half the values that a byte can hold. The ISO-8859-1 encoding (commonly called “Latin-1”) fills in the blank spots with accented characters and special symbols, making an encoding usable by a larger set of languages. With this encoding, a byte with a decimal value of 234 is to be interpreted as ê, instead of being undefined as it is with ASCII.

The important question for us is: when we intend for a certain set of bytes to be considered in the light of a particular encoding, does the program actually do so? For example, if we have four bytes with the values 234, 116, 101, and 115 that we intend to be considered as Latin-1 (representing the French word “êtes”), we’d like the regex ⌈^"\w+$⌋ or ⌈^\b⌋ to match. This happens if the program’s \w and \b know to treat those bytes as Latin-1 characters, and probably doesn’t happen otherwise.

Richness of encoding-related support

There are many encodings. When you’re concerned with a particular one, important questions you should ask include:

• Does the program understand this encoding?

• How does it know to treat this data as being of that encoding?

• How rich is the regex support for this encoding?

The richness of an encoding’s support has several important issues, including:

• Are characters that are encoded with multiple bytes recognized as such? Do expressions like dot and [^x] match single characters, or single bytes?

• Do \w, \d, \s, \b, etc., properly understand all the characters in the encoding? For example, even if ê is known to be a letter, do \w and \b treat it as such?

• Does the program try to extend the interpretation of class ranges? Is ê matched by [a-z]?

• Does case-insensitive matching work properly with all the characters? For example, are ê and Ê treated as being equal?

Sometimes things are not as simple as they might seem. For example, the \b of Sun’s java.util.regex package properly understands all the word-related characters of Unicode, but its \w does not (it understands only basic ASCII). We’ll see more examples of this later in the chapter.

Unicode

There seems to be a lot of misunderstanding about just what “Unicode” is. At the most basic level, Unicode is a character set or a conceptual encoding— a logical mapping between a number and a character. For example, the Korean character  is mapped to the number 49,333. The number, called a code point, is normally shown in hexadecimal, with “U

is mapped to the number 49,333. The number, called a code point, is normally shown in hexadecimal, with “U+” prepended. 49,333 in hex is C0B5, so  is referred to as U

is referred to as U+C0B5. Included as part of the Unicode concept is a set of attributes for many characters, such as “3 is a digit” and “É is an uppercase letter whose lowercase equivalent is é.”

At this level, nothing is yet said about just how these numbers are actually encoded as data on a computer. There are a variety of ways to do so, including the UCS-2 encoding (all characters encoded with two bytes), the UCS-4 encoding (all characters encoded with four bytes), UTF-16 (most characters encoded with two bytes, but some with four), and the UTF-8 encoding (characters encoded with one to six bytes). Exactly which (if any) of these encodings a particular program uses internally is usually not a concern to the user of the program. The user’s concern is usually limited to how to convert external data (such as data read from a file) from a known encoding (ASCII, Latin-1, UTF-8, etc.) to whatever the program uses. Programs that work with Unicode usually supply various encoding and decoding routines for doing the conversion.

Regular expressions for programs that work with Unicode often support a \unum metasequence that can be used to match a specific Unicode character ( 117). The number is usually given as a four-digit hexadecimal number, so

117). The number is usually given as a four-digit hexadecimal number, so \uC0B5 matches  . It’s important to realize that

. It’s important to realize that \uC0B5 is saying “match the Unicode character U+C0B5,” and says nothing about what actual bytes are to be compared, which is dependent on the particular encoding used internally to represent Unicode code points. If the program happens to use UTF-8 internally, that character happens to be represented with three bytes. But you, as someone using the Unicode-enabled program, don’t normally need to care. (Sometimes you do, as with PHP’s preg suite and its u pattern modifier;  447).

447).

There are a few related issues that you may need to be aware of...

Characters versus combining-character sequences

What a person considers a “character” doesn’t always agree with what Unicode or a Unicode-enabled program (or regex engine) considers to be a character. For example, most would consider à to be a single character, but in Unicode, it can be composed of two code points, U+0061 (a) combined with the grave accent U+0300(’). Unicode offers a number of combining characters that are intended to follow (and be combined with) a base character. This makes things a bit more complex for the regular-expression engine — for example, should dot match just one code point, or the entire U+0061 plus U+0300 combination?

In practice, it seems that many programs treat “character” and “code point” as synonymous, which means that dot matches each code point individually, whether it is base character or one of the combining characters. Thus, à (U+0061 plus U+0300) is matched by ⌈^..$⌋, and not by ⌈^.$⌋.

Perl and PCRE (and by extension, PHP’s preg suite) support the \X metasequence, which fulfills what many might expect from dot (“match one character”) in that it matches a base character followed by any number of combining characters. See more on page 120.

It’s important to keep combining characters in mind when using a Unicode-enabled editor to input Unicode characters directly into regular-expressions. If an accented character, say Å, ends up in a regular expression as ‘A’ plus ‘°,’ it likely can’t match a string containing the single code point version of Å (single code point versions are discussed in the next section). Also, it appears as two distinct characters to the regular-expression engine itself, so specifying ⌈[...Å...]⌋ adds the two characters to the class, just as the explicit ⌈[...A °...]⌋ does.

In a similar vein, if a two-code-point character like Å is followed by a quantifier, the quantifier actually applies only to the second code point, just as with an explicit ⌈A ° +⌋.

Multiple code points for the same character

In theory, Unicode is supposed to be a one-to-one mapping between code points and characters, but there are many situations where one character can have multiple representations. The previous section notes that à is U+0061 followed by U+0300. It is, however, also encoded separately as the single code point U+00E0. Why is it encoded twice? To maintain easier conversion between Unicode and Latin-1. If you have Latin-1 text that you convert to Unicode, à will likely be converted to U+00E0. But, it could well be converted to a U+0061, U+0300 combination. Often, there’s nothing you can do to automatically allow for these different ways of expressing characters, but Sun’s java.util.regex package provides a special match option, CANON_EQ, which causes characters that are “canonically equivalent” to match the same, even if their representations in Unicode differ ( 368).

368).

Somewhat related is that different characters can look virtually the same, which could account for some confusion at times among those creating the text you’re tasked to check. For example, the Roman letter I (U+0049) could be confused with I, the Greek letter Iota (U+0399). Add dialytika to that to get Ï or Ï, and it can be encoded four different ways (U+00CF; U+03AA; U+0049 U+0308; U+0399 U+0308). This means that you might have to manually allow for these four possibilities when constructing a regular expression to match Ï. There are many examples like this.

Also plentiful are single characters that appear to be more than one character. For example, Unicode defines a character called "SQUARE HZ" (U+3390), which appears as Hz. This looks very similar to the two normal characters Hz (U+0048 U+007A).

Although the use of special characters like Hz is minimal now, their adoption over the coming years will increase the complexity of programs that scan text, so those working with Unicode would do well to keep these issues in the back of their mind. Along those lines, one might already expect, for example, the need to allow for both normal spaces (U+0020) and no-break spaces (U+00A0), and perhaps also any of the dozen or so other types of spaces that Unicode defines.

Unicode 3.1+ and code points beyond U+FFFF

With the release of Unicode Version 3.1 in mid 2001, characters with code points beyond U+FFFF were added. (Previous versions of Unicode had built in a way to allow for characters at those code points, but until Version 3.1, none were actually defined.) For example, there is a character for musical symbol C Clef defined at U+1D121. Older programs built to handle only code points U+FFFF and below won’t be able to handle this. Most programs’ \unum indeed allow only a four-digit hexadecimal number.

Programs that can handle characters at these new code points generally offer the \x{num} sequence, where num can be any number of digits. (This is offered instead of, or in addition to, the four-digit \unum notation.) You can then use \x{1D121} to match the C Clef character.

Unicode line terminator

Unicode defines a number of characters (and one sequence of two characters) that are to be considered lineterminators, shown in Table 3-5.

Table 3-5: Unicode Line Terminators

Characters |

Description |

|

LF |

U |

ASCII Line Feed |

VT |

U |

ASCII Vertical Tab |

FF |

U |

ASCII Form Feed |

CR |

U |

ASCII Carriage Return |

CR/LF |

U |

ASCII Carriage Return / Line Feed sequence |

NEL |

U |

Unicode NEXT LINE |

LS |

U |

Unicode LINE SEPARATOR |

PS |

U |

Unicode PARAGRAPH SEPARATOR |

When fully supported, line terminators influence how lines are read from a file (including, in scripting languages, the file the program is being read from). With regular expressions, they can influence both what dot matches ( 111), and where⌈^⌋, ⌈

111), and where⌈^⌋, ⌈$⌋, and ⌈\Z⌋ match ( 112).

112).

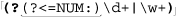

Regex Modes and Match Modes

Most regex engines support a number of different modes for how a regular expression is interpreted or applied. We’ve seen an example of each with Perl’s /x modifier (regex mode that allows free whitespace and comments  72) and

72) and /i modifier (match mode for case-insensitive matching  47).

47).

Modes can generally be applied globally to the whole regex, or in many modern flavors, partially, to specific subexpressions of the regex. The global application is achieved through modifiers or options, such as Perl’s /i, PHP’s i pattern modifier ( 446), or

446), or java.util.regex’s Pattern.CASE_INSENSITIVE flag ( 99). If supported, the partial application of a mode is achieved with a regex construct that looks like ⌈

99). If supported, the partial application of a mode is achieved with a regex construct that looks like ⌈(?i)⌋ to turn on case-insensitive matching, or ⌈(?-i)⌋ to turn it off. Some flavors also support ⌈(?i:...)⌋ and ⌈(?-i:...)⌋, which turn on and off case-insensitive matching for the subexpression enclosed.

How these modes are invoked within a regex is discussed later in this chapter ( 135). In this section, we’ll merely review some of the modes commonly available in most systems.

135). In this section, we’ll merely review some of the modes commonly available in most systems.

Case-insensitive match mode

The almost ubiquitous case-insensitive match mode ignores letter case during matching, so that ⌈b⌋ matches both 'b' and 'B'. This feature relies upon proper character encoding support, so all the cautions mentioned earlier apply.

Historically, case-insensitive matching support has been surprisingly fraught with bugs, but most have been fixed over the years. Still, Ruby’s case-insensitive matching doesn’t apply to octal and hex escapes.

There are special Unicode-related issues with case-insensitive matching (which Unicode calls “loose matching”). For starters, not all alphabets have the concept of upper and lower case, and some have an additional title case used only at the start of a word. Sometimes there’s not a straight one-to-one mapping between upper and lower case. A common example is that a Greek Sigma, Σ, has two lowercase versions, ς and σ; all three should mutually match in case-insensitive mode. Of the systems I’ve tested, only Perl and Java’s java.util.regex handle this correctly.

Another issue is that sometimes a single character maps to a sequence of multiple characters. One well known example is that the uppercase version of β is the two-character combination “SS”. Only Perl handles this properly.

There are also Unicode-manufactured problems. One example is that while there’s a single character  (U

(U+01F0), it has no single-character uppercase version. Rather,  requires a combining sequence (

requires a combining sequence ( 107), U

107), U+004A and U+030C. Yet,  and

and  should match in a case-insensitive mode. There are even examples like this that involve one-to-three mappings. Luckily, most of these do not involve commonly used characters.

should match in a case-insensitive mode. There are even examples like this that involve one-to-three mappings. Luckily, most of these do not involve commonly used characters.

Free-spacing and comments regex mode

In this mode, whitespace outside of character classes is mostly ignored. White-space within a character class still counts (except in java.util.regex), and comments are allowed between # and a newline. We’ve already seen examples of this for Perl ( 72), Java (

72), Java ( 98), and VB.NET (

98), and VB.NET ( 99).

99).

Except for java.util.regex, it’s not quite true that all whitespace outside of classes is ignored, but that it’s turned into a do-nothing metacharacter. The distinction is important with something like ⌈\l2•3⌋, which in this mode is taken as ⌈\l2⌋ followed by ⌈3⌋, and not ⌈\123⌋, as some might expect.

Of course, just what is and isn’t “whitespace” is subject to the character encoding in effect, and its fullness of support. Most programs recognize only ASCII whitespace.

Dot-matches-all match mode (a.k.a., “single-line mode”)

Usually, dot does not match a newline. The original Unix regex tools worked on a line-by-line basis, so the thought of matching a newline wasn’t an issue until the advent of sed and lex. By that time, ⌈.*⌋ had become a common idiom to match “the rest of the line,” so the new languages disallowed it from crossing line boundaries in order to keep it familiar.† Thus, tools that could work with multiple lines (such as a text editor) generally disallow dot from matching a newline.

For modern programming languages, a mode in which dot matches a newline can be as useful as one where dot doesn’t. Which of these is most convenient for a particular situation depends on, well, the situation. Many programs now offer ways for the mode to be selected on a per-regex basis.

There are a few exceptions to the common standard. Unicode-enabled systems, such as Sun’s Java regex package, may expand what dot normally does not match to include any of the single-character Unicode line terminators ( 109). Tcl’s normal state is that its dot matches everything, but in its special “newline-sensitive” and “partial newline-sensitive” matching modes, both dot and a negated character class are prohibited from matching a newline.

109). Tcl’s normal state is that its dot matches everything, but in its special “newline-sensitive” and “partial newline-sensitive” matching modes, both dot and a negated character class are prohibited from matching a newline.

An unfortunate name. When first introduced by Perl with its /s modifier, this mode was called “single-line mode.” This unfortunate name continues to cause no end of confusion because it has nothing whatsoever to do with ⌈^⌋ and ⌈$⌋, which are influenced by the “multiline mode” discussed in the next section. “Single-line mode” merely means that dot has no restrictions and can match any character.

Enhanced line-anchor match mode (a.k.a., “multiline mode”)

An enhanced line-anchor match mode influences where the line anchors, ⌈^⌋ and ⌈$⌋, match. The anchor ⌈^⌋ normally does not match at embedded newlines, but rather only at the start of the string that the regex is being applied to. However, in enhanced mode, it can also match after an embedded newline, effectively having ⌈^⌋ treat the string as multiple logical lines if the string contains newlines in the middle. We saw this in action in the previous chapter ( 69) while developing a Perl program to converting text to

69) while developing a Perl program to converting text to HTML. The entire text document was within a single string, so we could use the search-and-replace s/^$/<p>/mg to convert “...tags.

It’s ...” to “...tags.

It’s ...” to “...tags.

<p>  It’s . . .” The substitution replaces empty “lines” with paragraph tags.

It’s . . .” The substitution replaces empty “lines” with paragraph tags.

It’s much the same for ⌈$⌋, although the basic rules about when ⌈$⌋ can normally match can be a bit more complex to begin with ( 129). However, as far as this section is concerned, enhanced mode simply includes locations before an embedded newline as one of the places that ⌈

129). However, as far as this section is concerned, enhanced mode simply includes locations before an embedded newline as one of the places that ⌈$⌋ can match.

Programs that offer this mode often offer ⌈\A⌋ and ⌈\Z⌋, which normally behave the same as ⌈^⌋ and ⌈$⌋ except they are not modified by this mode. This means that ⌈\A⌋ and ⌈\Z⌋ never match at embedded newlines. Some implementations also allow ⌈$⌋ and ⌈\Z⌋ to match before a string-ending newline. Such implementations often offer ⌈\z⌋, which disregards all newlines and matches only at the very end of the string. See page 129 for details.

As with dot, there are exceptions to the common standard. A text editor like GNU Emacs normally lets the line anchors match at embedded newlines, since that makes the most sense for an editor. On the other hand, lex has its ⌈$⌋ match only before a newline (while its ⌈^⌋ maintains the common meaning.)

Unicode-enabled systems, such as Sun’s java.util.regex, may allow the line anchors in this mode to match at any line terminator ( 109). Ruby’s line anchors normally do match at any embedded newline, and Python’s ⌈

109). Ruby’s line anchors normally do match at any embedded newline, and Python’s ⌈\Z⌋ behaves like its ⌈\z⌋, rather than its normal ⌈$⌋.

Traditionally, this mode has been called “multiline mode.” Although it is unrelated to “single-line mode,” the names confusingly imply a relation. One simply modifies how dot matches, while the other modifies how ⌈^⌋ and ⌈$⌋ match. Another problem is that they approach newlines from different views. The first changes the concept of how dot treats a newline from “special” to “not special,” while the other does the opposite and changes the concept of how ⌈^⌋ and ⌈$⌋ treat newlines from “not special” to “special.”†

Literal-text regex mode

A “literal text” mode is one that doesn’t recognize most or all regex metacharacters. For example, a literal-text mode version of ⌈[a-z]*⌋ matches the string '[a-z]*'. A fully literal search is the same as a simple string search (“find this string” as opposed to “find a match for this regex”), and programs that offer regex support also tend to offer separate support for simple string searches. A regex literal-text mode becomes more interesting when it can be applied to just part of a regular expression. For example, PCRE (and hence PHP) regexes and Perl regex literals offer the special sequence \Q···\E, the contents of which have all metacharacters ignored (except the \E itself, of course).

Common Metacharacters and Features

The remainder of this chapter — the next 30 pages or so — offers an overview of common regex metacharacters and concepts, as outlined on the next page. Not every issue is discussed, and no one tool includes everything presented here.

In one respect, this section is just a summary of much of what you’ve seen in the first two chapters, but in light of the wider, more complex world presented at the beginning of this chapter. During your first pass through, a light glance should allow you to continue on to the next chapters. You can come back here to pick up details as you need them.

Some tools add a lot of new and rich functionality and some gratuitously change common notations to suit their whim or special needs. Although I’ll sometimes comment about specific utilities, I won’t address too many tool-specific concerns here. Rather, in this section I’ll just try to cover some common metacharacters and their uses, and some concerns to be aware of. I encourage you to follow along with the manual of your favorite utility.

Constructs Covered in This Section

Character Representations

Character Shorthands: |

|

Octal Escapes: \num |

|

Hex/Unicode Escapes: |

|

Control Characters: |

Character Classes and Class-Like Constructs

Normal classes: |

|

Almost any character: dot |

|

Exactly one byte: \C |

|

Unicode Combining Character Sequence: |

|

Class shorthands: |

|

Unicode properties, blocks, and categories: |

|

Class set operations: |

|

POSIX bracket-expression “character class”: |

|

POSIX bracket-expression “collating sequences”: |

|

POSIX bracket-expression “character equivalents”: |

|

Emacs syntax classes |

Anchors and Other “Zero-Width Assertions”

Start of line/string: |

|

End of line/string: |

|

Start of match (or end of previous match): |

|

Word boundaries: |

|

Lookahead |

Comments and Mode Modifiers

Mode modifier: |

|

Mode-modified span: |

|

Comments: |

|

Literal-text span: |

Grouping, Capturing, Conditionals, and Control

Capturing/groupingparentheses: |

|

Grouping-onlyparentheses: |

|

Named capture: |

|

Atomic grouping: |

|

Alternation: ...|...|... |

|

Conditional: |

|

Greedy quantifiers: |

|

Lazy quantifiers: |

|

Possessive quantifiers: |

Character Representations

This group of metacharacters provides visually pleasing ways to match specific characters that are otherwise difficult to represent.

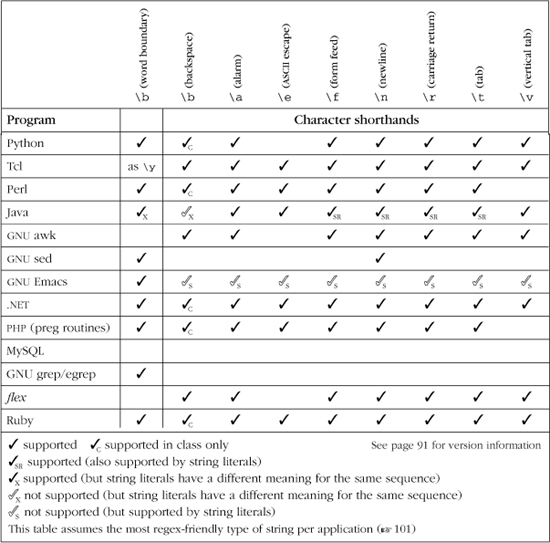

Character shorthands

Many utilities provide metacharacters to represent certain control characters that are sometimes machine-dependent, and which would otherwise be difficult to input or to visualize:

|

Alert (e.g., to sound the bell when “printed”) Usually maps to the ASCII |

|

Backspace Usually maps to the ASCII |

|

Escape character Usually maps to the ASCII |

|

Form feed Usually maps to the ASCII |

|

Newline On most platforms (including Unix and |

|

Carriage return Usually maps to the ASCII |

|

Normal horizontal tab Maps to the ASCII |

|

Vertical tab Usually maps to the ASCII |