Chapter 9. Unsupervised Learning Techniques

Although most of the applications of Machine Learning today are based on supervised learning (and as a result, this is where most of the investments go to), the vast majority of the available data is actually unlabeled: we have the input features X, but we do not have the labels y. Yann LeCun famously said that “if intelligence was a cake, unsupervised learning would be the cake, supervised learning would be the icing on the cake, and reinforcement learning would be the cherry on the cake”. In other words, there is a huge potential in unsupervised learning that we have only barely started to sink our teeth into.

For example, say you want to create a system that will take a few pictures of each item on a manufacturing production line and detect which items are defective. You can fairly easily create a system that will take pictures automatically, and this might give you thousands of pictures every day. You can then build a reasonably large dataset in just a few weeks. But wait, there are no labels! If you want to train a regular binary classifier that will predict whether an item is defective or not, you will need to label every single picture as “defective” or “normal”. This will generally require human experts to sit down and manually go through all the pictures. This is a long, costly and tedious task, so it will usually only be done on a small subset of the available pictures. As a result, the labeled dataset will be quite small, and the classifier’s performance will be disappointing. Moreover, every time the company makes any change to its products, the whole process will need to be started over from scratch. Wouldn’t it be great if the algorithm could just exploit the unlabeled data without needing humans to label every picture? Enter unsupervised learning.

In Chapter 8, we looked at the most common unsupervised learning task: dimensionality reduction. In this chapter, we will look at a few more unsupervised learning tasks and algorithms:

-

Clustering: the goal is to group similar instances together into clusters. This is a great tool for data analysis, customer segmentation, recommender systems, search engines, image segmentation, semi-supervised learning, dimensionality reduction, and more.

-

Anomaly detection: the objective is to learn what “normal” data looks like, and use this to detect abnormal instances, such as defective items on a production line or a new trend in a time series.

-

Density estimation: this is the task of estimating the probability density function (PDF) of the random process that generated the dataset. This is commonly used for anomaly detection: instances located in very low-density regions are likely to be anomalies. It is also useful for data analysis and visualization.

Ready for some cake? We will start with clustering, using K-Means and DBSCAN, and then we will discuss Gaussian mixture models and see how they can be used for density estimation, clustering, and anomaly detection.

Clustering

As you enjoy a hike in the mountains, you stumble upon a plant you have never seen before. You look around and you notice a few more. They are not perfectly identical, yet they are sufficiently similar for you to know that they most likely belong to the same species (or at least the same genus). You may need a botanist to tell you what species that is, but you certainly don’t need an expert to identify groups of similar-looking objects. This is called clustering: it is the task of identifying similar instances and assigning them to clusters, i.e., groups of similar instances.

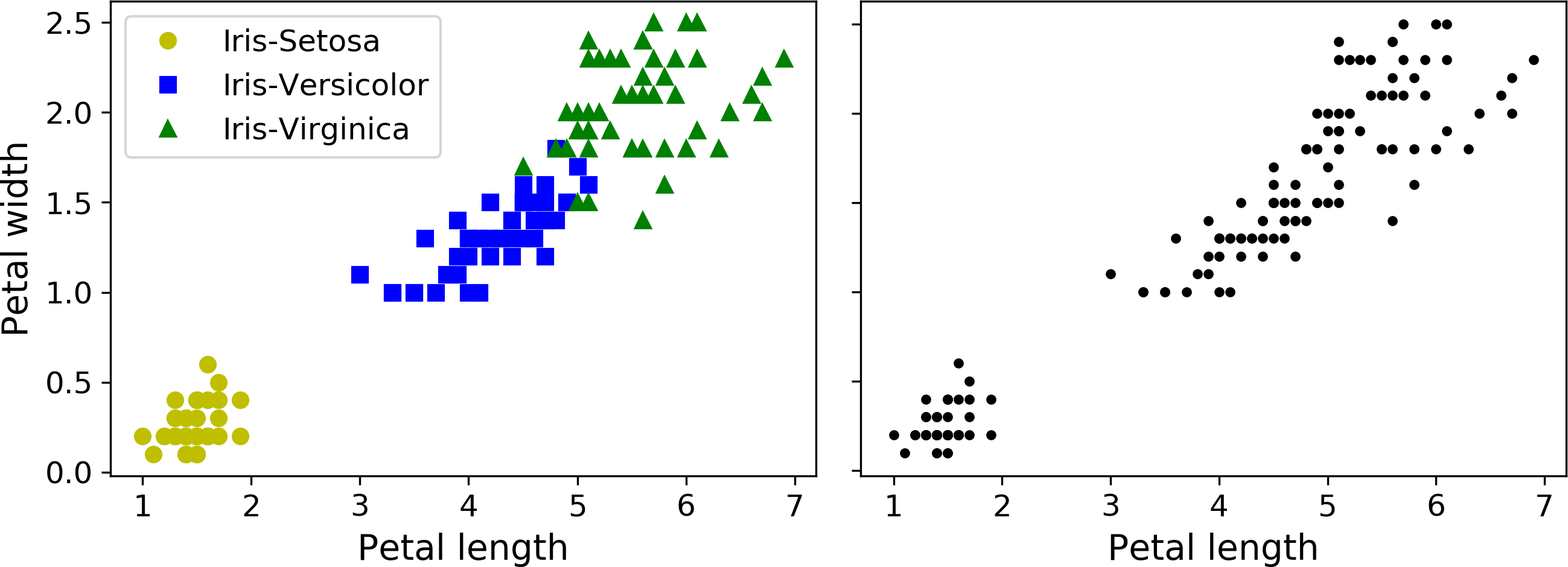

Just like in classification, each instance gets assigned to a group. However, this is an unsupervised task. Consider Figure 9-1: on the left is the iris dataset (introduced in Chapter 4), where each instance’s species (i.e., its class) is represented with a different marker. It is a labeled dataset, for which classification algorithms such as Logistic Regression, SVMs or Random Forest classifiers are well suited. On the right is the same dataset, but without the labels, so you cannot use a classification algorithm anymore. This is where clustering algorithms step in: many of them can easily detect the top left cluster. It is also quite easy to see with our own eyes, but it is not so obvious that the lower right cluster is actually composed of two distinct sub-clusters. That said, the dataset actually has two additional features (sepal length and width), not represented here, and clustering algorithms can make good use of all features, so in fact they identify the three clusters fairly well (e.g., using a Gaussian mixture model, only 5 instances out of 150 are assigned to the wrong cluster).

Figure 9-1. Classification (left) versus clustering (right)

Clustering is used in a wide variety of applications, including:

-

For customer segmentation: you can cluster your customers based on their purchases, their activity on your website, and so on. This is useful to understand who your customers are and what they need, so you can adapt your products and marketing campaigns to each segment. For example, this can be useful in recommender systems to suggest content that other users in the same cluster enjoyed.

-

For data analysis: when analyzing a new dataset, it is often useful to first discover clusters of similar instances, as it is often easier to analyze clusters separately.

-

As a dimensionality reduction technique: once a dataset has been clustered, it is usually possible to measure each instance’s affinity with each cluster (affinity is any measure of how well an instance fits into a cluster). Each instance’s feature vector x can then be replaced with the vector of its cluster affinities. If there are k clusters, then this vector is k dimensional. This is typically much lower dimensional than the original feature vector, but it can preserve enough information for further processing.

-

For anomaly detection (also called outlier detection): any instance that has a low affinity to all the clusters is likely to be an anomaly. For example, if you have clustered the users of your website based on their behavior, you can detect users with unusual behavior, such as an unusual number of requests per second, and so on. Anomaly detection is particularly useful in detecting defects in manufacturing, or for fraud detection.

-

For semi-supervised learning: if you only have a few labels, you could perform clustering and propagate the labels to all the instances in the same cluster. This can greatly increase the amount of labels available for a subsequent supervised learning algorithm, and thus improve its performance.

-

For search engines: for example, some search engines let you search for images that are similar to a reference image. To build such a system, you would first apply a clustering algorithm to all the images in your database: similar images would end up in the same cluster. Then when a user provides a reference image, all you need to do is to find this image’s cluster using the trained clustering model, and you can then simply return all the images from this cluster.

-

To segment an image: by clustering pixels according to their color, then replacing each pixel’s color with the mean color of its cluster, it is possible to reduce the number of different colors in the image considerably. This technique is used in many object detection and tracking systems, as it makes it easier to detect the contour of each object.

There is no universal definition of what a cluster is: it really depends on the context, and different algorithms will capture different kinds of clusters. For example, some algorithms look for instances centered around a particular point, called a centroid. Others look for continuous regions of densely packed instances: these clusters can take on any shape. Some algorithms are hierarchical, looking for clusters of clusters. And the list goes on.

In this section, we will look at two popular clustering algorithms: K-Means and DBSCAN, and we will show some of their applications, such as non-linear dimensionality reduction, semi-supervised learning and anomaly detection.

K-Means

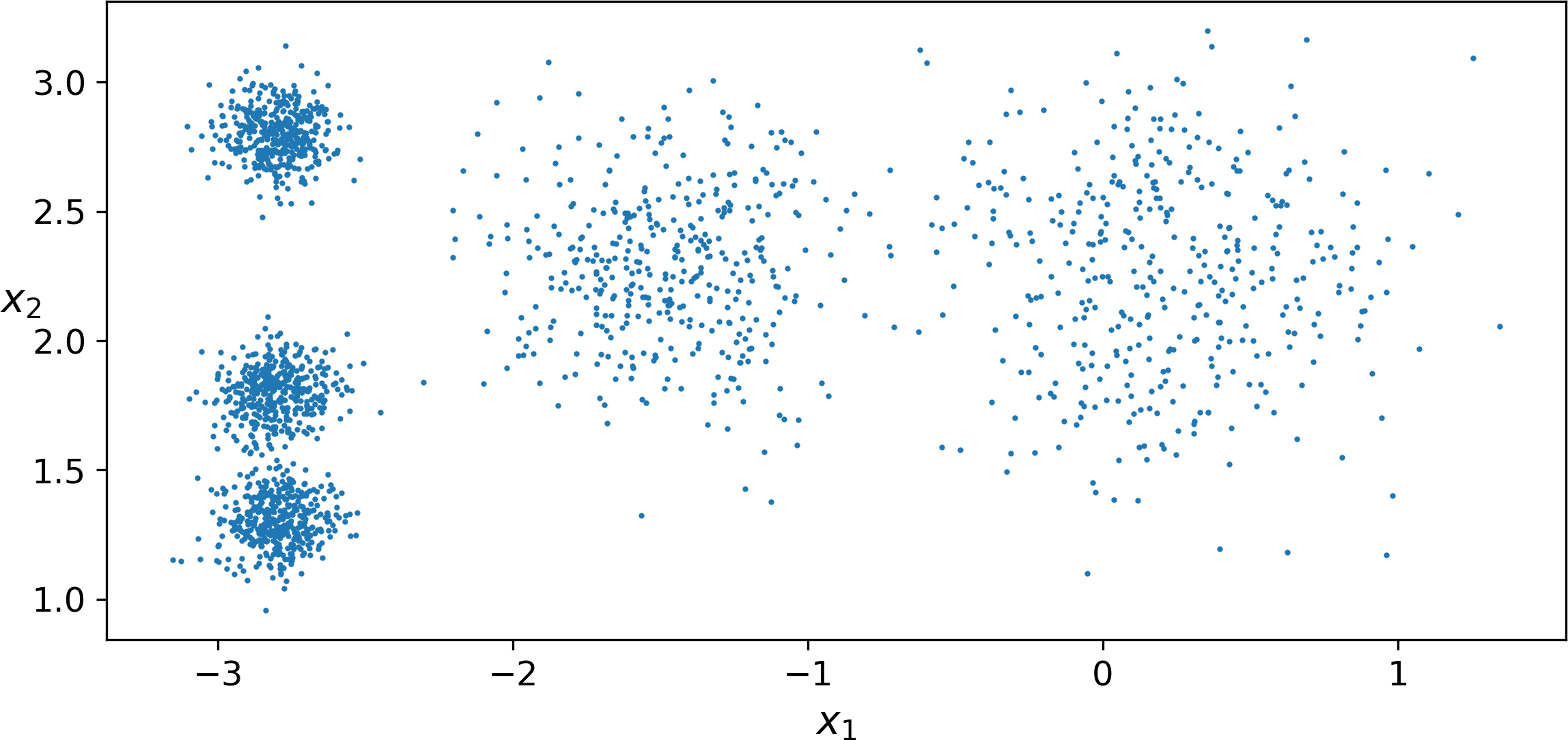

Consider the unlabeled dataset represented in Figure 9-2: you can clearly see 5 blobs of instances. The K-Means algorithm is a simple algorithm capable of clustering this kind of dataset very quickly and efficiently, often in just a few iterations. It was proposed by Stuart Lloyd at the Bell Labs in 1957 as a technique for pulse-code modulation, but it was only published outside of the company in 1982, in a paper titled “Least square quantization in PCM”.1 By then, in 1965, Edward W. Forgy had published virtually the same algorithm, so K-Means is sometimes referred to as Lloyd-Forgy.

Figure 9-2. An unlabeled dataset composed of five blobs of instances

Let’s train a K-Means clusterer on this dataset. It will try to find each blob’s center and assign each instance to the closest blob:

fromsklearn.clusterimportKMeansk=5kmeans=KMeans(n_clusters=k)y_pred=kmeans.fit_predict(X)

Note that you have to specify the number of clusters k that the algorithm must find. In this example, it is pretty obvious from looking at the data that k should be set to 5, but in general it is not that easy. We will discuss this shortly.

Each instance was assigned to one of the 5 clusters. In the context of clustering, an instance’s label is the index of the cluster that this instance gets assigned to by the algorithm: this is not to be confused with the class labels in classification (remember that clustering is an unsupervised learning task). The KMeans instance preserves a copy of the labels of the instances it was trained on, available via the labels_ instance variable:

>>>y_predarray([4, 0, 1, ..., 2, 1, 0], dtype=int32)>>>y_prediskmeans.labels_True

We can also take a look at the 5 centroids that the algorithm found:

>>>kmeans.cluster_centers_array([[-2.80389616, 1.80117999],[ 0.20876306, 2.25551336],[-2.79290307, 2.79641063],[-1.46679593, 2.28585348],[-2.80037642, 1.30082566]])

Of course, you can easily assign new instances to the cluster whose centroid is closest:

>>>X_new=np.array([[0,2],[3,2],[-3,3],[-3,2.5]])>>>kmeans.predict(X_new)array([1, 1, 2, 2], dtype=int32)

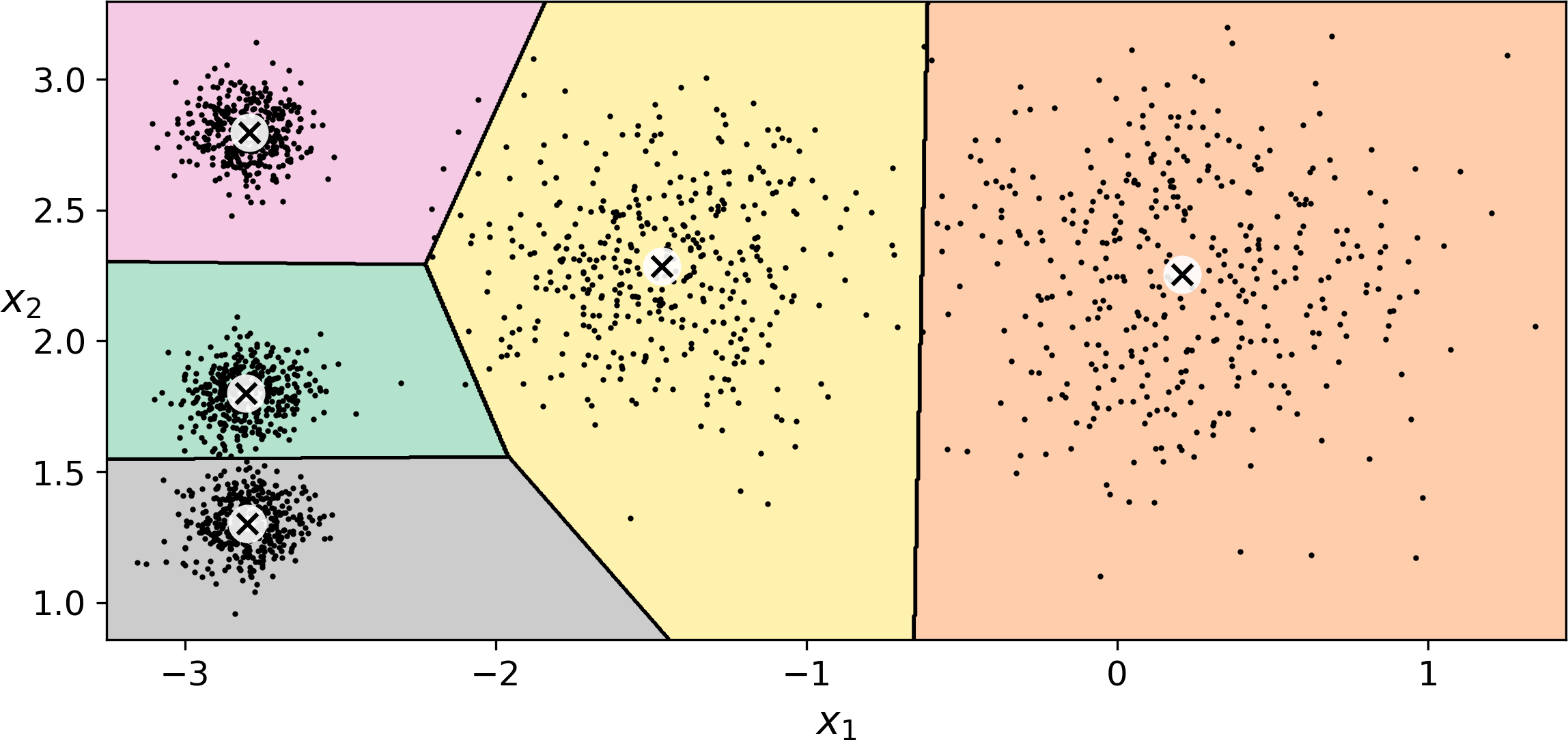

If you plot the cluster’s decision boundaries, you get a Voronoi tessellation (see Figure 9-3, where each centroid is represented with an X):

Figure 9-3. K-Means decision boundaries (Voronoi tessellation)

The vast majority of the instances were clearly assigned to the appropriate cluster, but a few instances were probably mislabeled (especially near the boundary between the top left cluster and the central cluster). Indeed, the K-Means algorithm does not behave very well when the blobs have very different diameters since all it cares about when assigning an instance to a cluster is the distance to the centroid.

Instead of assigning each instance to a single cluster, which is called hard clustering, it can be useful to just give each instance a score per cluster: this is called soft clustering. For example, the score can be the distance between the instance and the centroid, or conversely it can be a similarity score (or affinity) such as the Gaussian Radial Basis Function (introduced in Chapter 5). In the KMeans class, the transform() method measures the distance from each instance to every centroid:

>>>kmeans.transform(X_new)array([[2.81093633, 0.32995317, 2.9042344 , 1.49439034, 2.88633901],[5.80730058, 2.80290755, 5.84739223, 4.4759332 , 5.84236351],[1.21475352, 3.29399768, 0.29040966, 1.69136631, 1.71086031],[0.72581411, 3.21806371, 0.36159148, 1.54808703, 1.21567622]])

In this example, the first instance in X_new is located at a distance of 2.81 from the first centroid, 0.33 from the second centroid, 2.90 from the third centroid, 1.49 from the fourth centroid and 2.87 from the fifth centroid. If you have a high-dimensional dataset and you transform it this way, you end up with a k-dimensional dataset: this can be a very efficient non-linear dimensionality reduction technique.

The K-Means Algorithm

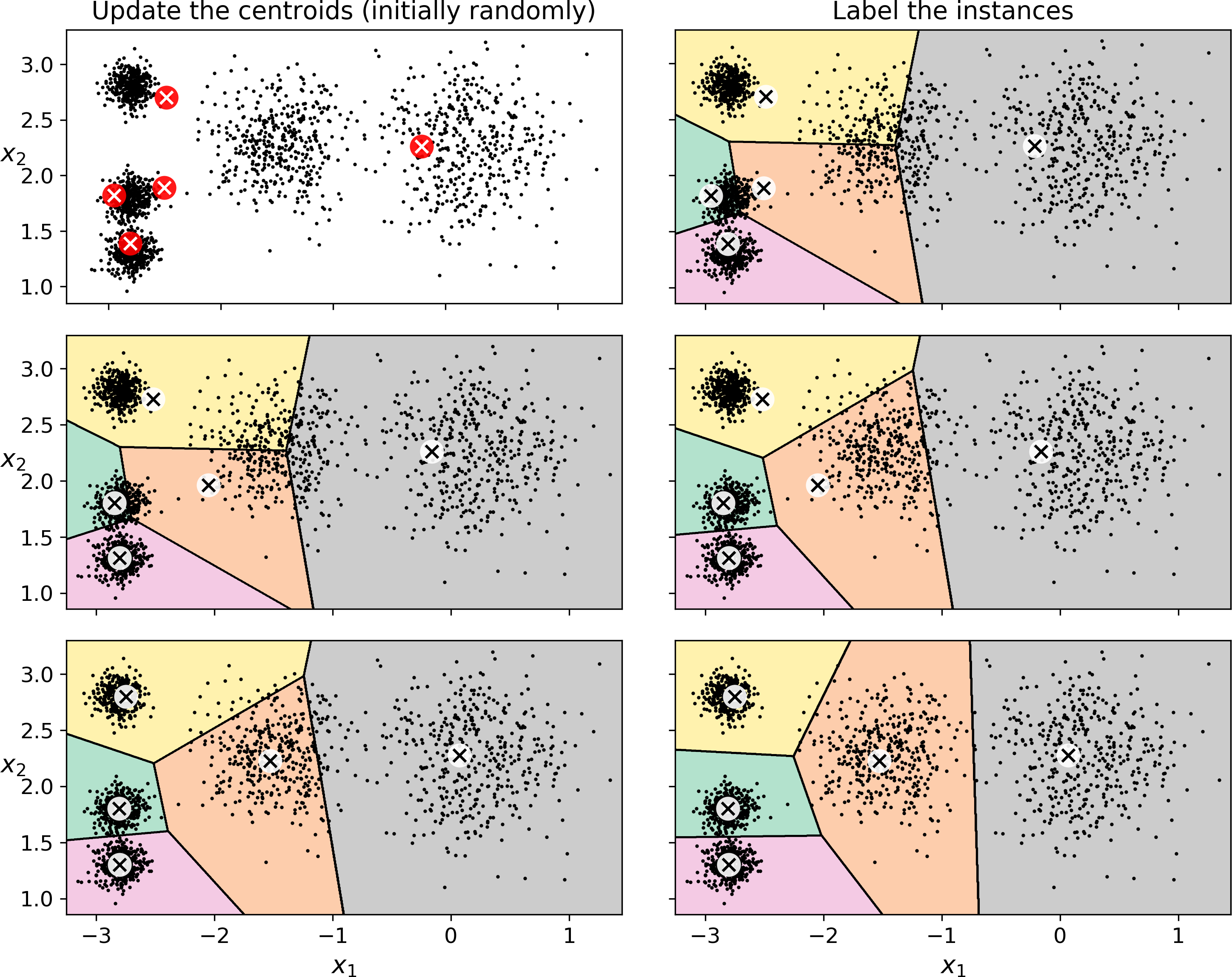

So how does the algorithm work? Well it is really quite simple. Suppose you were given the centroids: you could easily label all the instances in the dataset by assigning each of them to the cluster whose centroid is closest. Conversely, if you were given all the instance labels, you could easily locate all the centroids by computing the mean of the instances for each cluster. But you are given neither the labels nor the centroids, so how can you proceed? Well, just start by placing the centroids randomly (e.g., by picking k instances at random and using their locations as centroids). Then label the instances, update the centroids, label the instances, update the centroids, and so on until the centroids stop moving. The algorithm is guaranteed to converge in a finite number of steps (usually quite small), it will not oscillate foreverfootenote:[This can be proven by pointing out that the mean squared distance between the instances and their closest centroid can only go down at each step.]. You can see the algorithm in action in Figure 9-4: the centroids are initialized randomly (top left), then the instances are labeled (top right), then the centroids are updated (center left), the instances are relabeled (center right), and so on. As you can see, in just 3 iterations the algorithm has reached a clustering that seems close to optimal.

Figure 9-4. The K-Means algorithm

Note

The computational complexity of the algorithm is generally linear with regards to the number of instances m, the number of clusters k and the number of dimensions n. However, this is only true when the data has a clustering structure. If it does not, then in the worst case scenario the complexity can increase exponentially with the number of instances. In practice, however, this rarely happens, and K-Means is generally one of the fastest clustering algorithms.

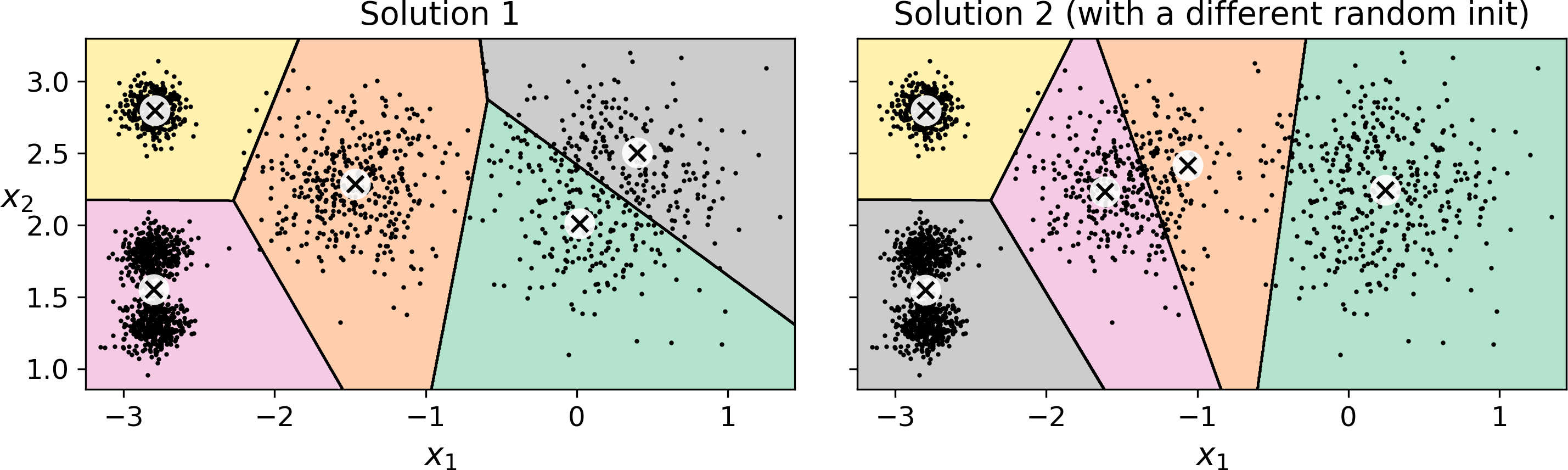

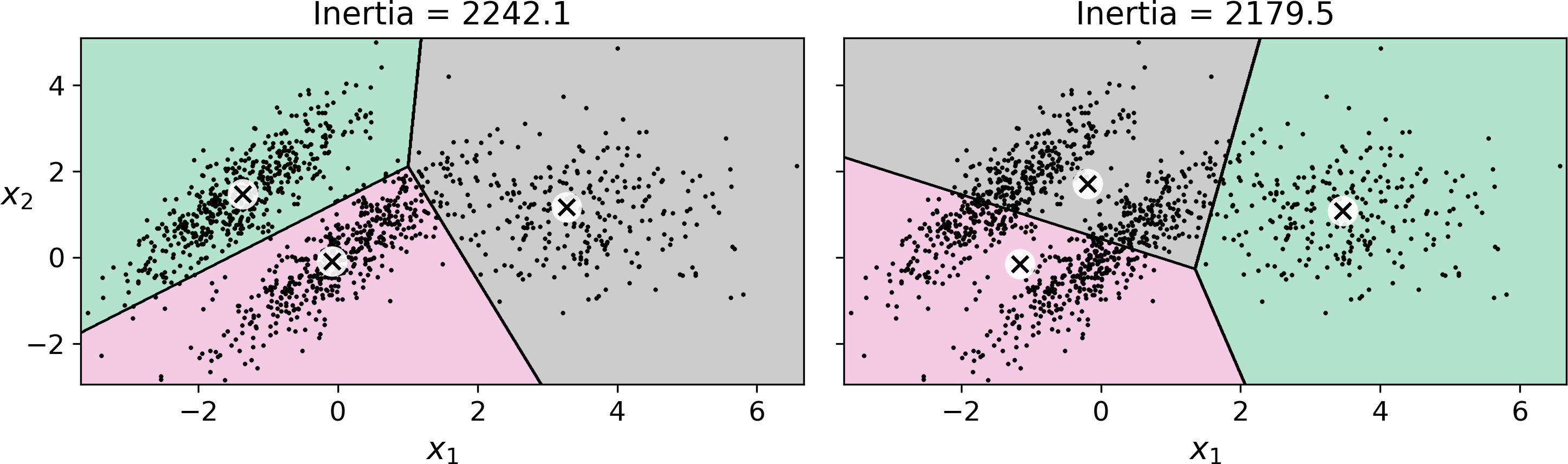

Unfortunately, although the algorithm is guaranteed to converge, it may not converge to the right solution (i.e., it may converge to a local optimum): this depends on the centroid initialization. For example, Figure 9-5 shows two sub-optimal solutions that the algorithm can converge to if you are not lucky with the random initialization step:

Figure 9-5. Sub-optimal solutions due to unlucky centroid initializations

Let’s look at a few ways you can mitigate this risk by improving the centroid initialization.

Centroid Initialization Methods

If you happen to know approximately where the centroids should be (e.g., if you ran another clustering algorithm earlier), then you can set the init hyperparameter to a NumPy array containing the list of centroids, and set n_init to 1:

good_init=np.array([[-3,3],[-3,2],[-3,1],[-1,2],[0,2]])kmeans=KMeans(n_clusters=5,init=good_init,n_init=1)

Another solution is to run the algorithm multiple times with different random initializations and keep the best solution. This is controlled by the n_init hyperparameter: by default, it is equal to 10, which means that the whole algorithm described earlier actually runs 10 times when you call fit(), and Scikit-Learn keeps the best solution. But how exactly does it know which solution is the best? Well of course it uses a performance metric! It is called the model’s inertia: this is the mean squared distance between each instance and its closest centroid. It is roughly equal to 223.3 for the model on the left of Figure 9-5, 237.5 for the model on the right of Figure 9-5, and 211.6 for the model in Figure 9-3. The KMeans class runs the algorithm n_init times and keeps the model with the lowest inertia: in this example, the model in Figure 9-3 will be selected (unless we are very unlucky with n_init consecutive random initializations). If you are curious, a model’s inertia is accessible via the inertia_ instance variable:

>>>kmeans.inertia_211.59853725816856

The score() method returns the negative inertia. Why negative? Well, it is because a predictor’s score() method must always respect the "great is better" rule.

>>>kmeans.score(X)-211.59853725816856

An important improvement to the K-Means algorithm, called K-Means+\+, was proposed in a 2006 paper by David Arthur and Sergei Vassilvitskii:2 they introduced a smarter initialization step that tends to select centroids that are distant from one another, and this makes the K-Means algorithm much less likely to converge to a sub-optimal solution. They showed that the additional computation required for the smarter initialization step is well worth it since it makes it possible to drastically reduce the number of times the algorithm needs to be run to find the optimal solution. Here is the K-Means++ initialization algorithm:

-

Take one centroid c(1), chosen uniformly at random from the dataset.

-

Take a new centroid c(i), choosing an instance x(i) with probability: where D(x(i)) is the distance between the instance x(i) and the closest centroid that was already chosen. This probability distribution ensures that instances further away from already chosen centroids are much more likely be selected as centroids.

-

Repeat the previous step until all k centroids have been chosen.

The KMeans class actually uses this initialization method by default. If you want to force it to use the original method (i.e., picking k instances randomly to define the initial centroids), then you can set the init hyperparameter to "random". You will rarely need to do this.

Accelerated K-Means and Mini-batch K-Means

Another important improvement to the K-Means algorithm was proposed in a 2003 paper by Charles Elkan.3 It considerably accelerates the algorithm by avoiding many unnecessary distance calculations: this is achieved by exploiting the triangle inequality (i.e., the straight line is always the shortest4) and by keeping track of lower and upper bounds for distances between instances and centroids. This is the algorithm used by default by the KMeans class (but you can force it to use the original algorithm by setting the algorithm hyperparameter to "full", although you probably will never need to).

Yet another important variant of the K-Means algorithm was proposed in a 2010 paper by David Sculley.5 Instead of using the full dataset at each iteration, the algorithm is capable of using mini-batches, moving the centroids just slightly at each iteration. This speeds up the algorithm typically by a factor of 3 or 4 and makes it possible to cluster huge datasets that do not fit in memory. Scikit-Learn implements this algorithm in the MiniBatchKMeans class. You can just use this class like the KMeans class:

fromsklearn.clusterimportMiniBatchKMeansminibatch_kmeans=MiniBatchKMeans(n_clusters=5)minibatch_kmeans.fit(X)

If the dataset does not fit in memory, the simplest option is to use the memmap class, as we did for incremental PCA in Chapter 8. Alternatively, you can pass one mini-batch at a time to the partial_fit() method, but this will require much more work, since you will need to perform multiple initializations and select the best one yourself (see the notebook for an example).

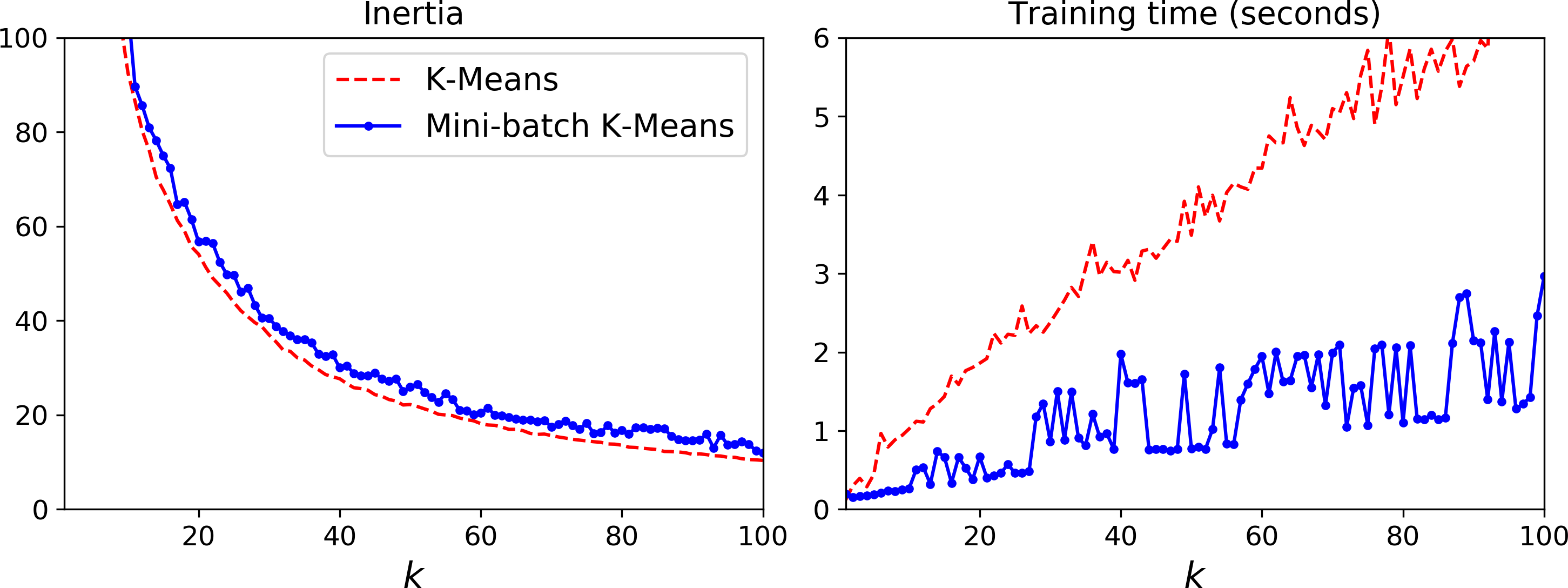

Although the Mini-batch K-Means algorithm is much faster than the regular K-Means algorithm, its inertia is generally slightly worse, especially as the number of clusters increases. You can see this in Figure 9-6: the plot on the left compares the inertias of Mini-batch K-Means and regular K-Means models trained on the previous dataset using various numbers of clusters k. The difference between the two curves remains fairly constant, but this difference becomes more and more significant as k increases, since the inertia becomes smaller and smaller. However, in the plot on the right, you can see that Mini-batch K-Means is much faster than regular K-Means, and this difference increases with k.

Figure 9-6. Mini-batch K-Means vs K-Means: worse inertia as k increases (left) but much faster (right)

Finding the Optimal Number of Clusters

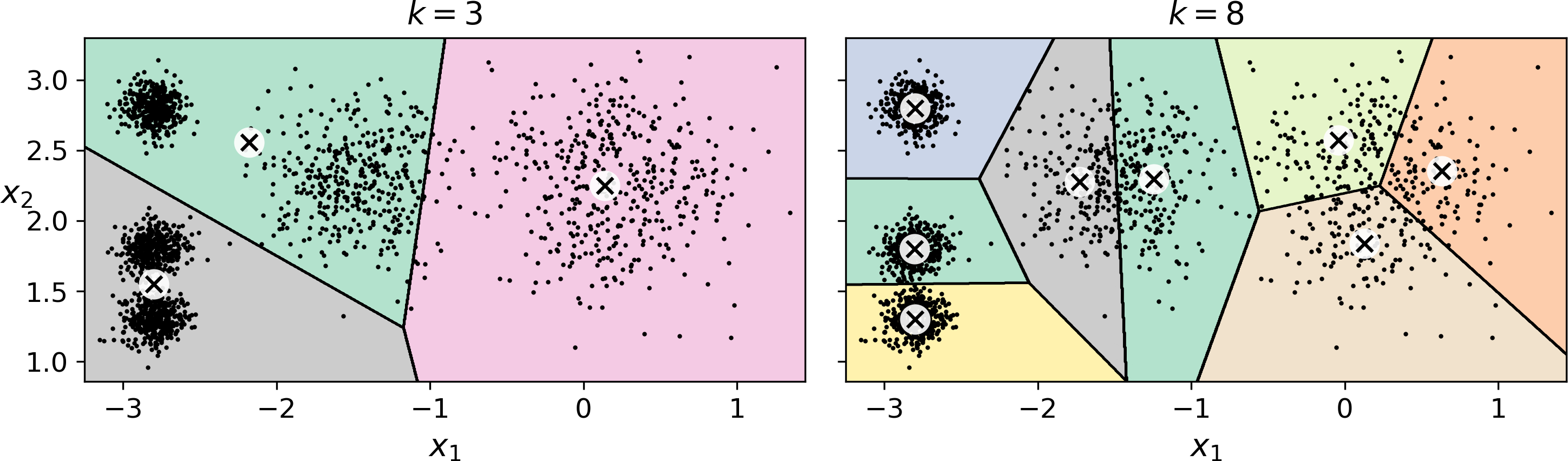

So far, we have set the number of clusters k to 5 because it was obvious by looking at the data that this is the correct number of clusters. But in general, it will not be so easy to know how to set k, and the result might be quite bad if you set it to the wrong value. For example, as you can see in Figure 9-7, setting k to 3 or 8 results in fairly bad models:

Figure 9-7. Bad choices for the number of clusters

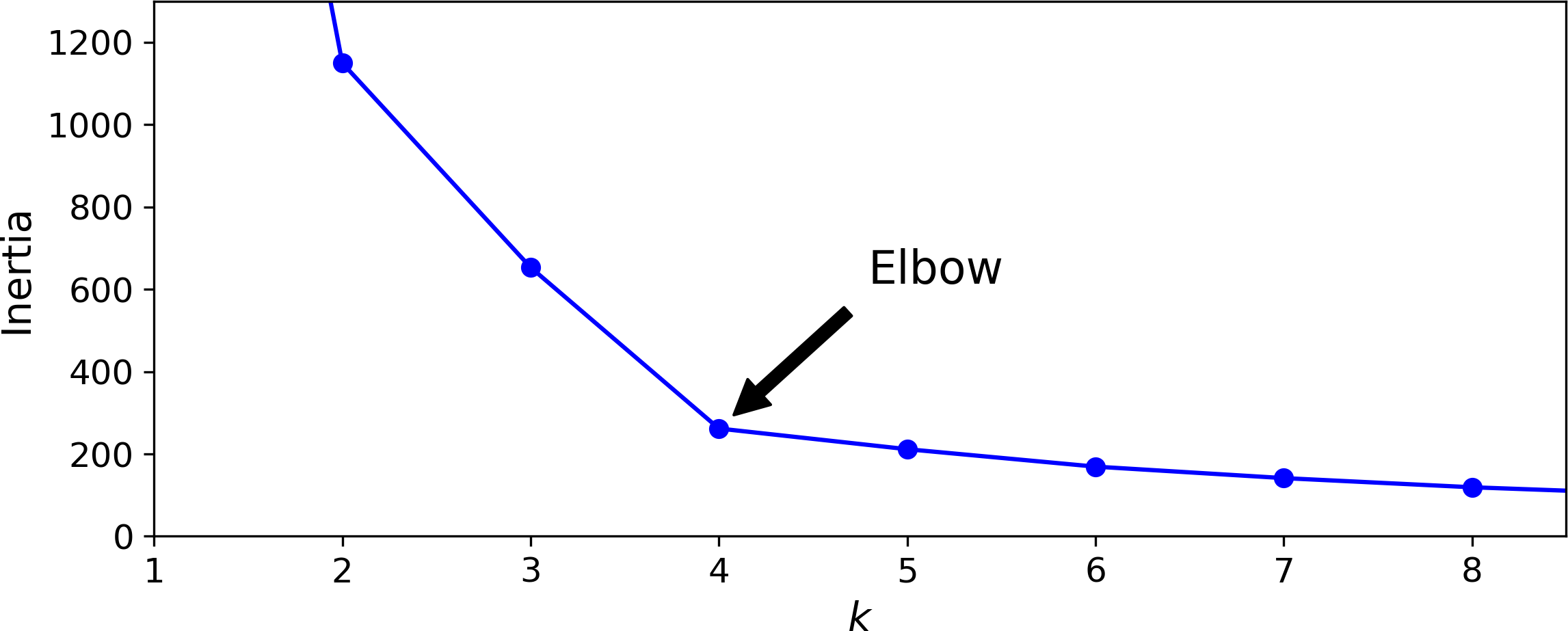

You might be thinking that we could just pick the model with the lowest inertia, right? Unfortunately, it is not that simple. The inertia for k=3 is 653.2, which is much higher than for k=5 (which was 211.6), but with k=8, the inertia is just 119.1. The inertia is not a good performance metric when trying to choose k since it keeps getting lower as we increase k. Indeed, the more clusters there are, the closer each instance will be to its closest centroid, and therefore the lower the inertia will be. Let’s plot the inertia as a function of k (see Figure 9-8):

Figure 9-8. Selecting the number of clusters k using the “elbow rule”

As you can see, the inertia drops very quickly as we increase k up to 4, but then it decreases much more slowly as we keep increasing k. This curve has roughly the shape of an arm, and there is an “elbow” at k=4 so if we did not know better, it would be a good choice: any lower value would be dramatic, while any higher value would not help much, and we might just be splitting perfectly good clusters in half for no good reason.

This technique for choosing the best value for the number of clusters is rather coarse. A more precise approach (but also more computationally expensive) is to use the silhouette score, which is the mean silhouette coefficient over all the instances. An instance’s silhouette coefficient is equal to (b – a) / max(a, b) where a is the mean distance to the other instances in the same cluster (it is the mean intra-cluster distance), and b is the mean nearest-cluster distance, that is the mean distance to the instances of the next closest cluster (defined as the one that minimizes b, excluding the instance’s own cluster). The silhouette coefficient can vary between -1 and +1: a coefficient close to +1 means that the instance is well inside its own cluster and far from other clusters, while a coefficient close to 0 means that it is close to a cluster boundary, and finally a coefficient close to -1 means that the instance may have been assigned to the wrong cluster. To compute the silhouette score, you can use Scikit-Learn’s silhouette_score() function, giving it all the instances in the dataset, and the labels they were assigned:

>>>fromsklearn.metricsimportsilhouette_score>>>silhouette_score(X,kmeans.labels_)0.655517642572828

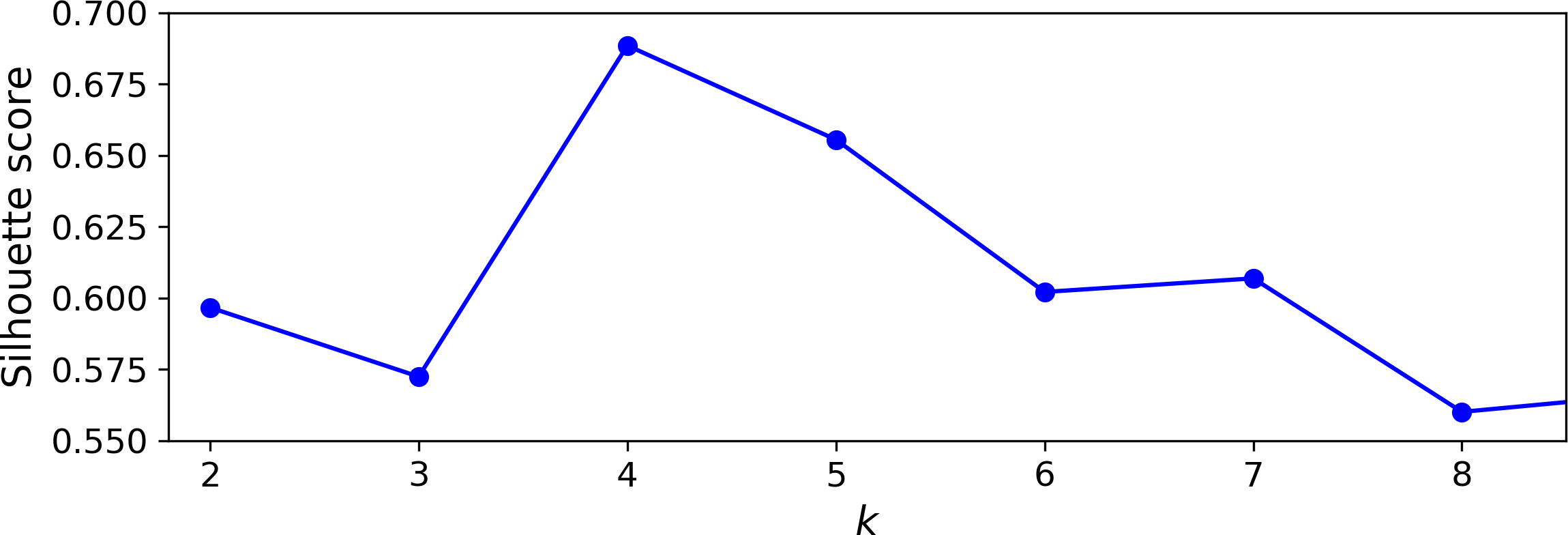

Let’s compare the silhouette scores for different numbers of clusters (see Figure 9-9):

Figure 9-9. Selecting the number of clusters k using the silhouette score

As you can see, this visualization is much richer than the previous one: in particular, although it confirms that k=4 is a very good choice, it also underlines the fact that k=5 is quite good as well, and much better than k=6 or 7. This was not visible when comparing inertias.

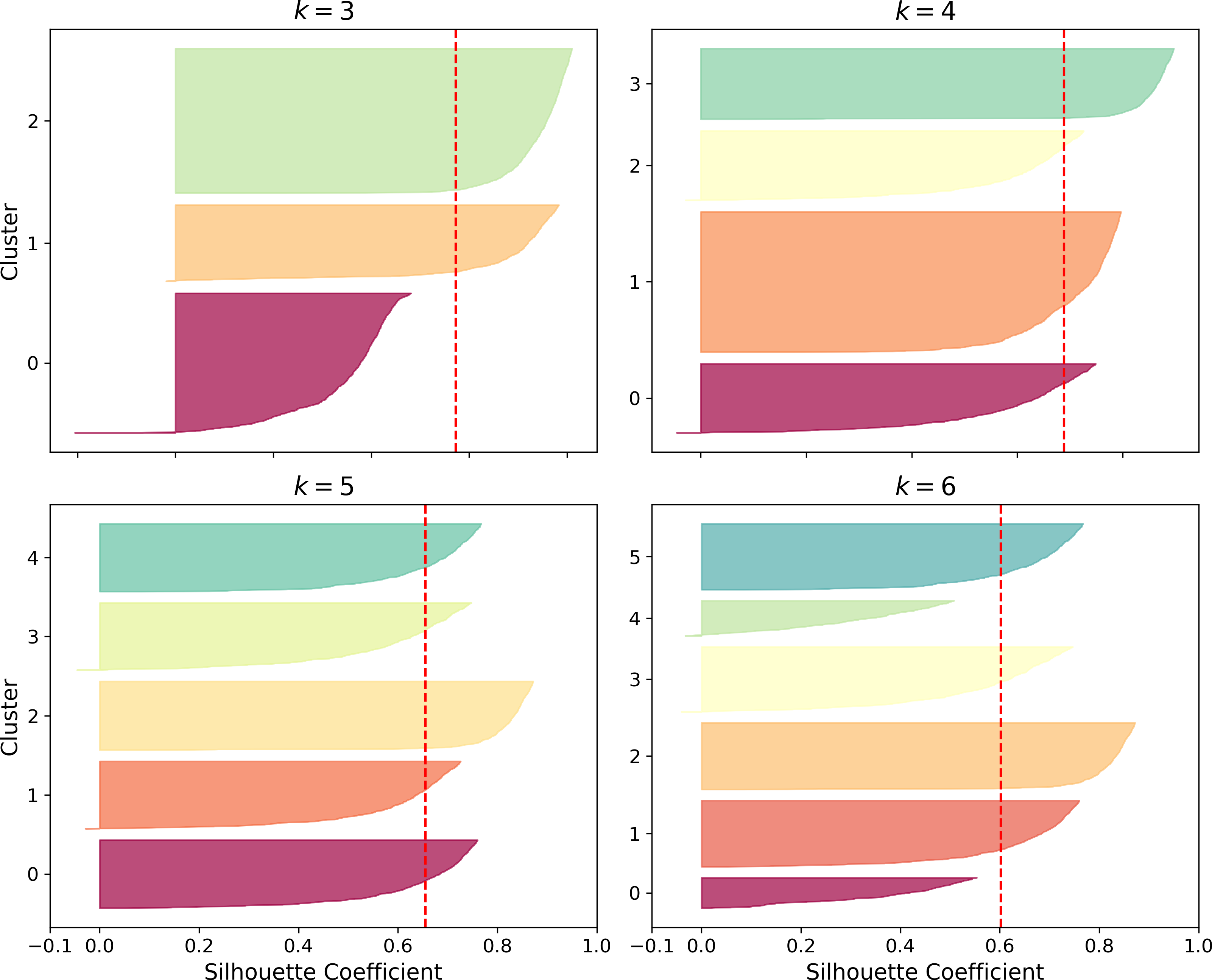

An even more informative visualization is obtained when you plot every instance’s silhouette coefficient, sorted by the cluster they are assigned to and by the value of the coefficient. This is called a silhouette diagram (see Figure 9-10):

Figure 9-10. Silouhette analysis: comparing the silhouette diagrams for various values of k

The vertical dashed lines represent the silhouette score for each number of clusters. When most of the instances in a cluster have a lower coefficient than this score (i.e., if many of the instances stop short of the dashed line, ending to the left of it), then the cluster is rather bad since this means its instances are much too close to other clusters. We can see that when k=3 and when k=6, we get bad clusters. But when k=4 or k=5, the clusters look pretty good – most instances extend beyond the dashed line, to the right and closer to 1.0. When k=4, the cluster at index 1 (the third from the top), is rather big, while when k=5, all clusters have similar sizes, so even though the overall silhouette score from k=4 is slightly greater than for k=5, it seems like a good idea to use k=5 to get clusters of similar sizes.

Limits of K-Means

Despite its many merits, most notably being fast and scalable, K-Means is not perfect. As we saw, it is necessary to run the algorithm several times to avoid sub-optimal solutions, plus you need to specify the number of clusters, which can be quite a hassle. Moreover, K-Means does not behave very well when the clusters have varying sizes, different densities, or non-spherical shapes. For example, Figure 9-11 shows how K-Means clusters a dataset containing three ellipsoidal clusters of different dimensions, densities and orientations:

Figure 9-11. K-Means fails to cluster these ellipsoidal blobs properly

As you can see, neither of these solutions are any good. The solution on the left is better, but it still chops off 25% of the middle cluster and assigns it to the cluster on the right. The solution on the right is just terrible, even though its inertia is lower. So depending on the data, different clustering algorithms may perform better. For example, on these types of elliptical clusters, Gaussian mixture models work great.

Tip

It is important to scale the input features before you run K-Means, or else the clusters may be very stretched, and K-Means will perform poorly. Scaling the features does not guarantee that all the clusters will be nice and spherical, but it generally improves things.

Now let’s look at a few ways we can benefit from clustering. We will use K-Means, but feel free to experiment with other clustering algorithms.

Using clustering for image segmentation

Image segmentation is the task of partitioning an image into multiple segments. In semantic segmentation, all pixels that are part of the same object type get assigned to the same segment. For example, in a self-driving car’s vision system, all pixels that are part of a pedestrian’s image might be assigned to the “pedestrian” segment (there would just be one segment containing all the pedestrians). In instance segmentation, all pixels that are part of the same individual object are assigned to the same segment. In this case there would be a different segment for each pedestrian. The state of the art in semantic or instance segmentation today is achieved using complex architectures based on convolutional neural networks (see Chapter 14). Here, we are going to do something much simpler: color segmentation. We will simply assign pixels to the same segment if they have a similar color. In some applications, this may be sufficient, for example if you want to analyze satellite images to measure how much total forest area there is in a region, color segmentation may be just fine.

First, let’s load the image (see the upper left image in Figure 9-12) using Matplotlib’s imread() function:

>>>frommatplotlib.imageimportimread# you could also use `imageio.imread()`>>>image=imread(os.path.join("images","clustering","ladybug.png"))>>>image.shape(533, 800, 3)

The image is represented as a 3D array: the first dimension’s size is the height, the second is the width, and the third is the number of color channels, in this case red, green and blue (RGB). In other words, for each pixel there is a 3D vector containing the intensities of red, green and blue, each between 0.0 and 1.0 (or between 0 and 255 if you use imageio.imread()). Some images may have less channels, such as grayscale images (one channel), or more channels, such as images with an additional alpha channel for transparency, or satellite images which often contain channels for many light frequencies (e.g., infrared). The following code reshapes the array to get a long list of RGB colors, then it clusters these colors using K-Means. For example, it may identify a color cluster for all shades of green. Next, for each color (e.g., dark green), it looks for the mean color of the pixel’s color cluster. For example, all shades of green may be replaced with the same light green color (assuming the mean color of the green cluster is light green). Finally it reshapes this long list of colors to get the same shape as the original image. And we’re done!

X=image.reshape(-1,3)kmeans=KMeans(n_clusters=8).fit(X)segmented_img=kmeans.cluster_centers_[kmeans.labels_]segmented_img=segmented_img.reshape(image.shape)

This outputs the image shown in the upper right of Figure 9-12. You can experiment with various numbers of clusters, as shown in the figure. When you use less than 8 clusters, notice that the ladybug’s flashy red color fails to get a cluster of its own: it gets merged with colors from the environment. This is due to the fact that the ladybug is quite small, much smaller than the rest of the image, so even though its color is flashy, K-Means fails to dedicate a cluster to it: as mentioned earlier, K-Means prefers clusters of similar sizes.

Figure 9-12. Image segmentation using K-Means with various numbers of color clusters

That was not too hard, was it? Now let’s look at another application of clustering: preprocessing.

Using Clustering for Preprocessing

Clustering can be an efficient approach to dimensionality reduction, in particular as a preprocessing step before a supervised learning algorithm. For example, let’s tackle the digits dataset which is a simple MNIST-like dataset containing 1,797 grayscale 8×8 images representing digits 0 to 9. First, let’s load the dataset:

fromsklearn.datasetsimportload_digitsX_digits,y_digits=load_digits(return_X_y=True)

Now, let’s split it into a training set and a test set:

fromsklearn.model_selectionimporttrain_test_splitX_train,X_test,y_train,y_test=train_test_split(X_digits,y_digits)

Next, let’s fit a Logistic Regression model:

fromsklearn.linear_modelimportLogisticRegressionlog_reg=LogisticRegression(random_state=42)log_reg.fit(X_train,y_train)

Let’s evaluate its accuracy on the test set:

>>>log_reg.score(X_test,y_test)0.9666666666666667

Okay, that’s our baseline: 96.7% accuracy. Let’s see if we can do better by using K-Means as a preprocessing step. We will create a pipeline that will first cluster the training set into 50 clusters and replace the images with their distances to these 50 clusters, then apply a logistic regression model.

Warning

Although it is tempting to define the number of clusters to 10, since there are 10 different digits, it is unlikely to perform well, because there are several different ways to write each digit.

fromsklearn.pipelineimportPipelinepipeline=Pipeline([("kmeans",KMeans(n_clusters=50)),("log_reg",LogisticRegression()),])pipeline.fit(X_train,y_train)

Now let’s evaluate this classification pipeline:

>>>pipeline.score(X_test,y_test)0.9822222222222222

How about that? We almost divided the error rate by a factor of 2!

But we chose the number of clusters k completely arbitrarily, we can surely do better. Since K-Means is just a preprocessing step in a classification pipeline, finding a good value for k is much simpler than earlier: there’s no need to perform silhouette analysis or minimize the inertia, the best value of k is simply the one that results in the best classification performance during cross-validation. Let’s use GridSearchCV to find the optimal number of clusters:

fromsklearn.model_selectionimportGridSearchCVparam_grid=dict(kmeans__n_clusters=range(2,100))grid_clf=GridSearchCV(pipeline,param_grid,cv=3,verbose=2)grid_clf.fit(X_train,y_train)

Let’s look at best value for k, and the performance of the resulting pipeline:

>>>grid_clf.best_params_{'kmeans__n_clusters': 90}>>>grid_clf.score(X_test,y_test)0.9844444444444445

With k=90 clusters, we get a small accuracy boost, reaching 98.4% accuracy on the test set. Cool!

Using Clustering for Semi-Supervised Learning

Another use case for clustering is in semi-supervised learning, when we have plenty of unlabeled instances and very few labeled instances. Let’s train a logistic regression model on a sample of 50 labeled instances from the digits dataset:

n_labeled=50log_reg=LogisticRegression()log_reg.fit(X_train[:n_labeled],y_train[:n_labeled])

What is the performance of this model on the test set?

>>>log_reg.score(X_test,y_test)0.8266666666666667

The accuracy is just 82.7%: it should come as no surprise that this is much lower than earlier, when we trained the model on the full training set. Let’s see how we can do better. First, let’s cluster the training set into 50 clusters, then for each cluster let’s find the image closest to the centroid. We will call these images the representative images:

k=50kmeans=KMeans(n_clusters=k)X_digits_dist=kmeans.fit_transform(X_train)representative_digit_idx=np.argmin(X_digits_dist,axis=0)X_representative_digits=X_train[representative_digit_idx]

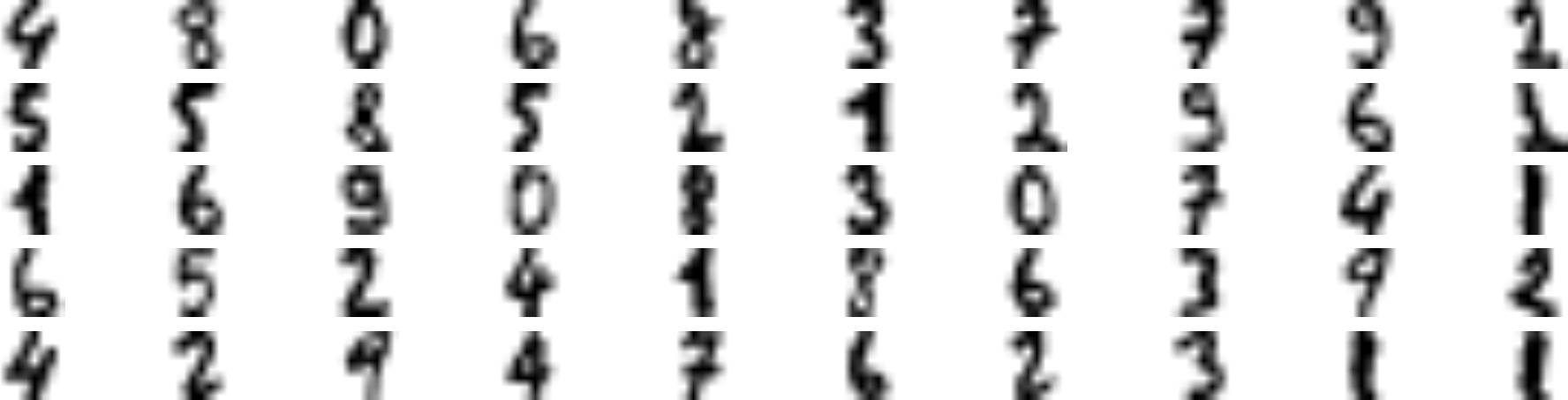

Figure 9-13 shows these 50 representative images:

Figure 9-13. Fifty representative digit images (one per cluster)

Now let’s look at each image and manually label it:

y_representative_digits=np.array([4,8,0,6,8,3,...,7,6,2,3,1,1])

Now we have a dataset with just 50 labeled instances, but instead of being completely random instances, each of them is a representative image of its cluster. Let’s see if the performance is any better:

>>>log_reg=LogisticRegression()>>>log_reg.fit(X_representative_digits,y_representative_digits)>>>log_reg.score(X_test,y_test)0.9244444444444444

Wow! We jumped from 82.7% accuracy to 92.4%, although we are still only training the model on 50 instances. Since it is often costly and painful to label instances, especially when it has to be done manually by experts, it is a good idea to label representative instances rather than just random instances.

But perhaps we can go one step further: what if we propagated the labels to all the other instances in the same cluster? This is called label propagation:

y_train_propagated=np.empty(len(X_train),dtype=np.int32)foriinrange(k):y_train_propagated[kmeans.labels_==i]=y_representative_digits[i]

Now let’s train the model again and look at its performance:

>>>log_reg=LogisticRegression()>>>log_reg.fit(X_train,y_train_propagated)>>>log_reg.score(X_test,y_test)0.9288888888888889

We got a tiny little accuracy boost. Better than nothing, but not astounding. The problem is that we propagated each representative instance’s label to all the instances in the same cluster, including the instances located close to the cluster boundaries, which are more likely to be mislabeled. Let’s see what happens if we only propagate the labels to the 20% of the instances that are closest to the centroids:

percentile_closest=20X_cluster_dist=X_digits_dist[np.arange(len(X_train)),kmeans.labels_]foriinrange(k):in_cluster=(kmeans.labels_==i)cluster_dist=X_cluster_dist[in_cluster]cutoff_distance=np.percentile(cluster_dist,percentile_closest)above_cutoff=(X_cluster_dist>cutoff_distance)X_cluster_dist[in_cluster&above_cutoff]=-1partially_propagated=(X_cluster_dist!=-1)X_train_partially_propagated=X_train[partially_propagated]y_train_partially_propagated=y_train_propagated[partially_propagated]

Now let’s train the model again on this partially propagated dataset:

>>>log_reg=LogisticRegression()>>>log_reg.fit(X_train_partially_propagated,y_train_partially_propagated)>>>log_reg.score(X_test,y_test)0.9422222222222222

Nice! With just 50 labeled instances (only 5 examples per class on average!), we got 94.2% performance, which is pretty close to the performance of logistic regression on the fully labeled digits dataset (which was 96.7%). This is because the propagated labels are actually pretty good, their accuracy is very close to 99%:

>>>np.mean(y_train_partially_propagated==y_train[partially_propagated])0.9896907216494846

Before we move on to Gaussian mixture models, let’s take a look at DBSCAN, another popular clustering algorithm that illustrates a very different approach based on local density estimation. This approach allows the algorithm to identify clusters of arbitrary shapes.

DBSCAN

This algorithm defines clusters as continuous regions of high density. It is actually quite simple:

-

For each instance, the algorithm counts how many instances are located within a small distance ε (epsilon) from it. This region is called the instance’s ε-neighborhood.

-

If an instance has at least

min_samplesinstances in its ε-neighborhood (including itself), then it is considered a core instance. In other words, core instances are those that are located in dense regions. -

All instances in the neighborhood of a core instance belong to the same cluster. This may include other core instances, therefore a long sequence of neighboring core instances forms a single cluster.

-

Any instance that is not a core instance and does not have one in its neighborhood is considered an anomaly.

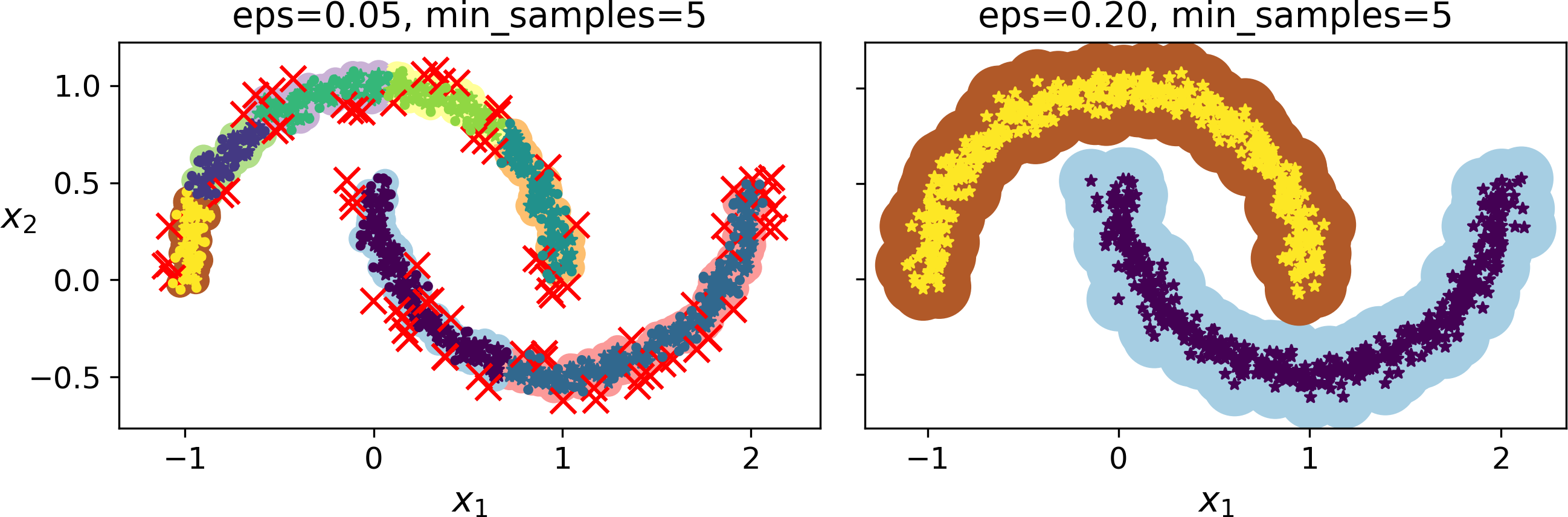

This algorithm works well if all the clusters are dense enough, and they are well separated by low-density regions. The DBSCAN class in Scikit-Learn is as simple to use as you might expect. Let’s test it on the moons dataset, introduced in Chapter 5:

fromsklearn.clusterimportDBSCANfromsklearn.datasetsimportmake_moonsX,y=make_moons(n_samples=1000,noise=0.05)dbscan=DBSCAN(eps=0.05,min_samples=5)dbscan.fit(X)

The labels of all the instances are now available in the labels_ instance variable:

>>>dbscan.labels_array([ 0, 2, -1, -1, 1, 0, 0, 0, ..., 3, 2, 3, 3, 4, 2, 6, 3])

Notice that some instances have a cluster index equal to -1: this means that they are considered as anomalies by the algorithm. The indices of the core instances are available in the core_sample_indices_ instance variable, and the core instances themselves are available in the components_ instance variable:

>>>len(dbscan.core_sample_indices_)808>>>dbscan.core_sample_indices_array([ 0, 4, 5, 6, 7, 8, 10, 11, ..., 992, 993, 995, 997, 998, 999])>>>dbscan.components_array([[-0.02137124, 0.40618608],[-0.84192557, 0.53058695],...[-0.94355873, 0.3278936 ],[ 0.79419406, 0.60777171]])

This clustering is represented in the left plot of Figure 9-14. As you can see, it identified quite a lot of anomalies, plus 7 different clusters. How disappointing! Fortunately, if we widen each instance’s neighborhood by increasing eps to 0.2, we get the clustering on the right, which looks perfect. Let’s continue with this model.

Figure 9-14. DBSCAN clustering using two different neighborhood radiuses

Somewhat surprisingly, the DBSCAN class does not have a predict() method, although it has a fit_predict() method. In other words, it cannot predict which cluster a new instance belongs to. The rationale for this decision is that several classification algorithms could make sense here, and it is easy enough to train one, for example a KNeighborsClassifier:

fromsklearn.neighborsimportKNeighborsClassifierknn=KNeighborsClassifier(n_neighbors=50)knn.fit(dbscan.components_,dbscan.labels_[dbscan.core_sample_indices_])

Now, given a few new instances, we can predict which cluster they most likely belong to, and even estimate a probability for each cluster. Note that we only trained them on the core instances, but we could also have chosen to train them on all the instances, or all but the anomalies: this choice depends on the final task.

>>>X_new=np.array([[-0.5,0],[0,0.5],[1,-0.1],[2,1]])>>>knn.predict(X_new)array([1, 0, 1, 0])>>>knn.predict_proba(X_new)array([[0.18, 0.82],[1. , 0. ],[0.12, 0.88],[1. , 0. ]])

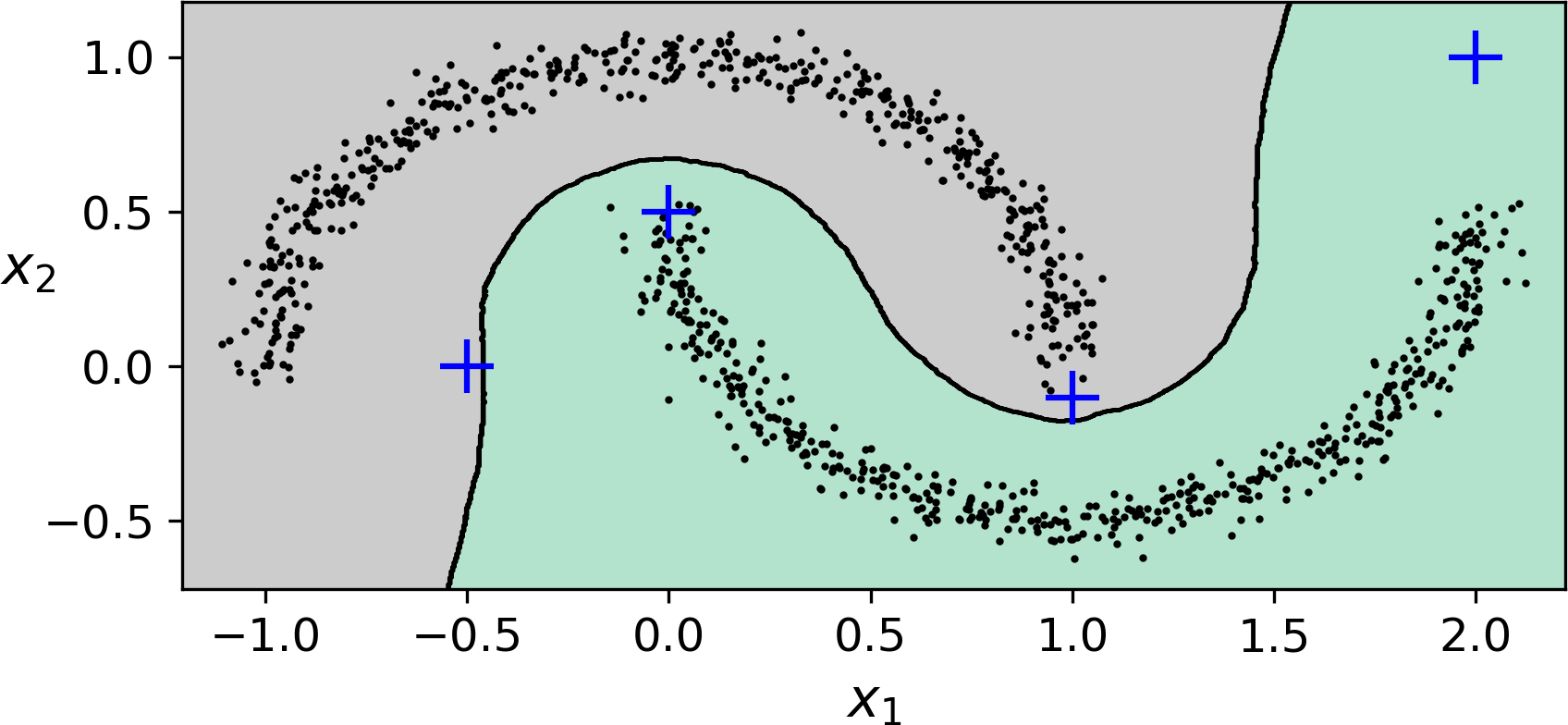

The decision boundary is represented on Figure 9-15 (the crosses represent the 4 instances in X_new). Notice that since there is no anomaly in the KNN’s training set, the classifier always chooses a cluster, even when that cluster is far away. However, it is fairly straightforward to introduce a maximum distance, in which case the two instances that are far away from both clusters are classified as anomalies. To do this, we can use the kneighbors() method of the KNeighborsClassifier: given a set of instances, it returns the distances and the indices of the k nearest neighbors in the training set (two matrices, each with k columns):

>>>y_dist,y_pred_idx=knn.kneighbors(X_new,n_neighbors=1)>>>y_pred=dbscan.labels_[dbscan.core_sample_indices_][y_pred_idx]>>>y_pred[y_dist>0.2]=-1>>>y_pred.ravel()array([-1, 0, 1, -1])

Figure 9-15. cluster_classification_diagram

In short, DBSCAN is a very simple yet powerful algorithm, capable of identifying any number of clusters, of any shape, it is robust to outliers, and it has just two hyperparameters (eps and min_samples). However, if the density varies significantly across the clusters, it can be impossible for it to capture all the clusters properly. Moreover, its computational complexity is roughly O(m log m), making it pretty close to linear with regards to the number of instances. However, Scikit-Learn’s implementation can require up to O(m2) memory if eps is large.

Other Clustering Algorithms

Scikit-Learn implements several more clustering algorithms that you should take a look at. We cannot cover them all in detail here, but here is a brief overview:

-

Agglomerative clustering: a hierarchy of clusters is built from the bottom up. Think of many tiny bubbles floating on water and gradually attaching to each other until there’s just one big group of bubbles. Similarly, at each iteration agglomerative clustering connects the nearest pair of clusters (starting with individual instances). If you draw a tree with a branch for every pair of clusters that merged, you get a binary tree of clusters, where the leaves are the individual instances. This approach scales very well to large numbers of instances or clusters, it can capture clusters of various shapes, it produces a flexible and informative cluster tree instead of forcing you to choose a particular cluster scale, and it can be used with any pairwise distance. It can scale nicely to large numbers of instances if you provide a connectivity matrix. This is a sparse m by m matrix that indicates which pairs of instances are neighbors (e.g., returned by

sklearn.neighbors.kneighbors_graph()). Without a connectivity matrix, the algorithm does not scale well to large datasets. -

Birch: this algorithm was designed specifically for very large datasets, and it can be faster than batch K-Means, with similar results, as long as the number of features is not too large (<20). It builds a tree structure during training containing just enough information to quickly assign each new instance to a cluster, without having to store all the instances in the tree: this allows it to use limited memory, while handle huge datasets.

-

Mean-shift: this algorithm starts by placing a circle centered on each instance, then for each circle it computes the mean of all the instances located within it, and it shifts the circle so that it is centered on the mean. Next, it iterates this mean-shift step until all the circles stop moving (i.e., until each of them is centered on the mean of the instances it contains). This algorithm shifts the circles in the direction of higher density, until each of them has found a local density maximum. Finally, all the instances whose circles have settled in the same place (or close enough) are assigned to the same cluster. This has some of the same features as DBSCAN, in particular it can find any number of clusters of any shape, it has just one hyperparameter (the radius of the circles, called the bandwidth) and it relies on local density estimation. However, it tends to chop clusters into pieces when they have internal density variations. Unfortunately, its computational complexity is O(m2), so it is not suited for large datasets.

-

Affinity propagation: this algorithm uses a voting system, where instances vote for similar instances to be their representatives, and once the algorithm converges, each representative and its voters form a cluster. This algorithm can detect any number of clusters of different sizes. Unfortunately, this algorithm has a computational complexity of O(m2), so it is not suited for large datasets.

-

Spectral clustering: this algorithm takes a similarity matrix between the instances and creates a low-dimensional embedding from it (i.e., it reduces its dimensionality), then it uses another clustering algorithm in this low-dimensional space (Scikit-Learn’s implementation uses K-Means). Spectral clustering can capture complex cluster structures, and it can also be used to cut graphs (e.g., to identify clusters of friends on a social network), however it does not scale well to large number of instances, and it does not behave well when the clusters have very different sizes.

Now let’s dive into Gaussian mixture models, which can be used for density estimation, clustering and anomaly detection.

Gaussian Mixtures

A Gaussian mixture model (GMM) is a probabilistic model that assumes that the instances were generated from a mixture of several Gaussian distributions whose parameters are unknown. All the instances generated from a single Gaussian distribution form a cluster that typically looks like an ellipsoid. Each cluster can have a different ellipsoidal shape, size, density and orientation, just like in Figure 9-11. When you observe an instance, you know it was generated from one of the Gaussian distributions, but you are not told which one, and you do not know what the parameters of these distributions are.

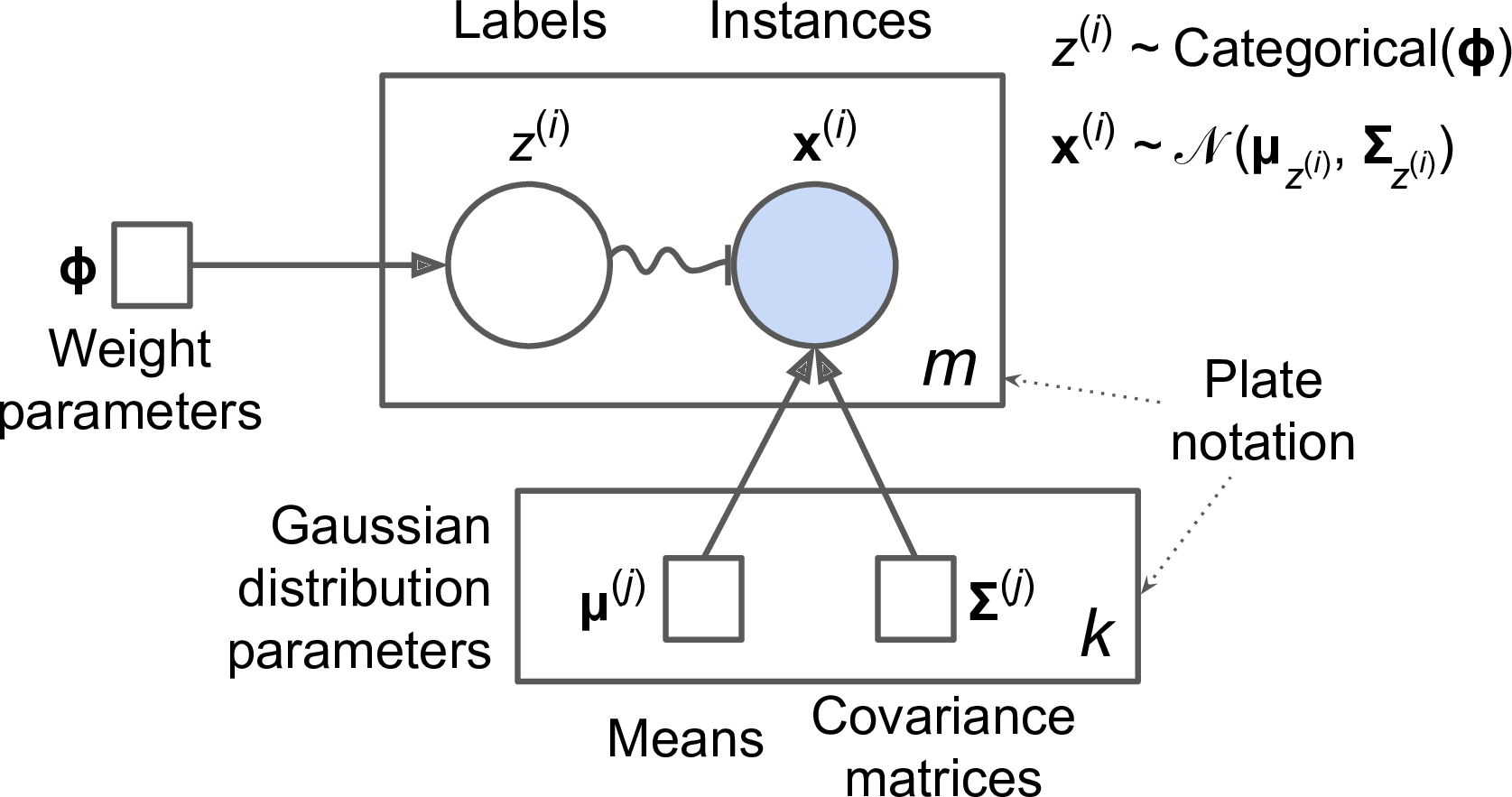

There are several GMM variants: in the simplest variant, implemented in the GaussianMixture class, you must know in advance the number k of Gaussian distributions. The dataset X is assumed to have been generated through the following probabilistic process:

-

For each instance, a cluster is picked randomly among k clusters. The probability of choosing the jth cluster is defined by the cluster’s weight ϕ(j).6 The index of the cluster chosen for the ith instance is noted z(i).

-

If z(i)=j, meaning the ith instance has been assigned to the jth cluster, the location x(i) of this instance is sampled randomly from the Gaussian distribution with mean μ(j) and covariance matrix Σ(j). This is noted .

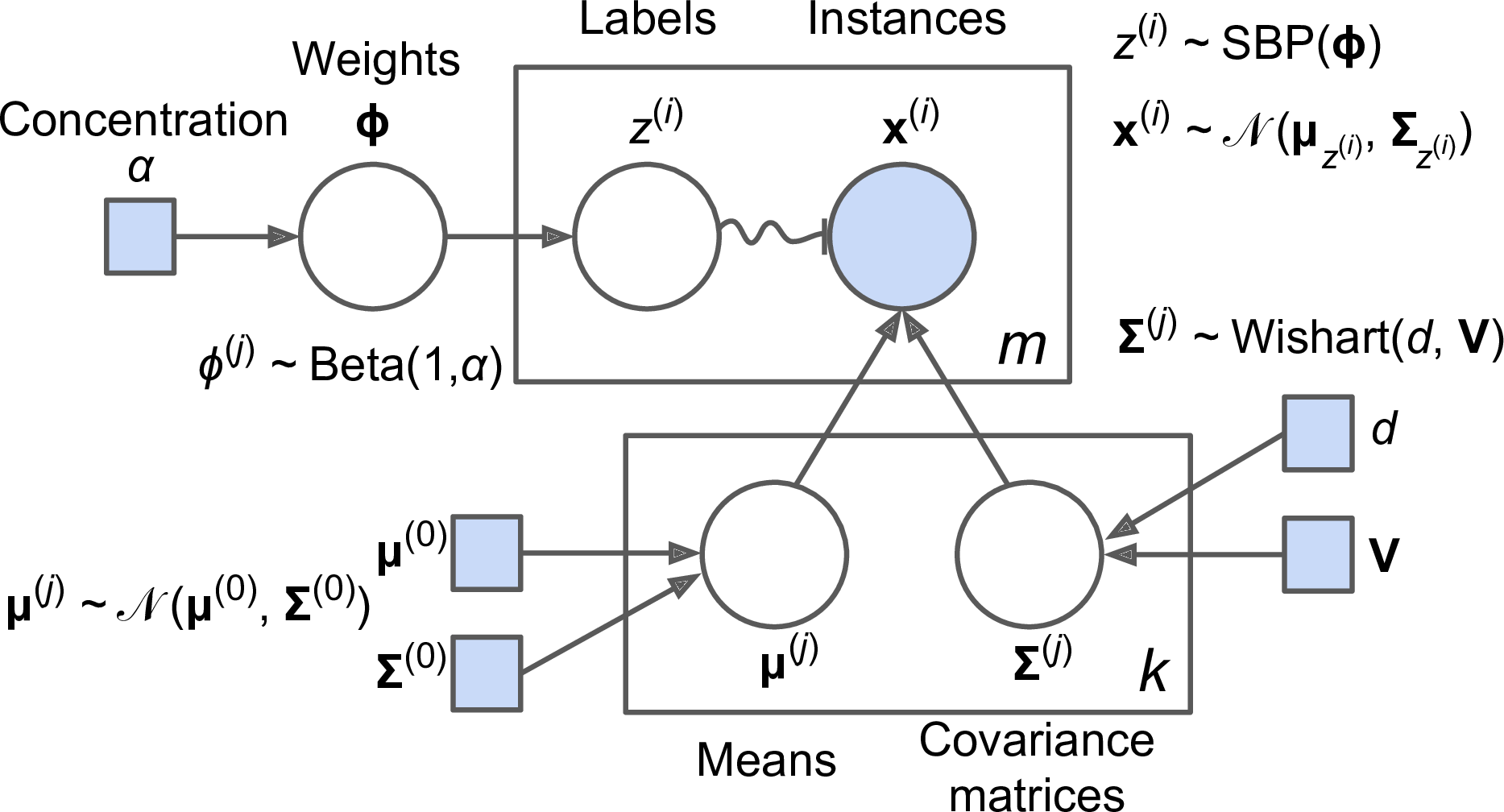

This generative process can be represented as a graphical model (see Figure 9-16). This is a graph which represents the structure of the conditional dependencies between random variables.

Figure 9-16. Gaussian mixture model

Here is how to interpret it:7

-

The circles represent random variables.

-

The squares represent fixed values (i.e., parameters of the model).

-

The large rectangles are called plates: they indicate that their content is repeated several times.

-

The number indicated at the bottom right hand side of each plate indicates how many times its content is repeated, so there are m random variables z(i) (from z(1) to z(m)) and m random variables x(i), and k means μ(j) and k covariance matrices Σ(j), but just one weight vector ϕ (containing all the weights ϕ(1) to ϕ(k)).

-

Each variable z(i) is drawn from the categorical distribution with weights ϕ. Each variable x(i) is drawn from the normal distribution with the mean and covariance matrix defined by its cluster z(i).

-

The solid arrows represent conditional dependencies. For example, the probability distribution for each random variable z(i) depends on the weight vector ϕ. Note that when an arrow crosses a plate boundary, it means that it applies to all the repetitions of that plate, so for example the weight vector ϕ conditions the probability distributions of all the random variables x(1) to x(m).

-

The squiggly arrow from z(i) to x(i) represents a switch: depending on the value of z(i), the instance x(i) will be sampled from a different Gaussian distribution. For example, if z(i)=j, then .

-

Shaded nodes indicate that the value is known, so in this case only the random variables x(i) have known values: they are called observed variables. The unknown random variables z(i) are called latent variables.

So what can you do with such a model? Well, given the dataset X, you typically want to start by estimating the weights ϕ and all the distribution parameters μ(1) to μ(k) and Σ(1) to Σ(k). Scikit-Learn’s GaussianMixture class makes this trivial:

fromsklearn.mixtureimportGaussianMixturegm=GaussianMixture(n_components=3,n_init=10)gm.fit(X)

Let’s look at the parameters that the algorithm estimated:

>>>gm.weights_array([0.20965228, 0.4000662 , 0.39028152])>>>gm.means_array([[ 3.39909717, 1.05933727],[-1.40763984, 1.42710194],[ 0.05135313, 0.07524095]])>>>gm.covariances_array([[[ 1.14807234, -0.03270354],[-0.03270354, 0.95496237]],[[ 0.63478101, 0.72969804],[ 0.72969804, 1.1609872 ]],[[ 0.68809572, 0.79608475],[ 0.79608475, 1.21234145]]])

Great, it worked fine! Indeed, the weights that were used to generate the data were 0.2, 0.4 and 0.4, and similarly, the means and covariance matrices were very close to those found by the algorithm. But how? This class relies on the Expectation-Maximization (EM) algorithm, which has many similarities with the K-Means algorithm: it also initializes the cluster parameters randomly, then it repeats two steps until convergence, first assigning instances to clusters (this is called the expectation step) then updating the clusters (this is called the maximization step). Sounds familiar? Indeed, in the context of clustering you can think of EM as a generalization of K-Means which not only finds the cluster centers (μ(1) to μ(k)), but also their size, shape and orientation (Σ(1) to Σ(k)), as well as their relative weights (ϕ(1) to ϕ(k)). Unlike K-Means, EM uses soft cluster assignments rather than hard assignments: for each instance during the expectation step, the algorithm estimates the probability that it belongs to each cluster (based on the current cluster parameters). Then, during the maximization step, each cluster is updated using all the instances in the dataset, with each instance weighted by the estimated probability that it belongs to that cluster. These probabilities are called the responsibilities of the clusters for the instances. During the maximization step, each cluster’s update will mostly be impacted by the instances it is most responsible for.

Warning

Unfortunately, just like K-Means, EM can end up converging to poor solutions, so it needs to be run several times, keeping only the best solution. This is why we set n_init to 10. Be careful: by default n_init is only set to 1.

You can check whether or not the algorithm converged and how many iterations it took:

>>>gm.converged_True>>>gm.n_iter_3

Okay, now that you have an estimate of the location, size, shape, orientation and relative weight of each cluster, the model can easily assign each instance to the most likely cluster (hard clustering) or estimate the probability that it belongs to a particular cluster (soft clustering). For this, just use the predict() method for hard clustering, or the predict_proba() method for soft clustering:

>>>gm.predict(X)array([2, 2, 1, ..., 0, 0, 0])>>>gm.predict_proba(X)array([[2.32389467e-02, 6.77397850e-07, 9.76760376e-01],[1.64685609e-02, 6.75361303e-04, 9.82856078e-01],[2.01535333e-06, 9.99923053e-01, 7.49319577e-05],...,[9.99999571e-01, 2.13946075e-26, 4.28788333e-07],[1.00000000e+00, 1.46454409e-41, 5.12459171e-16],[1.00000000e+00, 8.02006365e-41, 2.27626238e-15]])

It is a generative model, meaning you can actually sample new instances from it (note that they are ordered by cluster index):

>>>X_new,y_new=gm.sample(6)>>>X_newarray([[ 2.95400315, 2.63680992],[-1.16654575, 1.62792705],[-1.39477712, -1.48511338],[ 0.27221525, 0.690366 ],[ 0.54095936, 0.48591934],[ 0.38064009, -0.56240465]])>>>y_newarray([0, 1, 2, 2, 2, 2])

It is also possible to estimate the density of the model at any given location. This is achieved using the score_samples() method: for each instance it is given, this method estimates the log of the probability density function (PDF) at that location. The greater the score, the higher the density:

>>>gm.score_samples(X)array([-2.60782346, -3.57106041, -3.33003479, ..., -3.51352783,-4.39802535, -3.80743859])

If you compute the exponential of these scores, you get the value of the PDF at the location of the given instances. These are not probabilities, but probability densities: they can take on any positive value, not just between 0 and 1. To estimate the probability that an instance will fall within a particular region, you would have to integrate the PDF over that region (if you do so over the entire space of possible instance locations, the result will be 1).

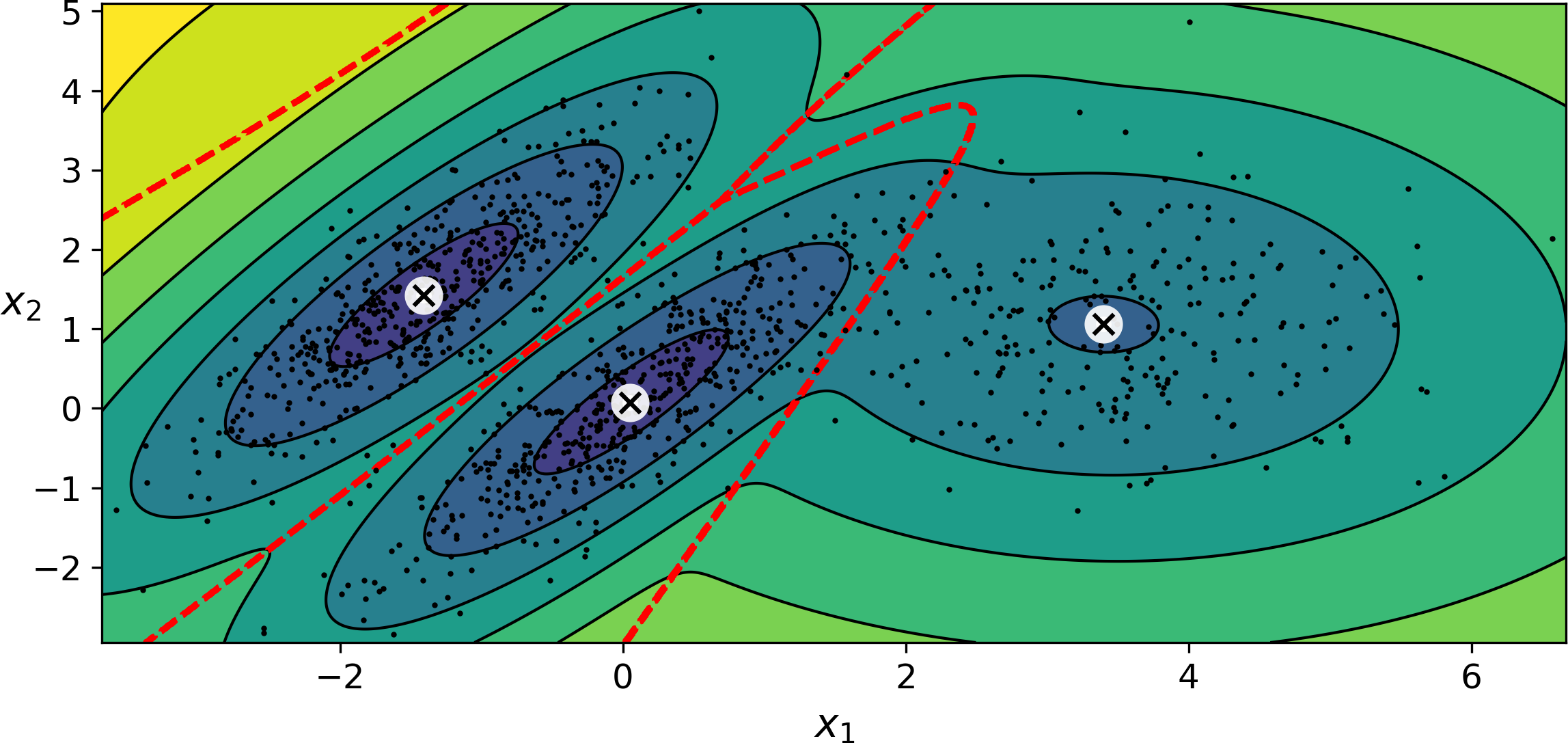

Figure 9-17 shows the cluster means, the decision boundaries (dashed lines), and the density contours of this model:

Figure 9-17. Cluster means, decision boundaries and density contours of a trained Gaussian mixture model

Nice! The algorithm clearly found an excellent solution. Of course, we made its task easy by actually generating the data using a set of 2D Gaussian distributions (unfortunately, real life data is not always so Gaussian and low-dimensional), and we also gave the algorithm the correct number of clusters. When there are many dimensions, or many clusters, or few instances, EM can struggle to converge to the optimal solution. You might need to reduce the difficulty of the task by limiting the number of parameters that the algorithm has to learn: one way to do this is to limit the range of shapes and orientations that the clusters can have. This can be achieved by imposing constraints on the covariance matrices. To do this, just set the covariance_type hyperparameter to one of the following values:

-

"spherical": all clusters must be spherical, but they can have different diameters (i.e., different variances). -

"diag": clusters can take on any ellipsoidal shape of any size, but the ellipsoid’s axes must be parallel to the coordinate axes (i.e., the covariance matrices must be diagonal). -

"tied": all clusters must have the same ellipsoidal shape, size and orientation (i.e., all clusters share the same covariance matrix).

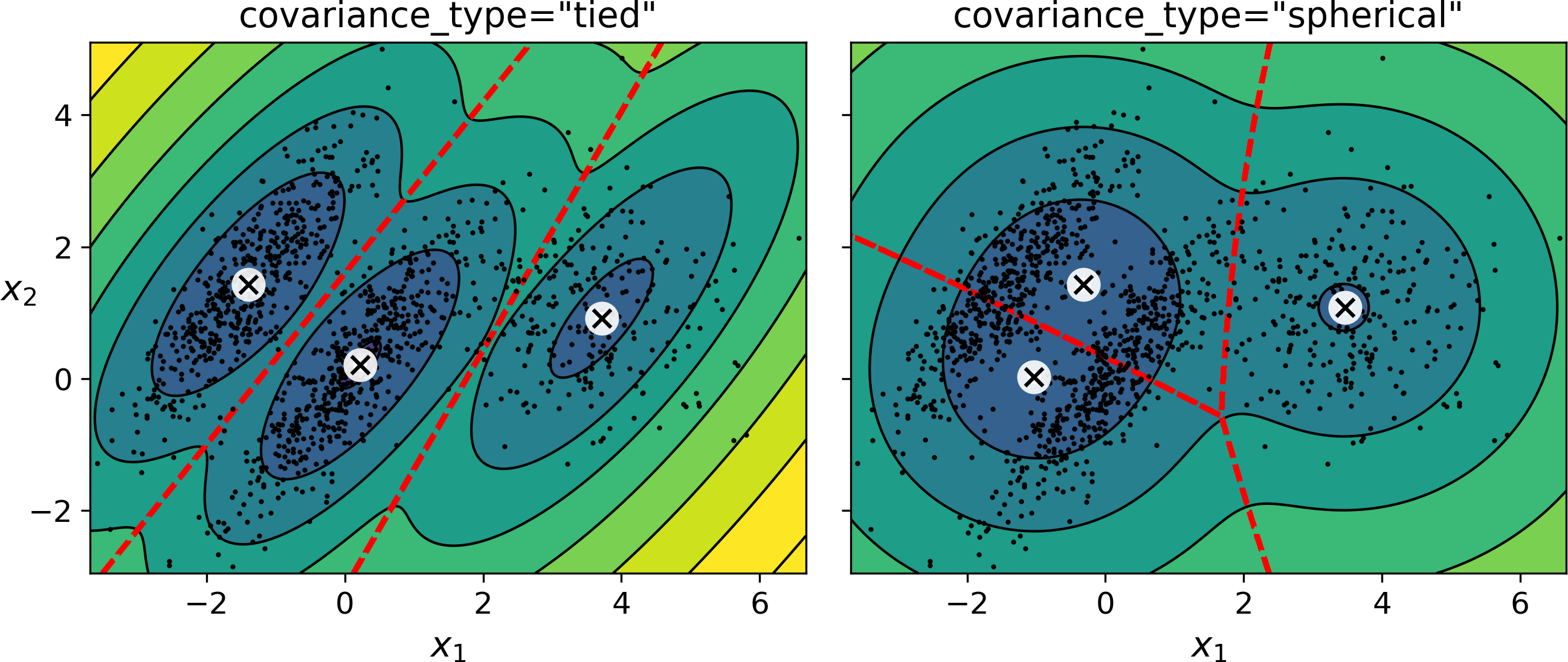

By default, covariance_type is equal to "full", which means that each cluster can take on any shape, size and orientation (it has its own unconstrained covariance matrix). Figure 9-18 plots the solutions found by the EM algorithm when covariance_type is set to "tied" or "spherical“.

Figure 9-18. covariance_type_diagram

Note

The computational complexity of training a GaussianMixture model depends on the number of instances m, the number of dimensions n, the number of clusters k, and the constraints on the covariance matrices. If covariance_type is "spherical or "diag", it is O(kmn), assuming the data has a clustering structure. If covariance_type is "tied" or "full", it is O(kmn2 + kn3), so it will not scale to large numbers of features.

Gaussian mixture models can also be used for anomaly detection. Let’s see how.

Anomaly Detection using Gaussian Mixtures

Anomaly detection (also called outlier detection) is the task of detecting instances that deviate strongly from the norm. These instances are of course called anomalies or outliers, while the normal instances are called inliers. Anomaly detection is very useful in a wide variety of applications, for example in fraud detection, or for detecting defective products in manufacturing, or to remove outliers from a dataset before training another model, which can significantly improve the performance of the resulting model.

Using a Gaussian mixture model for anomaly detection is quite simple: any instance located in a low-density region can be considered an anomaly. You must define what density threshold you want to use. For example, in a manufacturing company that tries to detect defective products, the ratio of defective products is usually well-known. Say it is equal to 4%, then you can set the density threshold to be the value that results in having 4% of the instances located in areas below that threshold density. If you notice that you get too many false positives (i.e., perfectly good products that are flagged as defective), you can lower the threshold. Conversely, if you have too many false negatives (i.e., defective products that the system does not flag as defective), you can increase the threshold. This is the usual precision/recall tradeoff (see Chapter 3). Here is how you would identify the outliers using the 4th percentile lowest density as the threshold (i.e., approximately 4% of the instances will be flagged as anomalies):

densities=gm.score_samples(X)density_threshold=np.percentile(densities,4)anomalies=X[densities<density_threshold]

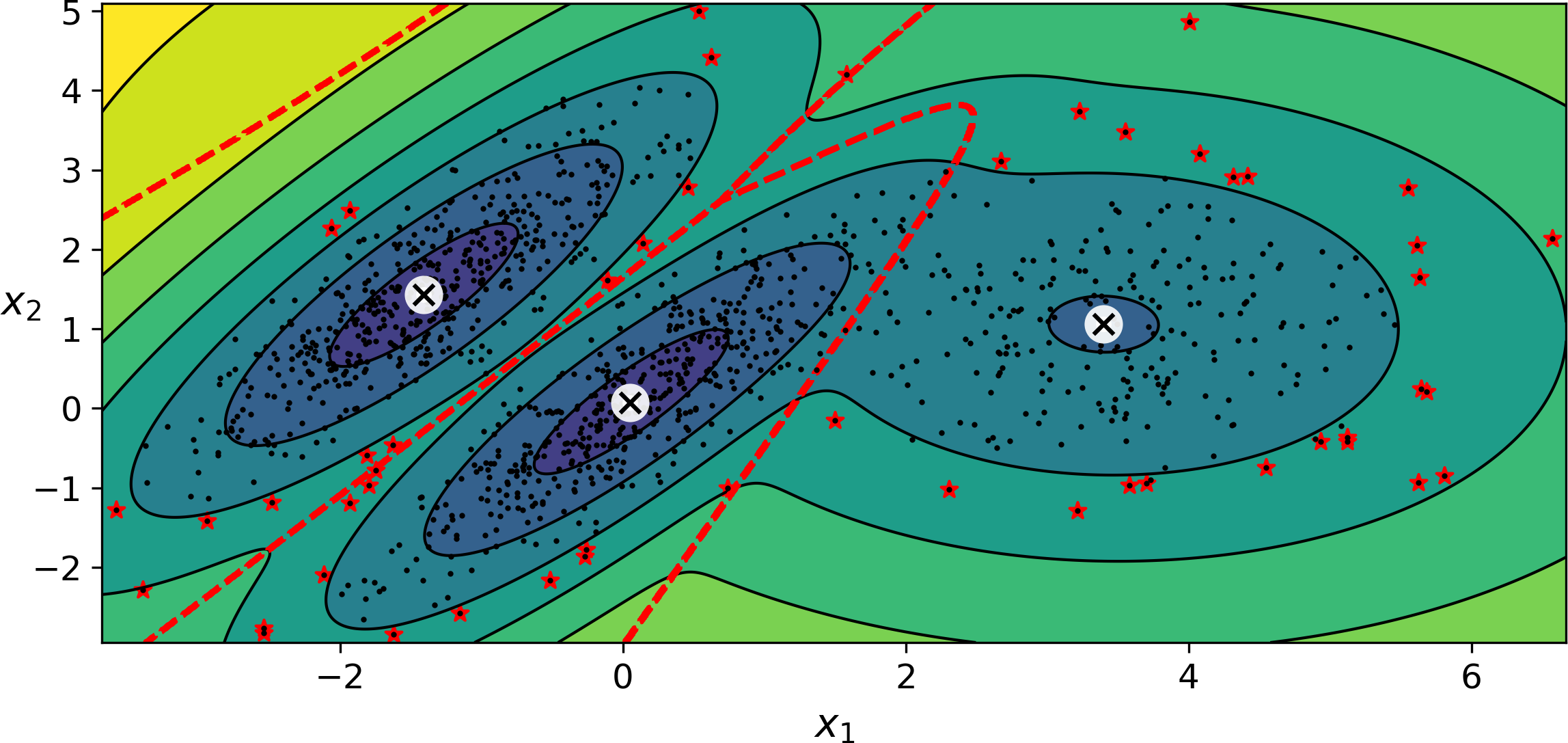

These anomalies are represented as stars on Figure 9-19:

Figure 9-19. Anomaly detection using a Gaussian mixture model

A closely related task is novelty detection: it differs from anomaly detection in that the algorithm is assumed to be trained on a “clean” dataset, uncontaminated by outliers, whereas anomaly detection does not make this assumption. Indeed, outlier detection is often precisely used to clean up a dataset.

Tip

Gaussian mixture models try to fit all the data, including the outliers, so if you have too many of them, this will bias the model’s view of “normality”: some outliers may wrongly be considered as normal. If this happens, you can try to fit the model once, use it to detect and remove the most extreme outliers, then fit the model again on the cleaned up dataset. Another approach is to use robust covariance estimation methods (see the EllipticEnvelope class).

Just like K-Means, the GaussianMixture algorithm requires you to specify the number of clusters. So how can you find it?

Selecting the Number of Clusters

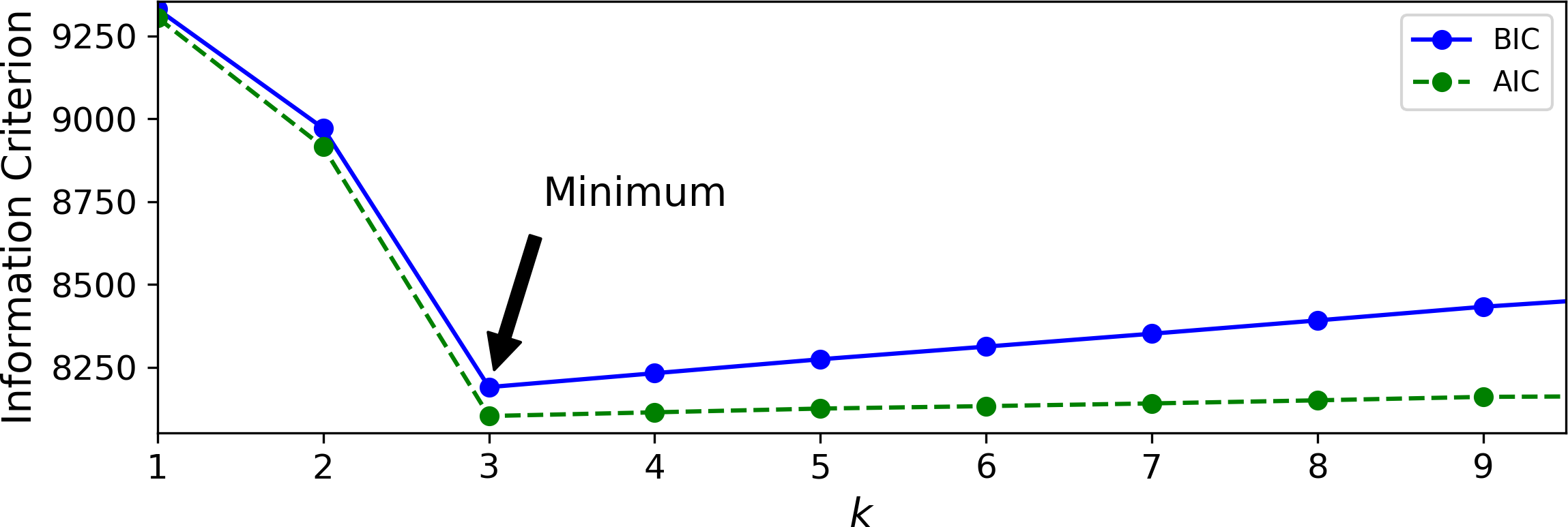

With K-Means, you could use the inertia or the silhouette score to select the appropriate number of clusters, but with Gaussian mixtures, it is not possible to use these metrics because they are not reliable when the clusters are not spherical or have different sizes. Instead, you can try to find the model that minimizes a theoretical information criterion such as the Bayesian information criterion (BIC) or the Akaike information criterion (AIC), defined in Equation 9-1.

Equation 9-1. Bayesian information criterion (BIC) and Akaike information criterion (AIC)

-

m is the number of instances, as always.

-

p is the number of parameters learned by the model.

-

is the maximized value of the likelihood function of the model.

Both the BIC and the AIC penalize models that have more parameters to learn (e.g., more clusters), and reward models that fit the data well. They often end up selecting the same model, but when they differ, the model selected by the BIC tends to be simpler (fewer parameters) than the one selected by the AIC, but it does not fit the data quite as well (this is especially true for larger datasets).

To compute the BIC and AIC, just call the bic() or aic() methods:

>>>gm.bic(X)8189.74345832983>>>gm.aic(X)8102.518178214792

Figure 9-21 shows the BIC for different numbers of clusters k. As you can see, both the BIC and the AIC are lowest when k=3, so it is most likely the best choice. Note that we could also search for the best value for the covariance_type hyperparameter. For example, if it is "spherical" rather than "full", then the model has much fewer parameters to learn, but it does not fit the data as well.

Figure 9-21. AIC and BIC for different numbers of clusters k

Bayesian Gaussian Mixture Models

Rather than manually searching for the optimal number of clusters, it is possible to use instead the BayesianGaussianMixture class which is capable of giving weights equal (or close) to zero to unnecessary clusters. Just set the number of clusters n_components to a value that you have good reason to believe is greater than the optimal number of clusters (this assumes some minimal knowledge about the problem at hand), and the algorithm will eliminate the unnecessary clusters automatically. For example, let’s set the number of clusters to 10 and see what happens:

>>>fromsklearn.mixtureimportBayesianGaussianMixture>>>bgm=BayesianGaussianMixture(n_components=10,n_init=10,random_state=42)>>>bgm.fit(X)>>>np.round(bgm.weights_,2)array([0.4 , 0.21, 0.4 , 0. , 0. , 0. , 0. , 0. , 0. , 0. ])

Perfect: the algorithm automatically detected that only 3 clusters are needed, and the resulting clusters are almost identical to the ones in Figure 9-17.

In this model, the cluster parameters (including the weights, means and covariance matrices) are not treated as fixed model parameters anymore, but as latent random variables, like the cluster assignments (see Figure 9-22). So z now includes both the cluster parameters and the cluster assignments.

Figure 9-22. Bayesian Gaussian mixture model

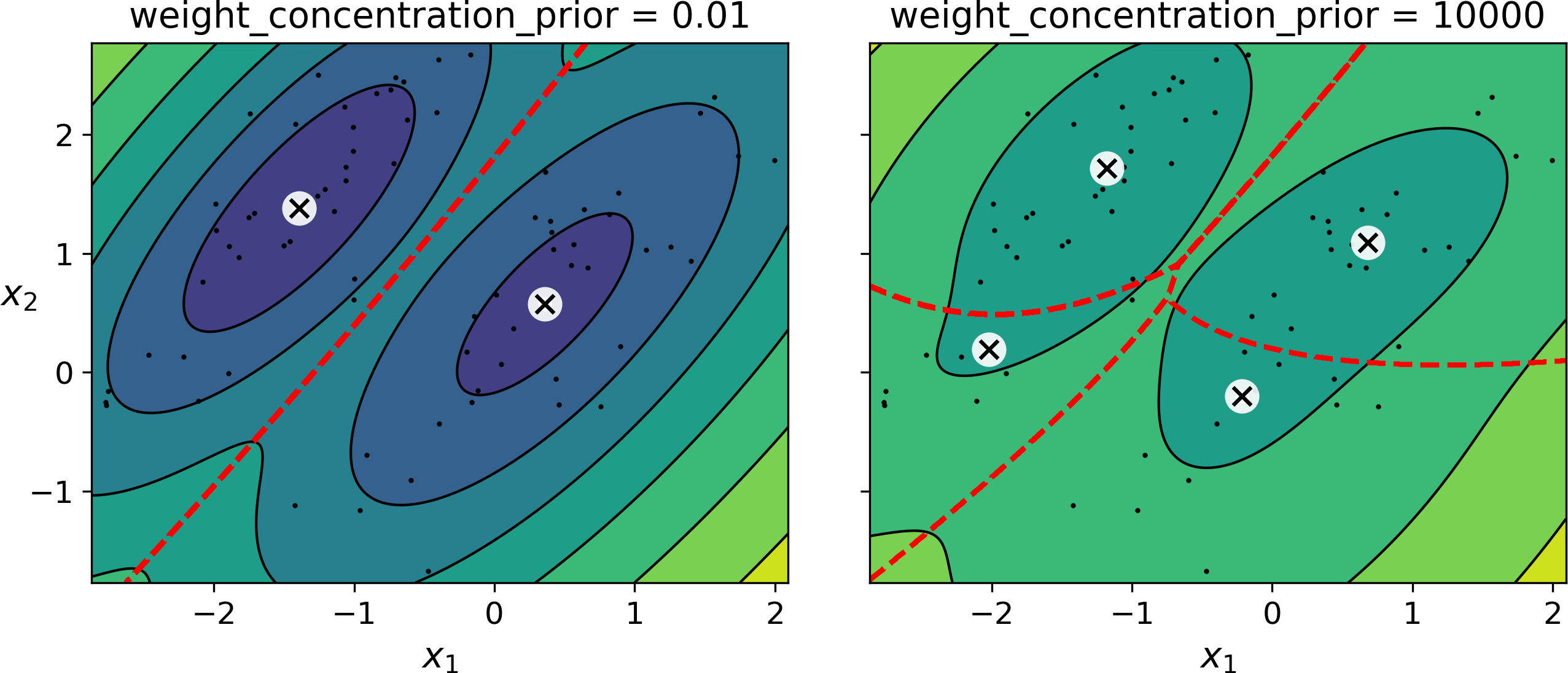

Prior knowledge about the latent variables z can be encoded in a probability distribution p(z) called the prior. For example, we may have a prior belief that the clusters are likely to be few (low concentration), or conversely, that they are more likely to be plentiful (high concentration). This can be adjusted using the weight_concentration_prior hyperparameter. Setting it to 0.01 or 1000 gives very different clusterings (see Figure 9-23). However, the more data we have, the less the priors matter. In fact, to plot diagrams with such large differences, you must use very strong priors and little data.

Figure 9-23. Using different concentration priors

Note

The fact that you see only 3 regions in the right plot although there are 4 centroids is not a bug: the weight of the top-right cluster is much larger than the weight of the lower-right cluster, so the probability that any given point in this region belongs to the top-right cluster is greater than the probability that it belongs to the lower-right cluster, even near the lower-right cluster.

Bayes’ theorem (Equation 9-2) tells us how to update the probability distribution over the latent variables after we observe some data X. It computes the posterior distribution p(z|X), which is the conditional probability of z given X.

Equation 9-2. Bayes’ theorem

Unfortunately, in a Gaussian mixture model (and many other problems), the denominator p(x) is intractable, as it requires integrating over all the possible values of z (Equation 9-3). This means considering all possible combinations of cluster parameters and cluster assignments.

Equation 9-3. The evidence p(X) is often intractable

This is one of the central problems in Bayesian statistics, and there are several approaches to solving it. One of them is variational inference, which picks a family of distributions q(z; λ) with its own variational parameters λ (lambda), then it optimizes these parameters to make q(z) a good approximation of p(z|X). This is achieved by finding the value of λ that minimizes the KL divergence from q(z) to p(z|X), noted DKL(q‖p). The KL divergence equation is shown in (see Equation 9-4), and it can be rewritten as the log of the evidence (log p(X)) minus the evidence lower bound (ELBO). Since the log of the evidence does not depend on q, it is a constant term, so minimizing the KL divergence just requires maximizing the ELBO.

Equation 9-4. KL divergence from q(z) to p(z|X)

In practice, there are different techniques to maximize the ELBO. In mean field variational inference, it is necessary to pick the family of distributions q(z; λ) and the prior p(z) very carefully to ensure that the equation for the ELBO simplifies to a form that can actually be computed. Unfortunately, there is no general way to do this, it depends on the task and requires some mathematical skills. For example, the distributions and lower bound equations used in Scikit-Learn’s BayesianGaussianMixture class are presented in the documentation. From these equations it is possible to derive update equations for the cluster parameters and assignment variables: these are then used very much like in the Expectation-Maximization algorithm. In fact, the computational complexity of the BayesianGaussianMixture class is similar to that of the GaussianMixture class (but generally significantly slower). A simpler approach to maximizing the ELBO is called black box stochastic variational inference (BBSVI): at each iteration, a few samples are drawn from q and they are used to estimate the gradients of the ELBO with regards to the variational parameters λ, which are then used in a gradient ascent step. This approach makes it possible to use Bayesian inference with any kind of model (provided it is differentiable), even deep neural networks: this is called Bayesian deep learning.

Tip

If you want to dive deeper into Bayesian statistics, check out the Bayesian Data Analysis book by Andrew Gelman, John Carlin, Hal Stern, David Dunson, Aki Vehtari, and Donald Rubin.

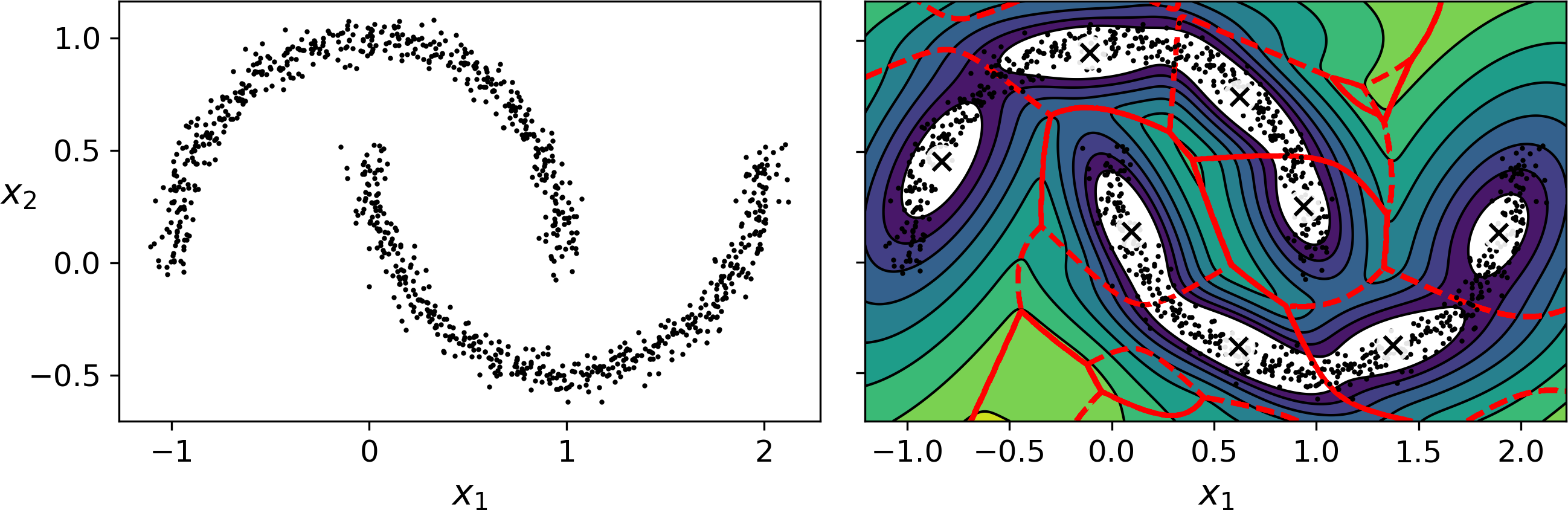

Gaussian mixture models work great on clusters with ellipsoidal shapes, but if you try to fit a dataset with different shapes, you may have bad surprises. For example, let’s see what happens if we use a Bayesian Gaussian mixture model to cluster the moons dataset (see Figure 9-24):

Figure 9-24. moons_vs_bgm_diagram

Oops, the algorithm desperately searched for ellipsoids, so it found 8 different clusters instead of 2. The density estimation is not too bad, so this model could perhaps be used for anomaly detection, but it failed to identify the two moons. Let’s now look at a few clustering algorithms capable of dealing with arbitrarily shaped clusters.

Other Anomaly Detection and Novelty Detection Algorithms

Scikit-Learn also implements a few algorithms dedicated to anomaly detection or novelty detection:

-

Fast-MCD (minimum covariance determinant), implemented by the

EllipticEnvelopeclass: this algorithm is useful for outlier detection, in particular to cleanup a dataset. It assumes that the normal instances (inliers) are generated from a single Gaussian distribution (not a mixture), but it also assumes that the dataset is contaminated with outliers that were not generated from this Gaussian distribution. When it estimates the parameters of the Gaussian distribution (i.e., the shape of the elliptic envelope around the inliers), it is careful to ignore the instances that are most likely outliers. This gives a better estimation of the elliptic envelope, and thus makes it better at identifying the outliers. -

Isolation forest: this is an efficient algorithm for outlier detection, especially in high-dimensional datasets. The algorithm builds a Random Forest in which each Decision Tree is grown randomly: at each node, it picks a feature randomly, then it picks a random threshold value (between the min and max value) to split the dataset in two. The dataset gradually gets chopped into pieces this way, until all instances end up isolated from the other instances. An anomaly is usually far from other instances, so on average (across all the Decision Trees) it tends to get isolated in less steps than normal instances.

-

Local outlier factor (LOF): this algorithm is also good for outlier detection. It compares the density of instances around a given instance to the density around its neighbors. An anomaly is often more isolated than its k nearest neighbors.

-

One-class SVM: this algorithm is better suited for novelty detection. Recall that a kernelized SVM classifier separates two classes by first (implicitly) mapping all the instances to a high-dimensional space, then separating the two classes using a linear SVM classifier within this high-dimensional space (see Chapter 5). Since we just have one class of instances, the one-class SVM algorithm instead tries to separate the instances in high-dimensional space from the origin. In the original space, this will correspond to finding a small region that encompasses all the instances. If a new instance does not fall within this region, it is an anomaly. There are a few hyperparameters to tweak: the usual ones for a kernelized SVM, plus a margin hyperparameter that corresponds to the probability of a new instance being mistakenly considered as novel, when it is in fact normal. It works great, especially with high-dimensional datasets, but just like all SVMs, it does not scale to large datasets.

1 “Least square quantization in PCM,” Stuart P. Lloyd. (1982).

2 “k-means\++: The advantages of careful seeding,” David Arthur and Sergei Vassilvitskii (2006).

3 “Using the Triangle Inequality to Accelerate k-Means,” Charles Elkan (2003).

4 The triangle inequality is AC ≤ AB + BC where A, B and C are three points, and AB, AC and BC are the distances between these points.

5 “Web-Scale K-Means Clustering,” David Sculley (2010).

6 Phi (ϕ or φ) is the 21st letter of the Greek alphabet.

7 Most of these notations are standard, but a few additional notations were taken from the Wikipedia article on plate notation.