A Guide to Configuration Management and Automation

Copyright © 2016 Jo Rhett. All rights reserved.

Printed in the United States of America.

Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472.

O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (http://safaribooksonline.com). For more information, contact our corporate/institutional sales department: 800-998-9938 or corporate@oreilly.com.

See http://oreilly.com/catalog/errata.csp?isbn=9781491907665 for release details.

The O’Reilly logo is a registered trademark of O’Reilly Media, Inc. Learning Puppet 4, the cover image of an European polecat, and related trade dress are trademarks of O’Reilly Media, Inc.

While the publisher and the author have used good faith efforts to ensure that the information and instructions contained in this work are accurate, the publisher and the author disclaim all responsibility for errors or omissions, including without limitation responsibility for damages resulting from the use of or reliance on this work. Use of the information and instructions contained in this work is at your own risk. If any code samples or other technology this work contains or describes is subject to open source licenses or the intellectual property rights of others, it is your responsibility to ensure that your use thereof complies with such licenses and/or rights.

978-1-491-90766-5

[LSI]

I first met Jo Rhett online in the early 2000s. We were both active on the CFengine mailing lists, trying to administer production systems with methodologies that would come to be known as infrastructure as code. At the time, the concept of repeatable, convergent automation tools was fairly edgy, and the community that arose around the space also had its sharp edges. I seem to recall standing up at a LISA config management birds-of-a-feather session circa 2003, pointing at Mark Burgess, CFengine’s author, and shouting, “You ruined my life! But you saved my life!”

Luke Kanies was also a CFengine user at the time, and was consistently exhorting the community to introspect its shortcomings and evolve to the next level. His edginess led to some pretty epic—though mostly collegial—debates, borne out in papers such as Steve Traugott’s “Turing Equivalence in Systems Administration” and Luke’s “ISConf: Theory, Practice, and Beyond.”

Around 2005, Luke began writing a new tool in Ruby that was intended to address the problems with usability and flexibility he was running into with the rest of the ecosystem. Originally called Blink, the thing we know today as Puppet began its infancy as a CFengine “module” (actually a plugin, thus perpetuating an unfortunate relationship with English names that Puppet continues to this day), and Jo and I, along with the rest of the nascent configuration management community, followed its development with interest.

Fast-forward several years through a few iterations of Moore’s law, and witness the resultant explosion in processing power, distributed systems complexity, and their attendant burden on operations folk. Puppet had matured to become the dominant lingua franca of infrastructure; the “tool wars” of the mid-2000s had achieved détente and our focus turned to culture, process, and the wider problems of tying infrastructure operations to business value: in a word, DevOps.

I’d done tens of thousands of infrastructure buildouts on Puppet and eventually came to work at Puppet Labs, the company Luke formed around supporting and productizing Puppet. Jo was consulting at large companies around Silicon Valley, pushing Puppet to its limits and feeding bug fixes and feature requests into the community. As Puppet’s new product manager, this relationship was a little different (and frankly, sometimes far less comfortable, on my end!) than working as peers on the same project, but over the past several years it’s turned out to be hugely positive.

The thing I appreciate most about Jo, which I think shines through in the book you’re about to read, is his sincere desire to help others. This is a core principle of the DevOps movement, usually stated as “sharing” or “empathy,” and Jo’s embodied it since the early days of CFengine. He consistently advocates for the “tough right thing” for the users, and while he will describe a range of possibilities with deep technical acumen and clear-eyed candor, in the end he’ll steer us, the readers, in the direction that’s going to work out the best in the long term.

Puppet’s an amazing tool, but as the saying goes: “With great power comes great responsibility.” Jo’s depth of experience and empathy for other operations engineers make him the right person to show us how to use Puppet’s power responsibly. This book is the result, and I’m deeply grateful to him for writing it.

Twenty years ago, it was common for a single server to provide services for hundreds of users. A system administrator was often responsible for as few as 10 servers. Most people used only one computer.

In 2015, it is common for a normal, everyday person to own and utilize more than five highly advanced and capable computers. Think about the devices you use on a daily basis: every one of them—the phone on your desk, the cell phone on your hip, the tablet you read from, your laptop, and even the car you drive—is thousands of times more powerful and capable than the large, room-sized servers used a few generations ago.

We live today in the midst of an information revolution. Systems capable of powerful computation that once required server rooms to contain are now able to be held in your hands. Explosive growth in the adoption and capabilities of modern technology has created a world full of computers. More and more devices every day contain powerful small computers that participate in the Internet of Things.

When I started my career, it was difficult to convince managers that every worker needed his or her own computer. Today, the workers are outnumbered by computers almost 20:1, and in certain industries by as much as 100:1. Advanced computing capability combined with cheap memory have revolutionized what businesses can accomplish with data. Even small teams of people utilize and depend upon thousands of computers. Every one of these devices needs to be managed. It’s simply not possible to do it all by hand.

For this, we use Puppet.

This book is primarily aimed at system administrators and operations or DevOps engineers. If you are responsible for development or production nodes, this book will provide you with immediately useful tools to make your job easier than ever before. If you run a high-uptime production environment, you’re going to learn how Puppet can enforce standards throughout the implementation. Soon you’ll wonder how you ever got along without it.

No matter what you call yourself, if you feel that you spend too much time managing computers, then this book is for you. You’d like to get it done faster so you can focus on something else. You’d like to do it more consistently, so that you don’t have to chase down one-off problems in your reports. Or you’ve got some new demands that you’re looking for a way to solve. If any of these statements fit, Puppet will be one of the best tools in your toolbox.

This book will not be a heavy tome filled with reference material irrelevant to the day-to-day system administrator—exactly the opposite. Throughout this book we will never stray from one simple goal: using Puppet to manage real-life administration concerns.

This book will never tell you to run a script and not tell you what it does, or why. I hate trying to determine what an installation script did, and I won’t do this to you. In this book you will build up the entire installation by hand. Every step you take will be useful to you in a production deployment. You’ll know where every configuration file lives. You’ll learn every essential configuration parameter and what it means.

By the time you have finished this book, you’ll know how Puppet works inside and out. You will have the tools and knowledge to deploy Puppet seamlessly throughout your environment.

You may use any modern Linux, Mac, or Windows system and successfully follow the hands-on tutorials in this book.

While there are some web dashboards for Puppet, the process of configuring and running Puppet will be performed through the command line. We will help you install all necessary software.

A beginner to system administration can follow every tutorial in this book. Any experience with scripts, coding, or configuration management will enhance what you can get out of this book, but is not necessary. It is entirely possible to deploy Puppet to manage complex environments without writing a single line of code.

Part II documents how to build custom modules for Puppet. You will create modules using the Puppet configuration language that will be taught in this book. When you’ve become an expert in building Puppet modules, you may want to add new extensions to the Puppet configuration language. Some extensions are currently only supported in the Ruby language:

puppetReference materials such as Michael Fitzgerald’s Learning Ruby can be helpful when creating extensions for a custom Puppet module.

The Introduction provides an overview of what Puppet does, how it works, and why you want to use it.

Part I will get you up and running with a working Puppet installation. You will learn how to write declarative Puppet policies to produce consistency in your systems. This part will also cover the changes to the language in Puppet 4.

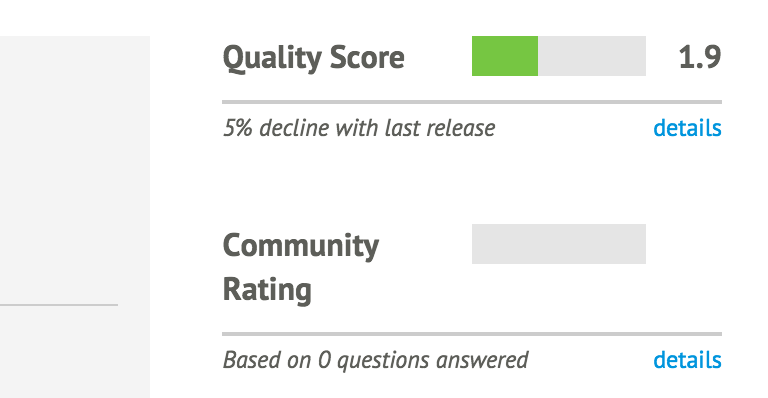

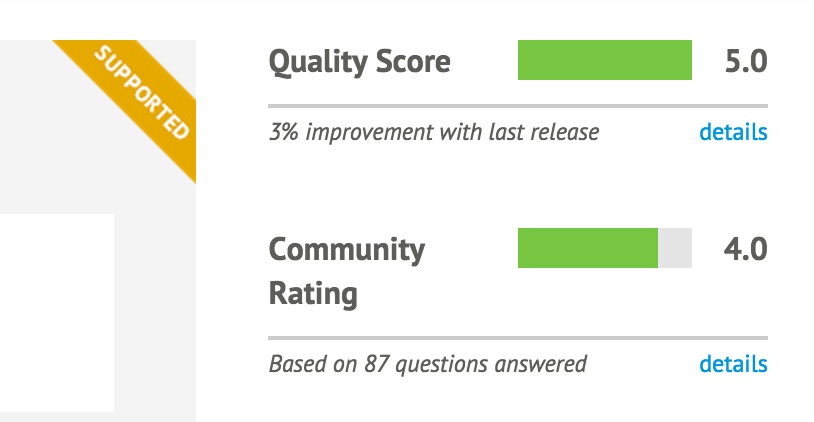

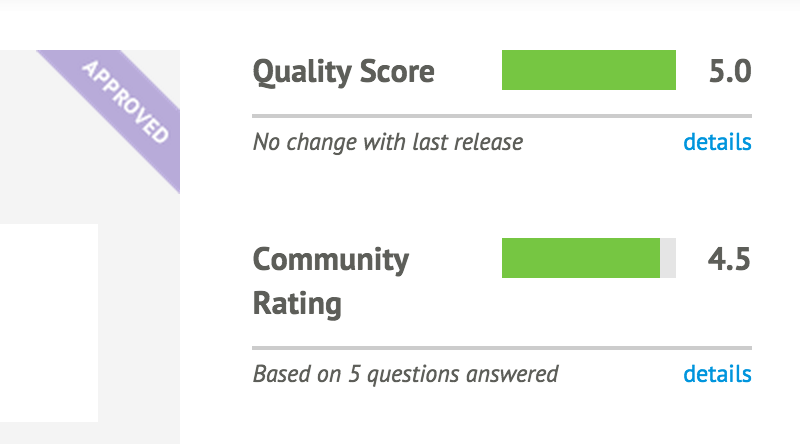

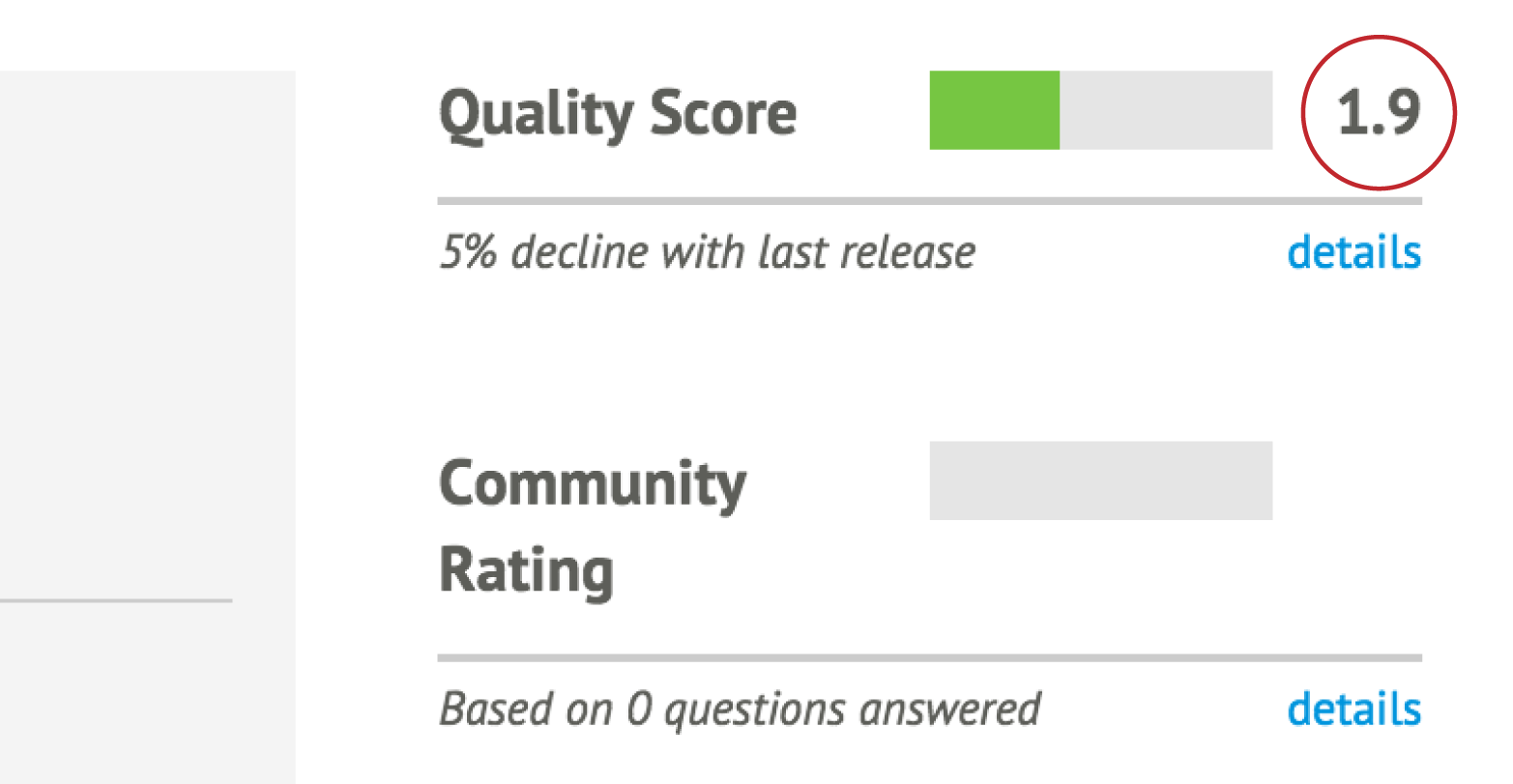

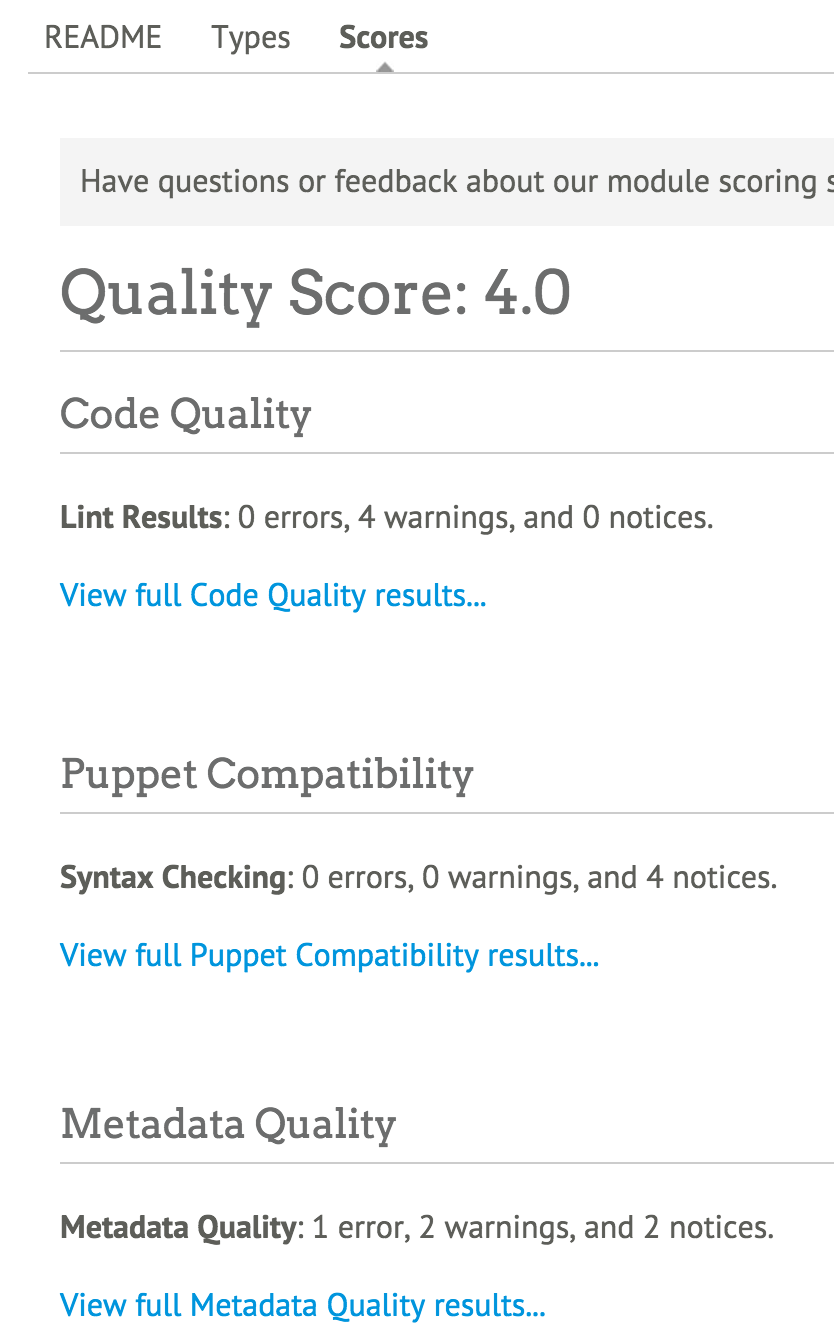

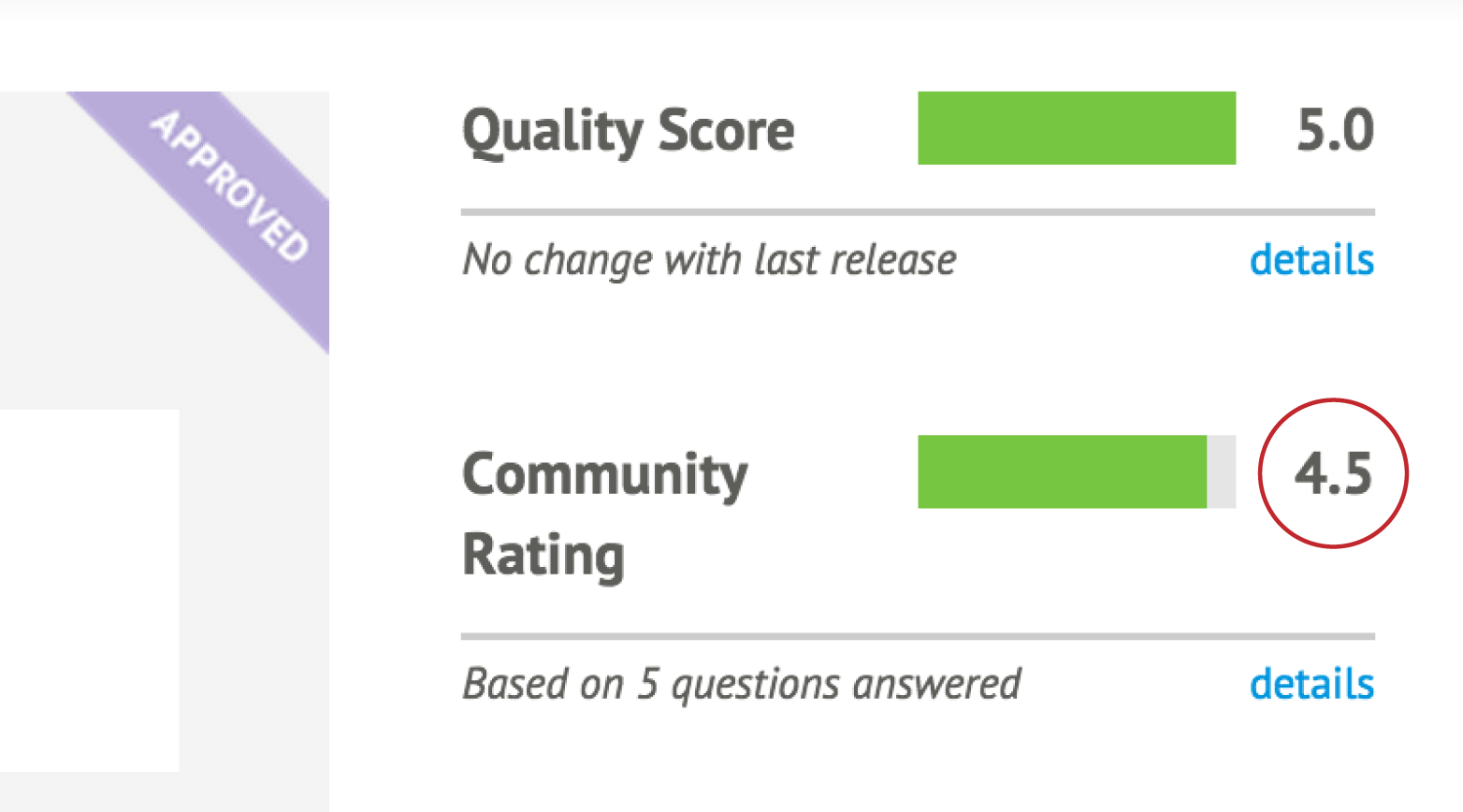

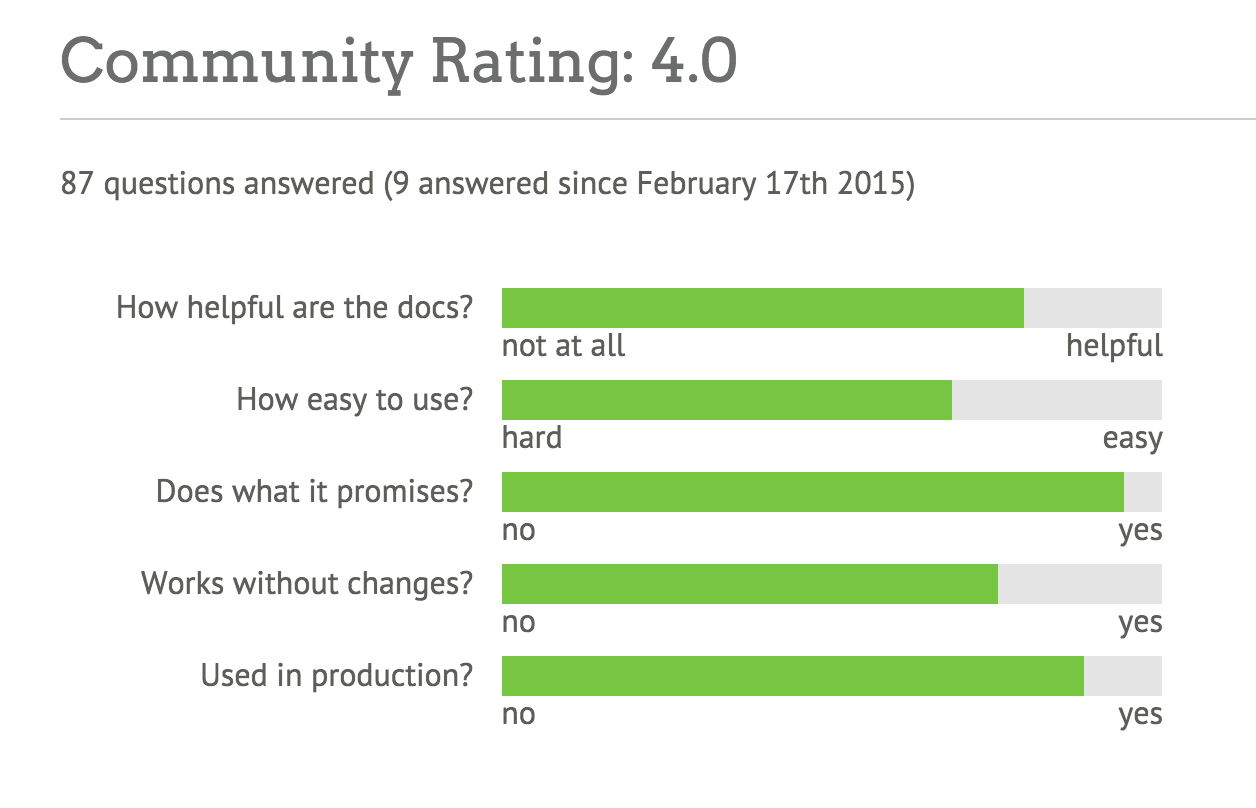

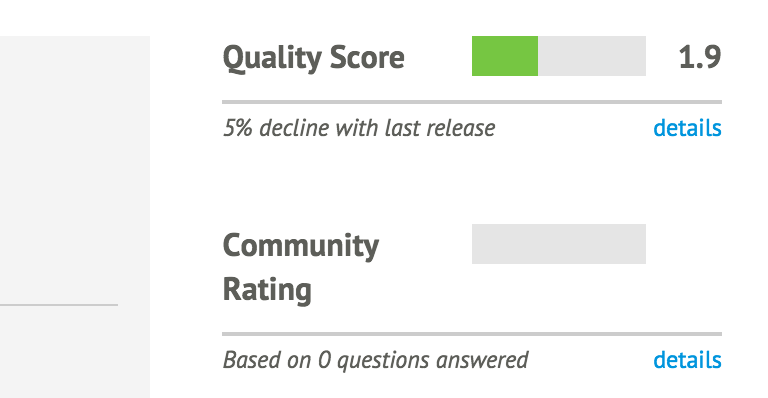

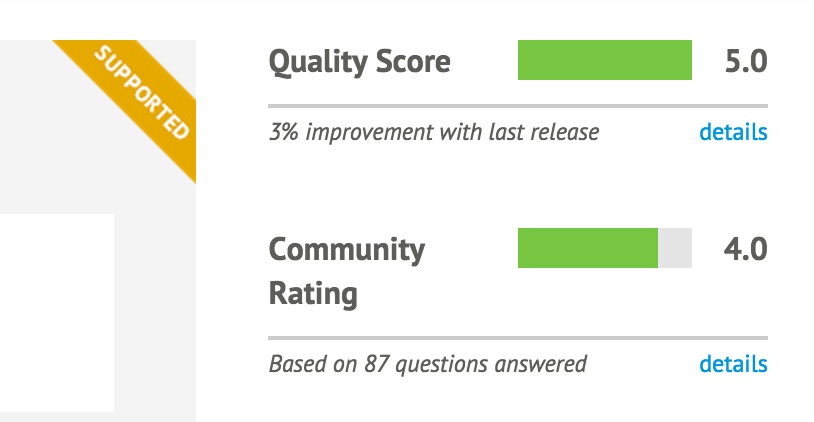

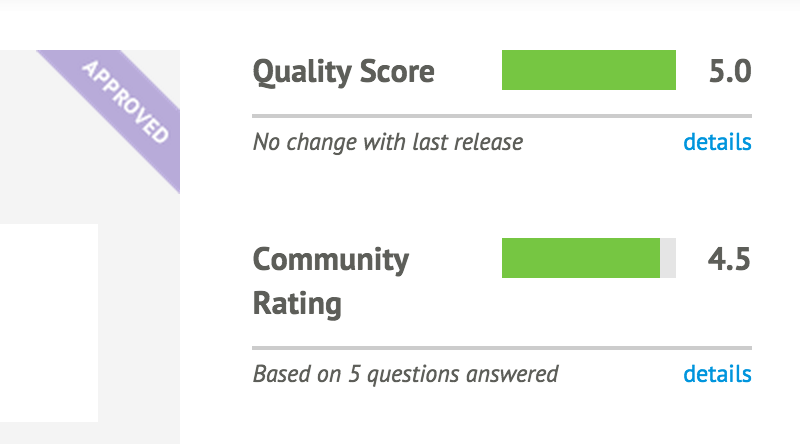

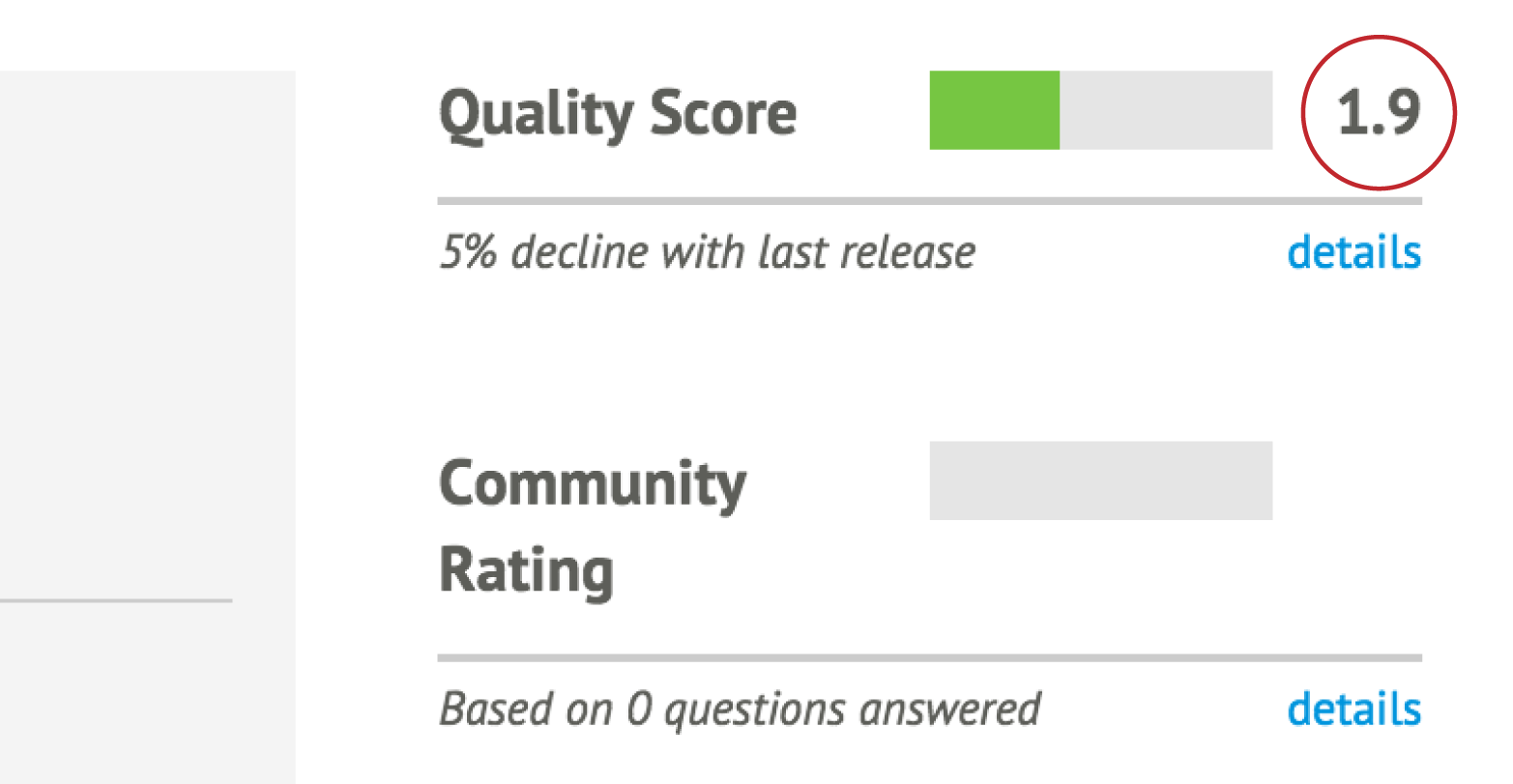

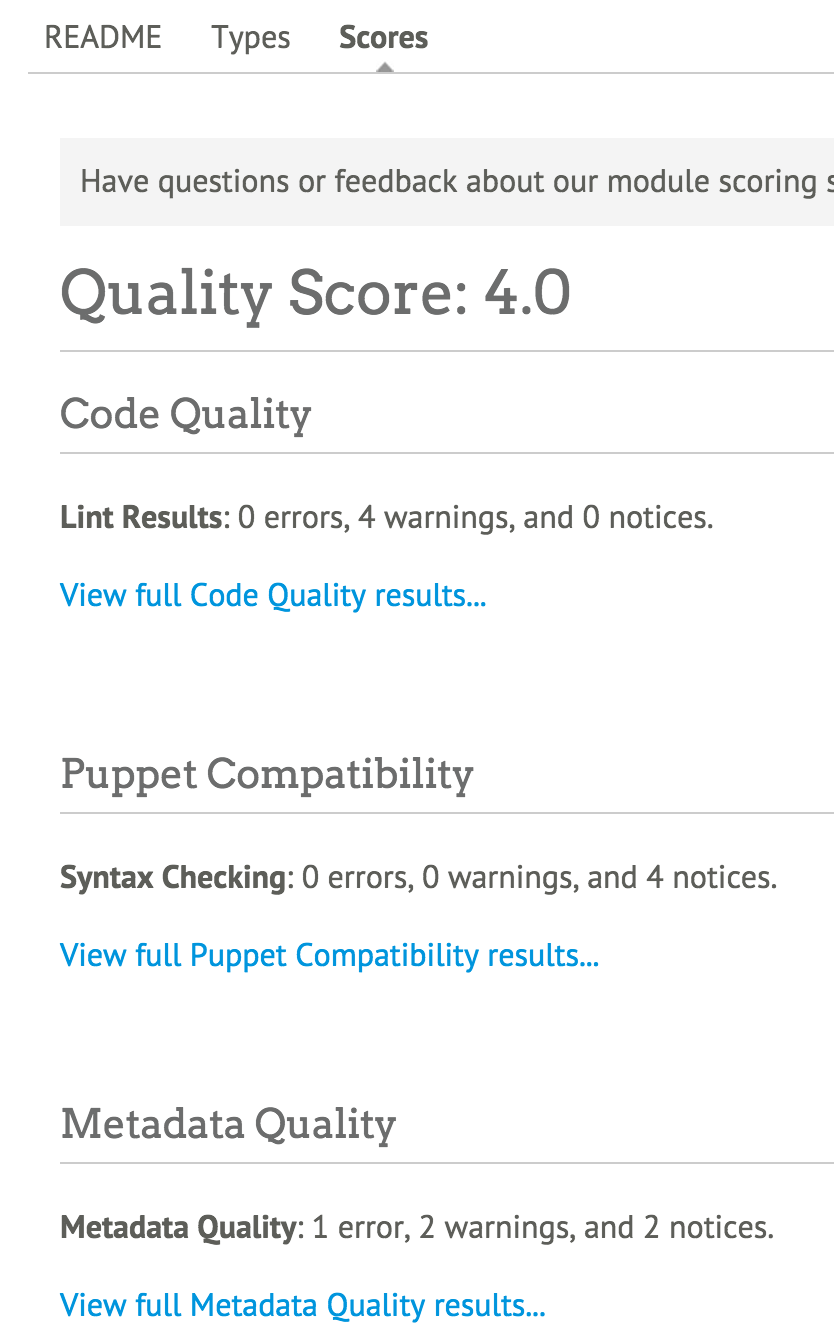

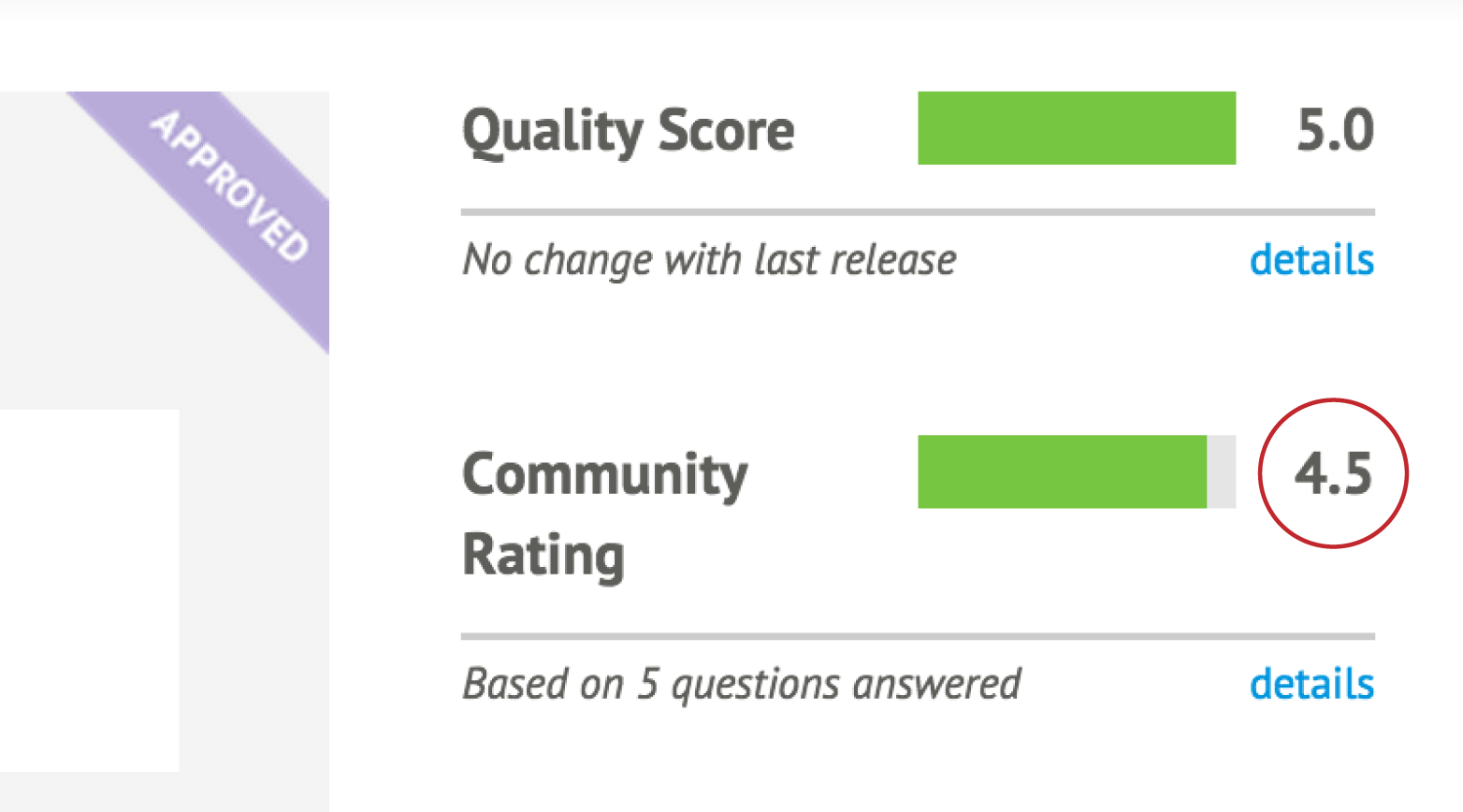

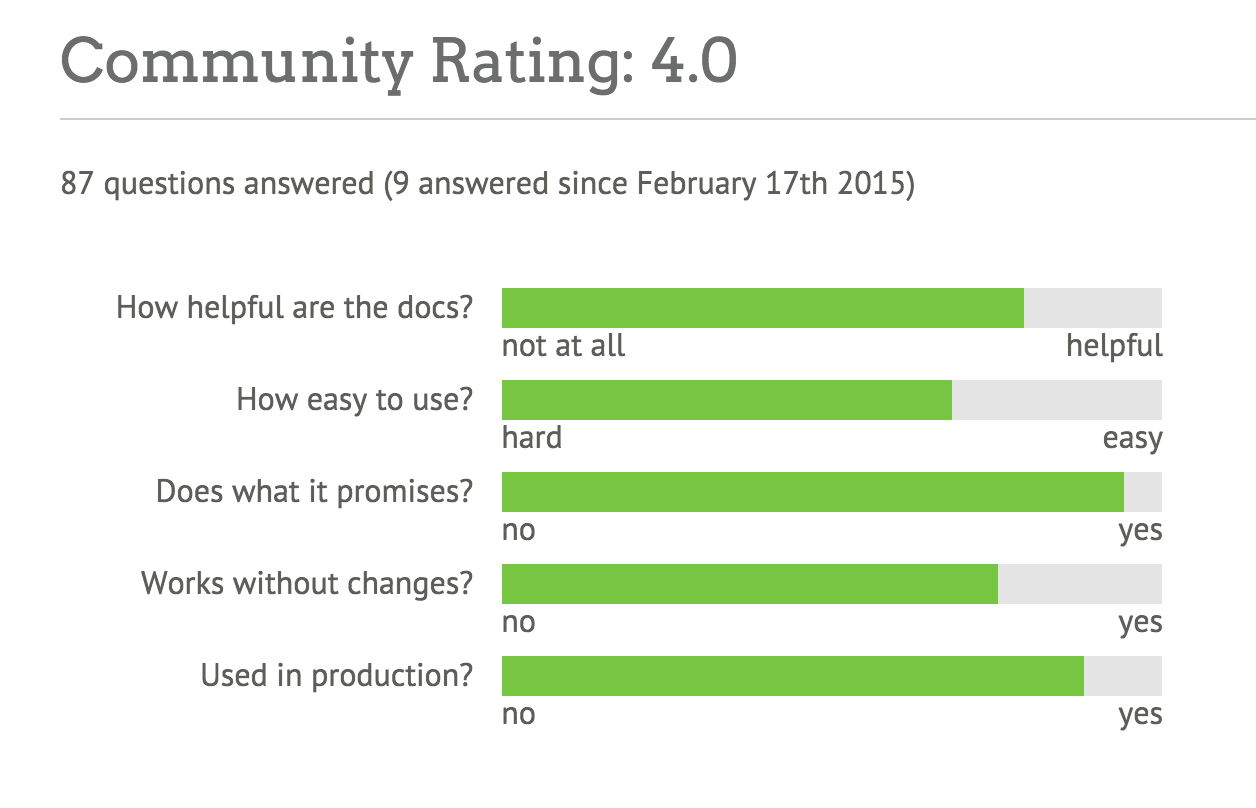

Part II will introduce you to Puppet modules, the building blocks used for Puppet policies. You will learn how to find and evaluate Puppet modules. You’ll learn how to distinguish Puppet Supported and Puppet Approved modules. You’ll learn tips for managing configuration data in Hiera. Finally, you’ll learn how to build, test, and publish your own Puppet modules.

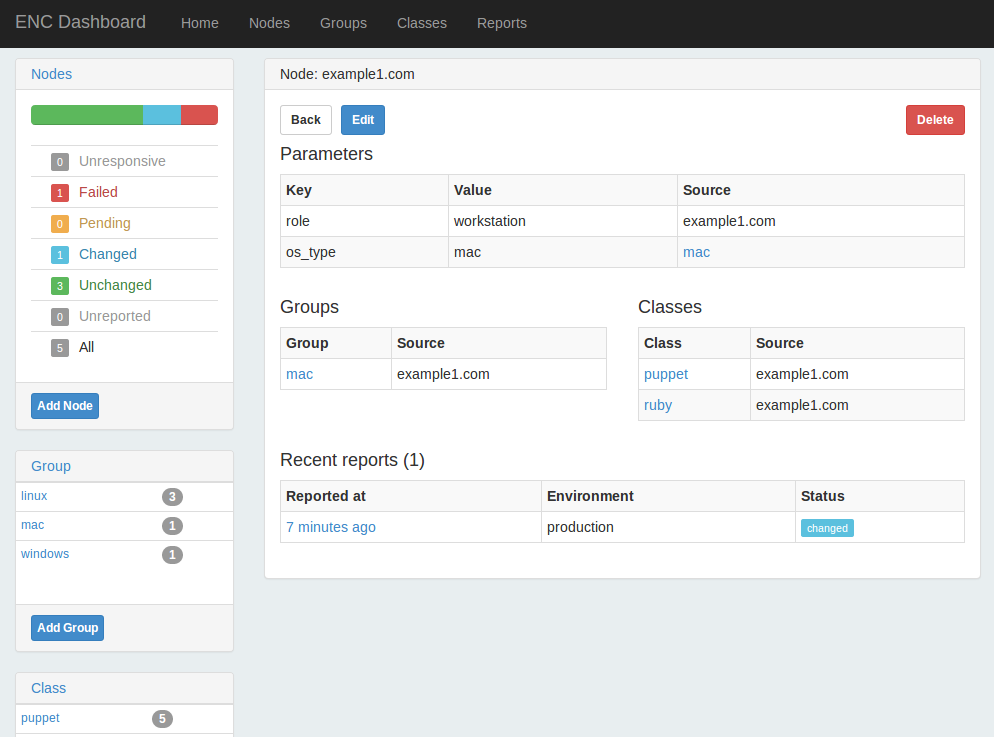

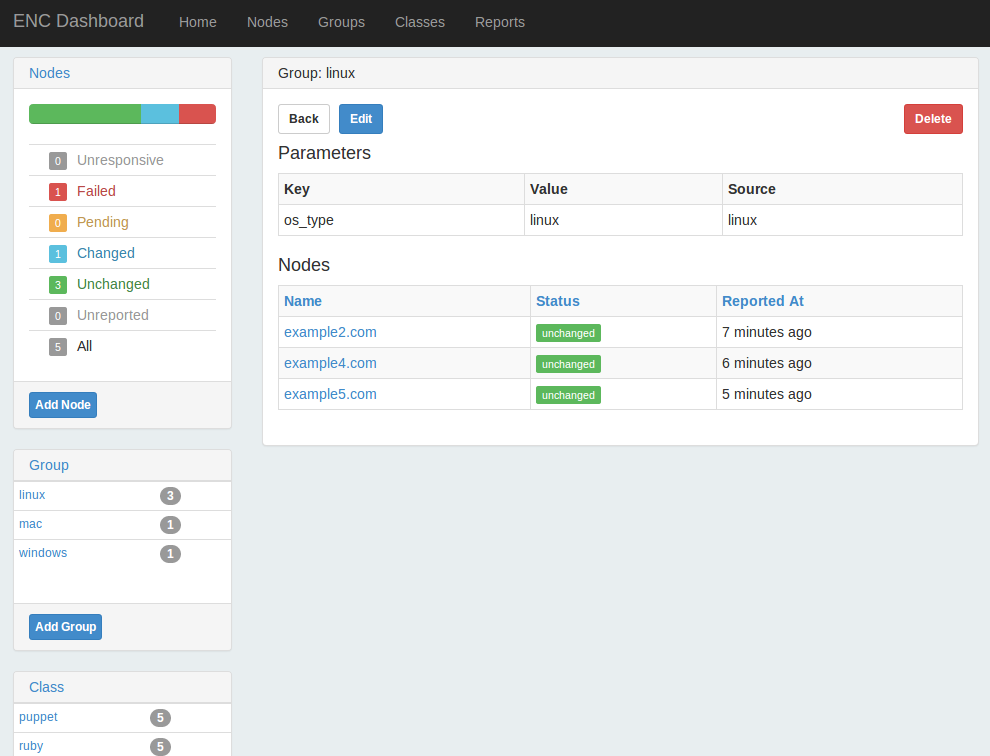

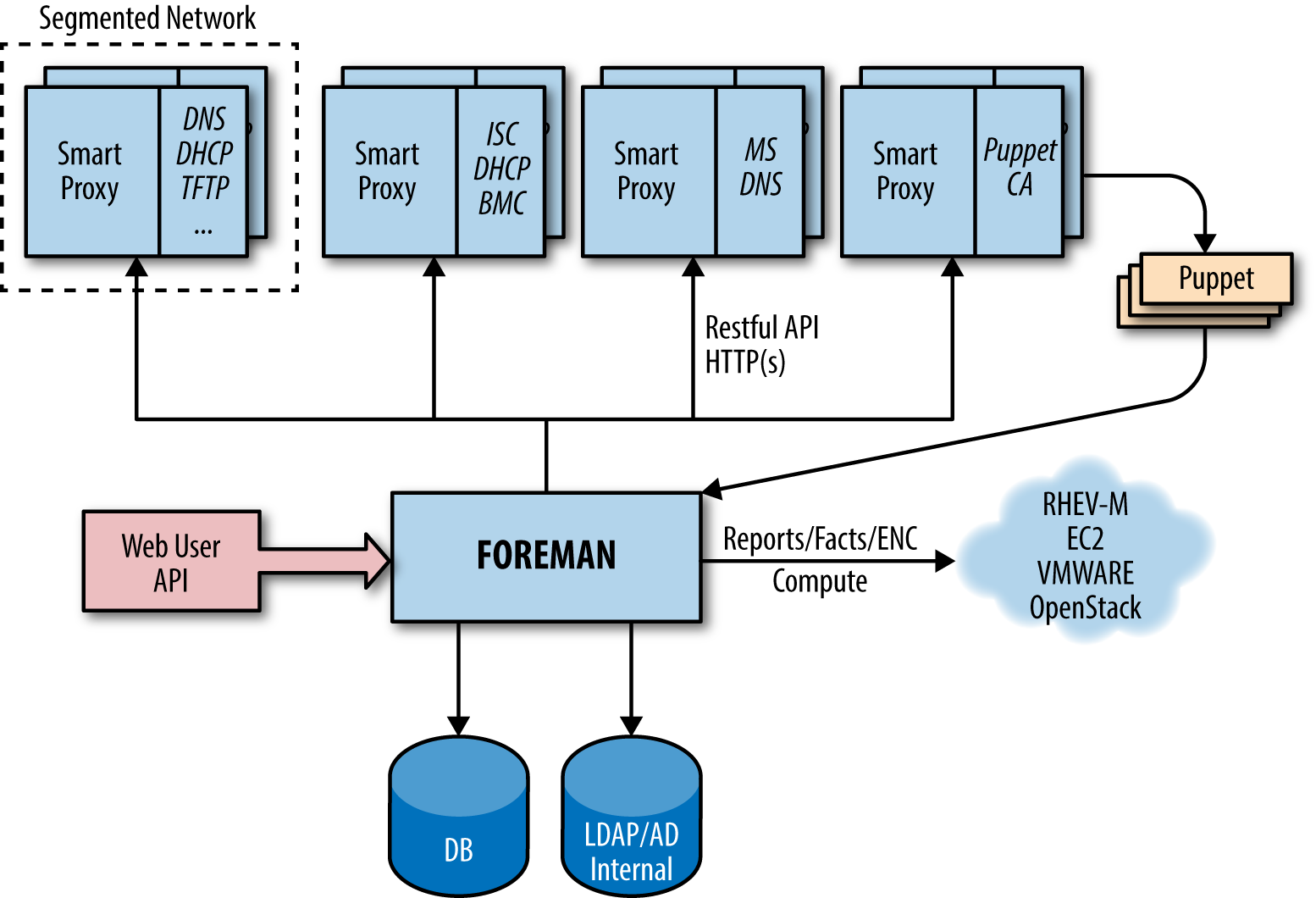

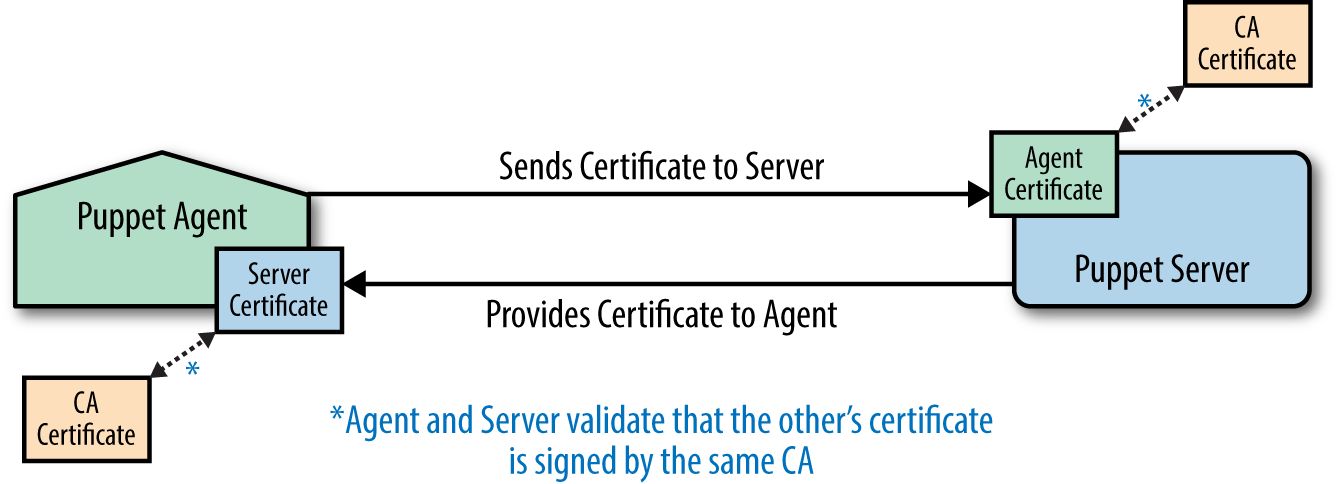

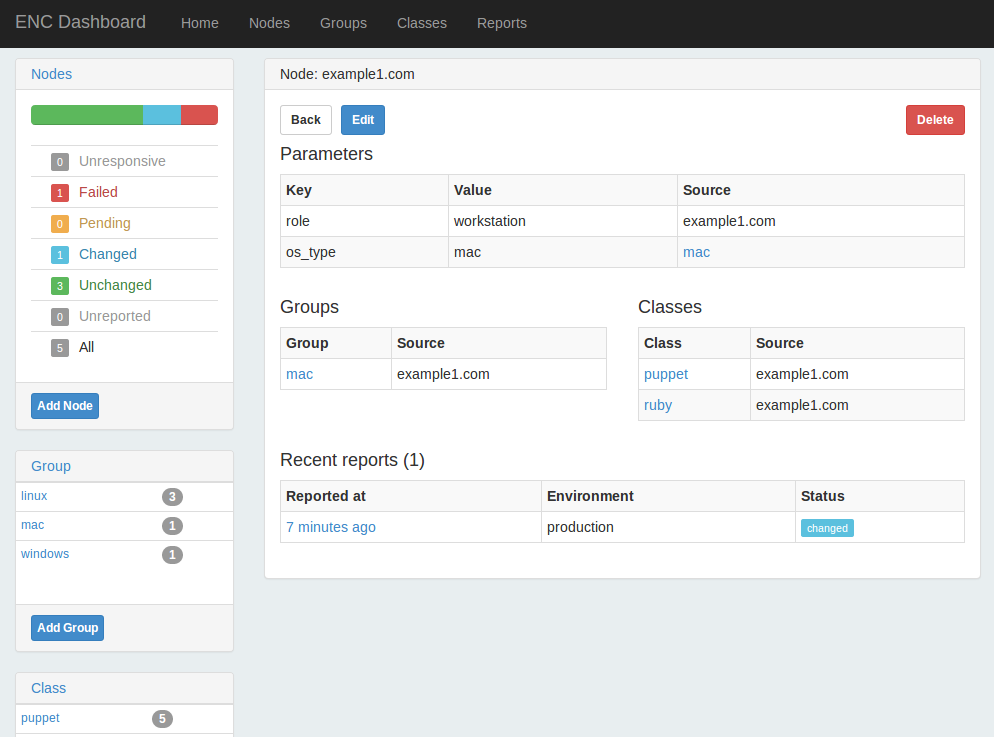

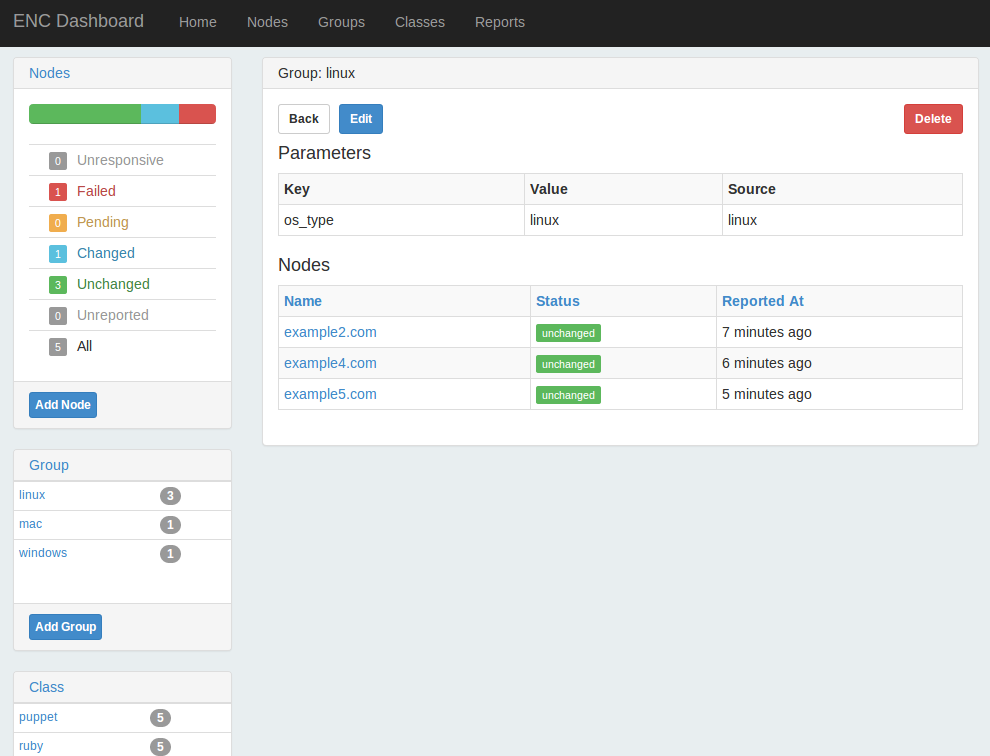

Part III will help you install the new Puppet Server and the deprecated but stable Puppet master. You’ll learn how to centralize the certificate authority, or use a third-party provider. You will configure an external node classifier (ENC). You’ll find advice and gain experience on how to scale Puppet servers for high availability and performance.

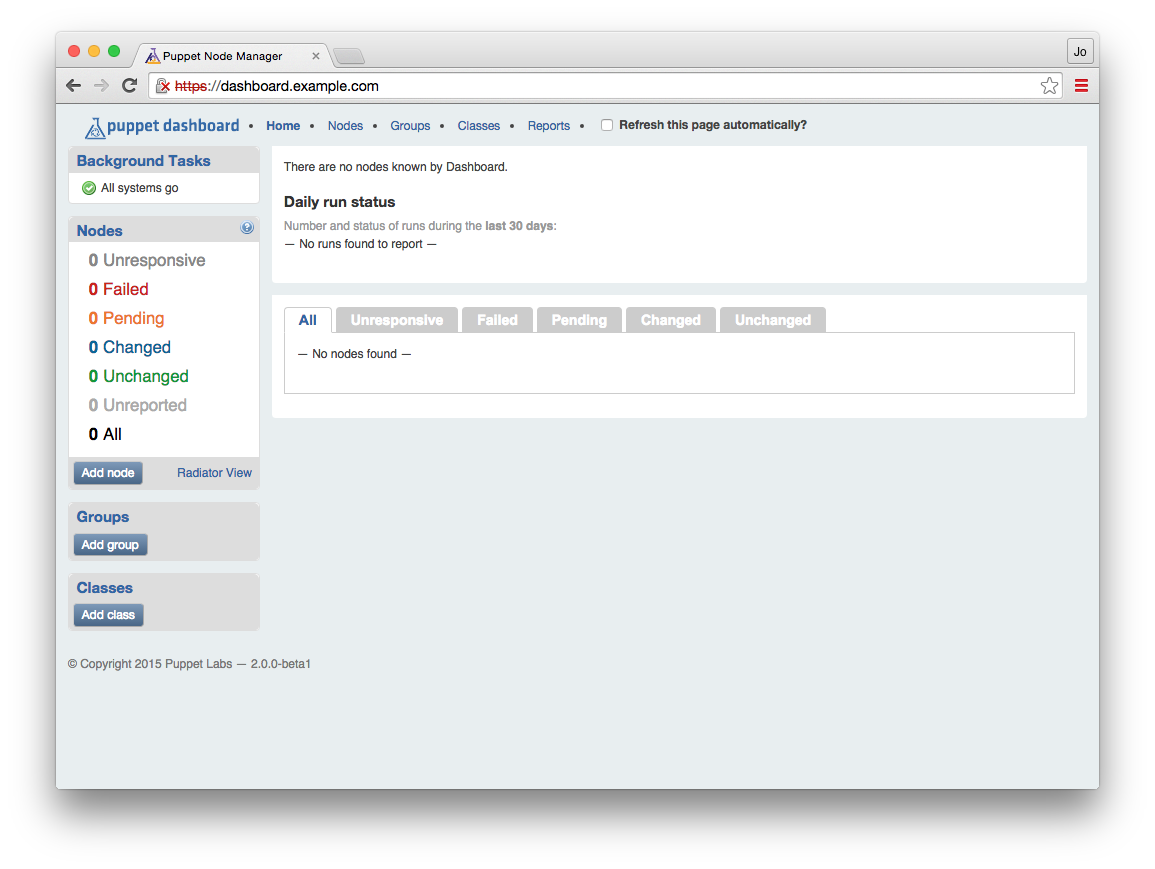

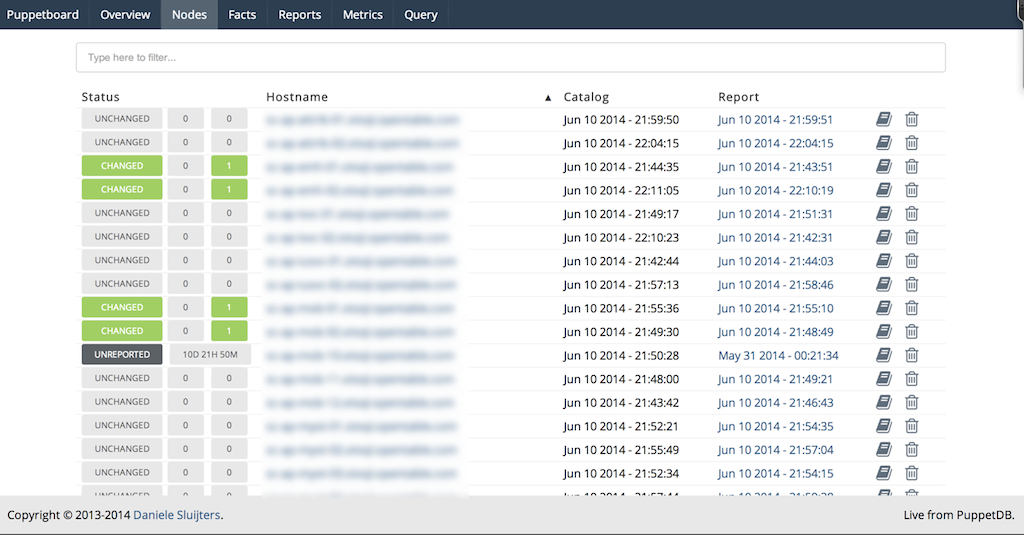

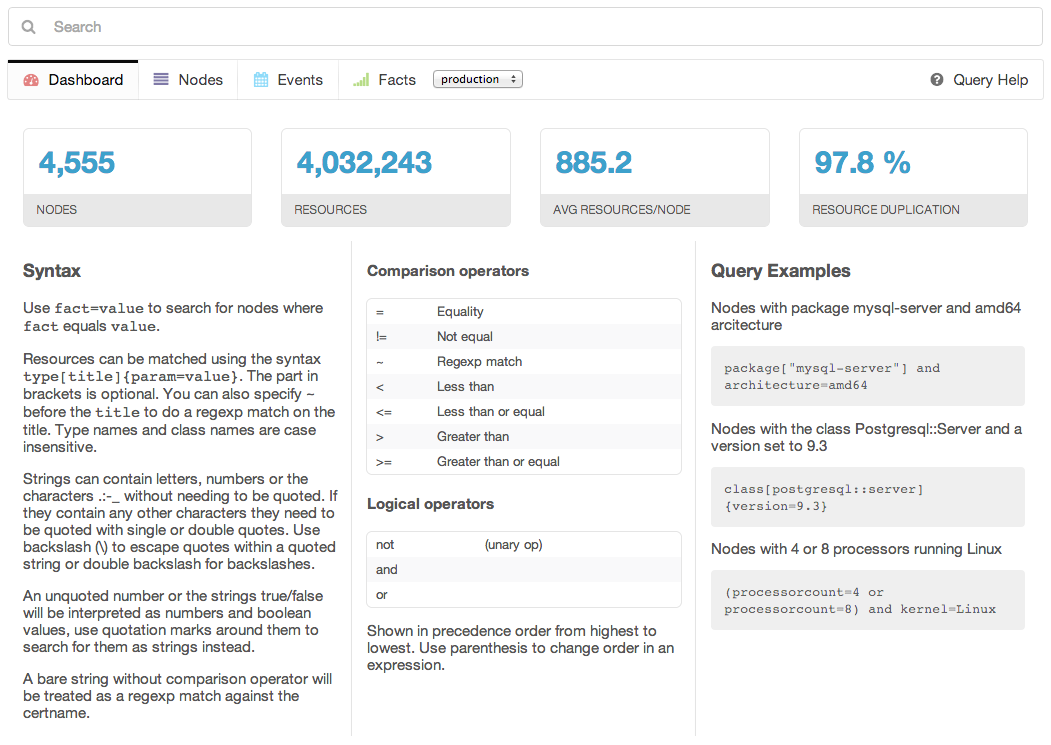

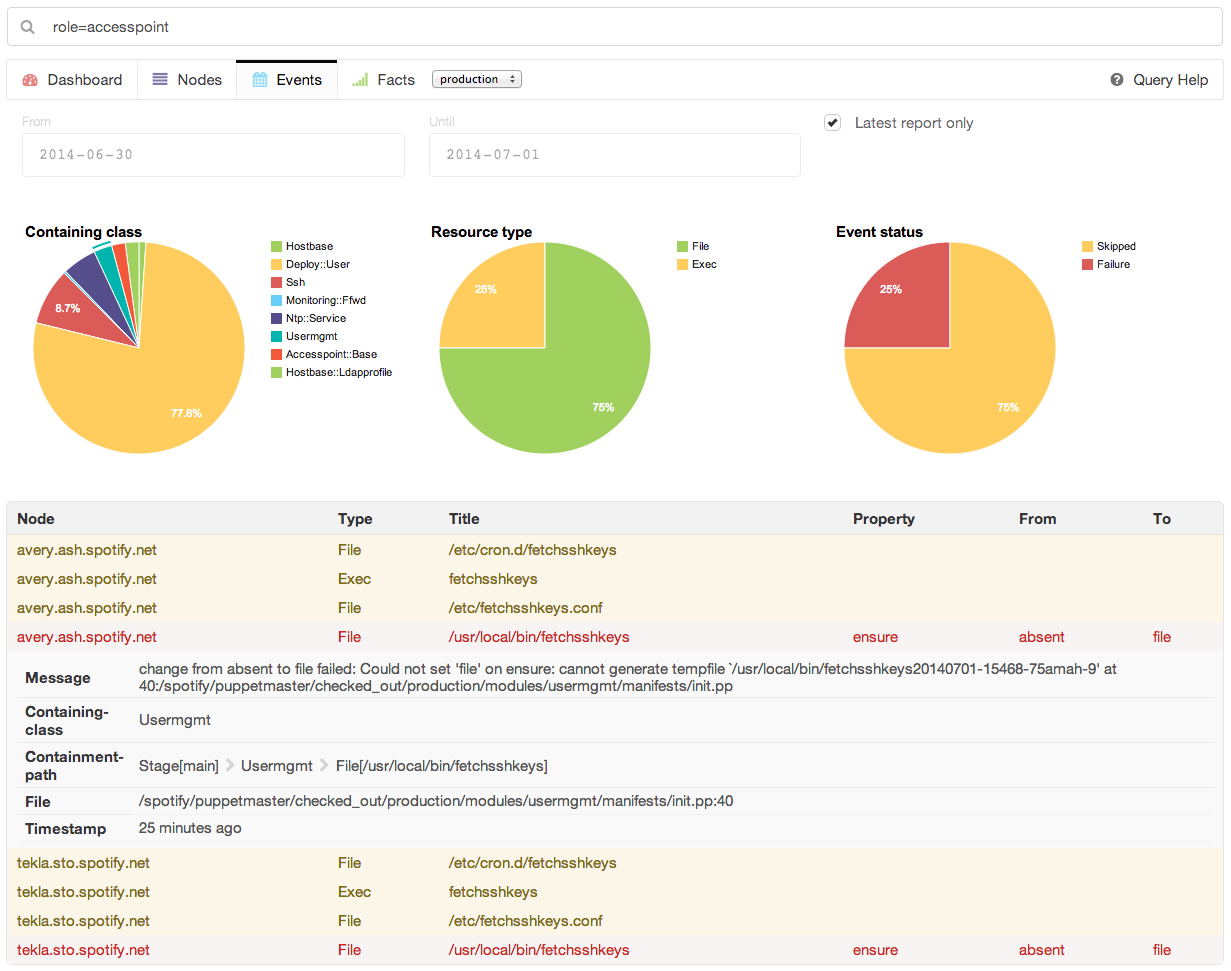

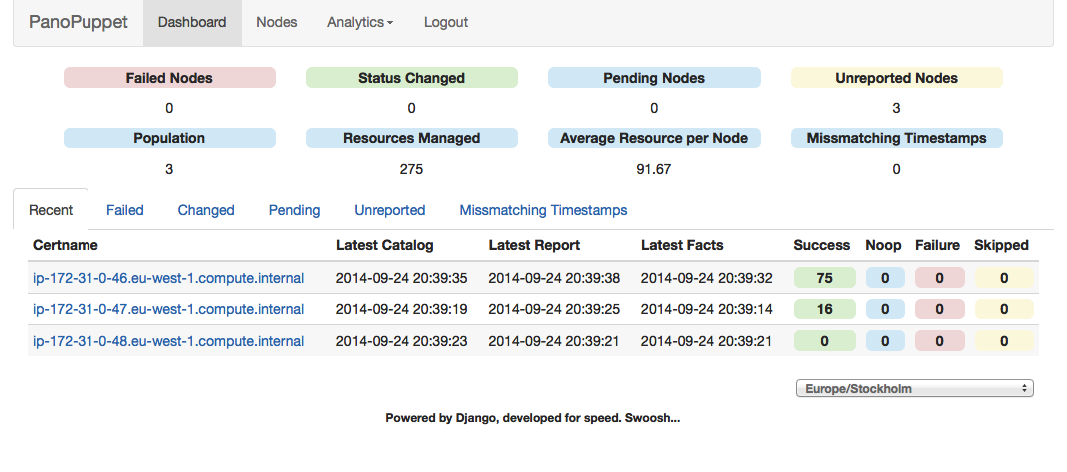

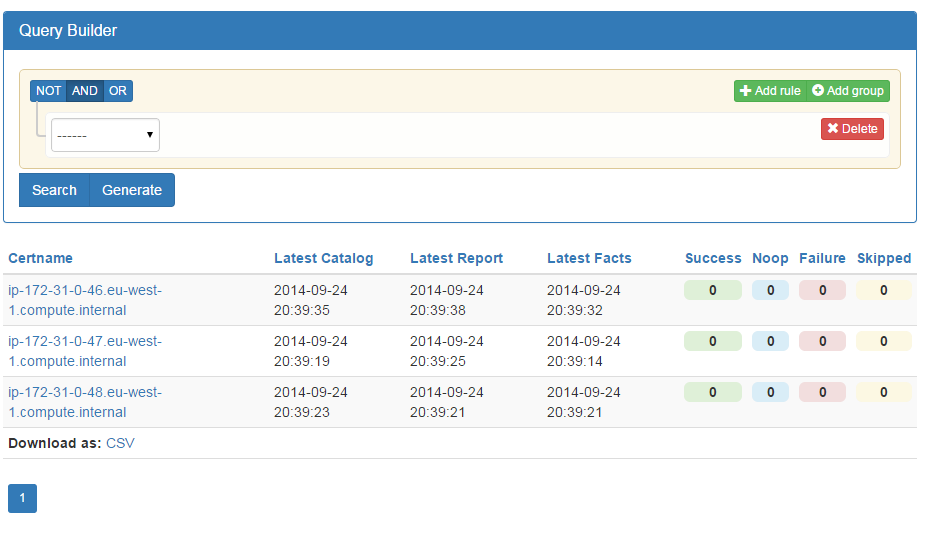

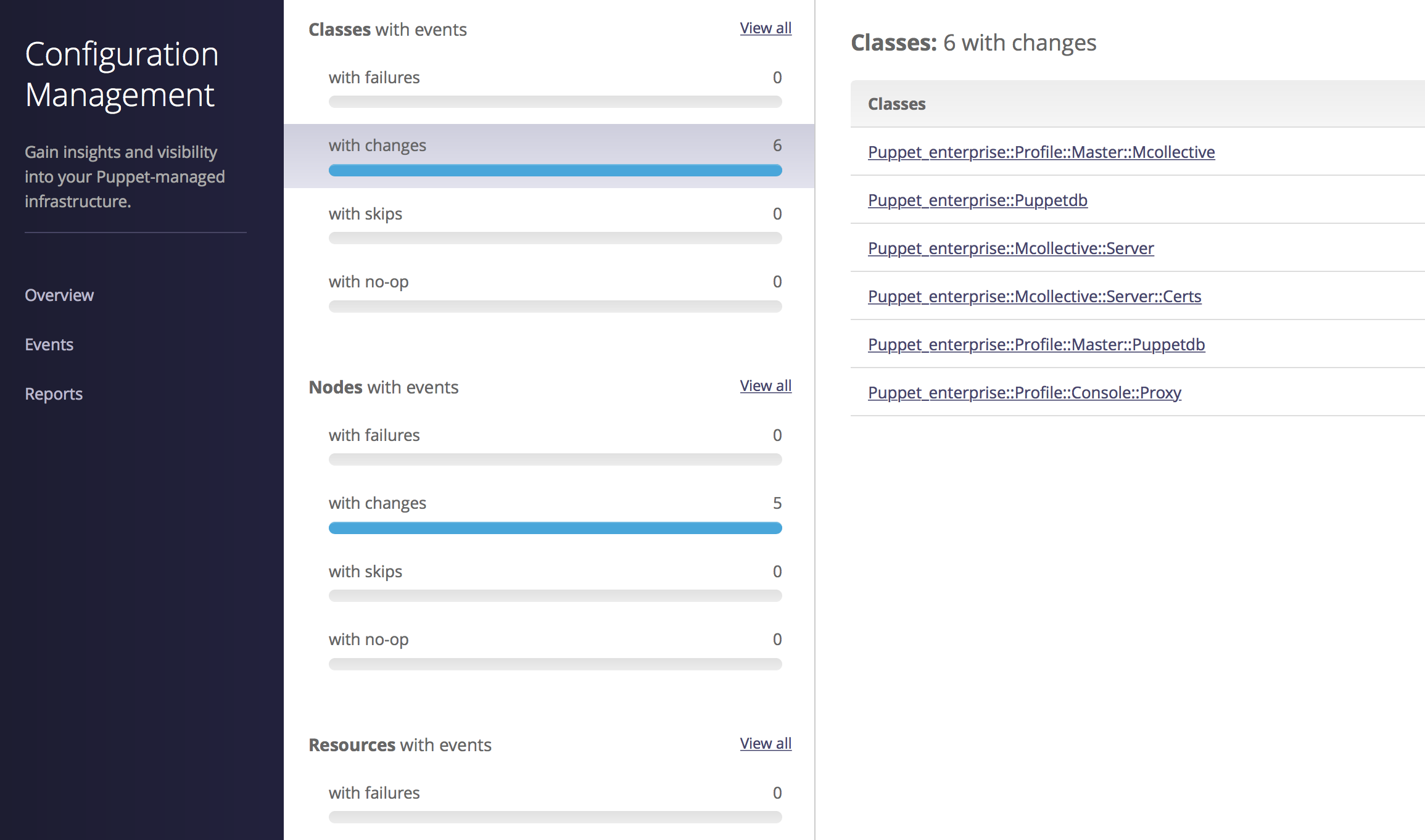

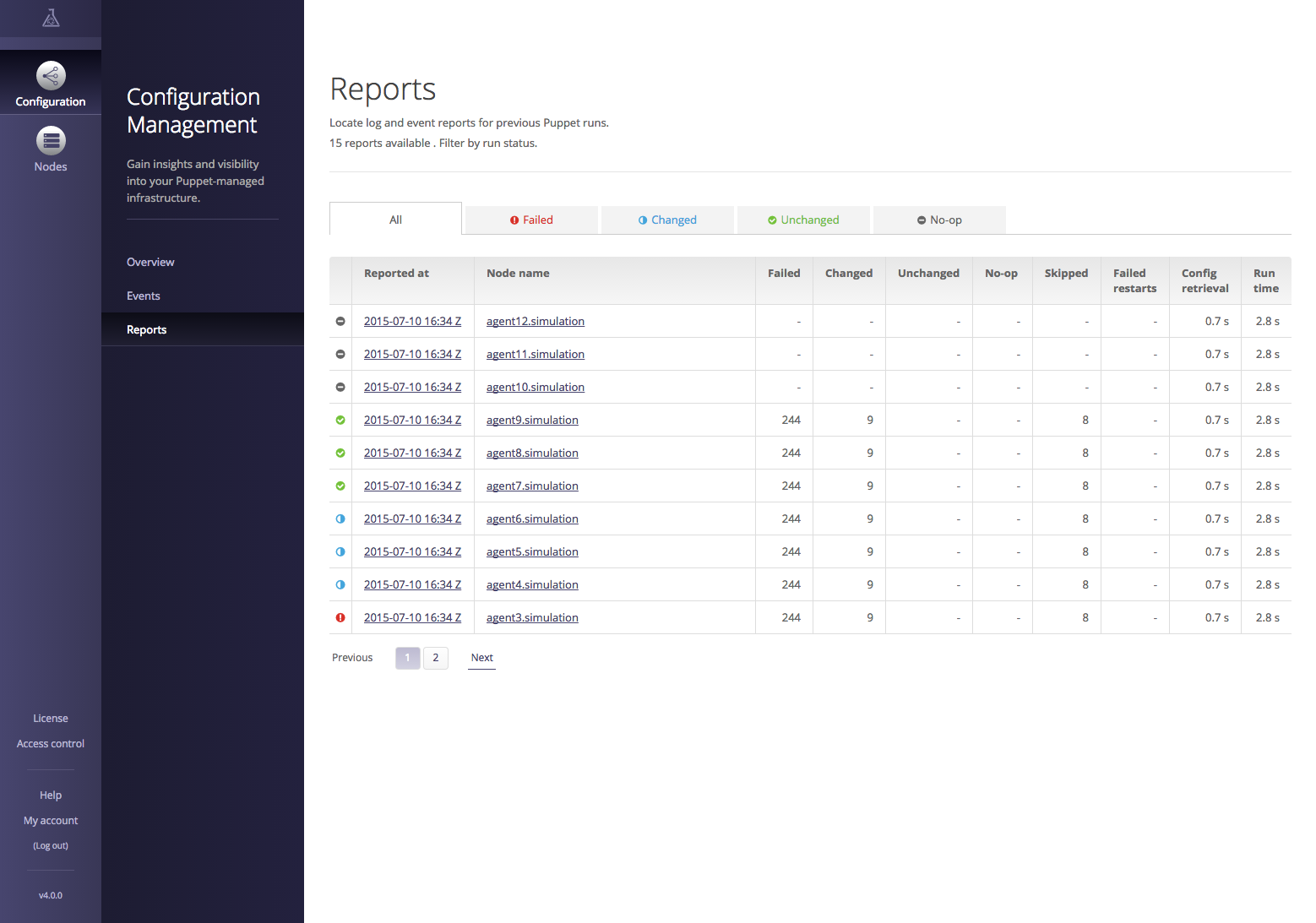

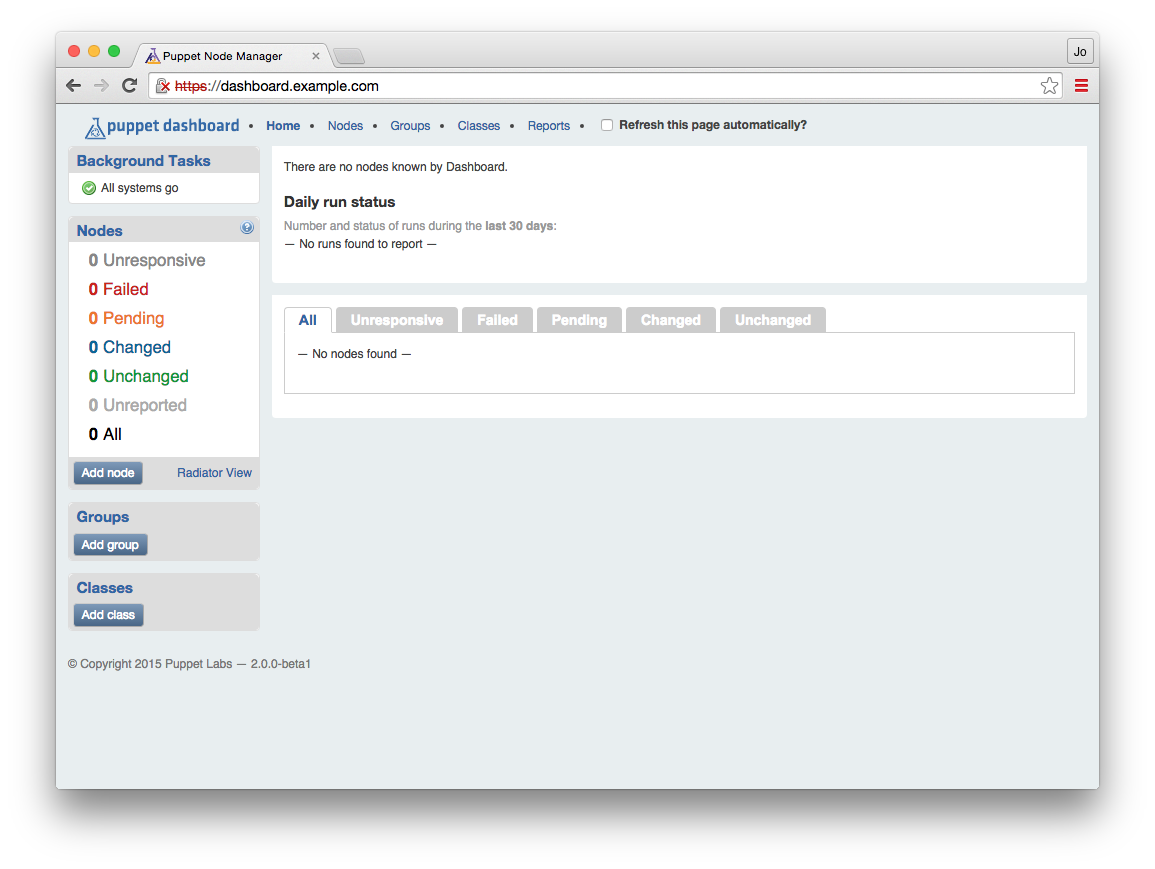

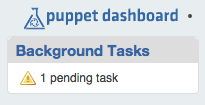

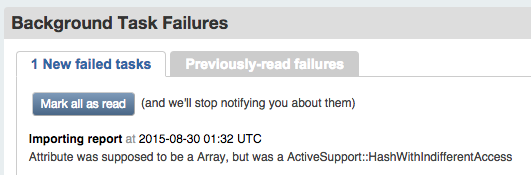

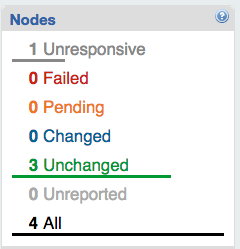

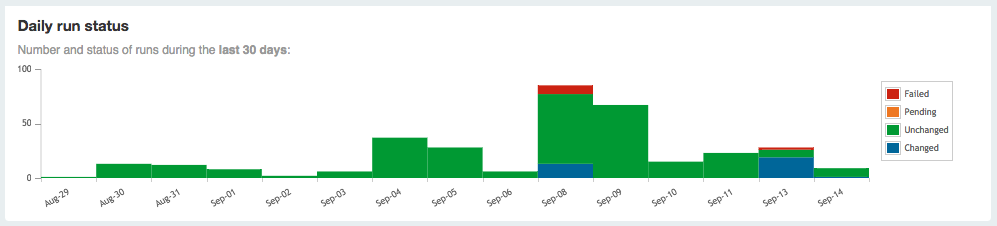

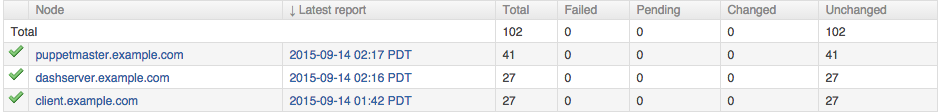

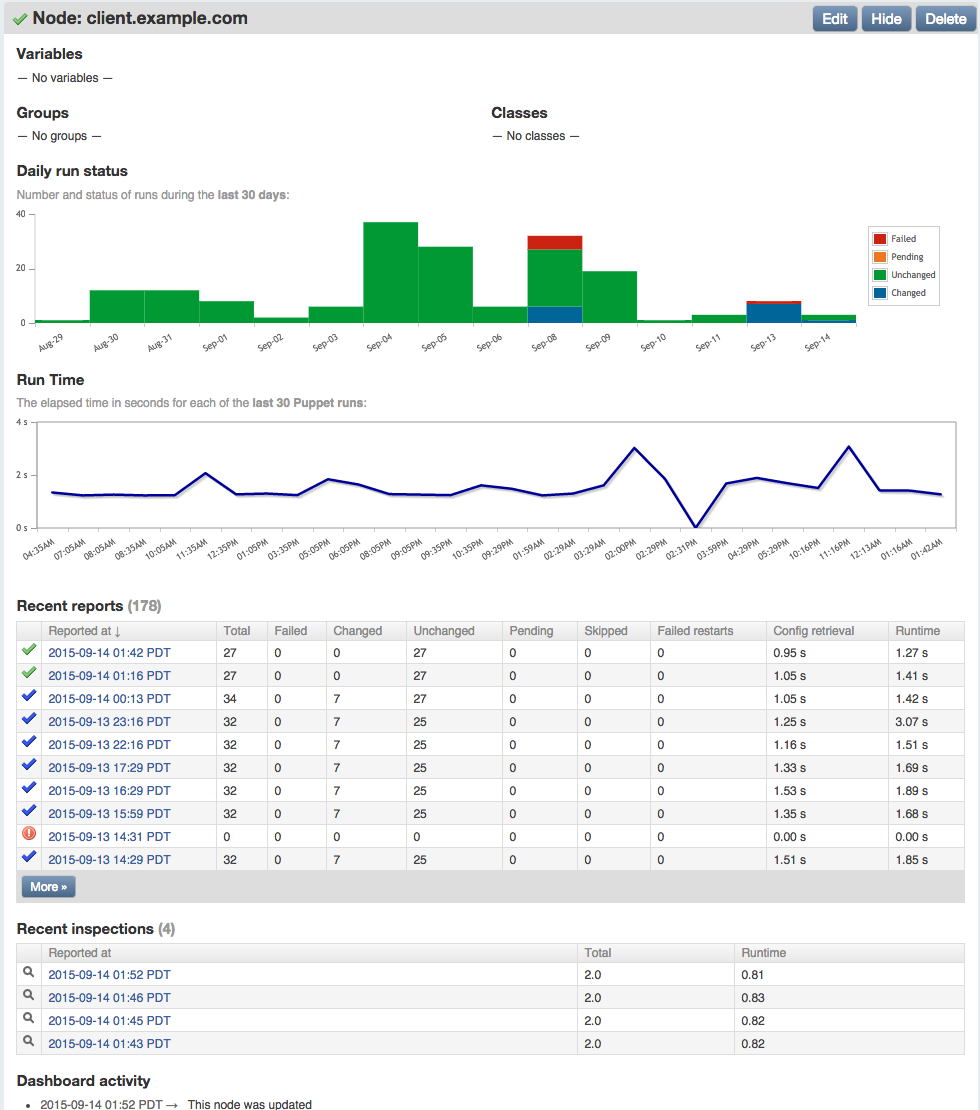

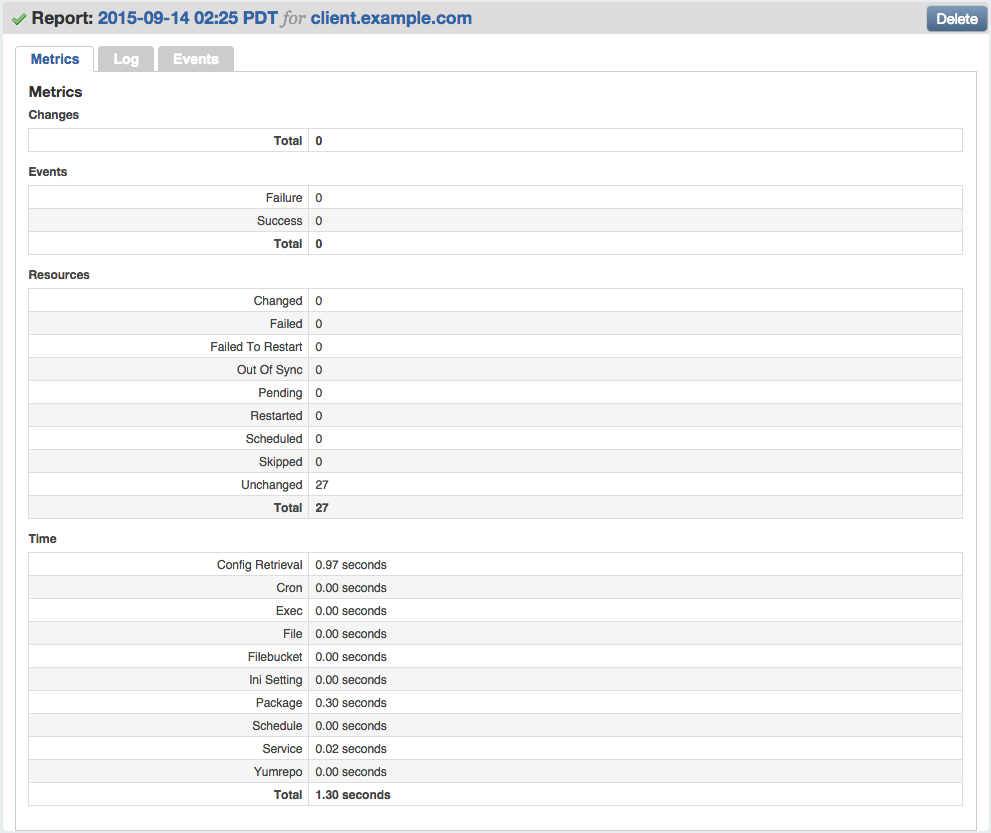

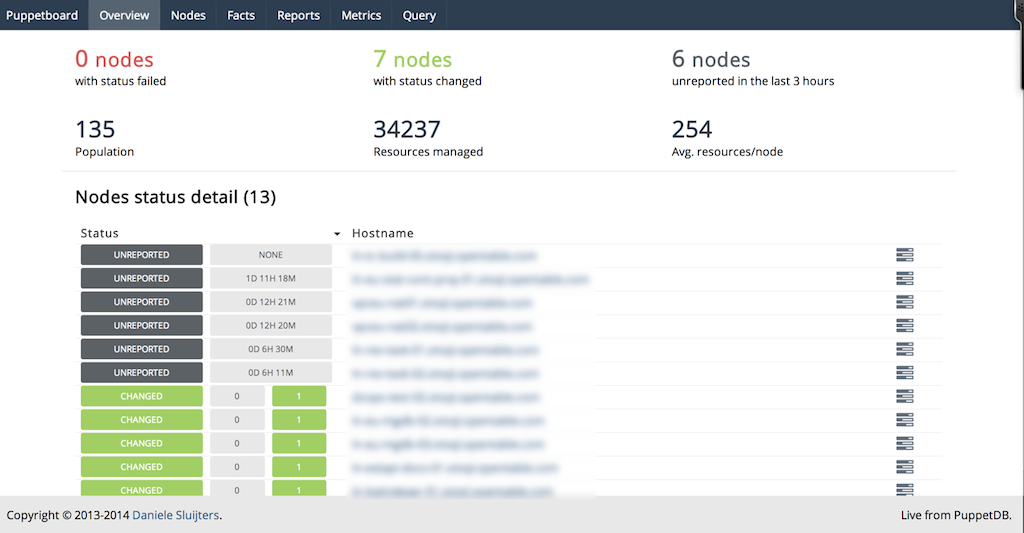

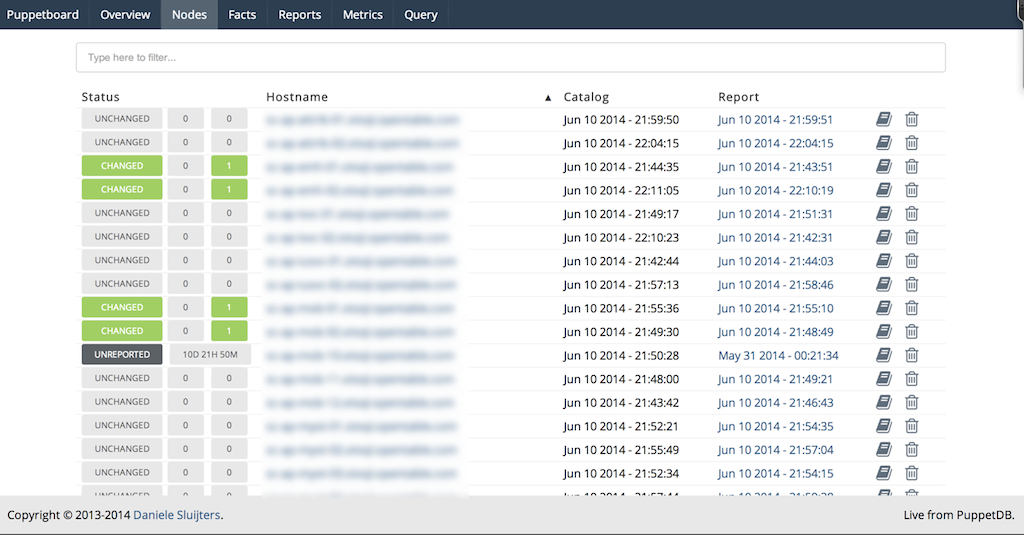

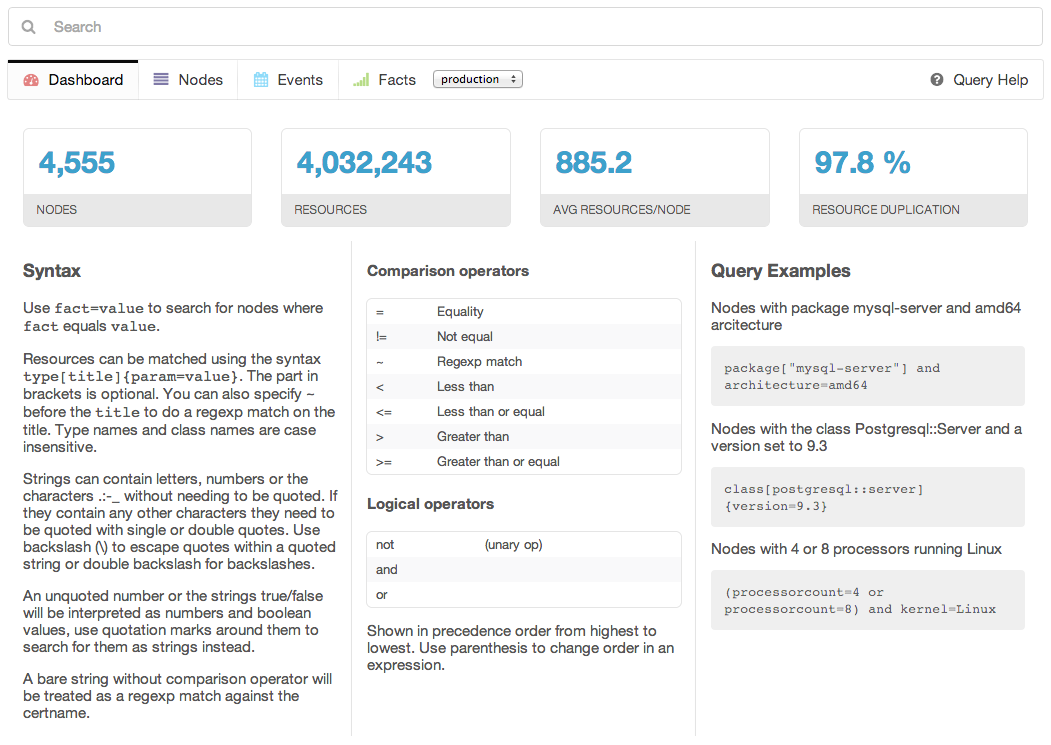

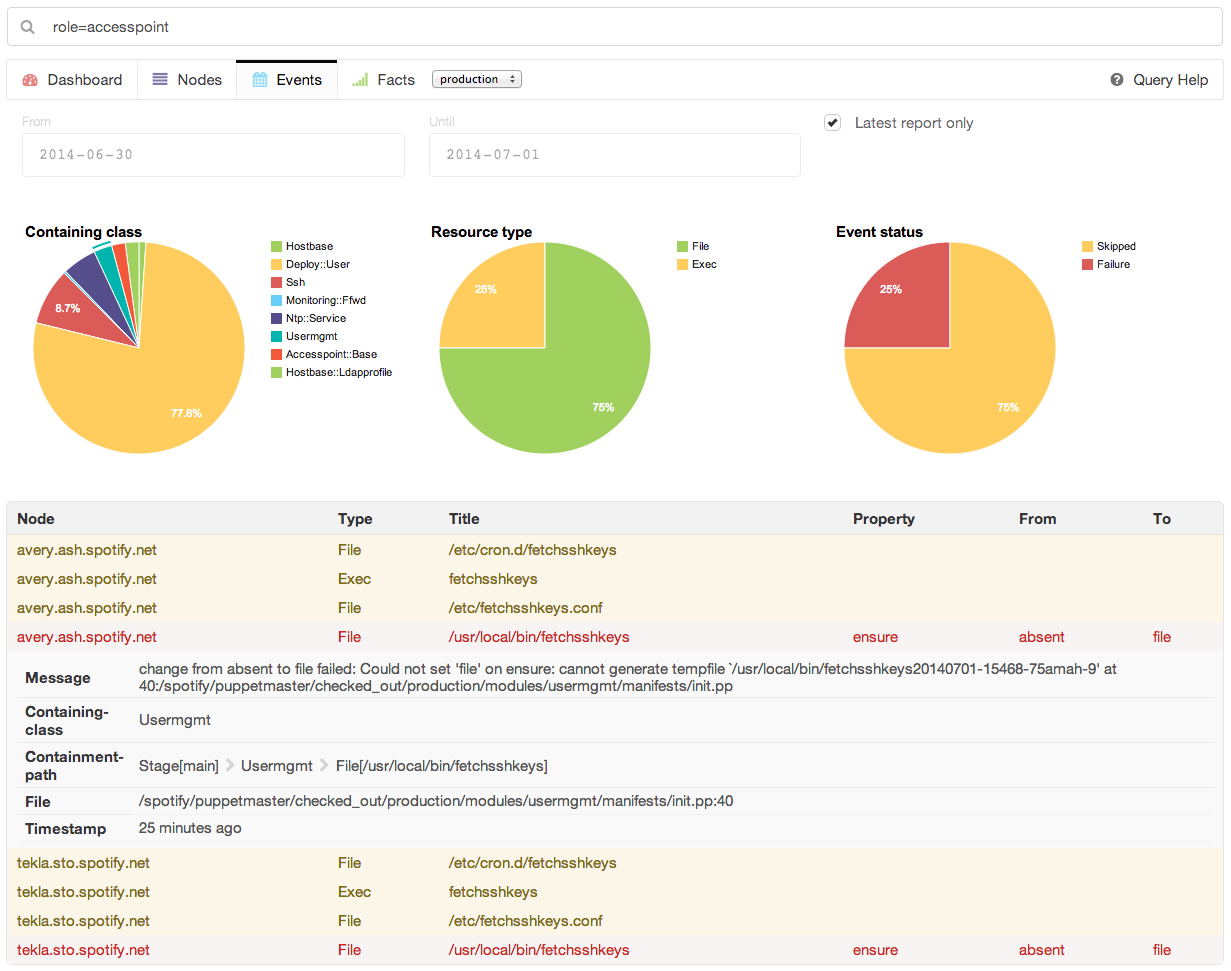

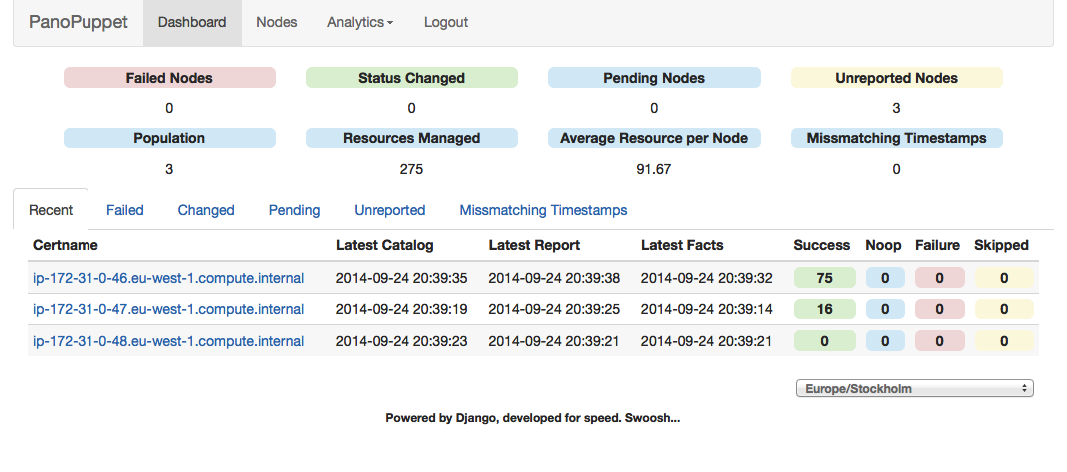

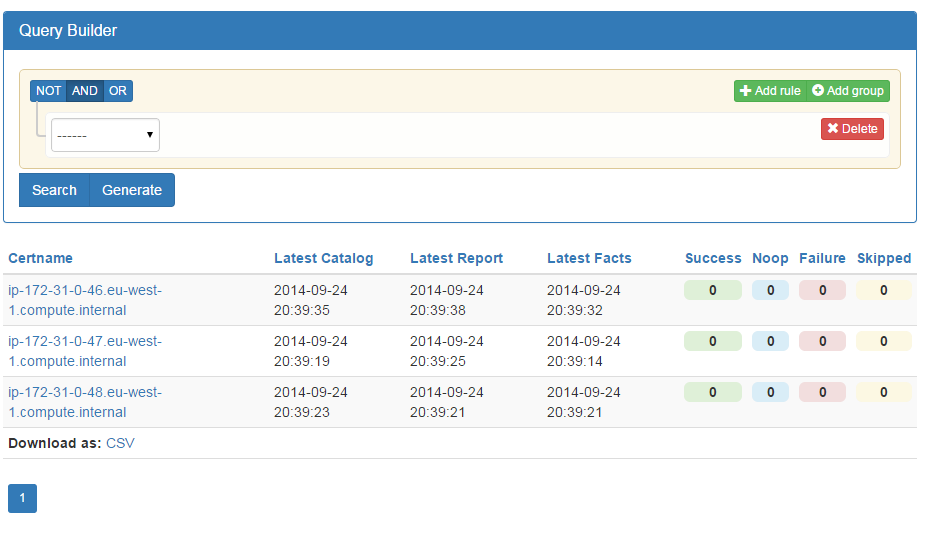

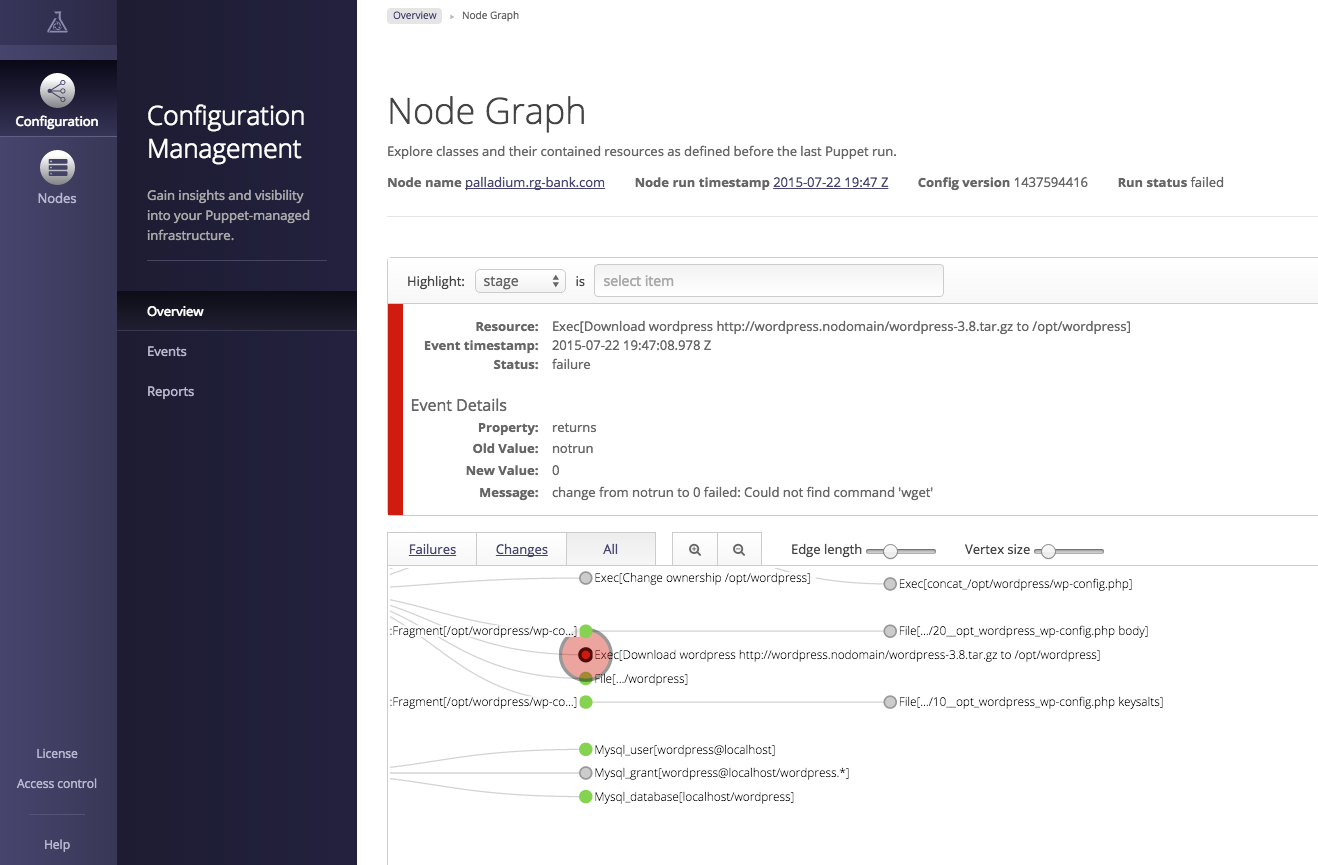

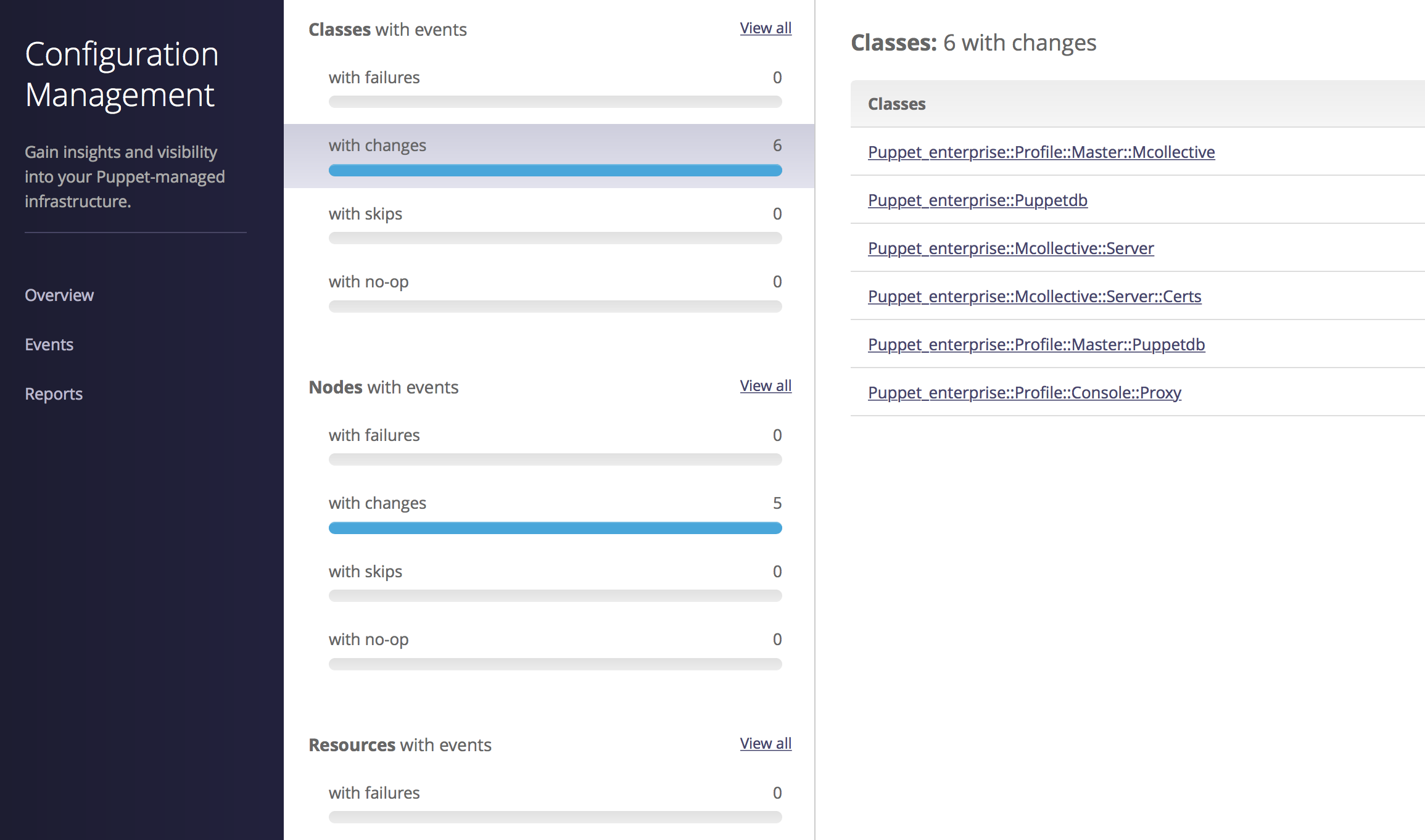

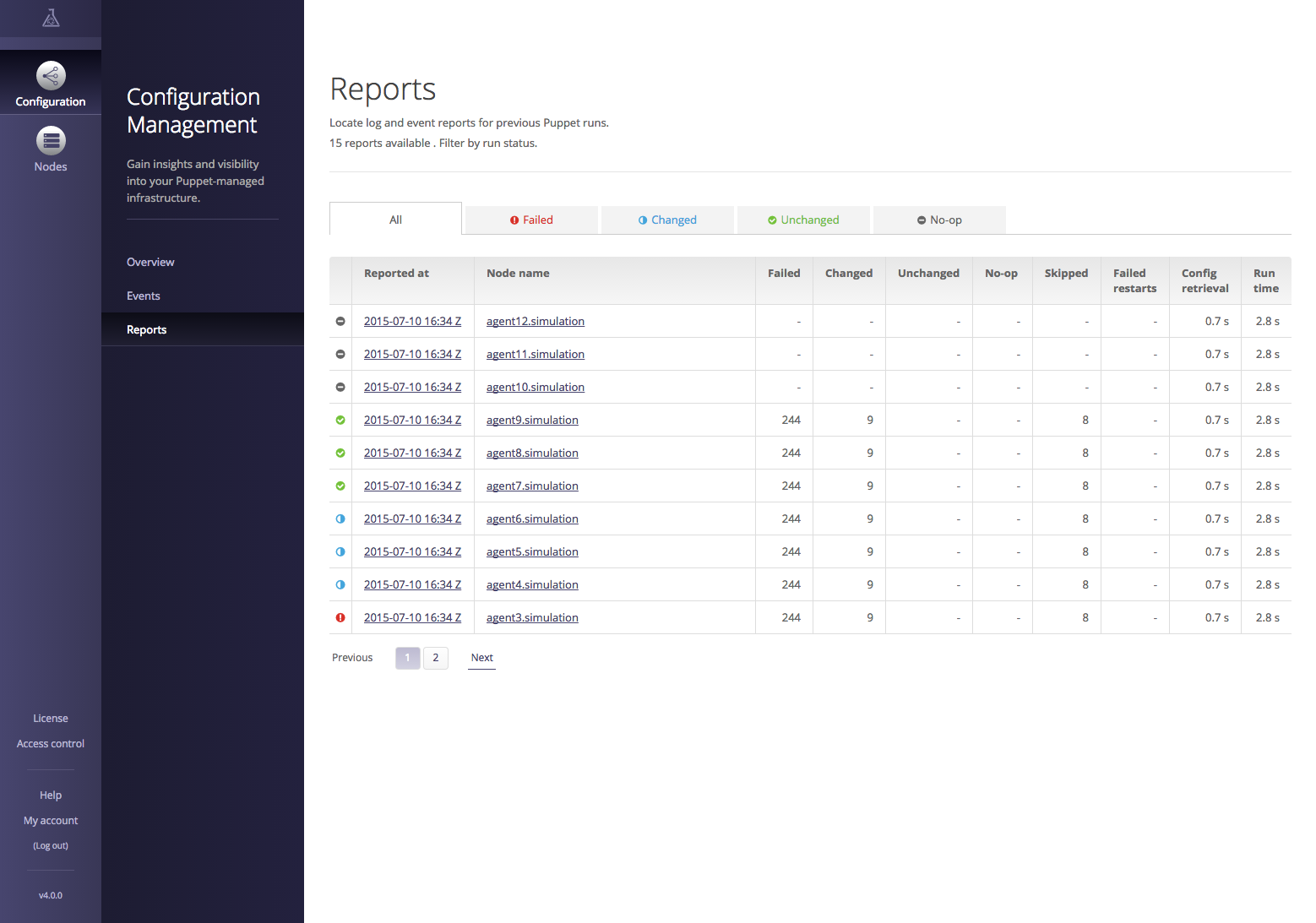

Part IV will review dashboards and orchestration tools that supplement and complement Puppet. The web dashboards provide a way to view the node status and history of changes made by Puppet. The orchestration tools enable you to interact instantly with Puppet nodes for massively parallel orchestration events.

Every step of the way you’ll perform hands-on installation and configuration of every component. There are no magic scripts, no do-it-all installers. You’ll see how easy it is to deploy Puppet from scratch, and experience firsthand the power of the tools it provides. You’ll finish this book with everything you need to build out a production service.

Throughout this book you’ll find commentary and practical advice that is based on years of experience deploying, scaling, and tuning Puppet environments. You will find advice about managing small shops, large commercial enterprises, and globally distributed teams. You’ll also learn several ways to scale Puppet to thousands of nodes.

This book provides explicit instructions for configuring and using Puppet from the command line without the use of external tools beyond your favorite text editor.

The book will help you create Puppet manifests and Puppet modules that utilize every feature of Puppet. You will create configuration policies to handle your specific needs from the examples in this book.

Everything you learn in this book can be done entirely from your laptop or workstation without any impact on production environments. However, it will teach you everything necessary to deploy Puppet in real environments, and will include numerous tips and recommendations for production use.

Every example with IP addresses will include both IPv4 and IPv6 statements. If you’re only using one of these protocols, you can ignore the other. Puppet will happily use any combination of them. Specific advice for managing Puppet in dual-stack IPv6 environments can be found in multiple parts of the book.

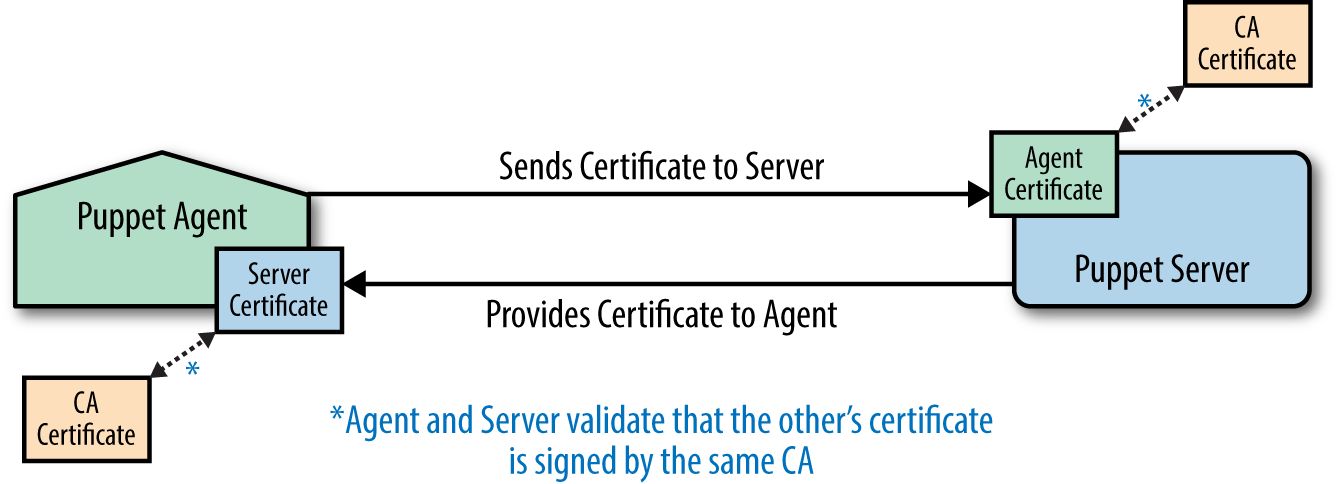

You are likely familiar with the term SSL when referring to transport layer security and encryption. You’re also likely aware that SSL v3 was renamed TLS 1.0 when it became an IETF standard. At this time, all versions of SSL and up to TLS 1.1 are subject to known exploits. Rather than constantly refer to both terms (SSL/TLS) throughout this book, I will refer to it only by the new name (TLS). This is technically more accurate, as Puppet 4 requires TLS 1.2 and will not accept SSL connections.

The following typographical conventions are used in this book:

Indicates new terms, URLs, email addresses, filenames, and file extensions.

Constant widthUsed for program listings, as well as within paragraphs to refer to program elements such as variable or function names, databases, data types, environment variables, statements, and keywords.

Constant width boldShows commands or other text that should be typed literally by the user.

Constant width italicShows text that should be replaced with user-supplied values or by values determined by context.

This element signifies a tip or suggestion.

This element signifies a general note.

This element indicates a warning or caution.

Safari Books Online is an on-demand digital library that delivers expert content in both book and video form from the world’s leading authors in technology and business.

Technology professionals, software developers, web designers, and business and creative professionals use Safari Books Online as their primary resource for research, problem solving, learning, and certification training.

Safari Books Online offers a range of plans and pricing for enterprise, government, education, and individuals.

Members have access to thousands of books, training videos, and prepublication manuscripts in one fully searchable database from publishers like O’Reilly Media, Prentice Hall Professional, Addison-Wesley Professional, Microsoft Press, Sams, Que, Peachpit Press, Focal Press, Cisco Press, John Wiley & Sons, Syngress, Morgan Kaufmann, IBM Redbooks, Packt, Adobe Press, FT Press, Apress, Manning, New Riders, McGraw-Hill, Jones & Bartlett, Course Technology, and hundreds more. For more information about Safari Books Online, please visit us online.

Please address comments and questions concerning this book to the publisher:

We have a web page for this book, where we list errata, examples, and any additional information. You can access this page at http://bit.ly/learningPuppet4.

To comment or ask technical questions about this book, send email to bookquestions@oreilly.com.

For more information about our books, courses, conferences, and news, see our website at http://www.oreilly.com.

Find us on Facebook: http://facebook.com/oreilly

Follow us on Twitter: http://twitter.com/oreillymedia

Watch us on YouTube: http://www.youtube.com/oreillymedia

Documented the new Hiera v5 configuration file format in Chapter 29.

Documented how to create a simple custom Hiera backend in Chapter 11.

Removed references to deprecated environment_data_provider and environment::data functions.

Improved spacing and indentation problems in code examples.

Clarified how schedules and other dependencies affect resource application.

Added note about inspect’s removal in Puppet 5 (“Running Audit Inspections” in Chapter 24).

Improved guidelines for problems with and ways around chained execs.

Added Semver, SemVerRange, Timespan, and Timestamp values from Puppet 4.8 to “Valid Types” (Chapter 14).

Added description of Puppet 4.8’s next(), break(), and return() functions in Chapter 5.

Demonstrated how to redact passwords by marking them Sensitive, in Chapter 5.

Added instructions for puppet lookup --explain in “Debugging Lookup” (Chapter 14).

Added the examples/ directory to “Understanding Module Structure” (Chapter 13).

Fixed all errata reported on the O’Reilly site.

I owe a deep debt of gratitude to Nova, Kevin, Andy, Lance, and far too many other friends whom I have neglected while adding “just one more essential thing” (more than a hundred times) to this book. I lack the words to express how thankful I am that you are in my life, and have apparently forgiven me for being a ghost over the last year.

Growing and improving happens only by surrounding yourself with smart people. The following individuals have provided incalculable feedback and insight that has influenced my learning process, and deserve both accolades for their technical efforts and my sincere appreciation for their advice:

There are far too many Puppet Labs employees to list who have accepted, rejected, and negotiated my suggestions, feedback, and patches to Puppet and related tools. Thank you for your assistance over the years. We all appreciate your efforts far more than we remember to share with you.

I owe a drink and many thanks to many people who provided input and feedback on the book during the writing process, including but definitely not limited to the technical reviewers:

If you find that the examples in this book work well for you, it’s likely due to the helpful feedback provided by numerous readers who posted errata on the book page or comments on the Safari book that helped me address concerns I would have missed. Their insights have been invaluable and deeply appreciated.

And finally, I’d like to thank my O’Reilly editor, Brian Anderson, who gave me excellent guidance on the book and was a pleasure to work with. I’m likewise deeply indebted to my patient and helpful production and copy editors, Kristen and Rachel, without whom my jumbled pile of letters wouldn’t make any sense at all.

All of us who use Puppet today owe significant gratitude to Luke Kanies, who conceived of Puppet and continues to direct its growth in Puppet Labs. His vision and foresight made all of this possible.

This book will teach you how to install and use Puppet for managing computer systems. It will introduce you to how Puppet works, and how it provides value. To better understand Puppet and learn the basics, you’ll set up a testing environment, which will evolve as your Puppet knowledge grows. You’ll learn how to declare and evaluate configuration policy for hundreds of nodes.

This book covers modern best practices for Puppet. You’ll find tips throughout the book labeled Best Practice.

You’ll learn how to update Puppet 2 or Puppet 3 puppet code for the increased features and improved parser of Puppet 4. You’ll learn how to deploy the new Puppet Server. You’ll have a clear strategy for upgrading older servers to Puppet 4 standards. You’ll learn how to run Puppet services over IPv6 protocol.

Most important of all, this book will cover how to scale your Puppet installation to handle thousands of nodes. You’ll learn multiple strategies for handling diverse and heterogenous environments, and reasons why each of these approaches may or may not be appropriate for your needs.

Puppet manages configuration data, including users, packages, processes, and services—in other words, any resource of the node you can define. Puppet can manage complex and distributed components to ensure service consistency and availability. In short, Puppet brings computer systems into compliance with a configuration policy.

Puppet can ensure configuration consistency across servers, clients, your router, and that computer on your hip. Puppet utilizes a flexible hierarchy of data sources combined with node-specific data to tune the policy appropriately for each node. Puppet can help you accomplish a variety of tasks. For example, you can use it to do any of the following:

Twenty years ago, people were impressed that I was responsible for 100 servers. At a job site last year, I was responsible for over 17,000 servers. At my current job I’d have to go check somewhere to find out, as we scale up and down dynamically based on load. These days, my Puppet code spins up more servers while I’m passed out asleep than I did in the first 10 years of my career. You can achieve this only by fully embracing what Puppet provides.

I was recently reminded of something I quipped to a CEO almost six years ago:

You can use Puppet to do more faster if you have ten nodes.

You must use Puppet if you have ten hundred nodes.

Puppet enables you to make a lot of changes both quickly and consistently. You don’t have to write out every step, you only have to define how it should be. You are not required to write out the process for evaluating and adjusting each platform. Instead, you utilize the Puppet configuration language to declare the final state of the computing resources. Thus, we describe Puppet as declarative.

When analyzing hand-built automation systems, you’ll invariably find commands such as the following:

$echo"param: newvalue">>configuration-file

This command appends a new parameter and value to a configuration file. This works properly the first time you run it. However, if the same operation is run again, the file has the value twice. This isn’t a desirable effect in configuration management. To avoid this, you’d have to write code that checks the file for the configuration parameter and its current value, and then makes any necessary changes.

Language that describes the actions to perform is called imperative. It defines what to do, and how to do it. It must define every change that should be followed to achieve the desired configuration. It must also deal with any differences in each platform or operating system.

When managing computer systems, you want the operations applied to be idempotent, where the operation achieves the same results every time it executes. This allows you to apply and reapply (or converge) the configuration policy and always achieve the desired state.

To achieve a configuration state no matter the existing conditions, the specification must avoid describing the actions required to reach the desired state. Instead, the specification should describe the desired state itself, and leave the evaluation and resolution up to the interpreter.

Language that declares the final state is called declarative. Declarative language is much easier to read, and less prone to breakage due to environment differences. Puppet was designed to achieve consistent and repeatable results. Every time Puppet evaluates the state of the node, it will bring the node to a state consistent with the specification.

Any node you control contains an application named puppet agent. The agent evaluates and applies Puppet manifests, or files containing Puppet configuration language that declares the desired state of the node. The agent evaluates the state of each component described in a manifest, and determines whether or not any change is necessary. If the component needs to be changed, the agent makes the requested changes and logs the event.

If Puppet is configured to utilize a centralized Puppet server, Puppet will send the node’s data to the server, and receive back a catalog containing only the node’s specific policy to enforce.

Now you might be thinking to yourself, “What if I only want the command executed on a subset of nodes?” Puppet provides many different ways to classify and categorize nodes to limit which resources should be applied to which nodes. You can use node facts such as hostname, operating system, node type, Puppet version, and many others. Best of all, new criteria custom to your environment can be easily created.

The Puppet agent evaluates the state of only one node. In this model, you can have agents on tens, hundreds, or thousands of nodes evaluating their catalogs and applying changes on their nodes at exactly the same time. The localized state machine ensures a scalable and fast parallel execution environment.

As we have discussed, Puppet provides a well-designed infrastructure for managing the state of many nodes simultaneously. Here are a few reasons to use it:

In Part I, you will learn how to write simple declarative language that will make changes only when necessary.

In Part II, you will create a module that uses Puppet to install and configure the Puppet agent. This kind of recursion is not only possible but common.

In Part III, you will learn how to use Puppet masters and Puppet Server to offload and centralize catalog building, report processing, and backup of changed files.

In Part IV, you will use MCollective to orchestrate immediate changes with widespread Puppet agents.

Puppet provides a flexible framework for policy enforcement that can be customized for any environment. After reading this book and using Puppet for a while, you’ll be able to tune your environment to exactly your needs. Puppet’s declarative language not only allows but encourages creativity.

While Puppet is a tool used by many DevOps teams, using Puppet will not by itself give you all the benefits of adopting DevOps practices within your team.

In practice, Puppet is used by many classic operations teams who handle all change through traditional planning and approval processes. It provides them with many benefits, most especially a readable, somewhat self-documenting description of what is deployed on any system. This provides tremendous value to operations where change management control and auditing are primary factors.

On the other hand, the ability to manage rapid change across many systems that Puppet provides has also cracked open the door to DevOps for many operations teams. Classic operations teams were often inflexible because they were responsible for the previously difficult task of tracking and managing change. Puppet makes it possible to not only track and manage change, but to implement locally customized change quickly and seamlessly across thousands of nodes. This makes it possible for operations teams to embrace practices that are flexible for changing business needs.

You don’t have to be working with developers to utilize DevOps practices. This is a common misconception of DevOps. The developer in DevOps is not a different person or team, it is you! There are many teams which utilize DevOps practices that don’t support developers; rather, they manage systems that support a completely different industry. You are participating in DevOps when you utilize Agile development processes to develop code that implements operations and infrastructure designs.

Perhaps the biggest source of confusion comes when people try to compare using Puppet (a tool) to implement DevOps practices, and the idea of “valuing individuals and interactions over processes and tools.” It is easiest to explain this by first outlining the reasons that operations teams were historically perceived as inflexible. The tools available for managing software within the enterprise used to be shockingly limited. Many times a very small change, or a customization for one group, would require throwing away the software management tool and embracing another. That’s a tremendous amount of work for an operations team.

When using Puppet, if an individual can make a case for the value of a change (interaction), then rolling out a local customization usually involves only a small refactoring of the code. Applying changes becomes easy, and thus avoids a conflict between valuable requests and the limitations of the tools available. Discussion of the merits of the change has higher value than processes used to protect operations teams from unmanageable change.

No tool or set of tools, product, or job title will give an operations team all the benefits of utilizing DevOps practices. I have seen far too many teams with all of the keywords, all of the titles, and none of the philosophy. It’s not a product, it’s an evolving practice. You don’t get the benefits without changing how you think.

I highly recommend making the effort to fully understand both Agile processes and DevOps practices and methodologies. Don’t skimp. Someone on your team who is good at promoting and guiding others should be fully trained on Agile processes. Get the people responsible for creating change out to conferences where they can learn from others’ experiences, such as DevOps Days, PuppetConf, and O’Reilly’s Velocity conference.

That’s not an obligatory push of the publisher’s conference. Many people consider John Allspaw’s “10+ Deploys Per Day: Dev and Ops Cooperation,” which was presented at Velocity 2009, to be a founding cornerstone of the DevOps movement. Velocity was the first large conference to add DevOps items to its agenda, and DevOps practices are a major focus of the conference today.

Puppet is a great tool, and you’ll find that it’s even more valuable when used with DevOps practices. While this is not a book about DevOps, it will present many useful tools and strategies for practicing DevOps in your organization.

As we proceed, this book will show you how Puppet can help you do more, and do it faster and more consistently than ever before. You’ll learn how to extend Puppet to meet your specific needs:

By the time you finish this book, you will understand not just how powerful Puppet is, but also exactly how it works. You’ll have the knowledge and understanding to debug problems within any part of the infrastructure. You’ll know what to tune as your deployment grows. You’ll have a resource to use for further testing as your knowledge and experience expands.

It’s time to get declarative.

In this part, you’ll learn about the Puppet configuration language and how to think in a declarative manner. You’ll set up a testing environment that you can use to learn Puppet while reading this book. You’ll be able to continue to use this setup to develop and test your Puppet code long after you have finished this book.

To start, you will install Puppet and create your first Puppet manifests. You’ll learn how to utilize resources, how to associate them, and how to order and limit the changes upon them.

When you finish this part of the book, you’ll have a solid understanding of the Puppet configuration language. As you make your way through the chapters in this part, you will write and test your own Puppet manifests. This part establishes a foundation of modern best practices from which you can explore Puppet’s features and capabilities.

If you have experience writing shell, Ruby, Python, or Perl scripts that make changes to a system, you’ve very likely been performing imperative programming. Imperative programming issues commands that change a target’s state, much as the imperative grammatical mood in natural language expresses commands for people to act on.

You may be using procedural programming standards, where state changes are handled within procedures or subroutines to avoid duplication. This is a step toward declarative programming, but the main program still tends to define each operation, each procedure to be executed, and the order in which to execute them in an imperative manner.

While it can be useful to have a background in procedural programming, a common mistake is to attempt to use Puppet to make changes in an imperative fashion. The very best thing you can do is forget everything you know about imperative or procedural programming.

If you are new to programming, don’t feel intimidated. People without a background in imperative or procedural programming can often learn good Puppet practices faster.

Writing good Puppet manifests requires declarative programming. When it comes to maintaining configuration on systems, you’ll find declarative programming to be easier to create, easier to read, and easier to maintain. Let’s show you why.

The reason that you need to cast aside imperative programming is to handle change better.

When you write code that performs a sequence of operations, that sequence will make the desired change the first time it is run. If you run the same code the second time in a row, the same operations will either fail or create a different state than desired. Here’s an example:

$sudo useradd -u1001-g1001-c"Joe User"-m joe$sudo useradd -u1001-g1000-c"Joe User"-m joe useradd: user'joe'already exists

So then you need to change the code to handle that situation:

# bash excerptgetent passwd$USERNAME> /dev/null 2> /dev/nullif[$?-ne0];thenuseradd -u$UID-g$GID-c"$COMMENT"-s$SHELL-m$USERNAMEelseusermod -u$UID-g$GID-c"$COMMENT"-s$SHELL-m$USERNAMEfi

OK, that’s six lines of code and all we’ve done is ensure that the username isn’t already in use. What if we need to check to ensure the UID is unique, the GID is valid, and that the password expiration is set? You can see that this will be a very long script even before we adjust it to ensure it works properly on multiple operating systems.

This is why we say that imperative programming doesn’t handle change very well. It takes a lot of code to cover every situation you need to test.

When managing computer systems, you want the operations applied to be idempotent, where the operation achieves the same results every time it executes. Idempotence allows you to apply and reapply (or converge) a configuration manifest and always achieve the desired state.

In order for imperative code to be idempotent, it needs to have instructions for how to compare, evaluate, and apply not just every resource, but also each attribute of the resource. As you saw in the previous section, even the simplest of operations will quickly become ponderous and difficult to maintain.

As we mentioned in Introduction, for a configuration state to be achieved no matter the conditions, the configuration language must avoid describing the actions required to reach the desired state. Instead, the configuration language should describe the desired state itself, and leave the actions up to the interpreter. Language that declares the final state is called declarative.

Rather than writing extensive imperative code to handle every situation, it is much simpler to declare what you want the final state to be. In other words, instead of including dozens of lines of comparison, the code reflects only the desired final state of the resource (a user account, in this example). Here we will introduce you to your first bit of Puppet configuration language, a resource declaration for the same user we created earlier:

user{'joe':ensure=>present,uid=>'1001',gid=>'1000',comment=>'Joe User',managehome=>true,}

As you can see, the code is not much more than a simple text explanation of the desired state. A user named Joe User should be present, a home directory for the user should be created, and so on. It is very clear, very easy to read. Exactly how the user should be created is not within the code, nor are instructions for handling different operating systems.

Declarative language is much easier to read, and less prone to breakage due to environment differences. Puppet was designed to achieve consistent and repeatable results. You describe what the final state of the resource should be, and Puppet will evaluate the resource and apply any necessary changes to reach that state.

Conventional programming languages create change by listing exact operations that should be performed. Code that defines each state change and the order of changes is known as imperative programming.

Good Puppet manifests are written with declarative programming. Instead of defining exactly how to make changes, in which you must write code to test and compare the system state before making that change, you instead declare how it should be. It is up to the Puppet agent to evaluate the current state and apply the necessary changes.

As this chapter has demonstrated, declarative programming is easier to create, easier to read, and easier to maintain.

1 First seen in George Boole’s book The Mathematical Analysis of Logic, originally published in 1847.

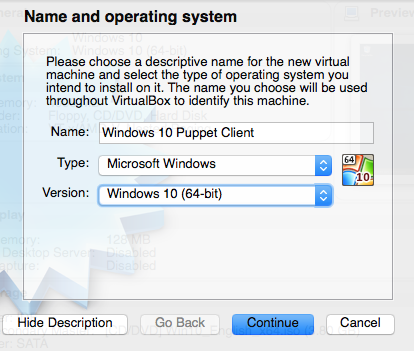

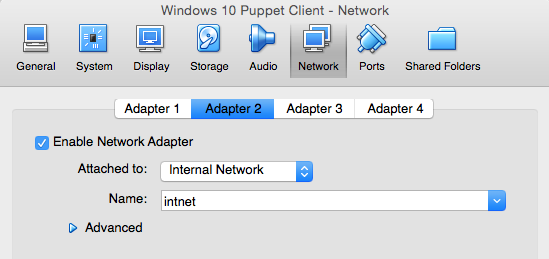

In this chapter, we will create a virtualized environment suitable for learning and testing Puppet. We will utilize Vagrant and VirtualBox to set up this environment on your desktop or laptop. You can keep using this environment long after you have finished this book, or rebuild it quickly any time you’d like to test something new.

If you are an experienced developer or operations engineer, you are welcome to use a testing environment of your own choice. Anything that can host multiple Linux nodes will work. Puppet’s needs are minimal. Any of the following would be suitable for use as a Puppet test lab:

You can build your own test lab using one of the preceding solutions, or you can use an existing test lab you maintain. In all cases, I recommend using an OS compatible with RedHat Enterprise Linux 7 for learning purposes. The CentOS platform is freely available, and fully supported by both Red Hat and Puppet Labs. This will allow you to breeze through the learning exercises without distractions.

We recommend and are going to use Vagrant for the remainder of this book, for the following reasons:

If you plan to use your own testing environment, skip ahead to “Initializing Non-Vagrant Systems”.

If you are going to follow the recommendation to use Vagrant, let’s get started installing it. You’ll need to download two packages.

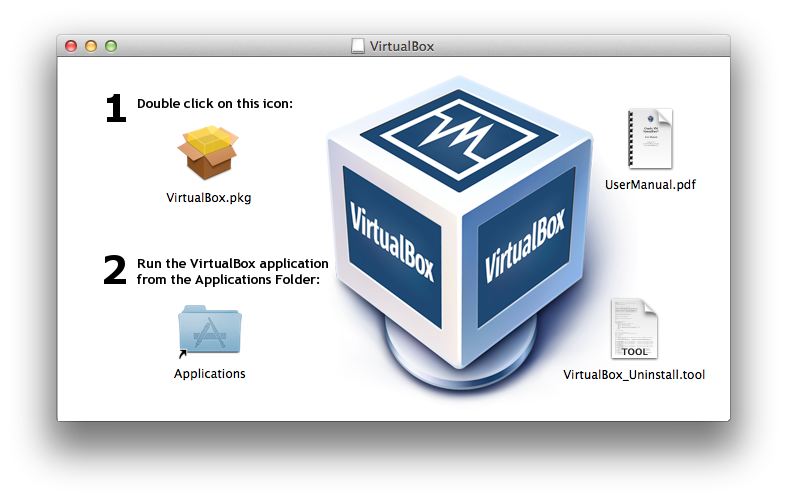

Go to VirtualBox Downloads and download the appropriate platform package for your system.

Next, go to Vagrant Downloads and download the appropriate platform package for your system.

Install these packages according to the instructions for your operating system. I’ve included detailed instructions for Windows and Mac users in the following subsections. If you’re running Vagrant on Linux or another platform, I’m going to assume you are expert enough to handle this installation yourself.

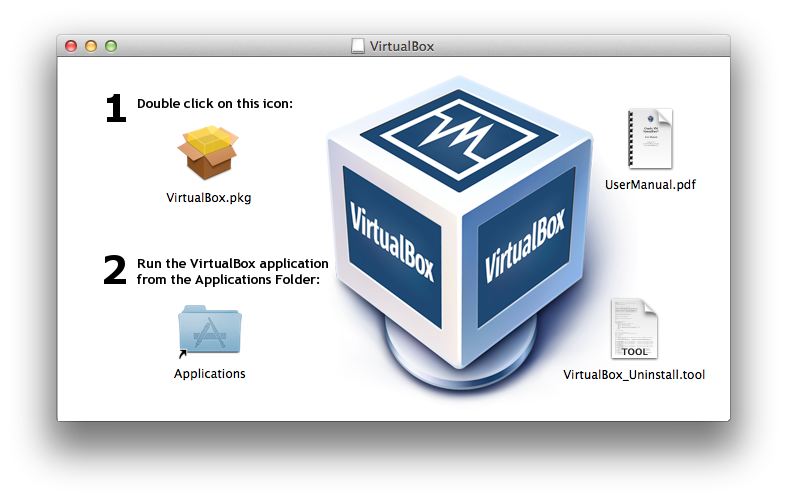

First, you should run the VirtualBox installer. Open the VirtualBox DMG image file you downloaded and click on the Virtualbox.pkg installer, as shown in Figure 2-1.

Accept the license and the installer will complete the installation.

Next, you should run the Vagrant installer. Open the Vagrant DMG image file you downloaded and click on the Vagrant.pkg installer, as shown in Figure 2-2.

Likewise, accept the license agreement and the installer will complete the installation.

Finally, if you don’t already have it, you will need to install Xcode on your machine. Perform the following steps:

xcode into the search bar on the right.On Windows platforms, you must install Git and its tools in order to use Vagrant successfully.

Open your browser to Git Source Code Management and click the Windows Build on the lower right of the page. Run the installer when the download completes.

Accept the default components.

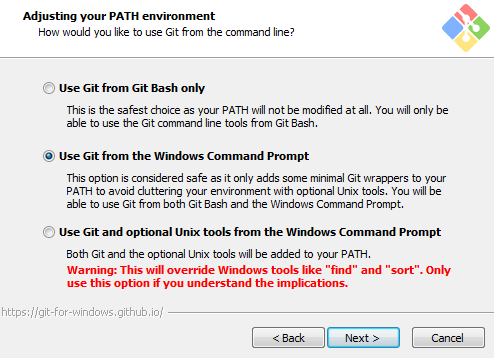

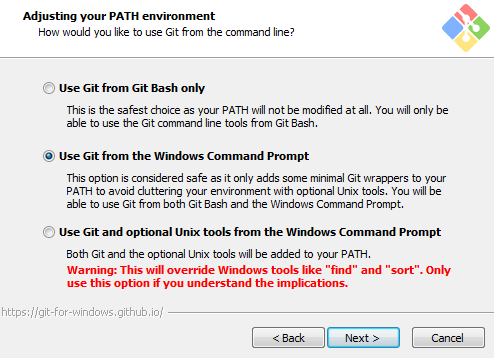

On the “Adjusting your PATH environment” screen, select “Use Git from the Windows Command Prompt,” as shown in Figure 2-3.

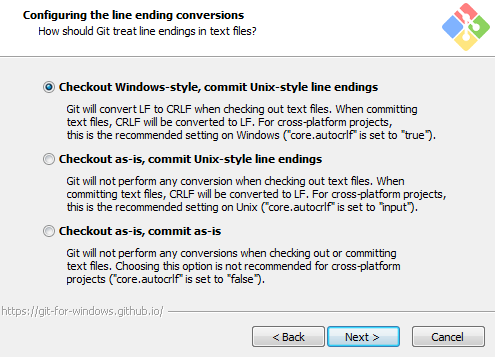

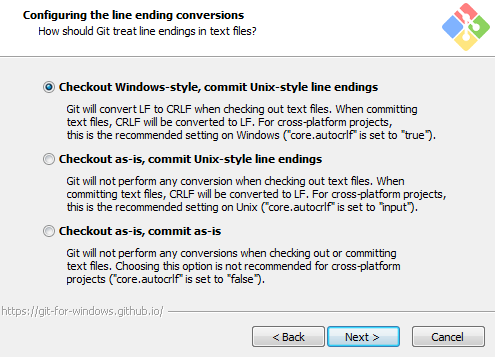

On the “Configuring the line ending conversions” screen (as shown in Figure 2-4), any option will work successfully for this book. Select the default option if you are unsure. You can change this later.

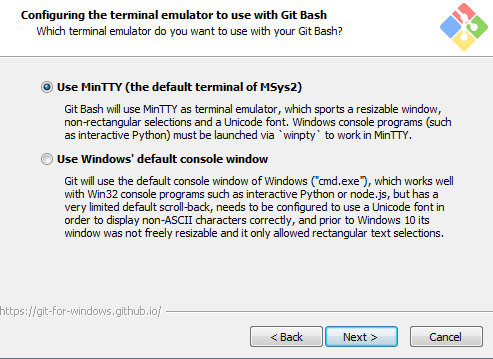

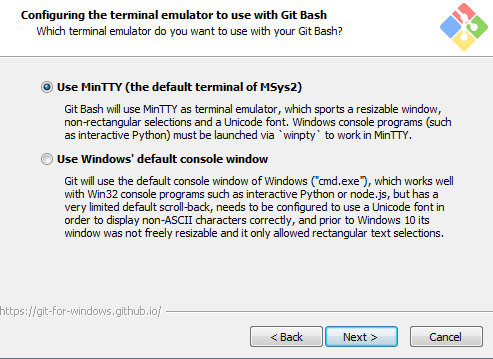

Select the MinTTY terminal emulator on the following screen (Figure 2-5).

Accept the final prompts to complete the installation.

Vagrant will use the VirtualBox virtualization engine to run the test systems. Installing VirtualBox is very straightforward. Run the VirtualBox installer package that you downloaded on the previous page.

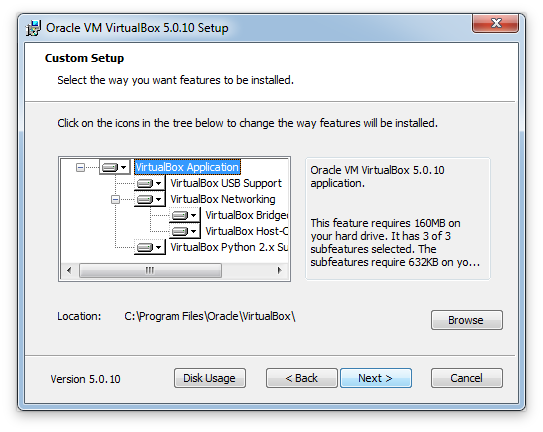

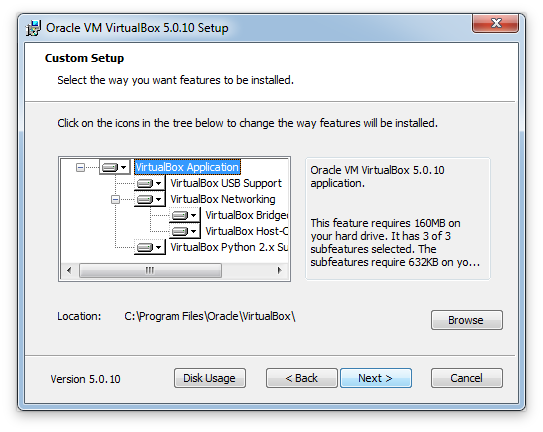

Click Next to start the installation, and then click Next again to install all features (Figure 2-6).

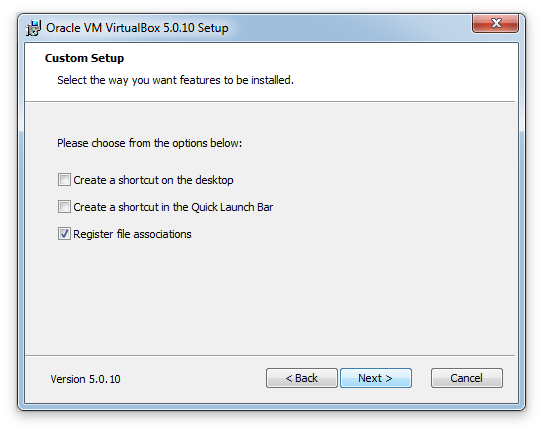

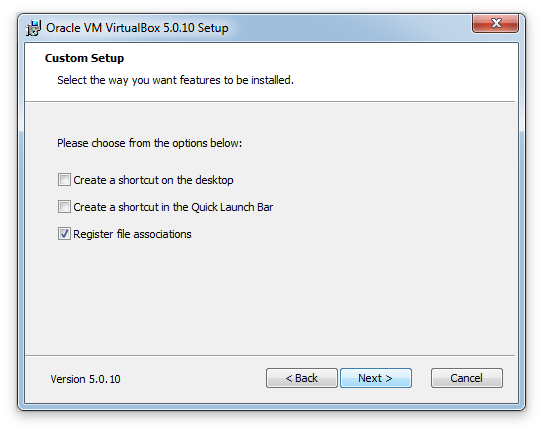

You can disable the options to create shortcuts (Figure 2-7), as these won’t be necessary for the learning environment.

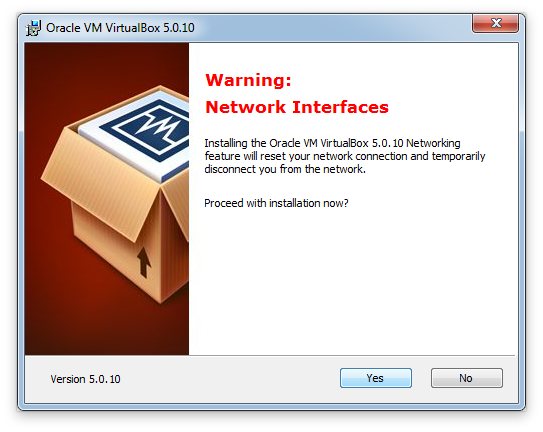

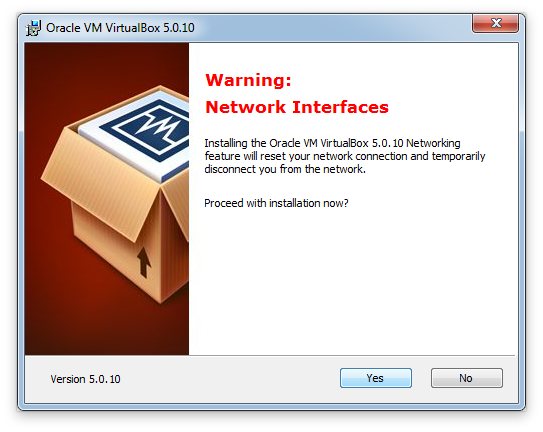

Accept the warning about a short interruption to networking (Figure 2-8). In most situations, you won’t notice any effect.

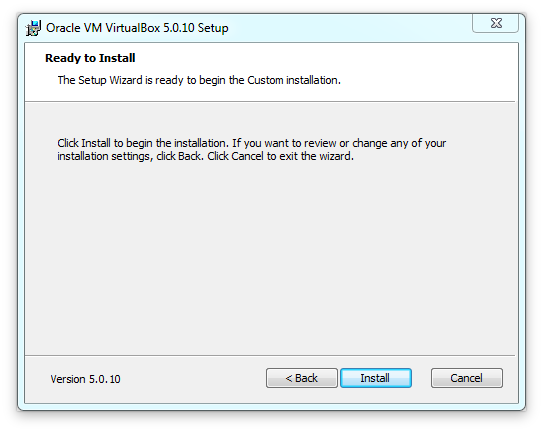

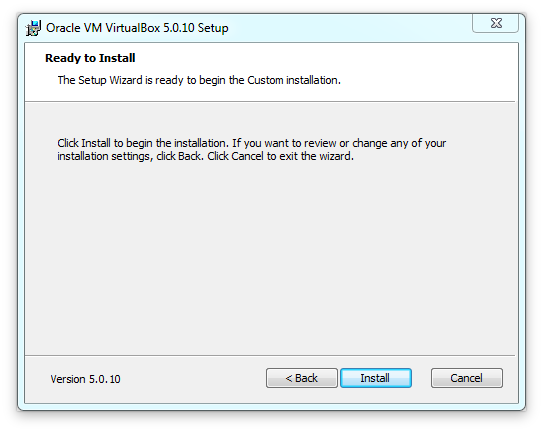

To install VirtualBox, click Install, as shown in Figure 2-9.

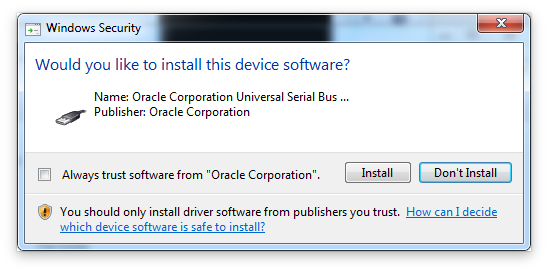

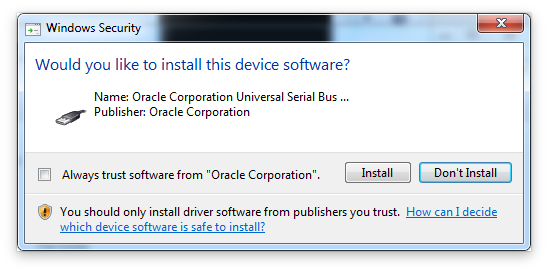

At this point, you’ll be prompted to install several virtualization drivers (Figure 2-10). You can either choose to always trust software from Oracle, or click Install for each one.

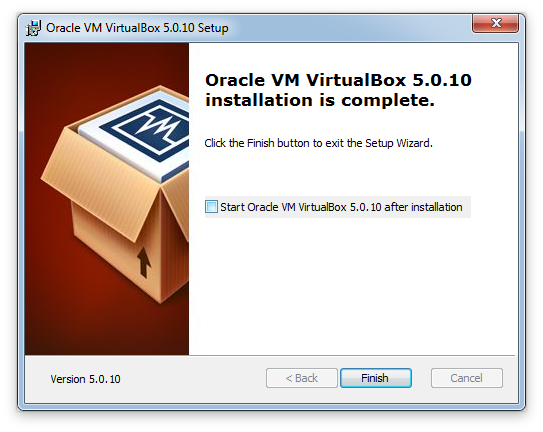

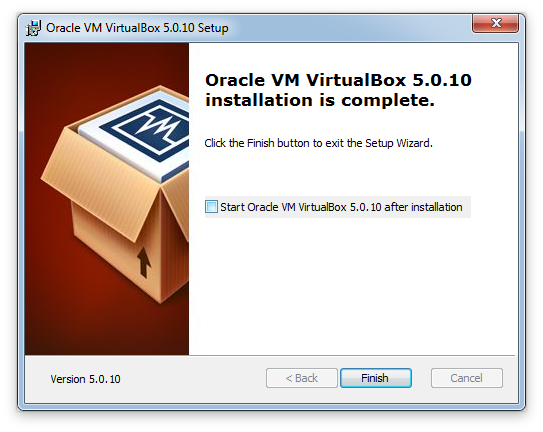

Disable the checkbox to start VirtualBox after installation (Figure 2-11). Vagrant will start it automatically.

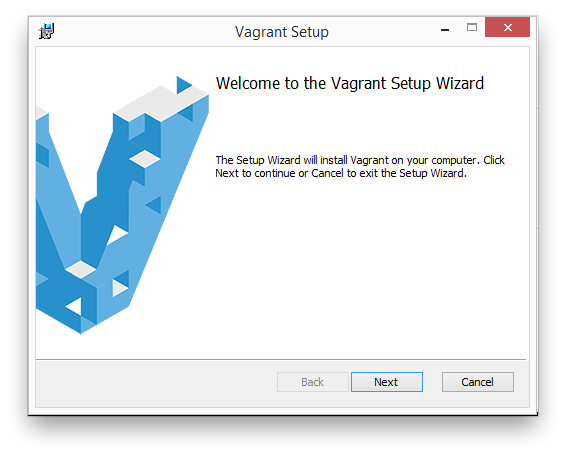

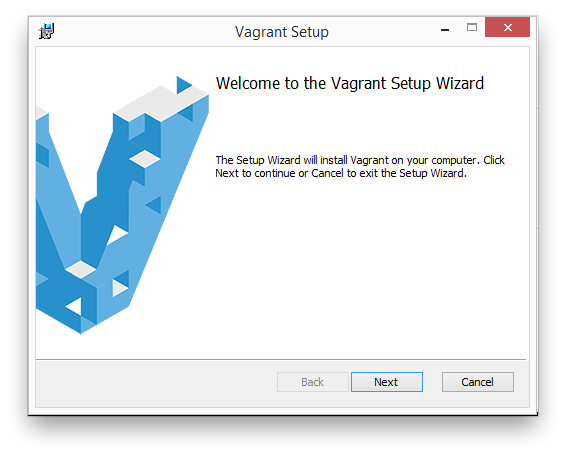

Now we should install Vagrant. Run the Vagrant installer package that you downloaded (Figure 2-12).

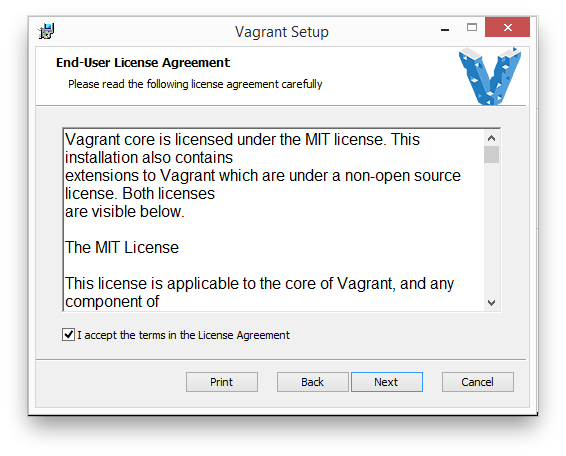

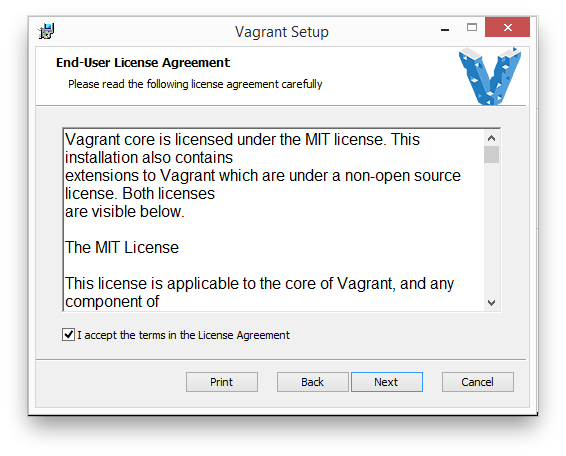

You’ll need to accept the license agreement, as shown in Figure 2-13.

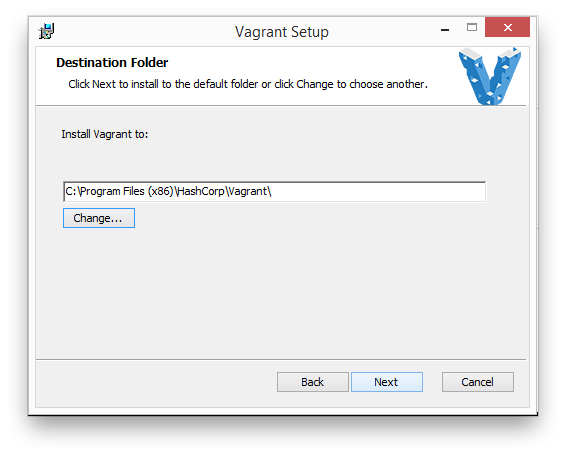

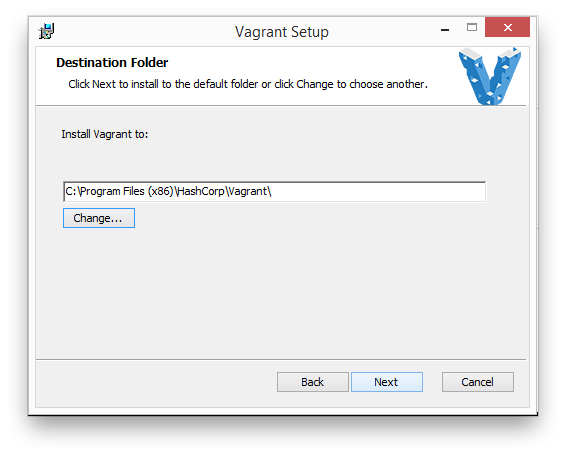

Select where you want to install Vagrant. As Figure 2-14 shows, I prefer to install Vagrant in the normal system location for 64-bit programs. It doesn’t matter which path you choose here.

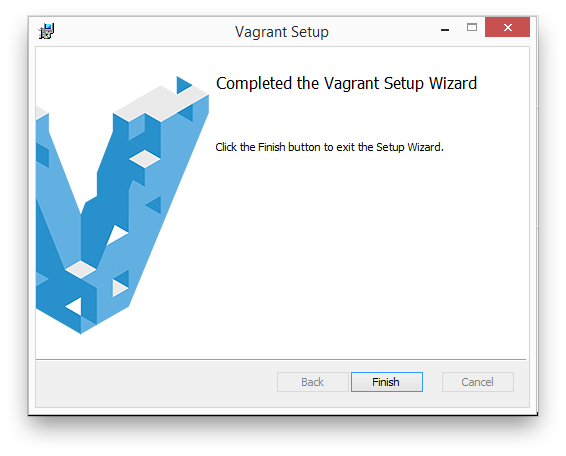

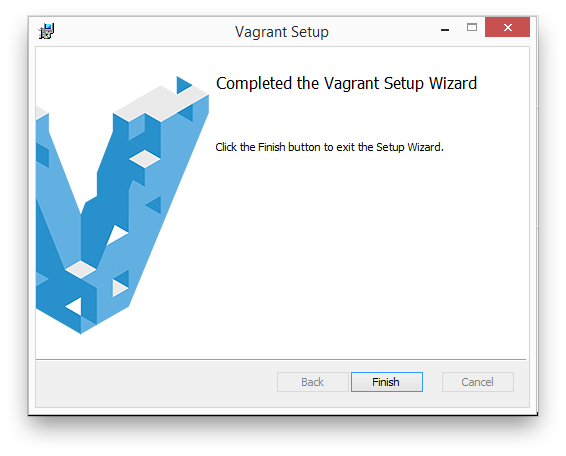

Once you get to the confirmation screen acknowledging completion of the installation, you can exit the Setup Wizard by clicking Finish (Figure 2-15).

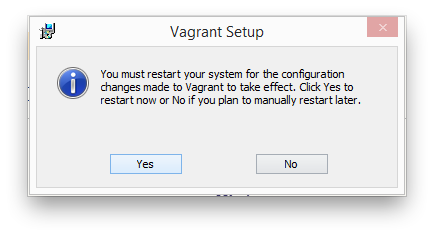

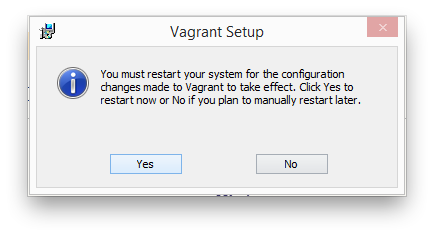

Windows systems will need to reboot, as shown in Figure 2-16.

At this point, you’ll need to start a Bash prompt. We’ll be using Bash for the remainder of this book, so now is a good time to become comfortable using it.

On a Macintosh, follow these steps:

On Windows 7 and before, follow these steps:

On Windows 8, follow these steps:

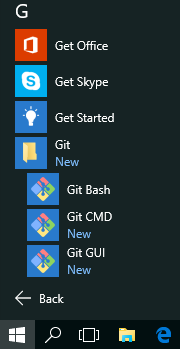

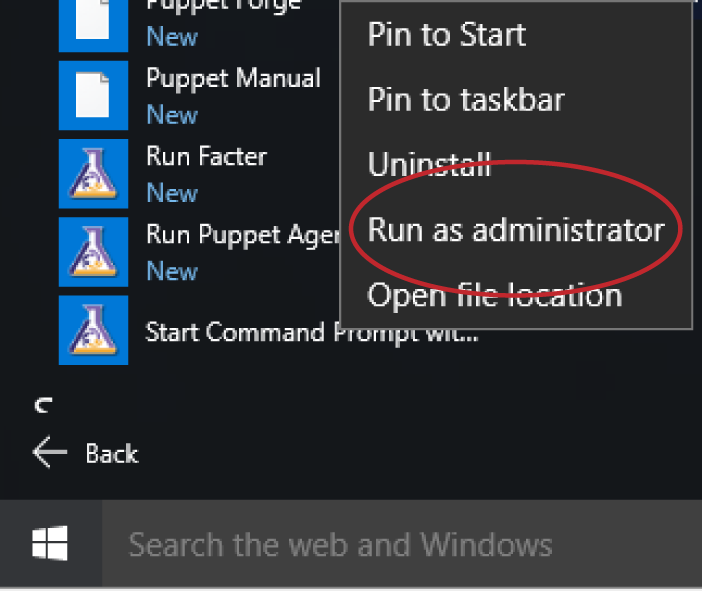

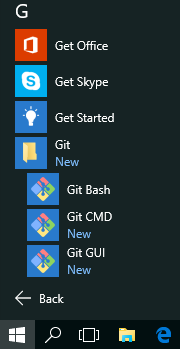

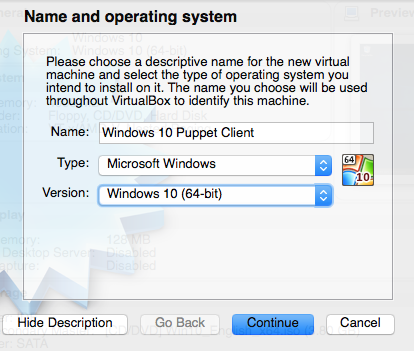

On Windows 10 (see Figure 2-17), follow these steps:

On Linux or any other platform, use the terminal prompt of your choice.

You may use any third-party terminal or command prompt that you prefer. Two popular choices are ConEmu for Windows and iTerm2 for Mac. Tabbed session support will be helpful, as you’ll log in to multiple instances at the same time in some exercises.

No matter which operating system you are using, the instructions assume you will start at a command prompt in your home directory.

To save some time later, you can download the virtual box image used as the base system for our learning environment (as mentioned before, we’ll use CentOS 7, as it is well supported by all Puppet Labs programs and modules):

$vagrantboxadd--providervirtualboxcentos/7==>box:Loadingmetadataforbox'centos/7'box:URL:https://vagrantcloud.com/centos/7==>box:Addingbox'centos/7'(v1707.01)forprovider:virtualboxbox:Downloading:https://vagrantcloud.com/centos/boxes/7/versions/1707.01==>box:Successfullyaddedbox'centos/7'(v1707.01)for'virtualbox'!

You may note that Vagrant Cloud contains many boxes that already have Puppet installed. For this book you will go through the installation of Puppet on a stock system so that you have that experience.

Now we’ll download a repository that contains virtual machine configurations and learning materials. Starting in your home directory, use git to clone the following repository:

$gitclonehttps://github.com/jorhett/learning-puppet4Cloninginto'learning-puppet4'...remote:Countingobjects:238,done.remote:Total238(delta0),reused0(delta0),pack-reused238Receivingobjects:100%(238/238),38.50KiB|0bytes/s,done.Resolvingdeltas:100%(110/110),done.$cdlearning-puppet4/

As shown, change into the learning-puppet4 directory. All further instructions will assume you are in this directory.

Now you should install the vagrant-vbguest plugin. This plugin ensures that the VirtualBox extensions on the virtual machine are kept up to date.

$vagrantplugininstallvagrant-vbguestInstallingthe'vagrant-vbguest'plugin.Thiscantakeafewminutes...Fetching:vagrant-share-1.1.9.gem(100%)Fetching:micromachine-2.0.0.gem(100%)Fetching:vagrant-vbguest-0.14.2.gem(100%)

In particular, this plugin helps avoid problems that happen when a new kernel is installed, such as the /vagrant shared mount not being available.

The learning repository contains a Vagrantfile that lists the systems we’ll use in this book. If you are familiar with Vagrant, you’ll know that we can easily start these systems with vagrant up. We’ll do that now to initialize a client system for learning Puppet:

$vagrantupclientBringingmachine'client'upwith'virtualbox'provider...==>client:Importingbasebox'centos/7'...==>client:MatchingMACaddressforNATnetworking...==>client:Checkingifbox'centos/7'isuptodate...==>client:SettingthenameoftheVM:learning-puppet4_client_1504376676610_6140==>client:Clearinganypreviouslysetnetworkinterfaces...==>client:Preparingnetworkinterfacesbasedonconfiguration...client:Adapter1:natclient:Adapter2:hostonly==>client:Forwardingports...client:22(guest)=>2222(host)(adapter1)==>client:Running'pre-boot'VMcustomizations...==>client:BootingVM...==>client:Waitingformachinetoboot.Thismaytakeafewminutes...client:SSHaddress:127.0.0.1:2222client:SSHusername:vagrantclient:SSHauthmethod:privatekeyclient:client:Vagrantinsecurekeydetected.Vagrantwillautomaticallyreplaceclient:thiswithanewlygeneratedkeypairforbettersecurity.client:client:Insertinggeneratedpublickeywithinguest...client:Removinginsecurekeyfromtheguestifit'spresent...client:Keyinserted!DisconnectingandreconnectingusingnewSSHkey...==>client:Machinebootedandready!

On a first boot the vbguest plugin will install the Virtualbox Guest Additions. This requires installing some CentOS packages we’ll skip over, but it should end like this:

Copy iso file VBoxGuestAdditions.iso into the box /tmp/VBoxGuestAdditions.iso mount: /dev/loop0 is write-protected, mountingread-only Installing Virtualbox Guest Additions 5.1.26 - guest version is unknown Verifying archive integrity... All good. Uncompressing VirtualBox 5.1.26 Guest AdditionsforLinux........... VirtualBox Guest Additions installer Copying additional installer modules ... Installing additional modules ... vboxadd.sh: Starting the VirtualBox Guest Additions. Could not find the X.Org or XFree86 Window System, skipping. Redirecting to /bin/systemctl start vboxadd.service Redirecting to /bin/systemctl start vboxadd-service.service==> client: Checkingforguest additions in VM...==> client: Setting hostname...==> client: Configuring and enabling network interfaces... client: SSH address: 127.0.0.1:2222 client: SSH username: vagrant client: SSH auth method: privatekey==> client: Rsyncing folder: /Users/jorhett/learning-puppet4/=> /vagrant==> client: Running provisioner: shell... client: Running: inline script

We’ve started only a single machine to learn from, named client. There are several other machines available that we will utilize in future chapters:

$vagrant status Current machine states: client running(virtualbox)puppetmaster not created(virtualbox)puppetserver not created(virtualbox)dashboard not created(virtualbox)web1 not created(virtualbox)This environment represents multiple VMs. The VMs are all listed above with their current state. For more information about a specific VM, run`vagrant status NAME`.

You can suspend, resume, and destroy these instances quite easily:

$vagrantsuspendclient==> client: Saving VM state and suspending execution...$vagrant resumeclient==> client: Resuming suspended VM...==> client: Booting VM...==> client: Waitingformachine to boot. This may take a few minutes... client: SSH address: 127.0.0.1:2222 client: SSH username: vagrant client: SSH auth method: private key client: Warning: Connection refused. Retrying...==> client: Machine booted and ready!$vagrant destroy client client: Are you sure you want to destroy the'client'VM?[y/N]n==> client: The VM'client'will not be destroyed, since theconfirmation==> client: was declined.

If you do destroy the instance, just run vagrant up client to create a new instance and start over. We’re not going to spend any more time covering Vagrant here, but remember suspend and resume when you want to stop your test environment but don’t want to have to restart from scratch.

Now that this is running, let’s log in to the client system and get started.

$vagrant ssh client[vagrant@client ~]$

If you are using Windows and a version of Vagrant below 1.7.5, it has a bug that prevents it from recognizing MinTTY properly. Upgrade to a more recent version.

If you are using Windows and not using Git Bash, you may get an error about SSH not being found. This is due to not having the Git binaries in your path. You can correct that for this command prompt like so:

C:\>setPATH=%PATH%;C:\ProgramFiles(x86)\Git\bin

The folder learning-puppet4 from your personal system should be synced to the client node as /vagrant. Let’s do a quick verification that it shows up here. You should see directory contents similar to this:

$ls/vagrantbindocetc-puppetLICENSEmanifestsREADME.mdsystemd-puppetVagrantfile

If you don’t see that, read back in your terminal to see if there was an error message about rsync of the /vagrant directory.

Unfortunately this isn’t a book about VirtualBox, so I feel obliged to send you to the VirtualBox support forum for a solution.

vagrant-vbguest plugin as shown in “Install the Vagrant vbguest Plugin” and restarting the virtual machine.If you are using a virtual machine of your own choice, we’ll need to take a couple of steps so that you can follow the instructions in this book. If possible, mount the learning-puppet4/ directory to the virtual machine as /vagrant/. If you’re not sure how to do this, you can simulate it with the following commands:

$ git clone https://github.com/jorhett/learning-puppet4 … $ sudo ln -s /home/username/learning-puppet4 /vagrant

Throughout this book, you will see the following prompt shown in the examples:

[vagrant@client ~]$

You’ll need to mentally replace this with whatever your virtual node’s shell prompt is. If you wish to ensure it looks the same, you can use the following environment variable to sync them up:

$exportPS1='[vagrant@client \W]\$ '

Before we install Puppet on your virtual system, stop and take a moment to ensure you have a good text editor available to use. There are two ways to use text editors:

One issue for both Mac and Windows users is the handling of carriage returns and line feeds within files. Puppet and Git both work best when lines are terminated by line feeds without carriage returns, which is known as “Unix file format.” It is important to select an editor that can read and write files in this format without any surprises.

Many editors on Windows open Unix format files correctly, but will quietly replace the endings with a carriage return when saving. As your target nodes may expect line-feed–terminated files, this can cause file corruption on the target host. Likewise, the opposite problem can exist: if you are editing files to be written out to Windows nodes, then you may need to preserve the carriage returns in the file. If you have a mixed environment of Unix/Linux and Windows nodes, it is essential that your editor can handle both file formats.

The following subsections provide a list of editors we recommend. I have limited this list to only include editors that handle both file formats well. If you don’t have any Windows nodes, then you can use any editor you like.

There are three editors immediately available to you on the virtual system. All three of these normally create Unix format files, but can edit Windows format files without causing corruption. You can use the editors shown in Table 2-1 from the command line inside your virtual system.

| Text editor | Command | Notes |

|---|---|---|

| Nano | sudo yum install nano |

An easy text editor for beginners—no book required! |

| Vim | sudo yum install vim |

A powerful editor for experienced users; see Learning the vi and Vim Editors (O’Reilly). |

| Emacs | sudo yum install emacs-nox |

A powerful editor for experienced users; see Learning GNU Emacs (O’Reilly). |

As it happens, all three of the aforementioned editors are installed on Macs by default, and available with the Cygwin package for Windows. You can get quite comfortable using these editors on your desktop.

When writing Puppet modules using a Windows system, you’ll run into problems with line endings within templates and source files. Windows uses both a carriage-return character and a line-feed character to end a line, whereas Mac, Linux, and Unix systems use only the line-feed character.

If you open up the files we use in this book with the Windows Notepad editor, you will see that they show up as a single, unbroken line. WorkPad can display the file, but will change the endings when it writes out changes. None of the built-in editors on Windows—Notepad, WordPad, or Word—are safe to use with Unix format files.

For Windows users, I highly recommend Notepad++. It can open and write out both Unix and Windows format files without changing the line endings. It does not reformat files unless you explicitly tell it to.

For Mac users, I recommend the TextWrangler and TextMate editors. The built-in TextEdit editor is minimally sufficient for Unix format files, but cannot handle files in Windows format properly.

If you are already a fan of the Unix editor Vim, you can find a GUI version of it at GVim or MacVim. Vim can safely read and write files in both formats.

If you have experience with the Eclipse IDE, the workspace editor can safely read, write, and convert upon request files in both Unix and Windows formats.

Use the following command to configure your editor of choice in the environment on the virtual machine (you’ll obviously need to set the editor to the one you want to use):

[vagrant@client~]$echo"EDITOR=nano">>~/.bashrc

When following the instructions in this book, any time you see the word $EDITOR in a command to type, you can either type this exactly or replace it with your editor. So, for example:

[vagrant@client~]$$EDITORfilename

would work exactly the same as:

[vagrant@client~]$nanofilename

In this chapter, you created a virtualized environment suitable for learning and testing Puppet without affecting any production or personal systems.

The default learning environment we recommended had you install the Git development tools, Vagrant, and VirtualBox to provide a virtualized CentOS system on which you can test and develop.

If you already have a virtualization platform that you prefer to work with, you are welcome to use that instead. We recommended using an operating system compatible with RedHat Enterprise Linux 6 or 7, as it is the best tested and guaranteed version for compatibility with Puppet Supported modules that we will be using throughout the book. After you feel confident in the use of Puppet, there are detailed notes for use on other platforms in Appendix A.

During the course of this chapter, you cloned a Git repository that contains Vagrant system definitions, some example manifests, and other helpful files we’ll use throughout this book.

We also discussed the need for editing text files, and how you can use either an editor on the virtualized system or an editor on your desktop to create and edit Puppet manifests.

This environment is yours to keep. It will be useful as you develop and test Puppet manifests and modules during the learning process.

In this chapter, you will install the Puppet agent and its dependencies. We have deliberately chosen a Vagrant box that doesn’t have Puppet preinstalled, so that you can go through and learn the process. You can repeat these exact steps on any test or production node to install or upgrade Puppet.

Let’s get started by installing the Puppet Labs package repository.

Now we’ll install and enable a Puppet Labs Puppet Collection repository on your fresh new system. Our first step is to check what the latest Puppet Collection is. You can find this at “Using Puppet Collections” in the Puppet Labs online documents.

Get the URL for the Enterprise Linux 7 Collection and install that package as described in the documentation.

For EL/CentOS versions 6 and above, you can simply use yum install rather than rpm -ivh:

[vagrant@client~]$sudoyuminstall-y\http://yum.puppetlabs.com/puppetlabs-release-pc1-el-7.noarch.rpm

This installs and enables a Puppet Collection package repository, which contains the Puppet 4 package. After it has finished installing, you can confirm it is enabled with the following command:

[vagrant@client~]$sudoyumrepolistLoadedplugins:fastestmirror...sniprepositorychecks...repoidreponamestatusbase/7/x86_64CentOS-7-Base9,363extras/7/x86_64CentOS-7-Extras451puppetlabs-pc1/x86_64PuppetLabsPC1Repositoryel7-x86_64128updates/7/x86_64CentOS-7-Updates2,146repolist:12,088

This shows you that there were 128 packages in the puppetlabs-pc1 repository (when this book was last updated).

The Puppet ecosystem contains many tightly related and dependent packages. Puppet, Facter, MCollective, and the Ruby interpreter are all tightly related dependencies. The Puppet agent, Puppet server, and PuppetDB are self-standing but interdependent applications.

Production Puppet environments have been struggling with several conflicting needs:

Puppet Labs has chosen to address these concerns with two related changes:

Now let’s go ahead and install the Puppet agent:

[vagrant@client~]$sudoyuminstall-ypuppet-agentLoadedplugins:fastestmirrorLoadingmirrorspeedsfromcachedhostfile*base:centos.sonn.com*extras:mirrors.loosefoot.com*updates:mirrors.sonic.netResolvingDependencies-->Runningtransactioncheck--->Packagepuppet-agent.x86_640:1.10.9-1.el7willbeinstalled-->FinishedDependencyResolution...sniplotsofoutput...RunningtransactioncheckRunningtransactiontestTransactiontestsucceededRunningtransactionInstalling:puppet-agent-1.10.9-1.el7.x86_641/1Verifying:puppet-agent-1.10.9-1.el7.x86_641/1Installed:puppet-agent.x86_640:1.10.9-1.el7Complete!

Guides for installing Puppet on other platforms can be found in Appendix A.

If you have installed previous versions of Puppet, you’re accustomed to seeing dependency packages installed along with Puppet. Puppet 4 uses an All In One (AIO) installer, where all dependencies are installed together with Puppet. You can view these in the new installation directory:

[vagrant@client ~]$ls -la /opt/puppetlabs/bin/ total 0 drwxr-xr-x2root root68Apr64:41 . drwxr-xr-x4root root29Apr64:41 .. lrwxrwxrwx1root root33Apr64:41 facter -> /opt/puppetlabs/puppet/bin/facter lrwxrwxrwx1root root32Apr64:41 hiera -> /opt/puppetlabs/puppet/bin/hiera lrwxrwxrwx1root root30Apr64:41 mco -> /opt/puppetlabs/puppet/bin/mco lrwxrwxrwx1root root33Apr64:41 puppet -> /opt/puppetlabs/puppet/bin/puppet

Unlike previous versions, the Puppet commands are not installed in /usr/bin, and won’t be available in your default path. This puppet-agent package adds the file /etc/profile.d/puppet-agent.sh, which will add /opt/puppetlabs/bin to your path. This file is automatically included by the Bash shell during initialization. Let’s use that now:

[vagrant@client~]$whichpuppet/usr/bin/which:nopuppetin(/usr/local/bin:/usr/bin:/usr/local/sbin:/usr/sbin)[vagrant@client~]$source/etc/profile.d/puppet-agent.sh[vagrant@client~]$whichpuppet/opt/puppetlabs/bin/puppet

$PATH in your shell configuration files, you must add source /etc/profile.d/puppet-agent.sh after that point to have the path added.Let’s review the other programs you see in this directory besides Puppet:

Facter is a program that evaluates a system and provides a number of facts about it. These facts include node-specific information (e.g., architecture, hostname, and IP address), in addition to custom information from plugins provided by Puppet modules. For a sneak preview, run the command facter right now and look at all the information it has.

We’ll be covering how to make use of Facter facts in Chapter 5, and how to create custom facts in Part II.

Hiera is a component we’ll use to load in the data used by Puppet manifests and modules. Hiera allows you to provide default values and then override or expand them through a customizable hierarchy. This may sound complex, but Hiera’s beauty and elegance comes from its simplicity.

You’ll learn how to use Hiera in Part II, and after its introduction, you will see Hiera used in every subsequent example in the book.

Marionette Collective, or MCollective, is an orchestration framework tightly integrated with Puppet.

You’ll learn how to use it in Part IV, where we’ll use the mco client to manipulate the Puppet agent.

If you have installed previous versions of Puppet, you’ll notice that the installation packages and the configuration file paths have all changed.

For Unix and Linux systems, the following changes have taken place:

puppet-agent packagegem are installed in /opt/puppetlabs/puppet, to avoid them being accidentally called by users.$confdir is now /etc/puppetlabs/puppet$ssldir directory is now $confdir/ssl$vardir for Puppet is now /opt/puppetlabs/puppet/cache/puppet agent and puppet apply. You can change this by setting $vardir in the Puppet config file.$rundir for Puppet agent is now /var/run/puppetlabsPuppet code and data has moved from $confdir to a new directory $codedir. This directory contains:

$environmentpath$basemodulepathOn Windows, very little has changed. The Puppet package has always been an AIO installer. The package now includes MCollective. Executables remain in the same location, and the MSI package still adds Puppet’s tools to the PATH. The $confdir and $rundir have not changed.

For review, the file locations are:

$confdirCOMMON_APPDATA defaults to C:\ProgramData\PuppetLabs\puppet\etc$codedir$vardir$rundirThroughout this book, you’ll edit files and make changes within the Puppet configuration directory. Normally, this would require you to type sudo every time you want to change a file.

I have found that most people prefer the following change to create an easier-to-use environment for learning (this command will make the vagrant user the owner of all files in that directory):

[vagrant@client~]$sudochown-Rvagrant/etc/puppetlabs

The remainder of this book assumes you have made this change. If you decide to continue using sudo, you’ll need to add it to any command involving files in /etc/puppetlabs.

[vagrant@client~]$sudochown-Rvagrant/etc/puppetlabs[vagrant@client~]$sudopuppetapply-e''Notice:Compiledcatalogforclient.example.cominenvironmentproductionNotice:Appliedcatalogin0.01seconds

Obviously you won’t be using the vagrant user in a production environment. However, it is not uncommon to see something like the following done to give write access to all members of a certain group:

[vagrant@client ~]$ sudo chgrp -R sysadmin /etc/puppetlabs [vagrant@client ~]$ sudo chmod -R g+w /etc/puppetlabs

One reason you may not want to change the ownership of this directory would be if your Puppet deployment was managed by continuous integration tools, and you want to ensure that everybody uses the tools. That won’t be a problem when using this book, and chown -R vagrant /etc/puppetlabs is highly recommended for the lessons here.

When you run Puppet with sudo, it uses the system-level configuration files, like so:

[vagrant@client~]$sudopuppetconfig|grepdirconfdir=/etc/puppetlabs/puppetcodedir=/etc/puppetlabs/codevardir=/opt/puppetlabs/puppet/cachelogdir=/var/log/puppetlabs/puppet...etc...

When you run Puppet without sudo, it will use paths in your home directory for configuration files. This can be useful when you are writing and testing small manifests:

[vagrant@client~]$puppetconfig|grepdirconfdir=/home/vagrant/.puppetlabs/etc/puppetcodedir=/home/vagrant/.puppetlabs/etc/codevardir=/home/vagrant/.puppetlabs/opt/puppet/cachelogdir=/home/vagrant/.puppetlabs/var/log...etc...

Most people don’t want to be constantly migrating changes back and forth between two different directory structures. There are two ways to resolve this dilemma:

sudo every time you run PuppetI much prefer the second approach, and I recommend it for daily use. For this reason, we have preinstalled the ~/.puppetlabs/etc/puppet/puppet.conf configuration file on all Vagrant configurations, which enables this for you. Check it out:

[vagrant@client~]$cat~/.puppetlabs/etc/puppet/puppet.conf# Allow "puppet hiera" and "puppet module" commands without sudo[main]logdest=consoleconfdir=/etc/puppetlabs/puppetcodedir=/etc/puppetlabs/code

If you don’t like this setup, you are welcome to remove this file. Just remember to use sudo with every Puppet command, or it will use a different configuration file than you expect.

On most systems, the sudo command resets the user search path to a list of known safe directories. Unfortunately, this does not include the Puppet directory. You can see the effect of this change with the following command:

[vagrant@clientvagrant]$env|grepPATHPATH=/usr/local/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/opt/puppetlabs/bin:/home/vagrant/.local/bin:/home/vagrant/bin[vagrant@clientvagrant]$sudoenv|grepPATHPATH=/bin:/sbin:/bin:/usr/sbin:/usr/bin

If you want to run Puppet with sudo, you’ll need to add the /opt/puppetlabs/bin directory to sudo’s secure_path default. The following command will get the current secure_path from the sudoers file, and create a sudoers.d/ config extension that appends /opt/puppetlabs/bin:

$sudogrepsecure_path/etc/sudoers\|sed-e's#$#:/opt/puppetlabs/bin#'\|sudotee/etc/sudoers.d/puppet-securepathDefaultssecure_path=/sbin:/bin:/usr/sbin:/usr/bin:/opt/puppetlabs/bin$sudoenv|grepPATHPATH=/sbin:/bin:/usr/sbin:/usr/bin:/opt/puppetlabs/bin

During the course of this chapter, you did the following:

Best of all, none of this was done with magic helper scripts that hid the details from you. You can repeat these exact steps on any test or production node to install or upgrade Puppet.

The very first concept we want to introduce you to is the Puppet manifest. A manifest is a file containing Puppet configuration language that describes how resources should be configured. The manifest is the closest thing to what one might consider a Puppet program. It declares resources that define state to be enforced on a node. It is therefore the base component for Puppet configuration policy, and a building block for complex Puppet modules.

This chapter will focus on how to write configuration policies for Puppet 4 manifests. Writing manifests well is the single most important part of building Puppet policies.

Let’s get started with the smallest component within a manifest.

Resources are the smallest building block of the Puppet configuration language. They represent a singular element that you wish to evaluate, create, or remove. Puppet comes with many built-in resource types. The stock resource types manipulate system components that you are already familiar with, including:

Furthermore, you can create your own resources. However, we’ll begin with one of the simplest resources—the notify resource. Let’s start with the standard first program written in every language:

notify{'greeting':message=>'Hello, world!'}

This code declares a notify resource with a title of greeting. It has a single attribute, message, which is assigned the value we’d expect in our first program. Attributes are separated from their values by a hash rocket (also called a fat comma), which is a very common way to identify key/value pairs in Perl, Ruby, and PHP scripting languages.

This tiny bit of code is a fully functional and valid manifest. This manifest (with one single resource) does only one thing, which is to output that greeting message every time it is called. Let’s go ahead and use Puppet to evaluate this manifest:

[vagrant@client~]$cat/vagrant/manifests/helloworld.ppnotify{'greeting':message=>'Hello, world!'}

As you can see from this example, manifests are text files named with a .pp file extension—they describe resources using the Puppet configuration language. You can create or modify a Puppet manifest using any text editor.

One of Puppet’s best features is the ease of testing your code. Puppet does not require you to set up complicated testing environments to evaluate Puppet manifests. It is easy—nay, downright trivial—to test a Puppet manifest.

Let’s go ahead and apply this manifest. We will do this using the puppet apply command, which tells Puppet to apply a single Puppet manifest:

[vagrant@client~]$puppetapply/vagrant/manifests/helloworld.ppNotice:Compiledcatalogforclient.example.cominenvironmentproductionNotice:Hello,world!Notice:/Stage[main]/Main/Notify[greeting]/message:defined'message'as'Hello, world!'Notice:Finishedcatalogrunin0.01seconds

As you can see, Puppet has applied the manifest. It does this in several steps:

Don’t worry about memorizing these steps at this point in the learning process. For now, it’s just important that you have a general idea of the process—we’ll discuss these concepts in increasing depth throughout this book. We’ll cover the catalog build and evaluation process in great detail at the end of Part I.

For now, just remember that you’ll use puppet apply to apply Puppet manifests. It will provide you verbose feedback on actions Puppet took to bring the target resources into alignment with the declared policy.

There are only a few rules to remember when declaring resources. The format is always the same:

resource_type{'resource_title':ensure=>present,# usually 'present' or 'absent'attribute1=>1234,# numbers are unquotedattribute2=>'value',# strings should be quotedattribute3=>['red','blue'],# arrays contain other data typesnoop=>false,# boolean is unquoted true/false}

The most important rule for resources is: there can be only one. Within a manifest or set of manifests being applied together (the catalog for a node), a resource of a given type can only be declared once with a given title. Every resource of that type must have a unique title.

For example, the following manifest will fail because the same title is used for both file resources:

[vagrant@client~]$catmyfile.ppfile{'my_file':ensure=>present,path=>'my_file.txt',}file{'my_file':ensure=>present,path=>'my_file.csv',}notify{'my_file':message=>"My file is present",}[vagrant@client~]$puppetapplymyfile.ppError:EvaluationError:ErrorwhileevaluatingaResourceStatement,Duplicatedeclaration:File[my_file]isalreadydeclaredinfile/home/vagrant/myfile.pp:1;cannotredeclareat/home/vagrant/myfile.pp:6

You’ll notice that no complaint was given for the notify resource with the same title. This is not a conflict. Only resources of the same type cannot utilize the same title. Naming the preceding files with their full paths ensures no conflicts:

file{'/home/vagrant/my_file.txt':ensure=>present,path=>'/home/vagrant/my_file.txt',}file{'/home/vagrant/my_file.csv':ensure=>present,path=>'/home/vagrant/my_file.csv',}

Puppet can show you an existing resource written out in the Puppet configuration language. This makes it easy to generate code based on existing configurations. Let’s demonstrate how to view a resource with an email alias:

[vagrant@client~]$puppetresourcemailaliaspostmastermailalias{'postmaster':ensure=>'present',recipient=>['root'],target=>'/etc/aliases',}

The puppet resource command queries the resource and shows its current state as Puppet sees it. The output gives you the exact structure, syntax, and attributes to declare this mailalias resource in a Puppet manifest. You can add this to a manifest file, change the recipient, and then use puppet apply to change the postmaster alias on this node.

Let’s examine another resource—the user you are logged in as:

[vagrant@client~]$puppetresourceuservagrantError:Couldnotrun:undefinedmethod'exists?'fornil:NilClass

This somewhat confusing error message means that you don’t have the privileges to view that resource—so let’s escalate our privileges to complete this command with sudo:

[vagrant@client~]$sudopuppetresourceuservagrantuser{'vagrant':ensure=>'present',gid=>'1000',groups=>['vagrant'],home=>'/home/vagrant',password=>'$1$sC3NqLSG$FsXVyW7azpoh76edOfAWm1',password_max_age=>'99999',password_min_age=>'0',shell=>'/bin/bash',uid=>'1000',}

If you look at the resource, you’ll see why root access was necessary. The user resource contains the user’s password hash, which required root privilege to read from the shadow file. As with the previous mailalias resource, you could write this user resource to a manifest file, replace the password hash, and use sudo puppet apply to change the root password.

Let’s examine a resource type that executes commands. Use the exec resource to execute programs as part of your manifest:

exec{'echo-holy-cow':path=>['/bin'],cwd=>'/tmp',command=>'echo "holy cow!" > testfile.txt',creates=>'/tmp/testfile.txt',returns=>[0],logoutput=>on_failure,}

Now when you apply this manifest, it will create the testfile.txt file. Notice that we use single quotes to encapsulate the values given to the attributes.

Use single quotes for any value that does not contain a variable. This protects against accidental interpolation of a variable. Use double quotes with strings containing variables.

The exec resource declared above uses the creates attribute. This attribute defines a file that will be created by the command execution. When the named file exists, the command is not executed. This means the manifest can be run repeatedly and nothing will change after the file is initially created. Let’s test this out here:

[vagrant@client~]$puppetapply/vagrant/manifests/tmp-testfile.ppNotice:Compiledcatalogforclient.example.cominenvironmentproductionNotice:/Stage[main]/Main/Exec[echo-holy-cow]/returns:executedsuccessfullyNotice:Finishedcatalogrunin0.07seconds[vagrant@client~]$puppetapply/vagrant/manifests/tmp-testfile.ppNotice:Compiledcatalogforclient.example.cominenvironmentproductionNotice:Finishedcatalogrunin0.01seconds

There are a wide variety of attributes you can use to control whether or not an exec resource will be executed, and which exit codes indicate success or failure. This is a complex and feature-rich resource. Whenever you create an exec resource, test carefully and refer to “Puppet Type Reference: exec” on the Puppet docs site.

I must beg your forgiveness: I have deliberately led you astray to teach you a common mistake for newcomers to declarative programming. While exec is an essential resource type, it is best to avoid using it whenever possible. We’ll apply the exact same result with a more appropriate file resource next.

If you examine the preceding exec resource, you’ll note that we had to declare how to make the change, and also whether or not to make the change. This is very similar to imperative programming, and can be very difficult to maintain.

This resource protects itself against running again with the creates attribute. However, if the contents of the file were changed, this resource would not repair the contents. We would need to add more tests to validate the file contents.

It is generally difficult to write declarative code using an exec resource. There is a tendency to revert to an imperative programming style. Except in circumstances where no other method is possible, use of an exec is generally an indication of a poorly written manifest. In fact, at several companies where I have worked, the presence of an exec resource within a commit caused it to be flagged for a mandatory code review.

How else could we create this file? We could have used the file resource. Let’s examine one now:

file{'/tmp/testfile.txt':ensure=>present,mode=>'0644',replace=>true,content=>'holy cow!',}

This is a proper declarative policy. We declare that the file should exist, and what the contents of the file should be. We do not need to concern ourselves with how, or when to make changes to the file. Furthermore, we were able to ensure the contents of the file remained consistent, which is not possible within an echo command.

Let’s apply this policy now:

[vagrant@client~]$puppetapply/vagrant/manifests/file-testfile.ppNotice:Compiledcatalogforclient.example.cominenvironmentproductionNotice:/Stage[main]/Main/File[/tmp/testfile.txt]/content:contentchanged'{md5}0eb429526e5e170cd9ed4f84c24e4'to'{md5}3d508c8566858d8a168a290dd709c'Notice:/Stage[main]/Main/File[/tmp/testfile.txt]/mode:modechanged'0664'to'0644'Notice:Finishedcatalogrunin0.03seconds[vagrant@client~]$puppetapply/vagrant/manifests/file-testfile.ppNotice:Compiledcatalogforclient.example.cominenvironmentproductionNotice:Finishedcatalogrunin0.02seconds

Unlike with the previous exec resource, Puppet observed that the contents were different and changed the file to match. Now, you’re probably thinking to yourself, “Aren’t the file contents the same in both?” Nope. It’s not obvious in the exec declaration, but echo appends a trailing newline to the text. As you can see here, the file contents don’t include a newline:

[vagrant@client~]$cat/tmp/testfile.txtholycow![vagrant@client~]$

You can easily adjust the file contents to include as many newline characters as you want. Because the newline character is interpolated, you’ll need to use double quotes around the contents.

You could also change the replace attribute from true to false if you want to initially create a missing file, but not replace one that has been changed.

file{'/tmp/testfile.txt':ensure=>present,mode=>'0644',replace=>false,content=>"holy cow!\n",}

You can find complete details of the many attributes available for the file resource at “Puppet Type Resource: file” on the Puppet docs site.

Every file changed by a Puppet file resource is backed up on the node in a directory specified by the $clientbucketdir configuration setting. Unfortunately, the file backups are stored in directories indexed by a hash of the file contents, which makes them quite tricky to find.

You can back up a file to this storage any time you want, like so:

$sudopuppetfilebucket--localbackup/tmp/testfile.txt/tmp/testfile.txt:3d508c856685853ed8a168a290dd709c

Use this command to get a list of every file backed up. Here you can see the backup performed by puppet apply when it changed the file, as well as your manual backup a few minutes later:

$sudopuppetfilebucket--locallist0eb429526e5e170cd9ed4f84c24e442b2015-11-1908:18:06/tmp/testfile.txt3d508c856685853ed8a168a290dd709c2015-11-1908:23:42/tmp/testfile.txt

Use the hash associated with a specific file version to view the contents of the file at that point in time:

$sudopuppetfilebucket--localget0eb429526e5e170cd9ed4f84c24e442bholycow!

You can compare an installed file to a backup version, or compare two backup versions, using the filebucket diff command:

$sudopuppetfilebucket--localdiff\0eb429526e5e170cd9ed4f84c24e442b/tmp/testfile.txt---/tmp/diff20151119-4940-1h3dwn92015-11-1908:20:59.994974403+0000+++/tmp/testfile.txt2015-11-1908:18:06.162104809+0000@@-1+1@@-holycow!+holycow!\Nonewlineatendoffile

You can restore a stored file to any location: