Essential Tools and Best Practices for Deploying Code to Production

Copyright © 2019 Daniel Bryant and Cosota Team Ltd. All rights reserved.

Printed in the United States of America.

Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472.

O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (http://oreilly.com/safari). For more information, contact our corporate/institutional sales department: 800-998-9938 or corporate@oreilly.com.

See http://oreilly.com/catalog/errata.csp?isbn=9781491986028 for release details.

The O’Reilly logo is a registered trademark of O’Reilly Media, Inc. Continuous Delivery in Java, the cover image, and related trade dress are trademarks of O’Reilly Media, Inc.

The views expressed in this work are those of the authors, and do not represent the publisher’s views. While the publisher and the authors have used good faith efforts to ensure that the information and instructions contained in this work are accurate, the publisher and the authors disclaim all responsibility for errors or omissions, including without limitation responsibility for damages resulting from the use of or reliance on this work. Use of the information and instructions contained in this work is at your own risk. If any code samples or other technology this work contains or describes is subject to open source licenses or the intellectual property rights of others, it is your responsibility to ensure that your use thereof complies with such licenses and/or rights.

978-1-491-98602-8

There’s been broad agreement in the Continuous Delivery community that tools don’t matter ever since Dave Farley and Jez Humble wrote Continuous Delivery. There are plenty of good programming languages out there, and plenty of good tools for building, testing, and deploying your code to production. The wisdom of the crowd has been: it doesn’t matter which tools you use, as long as you avoid the really terrible tools.

This year, that wisdom has been augmented by the work of Dr. Nicole Forsgren, Jez Humble, and Gene Kim. Their book Accelerate summarises multiple years of research into Continuous Delivery and IT performance. One of their conclusions is that the ability of teams to choose their own tools has a strong, statistically significant impact on Continuous Delivery. So, now the wisdom of the crowd is: it doesn’t matter which tools you use, as long as you’re able to choose them yourself and you avoid the really terrible tools.

Take me, for example. The first time I did Continuous Delivery on a team was at Elsevier in 2007. We built a journal website in Java 6, Spring 2, and Tomcat 6 using XP practices like TDD and CI. The pipeline was Ant and Cruise Control. The codebase was always releasable, and once a week we deployed to production.

The first time I did Continuous Delivery across an entire company was at LMAX in 2008. We built a state of the art financial exchange in Java 6, Spring 3, and Resin 3 using XP practices and Domain Driven Design. The pipeline was Ant and Cruise Control, with a lot of custom dashboards. The codebase was always releasable, and once a fortnight we deployed to production.

I’m sure you can see the similarities there. Groups of smart people worked closely together. XP practices and sound design principles were essential. The tools used were deliberately chosen for the task at hand. And in the case of LMAX, it helped that the Head of Development was writing the inaugural book on Continuous Delivery at the time. I think his name was Dafydd, or Dev, or something.

What this all means is you can use Java, or PHP, or .NET, and successfully implement Continuous Delivery. You can use Solaris Zones, or Docker. You can use AWS, Azure, or that on-premises data centre your Head Of Platform keeps saying is cheaper than AWS. Just make sure you choose your tools yourself, for the particular problems you face. And don’t use MKS for version control, QTP for testing, or any commercial release management tool. They’re terrible.

So, if tools don’t matter for Continuous Delivery as long as you choose them yourself and they’re not terrible choices, why am I writing this foreword?

There’s actually a nuanced answer in here, if we look hard enough. Tools don’t matter as much as the principles and practices of Continuous Delivery, but they still matter a great deal. Programming languages can help people to quickly create new features and defect fixes, which reduces the Cost of Delay associated with product development. Programming languages can also encourage a testable, releasable application architecture, which is a key enabler of Continuous Delivery. Build, test, and deploy tooling can nudge people in the right direction, towards practices such as TDD and Trunk Based Development.

I was reminded of that nuance recently, when I cleared out my childhood bedroom and found my university copy of Ivor Horton’s Understanding Java 2. Java and I married each other in 1999, and now it’s been so long we’ve both forgotten it’s our 20 year anniversary very soon. In my opinion, it’s a great programming language. Over the years Java, JUnit, Gradle, Spring and many other tools have helped me to build well-tested, releasable applications, and encourage people to adopt Continuous Delivery.

With Cloud, containerization, and Serverless leading our inexorable march towards Skynet, we all need guidance from experienced practitioners on how to use the latest tools and work towards Continuous Delivery. In this book, Daniel and Abraham explain how to use Java and popular tools such as Spring Boot, Kubernetes, and AWS EKS to deliver modern web applications frequently enough to meet market demand. IT practitioners working with Java and any of the other tools mentioned in this book can rely on Daniel and Abraham to explain how to implement a Continuous Delivery toolchain for their applications.

Continuous delivery is one of the key practices that should be at the heart of any engineering team. We have often been asked what some of the key enablers of success are at jClarity and at the new build farm for OpenJDK/Java at adoptopenjdk.net. The answer is that we can deploy daily with the greatest of confidence, and do this with minimal engineering teams. Ever since Dave Farley’s and Jez Humble’s seminal Continuous Delivery: Reliable Software Releases through Build, Test, and Deployment Automation (Addison-Wesley Signature) came out in 2010, folks have been adopting continuous delivery practices, but there has not been a comprehensive guide on how to do this for the ~10 million Java developers out there. Well, now there is!

Daniel and Abraham are both real-world practitioners and their book contains everything you need as a Java developer on this topic, with in-depth explanations as to “why” you want to follow the practices of continuous delivery; how to architect your application in a way that is amenable to this; how to put together build, test, and deploy pipelines; and also the intricacies of deploying to cloud and container environments.

The impact of “cloud native” technologies on Java cannot be overstated: modern applications must now contend with such concerns as connectivity to a larger number of external components (both JVM and not), and a very different approach to handling resources (such as I/O) that had traditionally been provided by the local operating system. Even the life cycle of applications, and their locality to specific machines, is changing, with approaches such as immutable infrastructure and serverless requiring Java developers to shift their thinking to take full advantage of the capabilities of these new ways of delivering applications.

Within this brave new world, techniques such as continuous deployment, the tooling and architectural thinking required to support it, and the requirements of a cloud-centric local development environment, are of paramount importance. Until now, there has been no text that specifically caters to Java developers to guide them on their journey into full adoption of continuous delivery and the benefits it offers.

Both of us have been Java developers long enough to witness, and be part of, several shifts within our chosen profession. Java the language has evolved a long way since we both wrote our first lines of code: Java 1.4 gave us nonblocking I/O, Java 8 gave us streams and lambdas, Java 9 gave us modules, and Java 10 finally gave us local variable type inference. Deployment platforms have also evolved in leaps and bounds, and the emergence of cloud and containers has provided many opportunities and challenges. One thing has not changed, though, and that is the need to deliver value to end users and customers. We have needed to use all of our skills, tools, and practices as best as we could in order to make the delivery of software as effective (and fun) as possible. Perhaps even more importantly, we have needed to work with and lead our teams to share this responsibility.

With an ever-increasing range of “best practices” emerging around software development, architecture, and deployment platforms, there is one thing that developers can generally agree on: the principles of continuous integration and continuous delivery add enormous value to the software delivery life cycle. With the increasing demands from customers on the speed and stability of delivery, you need a framework that provides fast feedback and enables the automation of both quality assurance and the deployment processes. However, the challenges for modern software developers are manifold, and attempting to introduce a methodology like continuous delivery, which touches on all aspects of software design and delivery, means that several new skills must be mastered, some of which are typically outside a developer’s existing comfort zone.

As our careers have progressed, we’ve frequently found ourselves working on aspects of a project that used to be handled by other individuals or teams, and as such we’ve learned the hard way the three key new areas of developer skills that are needed to harness the benefits of continuous delivery:

This book distills our learning and offers guidance for mastering these new skills.

If you are a Java developer who wants to learn more about continuous delivery, or are currently struggling with embracing this way of delivering software, then this is the book for you. We have provided not only the “how” and “what” of implementing the various practices and tools associated with continuous delivery, but also the “why.” We believe this is important, because if you understand the motivations, you will be well placed to adapt particular practices that don’t quite work for you as described. Understanding the reasoning behind an approach also helps build strong foundations, and helps you share and teach these ideas to others. As the Japanese poet Matsuo Bashō said, “Do not seek to follow in the footsteps of the wise; seek what they sought.”

We have also written this book as a call to action to you, as a Java developer, to get outside your comfort zone and learn more about architecture, automation, and operations. In the current software development career space, we see increasingly fewer opportunities for pure Java programming roles, with many new roles expecting knowledge of continuous delivery, platforms, and operational tooling. By investing in yourself and increasing your software development knowledge and skills, you will not only be open to more opportunities, but also become a better programmer.

As we wrote this book, we had no single prototypical reader in mind, other than a Java developer, but one of the following target personas may resonate with you:

This book specifically emphasizes the complete approach to implementing continuous delivery for Java applications, and as such, it doesn’t contain a deep dive into everything related to architecture, testing, or cloud technologies. Sure, you’ll learn the essentials of all these subjects, but many of the chapter topics could be extended into their own book, and we simply didn’t have the time or space to do this. Where others have written books that are focused on specific topics, we have attempted to reference and recommend their work.

The following typographical conventions are used in this book:

Italic: Indicates new terms, URLs, email addresses, filenames, and file extensions.

Constant width: Used for program listings, as well as within paragraphs to refer to program elements such as variable or function names, databases, data types, environment variables, statements, and keywords.

Constant width bold: Shows commands or other text that should be typed literally by the user.

Constant width italic: Shows text that should be replaced with user-supplied values or by values determined by context.

This element signifies a tip or suggestion.

This element signifies a general note.

This element indicates a warning or caution.

Supplemental material (code examples, exercises, etc.) is available for download at https://github.com/continuous-delivery-in-java.

This book is here to help you get your job done. In general, if example code is offered with this book, you may use it in your programs and documentation. You do not need to contact us for permission unless you’re reproducing a significant portion of the code. For example, writing a program that uses several chunks of code from this book does not require permission. Selling or distributing a CD-ROM of examples from O’Reilly books does require permission. Answering a question by citing this book and quoting example code does not require permission. Incorporating a significant amount of example code from this book into your product’s documentation does require permission.

We appreciate, but do not require, attribution. An attribution usually includes the title, author, publisher, and ISBN. For example: “Continuous Delivery in Java by Daniel Bryant and Abraham Marín-Pérez (O’Reilly). Copyright 2019 Daniel Bryant and Cosota Team Ltd., 978-1-491-98602-8.”

If you feel your use of code examples falls outside fair use or the permission given above, feel free to contact us at permissions@oreilly.com.

Safari (formerly Safari Books Online) is a membership-based training and reference platform for enterprise, government, educators, and individuals.

Members have access to thousands of books, training videos, Learning Paths, interactive tutorials, and curated playlists from over 250 publishers, including O’Reilly Media, Harvard Business Review, Prentice Hall Professional, Addison-Wesley Professional, Microsoft Press, Sams, Que, Peachpit Press, Adobe, Focal Press, Cisco Press, John Wiley & Sons, Syngress, Morgan Kaufmann, IBM Redbooks, Packt, Adobe Press, FT Press, Apress, Manning, New Riders, McGraw-Hill, Jones & Bartlett, and Course Technology, among others.

For more information, please visit http://oreilly.com/safari.

Please address comments and questions concerning this book to the publisher:

We have a web page for this book, where we list errata, examples, and any additional information. You can access this page at http://bit.ly/continuous-delivery-in-java.

To comment or ask technical questions about this book, send email to bookquestions@oreilly.com.

For more information about our books, courses, conferences, and news, see our website at http://www.oreilly.com.

Find us on Facebook: http://facebook.com/oreilly

Follow us on Twitter: http://twitter.com/oreillymedia

Watch us on YouTube: http://www.youtube.com/oreillymedia

As with almost all technical books, only two names may be listed as authors on the front of this book, but the reality is that many people have contributed, either directly in the form of feedback as the book was written, or indirectly by their teaching and guidance over the years.

Although we can’t possibly list everyone who has helped us during this journey, we would like to explicitly thank the people who took time out of their busy schedules to provide extensive discussions, feedback, and support. In particular, we would like to express our gratitude to (in alphabetical order of last name): Tareq Abedrabbo, Alex Blewitt, the Devoxx team, Ben Evans, Trisha Gee, Arun Gupta, Charles Humble, Nic Jackson, James Lewis, Richard Li, Simon Maple, Sam Newman, the SpectoLabs team, Chris Newland, Christian Posta, Chris Richardson, Mani Sarkar, Richard Seroter, Matthew Skelton, Steve Smith, the entire Tomitribe crew (and #usualsuspects), Martijn Verburg, Richard Warburton, and Nicki Watt (and past and present members of the OpenCredo team).

We would also like to express our thanks to the entire O’Reilly team, and although there are surely many people we haven’t met behind the scenes who have helped us, we would like to explicitly thank Brian Foster for the opportunity to write this book, Virginia Wilson for providing motivation and a lot of great editorial advice (and for sticking with us when times were tough!), and Susan Conant and Nan Barber for their initial guidance.

Daniel’s acknowledgements: I would like to thank my entire family for their love and support, both during the writing process and throughout my career. I would also like to thank Abraham for joining me midway through the writing process; there aren’t many people who would have accepted and excelled at the challenge quite so quickly. Finally, I would like to thank everyone involved in the London Java Community (LJC), Skills Matter, and the InfoQ/QCon team. These three communities have provided me with access to mentors, guidance, and so many opportunities. I hope to someday pay all of this forward.

Abraham’s acknowledgements: It’s odd how a city as big and diverse as London can sometimes work in such small circles. The first time that I ever performed a public presentation was next to Daniel, and now it feels only fitting that my first large-scale publication also happens next to him. I was truly excited when I was invited to join this project, and thankful for the support and mentoring that I have received throughout. Other organizations have helped me get to this point, among them the London Java Community, Skills Matter, InfoQ, Equal Experts, and “the usual suspects,” who have made learning not just possible, but also incredibly fun. Finally, I need to thank Bea for her patience, for her support, for just being there. Thank you.

In this chapter, you will explore the core concepts of continuous delivery and learn more about the benefits for developers, QA, operations, and business teams. An important question to ask before embarking on any change in the way you work is “Why?” Here you will learn how enabling rapid feedback reduces context switching; how automatic, repeatable, and reliable releases reduce much of the stress and many of the challenges of delivering software to customers; and how codifying the definition of “done” allows rapid verification and facilitates any auditing required. Finally, you will examine what a typical Java continuous delivery build pipeline looks like and learn the fundamentals of each stage in the pipeline.

Continuous delivery (CD) is fundamentally a set of practices and disciplines in which software delivery teams produce valuable and robust software in short cycles. Care is taken to ensure that functionality is added in small increments and that the software can be reliably released at any time. This maximizes the opportunity for rapid feedback and learning, both from a business and technical perspective. In 2010, Jez Humble and Dave Farley published their seminal book Continuous Delivery (Addison-Wesley), which collated their experiences of deploying software delivery projects around the world, and this publication is still the go-to reference for CD. The book contains a valuable collection of techniques, methodologies, and advice from the perspective of both technology and organizations.

Much has changed in the world of software development and delivery over the past 20 years. Business requirements and expectations have changed dramatically, with a focus on innovation, speed, and time to market. Architects and developers have reacted accordingly, and new architectures have been designed to support these requirements. New deployment fabrics and platforms have been created and have co-evolved alongside new methodologies like DevOps, Release Engineering, and Site Reliability Engineering (SRE). Alongside these changes, a series of best practices for creating a continuous delivery build pipeline has co-evolved. The core concept is that any candidate change to the software being delivered is built, integrated, tested, and validated before determining that it is ready for deployment to a production environment.

In this book, you will focus on accomplishing the task of creating an effective build pipeline for modern Java-based applications, whether you are creating a monolith, microservices, or “serverless” style function as a service (FaaS) application.

An important question to ask before undertaking any major task within software development, and before deciding on your approach to it, is “Why?” Why, as a Java developer, should you invest your valuable time in embracing continuous delivery and creating a build pipeline?

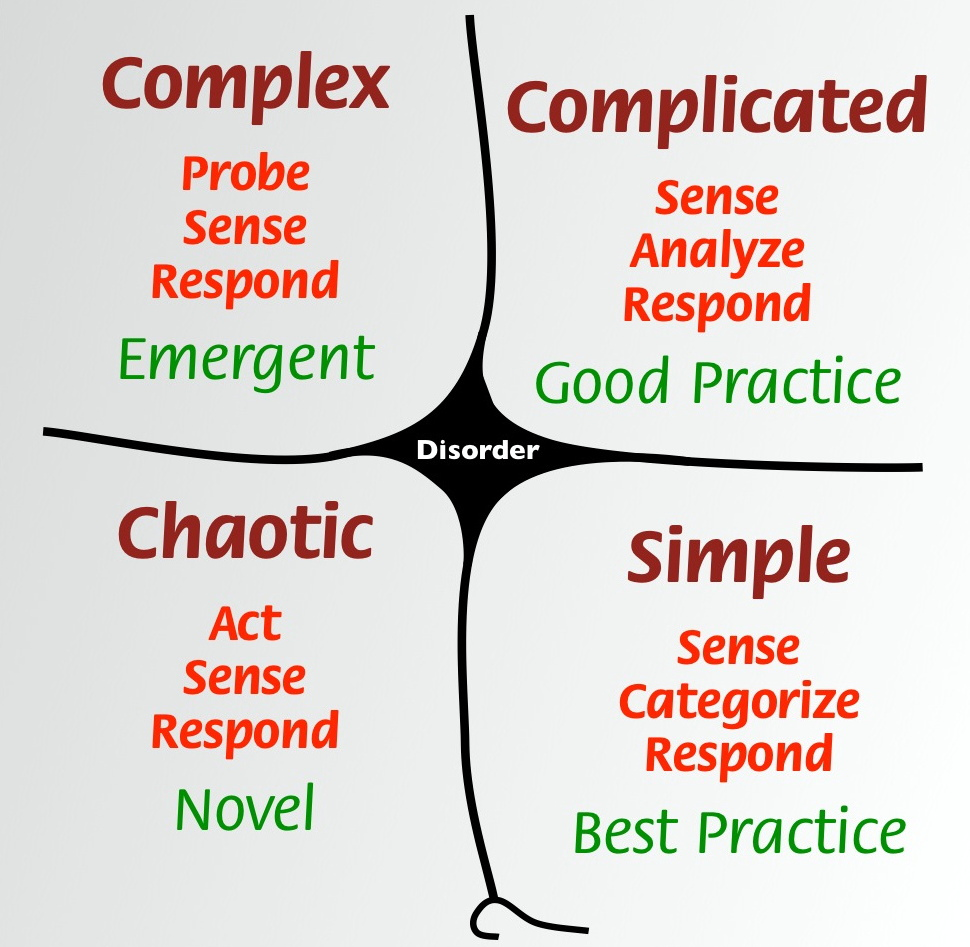

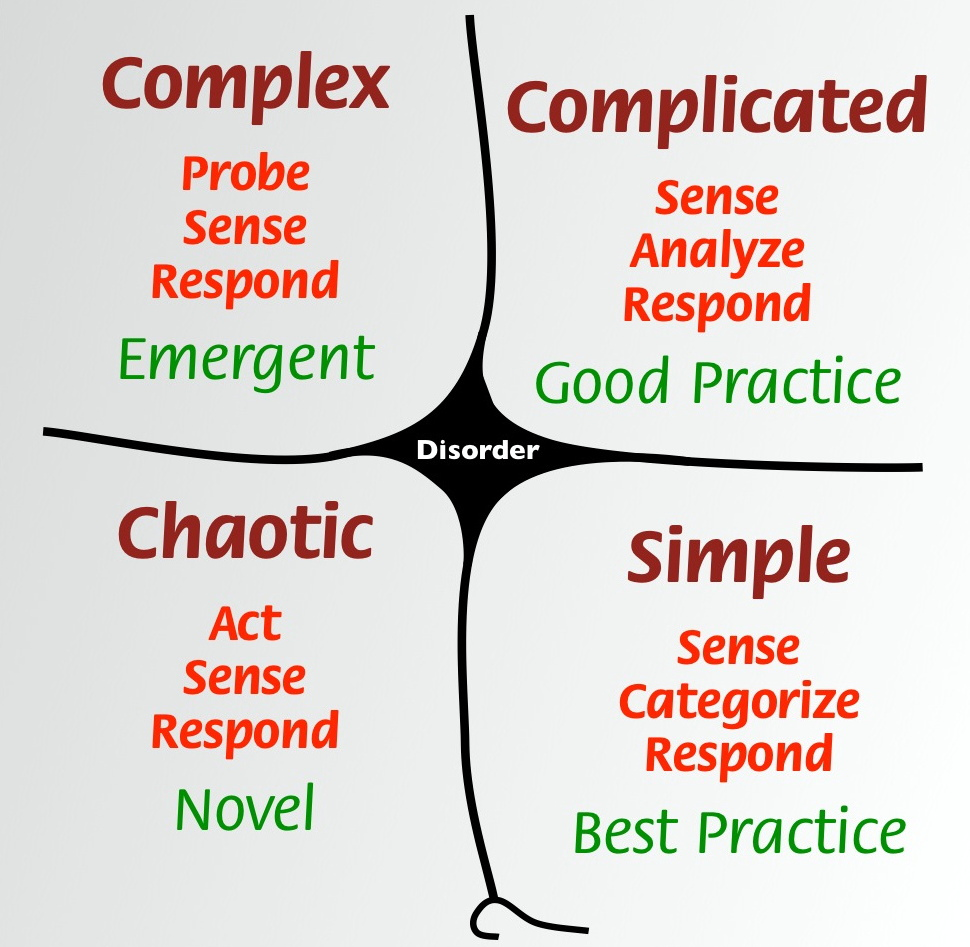

Feedback is vital when working with complex systems, and nearly all software applications are complex adaptive systems. This is especially true of modern component-based software systems that are deployed to the web, which are essentially distributed systems. A quick review of the IT press publications over the past 20 years reveals that software development issues are often discovered only when large (and costly) failures occur. Continual, rapid, and high-quality feedback provides early opportunities to detect and correct errors. This allows the detection and remediation of problems while they are smaller, cheaper, and easier to fix.

From a developer’s point of view, one of the clear advantages of rapid feedback is the reduced cost of context switching and of attempting to remember what you were doing with a piece of code that contains a bug. We don’t need to remind you that it is much easier to fix an issue that you were working on five minutes ago, rather than one you were working on five months ago.

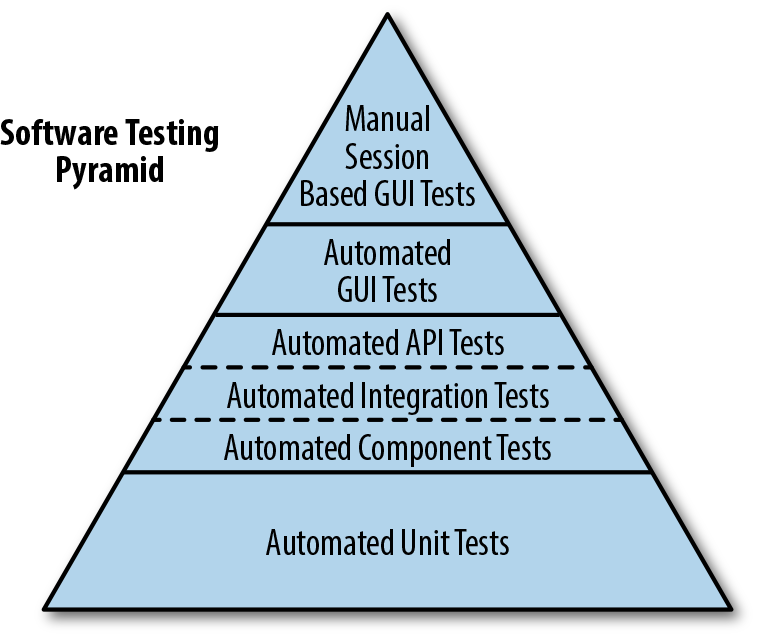

The build pipeline must provide rapid feedback for the development team in order to be useful within their daily work cycles, and the operation of the pipeline must be highly repeatable and reliable. Accordingly, automation is used extensively, with the goal of 100% automation or as close as you can realistically get to this. The following items should be automated:

Software compilation and code-quality static analysis

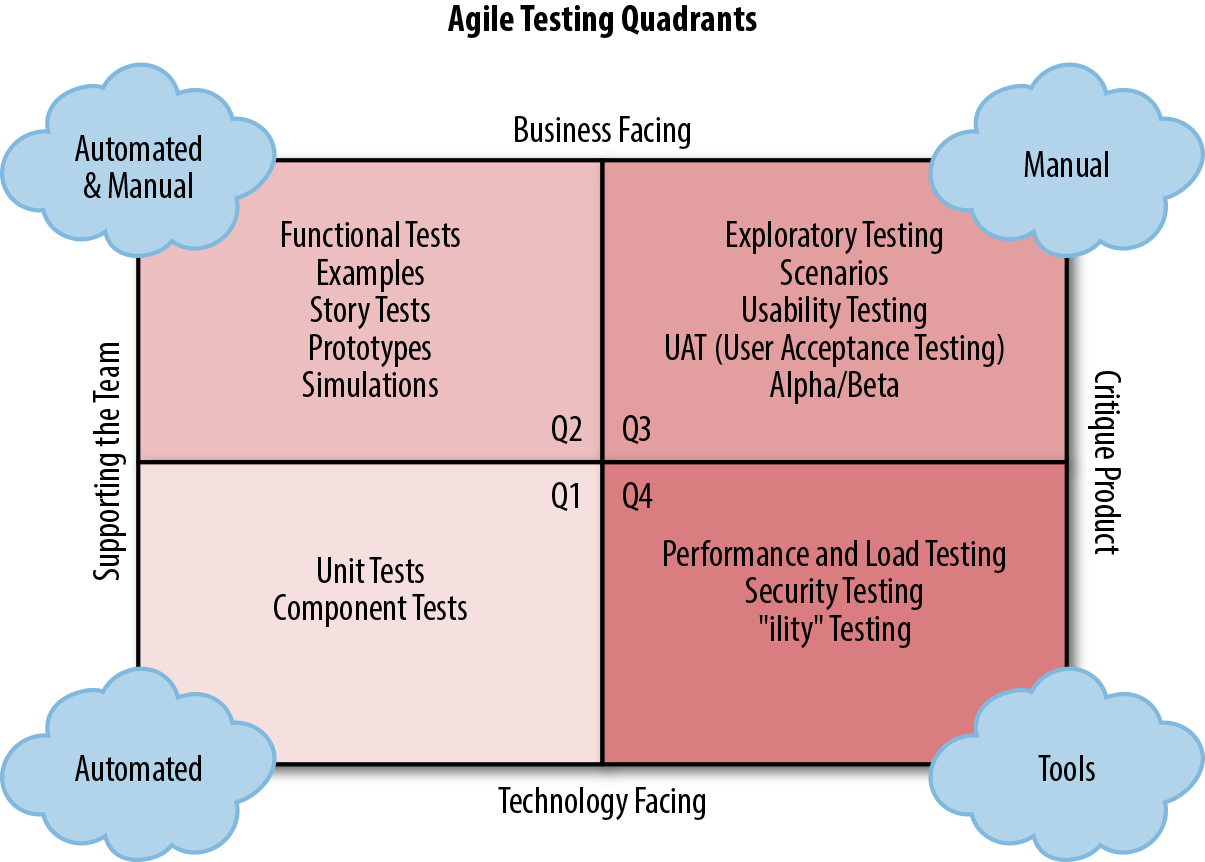

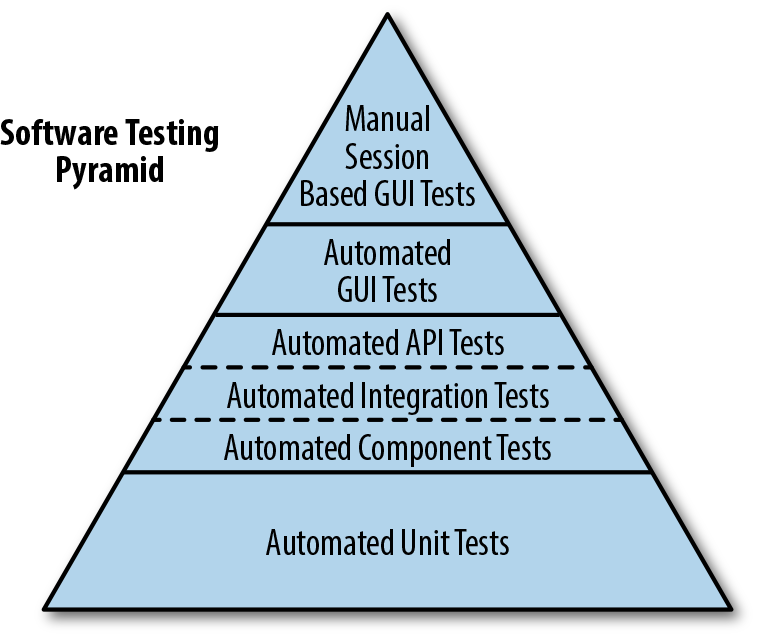

Functional testing, including unit, component, integration, and end-to-end

Provisioning of all environments, including the integration of logging, monitoring, and alerting hooks

Deployment of software artifacts to all environments, including production

Data store migrations

System testing, including nonfunctional requirements like fault tolerance, performance, and security

Tracking and auditing of change history

With the automation of the release process complete (and repeatable and reliable), you, as a developer or operator, have confidence in continually releasing new functionality without causing breakages or regressions. Nothing destroys morale as quickly as having to rely on an unreliable and flaky deployment process. This leads to fear in deploying code, which, in turn, encourages teams to batch large amounts of functionality in a “big bang” release, which ultimately leads to even more problematic releases. This negative feedback loop must be broken, and the continuous delivery of functionality in small batch sizes (ideally, with single-piece flow) is a great way to help break it.

The fast feedback and automation of the release process is useful for developers in and of itself. However, another clear advantage of creating a build pipeline is that you can codify the definition of “done.” When a software component successfully traverses a build pipeline, this should unequivocally indicate that it is ready to go into production, provide the value planned, and function within acceptable operational parameters that include availability, security, and cost. Historically, it has been difficult for teams to ensure a consistent definition of “done,” and this can be a friction point between development and business teams within an organization.

As we will show in later chapters, the assertion of many functional and nonfunctional (cross-functional) properties can be codified within a modern Java build pipeline, including fault tolerance, the absence of known security vulnerabilities, and basic performance/load characteristics (which, in turn, can support the calculation of cost).
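To make the idea of a codified definition of “done” more concrete, here is a minimal sketch in Java. The `DefinitionOfDone` class, the `BuildReport` fields, and the 250 ms latency budget are all hypothetical and chosen only for illustration; a real pipeline would populate values like these from its own testing, security-scanning, and load-testing tools.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical "definition of done" gate; names and thresholds are illustrative only. */
class DefinitionOfDone {

    /** Facts a pipeline run might collect about a build (assumed for this sketch). */
    static final class BuildReport {
        final boolean faultToleranceTestsPassed;
        final int knownVulnerabilities;
        final long p95LatencyMillis;

        BuildReport(boolean faultToleranceTestsPassed, int knownVulnerabilities, long p95LatencyMillis) {
            this.faultToleranceTestsPassed = faultToleranceTestsPassed;
            this.knownVulnerabilities = knownVulnerabilities;
            this.p95LatencyMillis = p95LatencyMillis;
        }
    }

    /** Assumed performance budget; pick a value that suits your own application. */
    static final long MAX_P95_LATENCY_MILLIS = 250;

    /** Returns the criteria that are not met; an empty list means the build is "done". */
    static List<String> unmetCriteria(BuildReport report) {
        List<String> unmet = new ArrayList<>();
        if (!report.faultToleranceTestsPassed) {
            unmet.add("fault tolerance tests pass");
        }
        if (report.knownVulnerabilities > 0) {
            unmet.add("no known security vulnerabilities");
        }
        if (report.p95LatencyMillis > MAX_P95_LATENCY_MILLIS) {
            unmet.add("p95 latency within budget");
        }
        return unmet;
    }

    static boolean isDone(BuildReport report) {
        return unmetCriteria(report).isEmpty();
    }

    public static void main(String[] args) {
        BuildReport report = new BuildReport(true, 2, 180);
        System.out.println("done: " + isDone(report));
        System.out.println("unmet: " + unmetCriteria(report));
    }
}
```

Returning the list of unmet criteria, rather than a bare boolean, gives both development and business teams the same explicit answer to the question of why a change is not yet “done.”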

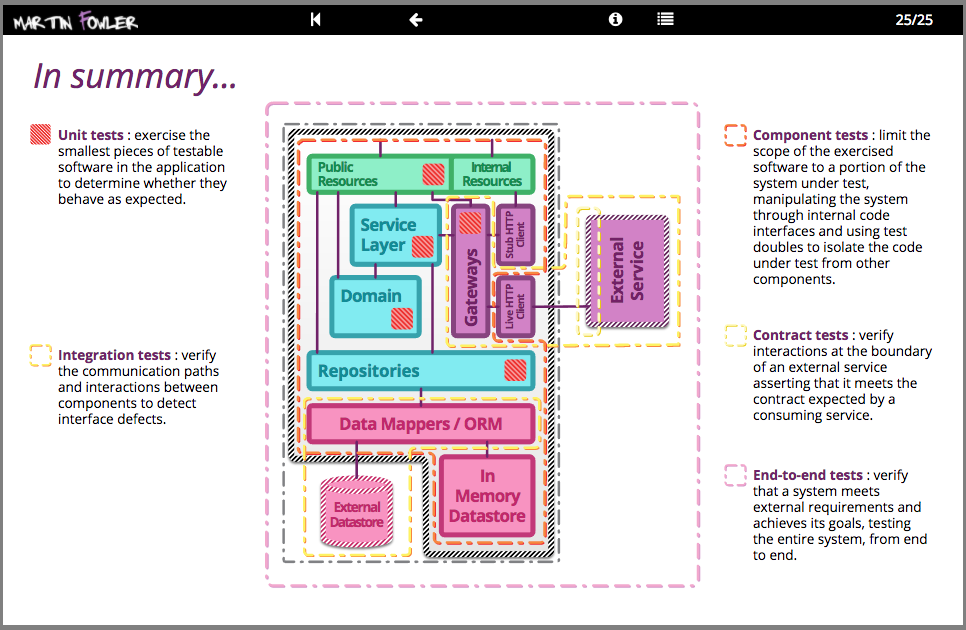

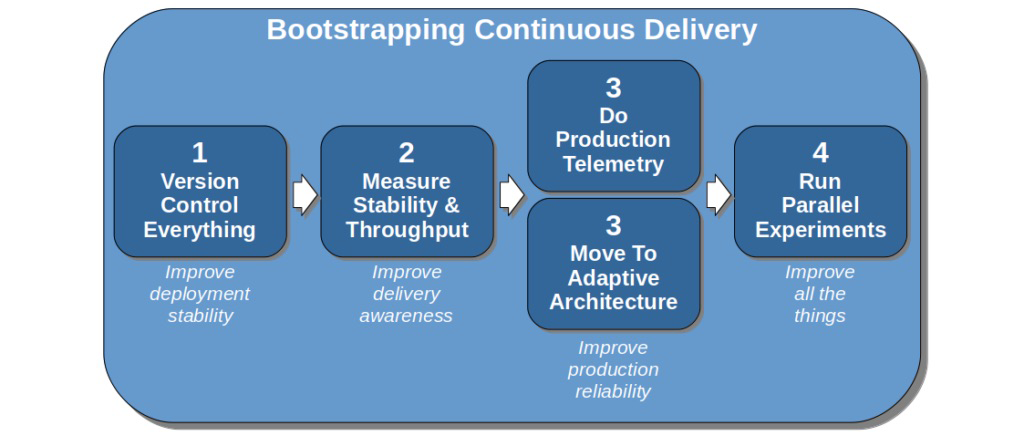

It is vital that you understand the “what,” or purpose, of each of the core stages within a continuous delivery pipeline, as the goals and principles are often more important than specific implementation details (e.g., whether you use Jenkins or CircleCI, JUnit or TestNG).

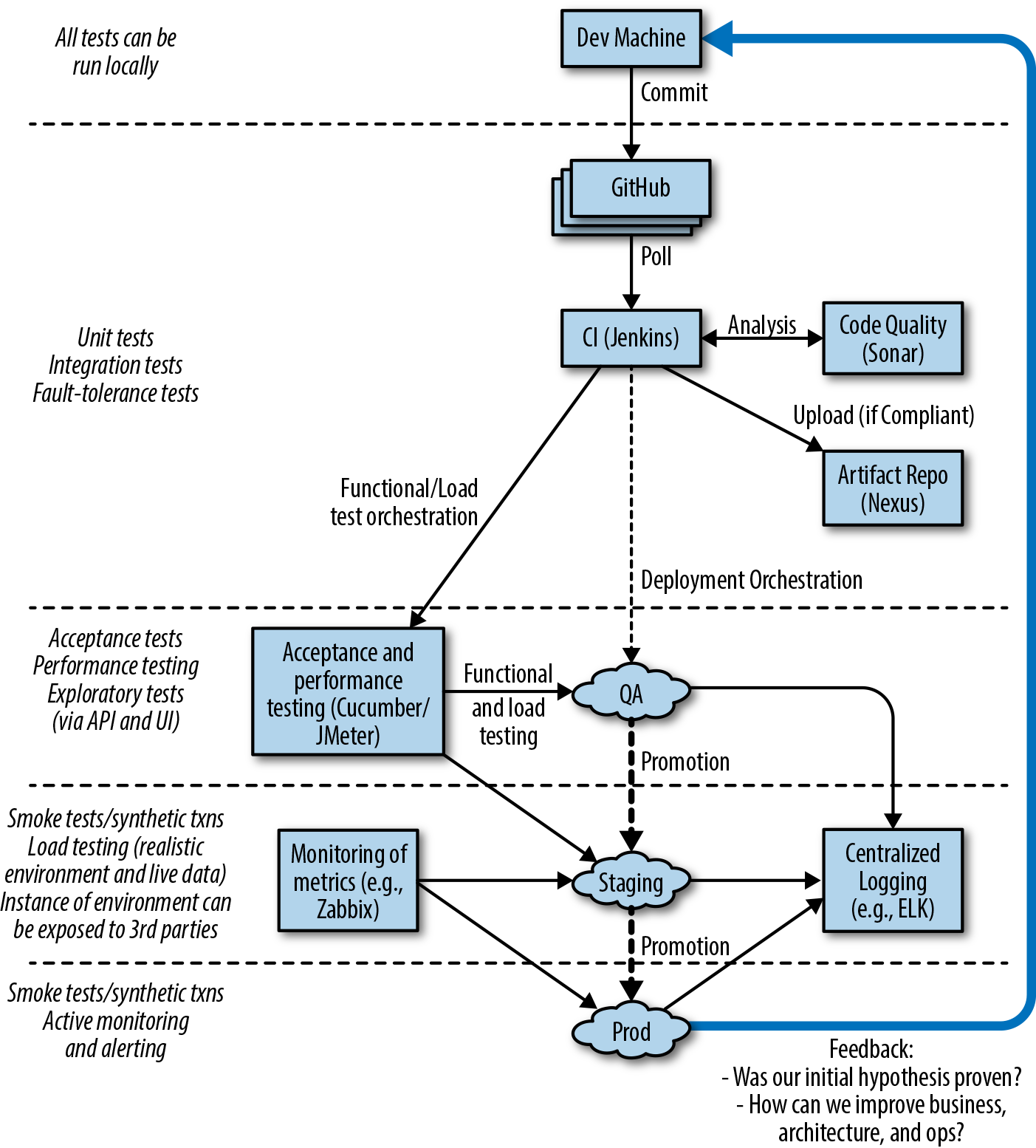

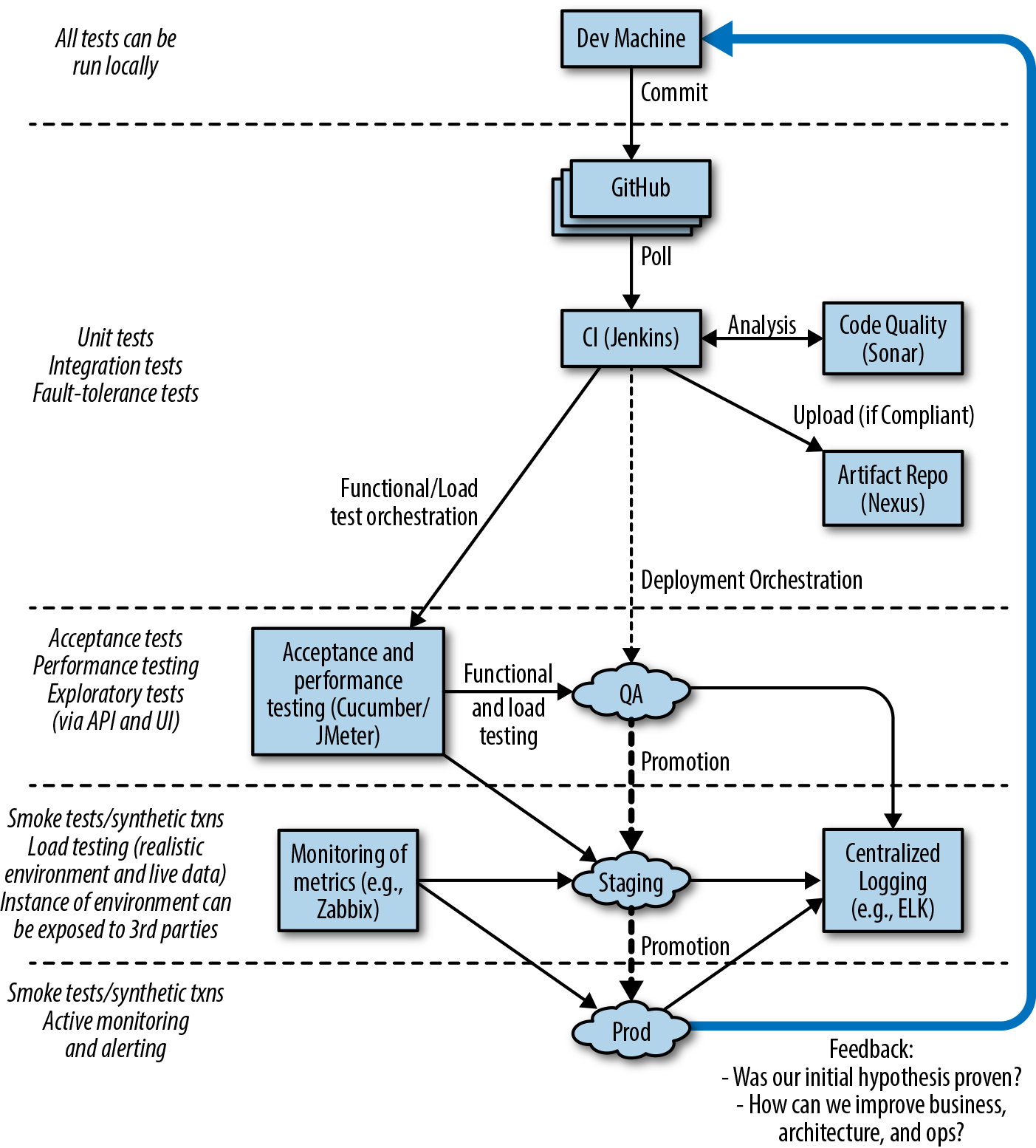

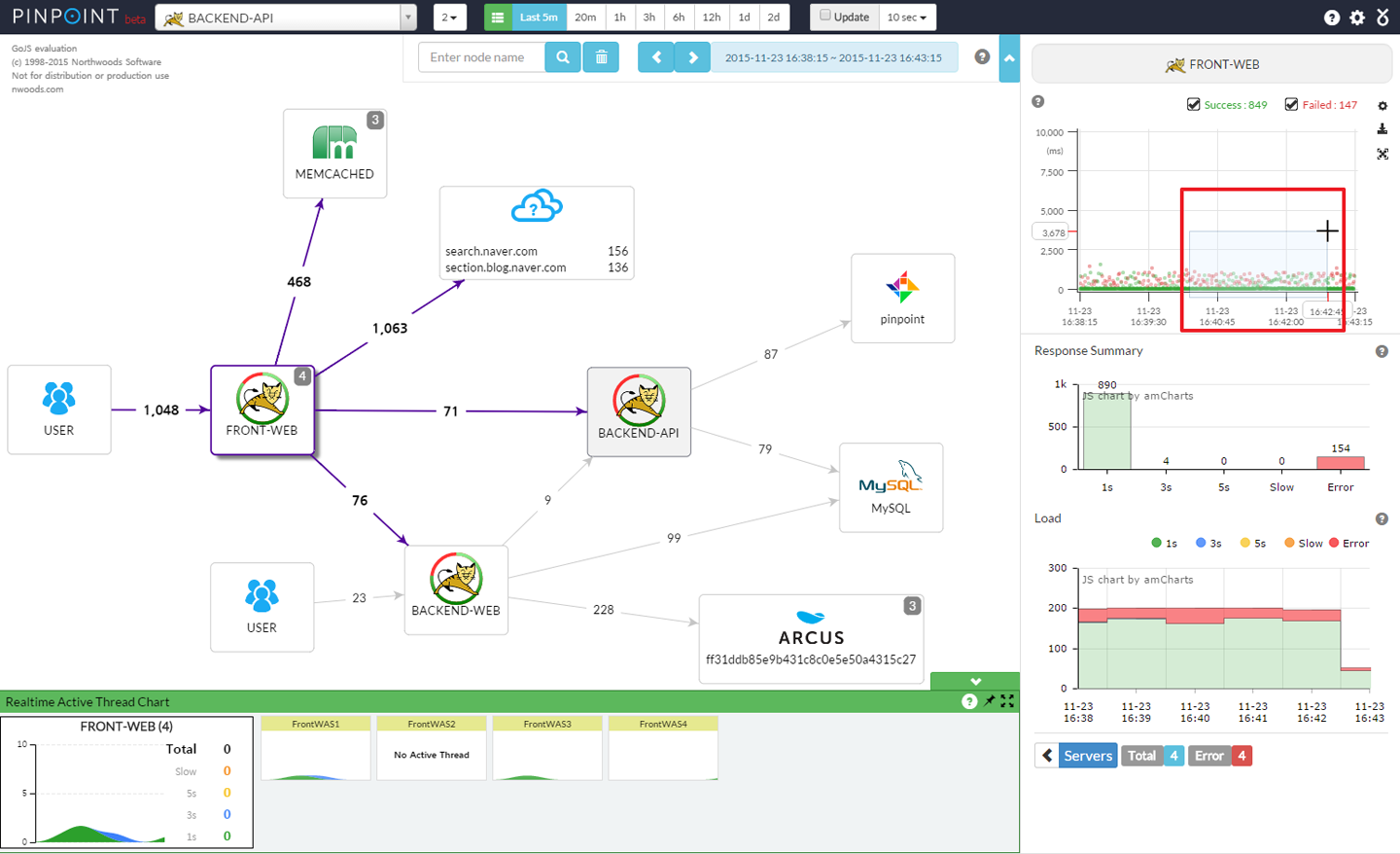

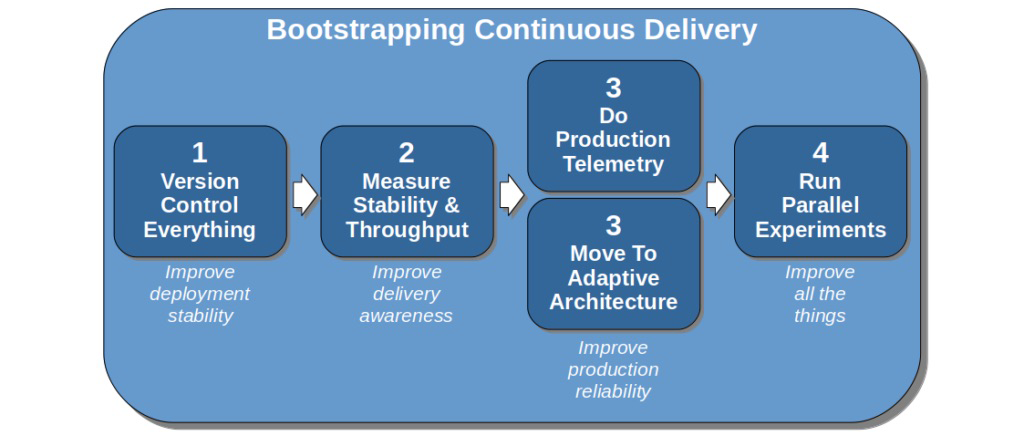

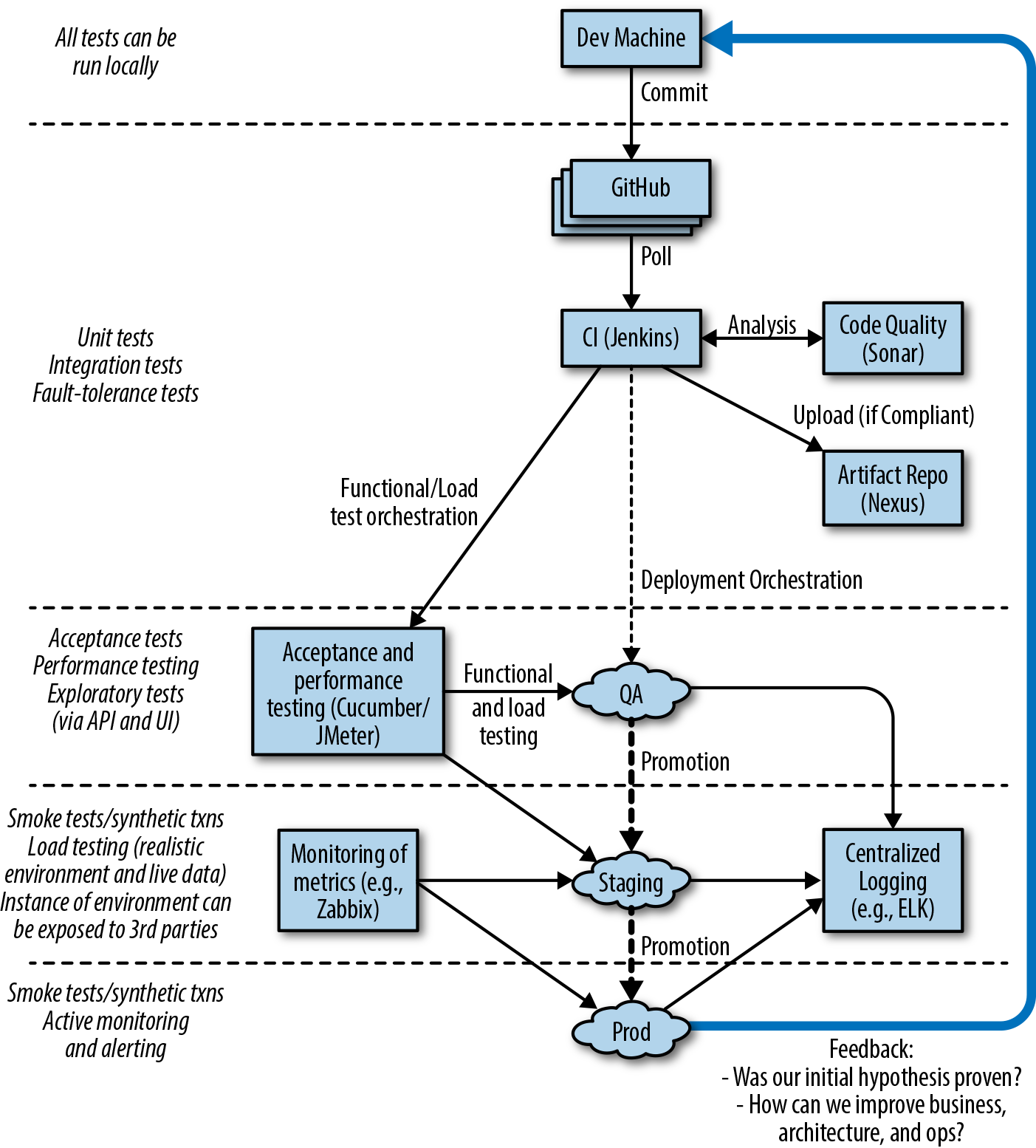

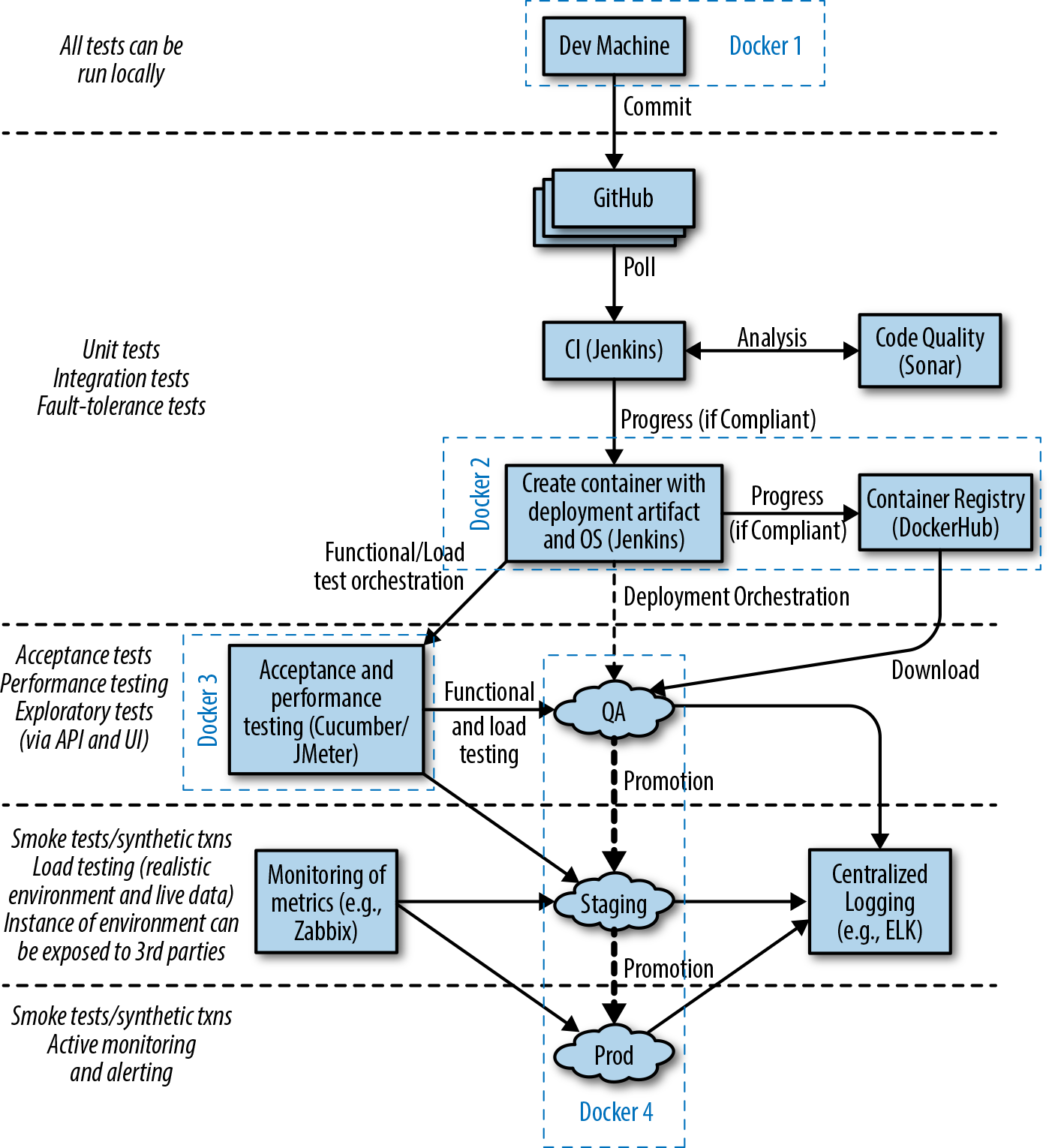

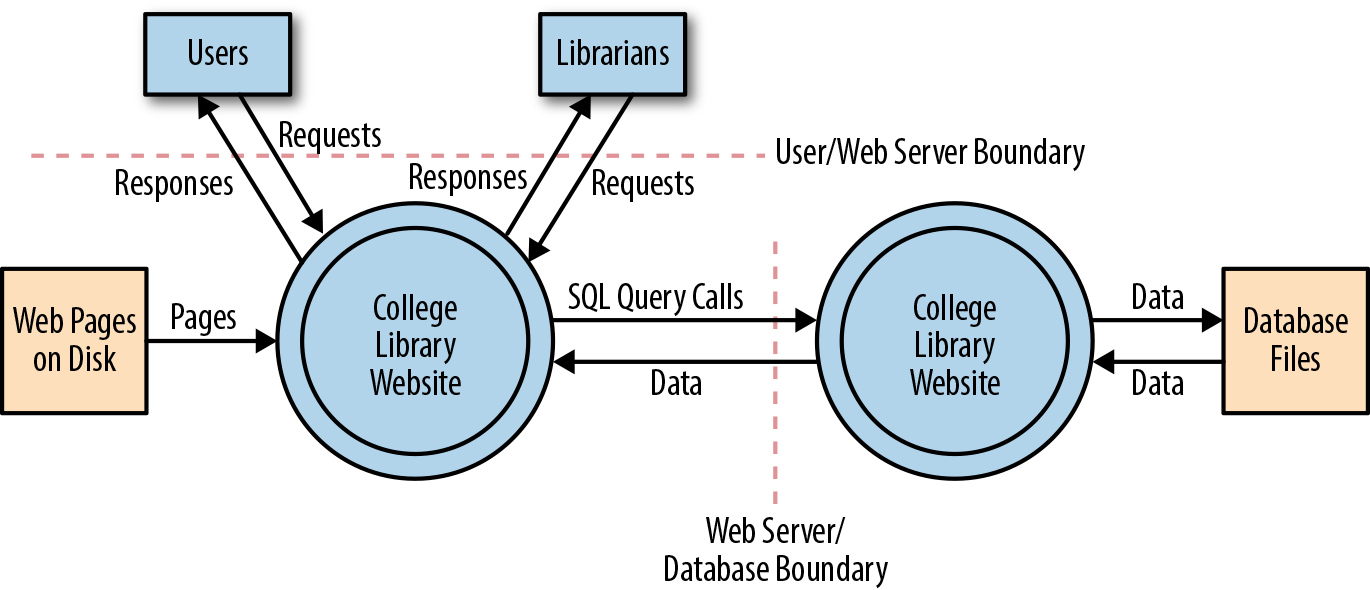

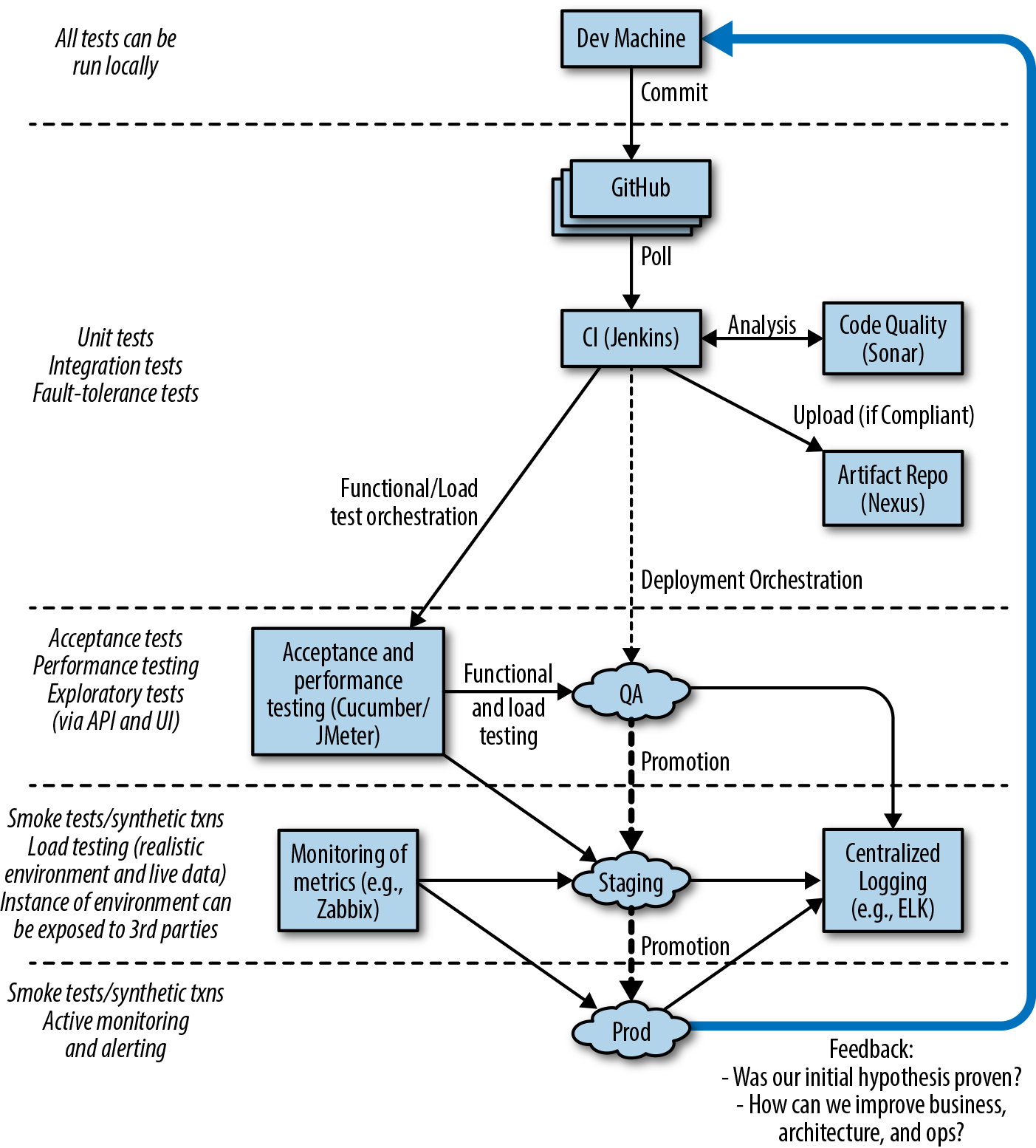

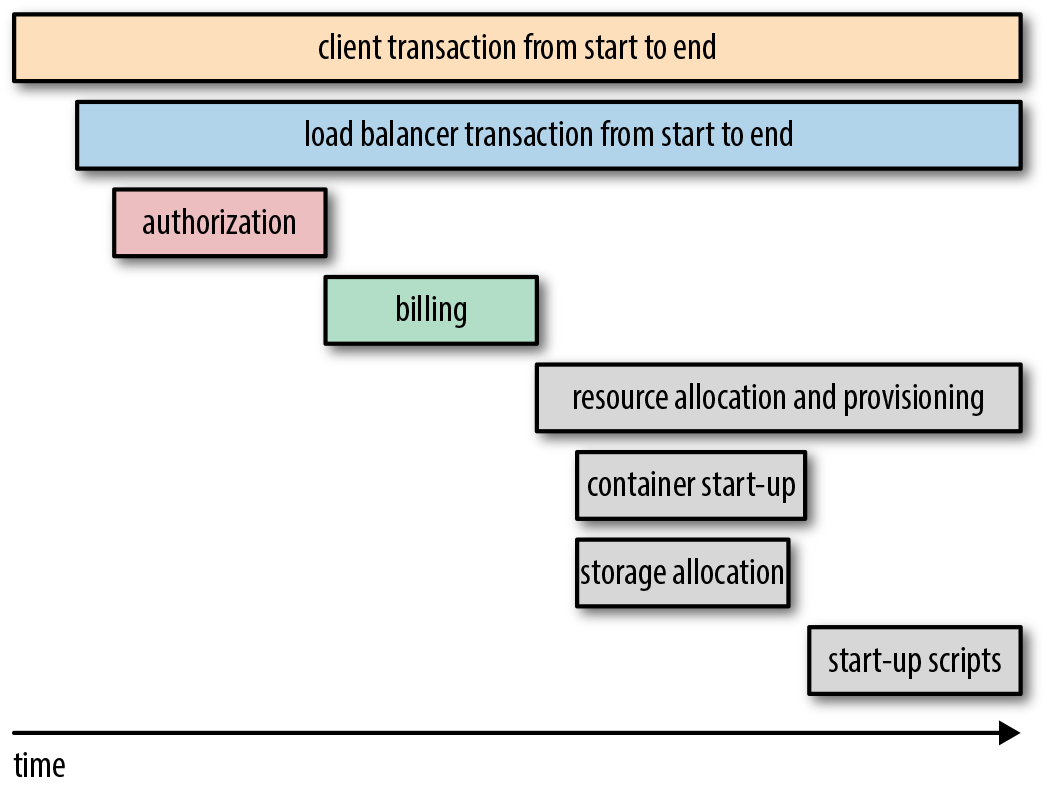

Figure 1-1 demonstrates a typical continuous delivery build pipeline for a Java-based application. The first step of the process of CD is continuous integration (CI). Code that is created on a developer’s laptop is continually committed (integrated) into a shared version-control repository, and is automatically built and packaged into an artifact. After CI, the resulting artifact is submitted to a series of automated acceptance and system quality attribute verification stages, before undergoing manual user acceptance testing and promotion through progressively more production-like environments.

The primary goal of the build pipeline is to prove that any changes to code or configuration are production-ready. A proposed modification can fail at any stage of the pipeline, and this change will accordingly be rejected and not marked as ready for deployment to production. Artifacts that do pass all verification steps can be deployed into production, and this is where both technical and business telemetry can be collected and used to create a positive feedback loop.
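The fail-at-any-stage behavior described above can be sketched in a few lines of Java. This is a deliberately simplified model rather than the way any particular build server works: the `PipelineSketch` class, the `Change` fields, and the three stage names are hypothetical.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Optional;
import java.util.function.Predicate;

/** Illustrative model of a build pipeline; all names here are hypothetical. */
class PipelineSketch {

    /** A candidate change, reduced to the few facts the example stages inspect. */
    static final class Change {
        final boolean compiles;
        final boolean unitTestsPass;
        final boolean acceptanceTestsPass;

        Change(boolean compiles, boolean unitTestsPass, boolean acceptanceTestsPass) {
            this.compiles = compiles;
            this.unitTestsPass = unitTestsPass;
            this.acceptanceTestsPass = acceptanceTestsPass;
        }
    }

    /** A named verification step that either accepts or rejects a change. */
    static final class Stage {
        final String name;
        final Predicate<Change> check;

        Stage(String name, Predicate<Change> check) {
            this.name = name;
            this.check = check;
        }
    }

    /** Stages in the order a change moves through them. */
    static final List<Stage> STAGES = Arrays.asList(
            new Stage("commit-build", c -> c.compiles),
            new Stage("unit-tests", c -> c.unitTestsPass),
            new Stage("acceptance-tests", c -> c.acceptanceTestsPass));

    /** Runs the stages in order and stops at the first failure, if there is one. */
    static Optional<String> firstFailingStage(Change change) {
        for (Stage stage : STAGES) {
            if (!stage.check.test(change)) {
                return Optional.of(stage.name);
            }
        }
        return Optional.empty();
    }

    /** A change is production-ready only if no stage rejected it. */
    static boolean isReadyForProduction(Change change) {
        return !firstFailingStage(change).isPresent();
    }

    public static void main(String[] args) {
        Change good = new Change(true, true, true);
        Change broken = new Change(true, false, true);
        System.out.println("good ready: " + isReadyForProduction(good));
        System.out.println("broken rejected at: " + firstFailingStage(broken).orElse("none"));
    }
}
```

Stopping at the first failing stage keeps feedback fast: later, slower stages are never run for a change that has already been rejected.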

Let’s look at the purpose of each of the pipeline stages in more depth.

Initially, a developer or engineer makes a change on their local copy of the code. They may develop new functionality using practices such as behavior-driven development (BDD), test-driven development (TDD), and other extreme programming (XP) practices like pair programming. One of the core goals of this stage is to make the local development environment as production-like as possible; for example, running certain tests in a locally installed virtualization- or container-based environment. Another goal, one that can be challenging with larger applications, is that a local development environment should not require all of the system components to be installed and running in order for a developer to work effectively. This is where design principles like loose coupling and high cohesion come into play, along with supporting testing practices like contract verification, test doubles, and service virtualization.
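As a small illustration of how loose coupling lets you work locally without every system component running, the following sketch uses a hand-written test double. The `PaymentGateway` interface, `CheckoutService`, and `StubPaymentGateway` are hypothetical names invented for this example; in practice you might use a mocking library or a service virtualization tool instead.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical example of replacing a remote dependency with a test double. */
class TestDoubleSketch {

    /** Port to a remote system that you do not want to run on a laptop. */
    interface PaymentGateway {
        boolean charge(String customerId, long amountInCents);
    }

    /** Code under test: it depends only on the interface, not on a concrete gateway. */
    static final class CheckoutService {
        private final PaymentGateway gateway;

        CheckoutService(PaymentGateway gateway) {
            this.gateway = gateway;
        }

        String checkout(String customerId, long amountInCents) {
            if (amountInCents <= 0) {
                return "REJECTED"; // invalid orders never reach the gateway
            }
            return gateway.charge(customerId, amountInCents) ? "PAID" : "DECLINED";
        }
    }

    /** Test double: returns a canned answer and records the calls it receives. */
    static final class StubPaymentGateway implements PaymentGateway {
        private final boolean approve;
        final List<String> chargedCustomers = new ArrayList<>();

        StubPaymentGateway(boolean approve) {
            this.approve = approve;
        }

        @Override
        public boolean charge(String customerId, long amountInCents) {
            chargedCustomers.add(customerId);
            return approve;
        }
    }

    public static void main(String[] args) {
        StubPaymentGateway stub = new StubPaymentGateway(true);
        CheckoutService service = new CheckoutService(stub);
        System.out.println(service.checkout("customer-1", 1999));
        System.out.println(stub.chargedCustomers);
    }
}
```

Because `CheckoutService` receives its gateway through the constructor, the same class can be wired to the real implementation in production and to the stub on a developer’s machine.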

Developers working locally typically commit their proposed code and configuration changes to a remotely hosted distributed version control system (DVCS) like Git or Mercurial. Depending on the workflow that the team or organization has implemented, this process may require some merging of changes from other branches or the trunk/master, and potentially discussion and collaboration with other developers working in the same area of the codebase.

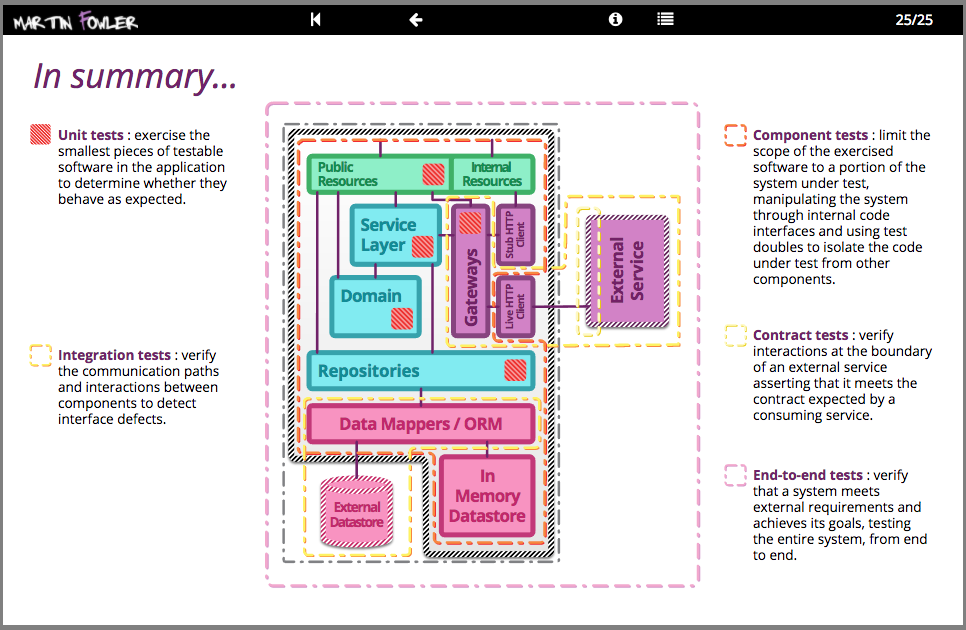

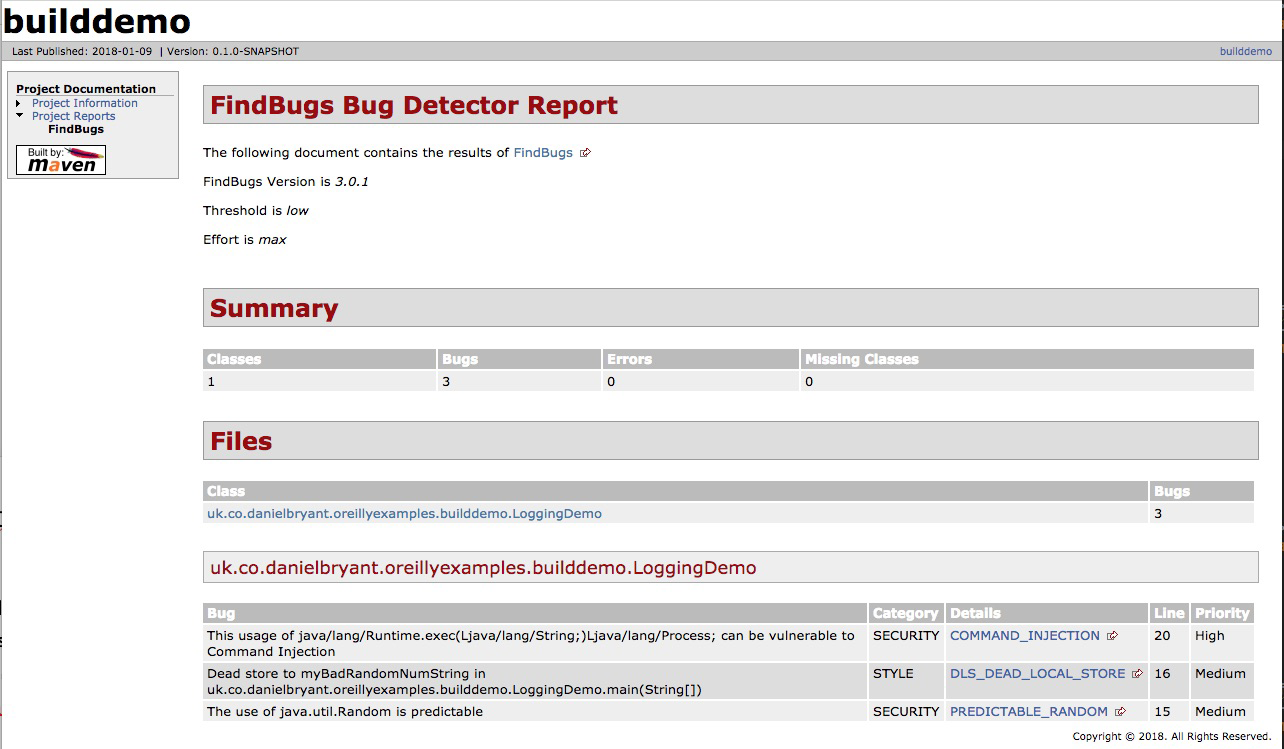

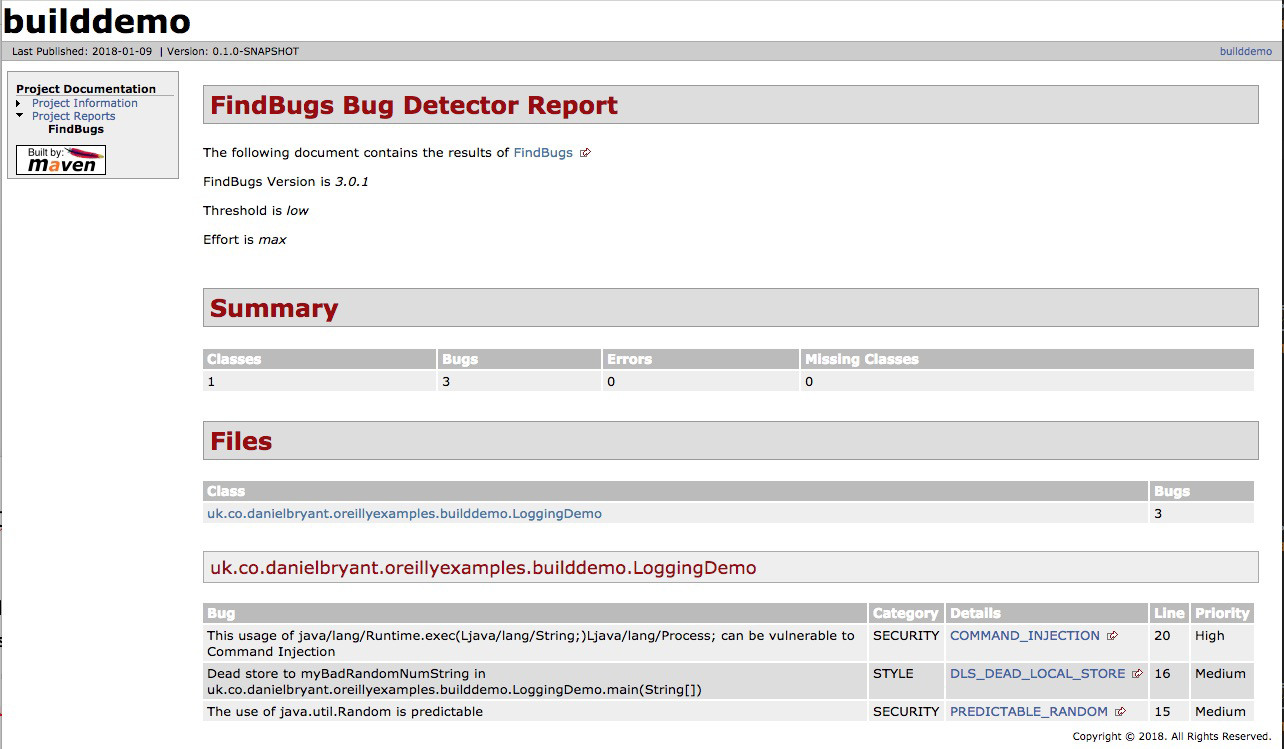

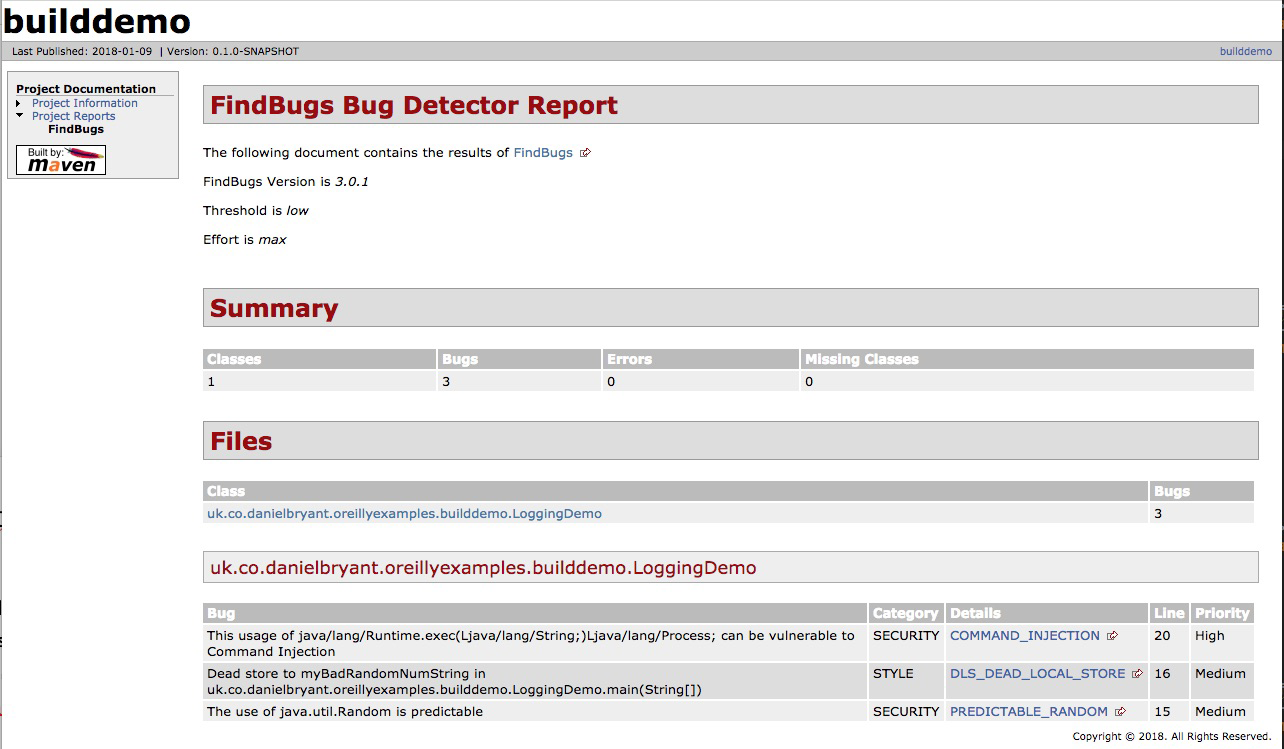

At this stage of the pipeline, the software application to which a code or configuration change is being proposed undergoes continuous integration (CI). Using integrated code stored within the trunk or master branch of a version control system (VCS), an artifact is built and tested in isolation, and some form of code quality analysis should be applied, perhaps using tools like PMD, FindBugs, or SonarQube. A successful CI run results in the new artifact being stored within a centralized repository, such as Sonatype Nexus or JFrog Artifactory.

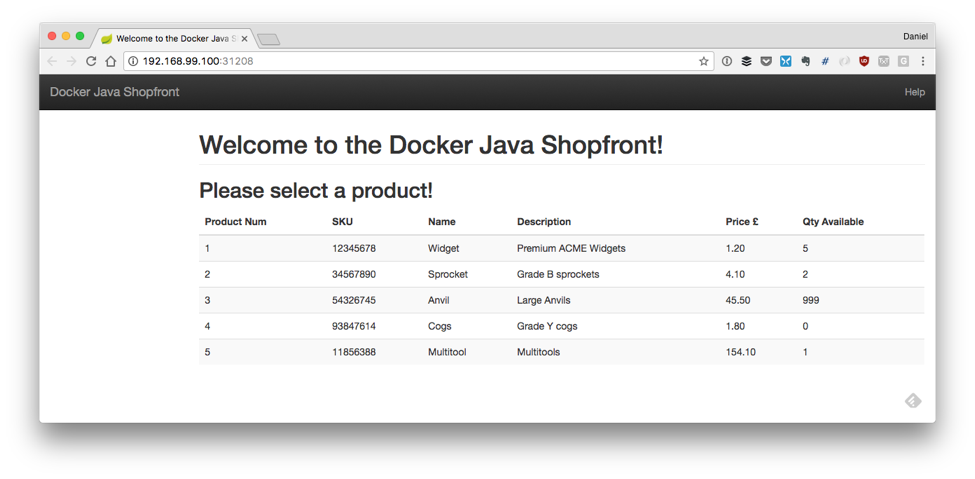

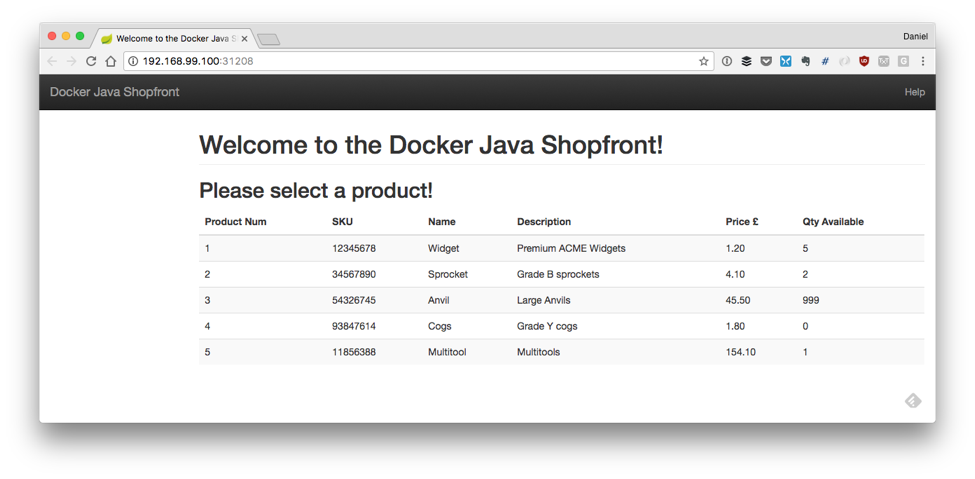

Code that successfully passes the initial unit and component tests and the code-quality metrics moves to the right in the pipeline, and is exercised within a larger integrated context. A small number of automated end-to-end tests can be used to verify the core happy paths or user journeys within the application that are essential for the provision of business value. For example, if you are building an e-commerce application, critical user journeys most likely include searching for a product, browsing a product, adding a product to your cart, and checkout and payment.

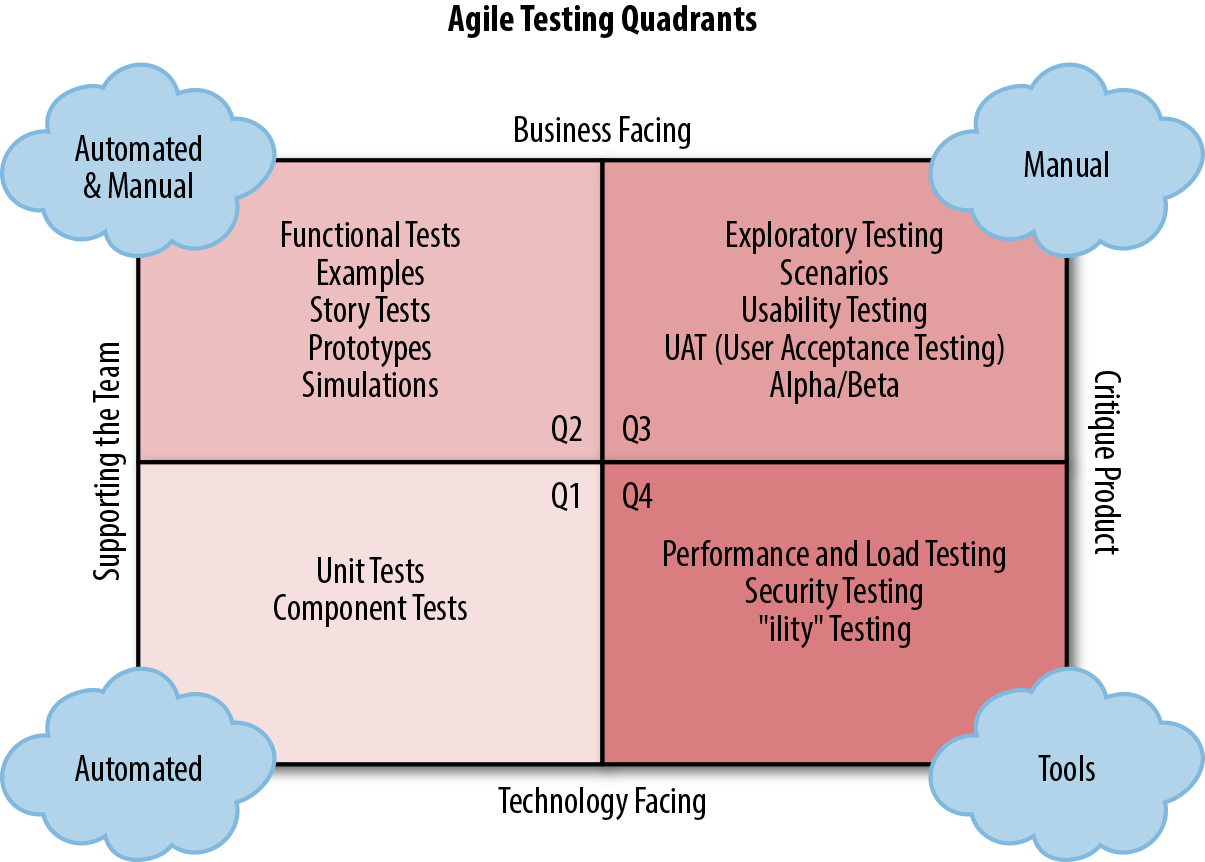

This is also the stage in which the system quality attributes (also referred to as nonfunctional requirements) are validated. Examples of verifications run here include reliability and performance, such as load and soak tests; scalability, such as capacity and autoscaling tests; and security, involving the scanning of code you wrote, the dependencies utilized, and the verification and scanning of associated infrastructure components.
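One way a performance verification like this could be expressed is as a check of a latency percentile against a budget. The sketch below is an assumption-laden illustration: the `LatencyBudgetCheck` class is hypothetical, the nearest-rank method is just one of several ways to compute a percentile, and the sample values are made up rather than measured.

```java
import java.util.Arrays;

/** Hypothetical latency gate for a load-test stage; values are illustrative only. */
class LatencyBudgetCheck {

    /** Nearest-rank percentile of the samples; pct must be in the range (0, 100]. */
    static long percentile(long[] samplesMillis, double pct) {
        if (samplesMillis.length == 0) {
            throw new IllegalArgumentException("no samples");
        }
        if (pct <= 0 || pct > 100) {
            throw new IllegalArgumentException("pct must be in (0, 100]");
        }
        long[] sorted = samplesMillis.clone(); // leave the caller's array untouched
        Arrays.sort(sorted);
        int rank = (int) Math.ceil(pct / 100.0 * sorted.length);
        return sorted[rank - 1];
    }

    /** True if the chosen percentile of the samples does not exceed the budget. */
    static boolean withinBudget(long[] samplesMillis, double pct, long budgetMillis) {
        return percentile(samplesMillis, pct) <= budgetMillis;
    }

    public static void main(String[] args) {
        // Made-up response times in milliseconds, including one slow outlier.
        long[] samples = {12, 15, 11, 14, 250, 13, 16, 12, 15, 14};
        System.out.println("p50 = " + percentile(samples, 50));
        System.out.println("p95 = " + percentile(samples, 95));
        System.out.println("p95 within 200 ms: " + withinBudget(samples, 95, 200));
    }
}
```

Checking a high percentile rather than the average means a single slow outlier, like the one in the sample data, is enough to fail the stage.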

At this stage, testers or actual users start performing exploratory testing. This manual testing should focus on the value of human cognition, and not simply consist of testers following large test scripts. (The repetitive behavior of validating functionality from scripts is ideally suited to computers and should be automated.)

Once a proposed change has passed acceptance tests and other fundamental quality assurance (QA) tests, the artifact may be deployed into a staging environment. This environment is typically close to the production environment; in fact, some organizations test in a clone of production or in the production environment itself. A realistic quantity of representative data should be used for any automated or exploratory tests performed here, and integrations with third-party or external systems should be as realistic as possible; for example, using sandboxes or service virtualization that mimics the characteristics of the associated real service.

Ultimately, code that has been fully validated emerges from the pipeline and is marked as ready for deployment into production. Some organizations automatically deploy applications that have successfully navigated the build pipeline and passed all quality checks—this is known as continuous deployment—but this is not an essential practice.

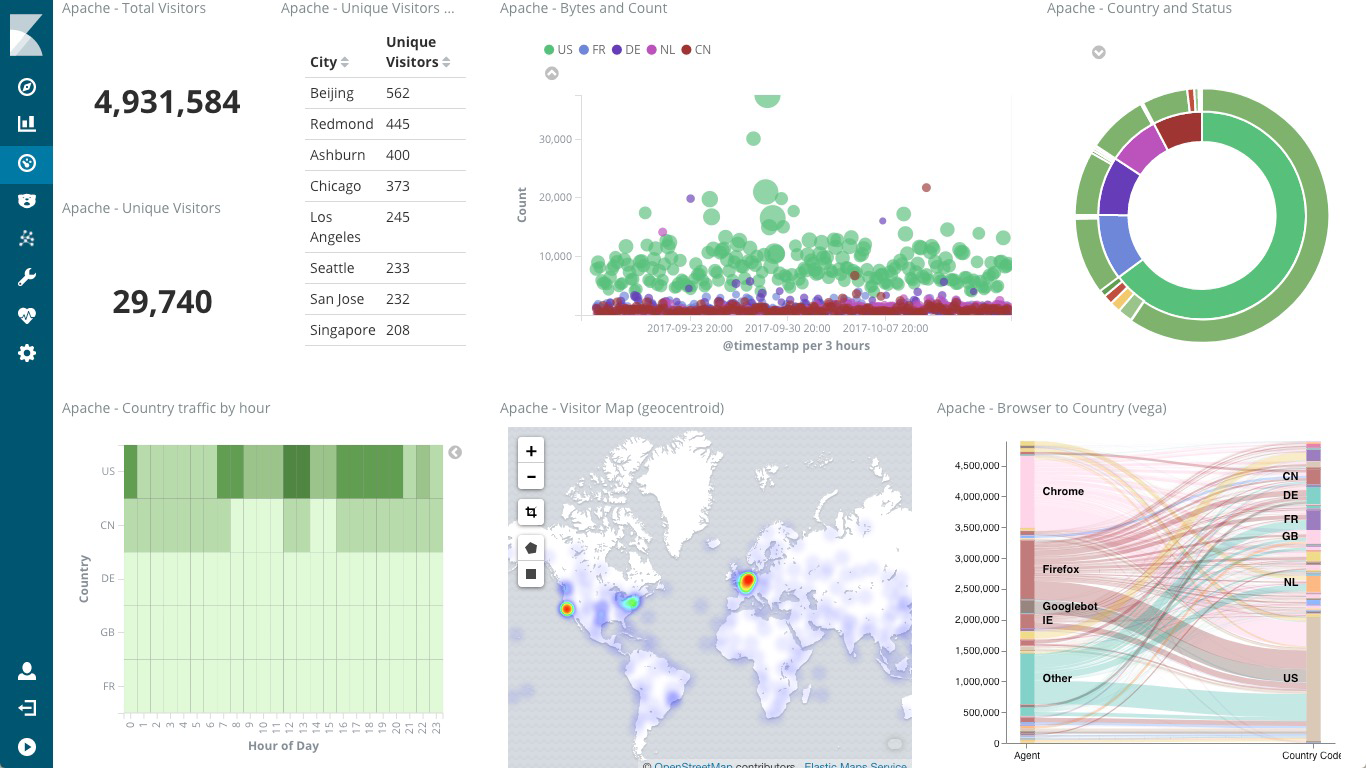

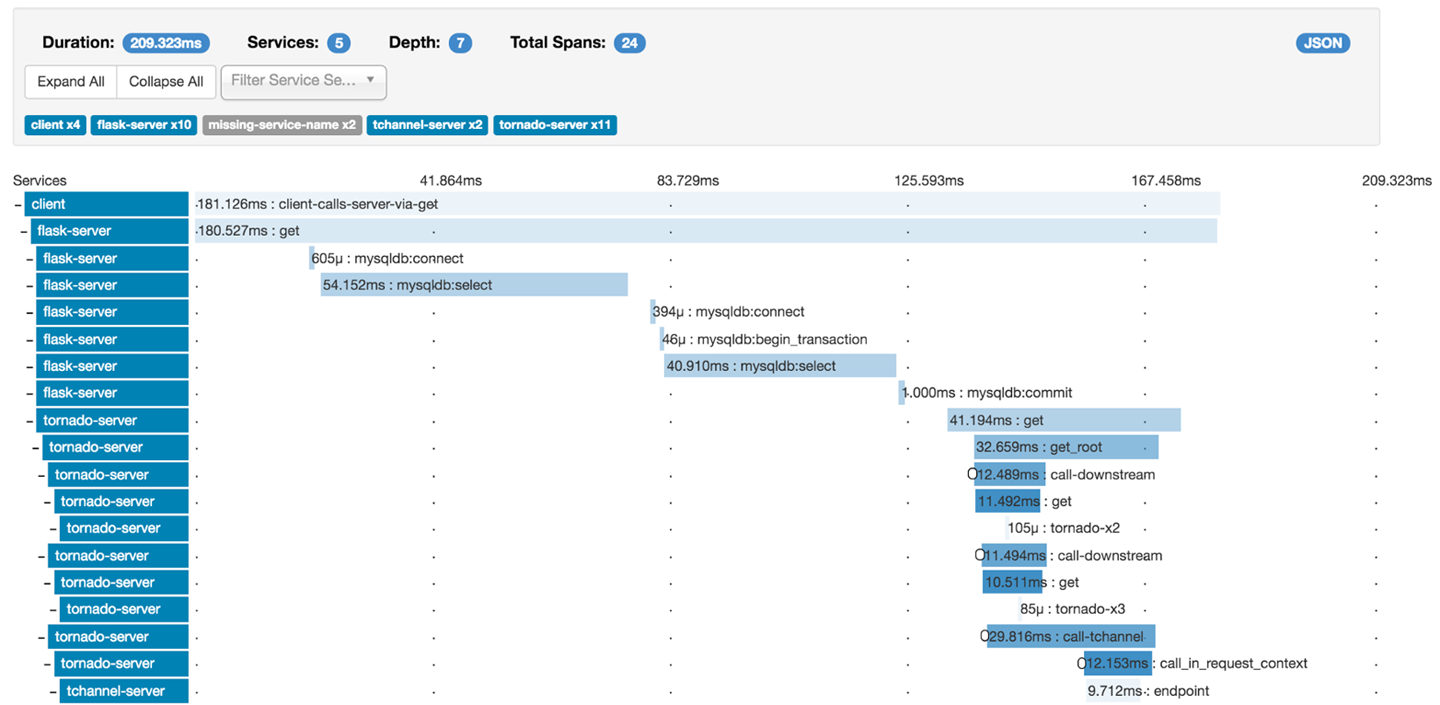

Once code has been deployed to production, you should take care not to forget about observability (monitoring, logging, and alerting), both for enabling a positive feedback loop for business and technical hypotheses, and for facilitating potential debugging of issues that occur within production.

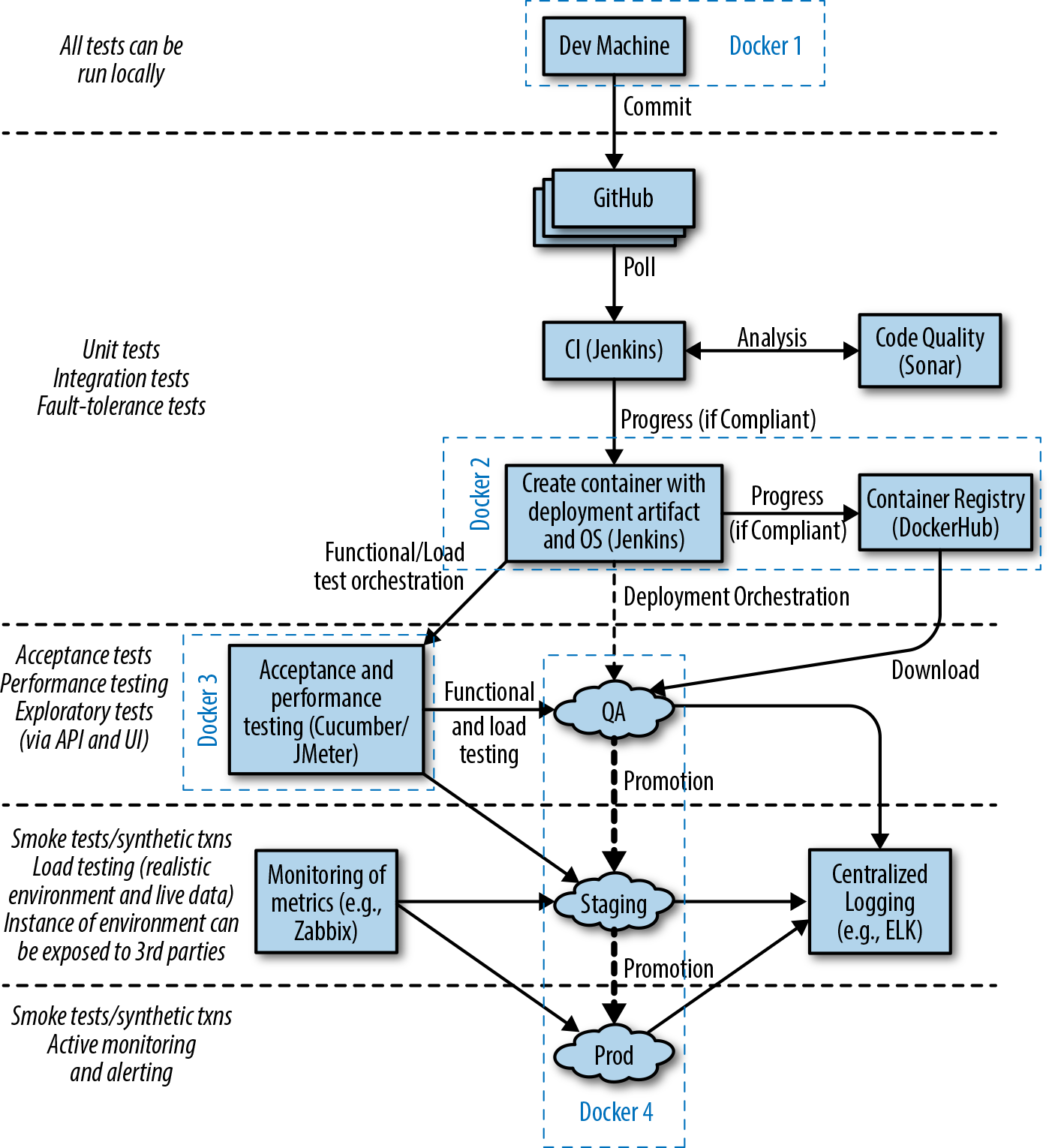

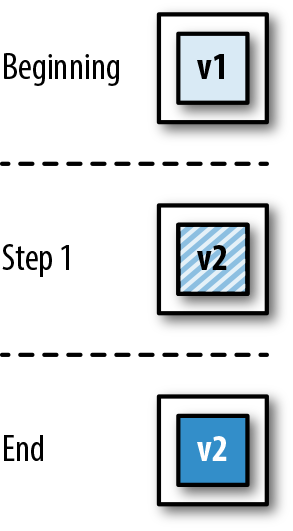

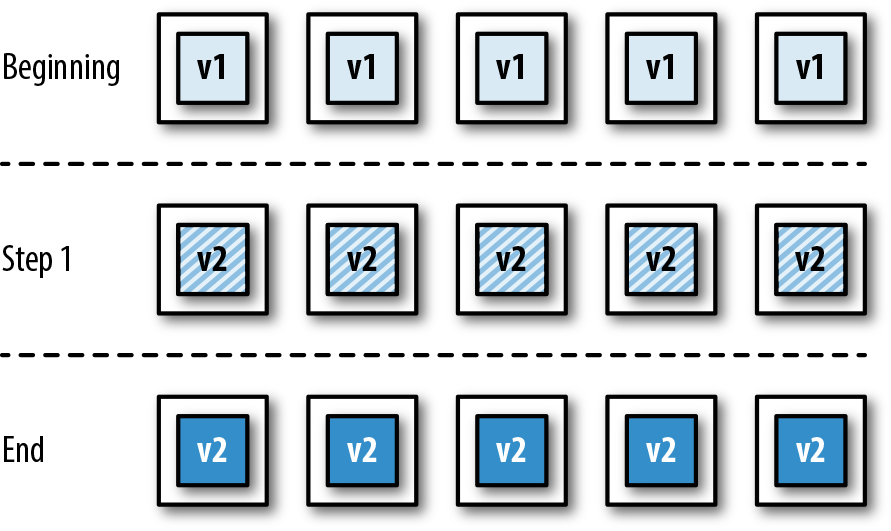

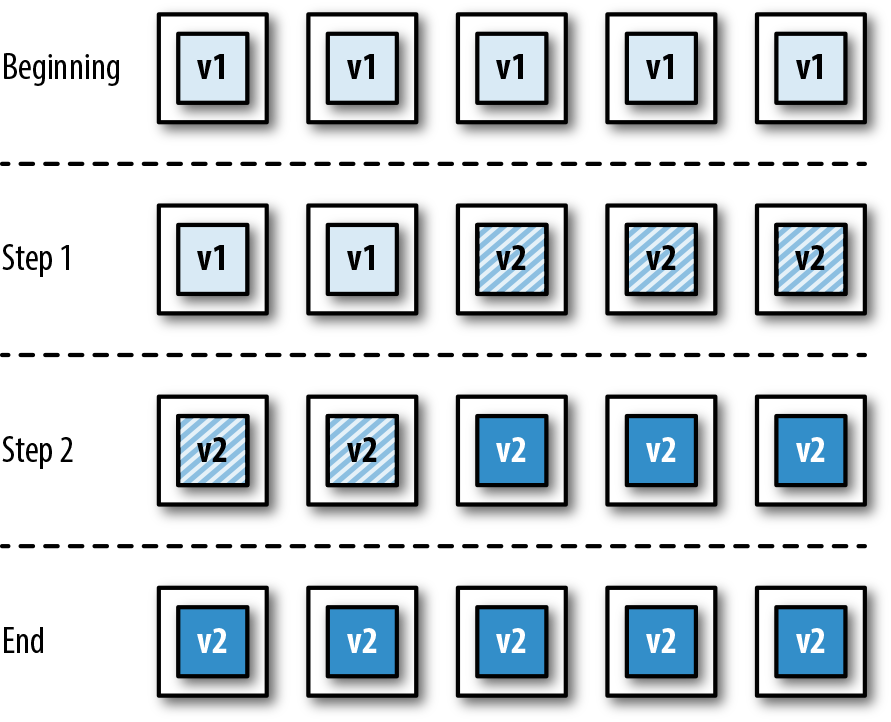

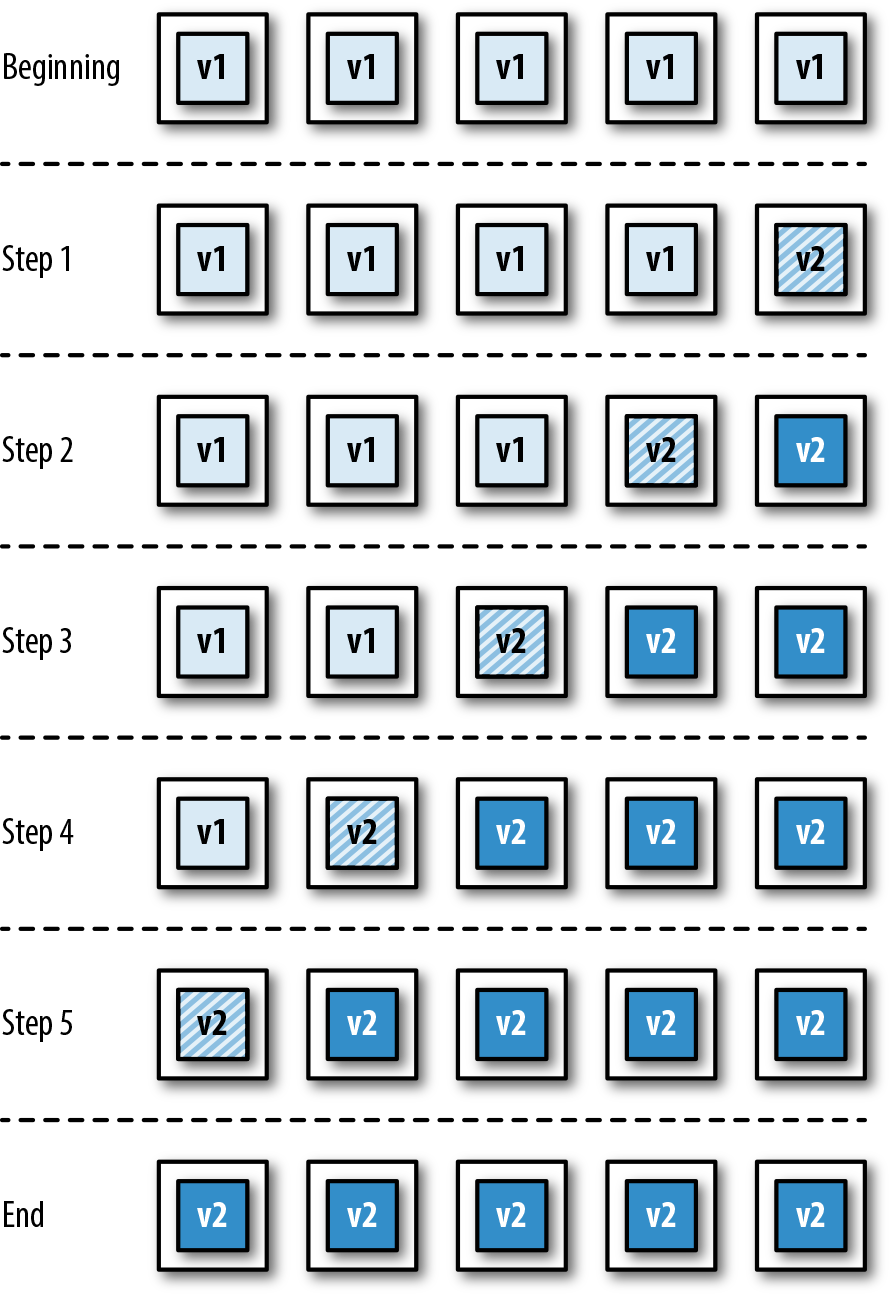

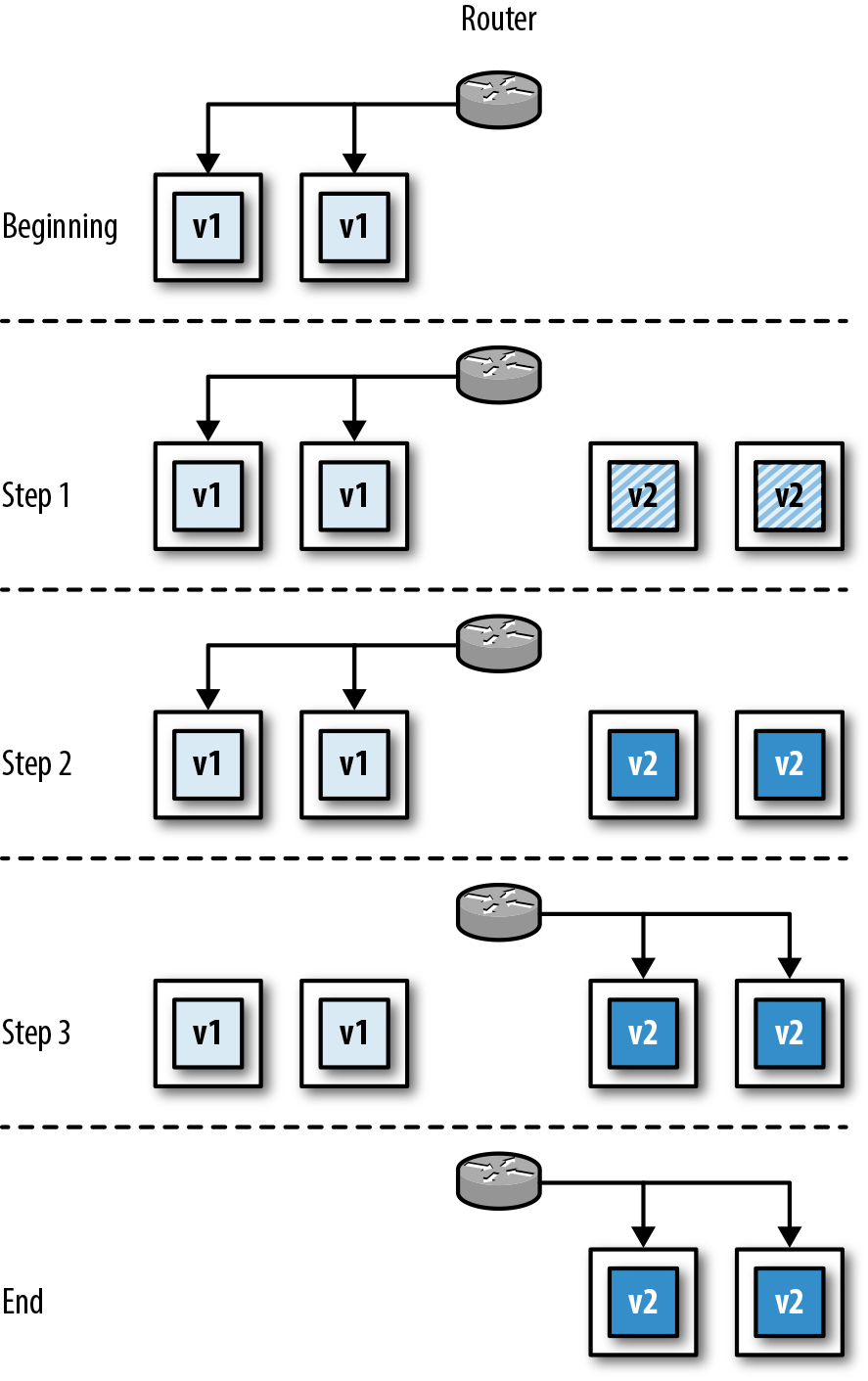

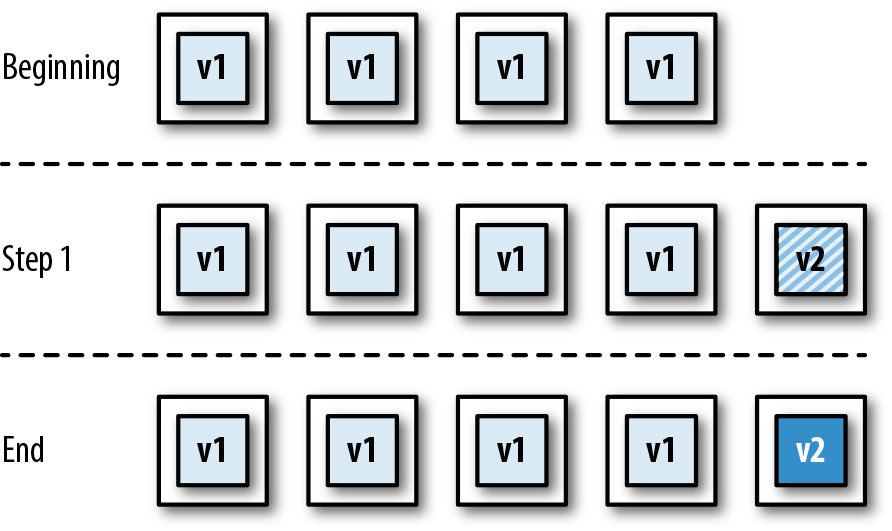

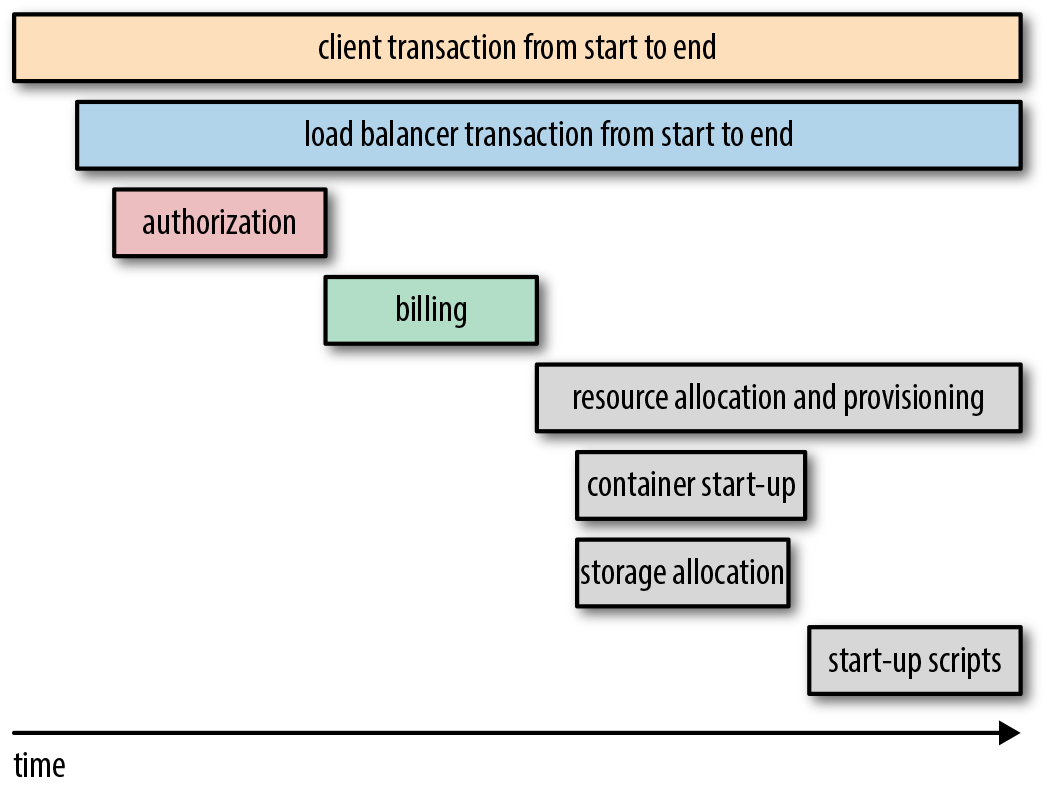

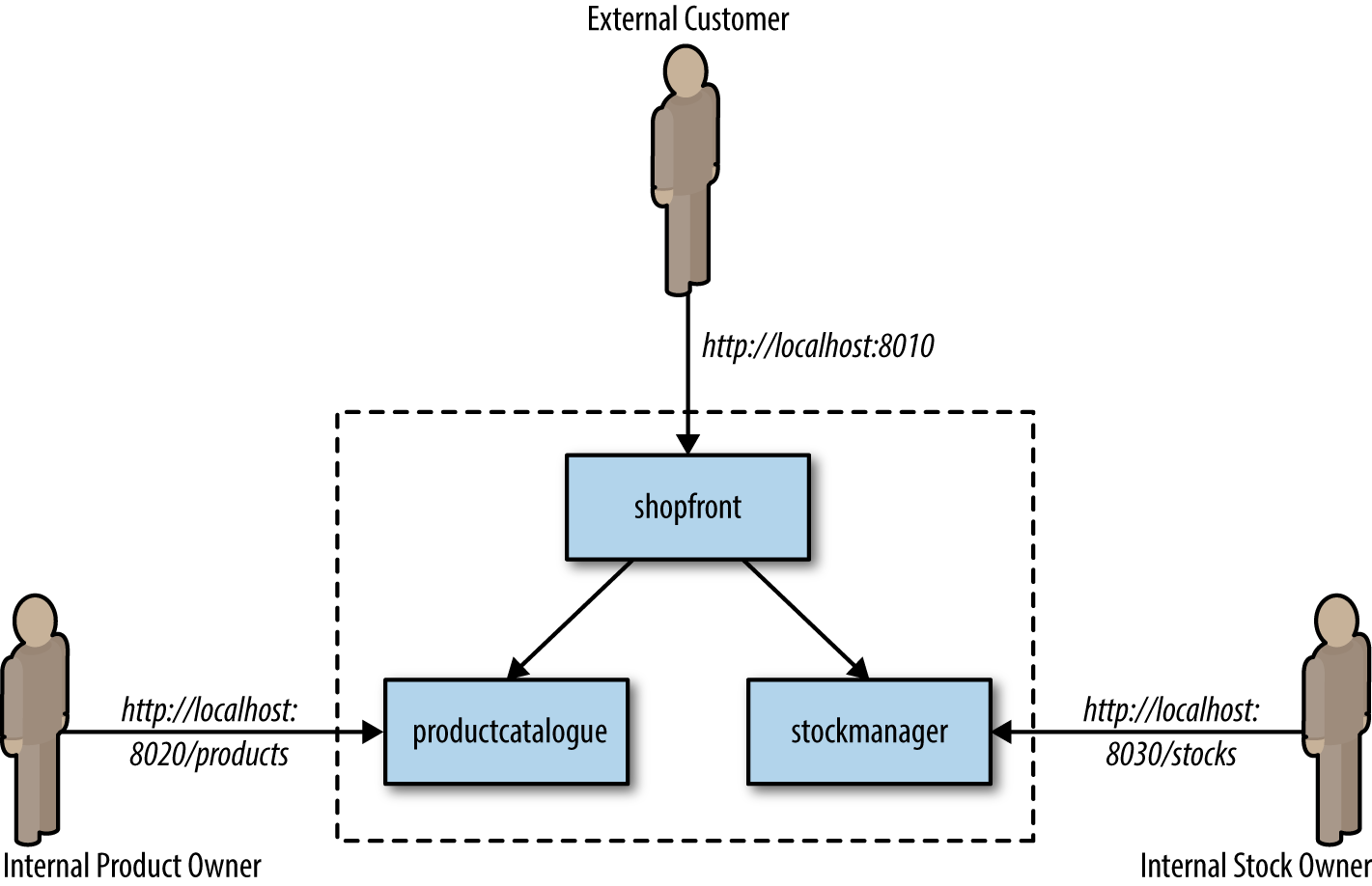

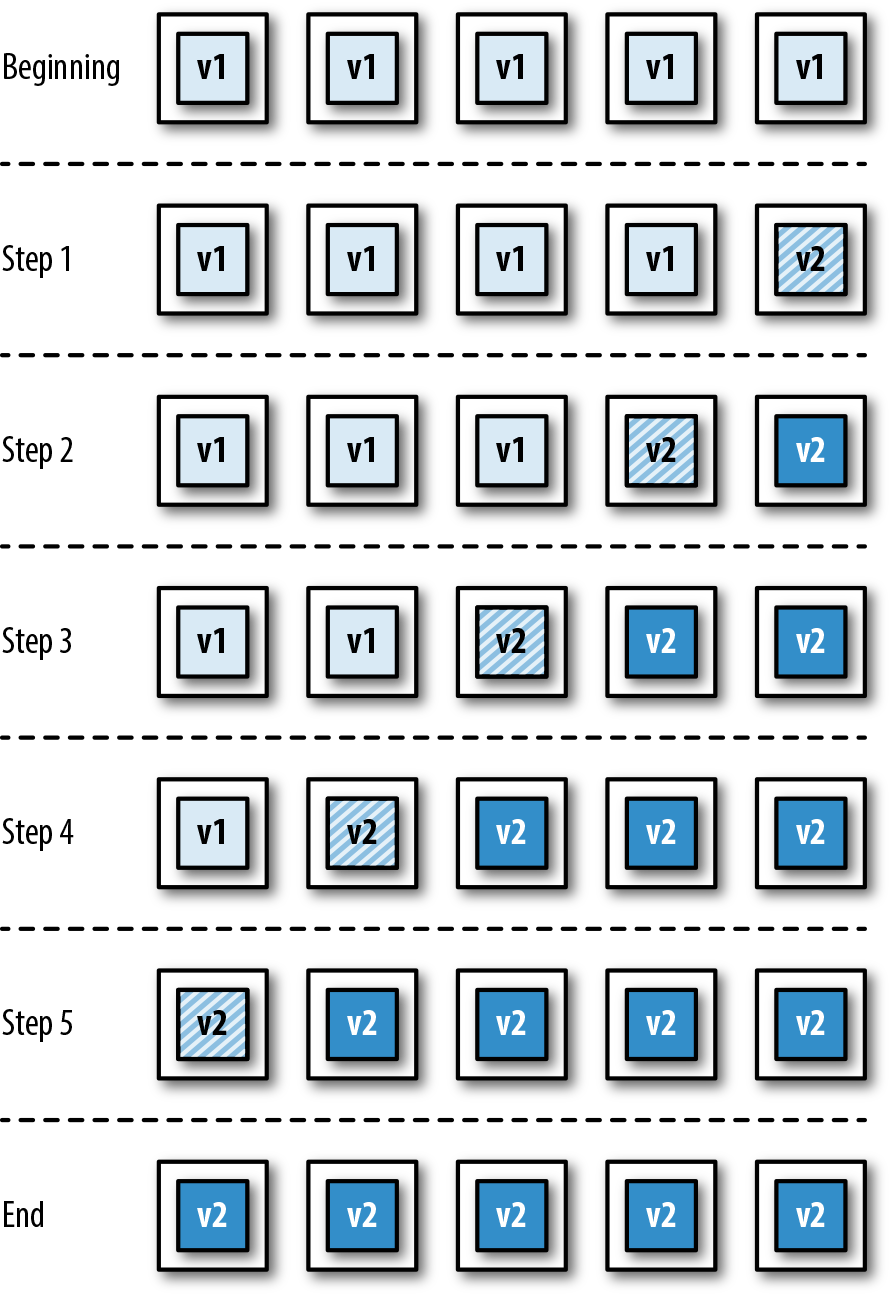

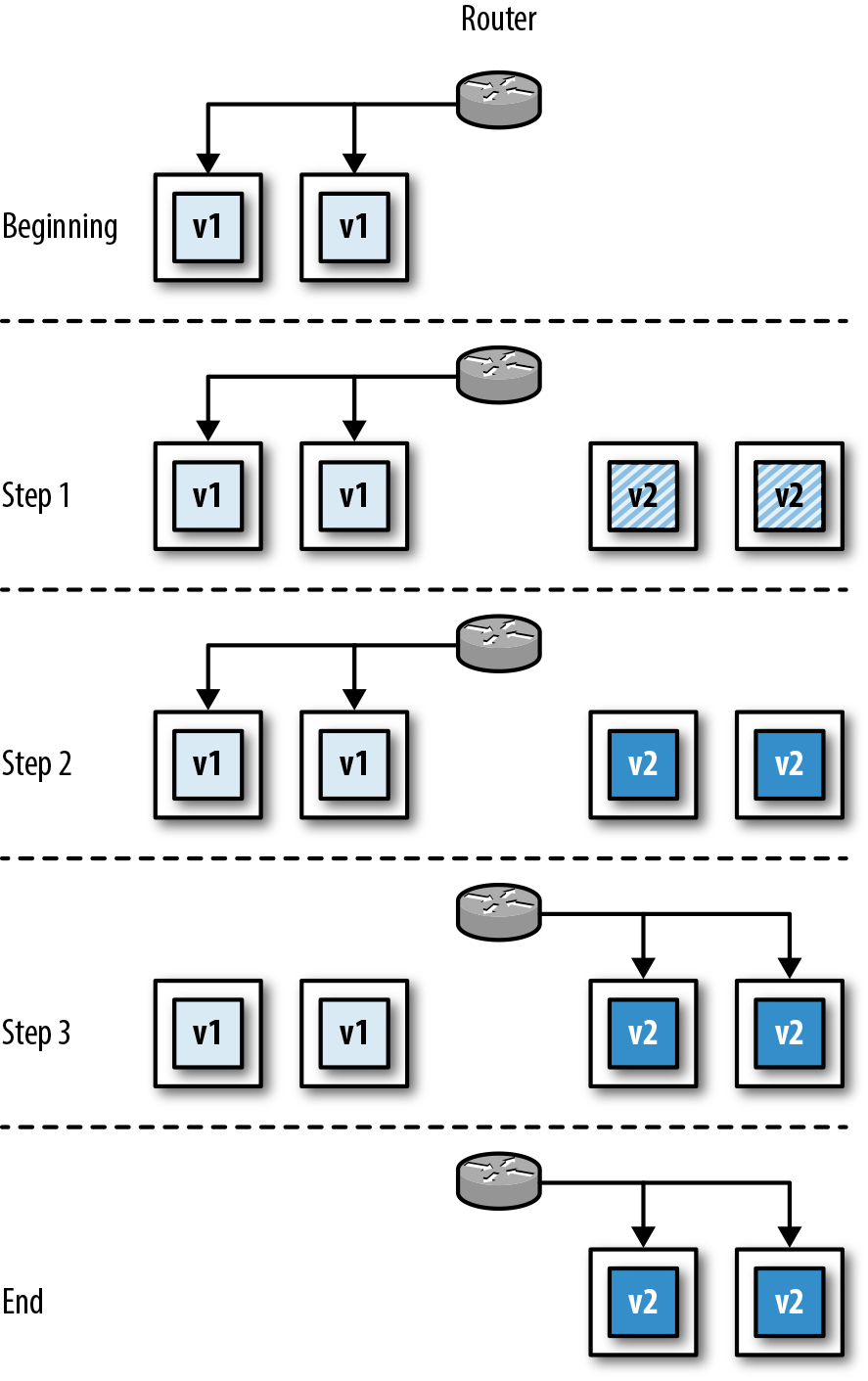

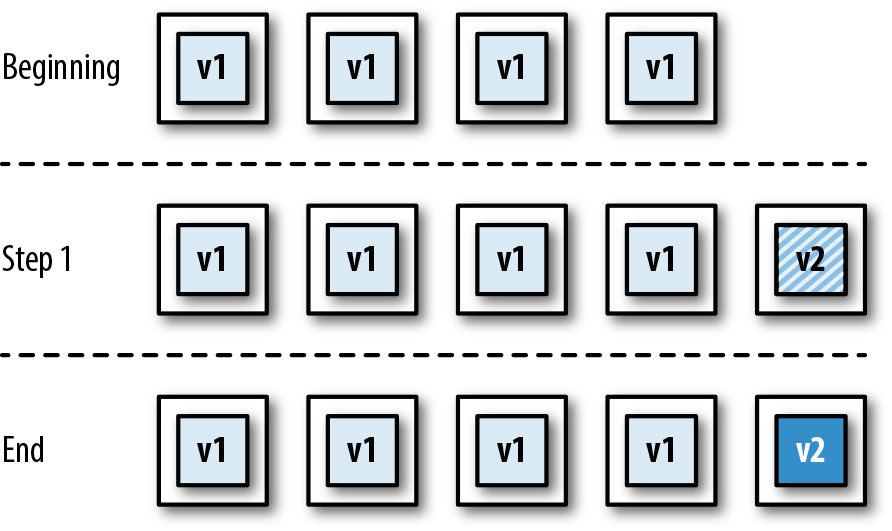

Software delivery teams are increasingly packaging their Java applications within container technology like Docker, and this can alter the way tasks such as local development, artifact packaging, and testing are conducted. Figure 1-2 identifies four key stages where changes occur:

Local development now typically requires the ability to provision a containerized environment

Packaging of the deployment artifact now focuses on the creation of a container image

The mechanism for initializing tests must now interact with and manage the container runtime environment

The deployment environments now typically use another layer of abstraction for the dynamic orchestration and scheduling of containers

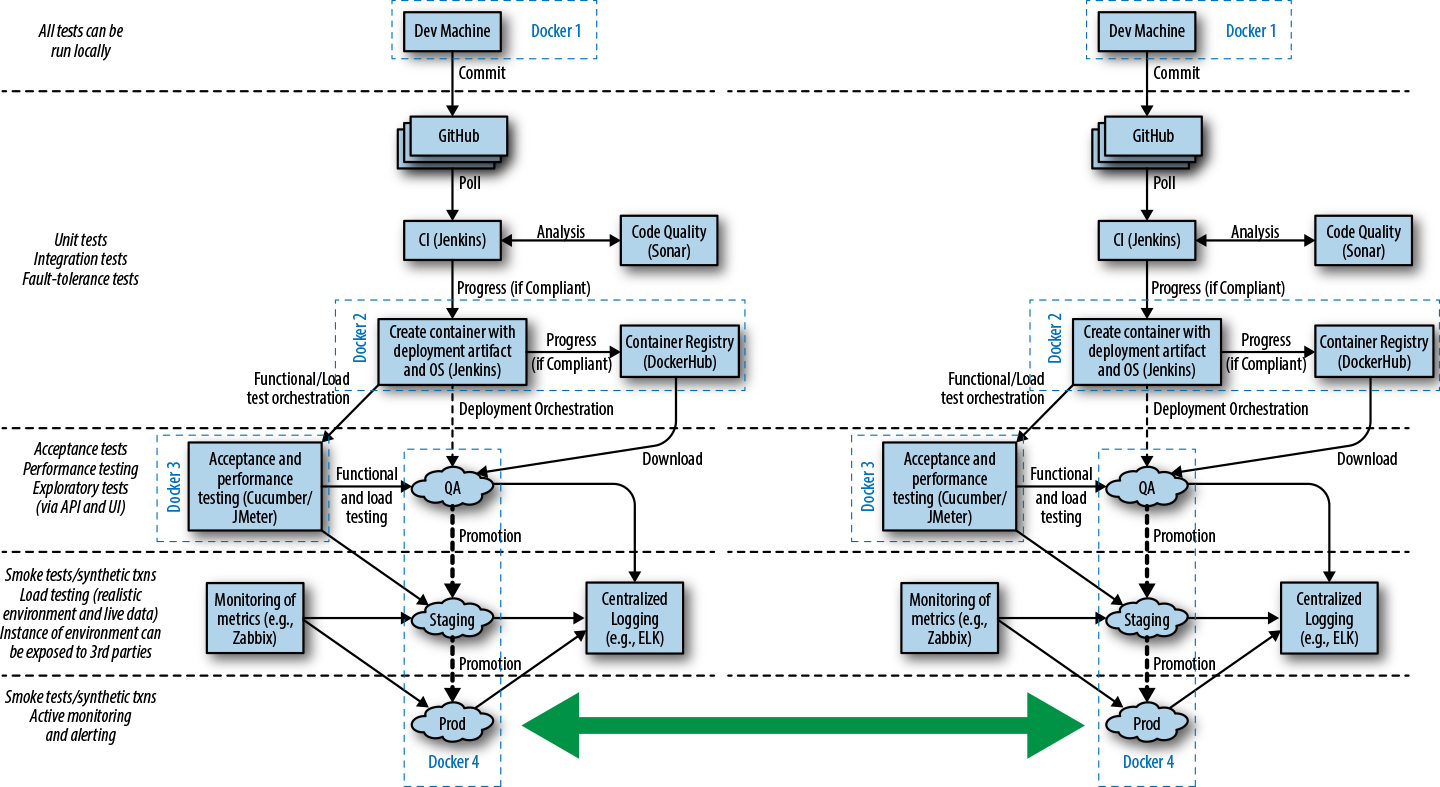

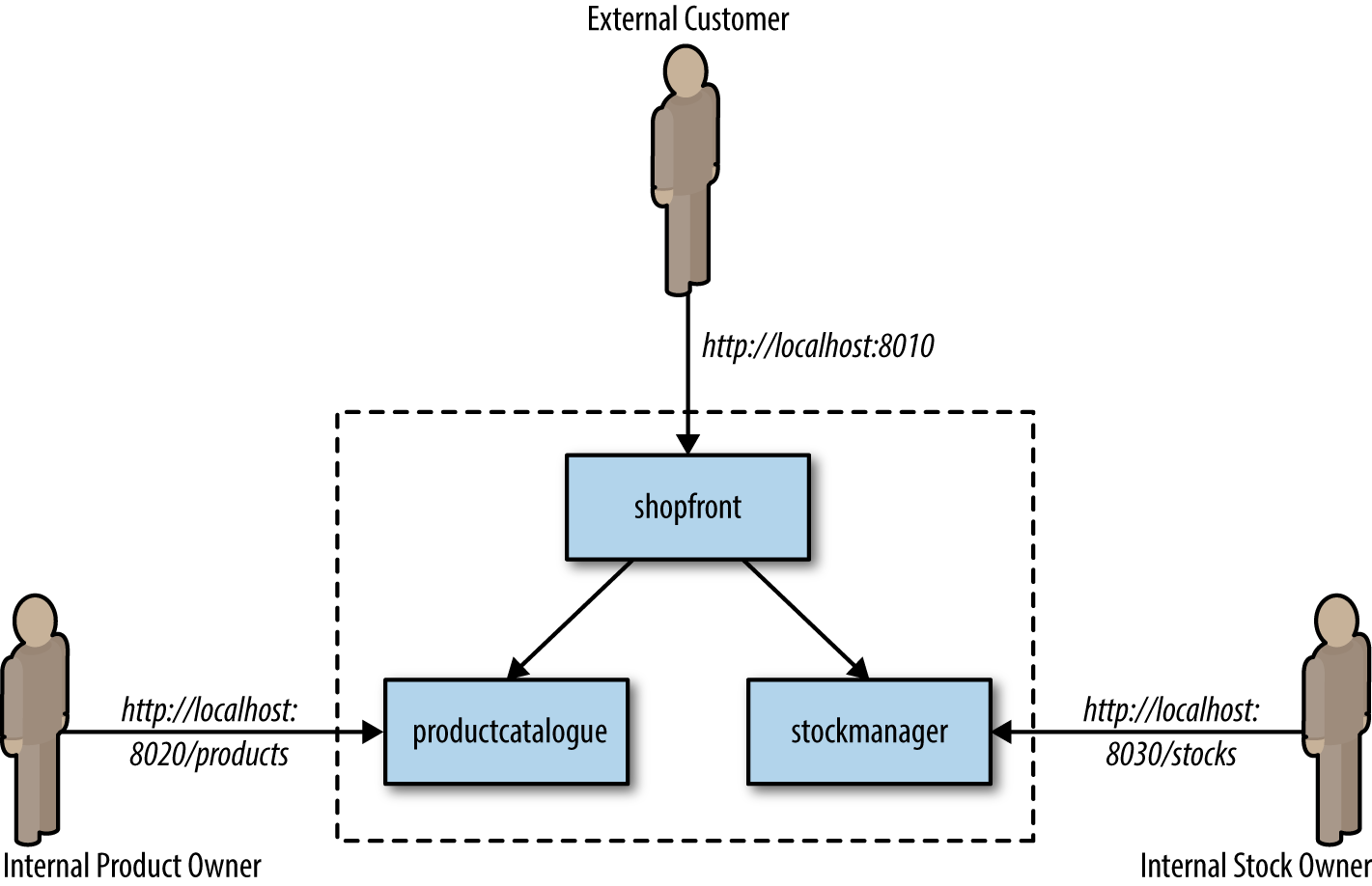

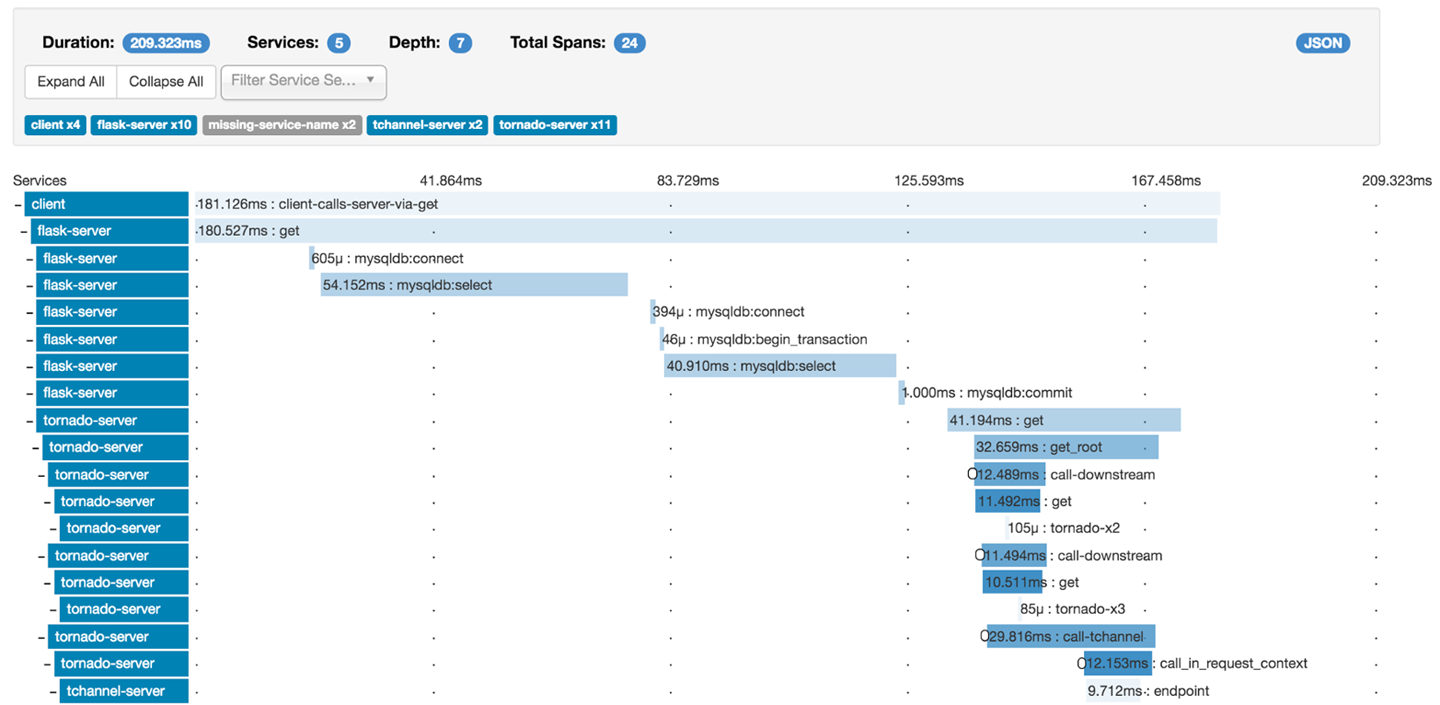

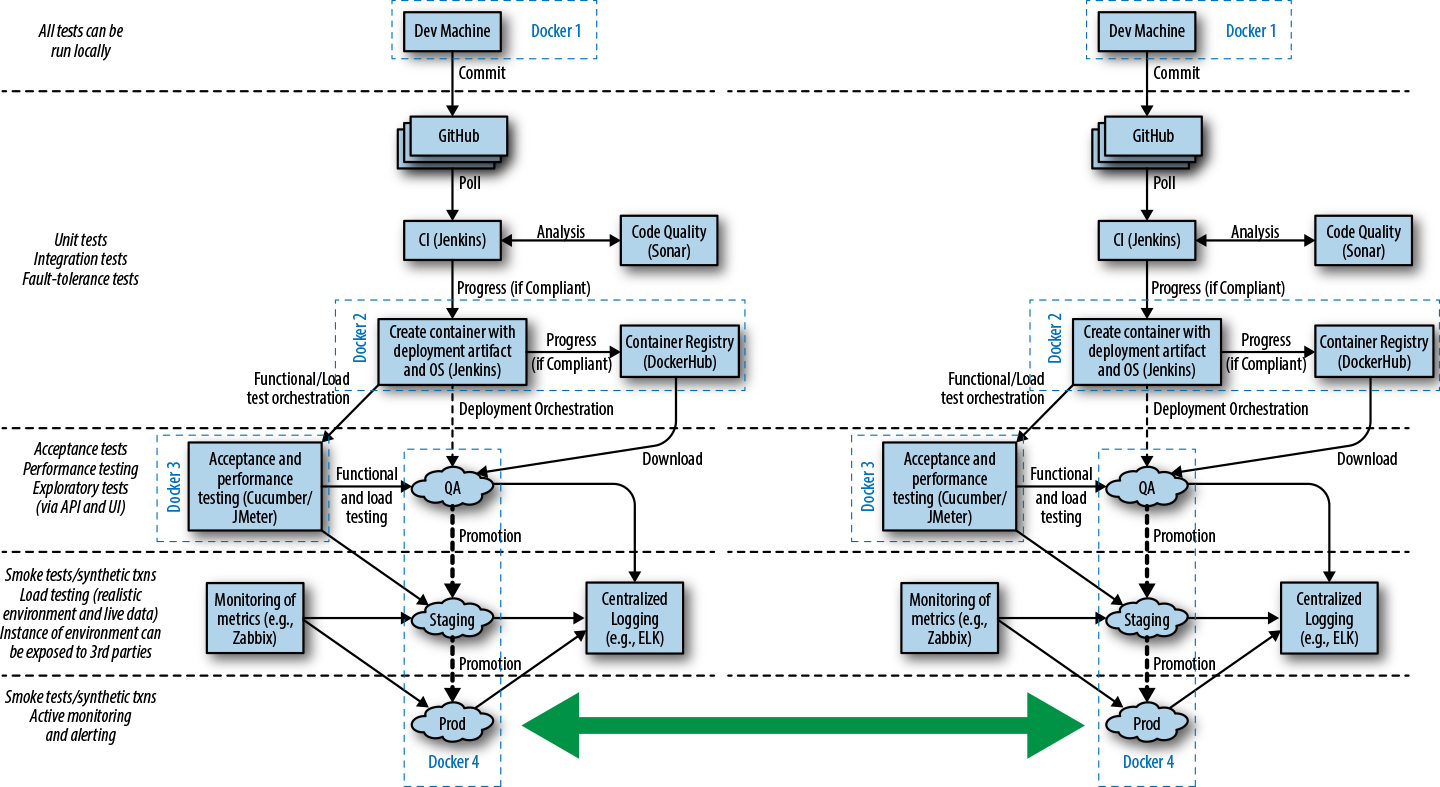

Many teams are now also building applications by using the microservices or FaaS architecture style, and this can require that multiple build pipelines are created, one for each service or function. With these types of architectures, a series of additional integration tests or contract tests are often required in order to ensure that changes to one service do not affect others. Figure 1-3 shows the impact of container technology on the build pipeline steps, as well as the challenges of multiple service integration, as shown by the large shaded arrow.

Throughout the book, we will look at creating each stage of these types of pipelines, and share our advice and experience.

In this introductory chapter, you have learned the core foundations of continuous delivery and explored the associated principles and practices.

Next you will learn about the evolution of software delivery over the past 20 years, with a focus on how Java application development has changed, and how some of the new challenges and risks introduced can be mitigated with continuous delivery. You will also explore how the ever-changing and evolving requirements, architectural and infrastructure best practices, and shifting roles within IT are increasingly driving changes in the skills required for modern software developers.

Since the introduction of Java in 1995, much has changed, and in this chapter you will learn about how this affects your role as a Java developer. Your journey begins with a brief look back in time in order to understand how Java applications and deployment platforms have evolved, with a key focus on the impact this has had on the ability to rapidly and safely deliver new software to production environments. Finally, you will explore the human and “soft skills” aspect of continuous delivery, which focuses on increasing the shared responsibility for the creation and operation of software, such as the approaches of DevOps and Site Reliability Engineering (SRE).

Many Java developers have been practicing continuous integration and some form of continuous delivery for the past decade. Innovative books including Java Power Tools (O’Reilly) by John Smart provided the guidelines and frameworks to make this possible. Technologies have obviously changed within the last 10 years, and so have associated programming and architectural styles. In particular, business teams within organizations have increasingly demanded that IT teams become more flexible and be capable of rapidly responding to changes in customer preferences and market conditions.

The emergence of dynamic and programmable compute resources and deployment platforms, combined with teams and organizations exposing application programming interfaces (APIs) as products, has caused the architectures that Java developers are creating to converge toward component/service/function-based architectures. All of these factors have led to (and, in turn, have been driven by) the emergence of popular movements such as Agile, Lean, DevOps, cloud computing, programmable infrastructure, microservices, and serverless or FaaS.

During his time as a cloud architect at Netflix, Adrian Cockcroft talked a lot about “time to market” being a competitive advantage, and in many modern markets “speed kills.” Uwe Friedrichsen, CTO at codecentric, has also talked extensively about this trend beginning in the 1980s: globalization, market saturation, and the internet led to highly competitive and dynamic ecosystems. Markets became highly demand-driven, and the biggest new challenge for companies was to adapt to the changing demands of customers quickly enough. The key driver changed from cost-efficient scaling to responsiveness.

Over the same time period, the move to public commodity infrastructure (the cloud) in combination with increasing transaction value flowing through global computer systems has meant that new failure modes are being discovered, and new attackers are emerging from the shadows. This has caused the need to balance stability and security against the requirement for speed. Often this isn’t an easy balance to maintain.

Continuous delivery is achieved when stability and speed can satisfy business demand.

Discontinuous delivery occurs when stability and speed are insufficient.

Steve Smith (@AgileSteveSmith)

Accordingly, you now need to create applications that support rapid, safe, and stable change, and continually ensure that you are meeting these requirements through automated testing and validation.

APIs are at the core of the internet and a modern developer’s daily life. RESTful services are the de facto way to expose and consume third-party online business services. However, as stated by Jennifer Riggins when attending the 2017 APIDays conference, what people might not realize is how much the API will be at the center of the future technology and part of every connected person’s daily life. APIs will continue to play a central role in trends like chatbots and virtual assistants, the Internet of Things (IoT), mobile services, and so much more.

APIs are also being increasingly consumed as “shadow IT” by departments that were traditionally less “tech-savvy,” like marketing, sales, finance, and human resources. Mediated APIs—APIs that act as bridges between new and old applications—are becoming increasingly popular, as they provide adaptations and opportunities for innovation in businesses that have considerable investment locked within legacy infrastructure. Gartner, the US-based research and advisory firm, suggests that concepts such as the API marketplace and the API economy are becoming increasingly important within the global economy.

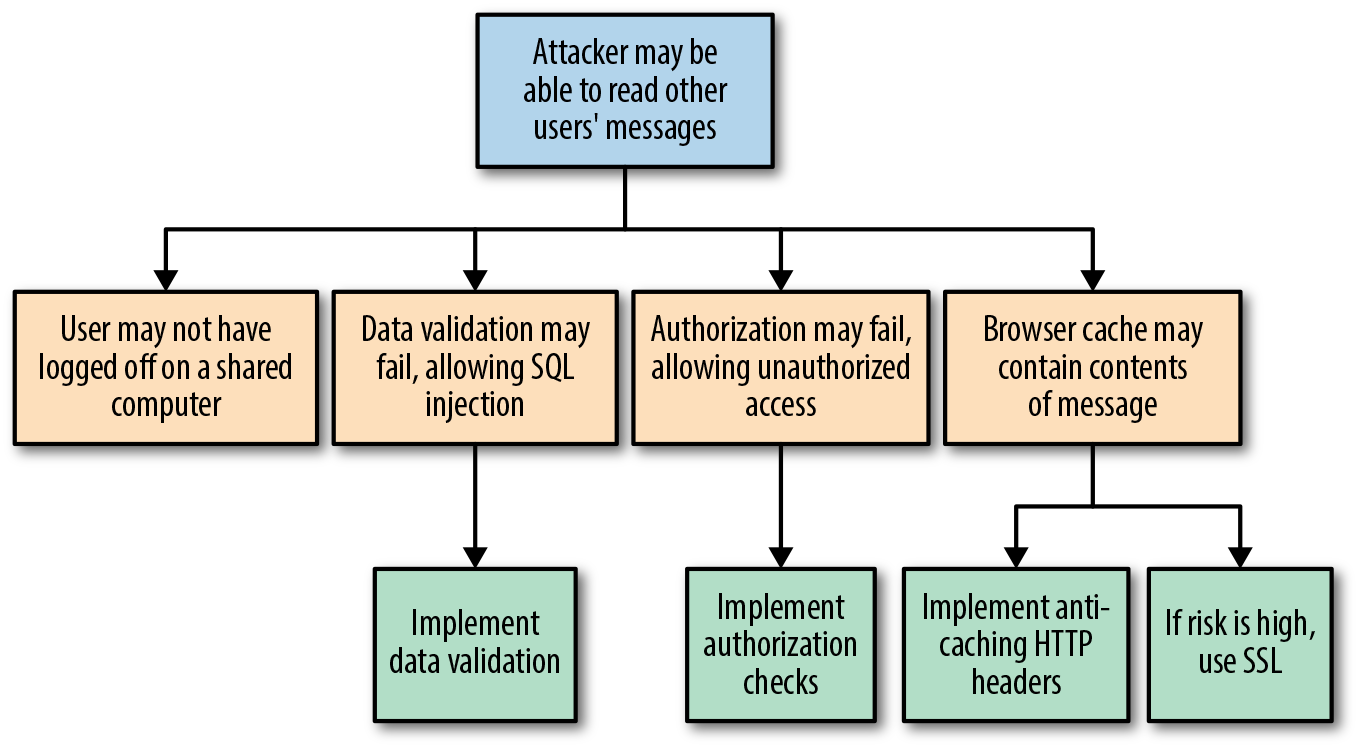

As the API marketplace becomes more sophisticated and widespread, the risks for failure and security issues become more apparent. APIs have made technology more accessible than ever, which means that enterprise architects, the traditional bastions of technology adoption, are no longer the gatekeepers for technical decision-making. Accordingly, this empowers every developer in an organization to innovate, but at the same time can lead to unintended consequences. It is essential to codify not only functional requirements for an API—for example, using BDD and automated testing—but also nonfunctional (or cross-functional) requirements and service-level agreements (SLAs) related to security, performance, and expected cost. These must be continually tested and validated, as this has a direct impact on the product being offered to customers.

It can be argued that the cloud computing revolution began when Amazon Web Services (AWS) was officially launched in March 2006. Now the cloud computing market includes other big players like Microsoft Azure and Google Cloud Platform, and generates $200+ billion in revenue annually. Cloud computing technologies have brought many advantages—on-demand hardware, rapid scalability and provisioning, and flexible pricing—but have also provided many challenges for developers and architects. These include the requirements to design for the ephemeral nature of cloud computing resources, the need to understand the underlying characteristics of a cloud system (including mechanical sympathy and fault tolerance), and the requirement for an increase in operational and sysadmin knowledge (such as operating systems, configuration management, and networking).

Developers unfamiliar with cloud technologies must be able to experiment and implement continuous testing with these deployment fabrics and platforms, and this must be done in a repeatable and reliable way. Early testing within a build pipeline using applications deployed on infrastructure and platforms that are as production-like as possible is essential to ensure that assumptions on performance, fault tolerance, and security are valid.

The combination of the need for speed from the business, the adoption of REST-like APIs, and the emergence of cloud computing has provided new opportunities and challenges to software architecture. Core topics in this space include the scaling of both the organizational aspects of developing software (e.g., Conway’s law) and the technical aspects (e.g., modularization), as well as the requirement to deploy and operate parts of the codebase independently of each other. Much of this has been incorporated within the emerging architectural pattern known as microservices.

This book discusses the drivers and core concepts of microservices in Chapter 3 and explores how this helps and hinders the implementation of CD. A further introduction to microservices can be found in Christian Posta’s Microservices for Java Developers (O’Reilly), and a more thorough treatment can be found in Sam Newman’s Building Microservices (O’Reilly) and Irakli Nadareishvili et al.’s Microservice Architecture (O’Reilly). At a high level, the building of Java-based microservices impacts the implementation of CD in several ways:

Multiple build pipelines (or branches within a single pipeline) must be created and managed.

Deployment of multiple services to an environment has to be orchestrated, managed, and tracked.

Component testing may have to mock, stub, or virtualize dependent services.

End-to-end testing must orchestrate multiple services (and associated state) before and after executing tests.

A process must be implemented to manage service version control (e.g., the enforcement of allowing the deployment of only compatible, interdependent services).

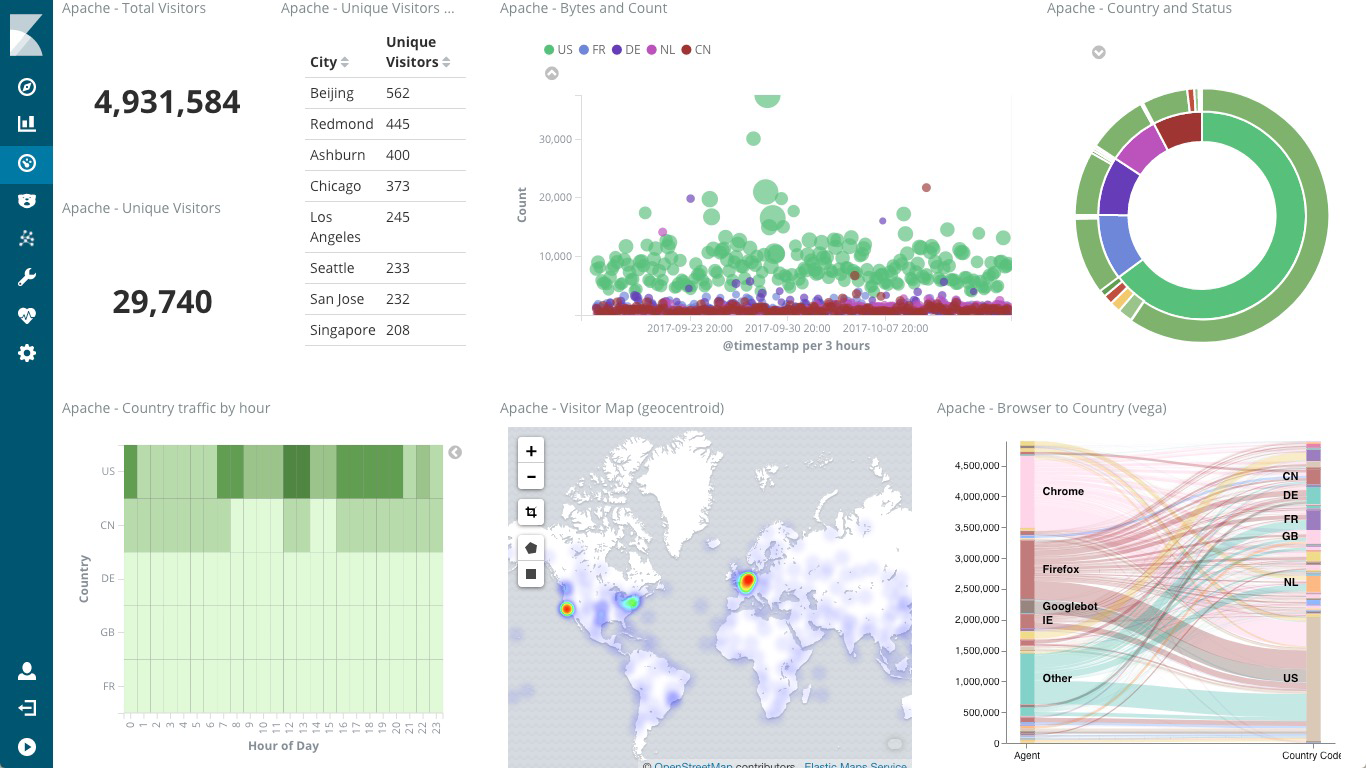

Monitoring, metrics, and application performance management (APM) tooling must be adapted to handle multiple services.
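One of the points above, allowing the deployment of only compatible, interdependent services, can be sketched as a simple pipeline gate. This is a minimal illustration under stated assumptions, not a description of any particular tool: the class `CompatibilityGate`, its methods, and the service names used with it are all hypothetical, and it assumes that earlier pipeline stages record which consumer/provider version pairs have been verified.

```java
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Hypothetical deployment gate; names and structure are illustrative only.
class CompatibilityGate {
    // Each entry is a consumer/provider version pair that has been verified,
    // for example by contract or integration tests earlier in the pipeline.
    private final Set<String> verifiedPairs = new HashSet<>();

    private static String key(String consumer, String consumerVersion,
                              String provider, String providerVersion) {
        return consumer + "@" + consumerVersion + "->" + provider + "@" + providerVersion;
    }

    void recordVerified(String consumer, String consumerVersion,
                        String provider, String providerVersion) {
        verifiedPairs.add(key(consumer, consumerVersion, provider, providerVersion));
    }

    // deployedProviders maps each provider the consumer depends on to the
    // version currently running in the target environment.
    boolean canDeploy(String consumer, String consumerVersion,
                      Map<String, String> deployedProviders) {
        for (Map.Entry<String, String> provider : deployedProviders.entrySet()) {
            String pair = key(consumer, consumerVersion, provider.getKey(), provider.getValue());
            if (!verifiedPairs.contains(pair)) {
                return false; // at least one dependency pairing is unverified
            }
        }
        return true;
    }
}
```

A pipeline step could call `canDeploy` before promoting a service and fail the build when it returns `false`, which keeps the compatibility rule enforced by automation rather than by convention.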

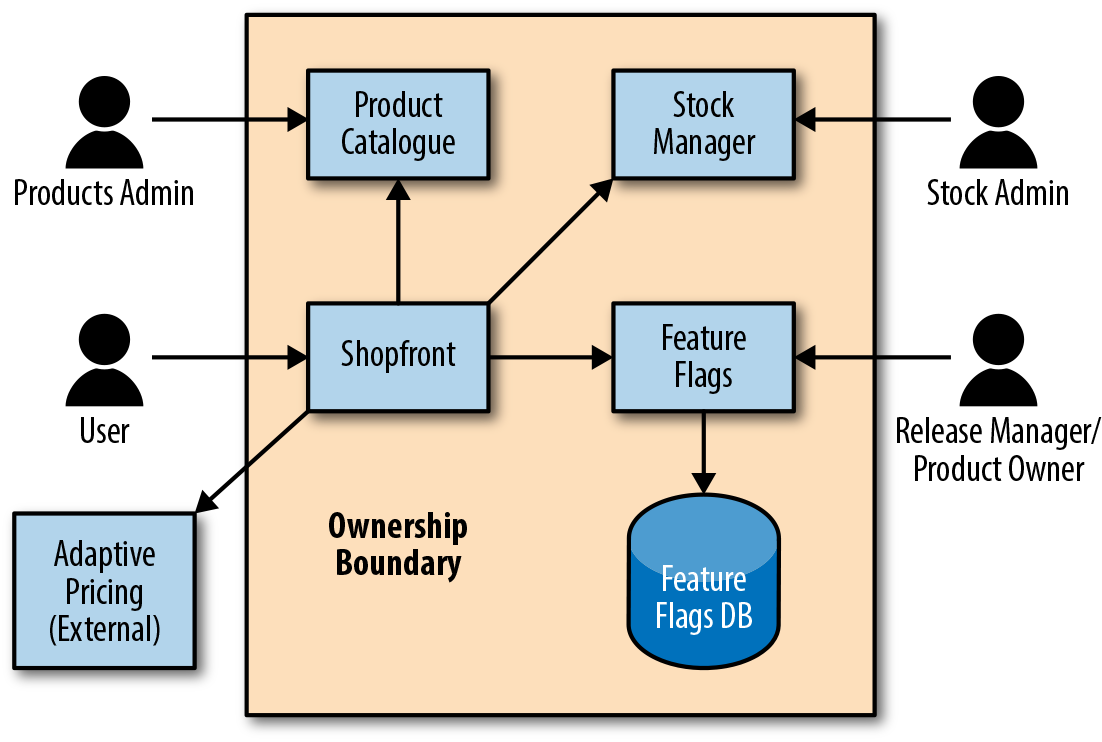

Decomposing an existing monolithic application, or creating a new application that provides functionality through a composite of microservices, is a nontrivial task. Techniques such as context mapping, from domain-driven design, can help developers (working alongside stakeholders and the QA team) understand how application/business functionality should be composed as a series of bounded contexts or focused services. Regardless of how applications are composed, it is still vitally important that both individual components and the system as a whole are continually being integrated and validated. The need for continuous delivery only increases as more and more components are combined, as it becomes nearly impossible to manually reason about their combined interactions and functionality.

Hopefully, this exploration of the requirements of modern Java applications has highlighted the benefits—and in some cases, the essential need—of continuous delivery to ensure that software systems provide the required functionality. The changing requirements, infrastructure, and architectural styles are just parts of the puzzle, though. At the same time, new platforms have emerged that have either codified several of the architectural best practices or have attempted to help address some of the same problems.

Java has an amazing history, and not many languages that are still relevant today can claim to have been used for more than 20 years. Obviously, during this time, the language itself has evolved, partly to continually drive improvement and developer productivity, and partly to meet the requirements imposed by new hardware and architectural practices. Because of this long history, there are now a multitude of ways to deploy Java applications into production.

The native packaging format for Java is the Java Archive (JAR) file, which can contain library code or a runnable artifact. The initial best-practice approach to deploying Java Enterprise Edition (J2EE) applications was to package code into a series of JARs, often consisting of modules that contain Enterprise JavaBeans (EJB) class files and EJB deployment descriptors. These were further bundled up into another specific type of JAR with a defined directory structure and a required metadata file.

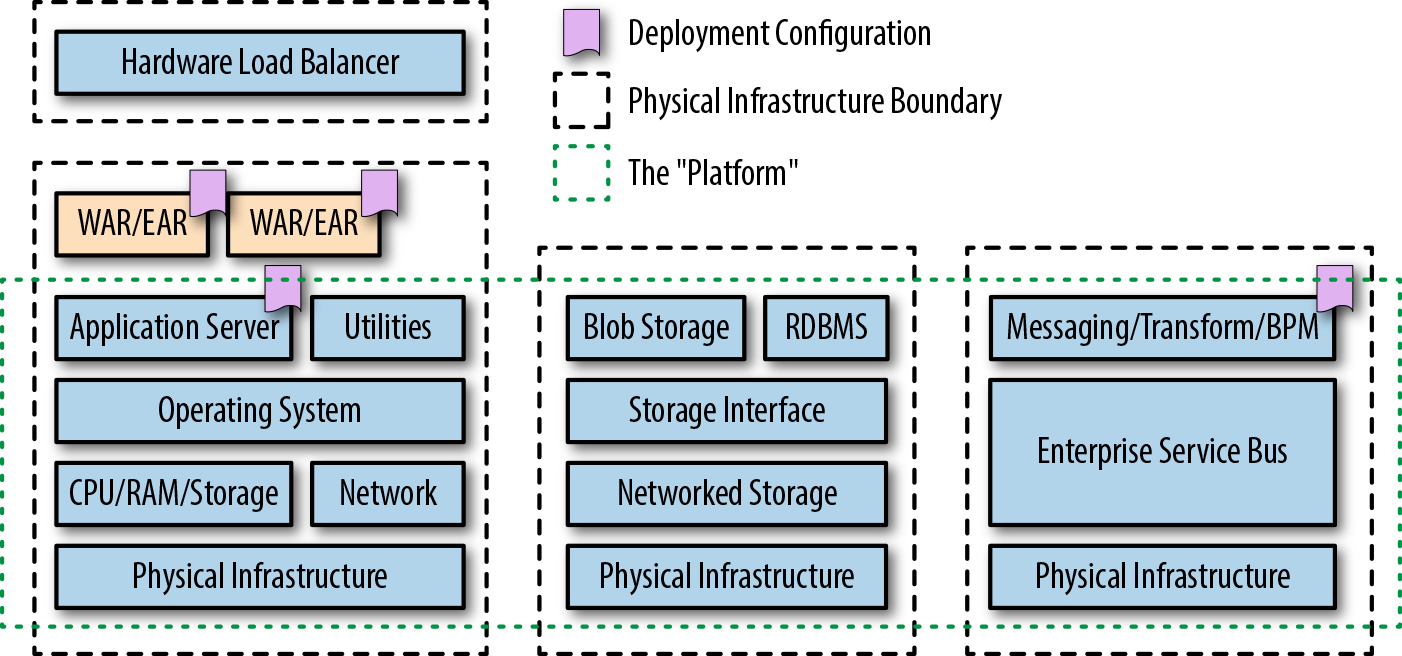

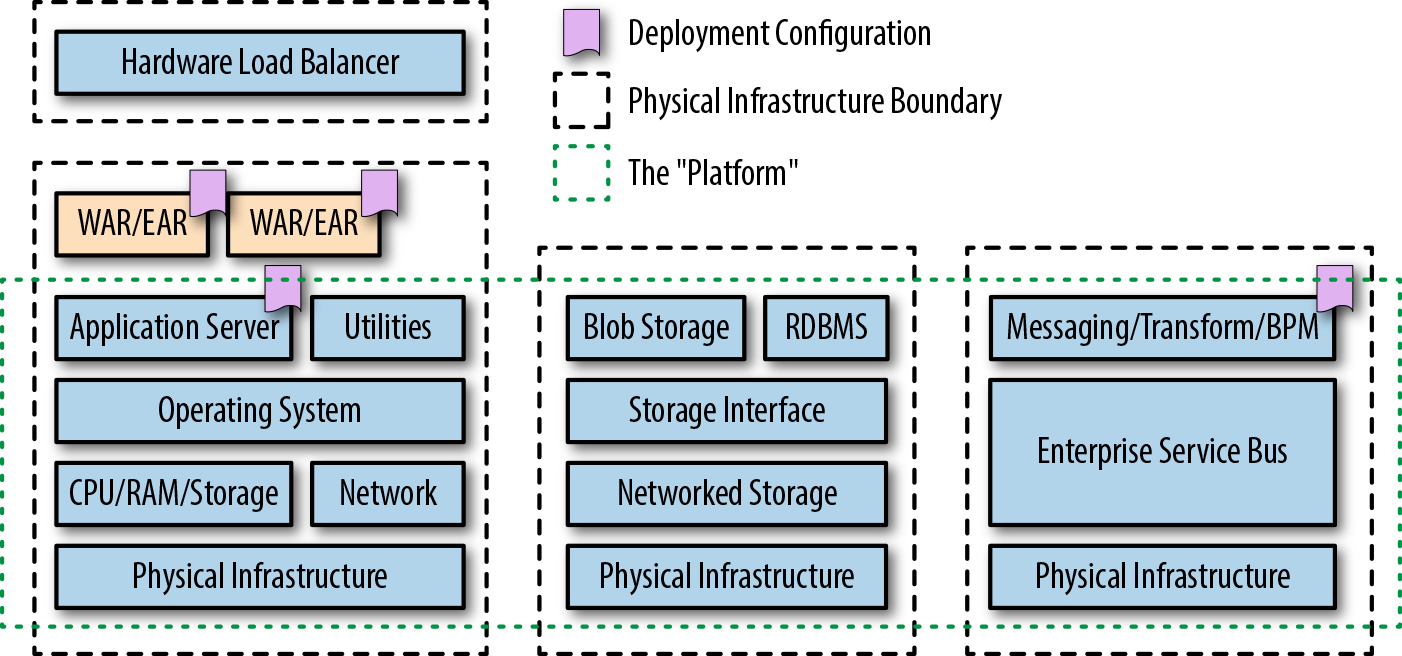

The bundling resulted in either a Web Application Archive (WAR)—which consisted of servlet class files, JSP files, and supporting files—or an Enterprise Application Archive (EAR) file—which contained all the required mix of JAR and WAR files for the deployment of a full J2EE application. As shown in Figure 2-1, this artifact was then deployed into a heavyweight application server (commonly referred to at the time as a “container”) such as WebLogic, WebSphere, or JBoss EAP. These application servers offered container-managed enterprise features such as logging, persistence, transaction management, and security.

Several lightweight application servers also emerged in response to changing developer and operational requirements, such as Apache Tomcat, TomEE, and Red Hat’s WildFly. Classic Java Enterprise applications and service-oriented architecture (SOA) were also typically supported at runtime by the deployment of messaging middleware, such as enterprise service buses (ESBs) and heavyweight message queue (MQ) technologies.

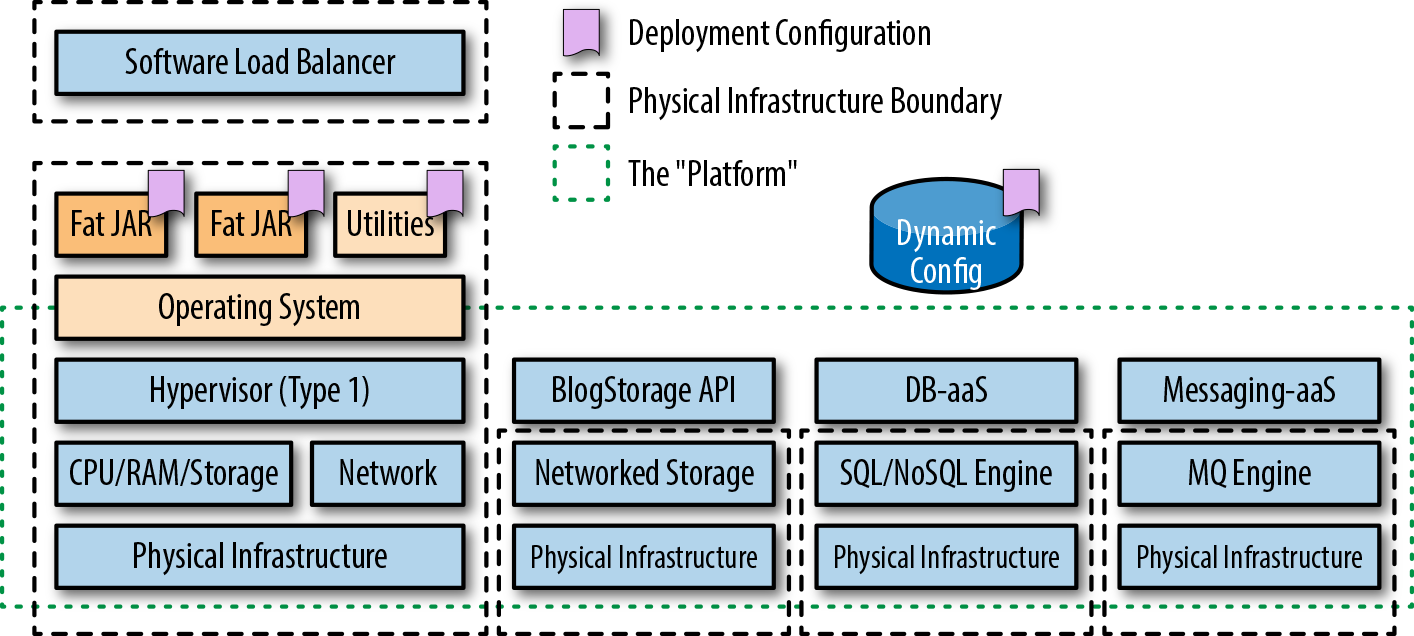

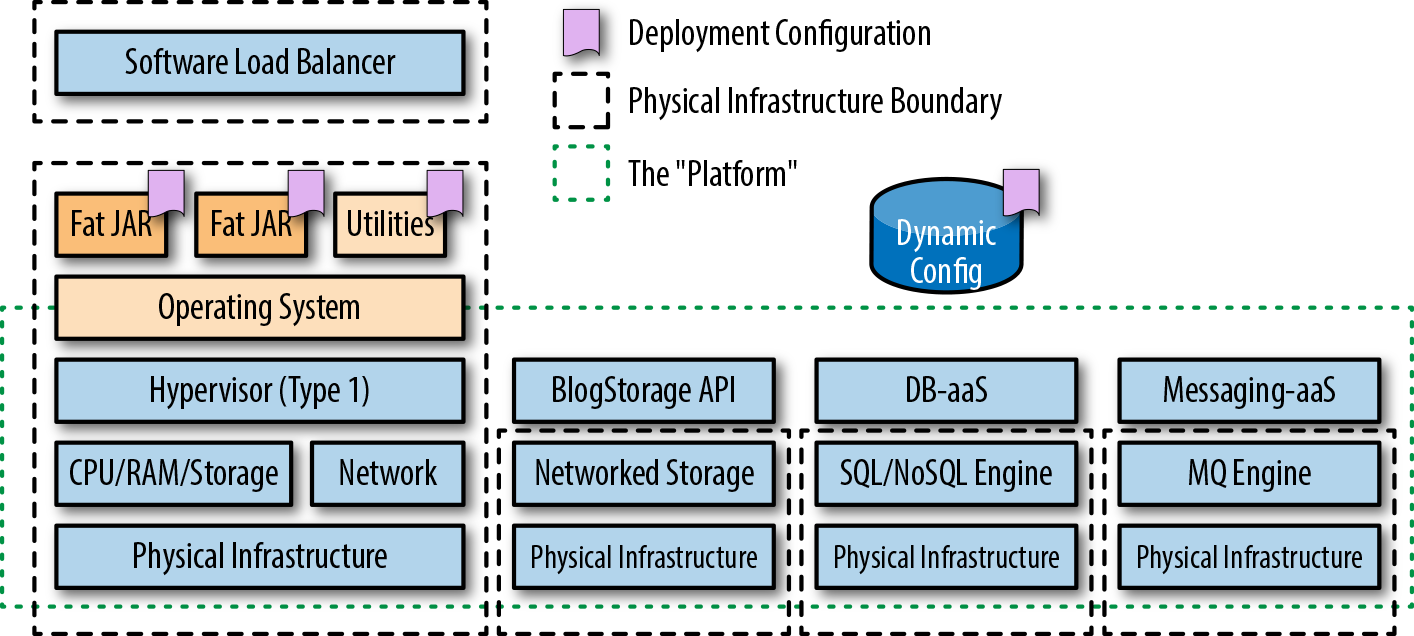

With the emergence of the next generation of cloud-friendly service-based architectures and the introduction of open source and commercial platform-as-a-service (PaaS) offerings like Google App Engine and Cloud Foundry, deploying Java applications by using lightweight and embedded application servers became popular, as shown in Figure 2-2. Technologies that emerged to support this included the in-memory Jetty web server and later editions of Tomcat. Application frameworks such as Dropwizard and Spring Boot soon began providing mechanisms through Maven and Gradle to package (for example, using the Apache Maven Shade Plugin) and embed these application servers into a single deployable unit that can run as a standalone process. The executable fat JAR was born.

The best practices for developing, deploying, and operating this new generation of applications were codified by the team at Heroku as the Twelve-Factor App.

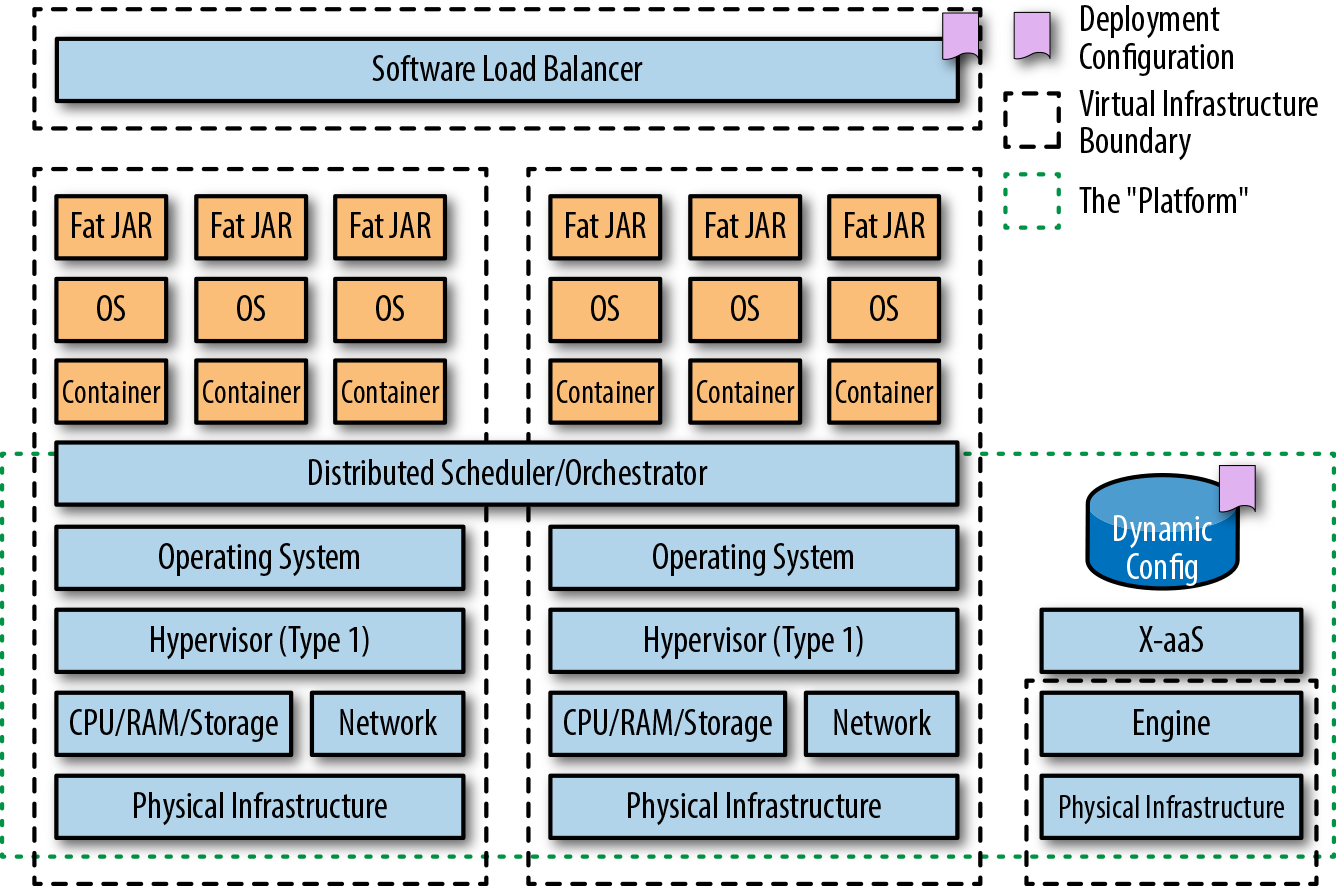

Although Linux container technology had been around for quite some time, the creation of Docker in March 2013 brought this technology to the masses. At the core of containers are Linux technologies like cgroups, namespaces, and a (pivot) root filesystem. If fat JARs extended the scope of traditional Java packaging and deployment mechanisms, containers have taken this to the next level. Now, in addition to packaging your Java application as a fat JAR, you must include an operating system (OS) within your container image.

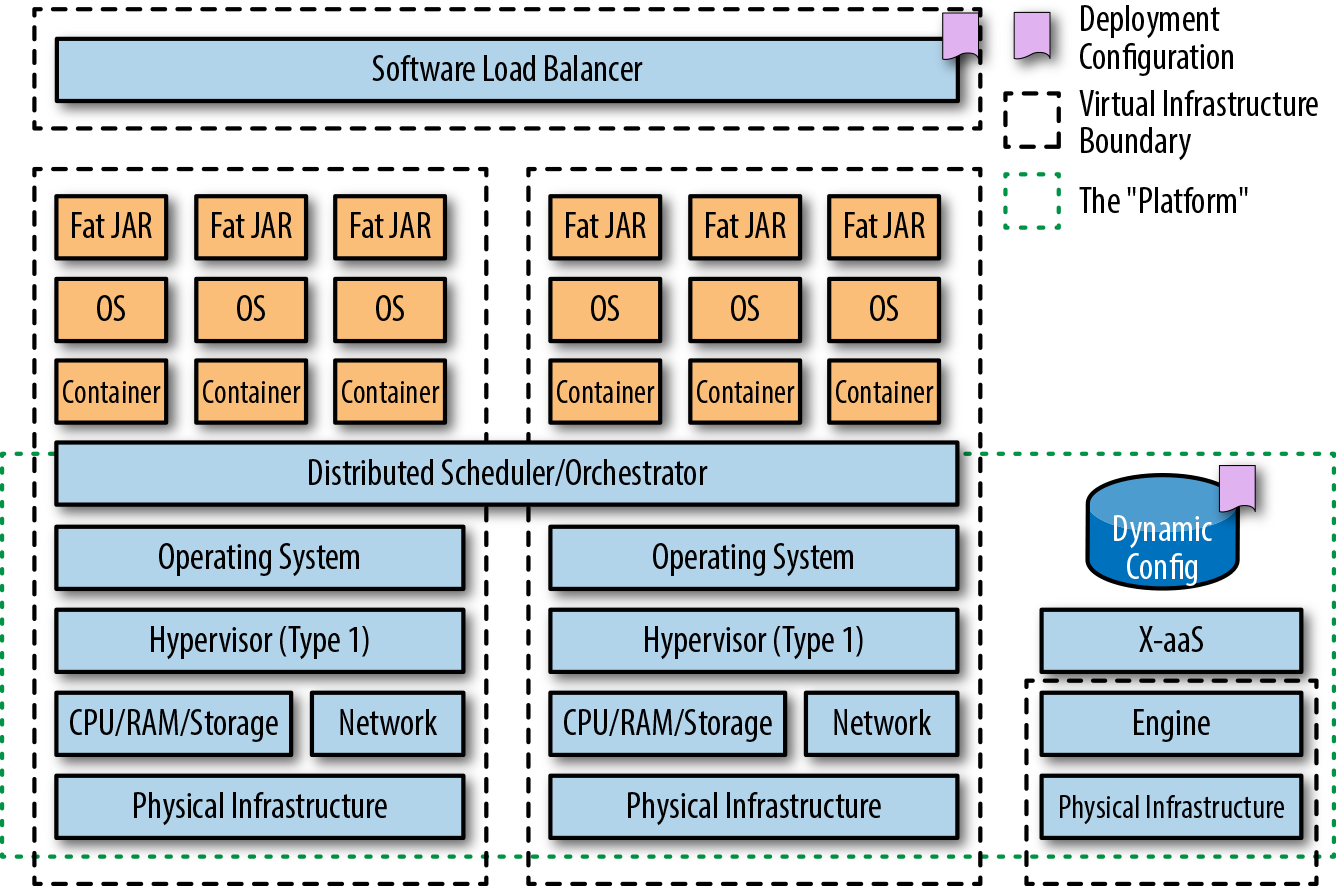

Because of the complexity and dynamic nature of running containers at scale, the resulting image is typically run on a container orchestration and scheduling platform like Kubernetes, Docker Swarm, or Amazon ECS, as shown in Figure 2-3.

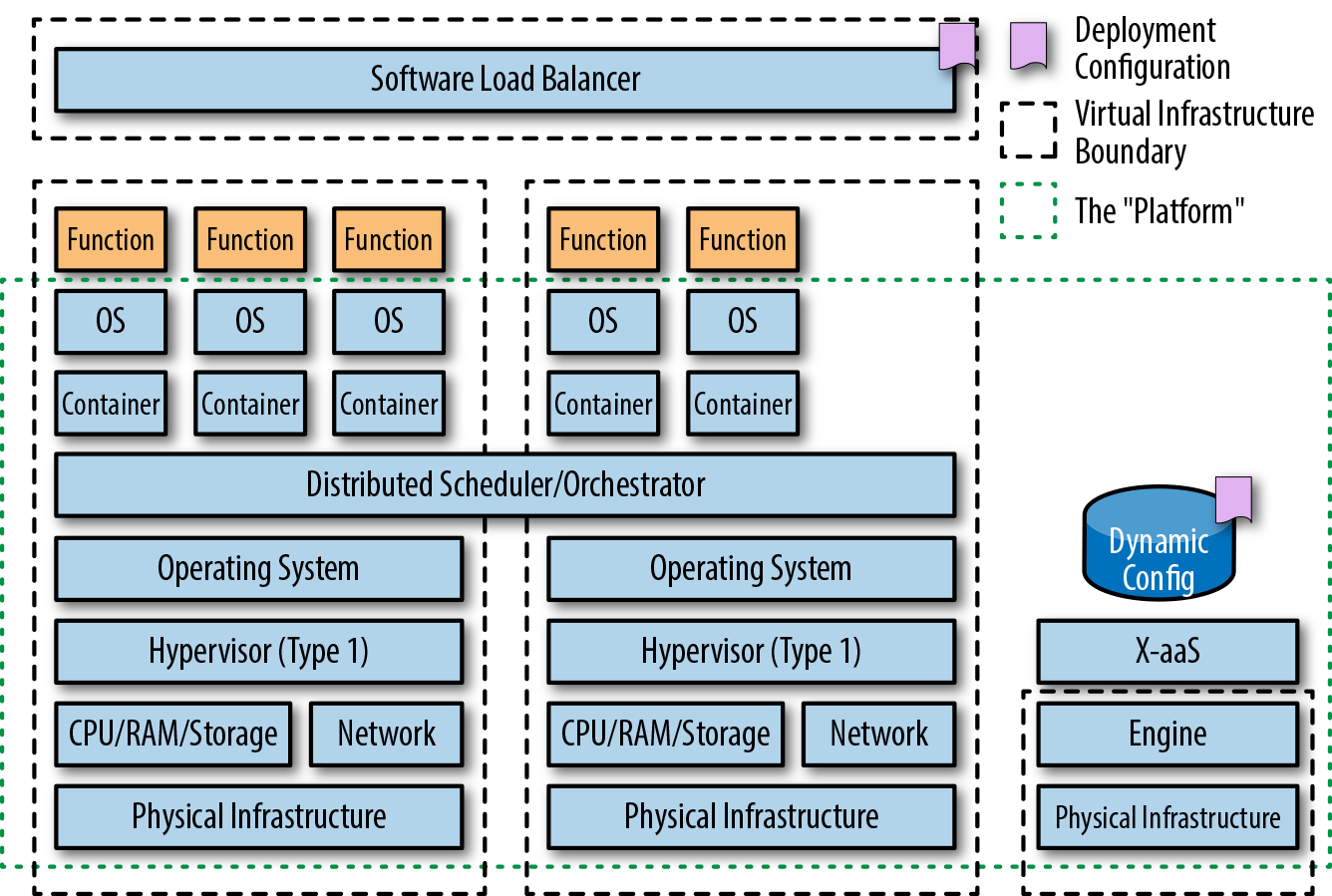

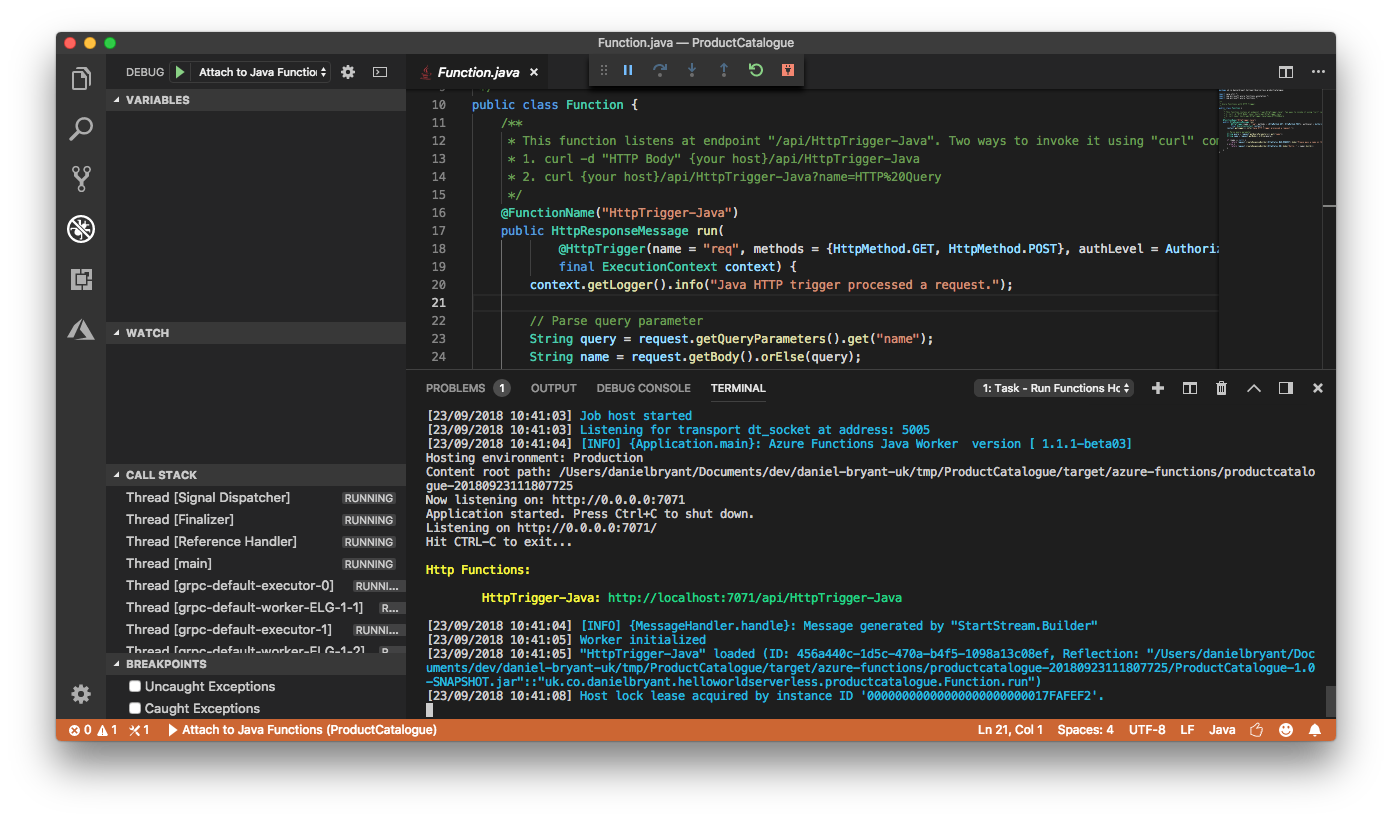

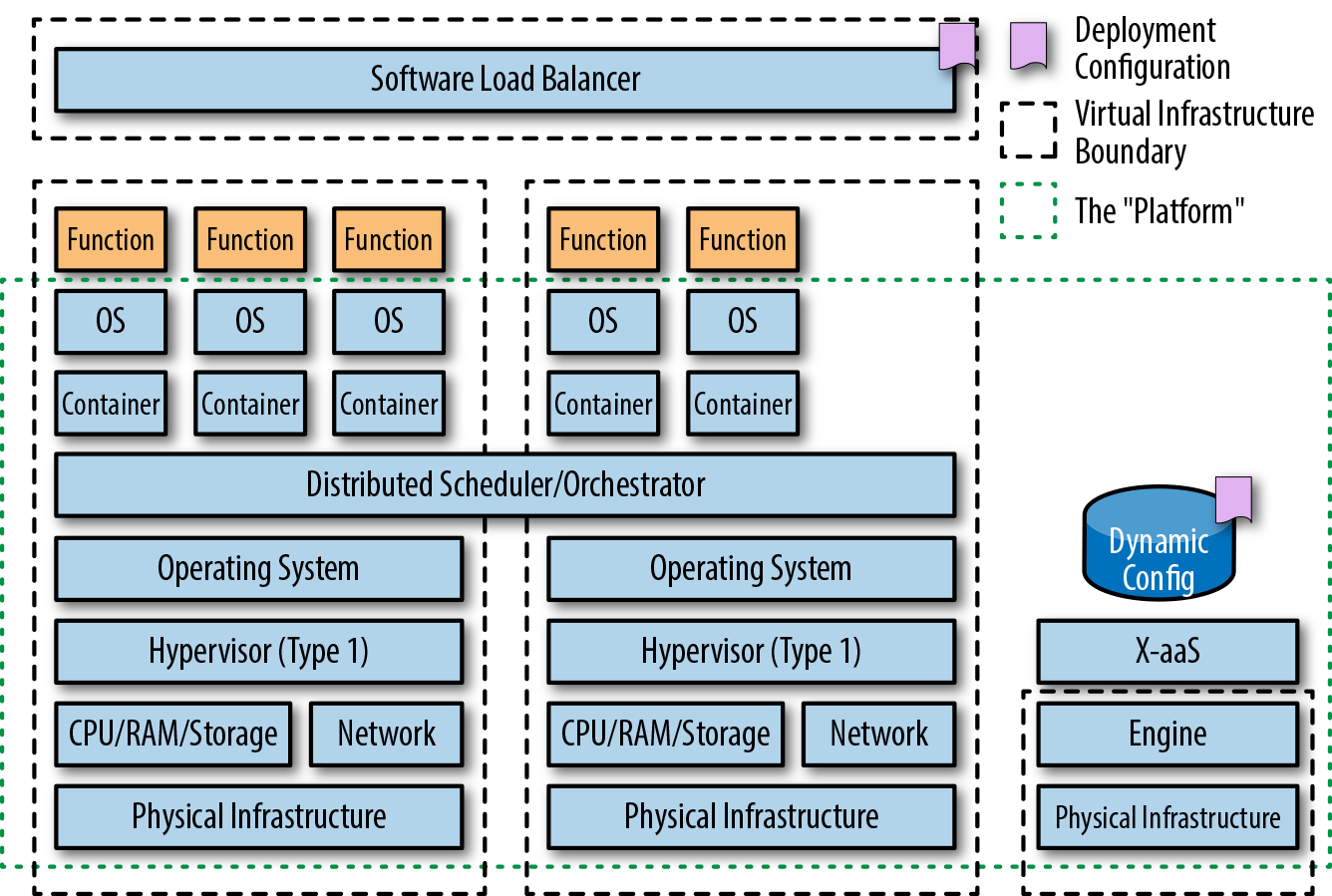

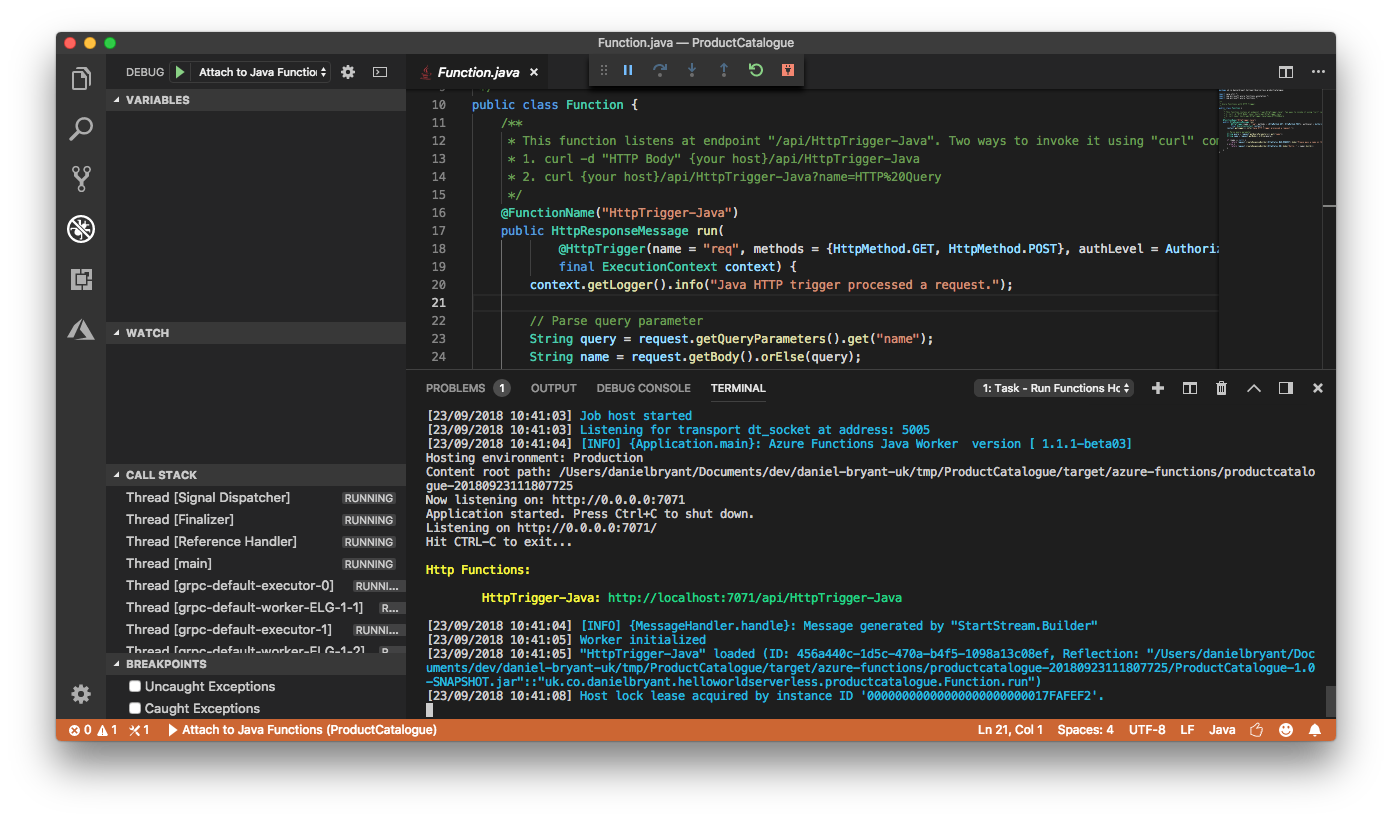

In November 2014, Amazon Web Services launched a preview of AWS Lambda at its global re:Invent conference, held annually in Las Vegas. Other vendors followed suit, and in 2016 Azure Functions and Google Cloud Functions were released in preview. As shown in Figure 2-4, these platforms let developers run code without provisioning or managing servers; this is commonly referred to as “serverless,” although FaaS is a more accurate term, as serverless offerings are actually a superset of FaaS that also includes backend as a service (BaaS) offerings like blob storage and NoSQL data stores. With FaaS, servers are still required to run the functions that make up an application, but the focus is typically on reducing the operational burden of running and maintaining the function’s underlying runtime and infrastructure. The development and billing model is also unique in that functions are triggered by external events (which can include a timer, a user request via an attached API gateway, or an object being uploaded into a blobstore), and you pay for only the time your function runs and the memory consumed.

Both AWS Lambda and Azure Functions offer support for Java, which brings you back to a familiar deployment requirement: a JAR or ZIP file containing Java code must be uploaded to the corresponding service.
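The pay-per-use billing model described above can be made concrete with a little arithmetic. The sketch below assumes that the charge is proportional to execution time multiplied by the memory configured for the function, measured in GB-seconds. The class name `FaasCostEstimate` and the price passed in are hypothetical, and real provider pricing differs in detail (for example, per-request charges and free tiers are ignored here).

```java
// Rough FaaS cost arithmetic; a sketch under the assumptions stated above.
class FaasCostEstimate {

    // Memory-time consumed by a batch of invocations, in GB-seconds.
    static double gbSeconds(long invocations, long avgDurationMillis, int memoryMb) {
        double totalSeconds = invocations * (avgDurationMillis / 1000.0);
        return totalSeconds * (memoryMb / 1024.0);
    }

    // pricePerGbSecond is supplied by the caller; no real price is assumed here.
    static double cost(long invocations, long avgDurationMillis, int memoryMb,
                       double pricePerGbSecond) {
        return gbSeconds(invocations, avgDurationMillis, memoryMb) * pricePerGbSecond;
    }
}
```

For example, 1,000 invocations averaging 500 ms each with 512 MB of memory consume 1,000 × 0.5 s × 0.5 GB = 250 GB-seconds. Halving either the duration or the memory halves the bill under this model, which is one reason function startup time and memory footprint are worth measuring in a build pipeline.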

Developers often ask whether the required platform packaging format of the application artifacts affects the implementation of continuous delivery. Our answer to this question, as with any truly interesting question, is, “It depends.” The answer is yes, because the packaging format clearly has an impact on the way an artifact is built, tested, and executed, in terms of both the moving parts involved and the technological implementation of a build pipeline (and potential integration with the target platform). However, the answer is also no, because the core concepts, principles, and assertions of continuously delivering a valid artifact remain unchanged.

Throughout this book, we demonstrate core concepts at an abstract level, but will also provide concrete examples, where appropriate, for each of the three most relevant packaging styles: fat JARs, container images, and FaaS functions.

Over the last 10 years, we have seen roles within software development evolve and change, with a particular focus on shared responsibility. We’ll now discuss the new approaches and philosophies that have emerged, and share our understanding of how this has impacted continuous delivery, and vice versa.

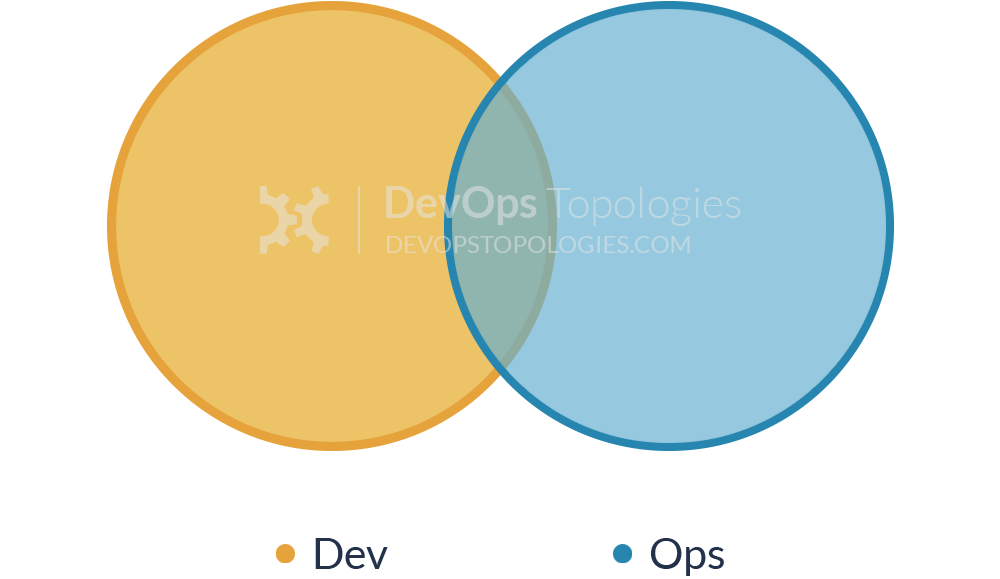

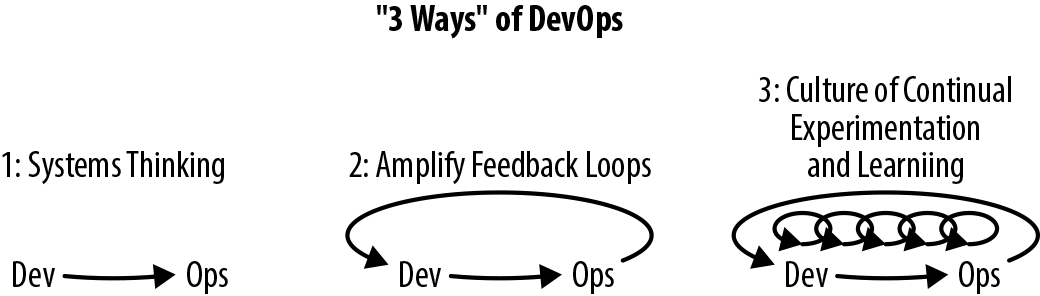

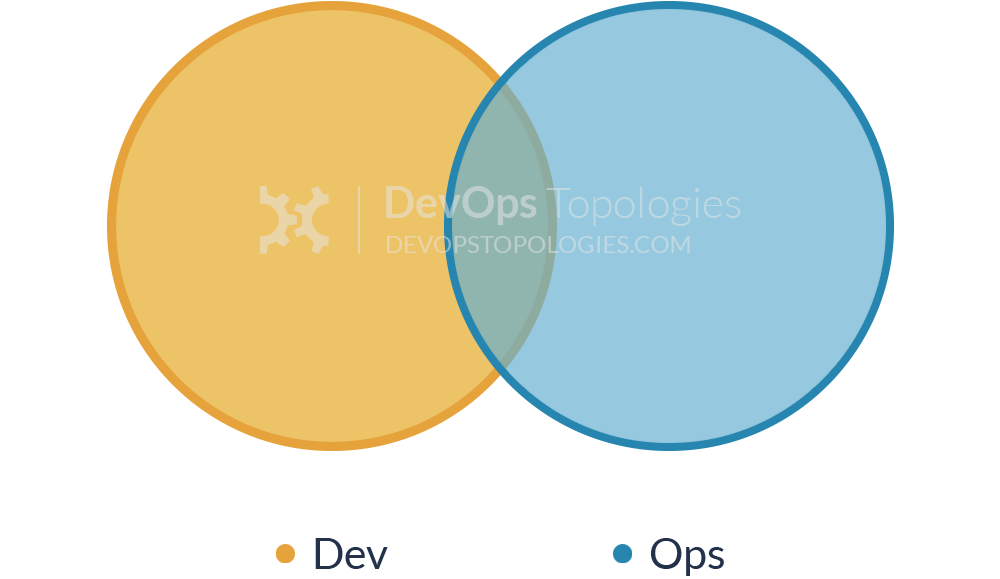

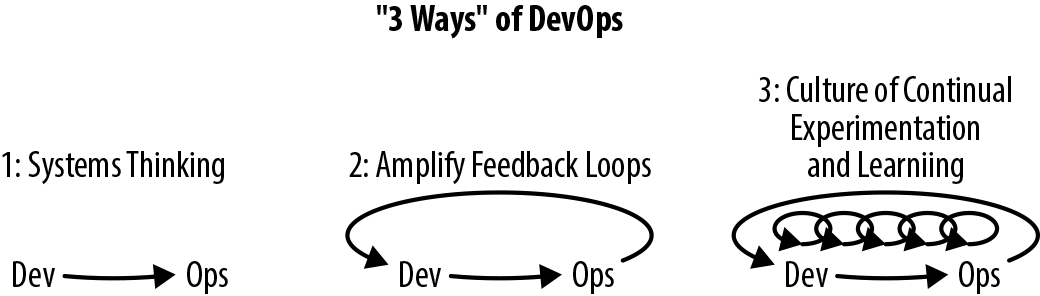

At the 2008 Agile Toronto conference, Andrew Shafer and Patrick Debois introduced the term DevOps in their talk on Agile infrastructure. Since 2009, the term has been steadily promoted and brought into more mainstream usage through a series of “devopsdays” events, which started in Belgium and have now spread globally. It can be argued that the compound of “development” and “operations” (DevOps) no longer truly captures the spirit of the associated movement or philosophy; potentially, the term “Business-Development-QA-Security-Operations” (BizDevQaSecOps) captures the components better, but this is far too much of a mouthful.

DevOps at its core is a software development and delivery philosophy that emphasizes communication and collaboration between product management, software development, and operations/sysadmins teams, and close alignment with business objectives. It supports this by automating and monitoring the process of software integration, testing, deployment, and infrastructure changes by establishing a culture (with associated practices) where building, testing, and releasing software can happen rapidly, frequently, and more reliably.

We are sure many of you reading will think this sounds a lot like the principles of continuous delivery—and you would be right! However, continuous delivery is just one tool in the DevOps toolbox. It is an essential and valuable tool, but to truly have success with designing, implementing, and operating a continuous delivery build pipeline, there typically needs to be a certain level of buy-in throughout the organization, and this is where the practices associated with DevOps shine.

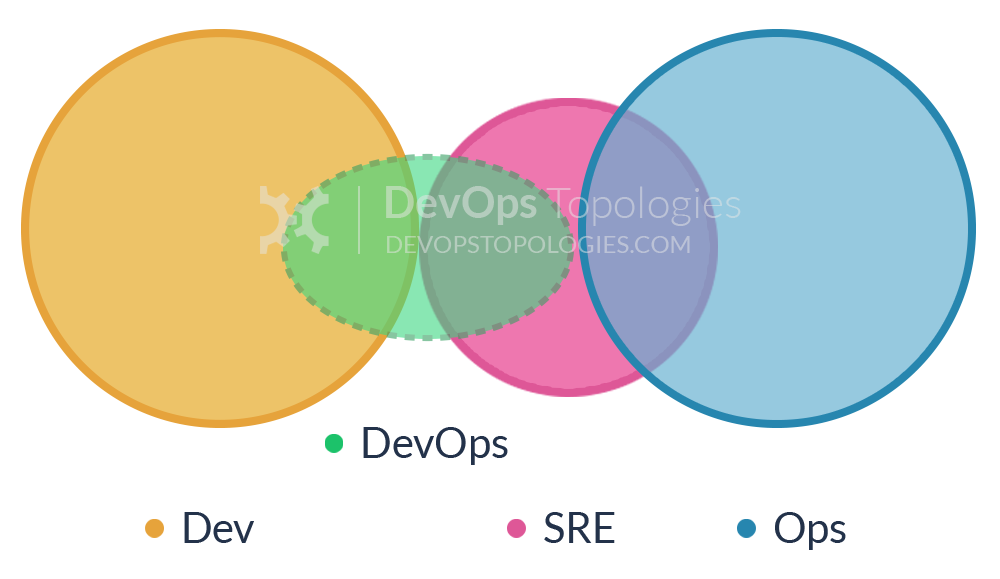

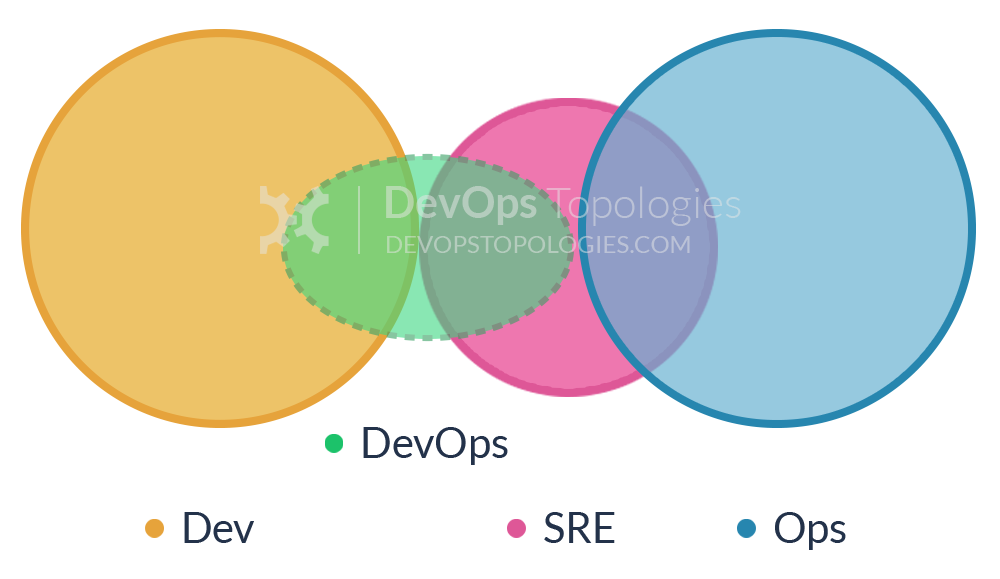

The term Site Reliability Engineering (SRE) was made popular by the book of the same name that was written by the SRE team at Google. In an interview with Niall Richard Murphy and Benjamin Treynor Sloss, both of whom worked within the engineering division at Google, they stated that fundamentally SRE is what happens when you ask a software engineer to design an operations function: “using engineers with software expertise, and banking on the fact that these engineers are inherently both predisposed to, and have the ability to, substitute automation for human labor.”

In general, an SRE team is responsible for availability, latency, performance, efficiency, change management, monitoring, emergency response, and capacity planning. This overlap with DevOps and pure operational concerns can be seen in Figure 2-6. However, a key characteristic of SRE teams at Google is that each engineer should be doing only a maximum of 50% operations work, or “toil” as they refer to it, and the rest of their time should be spent designing and building systems and the supporting tooling. At Google, this split between workloads is continually measured and reviewed regularly. SRE teams at Google are a scarce and valuable resource, and development teams typically have to create a case for SRE support on their projects, particularly in the early proof-of-concept stage with a product.

Google has institutionalized responses to providing SRE support, with processes like the Production Readiness Review (PRR). The PRR helps to avoid getting into a bad situation where the development teams are not incentivized to create production-ready software with a low operational load by examining both the system and its characteristics before taking it on, and also by having shared responsibility.

The Google SRE team has also talked extensively about the way it monitors systems. A classic approach to monitoring is to watch a value or a condition, and when the monitoring system observes something interesting, it sends an email. However, email is not the right approach for this; if you are requiring a human to read the email and decide whether something needs to be done, the Google SRE team believes you are making a mistake. Ideally, a human never interprets anything in the alerting domain. Interpretation is done by the software you write. You just get notified when you need to take action. Accordingly, the SRE book states that there are only three kinds of valid monitoring output:

Alerts: These indicate that a human must take action right now. Something is happening or about to happen, and a human needs to take action immediately to improve the situation.

Tickets: A human needs to take action but not immediately. You have maybe hours, typically days, but some human action is required.

Logging: No one ever needs to look at this information, but it is available for diagnostic or forensic purposes. The expectation is that no one reads it.

This information is important, because as developers, we must implement appropriate logging and metrics within our systems. This also must be tested as part of a continuous delivery pipeline.
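As one way of testing logging within a pipeline, the sketch below captures log records in memory so that an automated test can assert on them. It uses only the standard `java.util.logging` API; the classes `PaymentProcessor` and `CapturingHandler` are hypothetical names introduced for illustration, and a real project may well use a different logging framework.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.logging.Handler;
import java.util.logging.Level;
import java.util.logging.LogRecord;
import java.util.logging.Logger;

// Hypothetical component that logs a warning when it rejects a payment.
class PaymentProcessor {
    static final Logger LOG = Logger.getLogger("example.PaymentProcessor");

    boolean process(long amountInCents) {
        if (amountInCents <= 0) {
            LOG.log(Level.WARNING, "payment declined: non-positive amount {0}", amountInCents);
            return false;
        }
        return true;
    }
}

// Test helper: stores every published record so a test can inspect it.
class CapturingHandler extends Handler {
    final List<LogRecord> records = new ArrayList<>();

    @Override
    public void publish(LogRecord record) {
        records.add(record);
    }

    @Override
    public void flush() {
        // Nothing is buffered, so there is nothing to flush.
    }

    @Override
    public void close() {
        // No resources are held.
    }
}
```

A test would attach a `CapturingHandler` with `PaymentProcessor.LOG.addHandler(...)`, exercise the component, and then assert on the level and message of the captured records. The same idea extends to metrics: inject a recording implementation and assert on what was emitted.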

Release engineering is a relatively new and fast-growing discipline of software engineering that can be described as building and delivering software. Release engineers focus on building a continuous delivery pipeline and have expert understanding of source code management, compilers, automated build tools, package managers, installation, and configuration management. According to the Google SRE book, a release engineer’s skill set includes deep knowledge of multiple domains: development, configuration management, test integration, system administration, and customer support.

The Google SRE workbook builds on the required skillset, and presents the basic principles of release engineering as follows:

Reproducible builds

Automated builds

Automated tests

Automated deployments

Small deployments

We’re sure you can see the similarity between these principles and those of continuous delivery. Even the additional operator-focused principles discussed are understandable to developers: reducing operational load on engineers by removing manual and repetitive tasks; enforcing peer review and version control; and establishing consistent, repeatable, automated processes to minimize mistakes.

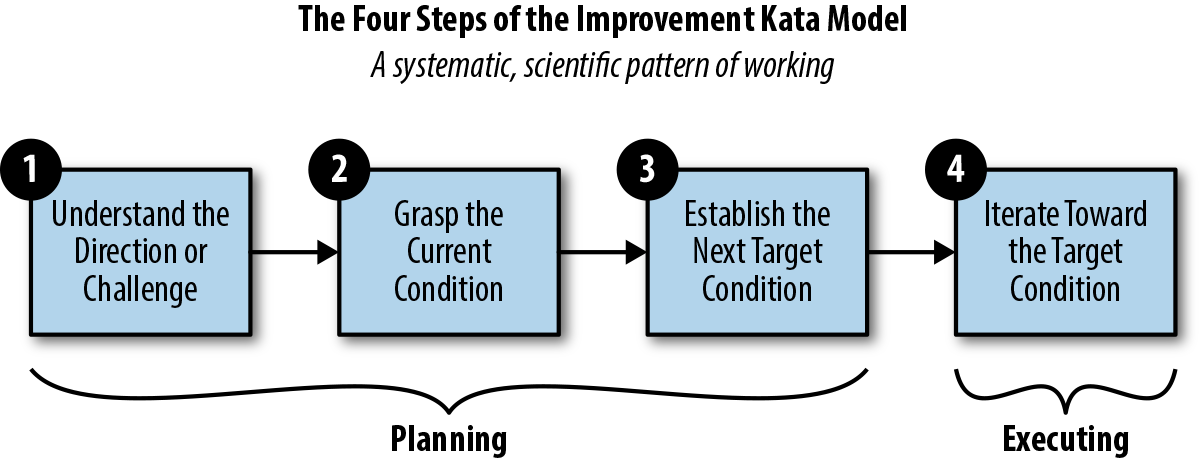

The success of release engineering within an organization is highly correlated with the successful implementation of a build pipeline, and typically consists of metrics focused on time taken for a code change to be deployed to production, the number of open bugs, the percentage of successful releases, and the percentage of releases that were abandoned or aborted after they began. Steve Smith has also talked extensively in his book Measuring Continuous Delivery (Leanpub) about the need to collect, analyze, and take action based on these metrics.
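Two of the metrics mentioned above, the time taken for a change to reach production and the percentage of successful releases, are straightforward to compute once release data is captured. The following is a minimal sketch; the `Release` and `ReleaseMetrics` classes and their fields are hypothetical, and it assumes that an abandoned or aborted release is recorded with no deployment time.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.List;

// Hypothetical record of one release attempt.
class Release {
    final Instant committedAt;
    final Instant deployedAt; // null if the release was abandoned or aborted

    Release(Instant committedAt, Instant deployedAt) {
        this.committedAt = committedAt;
        this.deployedAt = deployedAt;
    }

    boolean succeeded() {
        return deployedAt != null;
    }
}

class ReleaseMetrics {
    // Percentage of release attempts that reached production.
    static double successPercentage(List<Release> releases) {
        if (releases.isEmpty()) {
            return 0.0;
        }
        long succeeded = releases.stream().filter(Release::succeeded).count();
        return 100.0 * succeeded / releases.size();
    }

    // Mean time from commit to production, over successful releases only.
    static Duration meanLeadTime(List<Release> releases) {
        long totalSeconds = 0;
        int count = 0;
        for (Release release : releases) {
            if (release.succeeded()) {
                totalSeconds += Duration.between(release.committedAt, release.deployedAt).getSeconds();
                count++;
            }
        }
        return count == 0 ? Duration.ZERO : Duration.ofSeconds(totalSeconds / count);
    }
}
```

Tracking these values over time, rather than as one-off numbers, is what makes them useful for steering improvements to the pipeline.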

If you work within a team at a large enterprise company, the concepts of DevOps, SRE, and release engineering may appear alien at first glance. A common pushback from such teams is that these approaches work for only the “unicorn” companies like Google, Facebook, and Amazon, but in reality these organizations are blazing a trail that many of us are now following. For example, Google was the first to embrace containerization to facilitate rapid deployment and flexible orchestration of services; Facebook promoted the use of a monorepo to store code, and released associated open source build tooling that is now used extensively; and Amazon drove the acceptance of exposing internal service functionality only via well-defined APIs.

Although you should never “cargo cult,” or blindly copy only the things or results you can see, you can learn much from their approaches and processes. The key trends discussed in the previous sections also have a direct impact on the implementation of continuous delivery:

Increasing shared responsibility across development, QA, and operations (and arguably the entire organization) is essential for the successful adoption of continuous delivery.

The definition, capture, and analysis of software build, deployment, and operation metrics are vital to continuous delivery. They help the organization understand where it currently is and what success will look like, and assist in charting and monitoring the journey toward this.

Automation is essential for reliably building, testing, and deploying software.

In this chapter, you have explored the evolution of Java architecture, deployment platforms, and the associated organizational and role changes within IT:

Modern software architecture must adapt to meet the changing requirements from the business of speed and stability, and implementing an effective continuous delivery pipeline is a core part of delivering and verifying this.

Java deployment packages and platforms have changed over the years, from WARs and EARs deployed onto application servers, through to fat (runnable) JARs deployed in the cloud or PaaS, and ultimately to container images deployed into container orchestration or FaaS platforms. A continuous delivery pipeline must be built to support your specific platform.

The focus on shared responsibility over the last 10 years—through DevOps, SRE, and release engineering—has increased your responsibilities as a developer implementing continuous delivery. You must now implement continual testing and observability within the software you write.

In the next chapter, you will explore how to design and implement an effective software architecture that supports the implementation of continuous delivery.

Now that you have been introduced to the motivations for continuous delivery, you are ready to explore the technical foundations to enable this practice: software architecture. In this chapter, you will learn about the importance of designing systems that are loosely coupled and have high cohesion, and the associated technical and business costs if these guidelines aren’t followed. You will be introduced to the importance of designing effective APIs, how cloud computing has impacted software architecture, and why many Java developers are embracing service-oriented development. The key goal of this chapter is for you to understand how to create and cultivate an architecture that supports continuously delivering Java applications.

The Software Engineering Institute (SEI) defines software architecture as “the set of structures needed to reason about the system, which comprises software elements, relations among them, and properties of both.” Although this may at first glance appear quite abstract, the mention of structures, elements, and properties is core to what the majority of software engineers think of as architecture. Taking a slightly different perspective, it is quite possible that you can relate to Martin Fowler’s definition that software architecture consists of the “things that people perceive as hard to change.” Regardless of which definition you prefer, several properties of a software system are fundamental to creating fit-for-purpose architecture.

A loosely coupled system is one in which each of its components has, or makes use of, little or no knowledge of the definitions of other separate components. The obvious advantage is that components within a loosely coupled system can be replaced with alternative implementations that provide the same functionality. Loose coupling within programming is often interpreted as encapsulation—or information-hiding—versus nonencapsulation.

Within the Java programming language, this can be seen in primarily two places. First, method signatures utilize interface types versus concrete class types; the former makes extending applications much easier by loosely coupling and deferring the choice of concrete class until runtime. Second, JavaBeans or Plain Old Java Objects (POJOs) getters and setters (accessors and mutators), which enable hiding and controlling the access to internal state, give you much more control in making changes to the internals of the class.
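Both mechanisms can be seen together in a short sketch. All of the names here (`PriceSource`, `FixedPriceSource`, `Basket`) are hypothetical and chosen only for illustration: `Basket` depends on an interface type rather than a concrete class, and it hides its internal list behind a mutator and a derived accessor.

```java
import java.util.ArrayList;
import java.util.List;

// 1. Callers depend on this interface type, not on a concrete class.
interface PriceSource {
    long priceInCents(String productId);
}

// One possible implementation; Basket never needs to know it exists.
class FixedPriceSource implements PriceSource {
    @Override
    public long priceInCents(String productId) {
        return 499L;
    }
}

class Basket {
    private final PriceSource prices;                          // concrete class chosen at runtime
    private final List<String> productIds = new ArrayList<>(); // hidden internal state

    Basket(PriceSource prices) {
        this.prices = prices;
    }

    // 2. The mutator controls access to internal state and validates input.
    void add(String productId) {
        if (productId == null || productId.isEmpty()) {
            throw new IllegalArgumentException("productId must not be empty");
        }
        productIds.add(productId);
    }

    // The accessor exposes a derived value rather than the underlying list.
    long totalInCents() {
        long total = 0;
        for (String id : productIds) {
            total += prices.priceInCents(id);
        }
        return total;
    }
}
```

Because `Basket` only knows about `PriceSource`, the pricing implementation can be replaced (for example, with a remote lookup or a lambda in a test) without touching `Basket`, and the internal list could later become a map of quantities without affecting callers.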

At the application or service level, loose coupling is typically achieved through well-defined and flexible component interfaces; for example, using REST contracts (e.g., Pact or Spring Cloud Contract) with JSON over HTTP/S; using an interface definition language (IDL) such as gRPC, Thrift, or Avro; or messaging via RabbitMQ or Kafka. Examples of tight coupling include Java RMI, where domain objects are exchanged in the native Java serialization format.

Cohesion refers to the degree to which the elements within a component belong together, and can be thought of within programming as the measure of strength of the relationship between pieces of functionality within a given module or class. Modules with high cohesion tend to be preferable, because high cohesion is associated with several desirable traits of software, including robustness, reliability, reusability, and understandability. In contrast, low cohesion is associated with undesirable traits such as being difficult to maintain, test, reuse, or even understand. A good example in the Java language can be found within the java.util.concurrent package, which contains classes that cohesively offer functions related to concurrency. The classic Java counterexample is the java.util package itself, which contains functions that relate to concurrency, collections, and a scanner for reading text input; these functions are clearly not cohesive.

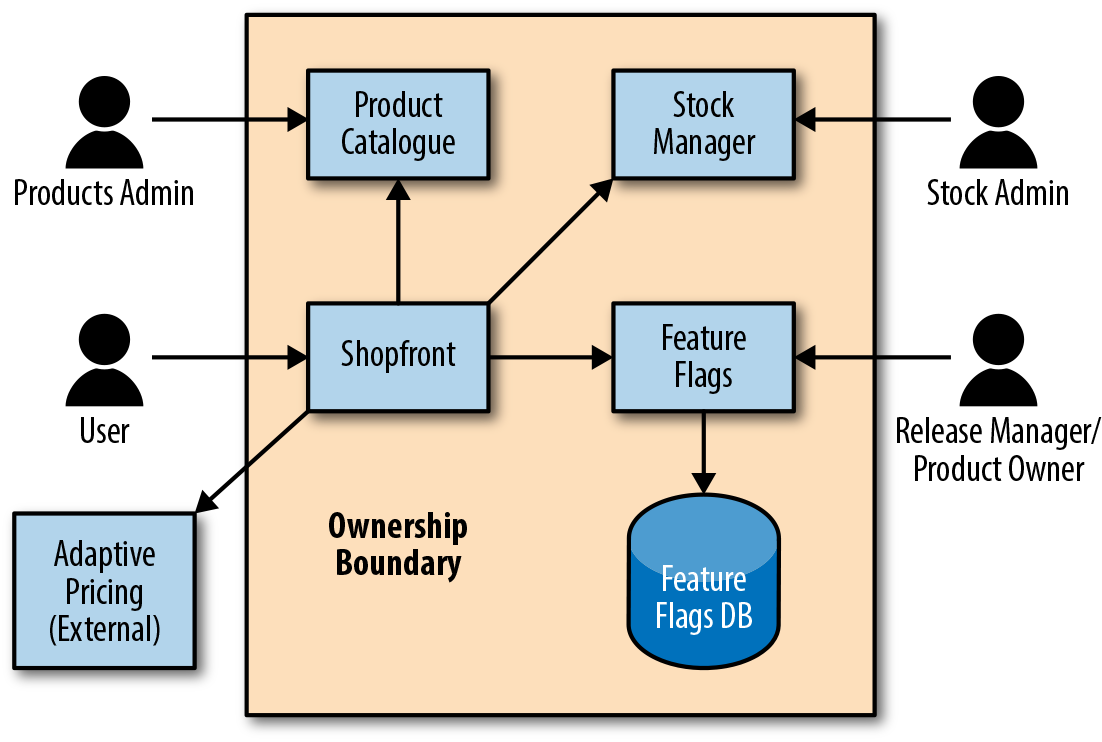

At the application and service level, the level of cohesion is often evident from the interface exposed. For example, if a User service exposed functionality related only to working with application Users, such as add new User, update contact email address, or promote the User’s customer loyalty tier, this would be highly cohesive. A counterexample would include a User service that also offered functionality to add items to an e-commerce shopping basket, or a payment API that also allowed stock information to be added to the system.
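The User service example can be expressed as Java interfaces. This is a minimal sketch with hypothetical names and signatures: `UserService` offers only User-related operations, and basket functionality sits behind a separate `BasketService` instead of being added to it. The in-memory implementation exists purely to make the example runnable.

```java
import java.util.HashMap;
import java.util.Map;

// Highly cohesive: every operation concerns application Users only.
interface UserService {
    void addUser(String userId, String email);
    void updateContactEmail(String userId, String email);
    String contactEmail(String userId);
}

// Basket functionality lives behind its own interface; adding addItem to
// UserService instead would lower that interface's cohesion.
interface BasketService {
    void addItem(String userId, String productId);
}

// Minimal in-memory implementation, for illustration only.
class InMemoryUserService implements UserService {
    private final Map<String, String> emailByUser = new HashMap<>();

    @Override
    public void addUser(String userId, String email) {
        emailByUser.put(userId, email);
    }

    @Override
    public void updateContactEmail(String userId, String email) {
        if (!emailByUser.containsKey(userId)) {
            throw new IllegalArgumentException("unknown user: " + userId);
        }
        emailByUser.put(userId, email);
    }

    @Override
    public String contactEmail(String userId) {
        return emailByUser.get(userId);
    }
}
```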

Applications with a loosely coupled and highly cohesive architecture are easier to continuously deliver. Therefore, you should strive to design and evolve systems with this in mind. A good architecture facilitates CD through the following mechanisms:

During the design phase of a new or evolving system, having clear and well-defined interfaces specified throughout the system allows for loose coupling and high cohesion. This, in turn, makes it easier to reason about the system. When given new requirements for a specific area of functionality, a highly cohesive system immediately directs you to where the work should take place, as an alternative to you having to trawl through the code of several multifunctional modules with low cohesion. Loose coupling allows you to change design details of an application (perhaps in order to reduce resource consumption) with a significant reduction in concern that you will impact other components within the overall system.

A highly cohesive service or module facilitates dependency management (and the associated testing), as the amount of functionality offered is limited within scope. Unit testing, mocking, and stubbing are also much easier in a loosely coupled system, as you can simply swap configurable synthetic test doubles in for the real thing when testing.
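
The idea of swapping a test double in for the real thing can be sketched as follows. This is an illustrative example rather than a prescribed design: `PaymentGateway`, `CheckoutService`, and `StubPaymentGateway` are hypothetical names, and the double is handwritten so that the example needs no mocking library.

```java
import java.util.ArrayList;
import java.util.List;

// The only thing CheckoutService knows about payments is this interface.
interface PaymentGateway {
    boolean charge(String customerId, long amountInCents);
}

final class CheckoutService {
    private final PaymentGateway gateway;

    // The collaborator is injected, so a test can pass in a double.
    CheckoutService(PaymentGateway gateway) {
        this.gateway = gateway;
    }

    String checkout(String customerId, long amountInCents) {
        if (amountInCents <= 0) {
            throw new IllegalArgumentException("Amount must be positive");
        }
        return gateway.charge(customerId, amountInCents) ? "CONFIRMED" : "DECLINED";
    }
}

// Configurable test double: returns a canned answer and records each call.
final class StubPaymentGateway implements PaymentGateway {
    private final boolean approve;
    private final List<String> chargedCustomers = new ArrayList<>();

    StubPaymentGateway(boolean approve) {
        this.approve = approve;
    }

    @Override
    public boolean charge(String customerId, long amountInCents) {
        chargedCustomers.add(customerId);
        return approve;
    }

    List<String> chargedCustomers() {
        return chargedCustomers;
    }
}
```

Because `CheckoutService` depends only on the interface, a test can construct it with `new StubPaymentGateway(false)` to exercise the declined path without any network access or real payment provider.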

Components that are highly cohesive lead to easy-to-understand test suites, as the context needed by developers in order to grok the tests and assertions is generally limited. Loose coupling of components allows external dependencies to be easily simulated or virtualized as required.

Systems that are loosely coupled and highly cohesive are easier to orchestrate when performing end-to-end tests. Highly coupled systems tend to share data sources, which can make the curation of realistic test data fiendishly difficult. When the inevitable issues do occur with end-to-end testing, a highly cohesive system will generally be much easier to diagnose and debug, as functionality is logically grouped in relation to theme.

Applications and services that are loosely coupled are generally easy to deploy in a continuous fashion, because each service has little or no knowledge of others. Highly coupled services typically have to be deployed in lockstep (or sequentially) because of the tight integration of functionality, which makes the process time-consuming and error prone. Highly cohesive services typically minimize the number of subsystems that have to be deployed in order to release new functionality, and this results in fewer artifacts being pushed down the pipeline, reducing resource consumption and coordination.

A cohesive service is easy to observe and comprehend. Imagine you have a service that performs five unrelated tasks, and suddenly your production monitoring tool alerts you to high CPU usage. It will be difficult to understand which functionality is causing the issue. A highly coupled application is often difficult to diagnose when things inevitably go wrong, as failures can cascade throughout the system, which, in turn, obfuscates the underlying causes.

With this foundational guidance in place, let’s now take a look at designing applications that provide business value by using modern architectural themes and practices.

If you have ever worked on a large-scale software system that is continually being driven by new functional requirements, you will most likely at some point have bumped into a limiting factor imposed by the system architecture. This is almost inevitable because of the increased focus in the business world on short-term gains versus long-term investment, and unforeseen changes in both the business and the development of the technological landscape. Software architecture also tends to evolve over time within many companies, with occasional refactoring sprints being allocated to teams in order to patch over major issues, or in the worst case, little attention being paid until a “big bang” rewrite is forced. Continuous delivery can be used to monitor and enforce certain architectural properties, but you have to understand the principles of how architecture relates to business value, and then design applications and processes accordingly.

If a business has no well-defined architecture to its systems, it is much harder to properly assess the cost of doing something in a well-defined time frame. The “mess” of your architecture creates excessive costs and missed opportunities. This can have serious competitive consequences, as the overhead incurred can increase dramatically, depending on the number of systems and overall complexity. Often developers and architects find it difficult to convince nontechnical management of these issues. Although empathy must be developed by both sides, one analogy that spans disciplines is building a house from a complete plan that reflects its intended usage, versus starting from an empty plot of land, adding rooms and floors as you go along, and watching how the building is being used. Just as it would be much easier to design and build a house with the intended usage in mind from day one, the same conclusion can be drawn for designing and building software.

The other hidden cost with a lack of architectural quality is that an inordinate amount of time is spent patching systems rather than innovating. If more time is spent playing software bug whack-a-mole than actually creating new features, you know you have a rotten architecture. Good software architectures encourage the production of bug-free software and guard against the negative consequences of a bug; well-architected systems “contain” the error, and through their structure provide mechanisms to overcome any problems caused with minimal cost. Good architecture also encourages greater innovation, as it is clearer what needs doing to support innovation on top of it. In addition, good architecture in itself can be a catalyst for innovation. You might see gaps or opportunities that would have otherwise been hidden.

Although the continual monitoring and analysis of architectural qualities in relation to business value is somewhat orthogonal to the implementation of continuous delivery, the creation of a build pipeline can be an effective way to introduce the capture of relevant metrics. Tools such as SonarQube can be woven into the build process and used to show cohesion, coupling, and complexity hotspots within the code and also report high-level complexity metrics such as cyclomatic complexity (the quantitative measure of the number of linearly independent paths through a program’s source code) and the design structure quality index (DSQI) (an architectural design metric used to evaluate a computer program’s design structure and the efficiency of its modules).
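
To make the cyclomatic complexity metric concrete, here is a small worked example. The method and class names are hypothetical, and the count uses the common rule of thumb of one plus the number of decision points; individual tools, SonarQube included, may differ in exactly which constructs they count.

```java
final class ComplexityExample {

    // Decision points are marked below. With four decision points, the
    // cyclomatic complexity by the "decision points + 1" rule is 5.
    static String classify(int[] values) {
        int negatives = 0;
        for (int value : values) {        // decision point 1: loop condition
            if (value < 0) {              // decision point 2
                negatives++;
            }
        }
        if (negatives == 0) {             // decision point 3
            return "clean";
        } else if (negatives < 3) {       // decision point 4
            return "warning";
        }
        return "critical";
    }
}
```

A complexity of 5 suggests that roughly five test cases are needed to cover each linearly independent path, which is why methods with high values tend to show up as hotspots in the pipeline's reports.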

Additional tooling, such as Adam Tornhill’s Code Maat, can also mine and analyze data from version-control systems, and show areas of the codebase that are regularly churning. This can demonstrate to the business that spending time and money on improving the architecture will facilitate understanding within these high-churn areas of the code, and, in turn, provide a high return on investment.

A typical mature or legacy architecture usually consists of a mix of technologies, often based on frameworks that were popular at the time of construction and the experiences of the engineers involved. This is where such a lack of structure (or an overly complex structure) in the architecture can greatly impact an organization; instead of having to consider just one technology when making changes, you end up with a forest of interconnecting technologies in which no one person or team is the subject-matter expert. Making any change is a risky undertaking with a lot of inherent cost. If you compare this to an organization that has been careful to keep its architectural complexity under control, its costs of change could be a fraction of that experienced by its “complex” competitor.

Some organizations have fine-tuned the management of their technical complexity and architecture to such a point that they are able, with complete confidence, to ship multiple updates to production each day. The end result is that the organization with a complex architecture cannot keep pace with its more technically lean rival; this can result in a “death by a thousand paper cuts” with many delayed and failed features and bug fixes.

A well-defined software architecture assists the management of complexity by showing and tracking the following:

The real interdependencies between systems

What system holds which data and when

The overall technical complexity in terms of operating systems, frameworks, libraries, and programming languages used

Many of these properties can be verified and monitored within a good continuous delivery pipeline.

All software applications expose APIs somewhere within the system, from the internal classes, packages, and modules, to the external systems interface. Since 2008, there has been an increase in APIs being seen as software products themselves: just look at the prevalence of SaaS-based API offerings like Google Maps, Stripe payments, and the Auth0 authentication API. From a programmer’s perspective, an API that is easy to work with must be highly cohesive and loosely coupled, and the same applies for integrating API-based services into the CD pipeline.

A good API is typically designed outside-in, as this is the best way to meet user requirements without overly exposing internal implementation details. One of the challenges with classical SOA was that APIs were often designed inside-out, which meant that the interface presented “leaked” details on the internal entities and functions provided. This broke the principle of encapsulating data, and, in turn, meant that services integrating with other services were highly coupled as they relied on internal implementation details.

Many teams attempt to define a service API up front, but in reality the design process will be iterative. A useful technique to enable this iterative approach is the BDD technique named The Three Amigos, where any requirement should be defined with at least one developer, one QA specialist, and one project stakeholder present. The typical outputs from this stage of the service design process include a series of BDD-style acceptance tests that assert component-level (single microservice) requirements, such as Cucumber Gherkin syntax acceptance test scripts; and an API specification, such as a Swagger or RAML file, which the test scripts will operate against.

We also recommend that each service has basic (happy path) performance test scripts created (for example, using Gatling or JMeter) and security tests (for example, using bdd-security). These service-level component tests can then be run continuously within the build pipeline, and will validate local microservice functional and nonfunctional requirements. Additional internal resource API endpoints can be added to each service, which can be used to manipulate the internal state for test purposes or to expose metrics.

The benefits to the CD process of exposing application or service functionality via a well-defined API include the following:

Easier automation of test fixture setup and teardown via internal resource endpoints (and this limits or removes the need to manipulate state via filesystem or data store access).

Easier automation of specification tests (e.g., REST Assured). Triggering functionality through a fragile UI is no longer required for every test.

API contracts can be validated automatically, potentially using techniques like consumer contracts and consumer-driven contracts (e.g., Pact-JVM).

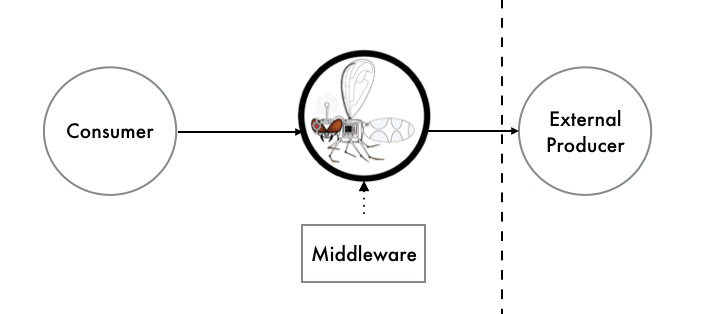

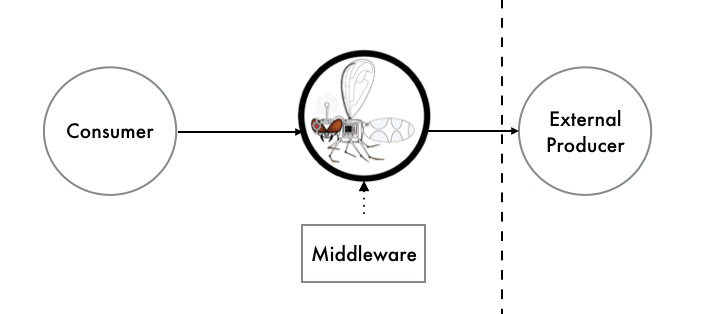

Dependent services that expose functionality through an API can be efficiently mocked (e.g., WireMock), stubbed (e.g., stubby4j), or virtualized (e.g., Hoverfly).

Easier access to metrics and monitoring data via internal resource endpoints (e.g., Codahale Metrics or Spring Boot Actuator).

The popularity of APIs has increased dramatically in recent years, and with good reason. Embracing good architectural practices around APIs makes implementing continuous delivery much easier.

In 2003, the deployment options for enterprise Java applications were relatively limited, consisting of mostly heavyweight application servers that attempted to provide cross-cutting platform concerns such as application life cycle management, configuration, logging, and transaction management. With the emergence of cloud computing from Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure; platform-as-a-service (PaaS) offerings, such as Heroku, Google App Engine, and Cloud Foundry; and container-as-a-service (CaaS) offerings like Kubernetes, Mesos, and Docker Swarm, there are now a lot more choices for Java developers. As the underlying deployment fabrics and platforms have changed, so too have the associated architectural best practices.

In early 2012, PaaS pioneer Heroku developed the Twelve-Factor App, a series of rules and guidance for helping developers build cloud-ready PaaS applications that:

Use declarative formats for setup automation, to minimize time and cost for new developers joining the project

Have a clean contract with the underlying operating system, offering maximum portability between execution environments

Are suitable for deployment on modern cloud platforms, minimizing the need for servers and systems administration

Minimize divergence between development and production, enabling continuous deployment for maximum agility

Can scale up without significant changes to tooling, architecture, or development practices

Let’s look briefly at each of the factors now, and see how they map to continuously deploying Java applications:

Each Java application (or service) should be tracked in a single, shared code repository. Deployment configuration files, such as scripts, Dockerfiles, and Jenkinsfiles, should be stored alongside the application code.

Dependencies are commonly managed within Java applications by using build tooling such as Maven or Gradle, and OS-level dependencies should be clearly specified in the associated virtual machine (VM) image manifest, Dockerfile, or serverless configuration files.

The Twelve-Factor App guidelines suggest that configuration data should be injected into an application via environment variables. In practice, many Java developers prefer to use configuration files to manage these variables, and there can be potential security issues with exposing secrets via environment variables, particularly when building VMs or containers that contain secrets.

Storing nonsensitive configuration data in a remote service like Spring Cloud Config (backed by Git or Consul) and secrets in a service like HashiCorp’s Vault can be a good compromise between the Twelve-Factor recommendations and current best practices.
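
As a rough sketch of the environment-variable approach that Twelve-Factor recommends, the class below looks up configuration by name with an optional default. `EnvConfig` and its methods are hypothetical names, not part of Spring Cloud Config or Vault, and the sketch is intended for nonsensitive values only. Taking the environment as a `Map` (an assumption made here for testability) lets the build pipeline supply values without touching the real process environment.

```java
import java.util.Map;

final class EnvConfig {
    private final Map<String, String> environment;

    EnvConfig(Map<String, String> environment) {
        this.environment = environment;
    }

    // Production code would typically start from the real process environment.
    static EnvConfig fromProcessEnvironment() {
        return new EnvConfig(System.getenv());
    }

    // Returns the configured value, or the default when it is absent or blank.
    String get(String name, String defaultValue) {
        String value = environment.get(name);
        return (value == null || value.trim().isEmpty()) ? defaultValue : value;
    }

    // Fails fast at startup when a mandatory setting is missing.
    String require(String name) {
        String value = environment.get(name);
        if (value == null || value.trim().isEmpty()) {
            throw new IllegalStateException("Missing required configuration: " + name);
        }
        return value;
    }
}
```

Failing fast in `require` means that a misconfigured deployment is rejected as soon as the application starts, rather than surfacing later as a confusing runtime error.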

Java developers are accustomed to treating data stores and middleware as attached resources (the Twelve-Factor term for backing services), and in-memory substitutes (e.g., HSQLDB, Apache Qpid, and Stubbed Cassandra) or service virtualization (e.g., Hoverfly and WireMock) can be used for in-process component testing within the build pipeline.

For a compiled language such as Java, the guideline to strictly separate the build, release, and run stages comes as no surprise (and with little choice of implementation). It is worth mentioning that the flexibility provided by VM and container technology means that separate artifacts can be used to build, test, and run the application, each configured as appropriate. For example, a deployment artifact can be created for build and test with a full OS, JDK, and diagnostic tools; and an artifact can be built for running an application in production with only a minimal OS and JRE.

However, we see this as an antipattern, as there should be only one artifact created that is the “single source of truth” that is pushed along the build pipeline. Using multiple artifacts can easily lead to configuration drift, in which the development and production artifacts have a subtly different configuration that can cause issues and make debugging challenging.

Building and running a Java application as a series of microservices can be made easier by using VM images, container images, or serverless functions.

Java developers are used to exposing application services via ports (e.g., running an application on Jetty or Apache Tomcat).
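
Port binding can be illustrated without a full servlet container by using the HTTP server that ships with the JDK. This is a minimal sketch, not a production setup: the `PortBoundService` class, the `/health` path, and the default of 8080 are hypothetical, and reading the port from a `PORT` environment variable is an assumption that mirrors a common PaaS convention.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.nio.charset.StandardCharsets;
import java.util.Map;

final class PortBoundService {

    // Reads the port from the PORT variable (an assumed convention),
    // falling back to the supplied default when it is not set.
    static int resolvePort(Map<String, String> environment, int defaultPort) {
        String raw = environment.get("PORT");
        return (raw == null || raw.trim().isEmpty())
                ? defaultPort
                : Integer.parseInt(raw.trim());
    }

    // Binds a self-contained HTTP server to the given port.
    static HttpServer start(int port) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress(port), 0);
        server.createContext("/health", exchange -> {
            byte[] body = "OK".getBytes(StandardCharsets.UTF_8);
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(body);
            }
        });
        server.start();
        return server;
    }

    public static void main(String[] args) throws IOException {
        HttpServer server = start(resolvePort(System.getenv(), 8080));
        System.out.println("Listening on port " + server.getAddress().getPort());
    }
}
```

The service exports HTTP by binding to a port itself, so the platform only has to route traffic to that port; an embedded Jetty or Tomcat follows the same pattern with a richer feature set.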