Enabling Security in a Continuous Delivery Pipeline

Copyright © 2017 Laura Bell, Rich Smith, Michael Brunton-Spall, Jim Bird. All rights reserved.

Printed in the United States of America.

Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472.

O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (http://oreilly.com/safari). For more information, contact our corporate/institutional sales department: 800-998-9938 or corporate@oreilly.com.

See http://oreilly.com/catalog/errata.csp?isbn=9781491938843 for release details.

The O’Reilly logo is a registered trademark of O’Reilly Media, Inc. Agile Application Security, the cover image, and related trade dress are trademarks of O’Reilly Media, Inc.

While the publisher and the authors have used good faith efforts to ensure that the information and instructions contained in this work are accurate, the publisher and the authors disclaim all responsibility for errors or omissions, including without limitation responsibility for damages resulting from the use of or reliance on this work. Use of the information and instructions contained in this work is at your own risk. If any code samples or other technology this work contains or describes is subject to open source licenses or the intellectual property rights of others, it is your responsibility to ensure that your use thereof complies with such licenses and/or rights.

978-1-491-93884-3

[LSI]

Software is eating the world. Developers are the new kingmakers. The internet of things means there will be a computer in every light bulb.

These statements indicate the growing dominance of software development, to the point where most people in the world will never be further than a meter away from a computer, and we will expect much of our life to interact with computer-assisted objects and environments all the time.

But this world comes with some dangers. In the old world of computing, security was often only considered in earnest for banking and government systems. But the rise of ubiquitous computing means a rise in the value that can be realized from the abuse of systems, which increases incentives for misuse, which in turn increases the risks systems face.

Agile software development techniques are becoming rapidly adopted in most organizations. By being responsive to change and dramatically lowering the cost of development, they provide a standard that we expect will continue to grow until the majority of software is built in an Agile manner.

However, security and Agile have not historically been great bedfellows.

Security professionals have had their hands full with the aforementioned government, ecommerce, and banking systems, trying to architect, test, and secure those systems, all in the face of a constantly evolving set of threats. Furthermore, what is often seen as the most fun and exciting work in security, the things that get covered on the tech blogs and the nightly news, is done by teams of professional hackers focusing on vulnerability research, exploit development, and stunt hacks.

You can probably name a few recent branded vulnerabilities like Heartbleed, Logjam, or Shellshock (or heaven forbid even recognize their logos), or recognize the teams of researchers who have achieved a jailbreak on the latest iPhones and Android devices. But when was the last time a new defensive measure or methodology had a cool, media-friendly name, or you picked up the name of a defender and builder?

Security professionals are lagging behind in their understanding and experience of Agile development, and that creates a gap that is scary for our industry.

Equally, Agile teams have rejected and thrown off the shackles of the past. No more detailed requirements specifications, no more system modeling, no more traditional Waterfall handoffs and control gates. The problem with this is that Agile teams have thrown the baby out with the bathwater. Those practices, while sometimes slow and inflexible, have demonstrated value over the years. They were done for a reason, and Agile teams in rejecting them can easily forget and dismiss their value.

This means that Agile teams rarely consider security as much as they should. Some of the Agile practices make a system more secure, but that is often a beneficial side effect rather than the purpose. Very few Agile teams have an understanding of the threats that face their system; they don’t understand the risks they are taking; they don’t track or do anything to control those risks; and they often have a poor understanding of who it even is that is attacking their creations.

We don’t know if you are an Agile team leader, or a developer who is curious or wants to know more about security. Maybe you are a security practitioner who has just found an entire development team you didn’t know existed and you want to know more.

This book was written with three main audiences in mind.

You live, breathe, and do Agile. You know your Scrum from your Kaizen, your test-driven-development from your feedback loop. Whether you are a Scrum Master, developer, tester, Agile coach, Product Owner, or customer proxy, you understand the Agile practices and values.

This book should help you understand what security is about, what threats exist, and the language that security practitioners use to describe what is going on. We’ll help you understand how we model threats, measure risks, build software with security in mind, install software securely, and understand the operational security issues that come with running a service.

Whether you are a risk manager, an information assurance specialist, or a security operations analyst, you understand security. You are probably careful how you use online services, you think about threats and risks and mitigations all of the time, and you may have even found new vulnerabilities and exploited them yourself.

This book should help you understand how software is actually developed in Agile teams, and what on earth those teams are talking about when they talk about sprints and stories. You will learn to see the patterns in the chaos, and that should help you interact with and influence the team. This book should show you where you can intervene or contribute that is most valuable to an Agile team and has the best effect.

From risk to sprints, you know it all. Whether you are a tool builder who is trying to help teams do security well, or a consultant who advises teams, this book is also for you. The main thing to get out of this book is to understand what the authors consider to be the growing measure of good practice. This book should help you be aware of others in your field, and of the ideas and thoughts and concepts that we are seeing pop up in organizations dealing with this problem. It should give you a good, broad understanding of the field and an idea for what to research or learn about next.

You could read this book from beginning to end, one chapter at a time. In fact, we recommend it; we worked hard on this book, and we hope that every chapter will contain something valuable to all readers, even if it’s just our dry wit and amusing anecdotes!

But actually, we think that some chapters are more useful to some of you than others.

We roughly divided this book into three parts.

Agile and security are very broad fields, and we don’t know what you already know. Especially if you come from one field, you might not have much knowledge or experience of the other.

If you are an Agile expert, we recommend first reading Chapter 1, Getting Started with Security, to be sure that you have a baseline understanding of security.

If you aren’t doing Agile yet, or you are just starting down that road, then before we move on to the introduction to Agile, we recommend that you read Chapter 2, Agile Enablers. This represents what we think the basic practices are and what we intend to build upon.

Chapter 3, Welcome to the Agile Revolution, covers the history of Agile software development and the different ways that it can be done. This is mostly of interest to security experts or people who don’t have that experience yet.

We then recommend that everybody starts with Chapter 4, Working with Your Existing Agile Life Cycle.

This chapter attempts to tie together the security practices that we consider, with the actual Agile development life cycle, and explains how to combine the two together.

Chapters 5 through 7 give an understanding of requirements and vulnerability management and risk management, which are more general practices that underpin the product management and general planning side of development.

Chapters 8 through 13 cover the various parts of a secure software development life cycle, from threat assessment, code review, testing, and operational security.

Chapter 14 looks at regulatory compliance and how it relates to security, and how to implement compliance in an Agile or DevOps environment.

Chapter 15 covers the cultural aspects of security. Yes, you could implement every one of the practices in this book, and the previous chapters will show you a variety of tools you can use to make those changes stick. Yet Agile is all about people, and the same is true of effective security programs: security is really cultural change at heart, and this chapter will provide examples that we have found to be effective in the real world.

For a company to change how it does security, it takes mutual support and respect between security professionals and developers for them to work closely together to build secure products. That can’t be ingrained through a set of tools or practices, but requires a change throughout the organization.

Finally, Chapter 16 looks at what Agile security means to different people, and summarizes what each of us has learned about what works and what doesn’t in trying to make teams Agile and secure.

The following typographical conventions are used in this book:

Indicates new terms, URLs, email addresses, filenames, and file extensions.

Constant widthUsed for program listings, as well as within paragraphs to refer to program elements such as variable or function names, databases, data types, environment variables, statements, and keywords. If you see the ↲ at the end of a code line, this indicates the line continues on the next line.

Constant width boldShows commands or other text that should be typed literally by the user.

Constant width italicShows text that should be replaced with user-supplied values or by values determined by context.

This element signifies a tip or suggestion.

This element signifies a general note.

This element indicates a warning or caution.

Safari (formerly Safari Books Online) is a membership-based training and reference platform for enterprise, government, educators, and individuals.

Members have access to thousands of books, training videos, Learning Paths, interactive tutorials, and curated playlists from over 250 publishers, including O’Reilly Media, Harvard Business Review, Prentice Hall Professional, Addison-Wesley Professional, Microsoft Press, Sams, Que, Peachpit Press, Adobe, Focal Press, Cisco Press, John Wiley & Sons, Syngress, Morgan Kaufmann, IBM Redbooks, Packt, Adobe Press, FT Press, Apress, Manning, New Riders, McGraw-Hill, Jones & Bartlett, and Course Technology, among others.

For more information, please visit http://oreilly.com/safari.

Please address comments and questions concerning this book to the publisher:

We have a web page for this book, where we list errata, examples, and any additional information. You can access this page at http://bit.ly/agile-application-security.

To comment or ask technical questions about this book, send email to bookquestions@oreilly.com.

For more information about our books, courses, conferences, and news, see our website at http://www.oreilly.com.

Find us on Facebook: http://facebook.com/oreilly

Follow us on Twitter: http://twitter.com/oreillymedia

Watch us on YouTube: http://www.youtube.com/oreillymedia

First, thank you to our wonderful editors: Courtney Allen, Virgnia Wilson, and Nan Barber. We couldn’t have got this done without all of you and the rest of the team at O’Reilly.

We also want to thank our technical reviewers for their patience and helpful insights: Ben Allen, Geoff Kratz, Pete McBreen, Kelly Shortridge, and Nenad Stojanovski.

And finally, thank you to our friends and families with putting up with yet another crazy project.

A deceptively simple question to ask, rather more complex to answer.

When first starting out in the world of security, it can be difficult to understand or to even to know what to look at first. The successful hacks you will read about in the news paint a picture of Neo-like adversaries who have a seemingly infinite range of options open to them with which to craft their highly complex attacks. When thought about like this, security can feel like a possibly unwinnable field that almost defies reason.

While it is true that security is a complex and ever-changing field, it is also true that there are some relatively simple first principles that, once understood, will be the undercurrent to all subsequent security knowledge you acquire. Approach security as a journey, not a destination—one that starts with a small number of fundamentals upon which you will continue to build iteratively, relating new developments back to familiar concepts.

With this in mind, and regardless of our backgrounds, it is important that we all understand some key security principles before we begin. We will also take a look at the ways in which security has traditionally been approached, and why that approach is no longer as effective as it once was now that Agile is becoming more ubiquitous.

Security for development teams tends to focus on information security (as compared to physical security like doors and walls, or personnel security like vetting procedures). Information security looks at security practices and procedures during the inception of a project, during the implementation of a system, and on through the operation of the system.

While we will be talking mostly about information security in this book, for the sake of brevity we will just use security to refer to it. If another part of the security discipline is being referred to, such as physical security, then it will be called out explicitly.

As engineers we often discuss the technology choices of our systems and their environment. Security forces us to expand past the technology. Security can perhaps best be thought of as the overlap between that technology and the people who interact with it day-to-day as shown in Figure 1-1.

So what can this picture tell us? It can be simply viewed as an illustration that security is more than just about the technology and must, in its very definition, also include people.

People don’t need technology to do bad things or take advantage of each other; such activities happened well before computers entered our lives; and we tend to just refer to this as crime. People have evolved for millennia to lie, cheat, and steal items of value to further themselves and their community. When people start interacting with technology, however, this becomes a potent combination of motivations, objectives, and opportunity. In these situations, certain motivated groups of people will use the concerted circumvention of technology to further some very human end goal, and it is this activity that security is tasked with preventing.

However, it should be noted that technological improvements have widened the fraternity of people who can commit such crime, whether that be by providing greater levels of instruction, or widening the reach of motivated criminals to cover worldwide services. With the internet, worldwide telecommunication, and other advances, you are much more easily attacked now than you could have been before, and for the perpetrators there is a far lower risk of getting caught. The internet and related technologies made the world a much smaller place and in doing so have made the asymmetries even starker—the costs have fallen, the paybacks increased, and the chance of being caught drastically reduced. In this new world, geographical distance to the richest targets has essentially been reduced to zero for attackers, while at the same time there is still the old established legal system of treaties and process needed for cross-jurisdictional investigations and extraditions—this aside from the varying definitions of what constitutes a computer crime in different regions. Technology and the internet also help shield perpetrators from identification: no longer do you need to be inside a bank to steal its money—you can be half a world away.

Circumvention is used deliberately to avoid any implicit moral judgments whenever insecurity is discussed.

The more technologies we have in our lives, the more opportunities we have to both use and benefit from them. The flip side of this is that society’s increasing reliance on technology creates greater opportunities, incentives, and benefits for its misuse. The greater our reliance on technology, the greater our need for that technology to be stable, safe, and available. When this stability and security comes into question, our businesses and communities suffer. The same picture can also help to illustrate this interdependence between the uptake of technology by society and the need for security in order to maintain its stability and safety, as shown in Figure 1-2.

As technology becomes ever more present in the fabric of society, the approaches taken to thinking about its security become increasingly important.

A fundamental shortcoming of classical approaches to information security is failing to recognize that people are just as important as technology. This is an area we hope to provide a fresh perspective to in this book.

There was a time that security was the exclusive worry of government and geeks. Now, with the internet being an integral part of people’s lives the world over, securing the technologies that underlie it is something that is pertinent to a larger part of society than ever before.

If you use technology, security matters because a failure in security can directly harm you and your communities.

If you build technology, you are now the champion of keeping it stable and secure so that we can improve our business and society on top of its foundation. No longer is security an area you can mentally outsource:

You are responsible for considering the security of the technology.

You provide for people to embrace security in their everyday lives.

Failure to accept this responsibility means the technology you build will be fundamentally flawed and fail in one of its primary functions.

Security, or securing software more specifically, is about minimizing risk. It is the field in which we attempt to reduce the likelihood that our people, systems, and data will be used in a way that would cause financial or physical harm, or damage to our organization’s reputation.

Most security practices are about preventing bad things from happening to your information or systems. But risk calculation isn’t about stopping things; it’s about understanding what could happen, and how, so that you can prioritize your improvements.

To calculate risk you need to know what things are likely to happen to your organization and your system, how likely they are to happen, and the cost of them happening. This allows you to work out how much money and effort to spend on protecting against that stuff.

Vulnerability is about exposure. Outside the security field, vulnerability is how we talk about being open to harm either physically or emotionally. In a systems and security sense, we use the word vulnerability to describe any flaw in a system, component, or process that would allow our data, systems, or people to be misused, exposed, or harmed in some way.

You may hear phrases such as, “a new vulnerability has been discovered in…software” or perhaps, “The attacker exploited a vulnerability in…” as you start to read about this area in more depth. In these examples, the vulnerability was a flaw in an application’s construction, configuration or business logic that allowed an attacker to do something outside the scope of what was authorized or intended. The exploitation of the vulnerability is the actual act of exercising the flaw itself, or the way in which the problem is taken advantage of.

Likelihood is the way we measure how easy (or likely) it is that an attacker would be able (and motivated) to exploit a vulnerability.

Likelihood is a very subjective measurement and has to take into account many different factors. In a simple risk calculation you may see this simplified down to a number, but for clarity, here are the types of things we should consider when calculating likelihood:

Do you need to be a deep technical specialist, or will a passing high-level knowledge be enough?

Does the exploit work reliably? What about over the different versions, platforms, and architectures where the vulnerability may be found? The more reliable the exploit, the less likely attacks are to cause a side effect that is noticeable: this makes it a safer exploit for an attacker to use, as it can reduce the chances of detection.

Does the exploitation of the vulnerability lend itself well to be automated? This can help its inclusion in things like exploit kits or self-propagating code (worms), which means you are more likely to be subject to indiscriminate exploit attempts.

Do you need to be have the ability to communicate directly with a particular system on a network or have a particular set of user privileges? Do you need to have already compromised one or more other parts of the system to make use of the vulnerability?

Would the end result of exploiting this vulnerability be enough to motivate someone into spending the time?

Impact is the effect that exploiting a vulnerability or having your systems misused or breached in someway would have on you, your customers, and your organization.

For the majority of businesses, we measure impact in terms of money lost. This could be actual theft of funds (via credit card theft or fraud, for example), or it could be cost of recovering from a breach. Cost of recovery often includes not just addressing the vulnerability, but also:

Responding to the incident itself

Repairing other systems or data that may have been damaged or destroyed

Implementing new approaches to help increase the security of the system in an effort to prevent a repeat

Increased audit, insurance, and compliance costs

Marketing costs and public relations

Increased operating costs or less favorable rates from suppliers

At the more serious end of the scale are those of us who build control systems or applications that have direct impact on human lives. In those circumstances, measuring the impact of a security issue is much more personal, and may include death and injury to individuals or groups of people.

In a world where we are rapidly moving toward automation of driving and many physical roles in society, computerized medical devices, and computers in every device in our homes, the impact of security vulnerabilities will move toward an issue of protecting people rather than just money or reputation.

We are used to the idea that we can remove the imperfections from our systems. Bugs can be squashed and inefficiencies removed by clever design. In fact, we can perfect the majority of things we build and control ourselves.

Risk is a little different.

Risk is about external influences to our systems, organizations, and people. These influences are mostly outside of our control (economists often refer to such things as externalities). They could be groups or individuals with their own motivations and plans, vendors and suppliers with their own approaches and constraints, or environmental factors.

As we don’t control risk or its causes, we can never fully avoid it. It would be an impossible and fruitless task to attempt to do so. Instead we must focus on understanding our risks, minimizing them (and their impacts) where we can, and maintaining watch across our domain for new evolving or emerging risks.

The acceptance of risk is also perfectly OK, as long as it is mindful and the risk being accepted is understood. Blindly accepting risks, however, is a recipe for disaster and is something you should be on the lookout for, as it can occur all too easily.

While we are on this mission to minimize and mitigate risks, we also have be aware that we live in an environment of limits and finite resources. Whether we like it or not, there are only 24 hours in a day, and we all need to sleep somewhere in that period. Our organizations all have budgets and a limited number of people and resources to throw at problems.

As a result, there are few organizations that can actually address every risk they face. Most will only be able to mitigate or reduce a small number. Once our resources are spent, we can only make a list of the risks that remain, do our best to monitor the situation, and understand the consequence of not addressing them.

The smaller our organizations are, the more acute this can be. Remember though, even the smallest teams with the smallest budget can do something. Being small or resource poor is not an excuse for doing nothing, but an opportunity to do the best you can to secure your systems, using existing technologies and skills in creative ways.

Choosing which risks we address can be hard and isn’t a perfect science. Throughout this book, we should give you tools and ideas for understanding and measuring your risks more accurately so that you can make the best use of however much time and however many resources you have.

So who or what are we protecting against?

While we would all love to believe that it would take a comic-book caliber super villain to attack us or our applications, we need to face a few truths.

There is a range of individuals and groups that could or would attempt to exploit vulnerabilities in your applications or processes. Each has their own story, motivations, and resources; and we need to know how these things come together to put our organizations at risk.

In recent years we have been caught up with using the word cyber to describe any attacker that approaches via our connected technologies or over the internet. This has led to the belief that there is only one kind of attacker and that they probably come from a nation-state actor somewhere “far away.”

Cyber is a term that, despite sounding like it originated from a William Gibson novel, actually emanated from the US military.

The military considered there to be four theaters of war where countries can legally fight: land, sea, air, and space. When the internet started being used by nations to interfere and interact with each other, they recognized that there was a new theater of war: cyber, and from there the name has stuck.

Once the government started writing cyber strategies and talking about cyber crime, it was inevitable that large vendors would follow the nomenclature, and from there we have arrived at a place where it is commonplace to hear about the various cybers and their associated threats. Unfortunately cyber has become the all-encompassing marketing term used to both describe threats and brand solutions. This commercialization and over-application has had the effect of diluting the term and making it something that has become an object of derision for many in the security community. In particular, those who are more technically and/or offensively focused often use “cyber” as mockery.

While some of us (including more than one of your authors) might struggle with using the word “cyber,” it is undeniable that it is a term well understood by nonsecurity and nontechnical people; alternate terms such as “information security,” “infosec,” “commsec,” or “digital security” are all far more opaque to many. With this in mind, if using “cyber” helps you get bigger and more points across to those who are less familiar with the security space or whose roles are more focused on PR and marketing, then so be it. When in more technical conversations or interacting with people more toward the hacker end of the infosec spectrum, be aware that using the term may devalue your message or render it mute altogether.

That’s simply not the case.

There are many types of attackers out there, the young, impetuous, and restless; automated scripts and roaming search engines looking for targets; disgruntled ex-employees; organized crime; and the politically active. The range of attackers is much more complex than our “cyber” wording would allow us to believe.

When you are trying to examine the attackers that might be interested in your organization you must consider both your organization’s systems and its people. When you do this, there are three different aspects of the attacker’s profile or persona worth considering:

Their motivations and objectives (why they want to attack and what they hope to gain)

Their resources (what they can do, what they can use to do it, and the time they have available to invest)

Their access (what they can get hold of, into, or information from)

When we try to understand which attacker profiles our organization should protect against, how likely each is to attack, and what impact it would have, we have to look at all of these attributes in the context of our organization, its values, practices, and operations.

We will cover this subject in much more detail as we learn to create security personas and integrate them into our requirements capture and testing regimes.

We have a right (and an expectation) that when we go about our days and interact with technologies and systems, we will not come to harm while our data remains intact and private.

Security is how we achieve this and we get it by upholding a set of values.

Before anything else, stop for a second and understand what it is that you are trying to secure, what are the crown jewels in your world, and where are they kept? It is surprising how many people embark on their security adventure without this understanding, and as such waste a lot of time and money trying to protect the wrong things.

Every field has its traditional acronyms, and confidentiality, integrity, and availability (CIA) is a treasure in traditional security fields. It is used to describe and remember the three tenets of secure systems—the features that we strive to protect.

There are very few systems now that allow all people to do all things. We separate our application users into roles and responsibilities. We want to ensure that only those people we can trust, who have authenticated and been authorized to act, can access and interact with our data.

Maintaining this control is the essence of confidentiality.

Our systems and applications are built around data. We store it, process it, and share it in dozens of ways as part of normal operations.

When taking responsibility for this data, we do so under the assumption that we will keep it in a controlled state. That from the moment we are entrusted with data, we understand and can control the ways in which is is modified (who can change it, when it can be changed, and in what ways). Maintaining data integrity is not about keeping data preserved and unchanged; it is about having it subjected to a controlled and predictable set of actions such that we understand and preserve its current state.

A system that can’t be accessed or used in the way that it was intended is no use to anyone. Our businesses and lives rely on our ability to interact with and access data and systems on a nearly continuous basis.

The not-so-much witty, as cynical among us will say that to secure a system well, we should power it down, encase it in concrete, and drop it to the bottom of the ocean. This, however, wouldn’t really help us maintain the requirement for availability.

Security requires that we keep our data, systems, and people safe without getting in the way of interacting with them.

This means finding a balance between the controls (or measures) we take to restrict access or protect information and the functionality we expose to our users as part of our application. As we will discuss, it is this balance that provides a big challenge in our information sharing and always-connected society.

Nonrepudiation is a proof of both the origin and integrity of data; or put another way, is the assurance that an activity cannot be denied as having been taken. Non-repudiation is the counterpart to auditability, which taken together provide the foundation upon which every activity in our system—every change and every task—should be traceable to an individual or an authorized action.

This mechanism of linking activity to a usage narrative or an individual’s behavior gives us the ability to tell the story of our data. We can recreate and step through the changes and accesses made, and build a timeline. This timeline can help us identify suspicious activity, investigate security incidents or misuse, and even debug functional flaws in our systems.

One of the main drivers for security programs in many organizations is compliance with legal or industry-specific regulatory frameworks. These dictate how our businesses are required to operate, and how we need to design, build, and operate our systems.

Love them or hate them, regulations have been—and continue to be—the catalyst for security change, and often provide us with the management buy-in and support that we need to drive security initiatives and changes. Compliance “musts” can sometimes be the only way to convince people to do some of the tough but necessary things required for security and privacy.

Something to be eyes-wide-open about from the outset is that compliance and regulation are related but distinct from security. You can be compliant and insecure, as well as secure and noncompliant. In an ideal world, you will be both compliant and secure; however, it is worth noting that one does not necessarily ensure the other.

These concepts are so important, in fact, that Chapter 14, Compliance is devoted to them.

When learning about something, anti-patterns can be just as useful as patterns; understanding what something is not helps you take steps toward understanding what it is.

What follows below is an (almost certainly incomplete) collection of common misconceptions that people have about security. When you start looking for them, you will see them exhibited with an often worrying frequency, not only in the tech industry, but also in the mass media and your workplace in general.

Security is not black and white; however, the concept of something being secure or insecure is one that is chased and stated countless times per day. For any sufficiently complex system, a statement of (in)security in an absolute sense is incredibly difficult, if not impossible, to make, as it all depends on context.

The goal of a secure system is to ensure the appropriate level of control is put in place to mitigate the threats you see are relevant to that system’s use case. If the use case changes, so do the controls that are needed to render that system secure. Likewise, if the threats the system faces change, the controls must evolve to take into account the changes.

Security from who? Security against what? and How could that mitigation be circumvented? are all questions that should be on the tip of your tongue when considering the security of any system.

No organization or system will ever be “secure.” There is no merit badge to earn, and nobody will come and tell you that your security work is done and you can go home now. Security is a culture, a lifestyle choice if you prefer, and a continuous approach to understanding and reacting to the world around us. This world and its influences on us are always changing, and so must we.

It is much more useful to think of security as being a vector to follow rather than a point to be reached. Vectors have a size and a direction, and you should think about the direction you want to go in pursuit of security and how fast you’d like to chase it. However, it’s a path you will continue to walk forever.

The classic security focus is summed up by the old joke about two men out hunting when they stumble upon a lion. The first man stops to do up his shoes, and the second turns to him and cries, “Are you crazy? You can’t outrun a lion.” The first man replies, “I don’t have to outrun the lion. I just have to outrun you.”

Your systems will be secure if the majority of attackers would get more benefit by attacking somebody else. For most organizations, actually affecting the attackers’ motivations or behavior is impossible, so your best defense is to make it so difficult or expensive to attack you that it’s not worth it.

Security tools, threats, and approaches are always evolving. Just look at how software development has changed in the last five years. Think about how many new languages and libraries have been released and how many conferences and papers have been presented with new ideas. Security is no different. Both the offensive (attacker) security worlds and the defensive are continually updating their approaches and developing new techniques. As quickly as the attackers discover a new vulnerability and weaponize it, the defenders spring to action and develop mitigations and patches. It’s a field where you can’t stop learning or trying, much like software development.

Despite the rush of vendors and specialists available to bring security to your organization and systems, the real truth is you don’t need anything special to get started with security. Very few of the best security specialists have a certificate or special status that says they have passed a test; they just live and breathe their subject every day. Doing security is about attitude, culture, and approach. Don’t wait for the perfect time, tool, or training course to get started. Just do something.

As you progress on your security journey, you will inevitably be confronted by vendors who want to sell you all kinds of solutions that will do the security for you. While there are many tools that can make meaningful contributions to your overall security, don’t fall into the trap of adding to an ever-growing pile. Complexity is the enemy of security, and more things almost always means more complexity (even if those things are security things). A rule of thumb followed by one of the authors of this book is not to add a new solution unless it lets you decommission two, which may be something to keep in mind.

If you picked up this book, there is a good chance that you are either a developer who wants to know more about this security thing, or you are a security geek who feels you should learn some more about this Agile thing you hear all the developers rabbiting on about. (If you don’t fall into either of these groups, then we’ll assume you have your own, damn fine reasons for reading an Agile security book and just leave it at that.)

One of the main motivations for writing this book was that, despite the need for developers and security practitioners to deeply understand each other’s rationale, motivations, and goals, the reality we have observed over the years is that such understanding (and dare we say empathy) is rarely the case. What’s more, things often go beyond merely just not quite understanding each other and step into the realm of actively trying to minimize interactions; or worse, actively undermining the efforts of their counterparts.

It is our hope that some of the perspectives and experience captured in this book will help remove some of the misunderstandings, and potentially even distrust, that exist between developers and security practitioners, and shine a light into what the others do and why.

Much of this book is written to help security catch up in an Agile world. We have worked in organizations that are successfully delivering with Agile methodologies, but we also work with companies that are still getting to grips with Agile and DevOps.

Many of the security practices in this book will work regardless of whether or not you are doing Agile development, and no matter how effectively your organization has embraced Agile. However, there are some important precursor behaviors and practices which enable teams to get maximum value from Agile development, as well as from the security techniques that we outline in this book.

All these enabling techniques, tools, and patterns are common in high-functioning, Agile organizations. In this chapter, we will give an overview of each technique and how it builds on the others to enhance Agile development and delivery. You’ll find more information on these subjects further on in the book.

The first, and probably the most important of these enabling techniques from a development perspective, is the concept of a build pipeline. A build pipeline is an automated, reliable, and repeatable way of producing consistent deployable artifacts.

The key feature of a build pipeline is that whenever the source code is changed, it is possible to initiate a build process that is reliably and repeatably consistent.

Some companies invest in repeatable builds to the point where the same build on different machines at different times will produce exactly the same binary output, but many organizations simply instantiate a build machine or build machines that can be used reliably.

The reason this is important is because it gives confidence to the team that all code changes have integrity. We know what it is like to work without build pipelines, where developers create release builds on their own desktops, and mistakes such as forgetting to integrate a coworker’s changes frequently cause regression bugs in the system.

If you want to move faster and deploy more often, you must be absolutely confident that you are building the entire project correctly every time.

The build pipeline also acts as a single consistent location for gateway reviews. In many pre-Agile companies, gateway reviews are conducted by installing the software and manually testing it. Once you have a build pipeline, it becomes much easier to automate those processes, using computers to do the checking for you.

Another benefit of build pipelines is that you can go back in time and check out older versions of the product and build them reliably, meaning that you can test a specific version of the system that might exhibit known issues and check patches against it.

Automating and standardizing your build pipeline reduces the risk and cost of making changes to the system, including security patches and upgrades. This means that you can close your window of exposure to vulnerabilities much faster.

However, just because you can compile and build the system fast and repeatedly doesn’t mean it will work reliably. For that you will need to use automated testing.

Testing is an important part of most software quality assurance programs. It is also a high source of costs, delays, and wastes in many traditional programs.

Test scripts take time to design and write, and more time to run against your systems. Many organizations need days or weeks of testing time, and more time to fix and re-test the bugs that are found in testing before they can finally release.

When testing takes weeks of work, it is impossible to release code into test any faster than the tests take to execute. This means that code changes tend to get batched up, making the releases bigger and more complicated, which necessitates even more testing, which necessitates longer test times, in a negative spiral.

However, much of the testing done by typical user acceptance testers following checklists or scripts adds little value and can be (and should be) automated.

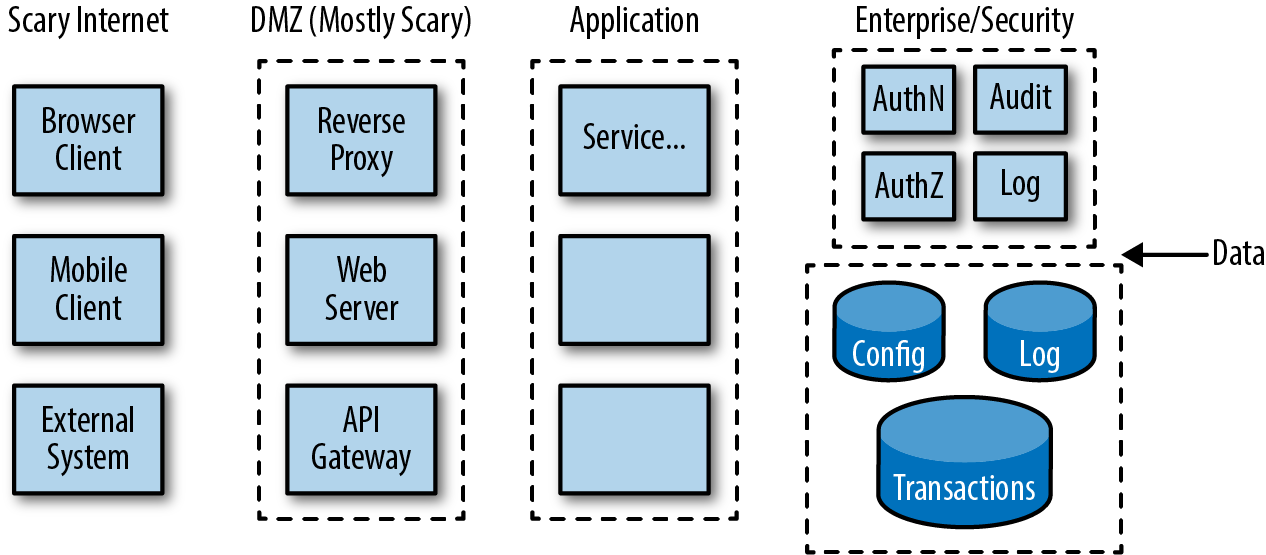

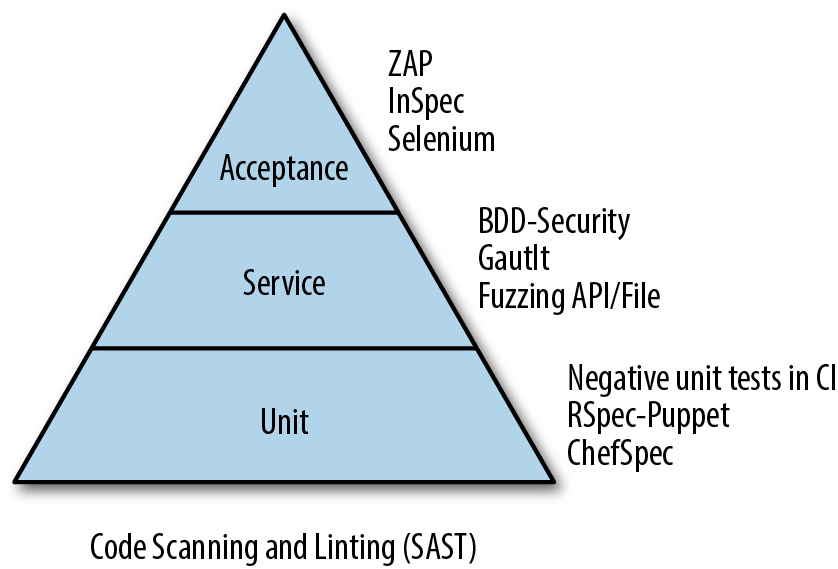

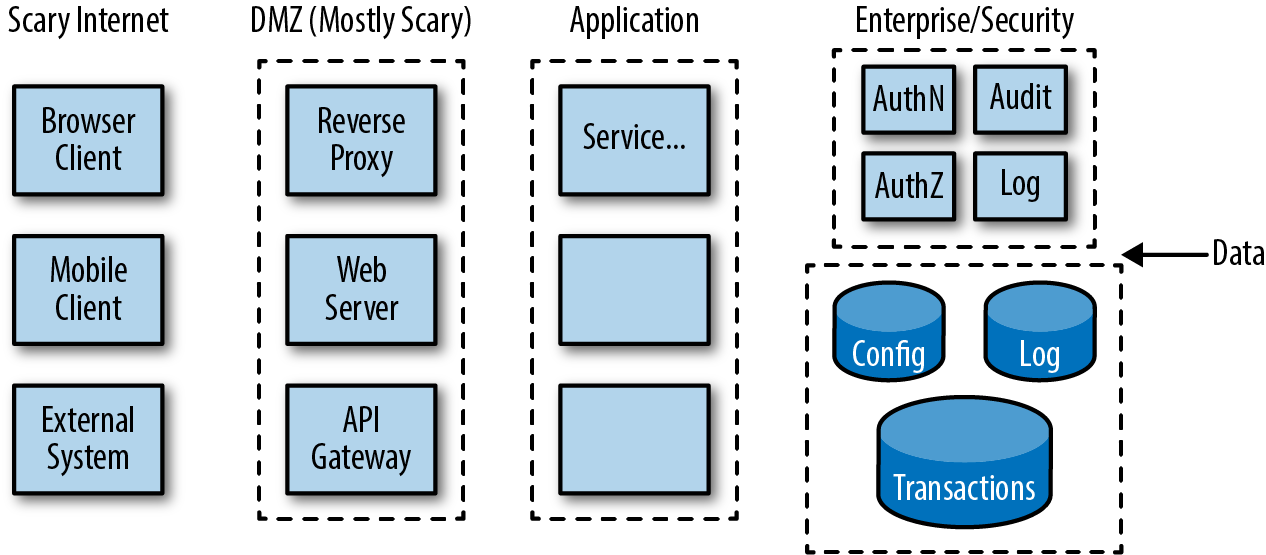

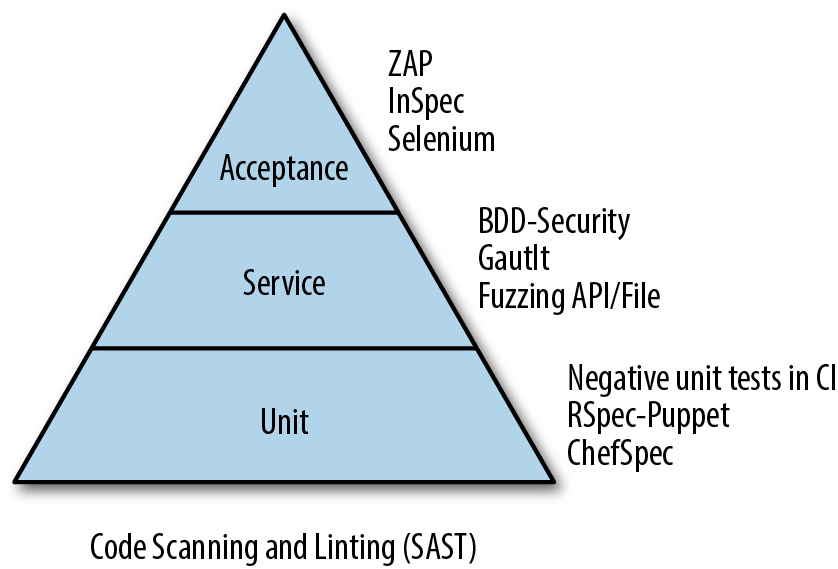

Automated testing generally follows the test pyramid, where most tests are low level, cheap, fast to execute, simple to automate, and easy to change. This reduces the team’s dependence on end-to-end acceptance tests, which are expensive to set up, slow to run, hard to automate, and even harder to maintain.

As we’ll see in Chapter 11, modern development teams can take advantage of a variety of automated testing tools and techniques: unit testing, test-driven development (TDD), behavior-driven design (BDD), integration testing through service virtualization, and full-on user acceptance testing. Automated test frameworks, many of which are open source, allow your organization to capture directly into code the rules for how the system should behave.

A typical system might execute tens of thousands of automated unit and functional tests in a matter of seconds, and perform more complex integration and acceptance testing in only a few minutes.

Each type of test achieves a different level of confidence:

These tests use white-box testing techniques to ensure that code modules work as the author intended. They require no running system, and generally test the inputs to expected outputs or expected side effects. Good unit tests will also test boundary conditions and known error conditions.

These tests test whole suites of functions. They often don’t require a running system, but they do require some setup, tying together many of the code modules. In a system comprised of many subsystems, they check a single subsystem, ensuring each subsystem works as expected. These tests try to model real-life case scenarios using known test data and the common actions that system users will perform.

These tests are the start of standing up an entire system. They check that all the connecting configuration works, and that the subsystems communicate with each other properly. In many organizations, integration testing is performed only on internal services, so external systems are stubbed with fake versions that behave in consistent ways. This makes testing more repeatable.

This is the standing up of a fully integrated system, with external integrations and accounts. These tests ensure that the whole system runs as expected, and that core functions or features work correctly from end-to-end.

Automation gets harder the further down the table you go, but here are the benefits of testing that way:

Automated tests (especially unit tests) can be executed often without needing user interfaces or slow network calls. They can also be parallelized, so that thousands of tests can be run in mere seconds.

Manual testers, even when following checklists, may miss a test or perform tests incorrectly or inconsistently. Automated tests always perform the same actions in the same way each time. This means that variability is dramatically reduced in testing, far reducing the false positives (and more important, the false negatives) possible in manual testing.

Automated tests, since they are fast and consistent, can be relied on by developers each time that they make changes. Some Agile techniques even prescribe writing a test first, which will fail, and then implementing the function to make the test pass. This helps prevent regressions, and in the case of test-driven development, helps to define the outward behavior as the primary thing under test.

Automated tests have to be coded. This code can be kept in version control along with the system under test, and undergoes the same change control mechanisms. This means that you can track a change in system behavior by looking at the history of the tests to see what changed, and what the reason for the change was.

These properties together give a high level of confidence that the system does what its implementers intended (although not necessarily what was asked for or the users wanted, which is why it is so important to get software into production quickly and get real feedback). Furthermore, it gives a level of confidence that whatever changes have been made to the code have not had an unforeseen effect on other parts of the system.

Automated testing is not a replacement for other quality assurance practices, but it does massively increase the confidence of the team to move fast and make changes to the system. It also allows any manual testing and reviews to focus on the high-value acceptance criteria.

Furthermore, the tests, if well written and maintained, are valuable documentation for what the system is intended to do.

Naturally, automated testing combines well with a build pipeline to ensure that every build has been fully tested, automatically, as a result of being built. However, to really get the benefits of these two techniques, you’ll want to tie them together to get continuous integration.

It’s common and easy to assume that you can automate all of your security testing using the same techniques and processes.

While you can—and should—automate security testing in your build pipelines (and we’ll explain how to do this in Chapter 12), it’s nowhere near as easy as the testing outlined here.

While there are good tools for security testing that can be run as part of the build, most security tools are hard to use effectively, difficult to automate, and tend to run significantly slower than other testing tools.

We recommend against starting with just automated security tests, unless you have already had success automating functional tests and know how to use your security tools well.

Once we have a build pipeline, ensuring that all artifacts are created consistently and an automated testing capability that ensures basic quality checks, we can combine those two systems. This is most commonly called continuous integration (CI), but there’s a bit more to this practice than just that.

The key to continuous integration is the word “continuous.” The idea of a CI system is that it constantly monitors the state of the code repository, and if there has been a change, automatically triggers building the artifact and then testing the artifact.

In some organizations, the building and testing of an artifact can be done in seconds, while in larger systems or more complex build-test pipelines, it can take several minutes to perform. Where the times get longer, teams tend to start to separate out tests and steps, and run them in parallel to maintain fast feedback loops.

If the tests and checks all pass, the output of continuous integration is an artifact that could be deployed to your servers after each code commit by a developer. This gives almost instantaneous feedback to the developer that he hasn’t made a mistake or broken anybody else’s work.

This also provides the capability for the team to maintain a healthy, ready-to-deploy artifact at all times, meaning that emergency patches or security responses can be applied easily and quickly.

However, when you release the artifact, the environment you release it to needs to be consistent and working, which leads us to infrastructure as code.

While the application or product can be built and tested on a regular basis, it is far less common for the systems infrastructure to go through this process—until now.

Traditionally, the infrastructure of a system is purchased months in advance, and is relatively fixed. However the advent of cloud computing and programmable configuration management means that it is now possible, and even common, to manage your infrastructure in code repositories.

There are many different ways of doing this, but the common patterns are that you maintain a code repository that defines the desired state for the system. This will include information on operating systems, hostnames, network definitions, firewall rules, installed application sets, and so forth. This code can be executed at any time to put the system into a desired state, and the configuration management system will make the necessary changes to your infrastructure to ensure that this happens.

This means that making a change to a system, whether opening a firewall rule or updating a software version of a piece of infrastructure, will look like a code change. It will be coded, stored in a code repository (which provides change management and tracking), and reliably and repeatably rolled out.

This code is versioned, reviewed, and tested in the same way that your application code is. This gives the same levels of confidence in infrastructure changes that you have over your application changes.

Most configuration management systems regularly inspect the system and infrastructure, and if they notice any differences, are able to either warn or proactively set the system back to the desired state.

Using this approach, you can audit your runtime environment by analyzing the code repository rather than having to manually scan and assess your infrastructure. It also gives confidence of repeatability between environments. How often have you known software to work in the development environment but fail in production because somebody had manually made a change in development and forgotten to promote that change through into production?

By sharing much of the infrastructure code between production and development, we can track and maintain the smallest possible gap between the two environments and ensure that this doesn’t happen.

While configuration management does an excellent job of keeping the operating environment in a consistent and desired state, it is not intended to monitor or alert on changes to the environment that may be associated with the actions of an adversary or an ongoing attack.

Configuration management tools check the actual state of the environment against the desired state on a periodic basis (e.g., every 30 minutes). This leaves a window of exposure for an adversary to operate in, where configurations could be changed, capitalized upon, and reverted, all without the configuration management system noticing the changes.

Security monitoring/alerting and configuration management systems are built to solve different problems, and it’s important to not confuse the two.

It is of course possible, and desirable by high-performing teams, to apply build pipelines, automated testing, and continuous integration onto the infrastructure itself, ensuring that you have a high confidence that your infrastructure changes will work as intended.

Once you know you have consistent and stable infrastructure to deploy to, you need to ensure that the act of releasing the software is repeatable, which leads to release management.

A common issue in projects is that the deployment and release processes for promoting code into production can fill a small book, with long lists of individual steps and checks that must be carried out in a precise order to ensure that the release is smooth.

These runbooks are often the last thing to be updated and so contain errors or omissions; and because they are executed rarely, time spent improving them is not a priority.

To make releases less painful and error prone, Agile teams try to release more often. Procedures that are regularly practiced and executed tend to be well maintained and accurate. They are also obvious candidates to be automated, making deployment and release processes even more consistent, reliable, and efficient.

Releasing small changes more often reduces operational risks as well as security risks. As we’ll explain more in this book, small changes are easier to understand, review, and test, reducing the chance of serious security mistakes getting into production.

These processes should be followed in all environments to ensure that they work reliably, and if automated, can be hooked into the continuous integration system. If this is done, we can move toward a continuous delivery or continuous deployment approach, where a change committed to the code repository can pass through the build pipeline and its automated testing stages and be automatically deployed, possibly even into production.

Continuous delivery and continuous deployment are subtly different.

Continuous delivery ensures that changes are always ready to be deployed to production by automating and auditing build, test, packaging, and deployment steps so that they are executed consistently for every change.

In continuous deployment, changes automatically run through the same build and test stages, and are automatically and immediately promoted to production if all the steps pass. This is how organizations like Amazon and Netflix achieve high rates of change.

If you want to understand the hows and whys of continuous delivery, and get into the details of how to set up your continuous delivery pipeline properly, you need to read Dave Farley and Jezz Humble’s book, Continuous Delivery (Addison-Wesley).

One of us worked on a team that deployed changes more than a hundred times each day, where the time between changing code and seeing it in production was under 30 seconds.

However, this is an extreme example from an experienced team that had been working this way for years. Most teams that we come into contact with are content to reach turnaround times of under 30 minutes, and 1 to 5 deploys a day, or even as few as 2 to 3 times a month.

Even if you don’t go all the way to continuously deploying each change to production, by automating the release process you take out human mistakes, and you gain repeatability, consistency, speed, and auditability.

This gives you confidence that deploying a new release of software won’t cause issues in production, because the build is tested, and the release process is tested, and all the steps have been exercised and proven to work.

Furthermore, built-in auditability means you can see exactly who decided to release something and what was contained in that change, meaning that should an error occur, it is much easier to identify and fix.

It’s also much more reliable in an emergency situation. If you urgently need to patch a software security bug, which would you feel more confident about: a patch that had to bypass much of your manual testing and be deployed by someone who hasn’t done that in a number of months, or a patch that has been built and tested the same as all your other software and deployed by the same script that does tens of deploys a day?

Moving the concept of a security fix to be no different than any other code change is huge in terms of being able to get fixes rapidly applied and deployed, and automation is key to being able to make that mental step forward.

But now we can release easily and often, we need to ensure that teams don’t interfere with each other, for that we need visible tracking.

Given this automated pipeline or pathway to production, it becomes critical to know what is going to go down that path, and for teams to not interfere with each other’s work.

Despite all of this testing and automation, there are always possible errors, often caused by dependencies in work units. One piece of work might be reliant on another piece of work being done by another team. In these more complex cases, it’s possible that work can be integrated out of order and make its way to production before the supporting work is in place.

Almost every Agile methodology highly prioritizes team communication, and the most common mechanism for this is big visible tracking of work. This might be Post-it notes or index cards on a wall, or a Kanban board, or it might be an electronic story tracker; but whatever it is, there are common requirements:

Everybody on the team and related teams should be able to see at a glance what is being worked on and what is in the pathway to production.

For this information to be useful and reliable, it must be complete and current. Everything about the project—the story backlog, bugs, vulnerabilities, work in progress, schedule milestones and velocity, cycle time, risks, and the current status of the build pipeline—should be available in one place and updated in real-time.

This is not a system to track all the detailed requirements for each piece of work. Each item should be a placeholder that represents the piece of work, showing a few major things, who owns it, and what state it is in.

Of course having the ability to see what work people are working on is no use if the work itself isn’t valuable, which brings us to centralized feedback.

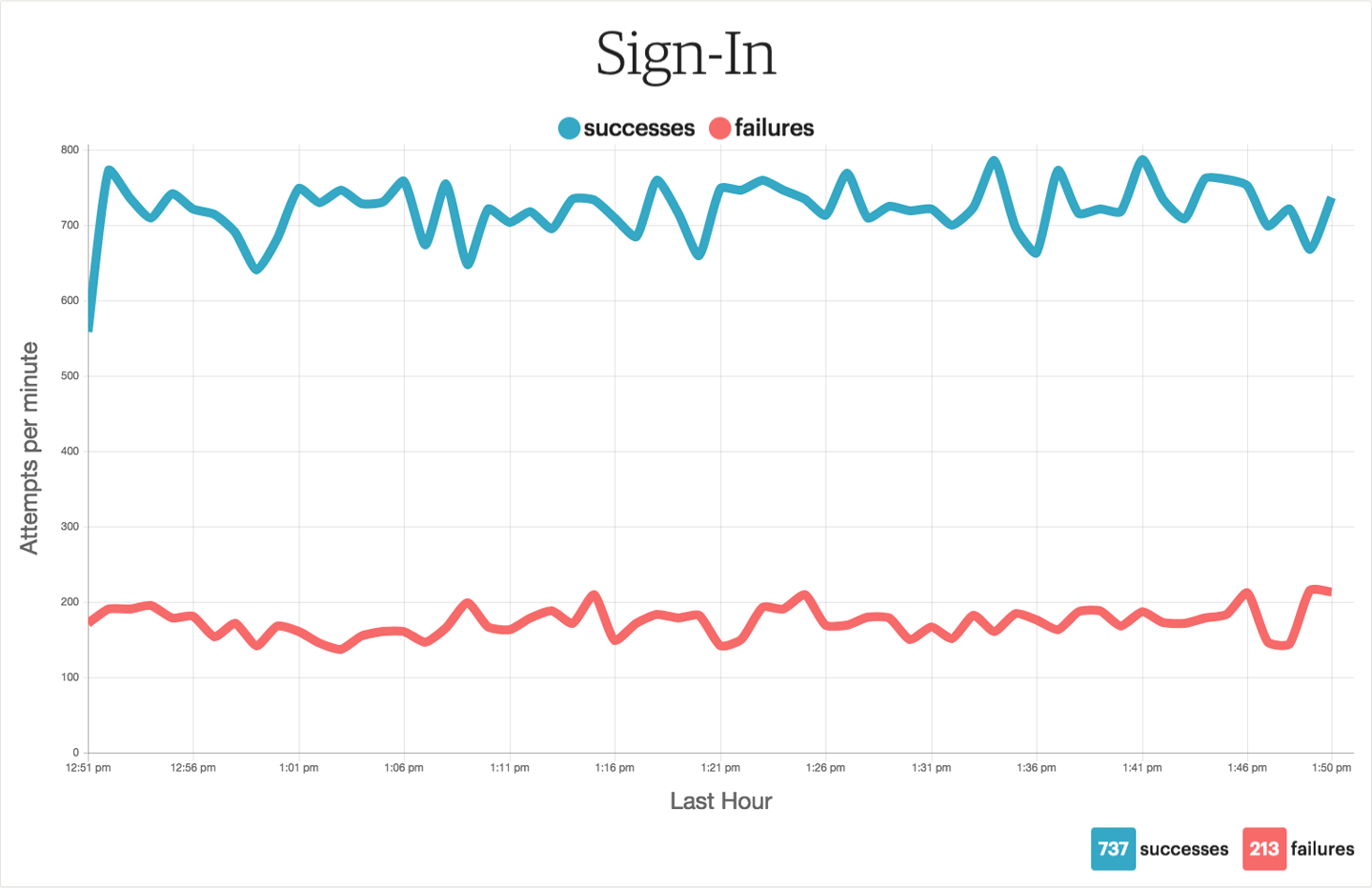

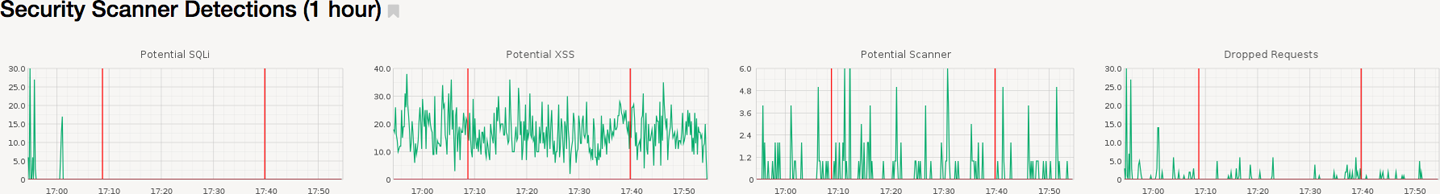

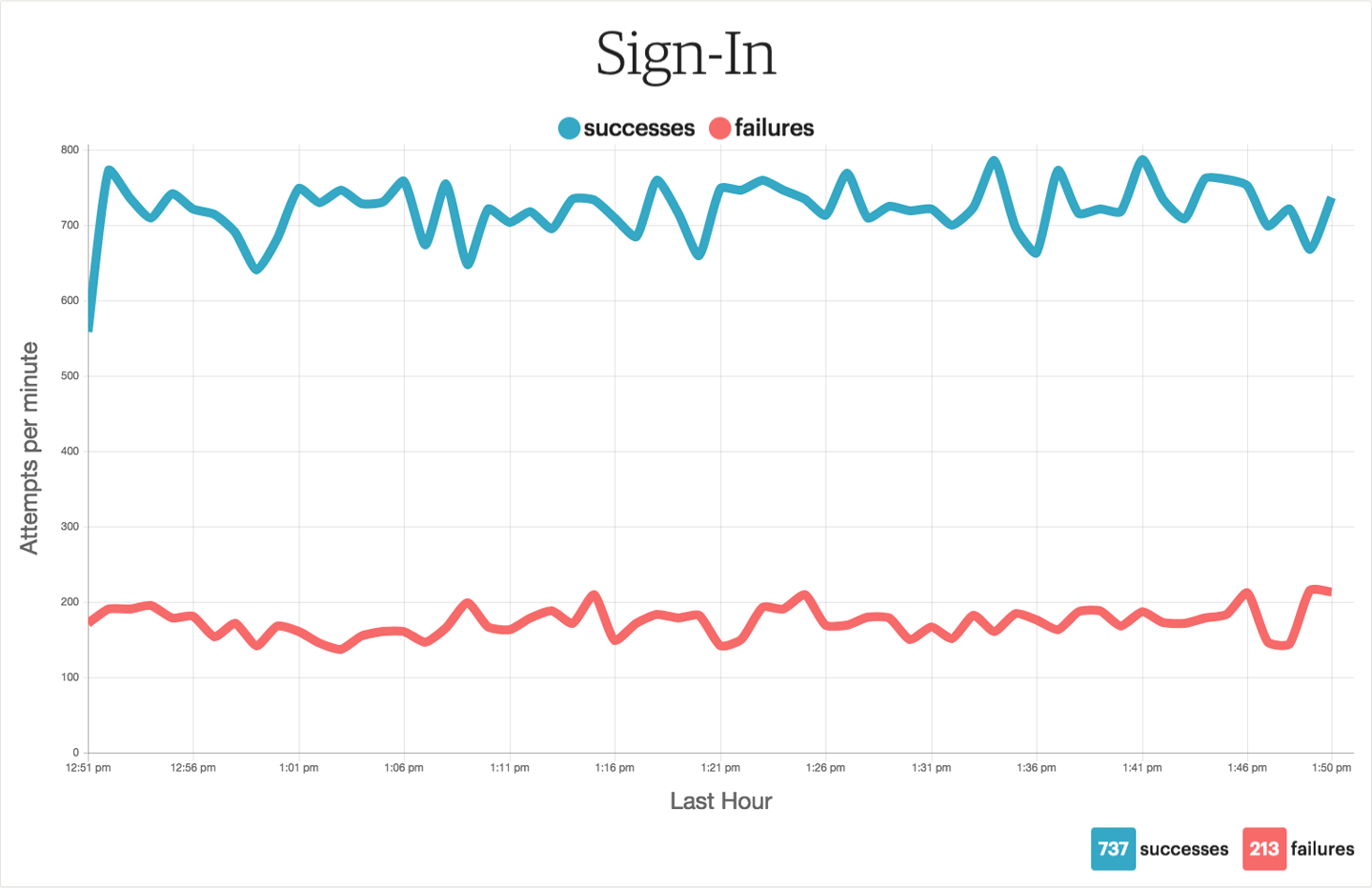

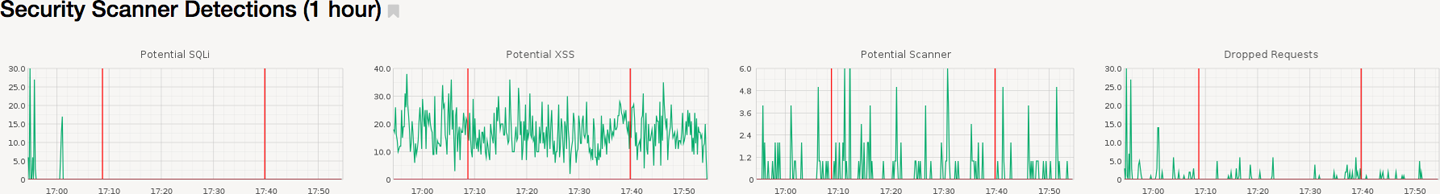

Finally, if you have an efficient pipeline to production, and are able to automatically test that your changes haven’t broken your product, you need some way to monitor the effectiveness of the changes you make. You need to be able to monitor the system, and in particular how it is working, to understand your changes.

This isn’t like system monitoring, where you check whether the machines are working. It is instead value chain monitoring: metrics that are important to the team, to the users of the system, and to the business, e.g., checking conversion rate of browsers to buyers, dwell time, or clickthrough rates.

The reason for this is that highly effective Agile teams are constantly changing their product in response to feedback. However, to optimize that cycle time, the organization needs to know what feedback to collect, and more specifically, whether the work actually delivered any value.

Knowing that a team did 10, 100, or 1000 changes is pointless unless you can tie that work back to meaningful work for the organization.

Indicators vary a lot by context and service, but common examples might include value for money, revenue per transaction, conversion rates, dwell time, or mean time to activation. These values should be monitored and displayed on the visible dashboards that enable the team to see historical and current values.

Knowing whether your software actually delivers business value, and makes a visible difference to business metrics, helps you to understand that the only good code is deployed code.

Software engineering and Agile development is not useful in and of itself. It is only valuable if it helps your company achieve its aims, whether that be profit or behavioral change in your users.

A line of code that isn’t in production is not only entirely valueless to the organization, but also a net liability, since it slows down development and adds complexity. Both have a negative effect on the security of the overall system.

Agile practices help us shorten the pathway to production, by recognizing that quick turnaround of code is the best way to get value from the code that we write.

This of course all comes together when you consider security in your Agile process. Any security processes that slow down the path to production, without significant business gains, are a net liability for the organization and encourage value-driven teams to route around them.

Security is critically involved in working out what the Definition of Done is for an Agile team, ensuring that the team has correctly taken security considerations into account. But security is only one voice in the discussion, responsible for making sure that the team is aware of risks, and enable the business to make informed decisions about these risks.

We hope that the rest of this book will help you understand how and where security can fit into this flow, and give you ideas for doing it well in your organization.

What happens if you can’t follow all of these practices?

There are some environments where regulations prevent releasing changes to production without legal sign-off, which your lawyers won’t agree to do multiple times per day or even every week. Some systems hold highly confidential data which the developers are not expected or perhaps not even allowed to have access to, which puts constraints on the roles that they can play in supporting and running the system. Or you might be working on legacy enterprise systems that cannot be changed to support continuous delivery or continuous deployment.

None of these techniques are fundamentally required to be Agile, and you don’t need to follow all of them to take advantage of the ideas in this book. But if you are aren’t following most of these practices to some extent, you need to understand that you will be missing some levels of assurance and safety to operate at speed.

You can still move fast without this high level of confidence, but you are taking on unnecessary risks in the short term, such as releasing software with critical bugs or vulnerabilities, and almost certainly building up technical debt and operational risks over the longer term. You will also lose out on some important advantages, such as being able to minimize your time for resolving problems, and closing your window of security exposure by taking the human element out of the loop as much as possible.

It’s also important to understand that you probably can’t implement all these practices at once in a team that is already established in a way of working, and you probably shouldn’t even try. There are many books that will help you to adopt Agile and explain how to deal with the cultural and organizational changes that are required, but we recommend that you work with the team to help it understand these ideas and practices, how they work, and why they are valuable, and implement them iteratively, continuously reviewing and improving as you go forward.

The techniques described in this chapter build on each other to create fast cycle times and fast feedback loops:

By standardizing and automating your build pipeline, you establish a consistent foundation for the other practices.

Test automation ensures that each build is correct.

Continuous integration automatically builds and tests each change to provide immediate feedback to developers as they make changes.

Continuous delivery extends continuous integration to packaging and deployment, which in turn requires that these steps are also standardized and automated.

Infrastructure as code applies the same engineering practices and workflows to making infrastructure configuration changes.

To close the feedback loop, you need metrics and monitoring at all stages, from development to production, and from production back to development.

As you continue to implement and improve these practices, your team will be able to move faster and with increasing confidence. These practices also provide a control framework that you can leverage for standardizing and automating security and compliance, which is what we will explore in the rest of this book.

For a number of years now, startups and web development teams have been following Agile software development methods. More recently, we’ve seen governments, enterprises, and even organizations in heavily regulated environments transitioning to Agile. But what actually is it? How can you possibly build secure software when you haven’t even fleshed out the design or requirements properly?

Reading this book, you may be a long-time security professional who has never worked with an Agile team. You might be a security engineer working with an Agile or DevOps team. Or you may be a developer or team lead in an Agile organization who wants to understand how to deal with security and compliance requirements. No matter what, this chapter should ensure that you have a good grounding in what your authors know about Agile, and that we are all on the same page.

Agile (whether spelled with a small “a” or a big “A”) means different things to different people. Very few Agile teams work in the same way, partly because there is a choice of Agile methodologies, and partly because all Agile methodologies encourage you to adapt and improve the process to better suit your team and your context.

“Agile” is a catch-all term for a variety of different iterative and incremental software development methodologies. It was created as a term when a small group of thought leaders went away on a retreat to a ski lodge in Snowbird, Utah, back in 2001 to discuss issues with modern software development. Large software projects routinely ran over budget and over schedule, and even with extra time and money, most projects still failed to meet business requirements. The people at Snowbird had recognized this and were all successfully experimenting with simpler, faster, and more effective ways to deliver software. The “Agile Manifesto” is one of the few things that the 17 participants could agree on.

What is critical about the manifesto is that these are simply value statements. The signatories didn’t believe that working software was the be-all and end-all of everything, but merely that when adopting a development methodology, any part of the process had to value the first values more than the second ones.

For example, negotiating a contract is important, but only if it helps encourage customer collaboration rather than replace customer collaboration.

Behind the value statements are 12 principles which form the backbone of the majority of Agile methodologies.

These principles tell us that Agile methods are about delivering software in regular increments, embracing changes to requirements instead of trying to freeze them up front, and valuing the contributions of a team by enabling decision making within the team, among others.

So what does “Agile” development look like?

Most people who say they are doing Agile tend to be doing one of Scrum, Extreme Programming, Kanban, or Lean development—or something loosely based on one or more of these well-known methods. Teams often cherry-pick techniques or ideas from various methods (mostly Scrum, with a bit of XP, is a common recipe), and will naturally adjust how they work over time. Normally this is because of context, but also the kind of software we write changes over time, and the methods need to match.

There are a number of other Agile methods and approaches, such as SAFe or LeSS or DAD for larger projects, Cynefin, RUP, Crystal, and DSDM. But looking closer at some of the most popular approaches can help us to understand how to differentiate Agile methodologies and to see what consistencies there actually are.

Scrum is, at the time of writing, by far the most popular Agile methodology, with many Certified Scrum Masters, and training courses continually graduating certified Scrum practitioners and trainers. Scrum is conceptually simple and can integrate into many existing project and program management frameworks. This makes it very popular among managers and senior executives, as they feel they can understand more easily what a team is doing and when the team will be finished doing it.

Scrum projects are delivered by small, multidisciplinary product development teams (generally between 5 and 11 people in total) that work off of a shared requirements backlog. The team usually contains developers, testers, and designers, a product manager or Product Owner, and someone playing the Scrum Master role, a servant leader and coach for the team.

The product backlog or Scrum backlog is a collection of stories, or very high-level requirements for the product. The product manager will continually prioritize work in the backlog and check that stories are still relevant and usable, a process called “backlog grooming.”

Scrum teams work in increments called sprints, traditionally one month long, although many modern Scrum teams work in shorter sprints that last only one or two weeks. Each sprint is time-boxed: at the end of each sprint, the teams stop work, assess the work that they have done and how well they did it, and reset for the next sprint.

At the beginning of a sprint, the team, including the product manager, will look through the product backlog and select stories to be delivered, based on priority.

The team is asked to estimate the expense of completing each unit of work as a team, and the stories are committed to the sprint backlog in priority order. Scrum teams can use whatever means of estimation they chose. Some use real units of time (that work will take three days), but many teams use relative but abstract sizing (e.g., t-shirt sizes: small, medium, and large; or animals: snail, quail, and whale). Abstract sizing allows for much looser estimates: a team is simply saying that a given story is bigger than another story, and the Scrum Master will monitor the team’s ability to deliver on those stories.

Once stories are put into the sprint backlog, the Scrum team agrees to commit to delivering all of this work within the sprint. Often in these cases the team will look at the backlog and may take some stories out, or may select some extra stories. This often happens if there are a lot of large stories: the team has less confidence in completing large stories, and so may swap for a few small stories instead.

The agreement between the team and the product manager is vital here: the product manager gets to prioritize the stories, but the team has to accept the stories into the sprint backlog.

Scrum teams during a sprint generally consider the sprint backlog to be sacrosanct. Stories are never played into the sprint backlog during the sprint. Instead, they are put into the wider product backlog so that they can be prioritized appropriately.

This is part of the contract between the Scrum team and the product manager: the product manager doesn’t change the Scrum backlog mid-sprint, and the team can deliver reliably and repeatedly from sprint to sprint. This trades some flexibility to make changes to the product and tune it immediately in response to feedback, for consistency of delivery.

Team members are co-located if possible, sitting next to one another and able to discuss or engage during the day, which helps form team cohesion. If security team members are working with an Agile team, then it is also important for them to sit with the rest of the team. Removing barriers to communication encourages sharing security knowledge, and helps build trusted relationships, preventing an us versus them mentality.

The team’s day always starts with a stand-up: a short meeting where everybody addresses a whiteboard or other record of the stories for the sprint and discusses the day’s work. Some teams use a physical whiteboard for tracking their stories, with the stories represented as individual cards that move through swimlanes of state change. Others use electronic systems that present stories on a virtual card wall.

Each team organizes its whiteboard differently, but most move from left to right across the board from “Ready to play” through “In development” to “Done.” Some teams add extra swimlanes for tasks or states like design, testing, or states to represent stories being queued for the next state.

A pig and a chicken are walking down the road.

The chicken says, “Hey, pig, I was thinking we should open a restaurant!”

Pig replies: “Hmm, maybe; what would we call it?”

Chicken responds: “How about ham-n-eggs?”

The pig thinks for a moment and says, “No, thanks. I’d be committed, but you’d only be involved.”

Each member of the team must attend the daily stand-up. We divide attendance into “chickens” and “pigs,” where pigs are delivering team members, and any observers are chickens. Chickens are not allowed to speak or interrupt the stand-up.

Most teams go around the team, one team member at a time, and they answer the following questions:

What did you do yesterday?

What are you going to do today?

What is blocking you?

A team member who completed a story moves the story card to the next column, and can often get a clap or round of applause. The focus for the team is on delivering the stories as agreed, and anything that prevents that is called a blocker.

The Scrum Master’s principal job day-to-day is to remove blockers from the team. Stories can be blocked because they weren’t ready to play, but most often are blocked by a dependency on a third-party resource of some form, something from outside the team. The Scrum Master will chase these problems down and clear them up for the team.

Scrum also depends on strong feedback loops. After each sprint, the team will get together, hold a retrospective on the sprint, and look to see what it can do better in the next sprint.

These feedback loops can be a valuable source of information for security teams to be a part of, to both learn directly from the development teams about a project, as well as to provide continuous security support during the ongoing development process, rather than only at security-specific gating points or reviews.

One of the key questions to work out with Scrum teams is whether security is a chicken (a passive outside observer) or a pig (an active, direct participant in team discussions and problem-solving). Having security expertise as part of the regular life cycle is crucial to building trusted relationships and to open, honest, and effective security-relevant dialog.

This core of Scrum—team communication, ownership, small iterative cycles, and feedback loops—makes Scrum simple for teams (and managers) to understand, and easy to adopt. However, keep in mind that many teams and organizations deviate from pure Scrum, which means that you need to understand and work with their specific interpretation or implementation of Scrum.

You also need to understand the limitations and restrictions that Scrum places on how people work. For example, Scrum prevents or at least severely limits changes during a sprint time-box so that the team can stay committed to meeting its sprint goals, and it tends to discourage engagement with the development team itself by funneling everything through the Product Owner.

Extreme Programming (XP) is one of the earliest Agile methodologies, and is one of the most Agile, but it tends to look the most different from traditional software development.

These days, teams using XP are comparatively rare, since it’s incredibly disciplined and intense; but because many of the technical practices are in active use by other Agile teams, it’s worth understanding where they come from.

The following are the core concepts of Extreme Programming:

The team has the customer accessible to it at all times.

It commits to deliver working code in regular, small increments.

The team follows specific technical practices, using test-driven development, pair programming, refactoring, and continuous integration to build high-quality software.