For online information and ordering of this and other Manning books, please visit www.manning.com. The publisher offers discounts on this book when ordered in quantity. For more information, please contact

Special Sales Department Manning Publications Co. 20 Baldwin Road PO Box 761 Shelter Island, NY 11964 Email: orders@manning.com

©2018 by Manning Publications Co. All rights reserved.

No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by means electronic, mechanical, photocopying, or otherwise, without prior written permission of the publisher.

Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in the book, and Manning Publications was aware of a trademark claim, the designations have been printed in initial caps or all caps.

Recognizing the importance of preserving what has been written, it is Manning’s policy to have the books we publish printed

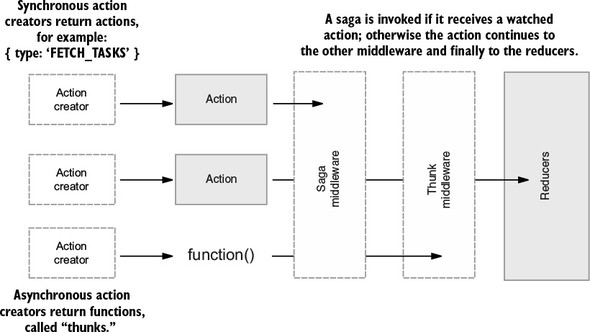

on acid-free paper, and we exert our best efforts to that end. Recognizing also our responsibility to conserve the resources

of our planet, Manning books are printed on paper that is at least 15 percent recycled and processed without the use of elemental

chlorine.

Recognizing the importance of preserving what has been written, it is Manning’s policy to have the books we publish printed

on acid-free paper, and we exert our best efforts to that end. Recognizing also our responsibility to conserve the resources

of our planet, Manning books are printed on paper that is at least 15 percent recycled and processed without the use of elemental

chlorine.

|

Manning Publications Co. 20 Baldwin Road PO Box 761 Shelter Island, NY 11964 |

Acquisitions editor: Brian Sawyer Development editor: Toni Arritola Technical development editor: German Frigerio Review editor: Ivan Martinović Project manager: David Novak Copy editor: Katie Petito Technical proofreader: Ryan Burrows Proofreader: Alyson Brener Typesetter: Dennis Dalinnik Cover designer: Marija Tudor

ISBN: 9781617294976

Printed in the United States of America

1 2 3 4 5 6 7 8 9 10 – EBM – 23 22 21 20 19 18

To my wife for her dance moves, my family and friends for pretending to know what I’m on about, and the universe for plain good luck

Marc Garreau

For M + D

Will Faurot

Chapter 2. Your first Redux application

Chapter 3. Debugging Redux applications

Chapter 6. Handling complex side effects

Chapter 7. Preparing data for components

Chapter 8. Structuring a Redux store

Chapter 9. Testing Redux applications

Chapter 11. Structuring Redux code

Chapter 2. Your first Redux application

2.1. Building a task-management application

2.4. Revisiting the Redux architecture

2.5. Configuring the Redux store

2.6. Connecting Redux and React with react-redux

2.6.1. Adding the Provider component

2.9. Handling actions with reducers

Chapter 3. Debugging Redux applications

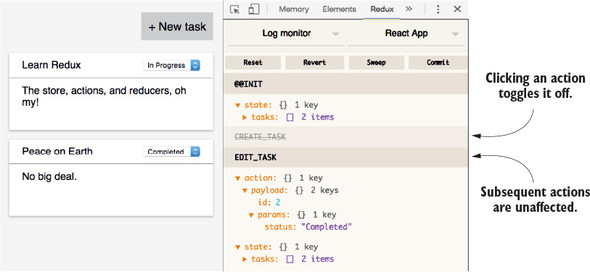

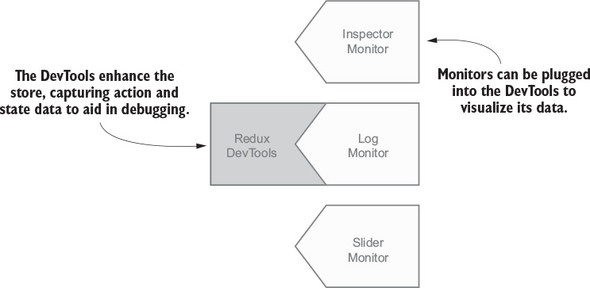

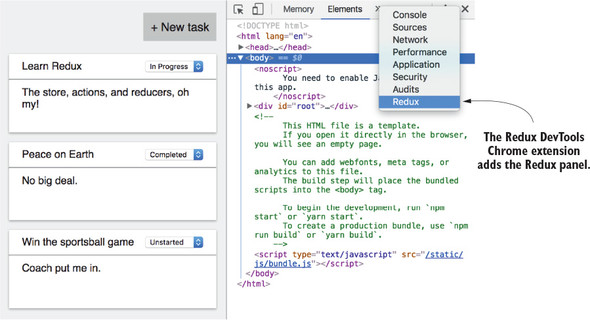

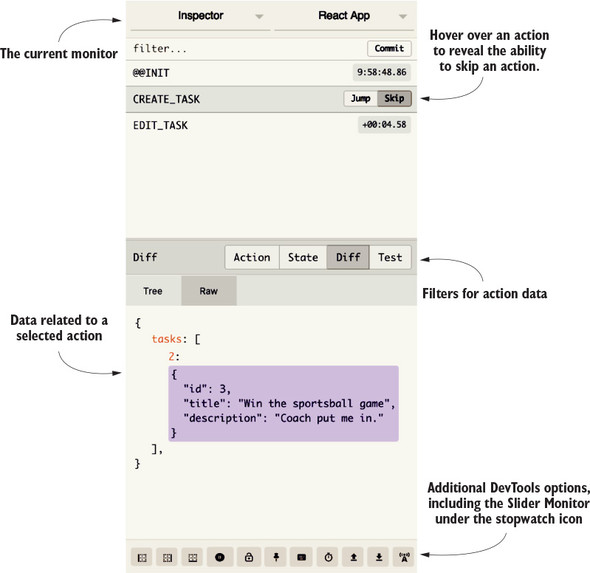

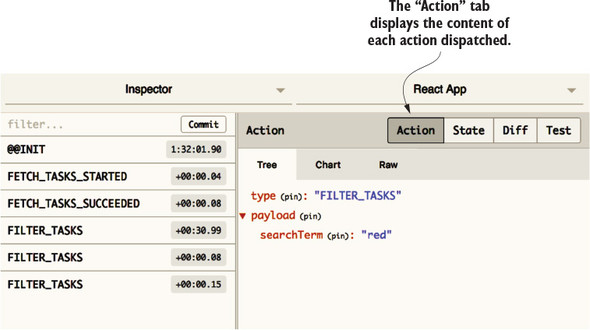

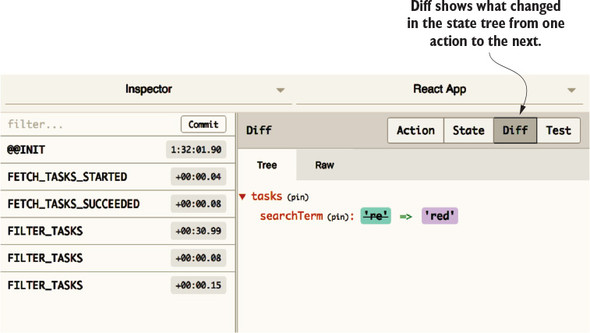

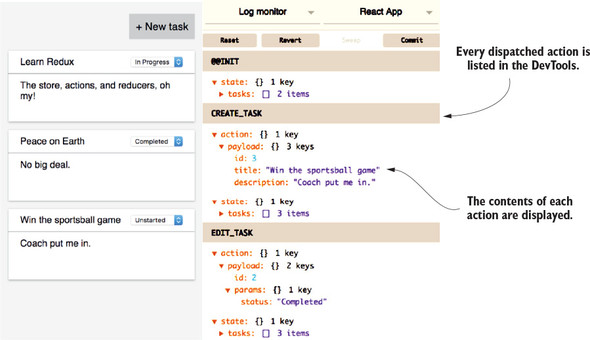

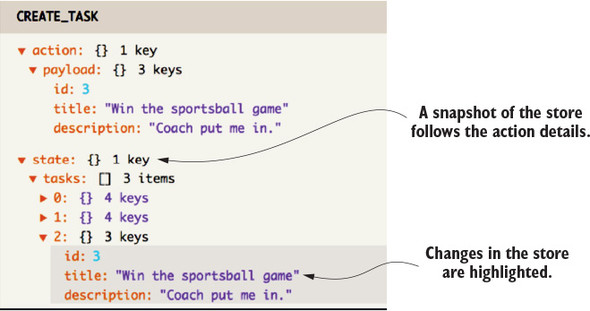

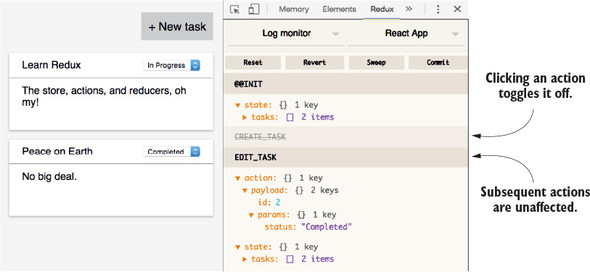

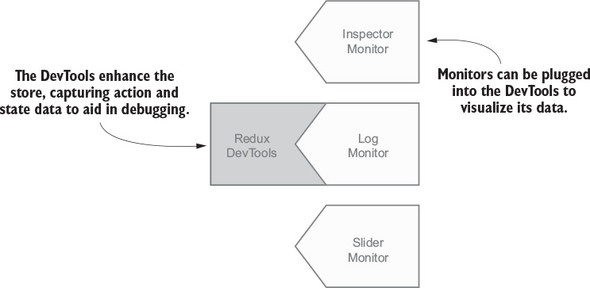

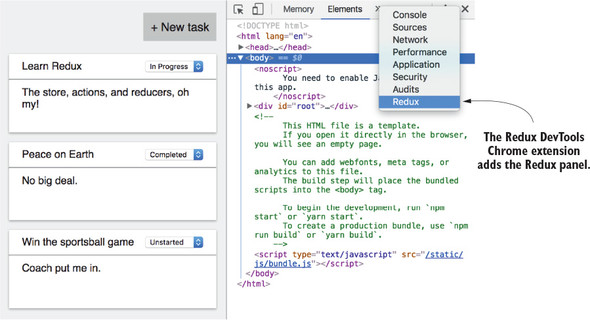

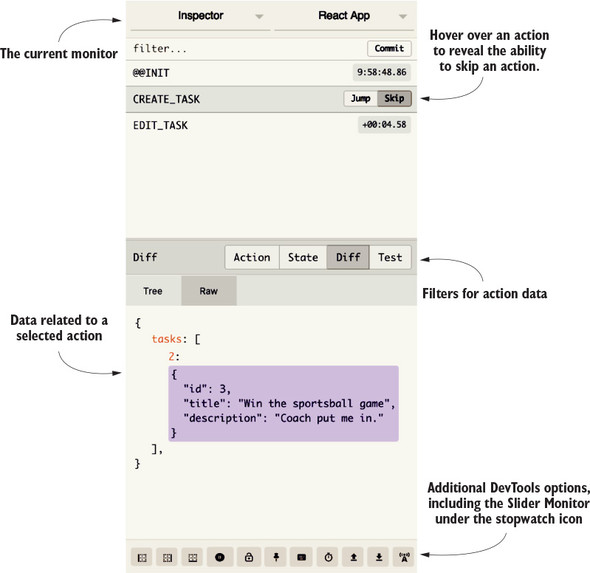

3.1. Introducing the Redux DevTools

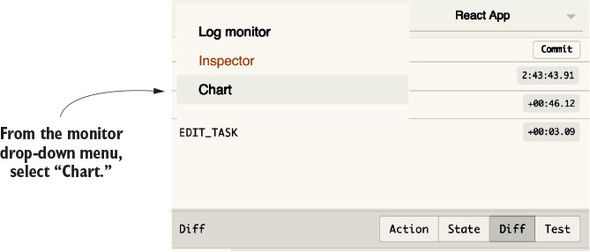

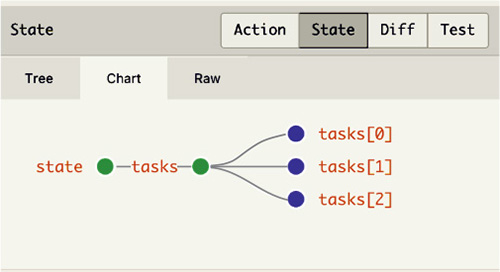

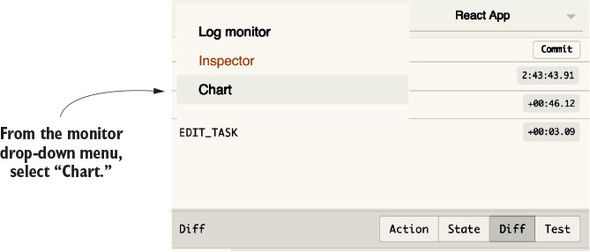

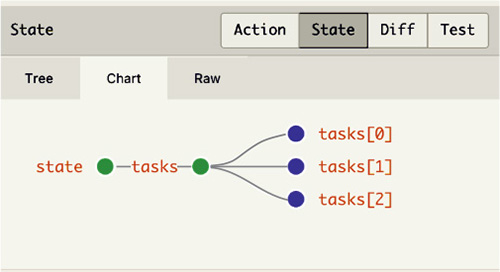

3.3. Visualizing changes with DevTools monitors

3.4. Implementing the Redux DevTools

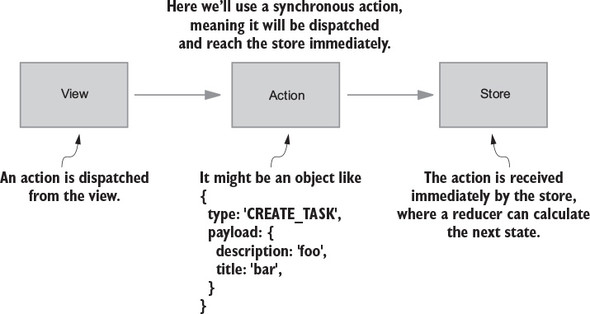

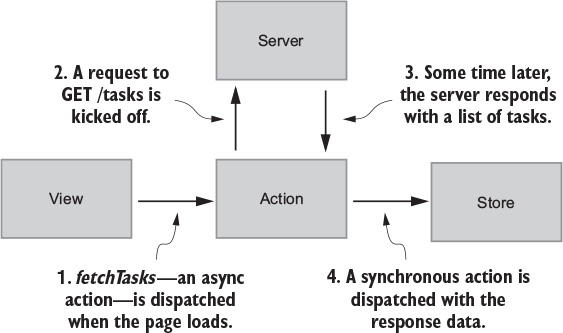

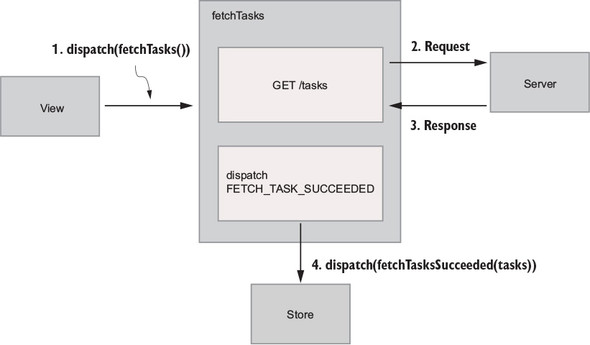

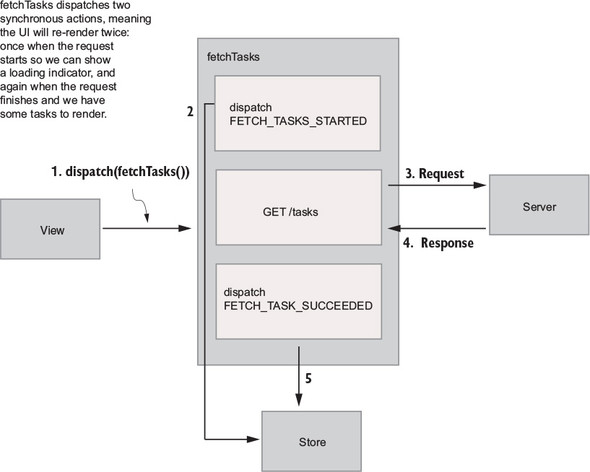

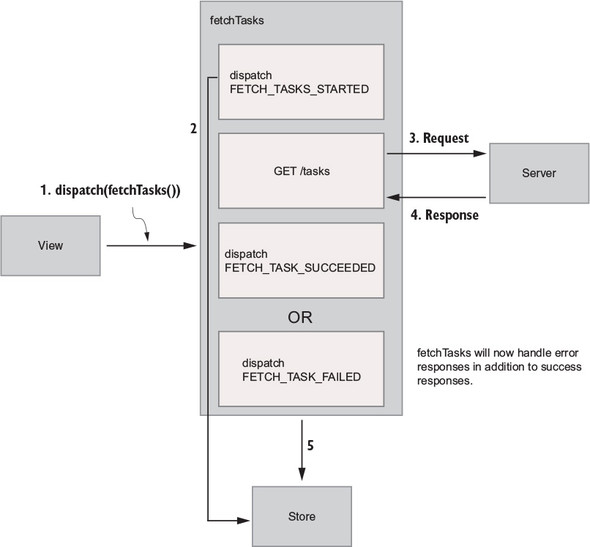

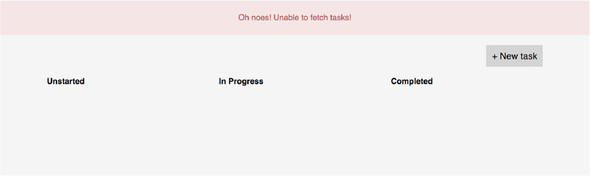

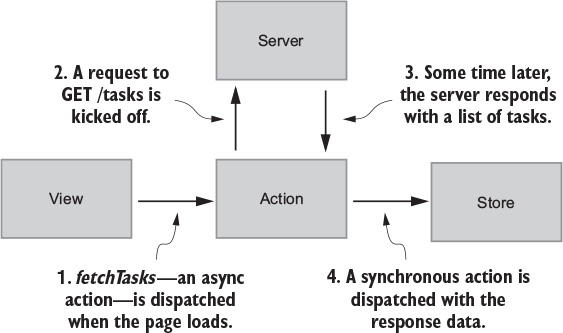

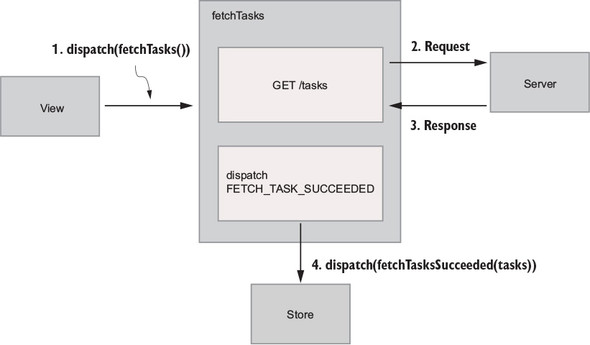

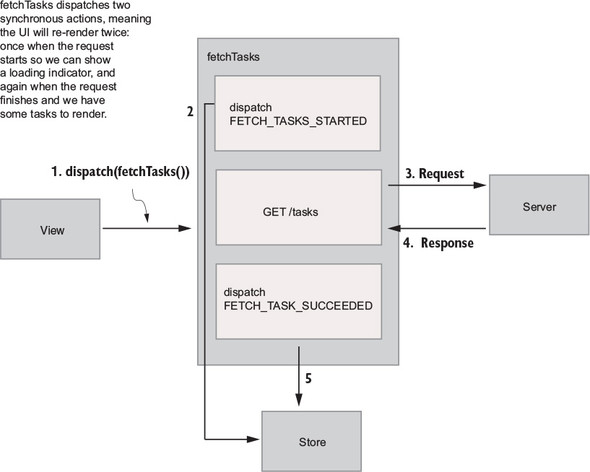

4.2. Invoking async actions with redux-thunk

4.3. Saving tasks to the server

5.3. Example: logging middleware

5.4. Example: analytics middleware

5.4.2. Adding analytics middleware

Chapter 6. Handling complex side effects

6.5.1. Connecting saga middleware to the store

6.6. Handling long-running processes

6.6.2. Updating the user interface

6.6.4. Writing a long-running saga

6.9. Additional side-effect management strategies

6.9.1. Asynchronous functions with async/await

Chapter 7. Preparing data for components

7.1. Decoupling Redux from React components

7.3.2. Local state versus Redux state

7.3.3. Dispatching a filter action

Chapter 8. Structuring a Redux store

8.1. How should I store data in Redux?

8.2. An introduction to normalized data

8.3. Implementing projects with nested data

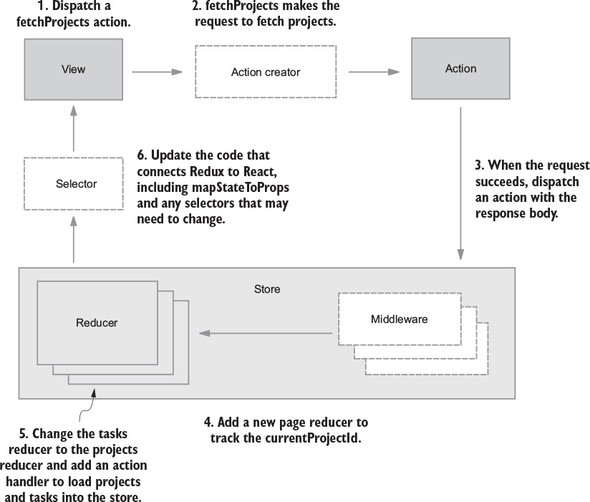

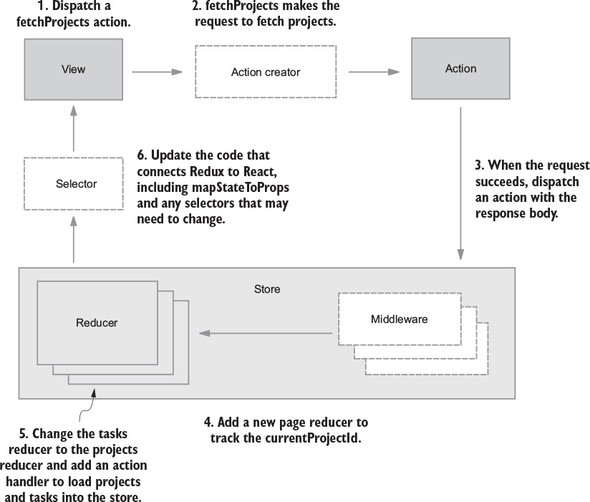

8.3.1. Overview: fetching and rendering projects

8.3.2. Updating the server with projects

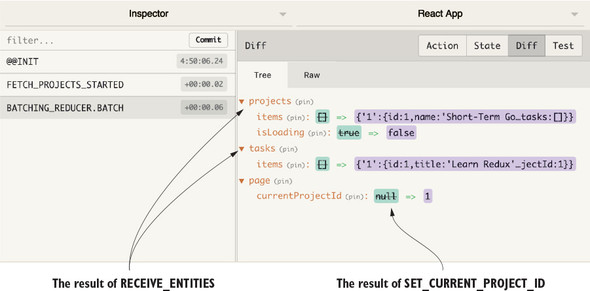

8.3.3. Adding and dispatching fetchProjects

8.3.5. Updating mapStateToProps and selectors

8.4. Normalizing projects and tasks

Chapter 9. Testing Redux applications

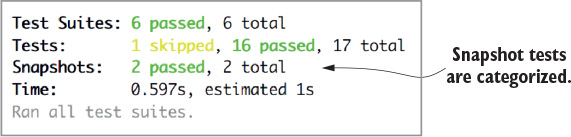

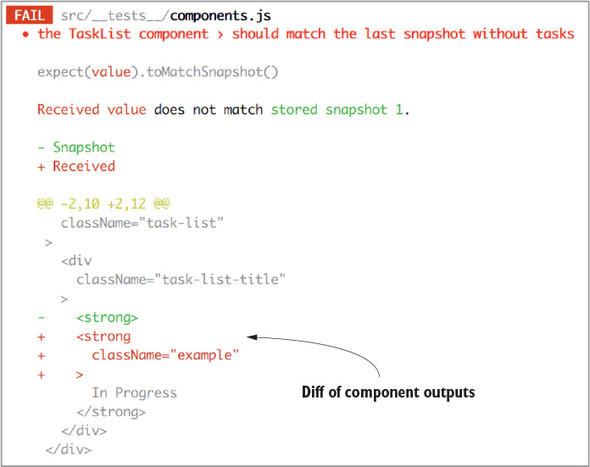

9.1. Introduction to testing tools

9.1.1. What does Jasmine provide?

9.2. How does testing Redux differ from React?

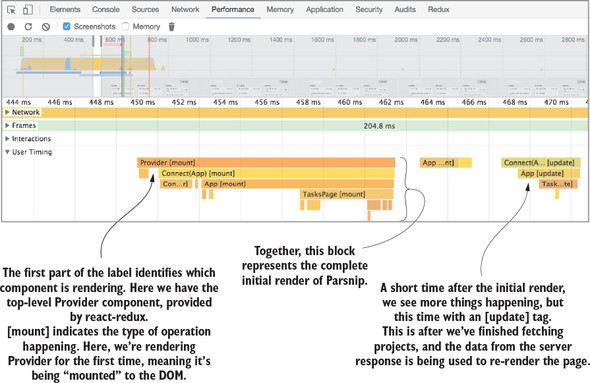

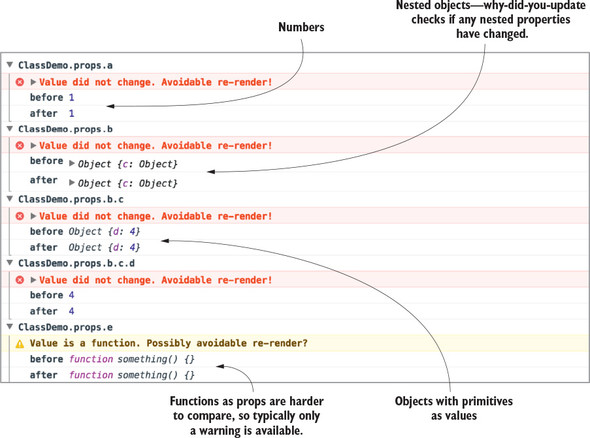

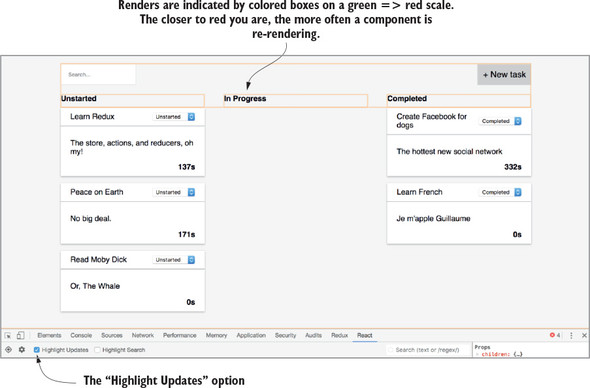

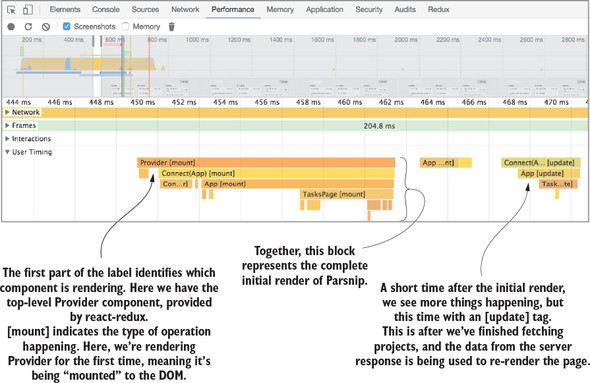

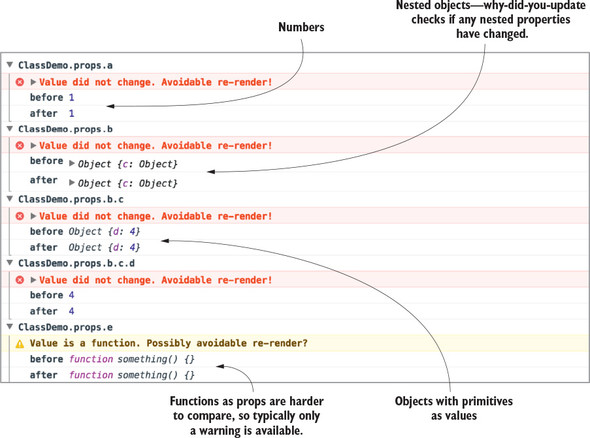

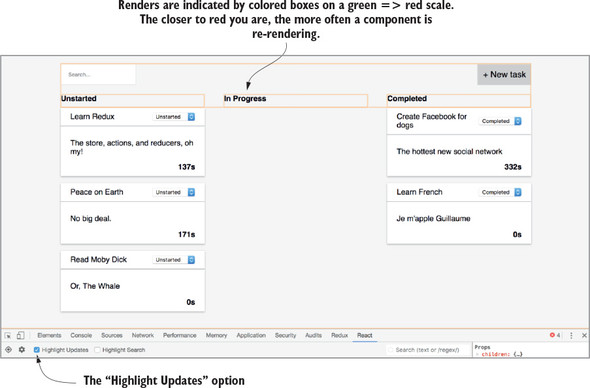

10.1. Performance-assessment tools

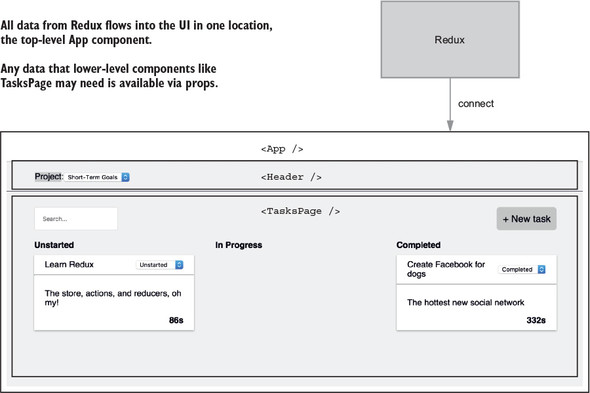

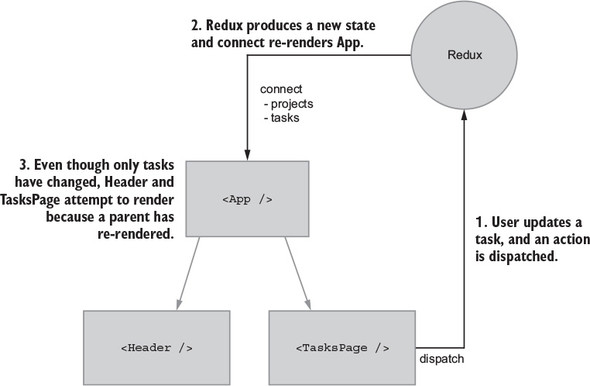

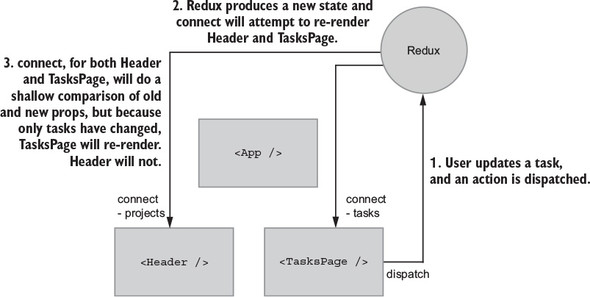

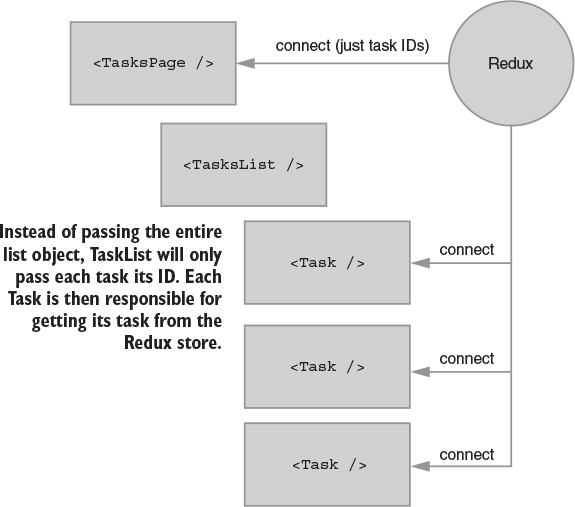

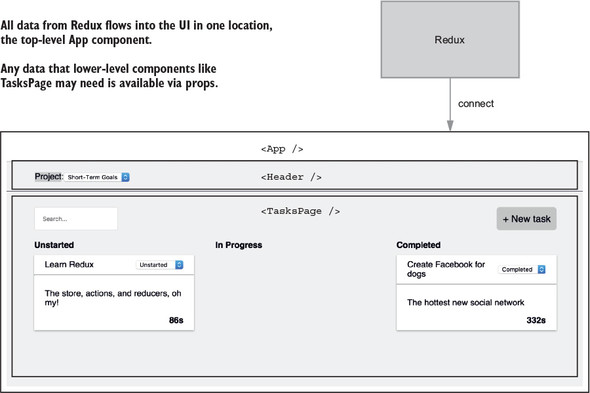

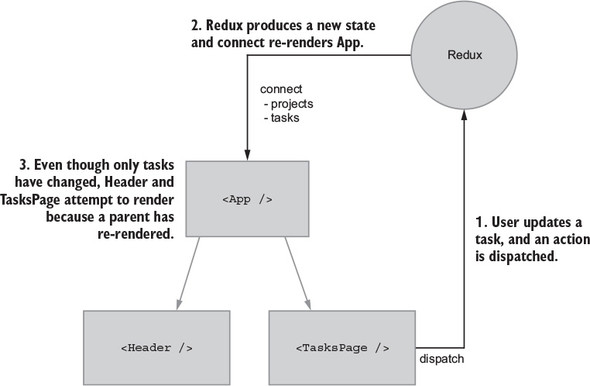

10.3.1. Connecting the right components

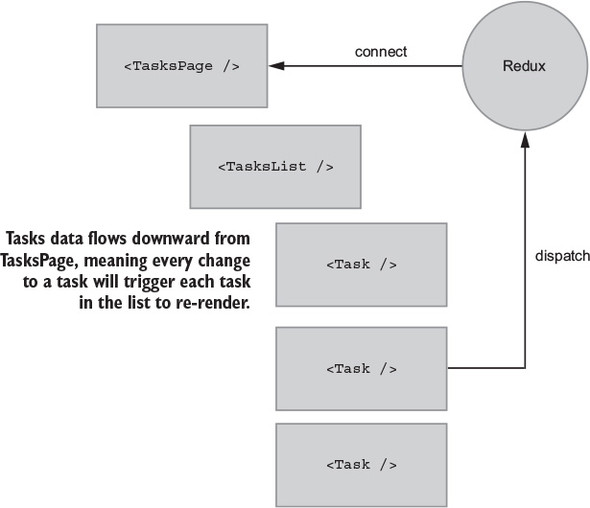

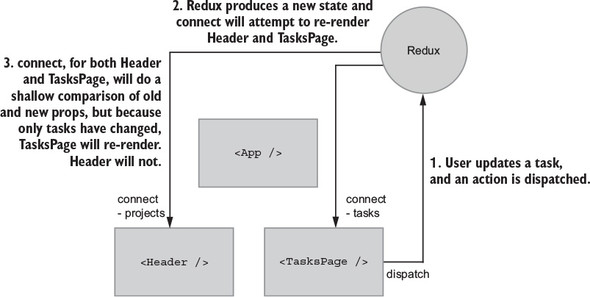

10.3.3. Connecting additional components to Redux

10.3.4. Adding connect to Header and TasksPage

10.3.5. mapStateToProps and memoized selectors

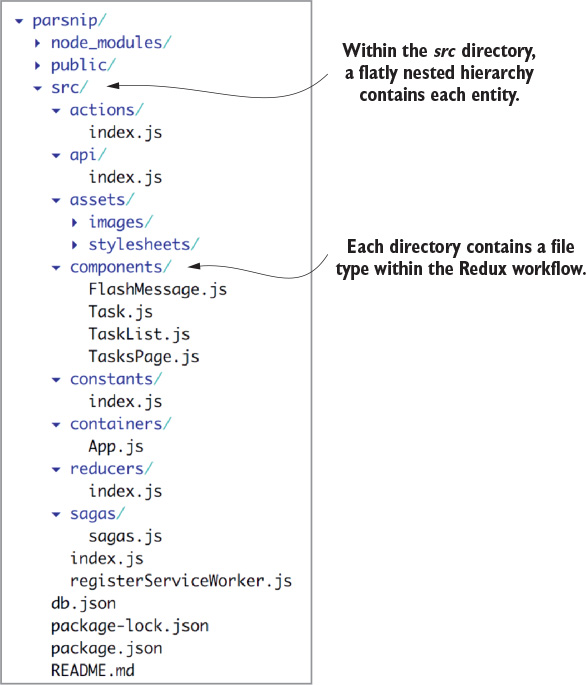

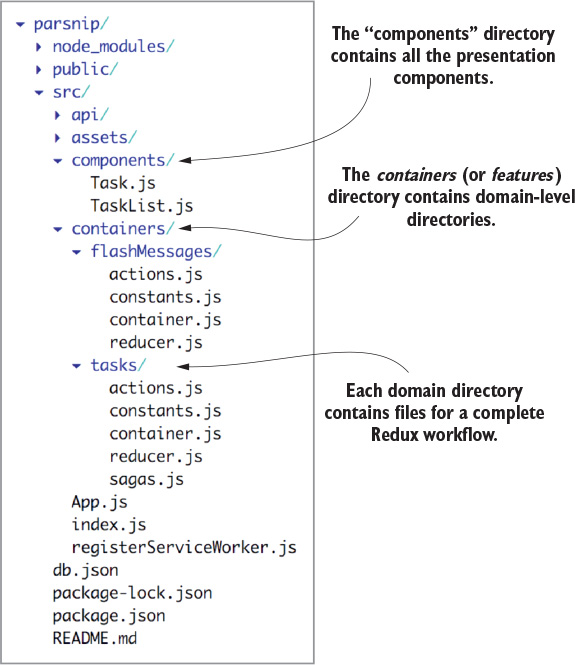

Chapter 11. Structuring Redux code

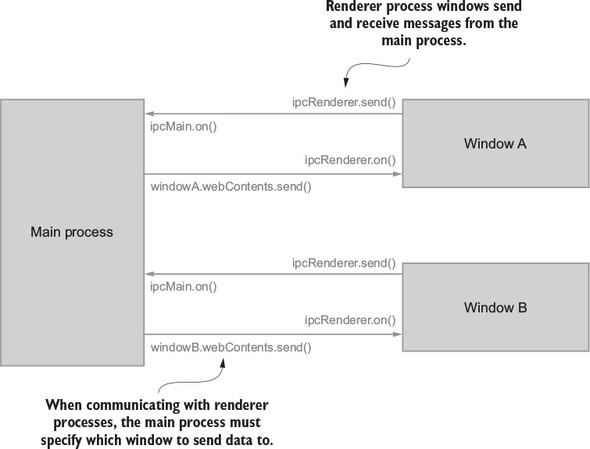

Chapter 12. Redux beyond React

12.1. Mobile Redux: React Native

Since its release in mid-2015, Redux has captured the attention of the JavaScript world. From its humble beginnings as a proof-of-concept for a conference demo and label as “just another Flux implementation,” it’s grown to become the most widely used state management solution for React applications. It’s also been adopted for use by the Angular, Ember, and Vue communities and inspired dozens of imitations and spinoffs.

One of my favorite quotes is, “Redux is a generic framework that provides a balance of just enough structure and just enough flexibility. As such, it provides a platform for developers to build customized state management for their use-cases, while being able to reuse things like the graphical debugger or middleware.”[1] Indeed, while Redux supplies a basic set of tools to work with and outlines a general pattern to follow for organizing your app’s update logic, it’s ultimately up to you to decide how to build your app around Redux. You lay out your app’s file structures, write the reducer logic, connect the components, and determine how much abstraction you want to use on top of Redux.

Joseph Savona, Facebook engineer (https://github.com/reactjs/redux/issues/775#issuecomment-257923575).

The learning curve for Redux can be steep at times. Functional programming and immutability are unfamiliar concepts to most developers coming from object-oriented languages. Writing yet another TodoMVC example doesn’t really showcase the benefits of Redux, or how to tackle building a “real” application. But the end benefits are worth it. The ability to clearly trace data flow in your application and understand where/when/why/how a particular piece of state changed is incredibly valuable, and good Redux usage ultimately leads to code that’s more maintainable and predictable for the long term.

I’ve spent most of my time as a Redux maintainer helping people learn Redux by answering questions, improving the docs, and writing tutorial blog posts. In the process, I’ve seen hundreds of different Redux tutorials. With that in mind, I’m extremely happy to recommend Redux in Action as one of the best resources to learn Redux.

With Redux in Action, Marc Garreau and Will Faurot have written the Redux book I wish I’d written myself. It’s comprehensive, it’s practical, and it does a great job of teaching many key topics for real-world Redux apps. I especially appreciate the way this book covers areas that don’t always have a single clear-cut answer, such as structuring a project, by laying out the pros and cons and letting the reader know this is an area where they may have to decide for themselves.

In today’s fast-moving programming world, no one book can completely capture everything there is to know about a tool. But, Redux in Action will give you a solid foundation and understanding of the fundamentals of Redux, how the pieces fit together, how to use that knowledge for real-world apps, and where to look for more information. I’m excited to see this book released and look forward to having you join the Redux community!

MARK ERIKSON

Redux co-maintainer

Redux is a curious little tool. As you’ll discover, there’s not all that much to it. You can familiarize yourself with each of its methods before you finish a cup of coffee.

Not only is Redux well-contained, but it’s also a finished product. How often do you hear that? There’s no roadmap, project manager, or Kanban board. Commits are still added to the GitHub repository, but they’re usually improvements to documentation or the official examples.

How’s that possible? You may find it helpful to think of Redux as an architecture pattern. The package you install from npm is an implementation of that pattern and it provides you with enough functionality to get your application off the ground.

The real kicker is how much you can accomplish with only those few methods. The stark truth is that the Redux pattern can completely untangle a JavaScript application, leaving behind something more predictable, intuitive, and performant. Thanks to the same pattern, the developer tools also provide unprecedented insight into an application’s state and the flow of data through it.

But what’s the catch? All software choices come with tradeoffs, and Redux is no exception. The cost is tremendous flexibility. That may sound like another advantage, but it presents interesting challenges. The Redux pattern isn’t strictly enforced by the library or any other tool, and the small package cannot hope to educate or guide the developer to use the pattern effectively itself.

In the end, it’s up to the developer to find their own way. This explains why the lines of documentation in the GitHub repository dramatically outnumber the lines of implementation code. As excellent as the official documentation is, developers typically gather context and best practices from scattered resources on and off the web: blog posts, books, tweets, videos, online courses, and so on.

The flexibility allowed by Redux also results in a rich ecosystem of add-ons: libraries for selectors, enhancers, middleware, and more. You’ll be hard-pressed to find two Redux applications using exactly the same toolset. While it’s great that each project can tailor their tools to their unique needs, this can be a source of confusion for newly introduced developers. Newcomers to Redux often find themselves staring down a challenging learning curve when they’re asked to absorb not only Redux, but also the complexity of supplemental packages layered on. This is the main reason we wanted to write the book: to distill our personal experience and knowledge from dozens of different sources into one neat, accessible package.

We believe the real value of this book will be measured by how well it guides you through the rich Redux ecosystem, one bite-sized piece at a time. This won’t be an exhaustive look at all the supplemental tooling. Instead, we’ve chosen a handful of the most popular add-ons that you’re likely to see in the wild and are robust enough to tackle any client project. With that, happy reading! We’re grateful you’ve chosen to spend your time with us.

Writing a book is quite the undertaking. There are so many people who were vital to the process, whether directly or indirectly, that naming them all here may require all of the 300+ pages left in the book. We stand on the shoulders of decades of giants.

A strong community is the foundation of all successful software. The Redux community is a particularly strong one, and we’re indebted to everyone who shared an approach they liked in a blog post, helped a fellow Redux user on a GitHub issue, or answered a question on any of the many online platforms frequented by Redux users across the globe.

First and foremost, this book wouldn’t be possible without the work of Dan Abramov and Andrew Clark, the creators of Redux. On top of spending the time to research and implement Redux, they’ve spent countless hours supporting developers over the past few years. We’d also like to thank the current maintainers of Redux, Mark Erikson and Tim Dorr. On top of regular maintenance, like responding to issues and merging code, they volunteer their time on several different platforms. Together, these folks contributed a substantial amount of research to this book, and it wouldn’t have been possible without them. Whether its weighing in on best practices, writing documentation, or providing feedback to curious developers, none of it goes unnoticed. We appreciate you.

Thanks to the entire team at Manning, including all our editors, for their guidance and support. We’d like to extend a special thank you to Ryan Burrows for his valuable feedback that helped improve the code for this book, as well as Mark Erikson for taking the time to put together a wonderful foreword. We would also like to recognize the reviewers who took the time to read and comment on our book: Alex Chittock, Clay Harris, Fabrizio Cucci, Ferit Topcu, Ian Lovell, Jeremy Lange, John Hooks, Jorge Ezequiel Bo, Jose San Leandro, Joyce Echessa, Matej Strasek, Matthew Heck, Maura Wilder, Michael Stevens, Pardo David, Rebecca Peltz, Ryan Burrows, Ryan Huber, Thomas Overby Hansen, and Vasile Boris. Thanks to all of you.

An extra-special thank you goes to our MEAP readers and forum participants. Your feedback and encouragement were crucial to the development of the book.

Thanks first to my wife, Becky, who made the ultimate sacrifice: living with someone who’s writing a book. I promise that I will probably not write another. Thank you to my family for mirroring my excitement, even if I were writing a book about slugs. Thanks to my friends for inspiring me, helping me combat imposter syndrome, and providing healthy distractions. More thanks to Jeff Casimir, Jorge Téllez, Steve Kinney, Rachel Warbelow, Josh Cheek, and Horace Williams for opening doors for Will and me in this industry. Thank you to Ingrid Alongi and Chris McAvoy for modeling empathic technical leadership in my career. Finally, thank you to my early JavaScript mentors, and particularly Michael Phillips, for imparting not only tolerance, but also enthusiasm for the technology.

Thank you first and foremost to my parents. I wouldn’t have made it without your guidance, enthusiasm, and encouragement. You helped me realize that something like this was even possible. You taught me how to believe in myself. Thank you.

Thank you to my family for all your support and love.

Thanks to everyone at Instacart who helped by giving feedback or by talking over ideas. Special thanks to Dominic Cocchiarella and Jon Hsieh.

Finally, thanks to Lovisa Svallingson, Alan Smith, Allison Larson, Gray Gilmore, Tan Doan, Hilary Denton, Andrew Watkins, Krista Nelson, and Regan Kuchan, who all provided invaluable feedback and encouragement throughout the writing process. You’re the best friends and software confidants I could ask for.

Redux is a state management library, designed to make building complicated user interfaces easier. It’s most commonly used with React, which we pair it with in this book, but it’s also becoming popular with other front end libraries such as Angular.

In 2015, the React ecosystem sorely needed Redux to come along. Predating Redux, the Flux architecture pattern was an exciting breakthrough, and React developers around the world tried their hand at an implementation. Dozens of libraries received noteworthy attention and use. Eventually, the excitement gave way to exhaustion. The number of choices for managing state in a React application was overwhelming.

Redux immediately started to pick up steam after its release, and soon became the most recommended Flux-inspired library. Its use of a single store, focus on immutability, and amazing developer experience proved Redux to be a more simple, elegant, and intuitive solution to most of the issues facing existing Flux libraries. You still have several options for managing state in complex applications, but for those who prefer a Flux-like pattern, Redux has become the default.

This book will walk you through the fundamentals of Redux before moving on to explore the powerful developer tools. Together, we’ll work step-by-step through a task-management application, where we’ll explore real-world Redux usage with a focus on best practices. Finally, we’ll circle back to testing strategies and the various conventions for structuring your applications.

Readers should be comfortable with JavaScript (including ES2015) and have at least basic proficiency with React. We understand, though, that many developers end up getting thrown into Redux at approximately the same moment they’re being introduced to React. We’ve tried our best to accommodate those in this category, and we believe they can make their way through this book with a little extra effort. However, our official recommendation is to gain a strong foundation in React prior to reading this book. If you haven’t done any React development, consider the Manning titles React Quickly (https://www.manning.com/books/react-quickly) or React in Action (https://www.manning.com/books/react-in-action).

This book includes 12 chapters and an appendix.

Chapter 1 introduces the landscape that Redux was born into and why it was created. You’ll learn what Redux is, what it’s used for, and when not to use it. The chapter wraps up with several state management alternatives to Redux.

Chapter 2 jumps headlong into your first React and Redux application. It’s a whirlwind tour of a typical workflow used to create new features. You’ll get a good high-level view of each of the actors involved: actions, reducers, the store, and so on.

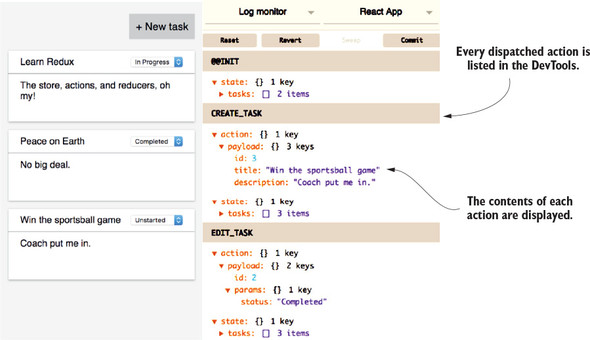

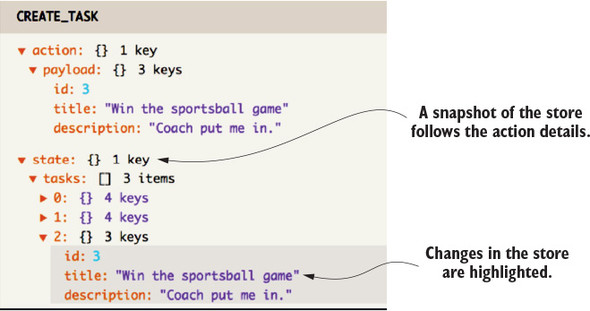

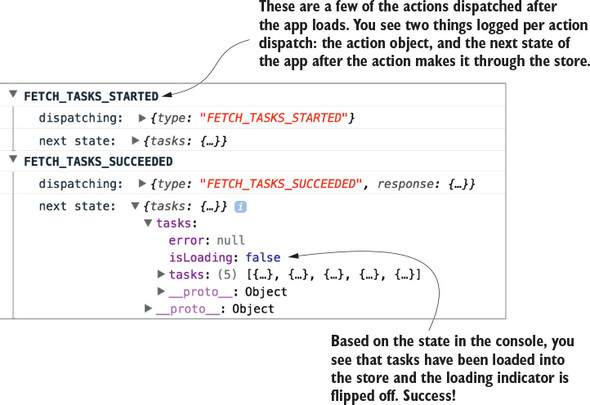

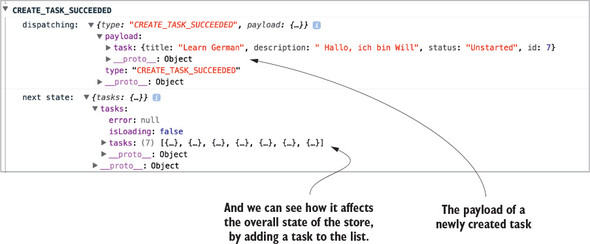

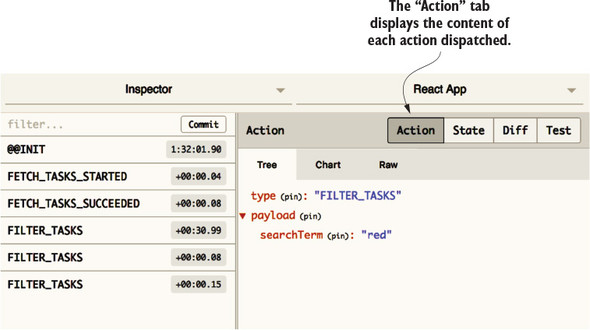

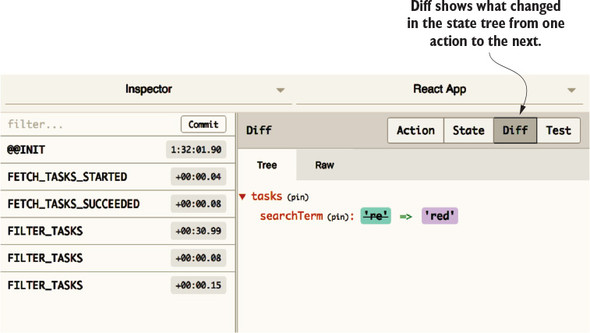

Chapter 3 takes a step back to introduce the high-powered Redux DevTools. The developer tools are one of the biggest selling points for using Redux, and this chapter demonstrates why.

Chapter 4 finally introduces side effects to the example started in chapter 2. You’ll set up a local server and handle API requests within the Redux pattern.

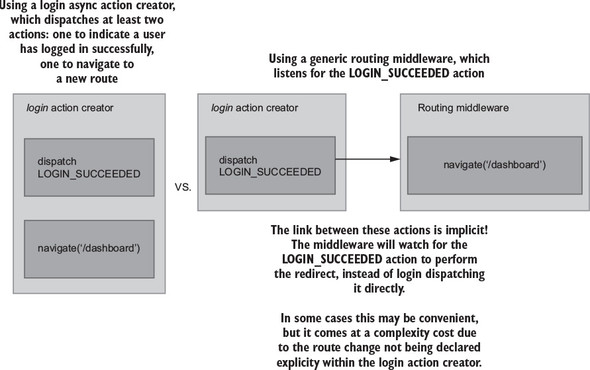

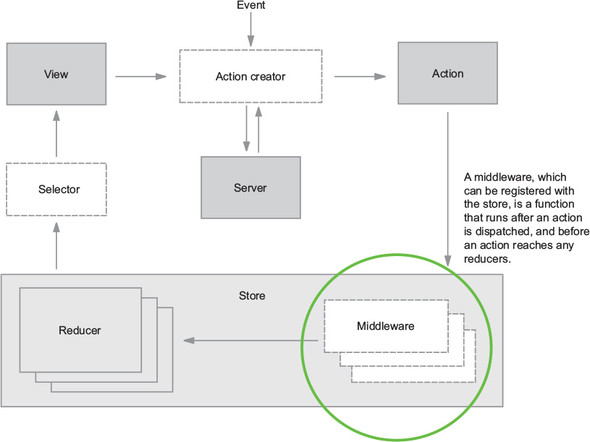

Chapter 5 dives into a more advanced feature: middleware. You’ll learn where middleware sits in the stack, what it’s capable of, and how to build custom middleware of your own.

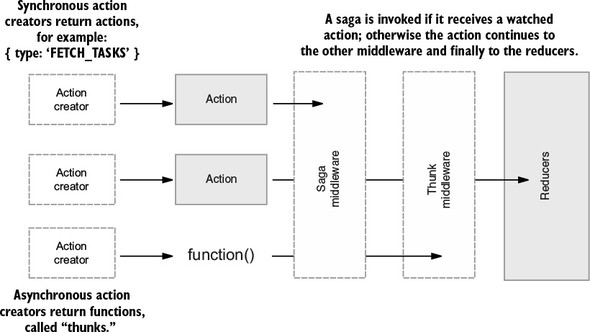

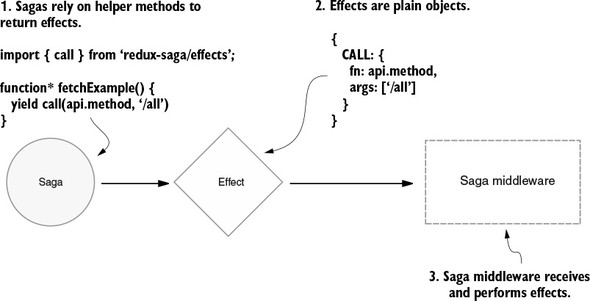

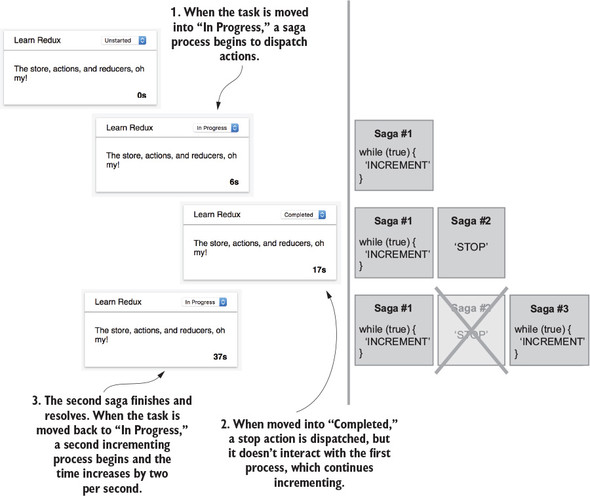

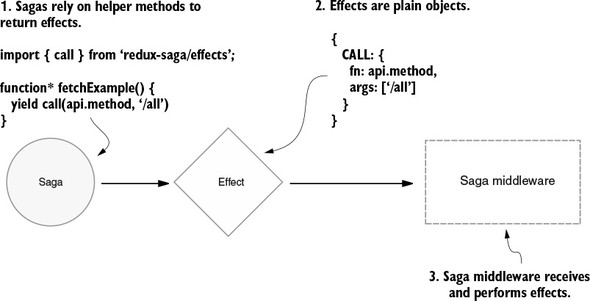

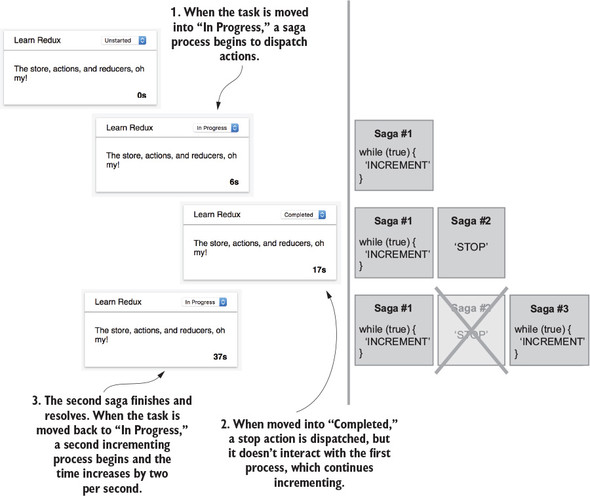

Chapter 6 explores an advanced pattern for handling more complex side effects. You’ll learn how to leverage ES6 generator functions; then you’ll learn how to use sagas to manage long-running processes.

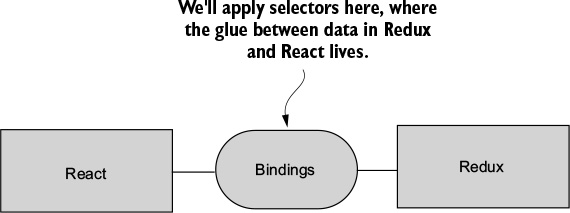

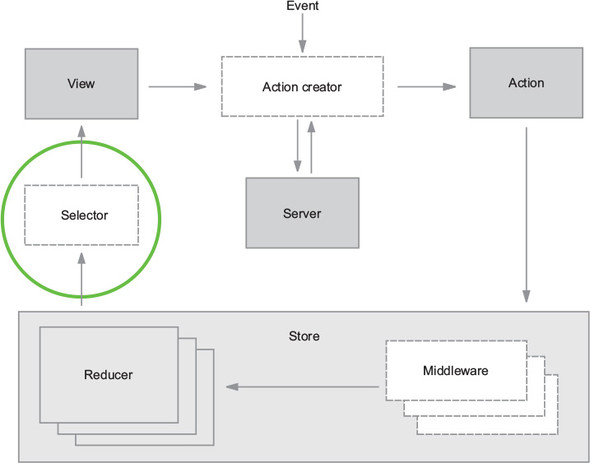

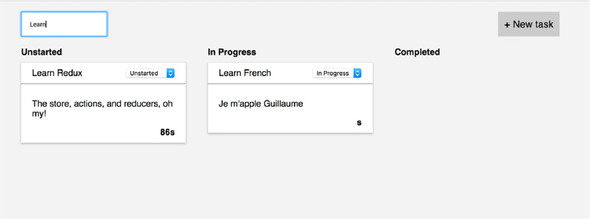

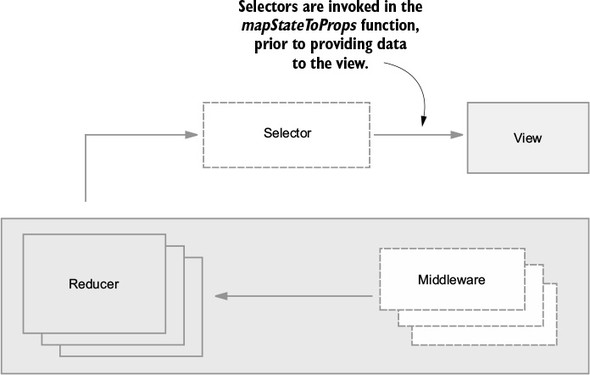

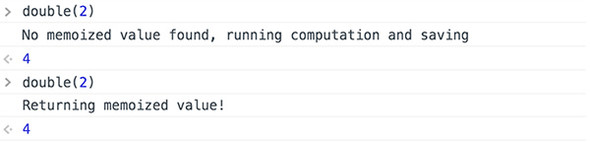

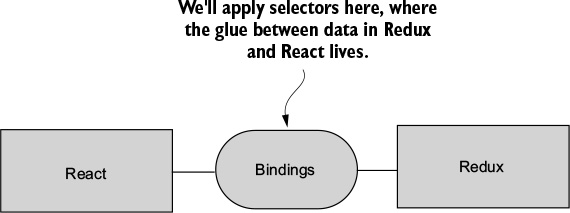

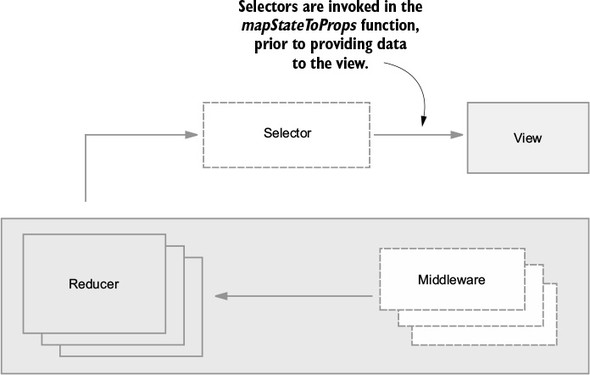

Chapter 7 puts the spotlight on the connection between the Redux store and your views. You’ll learn how selector functions work, then implement a robust solution using the reselect library.

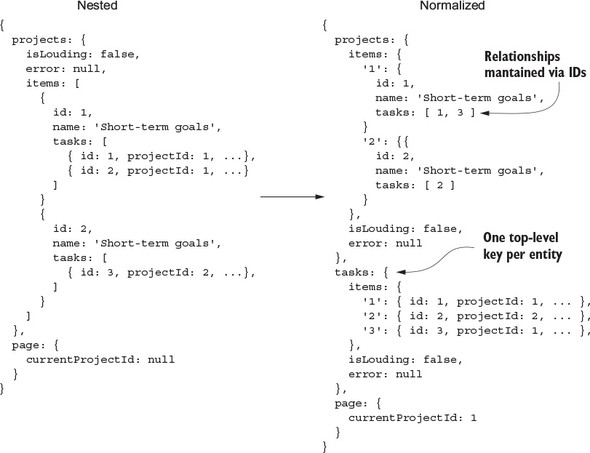

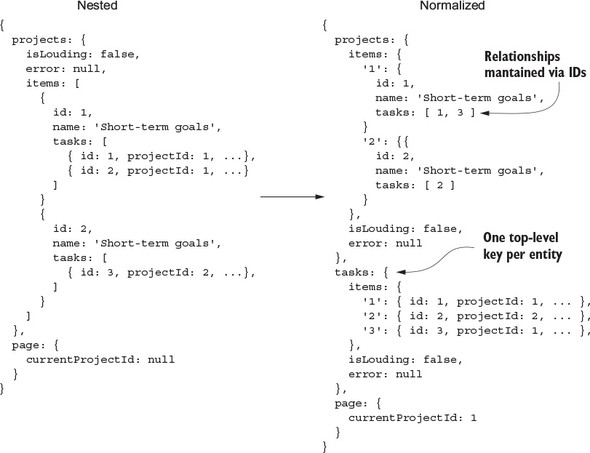

Chapter 8 addresses the common question as to how best to structure data in a Redux store. You’ll reflect on the strategy used up to this point in the book, then explore an alternative approach: normalization.

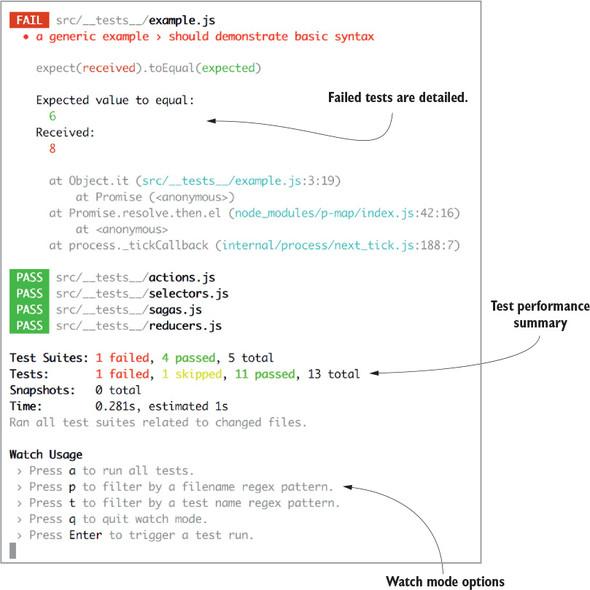

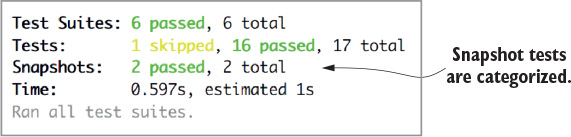

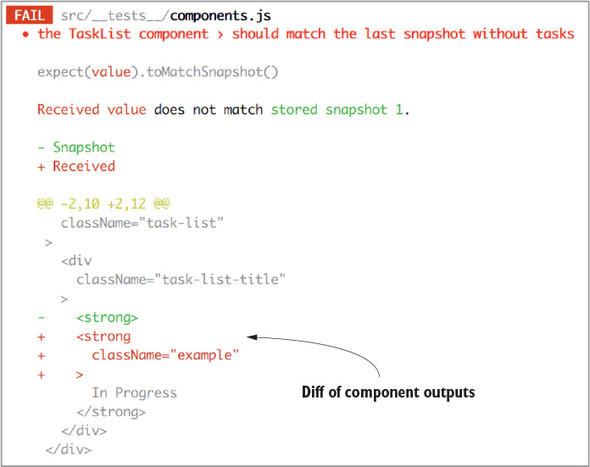

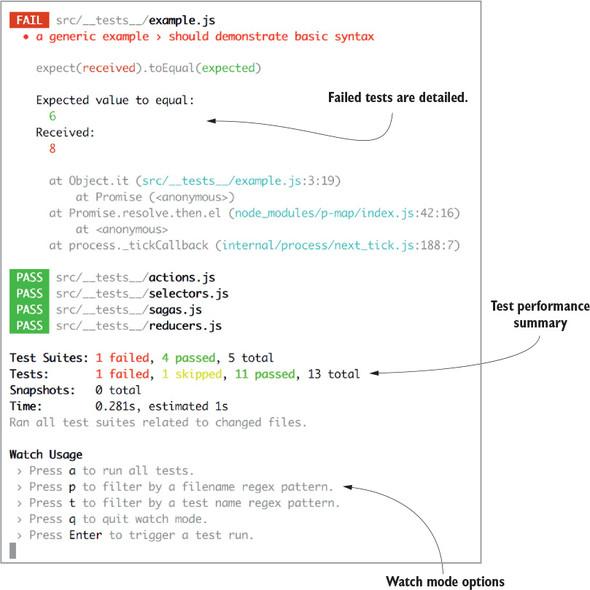

Chapter 9 circles back to cover all things testing. You’ll learn about popular testing tools such as Jest and Enzyme, as well as strategies for testing Redux actions, reducers, selectors, and much more.

Chapter 10 is all about keeping your application lean and mean. It covers performance profiling tools, React best practices, and Redux-specific strategies for boosting performance.

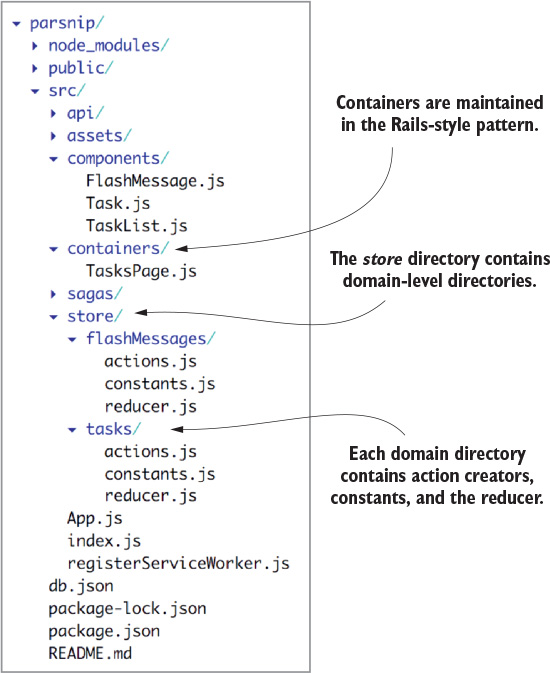

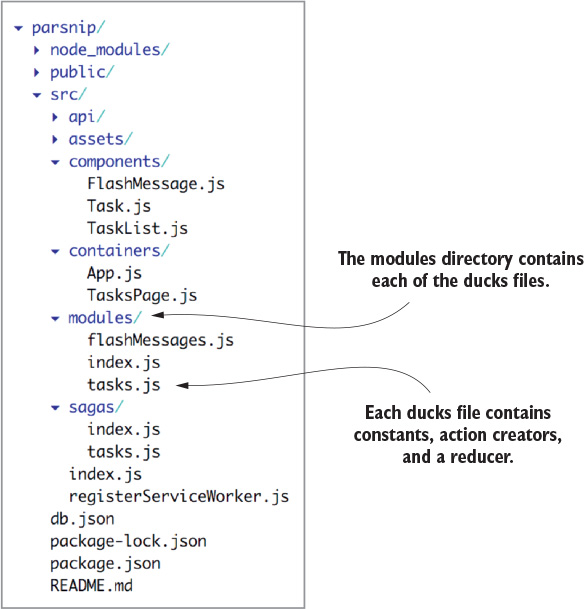

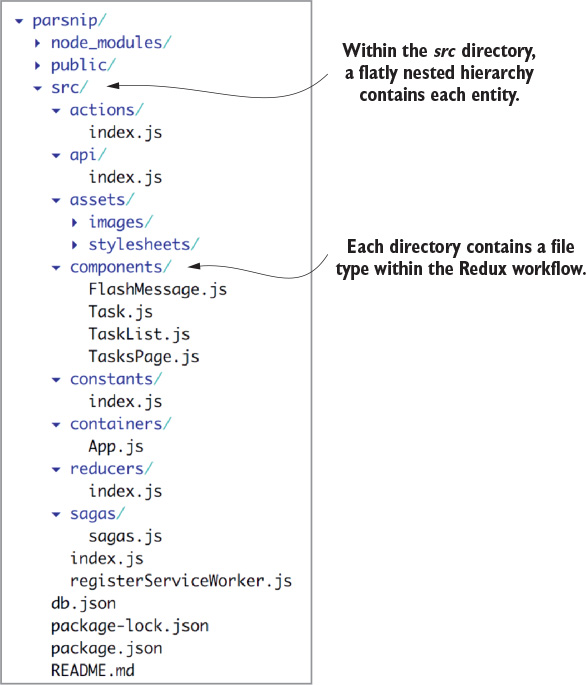

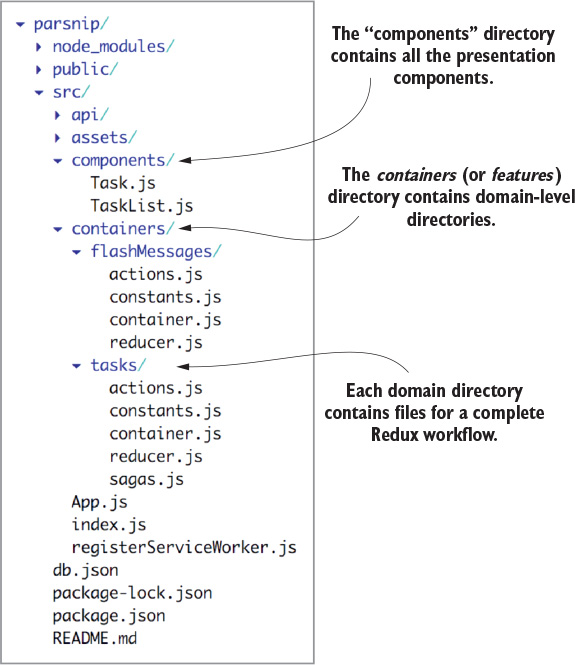

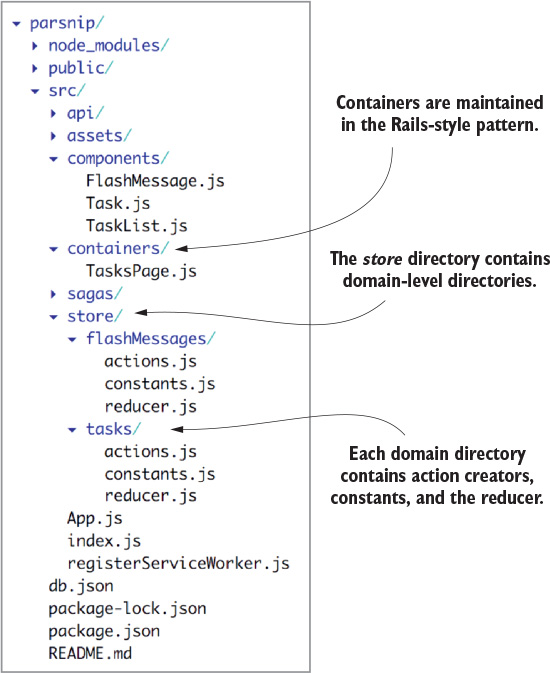

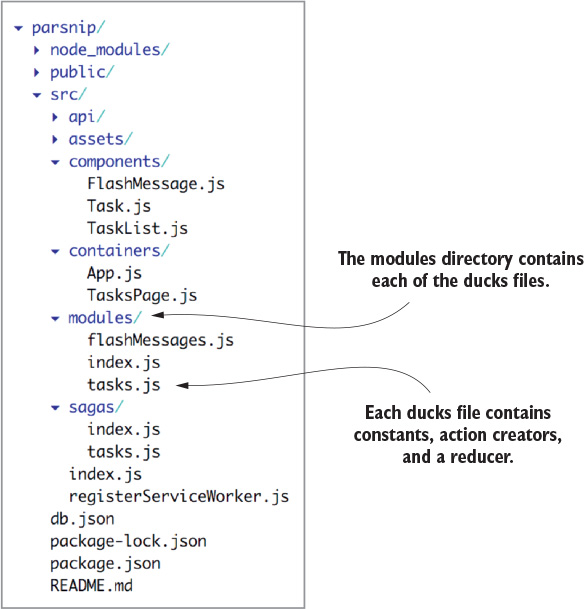

Chapter 11 covers several strategies for organizing your Redux application. Redux doesn’t mind where you put things, so you’ll learn popular conventions that have been established.

Chapter 12 reminds you that Redux can manage the state of more than a React web application. You’ll get a quick tour of the role Redux can play in mobile, desktop, and other web application environments.

The appendix provides instructions for environment setup and tool installation. The book will direct you to the appendix at appropriate points.

Most of the code examples are for Parsnip, the book’s example application. These examples are included as numbered listings, many of which are annotated to provide clarity and reasoning behind certain code choices. Code examples directly in the text can be identified by a fixed-width typeface, like this.

Source code for the examples in the book can be downloaded from the publisher’s website at https://www.manning.com/books/redux-in-action or at https://github.com/wfro/parsnip.

One-step install scripts are available for OSX, Linux, and Windows, and are available along with the rest of the book’s source code. See the appendix for instructions on getting started.

Most of the code examples, especially those related to the example application, require a web browser. We recommend Chrome, which will work seamlessly with React and Redux developer tools.

We bootstrapped the example application with create-react-app, which isn’t strictly required, but highly recommended. It’s the most painless way to set up a modern React development environment.

We used the following Create React App and Redux versions:

Purchase of Redux in Action includes free access to a private web forum run by Manning Publications where you can make comments about the book, ask technical questions, and receive help from the authors and from other users. To access the forum, go to https://forums.manning.com/forums/redux-in-action. You can also learn more about Manning’s forums and the rules of conduct at https://forums.manning.com/forums/about.

Manning’s commitment to our readers is to provide a venue where a meaningful dialogue between individual readers and between readers and the authors can take place. It isn’t a commitment to any specific amount of participation on the part of the authors, whose contribution to the forum remains voluntary (and unpaid). We suggest you try asking the authors challenging questions lest their interest stray! The forum and the archives of previous discussions will be accessible from the publisher’s website as long as the book is in print.

The Redux community is incredibly active on several different platforms. We recommend all the following resources to learn more, help solidify concepts, and ask questions:

MARC GARREAU is a developer at the Ethereum Foundation on the Mist core team, where he wrangles application state in the Mist browser. Previously, he architected and executed applications using Redux at consultancies Cognizant and Quick Left. He’s written a number of popular Redux blog posts and has spoken at several JavaScript meetups in the Denver area.

WILL FAUROT is a full stack developer at Instacart, where he works on various consumer-facing products. A lover of all things front end, he specializes in building complex user interfaces with React and Redux. In past lives he taught tennis professionally and recorded old-time and bluegrass music. If you listen closely on a quiet night in the Bay Area, you may hear him plucking a few banjo strings.

The figure on the cover of Redux in Action is captioned “Habit of a Moorish Woman in 1695.” The illustration is taken from Thomas Jefferys’ A Collection of the Dresses of Different Nations, Ancient and Modern (four volumes), London, published between 1757 and 1772. The title page states that these are hand-colored copperplate engravings, heightened with gum arabic. Thomas Jefferys (1719–1771) was called “Geographer to King George III.” He was an English cartographer who was the leading map supplier of his day. He engraved and printed maps for government and other official bodies and produced a wide range of commercial maps and atlases, especially of North America. His work as a map maker sparked an interest in local dress customs of the lands he surveyed and mapped, which are brilliantly displayed in this collection.

Fascination with faraway lands and travel for pleasure were relatively new phenomena in the late 18th century and collections such as this one were popular, introducing both the tourist as well as the armchair traveler to the inhabitants of other countries. The diversity of the drawings in Jefferys’ volumes speaks vividly of the uniqueness and individuality of the world’s nations some 200 years ago. Dress codes have changed since then and the diversity by region and country, so rich at the time, has faded away. It is now often hard to tell the inhabitant of one continent from another. Perhaps, trying to view it optimistically, we have traded a cultural and visual diversity for a more varied personal life. Or a more varied and interesting intellectual and technical life.

At a time when it is hard to tell one computer book from another, Manning celebrates the inventiveness and initiative of the computer business with book covers based on the rich diversity of regional life of two centuries ago, brought back to life by Jeffreys’ pictures.

If you hop into any React web application in 2018, there’s a good chance you’ll find Redux there to manage its state. It’s remarkable that we reached this place so quickly, though. A few years ago, Redux had yet to be created and React enjoyed an excited and blossoming user base. Early adopters of React believed that they’d found the best solution yet to the view layer—the “V” of the MVC (Model-View-Controller) front-end framework puzzle. What they couldn’t agree on was how to manage the state of those applications once they became the size and complexity that the real world demands. Eventually, Redux settled the debate.

Throughout the course of this book, we’ll explore Redux and its ecosystem through the lens of a React application. As you’ll learn, Redux can be plugged into JavaScript applications of all flavors, but React is an ideal playground for a few reasons. Chief among those reasons: Redux was created in the context of React. You’re most likely to encounter Redux within a React application, and React is agnostic about how you manage the data layer of your application. Without further ado, let’s jump in.

React components have the concept of local, or component, state. Within any given component, you can keep track of the value of an input field or whether a button has been toggled, for example. Local state makes easy work of managing a single component’s behavior. However, today’s single-page applications often require synchronizing a complex web of state. Nested levels of components may render a different user experience based on the pages a user has already visited, the status of an AJAX request, or whether a user is logged in.

Let’s consider a use case involving the authentication status of a user. Your product manager tells you that when a user is logged into an ecommerce store, the navigation bar should display the user’s avatar image, the store should display items nearest to the user’s zip code first, and the newsletter signup form should be hidden. Within a vanilla React architecture, your options are limited for syncing state across each of the components. In the end, you’ll likely end up passing the authentication status and additional user data from one top-level component down to each of these nested components.

This architecture has several disadvantages. Along the way, data may filter through components that have no use for it other than to pass the data on to their children. In a large application, this can result in tons of data moving through unrelated components, passed down via props or passed up using callbacks. It’s likely that a small number of components at the top of the application end up with an awareness of most of the state used throughout the entire application. At a certain scale, maintaining and testing this code becomes untenable. Because React wasn’t intended to solve the same breadth of problems that other MVC frameworks attempted to address, an opportunity existed to bridge those gaps.

With React in mind, Facebook eventually introduced Flux, an architecture pattern for web applications. Flux became tremendously influential in the world of front-end development and began a shift in how we thought about state management in client-side applications. Facebook offered its own implementation of this pattern, but soon more than a dozen Flux-inspired state management libraries emerged and competed for React developers’ attention.

This was a tumultuous time for React developers looking to scale an application. We saw the light with Flux but continued to experiment to find more elegant ways to manage complex state in applications. For a time, newcomers encountered a paradox of choice; a divided community effort had produced so many options, it was anxiety-inducing. To our surprise and delight, though, the dust is already settling and Redux has emerged as a clear winner.

Redux took the React world by storm with a simple premise, a big payoff, and a memorable introduction. The premise is to store your entire application state in a single object using pure functions. The payoff is a totally predictable application state. The introduction, for most early users, came in Dan Abramov’s 2015 React Europe conference talk, titled “Live React: Hot Reloading with Time Travel.” Dan wowed attendees by demonstrating a Redux developer experience that blew established workflows out of the water. A technique called hot loading makes live application updates while maintaining existing state, and his nascent Redux developer tools enable you to time travel through application state—rewinding and replaying user actions with a single click. The combined effect offers developers debugging super powers, which we’ll explain in detail in chapter 3.

To understand Redux, we’d first like to properly introduce you to Flux, the architecture pattern developed at Facebook and credited to Jing Chen. Redux and many of its alternatives are variations of this Flux architecture.

Flux is foremost an architecture pattern. It was developed as an alternative to the prevailing MVC JavaScript patterns popularized by incumbent frameworks, such as Backbone, Angular, or Ember. Although each framework puts its own spin on the MVC pattern, many share similar frustrations: generally, the flow of data between models, views, and controllers can be difficult to follow.

Many of these frameworks use two-way data binding, in which changes to the views update corresponding models, and changes in the models update corresponding views. When any given view can update one or more models, which in turn can update more views, you can’t be blamed for losing track of the expected outcome at a certain scale. Chen contested that although MVC frameworks work well for smaller applications, the two-way data-binding models that many of them employ don’t scale well enough for the size of Facebook’s application. Developers at the company became apprehensive of making changes, for fear of the tangled web of dependencies producing unintended consequences.

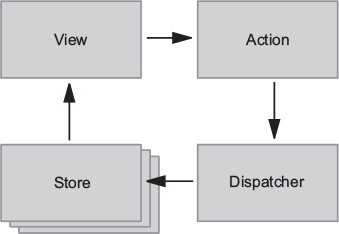

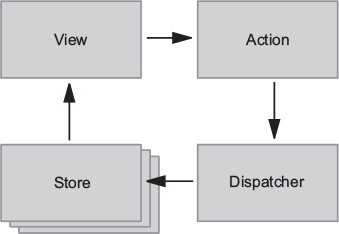

Flux sought to address the unpredictability of state and the fragility of a tightly coupled model and view architecture. Chen scrapped the two-way data-binding model in favor of a unidirectional data flow. Instead of permitting each view to interact with its corresponding models, Flux requires all changes to state to follow a single path. When a user clicks a Submit button on a form, for example, an action is sent to the application’s one and only dispatcher. The dispatcher will then send the data through to the appropriate data stores for updating. Once updated, the views will become aware of the new data to render. Figure 1.1 illustrates this unidirectional data flow.

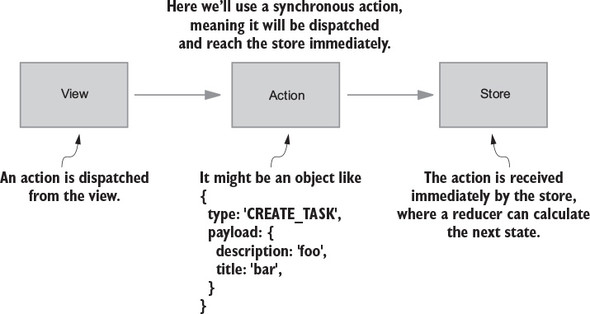

Every change to state starts with an action (figure 1.1). An action is a JavaScript object describing an event in your application. They’re typically generated by either a user interaction or by a server event, such as an HTTP response.

All data flow in a Flux application is funneled through a single dispatcher. The dispatcher itself has little functionality, because its purpose is to receive all actions and send them to each store that has been registered. Every action will be sent to every store.

Each store manages the state of one domain within an application. In an ecommerce site, you may expect to find a shopping cart store and a product store, for example. Once a store is registered with the dispatcher, it begins to receive actions. When it receives an action type that it cares about, the store updates accordingly. Once a change to the store is made, an event is broadcast to let the views know to update using the new state.

Flux may have been designed with React in mind, but the views aren’t required to be React components. For their part, the views need only subscribe to the stores from which they want to display data. The Flux documentation encourages the use of the controller-view pattern, whereby a top-level component handles communication with the stores and passes data to child components. Having both a parent and a nested child component communicating with stores can lead to extra renders and unintended side-effects.

Again, Flux is an architecture pattern first. The Facebook team maintains one simple implementation of this pattern, aptly (or confusingly, depending on your perspective) named Flux. Many alternative implementations have emerged since 2014, including Alt, Reflux, and Redux. A more comprehensive list of these alternative implementations can be found in section 1.6.

We can’t put it much better than the official docs: “Redux is a predictable state container for JavaScript applications” (https://redux.js.org/). It’s a standalone library, but it’s used most often as a state management layer with React. Like Flux, its major goal is to bring consistency and predictability to the data in applications. Redux divides the responsibilities of state management into a few separate units:

Technically speaking, Redux may not qualify as a Flux implementation. It nontrivially deviates from several of the components of the prescribed Flux architecture, such as the removal of the dispatcher altogether. Ultimately though, Redux is Flux-like and the distinction is a matter of semantics.

Redux enjoys the benefits of a predictable data flow from the Flux architecture, but it has also found ways to alleviate the uncertainty of store callback registrations. As alluded to in the previous section, it can be a pain to reconcile the state of multiple Flux stores. Redux, instead, prescribes a single store to manage the state of an entire application. You’ll learn more about how this works and what the implications are in the coming sections.

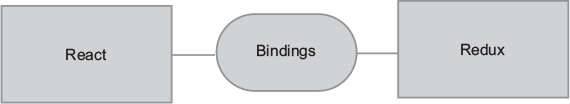

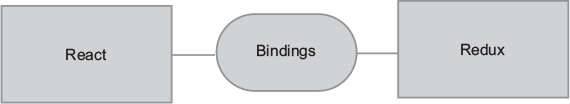

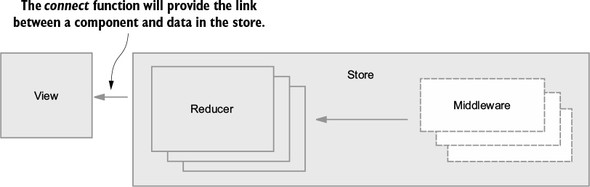

Although Redux was designed and developed in the context of React, the two libraries are completely decoupled. React and Redux are connected using bindings, as shown in figure 1.2.

It turns out that the Redux paradigm for state management can be implemented alongside most JavaScript frameworks. Bindings exist for Angular, Backbone, Ember, and many more technologies.

Although this book is fundamentally about Redux, our treatment of it is closely tied to React. Redux is a small, standalone library, but it fits particularly well with React components. Redux will help you define what your application does; React will handle how your application looks.

Most of the code we’ll write over the course of the book, not to mention most of the React/Redux code you’ll write period, will fall into a few categories:

You’ll find that React is a natural ecosystem for Redux. While React has mechanisms to manage state directly in components, the door is wide open for Redux to come in and manage the greater application state. If you’re interested in an alternative ecosystem, chapter 12 explores the relationship between Redux and several other JavaScript frameworks.

You have covered substantial ground by grokking that state in Redux is represented by a single source of truth, is read-only, and changes to it must be made with pure functions.

Unlike the various domain stores prescribed by the Flux architecture, Redux manages an entire application’s state in one object, inside one store. The use of a single store has important implications. The ability to represent the entire application state in a single object simplifies the developer experience; it’s dramatically easier to think through the application flow, predict the outcome of new actions, and debug issues produced by any given action. The potential for time-travel debugging, or the ability to flip back and forth through snapshots of application state, is what inspired the creation of Redux in the first place.

Like Flux, actions are the only way to initiate changes in application state. No stray AJAX call can produce a change in state without being communicated via an action. Redux differs from many Flux implementations, though, in that these actions don’t result in a mutation of the data in the store. Instead, each action results in a shiny, new instance of the state to replace the current one. More on that subject in the next section.

Actions are received by reducers. It’s important that these reducers be pure functions. Pure functions are deterministic; they always produce the same output given the same inputs, and they don’t mutate any data in the process. If a reducer mutates the existing state while producing the new one, you may end up with an erroneous new state, but you also lose the predictable transaction log that each new action should provide. The Redux developer tools and other features, such as undo and redo functionality, rely on application state being computed by pure functions.

We’ve touched briefly upon topics such as actions, reducers, and the store, but in this section, we cover each in more depth. What’s important to take away here is the role that each element plays and how they work together to produce a desired result. For now, don’t worry about finer implementation details, because you’ll have plenty of time in later chapters to apply the concepts you’re about to explore.

Modern web applications are ultimately about handling events. They could be initiated by a user, such as navigating to a new page or submitting a form. Or they could be initiated by another external source, such as a server response. Responding to events usually involves updating state and re-rendering with that updated state. The more your application does, the more state you need to track and update. Combine this with the fact that most of these events occur asynchronously, and you suddenly have real obstacles to maintaining an application at scale.

Redux exists to create structure around how you handle events and manage state in your application, hopefully making you a more productive and happy human in the process.

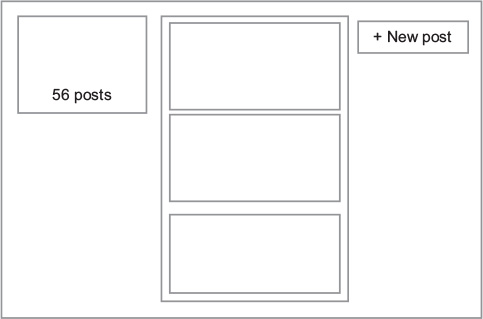

Let’s look at how to handle a single event in an application using Redux and React. Say you were tasked with implementing one of the core features of a social network—adding a post to your activity feed. Figure 1.3 shows a quick mockup of a user profile page, which may or may not take its inspiration from Twitter.

The following distinct steps are involved in handling an event such as a new post:

Sounds reasonable, right? If you’ve used React before, you’ve likely implemented features similar to this directly in components. Redux takes a different approach. Code to satisfy the three tasks is moved out of React components into a few separate entities. You’re already familiar with the View in figure 1.4, but we’re excited to introduce a new cast of characters you’ll hopefully learn to love.

You want to do two things in response to a user submitting a new post: add the post to the user’s activity feed and increment their total post count. After the user submits, you’ll kick off the process by dispatching an action. Actions are plain old JavaScript objects that represent an event in your application, as follows:

{

type: 'CREATE_POST',

payload: {

body: 'All that is gold does not glitter'

}

}

Let’s break that down. You have an object with two properties:

Actions have the advantage of serving as audits, which keep a historical record of everything happening in your application, including any data needed to complete a transaction. It’s hard to understate how valuable this is in maintaining a grasp on a complex application. Once you get used to having a highly readable stream describing the behavior of your application in real time, you’ll find it hard to live without.

Throughout the book, we’ll frequently come back to this idea of what versus how. You can think of Redux as decoupling what happens in an application from how we respond to an event. Actions handle the what in this equation. They describe an event; they don’t know and don’t care what happens downstream. Somewhere down the road you’ll eventually have to specify how to handle an action. Sounds like a job fit for a reducer!

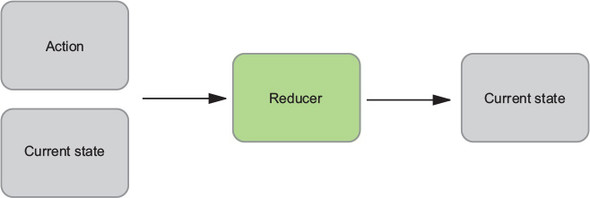

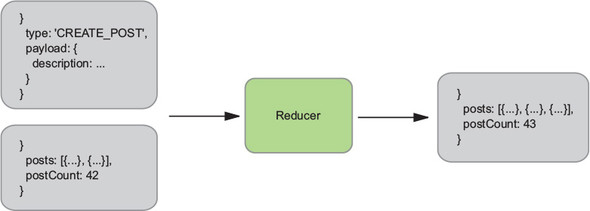

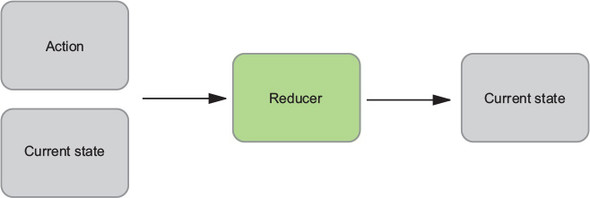

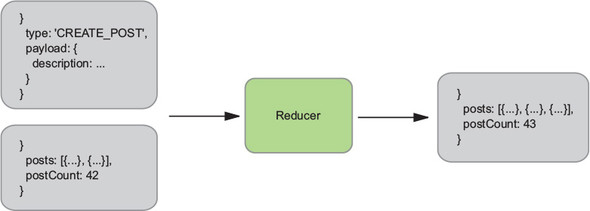

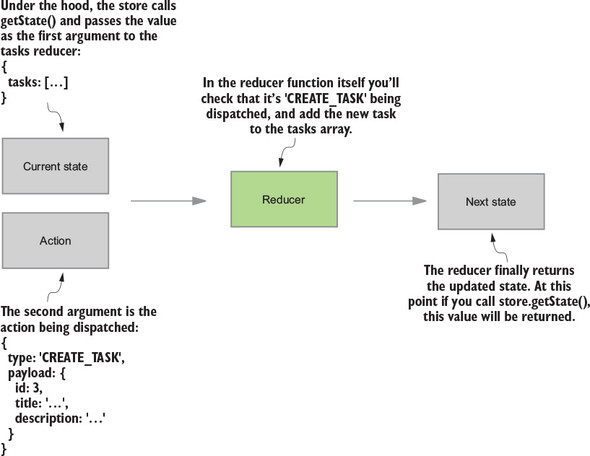

Reducers are functions responsible for updating your state in response to actions. They’re simple functions that take your current state and an action as arguments, and return the next state. See figure 1.5.

Reducers are typically easy to work with. Similar to all pure functions, they produce no side effects. They don’t affect the outside world in any way, and they’re referentially transparent. The same inputs will always yield the same return value. This makes them particularly easy to test. Given certain inputs, you can verify that you receive the expected result. Figure 1.6 shows how our reducer might update the list of posts and the total post count.

You’re focusing on a single event in this example, which means you need only one reducer. However, you certainly aren’t limited to only one. In fact, more sizable applications frequently implement several reducer functions, each concerned with a different slice of the state tree. These reducers are combined, or composed, into a single “root reducer.”

Reducers describe how to update state in response to an action, but they can’t modify state directly. That privilege rests solely with the store.

In Redux, application state is stored in a single object. The store has a few main roles, which follow:

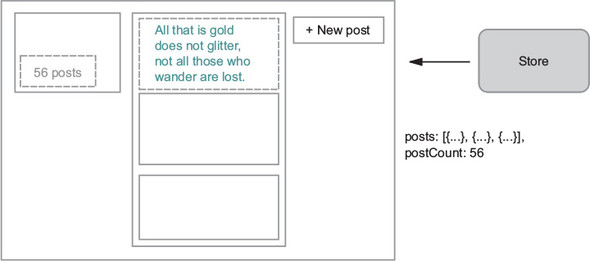

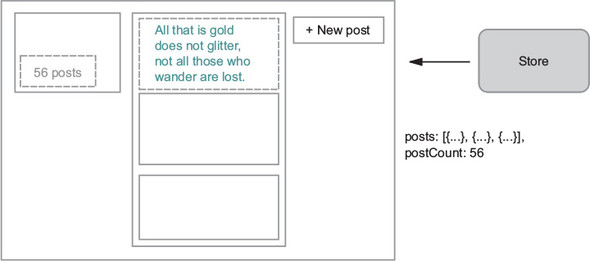

The reducer processed the action and computed the next state. Now it’s time for the store to update itself and broadcast the new state to all registered listeners (you care specifically about the components that make up your profile page). See figure 1.7.

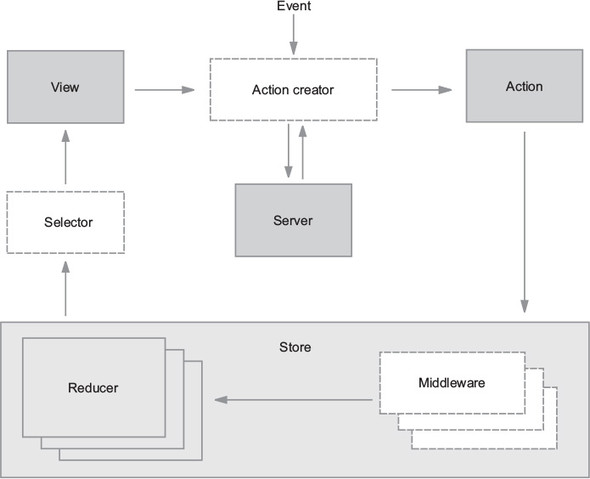

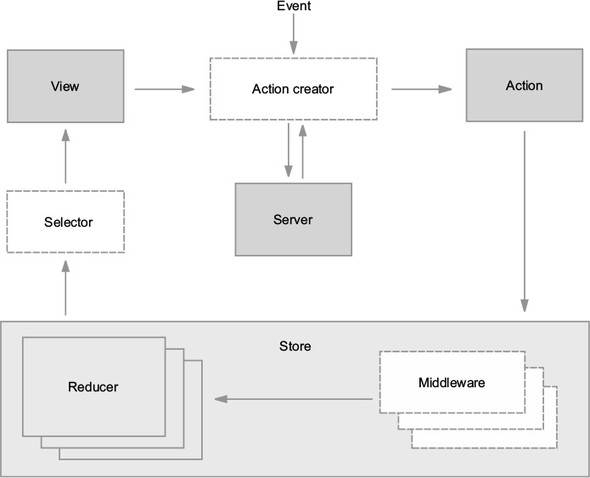

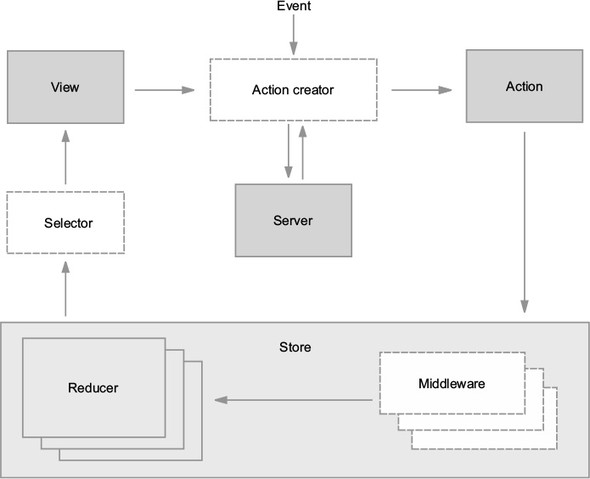

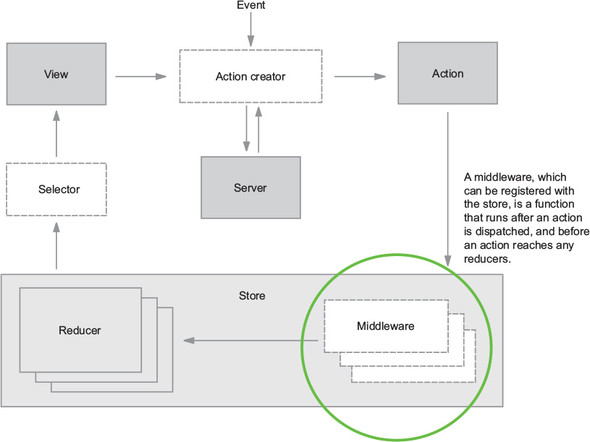

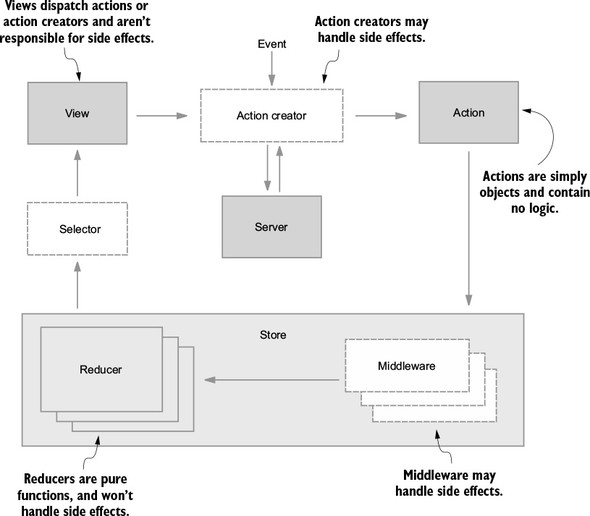

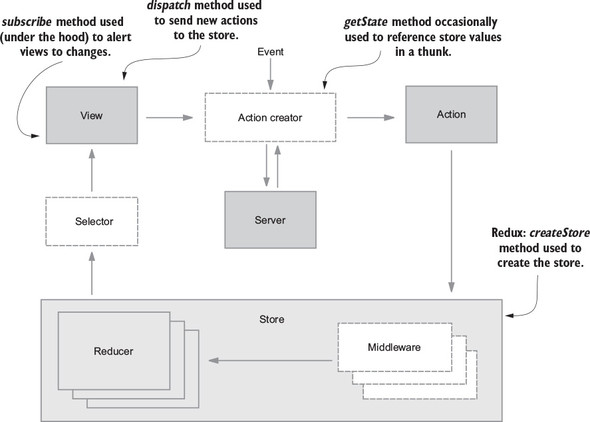

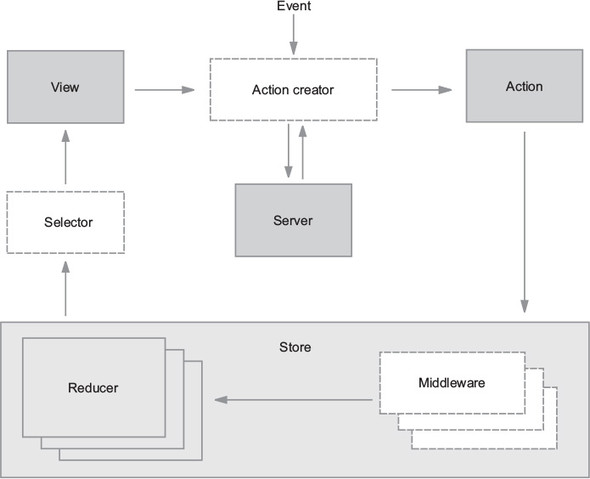

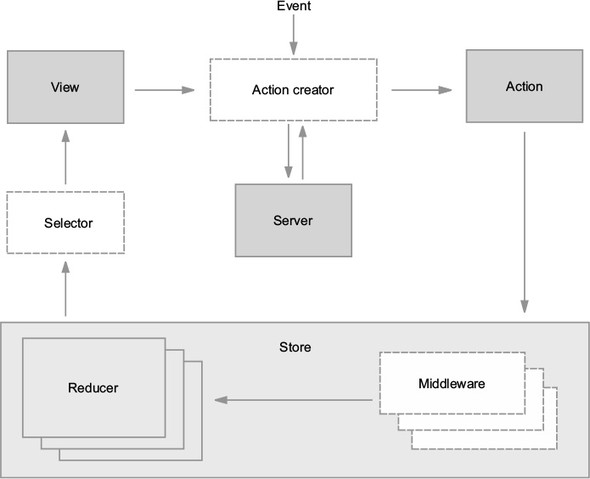

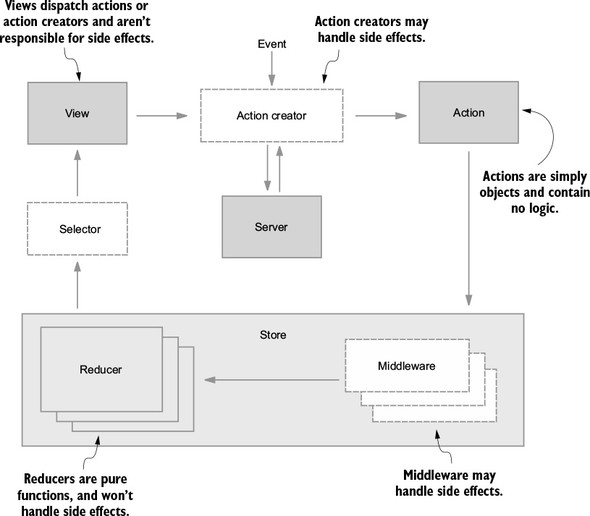

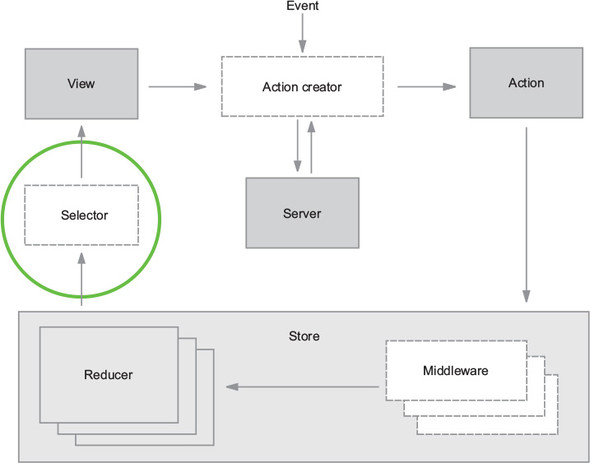

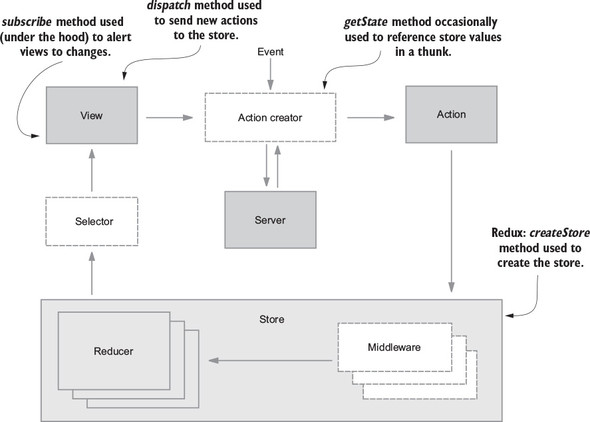

Now that you’re familiar with several of the most important building blocks, let’s look at a more comprehensive diagram of the Redux architecture. Several pieces will be unfamiliar now, but we’ll revisit this diagram (figure 1.8) repeatedly throughout this book, and over time, we’ll fill in each of those gaps.

To review, an interaction with a view may produce an action. That action will filter through one or more reducers and produce a new state tree within the store. Once the state updates, the views will be made aware that there’s new data to render. That’s the whole cycle! Items in figure 1.8 with a dashed border (action creators, middleware, and selectors) are optional, but powerful, tools in a Redux architecture. We cover each of these topics in future chapters.

If this feels like a lot, don’t fret. If you’re new to the kind of one-directional architecture that we’re beginning to explore, it can be initially overwhelming (we certainly thought so at first). It takes time to let these concepts sink in. Developing a sense for what role they play and what type of code belongs where is as much art as it is science, and it’s a skill you’ll develop over time as you continue to get your hands dirty.

By this point, you’ve been exposed to many of the Redux talking points. If you have to pitch your boss on Redux by the time you finish the first chapter, let’s consolidate those ideas into a highlight reel. In short, Redux is a small, easy-to-learn state management library that results in a highly predictable, testable, and debuggable application.

The biggest selling point for Redux is the sanity it provides to applications juggling complex state. The Redux architecture offers a straightforward way to conceptualize and manage state, one action at a time. Regardless of application size, actions within the unidirectional data flow result in predictable changes to a single store.

Predictability enables world-class debugging tools. Hot-loading and time-travel debugging provide developers with wildly faster development cycles, whether building new features or hunting down bugs. Your boss will like that you’re a happier developer, but she’ll love that you’re a faster one.

The Redux implementation code you’ll write is primarily functions, many of them pure. Each piece of the puzzle can be broken out and unit-tested in isolation with ease. Official documentation uses Jest and Enzyme, but whichever JavaScript testing libraries your organization prefers will do the trick.

Redux is a natural step up from vanilla React. The library has a remarkably small footprint, exposing only a handful of APIs to get the job done. You can become familiar with all of it in a day. Writing Redux code also requires your team to become familiar with several functional programming patterns. This will be new territory for certain developers, but the concepts are straightforward. Once you understand that changes to state can be produced only by pure functions, you’re most of the way there.

If your boss is doing her job, one of the items on her checklist is dependency size. Redux is a tiny library—under 7KB when minified. Checkmate.

We’ve hit you over the head with how great Redux is, but it’s certainly no cure-all. We’ve argued in favor of why you should use Redux, but as we all know, nothing in life is free and no software pattern exists without tradeoffs.

The cost of Redux is a fair amount of boilerplate code and the added complexity of something more than React’s local component state. It’s important to realize that Redux, and the usage patterns you establish while using it, is one more thing for a new developer on your team to learn before they can contribute.

Redux co-creator Dan Abramov weighed in here, even publishing a blog post entitled “You Might Not Need Redux.” He recommends starting without Redux and introducing the library only after you’ve reached enough state management pain points to justify including it. The recommendation is intentionally vague, because that turning point will be slightly different for every team. Smaller applications without complex data requirements are the most common scenario where it might be more appropriate to not use Redux in favor of plain React.

What might those pain points look like? Teams use a few common scenarios to justify bringing in Redux. The first is the passing of data through several layers of components that don’t have any use for it. The second scenario deals with sharing and syncing data between unrelated parts of the application. We all have a tolerance for performing these tasks in React, but eventually you have a breaking point.

Redux is likely a good fit out of the gate if you know you’ll want to build a specific feature that it excels at. If you know your application will have complex state and require undo and redo functionality, cut to the chase and pull in Redux. If server-side rendering is a requirement, consider Redux upfront.

As mentioned already, Redux entered a crowded state-management market and more options have appeared since. Let’s run through the most popular alternatives for managing state in React applications.

While researching, we stopped counting Flux implementation libraries somewhere in the low 20s. Astoundingly, at least 8 of them have received more than 1,000 stars on GitHub. This highlights an important era in React’s history—the Flux architecture was a groundbreaking idea that spurred excitement in the community and, as a result, a great deal of experimentation and growth. During this period, libraries came and went at such an exhausting rate that the term JavaScript Fatigue was coined. With hindsight, it’s clear that each of those experiments was an important stepping stone along the way. Over time, many of the alternative Flux implementation maintainers have graciously bowed out of the race in favor of Redux or one of the other popular options, but there are still several well-maintained options out there.

Flux, of course, is the one that started it all. In the maintainers’ own words, “Flux is more of a pattern than a framework.” You’ll find great documentation about the Flux architecture pattern in this repository, but a small API is exposed to facilitate building applications with the architecture. The Dispatcher is at the core of that API, and, in fact, several other Flux implementations have incorporated that Dispatcher into their libraries. Measured in GitHub stars, this library is about half as popular as Redux and continues to be actively maintained by the Facebook team.

Reflux was a fast follow to the original Flux library. The library introduces functional reactive programming ideas to the Flux architecture by ripping out the single Dispatcher in favor of giving each action the ability to dispatch itself. Callbacks can be registered with actions to update stores. Reflux is still maintained and about one-sixth as popular as Redux, measured by GitHub stars.

Unlike Reflux, Alt stays true to the original Flux ideas and uses the Flux Dispatcher. Alt’s selling points are its adherence to the Flux architecture and a reduction in boilerplate code. Although it once enjoyed an enthusiastic community, at the time of writing, there have been no commits to the project in more than six months.

To round out the bunch with greater than 1000 GitHub stars, you also have Fluxible, Fluxxor, NuclearJS, and Flummox. Fluxible continues to be well-maintained by the Yahoo team. Fluxxor, NuclearJS, and Flummox may be maintained, but are no longer active. To underscore the idea that these projects were important stepping stones, Flummox was created by Andrew Clark, who went on to co-create Redux with Dan Abramov.

MobX offers a functional reactive solution to state management. Like Flux, MobX uses actions to modify state, but components react to that mutated, or observable, state. Although part of the terminology in functional reactive programming can be intimidating, the features are approachable in practice. MobX also requires less boilerplate code than Redux but does more for you under the hood and is therefore less explicit. The first commits for MobX predate those of Redux by only a couple of months, in early 2015.

GraphQL is an exciting new technology, also being developed by the Facebook team. It’s a query language that allows you to specify and receive exactly the data that is required by a component. This paradigm fits well with the intended modularity of React components; any data fetching that’s required by the component is encapsulated within it. Queries to the API are optimized for the data needs of parent and children components.

Typically, GraphQL is used with a GraphQL client. The two most popular clients today are Relay and Apollo Client. Relay is another project developed and maintained by the Facebook team (and open source community). Apollo was originally implemented with Redux under the hood, but now offers additional configurability.

While it’s possible to bring in both Redux and a GraphQL client to manage the same application’s state, you may find the combination to be overly complex and unnecessary. Although GraphQL clients handle data fetching from a server and Redux is more general-purpose, there’s overlap in usage between the packages.

This chapter introduced the Flux architecture pattern and where Redux ran with those ideas. You learned several practical details about the library.

Now you’re ready to put the basic building blocks together and see a functioning Redux application end to end. In the next chapter, you’ll build a task management application with React and Redux.

Key points you’ve learned

By now, you’re almost certainly itching to get started on a Redux application. You have more than enough context to begin, so let’s scratch that itch. This chapter guides you through the set up and development of a simple task-management application using Redux to manage its state.

By the end of the chapter, you’ll have walked through a complete application, but more importantly, you’ll have learned enough of the fundamentals to leave the nest and create simple Redux applications of your own. Through the introduction of components that were strategically omitted in chapter 1, you’ll develop a better understanding of the unidirectional data flow and how each piece of the puzzle contributes to that flow.

You may wonder if introducing Redux is overkill for the small application you’ll build in this chapter. To iterate a point made in chapter 1, we encourage the use of vanilla React until you experience enough pain points to justify bringing in Redux.

If this chapter were the whole of it, Redux would indeed be overkill. It’s not until you reach features introduced in later chapters that it really begins to make sense. As a matter of practicality, you’ll head straight for Redux; that’s why you’re here, after all! As a thought experiment, you may enjoy rebuilding the application in React to determine when including Redux makes sense for yourself, once you become comfortable with the fundamentals.

The path you’ll walk is a well-trodden one: building a project task-management application. In this chapter, you’ll implement simple functionality, but you’ll add increasingly complex features throughout the rest of the book as we cover each concept in more detail.

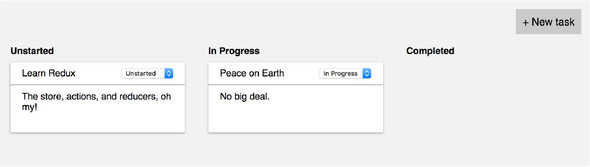

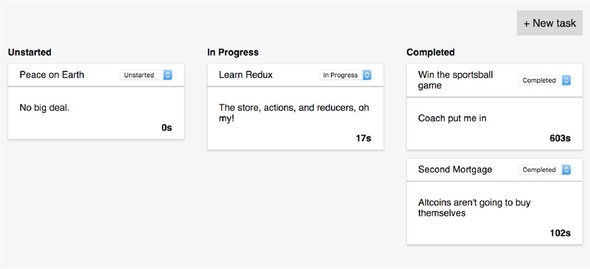

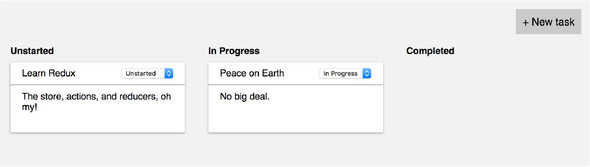

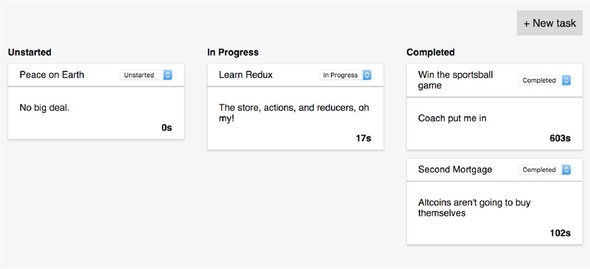

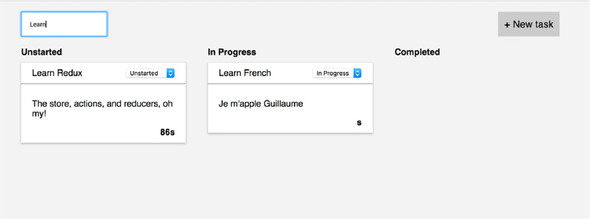

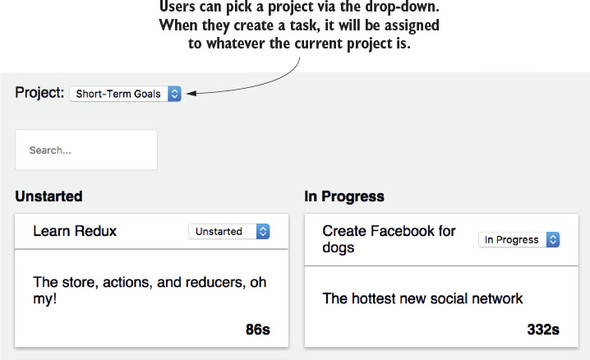

This app is lovingly named Parsnip. Why Parsnip? No good reason. It spoke to us in the moment, and we went with it. Specifically, Parsnip will be a Kanban board, a tool that allows users to organize and prioritize work (similar to Trello, Waffle, Asana, and a number of other tools). An app like this is highly interactive and requires complex state management—a perfect vehicle for us to apply Redux skills.

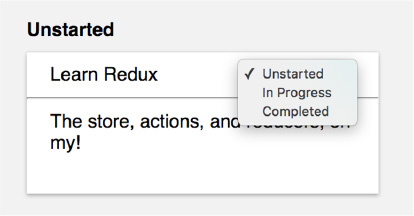

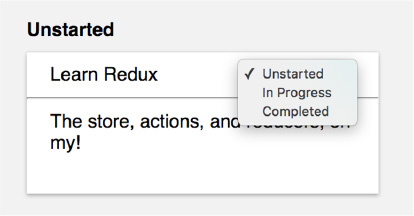

To see Redux in action without the bells and whistles, you’ll start with one resource, a task. Your users should

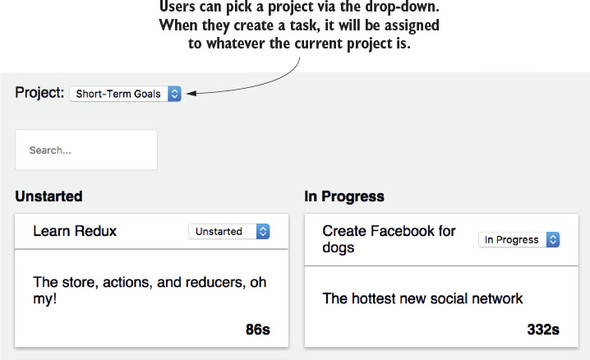

By the end of the chapter, you’ll have something similar to figure 2.1.

There’s no single right way to approach problems with Redux, but we recommend taking time to think about how the application state should look before implementing a new feature. If React applications are a reflection of the current state, what should your state object look like to satisfy the requirements? What properties should it have? Are arrays or objects more appropriate? These are the kinds of questions you should ask when you approach new features. To recap, you know you need to do the following:

What state do you need to track to make all this possible? It turns out that our requirements are straightforward: you need a list of task objects with a title, description, and status. Application state that lives in Redux is a simple JavaScript object. The following listing is an example of what that object might look like.

{

tasks: [ 1

{

id: 1, 2

title: 'Learn Redux',

description: 'The store, actions, and reducers, oh my!',

status: 'In Progress',

},

{

id: 2,

title: 'Peace on Earth',

description: 'No big deal.',

status: 'Unstarted',

}

]

}

The store is simple, a tasks field with an array of task objects. How you organize the data in your Redux store is completely up to you, and we’ll explore popular patterns and best practices later in the book.

Deciding upfront how the data will look will be a big help down the road in determining what kinds of actions and reducers you might need. Remember, it may be helpful to think of client-side state like a database. Similarly to if you were dealing with a persistent data store such as a SQL database, declaring a data model will help you organize your thoughts and drive out the code you need. Throughout the book, you’ll start each new feature by revisiting this process of defining a desired state shape.

React has always enjoyed a reputation for being beginner-friendly. Compared with larger frameworks such as Angular and Ember, its API and feature set are small. The same can’t be said for many of the surrounding tools you’ll find in many production-ready applications. This includes Webpack, Babel, ESLint, and a dozen others with varying learning curves. We developers couldn’t be bothered to do all this configuration for each new project or prototype from scratch, so an abundance of starter kits and boilerplate applications were created. Although popular, many of these starter kits became wildly complex and equally intimidating for beginners to use.

Fortunately, in mid-2016, Facebook released an officially supported tool that does this complex configuration work for you and abstracts most of it away. Create React App is a command line interface (CLI) tool that will generate a relatively simple, production-ready React application. Provided you agree with enough of the choices made within the project, Create React App can easily save days of setup and configuration time. We’re sold on this tool as the preferred way to get new React projects off the ground, so we’ll use it to kick-start this application.

Create React App is a module that can be installed using your favorite package manager. In this book, you’ll use npm. In a terminal window, run the following command at the prompt:

npm install --global create-react-app

Once installed, you can create a new project with

create-react-app parsnip

Creating a new application can take a few minutes, depending on the time it takes to install the dependencies on your machine. When it completes, there will be a newly created parsnip directory waiting for you. Navigate to that directory now, and we’ll get up and running.

To view the application, you’ll start the development server, which takes care of serving your JavaScript code to the browser (among other things). Run the following command from within the parsnip directory:

npm start

If create-react-app didn’t open a browser window automatically after starting the development server, open a browser and head to localhost:3000. You should see something similar to figure 2.2.

Go ahead and follow the instructions. Try changing the “To get started...” text by editing the src/App.js file. You should see the browser refresh automatically, without having to reload the page. We’ll cover this feature and more development workflow enhancements in-depth in chapter 3.

Before you jump into configuring Redux, let’s lay groundwork by building a few simple React components. We generally like to approach features “from the outside in,” meaning you’ll start by building the UI first, then hook up any necessary behavior. It helps you stay grounded in what the user will eventually experience, and the earlier you can interact with a working prototype, the better. It’s much better to iron out issues with a design or feature spec early, before too much work gets underway.

You also want to make sure you’re building flexible, reusable UI components. If you define your components with clear interfaces, reusing and rearranging them becomes easy. Start by creating a new directory under src/ called components/, then create files for the new components, Task.js, TaskList.js, and TasksPage.js.

Task and TaskList will be stateless functional components, introduced in React v0.14. They don’t have access to lifecycle methods such as componentDidMount, only accept props, don’t use this.state or this.setState, and they’re defined as plain functions instead of with createReactClass or ES2015 classes.

These kinds of components are wonderfully simple; you don’t have to worry about this, they’re easier to work with and test, and they cut down on the number of lines of code you might need with classes. They accept props as input and return some UI. What more could you ask for? Copy the code in the following listing to Task.js.

import React from 'react';

const Task = props => { 1

return (

<div className="task">

<div className="task-header">

<div>{props.task.title}</div> 2

</div>

<hr />

<div className="task-body">{props.task.description}</div>

</div>

);

}

export default Task;

We aren’t including the contents of CSS files in this book. They’re verbose and don’t aid in the understanding of Redux topics. Please see the supplementary code if you want to replicate the styles found in screenshots.

The implementation for the TaskList component is equally straightforward. The column name and a list of tasks will be passed in from a parent component. Copy the code in the following listing to TaskList.js.

import React from 'react';

import Task from './Task';

const TaskList = props => {

return (

<div className="task-list">

<div className="task-list-title">

<strong>{props.status}</strong>

</div>

{props.tasks.map(task => (

<Task key={task.id} task={task} />

))}

</div>

);

}

export default TaskList;

Redux allows you to implement a significant chunk of our React components as these stateless functional components. Because you get to offload most of the app’s state and logic to Redux, you can avoid the component bloat that’s typical of nearly all large React applications. The Redux community commonly refers to these types of components as presentational components, and we cover them in more detail later in the chapter.

Within TasksPage.js, import the newly created TaskList component and display one for each status (see the following listing). Although it doesn’t yet, this component needs to manage local state when you introduce the new task form. For that reason, it’s implemented as an ES6 class.

import React, { Component } from 'react';

import TaskList from './TaskList';

const TASK_STATUSES = ['Unstarted', 'In Progress', 'Completed']; 1

class TasksPage extends Component { 2

renderTaskLists() {

const { tasks } = this.props;

return TASK_STATUSES.map(status => { 3

const statusTasks = tasks.filter(task => task.status === status);

return <TaskList key={status} status={status} tasks={statusTasks} />;

});

}

render() {

return (

<div className="tasks">

<div className="task-lists">

{this.renderTaskLists()}

</div>

</div>

);

}

}

export default TasksPage;

To start, TasksPage will receive mock tasks from the top-level component, App. App will also be created using an ES6 class, because it will eventually connect to the Redux store, as shown in the following listing.

import React, { Component } from 'react';

import TasksPage from './components/TasksPage';

const mockTasks = [ 1

{

id: 1,

title: 'Learn Redux',

description: 'The store, actions, and reducers, oh my!',

status: 'In Progress',

},

{

id: 2,

title: 'Peace on Earth',

description: 'No big deal.',

status: 'In Progress',

},

];

class App extends Component {

render() {

return (

<div className="main-content">

<TasksPage tasks={mockTasks} />

</div>

);

}

}

export default App;

At this point, you can run your small React application with npm start and view it in the browser. Bear in mind that it’ll look dreadfully boring until you circle back to apply styles. Again, you can borrow ours from the supplemental code if you like.

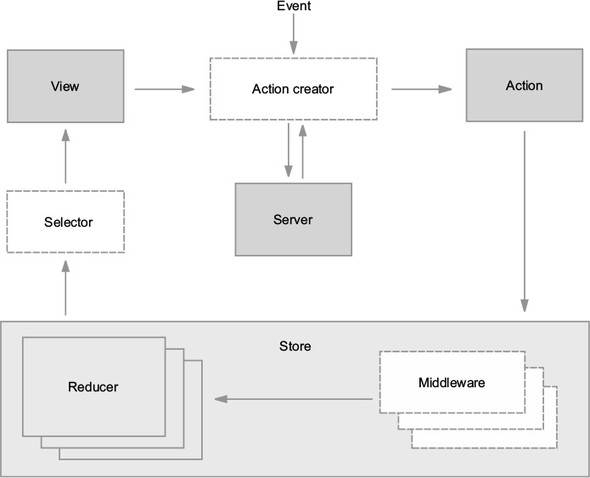

Your small React application is now ready to be introduced to Redux. Before you dive straight in, let’s consider the full arc of what will be required by revisiting the Redux architecture, introduced in chapter 1. See figure 2.3.

The store is a logical starting point for introducing Redux into an application. The Redux package exposes a few methods that facilitate the creation of a store. Once a store is created, you’ll connect it to the React application using the react-redux package, enabling a view (component) to dispatch actions. Actions eventually return to the store, to be read by reducers, which determine the next state of the store.

The main hub of functionality in Redux is the store—the object responsible for managing application state. Let’s look at the store and its API in an isolated context. As an example, we’ll look at a tiny program to increment a number.

In reading about Redux and talking with other community members, you’ll see or hear references to the store, the Redux store, or the state tree often used interchangeably. Generally, what these terms refer to is a JavaScript object like any other. Let’s look at the API that Redux provides to interact with the store.

The Redux package exports a createStore function that, you guessed it, is used to create a Redux store. Specifically, the Redux store is an object with a few core methods that can read and update state and respond to any changes: getState, dispatch, and subscribe. You’ll capture all three in the quick example in the following listing.

import { createStore } from 'redux';

function counterReducer(state = 0, action) { 1

if (action.type === 'INCREMENT') {

return state + 1;

}

return state;

}

const store = createStore(counterReducer); 2

console.log(store.getState()); 3

store.subscribe(() => { 4

console.log('current state: ', store.getState());

});

store.dispatch({ type: 'INCREMENT' }); 5

The first argument passed to the createStore function is a reducer. Recall from chapter 1 that reducers are functions that inform the store how it should update state in response to actions. The store requires at least one reducer.

As promised, there are three methods on the store to show off. The first, getState, can read the contents of the store. You’ll need to call this method yourself infrequently.

subscribe allows us to respond to changes in the store. For the sake of this example, you’re logging out the newly updated state to the console. When you start connecting Redux to React, this method is used under the hood to allow React components to re-render when any state changes in the store.

Because you can’t mutate the store yourself and only actions can result in a new state, you need a way to send new actions on to the reducers. That method is dispatch.

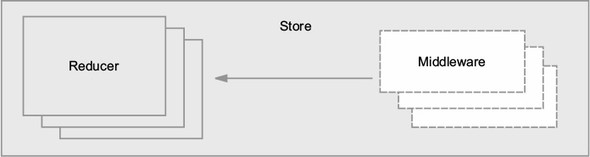

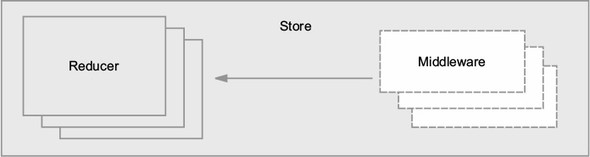

Back to business! In this section, you’ll begin to create your store and its dependencies. A store contains one or more reducers and, optionally, middleware. We’ll save middleware for a subsequent chapter, but at least one reducer is required to create a store.

Let’s begin by adding Redux as a dependency of the project, then move your initial tasks data into Redux. Make sure you’re in the parsnip directory and install the package by running the following command in a terminal window:

npm install -P redux

The –P flag is an alias for --save-prod, resulting in the package being added to your dependencies in the package.json file. Starting in npm5, this is the default install behavior. Now that Redux has been added, the next step is to integrate it into your existing React components. First create the store by adding the code shown in the following listing to index.js.

import React from 'react'

import ReactDOM from 'react-dom'

import App from './App';

import { createStore } from 'redux' 1

import tasks from './reducers' 2

import './index.css';

const store = createStore(tasks) 3

...

The next step is to make the store available to the React components in the app, but the code you added in listing 2.7 isn’t functional yet. Before going any further in index.js, you need to provide a barebones implementation of the tasks reducer.

As you’ve learned, creating a new Redux store requires a reducer. The goal of this section is to get enough done to create a new store, and you’ll fill out the rest of the functionality as you move through the chapter.

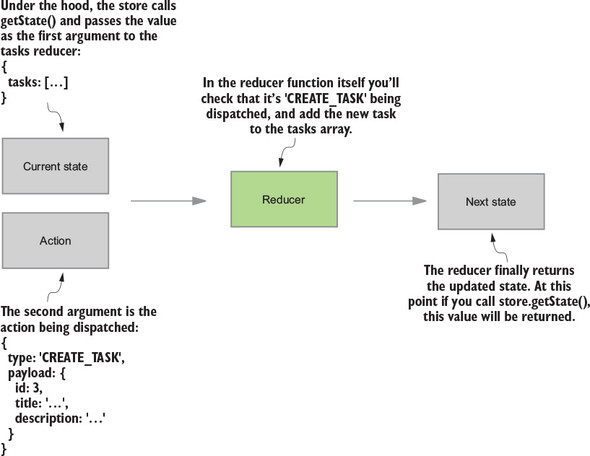

If you recall from chapter 1, a reducer is a function that takes the current state of the store and an action and returns the new state after applying any updates. The store is responsible for storing state, but it relies on reducers that you’ll create to determine how to update that state in response to an action.

You won’t handle any actions yet; you’ll return the state without modifications. Within the src directory, create a new directory, reducers, with an index.js file. In this file, you’ll create and export a single function, tasks, that returns the given state, as shown in the following listing.

export default function tasks(state = [], action) { 1

return state

}

That’s it! Do a little dance, because you’ve written your first reducer. You’ll be back later to make this function more interesting.

It’s common to provide reducers with an initial state, which involves nothing more than providing a default value for the state argument in the tasks reducer. Before you get back to connecting the Redux store to your application, let’s move the list of mock tasks out of App.js and into src/reducers/index.js, a more appropriate place for initial state to live. This is shown in the following listing.

const mockTasks = [

{

id: 1,

title: 'Learn Redux',

description: 'The store, actions, and reducers, oh my!',

status: 'In Progress',

},

{

id: 2,

title: 'Peace on Earth',

description: 'No big deal.',

status: 'In Progress',

},

];

export default function tasks(state = { tasks: mockTasks }, action) { 1

return state;

}

Don’t worry if your App component is breaking as a result of removing the mock data. You’ll fix that shortly. At this point the store has the correct initial data, but you still need to somehow make this data available to the UI. Enter react-redux!

Though it’s not a strict requirement, it’s highly encouraged to keep your data immutable; that is, not mutating values directly. Immutability has inherent benefits like being easy to work with and test, but in the case of Redux, the real benefit is that it enables extremely fast and simple equality checks.

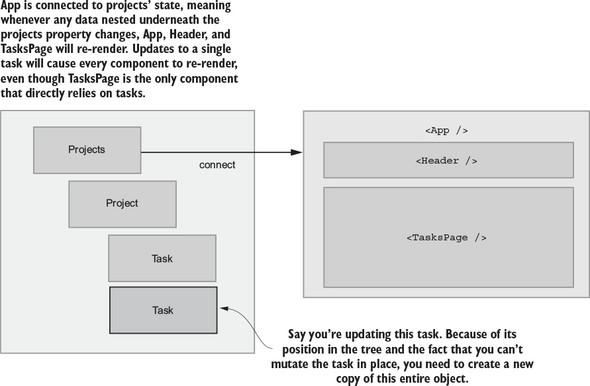

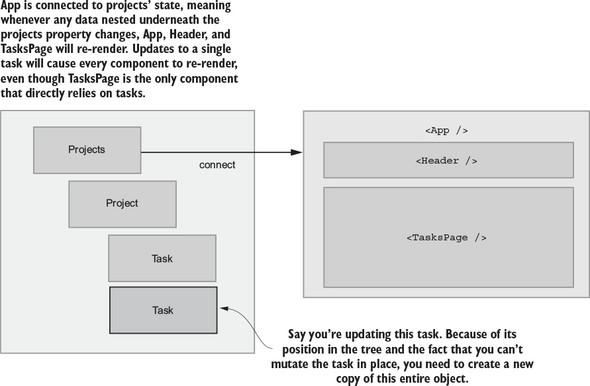

For example, if you mutate an object in a reducer, React-Redux’s connect may fail to correctly update its corresponding component. When connect compares old and new states to decide whether it needs to go ahead with a re-render, it checks only if two objects are equal, not that every individual property is equal. Immutability is also great for dealing with historical data, and it’s required for advanced Redux debugging features such as time travel.

The long and short of it is to never mutate data in place with Redux. Your reducers should always accept the current state as input and calculate an entirely new state. JavaScript doesn’t offer immutable data structures out of the box, but there are several great libraries. ImmutableJS (https://facebook.github.io/immutable-js/) and Updeep (https://github.com/substantial/updeep) are two popular examples, and in addition to enforcing immutability, they also provide more advanced APIs for updating deeply nested objects. If you want something more lightweight, Seamless-Immutable (https://github.com/rtfeldman/seamless-immutable) gives you immutable data structures, but allows you to continue using standard JavaScript APIs.

As we discussed in chapter 1, Redux was built with React in mind, but they’re two totally discrete packages. To connect Redux with React, you’ll use the React bindings from the react-redux package. Redux provides only the means to configure a store. react-redux bridges the gap between React and Redux by providing the ability to enhance a component, allowing it to read state from the store or dispatch actions. react-redux gives you two primary tools for connecting your Redux store to React:

Pause here to install the package: npm install –P react-redux.

Provider is a component that takes the store as a prop and wraps the top-level component in your app—in this case, App. Any child component rendered within Provider can access the Redux store, no matter how deeply it’s nested.

In index.js, import the Provider component and wrap the App component, using the code in the following listing.

import React from 'react';

import ReactDOM from 'react-dom';

import { createStore } from 'redux';

import { Provider } from 'react-redux'; 1

import tasks from './reducers';

import App from './App';

import './index.css';

const store = createStore(tasks);

ReactDOM.render(

<Provider store={store}> 2

<App />

</Provider>,

document.getElementById('root')

);

Think of the Provider component as an enabler. You won’t interact with it directly often, typically only in a file such as index.js, which takes care of initially mounting the app to the DOM. Behind the scenes, Provider ensures you can use connect to pass data from the store to one or more React components.

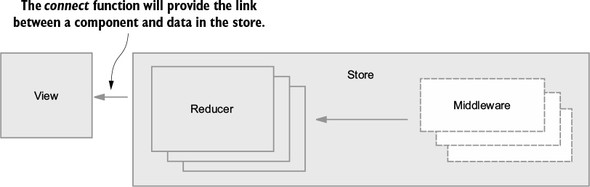

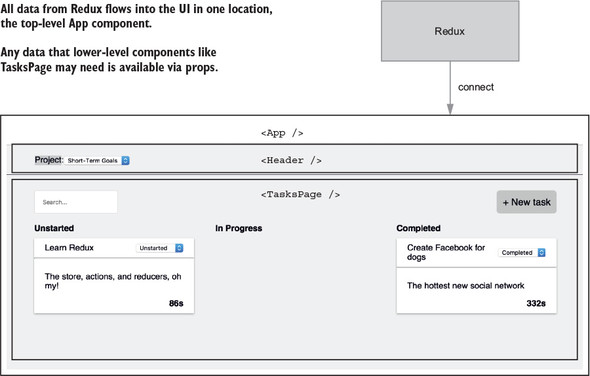

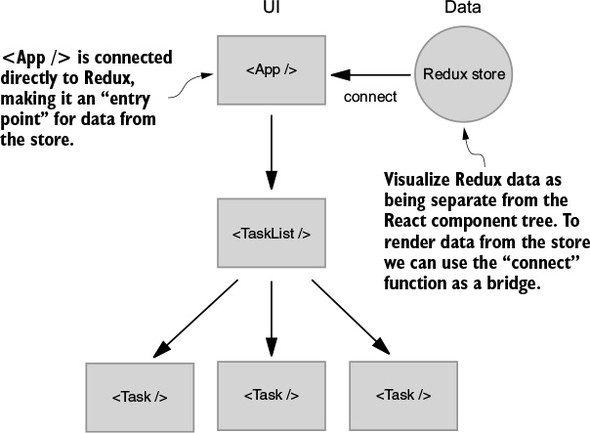

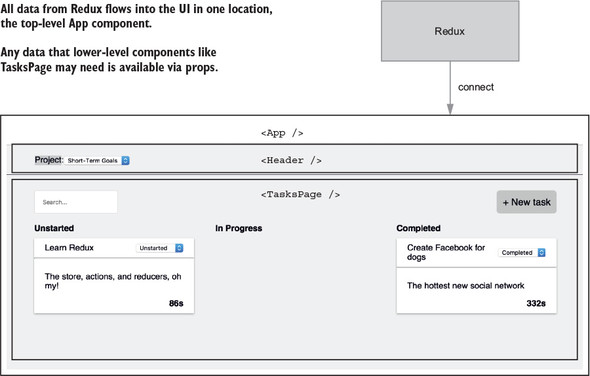

You’ve laid the groundwork to pass data from the store into a React component. You have a Redux store with a tasks reducer, and you’ve used the Provider component from react-redux to make the store available to our React components. Now it’s nearly time to enhance a React component with connect. See figure 2.5.

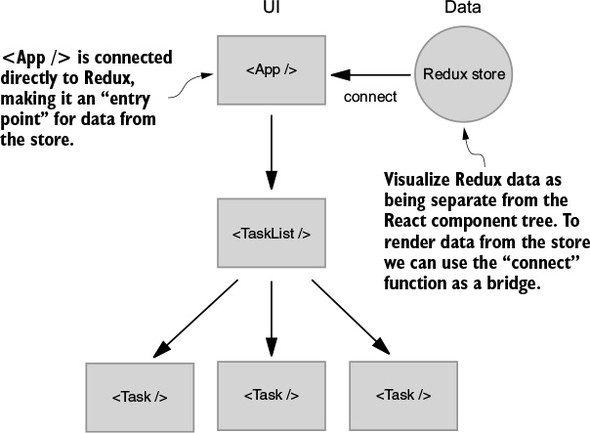

Generally, you can break visual interfaces into two major concerns: data and UI. In your case, the data is the JavaScript objects that represent tasks, and the UI is the few React components that take these objects and render them on the page. Without Redux, you’d deal with both concerns directly within React components.

As you can see in figure 2.6, the data used to render your UI is moved entirely out of React and into Redux. The App component will be considered an entry point for data from Redux. As the application grows, you’ll introduce more data, more UI, and as a result, more entry points. This kind of flexibility is one of Redux’s greatest strengths. Your application state lives in one place, and you can pick and choose how you want that data to flow into the application.

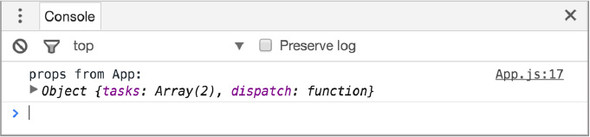

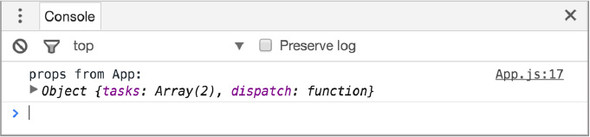

Listing 2.11 introduces a couple of new concepts: connect and mapStateToProps. By adding connect to the App component, you declare it as an entry point for data from the Redux store. You’ve only connected one component here, but as your application grows, you’ll start to discover best practices for when to use connect with additional components.

Listing 2.11 passes connect a single argument, the mapStateToProps function. Note that the name mapStateToProps is a convention, not a requirement. The name stuck for a reason: because it’s an effective descriptor of the role of this function. State refers to the data in the store, and props are what get passed to the connected component. Whatever you return from mapStateToProps will be passed to your component as props.

import React, { Component } from 'react';

import { connect } from 'react-redux'; 1

import TasksPage from './components/TasksPage';

class App extends Component {

render() {

return (

<div className="main-content">

<TasksPage tasks={this.props.tasks} /> 2

</div>

);

}

}

function mapStateToProps(state) { 3

return {

tasks: state.tasks 4

}

}

export default connect(mapStateToProps)(App);

Now the application successfully renders data from the Redux store! Notice how you didn’t have to update the TasksPage component? That’s by design. Because TasksPage accepts its data via props, it doesn’t care what the source of those props is. They could come from Redux, from React’s local state, or from another data library altogether.

Recall that TaskList is a presentational or UI component. It accepts data as props and returns output according to the markup you defined. By using connect in the App component, you secretly introduced their counterparts, known as container components.

Presentational components don’t have dependencies on Redux. They don’t know or care that you’re using Redux to manage your application state. By using presentational components, you introduced determinism into your view renders. Given the same data, you’ll always have the same rendered output. Presentational components are easily tested and provide your application with sweet, sweet predictability.

Presentational components are great, but something needs to know how to get data out of the Redux store and pass it to your presentational components. This is where container components, such as App, come in. In this simple example, they have a few responsibilities:

Again, separating things into container and presentational components is a convention, not a hard-and-fast rule that React or Redux enforces. But it’s one of the most popular and pervasive patterns for a reason. It allows you to decouple how your app looks from what it does. Defining your UI as presentational components means you have simple, flexible building blocks that are easy to reconfigure and reuse. When you’re working with data from Redux, you can deal with container components without having to worry about markup. The inverse applies for when you’re working with a UI.

At this point, you can view the data being rendered in the browser; your app renders a simple list of tasks retrieved from the Redux store. Now it’s time to wire up behavior! Let’s see what it takes to add a new task to the list.

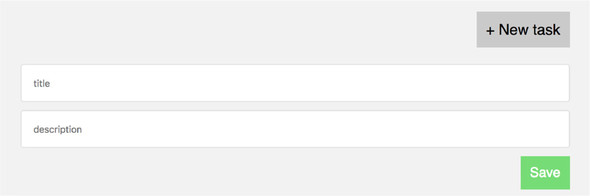

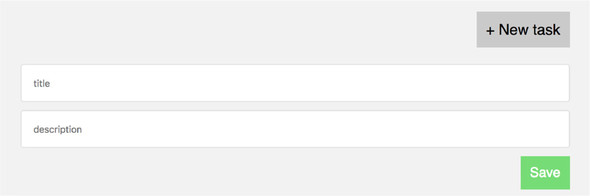

You’ll follow the same workflow that you used to render the static list of tasks. You’ll start with the UI, then implement functionality. Let’s start with a “New task” button and a form. When a user clicks the button, the form renders with two fields, a title, and a description. Eventually, it’ll look roughly like figure 2.7.

Modify the code in TasksPage.js to match the following listing. This code is still plain React, so much of it may be familiar to you.

import React, { Component } from 'react';

import TaskList from './TaskList';

class TasksPage extends Component {

constructor(props) {

super(props);

this.state = { 1

showNewCardForm: false,

title: '',

description: '',

};

}

onTitleChange = (e) => { 2

this.setState({ title: e.target.value });

}

onDescriptionChange = (e) => {

this.setState({ description: e.target.value });

}

resetForm() {

this.setState({

showNewCardForm: false,

title: '',

description: '',

});

}

onCreateTask = (e) => {

e.preventDefault();

this.props.onCreateTask({ 3

title: this.state.title,

description: this.state.description,

});

this.resetForm(); 4

}

toggleForm = () => {

this.setState({ showNewCardForm: !this.state.showNewCardForm });

}

renderTaskLists() {

const { tasks } = this.props;

return TASK_STATUSES.map(status => {