Practical Modern JavaScript brings a whole ton of new syntax and semantics that makes your code more expressive and declarative. Nicolás does an amazing job of distilling this with simple examples so you can get up to speed quickly.

Kent C. Dodds (PayPal, TC39)

Nicolás demystifies the massive ES6 specification with a pragmatic and practical dive into the features that are redefining modern JavaScript development.

Rod Vagg (NodeSource, Node.js TSC)

Nicolás has an amazing ability to break down highly complex technical topics into easy-to-understand prose and sample code.

Mathias Bynens (Google, TC39)

JavaScript is a tough language to master, and the 2015 edition adds a lot of new features. Practical Modern JavaScript helps break them down and explains use cases, conceptual models, and best practices, with simple examples—making these new patterns much easier to grasp.

Jordan Harband (Airbnb, TC39)

ES6 brought significant changes to the language—changes that take time to grasp even for advanced JavaScript developers. You’ll need a guide on this journey, and Nicolás’ book is one of the best you can get.

Ingvar Stepanyan (CloudFlare)

When I created JavaScript in 1995, I had no definite idea that it would become the most widely used programming language on the Internet. This Modular JavaScript book series fulfills my hope for an incremental and straightforward pedagogy about JavaScript. I encourage you to dive in, to discover and embrace JavaScript, and to contribute to developing a better web for everyone.

Brendan Eich (creator of JavaScript)

Copyright © 2017 Nicolás Bevacqua. All rights reserved.

Printed in the United States of America.

Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472.

O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (http://oreilly.com/safari). For more information, contact our corporate/institutional sales department: 800-998-9938 or corporate@oreilly.com.

See http://oreilly.com/catalog/errata.csp?isbn=9781491943533 for release details.

The O’Reilly logo is a registered trademark of O’Reilly Media, Inc. Practical Modern JavaScript, the cover image, and related trade dress are trademarks of O’Reilly Media, Inc.

While the publisher and the author have used good faith efforts to ensure that the information and instructions contained in this work are accurate, the publisher and the author disclaim all responsibility for errors or omissions, including without limitation responsibility for damages resulting from the use of or reliance on this work. Use of the information and instructions contained in this work is at your own risk. If any code samples or other technology this work contains or describes is subject to open source licenses or the intellectual property rights of others, it is your responsibility to ensure that your use thereof complies with such licenses and/or rights.

978-1-491-94353-3

[LSI]

When I created JavaScript in 1995 at Netscape, I had no definite idea that it would become the most widely used programming language on the Internet. I did know that I had very little time to get it into “minimum viable shipping” state, and so I made it extensible and mutable from global object on down, even to base-level meta-object protocol hooks (e.g., toString and valueOf, styled after Java’s methods of the same names).

Yet in spite of its ongoing evolution and still-rising popularity, JavaScript always benefits from an incremental and careful teaching approach that puts first things first. I think this follows inevitably from the hurried design and intentional extensibility. I overloaded two kernel elements, functions and objects, so that programmers could use them in various ways as general workalikes for individual tools in a larger Swiss army knife. This meant that students would need to learn which tool was best to use for a specific task, and how precisely to wield that particular blade.

Netscape was a whirlwind for me, and I think for anyone there from early 1995 on. It was rushing toward an initial public offering predicated on competing with Microsoft via the infamous “Netscape + Java kills Windows” formula repeated by Marc Andreessen on the IPO roadshow that year. Java was the big-brother or “Batman” programming language to little-brother, “Robin the boy hostage” sidekick “scripting language,” JavaScript.

But I knew while I was writing the first version (code-named “Mocha”) that JavaScript and not Java would be integrated deeply with the Netscape browser and the Document Object Model I created at the same time. There was no way across the Netscape/Sun organizational boundary, or the browser/JVM code bases, to embed Java other than as a plugin.

So I did have a vague sense that JavaScript would either succeed over time and do well, or else fade quickly in the shadow of something else. I remember telling my friend and cubicle-mate Jeff Weinstein, when he asked me what I’d be doing in 20 years, that it would be “JavaScript or bust.” Even then I felt a sense of deep obligation to JavaScript’s users that was inherent in the “two-blade Swiss army knife” design I had chosen under the dual constraints of extremely short schedule and “make it look like Java” management edict.

The Modular JavaScript book series fulfills my hope for an incremental and straightforward pedagogy, starting with easily applicable code examples and scaling through design patterns to entire module-based applications. This series nicely covers best testing practices and winning techniques for deploying JavaScript applications. It is another jewel in O’Reilly’s crown of books on JavaScript.

I am delighted to support Nicolás’ endeavor because his book looks exactly like what people who are coming to JavaScript with fresh eyes need. I first met Nicolás at a dinner in Paris and got to know him a bit there, and over time online. His pragmatism combined with empathy for newcomers to the language and a great sense of humor convinced me to review this book in draft form. The finished work is easy to digest and fun. I encourage you to dive in, to discover and embrace JavaScript, and to contribute to developing a better web for everyone.

Back in 1998, when I was using FrontPage for all my web development needs at school, if someone had told me that I’d end up working on the web for a living, I would’ve probably chuckled. JavaScript has grown along with us in all the years since, and it’s hard to imagine how the web could’ve thrived without it. Bit by bit, this book will give you a comprehensive picture of the modern JavaScript landscape.

This book is meant for web developers, enthusiasts, and professionals with a working knowledge of plain JavaScript. These developers and anyone looking to further their understanding of the JavaScript language shall benefit from reading Practical Modern JavaScript.

The goal of this book is to provide an approachable way of learning the latest developments in JavaScript: ES6 and later. ES6 was a huge update to the language, and it came out around the same time as a streamlined specification development process. Around this time I wrote quite a few blog posts about the different features in ES6, which became a bit popular. There are quite a few other books on ES6 out there, but they’re a little different from what I had in mind for a book on ES6 and beyond. This book tries to explain features in detail without getting caught up in the specification, its implementation details, or unlikely corner cases that would almost certainly need to be researched online if happened upon.

Instead of extreme thoroughness, the book places its utmost focus in the learning process, having sorted its material in such an incremental way that you don’t have to scan ahead in the book for the definition of something else. Armed with practical examples, Practical Modern JavaScript goes beyond ES6 to capture the changes to the language since June 2015—when the ES6 specification was finalized—including async functions, object destructuring, dynamic imports, Promise#finally, and async generators.

Lastly, this book has the goal of establishing a baseline we can take for granted in the rest of the Modular JavaScript series. After having learned the latest language features in this first book, we’ll be all set to discuss modular design, testing, and deployment, without having to diverge into language features whenever they’re used in a code example. This incremental and modular approach is meant to be pervasive across the series, each book, each chapter, and each section.

In Chapter 1, we open with an introduction to JavaScript and its standards development process, how it has evolved over the years, where it is now, and where it’s going. You’ll also get a short introduction to Babel and ESLint, modern tools that can aid us in the process of discovering modern JavaScript.

Chapter 2 covers all of the absolutely essential changes in ES6, including arrow functions, destructuring, let and const, template literals, and a few other syntax embellishments.

In Chapter 3, we’ll discuss the new class syntax for declaring object prototypes, a new primitive type known as Symbol, and a few new Object methods.

Chapter 4 goes over all of the new ways we can deal with flow control starting in ES6. We discuss Promises, iterators, generators, and async functions in great detail and with heaps of accompanying examples, uncovering the synergistic properties between all the different techniques. You won’t only learn how to use these flow control techniques but how to best reason about them so that they truly simplify your code.

Chapter 5 describes the new collection built-ins found in ES6, which we can use to create object maps and unique sets. You’ll find usage examples for each of these collection types.

Chapter 6 covers the new Proxy and Reflect built-ins. We’ll learn all about how we can use proxies and the reasons why we need to be careful when choosing to do so.

Chapter 7 is dedicated to the rest of built-in improvements that can be found in ES6. Particularly around Array, Math, numbers, strings, Unicode, and regular expressions.

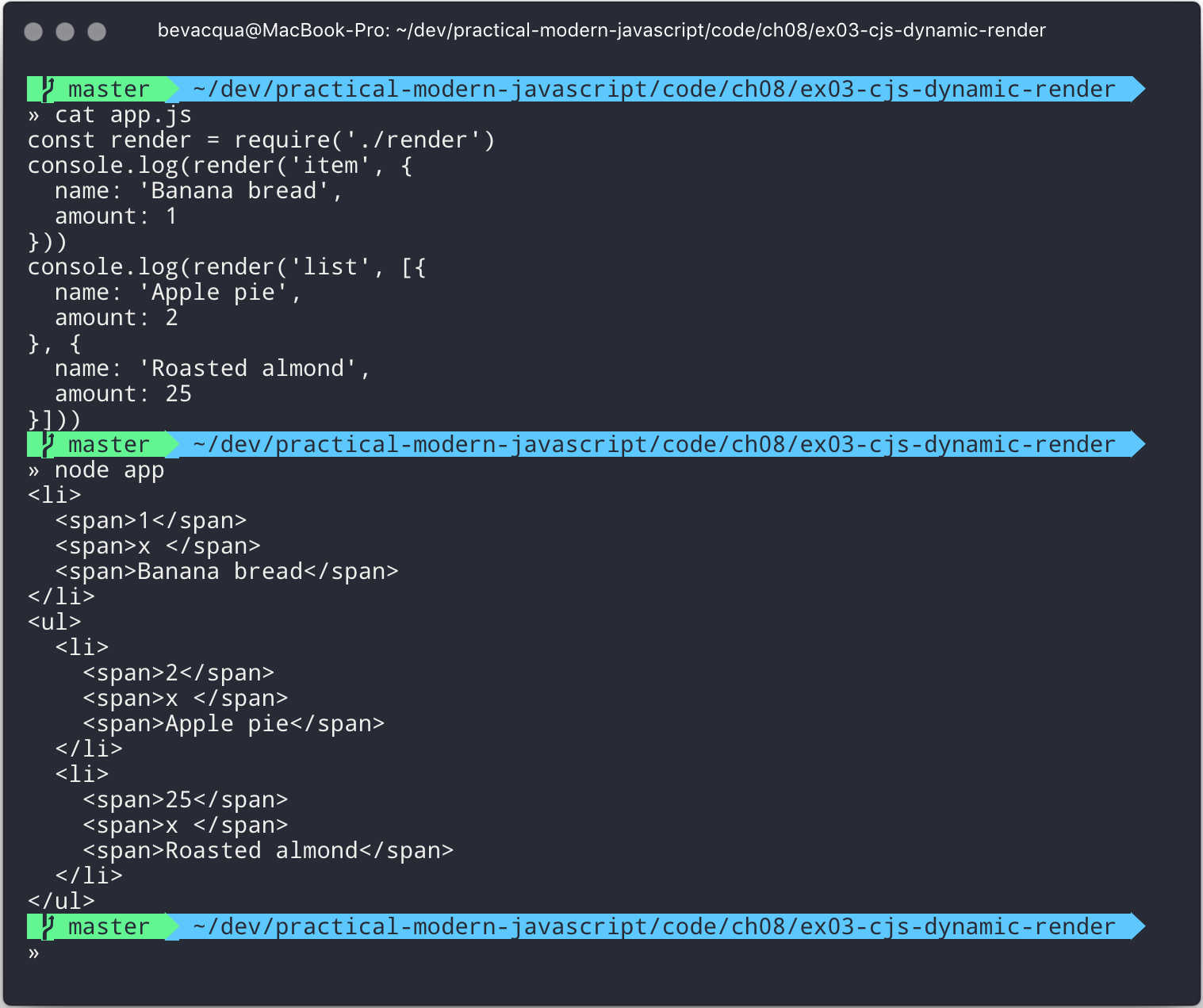

Chapter 8 is devoted to native JavaScript Modules and, briefly, the history that led to their inception. We’ll then discuss their syntax implications at length.

Chapter 9, the last chapter—dubbed “Practical Considerations”—is somewhat unconventional for books about programming languages. Rather than spreading my personal views throughout the book, I condensed them into the last chapter. Here you’ll find reasoning behind how to decide when to use which kind of variable declaration or string literal quotation mark, advice on handling asynchronous code flows and whether using classes or proxies is a good idea, and a few more nuggets of insight.

For those of you who are already somewhat familiar with ES6 and comfortable jumping around the pages in a book, I recommend reading Chapter 4 in detail, as you should find the insight into flow control most valuable. Chapters 7 and 8 are also a must, since they provide details around the areas of ES6 that are less often discussed in the open. The last chapter will indubitably—regardless of whether you agree with the views expressed therein—challenge you to think deeply about what works and what doesn’t in the context of JavaScript applications written under the wild new world order that is modern JavaScript.

The following typographical conventions are used in this book:

Indicates new terms, URLs, email addresses, filenames, and file extensions.

Constant widthUsed for program listings, as well as within paragraphs to refer to program elements such as variable or function names, databases, data types, environment variables, statements, and keywords.

This icon signifies a tip, suggestion, or general note.

This icon indicates a warning or caution.

Safari (formerly Safari Books Online) is a membership-based training and reference platform for enterprise, government, educators, and individuals.

Members have access to thousands of books, training videos, Learning Paths, interactive tutorials, and curated playlists from over 250 publishers, including O’Reilly Media, Harvard Business Review, Prentice Hall Professional, Addison-Wesley Professional, Microsoft Press, Sams, Que, Peachpit Press, Adobe, Focal Press, Cisco Press, John Wiley & Sons, Syngress, Morgan Kaufmann, IBM Redbooks, Packt, Adobe Press, FT Press, Apress, Manning, New Riders, McGraw-Hill, Jones & Bartlett, and Course Technology, among others.

For more information, please visit http://oreilly.com/safari.

Please address comments and questions concerning this book to the publisher:

We have a web page for this book, where we list errata, examples, and any additional information. You can access this page at http://www.oreilly.com/catalog/0636920047124.

To comment or ask technical questions about this book, send email to bookquestions@oreilly.com.

For more information about our books, courses, conferences, and news, see our website at http://www.oreilly.com.

Find us on Facebook: http://facebook.com/oreilly

Follow us on Twitter: http://twitter.com/oreillymedia

Watch us on YouTube: http://www.youtube.com/oreillymedia

Like virtually every human task ever carried out, Practical Modern JavaScript was made possible only by building upon the work of others. I want to thank Nan Barber, my editor at O’Reilly, for her fantastic support along the ride of writing this book. Ally MacDonald, another editor at O’Reilly, helped me out in the early days of the project and is the foremost reason why Modular JavaScript became a book series, as she helped me conjure up a modular approach to teaching JavaScript.

This book had a phenomenal ensemble of technical reviewers. Many of these folks are on TC39, the technical committee devoted to pushing JavaScript forward, and it’s deeply humbling that they gave up some of their time to help future-proof this book. As always, Mathias Bynens (ex-Opera) proved instrumental to proofing everything in the book with regards to Unicode standards and held my work to a high degree of consistency in terms of code snippets. Kent C. Dodds (TC39, PayPal) ingeniously offered video reviews where he identified weak spots and helped improve the book. Jordan Harband (TC39, Airbnb) came through with deep technical commentary about many of the JavaScript features discussed in the book, and along with Alex Russell (TC39, Google) helped me iron out the history of JavaScript and its standards body for the first chapter. Ingvar Stepanyan (Cloudflare) was also a sharp eye in identifying code issues and pinpointed mistakes around low-level aspects of the specification. Brian Terlson (TC39 editor, Microsoft) also helped out with timelines and details around TC39. Rod Vagg (Node.js) provided insight that lead to better code examples and more consistent code style throughout the book.

Brendan Eich (TC39, Brave CEO) provided a trove of insight into the early days of JavaScript and TC39, which proved essential to the development of the first chapter. And naturally, if not for him, you wouldn’t be holding this book in your hands.

Lastly, I’d like to thank my wife, Marianela, for her sacrifices and putting up with me through the development of the first book in a long series. Marian, I couldn’t do this without you!

JavaScript has gone from being a 1995 marketing ploy to gain a tactical advantage to becoming the core programming experience in the world’s most widely used application runtime platform in 2017. The language doesn’t merely run in browsers anymore, but is also used to create desktop and mobile applications, in hardware devices, and even in space suit design at NASA.

How did JavaScript get here, and where is it going next?

Back in 1995, Netscape envisioned a dynamic web beyond what HTML could offer. Brendan Eich was initially brought into Netscape to develop a language that was functionally akin to Scheme, but for the browser. Once he joined, he learned that upper management wanted it to look like Java, and a deal to that effect was already underway.

Brendan created the first JavaScript prototype in 10 days, taking Scheme’s first-class functions and Self’s prototypes as its main ingredients. The initial version of JavaScript was code-named Mocha. It didn’t have array or object literals, and every error resulted in an alert. The lack of exception handling is why, to this day, many operations result in NaN or undefined. Brendan’s work on DOM level 0 and the first edition of JavaScript set the stage for standards work.

This revision of JavaScript was marketed as LiveScript when it started shipping with a beta release of Netscape Navigator 2.0, in September 1995. It was rebranded as JavaScript (trademarked by Sun, now owned by Oracle) when Navigator 2.0 beta 3 was released in December 1995. Soon after this release, Netscape introduced a server-side JavaScript implementation for scripting in Netscape Enterprise Server, and named it LiveWire.1 JScript, Microsoft’s reverse-engineered implementation of JavaScript, was bundled with IE3 in 1996. JScript was available for Internet Information Server (IIS) in the server side.

The language started being standardized under the ECMAScript name (ES) into the ECMA-262 specification in 1996, under a technical committee at ECMA known as TC39. Sun wouldn’t transfer ownership of the JavaScript trademark to ECMA, and while Microsoft offered JScript, other member companies didn’t want to use that name, so ECMAScript stuck.

Disputes by competing implementations, JavaScript by Netscape and JScript by Microsoft, dominated most of the TC39 standards committee meetings at the time. Even so, the committee was already bearing fruit: backward compatibility was established as a golden rule, bringing about strict equality operators (=== and !==) instead of breaking existing programs that relied on the loose Equality Comparison Algorithm.

The first edition of ECMA-262 was released June 1997. A year later, in June 1998, the specification was refined under the ISO/IEC 16262 international standard, after much scrutiny from national ISO bodies, and formalized as the second edition.

By December 1999 the third edition was published, standardizing regular expressions, the switch statement, do/while, try/catch, and Object#hasOwnProperty, among a few other changes. Most of these features were already available in the wild through Netscape’s JavaScript runtime, SpiderMonkey.

Drafts for an ES4 specification were soon afterwards published by TC39. This early work on ES4 led to JScript.NET in mid-20002 and, eventually, to ActionScript 3 for Flash in 2006.3

Conflicting opinions on how JavaScript was to move forward brought work on the specification to a standstill. This was a delicate time for web standards: Microsoft had all but monopolized the web and they had little interest in standards development.

As AOL laid off 50 Netscape employees in 2003,4 the Mozilla Foundation was formed. With over 95% of web-browsing market share now in the hands of Microsoft, TC39 was disbanded.

It took two years until Brendan, now at Mozilla, had ECMA resurrect work on TC39 by using Firefox’s growing market share as leverage to get Microsoft back in the fold. By mid-2005, TC39 started meeting regularly once again. As for ES4, there were plans for introducing a module system, classes, iterators, generators, destructuring, type annotations, proper tail calls, algebraic typing, and an assortment of other features. Due to how ambitious the project was, work on ES4 was repeatedly delayed.

By 2007 the committee was split in two: ES3.1, which hailed a more incremental approach to ES3; and ES4, which was overdesigned and underspecified. It wouldn’t be until August 20085 when ES3.1 was agreed upon as the way forward, but later rebranded as ES5. Although ES4 would be abandoned, many of its features eventually made its way into ES6 (which was dubbed Harmony at the time of this resolution), while some of them still remain under consideration and a few others have been abandoned, rejected, or withdrawn. The ES3.1 update served as the foundation on top of which the ES4 specification could be laid in bits and pieces.

In December 2009, on the 10-year anniversary since the publication of ES3, the fifth edition of ECMAScript was published. This edition codified de facto extensions to the language specification that had become common among browser implementations, adding get and set accessors, functional improvements to the Array prototype, reflection and introspection, as well as native support for JSON parsing and strict mode.

A couple of years later, in June 2011, the specification was once again reviewed and edited to become the third edition of the international standard ISO/IEC 16262:2011, and formalized under ECMAScript 5.1.

It took TC39 another four years to formalize ECMAScript 6, in June 2015. The sixth edition is the largest update to the language that made its way into publication, implementing many of the ES4 proposals that were deferred as part of the Harmony resolution. Throughout this book, we’ll be exploring ES6 in depth.

In parallel with the ES6 effort, in 2012 the WHATWG (a standards body interested in pushing the web forward) set out to document the differences between ES5.1 and browser implementations, in terms of compatibility and interoperability requirements. The task force standardized String#substr, which was previously unspecified; unified several methods for wrapping strings in HTML tags, which were inconsistent across browsers; and documented Object.prototype properties like __proto__ and __defineGetter__, among other improvements.6 This effort was condensed into a separate Web ECMAScript specification, which eventually made its way into Annex B in 2015. Annex B was an informative section of the core ECMAScript specification, meaning implementations weren’t required to follow its suggestions. Jointly with this update, Annex B was also made normative and required for web browsers.

The sixth edition is a significant milestone in the history of JavaScript. Besides the dozens of new features, ES6 marks a key inflection point where ECMAScript would become a rolling standard.

Having spent 10 years without observing significant change to the language specification after ES3, and 4 years for ES6 to materialize, it was clear the TC39 process needed to improve. The revision process used to be deadline-driven. Any delay in arriving at consensus would cause long wait periods between revisions, which led to feature creep, causing more delays. Minor revisions were delayed by large additions to the specification, and large additions faced pressure to finalize so that the revision would be pushed through, avoiding further delays.

Since ES6 came out, TC39 has streamlined7 its proposal revisioning process and adjusted it to meet modern expectations: the need to iterate more often and consistently, and to democratize specification development. At this point, TC39 moved from an ancient Word-based flow to using Ecmarkup (an HTML superset used to format ECMAScript specifications) and GitHub pull requests, greatly increasing the number of proposals8 being created as well as external participation by nonmembers. The new flow is continuous and thus, more transparent: while previously you’d have to download a Word doc or its PDF version from a web page, the latest draft of the specification is now always available.

Firefox, Chrome, Edge, Safari, and Node.js all offer over 95% compliance of the ES6 specification,9 but we’ve been able to use the features as they came out in each of these browsers rather than having to wait until the flip of a switch when their implementation of ES6 was 100% finalized.

The new process involves four different maturity stages.10 The more mature a proposal is, the more likely it is to eventually make it into the specification.

Any discussion, idea, or proposal for a change or addition that has not yet been submitted as a formal proposal is considered to be an aspirational “strawman” proposal (stage 0), but only TC39 members can create strawman proposals. At the time of this writing, there are over a dozen active strawman proposals.11

At stage 1 a proposal is formalized and expected to address cross-cutting concerns, interactions with other proposals, and implementation concerns. Proposals at this stage should identify a discrete problem and offer a concrete solution to the problem. A stage 1 proposal often includes a high-level API description, illustrative usage examples, and a discussion of internal semantics and algorithms. Stage 1 proposals are likely to change significantly as they make their way through the process.

Proposals in stage 2 offer an initial draft of the specification. At this point, it’s reasonable to begin experimenting with actual implementations in runtimes. The implementation could come in the form of a polyfill, user code that mangles the runtime into adhering to the proposal; an engine implementation, natively providing support for the proposal; or compiled into something existing engines can execute, using build-time tools to transform source code.

Proposals in stage 3 are candidate recommendations. In order for a proposal to advance to this stage, the specification editor and designated reviewers must have signed off on the final specification. Implementors should’ve expressed interest in the proposal as well. In practice, proposals move to this level with at least one browser implementation, a high-fidelity polyfill, or when supported by a build-time compiler like Babel. A stage 3 proposal is unlikely to change beyond fixes to issues identified in the wild.

In order for a proposal to attain stage 4 status, two independent implementations need to pass acceptance tests. Proposals that make their way through to stage 4 will be included in the next revision of ECMAScript.

New releases of the specification are expected to be published every year from now on. To accommodate the yearly release schedule, versions will now be referred to by their publication year. Thus ES6 becomes ES2015, then we have ES2016 instead of ES7, ES2017, and so on. Colloquially, ES2015 hasn’t taken and is still largely regarded as ES6. ES2016 had been announced before the naming convention changed, thus it is sometimes still referred to as ES7. When we leave out ES6 due to its pervasiveness in the community, we end up with: ES6, ES2016, ES2017, ES2018, and so on.

The streamlined proposal process combined with the yearly cut into standardization translates into a more consistent publication process, and it also means specification revision numbers are becoming less important. The focus is now on proposal stages, and we can expect references to specific revisions of the ECMAScript standard to become more uncommon.

A stage 3 candidate recommendation proposal is most likely to make it into the specification in the next cut, provided two independent implementations land in JavaScript engines. Effectively, stage 3 proposals are considered safe to use in real-world applications, be it through an experimental engine implementation, a polyfill, or using a compiler. Stage 2 and earlier proposals are also used in the wild by JavaScript developers, tightening the feedback loop between implementors and consumers.

Babel and similar compilers that take code as input and produce output native to the web platform (HTML, CSS, or JavaScript) are often referred to as transpilers, which are considered to be a subset of compilers. When we want to leverage a proposal that’s not widely implemented in JavaScript engines in our code, compilers like Babel can transform the portions of code using that new proposal into something that’s more widely supported by existing JavaScript implementations.

This transformation can be done at build time, so that consumers receive code that’s well supported by their JavaScript runtime of choice. This mechanism improves the runtime support baseline, giving JavaScript developers the ability to take advantage of new language features and syntax sooner. It is also significantly beneficial to specification writers and implementors, as it allows them to collect feedback regarding viability, desirability, and possible bugs or corner cases.

A transpiler can take the ES6 source code we write and produce ES5 code that browsers can interpret more consistently. This is the most reliable way of running ES6 code in production today: using a build step to produce ES5 code that most old browsers, as well as modern browsers, can execute.

The same applies to ES7 and beyond. As new versions of the language specification are released every year, we can expect compilers to support ES2017 input, ES2018 input, and so on. Similarly, as browser support becomes better, we can also expect compilers to reduce complexity in favor of ES6 output, then ES7 output, and so on. In this sense, we can think of JavaScript-to-JavaScript transpilers as a moving window that takes code written using the latest available language semantics and produces the most modern code they can output without compromising browser support.

Let’s talk about how you can use Babel as part of your workflow.

Babel can compile modern JavaScript code that relies on ES6 features into ES5. It produces human-readable code, making it more welcoming when we don’t have a firm grasp on all of the new features we’re using.

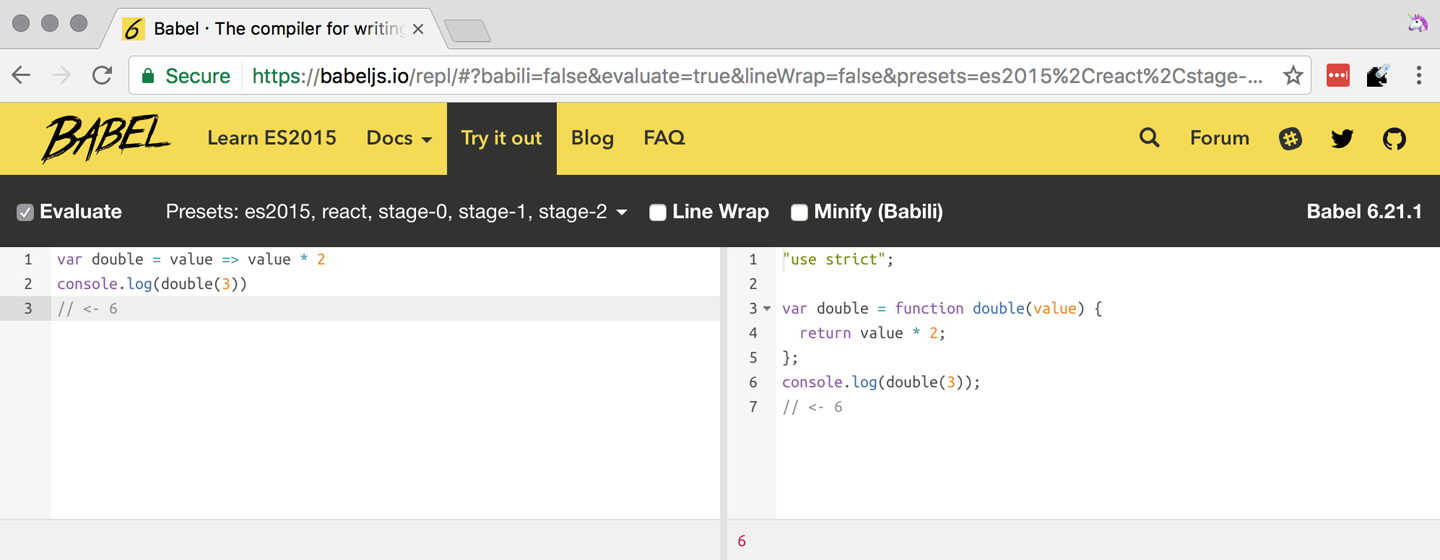

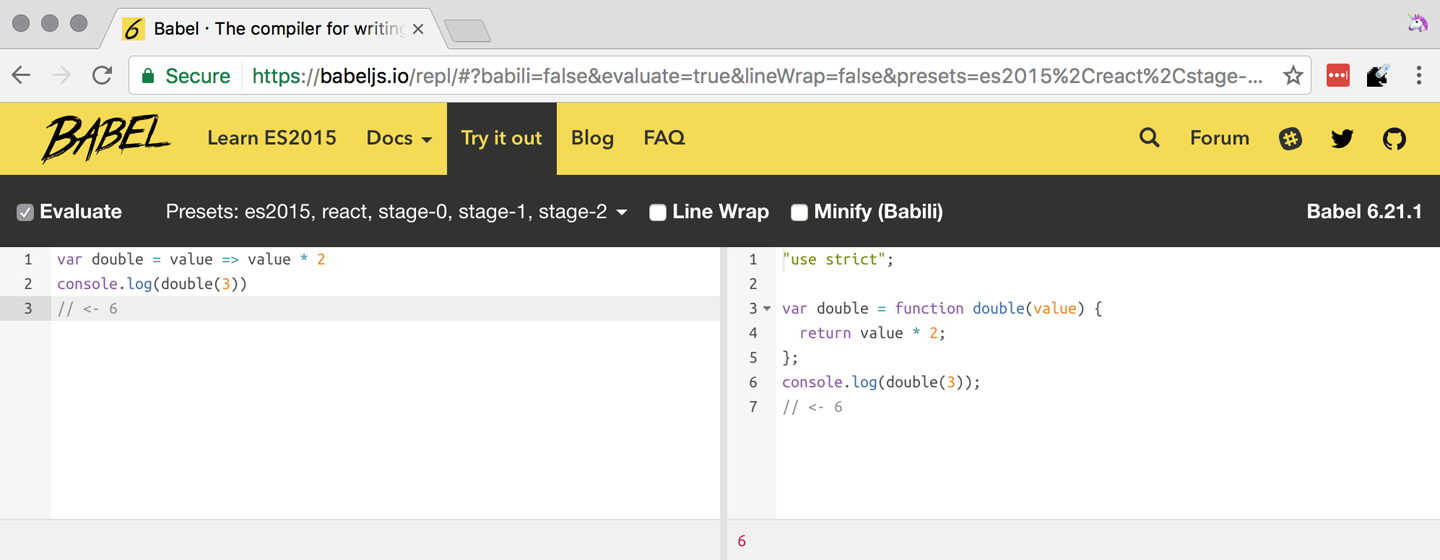

The online Babel REPL (Read-Evaluate-Print Loop) is an excellent way of jumping right into learning ES6, without any of the hassle of installing Node.js and the babel CLI, and manually compiling source code.

The REPL provides us with a source code input area that gets automatically compiled in real time. We can see the compiled code to the right of our source code.

Let’s write some code into the REPL. You can use the following code snippet to get started:

vardouble=value=>value*2console.log(double(3))// <- 6

To the right of the source code we’ve entered, you’ll see the transpiled ES5 equivalent, as shown in Figure 1-1. As you update your source code, the transpiled result is also updated in real time.

The Babel REPL is an effective companion as a way of trying out some of the features introduced in this book. However, note that Babel doesn’t transpile new built-ins, such as Symbol, Proxy, and WeakMap. Those references are instead left untouched, and it’s up to the runtime executing the Babel output to provide those built-ins. If we want to support runtimes that haven’t yet implemented these built-ins, we could import the babel-polyfill package in our code.

In older versions of JavaScript, semantically correct implementations of these features are hard to accomplish or downright impossible. Polyfills may mitigate the problem, but they often can’t cover all use cases and thus some compromises need to be made. We need to be careful and test our assumptions before we release transpiled code that relies on built-ins or polyfills into the wild.

Given the situation, it might be best to wait until browsers support new built-ins holistically before we start using them. It is suggested that you consider alternative solutions that don’t rely on built-ins. At the same time, it’s important to learn about these features, as to not fall behind in our understanding of the JavaScript language.

Modern browsers like Chrome, Firefox, and Edge now support a large portion of ES2015 and beyond, making their developer tools useful when we want to take the semantics of a particular feature for a spin, provided it’s supported by the browser. When it comes to production-grade applications that rely on modern JavaScript features, a transpilation build-step is advisable so that your application supports a wider array of JavaScript runtimes.

Besides the REPL, Babel offers a command-line tool written as a Node.js package. You can install it through npm, the package manager for Node.

Download Node.js. After installing node, you’ll also be able to use the npm command-line tool in your terminal.

Before getting started we’ll make a project directory and a package.json file, which is a manifest used to describe Node.js applications. We can create the package.json file through the npm CLI:

mkdir babel-setup

cd babel-setup

npm init --yes

Passing the --yes flag to the init command configures package.json using the default values provided by npm, instead of asking us any questions.

Let’s also create a file named example.js, containing the following bits of ES6 code. Save it to the babel-setup directory you’ve just created, under a src subdirectory:

vardouble=value=>value*2console.log(double(3))// <- 6

To install Babel, enter the following couple of commands into your favorite terminal:

npm install babel-cli@6 --save-dev npm install babel-preset-env@6 --save-dev

Packages installed by npm will be placed in a node_modules directory at the project root. We can then access these packages by creating npm scripts or by using require statements in our application.

Using the --save-dev flag will add these packages to our package.json manifest as development dependencies, so that when copying our project to new environments we can reinstall every dependency just by running npm install.

The @ notation indicates we want to install a specific version of a package. Using @6 we’re telling npm to install the latest version of babel-cli in the 6.x range. This preference is handy to future-proof our applications, as it would never install 7.0.0 or later versions, which might contain breaking changes that could not have been foreseen at the time of this writing.

For the next step, we’ll replace the value of the scripts property in package.json with the following. The babel command-line utility provided by babel-cli can take the entire contents of our src directory, compile them into the desired output format, and save the results to a dist directory, while preserving the original directory structure under a different root:

{"scripts":{"build":"babel src --out-dir dist"}}

Together with the packages we’ve installed in the previous step, a minimal package.json file could look like the code in the following snippet:

{"scripts":{"build":"babel src --out-dir dist"},"devDependencies":{"babel-cli":"^6.24.0","babel-preset-env":"^1.2.1"}}

Any commands enumerated in the scripts object can be executed through npm run <name>, which temporarily modifies the $PATH environment variable so that we can run the command-line executables found in babel-cli without installing babel-cli globally on our system.

If you execute npm run build in your terminal now, you’ll note that a dist/example.js file is created. The output file will be identical to our original file, because Babel doesn’t make assumptions, and we have to configure it first. Create a .babelrc file next to package.json, and write the following JSON in it:

{"presets":["env"]}

The env preset, which we installed earlier via npm, adds a series of plugins to Babel that transform different bits of ES6 code into ES5. Among other things, this preset transforms arrow functions like the one in our example.js file into ES5 code. The env Babel preset works by convention, enabling Babel transformation plugins according to feature support in the latest browsers. This preset is configurable, meaning we can decide how far back we want to cover browser support. The more browsers we support, the larger our transpiled bundle. The fewer browsers we support, the fewer customers we can satisfy. As always, research is of the essence to identify what the correct configuration for the Babel env preset is. By default, every transform is enabled, providing broad runtime support.

Once we run our build script again, we’ll observe that the output is now valid ES5 code:

» npm run build » cat dist/example.js"use strict"vardouble=functiondouble(value){returnvalue * 2}console.log(double(3))// <- 6

Let’s jump into a different kind of tool, the eslint code linter, which can help us establish a code quality baseline for our applications.

As we develop a codebase we factor out snippets that are redundant or no longer useful, write new pieces of code, delete features that are no longer relevant or necessary, and shift chunks of code around while accommodating a new architecture. As the codebase grows, the team working on it changes as well: at first it may be a handful of people or even one person, but as the project grows in size so might the team.

A lint tool can be used to identify syntax errors. Modern linters are often customizable, helping establish a coding style convention that works for everyone on the team. By adhering to a consistent set of style rules and a quality baseline, we bring the team closer together in terms of coding style. Every team member has different opinions about coding styles, but those opinions can be condensed into style rules once we put a linter in place and agree upon a configuration.

Beyond ensuring a program can be parsed, we might want to prevent throw statements throwing string literals as exceptions, or disallow console.log and debugger statements in production code. However, a rule demanding that every function call must have exactly one argument is probably too harsh.

While linters are effective at defining and enforcing a coding style, we should be careful when devising a set of rules. If the lint step is too stringent, developers may become frustrated to the point where productivity is affected. If the lint step is too lenient, it may not yield a consistent coding style across our codebase.

In order to strike the right balance, we may consider avoiding style rules that don’t improve our programs in the majority of cases when they’re applied. Whenever we’re considering a new rule, we should ask ourselves whether it would noticeably improve our existing codebase, as well as new code going forward.

ESLint is a modern linter that packs several plugins, sporting different rules, allowing us to pick and choose which ones we want to enforce. We decide whether failing to stick by these rules should result in a warning being printed as part of the output, or a halting error. To install eslint, we’ll use npm just like we did with babel in the previous section:

npm install eslint@3 --save-dev

Next, we need to configure ESLint. Since we installed eslint as a local dependency, we’ll find its command-line tool in node_modules/.bin. Executing the following command will guide us through configuring ESLint for our project for the first time. To get started, indicate you want to use a popular style guide and choose Standard,12 then pick JSON format for the configuration file:

./node_modules/.bin/eslint --init ? How would you like to configure ESLint? Use a popular style guide ? Which style guidedoyou want to follow? Standard ? What formatdoyou want your config file to be in? JSON

Besides individual rules, eslint allows us to extend predefined sets of rules, which are packaged up as Node.js modules. This is useful when sharing configuration across multiple projects, and even across a community. After picking Standard, we’ll notice that ESLint adds a few dependencies to package.json, namely the packages that define the predefined Standard ruleset; and then creates a configuration file, named .eslintrc.json, with the following contents:

{"extends":"standard","plugins":["standard","promise"]}

Referencing the node_modules/.bin directory, an implementation detail of how npm works, is far from ideal. While we used it when initializing our ESLint configuration, we shouldn’t keep this reference around nor type it out whenever we lint our codebase. To solve this problem, we’ll add the lint script in the next code snippet to our package.json:

{"scripts":{"lint":"eslint ."}}

As you might recall from the Babel example, npm run adds node_modules to the PATH when executing scripts. To lint our codebase, we can execute npm run lint and npm will find the ESLint CLI embedded deep in the node_modules directory.

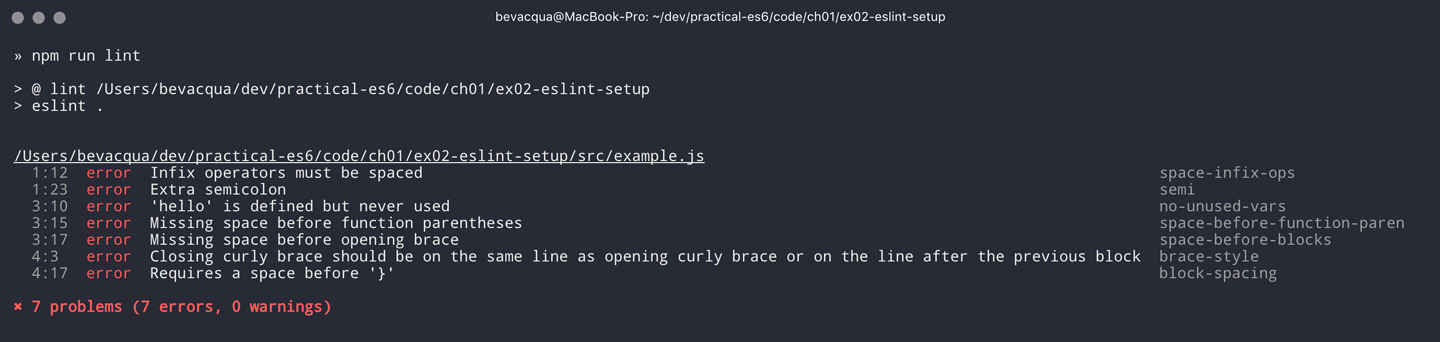

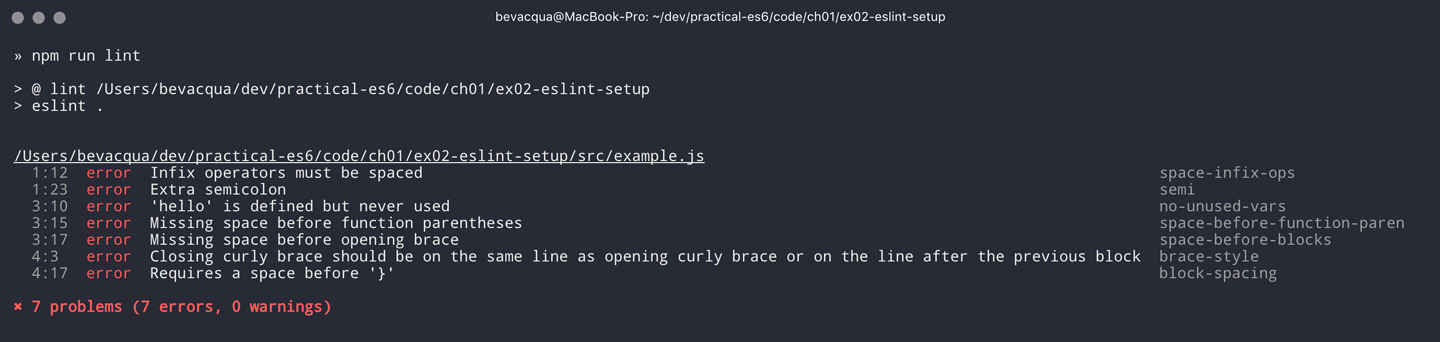

Let’s consider the following example.js file, which is purposely riddled with style issues, to demonstrate what ESLint does:

vargoodbye='Goodbye!'functionhello(){returngoodbye}if(false){}

When we run the lint script, ESLint describes everything that’s wrong with the file, as shown in Figure 1-2.

ESLint is able to fix most style problems automatically if we pass in a --fix flag. Add the following script to your package.json:

{"scripts":{"lint-fix":"eslint . --fix"}}

When we run lint-fix we’ll only get a pair of errors: hello is never used and false is a constant condition. Every other error has been fixed in place, resulting in the following bit of source code. The remaining errors weren’t fixed because ESLint avoids making assumptions about our code, and prefers not to incur semantic changes. In doing so, --fix becomes a useful tool to resolve code style wrinkles without risking a broken program as a result.

vargoodbye='Goodbye!'functionhello(){returngoodbye}if(false){}

A similar kind of tool can be found in prettier, which can be used to automatically format your code. Prettier can be configured to automatically overwrite our code ensuring it follows preferences such as a given amount of spaces for indentation, single or double quotes, trailing commas, or a maximum line length.

Now that you know how to compile modern JavaScript into something every browser understands, and how to properly lint and format your code, let’s jump into ES6 feature themes and the future of JavaScript.

ES6 is big: the language specification went from 258 pages in ES5.1 to over double that amount in ES6, at 566 pages. Each change to the specification falls in some of a few different categories:

Syntactic sugar

New mechanics

Better semantics

More built-ins and methods

Nonbreaking solutions to existing limitations

Syntactic sugar is one of the most significant drivers in ES6. The new version offers a shorter way of expressing object inheritance, using the new class syntax; functions, using a shorthand syntax known as arrow functions; and properties, using property value shorthands. Several other features we’ll explore, such as destructuring, rest, and spread, also offer semantically sound ways of writing programs. Chapters 2 and 3 attack these aspects of ES6.

We get several new mechanics to describe asynchronous code flows in ES6: promises, which represent the eventual result of an operation; iterators, which represent a sequence of values; and generators, a special kind of iterator that can produce a sequence of values. In ES2017, async/await builds on top of these new concepts and constructs, letting us write asynchronous routines that appear synchronous. We’ll evaluate all of these iteration and flow control mechanisms in Chapter 4.

There’s a common practice in JavaScript where developers use plain objects to create hash maps with arbitrary string keys. This can lead to vulnerabilities if we’re not careful and let user input end up defining those keys. ES6 introduces a few different native built-ins to manage sets and maps, which don’t have the limitation of using string keys exclusively. These collections are explored in Chapter 9.

Proxy objects redefine what can be done through JavaScript reflection. Proxy objects are similar to proxies in other contexts, such as web traffic routing. They can intercept any interaction with a JavaScript object such as defining, deleting, or accessing a property. Given the mechanics of how proxies work, they are impossible to polyfill holistically: polyfills exist, but they have limitations making them incompatible with the specification in some use cases. We’ll devote Chapter 6 to understanding proxies.

Besides new built-ins, ES6 comes with several updates to Number, Math, Array, and strings. In Chapter 7 we’ll go over a plethora of new instance and static methods added to these built-ins.

We are getting a new module system that’s native to JavaScript. After going over the CommonJS module format that’s used in Node.js, Chapter 8 explains the semantics we can expect from native JavaScript modules.

Due to the sheer amount of changes introduced by ES6, it’s hard to reconcile its new features with our pre-existing knowledge of JavaScript. We’ll spend all of Chapter 9 analyzing the merits and importance of different individual features in ES6, so that you have a practical grounding upon which you can start experimenting with ES6 right away.

The JavaScript language has evolved from its humble beginnings in 1995 to the formidable language it is today. While ES6 is a great step forward, it’s not the finish line. Given we can expect new specification updates every year, it’s important to learn how to stay up-to-date with the specification.

Having gone over the rolling standard specification development process in Section 1.2: ECMAScript as a Rolling Standard, one of the best ways to keep up with the standard is by periodically visiting the TC39 proposals repository.13 Keep an eye on candidate recommendations (stage 3), which are likely to make their way into the specification.

Describing an ever-evolving language in a book can be challenging, given the rolling nature of the standards process. An effective way of keeping up-to-date with the latest JavaScript updates is by watching the TC39 proposals repository, subscribing to weekly email newsletters14, and reading JavaScript blogs.15

At the time of this writing, the long awaited Async Functions proposal has made it into the specification and is slated for publication in ES2017. There are several candidates at the moment, such as dynamic import(), which enables asynchronous loading of native JavaScript modules, and a proposal to describe object property enumerations using the new rest and spread syntax that was first introduced for parameter lists and arrays in ES6.

While the primary focus in this book is on ES6, we’ll also learn about important candidate recommendations such as the aforementioned async functions, dynamic import() calls, or object rest/spread, among others.

1 A booklet from 1998 explains the intricacies of server-side JavaScript with LiveWire.

2 You can read the original announcement at the Microsoft website (July, 2000).

3 Listen to Brendan Eich in the JavaScript Jabber podcast, talking about the origin of JavaScript.

4 You can read a news report from The Mac Observer, July 2003.

5 Brendan Eich sent an email to the es-discuss mailing list in 2008 where he summarized the situation, almost 10 years after ES3 had been released.

6 For the full set of changes made when merging the Web ECMAScript specification upstream, see the WHATWG blog.

7 Check out the presentation “Post-ES6 Spec Process” from September 2013 that led to the streamlined proposal revisioning process here.

8 Check out all of the proposals being considered by TC39.

9 Check out this detailed table reporting ES6 compatibility across browsers.

10 Take a look at the TC39 proposal process documentation.

11 You can track strawman proposals.

12 Note that Standard is just a self-proclamation, and not actually standardized in any official capacity. It doesn’t really matter which style guide you follow as long as you follow it consistently. Consistency helps reduce confusion while reading a project’s codebase. The Airbnb style guide is also fairly popular and it doesn’t omit semicolons by default, unlike Standard.

13 Check out all of the proposals being considered by TC39.

14 There are many newsletters, including Pony Foo Weekly and JavaScript Weekly.

15 Many of the articles on Pony Foo and by Axel Rauschmayer focus on ECMAScript development.

The sixth edition of the language comes with a plethora of non-breaking syntax improvements, most of which we’ll tackle throughout this chapter. Many of these changes are syntactic sugar; that is, they could be represented in ES5, albeit using more complicated pieces of code. There are also changes that aren’t merely syntactic sugar but a completely different way of declaring variables using let and const, as we’ll see toward the end of the chapter.

Object literals get a few syntax changes in ES6, and they’re a good place to start.

An object literal is any object declaration using the {} shorthand syntax, such as the following example:

varbook={title:'Modular ES6',author:'Nicolas',publisher:'O´Reilly'}

ES6 brings a few improvements to object literal syntax: property value shorthands, computed property names, and method definitions. Let’s go through them and describe their use cases as well.

Sometimes we declare objects with one or more properties whose values are references to variables by the same name. For example, we might have a listeners collection, and in order to assign it to a property called listeners of an object literal, we have to repeat its name. The following snippet has a typical example where we have an object literal declaration with a couple of these repetitive properties:

varlisteners=[]functionlisten(){}varevents={listeners:listeners,listen:listen}

Whenever you find yourself in this situation, you can omit the property value and the colon by taking advantage of the new property value shorthand syntax in ES6. As shown in the following example, the new ES6 syntax makes the assignment implicit:

varlisteners=[]functionlisten(){}varevents={listeners,listen}

As we’ll further explore in the second part of the book, property value shorthands help de-duplicate the code we write without diluting its meaning. In the following snippet, I reimplemented part of localStorage, a browser API for persistent storage, as an in-memory ponyfill.1 If it weren’t for the shorthand syntax, the storage object would be more verbose to type out:

varstore={}varstorage={getItem,setItem,clear}functiongetItem(key){returnkeyinstore?store[key]:null}functionsetItem(key,value){store[key]=value}functionclear(){store={}}

That’s the first of many ES6 features that are aimed toward reducing complexity in the code you have to maintain. Once you get used to the syntax, you’ll notice that code readability and developer productivity get boosts as well.

Sometimes you have to declare objects that contain properties with names based on variables or other JavaScript expressions, as shown in the following piece of code written in ES5. For this example, assume that expertise is provided to you as a function parameter, and is not a value you know beforehand:

varexpertise='journalism'varperson={name:'Sharon',age:27}person[expertise]={years:5,interests:['international','politics','internet']}

Object literals in ES6 aren’t constrained to declarations with static names. With computed property names, you can wrap any expression in square brackets, and use that as the property name. When the declaration is reached, your expression is evaluated and used as the property name. The following example shows how the piece of code we just saw could declare the person object in a single step, without having to resort to a second statement adding the person’s expertise.

varexpertise='journalism'varperson={name:'Sharon',age:27,[expertise]:{years:5,interests:['international','politics','internet']}}

You can’t combine the property value shorthands with computed property names. Value shorthands are simple compile-time syntactic sugar that helps avoid repetition, while computed property names are evaluated at runtime. Given that we’re trying to mix these two incompatible features, the following example would throw a syntax error. In most cases this combination would lead to code that’s hard to interpret for other humans, so it’s probably a good thing that you can’t combine the two.

varexpertise='journalism'varjournalism={years:5,interests:['international','politics','internet']}varperson={name:'Sharon',age:27,[expertise]// this is a syntax error!}

A common scenario for computed property names is when we want to add an entity to an object map that uses the entity.id field as its keys, as shown next. Instead of having to have a third statement where we add the grocery to the groceries map, we can inline that declaration in the groceries object literal itself.

vargrocery={id:'bananas',name:'Bananas',units:6,price:10,currency:'USD'}vargroceries={[grocery.id]:grocery}

Another case may be whenever a function receives a parameter that it should then use to build out an object. In ES5 code, you’d need to allocate a variable declaring an object literal, then add the dynamic property, and then return the object. The following example shows exactly that, when creating an envelope that could later be used for Ajax messages that follow a convention: they have an error property with a description when something goes wrong, and a success property when things turn out okay:

functiongetEnvelope(type,description){varenvelope={data:{}}envelope[type]=descriptionreturnenvelope}

Computed property names help us write the same function more concisely, using a single statement:

functiongetEnvelope(type,description){return{data:{},[type]:description}}

The last enhancement coming to object literals is about functions.

Typically, you can declare methods on an object by adding properties to it. In the next snippet, we’re creating a small event emitter that supports multiple kinds of events. It comes with an emitter#on method that can be used to register event listeners, and an emitter#emit method that can be used to raise events:

varemitter={events:{},on:function(type,fn){if(this.events[type]===undefined){this.events[type]=[]}this.events[type].push(fn)},emit:function(type,event){if(this.events[type]===undefined){return}this.events[type].forEach(function(fn){fn(event)})}}

Starting in ES6, you can declare methods on an object literal using the new method definition syntax. In this case, we can omit the colon and the function keyword. This is meant as a terse alternative to traditional method declarations where you need to use the function keyword. The following example shows how our emitter object looks when using method definitions.

varemitter={events:{},on(type,fn){if(this.events[type]===undefined){this.events[type]=[]}this.events[type].push(fn)},emit(type,event){if(this.events[type]===undefined){return}this.events[type].forEach(function(fn){fn(event)})}}

Arrow functions are another way of declaring functions in ES6, and they come in several flavors. Let’s investigate what arrow functions are, how they can be declared, and how they behave semantically.

In JavaScript you typically declare functions using code like the following, where you have a name, a list of parameters, and a function body.

functionname(parameters){// function body}

You could also create anonymous functions, by omitting the name when assigning the function to a variable, a property, or a function call.

varexample=function(parameters){// function body}

Starting with ES6, you can use arrow functions as another way of writing anonymous functions. Keep in mind, there are several slightly different ways of writing them. The following piece of code shows an arrow function that’s very similar to the anonymous function we just saw. The only difference seems to be the missing function keyword and the => arrow to the right of the parameter list.

varexample=(parameters)=>{// function body}

While arrow functions look very similar to your typical anonymous function, they are fundamentally different: arrow functions can’t be named explicitly, although modern runtimes can infer a name based on the variable they’re assigned to; they can’t be used as constructors nor do they have a prototype property, meaning you can’t use new on an arrow function; and they are bound to their lexical scope, which is the reason why they don’t alter the meaning of this.

Let’s dig into their semantic differences with traditional functions, the many ways to declare an arrow function, and practical use cases.

In the body of an arrow function, this, arguments, and super point to the containing scope, since arrow functions don’t create a new scope. Consider the following example. We have a timer object with a seconds counter and a start method defined using the syntax we learned about earlier. We then start the timer, wait for a few seconds, and log the current amount of elapsed seconds:

vartimer={seconds:0,start(){setInterval(()=>{this.seconds++},1000)}}timer.start()setTimeout(function(){console.log(timer.seconds)},3500)// <- 3

If we had defined the function passed to setInterval as a regular anonymous function instead of using an arrow function, this would’ve been bound to the context of the anonymous function, instead of the context of the start method. We could have implemented timer with a declaration like var self = this at the beginning of the start method, and then referencing self instead of this. With arrow functions, the added complexity of keeping context references around fades away and we can focus on the functionality of our code.

In a similar fashion, lexical binding in ES6 arrow functions also means that function calls won’t be able to change the this context when using .call, .apply, .bind, etc. That limitation is usually more useful than not, as it ensures that the context will always be preserved and constant.

Let’s now shift our attention to the following example. What do you think the console.log statement will print?

functionpuzzle(){returnfunction(){console.log(arguments)}}puzzle('a','b','c')(1,2,3)

The answer is that arguments refers to the context of the anonymous function, and thus the arguments passed to that function will be printed. In this case, those arguments are 1, 2, 3.

What about in the following case, where we use an arrow function instead of the anonymous function in the previous example?

functionpuzzle(){return()=>console.log(arguments)}puzzle('a','b','c')(1,2,3)

In this case, the arguments object refers to the context of the puzzle function, because arrow functions don’t create a closure. For this reason, the printed arguments will be 'a', 'b', 'c'.

I’ve mentioned there are several flavors of arrow functions, but so far we’ve only looked at their fully fleshed version. What are the other ways to represent an arrow function?

Let’s look one more time at the arrow function syntax we’ve learned so far:

varexample=(parameters)=>{// function body}

An arrow function with exactly one parameter can omit the parentheses. This is optional. It’s useful when passing the arrow function to another method, as it reduces the amount of parentheses involved, making it easier for some humans to parse the code:

vardouble=value=>{returnvalue*2}

Arrow functions are heavily used for simple functions, such as the double function we just saw. The following flavor of arrow functions does away with the function body. Instead, you provide an expression such as value * 2. When the function is called, the expression is evaluated and its result is returned. The return statement is implicit, and there’s no need for curly braces denoting the function body anymore, as you can only use a single expression:

vardouble=(value)=>value*2

Note that you can combine implicit parentheses and implicit return, making for concise arrow functions:

vardouble=value=>value*2

Now that you understand arrow functions, let’s ponder about their merits and where they might be a good fit.

As a rule of thumb, you shouldn’t blindly adopt ES6 features wherever you can. Instead, it’s best to reason about each case individually and see whether adopting the new feature actually improves code readability and maintainability. ES6 features are not strictly better than what we had all along, and it’s a bad idea to treat them as such.

There are a few situations where arrow functions may not be the best tool. For example, if you have a large function comprised of several lines of code, replacing function with => is hardly going to improve your code. Arrow functions are often most effective for short routines, where the function keyword and syntax boilerplate make up a significant portion of the function expression.

Properly naming a function adds context to make it easier for humans to interpret them. Arrow functions can’t be explicitly named, but they can be named implicitly by assigning them to a variable. In the following example, we assign an arrow function to the throwError variable. When calling this function results in an error, the stack trace properly identifies the arrow function as throwError:

varthrowError=message=>{thrownewError(message)}throwError('this is a warning')<-UncaughtError:thisisawarningatthrowError

Arrow functions are neat when it comes to defining anonymous functions that should probably be lexically bound anyway, and they can definitely make your code more terse in some situations. They are particularly useful in most functional programming situations, such as when using .map, .filter, or .reduce on collections, as shown in the following example:

[1,2,3,4].map(value=>value*2).filter(value=>value>2).forEach(value=>console.log(value))// <- 4// <- 6// <- 8

This is one of the most flexible and expressive features in ES6. It’s also one of the simplest. It binds properties to as many variables as you need. It works with objects, arrays, and even in function parameter lists. Let’s go step by step, starting with objects.

Imagine you had a program with some comic book characters, Bruce Wayne being one of them, and you want to refer to properties in the object that describes him. Here’s the example object we’ll be using for Batman:

varcharacter={name:'Bruce',pseudonym:'Batman',metadata:{age:34,gender:'male'},batarang:['gas pellet','bat-mobile control','bat-cuffs']}

If you wanted a pseudonym variable referencing character.pseudonym, you could write the following bit of ES5 code. This is commonplace when, for instance, you’ll be referencing pseudonym in several places in your codebase and you’d prefer to avoid typing out character.pseudonym each time:

varpseudonym=character.pseudonym

With destructuring in assignment, the syntax becomes a bit more clear. As you can see in the next example, you don’t have to write pseudonym twice, while still clearly conveying intent. The following statement is equivalent to the previous one written in ES5 code:

var{pseudonym}=character

Just like you could declare multiple comma-separated variables with a single var statement, you can also declare multiple variables within the curly braces of a destructuring expression:

var{pseudonym,name}=character

In a similar fashion, you could mix and match destructuring with regular variable declarations in the same var statement. While this might look a bit confusing at first, it’ll be up to any JavaScript coding style guides you follow to determine whether it’s appropriate to declare several variables in a single statement. In any case, it goes to show the flexibility offered by destructuring syntax:

var{pseudonym}=character,two=2

If you want to extract a property named pseudonym but would like to declare it as a variable named alias, you can use the following destructuring syntax, known as aliasing. Note that you can use alias or any other valid variable name:

var{pseudonym:alias}=characterconsole.log(alias)// <- 'Batman'

While aliases don’t look any simpler than the ES5 flavor, alias = character.pseudonym, they start making sense when you consider the fact that destructuring supports deep structures, as in the following example:

var{metadata:{gender}}=character

In cases like the previous one, where you have deeply nested properties being destructured, you might be able to convey a property name more clearly if you choose an alias. Consider the next snippet, where a property named code wouldn’t have been as indicative of its contents as colorCode could be:

var{metadata:{gender:characterGender}}=character

The scenario we just saw repeats itself frequently, because properties are often named in the context of their host object. While palette.color.code is perfectly descriptive, code on its own could mean a wide variety of things, and aliases such as colorCode can help you bring context back into the variable name while still using destructuring.

Whenever you access a nonexistent property in ES5 notation, you get a value of undefined:

console.log(character.boots)// <- undefinedconsole.log(character['boots'])// <- undefined

With destructuring, the same behavior prevails. When declaring a destructured variable for a property that’s missing, you’ll get back undefined as well.

var{boots}=characterconsole.log(boots)// <- undefined

A destructured declaration accessing a nested property of a parent object that’s null or undefined will throw an Exception, just like regular attempts to access properties of null or undefined would, in other cases.

var{boots:{size}}=character// <- Exceptionvar{missing}=null// <- Exception

When you think of that piece of code as the equivalent ES5 code shown next, it becomes evident why the expression must throw, given that destructuring is mostly syntactic sugar.

varnothing=nullvarmissing=nothing.missing// <- Exception

As part of destructuring, you can provide default values for those cases where the value is undefined. The default value can be anything you can think of: numbers, strings, functions, objects, a reference to another variable, etc.

var{boots={size:10}}=characterconsole.log(boots)// <- { size: 10 }

Default values can also be provided in nested property destructuring.

var{metadata:{enemy='Satan'}}=characterconsole.log(enemy)// <- 'Satan'

For use in combination with aliases, you should place the alias first, and then the default value, as shown next.

var{boots:footwear={size:10}}=character

It’s possible to use the computed property names syntax in destructuring patterns. In this case, however, you’re required to provide an alias to be used as the variable name. That’s because computed property names allow arbitrary expressions and thus the compiler wouldn’t be able to infer a variable name. In the following example we use the value alias, and a computed property name to extract the boots property from the character object.

var{['boo'+'ts']:characterBoots}=characterconsole.log(characterBoots)// <- true

This flavor of destructuring is probably the least useful, as characterBoots = character[type] is usually simpler than { [type]: characterBoots } = character, as it’s a more sequential statement. That being said, the feature is useful when you have properties you want to declare in the object literal, as opposed to using subsequent assignment statements.

That’s it, as far as objects go, in terms of destructuring. What about arrays?

The syntax for destructuring arrays is similar to that of objects. The following example shows a coordinates object that’s destructured into two variables: x and y. Note how the notation uses square brackets instead of curly braces; this denotes we’re using array destructuring instead of object destructuring. Instead of having to sprinkle your code with implementation details like x = coordinates[0], with destructuring you can convey your meaning clearly and without explicitly referencing the indices, naming the values instead.

varcoordinates=[12,-7]var[x,y]=coordinatesconsole.log(x)// <- 12

When destructuring arrays, you can skip uninteresting properties or those that you otherwise don’t need to reference.

varnames=['James','L.','Howlett']var[firstName,,lastName]=namesconsole.log(lastName)// <- 'Howlett'

Array destructuring allows for default values just like object destructuring.

varnames=['James','L.']var[firstName='John',,lastName='Doe']=namesconsole.log(lastName)// <- 'Doe'

In ES5, when you have to swap the values of two variables, you typically resort to a third, temporary variable, as in the following snippet.

varleft=5varright=7varaux=leftleft=rightright=aux

Destructuring helps you avoid the aux declaration and focus on your intent. Once again, destructuring helps us convey intent more tersely and effectively for the use case.

varleft=5varright=7[left,right]=[right,left]

The last area of destructuring we’ll be covering is function parameters.

Function parameters in ES6 enjoy the ability of specifying default values as well. The following example defines a default exponent with the most commonly used value.

functionpowerOf(base,exponent=2){returnMath.pow(base,exponent)}

Defaults can be applied to arrow function parameters as well. When we have default values in an arrow function we must wrap the parameter list in parentheses, even when there’s a single parameter.

vardouble=(input=0)=>input*2

Default values aren’t limited to the rightmost parameters of a function, as in a few other programming languages. You could provide default values for any parameter, in any position.

functionsumOf(a=1,b=2,c=3){returna+b+c}console.log(sumOf(undefined,undefined,4))// <- 1 + 2 + 4 = 7

In JavaScript it’s not uncommon to provide a function with an options object, containing several properties. You could determine a default options object if one isn’t provided, as shown in the next snippet.

vardefaultOptions={brand:'Volkswagen',make:1999}functioncarFactory(options=defaultOptions){console.log(options.brand)console.log(options.make)}carFactory()// <- 'Volkswagen'// <- 1999

The problem with this approach is that as soon as the consumer of carFactory provides an options object, you lose all of your defaults.

carFactory({make:2000})// <- undefined// <- 2000

We can mix function parameter default values with destructuring, and get the best of both worlds.

A better approach than merely providing a default value might be to destructure options entirely, providing default values for each property, individually, within the destructuring pattern. This approach also lets you reference each option without going through an options object, but you lose the ability to reference options directly, which might represent an issue in some situations.

functioncarFactory({brand='Volkswagen',make=1999}){console.log(brand)console.log(make)}carFactory({make:2000})// <- 'Volkswagen'// <- 2000

In this case, however, we’ve once again lost the default value for the case where the consumer doesn’t provide any options. Meaning carFactory() will now throw when an options object isn’t provided. This can be remedied by using the syntax shown in the following snippet of code, which adds a default options value of an empty object. The empty object is then filled, property by property, with the default values on the destructuring pattern.

functioncarFactory({brand='Volkswagen',make=1999}={}){console.log(brand)console.log(make)}carFactory()// <- 'Volkswagen'// <- 1999

Besides default values, you can use destructuring in function parameters to describe the shape of objects your function can handle. Consider the following code snippet, where we have a car object with several properties. The car object describes its owner, what kind of car it is, who manufactured it, when, and the owner’s preferences when he purchased the car.

varcar={owner:{id:'e2c3503a4181968c',name:'Donald Draper'},brand:'Peugeot',make:2015,model:'208',preferences:{airbags:true,airconditioning:false,color:'red'}}

If we wanted to implement a function that only takes into account certain properties of a parameter, it might be a good idea to reference those properties explicitly by destructuring up front. The upside is that we become aware of every required property upon reading the function’s signature.

When we destructure everything up front, it’s easy to spot when input doesn’t adhere to the contract of a function. The following example shows how every property we need could be specified in the parameter list, laying bare the shape of the objects we can handle in the getCarProductModel API.

vargetCarProductModel=({brand,make,model})=>({sku:brand+':'+make+':'+model,brand,make,model})getCarProductModel(car)

Besides default values and filling an options object, let’s explore what else destructuring is good at.

Whenever there’s a function that returns an object or an array, destructuring makes it much terser to interact with. The following example shows a function that returns an object with some coordinates, where we grab only the ones we’re interested in: x and y. We’re avoiding an intermediate point variable declaration that often gets in the way without adding a lot of value to the readability of your code.

functiongetCoordinates(){return{x:10,y:22,z:-1,type:'3d'}}var{x,y}=getCoordinates()

The case for default option values bears repeating. Imagine you have a random function that produces random integers between a min and a max value, and that it should default to values between 1 and 10. This is particularly interesting as an alternative to named parameters in languages with strong typing features, such as Python and C#. This pattern, where you’re able to define default values for options and then let consumers override them individually, offers great flexibility.

functionrandom({min=1,max=10}={}){returnMath.floor(Math.random()*(max-min))+min}console.log(random())// <- 7console.log(random({max:24}))// <- 18

Regular expressions are another great fit for destructuring. Destructuring empowers you to name groups from a match without having to resort to index numbers. Here’s an example RegExp that could be used for parsing simple dates, and an example of destructuring those dates into each of their components. The first entry in the resulting array is reserved for the raw input string, and we can discard it.

functionsplitDate(date){varrdate=/(\d+).(\d+).(\d+)/returnrdate.exec(date)}var[,year,month,day]=splitDate('2015-11-06')

You’ll want to be careful when the regular expression doesn’t match, as that returns null. Perhaps a better approach would be to test for the failure case before destructuring, as shown in the following bit of code.

varmatches=splitDate('2015-11-06')if(matches===null){return}var[,year,month,day]=matches

Before ES6, interacting with an arbitrary amount of function parameters was complicated. You had to use arguments, which isn’t an array but has a length property. Usually you’d end up casting the arguments object into an actual array using Array#slice.call, and going from there, as shown in the following snippet.

functionjoin(){varlist=Array.prototype.slice.call(arguments)returnlist.join(', ')}join('first','second','third')// <- 'first, second, third'

ES6 has a better solution to the problem, and that’s rest parameters.

You can now precede the last parameter in any JavaScript function with three dots, converting it into a special “rest parameter.” When the rest parameter is the only parameter in a function, it gets all arguments passed to the function: it works just like the .slice solution we saw earlier, but you avoid the need for a complicated construct like arguments, and it’s specified in the parameter list.

functionjoin(...list){returnlist.join(', ')}join('first','second','third')// <- 'first, second, third'

Named parameters before the rest parameter won’t be included in the list.

functionjoin(separator,...list){returnlist.join(separator)}join('; ','first','second','third')// <- 'first; second; third'

Note that arrow functions with a rest parameter must include parentheses, even when it’s the only parameter. Otherwise, a SyntaxError would be thrown. The following piece of code is a beautiful example of how combining arrow functions and rest parameters can yield concise functional expressions.

varsumAll=(...numbers)=>numbers.reduce((total,next)=>total+next)console.log(sumAll(1,2,5))// <- 8

Compare that with the ES5 version of the same function. Granted, it’s all in the complexity. While terse, the sumAll function can be confusing to readers unused to the .reduce method, or because it uses two arrow functions. This is a complexity trade-off that we’ll cover in the second part of the book.

functionsumAll(){varnumbers=Array.prototype.slice.call(arguments)returnnumbers.reduce(function(total,next){returntotal+next})}console.log(sumAll(1,2,5))// <- 8

Next up we have the spread operator. It’s also denoted with three dots, but it serves a slightly different purpose.

The spread operator can be used to cast any iterable object into an array. Spreading effectively expands an expression onto a target such as an array literal or a function call. The following example uses ...arguments to cast function parameters into an array literal.

functioncast(){return[...arguments]}cast('a','b','c')// <- ['a', 'b', 'c']

We could use the spread operator to split a string into an array with each code point that makes up the string.

[...'show me']// <- ['s', 'h', 'o', 'w', ' ', 'm', 'e']

You can place additional elements to the left and to the right of a spread operation and still get the result you would expect.

functioncast(){return['left',...arguments,'right']}cast('a','b','c')// <- ['left', 'a', 'b', 'c', 'right']

Spread is an useful way of combining multiple arrays. The following example shows how you can spread arrays anywhere into an array literal, expanding their elements into place.

varall=[1,...[2,3],4,...[5],6,7]console.log(all)// <- [1, 2, 3, 4, 5, 6, 7]

Note that the spread operator isn’t limited to arrays and arguments. The spread operator can be used with any iterable object. Iterable is a protocol in ES6 that allows you to turn any object into something that can be iterated over. We’ll research the iterable protocol in Chapter 4.

Before ES6, whenever you had a dynamic list of arguments that needed to be applied to a function call, you’d use .apply. This is inelegant because .apply also takes a context for this, which, in this scenario, you don’t want to concern yourself with.

fn.apply(null,['a','b','c'])

Besides spreading onto arrays, you can also spread items onto function calls. The following example shows how you could use the spread operator to pass an arbitrary number of arguments to the multiply function.

functionmultiply(left,right){returnleft*right}varresult=multiply(...[2,3])console.log(result)// <- 6