Customize Your Workflow

Copyright © 2016 Chris Dawson, Ben Straub. All rights reserved.

Printed in the United States of America.

Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472.

O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (http://oreilly.com/safari). For more information, contact our corporate/institutional sales department: 800-998-9938 or corporate@oreilly.com.

See http://oreilly.com/catalog/errata.csp?isbn=9781491933503 for release details.

The O’Reilly logo is a registered trademark of O’Reilly Media, Inc. Building Tools with GitHub, the cover image, and related trade dress are trademarks of O’Reilly Media, Inc.

While the publisher and the authors have used good faith efforts to ensure that the information and instructions contained in this work are accurate, the publisher and the authors disclaim all responsibility for errors or omissions, including without limitation responsibility for damages resulting from the use of or reliance on this work. Use of the information and instructions contained in this work is at your own risk. If any code samples or other technology this work contains or describes is subject to open source licenses or the intellectual property rights of others, it is your responsibility to ensure that your use thereof complies with such licenses and/or rights.

978-1-491-93350-3

[LSI]

This book contains stories about building software tools.

If you write software on a daily basis, you realize the act of writing software is the craft of creating tools. Software is nothing more than a tool. A spreadsheet is fundamentally a tool to add and subtract numbers. A video game is fundamentally a tool to alleviate boredom. Almost immediately after people started writing software tools we then discovered we needed more tools to permit us to write the tools we set out to build in the first place. Let’s call these tools that are strictly to support writing software (rather than software tools for the general population) meta-tools.

One of the most important meta-tools in the software development world is Git. Git is a meta-tool that helps software developers manage the complexity that comes from writing software. Git allows software developers to store snapshots of their programs (and then easily restore those snapshots if necessary) and to easily collaborate with other programmers (a surprisingly complicated problem). Git is called a source code management (SCM) tool and though there were many other SCMs before Git, Git has taken the software world by storm like no other before it and now dominates the SCM landscape.

GitHub is a company that saw the immense potential of Git early on and built a layer of web services on top of the existing features found in Git. Not surprisingly, one of the factors behind its success was that GitHub employees embraced the ethos of writing meta-tools from the beginning. Building meta-tools requires the courage to take a little extra time to build a meta-tool rather than taking the easy route to get the public-facing software out the door. GitHub employees are proud of this prioritization and have written extensively about the benefits, which include easy on-boarding of new hires and a transparent workflow visible to all employees.

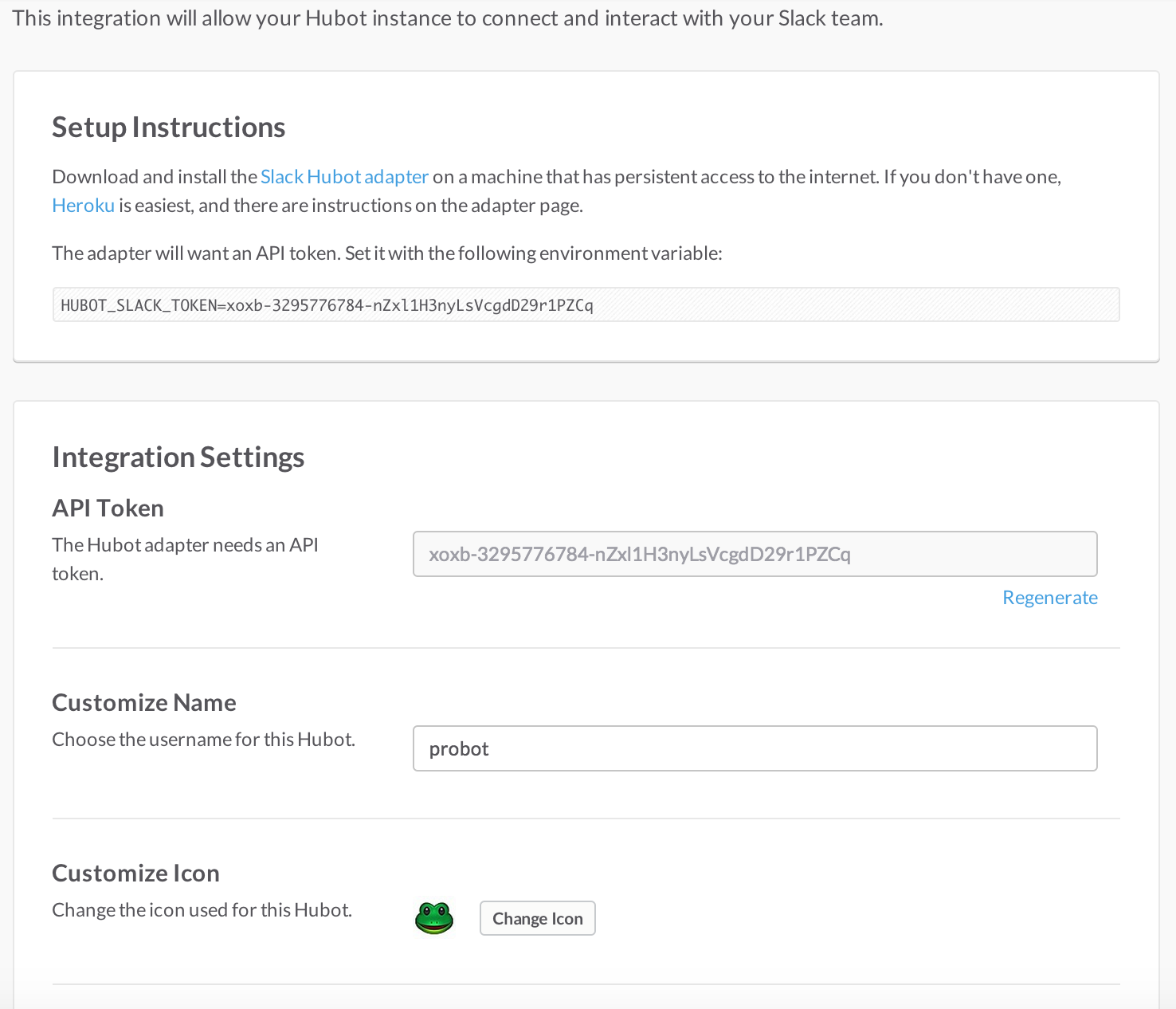

This book looks at the tools GitHub uses internally. The GitHub.com website is itself a meta-tool, and we discuss the many facets of the GitHub service. Specifically these technologies are the GitHub API and related GitHub technologies, Gollum wiki, Jekyll static page generator, and the chat robot called Hubot (if you are not familiar with any of these, we’ll explain them fully in their respective chapters).

To reiterate, this book is not a reference of those technologies. This book is a story-book, a book that relates the process of building software meta-tools, explaining not only the technology specifics, but also the compromises, the realities of refactoring, and the challenges inherent to writing meta-tools in long narrative story form.

Meta-tools require a different mindset than what comes from building software available to the general population. Meta-tools are generally open source, which requires a different level of responsibility and usage. One could argue that software engineers are more demanding of quality than general users because software developers know they can take action to improve or fork software that does not work for them. Meta-tools enforce a higher level of contributory involvement, which makes automated tests almost a requirement. All of these concepts constitute the background story behind meta-tools, and we show you how they play out when building your own.

Using an API to back an application is a common practice today: this is the future of application development. APIs provide a great pattern for making data accessible to the multiscreen world. If your application is backed by a remote service API, the first application could be a mobile app running on Apple’s iOS operating system. Critically, if that business model does not turn out to be correct, you can respond quickly to changing requirements and iterate to build another application for an Android wearable. Or, perhaps you’ll build an integrated car application, or any other console (or even nonconsole) application. As long as your applications can send and receive data using calls to a remote API you are free to build whatever user interface you want on whatever platform you want.

As an author, you could write and host your own API. Many frameworks for popular languages like Ruby, Go, or Java support building APIs using standard architectural styles like REST. Or, you could use a third-party API. In this book we’ll focus on a third-party API: the GitHub API.

Why the GitHub API? The GitHub API is exceedingly relevant if you are building software because you are probably using GitHub to manage your software code. For those that aren’t, you might be using Git without GitHub, and the GitHub API is useful to know there as well, as it layers the functionality of Git into a networked programming interface.

The GitHub API is perhaps the best designed API I’ve ever used. It is a Hypermedia API, which is an arguably successful attempt to make API clients resilient to API changes—a tricky problem. The API is well versioned. It is comprehensive, mapping closely to most features of Git. It is consistent across sections and well organized. The GitHub API is a great API on which to build applications, serving as a case study for a well-designed API.

The GitHub API is extremely comprehensive, permitting access and modification of almost all data and metadata stored or associated with a Git repository. Here is a grouped summary of the sections of the API ordered alphabetically as they are on the GitHub API documentation site:

Activity: notifications of interesting events in your developer life

Gists: programmatically create and share code snippets

Git Data: raw access to Git data over a remote API

Issues: add and modify issues

Miscellaneous: whatever does not fit into the general API categorization

Organizations: access and retrieve organizational membership data

Pull Requests: a powerful API layer on the popular merge process

Repositories: modify everything and anything related to repositories

Search: code-driven search within the entire GitHub database

Users: access user data

Enterprise: specifics about using the API when using the private corporate GitHub

In addition, though not a part of the API, there are other important technologies you should know about when using GitHub that are not covered in the API documentation:

Jekyll and “gh-pages”: hosting blogs and static documentation

Gollum: wikis tied to a repository

Hubot: a programmable chat robot used extensively at GitHub

Each of these sections of the GitHub technology stack are covered in various chapters (with two exceptions, which we explain next). The GitHub API documentation is a stellar reference you will use constantly when writing any application that talks to the API, but the chapters in this book serve a different purpose: these chapters are stories about building applications on top of the technologies provided by GitHub. Within these stories you will learn the trade-offs and considerations you will face when you use the GitHub API. Chapters in this book often cover multiple pieces of the API when appropriate for the story we are telling. We’ve generally tried to focus on a major API section and limit exposure to other pieces as much as possible, but most chapters do need to bring in small pieces of more than one section.

Here is a short synopsis of each chapter:

This chapter covers a first look at the API through the command-line HTTP client called cURL. We talk a bit about the response format and how to parse it within the command line, and also document authentication. This is the only chapter that does not build an application from the technologies presented. Chapter 2: This chapter covers the Gist API, as well as command-line tools and the Ruby language “Octokit” API client. We then take this API and build a simple Ruby server that is stored as a gist and displays gists.

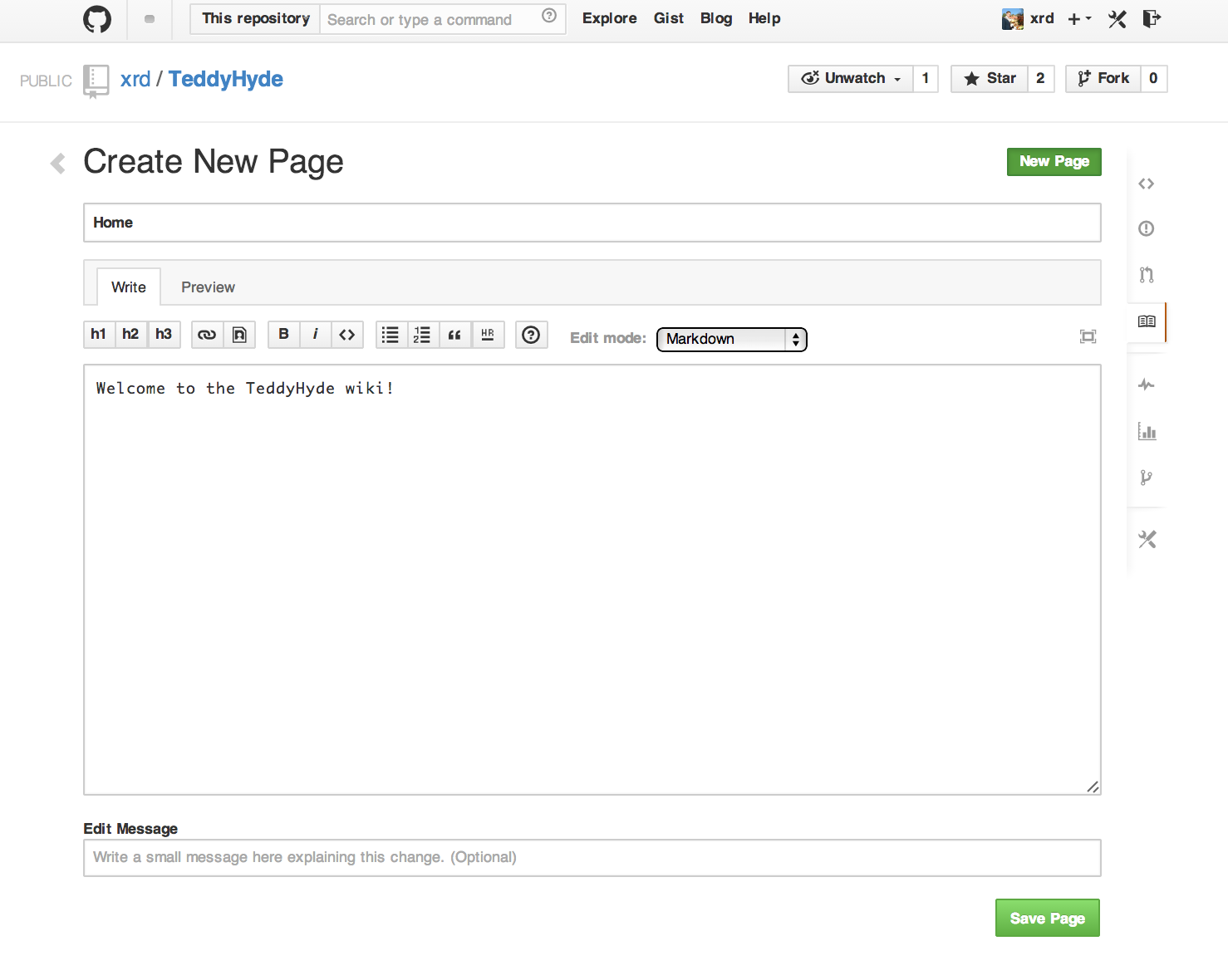

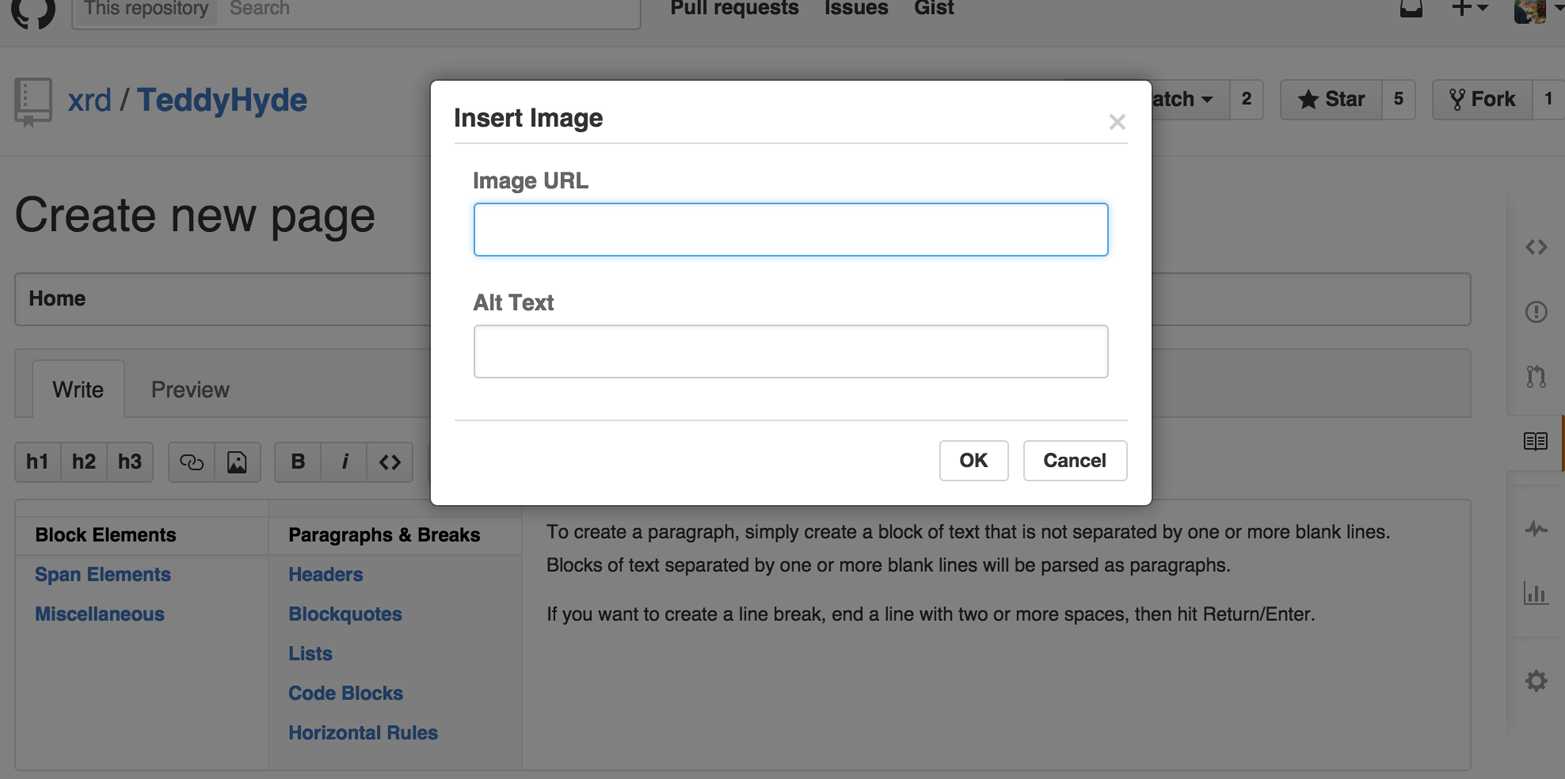

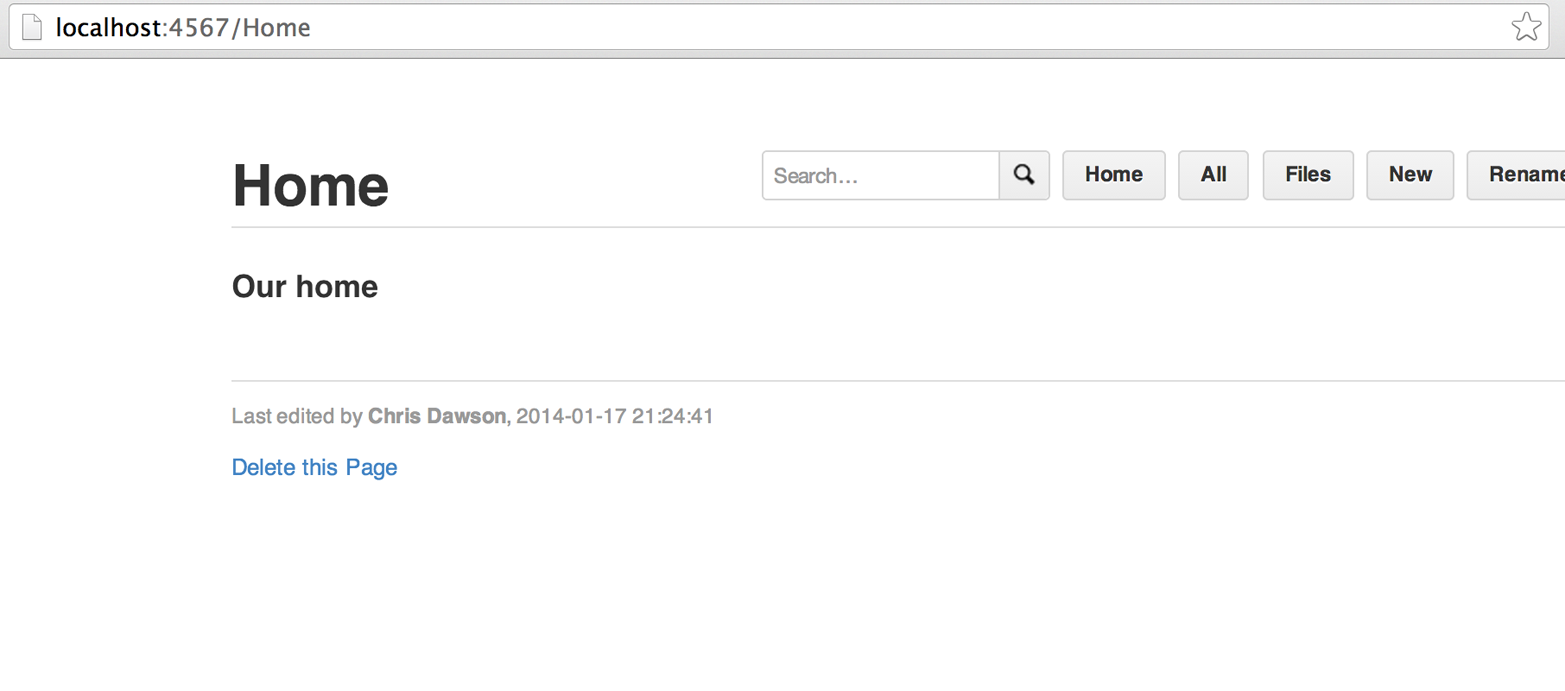

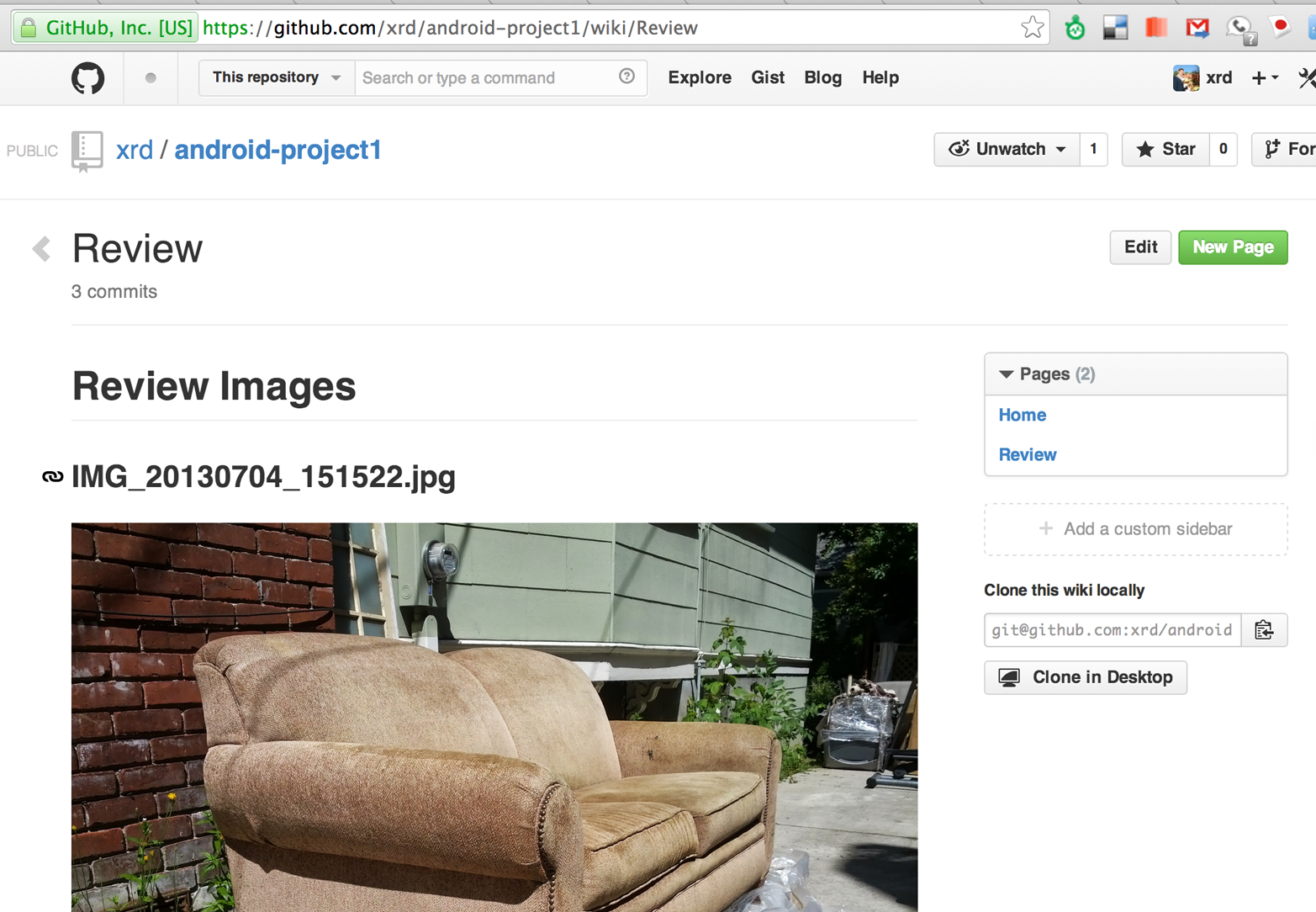

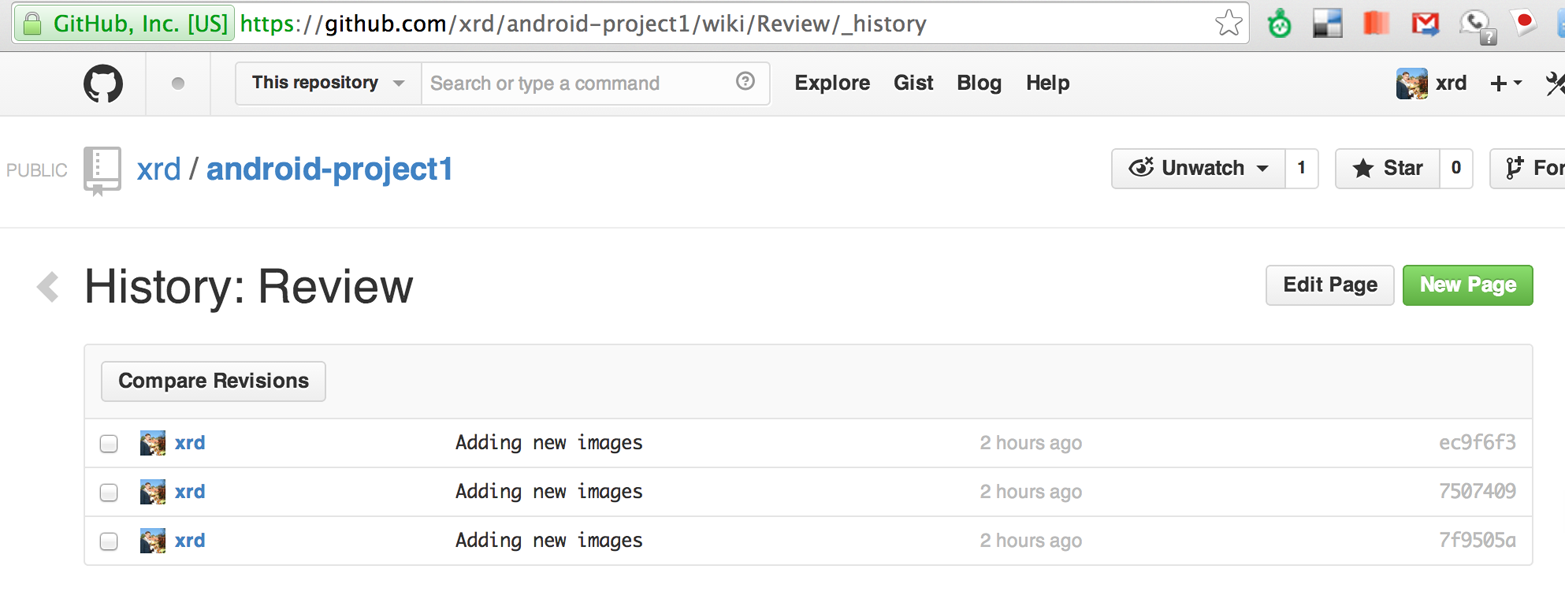

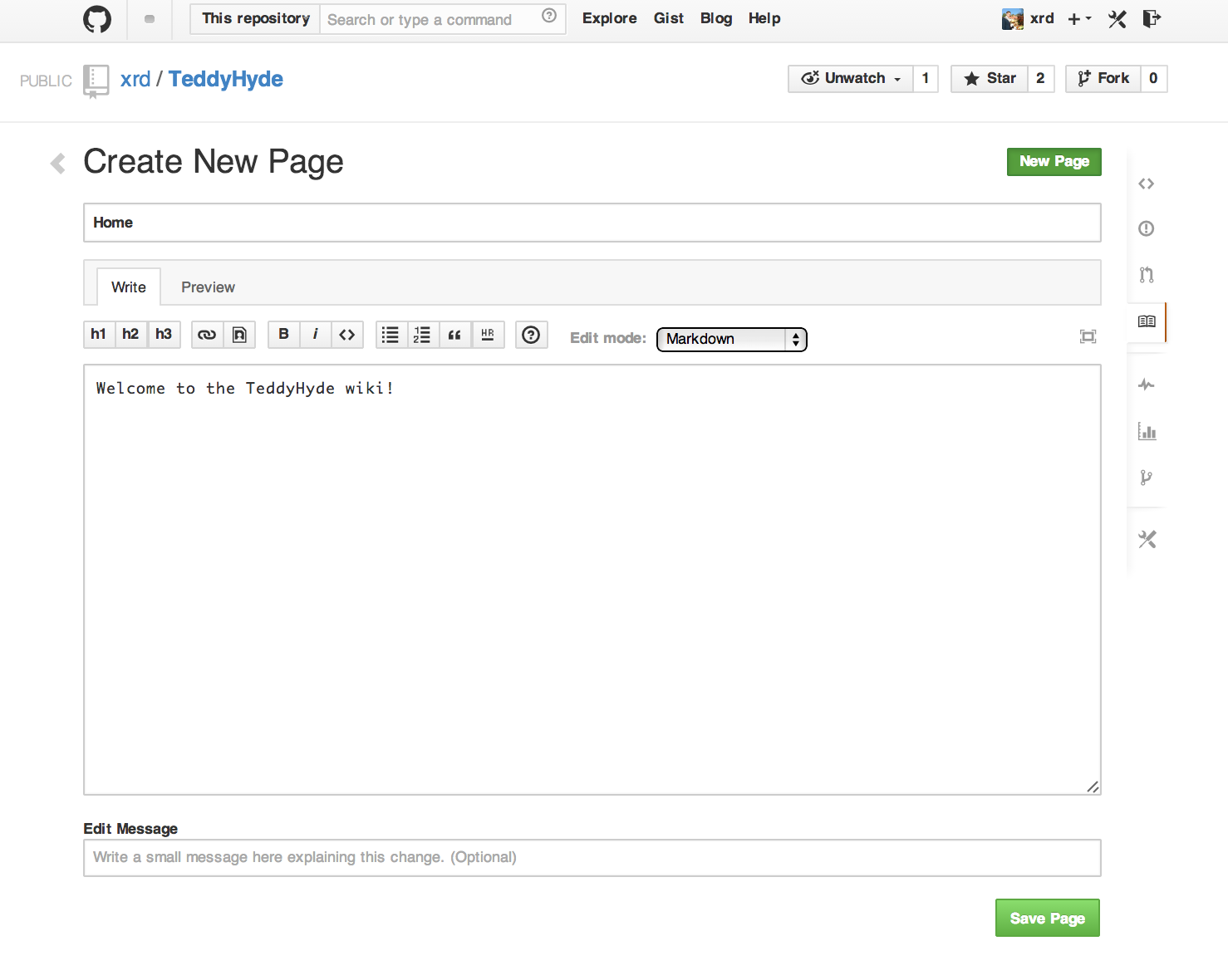

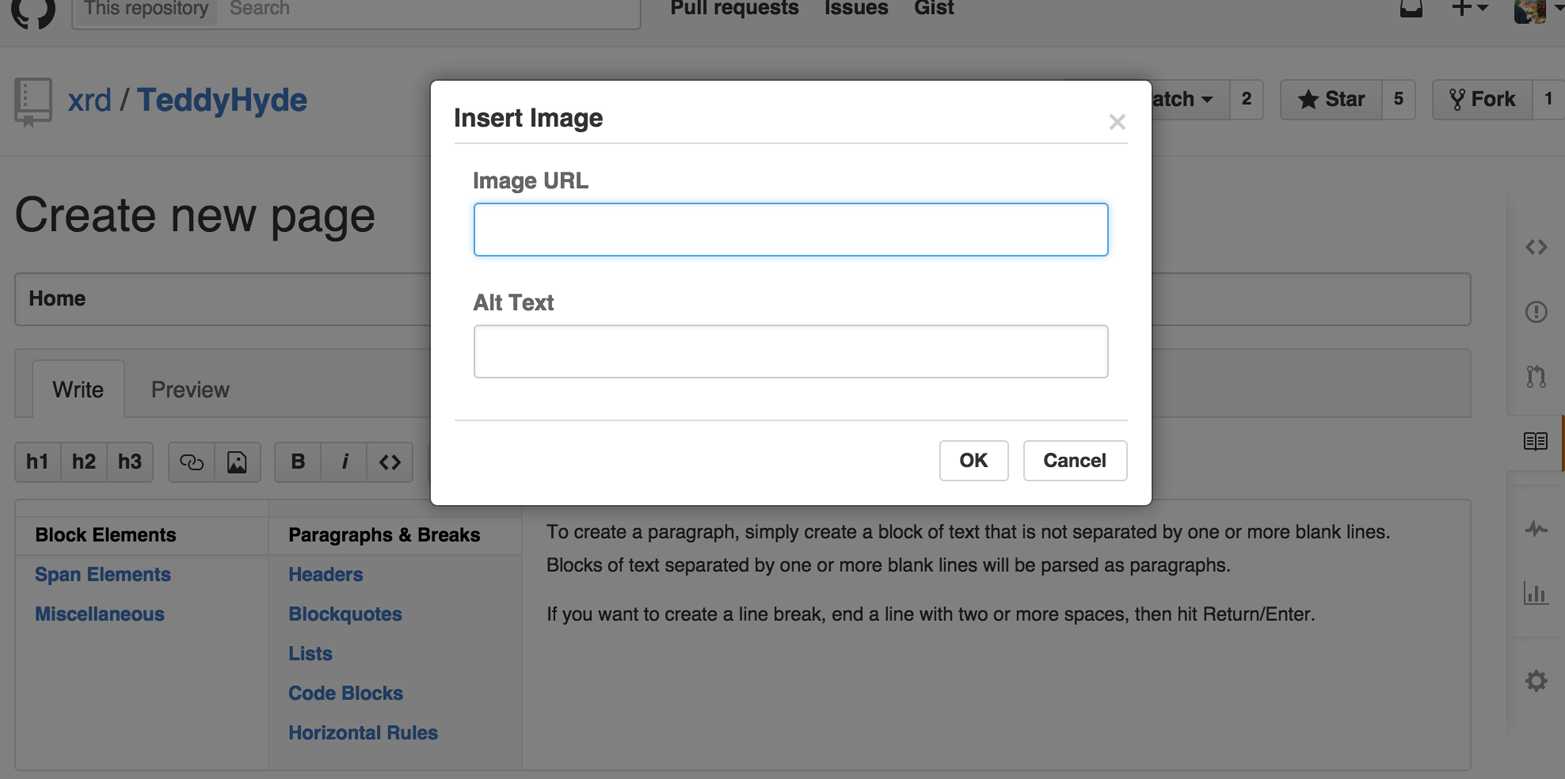

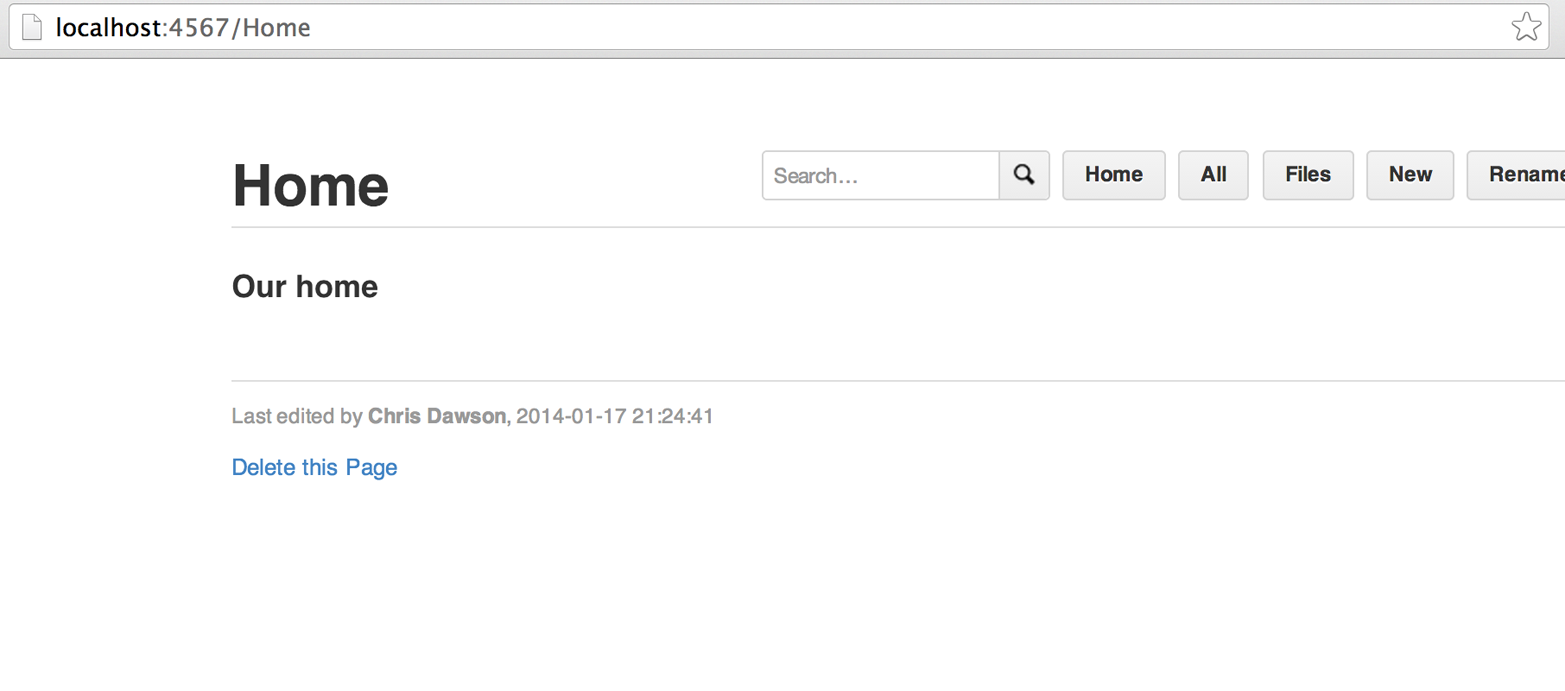

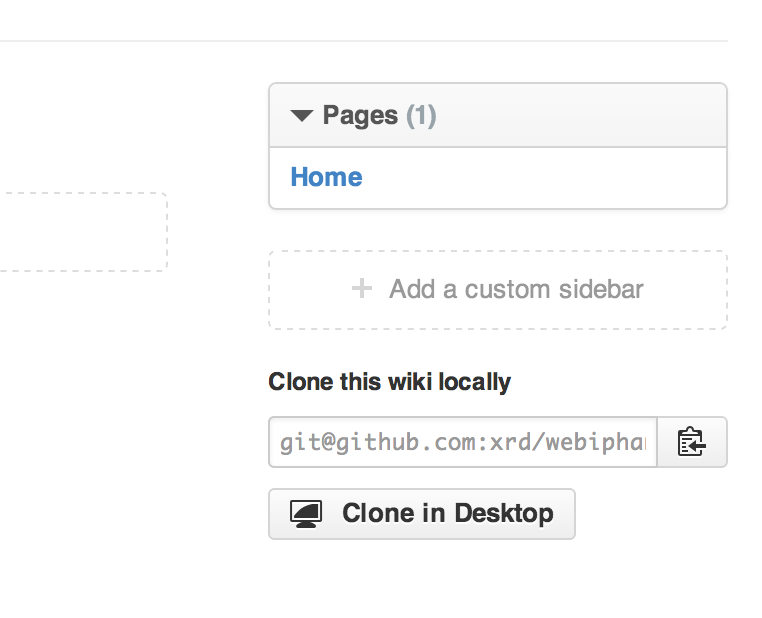

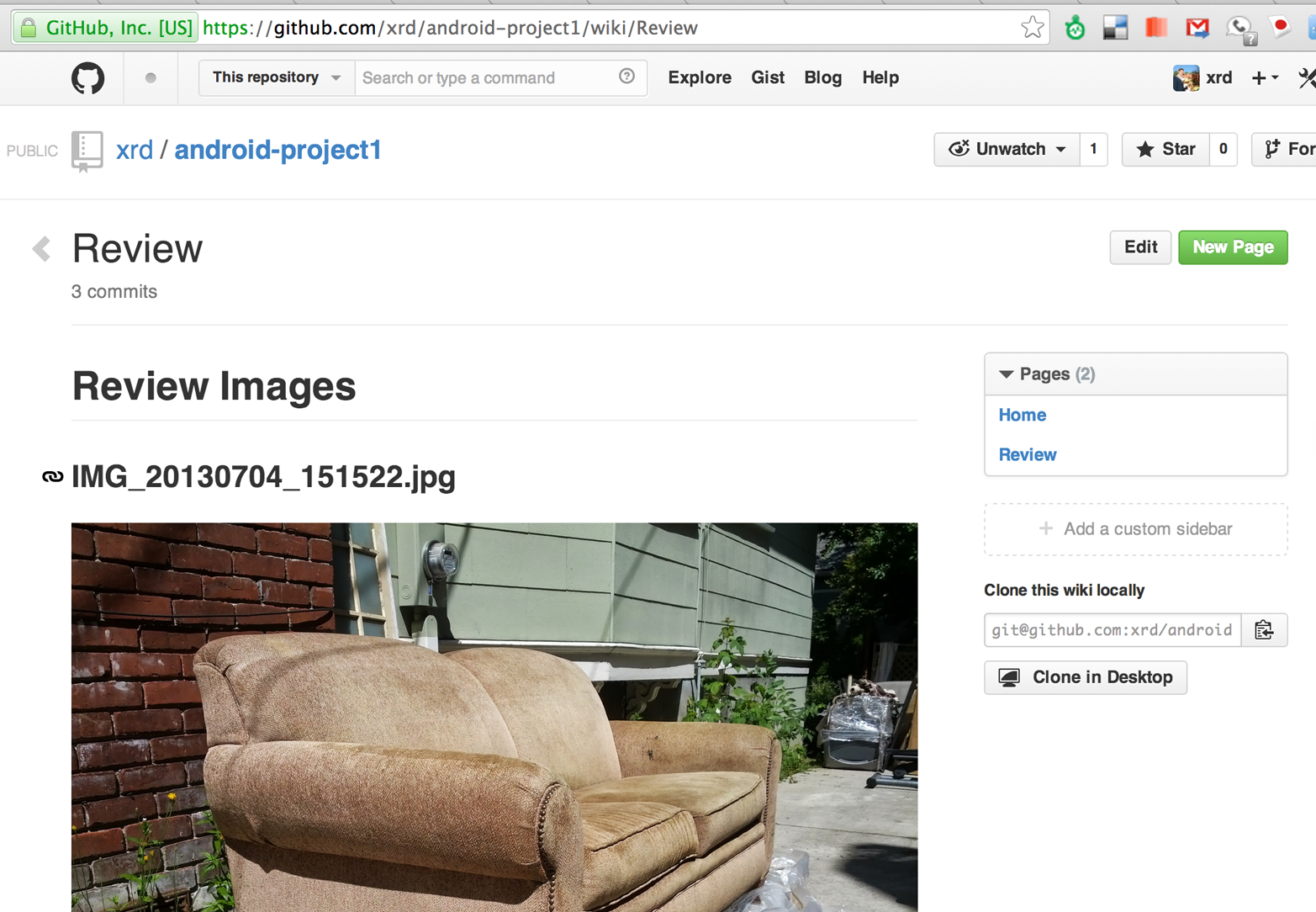

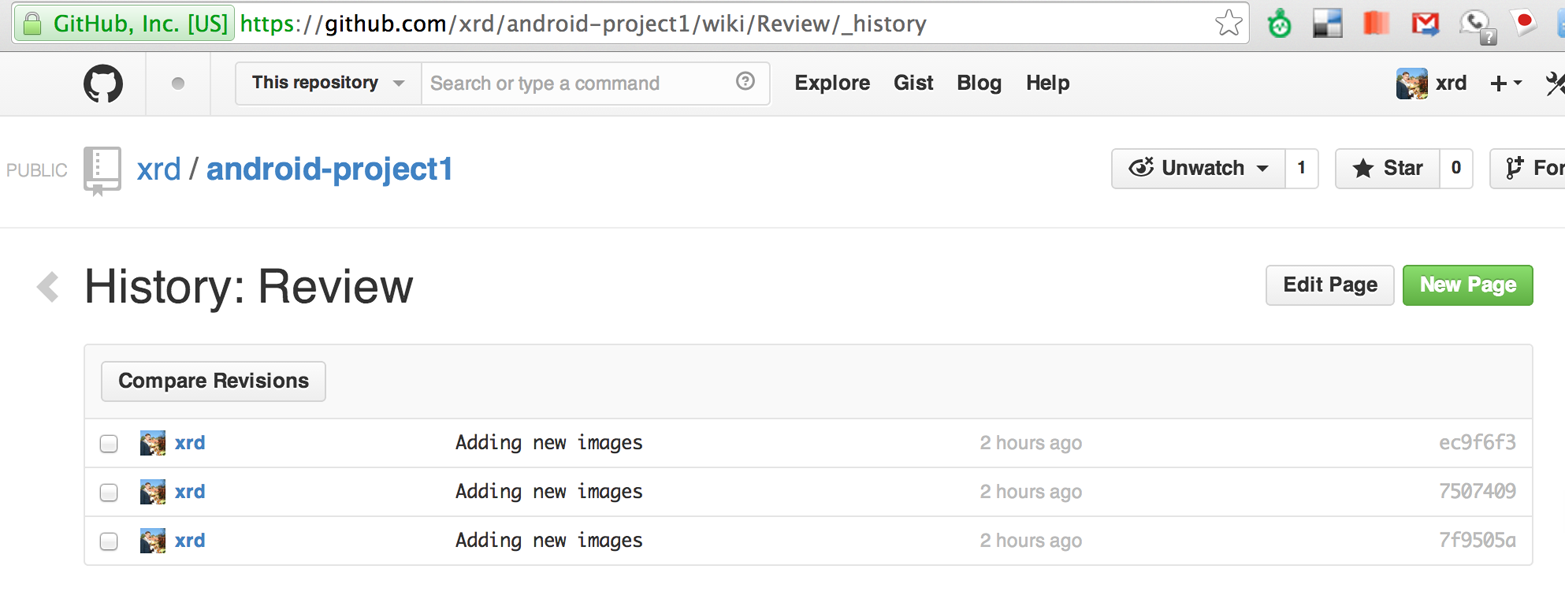

This chapter explains usage of the Gollum command-line tool and associated Ruby library (gem), which is backed by Grit, the C-language bindings for accessing Git repositories. We also document some details of the Git storage format and how it applies to storing large files inside of a Git repository, and show how to use the Git command-line tools to play with this information. We use Gollum and the Grit libraries to build an image management tool that also functions as a regular Gollum wiki, which can be published to GitHub.

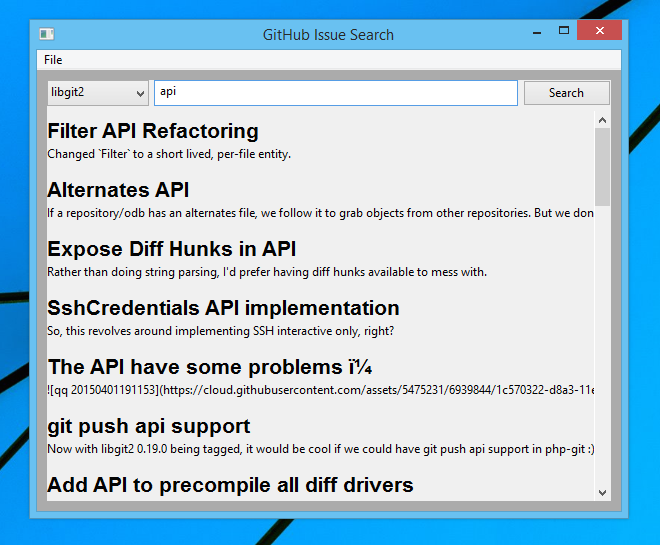

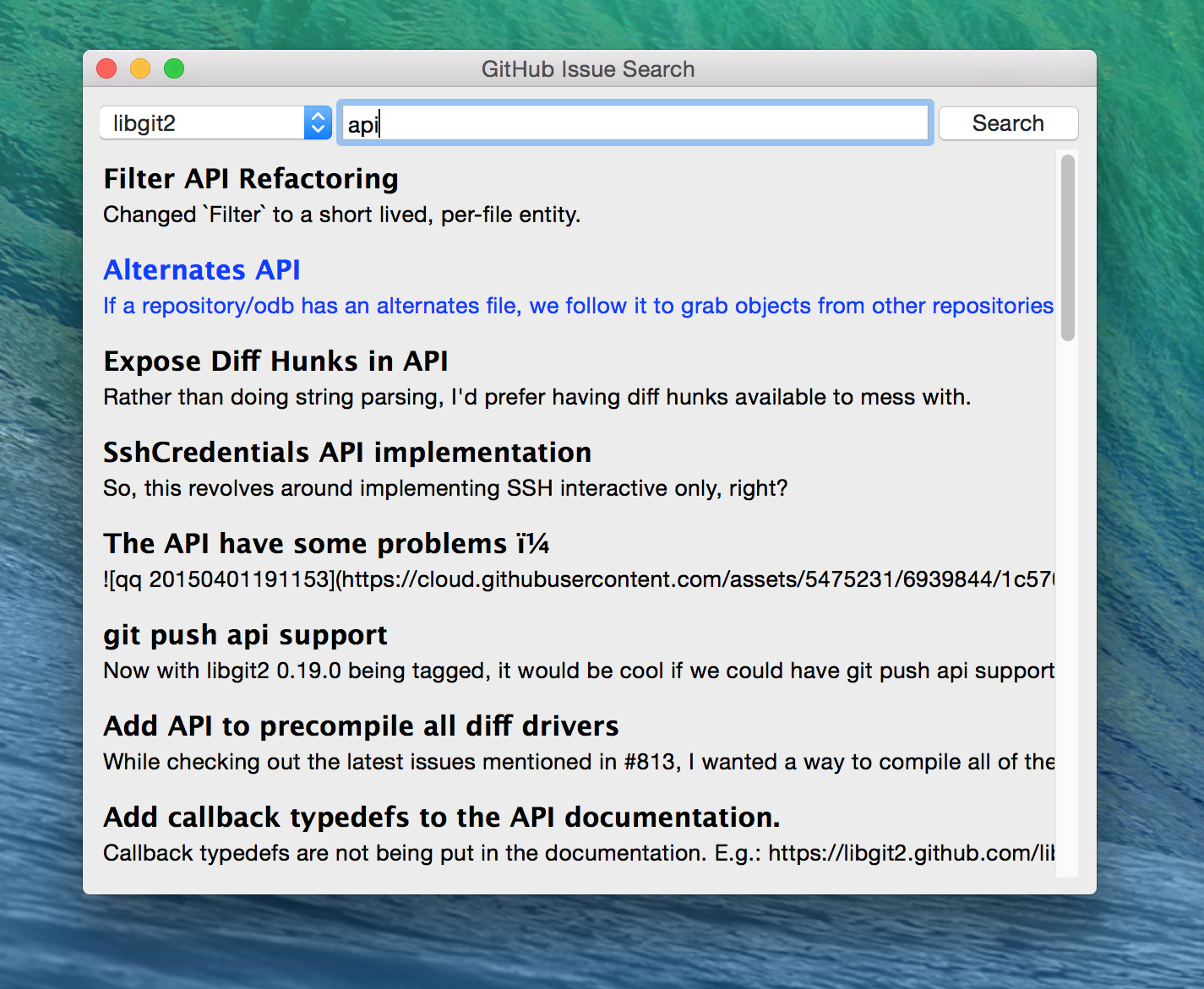

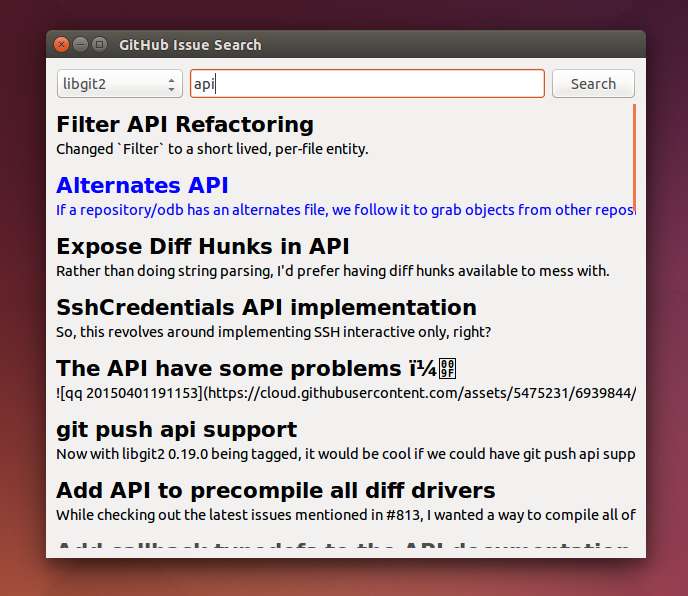

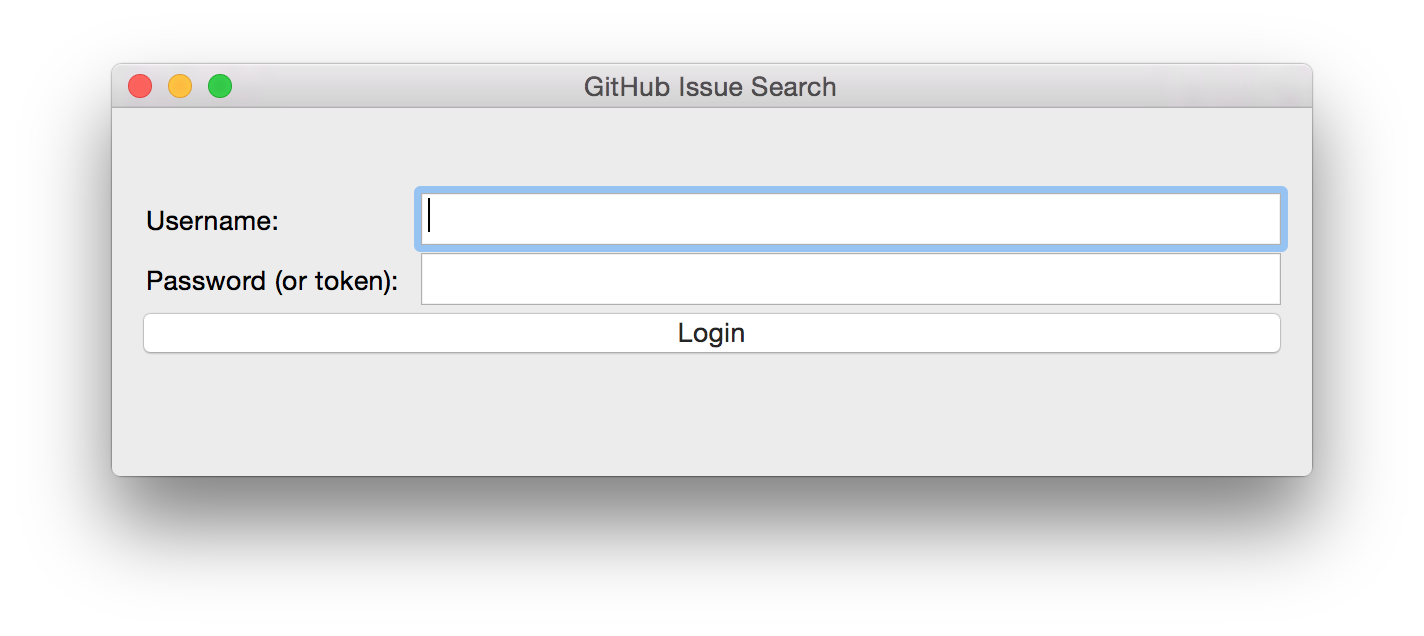

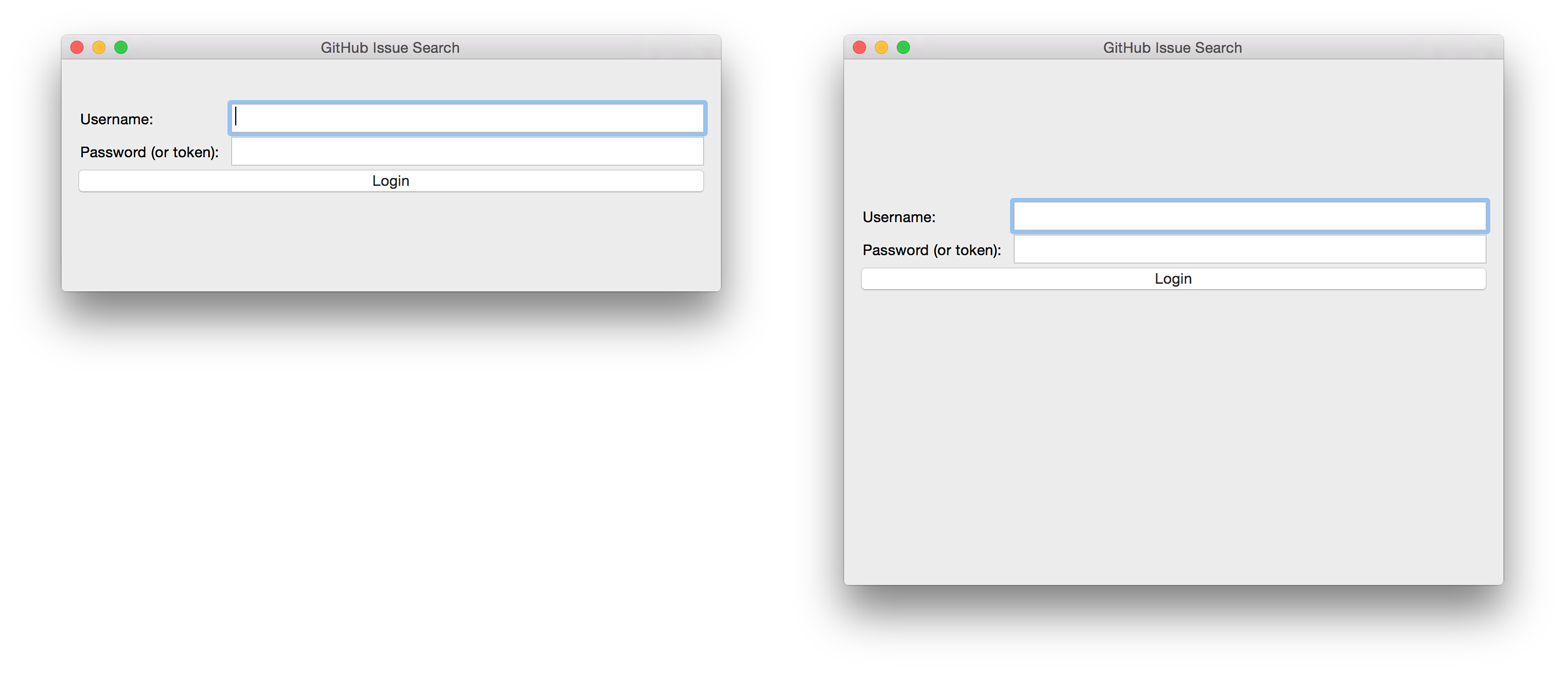

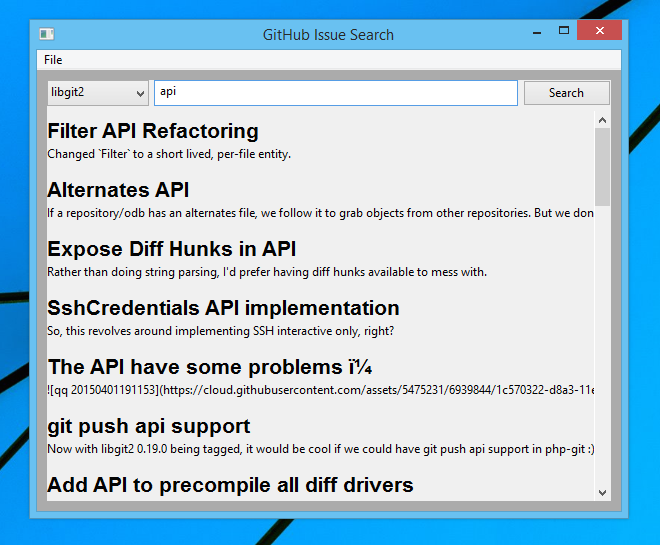

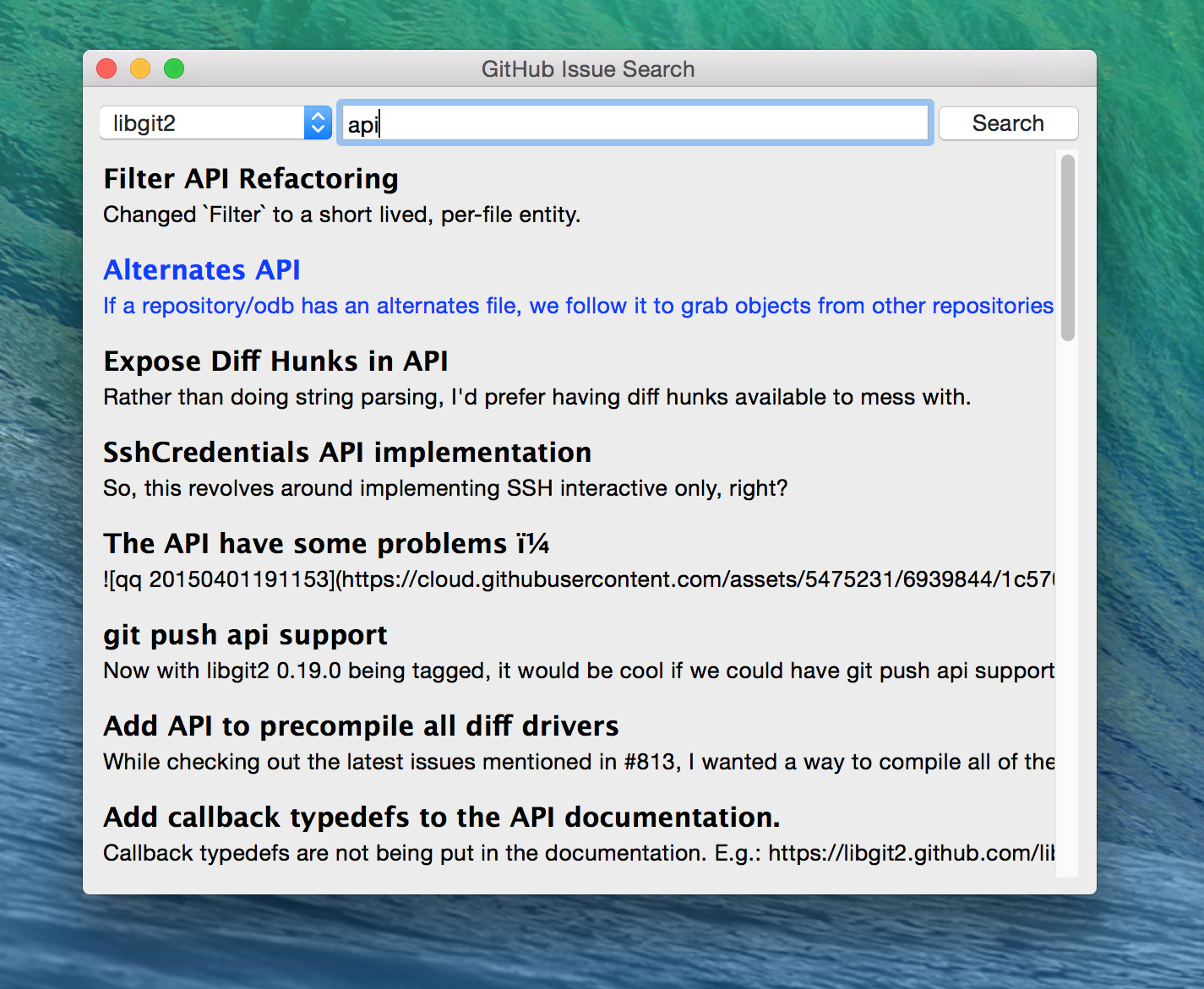

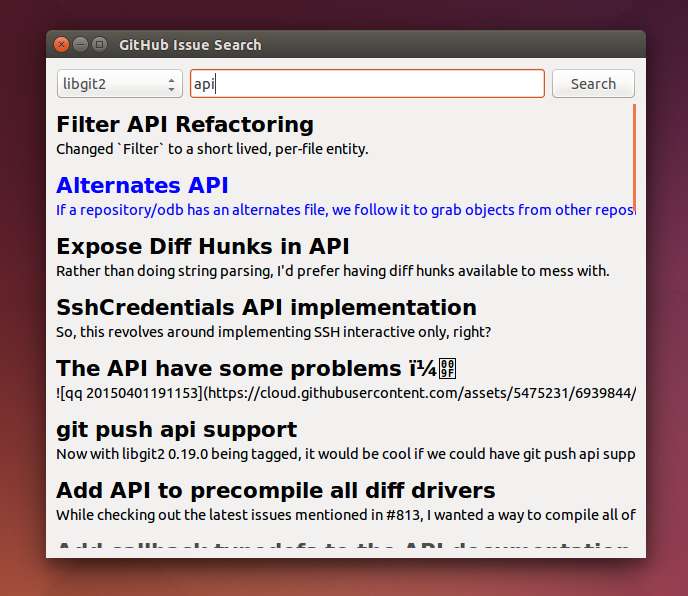

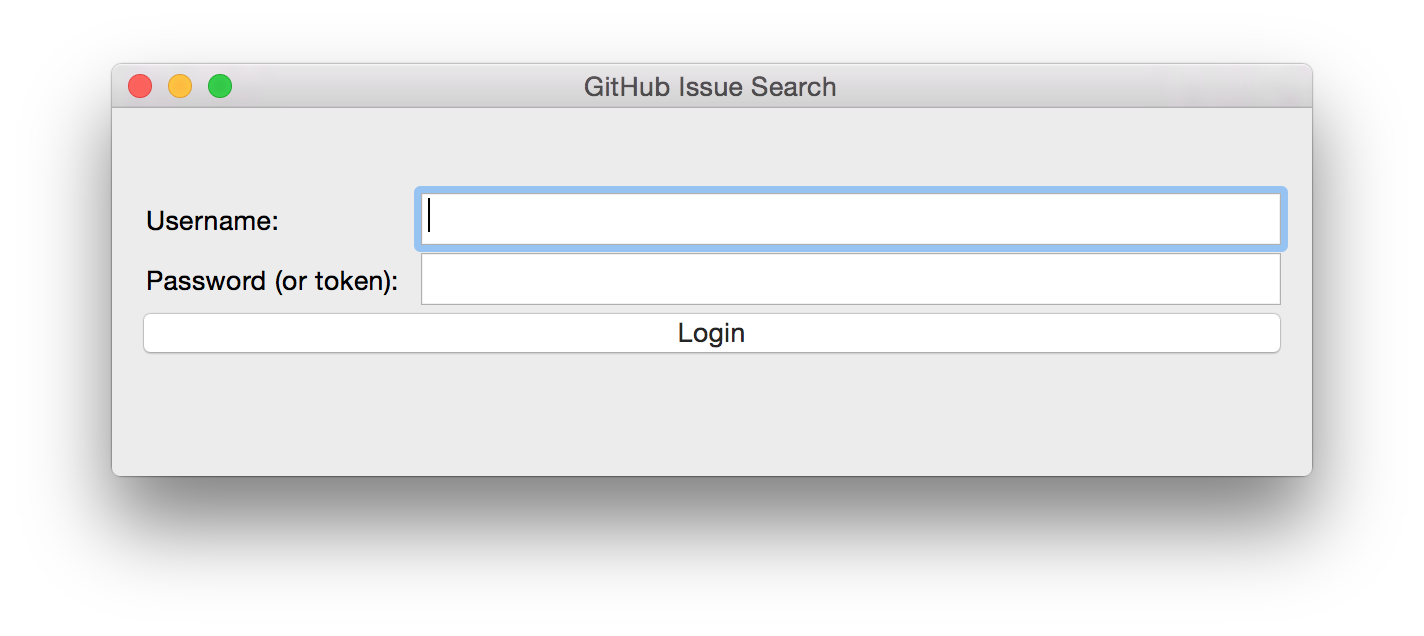

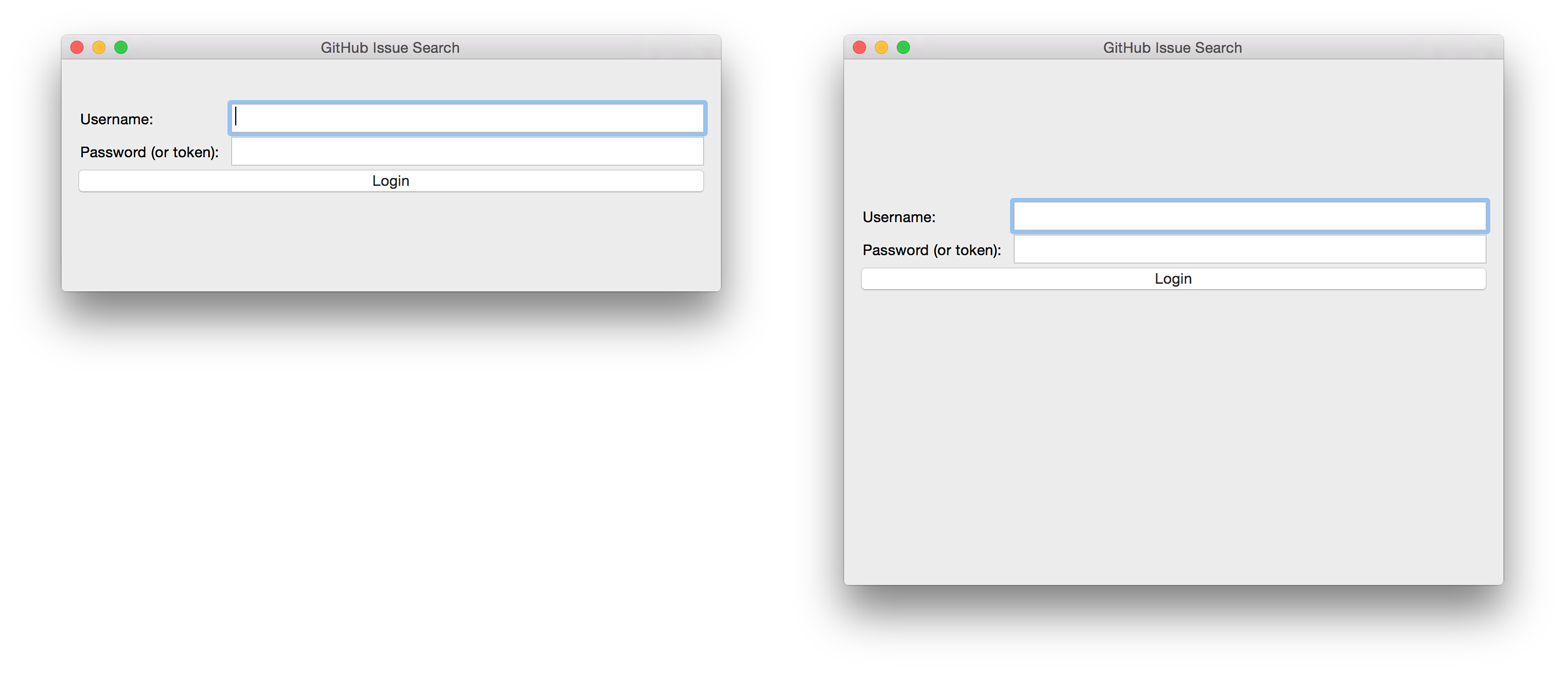

In this chapter we explore the Search API and build a GUI tool to search repositories on GitHub using Python.

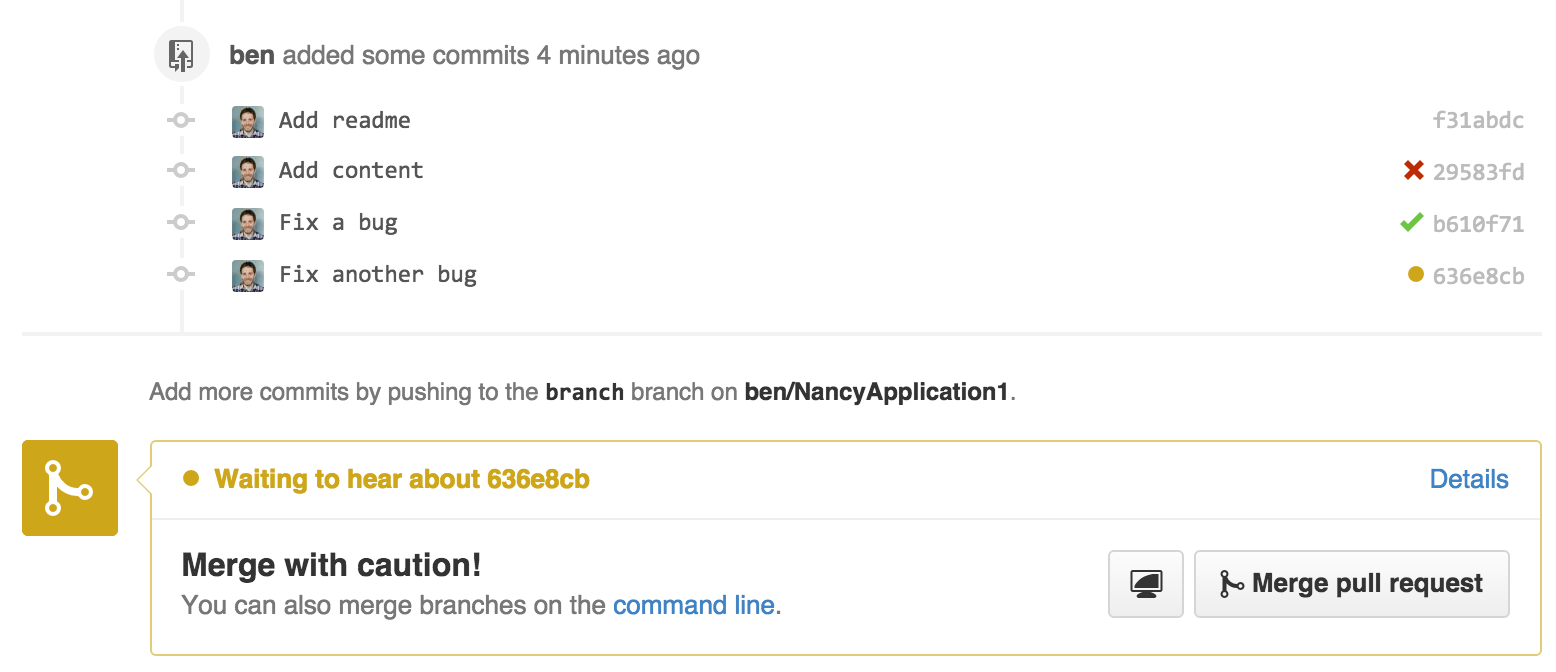

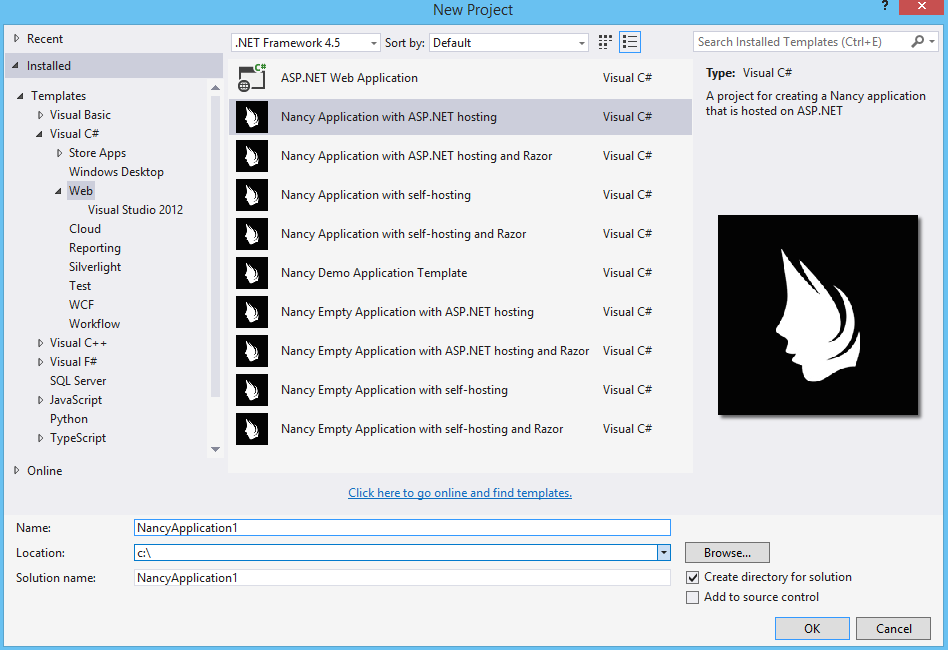

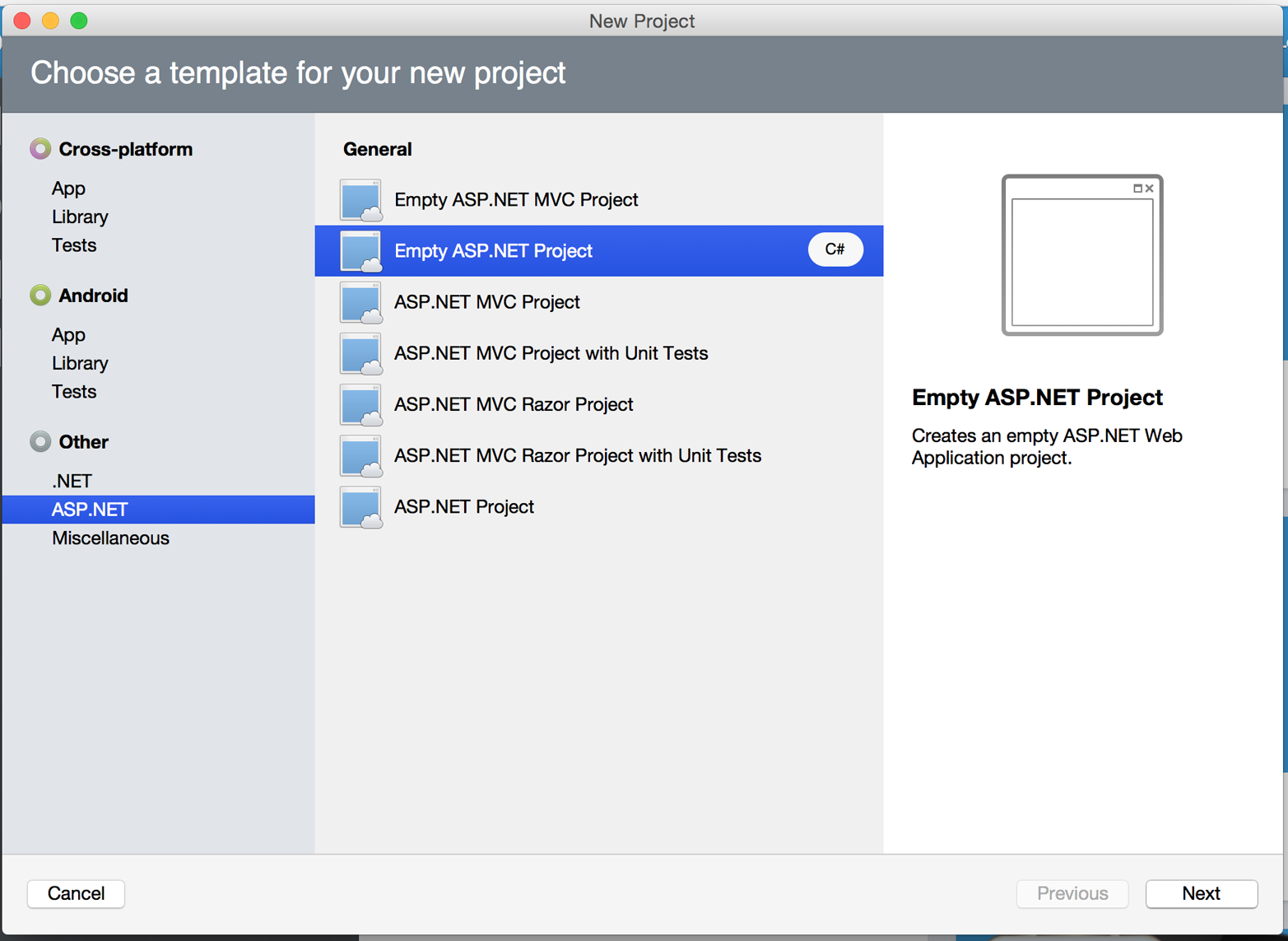

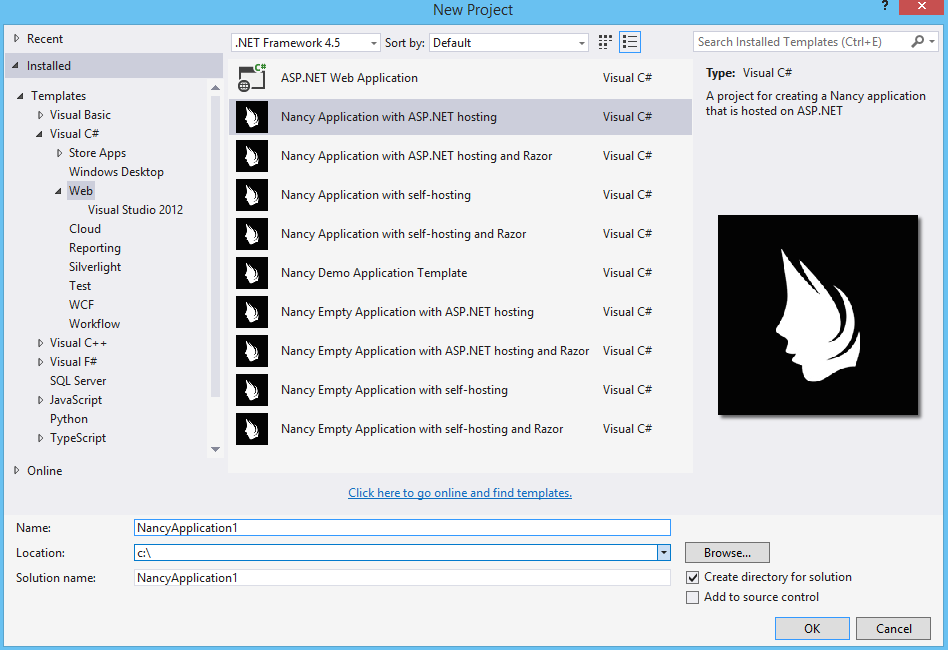

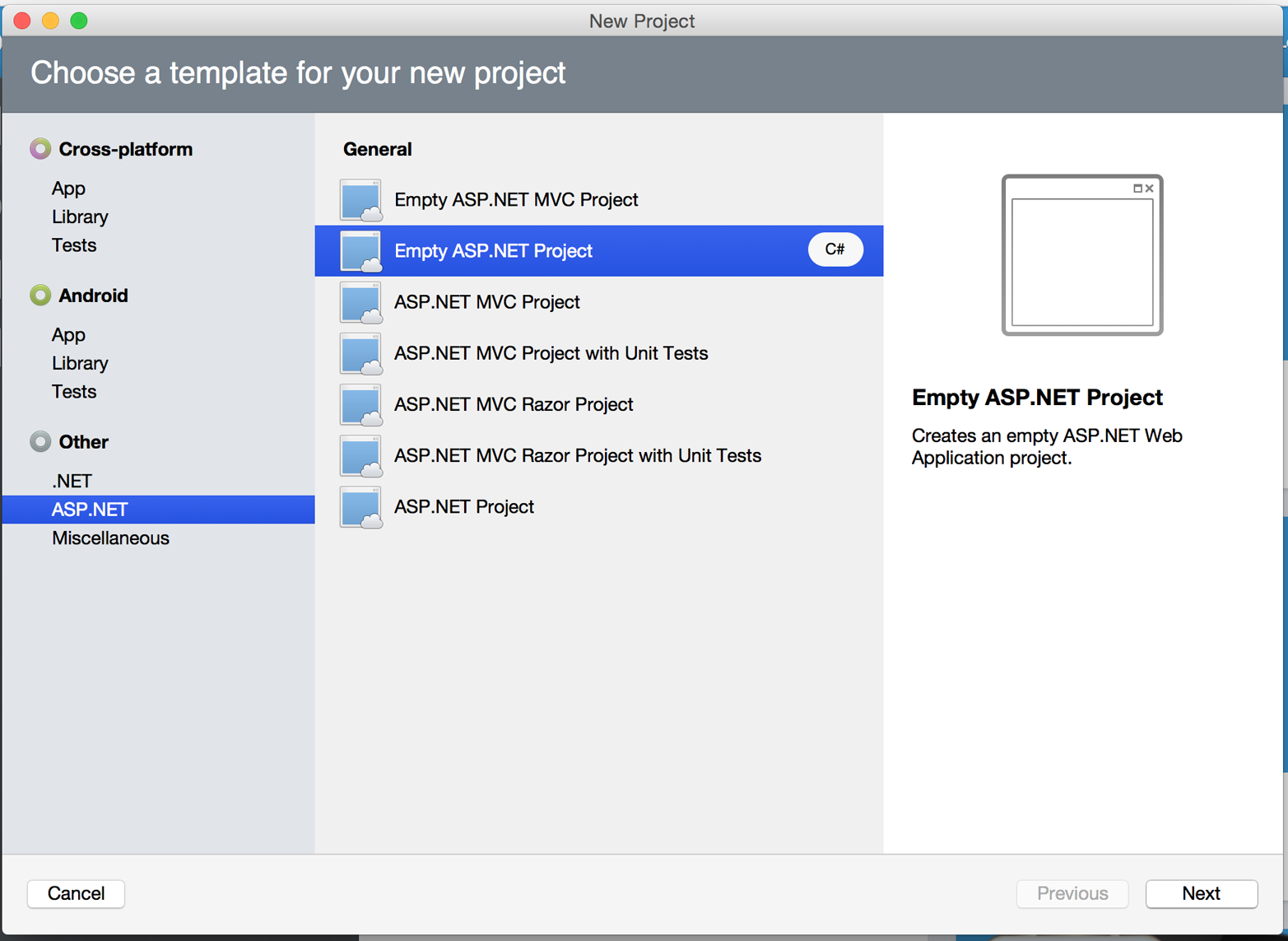

This chapter covers a relatively new part of the API that documents the interactions between third-party tools and your code. This chapter builds an application using C# and the Nancy .NET GitHub API libraries.

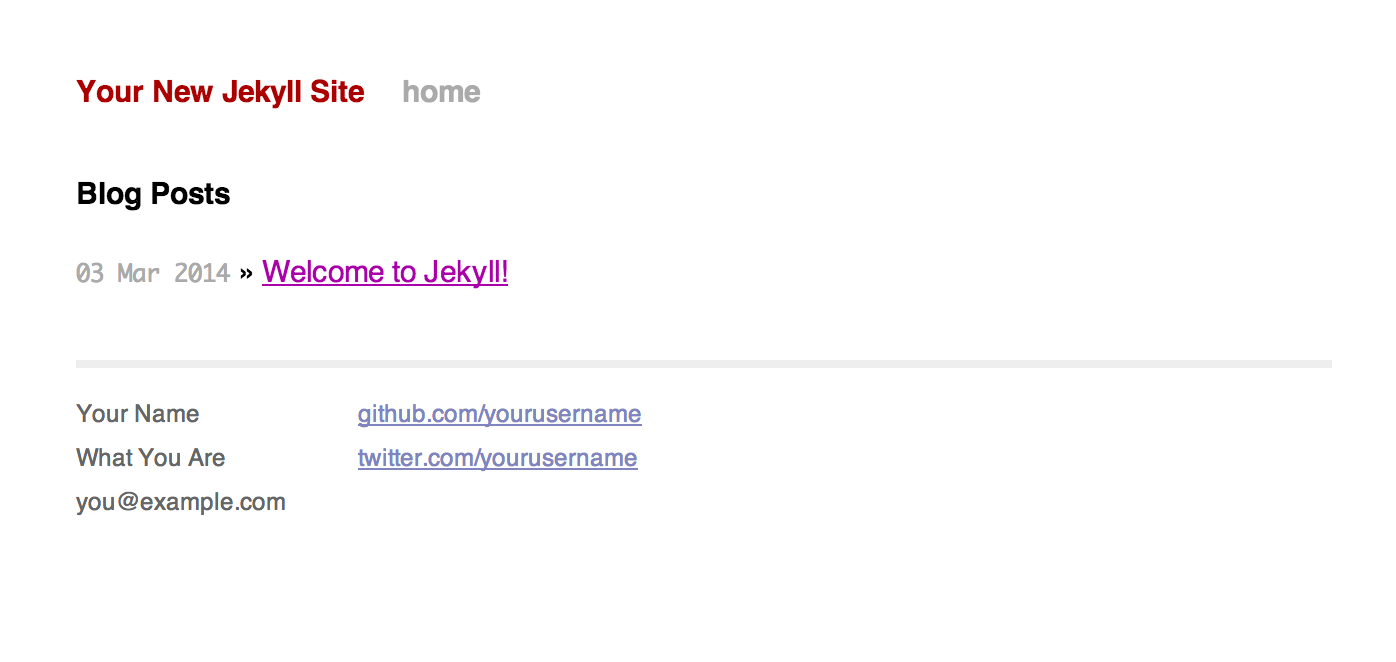

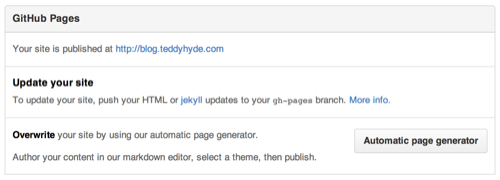

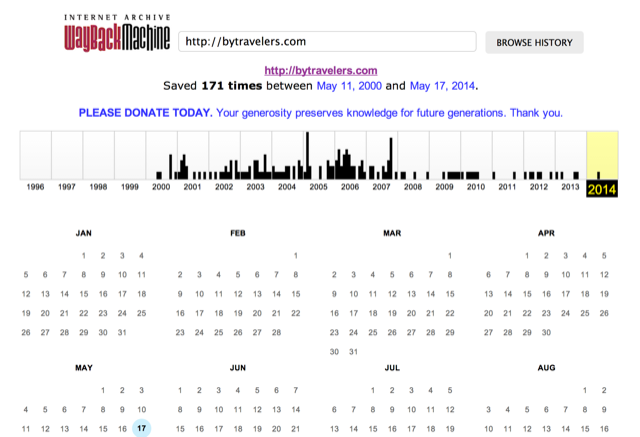

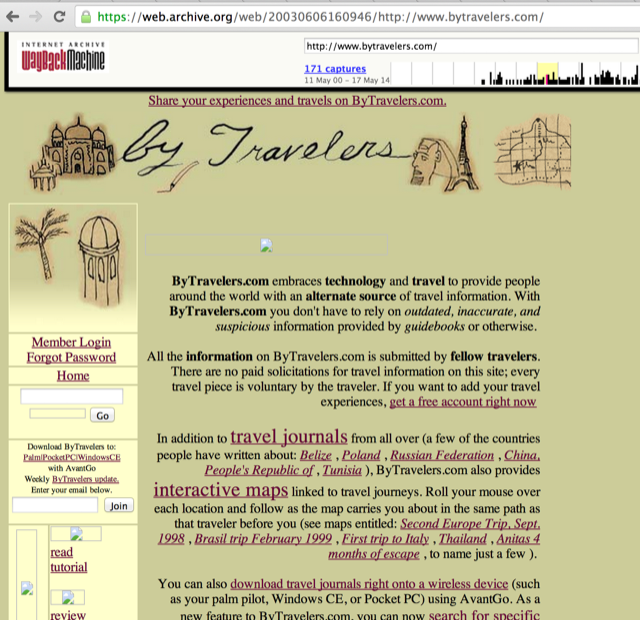

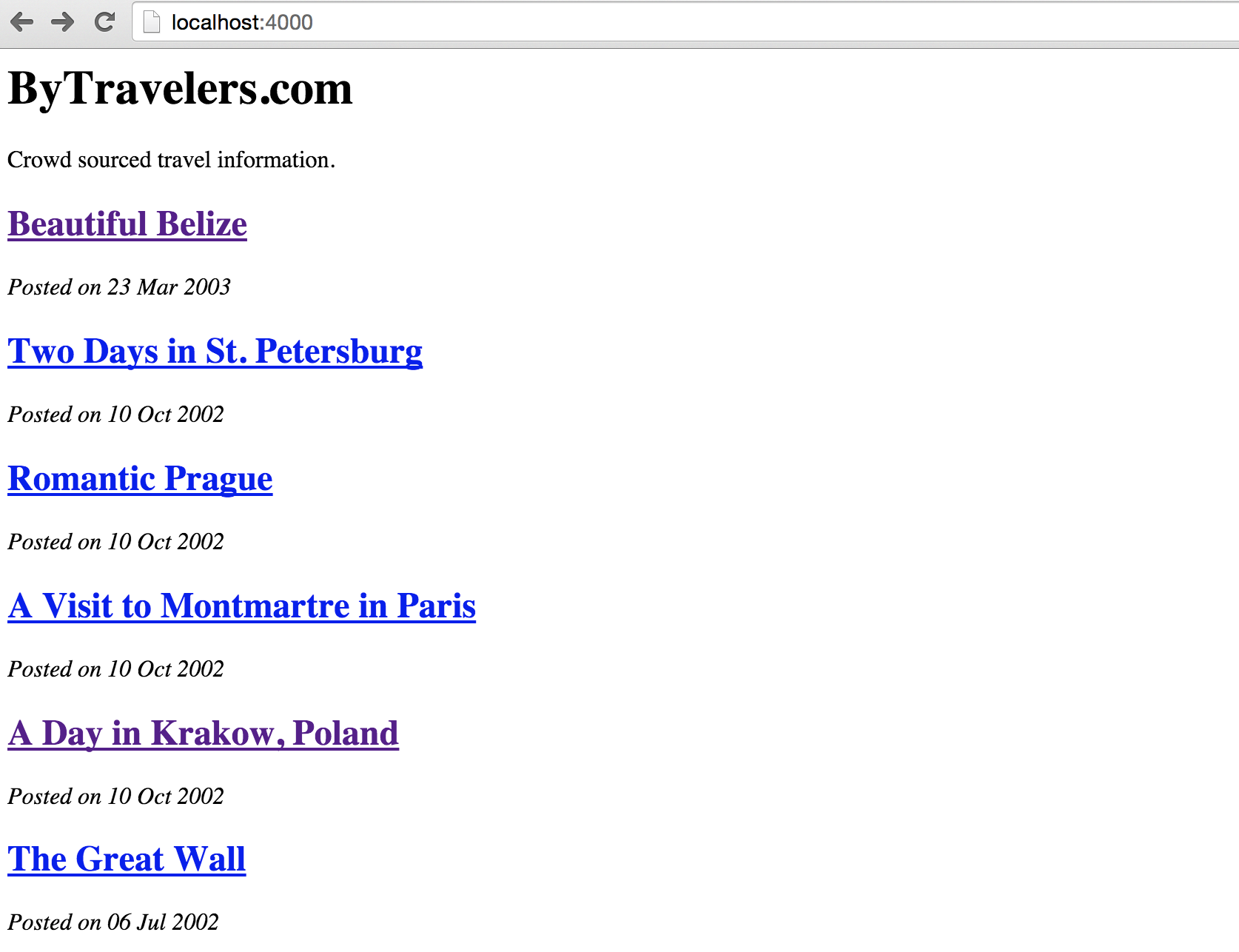

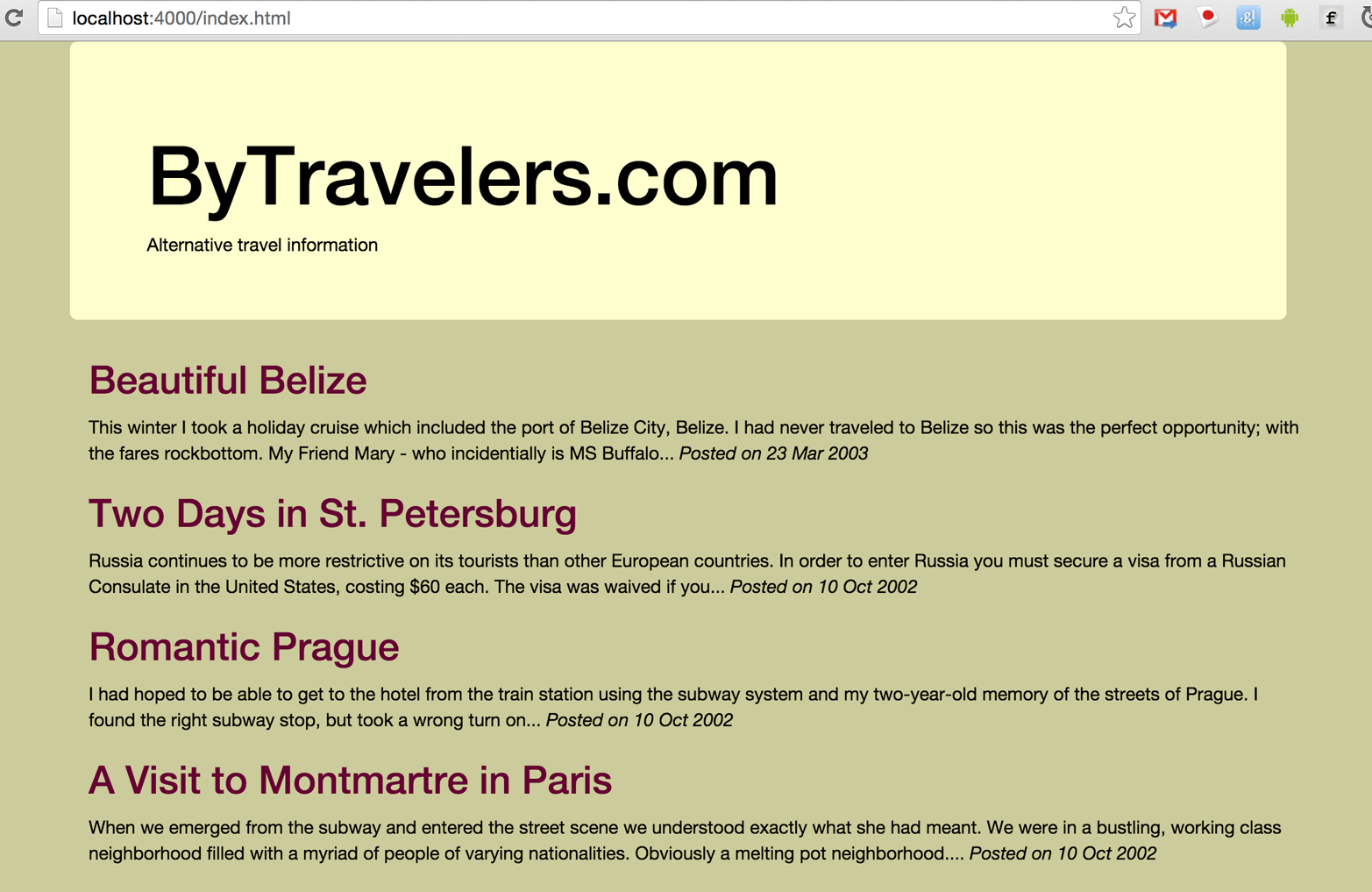

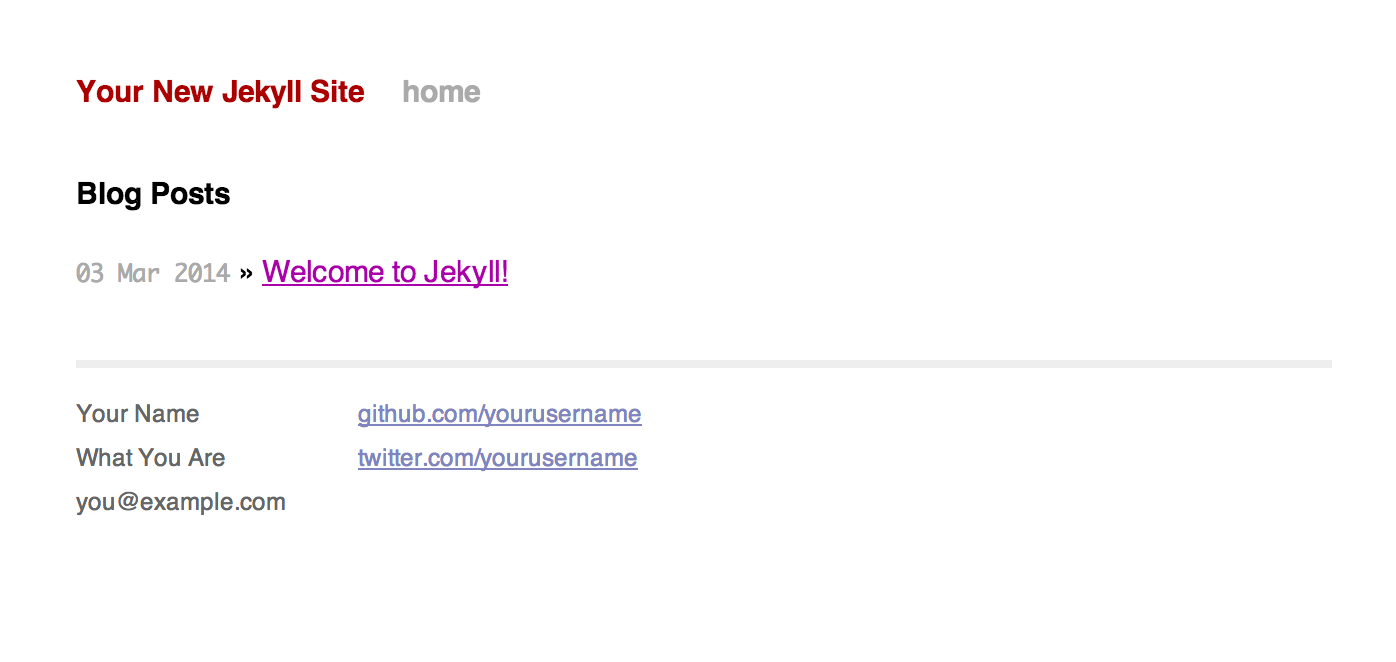

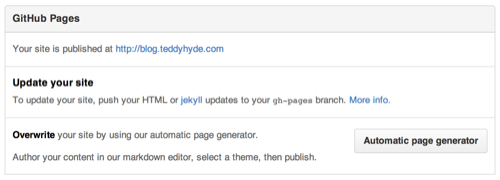

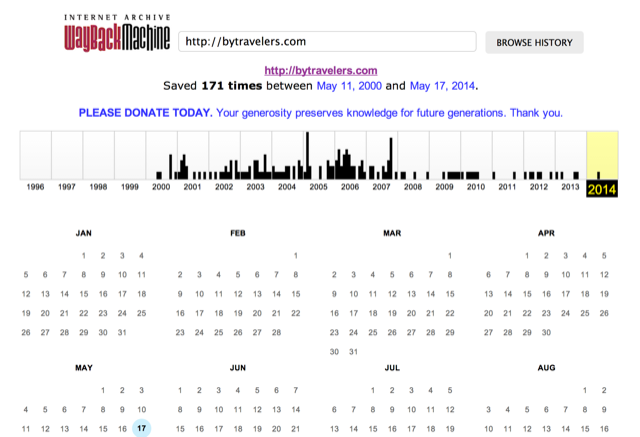

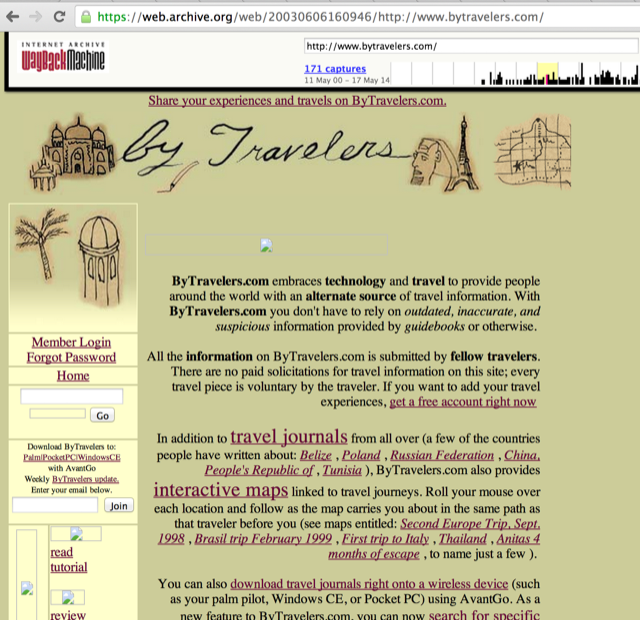

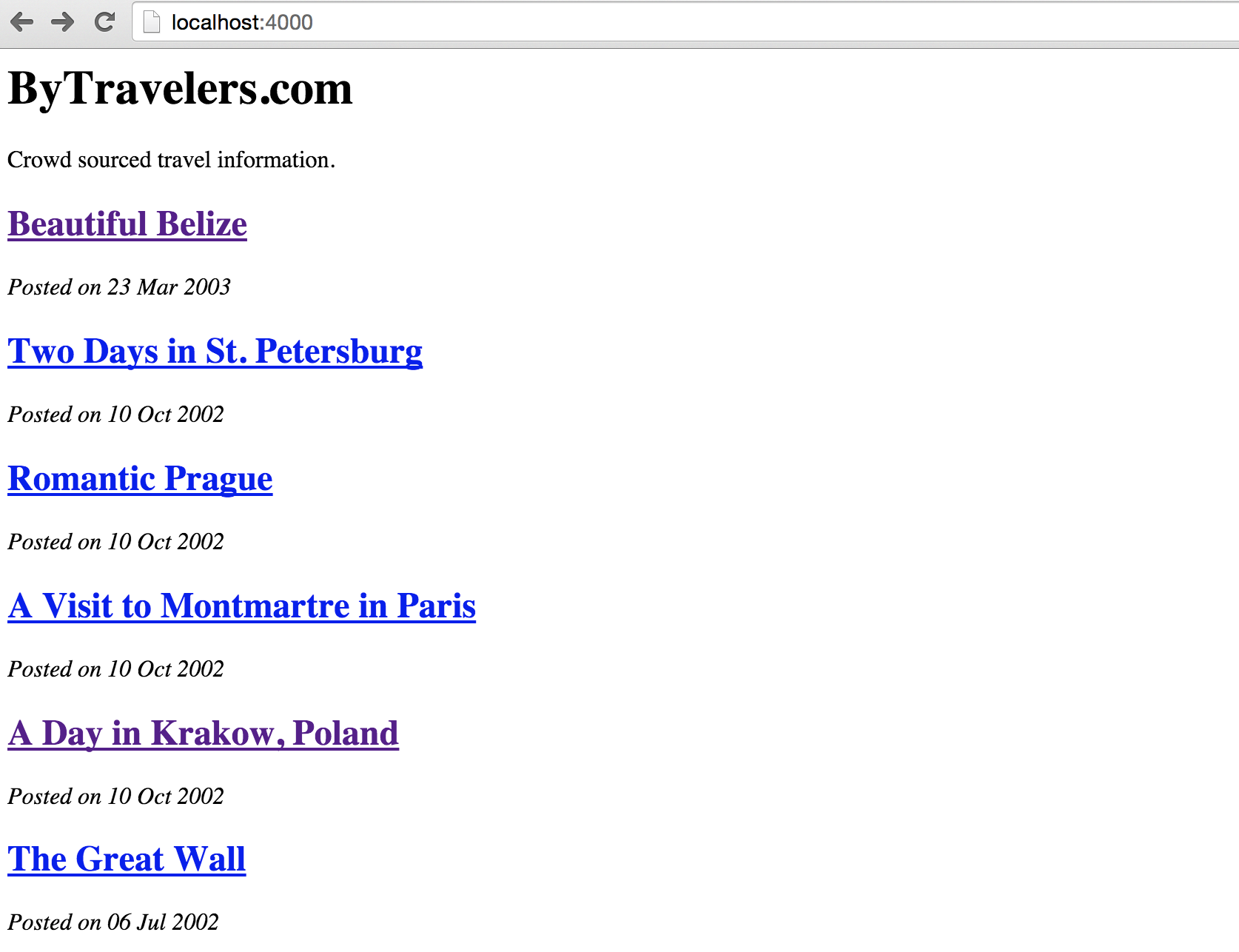

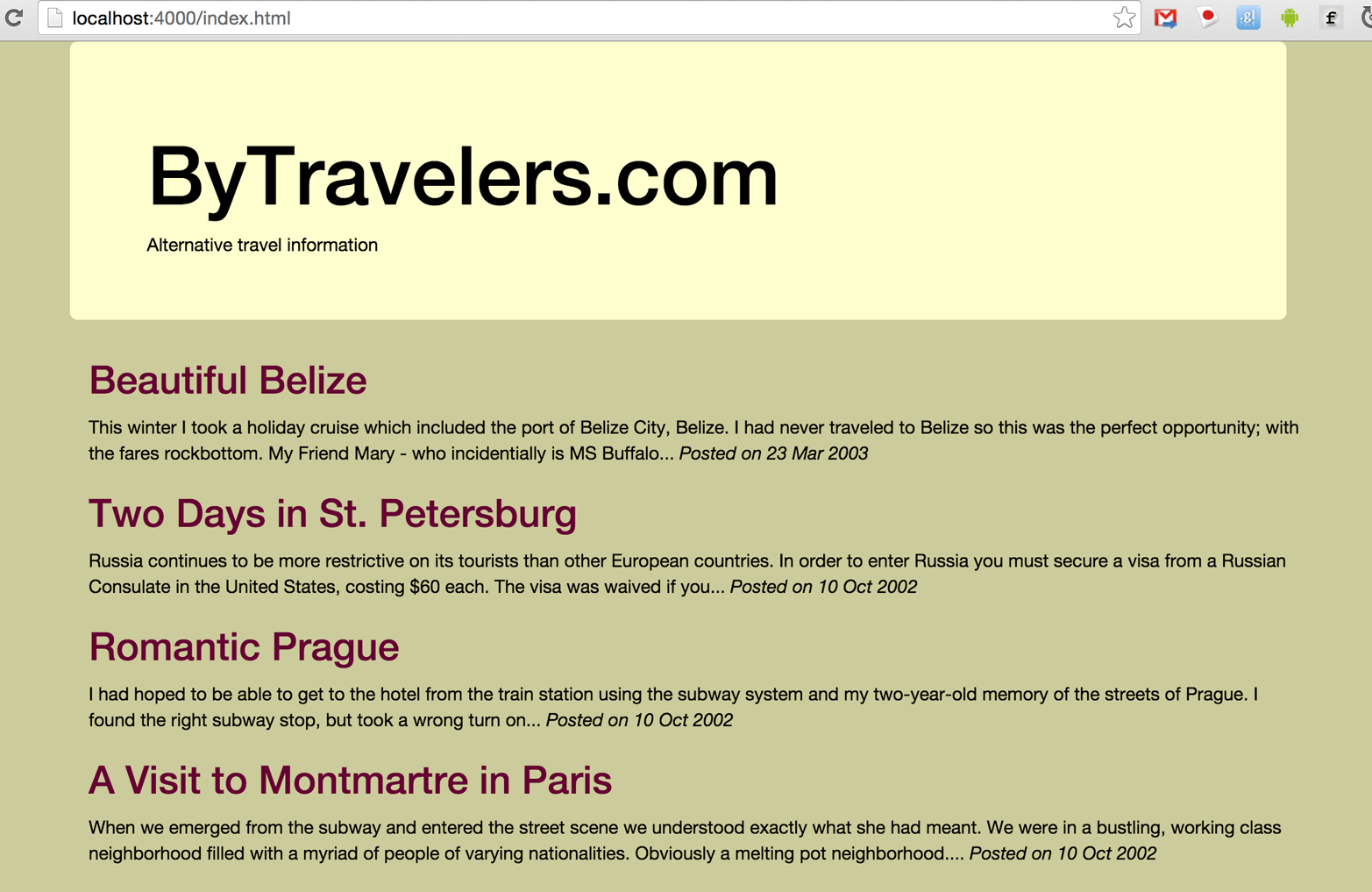

If you push a specifically organized repository into GitHub, GitHub will host a fully featured blog, equivalent in most ways to a Wordpress site (well, except for the complexity part). This chapter documents how to format your repository, how to use Markdown within Jekyll, how to use programmatic looping constructs provided by Liquid Templates, and then shows how to import an entire website from the Internet Archive into the Jekyll format using Ruby. We show how to respectfully spider a site using caching, a valuable technique when using APIs or third-party public information.

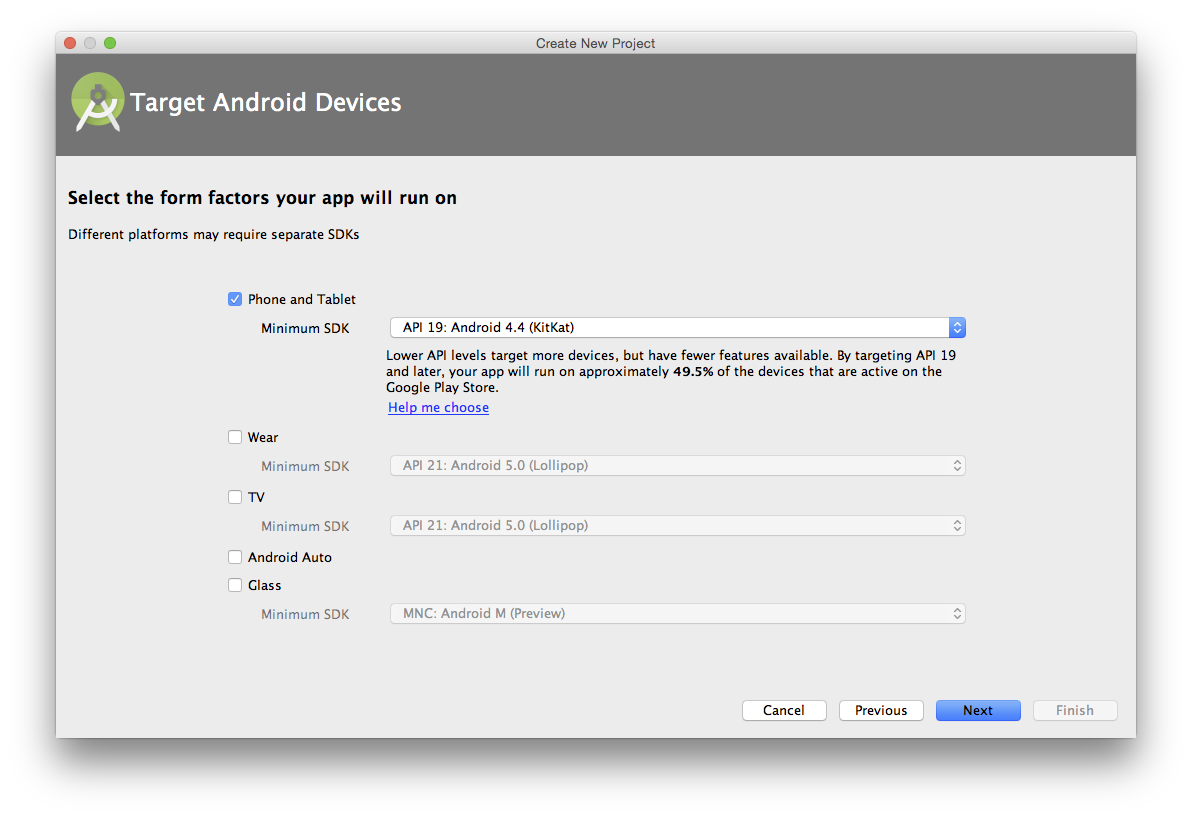

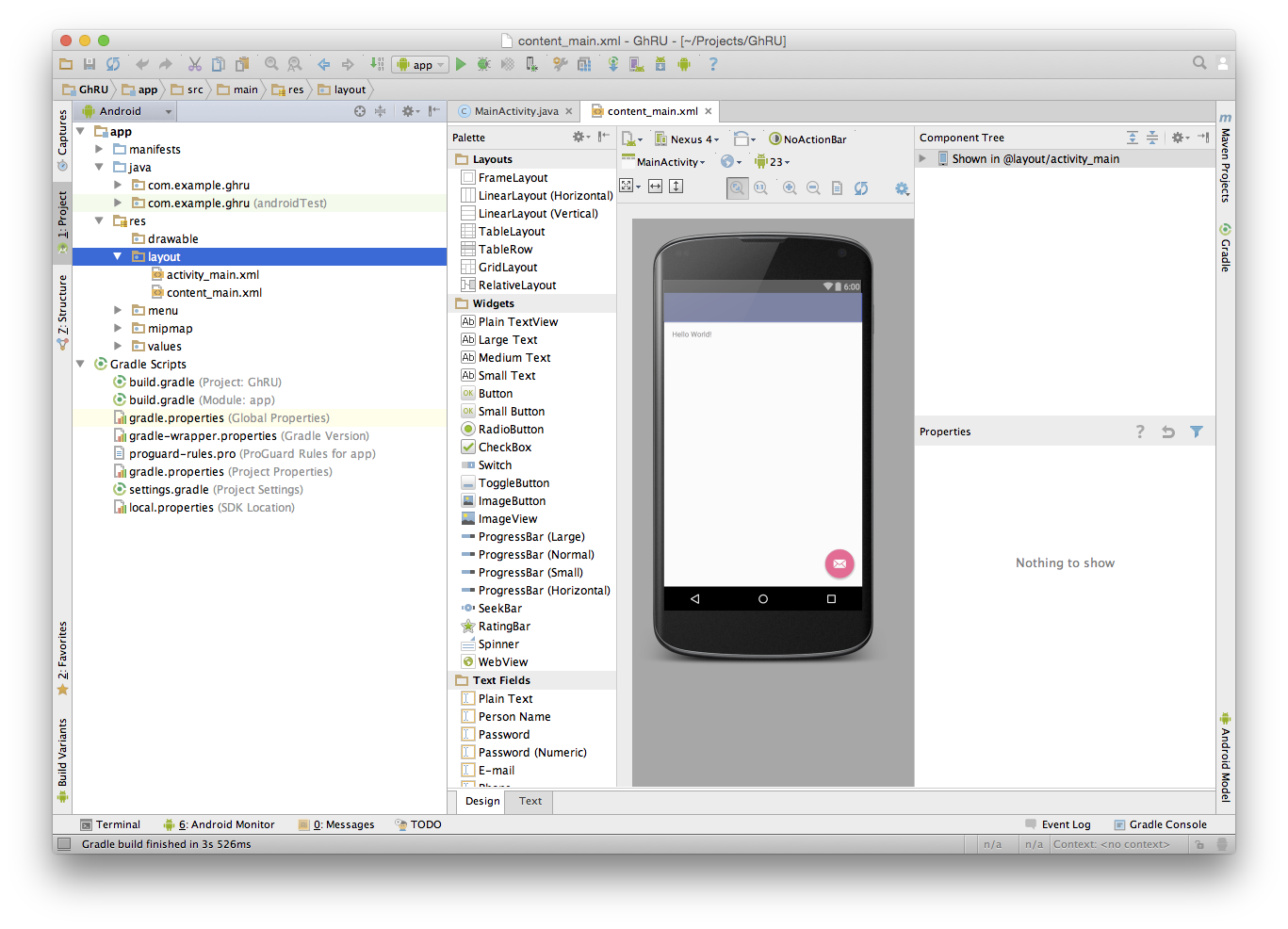

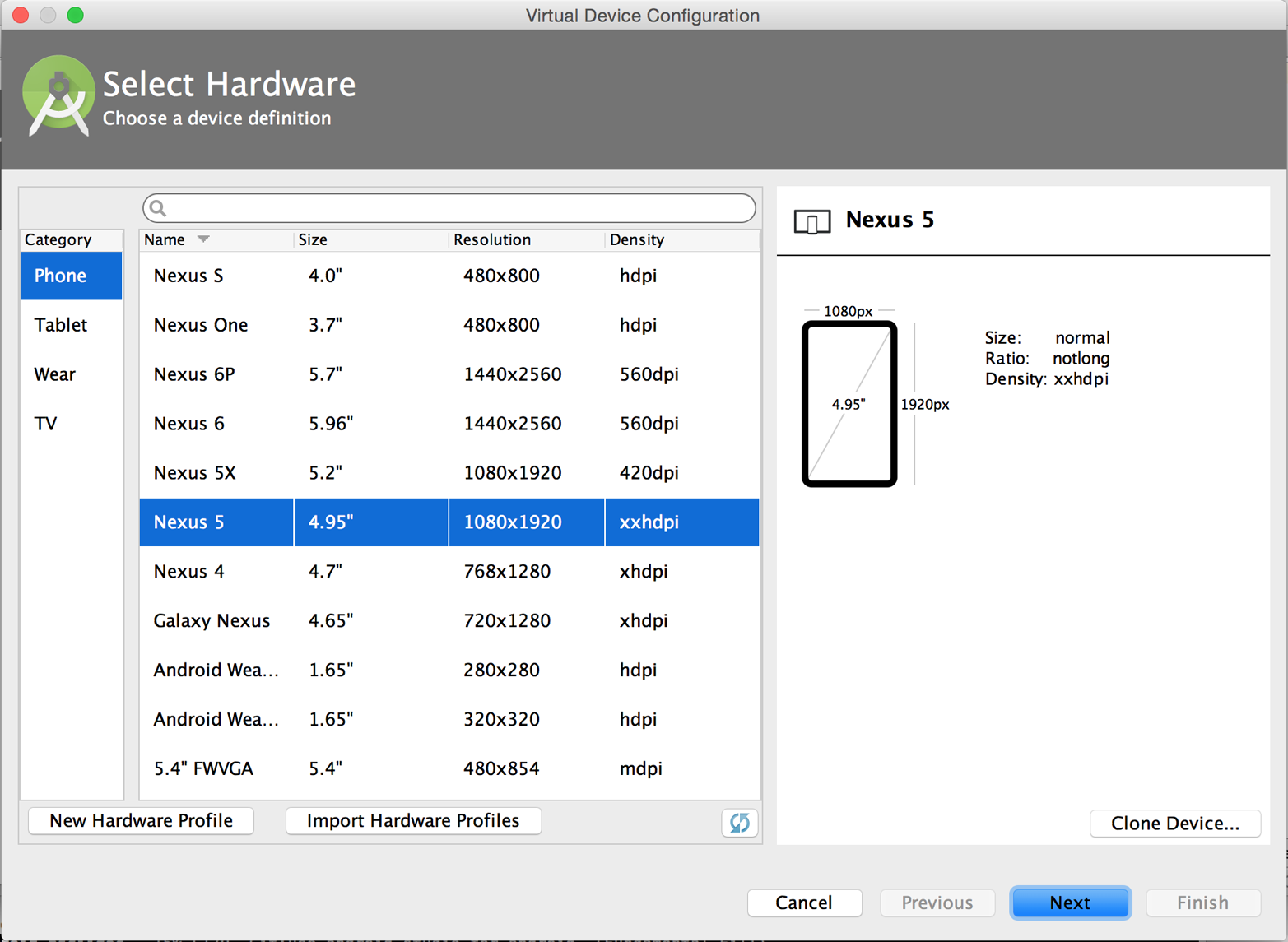

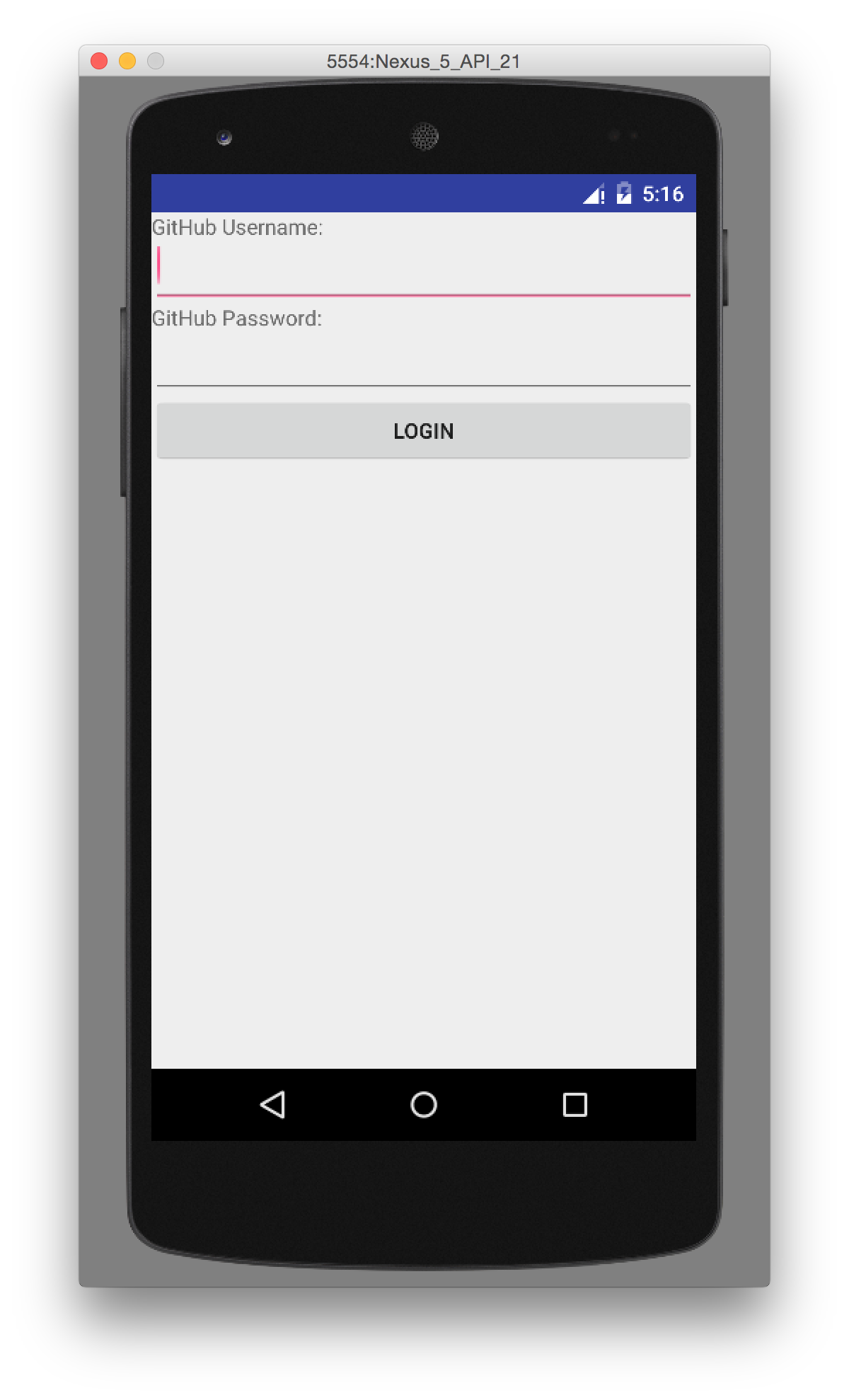

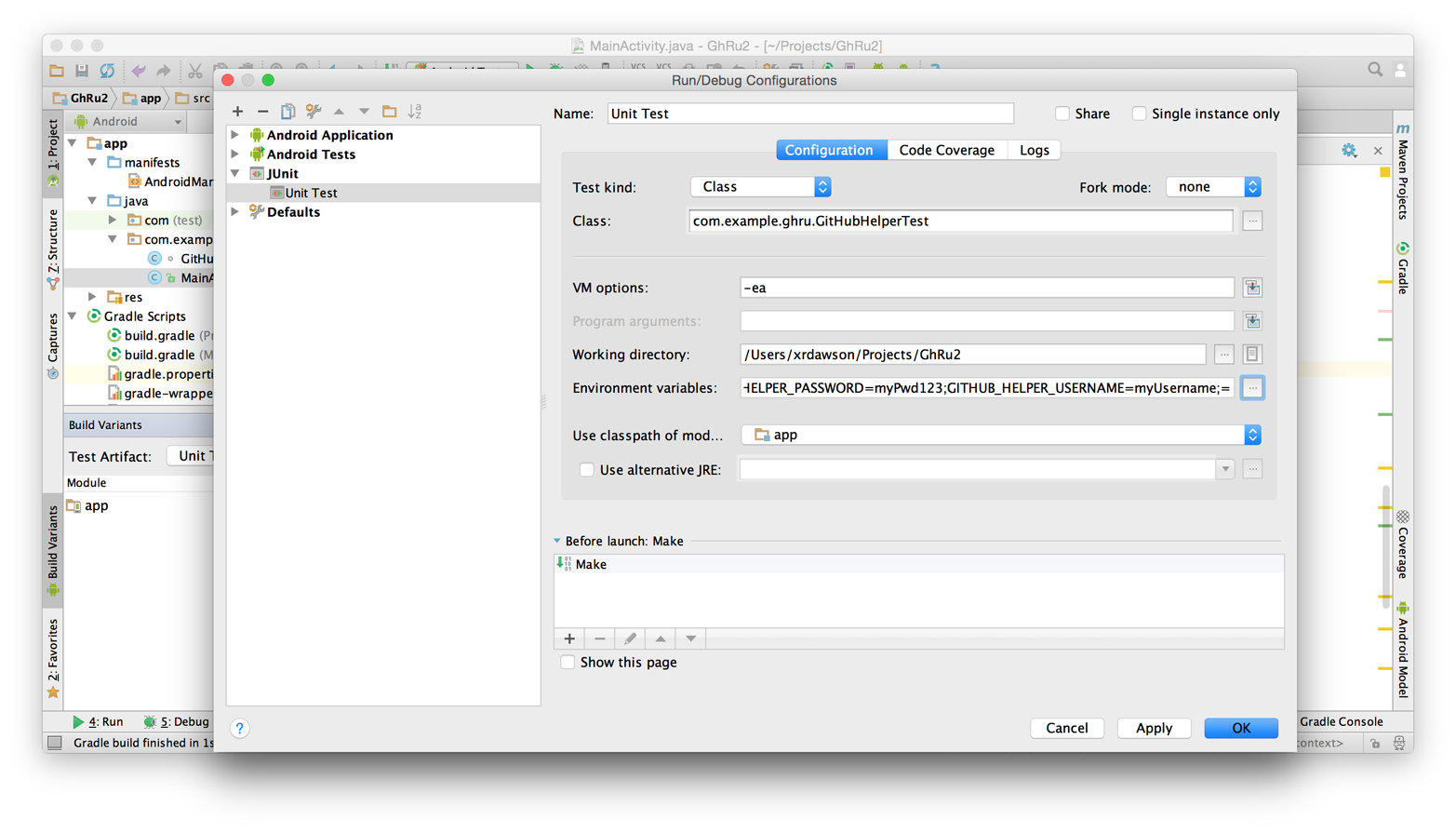

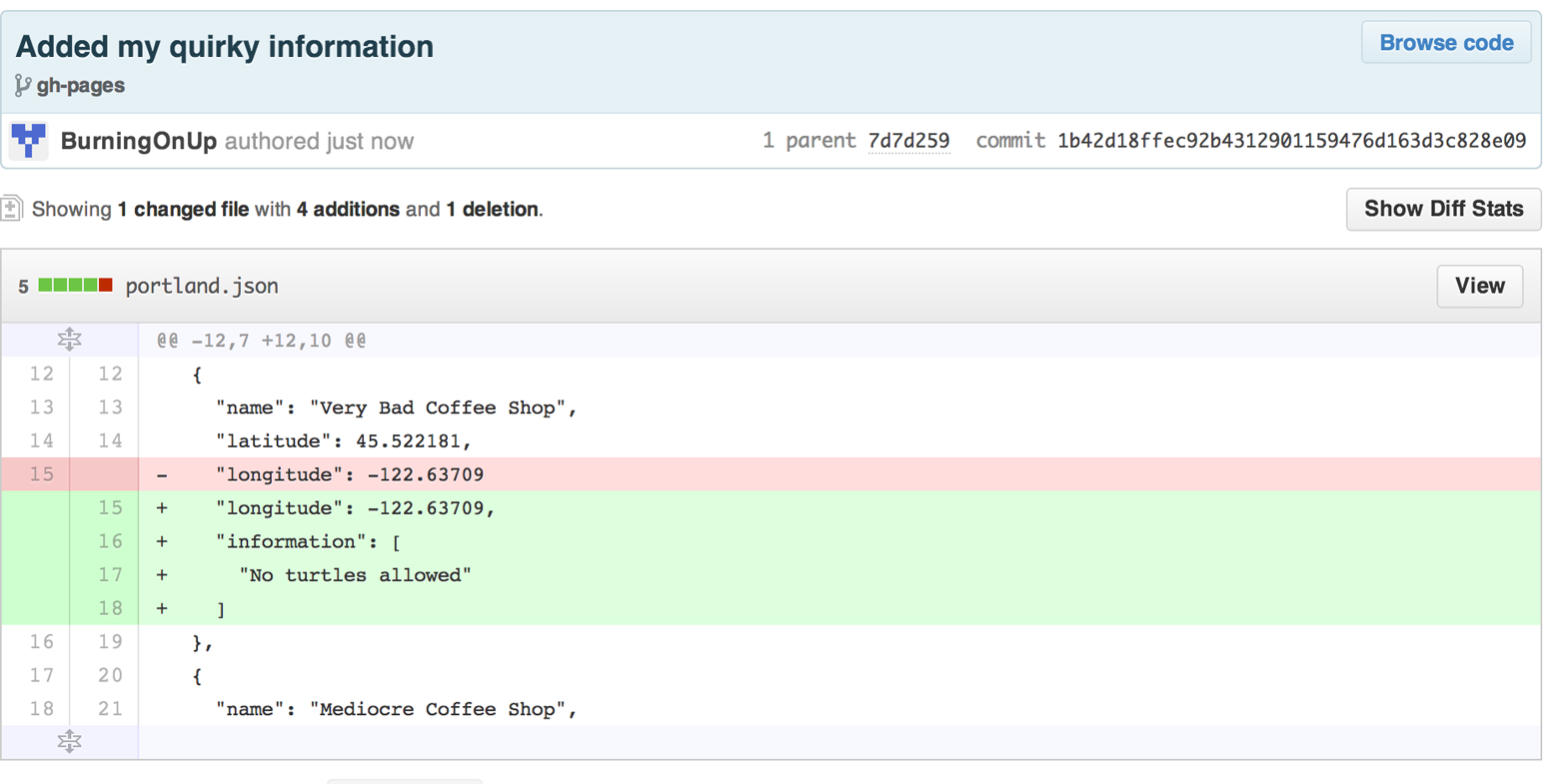

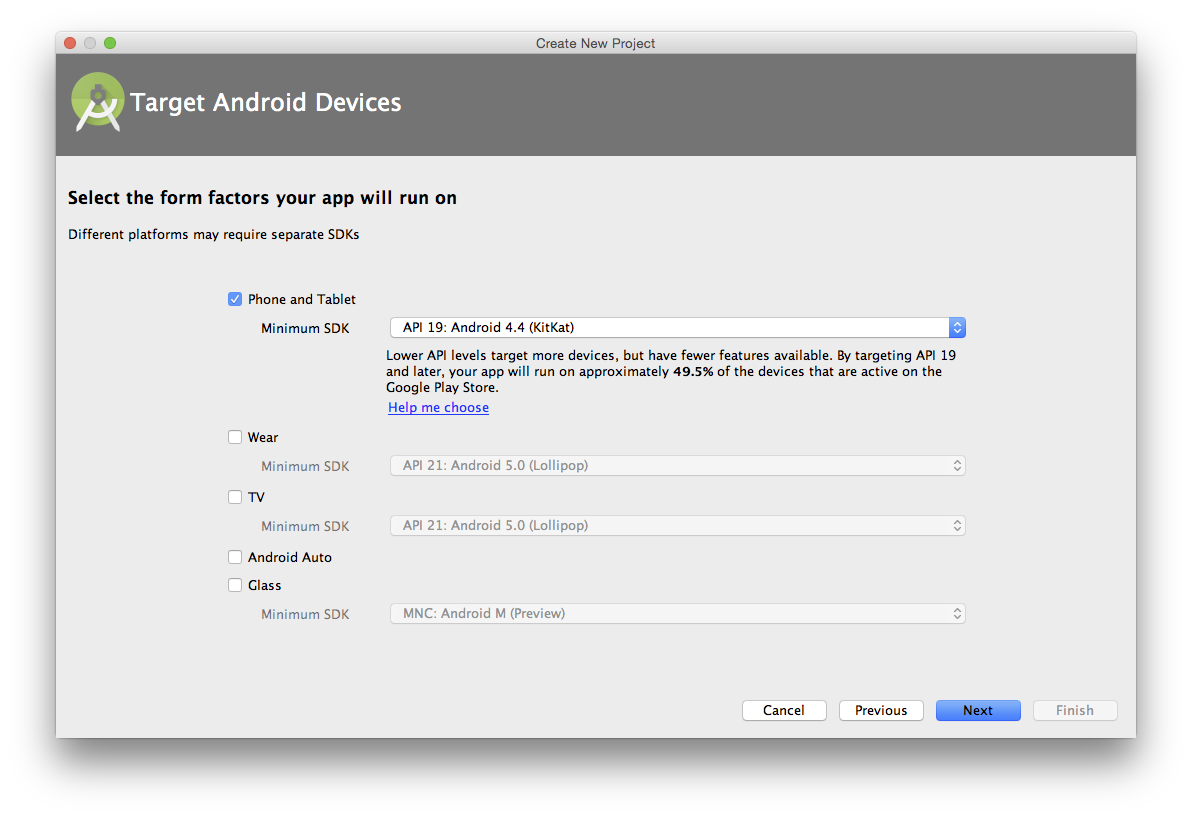

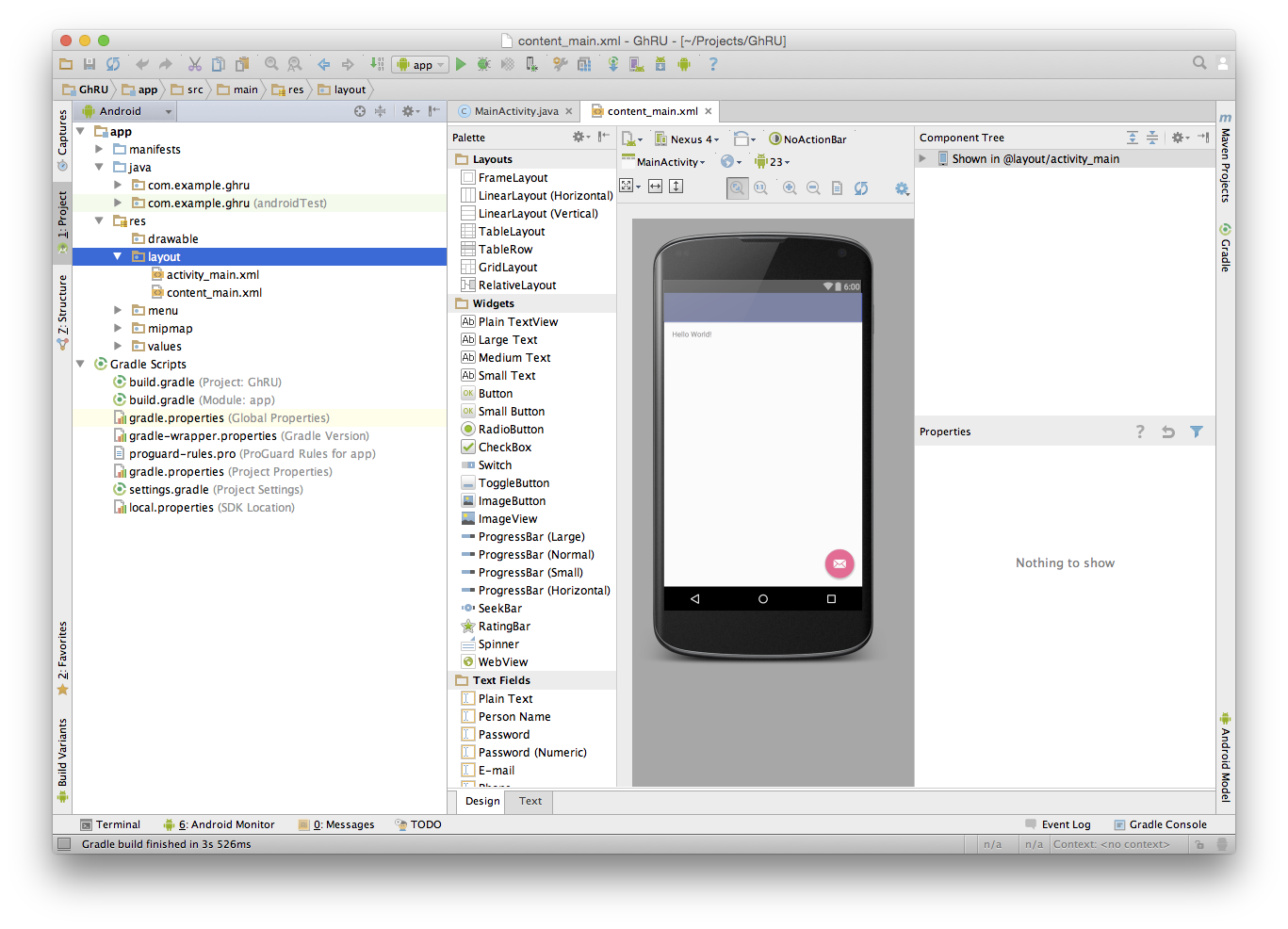

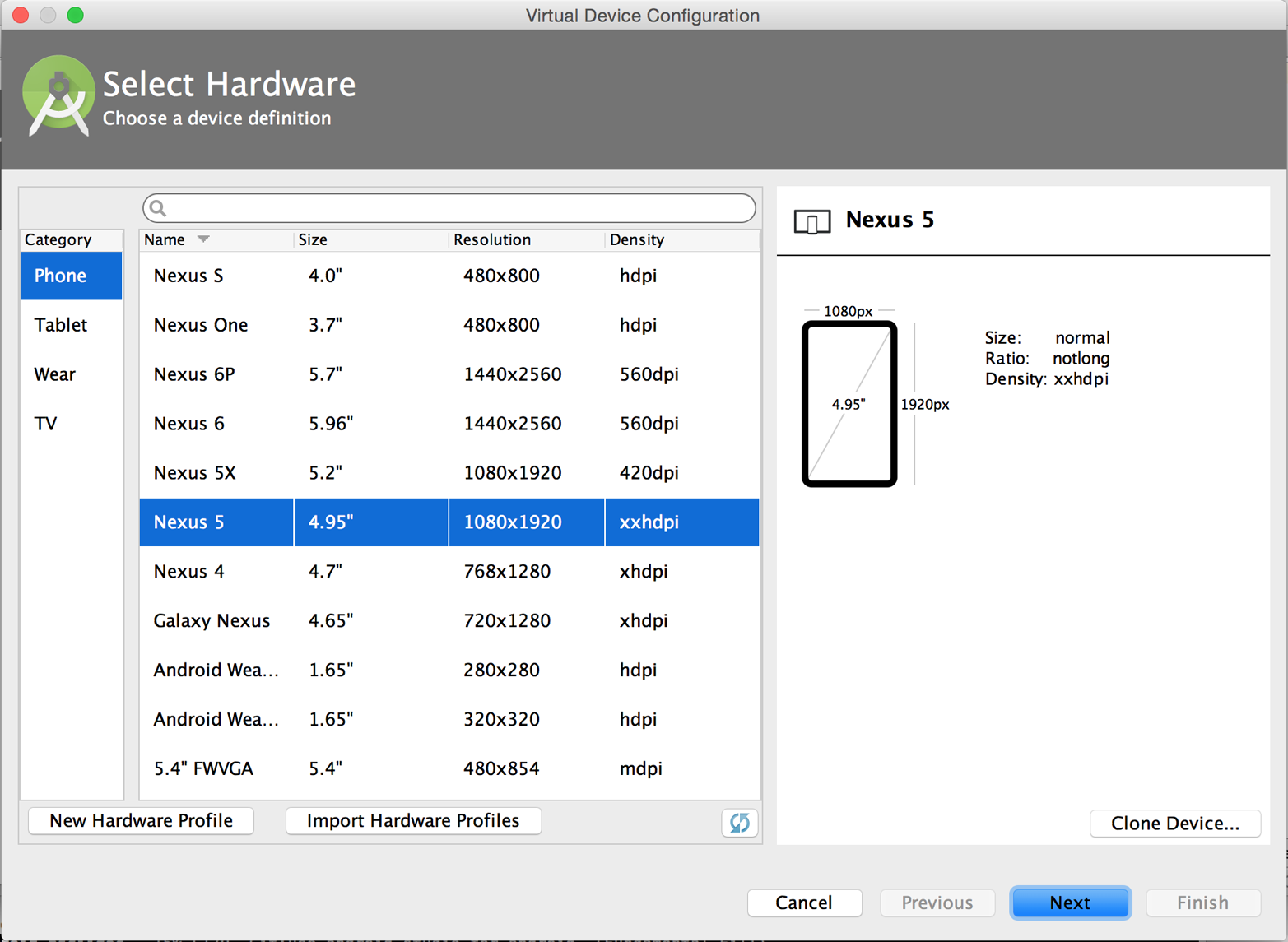

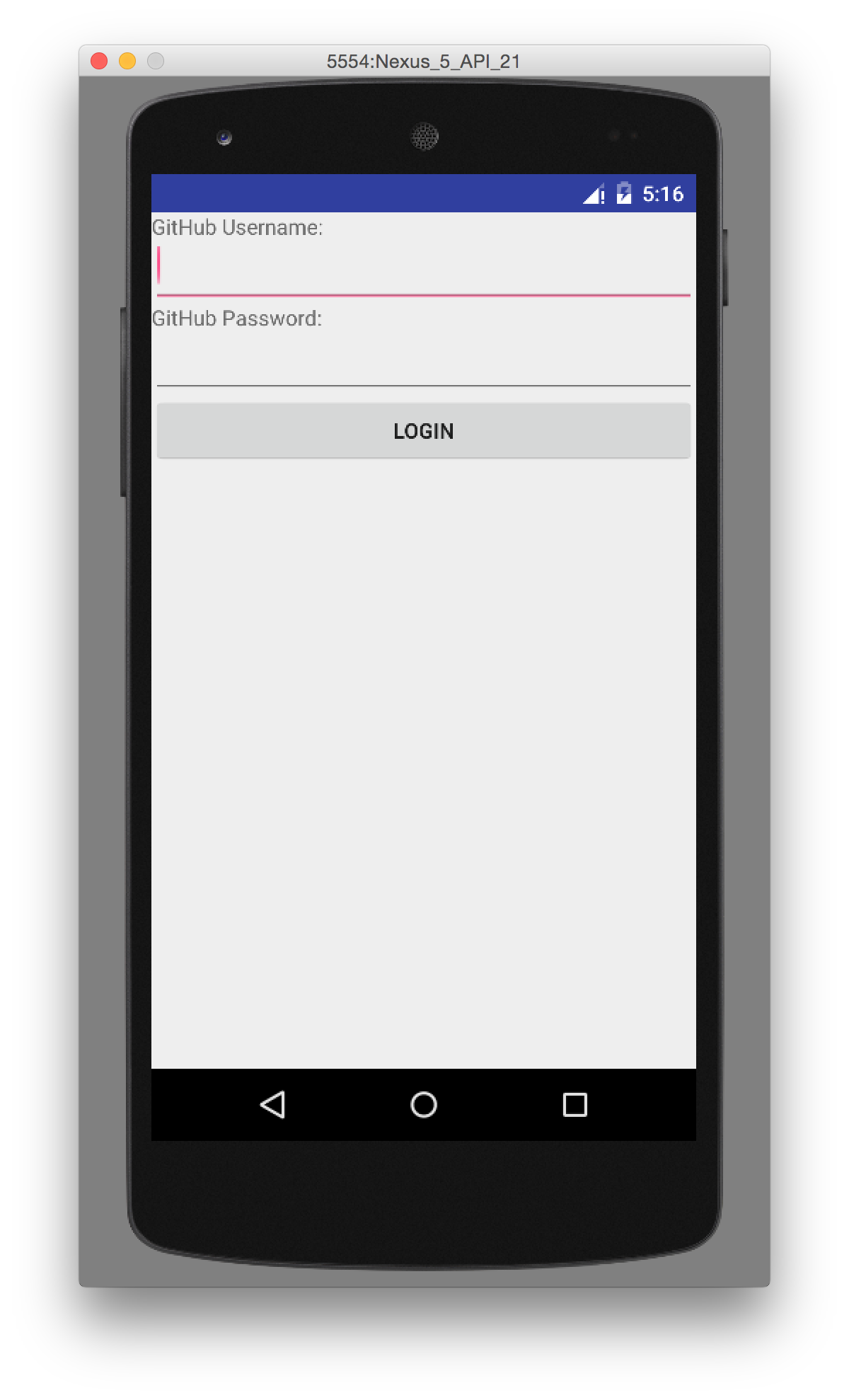

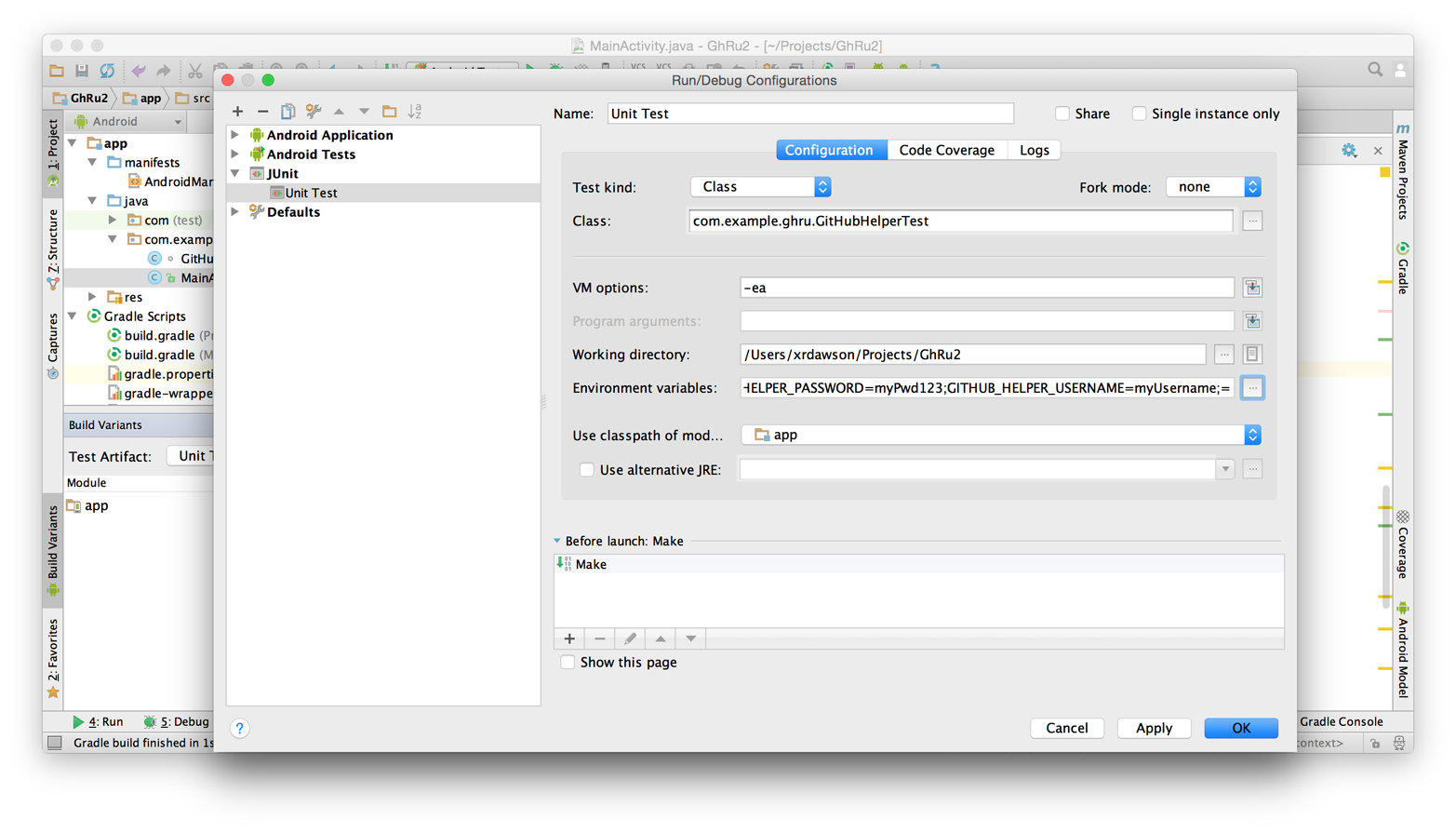

In this chapter we create a mobile application targeting the Android OS. Our application reads and writes information into a Jekyll repository from the Git Data section of the API. We show how to create user interface tests for Android that verify GitHub API responses using the Calabash UI testing tool.

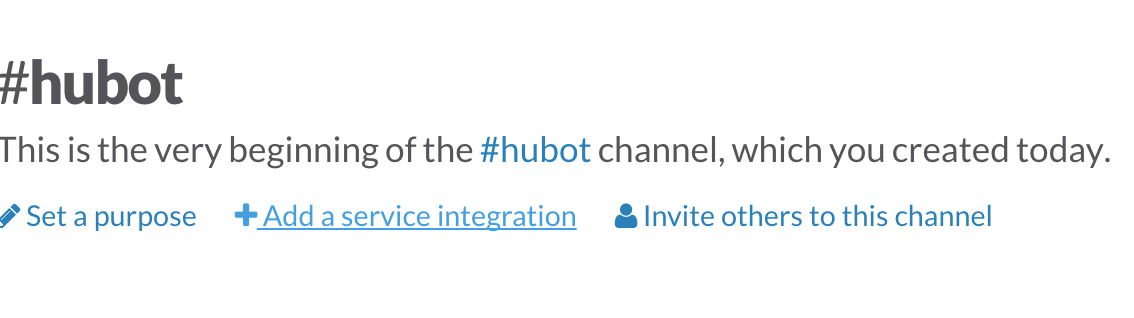

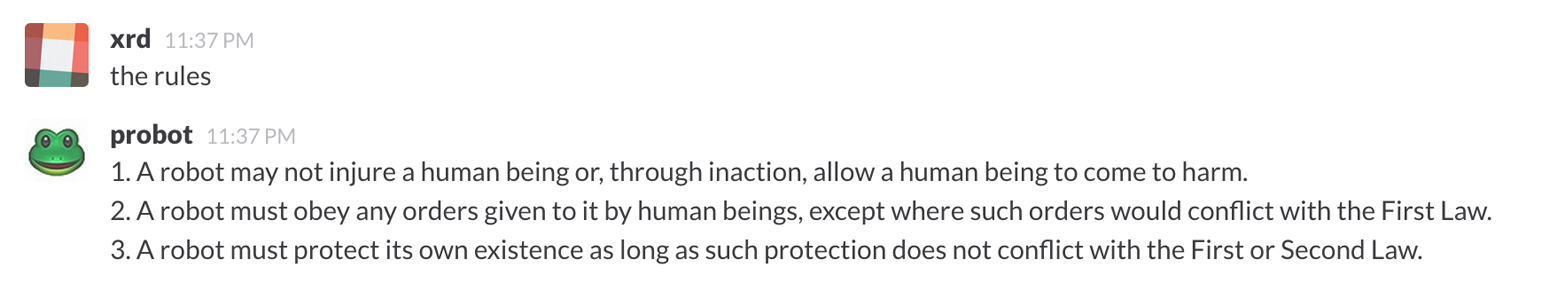

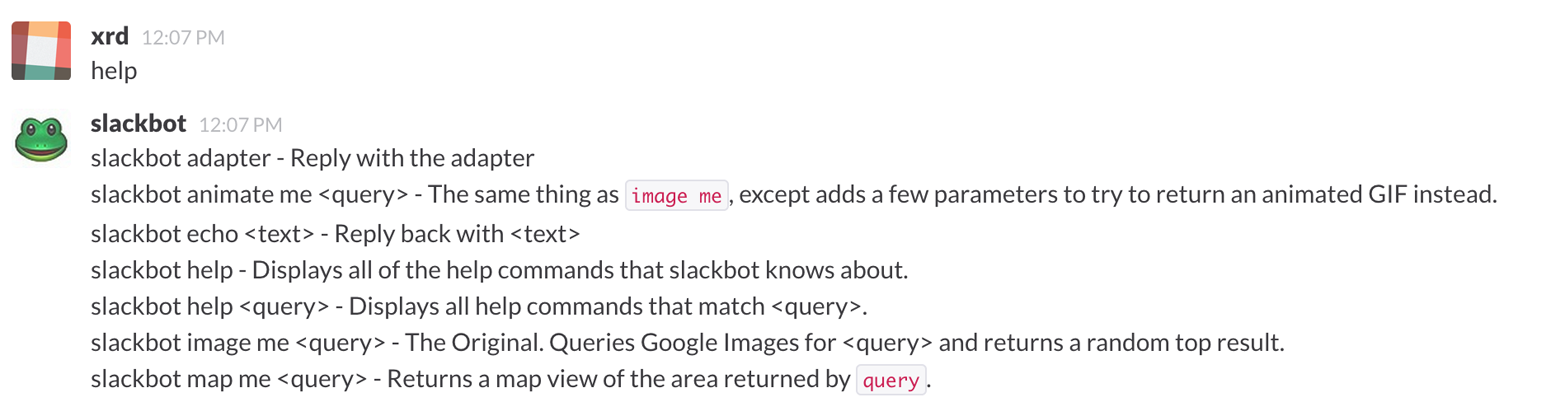

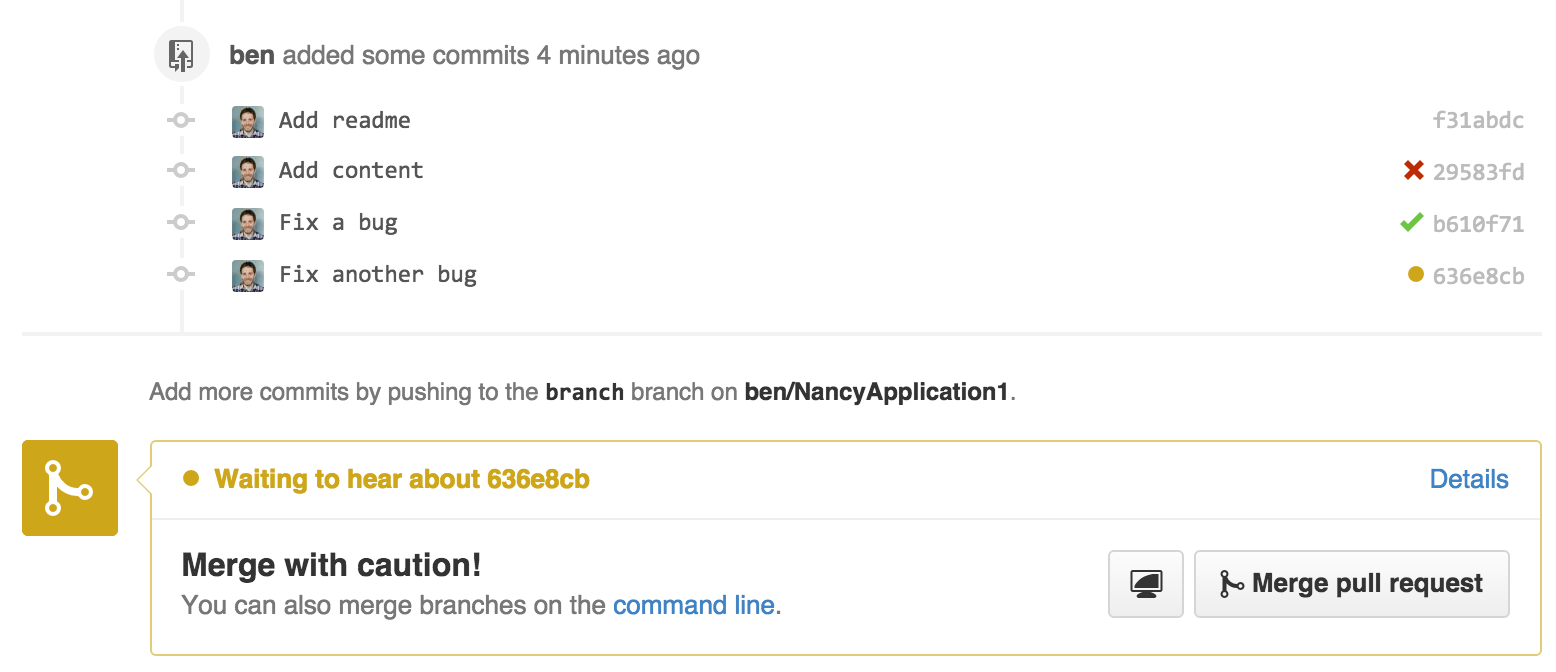

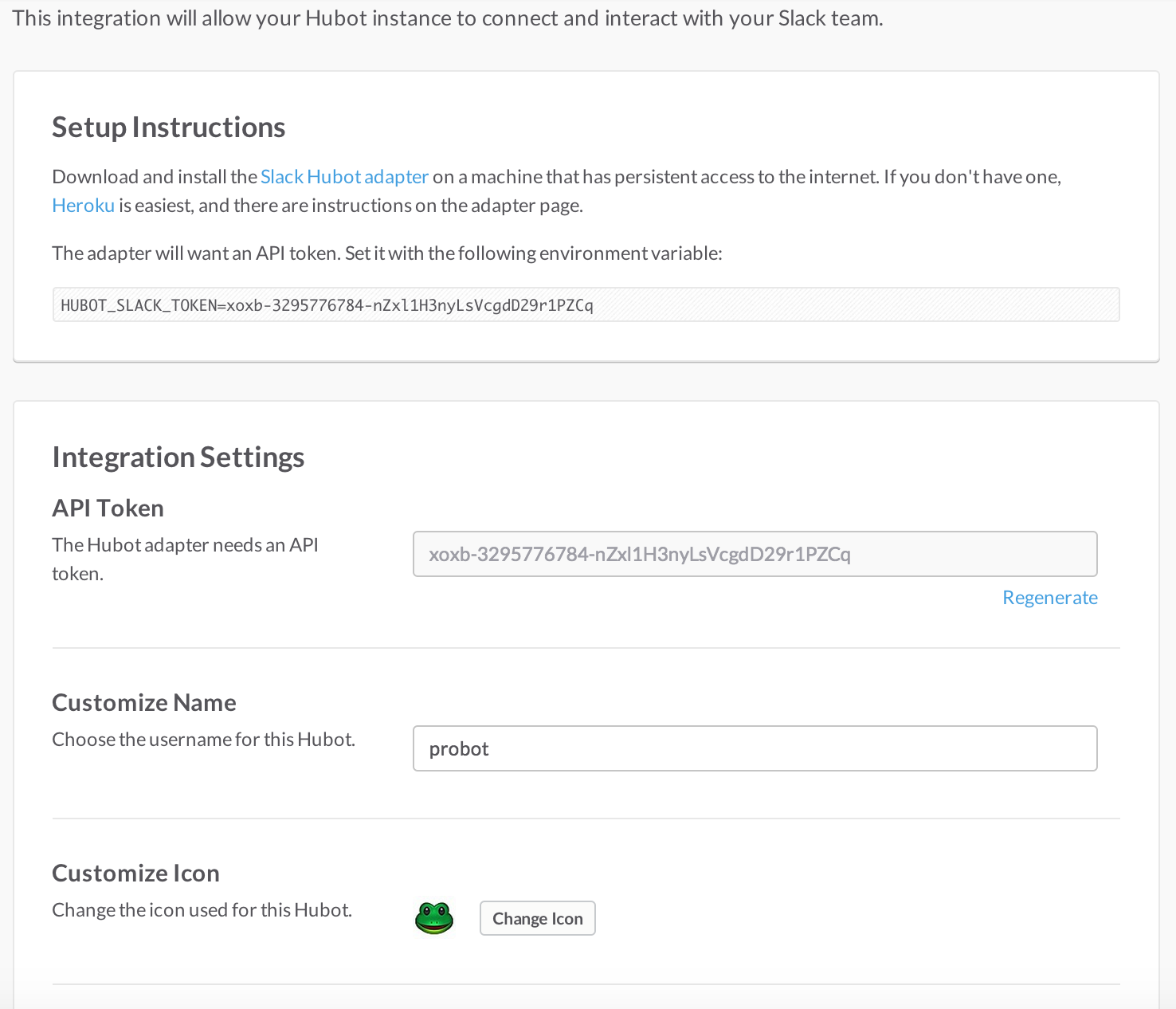

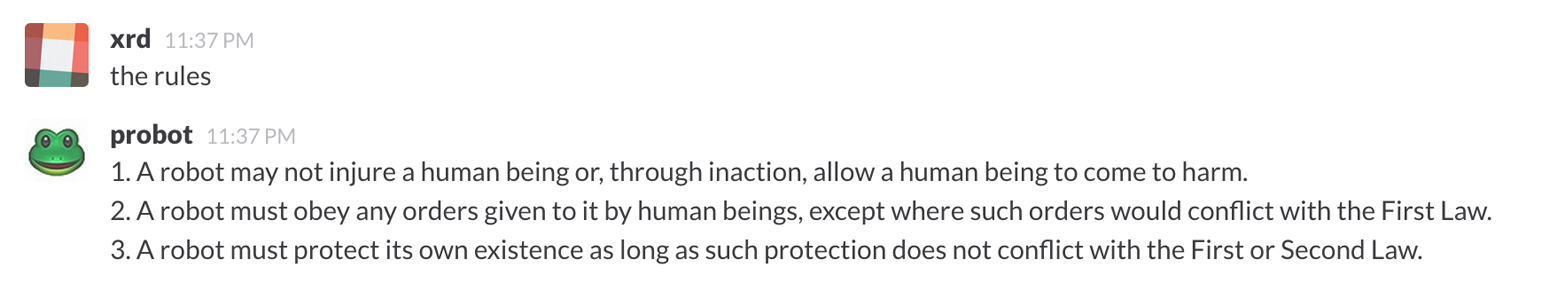

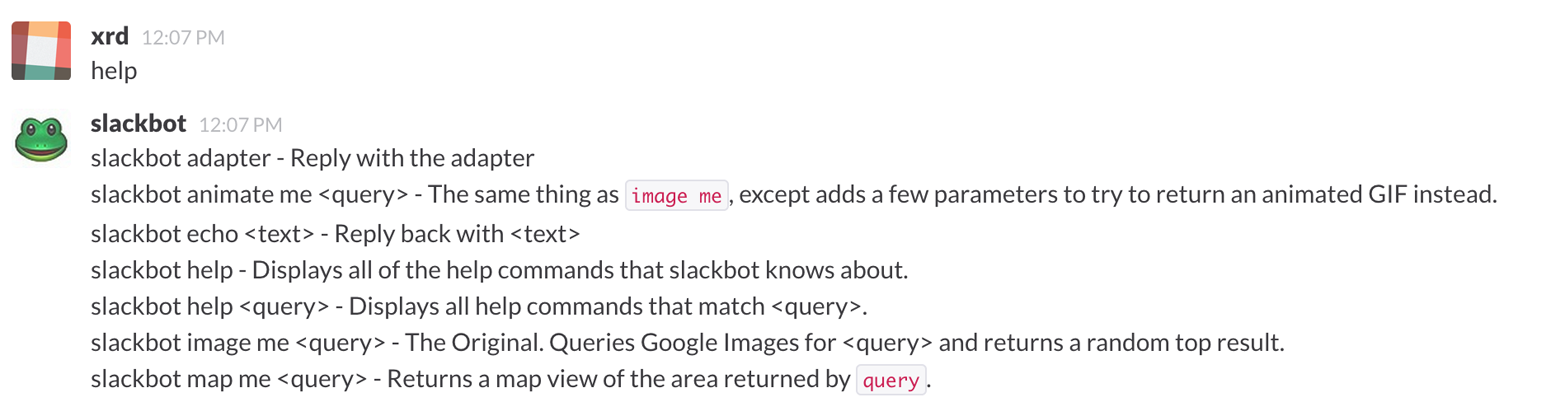

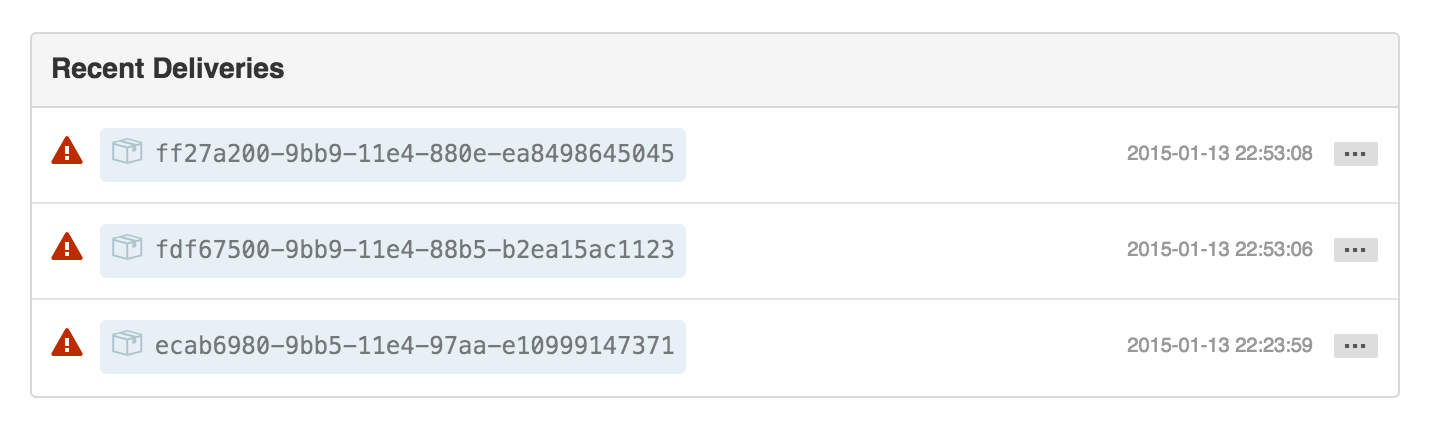

Hubot is a JavaScript (NodeJS) chat robot enabling technologists to go beyond developer operations (“DevOps”) to a new frontier called “ChatOps.” This chapter illustrates using the Activity and Pull Requests section of the API. In addition, we show how you can simulate GitHub notifications and how to write testable Hubot extensions (which is often a challenge when writing JavaScript code). We string all these pieces together and build a robot that automates assigning pull request review requests.

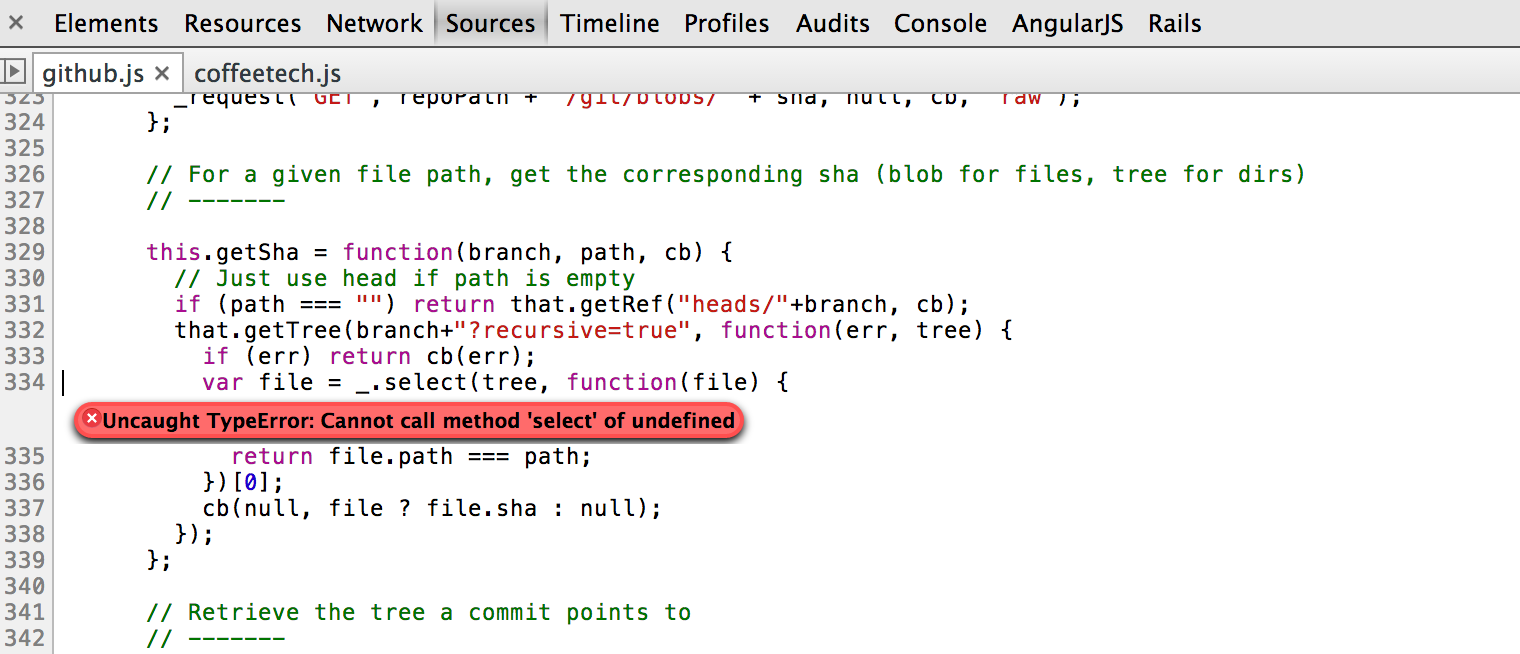

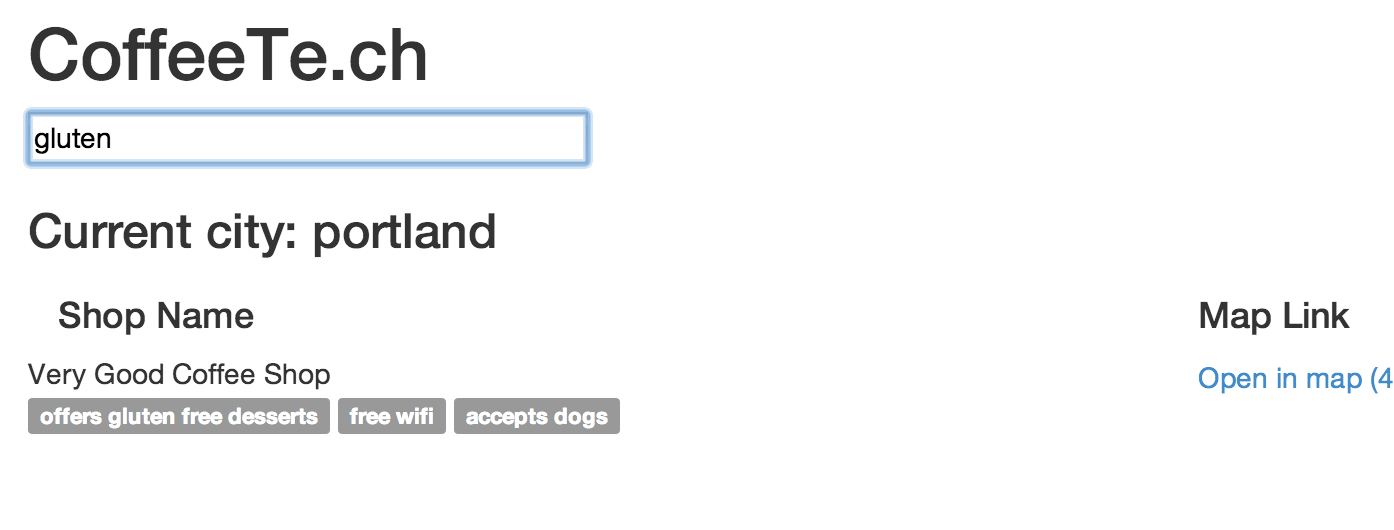

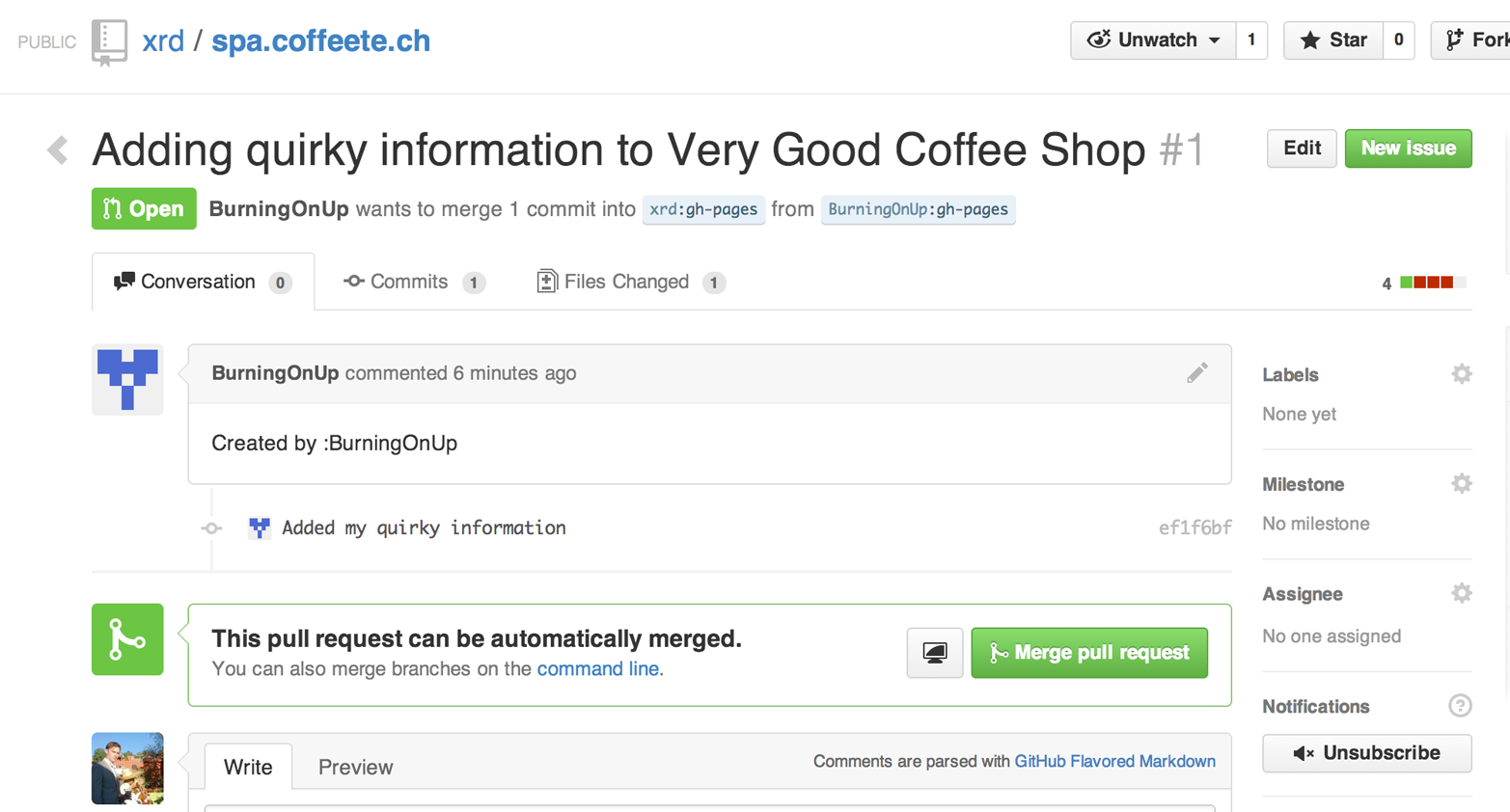

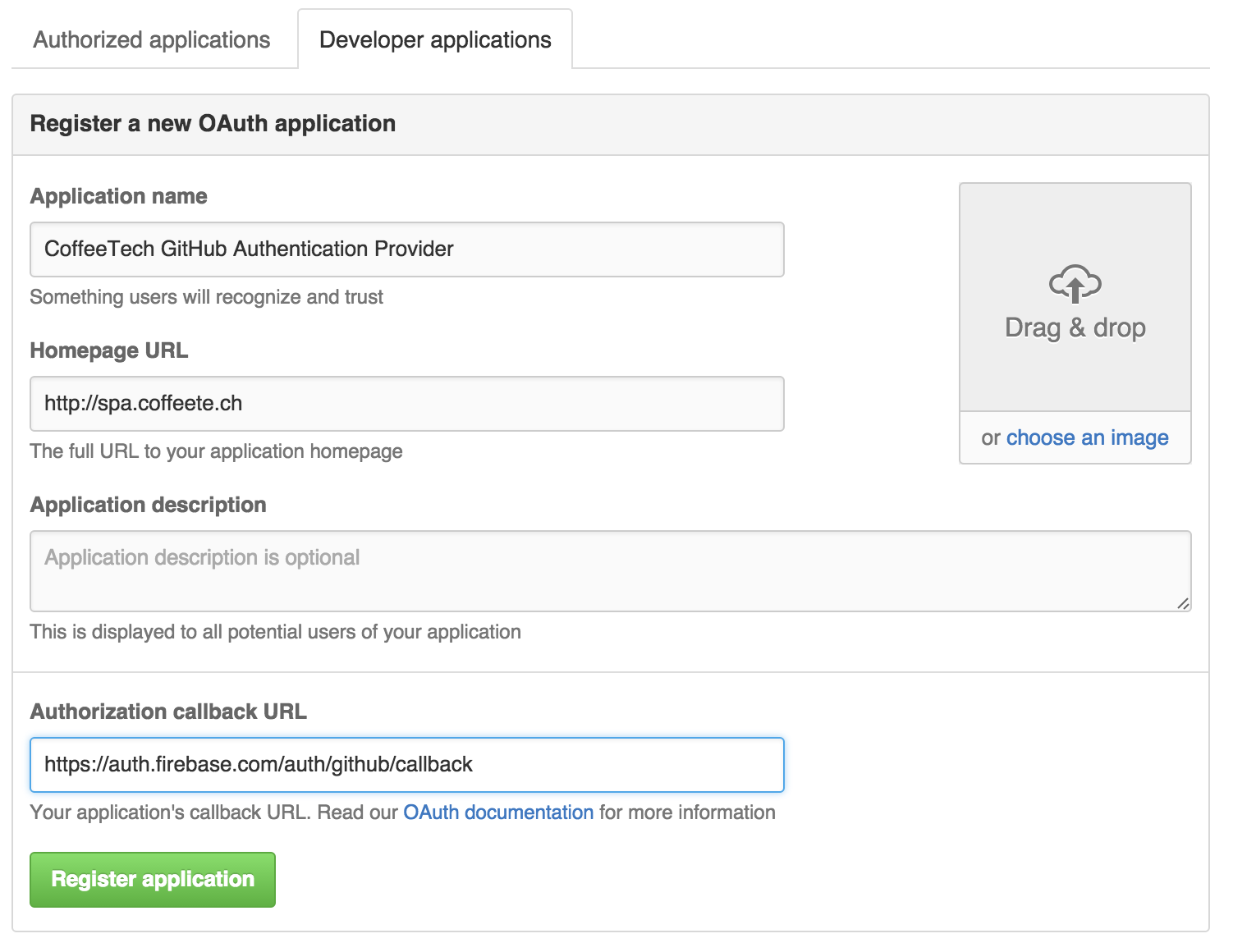

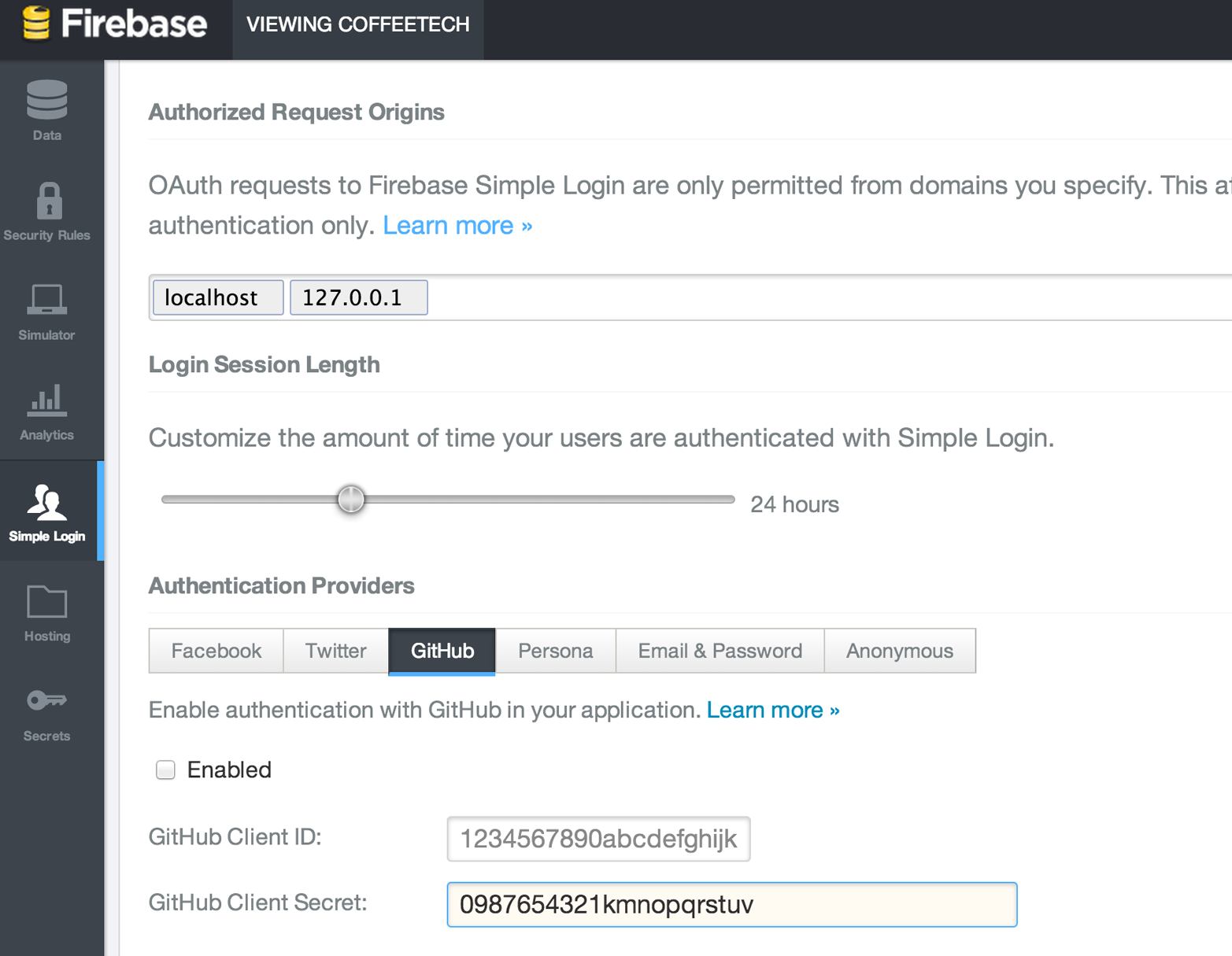

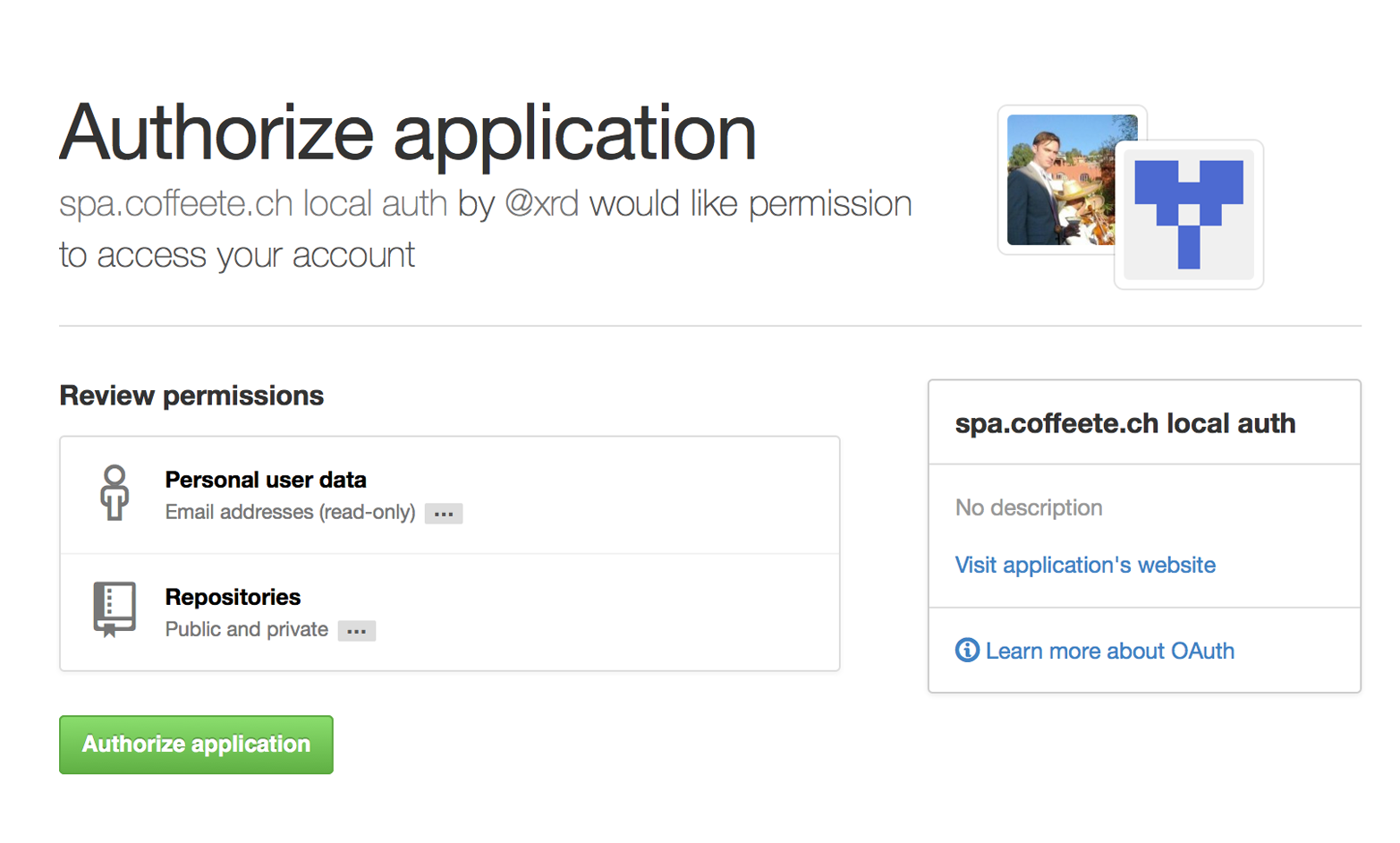

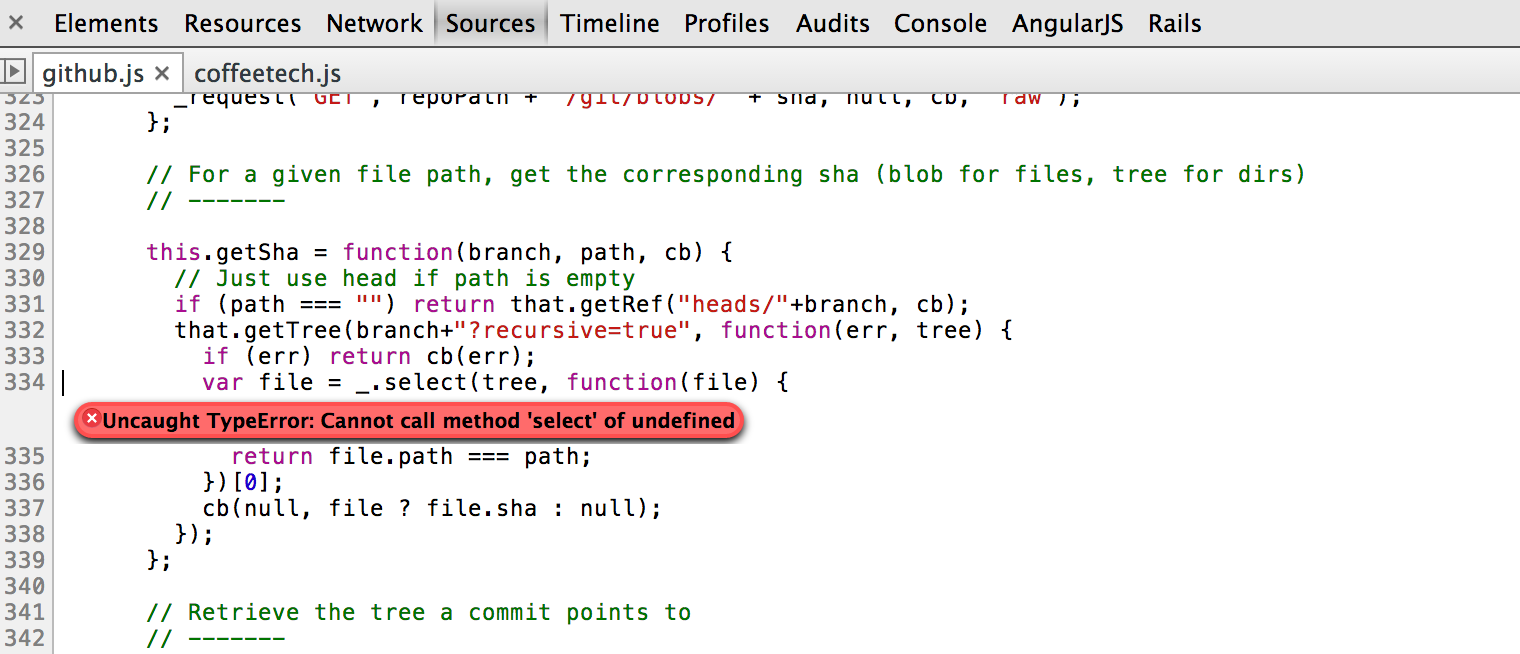

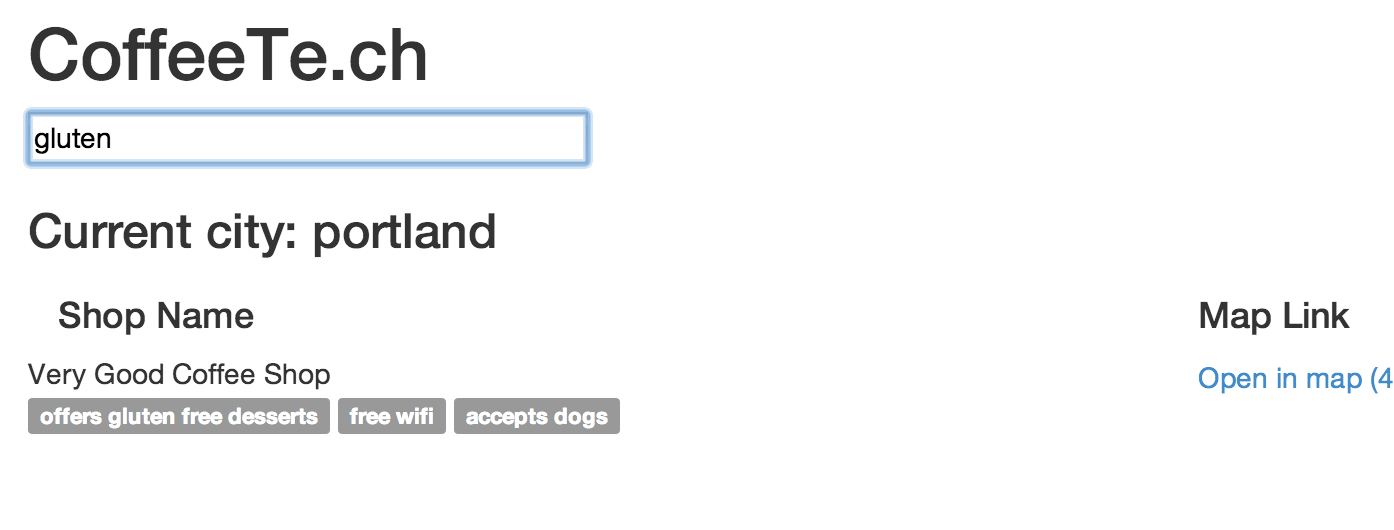

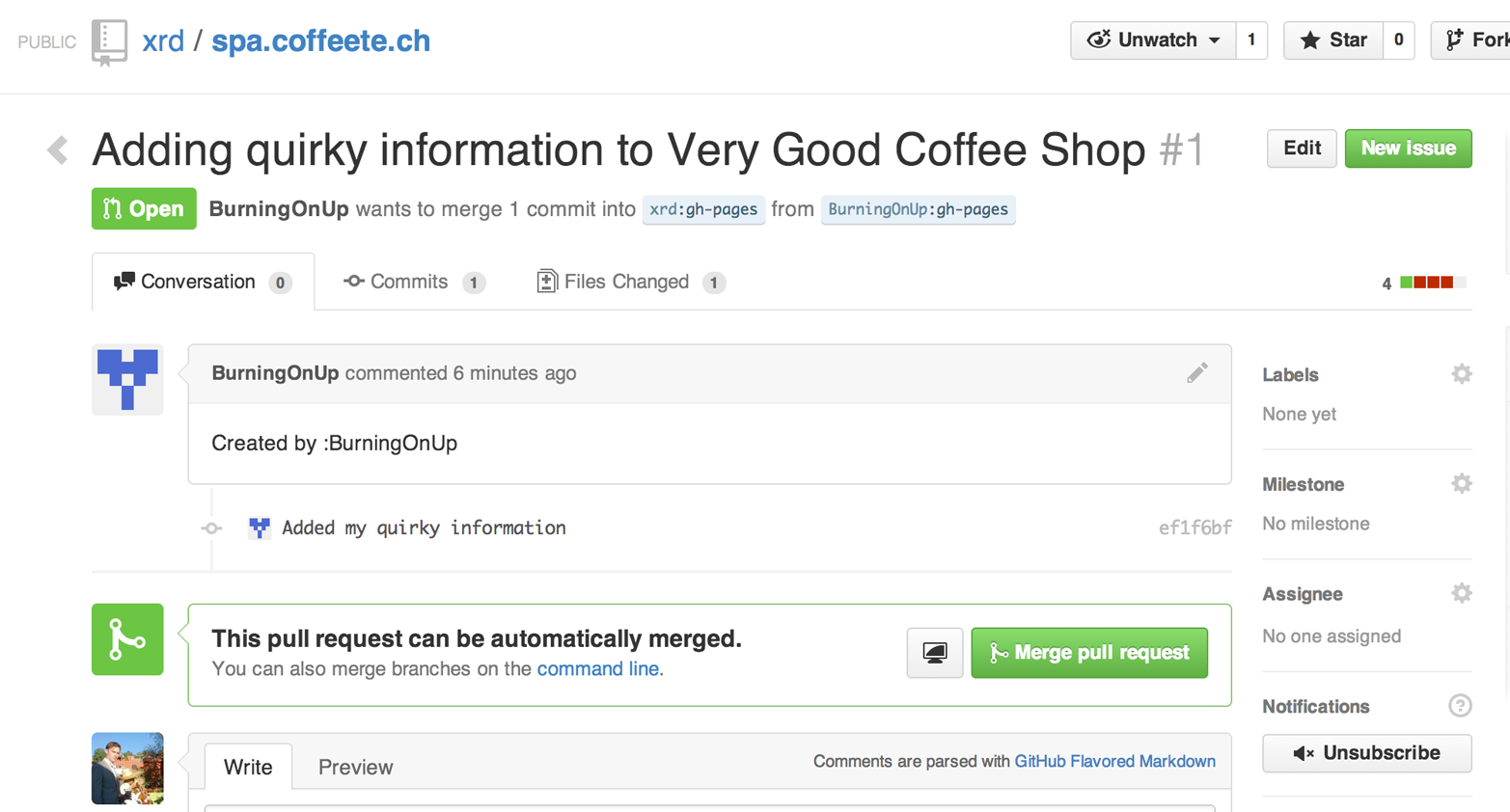

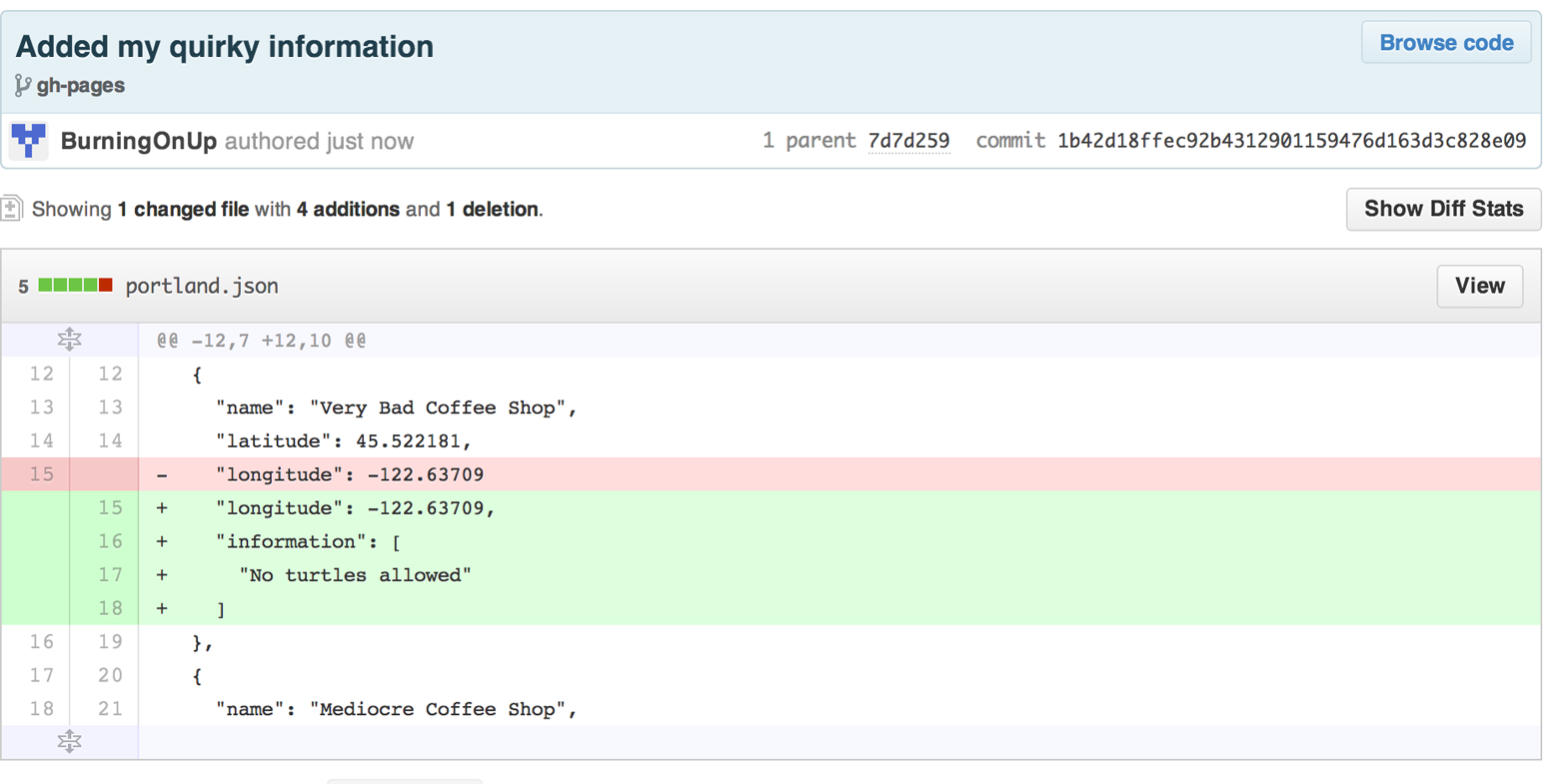

Did you know you can host an entire “single-page application” on GitHub? We show how you can build a coffee shop information app backed by a flat file database hosted on GitHub written in the JavaScript language. Importantly, we show how you can write a testable JavaScript application that mocks out the GitHub API when needed.

We don’t cover the Organizations API: this is a small facet of the API with only the ability to list organizations and modify metadata about your organization; once you have used other parts of the API this nook of the API will be very intuitive.

We also don’t cover the Users section of the API. While you might expect it to be an important part of the API, the Users API is really nothing more than an endpoint to list information about users, add or remove SSH keys, adjust email addresses, and modify your list of followers.

There is not a specific chapter on issues. GitHub originally grouped issues and pull requests into the same API section, but with the growing importance of pull requests GitHub has separated them in the API documentation. In fact, they are still internally stored in the same database and pull requests are, at least for now, just another type of issue. Chapter 8 documents using pull requests and is a good reference for issues in that way.

The Enterprise API works almost exactly the same as the GitHub.com site API. We don’t have a chapter telling a story about an Enterprise version of the API, but we do provide an appendix that contains a few notes about how the examples work when using an Enterprise server. We also provide the specific syntax for each of the languages used in the chapters that will make any of the examples provided work with an Enterprise server.

Through these stories about the technologies behind GitHub we hope to give you an inside look at the inner workings of the brain of a developer building on top of the GitHub API.

This book should be an interesting source of information for people who have used Git or GitHub and want to “level-up” their skills related to these technologies. People without any experience using GitHub or Git should start with an introductory book on these technologies.

You should have good familiarity with at least one imperative modern programming language. You don’t need to be an expert programmer to read this book, but having some programming experience and familiarity with at least one language is essential.

You should understand the basics of the HTTP protocol. The GitHub team uses a very standard RESTful approach for its API. You should understand the difference between a GET request and POST request and what HTTP status codes mean at the very least.

Familiarity with other web APIs will make traversing these chapters easier, although this book simultaneously aspires to provide a guide showing how a well-thought-out, well-designed, and well-tested web API creates a foundation for building fun and powerful tools. If you have not used web APIs extensively, but have experience using other types of APIs, you will be in good company.

Much of the book focuses on the technical capabilities exposed by GitHub and the powerful GitHub API. Perhaps you feel constrained by using Git only from within a certain toolset; for example, if you are an Android developer using Git to manage your app source code and want to unlock Git in other places in your life as a developer, this book provides a wider vista to learn about the power of Git and GitHub. If you have fallen into using Git for your own projects and are now interested in using Git within a larger community, this book can teach you all about the “social coding” style pioneered and dogfooded by the GitHub team. This book provides a stepping stone for software developers who have used other distributed version control systems and are looking for a bridge to using their skills with Git and within a web service like GitHub.

Like any seasoned developer, automation of your tools is important to you. This book provides examples of mundane tasks converted into automated and repeatable processes. We show how to do this using a variety of languages talking to the GitHub API.

To make this book accessible to everyone, regardless of their editor or operating system, many of the programming samples work within the command line. If you are unfamiliar with the “command line” this book will give you a firm understanding of how to use it, and we bet you will find great power there. If you have hated the command line since your father forced you to use it when you were five, this is the perfect book to rekindle a loving relationship with the bash shell.

If you absorb not only the technical facets of using GitHub but also pay attention to the cultural and ideological changes offered behind the tools, you’ll very likely see a new way of working in the modern age. We focus on these “meta” viewpoints as we discuss the tools themselves to help you see these extra opportunities.

Almost every chapter has an associated repository hosted on GitHub where you can review the code discussed. Fork away and take these samples into your own projects and tools!

Finally, we help you write testable API-backed code. Even the most experienced developers often find that writing tests for their code is a challenge, despite the massive body of literature connecting quality code with tests. Testing can be especially challenging when you are testing something backed by an API; it requires a different level of thinking than is found in strict unit testing. To help you get past this roadblock, whenever possible, this book shows you how to write code that interacts with the GitHub API and is testable.

There are two languages that are so fundamentally linked to GitHub that you do need to install and use them in order to get the most out of this book.

A simple, readable programming language the founders of GitHub used extensively early in the life of the company.

The only ubiquitous browser-side programming language; its importance has grown to new heights with the introduction of NodeJS, rivaling even the popularity of Ruby on Rails as a server-side toolkit for web applications, especially for independent developers.

Undoubtedly, many of you picking up this book already have familiarity with Ruby or JavaScript/NodeJS. So, the basics and installation of them are in appendices in the back of the book. The appendices don’t cover syntax of these languages; we expect you have experience with other languages as a prerequisite and can read code from any imperative language regardless of the syntax. Later chapters discuss facets of the API and go into language details at times, but the code is readable regardless of your familiarity with that particular language. These explanatory appendices discuss the history of these tools within the GitHub story as well as important usage notes like special files and installation options.

Your time will not be wasted if you install and play with these two tools. Between them you will have a solid toolset to begin exploration of the GitHub API. Several chapters in this book use Ruby or JavaScript, so putting in some time to learn at least a little bit will make the journey through this book richer for you.

We, the authors, wrote this book using MacBook Pros. MacBooks have a ubiquitous shell (“BASH”) that works almost identically to the one found on any Linux machine. If you use either of these two operating systems, you will be able to run the code from any chapter.

If you use a Windows machine (or an OS that does not include the BASH shell) then some of the commands and code examples may not work without installing additional software.

An easy remedy is to use VirtualBox and Vagrant. VirtualBox is a freely available virtualization system for x86 hardware. Vagrant is a tool for managing development environments: using VirtualBox and Vagrant you can quickly install a Linux virtual machine. To do this, visit the downloads page for VirtualBox and Vagrant. Once you have installed these two tools, you can then install an Ubuntu Linux virtual machine with these two commands:

$vagrant init hashicorp/precise32$vagrant up

If you are looking for a discussion of the GitHub API that focuses on a single language, you should know that we look at the API through many different languages. We do this to describe the API from not only the way the GitHub team designed it to work, but the aspirational way that client library authors made it work within diverse programming languages and communities. We think there is a lot to learn from this approach, but if you are interested in only a specific language and how it works with the GitHub API, this is not the book for you.

This book strives to prove that API-driven code is testable and that there is a benefit to doing so. This book does not intend to provide a manual on how to write perfectly tested code. We cover too many languages to end the healthy debates happening within each community about the right test frameworks. Instead, given our contention that most software projects have zero test coverage, this book tries to help you get past this significant roadblock. There is something transformational about writing tests if you have never done so before. Having these examples in hand, we hope, will allow you to transition to writing testable code for APIs, especially if you have not done so before. Some of the associated repositories have much greater test suites than are documented in this book, but we don’t cover all the entire set of edge cases in every situation.

The following typographical conventions are used in this book:

Indicates new terms, URLs, email addresses, filenames, and file extensions.

Constant widthUsed for program listings, as well as within paragraphs to refer to program elements such as variable or function names, databases, data types, environment variables, statements, and keywords.

Constant width italicShows text that should be replaced with user-supplied values or by values determined by context.

This icon signifies a general note.

This icon indicates a warning or caution.

Supplemental material (code examples, exercises, etc.) is available for download at https://github.com/xrd/building-tools-with-github.

This book is here to help you get your job done. In general, if example code is offered with this book, you may use it in your programs and documentation. You do not need to contact us for permission unless you’re reproducing a significant portion of the code. For example, writing a program that uses several chunks of code from this book does not require permission. Selling or distributing a CD-ROM of examples from O’Reilly books does require permission. Answering a question by citing this book and quoting example code does not require permission. Incorporating a significant amount of example code from this book into your product’s documentation does require permission.

We appreciate, but do not require, attribution. An attribution usually includes the title, author, publisher, and ISBN. For example: “Building Tools with GitHub by Chris Dawson and Ben Straub (O’Reilly). Copyright 2016 Chris Dawson and Ben Straub, 978-1-491-93350-3.”

If you feel your use of code examples falls outside fair use or the permission given above, feel free to contact us at permissions@oreilly.com.

Safari (formerly Safari Books Online) is a membership-based training and reference platform for enterprise, government, educators, and individuals.

Members have access to thousands of books, training videos, Learning Paths, interactive tutorials, and curated playlists from over 250 publishers, including O’Reilly Media, Harvard Business Review, Prentice Hall Professional, Addison-Wesley Professional, Microsoft Press, Sams, Que, Peachpit Press, Adobe, Focal Press, Cisco Press, John Wiley & Sons, Syngress, Morgan Kaufmann, IBM Redbooks, Packt, Adobe Press, FT Press, Apress, Manning, New Riders, McGraw-Hill, Jones & Bartlett, and Course Technology, among others.

For more information, please visit http://oreilly.com/safari.

Please address comments and questions concerning this book to the publisher:

We have a web page for this book, where we list errata, examples, and any additional information. You can access this page at http://bit.ly/building-tools-with-github.

To comment or ask technical questions about this book, send email to bookquestions@oreilly.com.

For more information about our books, courses, conferences, and news, see our website at http://www.oreilly.com.

Find us on Facebook: http://facebook.com/oreilly

Follow us on Twitter: http://twitter.com/oreillymedia

Watch us on YouTube: http://www.youtube.com/oreillymedia

Chris wants to thank his lovely wife, Nicole. I hope that I have added to this book even a tiny bit of the wit and wisdom you provide to me and our family every day. My son Roosevelt’s energy continues to inspire me and keep me going even when I am at my limits. To my daughter Charlotte, you are my little smiling Buddha. To my mother, who showed me how to write and, most importantly, why to write, which is something we need more of in the technology world. To Tim O’Brien who invited me into this project, thank you, and I hope we can collaborate again. To Bradley Horowitz, who demonstrates how small acts of kindness can have immeasurable impact. And, to David J. Groom, though we have never met face to face, your suggestions and excitement about the book early on came at a critical moment in the life of this book, and I thank you for channeling the excitement I hoped to cultivate with people who would one day pick up this book.

Ben would like to thank his wife, Becky, for her ongoing support and (when needed) push from behind. None of this would have happened without you.

This chapter eases us into reading and writing data from the GitHub API. Successive chapters show you how to access information from the GitHub API using a variety of client libraries. These client libraries, by design, hide the nuts and bolts of the API from you, providing streamlined and idiomatic methods to view and modify data inside a Git repository hosted on GitHub. This chapter, however, gives you a naked viewpoint of the GitHub API and documents the details of the raw HTTP requests and responses. It also discusses the different ways to access public and private data inside of GitHub and where limitations exist. And it gives you an overview of the options for accessing GitHub data when running inside a web browser context where network access is restrained.

There will be times when you want to quickly access information from the GitHub API without writing a formal program. Or, when you want to quickly get access to the raw HTTP request headers and content. Or, where you might even question the implementation of a client library and need confirmation it is doing the right thing from another vantage point. In these situations, cURL, a simple command-line HTTP tool, is the perfect fit. cURL, like the best Unix tools, is a small program with a very specific and purposefully limited set of features for accessing HTTP servers.

cURL, like the HTTP protocol it speaks intimately, is stateless: we will explore solutions to this limitation in a later chapter, but note that cURL works best with one-off requests.

cURL is usually installed on most OS X machines, and can easily be installed using Linux package managers (probably one of apt-get install curl or yum install curl). If you are using Windows or want to manually install it, go to http://curl.haxx.se/download.html.

Let’s make a request. We’ll start with the most basic GitHub API endpoint found at https://api.github.com:

$curl https://api.github.com{"current_user_url":"https://api.github.com/user","current_user_authorizations_html_url":"https://github.com/settings/connections/applications{/client_id}","authorizations_url":"https://api.github.com/authorizations","code_search_url":"https://api.github.com/search/code?q={query}{&page,per_page,sort,order}","emails_url":"https://api.github.com/user/emails","emojis_url":"https://api.github.com/emojis", ...}

We’ve abbreviated the response to make it more readable. A few salient things to notice: there are a lot of URLs pointing to secondary information, parameters are included in the URLs, and the response format is JSON.

What can we learn from this API response?

The GitHub API is a hypermedia API. Though a discussion on what constitutes hypermedia deserves an entire book of its own (check out O’Reilly’s Building Hypermedia APIs with HTML5 and Node), you can absorb much of what makes hypermedia interesting by just looking at a response. First, you can see from the API response that each response contains a map with directions for the next responses you might make. Not all clients use this information, of course, but one goal behind hypermedia APIs is that clients can dynamically adjust their endpoints without recoding the client code. If the thought of GitHub changing an API because clients should be written to handle new endpoints automatically sounds worrisome, don’t fret too much: GitHub is very dilligent about maintaining and supporting its API in a way that most companies would do well to emulate. But you should know that you can rely on having an API reference inside the API itself, rather than hosted externally in documentation, which very easily could turn out to be out of date with the API itself.

These API maps are rich with data. For example, they are not just URLs

to content, but also information about how to provide parameters to the

URLs. Looking at the previous example, the code_search_url key

references a URL that obviously allows you to search within code on

GitHub, but also tells you how to structure the parameters passed to

this URL. If you have an intelligent client who can follow this

programmatic format, you could dynamically generate the query without

involving a developer who can read API documentation. At least that is

the dream hypermedia points us to; if you are skeptical, at least know that APIs such as GitHub encode documentation into themselves, and you can bet GitHub has test coverage to prove that this documentation matches the information delivered by the API endpoints. That’s a strong guarantee that is sadly missing from many other APIs.

Now let’s briefly discuss the format of all GitHub API responses: JSON.

Every response you get back from the GitHub API will be in the JSON (JavaScript Object Notation) format. JSON is a “lightweight data interchange format” (read more on the JSON.org website). There are other competing and effective formats, such as XML (Extensible Markup Language) or YAML (YAML Ain’t Markup Language), but JSON is quickly becoming the de facto standard for web services.

A few of the reasons JSON is so popular:

JSON is readable. JSON has a nice balance of human readability when compared to serialization formats like XML.

JSON can be used within JavaScript with very little modification (and cognitive processing on the part of the programmer). A data format that works equally well on both the client and server side was bound to be victorious, as JSON has been.

You might expect that a site like GitHub, originally built on the Ruby on Rails stack (and some of that code is still live), would support specifying an alternative format like XML, but XML is no longer supported. Long live JSON.

JSON is very straightforward if you have used any other text-based interchange format. One note about JSON that is not always obvious or expected to people new to JSON is that the format only supports using double quotes, not single quotes.

We are using a command-line tool, cURL, to retrieve data from the API. It would be handy to have a simple command-line tool that also processes that JSON. Let’s talk about one such tool next.

JSON is a text format, so you could use any command-line text processing tool, such

as the venerable AWK, to process JSON responses. There is one fantastic JSON-specific parsing tool that complements cURL that is worth knowing:

jq. If you pipe JSON content (using the | character for most shells)

into jq, you can then easily extract pieces of the JSON using filters.

jq can be installed from source, using package managers like brew or

apt-get, and there are binaries on the downloads page for OS X,

Linux, Windows, and Solaris.

Going deeper into the prior example, let’s pull out something interesting from the API map that we receive when we access api.github.com:

$curl https://api.github.com|jq'.current_user_url'% Total % Received % Xferd Average Speed Time Time Time Current Dload Upload Total Spent Left Speed100200410020040044960--:--:-- --:--:-- --:--:-- 4493"https://api.github.com/user"

What just happened? The jq tool parsed the JSON, and using the

.current_user_url filter, it retrieved content from the JSON

response. If you look at the response again, you’ll notice it has

key/value pairs inside an associative array. It uses the

current_user_url as a key into that associative array and prints out the value there.

You also will notice that cURL printed out transfer time

information. cURL printed this information to standard error, which

is a shell convention used for messaging errors and an output stream

that jq will correctly ignore (in other words, the JSON format stream

will not be corrupted by error messages). If we want to

restrict that information and clean up the request we should use the

-s switch, which runs cURL in “silent” mode.

It should be easy to understand how the jq filter is applied to the response JSON. For a more complicated request (for example, we might want to obtain a list of public repositories for a user), we can see the pattern for the jq pattern parameter emerging. Let’s get a more complicated set of information, a user’s list of repositories, and see how we can extract information from the response using jq:

$curl -s https://api.github.com/users/xrd/repos[{"id": 19551182,"name":"a-gollum-test","full_name":"xrd/a-gollum-test","owner":{"login":"xrd","id": 17064,"avatar_url":"https://avatars.githubusercontent.com/u/17064?v=3", ...}]$curl -s https://api.github.com/users/xrd/repos|jq'.[0].owner.id'17064

This response is different structurally: instead of an associative array, we now have an array (multiple items). To get the first one, we specify a numeric index, and then key into the successive associative arrays inside of it to reach the desired content: the owner id.

jq is a great tool for checking the validity of JSON. As mentioned before, JSON key/values are stored only with double quotes, not single quotes. You can verify that JSON is valid and satisfies this requirement using jq:

$echo'{ "a" : "b" }'|jq'.'{"a":"b"}$echo"{ 'no' : 'bueno' }"|jq"."parse error: Invalid numeric literal at line 1, column 7

The first JSON we pass into jq works, while the second, because it

uses invalid single-quote characters, fails with an error. jq filters

are strings passed as arguments, and the shell that provides the

string to jq does not care if you use single quotes or

double quotes, as you can see in the preceding code. The echo command, if you didn’t

already know, prints out whatever string you provide to it; when we

combine this with the pipe character we can easily provide that string

to jq through standard input.

jq is a powerful tool for quickly retrieving content from an arbitray JSON request. jq has many other powerful features, documented at https://stedolan.github.io/jq/.

We now know how to retrieve some interesting information from the GitHub API and parse out bits of information from that response, all in a single line. But there will be times when you incorrectly specify parameters to cURL or the API, and the data is not what you expect. Now we’ll learn about how to debug the cURL tool and the API service itself to provide more context when things go wrong.

As mentioned, cURL is a great tool when you are verifying that a

response is what you expect it to be. The response body is important,

but often you’ll want access to the headers as well. cURL makes

getting these easy with the -i and -v switches. The -i switch

prints out request headers, and the -v switch prints out both

request and response headers (the > character indicates request

data, and the < character indicates response data):

$curl -i https://api.github.com HTTP/1.1200OK Server: GitHub.com Date: Wed,03Jun201519:39:03 GMT Content-Type: application/json;charset=utf-8 Content-Length: 2004 Status:200OK X-RateLimit-Limit: 60 ...{"current_user_url":"https://api.github.com/user", ...}$curl -v https://api.github.com * Rebuilt URL to: https://api.github.com/ * Hostname was NOT found in DNS cache * Trying 192.30.252.137... * Connected to api.github.com(192.30.252.137)port443(#0)* successfullysetcertificate verify locations: * CAfile: none CApath: /etc/ssl/certs * SSLv3, TLS handshake, Client hello(1): * SSLv3, TLS handshake, Server hello(2): ... *CN=DigiCert SHA2 High Assurance Server CA * SSL certificate verify ok. > GET / HTTP/1.1 > User-Agent: curl/7.35.0 > Host: api.github.com > Accept: */* > < HTTP/1.1200OK * Server GitHub.com is not blacklisted ...

With the -v switch you get everything: DNS lookups, information on

the SSL chain, and the full request and response information.

Be aware that if you print out headers, a tool like jq will get confused because you are no longer providing it with pure JSON.

This section shows us that there is interesting information not only in the body (the JSON data) but also in the headers. It is important to understand what headers are here and which ones are important. The HTTP specification requires a lot of these headers, and we can often ignore those, but there are a few that are vital when you start making more than just a few isolated requests.

Three headers are present in every GitHub API response that tell you about the GitHub API rate limits. They are X-RateLimit-Limit, X-RateLimit-Remaining, and X-RateLimit-Reset. These limits are explained in detail in “GitHub API Rate Limits”.

The X-GitHub-Media-Type header contains information that will come in handy when you are starting to retrieve text or blob content from the API. When you make a request to the GitHub API you can specify the format you want to work with by sending an Accept header with your request.

Now, let’s use a response to build another response.

We’ll use the “map” of the API by hitting the base endpoint, and then use the response to manually generate another request:

$curl -i https://api.github.com/ HTTP/1.1200OK Server: GitHub.com Date: Sat,25Apr201505:36:16 GMT ...{"current_user_url":"https://api.github.com/user", ..."organization_url":"https://api.github.com/orgs/{org}", ...}

We can use the organizational URL and substitute "github" in the placeholder:

$curl https://api.github.com/orgs/github{"login":"github","id": 9919,"url":"https://api.github.com/orgs/github", ..."description":"GitHub, the company.","name":"GitHub","company": null,"blog":"https://github.com/about","location":"San Francisco, CA","email":"support@github.com", ..."created_at":"2008-05-11T04:37:31Z","updated_at":"2015-04-25T05:17:01Z","type":"Organization"}

This information allows us to do some forensics on GitHub itself. We get the company blog https://github.com/about. We see that GitHub is located in San Francisco, and we see that the creation date of the organization is May 11th, 2008. Reviewing the blog, we see a blog post from April that indicates GitHub launched as a company a month earlier. Perhaps organizations were not added to the GitHub site features until a month after the company launched?

So far all of our requests have retrieved publicly available information. But the GitHub API has a much richer set of information that is available only once we authenticate and access private information and publicly inaccessible services. For example, if you are using the API to write data into GitHub, you need to know about authentication.

There are two ways to authenticate when making a request to the GitHub API: username and passwords (HTTP Basic) and OAuth tokens.

You can access protected content inside GitHub using a username and

password combination. Username authentication works by using the HTTP

Basic authentication supported by the -u flag in cURL. HTTP Basic

Authentication is synonymous with username and password authentication:

$curl -u xrd https://api.github.com/rate_limit Enter host passwordforuser'xrd': xxxxxxxx{"rate":{"limit": 5000,"remaining": 4995,"reset": 1376251941}}

This cURL command authenticates into the GitHub API and then retrieves information about our own specific rate limits for our user account, protected information only available as a logged-in user.

Almost any client library you use will support HTTP Basic authentication. All the GitHub API clients we looked at support username and passwords. And, writing your own specific client is easy as this is a core feature of the HTTP standard, so if you use any standard HTTP library when building your own client, you will be able to access content inside the GitHub API.

There are many reasons username and password authentication is the wrong way to manage your GitHub API access:

HTTP Basic is an old protocol that never anticipated the granularity of web services. It is not possible to specify only certain features of a web service if you ask users to authenticate with username/passwords.

If you use a username and password to access GitHub API content from your cell phone, and then access API content from your laptop, you have no way to block access to one without blocking the other.

HTTP Basic authentication does not support extensions to the authentication flow. Many modern services now support two-factor authentication and there is no way to inject this into the process without changing the HTTP clients (web browsers, for example) or at least the flow they expect (making the browser repeat the request).

All of these problems are solved (or at least supported) with OAuth flows. Given all these concerns, the only time you will want to use username and password authentication is when convenience trumps all other considerations.

OAuth is an authentication mechanism where tokens are tied to functionality or clients. In other words, you can specify what features of a service you want to permit an OAuth token to carry with it, and you can issue multiple tokens and tie those to specific clients: a cell phone app, a laptop, a smart watch, or even an Internet of Things toaster. And, importantly, you can revoke tokens without impacting other tokens.

The main downside to OAuth tokens is that they introduce a level of complexity that you may not be familiar with if you have only used HTTP Basic. HTTP Basic requests generally only require adding an extra header to the HTTP request, or an extra flag to a client tool like cURL.

OAuth solves the problems just described by linking tokens to scopes (specified subsets of functionality inside a web service) and issuing as many tokens as you need to multiple clients.

When you generate an OAuth token, you specify the access rights you require. Though our examples create the token using HTTP Basic, once you have the token, you no longer need to use HTTP Basic in successive requests. If this token is properly issued, the OAuth token will have permissions to read and write to public repositories owned by that user.

The following cURL command uses HTTP Basic to initiate the token request process:

$curl -u username -d'{"scopes":["public_repo"], "note": "A new authorization"}'\https://api.github.com/authorizations{"id": 1234567,"url":"https://api.github.com/authorizations/1234567","app":{"name":"My app","url":"https://developer.github.com/v3/oauth_authorizations/","client_id":"00000000000000000000"},"token":"abcdef87654321...}

The JSON response, upon success, has a token you can extract and use for applications that need access to the GitHub API.

If you are using two-factor authentication, this flow requires additional steps, all of which are documented within Chapter 8.

To use this token, you specify the token inside an authorization header:

$curl -H"Authorization: token abcdef87654321"...

Scopes clarify how a service or application will use data inside the GitHub API. This makes it easy to audit how you are using the information if this was a token issued for your own personal use. But, most importantly, this provides valuable clarity and protection for those times when a third-party application wants to access your information: you can be assured the application is limited in what data it can access, and you can revoke access easily.

There is one major limitation of scopes to be aware of: you cannot do fine-grained access to certain repositories only. If you provide access to any of your private repositories, you are providing access to all repositories.

It is likely that GitHub will change the way scopes work and address some of these issues. The great thing about the way OAuth works is that to support these changes you will simply need to request a new token with the scope modified, but otherwise, the application authentication flow will be unchaged.

Be very careful about the scopes you request when building a service or application. Users are (rightly) paranoid about the data they are handing over to you, and will evaluate your application based on the scopes requested. If they don’t think you need that scope, be sure to remove it from the list you provide to GitHub when authorizing and consider escalation to a higher scope after you have developed some trust with your users.

You can ask for scope at one point that is very limited, and then later ask for a greater scope. For example, when a user first accesses your application, you could only get the user scope to create a user object inside your service, and only when your application needs repository information for a user, then request to escalate privileges. At this point the user will need to approve or disapprove your request, but asking for everything upfront (before you have a relationship with the user) often results in a user abandoning the login.

Now let’s get into the specifics of authentication using OAuth.

OAuth has many variants, but GitHub uses OAuth2. OAuth2 specifies a flow where:

The application requests access

The service provider (GitHub) requests authentication: username and password usually

If two-factor authentication is enabled, ask for the OTP (one-time password) code

GitHub responds with a token inside a JSON payload

The application uses the OAuth token to make requests of the API

A real-world flow is described in full in Chapter 8.

Now let’s look at the variety of HTTP status codes GitHub uses to communicate feedback when using the API.

The GitHub API uses HTTP status codes to tell you definitive information about how your request was processed. If you are using a basic client like cURL, it will be important to validate the status code before you look at any of the data retrieved. If you are writing your own API client, pay close attention to the status code before anything else. If you are new to the GitHub API, it is worth reviewing the response codes thoroughly until you are familiar with the various conditions that can cause errors when making a request.

If you have worked with any HTTP clients whatsoever, you know that the HTTP status code “200” means success. GitHub will respond with a 200 status code when your request destination URL and associated parameters are correct. If your request creates content on the server, then you will get a 201 status code, indicating successful creation on the server.

$curl -s -i https://api.github.com|grep Status Status:200OK

If your payload (the JSON you send to a request) is invalid, the GitHub API will respond with a 400 error, as shown here:

$curl -i -u xrd -d'yaml: true'-X POST https://api.github.com/gists Enter host passwordforuser'xrd': HTTP/1.1400Bad Request Server: GitHub.com Date: Thu,04Jun201520:33:49 GMT Content-Type: application/json;charset=utf-8 Content-Length: 148 Status:400Bad Request ...{"message":"Problems parsing JSON","documentation_url":"https://developer.github.com/v3/oauth_authorizations/#create...authorization"}

Here we attempt to generate a new gist by using the endpoint described

at the Gist API documentation. We’ll discuss gists in more detail in a later chapter. This issue fails because we

are not using JSON (this looks like it could be YAML, which we will discuss

in Chapter 6). The payload is sent using the -d

switch. GitHub responds with advice on where to find the documentation

for the correct format at the documentation_url key inside the JSON

response. Notice that we use the -X POST switch and value to tell

cURL to make a POST request to GitHub.

If any of the fields in your request are invalid, GitHub will respond with a 422 error. Let’s attempt to fix the previous request. The documentation indicates the JSON payload should look like this:

{"description":"the description for this gist","public":true,"files":{"file1.txt":{"content":"String file contents"}}}

What happens if the JSON is valid, but the fields are incorrect?

$curl -i -u chris@burningon.com -d'{ "a" : "b" }'-X POST https://api.github.com/gists Enter host passwordforuser'chris@burningon.com': HTTP/1.1422Unprocessable Entity ...{"message":"Invalid request.\n\n\"files\" wasn't supplied.","documentation_url":"https://developer.github.com/v3"}

There are two important things to note: first, we get a 422 error,

which indicates the JSON was valid, but the fields were incorrect. We

also get a response that indicates why: we are missing the files

key inside the request payload.

We’ve seen what happens when the JSON is invalid, but what happens when the JSON is valid for our request?

$curl -i -u xrd\-d'{"description":"A","public":true,"files":{"a.txt":{"content":"B"}}} \https://api.github.com/gistsEnter host password for user 'xrd': HTTP/1.1201Created ...{"url":"https://api.github.com/gists/4a86ed1ca6f289d0f6a4","forks_url":"https://api.github.com/gists/4a86ed1ca6f289d0f6a4/forks","commits_url":"https://api.github.com/gists/4a86ed1ca6f289d0f6a4/commits","id":"4a86ed1ca6f289d0f6a4","git_pull_url":"https://gist.github.com/4a86ed1ca6f289d0f6a4.git", ...}

Success! We created a gist and got a 201 status code indicating things

worked properly. To make our command more readable we used the

backslash character to allow parameters to span across lines. Also,

notice the JSON does not require whitespace, which we have completely

removed from the string passed to the -d switch (in order to save

space and make this command a little bit more readable).

304s are like 200s in that they say to the client: yes, your request succeeded. They give a little bit of extra information, however, in that they tell the client that the data has not changed since the last time the same request was made. This is valuable information if you are concerned about your usage limits (and in most cases you will be). We have not yet explained how rate limits work, so let’s discuss that and then return to demonstrate triggering a 304 response code by using conditional headers.

GitHub tries to limit the rate at which users can make requests to the API. Anonymous requests (requests that haven’t authenticated with either a username/password or OAuth information) are limited to 60 requests an hour. If you are developing a system to integrate with the GitHub API on behalf of users, clearly 60 requests per hour isn’t going to be sufficient.

This rate limit is increased to 5000 requests per hour if you are making an authenticated request to the GitHub API, and while this rate is two orders of magnitude larger than the anonymous rate limit, it still presents problems if you intend to use your own GitHub credentials when making requests on behalf of many users.

For this reason, if your website or service uses the GitHub API to request information from the GitHub API, you should consider using OAuth and make requests to the GitHub API using your user’s shared authentication information. If you use a token connected to another user’s GitHub account, the rate limits count against that user, and not your user account.

There are actually two rate limits: the core rate limit and the search rate limit. The rate limits explained in the previous paragraphs were for the core rate limit. For search, requests are limited to 20 requests per minute for authenticated user requests and 5 requests per minute for anonymous requests. The assumption here is that search is a more infrastructure-intensive request to satisfy and that tighter limits are placed on its usage.

Note that GitHub tracks anonymous requests by IP address. This means that if you are behind a firewall with other users making anonymous requests, all those requests will be grouped together.

Reading your rate limit is straightforward—just make a GET request to

/rate_limit. This will return a JSON document that tells you the

limit you are subject to, the number of requests you have remaining,

and the timestamp (in seconds since 1970). Note that this timestamp

is in the Coordinated Universal Time (UTC) time zone.

The following command listing uses cURL to retrieve the rate limit for an anonymous request. This response is abbreviated to save space in this book, but you’ll notice that the quota information is supplied twice: once in the HTTP response headers and again in the JSON response. The rate limit headers are returned with every request to the GitHub API, so there is little need to make a direct call to the /rate_limit API:

$curl https://api.github.com/rate_limit{"resources":{"core":{"limit": 60,"remaining": 48,"reset": 1433398160},"search":{"limit": 10,"remaining": 10,"reset": 1433395543}},"rate":{"limit": 60,"remaining": 48,"reset": 1433398160}}

Sixty requests over the course of an hour isn’t very much, and if you plan on doing anything interesting, you will likely exceed this limit quickly. If you are hitting up against the 60 requests per minute limit, you will likely want to investigate making authenticated requests to the GitHub API. We’ll show that when we discuss authenticated requests.

Calls to the /rate_limit API are not deducted from your rate limits. And, remember, rate limits are reset after 24 hours.

If you are querying the GitHub APIs to obtain activity data for a user or a repository, there’s a good chance that many of your requests won’t return much activity. If you check for new activity once every few minutes, there will be time periods over which no activity has occurred. These constant polls still use up requests in your rate limit even though there’s no new activity to be delivered.

In these cases, you can send the conditional HTTP headers

If-Modified-Since and If-None-Match to tell GitHub to return an HTTP

304 response code telling you that nothing has been modified. When

you send a request with a conditional header and the GitHub API responds

with an HTTP 304 response code, this request is not deducted from your

rate limit.

The following command listing is an example of passing in the

If-Modified-Since HTTP header to the GitHub API. Here we’ve

specified that we’re only interested in receiving content if the

Twitter Bootstrap repositories have been altered after 7:49 PM GMT on

Sunday, August 11, 2013. The GitHub API responds with an HTTP 304

response code that also tells us that the last time this repository

changed was a minute earlier than our cutoff date:

$curl -i https://api.github.com/repos/twbs/bootstrap\-H"If-Modified-Since: Sun, 11 Aug 2013 19:48:59 GMT"HTTP/1.1304Not Modified Server: GitHub.com Date: Sun,11Aug201320:11:26 GMT Status:304Not Modified X-RateLimit-Limit: 60 X-RateLimit-Remaining: 46 X-RateLimit-Reset: 1376255215 Cache-Control: public, max-age=60, s-maxage=60 Last-Modified: Sun,11Aug201319:48:39 GMT

The GitHub API also understands HTTP caching tags. An ETag, or Entity Tag, is an HTTP header that is used to control whether or not content you have previously cached is the most recent version. Here’s how your systems would use an ETag:

Your server requests information from an HTTP server.

Server returns an ETag header for a version of a content item.

Your server includes this ETag in all subsequent requests:

If the server has a newer version it returns new content + a new ETag.

If the server doesn’t have a newer version it returns an HTTP 304.

The following command listing demonstrates two commands. The first

cURL call to the GitHub API generates an ETag value, and the second

value passes this ETag value as an If-None-Match header. You’ll note

that the second response is an HTTP 304, which tells the caller that

there is no new content available:

$curl -i https://api.github.com/repos/twbs/bootstrap HTTP/1.1200OK Cache-Control: public, max-age=60, s-maxage=60 Last-Modified: Sun,11Aug201320:25:37 GMT ETag:"462c74009317cf64560b8e395b9d0cdd"{"id": 2126244,"name":"bootstrap","full_name":"twbs/bootstrap", ....}$curl -i https://api.github.com/repos/twbs/bootstrap\-H'If-None-Match: "462c74009317cf64560b8e395b9d0cdd"'HTTP/1.1304Not Modified Status:304Not Modified Cache-Control: public, max-age=60, s-maxage=60 Last-Modified: Sun,11Aug201320:25:37 GMT ETag:"462c74009317cf64560b8e395b9d0cdd"

Use of conditional request headers is encouraged to conserve resources and make sure that the infrastructure that supports GitHub’s API isn’t asked to generate content unnecessarily.

At this point we have been accessing the GitHub API from a cURL client, and as long as our network permits it, we can do whatever we want. The GitHub API is accessible in other situations as well, like from within a browser context, and certain restrictions apply there, so let’s discuss that next.

If you are using the GitHub API from a server-side program or the command line then you are free to issue any network calls as long as your network permits it. If you are attempting to access the GitHub API from within a browser using JavaScript and the XHR (XmlHttpRequest) object, then you should be aware of limitations imposed by the browser’s same-origin policy. In a nutshell, you are not able to access domains from JavaScript using standard XHR requests outside of the domain from which you retrieved the original page. There are two options for getting around this restriction, one clever (JSON-P) and one fully supported but slightly more onerous (CORS).

JSON-P is a browser hack, more or less, that allows retrieval of

information from servers outside of the same-origin policy. JSON-P

works because <script> tags are not checked against the same-origin

policy; in other words, your page can include references to content on

servers other than the one from which the page originated. With JSON-P, you load

a JavaScript file that resolves to a specially encoded data payload

wrapped in a callback function you implement. The GitHub API supports

this syntax: you request a script with a parameter on the URL

indicating what callback you want the script to execute once loaded.

We can simulate this request in cURL:

$curl https://api.github.com/?callback=myCallback /**/myCallback({"meta":{"X-RateLimit-Limit":"60","X-RateLimit-Remaining":"52","X-RateLimit-Reset":"1433461950","Cache-Control":"public, max-age=60, s-maxage=60","Vary":"Accept","ETag":"\"a5c656a9399ccd6b44e2f9a4291c8289\"","X-GitHub-Media-Type":"github.v3","status": 200},"data":{"current_user_url":"https://api.github.com/user","current_user_authorizations_html_url":"https://github.com/settings/connections/applications{/client_id}","authorizations_url":"https://api.github.com/authorizations", ...}})

If you used the same URL we used in the preceding code inside a script tag on a

web page (<script src="https://api.github.com/?callback=myCallback" type= "text/javascript"></script>), your browser would load the

content displayed in the preceding code, and then a JavaScript function you defined

called myCallback would be executed with the data shown. This

function could be implemented like this inside your web page:

<script>functionmyCallback(payload){if(200==payload.status){document.getElementById("success").innerHTML=payload.data.current_user_url;}else{document.getElementById("error").innerHTML="An error occurred";}}</script>

This example demonstrates taking the current_user_url from the data inside

the payload and putting it into a DIV, one that might look like <div id="success"> </div>.

Because JSON-P works via <script> tags, only GET requests to the API

are supported. If you only need read-only access to the API, JSON-P

can fulfill that need in many cases, and it is easy to configure.

If JSON-P seems too limiting or hackish, CORS is a more complicated but official way to access external services from within a web page.

CORS is the W3C (a web standards body) approved way to access content from a different domain than the original host. CORS requires that the server be properly configured in advance; the server must indicate when queried that it allows cross-domain requests. If the server effectively says “yes, you can access my content from a different domain,” then CORS requests are permitted. The HTML5Rocks website has a great tutorial explaining many details of CORS.

Because XHR using CORS allows the same type of XHR requests you get from the same domain origin, you can make requests beyond GET to the GitHub API: POST, DELETE, and UPDATE. Between JSON-P and CORS you have two options for accessing content from the GitHub API inside of web browsers. The choice is between the simplicity of JSON-P and the power and extra configuration of CORS.

We can prove using cURL that the GitHub API server is responding correctly for

CORS requests. In this case we only care about the headers, so we use

the -I switch, which tells cURL to make a HEAD request, telling the

server not to respond with body content:

curl -I https://api.github.com HTTP/1.1200OK Server: GitHub.com ... X-Frame-Options: deny Content-Security-Policy: default-src'none'Access-Control-Allow-Credentials:trueAccess-Control-Expose-Headers: ETag, Link, X-GitHub-OTP, X-RateLimit-Limit, X-RateLimit-Remaining, X-RateLimit-Reset, X-OAuth-Scopes, X-Accepted-OAuth-Scopes, X-Poll-Interval Access-Control-Allow-Origin: * X-GitHub-Request-Id: C0F1CF9E:07AD:3C493B:557107C7 Strict-Transport-Security: max-age=31536000;includeSubdomains;preload

We can see the Access-Control-Allow-Credentials header is set to

true. It depends on the browser implementation, but some JavaScript

host browsers will automatically make a preflight request to verify

this header is set to true (and that other headers, like the

Access-Control-Allow-Origin, are set correctly and permit requests

from that origin to proceed). Other JavaScript host browsers will need

you to make that request. Once the browser has used the headers to

confirm that CORS is permitted, you can make XHR requests to the

GitHub API domain as you would any other XHR request going into the

same domain.

We’ve covered much of the details of connecting and dissecting the GitHub API, but there are a few other options to know about when using it. One of them is that you can use the GitHub API service to provide rendered content when you need it.

When you send a request to the GitHub API, you have some ability to

specify the format of the response you expect. For example, if you

are requesting content that contains text from a commit’s comment

thread, you can use the Accept header to ask for the raw Markdown or

for the HTML this Markdown generates. You also have the ability to

specify this version of the GitHub API you are using. At this point,

you can specify either version 3 or beta of the API.

The Accept header you send with a request can affect the format of

text returned by the GitHub API. As an example, let’s assume you

wanted to read the body of a GitHub Issue. An issue’s body is stored

in Markdown and will be sent back in the request by default. If we

wanted to render the response as HTML instead of Markdown, we could do

this by sending a different Accept header, as the following cURL

commands demonstrate:

$URL='https://api.github.com/repos/rails/rails/issues/11819'$curl-s$URL|jq'.body'"Hi, \r\n\r\nI have a problem with strong...."$curl-s$URL|jq'.body_html'null$curl-s$URL\-H"Accept: application/vnd.github.html+json"|jq'.body_html'"<p>Hi, </p>\n\n<p>I have a problem with..."

Without specifying an extra header, we get the internal representation of the data, sent as Markdown.

Note that if we don’t request the HTML representation, we don’t see it in the JSON by default.

If we use a customized Accept header like in

the third instance, then our JSON is populated with a rendered version

of the body in HTML.

Besides “raw” and “html” there are two other format options that influence how Markdown content is delivered via the GitHub API. If you specify “text” as a format, the issue body would have been returned as plaintext. If you specify “full” then the content will be rendered multiple times including the raw Markdown, rendered HTML, and rendered plaintext.

In addition to controlling the format of text content, you can also retrieve GitHub blobs either as raw binary or as a BASE64-encoded text. When retrieving commits, you can also specify that the content be returned either as a diff or as a patch. For more information about these fine-grained controls for formatting, see the GitHub API documentation.

The GitHub team has already provided very thorough documentation on their API with examples using cURL. Bookmark this URL: https://developer.github.com/v3/. You’ll use it often. Do note that this URL is tied, obviously, to the current API “version 3,” so this URL will change when a new version is released.

In this chapter we learned how to access the GitHub API from the simplest client available: the command-line cURL HTTP tool. We also explored the API by looking at the JSON and played with a command-line tool (jq) that when paired with cURL gives us the ability to quickly find information in the often large body of data the GitHub API provides. We learned about the different authentication schemes supported by GitHub, and also learned about the possibilities and trade-offs when accessing the GitHub API from within a browser context.

In the next chapter we will look at gists and the Gist API. We’ll use Ruby to build a gist display program, and host all source files for the application as a gist itself.

GitHub revolutionized software development by responding to a deep desire to share information. But calling it just “sharing” does a disservice to the tools GitHub provides: these tools remove barriers to communication and streamline workflows. These tools also arose at exactly the moment when the information technology revolution forced companies to adopt more open technologies that assisted an emerging remote workforce.

Gists service part of this need: they permit intimate code sharing and reuse, refactoring, and experimentation in a way not served by the heavyweight tools predating it. In this chapter we will explore using gists to share code, and then build an application hosted as a gist that uses the Gist API.

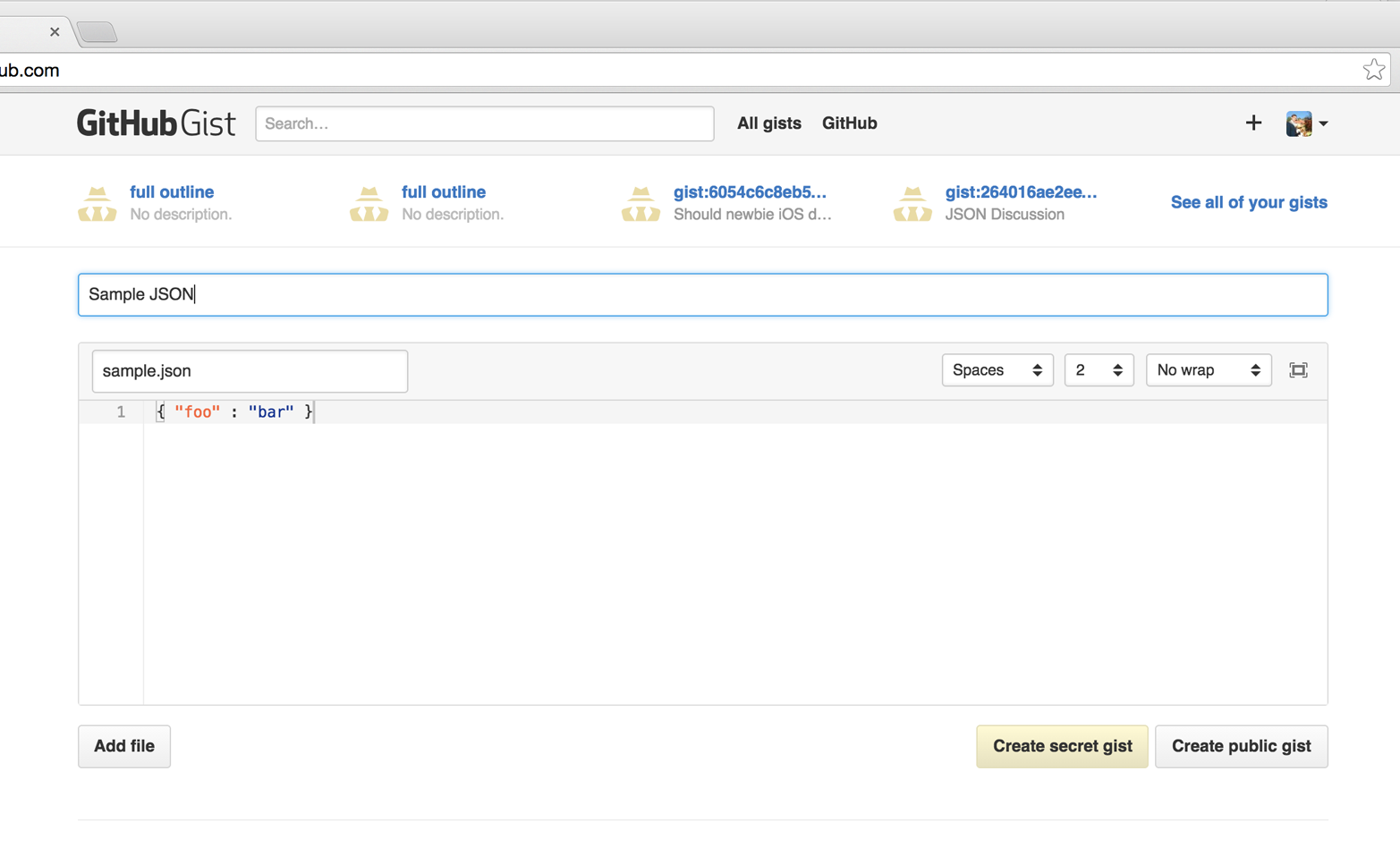

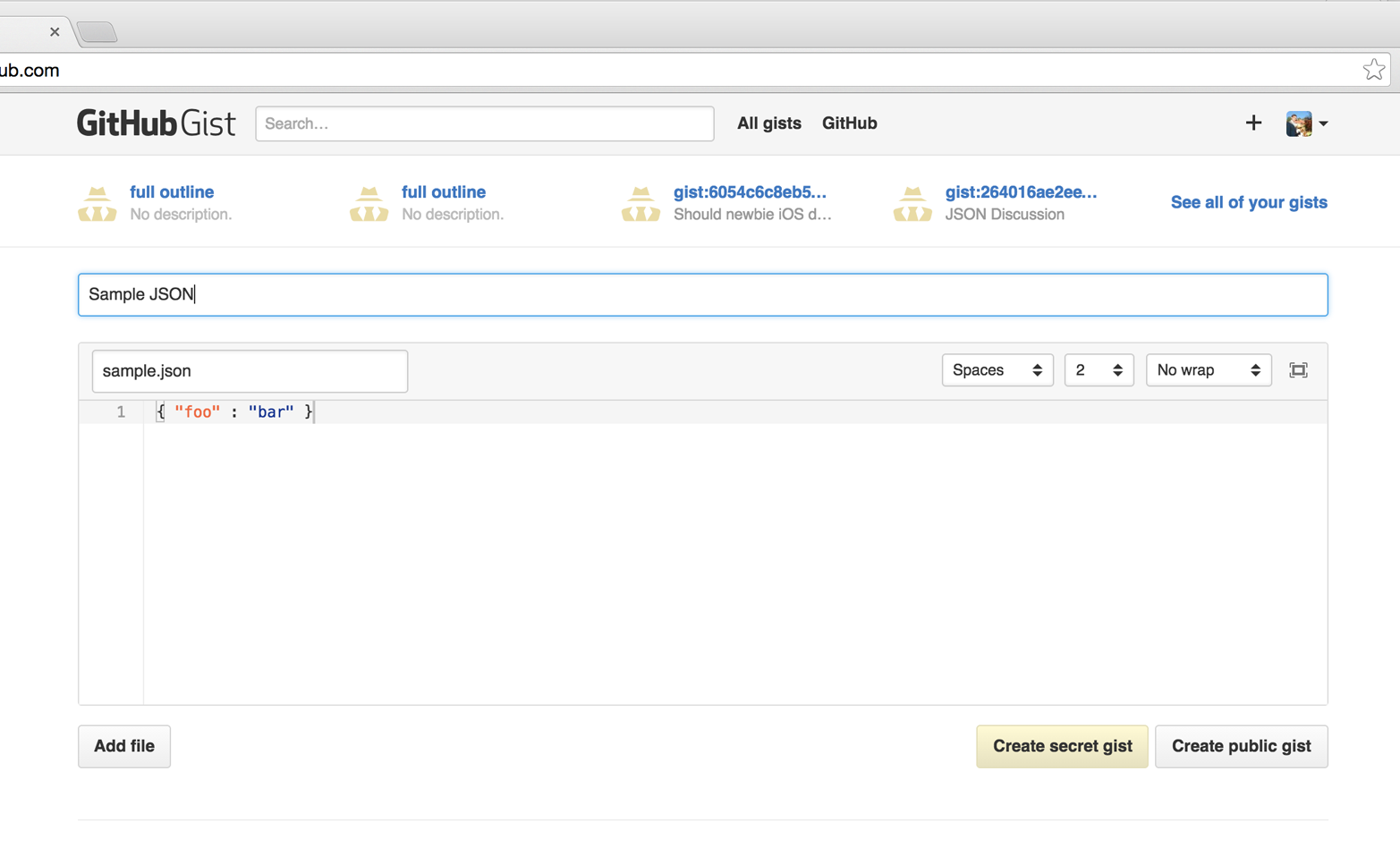

Gists are straightforward to create. You copy a snippet of code into the large text box in the center, optionally enter in a description or filename, and then choose between a public or private gist. Once your gist has been created you are presented with a URL to share. Gists autodetect the language in most cases and syntax highlight according to the language when displayed as in Figure 2-1.

There are other services that do this: pastebin was the first, and there are many others that offer variances on code sharing. But gists by GitHub are not simply a pasting service. Gists are first-class repositories, forkable, editable, and expansive. We’ll go over the basics of what gists are, and how to create them, and then show how they allow you to share code that is also a live application.

Every gist

created is a tiny repository. You can update gists and see

the history using git log. You can download gists, hack on the

repository, and git push them back into the repository on

gist.github.com (which will republish them onto the publicly

facing web page). And, you can “fork” gists, just like

any other repository.

You are allowed to branch within gist repositories; however, branches are not displayed inside of gist.github.com/. But if you need the benefits of branching when using gists you can branch normally inside a repository and the branch information is retained on the upstream repository after you push it up.

You can have an unlimited number of public and secret gists. Secret gists can, in many cases, replace private repositories, and these secret gists don’t count against the limited amount of private repositories you have with paid GitHub accounts. Or, you can make a gist public, and share that URL to mailing lists or anywhere you need public feedback.

As there are two types of gists (public and secret), it is important to understand the differences between them. Public gists are searchable. Secret gists are not searchable, but they are accessible to anyone who knows the URL. Don’t post any code to a gist you need to keep secret as once you put it there, it is only as safe as the URL is secret.

Most people share gists through the URL, but you can embed gists inside of other contexts (like blogs) and get a simple and pretty snippet of code.

To embed inside of an HTML page look for the “Embed this gist” box to

the left of a gist. Copy the code listed there (which will look

something like <script src="https://gist.github.com/xrd/8923697.js"></script>) and paste it

into your HTML.

If you wish to include only a particular file from the gist (if it

contains multiple files), then add ?file=hi.rb to the end of the

URL specified in the src attribute.

Jekyll blogs (explained in Chapter 6) can easily host

gists using a special syntax. The shortcut {% gist 8138797 %} will

embed a private gist, which would be found at

http://gist.github.com/8138797. If you want to use a

specific file within the gist, add a filename to the gist code like

{% gist 8138797 hi.rb %}. Secret gists can also be embedded. If you

use a secret gist, prefix the username of the account holder in the

gist like so: {% gist xrd/8138797 hi.rb %}.

Now let’s look at creating gists from outside the GitHub.com site, using the command-line.

gem install gist will install a command line tool that helps create gists. You can use it simply by typing the command, and then

entering the data you want to post as a gist:

$gist(typea gist. <ctrl-c> to cancel, <ctrl-d> whendone){"foo":"bar"}https://gist.github.com/9106765

The gist command will return the link to the gist just created. Gists

are created anonymously by default. You can log in using the --login

switch. Once you do this, your gists will be linked to

your account:

$ gist --login

Obtaining OAuth2 access_token from github.

GitHub username: xrd

GitHub password:

2-factor auth code: 787878

Success! https://github.com/settings/applicationsYou can pipe text to the gist command to use the contents of that

file:

$echo'{ "foo" : "bar" }'|gist https://gist.github.com/9106799

You can also cat a file to gist:

$cat MyJavaFile.java|gist https://gist.github.com/9345609

Gists are often used to show interesting or troublesome code, and there

are times when you don’t want to display the entirety of a file. In

this case the command-line grep tool can be useful; grep searches

for a specific piece of code and with the right switches can include

several lines of context around that code inside a gist. This command

looks for the function myFunction inside the MyJavaFile.java file

and then prints the next 20 lines of context and stores it as a gist:

$grep -A20myFunction MyJavaFile.java|gist https://gist.github.com/9453069

Adding the -o switch automatically opens the gist inside your

default web browser. You can also copy the gist URL to the clipboard

using the -c switch. Or, you can copy the contents of your clipboard

into a gist using the -P switch.

There are many other fun features of the gist command. To learn more

run the gist command with the --help switch.

As gists are themselves repositories, you can use them for dual purposes: for hosting code samples, and for code samples that are themselves fully working and packaged applications inside a gist repository.

Let’s build a simple Sinatra application to showcase how code hosted as a gist can also be a living application. Sinatra is a Ruby library for creating dead-simple web servers. A Sinatra program can be as simple as this:

require'sinatra'get'/hi'do"Hello World!"end

Create a gist for this by visiting gist.github.com. Enter in the text exactly as shown and then choose public gist.

You now have a share-friendly gist of code anyone can use to review. More importantly, this is a repository with executable code. To clone it, look for the Clone URL to the right of the gist itself. You will likely see a Git protocol URL and an HTTPS URL. If you are cloning the URL and intend only to read the gist, you can use the HTTPS URL. You technically can push changes once you have cloned a repository using the HTTPS URL but not if you have two-factor authentication enabled. In most cases it is easier and more flexible to use the Git protocol URL.

Let’s clone it now:

$ git clone git@gist.github.com:8138797.gitOnce you have cloned the repository, go inside it. You’ll see a list of files, a list that right now includes only one file:

$cd8138797$ls hi.rb

This code is exectuable: to run it enter ruby hi.rb.

If you have not used Sinatra with Ruby before, this will cause an error. This program requires a library called “sinatra” and you have not yet installed it. We could write a read me file, or add documentation into this file itself. Another way to guarantee the user has the proper files installed is to use a Gemfile, which is a file that tells which libraries are installed and from where. That sounds like the best way:

$printf"source 'https://rubygems.org'\ngem 'sinatra'"> Gemfile

The bundle command (from the bundler gem) will install Sinatra and

the associated dependencies:

$bundle Using rack(1.5.2)Using rack-protection(1.5.1)Using tilt(1.4.1)Using sinatra(1.4.4)Using bundler(1.3.5)Your bundle iscomplete! Use`bundle show[gemname]`to see where a bundled gem is installed.

Why did we do things this way? Because now we can add the Gemfile to our repository locally, and then publish into our gist for sharing on the Web. Our repository now not only has the code, but a well-known manifest file that explains the necessary components when running the code.

Let’s add to our application and use the Octokit Ruby gem to pull all public gists for any user we specify. The Octokit library is the the official Ruby library for accessing the GitHub API. Why would we want to make a gist that displays other gists? Self-referential meta code is all the rage, the modern-day response to René Magritte’s famous work: “Ceci n’est pas une pipe.”1

Add a view index.erb at the root of our directory:

<html><body>Userhas<%=count%> public gists</body></html>

Add the Octokit gem to our Gemfile:

gem"octokit"

Run bundle to install Octokit. Then, modify our hi.rb app to look

like this:

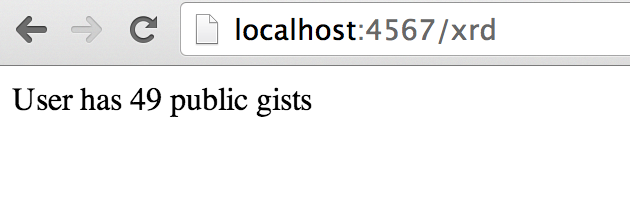

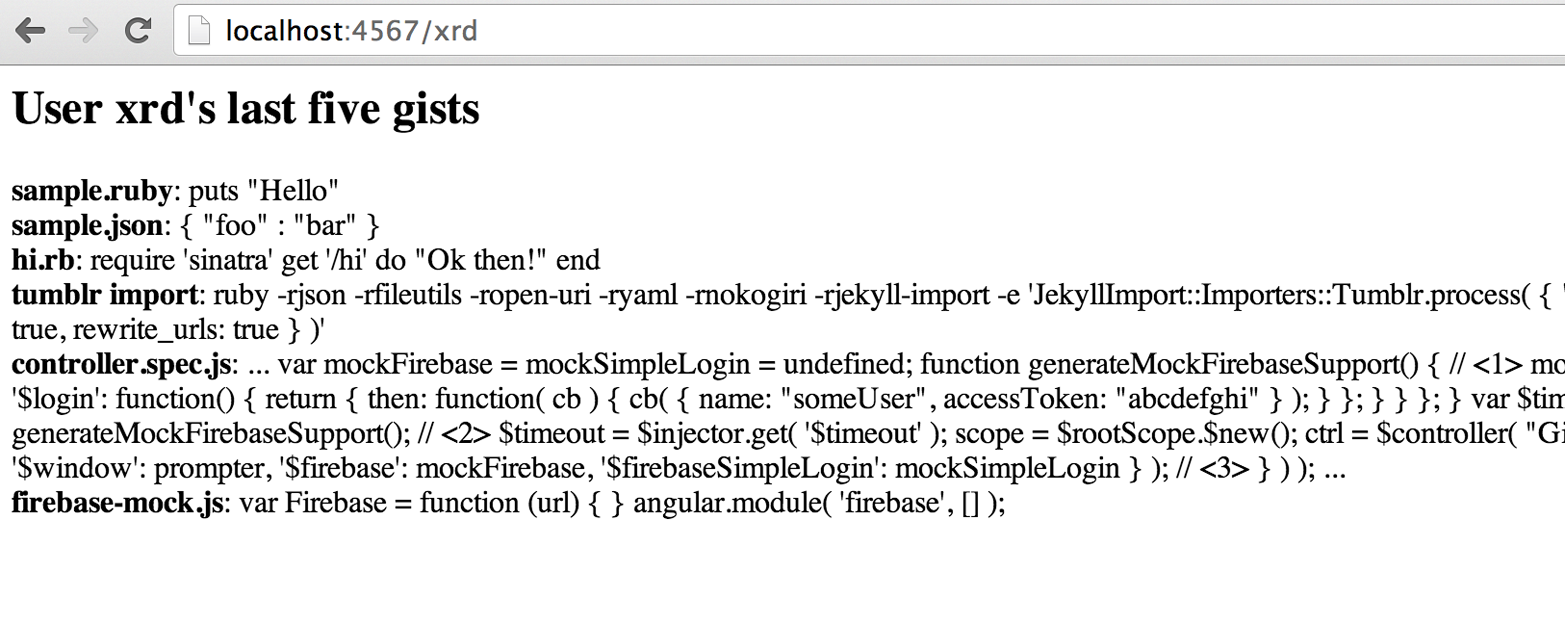

require'sinatra'require'octokit'set:views,"."get'/:username'do|username|user=Octokit.userusernamecount=user.public_gistserb:index,locals:{:count=>count}end

Our filesystem should look like this, with three files:

$ ls -1

Gemfile

hi.rb

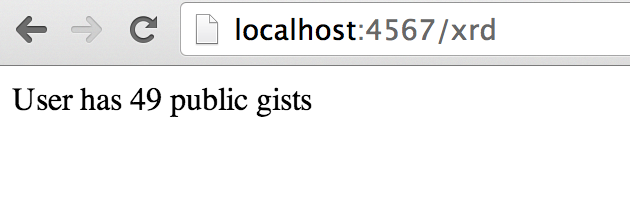

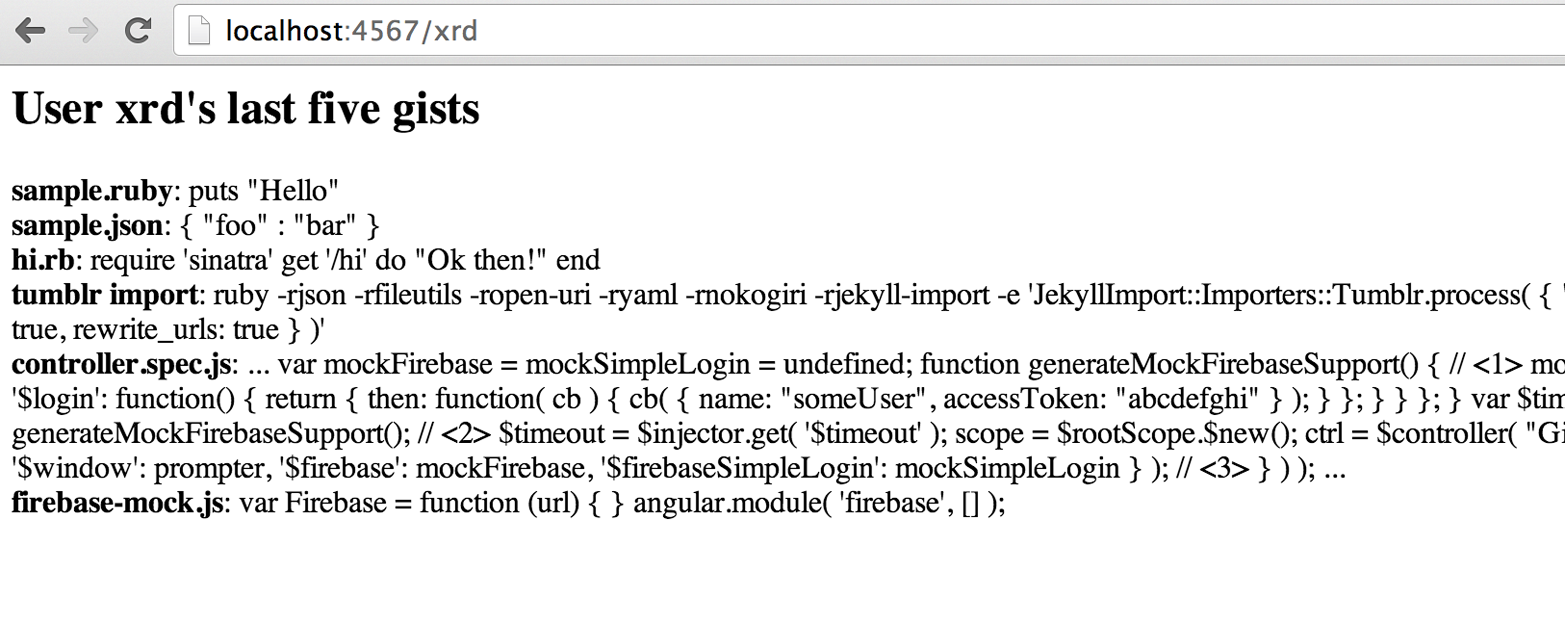

index.erbRestart Sinatra by running Ctrl-C and then ruby hi.rb. If you visit http://localhost:4567/xrd in

your browser, you will see the count of public gists for user xrd (Figure 2-2);

modify the username in the URL to specify any GitHub username and you will see

their last five gists displayed.

The GitHub API uses hypermedia instead of basic resource-driven APIs. If you use a client like Octokit, the hypermedia details are hidden behind an elegant Ruby client. But there is a benefit to understanding how hypermedia works when you need to retrieve deeper information from the GitHub API.

Most RESTful APIs come with a “sitemap,” generally an API reference document that tells a user which endpoints to use. You view the resources available from that API and then apply some HTTP verb to do something to them. Hypermedia thinks of an API differently. Hypermedia APIs describe themselves inside their responses using “affordances.” What this means is that the API might respond like this:

{"_links":{"self":{"href":"http://shop.oreilly.com/product/0636920030300.do"}}"id":"xrd","name":"Chris Dawson"}

In this payload, you can see that there is an id ("xrd") and a name

("Chris Dawson"). This particular payload was forked from the HAL explanation at the

HAL

Primer document, and you can find a more detailed explanation of these concepts

there.

The important thing to note about hypermedia APIs is that payloads contain metadata about data itself and metadata about the possible options of operating on the data. RESTful APIs typically provide a mapping outside of the payload. You have to join the API sitemap with the data in an ad hoc way when using RESTful APIs; with hypermedia APIs your client can react to the payload itself correctly and intelligently without knowing anything about a sitemap stored in human-readable documentation.