Second Edition

Copyright © 2016 Lorna Mitchell. All rights reserved.

Printed in the United States of America.

Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472.

O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (http://safaribooksonline.com). For more information, contact our corporate/institutional sales department: 800-998-9938 or corporate@oreilly.com.

See http://oreilly.com/catalog/errata.csp?isbn=9781491933091 for release details.

The O’Reilly logo is a registered trademark of O’Reilly Media, Inc. PHP Web Services, the cover image of an alpine accentor, and related trade dress are trademarks of O’Reilly Media, Inc.

While the publisher and the author have used good faith efforts to ensure that the information and instructions contained in this work are accurate, the publisher and the author disclaim all responsibility for errors or omissions, including without limitation responsibility for damages resulting from the use of or reliance on this work. Use of the information and instructions contained in this work is at your own risk. If any code samples or other technology this work contains or describes is subject to open source licenses or the intellectual property rights of others, it is your responsibility to ensure that your use thereof complies with such licenses and/or rights.

978-1-491-93309-1

[LSI]

In this age, when it can sometimes seem like every system is connected to every other system, dealing with data has become a major ingredient in building the Web. Whether you will be delivering services or consuming them, web service is a key part of all modern, public-facing applications, and this book is here to help you navigate your way along the road ahead. We will cover the different styles of service—from RPC, to SOAP, to REST—and you will see how to devise great solutions using these existing approaches, as well as examples of APIs in the wild. Whether you’re sharing data between two internal systems, using a service backend for a mobile application, or just plain building an API so that users can access their data, this book has you covered, from the technical sections on HTTP, JSON, and XML to the “big picture” areas such as creating a robust service.

Why did we pick PHP for this book? Well, PHP has always taken on the mission to “solve the web problem.” Web services are very much part of that “problem” and PHP is ideally equipped to make your life easy, both when consuming external services and when creating your own. As a language, it runs on many platforms and is the technology behind more than half of the Web, so you can be sure that it will be widely available, wherever you are.

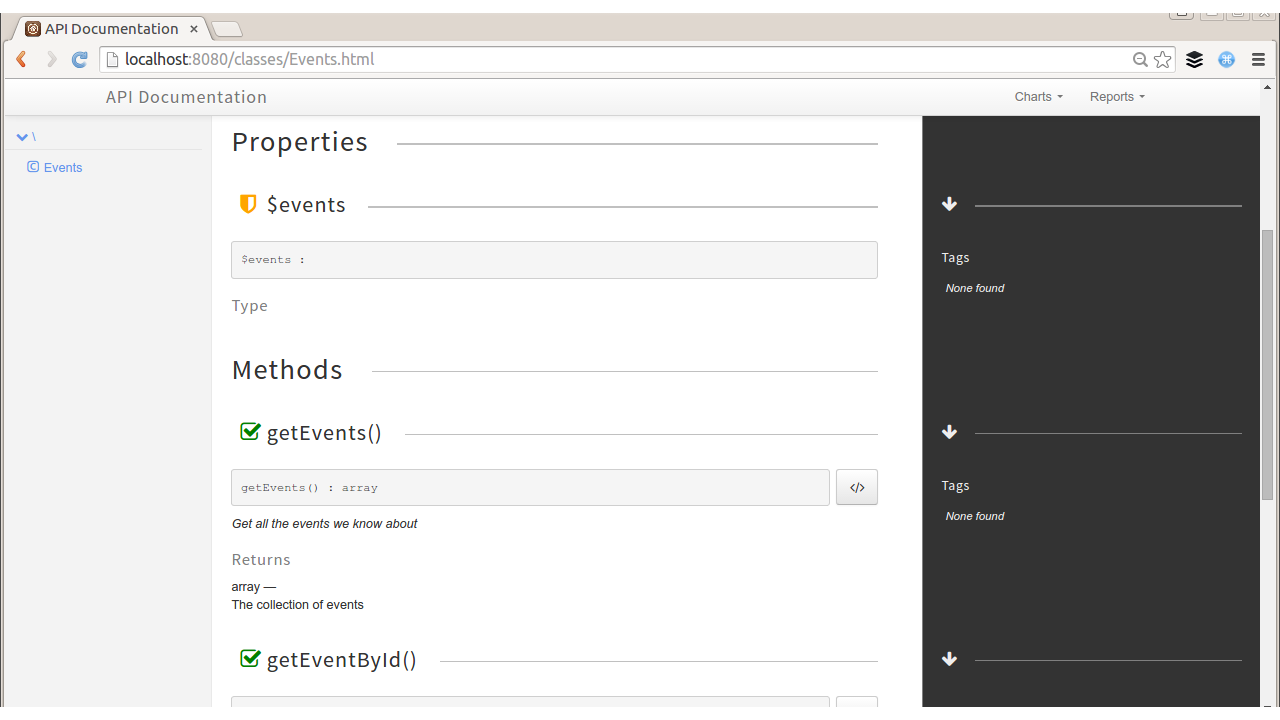

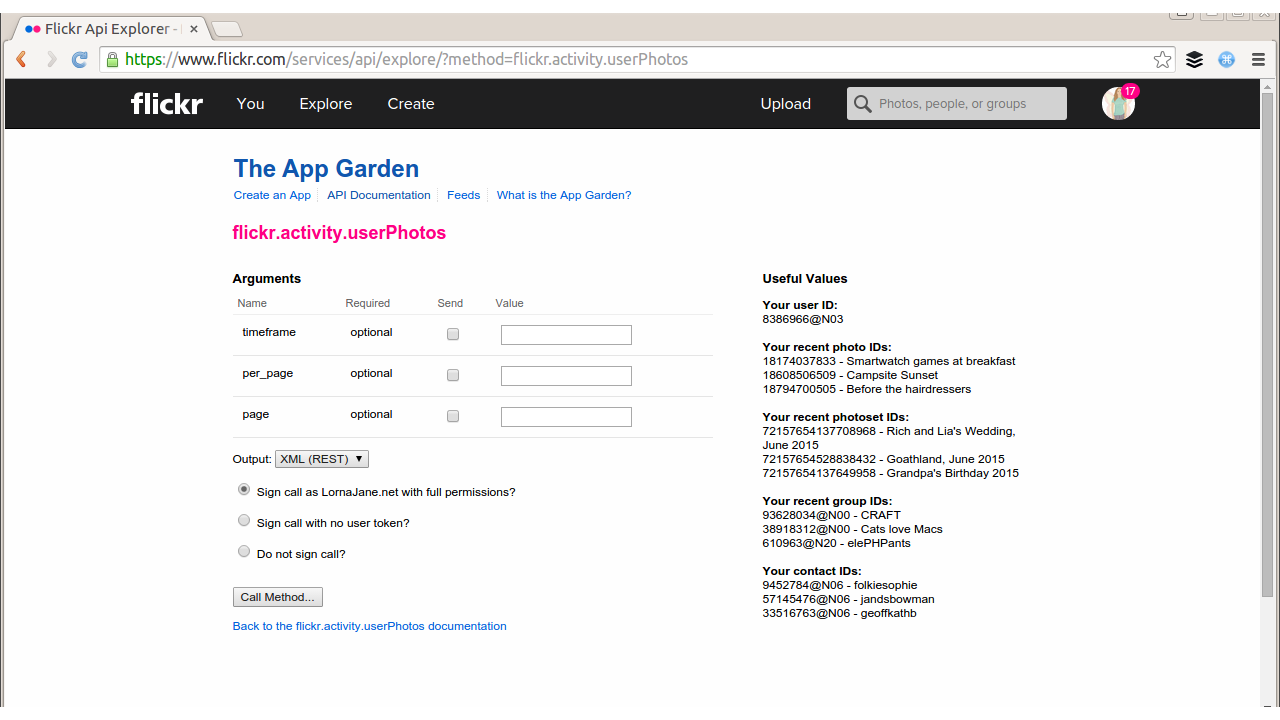

The book walks you through everything you need to know in three broad sections. We begin by covering HTTP and the theory that goes with it, including detailed chapters on the request/response cycle, HTTP verbs and headers, and cookies. There are also chapters on JSON and XML: when to choose each data format, and how to handle them from within PHP. The second section aims to give very practical advice on working with RPC and SOAP services and with RESTful services, and on how to debug almost anything that works over HTTP, using a variety of tools and techniques. In the final section, we look at some of the wider issues surrounding the design of top-quality services, choosing what kind of service will work for your application, and determining how to make it robust. Another chapter is dedicated to handling errors and giving advice on why and how to document your API. Whether you dip into the book as a reference for a specific project, or read it in order to find out more about this area of technology, there’s something here to help you and your project find success. Enjoy!

The following typographical conventions are used in this book:

Indicates new terms, URLs, email addresses, filenames, and file extensions.

Constant widthUsed for program listings, as well as within paragraphs to refer to program elements such as variable or function names, databases, data types, environment variables, statements, and keywords.

Constant width boldShows commands or other text that should be typed literally by the user.

Constant width italicShows text that should be replaced with user-supplied values or by values determined by context.

This element signifies a tip or suggestion.

This element signifies a general note.

This element indicates a warning or caution.

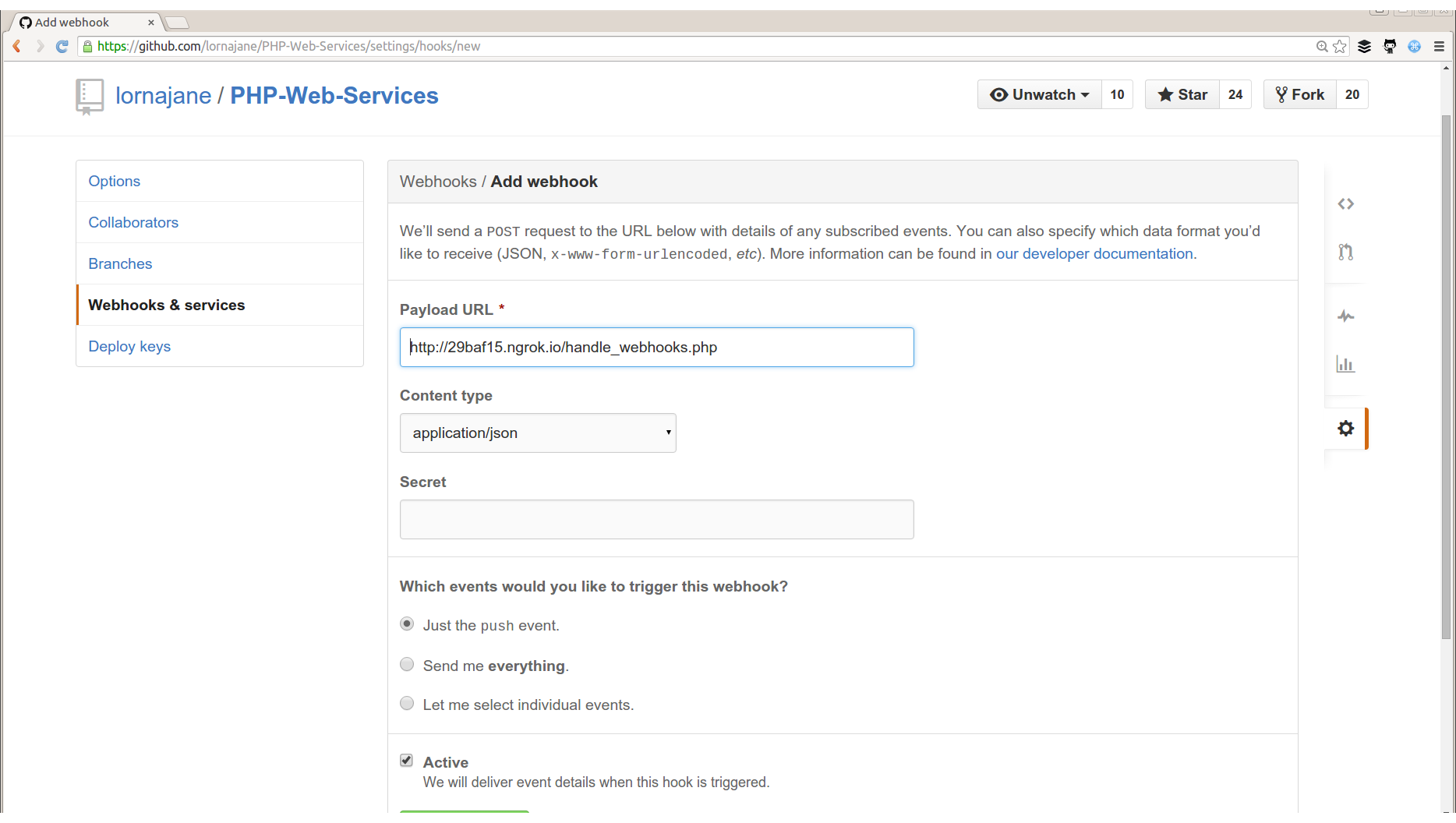

Supplemental material (code examples, exercises, etc.) is available for download at https://github.com/lornajane/PHP-Web-Services.

This book is here to help you get your job done. In general, if this book includes code examples, you may use the code in this book in your programs and documentation. You do not need to contact us for permission unless you’re reproducing a significant portion of the code. For example, writing a program that uses several chunks of code from this book does not require permission. Selling or distributing a CD-ROM of examples from O’Reilly books does require permission. Answering a question by citing this book and quoting example code does not require permission. Incorporating a significant amount of example code from this book into your product’s documentation does require permission.

We appreciate, but do not require, attribution. An attribution usually includes the title, author, publisher, and ISBN. For example: “PHP Web Services, 2nd Edition, by Lorna Jane Mitchell (O’Reilly). Copyright 2016 Lorna Mitchell, 978-1-4919-3309-1.”

If you feel your use of code examples falls outside fair use or the permission given above, feel free to contact us at permissions@oreilly.com.

Safari Books Online is an on-demand digital library that delivers expert content in both book and video form from the world’s leading authors in technology and business.

Technology professionals, software developers, web designers, and business and creative professionals use Safari Books Online as their primary resource for research, problem solving, learning, and certification training.

Safari Books Online offers a range of plans and pricing for enterprise, government, education, and individuals.

Members have access to thousands of books, training videos, and prepublication manuscripts in one fully searchable database from publishers like O’Reilly Media, Prentice Hall Professional, Addison-Wesley Professional, Microsoft Press, Sams, Que, Peachpit Press, Focal Press, Cisco Press, John Wiley & Sons, Syngress, Morgan Kaufmann, IBM Redbooks, Packt, Adobe Press, FT Press, Apress, Manning, New Riders, McGraw-Hill, Jones & Bartlett, Course Technology, and hundreds more. For more information about Safari Books Online, please visit us online.

Please address comments and questions concerning this book to the publisher:

We have a web page for this book, where we list errata, examples, and any additional information. You can access this page at http://bit.ly/php-web-services-2e.

To comment or ask technical questions about this book, send email to bookquestions@oreilly.com.

For more information about our books, courses, conferences, and news, see our website at http://www.oreilly.com.

Find us on Facebook: http://facebook.com/oreilly

Follow us on Twitter: http://twitter.com/oreillymedia

Watch us on YouTube: http://www.youtube.com/oreillymedia

I’d like to extend my thanks to everyone who made this book happen—from the team at O’Reilly that worked so hard to get the book into its final state, to the technical editors who pointed out howlers and made helpful related suggestions, to various members of the geek community who gave me words of encouragement along the way. Special thanks must go to husband and chief cheerleader Kevin, who has been my biggest supporter all the way through the process.

HTTP stands for HyperText Transfer Protocol, and is the basis upon which the Web is built. Each HTTP transaction consists of a request and a response. The HTTP protocol itself is made up of many pieces: the URL at which the request was directed, the verb that was used, other headers and status codes, and of course, the body of the responses, which is what we usually see when we browse the Web in a browser. We’ll see more detailed examples later in the book, but this idea of requests and responses consisting of headers as well as body data is a key concept.

When surfing the Web, ideally we experience a smooth journey between all the various places that we’d like to visit. However, this is in stark contrast to what is happening behind the scenes as we make that journey. As we go along, clicking on links or causing the browser to make requests for us, a series of little “steps” is taking place behind the scenes. Each step is made up of a request/response pair; the client (usually your browser, either on your laptop or your phone) makes a request to the server, and the server processes the request and sends the response back. At every step along the way, the client makes a request and the server sends the response.

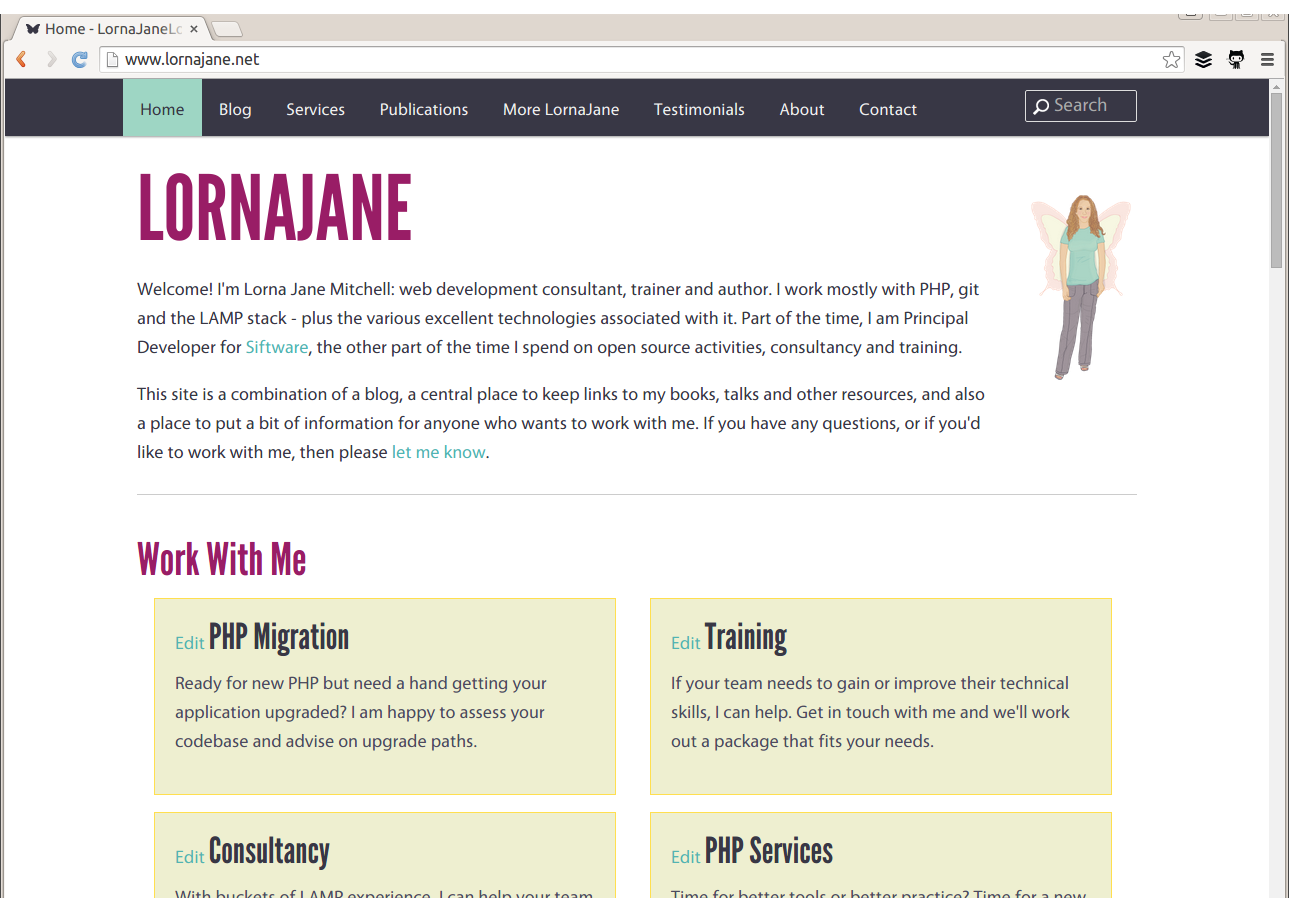

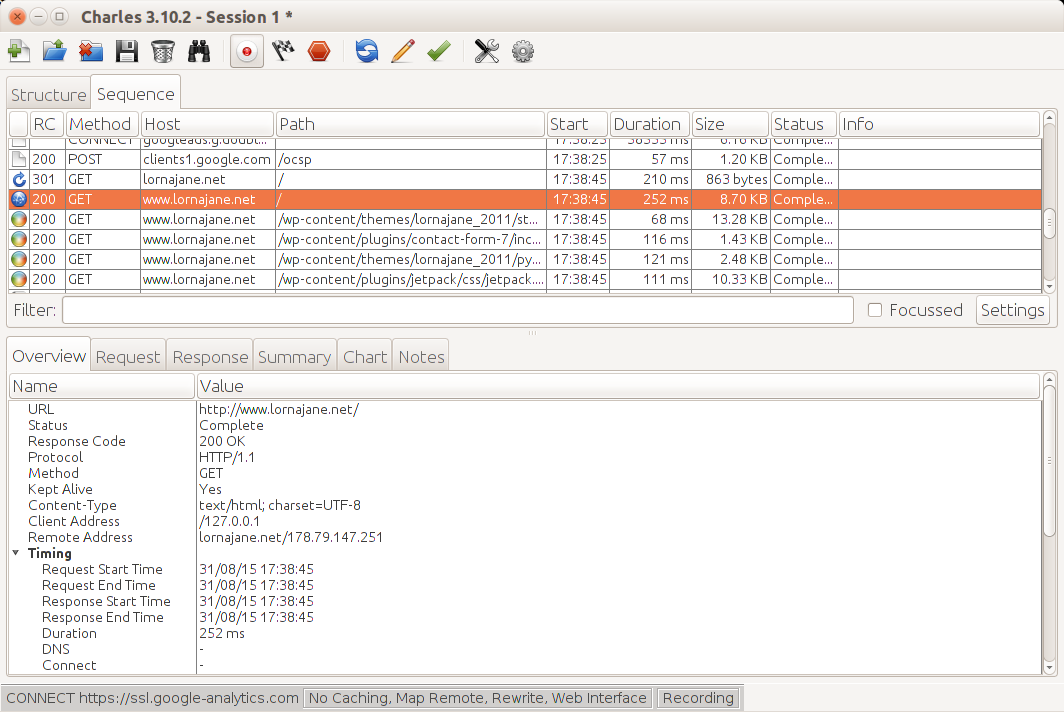

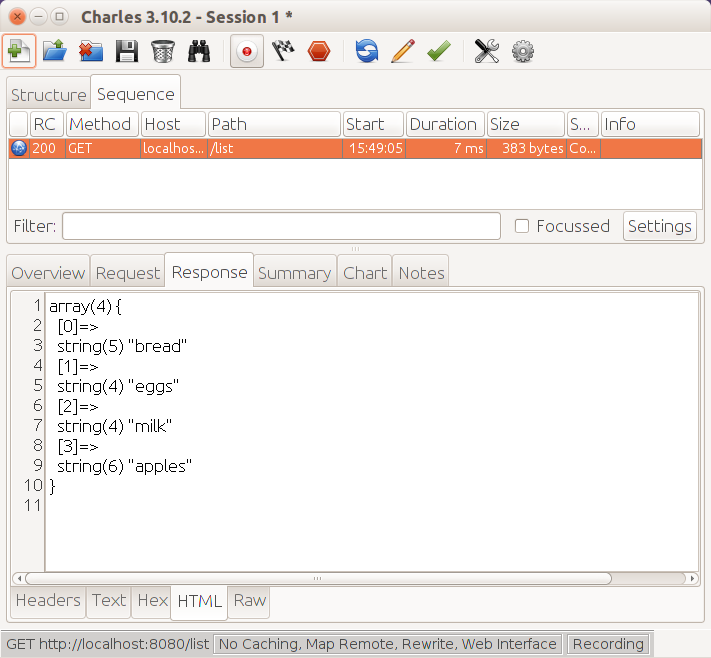

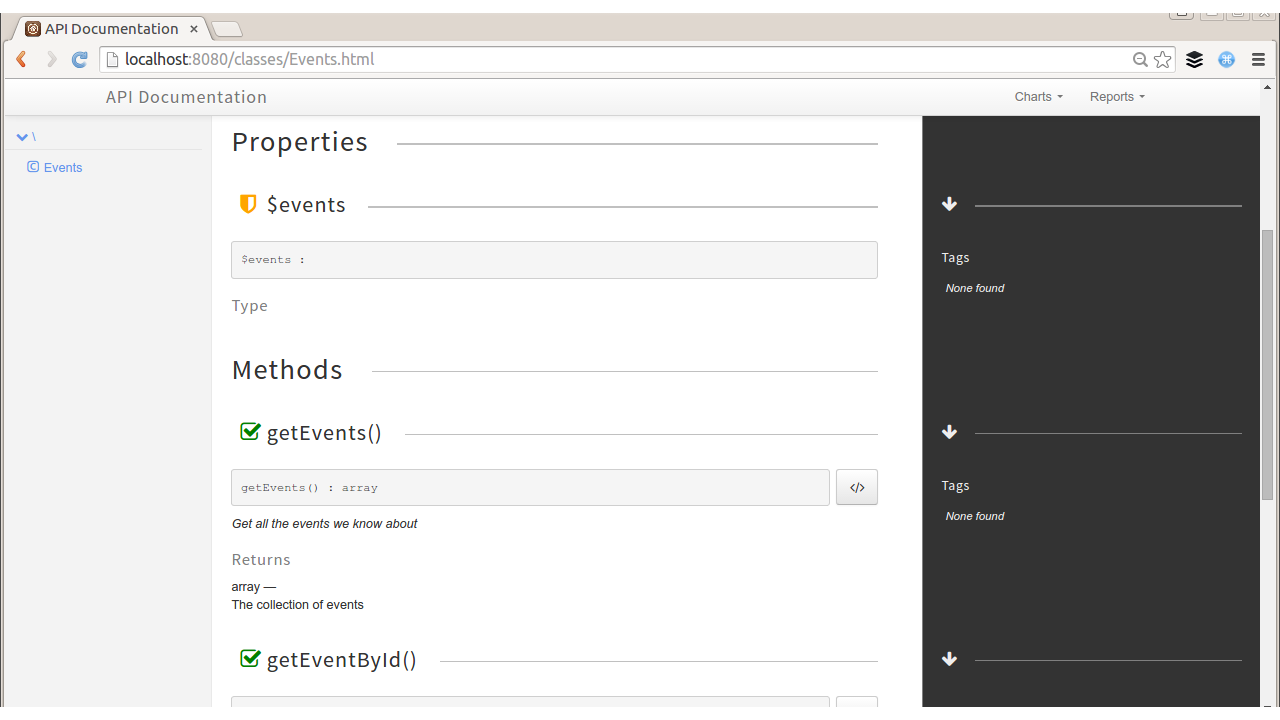

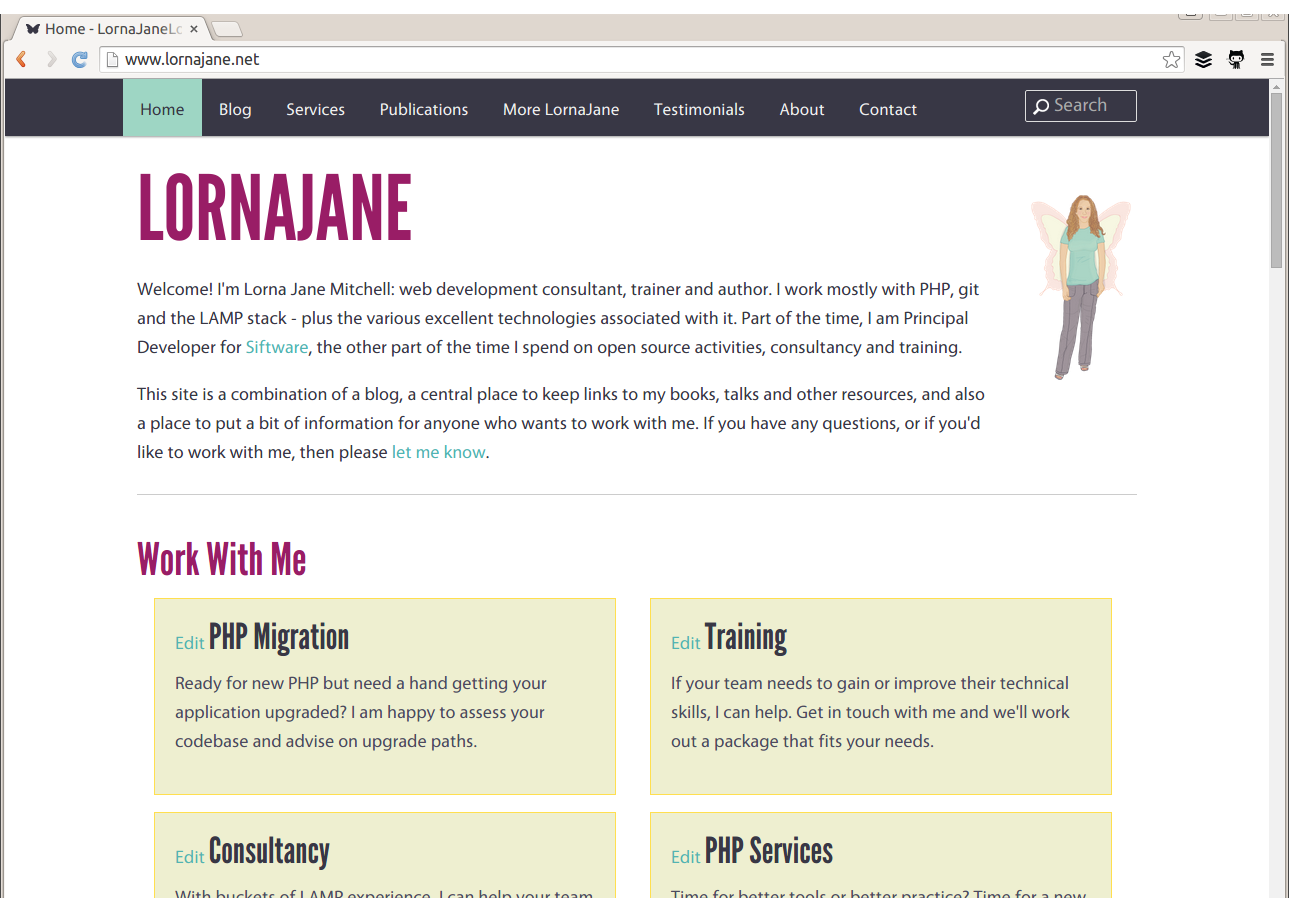

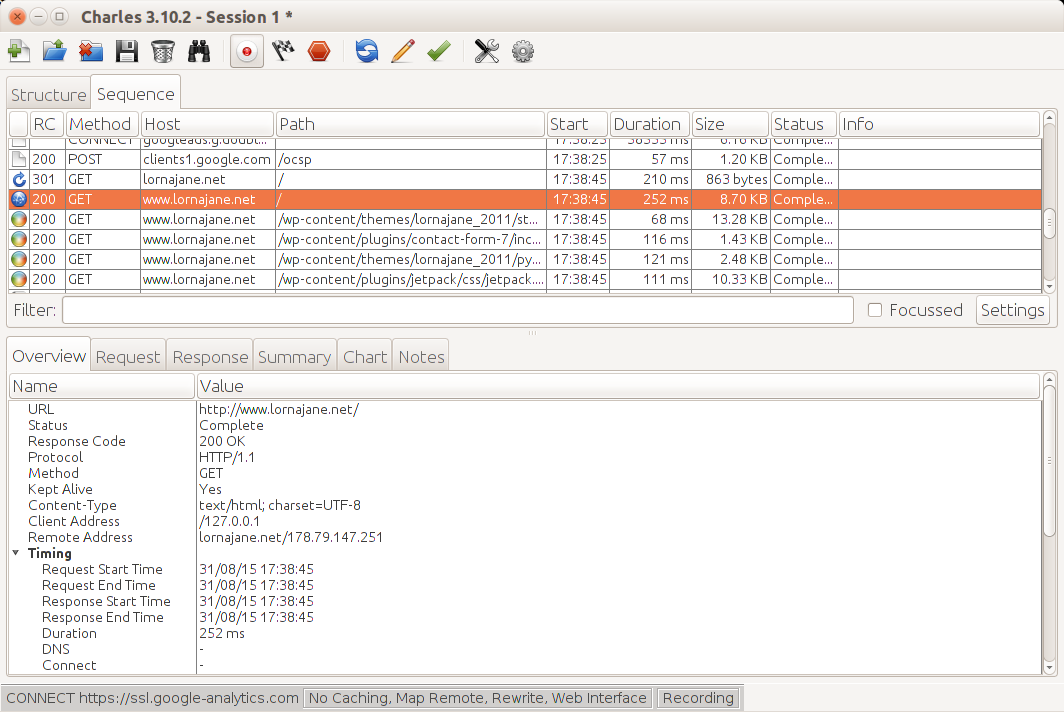

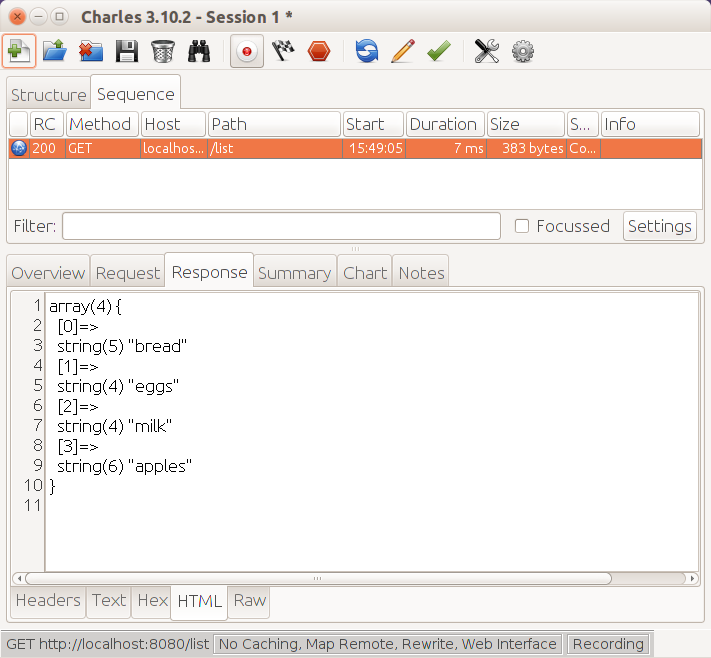

As an example, point a browser to http://lornajane.net and you’ll see a page that looks something like Figure 1-1; either the information desired can be found on the page, or the hyperlinks on that page direct us to journey onward for it.

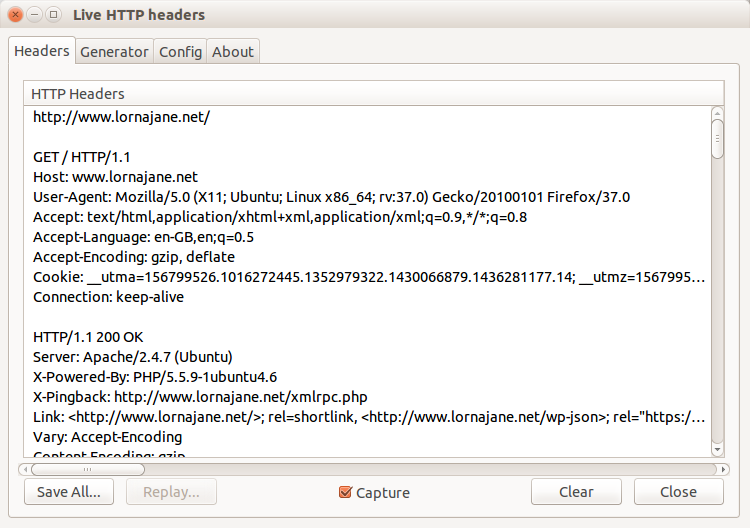

The web page arrives in the body of the HTTP response, but it tells only half of the story. There is so much more going on in the request and response as they happen; let’s inspect that request to http://lornajane.net (a pretty average WordPress blog) in more detail.

Request headers:

GET/HTTP/1.1Host:www.lornajane.netConnection:keep-aliveCache-Control:no-cacheAccept:text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8User-Agent:Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) ...Accept-Encoding:gzip, deflate, sdchAccept-Language:en-GB,en-US;q=0.8,en;q=0.6

Request body: (no body needed for a GET request)

Response headers:

HTTP/1.1200OKServer:Apache/2.4.7 (Ubuntu)X-Powered-By:PHP/5.5.9-1ubuntu4.6X-Pingback:http://www.lornajane.net/xmlrpc.phpExpires:Wed, 11 Jan 1984 05:00:00 GMTCache-Control:no-cache, must-revalidate, max-age=0Content-Encoding:gzipContent-Type:text/html; charset=UTF-8Content-Length:8806Date:Tue, 15 Sep 2015 08:43:54 GMTX-Varnish:612483212Age:0Via:1.1 varnish

Response body (truncated):

<!DOCTYPE html> <head> <meta charset="UTF-8" /> <meta name="viewport" content="width=device-width" /> <meta name="bitly-verification" content="ff69fb2e45ef"/> <title>Home - LornaJaneLornaJane | Lorna Jane Mitchell's Website</title> <link rel="shortcut icon" href="http://www.lornajane.net/wp-content/themes/lj/images/favicon.ico"> ... (truncated)

As you can see, there are plenty of other useful pieces of information being exchanged over HTTP that are not usually seen when using a browser. The browser understands how to work with request and response headers, and handles that so the user doesn’t need to.

Understanding this separation between client and server, and the steps taken by the request and response pairs, is key to understanding HTTP and working with web services. Here’s an example of what happens when we head to Google in search of kittens:

We make a request to http://www.google.com and the response contains a Location header and a 301 status code sending us to a regional search page; for me that’s http://www.google.co.uk.

The browser follows the redirect instruction (without confirmation from the user; browsers follow redirects by default), makes a request to http://www.google.co.uk, and receives the page with the search box (for fun, view the source of this page; there’s a lot going on!). We fill in the box and hit search.

We make a request to https://www.google.co.uk/search?q=kittens (plus a few other parameters) and get a response showing our search results.

The part of the URL after the ? is the “query string” and it’s one way of passing additional data to a particular URL or endpoint.

In the story shown here, all the requests were made from the browser in response to a user’s actions, although some occur behind the scenes, such as following redirects or requesting additional assets. All the assets for a page, such as images, stylesheets, and so on are fetched using separate requests that are handled by a server. Any content that is loaded asynchronously (by JavaScript, for example) also creates more requests. When we work with APIs, we get closer to the requests and make them in a more deliberate manner, but the mechanisms are the same as those we use to make very basic web pages. If you’re already making websites, then you already know all you need to make web services!

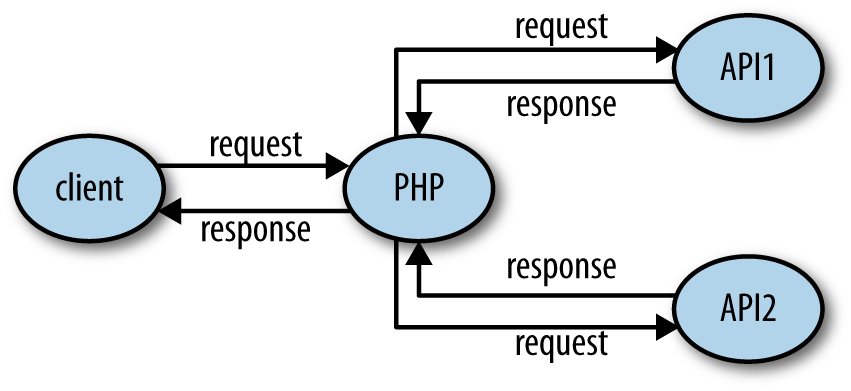

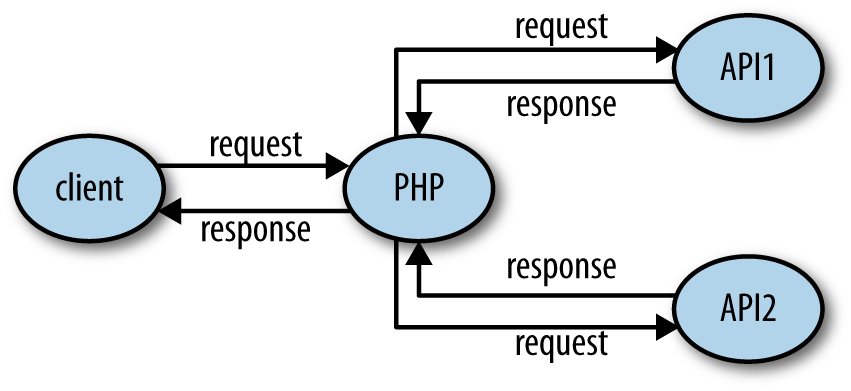

Earlier in this chapter we talked about a request and response between a client and a server. When we make websites with PHP, the PHP part is always the server. When using APIs, we build the server in PHP, but we can consume APIs from PHP as well. This is the point where things can get confusing. We can create either a client or a server in PHP, and requests and responses can be either incoming or outgoing—or both!

When we build a server, we follow patterns similar to those we use to build web pages. A request arrives, and we use PHP to figure out what was requested and craft the correct response. For example, if we built an API for customers so they could get updates on their orders programmatically, we would be building a server.

Using PHP to consume APIs means we are building a client. Our PHP application makes requests to external services over HTTP, and then uses the responses for its own purposes. An example of a client would be a script that fetches your most recent tweets and displays them.

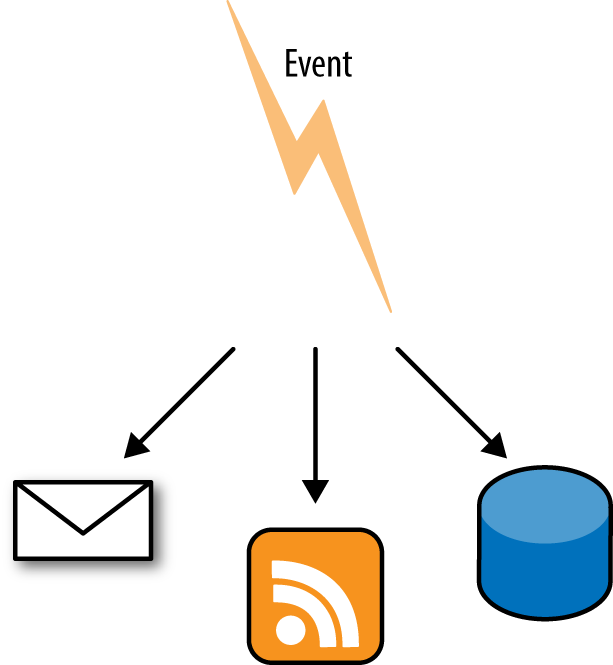

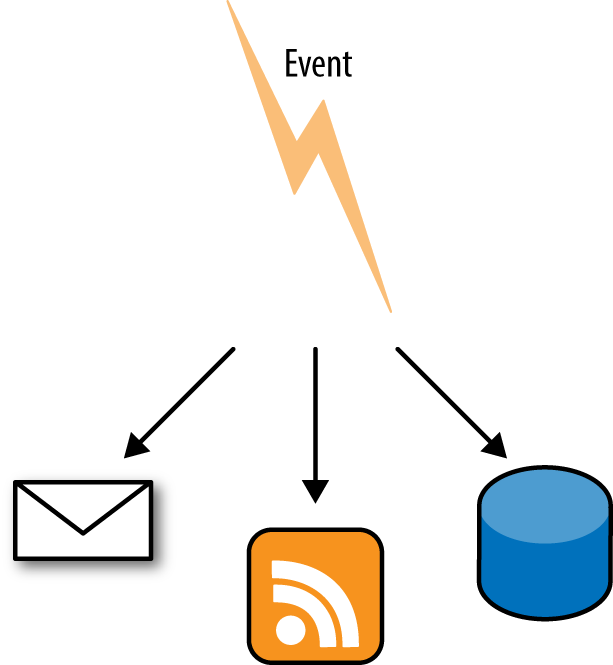

It isn’t unusual for an application to be both a client and a server, as shown in Figure 1-2. An application that accepts a request, and then calls out to other services to gather the information it needs to produce the response, is acting as both a client and a server.

When working on applications that are APIs or consume APIs, take care with how you name variables involving the word “request” to avoid confusion!

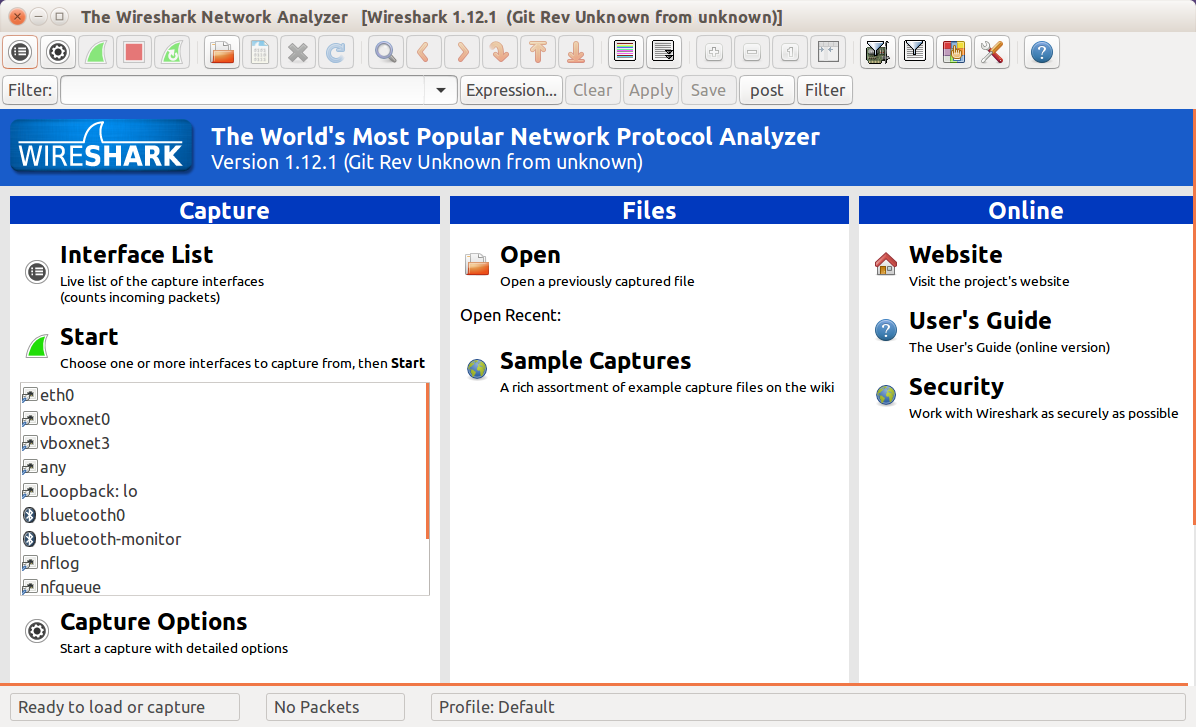

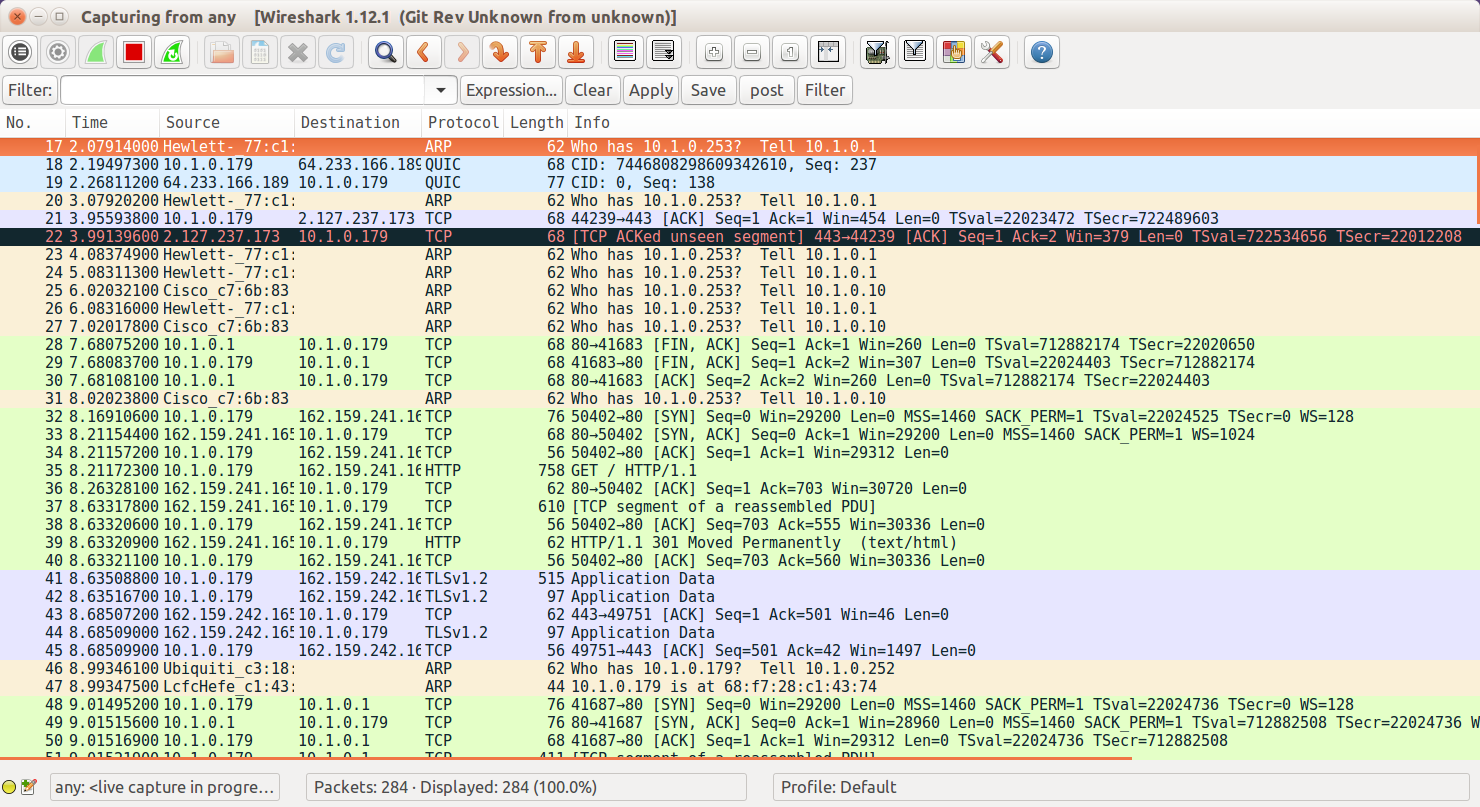

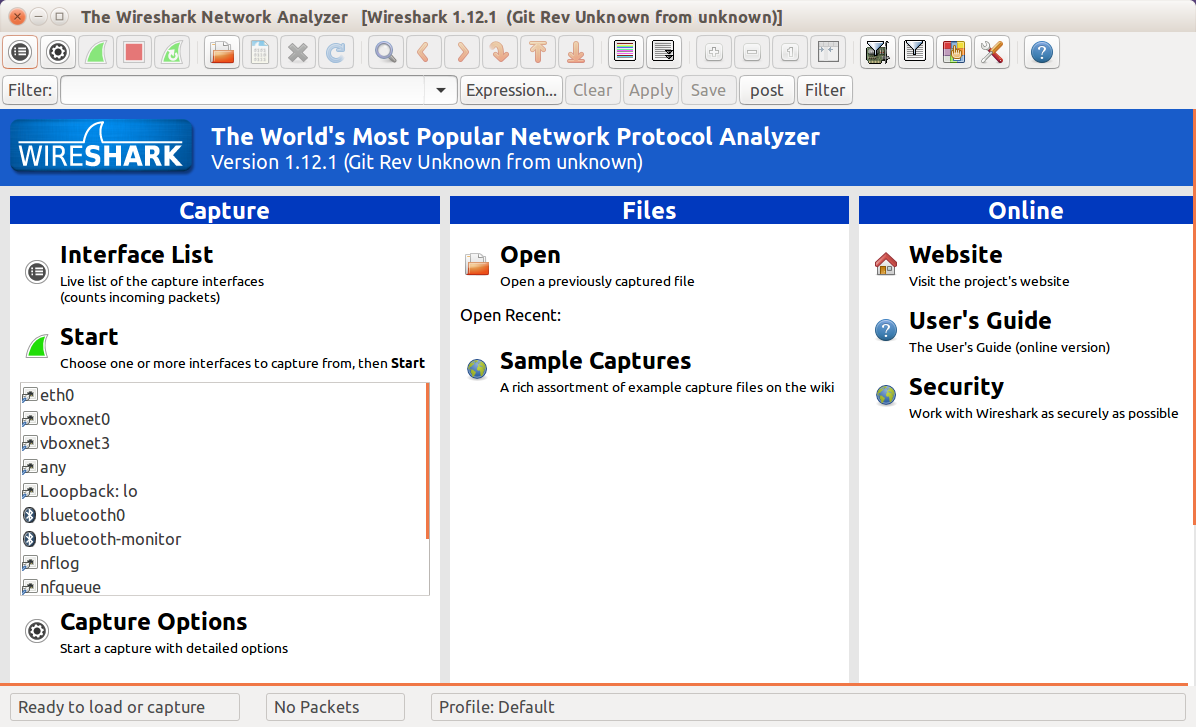

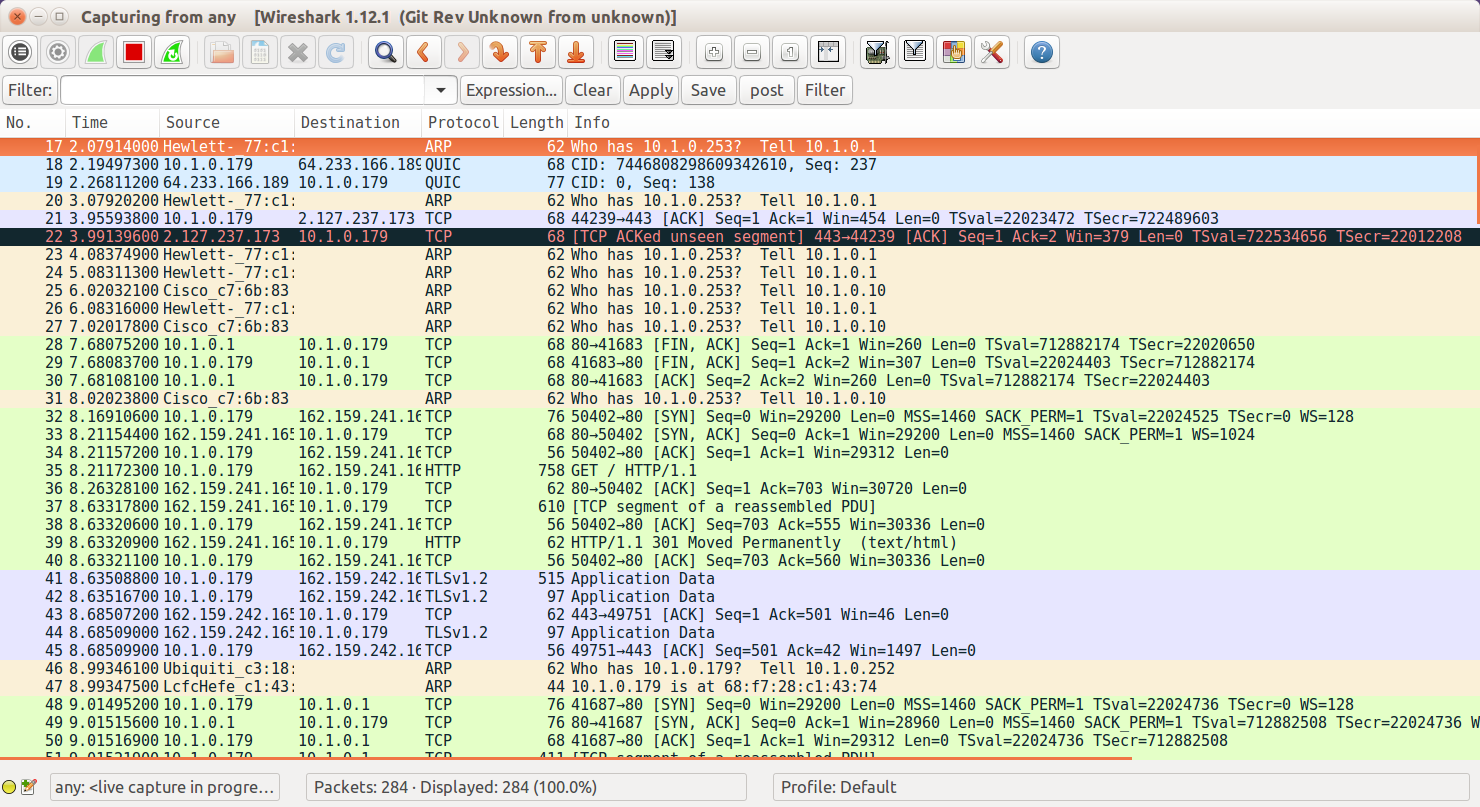

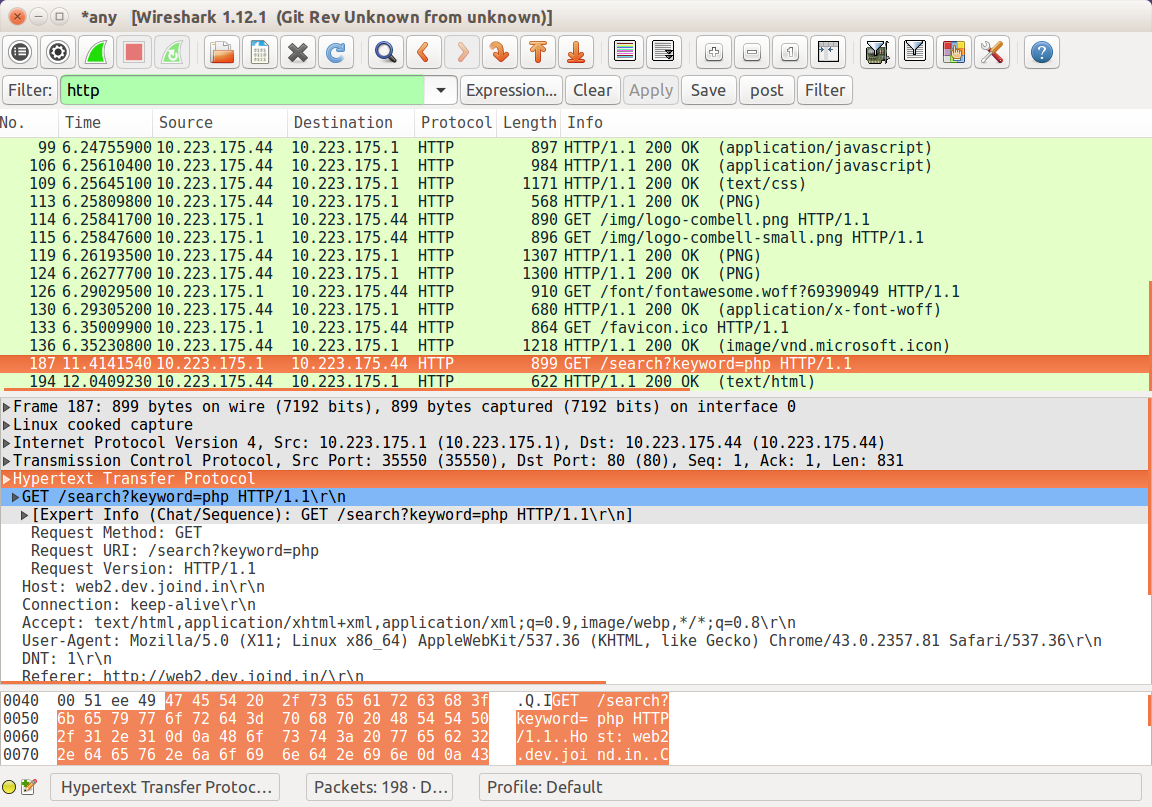

To be able to work with web services, it is important to have a very good understanding of how to work with HTTP from various angles. In this section we’ll cover three common ways of working with HTTP:

Using command-line tools

Using browser tools

Using PHP itself

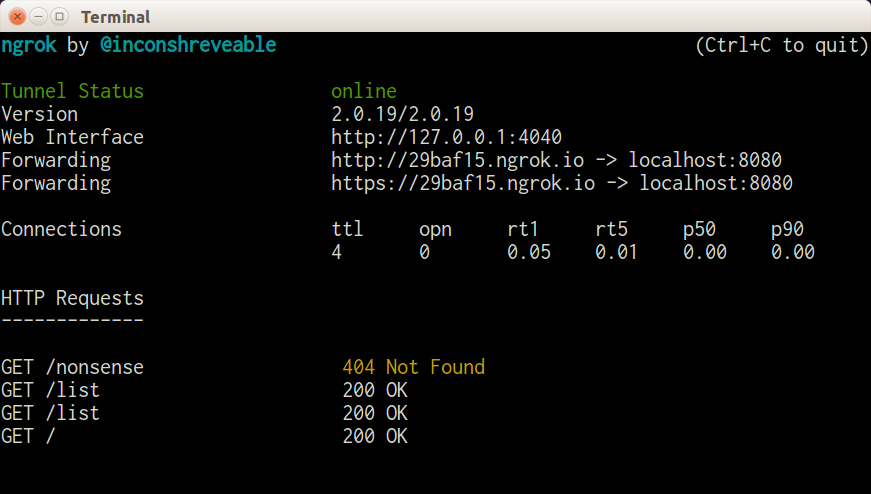

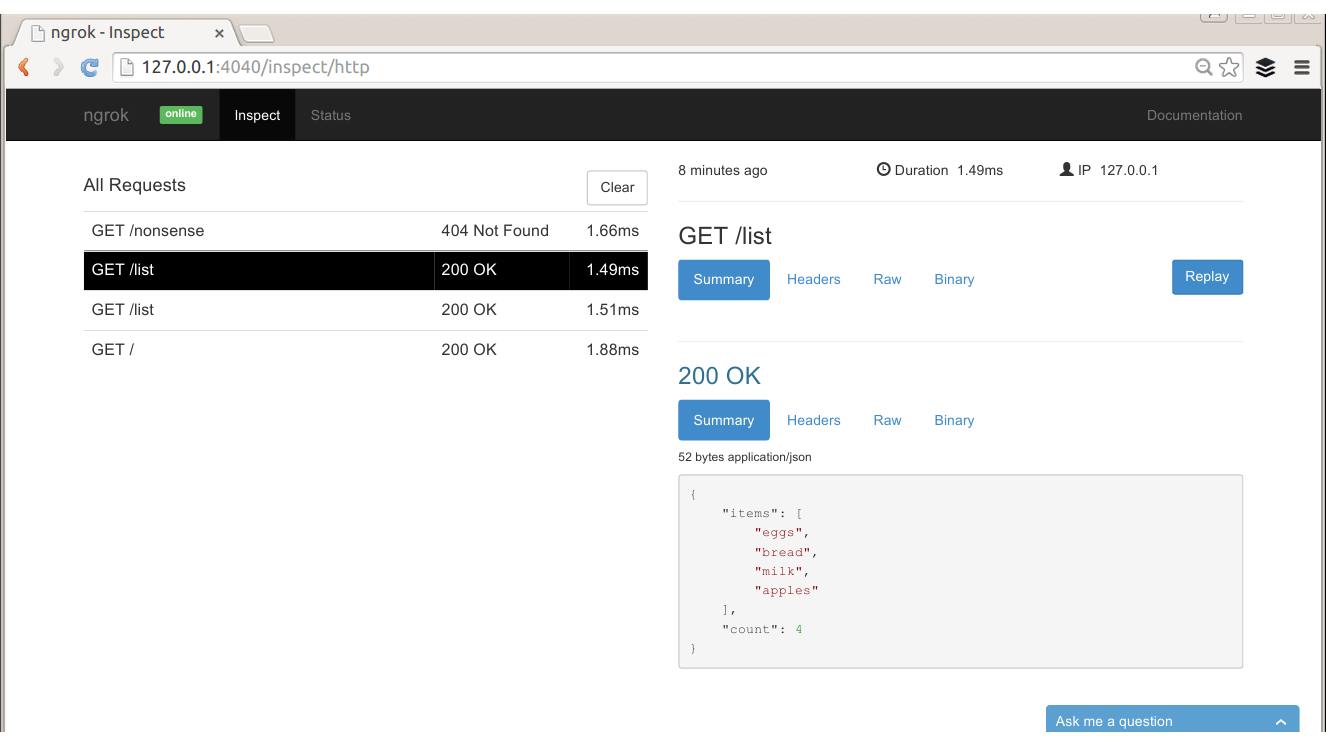

We’ll also look at tools specifically designed for inspecting and debugging HTTP in Chapter 10.

The examples here use a site that logs the requests it receives, which is perfect for exploring how different API requests are seen by a server. To use it, visit the site and create a new “request bin.” You will be given a URL to make requests to and be redirected to a page showing the history of requests made to the bin. This is my own favorite tool, not just for teaching HTTP but also when actually building and testing API clients.

There are a few other tools that are similar and could be useful to you when testing. Try out some of these:

The reserved endpoints (http://example.com, http://example.net, and http://example.org) established by the Internet Assigned Numbers Authority.

HTTPResponder is a similar tool and is on GitHub so you could host/adapt it yourself.

A selection of endpoints with specific behaviors at httpbin.org.

Register your own endpoint at http://requestb.in and use it in place of http://requestb.in/example in the examples that follow.

cURL is a command-line tool available on all platforms. It allows us to make any web request imaginable in any form, repeat those requests, and observe in detail exactly what information is exchanged between client and server. In fact, cURL produced the example output at the beginning of this chapter. It is a brilliant, quick tool for inspecting what’s going on with a web request, particularly when dealing with something that isn’t in a browser or where you need to be more specific about how the request is made. There’s also a cURL extension in PHP; we’ll cover that shortly in “Doing HTTP with PHP”, but this section is about the command-line tool.

In its most basic form, a cURL request can be made like this:

curl http://requestb.in/example

We can control every aspect of the request to send; some of the most commonly used features are outlined here and used throughout this book to illustrate and test the various APIs shown.

If you’ve built websites before, you’ll already know the difference between GET and POST requests from creating web forms. Changing between GET, POST, and other HTTP verbs using cURL is done with the -X switch, so a POST request can be specifically made by using the following:

curl -X POST http://requestb.in/example

There are also specific switches for GET, POST, and so on, but once you start working with a wider selection of verbs, it’s easier to use -X for everything.

To get more information than just the body response, try the -v switch since this will show everything: request headers, response headers, and the response body in full! It splits the response up, though, sending the header information to STDERR and the body to STDOUT:

$ curl -v -X POST http://requestb.in/example -d name="Lorna" -d email="lorna@example.com" -d message="this HTTP stuff is rather excellent" * Hostname was NOT found in DNS cache * Trying 54.197.228.184... * Connected to requestb.in (54.197.228.184) port 80 (#0) > POST /example HTTP/1.1 > User-Agent: curl/7.38.0 > Host: requestb.in > Accept: */* > Content-Length: 78 > Content-Type: application/x-www-form-urlencoded > * upload completely sent off: 78 out of 78 bytes < HTTP/1.1 200 OK < Connection: keep-alive * Server gunicorn/19.3.0 is not blacklisted < Server: gunicorn/19.3.0 < Date: Tue, 07 Jul 2015 14:49:57 GMT < Content-Type: text/html; charset=utf-8 < Content-Length: 2 < Sponsored-By: https://www.runscope.com < Via: 1.1 vegur < * Connection #0 to host requestb.in left intact

When the response is fairly large, it can be hard to find a particular piece of information while using cURL. To help with this, it is possible to combine cURL with other tools such as less or grep; however, cURL shows a progress output bar if it realizes it isn’t outputting to a terminal, which is confusing to these other tools (and to humans). To silence the progress bar, use the -s switch (but beware that it also silences cURL’s errors). It can be helpful to use -s in combination with -v to create output that you can send to a pager such as less in order to examine it in detail, using a command like this:

curl -s -v http://requestb.in/example 2>&1 | less

The extra 2>&1 is there to send the STDERR output to STDOUT so that you’ll see both headers and body; by default, only STDOUT would be visible to less. With the preceding command, you can see the full details of the headers and body, request and response, all available in a pager that allows you to search and page up/down through the output.

Working with the Web in general, and APIs in particular, means working with data. cURL lets us do that in a few different ways. The simplest way is to send data along with a request in key/value pairs—exactly as when a form is submitted on the Web—which uses the -d switch. The switch is used as many times as there are fields to include. To make a POST request as if I had filled in a web form, I can use a curl command like this:

curl -X POST http://requestb.in/example -d name="Lorna" -d email="lorna@example.com" -d message="this HTTP stuff is rather excellent"

APIs accept their data in different formats; sometimes the data cannot be POSTed as a form, but must be created in JSON or XML format, for example. There are dedicated chapters in this book for working with those formats, but in either case we would assemble the data in the correct format and then send it with cURL. We can either send it on the command line by passing a string rather than a key/value pair to a single -d switch, or we can put it into a file and ask cURL to use that file rather than a string (this is a very handy approach for repeat requests where the command line can become very long). If you run the previous request and inspect it, you will see that the body of it is sent as:

name=Lorna&email=lorna@example.com

We can use this body data as an example of using the contents of a file as the body of a request. Store the data in a file and then give the filename prepended with an @ symbol as a single -d switch to cURL:

curl -X POST http://requestb.in/example -d @data.txt

Working with the extended features of HTTP requires the ability to work with various headers. cURL allows the sending of any desired header (this is why, from a security standpoint, the header can never be trusted!) by using the -H switch, followed by the full header to send. The command to set the Accept header to ask for an HTML response becomes:

curl -H "Accept: text/html" http://requestb.in/example

Before moving on from cURL to some other tools, let’s take a look at one more feature: how to handle cookies. Cookies will be covered in more detail in Chapter 4, but for now it is important to know that cookies are stored by the client and sent with requests, and that new cookies may be received with each response. Browsers send cookies with requests as default behavior, but in cURL we need to do this manually by asking cURL to store the cookies in a response and then use them on the next request. The file that stores the cookies is called the “cookie jar”; clearly, even HTTP geeks have a sense of humor.

To receive and store cookies from one request:

curl -c cookiejar.txt http://requestb.in/example

At this point, cookiejar.txt contains the cookies that were returned in the response. The file is a plain-text file, and the way that a browser would store this information is pretty similar; the data is just text. Feel free to open this file in your favorite text editor; it can be amended in any way you see fit (which is another good reminder of why trusting outside information is a bad idea; it may well have been changed), and then sent to the server with the next request you make. To send the cookie jar, amended or otherwise, use the -b switch and specify the file to find the cookies in:

curl -b cookiejar.txt http://requestb.in/example

To capture cookies and resend them with each request, use both the -b and -c switches, referring to the same cookiejar file with each switch. This way, all incoming cookies are captured and sent to a file, and then sent back to the server on any subsequent request, behaving just as they do in a browser. This approach is useful if you want to test something from cURL that requires, for example, logging in.

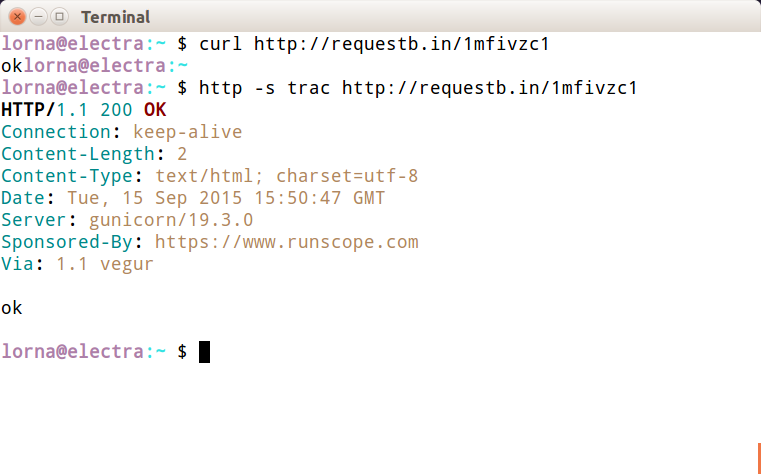

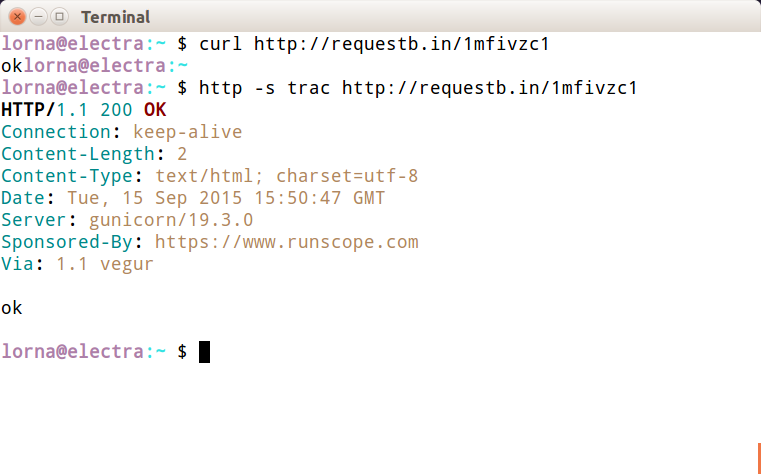

Another command-line tool well worth a mention here is HTTPie, which claims to be a cURL-like tool for humans. It has many nice touches that you may find useful, such as syntax highlighting. Let’s see some examples of the same kinds of requests that we did with cURL.

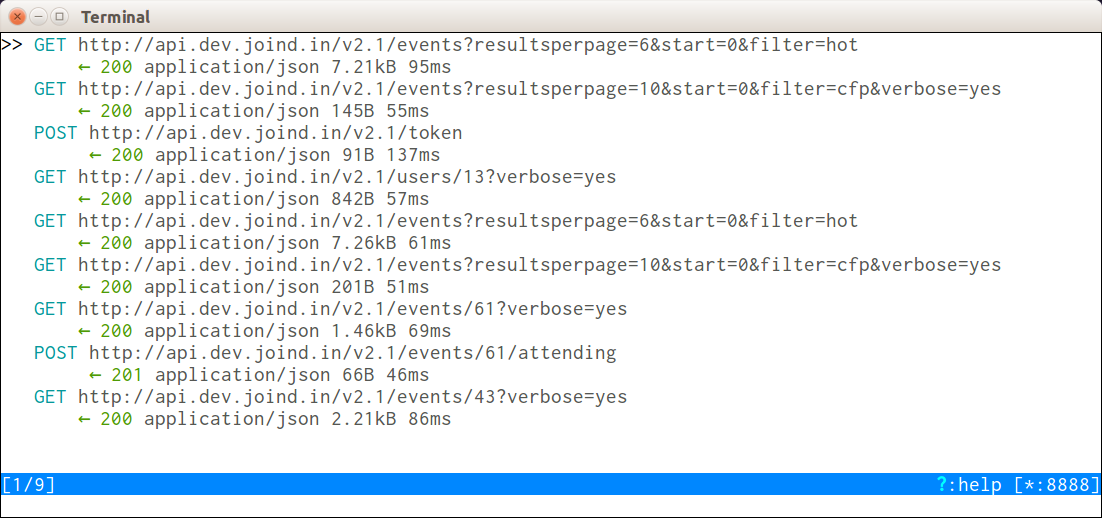

The first thing you will probably notice (for example, in Figure 1-3) is that HTTPie gives more output.

You can control what HTTPie outputs with the --print or -p switch, and pass H to see the request header, B to see the request body, h to see the response header, or b to see the response body. These can be combined in any way you like and the default is hb. To get the same output as cURL gives by default, use the b switch:

http -p b http://requestb.in/example

HTTPie will attempt to guess whether each additional item after the URL is a form field, a header, or something else. This can be confusing, but once you’ve become used to it, it’s very quick to work with. Here’s an example with POSTing data as if submitting a form:

$ http -p bhBH -f http://requestb.in/example name=Lorna email=lorna@example.com message="This HTTP stuff is rather excellent" POST /example HTTP/1.1 Accept: */* Accept-Encoding: gzip, deflate Connection: keep-alive Content-Length: 80 Content-Type: application/x-www-form-urlencoded; charset=utf-8 Host: requestb.in User-Agent: HTTPie/0.8.0 name=Lorna&email=lorna%40example.com&message=This+HTTP+stuff+is+rather+excellent HTTP/1.1 200 OK Connection: keep-alive Content-Length: 2 Content-Type: text/html; charset=utf-8 Date: Tue, 07 Jul 2015 14:46:28 GMT Server: gunicorn/19.3.0 Sponsored-By: https://www.runscope.com Via: 1.1 vegur ok

To add a header, the approach is similar; HTTPie sees the : in the argument and uses it as a header. For example, to send an Accept header:

$ http -p H -f http://requestb.in/example Accept:text/html GET /149njzd1 HTTP/1.1 Accept: text/html Accept-Encoding: gzip, deflate Connection: keep-alive Content-Type: application/x-www-form-urlencoded; charset=utf-8 Host: requestb.in User-Agent: HTTPie/0.8.0

Whether you choose cURL or HTTPie is a matter of taste; they are both worth a try and are useful tools to have in your arsenal when working with HTTP.

All the newest versions of the modern browsers (Chrome, Firefox, Opera, Safari, Internet Explorer) have built-in tools or available plug-ins to help inspect the HTTP that’s being transferred, and for simple services you may find that your browser’s tools are an approachable way to work with an API. These tools vary between browsers and are constantly updating, but here are a few favorites to give you an idea.

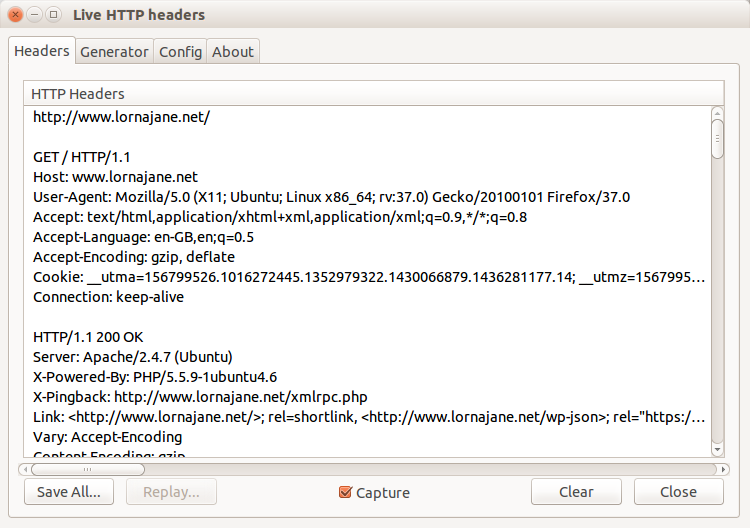

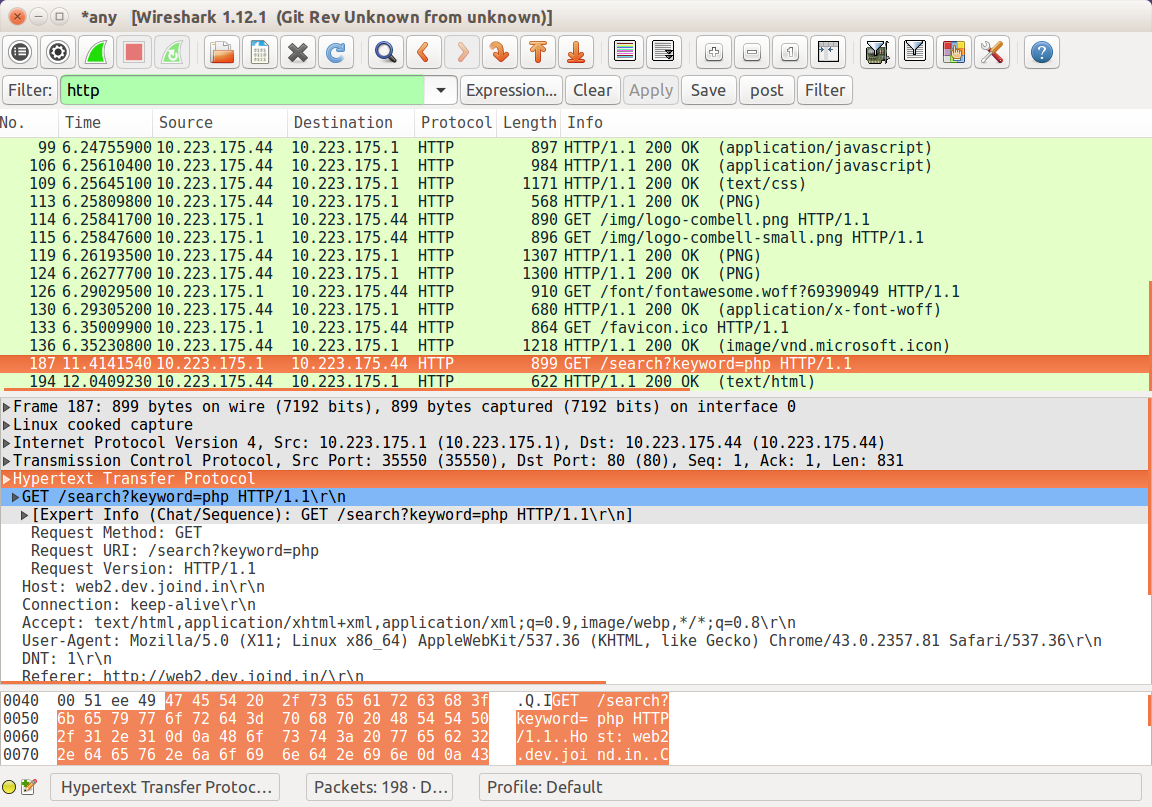

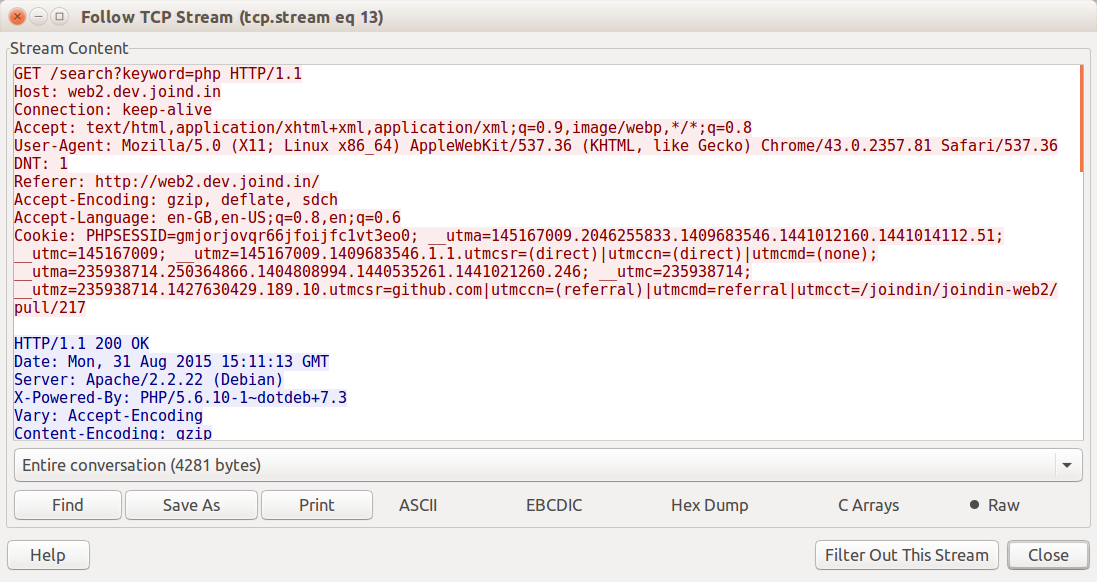

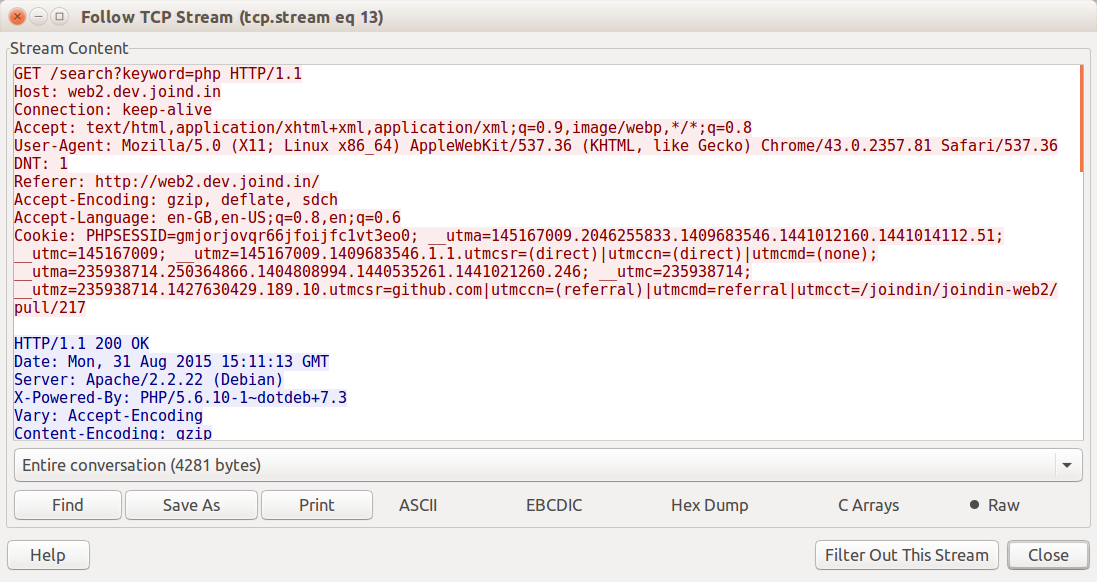

In Firefox, this functionality is provided by the Developer Toolbar and various plug-ins. Many web developers are familiar with FireBug, which does have some helpful tools, but there is another tool that is built specifically to show you all the headers for all the requests made by your browser: LiveHTTPHeaders. Using this, we can observe the full details of each request, as seen in Figure 1-4.

All browsers offer some way to inspect and change the cookies being used for requests to a particular site. In Chrome, for example, this functionality is offered by an extension called “Edit This Cookie,” and other similar extentions. This shows existing cookies and lets you edit and delete them—and also allows you to add new cookies. Take a look at the tools in your favorite browser and see the cookies sent by the sites you visit the most.

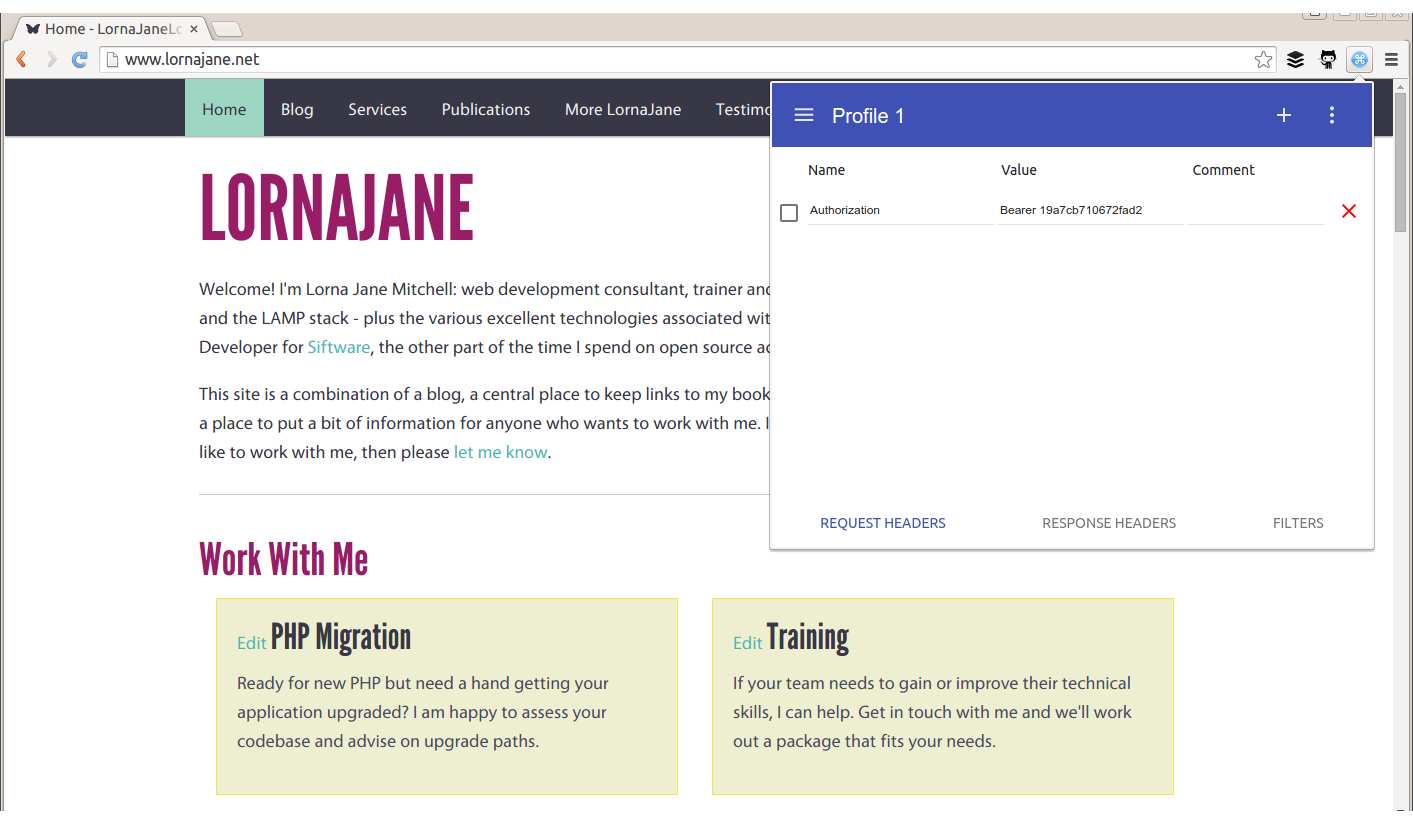

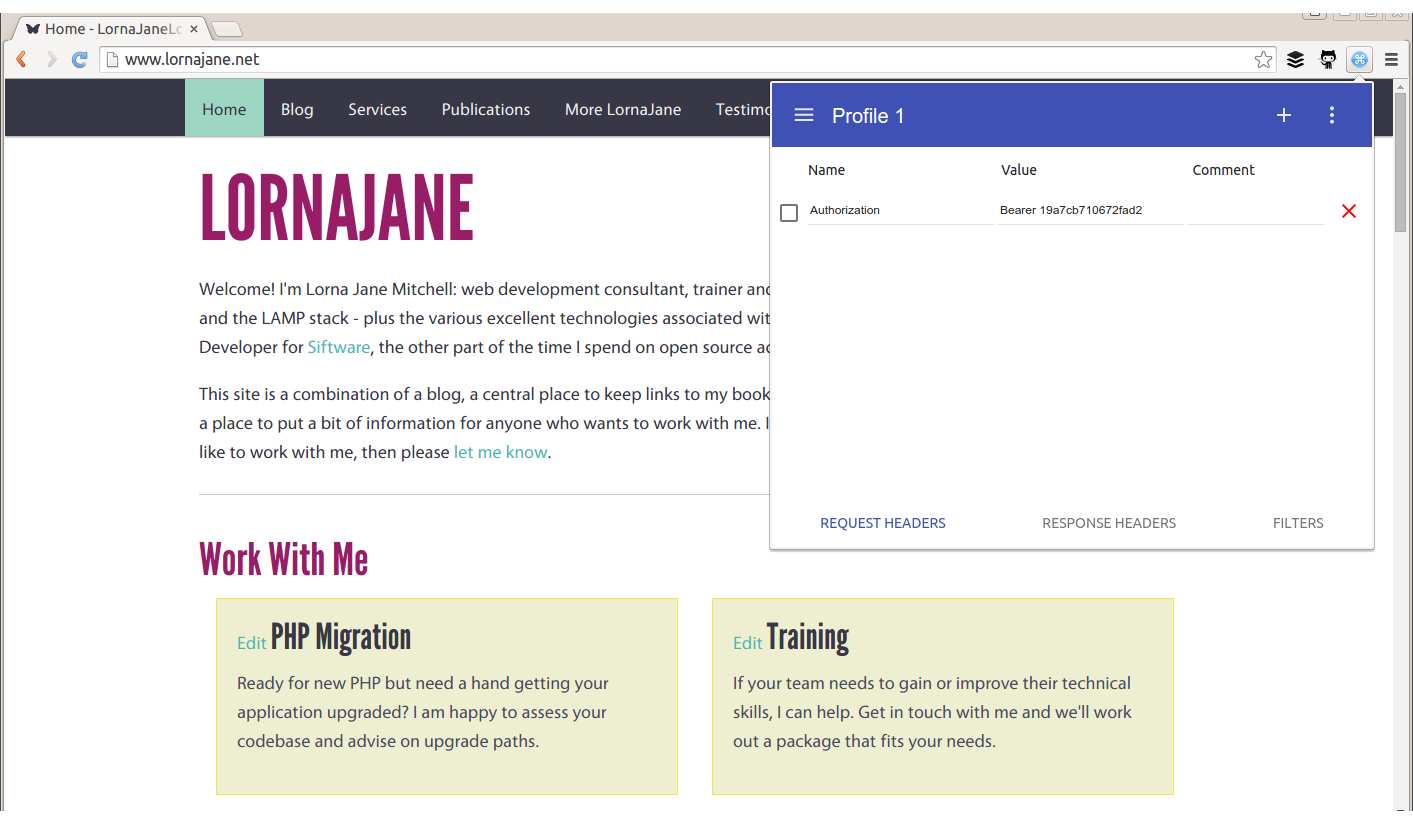

Sometimes, additional headers need to be added to a request, such as when sending authentication headers, or specific headers to indicate to the service that we want some extra debugging. Often, cURL is the right tool for this job, but it’s also possible to add the headers into your browser. Different browsers have different tools, but for Chrome try an extension called ModHeader, seen in Figure 1-5.

You won’t be surprised to hear that there is more than one way to handle HTTP requests using PHP, and each of the frameworks will also offer their own additions. This section focuses on plain PHP and looks at three different ways to work with APIs:

PHP’s cURL extension (usually available in PHP, sometimes via an additional package)

PHP’s built-in stream-handling functionaltiy

Earlier in this chapter, we discussed a command-line tool called cURL (see “Command-Line HTTP”). PHP has its own wrappers for cURL, so we can use the same tool from within PHP. A simple GET request looks like this:

<?php$url="http://www.lornajane.net/";$ch=curl_init($url);curl_setopt($ch,CURLOPT_RETURNTRANSFER,true);$result=curl_exec($ch);curl_close($ch);

The previous example is the simplest form; it sets the URL, makes a request to its location (by default this is a GET request), and capture the output. Notice the use of curl_setopt(); this function is used to set many different options on cURL handles and it has excellent and comprehensive documentation on http://php.net. In this example, it is used to set the CURLOPT_RETURNTRANSFER option to true, which causes cURL to return the results of the HTTP request rather than output them. There aren’t many use cases where you’d want to output the response so this flag is very useful.

We can use this extension to make all kinds of HTTP requests, including sending custom headers, sending body data, and using different verbs to make our request. Take a look at this example, which sends some JSON data and a Content-Type header with the POST request:

<?php$url="http://requestb.in/example";$data=["name"=>"Lorna","email"=>"lorna@example.com"];$ch=curl_init($url);curl_setopt($ch,CURLOPT_POST,1);curl_setopt($ch,CURLOPT_POSTFIELDS,json_encode($data));curl_setopt($ch,CURLOPT_HTTPHEADER,['Content-Type: application/json']);curl_setopt($ch,CURLOPT_RETURNTRANSFER,true);$result=curl_exec($ch);curl_close($ch);

Again, curl_setopt() is used to control the various aspects of the request we send. Here, a POST request is made by setting the CURLOPT_POST option to 1, and passing the data we want to send as an array to the CURLOPT_POSTFIELDS option. We also set a Content-Type header, which indicates to the server what format the body data is in; the various headers are covered in more detail in Chapter 3.

The PHP cURL extension isn’t the easiest interface to use, although it does have the advantage of being reliably available. Another great way of making HTTP requests that is always available in PHP is to use PHP’s stream-handling abilities with the file functions. In its simplest form, this means that, if allow_url_fopen is enabled (see the PHP manual), it is possible to make requests using file_get_contents(). The simplest example is making a GET request and reading the response body in as if it were a local file:

<?php$result=file_get_contents("http://www.lornajane.net/");

We can take advantage of the fact that PHP can handle a variety of different protocols (HTTP, FTP, SSL, and more) and files using streams. The simple GET requests are easy, but what about something more complicated? Here is an example that makes the same POST request as our earlier example with JSON data and headers, illustrating how to use various aspects of the streams functionality:

<?php$url="http://requestb.in/example";$data=["name"=>"Lorna","email"=>"lorna@example.com"];$context=stream_context_create(['http'=>['method'=>'POST','header'=>['Accept: application/json','Content-Type: application/json'),'content'=>json_encode($data)]]];$result=file_get_contents($url,false,$context);

Options are set as part of the context that we create to dictate how the request should work. Then, when PHP opens the stream, it uses the information supplied to determine how to handle the stream correctly—including sending the given data and setting the correct headers.

The third way that I’ll cover here for working with PHP and HTTP is Guzzle, a PHP library that you can include in your own projects with excellent HTTP-handling functionality. It’s installable via Composer, or you can download the code from GitHub and include it in your own project manually if you’re not using Composer yet (the examples here are for version 6 of Guzzle).

For completeness, let’s include an example of making the same POST request as before, but this time using Guzzle:

<?phprequire"vendor/autoload.php";$url="http://requestb.in/example";$data=["name"=>"Lorna","email"=>"lorna@example.com"];$client=new\GuzzleHttp\Client();$result=$client->post($url,["json"=>$data]);echo$result->getBody();

The Guzzle library is object-oriented and it has excellent documentation, so do feel free to take these examples and build on them using the documentation for reference. The preceding example first includes the Composer autoloader since that’s how I installed Guzzle. Then it initializes both the URL that the request will go to and the data that will be sent. Before making a request in Guzzle, a client is initialized, and at this point you can set all kinds of configuration on either the client to apply to all requests, or on individual requests before sending them. Here we’re simply sending a POST request and using the json config shortcut so that Guzzle will encode the JSON and set the correct headers for us. You can see this in action by running this example and then visiting your http://requestb.in page to inspect how the request looked when it arrived.

As you can see, there are a few different options for dealing with HTTP, both from PHP and the command line, and you’ll see all of them used throughout this book. These approaches are all aimed at “vanilla” PHP, but if you’re working with a framework, it will likely offer some functionality along the same lines; all the frameworks will be wrapping one of these methods so it will be useful to have a good grasp of what is happening underneath the wrappings. After trying out the various examples, it’s common to pick one that you will work with more than the others; they can all do the job, so the one you pick is a result of both personal preference and which tools are available (or can be made available) on your platform. Most of my own projects make use of streams unless I need to do something nontrivial, in which case I use Guzzle as it’s so configurable that it’s easy to build up all the various pieces of the request and still understand what the code does when you come back to it later.

HTTP verbs such as GET and POST let us send our intention along with the URL so we can instruct the server what to do with it. Web requests are more than just a series of addresses, and verbs contribute to the rich fabric of the journey. This chapter covers how to make and respond to HTTP requests using a selection of common HTTP verbs, including lots of examples.

I mentioned GET and POST because it’s very likely you’re already familiar with those. There are many verbs that can be used with HTTP—in fact, we can even invent our own—but we’ll get to that later in the chapter (see “Using Other HTTP Verbs”). First, let’s revisit GET and POST in some detail, looking at when to use each one and what the differences are between them.

URLs used with GET can be bookmarked, they can be called as many times as needed, and the request should change the data it accesses. A great example of using a GET request when filling in a web form is when using a search form, which should always use GET. Searches can be repeated safely, and the URLs can be shared.

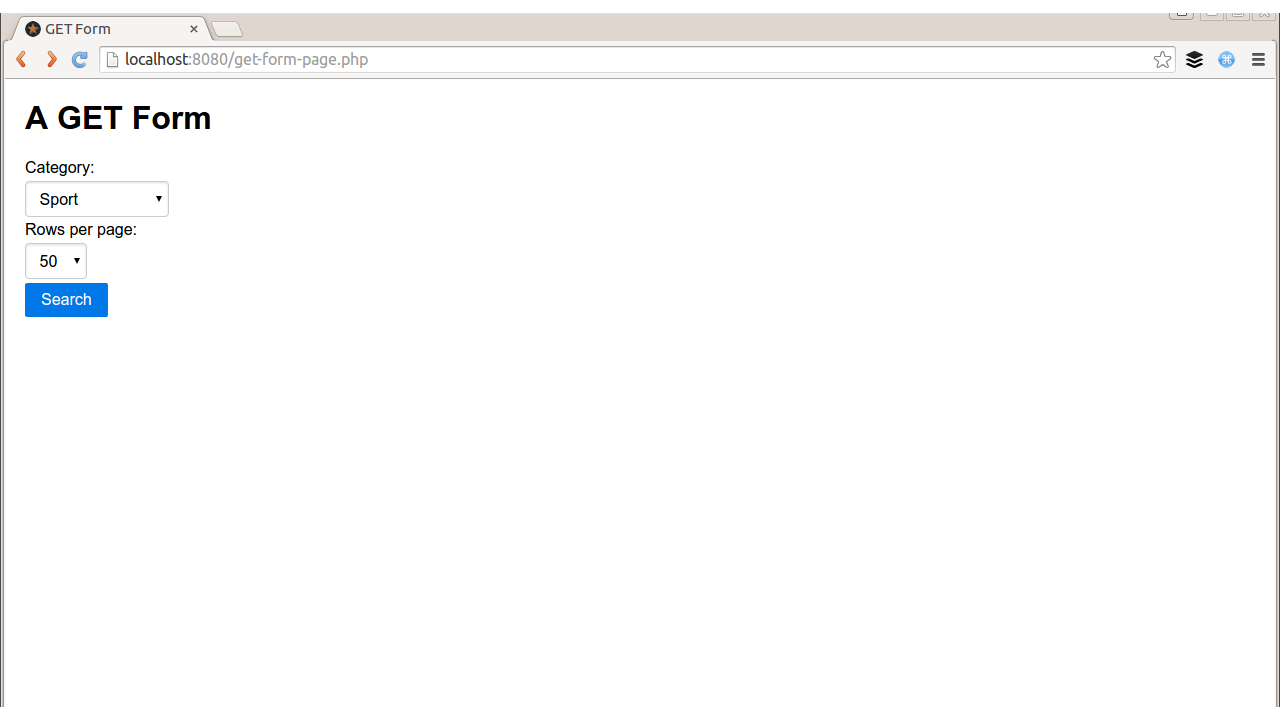

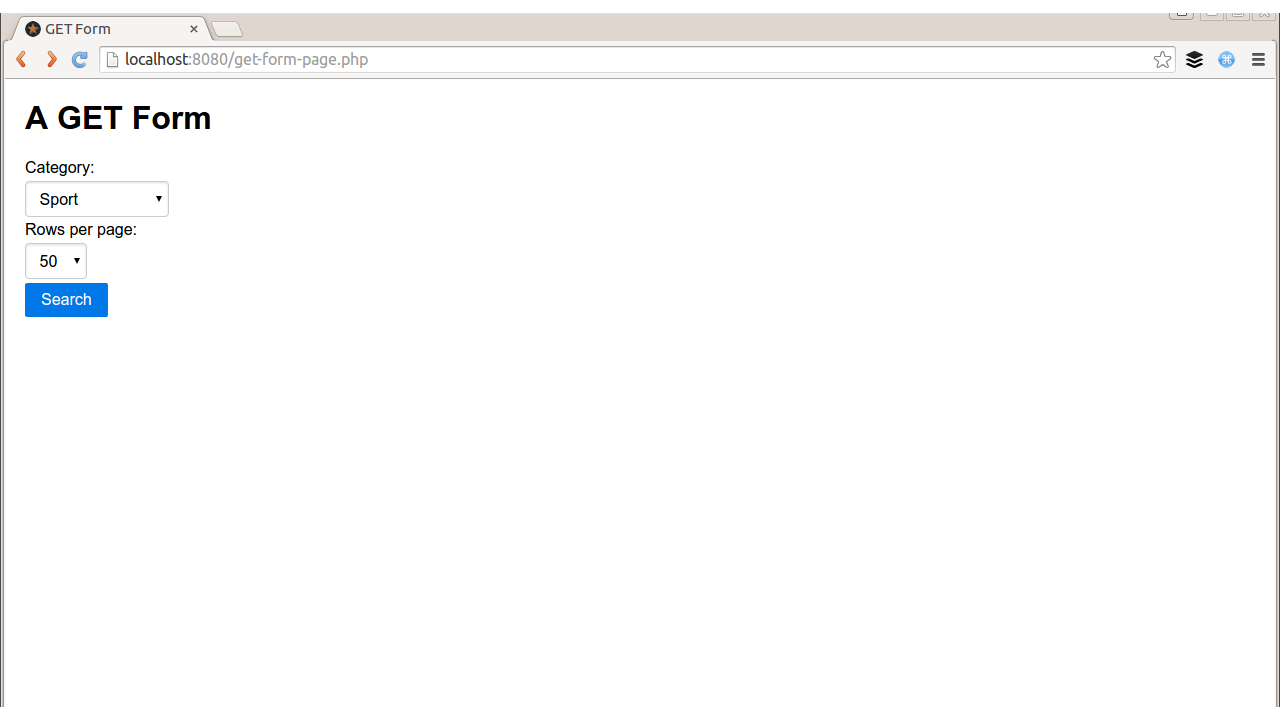

Consider the simple web form in Figure 2-1, which allows users to state which category of results they’d like and how many results to show. The code for displaying the form and the (placeholder) search results on the page could be something like this:

<html><head><title>GET Form</title><linkrel="stylesheet"href="http://yui.yahooapis.com/pure/0.6.0/pure-min.css"></head><body><divstyle="margin: 20px"><h1>A GET Form</h1><?php if(empty($_GET)): ?><formname="search"method="get"class="pure-form pure-form-stacked">Category:<selectname="category"><optionvalue="entertainment">Entertainment</option><optionvalue="sport">Sport</option><optionvalue="technology">Technology</option></select>Rows per page:<selectname="rows"><optionvalue="10">10</option><optionvalue="20">20</option><optionvalue="50">50</option></select><inputtype="submit"value="Search"class="pure-button pure-button-primary"/></form><?php else: ?><p>Wonderfully filtered search results</p><?php endif; ?></div></body></html>

You can see that PHP simply checks if it has been given some search criteria (or indeed any data in the $_GET superglobal) and if not, it displays the empty form. If there was data, then it would process it (although probably in a more interesting way than this trivial example does). The data gets submitted on the URL when the form is filled in (GET requests typically have no body data), resulting in a URL like this:

http://localhost/book/get-form-page.php?category=technology&rows=20

Having the data visible on the URL is a design choice. When this happens, a user can easily bookmark or share this URL with others, which is sometimes very useful, for example, to bookmark a particular set of search results, or a product page. In other use cases, such as submitting a form to update a user’s profile, we really don’t want users to be able to share or save the request that they made, so a POST request would be more appropriate. As software developers, we need to choose whether to submit forms via GET or POST, and in general a good rule of thumb is that if the request is safe to repeat, then GET is a good choice; otherwise use POST. We’ll see more examples of the correct use of verbs in APIs as well as forms during this chapter.

The previous example showed how PHP responds to a GET request, but how does it make one? Well, as discussed in Chapter 1, there are many ways to approach this. For a very quick solution, use PHP’s stream handling to create the complete request to send:

<?php$url='http://localhost/book/get-form-page.php';$data=["category"=>"technology","rows"=>20];$get_addr=$url.'?'.http_build_query($data);$page=file_get_contents($get_addr);echo$page;

In a Real World™ system, it is prudent to be cautious of the data coming in from external APIs; it is best to filter the contents of $page before outputting it or using it anywhere else. As an alternative to using PHP’s stream features, you could use whatever functionality your existing frameworks or libraries offer, or make use of the cURL extension that is built in to PHP.

Using cURL, our code would instead look like this:

<?php$url='http://localhost/book/get-form-page.php';$data=["category"=>"technology","rows"=>20];$get_addr=$url.'?'.http_build_query($data);$ch=curl_init($get_addr);curl_setopt($ch,CURLOPT_RETURNTRANSFER,1);$page=curl_exec($ch);echo$page;

Either of these approaches works well when you want to fetch data into your PHP script from an external API or page. The examples here show web pages, but they apply when working with HTML, XML, JSON, or anything else.

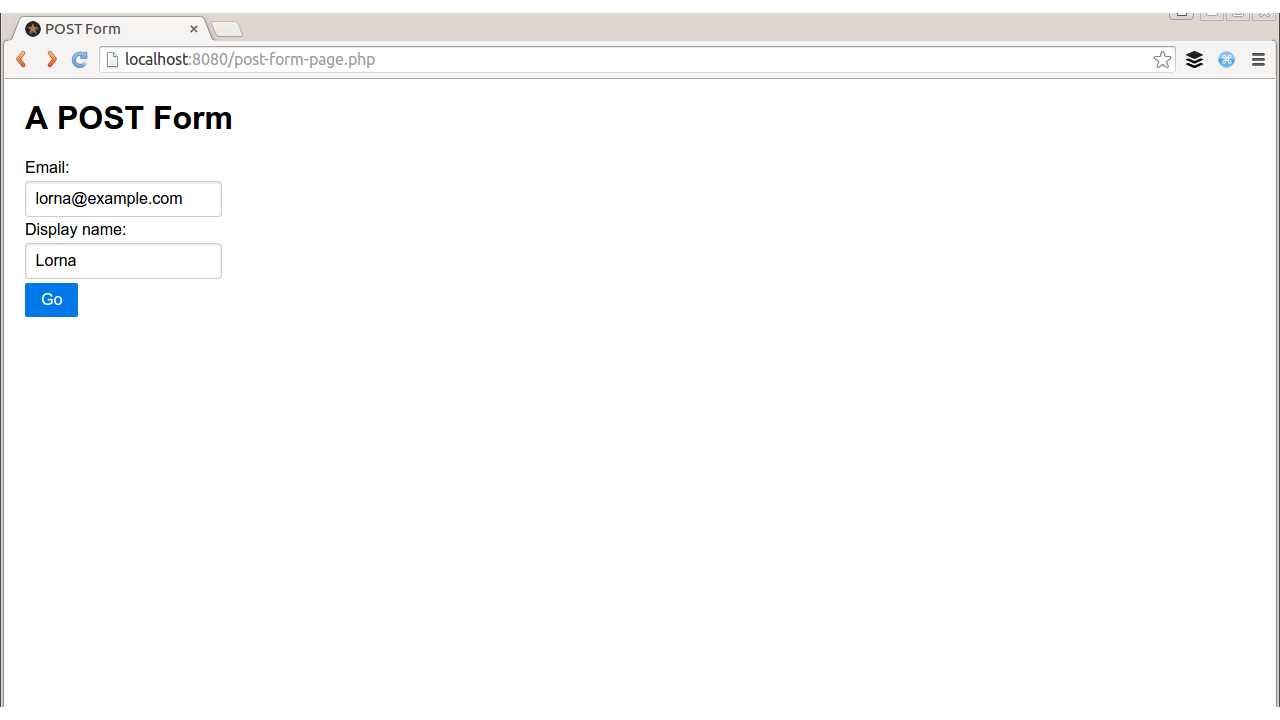

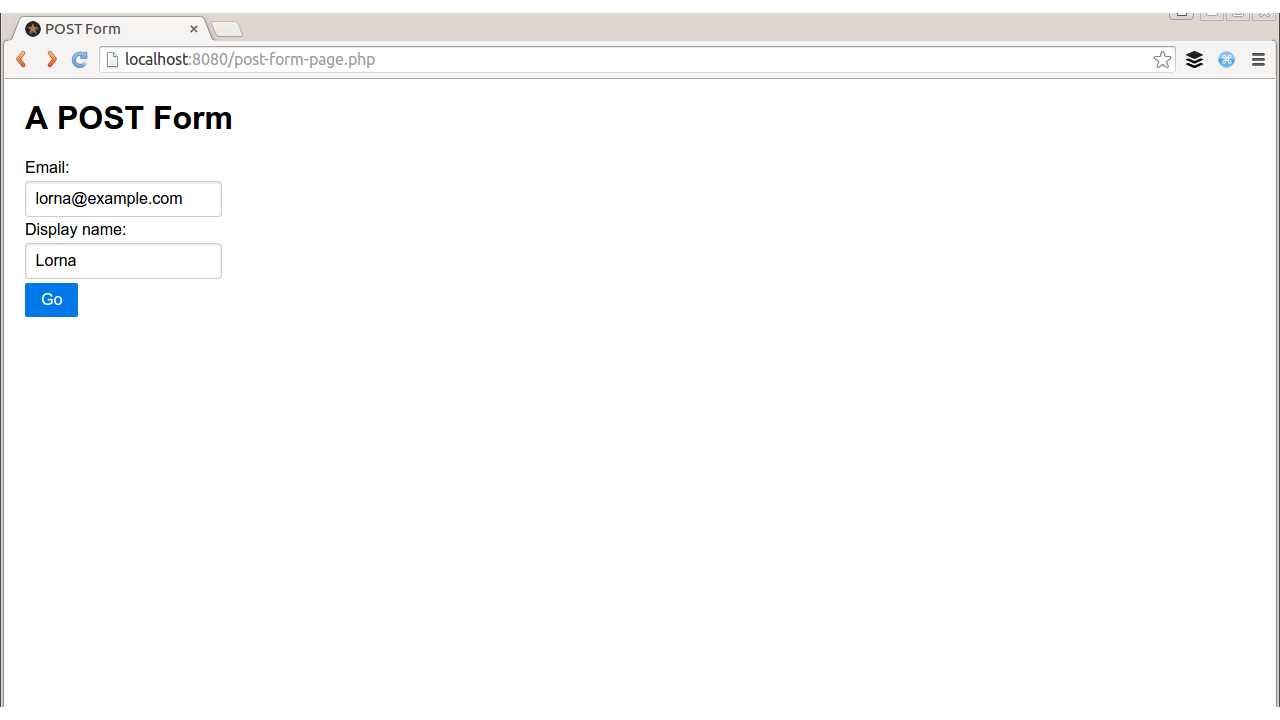

In contrast to GET requests, a POST request is one that does cause change on the server that handles the request. These requests shouldn’t be repeated or bookmarked, which is why your browser warns you when it is resubmitting data. Let’s use a POST form when the request changes data on the server side. Figure 2-2, for example, involves updating a bit of user profile information.

When a form is submitted via GET, we can see the variables being sent on the URL. With POST, however, the data goes into the body of the request, and the Content-Type header denotes what kind of data can be found in the body. When we fill in the form in Figure 2-2, the request looks like this:

POST/book/post-form-page.phpHTTP/1.1Host:localhostContent-Length:48Accept:text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8Content-Type:application/x-www-form-urlencodedAccept-Encoding:gzip,deflate,sdchAccept-Language:en-GB,en-US;q=0.8,en;q=0.6Accept-Charset:ISO-8859-1,utf-8;q=0.7,*;q=0.3email=lorna%40example.com&display_name=LornaJane

In this example, you can see the data in the body, with the Content-Type and Content-Length headers set appropriately so that the server can decode the response (more about content negotiation in Chapter 3). Next we’ll look at the server side of the conversation.

PHP knows how to handle form data, so it can parse this out and place the fields into $_POST, so it will be ready for use in the script. Here is the code behind this page, showing the form without any incoming data; if data existed, it would be displayed:

<html><head><title>POST Form</title><linkrel="stylesheet"href="http://yui.yahooapis.com/pure/0.6.0/pure-min.css"></head><body><divstyle="margin: 20px"><h1>A POST Form</h1><?php if(empty($_POST)): ?><formname="user"method="post"class="pure-form pure-form-stacked">Email:<inputtype="text"length="60"name="email"/>Display name:<inputtype="text"length="60"name="display_name"/><inputtype="submit"value="Go"class="pure-button pure-button-primary"/></form><?php else:echo "New user email: " . filter_input(INPUT_POST,"email", FILTER_VALIDATE_EMAIL);endif; ?></div></body></html>

It is very common to build PHP forms and parse data in this way, but when handling HTTP requests, it is also important to consider how the requests can be made and responded to (spoiler: it looks a lot like our GET request code).

To POST data to this form using streams (as in “Making GET Requests”), the same basic approach can be used, but some context should be added to the stream, so it will know which methods, headers, and verbs to use:

<?php$url='http://localhost/book/post-form-page.php';$data=["email"=>"lorna@example.com","display_name"=>"LornaJane"];$options=["http"=>["method"=>"POST","header"=>"Content-Type: application/x-www-form-urlencoded","content"=>http_build_query($data)]];$page=file_get_contents($url,NULL,stream_context_create($options));echo$page;

When POST data is sent to the page created, the data sent appears in the output rather than in the form, so it shows “New user email: lorna@example.com.” This code looks very similar to the previous streams example, but this example uses stream_context_create() to add some additional information to the stream.

You can see that we added the body content as a simple string, formatted it as a URL using http_build_query(), and indicated which content type the body is. This means that other data formats can very easily be sent by formatting the strings correctly and setting the headers.

Here is an example that makes the same POST request again, but this time using Guzzle (these examples are for version 6 of Guzzle):

<?phprequire"vendor/autoload.php";$url='http://localhost/book/post-form-page.php';$data=["email"=>"lorna@example.com","display_name"=>"LornaJane"];$client=new\GuzzleHttp\Client();$page=$client->post($url,["form_params"=>$data]);echo$page->getBody();

This looks very similar to the previous example, but using the built-in form_params option to Guzzle means that the Content-Type will be specified for us (there is also a multipart option if you need to send file uploads using Guzzle). When we make the request, we get a response object back rather than a string, but we can access the content using the getBody() method.

In these simple examples, we can make our code make POST requests to HTML forms because the forms have no security features. In reality, most forms will have some CSRF (Cross-Site Request Forgery) protection in them, so you’ll find that you usually can’t make requests like this against forms published on the wider Internet. I would always recommend that you include security features in your own forms—except when you’re trying out the previous examples, of course.

There are many specifications relating to HTTP, as well as protocols based upon it, and between them they define a wide selection of verbs that can be used with HTTP. Even better, there is always room to invent new HTTP verbs; so long as your client and server both know how to handle a new verb, it is valid to use it. However, be aware that not all elements of network infrastructure between these two points will necessarily know how to handle every verb. Some pieces of network infrastructure do not support PATCH, for example, or the verbs used by the WebDAV protocol. When working with APIs, particularly RESTful ones, it is normal to make use of two additional verbs: PUT and DELETE. REST is covered in detail in Chapter 8, but for now it is useful to examine some examples of how to use these less common verbs in applications.

The simplest of these two is DELETE, because it doesn’t have any body data associated with it. It is possible to see what kind of request was made to a PHP script acting as a server by inspecting the $_SERVER["REQUEST_METHOD"] value, which indicates which verb was used in the request.

To make the request from PHP, it is necessary to set the verb and then make the request as normal. Here’s an example using the cURL extension:

<?php$url='http://localhost/book/example-delete.php';$ch=curl_init($url);curl_setopt($ch,CURLOPT_CUSTOMREQUEST,"DELETE");curl_exec($ch);

This example simply issues a request to the $url shown using a DELETE verb.

Using PUT is slightly more involved because, like POST, it can be accompanied by data and the data can be in a variety of formats. In “Handling POST Requests”, I mentioned that for incoming form data, PHP reads form-encoded values for POST and creates a $_POST array for us. There is no equivalent $_PUT superglobal, but we can still make use of the php://input stream to inspect the body data of the request to which the script is sending a response at that time.

When using PHP to respond to PUT requests, the code runs along the lines of this example:

<?phpif($_SERVER['REQUEST_METHOD']=="PUT"){$data=[];$incoming=file_get_contents("php://input");parse_str($incoming,$data);echo"New user email: ".filter_var($data["email"],FILTER_VALIDATE_EMAIL);}else{echo"The request did not use a PUT method";}

This example inspects the $_SERVER superglobal to see which verb was used, and then responds accordingly. The data coming into this example is form style, meaning it uses file_get_contents() to grab all the body data, then parse_str() to decode it.

Be careful with parse_str()—if the second argument is omitted, the variables will be extracted as local variables, rather than contained in an array.

In order to use PHP to make a request that the previous script can handle, it is necessary to create the contents of the body of the request and specify that it is a PUT request. Below is an example using the Guzzle library:

<?phprequire"vendor/autoload.php";$url="http://localhost/book/put-form-page.php";$data=["email"=>"lorna@example.com","display_name"=>"LornaJane"];$client=new\GuzzleHttp\Client();$result=$client->put($url,["headers"=>["Content-Type"=>"application/x-www-form-urlencoded"],"body"=>http_build_query($data)]);echo$result->getBody();

The PUT verb is specified in this example, and the correct header for the form-encoded data is set. We dictate the data to PUT (manually building the form elements into a string) and then send the request. We will discuss more about other data formats in Chapter 5 and Chapter 6, which cover JSON and XML specifically, but the basic principles of preparing the data and setting the Content-Type header accordingly still stand.

Armed with this knowledge of how to handle GET, POST, DELETE, and PUT verbs, we are able to work with many different kinds of API acting as both a client and as a server. When using other verbs, either those that already exist as part of the HTTP spec or those that are custom to your application, you can use the approaches described here for PUT and DELETE.

So far, we’ve seen various presentations of the HTTP format, and examined the idea that there is a lot more information being transferred in web requests and responses than what appears in the body of the response. The body is certainly the most important bit, and often is the meatiest, but the headers provide key pieces of information for both requests and responses, which allow the client and the server to communicate effectively. If you think of the body of the request as a birthday card with a check inside it, then the headers are the address, postmark, and perhaps the “do not open until…” instruction on the outside (see Figure 3-1).

This additional information gets the body data to where it needs to go and instructs the target on what to do with it when it gets there.

Many of the headers you see in HTTP make sense in both requests and responses. Others might be specific to either a request or a response. Here’s a sample set of real request and response headers from when I request my own site from a browser (I’m using Chrome).

Request headers:

GET/HTTP/1.1Host:www.lornajane.netConnection:keep-aliveAccept:text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8User-Agent:Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/43.0.2357.81 Safari/537.36Accept-Encoding:gzip, deflate, sdchAccept-Language:en-GB,en-US;q=0.8,en;q=0.6Accept-Charset:ISO-8859-1,utf-8;q=0.7,*;q=0.3

HTTP/1.1200OKServer:Apache/2.4.7 (Ubuntu)X-Powered-By:PHP/5.5.9-1ubuntu4.6X-Pingback:http://www.lornajane.net/xmlrpc.phpExpires:Wed, 11 Jan 1984 05:00:00 GMTCache-Control:no-cache, must-revalidate, max-age=0Vary:Accept-EncodingContent-Encoding:gzipContent-Type:text/html; charset=UTF-8Content-Length:7897Accept-Ranges:bytesDate:Sat, 11 Jul 2015 08:22:57 GMTX-Varnish:600223060Age:0Via:1.1 varnishConnection:keep-alive

Headers can be related to the request, the response, or the “entity,” which is the body of either a request or a response. Some examples might be:

| Request Headers | User-Agent, Accept, Authorization, and Cookie |

|---|---|

| Response Headers | Set-Cookie |

| Entity Headers | Content-Type and Content-Length |

This chapter looks in more detail at the headers you are likely to see when working with web services.

The User-Agent header gives information about the client making the HTTP request and usually includes information about the software client. Take a look at the header here:

User-Agent Mozilla/5.0 (Linux; U; Android 2.3.4; en-gb; SonyEricssonSK17i Build/4.0.2.A.0.62) AppleWebKit/533.1 (KHTML, like Gecko) Version/4.0 Mobile Safari/533.1

What device do you think made this request? You would probably guess that it was my Sony Ericsson Android phone…and perhaps you would be right. Or perhaps I used a curl command:

curl -H "User-Agent: Mozilla/5.0 (Linux; U; Android 2.3.4; en-gb; SonyEricssonSK17i Build/4.0.2.A.0.62) AppleWebKit/533.1 (KHTML, like Gecko) Version/4.0 Mobile Safari/533.1" http://requestb.in/example

We simply have no way of knowing, when a request is received with a User-Agent like this, if it really came from an Android phone, or if it came from something else pretending to be an Android phone. This information can be used to customize the response we send—after all, if someone wants to pretend to be a tiny Android phone, then it is reasonable to respond with the content that would normally be sent to this phone. It does mean, however, that the User-Agent header cannot be relied upon for anything more important, such as setting a custom header and using it as a means of authenticating users. Just like any other incoming data, it is wide open to abuse and must be treated with suspicion.

In PHP, it is possible both to parse and to send the User-Agent header, as suits the task at hand. Here’s an example of sending the header using streams:

<?php$url='http://localhost/book/user-agent.php';$options=array("http"=>array("header"=>"User-Agent: Advanced HTTP Magic Client"));$page=file_get_contents($url,false,stream_context_create($options));echo$page;

We can set any arbitrary headers we desire when making requests, all using the same approach. Similarly, headers can be retrieved using PHP by implementing the same approach throughout. The data of interest here can all be found in $_SERVER, and in this case it is possible to inspect $_SERVER["HTTP_USER_AGENT"] to see what the User-Agent header was set to.

To illustrate, here’s a simple script:

<?phpecho"This request made by: ".filter_var($_SERVER['HTTP_USER_AGENT'],FILTER_SANITIZE_STRING);

It’s common when developing content for the mobile web to use headers such as User-Agent in combination with WURFL to detect what capabilities the consuming device has, and adapt the content accordingly. With APIs, however, it is better to expect the clients to use other headers so they can take responsibility for requesting the correct content types, rather than allowing the decision to be made centrally.

Commonly, the Content-Type header is used to describe what format the data being delivered in the body of a request or a response is in; this allows the target to understand how to decode this content. Its sister header, Accept, allows the client to indicate what kind of content is acceptable, which is another way of allowing the client to specify what kind of content it actually knows how to handle. As seen in the earlier example showing headers, here’s the Accept header Google Chrome usually sends:

Accept:text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8

To read an Accept header, consider each of the comma-separated values as an individual entity. This client has stated a preference for (in order):

text/html

application/xhtml+xml

image/webp

application/xml

*/*

This means that if any of these formats are supplied, the client will understand our meaning. There are two entries in the list that include some additional information: the q value. This is an indication of how much a particular option is preferred, where the default value is q=1.

Here, Chrome claims to be able to handle a content type of */*. The asterisks are wildcards, meaning it thinks it can handle any format that could possibly exist—which seems unlikely. If an imaginary format is implemented that both our client and server understand, for example, Chrome won’t know how to parse it, so */* is misleading.

Using the Accept and Content-Type headers together to describe what can be understood by the client, and what was actually sent, is called content negotiation. Using the headers to negotiate the usable formats means that meta-information is not tangled up with actual data as it would be when sending both kinds of parameters with the body or URL of the request. Including the headers is generally a better approach.

We can negotiate more than just content, too. The earlier example contained these lines:

Accept-Encoding: gzip, deflate, sdch Accept-Language: en-GB,en-US;q=0.8,en;q=0.6 Accept-Charset: ISO-8859-1,utf-8;q=0.7,*;q=0.3

These headers show other kinds of negotiation, such as declaring what encoding the client supports, which languages are preferred, and which character sets can be used. This enables decisions to be made about how to format the response in various ways, and how to determine which formats are appropriate for the consuming device.

Let’s start by looking at how to parse an Accept header correctly. All Accept headers have a comma-separated list of values, and some include a q value that indicates their level of preference. If the q value isn’t included for an entry, it can be assumed that q=1 for that entry. Using the Accept header from my browser again, I can parse it by taking all the segments, working out their preferences, and then sorting them appropriately. Here’s an example function that returns an array of supported formats in order of preference:

<?phpfunctionparseAcceptHeader(){$hdr=$_SERVER['HTTP_ACCEPT'];$accept=array();foreach(preg_split('/\s*,\s*/',$hdr)as$i=>$term){$o=new\stdclass;$o->pos=$i;if(preg_match(",^(\S+)\s*;\s*(?:q|level)=([0-9\.]+),i",$term,$M)){$o->type=$M[1];$o->q=(double)$M[2];}else{$o->type=$term;$o->q=1;}$accept[]=$o;}usort($accept,function($a,$b){/* first tier: highest q factor wins */$diff=$b->q-$a->q;if($diff>0){$diff=1;}elseif($diff<0){$diff=-1;}else{/* tie-breaker: first listed item wins */$diff=$a->pos-$b->pos;}return$diff;});$accept_data=array();foreach($acceptas$a){$accept_data[$a->type]=$a->type;}return$accept_data;}

The headers sent by your browser may differ slightly and result in different output when you try the previous code snippet.

When using the Accept header sent by my browser, I see the following output:

Array([text/html]=>text/html[application/xhtml+xml]=>application/xhtml+xml[image/webp]=>image/webp[application/xml]=>application/xml[*/*]=>*/*)

We can use this information to work out which format it would be best to send the data back in. For example, here’s a simple script that calls the parseAcceptHeader() function, then works through the formats to determine which it can support, and sends that information:

<?phprequire"accept.php";$data=["greeting"=>"hello","name"=>"Lorna"];$accepted_formats=parseAcceptHeader();$supported_formats=["application/json","text/html"];foreach($accepted_formatsas$format){if(in_array($format,$supported_formats)){// yay, use this formatbreak;}}switch($format){case"application/json":header("Content-Type: application/json");$output=json_encode($data);break;case"text/html":default:$output="<p>".implode(', ',$data)."</p>";break;}echo$output;

There are many, many ways to parse the Accept header (and the same techniques apply to the Accept-Language, Accept-Encoding, and Accept-Charset headers), but it is vital to do so correctly. The importance of Accept header parsing can be seen in Chris Shiflett’s blog post, The Accept Header; the parseAcceptHeader() example shown previously came mostly from the comments on this post. You might use this approach, an existing library such as the PHP mimeparse port, a solution you build yourself, or one offered by your framework. Whichever you choose, make sure that it parses these headers correctly, rather than using a string match or something similar.

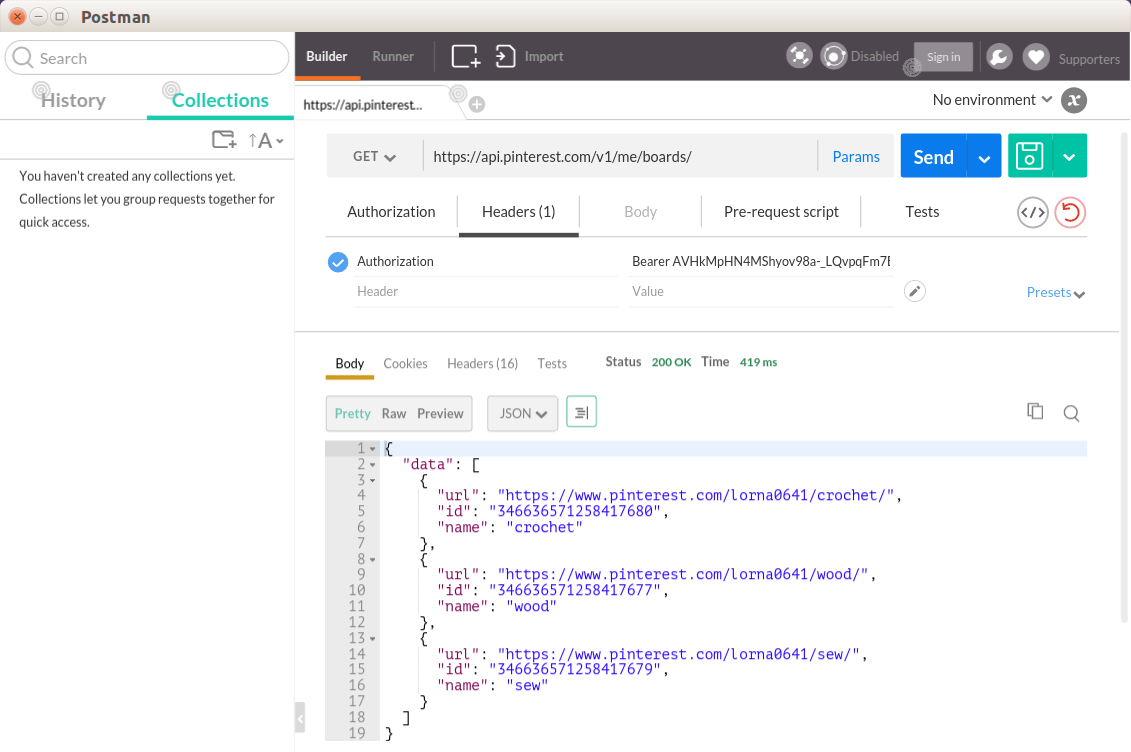

Using cURL from the command line, here are some examples of how to call exactly the same URL by setting different Accept headers and seeing different responses:

curl http://localhost/book/hello.php hello, Lorna

curl -H "Accept: application/json" http://localhost/book/hello.php

{"greeting":"hello","name":"Lorna"}

curl -H "Accept: text/html;q=0.5,application/json"

http://localhost/book/hello.php

{"greeting":"hello","name":"Lorna"}

To make these requests from PHP rather than from cURL, it is possible to simply set the desired headers as the request is made. Here’s an example that uses PHP’s cURL extension to make the same request as the previous example:

<?php$url="http://localhost/book/hello.php";$ch=curl_init($url);curl_setopt($ch,CURLOPT_HEADER,array("Accept: text/html;q=0.5,application/json",));curl_setopt($ch,CURLOPT_RETURNTRANSFER,true);$response=curl_exec($ch);echo$response;curl_close($ch);

The number of headers you need to support in your application will vary. It is common and recommended to offer various content types such as JSON, XML, or even plain text. The selection of supported encodings, languages, and character sets will depend entirely on your application and users’ needs. If you do introduce support for variable content types, however, this is the best way to do it.

Headers can provide information that allows an application to identify users. Again, keeping this type of information separate from the application data makes things simpler and, often, more secure. The key thing to remember when working on user security for APIs is that everything you already know about how to secure a website applies to web services. A common header that has been seen earlier in this book is the Authorization header. This can be used with a variety of different techniques for authenticating users, all of which will be familiar to web developers.

Rather than the Authorization header, some applications may have alternative approaches including using cookies and sessions to record a user’s information after he has supplied credentials to a login endpoint, for example. Others will implement solutions of their own making, and many of these will use a simple API key approach. In this approach, the user acquires a key, often via a web interface or other means, that she can use when accessing the API. A major advantage of this approach is that the keys can be deleted by either party, or can expire, removing the likelihood that they can be used with malicious intent. This is nicer than passing actual user credentials, as the details used can be changed. Sometimes API keys will be passed simply as a query parameter, but the Authorization header would also be an appropriate place for such information.

The simplest approach to authorization is HTTP Basic authentication (for more details, see the RFC), which requires the user to supply a username and password to identify himself. Since this approach is so widespread, it is well supported in most platforms, both client and server. Do beware, though, that these credentials can easily be inspected and reused maliciously, so this approach is appropriate only on trusted networks or over SSL.

When the user tries to access a protected resource using basic authentication, he will receive a 401 status code in response, which includes a WWW-Authenticate header with the value Basic followed by a realm for which to authenticate. As users, we see an unstyled pop up for username and password in our browser; this is basic authentication. When we supply the credentials, the client will combine them in the format username:password and Base64 encode the result before including it in the Authorization header of the request it makes.

The mechanism of the basic authentication is this:

Arrange the username and password into the format username:password.

Base64 encode the result.

Send it in the header, like this: Authorization: Basic base64-encoded string.

Since tokens are sent in plain text, HTTPS should be used throughout.

We can either follow the steps here and manually create the correct header to send, or we can use the built-in features of our toolchain. Here’s PHP’s cURL extension making a request to a page protected by basic authentication:

<?php$url="http://localhost/book/basic-auth.php";$ch=curl_init($url);curl_setopt($ch,CURLOPT_HTTPAUTH,CURLAUTH_BASIC);curl_setopt($ch,CURLOPT_USERPWD,"user:pass");curl_setopt($ch,CURLOPT_RETURNTRANSFER,true);$response=curl_exec($ch);echo$response;curl_close($ch);

In PHP, these details can be found on the $_SERVER superglobal. When basic authentication is in use, the username and password supplied by the user can be found in $_SERVER["PHP_AUTH_USER"] and $_SERVER["PHP_AUTH_PW"], respectively. When a request is made without credentials, or with invalid credentials, a 401 Unauthorized status code can be sent to tell the client why the server is not sending him the content requested.

Similar to basic authentication, but rather more secure, is HTTP Digest authentication (the Wikipedia page includes a great explanation with examples). This process combines the username and password with the realm, a client nonce (a nonce is a cryptographic term meaning “number used once”), a server nonce, and other information, and hashes them before sending. It may sound complicated to implement, but this standard is well understood and widely implemented by both clients and servers.

Very little changes when working with digest authentication when compared to the example of basic authentication just shown; the main things to look out for are:

The CURLOPT_HTTPAUTH option should be set to CURLAUTH_DIGEST.

On the receiving end, you can find the user data in $_SERVER[PHP_AUTH_DIGEST], which will need decoding according to the type of digest authentication you are using.

Digest authentication is preferred over basic authentication unless the connection is over SSL. If you want to work with digest auth then there’s a good resource on Sitepoint.

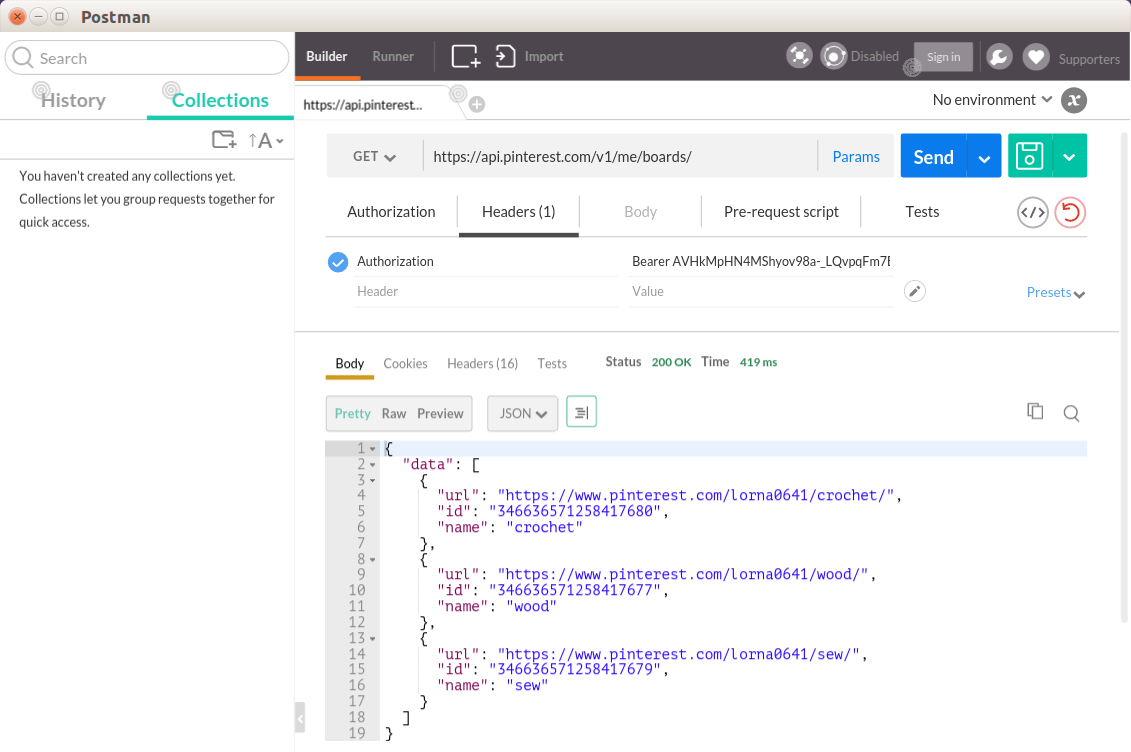

An even better solution has emerged in the last few years: OAuth (version 2 is much better than version 1). OAuth arises as a solution to a very specific and common problem: how do we allow a third party (such as an external application on a mobile device) to have secure access to a user’s data? This is solved by establishing a three-way relationship, so that requests coming to the providing API from the third-party consumer have access to the user’s data, but do not impersonate that user. For every combination of application and user, the external application will send the user to the providing API to confirm that she wants access to be granted. Once the relationship is established, the user can, at any time, visit the providing API (with which she originally had the relationship of trust) to revoke that access. Newer versions of OAuth are simple to implement but, again, should always be used over SSL.

In OAuth terminology, we name the client the “consumer” and the server the “provider.” The consumer could be a app on your smartphone for example, and the provider would then be the system where you already have an account such as GitHub. Features such as “Sign in with GitHub” use this approach.

The basic process looks something like this:

The user chooses to sign in with GitHub, or link their GitHub account to a third-party client.

The client forwards the user to the provider’s page to sign in and give permission for this client to access the user’s data.

The user does sign in and confirm, and arrives back in the app.

The client can then get an access token from the provider.

Once we have the access token, we send this in the Authorization header for every request, something like:

Authorization: Bearer 852990de317

This approach is elegant in two ways:

The identity information is not sent as part of the body of the request. By sending this information in the header, we separate the two concerns.

By using an access token rather than the user’s actual credentials, we give the ability for that access token to expire or be revoked in the future. This allows users to safely grant access to even unknown applications and know that they can always remove that access in the future, even if that application doesn’t offer the option to remove creds (or if the user doesn’t trust it to), without needing to change the user’s credentials and therefore break all of the integrations that use this account.

This solution is very widely used in APIs and is recommended if you need to authenticate users in your own applications.

Hopefully this serves to cover the overall concept of OAuth and how to use an access token in your own application. For a more complete explanation, the book Getting Started with OAuth 2.0 (O’Reilly) is an excellent reference.

Just like for other web requests, getting caching right can help enormously when an API server needs to handle a lot of traffic. Requests that perform actions cannot be cached, as they must be processed by the server each time, but GET requests certainly can be, in the right situation. Caching can either be done by the server, which makes a decision about whether to serve a previous version of a resource, or by clients storing the result of previous requests and allowing us to compare versions.

Giving version information along with a resource is a key ingredient in client-side caching, and also links with the nonatomic update procedures in REST as we mention in “Update a Resource with PUT”. When returning a resource, either an ETag (usually a hash of the representation itself) or a Last-Modified (the date this record last changed) is included with the response. Clients that understand these systems can then store these responses locally, and when making the same request again at a later point, they can tell us which version of a resource they already have. This is very similar to the way that web browsers cache assets such as stylesheets and images.

When a resource is served with an ETag header, this header will contain some textual representation of the resource, perhaps a hash of the resource or a combination of file size and timestamp. When requesting the resource at a later date, the client can send an If-None-Match header with the value of the ETag in it. If the current version of the resource has a nonmatching ETag, then the new resource will be returned with its ETag header. However if the ETag values do match, the server can simply respond with a 304 “Not Modified” status code and an empty body, indicating to the client that it can use the version it already has without transferring the new version. This can help reduce server load and network bandwidth.

In exactly the same way, a resource that is sent with a Last-Modified header can be stored with that header information by the client. A subsequent request would then have an If-Modified-Since header, with the current Last-Modified value in it. The server compares the timestamp it receives with the last update to the resource, and again either serves the resource with new metadata, or with the much smaller 304 response.

As with almost every aspect of HTTP, the headers that can be used aren’t set in stone. It is possible to invent new headers if there’s more information to convey for which there isn’t a header. Headers that aren’t “official” can always be used (sometimes they are prefixed with X- but they don’t have to be), so you can make use of this in your own applications if you wish.

A good example, often seen on the Web, is when a tool such as Varnish has been involved in serving a response, and it adds its own headers. I have Varnish installed in front of my own site, and when I request it, I see:

HTTP/1.1200OKServer:Apache/2.4.7 (Ubuntu)X-Powered-By:PHP/5.5.9-1ubuntu4.6X-Pingback:http://www.lornajane.net/xmlrpc.phpContent-Type:text/html; charset=UTF-8Date:Sat, 11 Jul 2015 08:57:32 GMTX-Varnish:600227065 600227033Age:43Via:1.1 varnishConnection:keep-alive

That additional X-Varnish header shows me that Varnish served the request. It isn’t an official header, but these X-* headers are used to denote all kinds of things in APIs and on the Web. A great example comes from GitHub. Here’s what happens when I make a request to fetch a list of the repositories associated with my user account:

HTTP/1.1200OKServer:GitHub.comDate:Sat, 11 Jul 2015 08:59:01 GMTContent-Type:application/json; charset=utf-8Content-Length:157631Status:200 OKX-RateLimit-Limit:60X-RateLimit-Remaining:59ETag:"8976d7fc7aa861a8581108e59ae76506"X-GitHub-Media-Type:github.v3X-GitHub-Request-Id:5EC19EE1:61C0:10B4CDB:55A0DAD5X-Content-Type-Options:nosniffX-Served-By:13d09b732ebe76f892093130dc088652

There are a few custom headers in this example but the X-RateLimit-* headers are particularly worth noting; they check whether too many requests are being made. Using custom headers like these, any additional data can be transferred between client and server that isn’t part of the body data, which means all parties can stay “on the same page” with the data exchange.

Headers are particularly important when working with APIs as there is often separation between the data and the metadata. Not all APIs are designed that way, but look out for some examples in particular in Chapter 8.

The HTTP protocol is stateless. This means that every request made must include all the information needed in order for the web server to serve the correct responses (at least, in theory!). In practice, that isn’t how we experience the Web as users. As we browse around a shopping site, the website “remembers” which products we already viewed and which we placed in our basket—we experience our journeys on the Web as connected experiences.

So how does this work? Additional information is being saved and sent with our web requests through the use of cookies. Cookies are just key/value pairs: simple variables that can be stored on the client and sent back to us with future requests. A user’s choice of theme or accessibility settings could be stored, or a cookie could be dropped to record something as simple as whether the user has visited the site before, or dismissed a particular alert message that was shown.

In this chapter we’ll look at how cookies work and how they fit into our existing knowledge of HTTP, then discuss how cookies are used in API design (spoiler alert: they’re not).

This isn’t the moment where I tell you how to bake cookies, although the instructions do read a little bit like a recipe. What happens when we work with cookies goes something like this (see Figure 4-1):

A request arrives from the client, without cookies.

Send the response, including cookie(s).

The next request arrives. Since cookies were already sent, they will be sent back to us in these later requests.

Send the next response, also with cookies (either changed or unchanged).

Steps 3–4 are repeated indefinitely.

The main thing to remember is that, for a first visit from a new client (or someone who clears their cookies), there will be no cookies, so it is not possible to rely on them being present. This is easy to miss in testing unless you consciously make the effort to also test the case in which a user arrives without cookies; by default, your browser will keep sending the cookies.

Another thing to note is that cookies are only sent back with subsequent requests by convention; not all clients will do this automatically. Once a cookie is received by a client, even if it isn’t sent again in any later responses, most clients will send that cookie with each and every subsequent request. The most important thing to remember about cookies is that you cannot trust the data. When a cookie is sent to a client, it will be stored in plain text on that computer or device. Users can edit cookies as they please, or add and remove cookies, very easily. This makes incoming cookie data about as trustworthy as data that arrives on the URL with a GET request.

To put that a little more plainly: do not trust cookie data.

How do users edit their data? Well, there are a couple of options. First, let’s look at using cookies with cURL. We can capture cookies into a “cookie jar” by using the -c switch. Take a look at what a well-known site like amazon.com sets for a new visitor:

curl -c cookies.txt http://www.amazon.com/

The cookie jar file that was saved will look something like this:

# Netscape HTTP Cookie File # http://curl.haxx.se/rfc/cookie_spec.html # This file was generated by libcurl! Edit at your own risk. .amazon.com TRUE / FALSE 1355305311 skin noskin .amazon.com TRUE / FALSE 2082787201 session-id-time 2082787201l .amazon.com TRUE / FALSE 2082787201 session-id 000-0000000-0000000

The format here contains the following elements:

Domain the cookie is valid for

Whether it is valid for all machines on this domain (usually TRUE)

Path within the domain that this cookie is valid for

Whether this cookie is only to be sent over a secure connection

When this cookie will expire

Name of the cookie

Value of the cookie

Note the phrase “Edit at your own risk,” which translates to developers as “Edit, and interesting things may happen.” Whether working with a browser or cURL, it is possible to change these values wherever the cookies are stored, and they will be sent back to the server with a later request. With cURL, change the -c switch to a -b switch to send the cookies back with a request (use them both together to also capture incoming ones back into the file).

curl -b cookies.txt http://www.amazon.com/

In the browser, your options will vary depending on which browser you use, but all of the modern browsers have developer tools either built in or available via a plug-in that enables you to see and to change the cookies that are being sent, as was mentioned in “Browser Tools”. I use Chrome so I use the Edit This Cookie plug-in. Most browsers actually use an SQLite database rather than a plain text file to store their cookies; however you can still edit this using standard SQLite tools.

Cookies are key/value pairs, as I’ve mentioned, that are sent to the browser along with some other information, such as which paths the cookie is valid for and when it expires. Since PHP is designed to solve “the Web problem,” it has some great features for working with cookies. To set a cookie, use a function helpfully called setcookie():

<?phpsetcookie("visited",true);

We can use this approach to show a welcome message to a visitor when he first comes to the site—because without any previous cookies, he won’t have the “visited” cookie set. Once he has received one response from this server, his “visited” cookie will be seen on future requests. In PHP, cookies that arrived with a request can be found in the $_COOKIE superglobal variable. It is an array containing the keys and values of the cookies that were sent with the request. Following the preceding example we could read the cookie and the code would look something like this:

<?phpvar_dump($_COOKIE);// 1st request: array(0) { }// later requests: array(1) { ["visited"]=> string(1) "1" }

When working with APIs, the same facilities are available to us. When PHP is a server, the techniques of using setcookie and checking for values in $_COOKIE are all that are needed, exactly like when we are working with a standard web application. When consuming external services in PHP, it is possible to send cookie headers with our requests in the usual way.

There’s some nice support for sending cookies in PHP’s cURL extension, which has a specific flag for setting cookies rather than just adding headers. With PHP’s cURL extension, it is possible to do something like this:

<?php$url="http://requestb.in/example";$ch=curl_init($url);curl_setopt($ch,CURLOPT_COOKIE,"visited=true");curl_setopt($ch,CURLOPT_RETURNTRANSFER,true);$result=curl_exec($ch);curl_close($ch);

A selection of other options can be set using cookies, as seen when we discussed capturing them into the cookie jar in the code examples in “Cookie Mechanics”. The expiry date is probably the most-used setting. The expiry information is used to let the client know how long this cookie is valid for. After this time, the cookie will expire and not be sent with any later requests. This relies on the client and server clocks being vaguely in sync, which is often not the case. Having exactly matching clocks is rare, and in some cases clients can have their clocks set incorrectly by a number of years, so beware.

The expiry can be set in the past to delete a cookie that is no longer needed. If an expiry has not been set for a cookie, it becomes a “session cookie,” which means that it is valid until the user closes the browser. This is why you should always close your browser on any machine or device that is used by others, even after logging out of your accounts.

Don’t confuse the “session cookie” with the cookies PHP uses to track user sessions within a web application.

It’s very unusual to see a cookie used in APIs, and the reason for this is fundamental to APIs: they are stateless, as was already mentioned. Stateless means that they don’t rely on information that is not part of this request; we don’t follow on from information that went before or rely on a particular setting being in a particular state.

A stateless API cannot, by definition, use sessions either. These rely on information already having been exchanged and stored for a particular journey. Many PHP installations turn on sessions by default but this isn’t appropriate for an API.

Having a stateless API also enables it to be “idempotent.” Idempotency is the idea that you can repeat a request and achieve the same outcome each time.