“An accessible, sensible book on the importance of hypermedia APIs that anyone working with APIs should read, whether you are a business stakeholder all the way up to an existing hypermedia skeptic—a must read.”

Kin Lane, The API Evangelist

"RESTful Web Clients is a timely, much needed deep dive on a very important part of the API landscape.”

John Musser, CEO, API Science

“This book does a great job of explaining the basics and benefits of hypermedia and clearly articulates how client apps can make use of them. A must read for both API producers and consumers.”

Lorinda Brandon, API Strategist and Evangelist

“A must read for API practitioners who want to discover a way to design web applications with more resilience, agility, and reusability for both the short and the long term.”

Mehdi Medjaoui, Founder of APIdays conferences/OAuth.io

“A great resource on what to consider and implement when building RESTful web APIs and clients. I highly recommend it for anyone trying to build reusable APIs.”

Jeremy Wilken, Software Architect

“Whether you are a frontend or backend developer, reading this book will help you shift your understanding of designing and consuming APIs into higher gear.”

Mark W. Foster, Enterprise Architect, Distributed Systems

“This book is a must have for anyone building hypermedia based systems. It broaches one of the toughest and least understood topics head on, how to build hypermedia clients.”

Glenn Block, Director of Product Management for Auth0

“Mike’s delightful writing and technical excellence tells an easy-to-understand and relatable story that takes you from an RPC-style rookie to a hypermedia connoisseur in just a few pages. Impressive and highly recommended reading!”

Asbjørn Ulsberg, Business Architect, PayEx

“This book delivers on the piece developers have been missing about hypermedia: Learn to stop worrying and love responding to what the server sends.”

Brian Sletten, President of Bosatsu Consulting, Inc.

"RESTful Web Clients shows how applying hypermedia correctly results in a smaller, generic codebase that can adapt and evolve as the server’s capabilities change.”

Erik Mogensen, Chief Architect, Escenic

“APIs change, clients break. But does it have to be this way? What if they could not only survive, but adapt to change? This book is your guide through this adventure.”

Carles Jove i Buxeda, Full-stack Developer

and OS Contributor

Copyright © 2017 Amundsen.com, Inc. All rights reserved.

Printed in the United States of America.

Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472.

O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (http://www.oreilly.com/safari). For more information, contact our corporate/institutional sales department: 800-998-9938 or corporate@oreilly.com.

See http://oreilly.com/catalog/errata.csp?isbn=9781491921906 for release details.

The O’Reilly logo is a registered trademark of O’Reilly Media, Inc. RESTful Web Clients, the cover image of an Asian badger, and related trade dress are trademarks of O’Reilly Media, Inc.

While the publisher and the author have used good faith efforts to ensure that the information and instructions contained in this work are accurate, the publisher and the author disclaim all responsibility for errors or omissions, including without limitation responsibility for damages resulting from the use of or reliance on this work. Use of the information and instructions contained in this work is at your own risk. If any code samples or other technology this work contains or describes is subject to open source licenses or the intellectual property rights of others, it is your responsibility to ensure that your use thereof complies with such licenses and/or rights.

978-1-491-92190-6

[LSI]

This book is dedicated to all those who have had to endure my repeated promises to write it. My apologies to you all—for all my promises and for the modest results that appear within these pages.

In the foreword to this book’s precursor, RESTful Web APIs (O’Reilly), Sam Ruby says:

Hopefully the pebbles that [RESTful Web APIs] kicks loose will have the same effect as its predecessor [RESTful Web Services] did. Who knows, perhaps in another seven years it will be time to do this all over again, and highlight some other facet of Representational State Transfer that continues to be under-appreciated.

Well, it hasn’t quite been seven years, but this is exactly where RESTful Web Clients comes in. Mike has quite the pedigree in the API space and, with this text, has brought his usual style of clarity, both in writing and in thought, to this oft-ignored part of web APIs.

Roy Fielding’s dissertation, Architectural Styles and the Design of Network-based Software Architectures, is the definitional text of REST. In the very first section, Fielding describes the seven essential architectural constraints that describe REST. The first one is called client–server, and is described like this:

Separation of concerns is the principle behind the client–server constraints. By separating the user interface concerns from the data storage concerns, we improve the portability of the user interface across multiple platforms and improve scalability by simplifying the server components. Perhaps most significant to the Web, however, is that the separation allows the components to evolve independently, thus supporting the Internet-scale requirement of multiple organizational domains.

Clearly, servers and clients are both incredibly important to this way of designing systems. However, there’s been a bias in the works done that build on top of Fielding: almost all of them focus on the servers, and rarely discuss the clients. But doing so leaves out an incredibly important part of the picture: many of the benefits of a RESTful architecture can only be realized with a properly designed client. There are many systems which have a client-server style, but aren’t driven by the same principles as the Web. If we must adapt the way that we code servers to make them more web-like, so must we change the way we code clients.

And indeed, this is a difficult part of moving to a “web way of thinking” when it comes to building APIs. I have had many, many discussions with organizations interested in deploying hypermedia techniques who then struggle when it comes to putting them into practice. I believe that a lot of the difficulty here comes from this focus on the production of hypermedia, without also considering the changes to the way that you consume it. An API designer who expects to build a client for a web API using the same principles as other APIs is going to be frustrated with the results. Yet this is completely understandable, as those of us who advocate this architectural style have often focused almost entirely on production.

In hindsight, this deficiency seems quite obvious: how can we design systems properly if we only focus on half of the equation! I actually believe that this situation is a second-order effect of the way that the majority of people consider APIs today: my organization focuses on how to have an API for my product, but since it’s your organization that consumes it, it’s your job to deal with it. This underlying sentiment has been magnified by the trends of the last five to ten years with APIs: a move toward “simple APIs that don’t require a complex SDK to get useful work done.” An organization that provides an API client is often viewed with skepticism. We’re now starting to see this turned around, as developers grow tired of re-implementing new API clients for every API they wish to use. First-party SDKs are now being viewed in a positive light, as consumers of these APIs can focus more on their application, and worry less about integration.

Furthermore, the Web is increasingly being viewed as an application platform, rather than as a way to easily share text documents. With the rise of proprietary platforms, especially in the mobile space, those who love the free and open nature of the Web are mobilizing to significantly expand the Web platform’s capabilities. To take advantage of this, applications are sprouting more and more JavaScript code as their products grow more ambitious. These applications are yet another kind of client, and one that’s becoming more and more important by the day.

As the gestalt shifts, organizations are in a position to once again consider both sides of the equation. In a broad sense, I think this will lead to even better APIs, but it’s not all roses. As I mentioned before, there are no end to the books you can purchase to help you understand the server side of the equation. But there’s a complete dearth of similar manuals for the client side—until now.

I have been eagerly awaiting this book since Mike first told me he was starting to work on it, and he has not disappointed. It is a fantastic guide to this underserved part of the web API equation, and I am confident that its impact and influence will be noted for years to come. I would tell you that I hope you’ll enjoy reading this book as much as I did, but I don’t need hope—I know you will.

“The beginning is the most important part of the work.”

Plato

Web-based REST and hypermedia services are getting more common every day but there are very few client libraries that take advantage of these powerful API features. This is mostly because the techniques and patterns needed to create successful hypermedia clients have been ignored. Yet, when done right, hypermedia-based client applications exhibit more stability and flexibility than typical one-off custom client code.

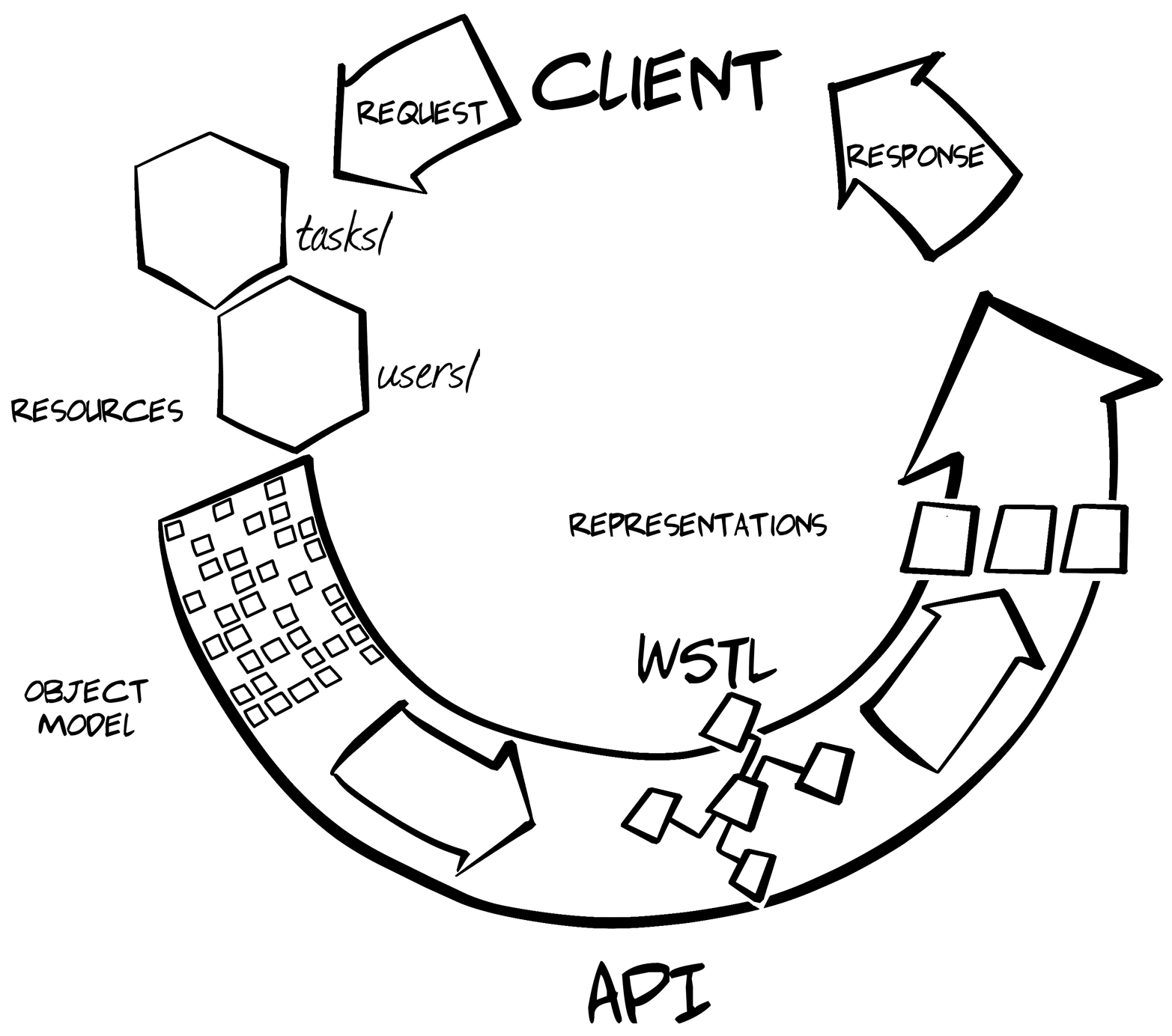

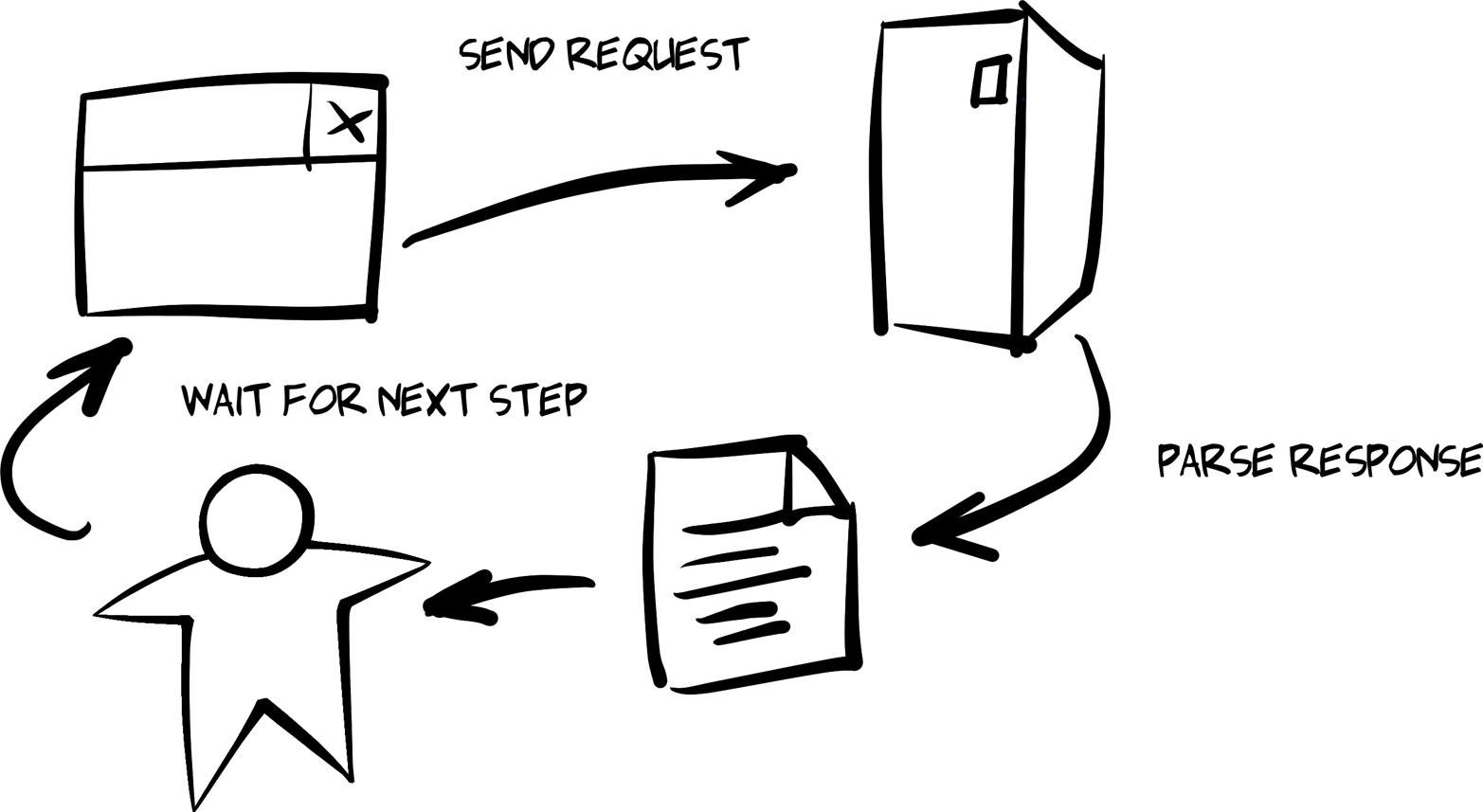

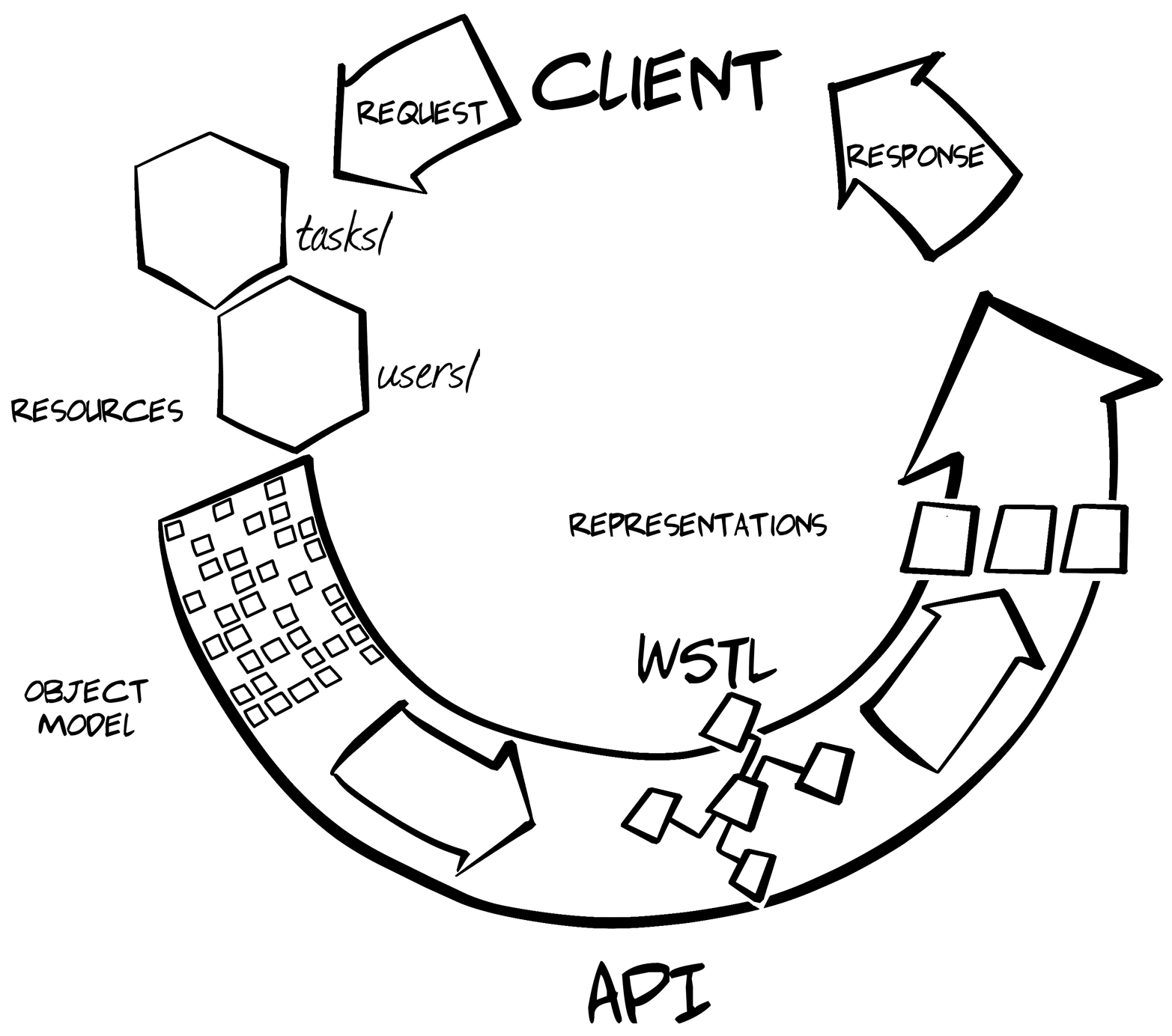

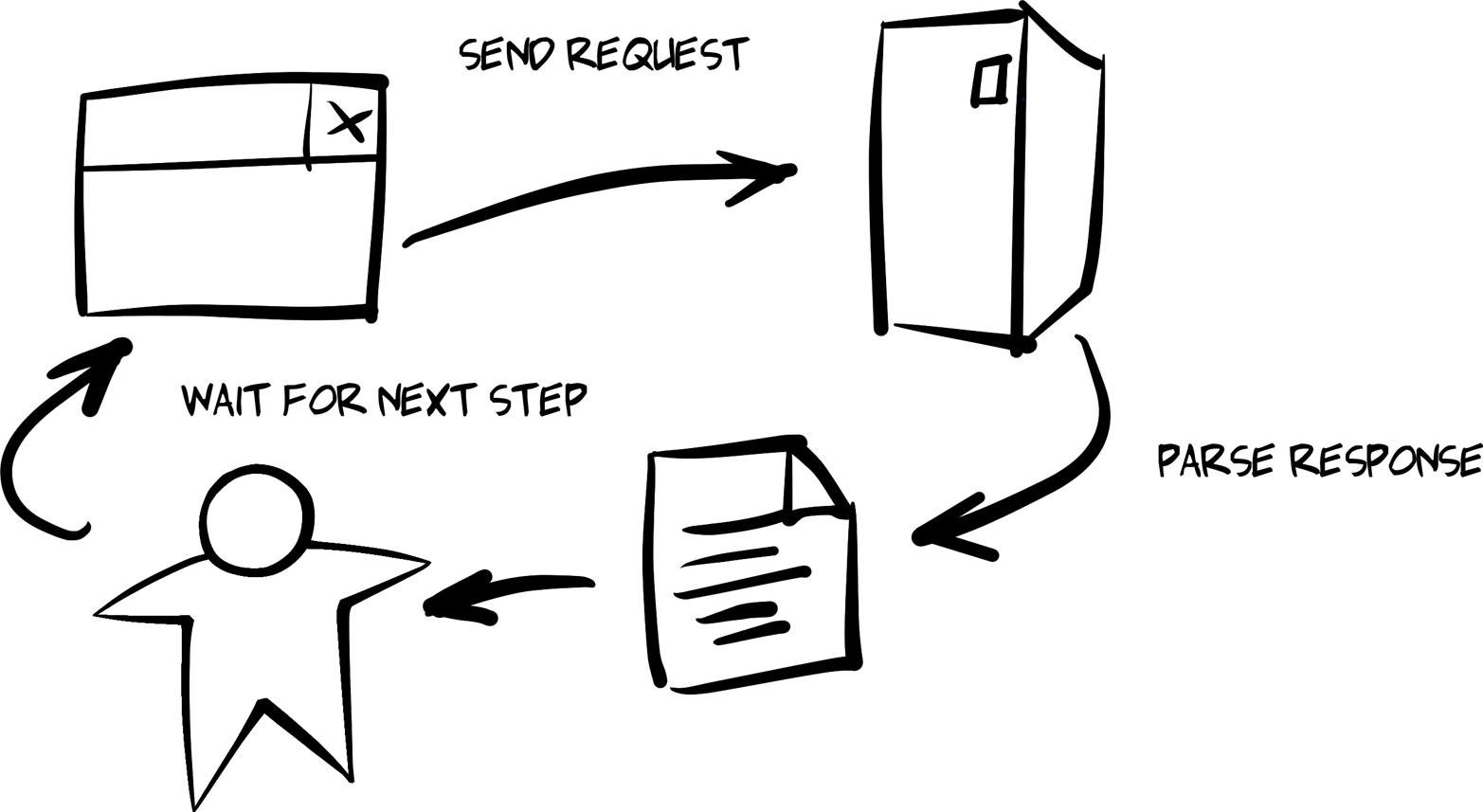

The aim of this book is to give developers both a solid background and a source of working examples that provide clear recommendations for dealing with hypermedia-style APIs. One of the key ideas in this book is that client applications should rely on something I call the Request, Parse, Wait loop or RPW. This is the way all computer games are implemented and it is the way all event-driven interfaces work from windowing-style workstations to reactive machine interfaces.

I’ve been told that some frontend developers might find the RPW model unusual; one person even characterized my recommendations as “radical.” I can understand that viewpoint. So many of the client libraries and practices today focus on designing specially built one-off user interfaces that are difficult to modify and have a hard time reacting to new information provided by services at runtime. However, after reviewing the examples in this book, I hope that frontend developers—most all of whom are much more skilled than I—will be able to build on this initial work and help create a rich collection of best practices, tooling, and reusable libraries for creating high quality user experiences for the growing number of hypermedia APIs, which meet both the desire for high-quality user experience and the need for adaptive clients that can keep up with evolving service interfaces without the need for constant upgrades.

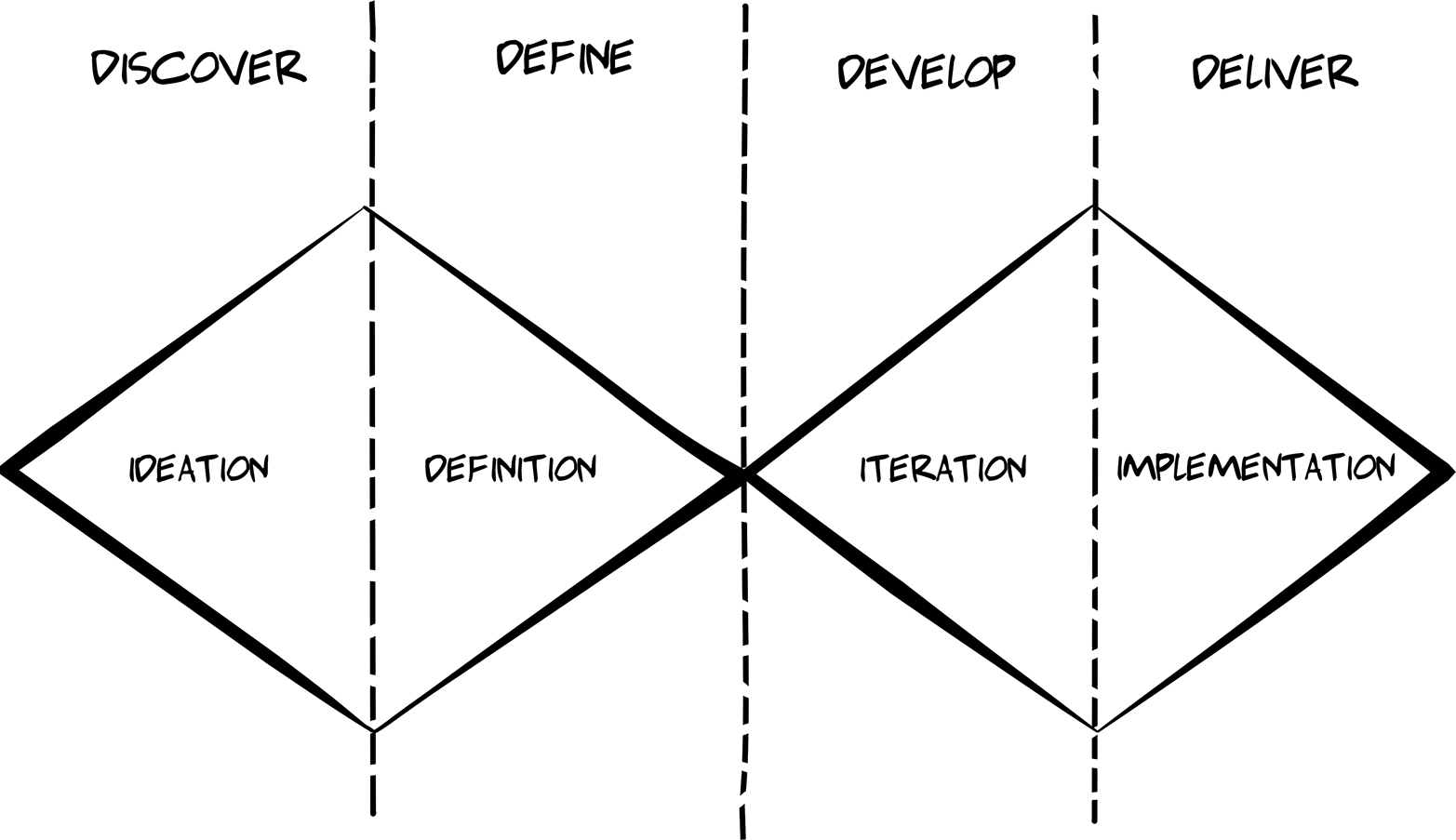

This book takes the reader on a journey from custom bespoke implementations to powerful general-purpose client applications and, along the way, shows how you can harness many of the basic principles that underpin the World Wide Web. The outline is a mix of code-centric chapters and ones that explore important related topics like the representor pattern, human–computer interaction (HCI) modeling, and the challenge of versioning web APIs. Along the way, there is quite a bit of code (over 20 GitHub repos were created for this book). I’ll use small snippets of code within the text of the book, but sometimes those snippets will be hard to understand by themselves. For that reason, I’ll always point the reader to the full code repository online where you’ll find fully functional examples of the ideas covered in the book.

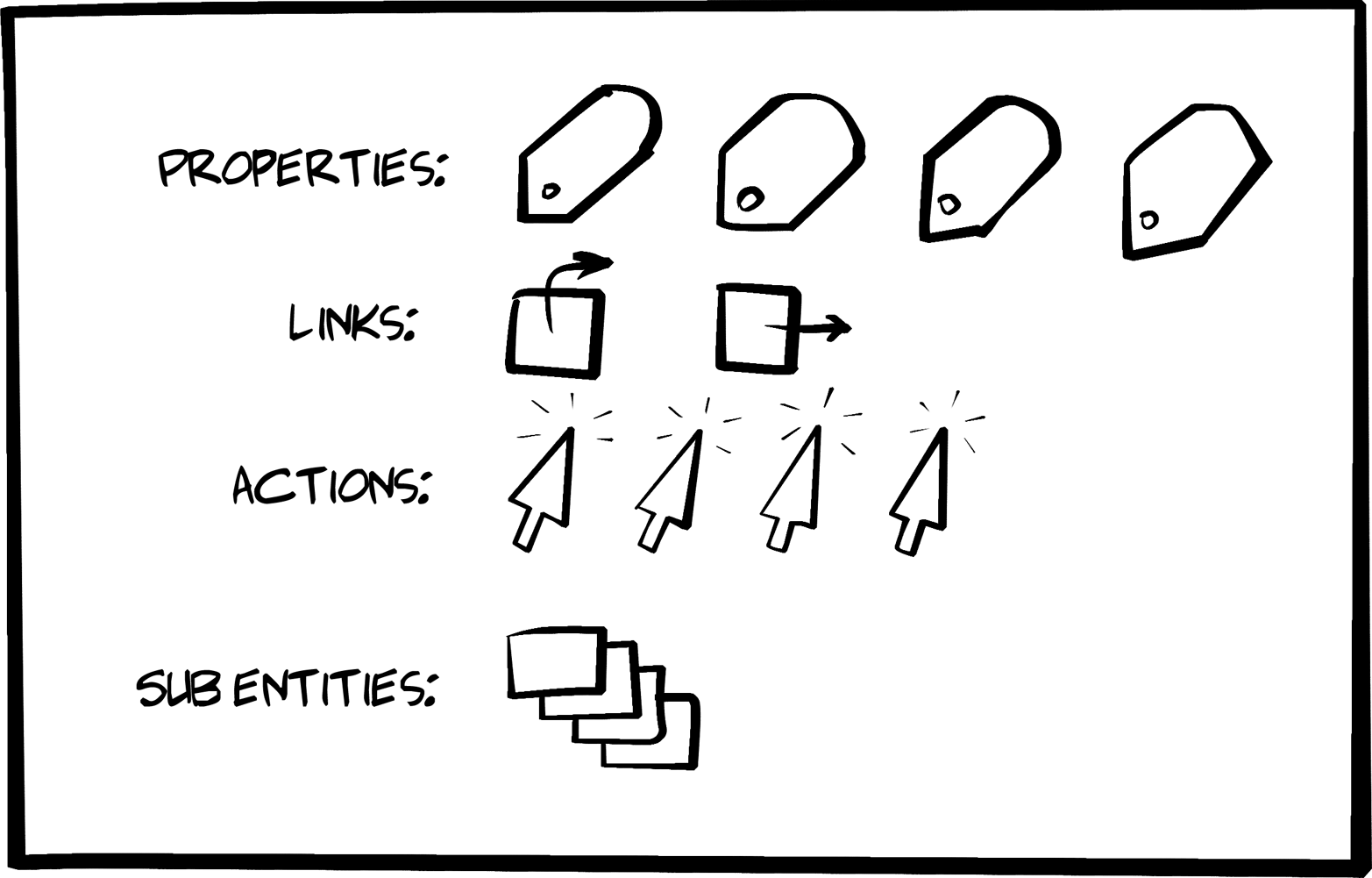

This book explores the world of generic hypermedia-style clients—what they look like, how they differ from typical JSON-object style clients, and how both client and server developers can leverage this style to build systems that are easier to support and adapt over time. The book contains chapters targeting a small set of selected formats (HTML, plain JSON, HAL, Siren, and Collection+JSON) as well as chapters that go into the background on the theories and practices familiar to all web developers, including (1) server-side support for message formats, (2) human–computer interaction modeling, (3) versioning, and (4) implementing a single solution that supports multiple hypermedia formats while interacting with several independent backend services.

Most chapters can stand on their own and could be consumed in any order. But I encourage you to treat the book as a single journey and read it from start to finish in order to get the most out of it. Here is a brief look at how that journey will unfold:

This chapter introduces us to a classic HTML-only client. We’ll use this to quickly review how browsers work and how they’ve affected some people’s view of what’s possible with hypermedia formats on the Web. This chapter also covers the process of converting an HTML-only service output into an initial JSON-only API service. This service will be the basis for all the other client apps we build for this book.

Most client-side web developers have learned to build “JSON clients.” They memorize URLs, consume static objects, and navigate over fixed workflow paths. This is a great way to start but turns out to be a terrible way to work over time. In this chapter we’ll explore ways to overcome the challenges of maintaining plain JSON-style client apps.

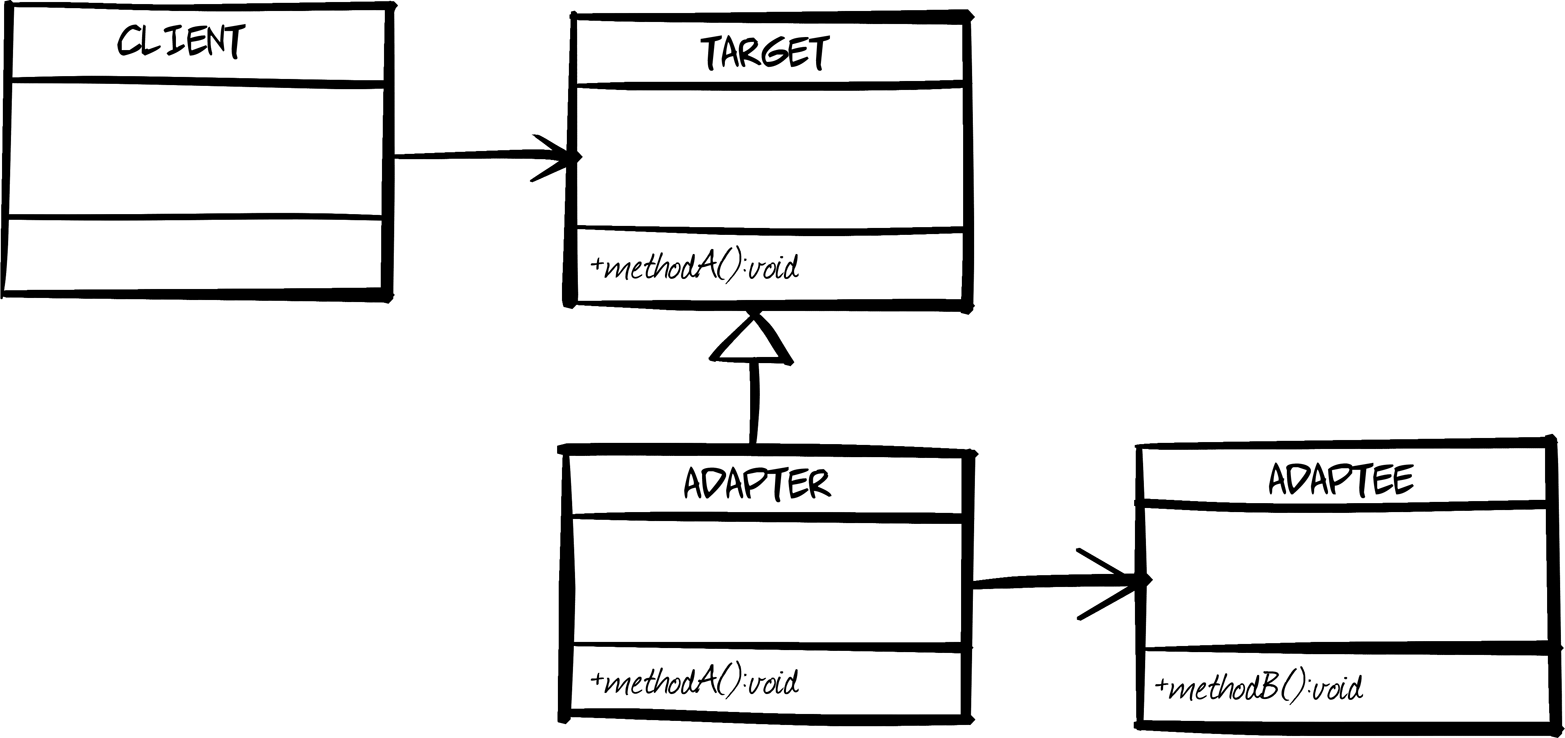

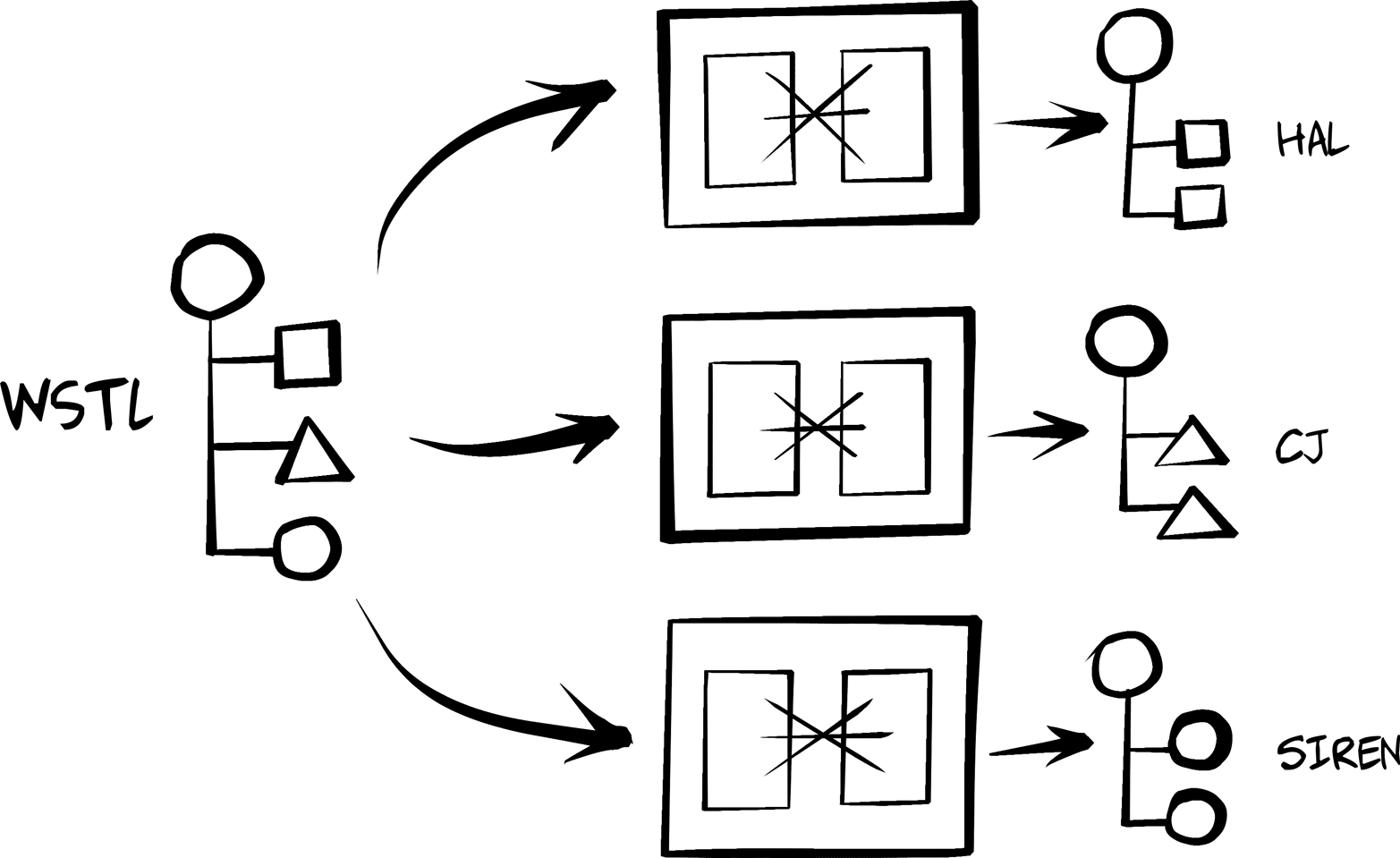

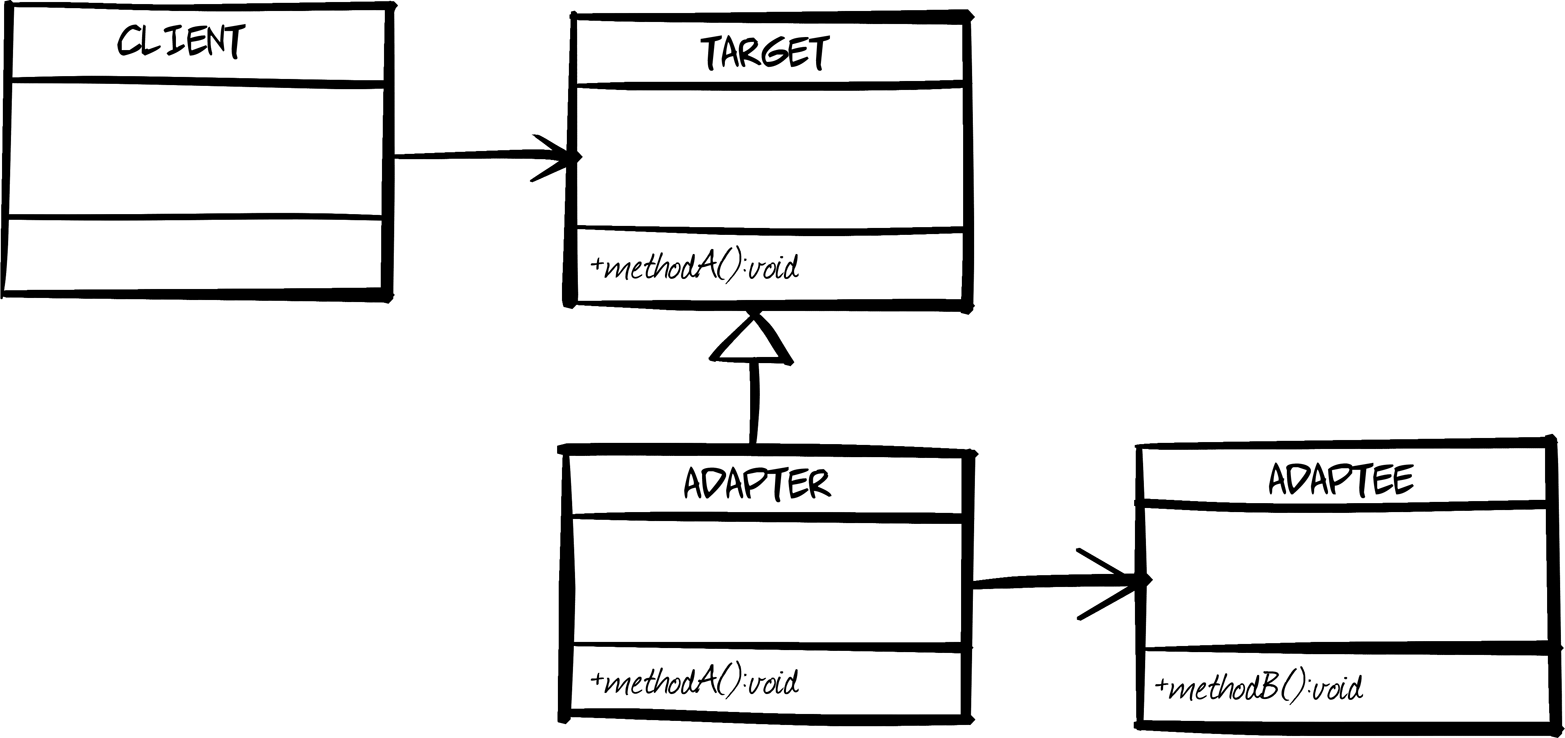

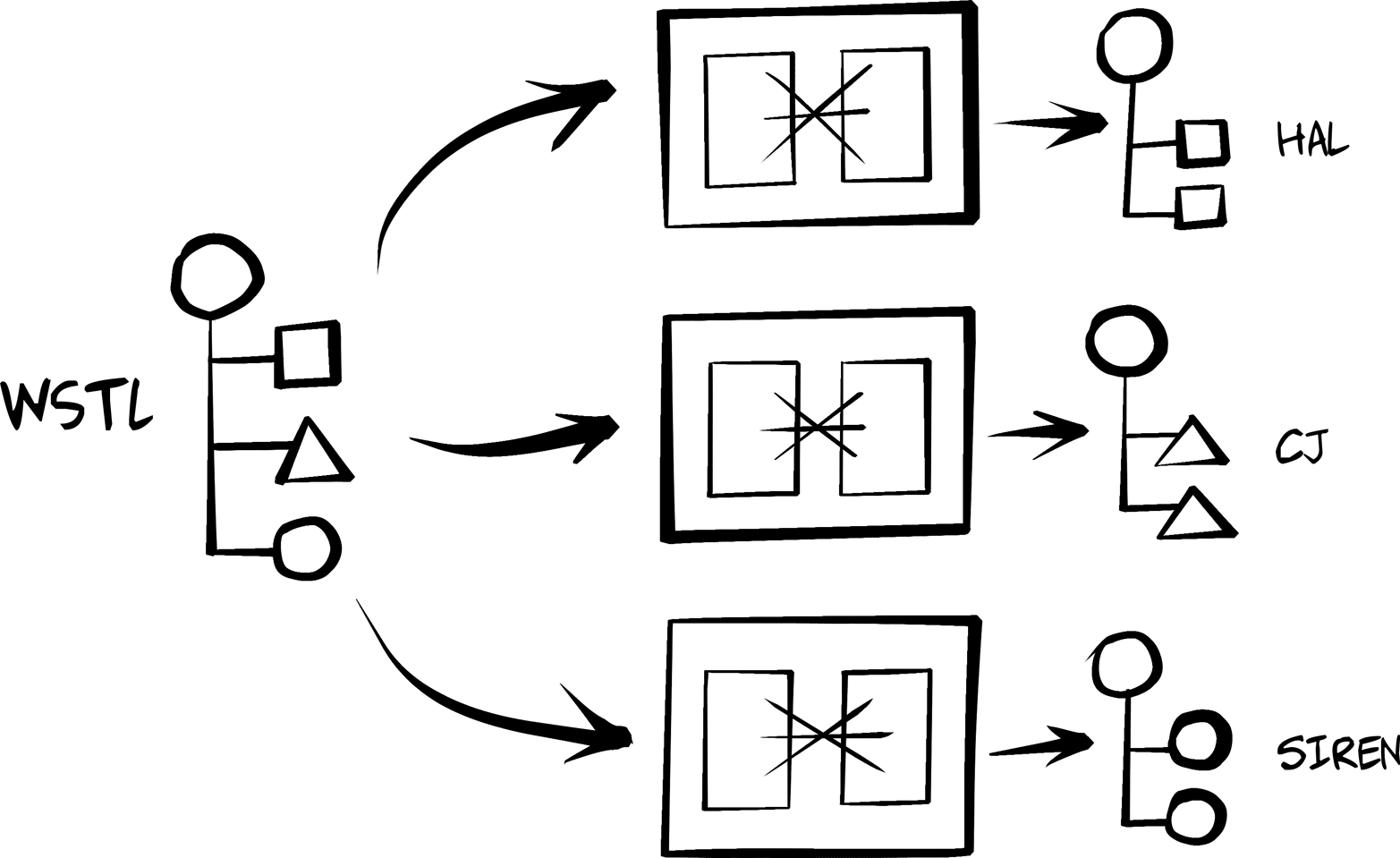

The representor pattern is a simple—and vital—way to deal with web API server output. It is the process of converting internal object models into external message models. We’ll review the pattern (including its roots) and cover how we’ll apply it for providers using the Web Service Transition Language (WeSTL) on the server and the HTML DOM on browser clients.

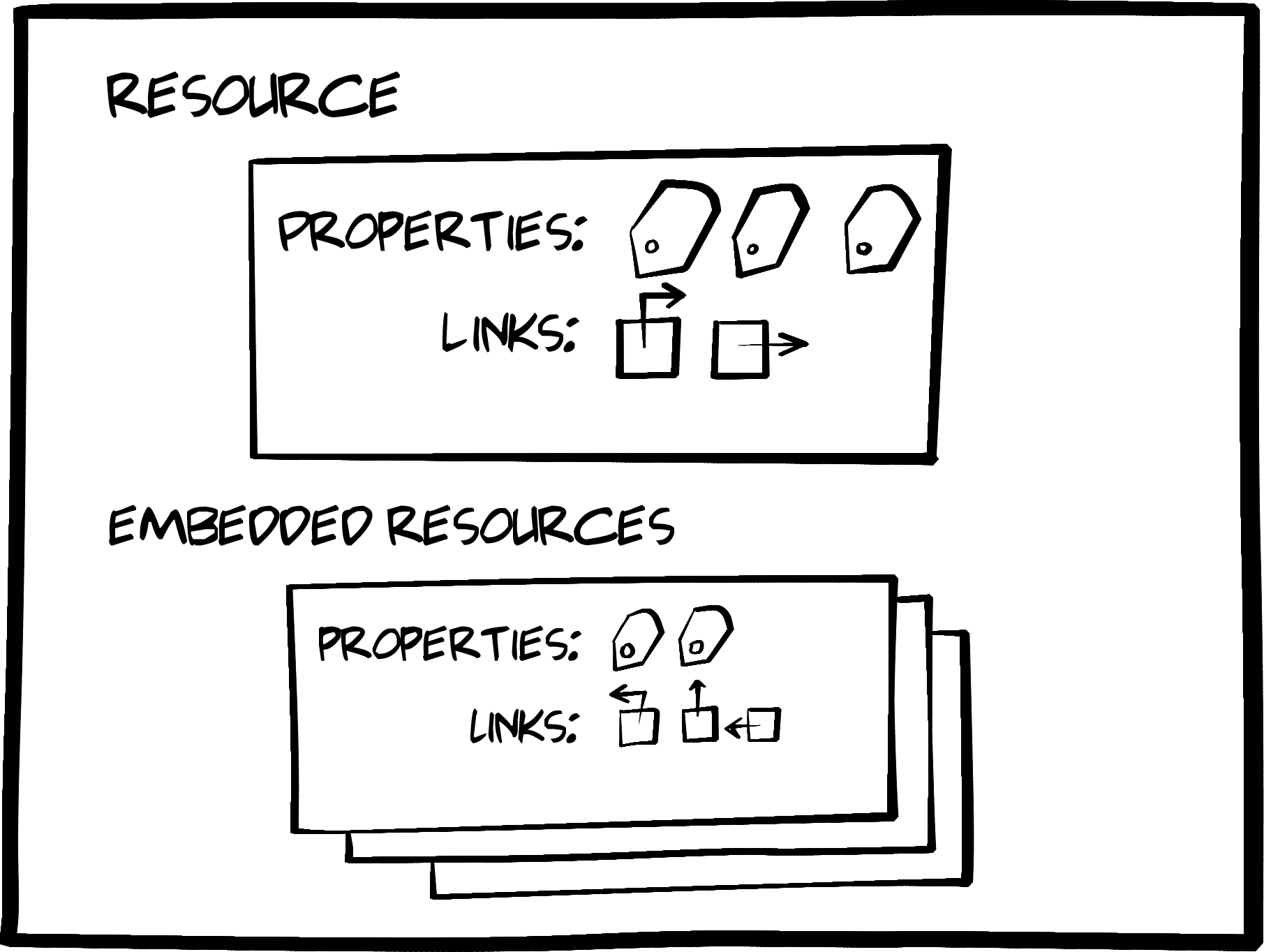

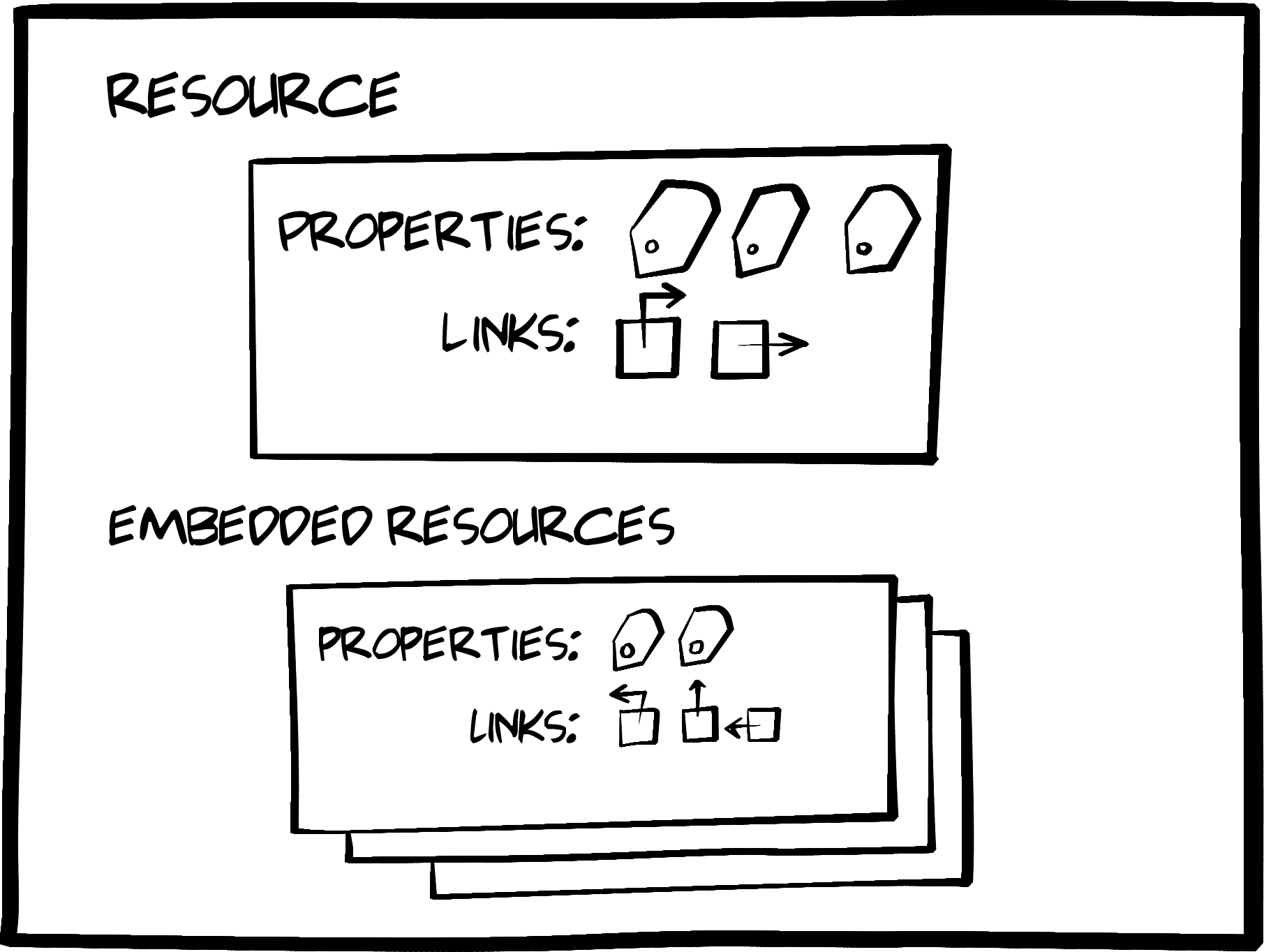

The HAL media type is currently one of the more popular hypermedia formats. It is used, for example, by the Amazon Web Services team for at least two of their APIs. HAL handles one of the three important elements all web clients deal with: Addresses. We’ll see what it’s like to build a generic client using HAL as the message format and introduce the HAL-FORMS extension for improving its action-handling capabilities.

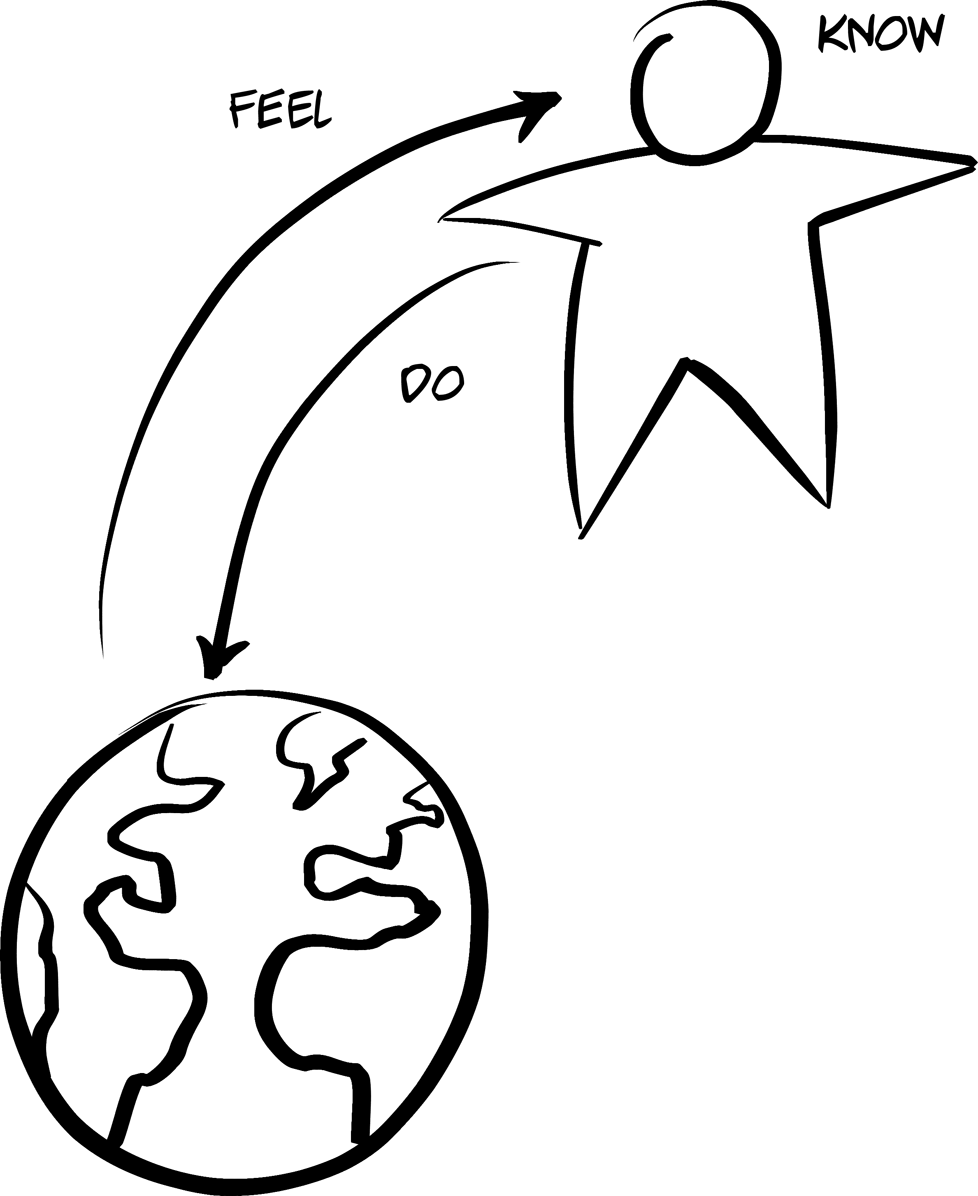

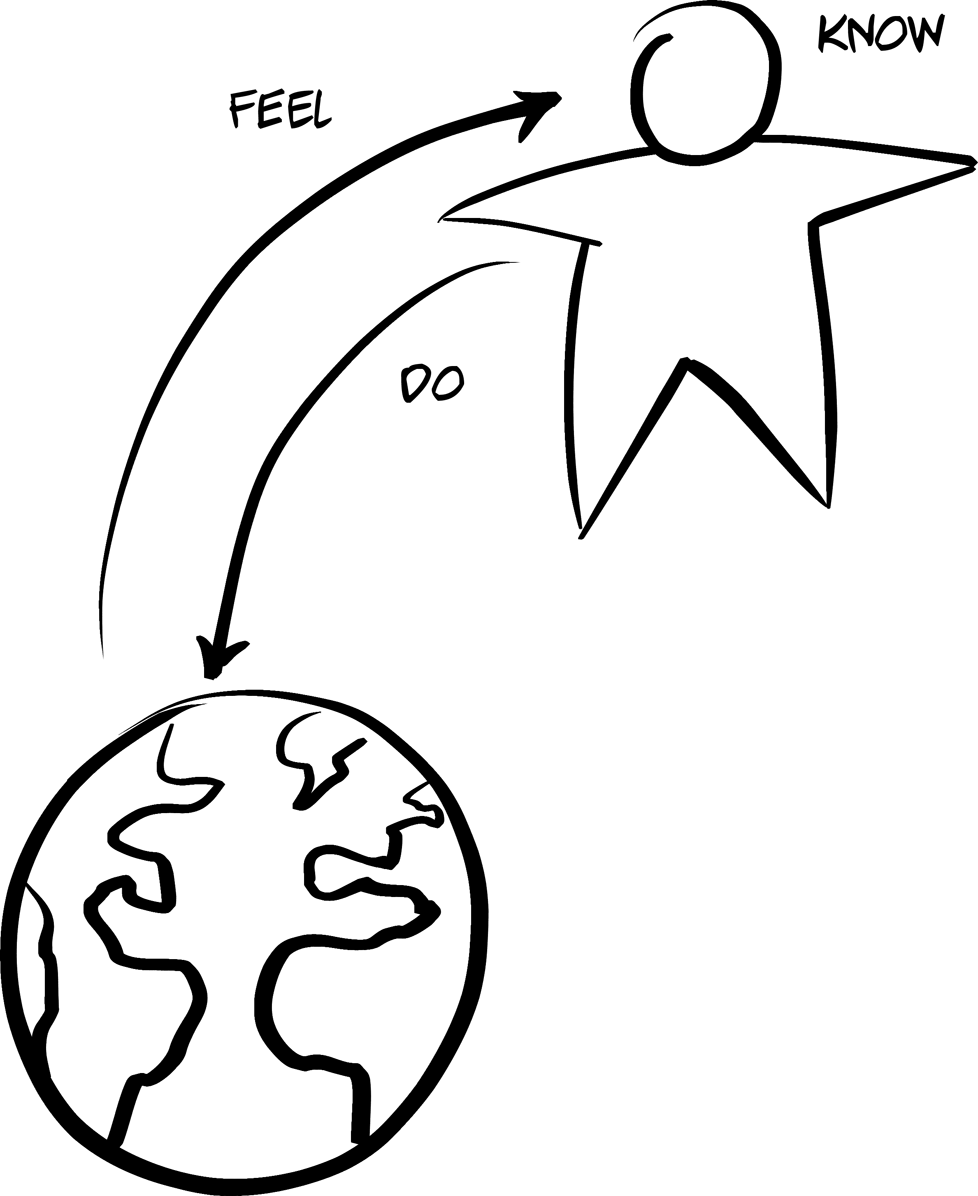

You’ll notice that most of the client applications we’ll build here look similar. Essentially, we’re building “explorers” that can—in some limited way—fend for themselves as they explore the world around them. These clients follow a pattern called the Request, Parse, Wait loop, or RPW. This pattern is based on several classic notions of how we interact with our world, and we’ll explore them here.

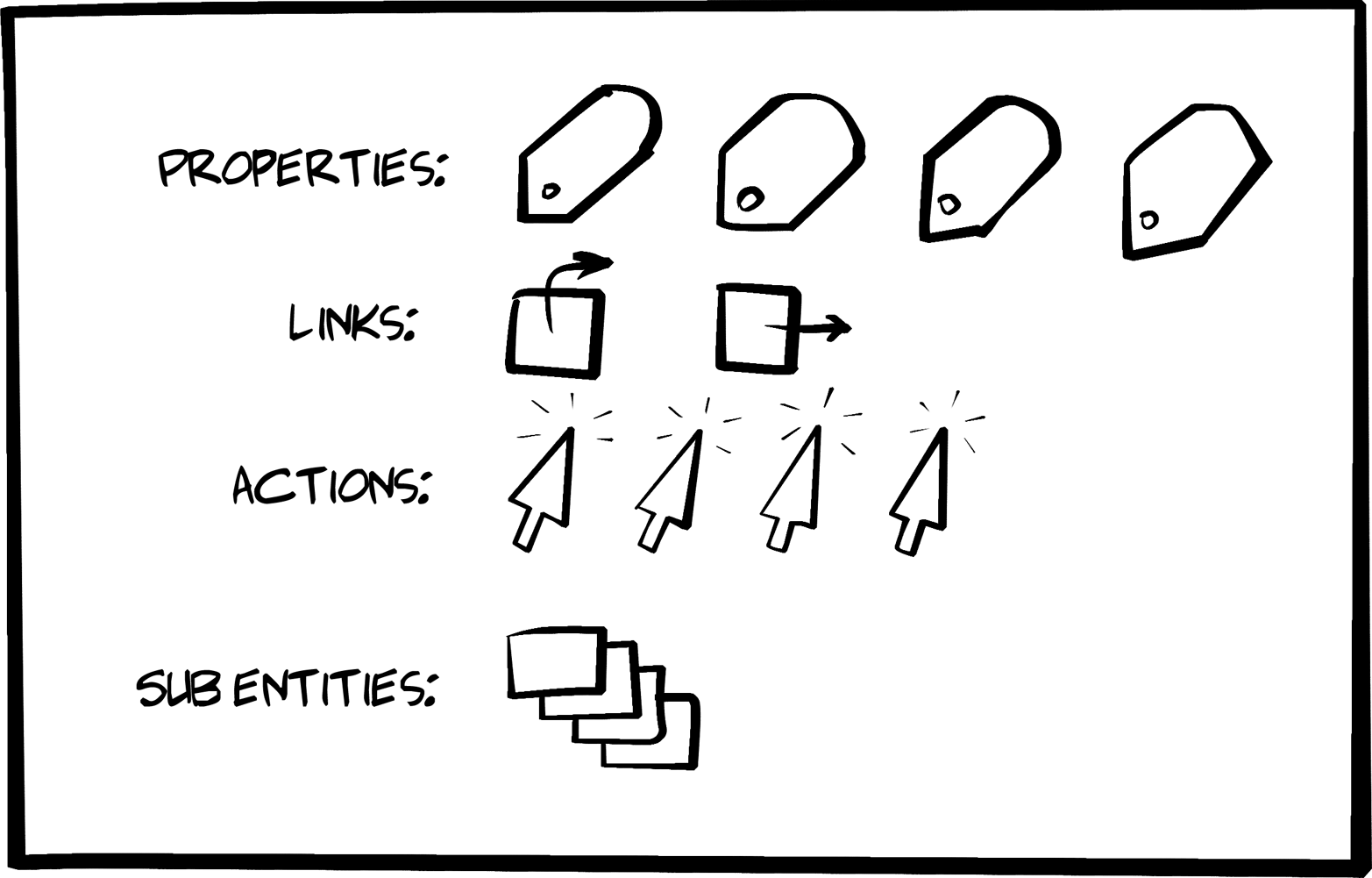

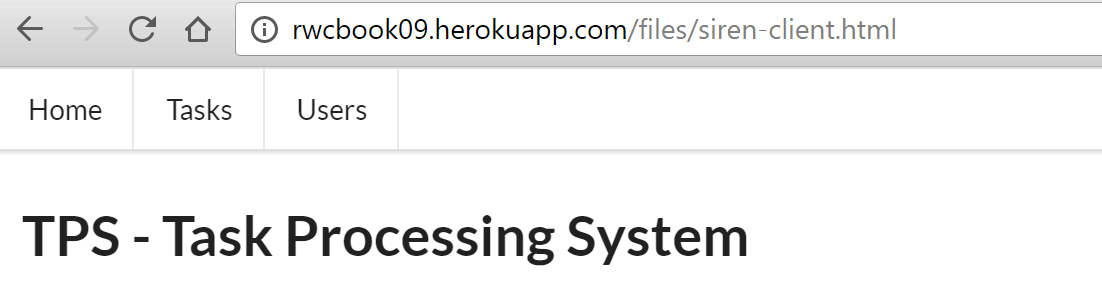

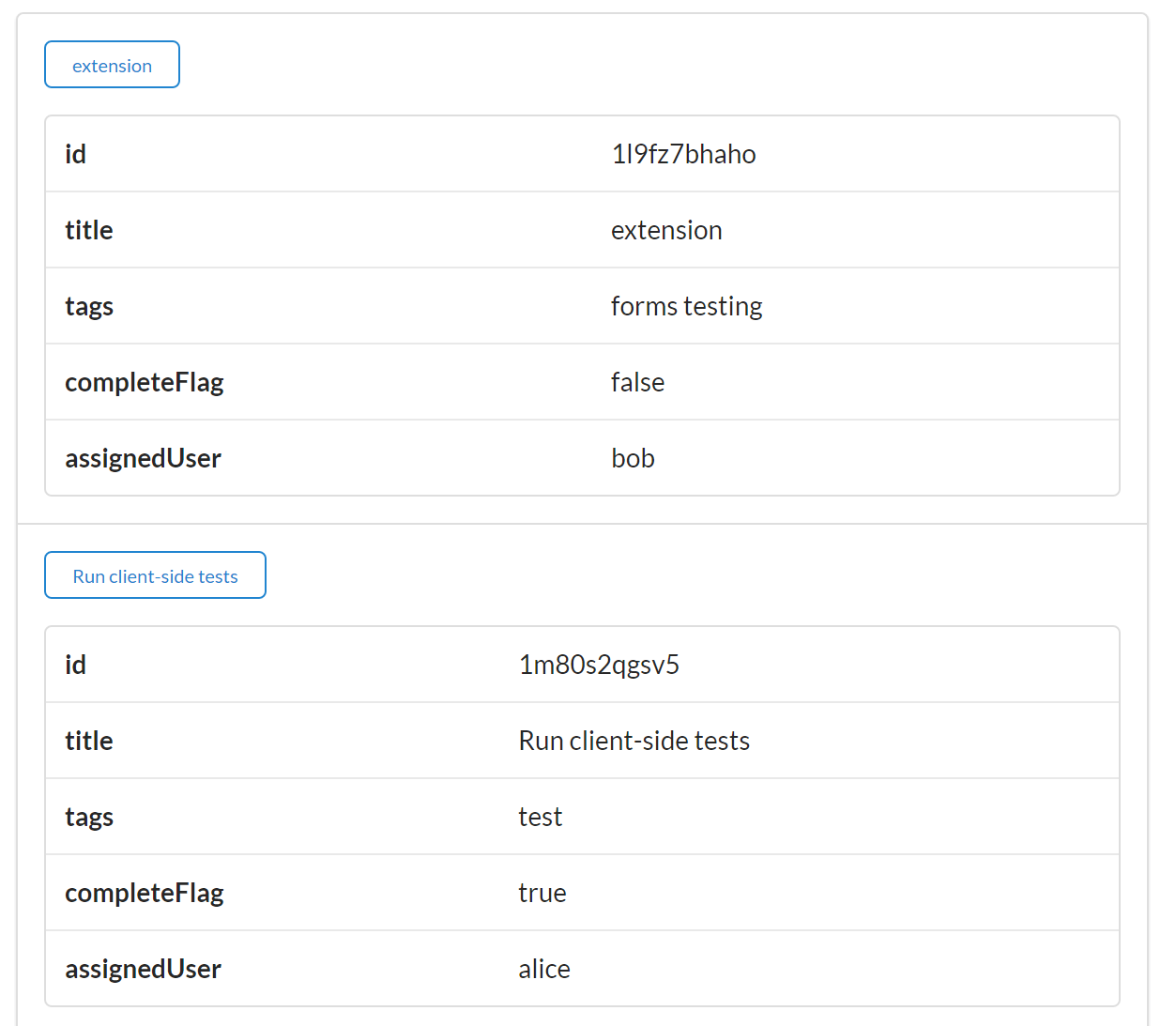

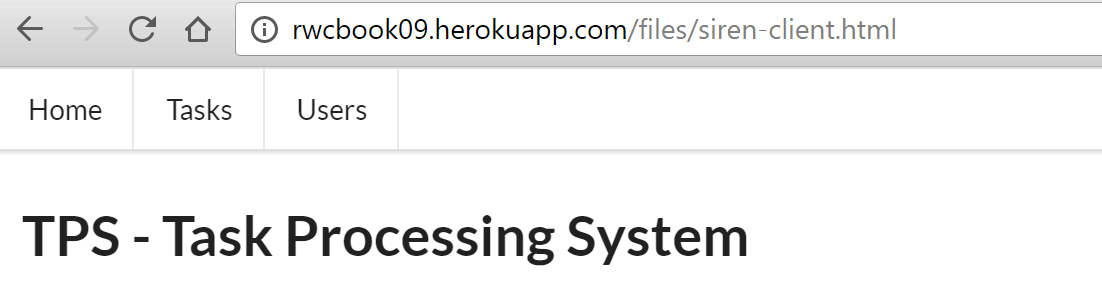

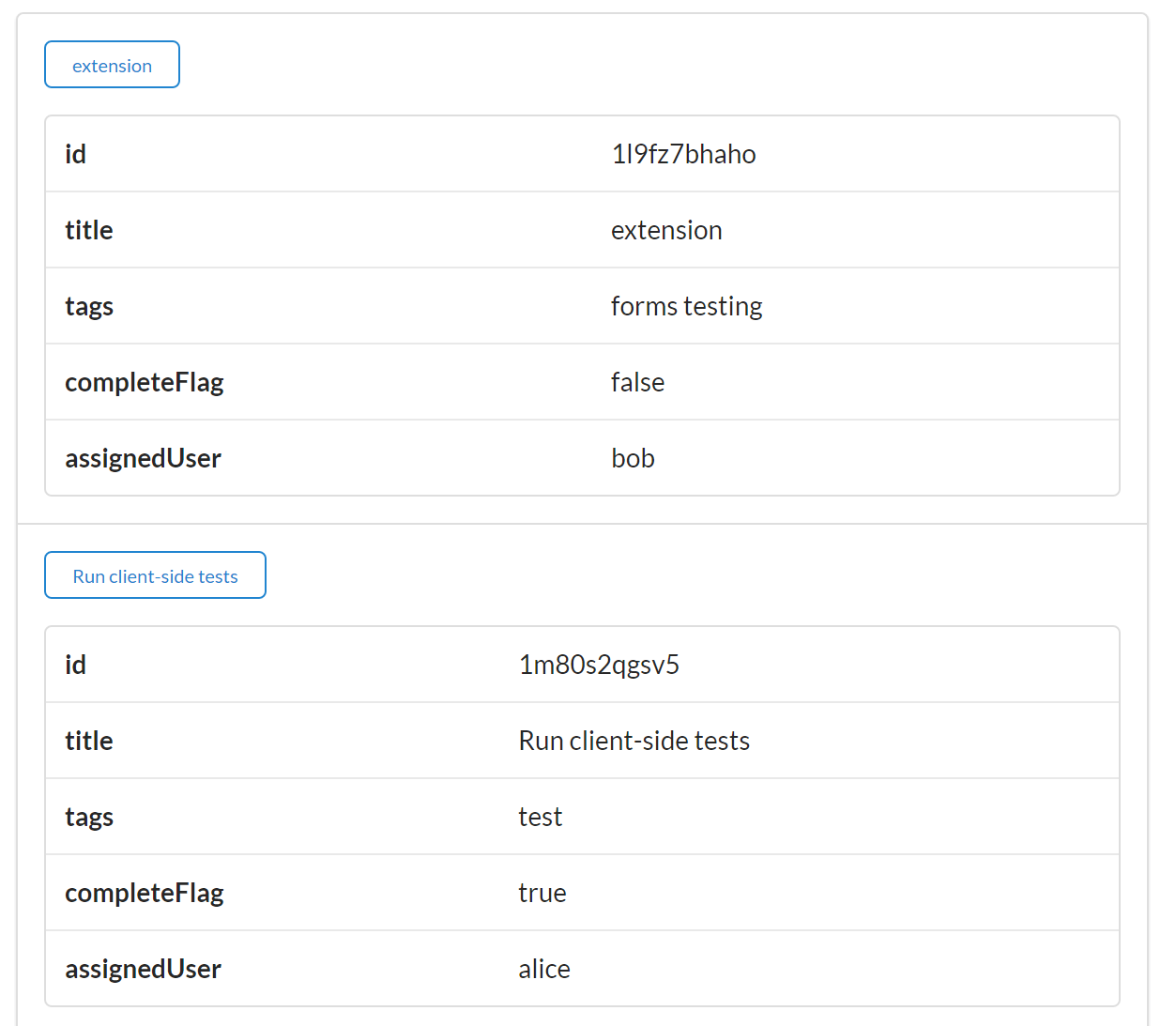

Another powerful hypermedia type is the Siren content type. Currently used as part of the Zetta IoT platform, Siren is designed to handle two of the three key tasks of a web client: Addresses and Actions. We’ll see what it’s like to build a generic client using Siren as the message format and we’ll explore a Siren extension (Profile for Object Display, or POD) that enhances its ability to handle metadata for UI displays.

What happens to the notion of API versions when you start using hypermedia types as the basis for client-side web applications? This chapter looks at the various attempts to manage change over time and how relying on message-based hypermedia-style APIs reduces the need to change the interface contract when you change the interface features.

In this chapter we’ll explore another hypermedia format—Collection+JSON, or Cj. Cj is able to handle all three of the key elements of web clients: Objects, Addresses, and Actions. We’ll see what it’s like to build a generic client using Collection+JSON as the message format and learn how to extend Cj’s data display and validation routines.

What would it take to create a single generic hypermedia client that could seamlessly consume multiple services? And what if those services each emitted different hypermedia media types? What would it take to craft a single client application that is “multilingual” when it comes to message format? We’ll see how that looks in our final chapter.

All of the chapters in this book start and end with short vignettes or dialogues. These are fictional conversations offered as a way to illustrate some of the challenges and thought processes that happen in any organization working to implement scalable, robust projects on the WWW. These dialogues also act as stage settings and summaries for the primary theme of each chapter.

The dialogues are also meant to act as prompts to give you a chance to start thinking about the challenge as presented and how you would approach it and come up with a solution. It may be helpful to start by reading through the dialogues and then take a few minutes to map out (maybe even write down) your ideas about how to solve the problem. Taking the time to interact with the material in this way can result in some additional insight into both the solution that is provided and your own skills in problem solving.

Finally, I’ve added these dialogues so that those who are interested in only skimming the book can still get quite a bit out of the experience. Hopefully, you can read through the dialogues alone and glean the basics of the material. The chapters are then the place where the details and subtleties of the material is explored in depth. You may find that, for at least some of the chapters, reading the dialogues gives you all the information you are looking for on a particular topic or challenge—and that’s fine, too.

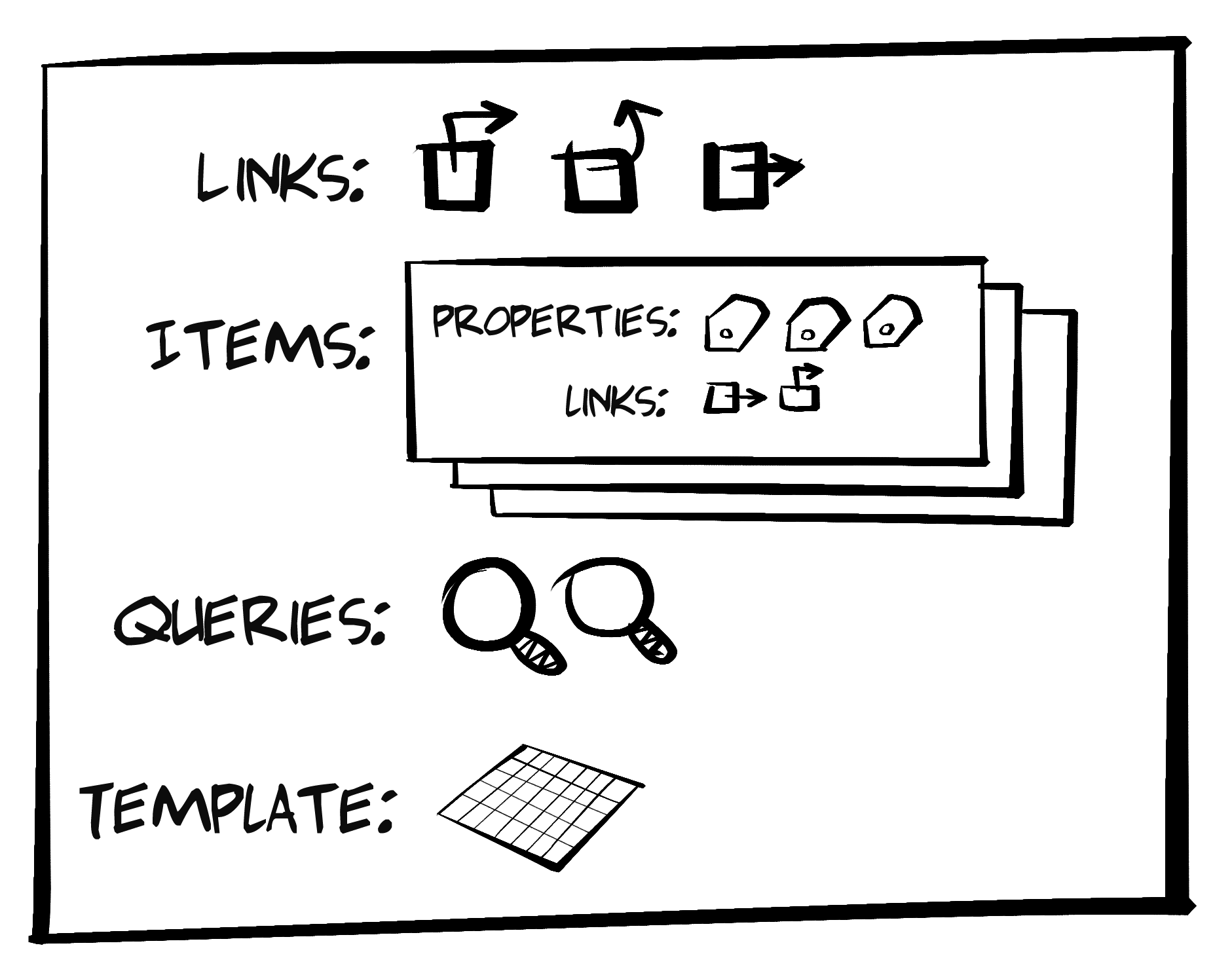

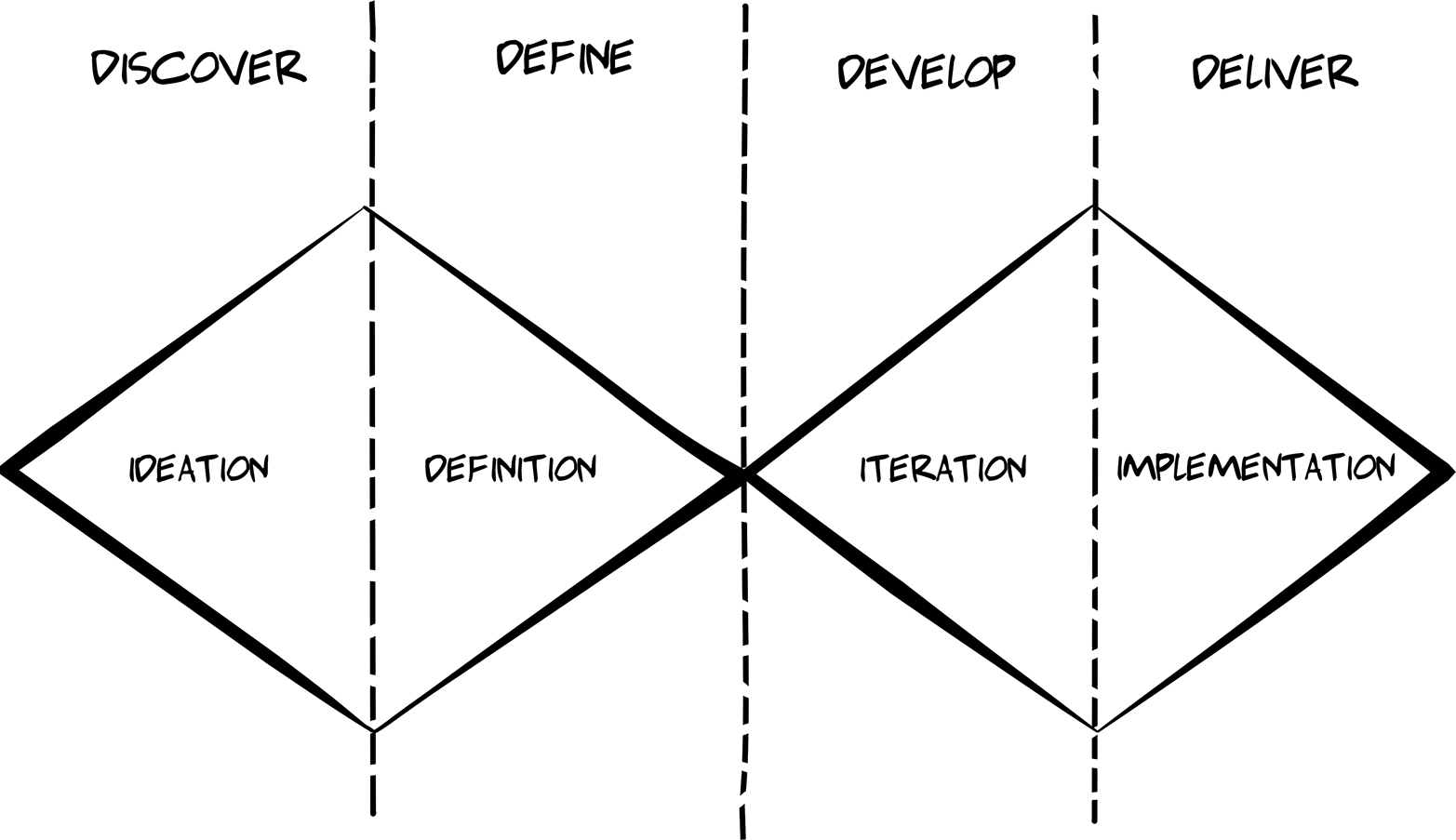

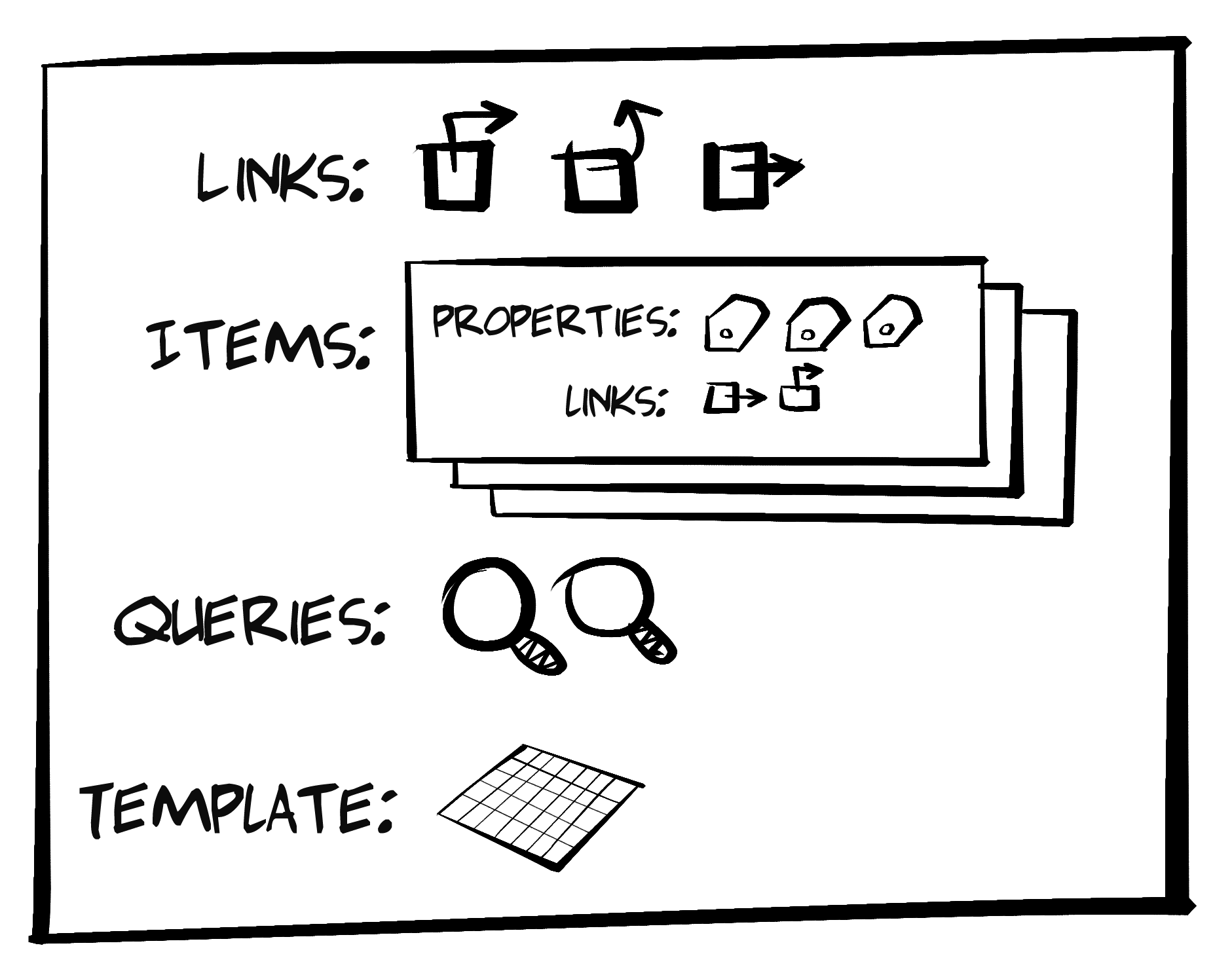

The book includes diagrams and artwork by some very talented people. Dana Amundsen, an accomplished artist based in Louisville, KY, worked with me to create the characters Carol and Bob who appear throughout the book. She also designed the BigCo logo you see in the sample apps. It turns out Dana created much more material than I could include in this book and I hope I can share that art via other means in the near future.

The diagrams you see in this book were created by my good friend, Diogo Lucas; a very skilled software developer, architect, speaker, and teacher. I first met Diogo while on a trip to Brazil (where he lives) when he showed me his amazing dashed-off sketches of some of the ideas I’d been working on for this book. I jumped at the chance to invite him to create the illustrations and, luckily, Diogo agreed to lend his considerable artistic talents to this project.

All the artwork in this book created by Dana and Diogo is licensed under Creative Commons - Attribution-NoDerivatives 4.0 International (CC BY-ND 4.0).

Both Dana and Diogo have added something special to the book and I thank them both very much.

This book covers quite a bit of ground in a relatively short number of pages. In order to do that, I needed to leave out quite a bit of helpful material. Here’s a quick rundown of what I decided to not include in this book.

While I refer to some material on user interface design in the book, those references are cursory. I also do not spend any time on the details of human–computer interaction (HCI) or design methodology in general.

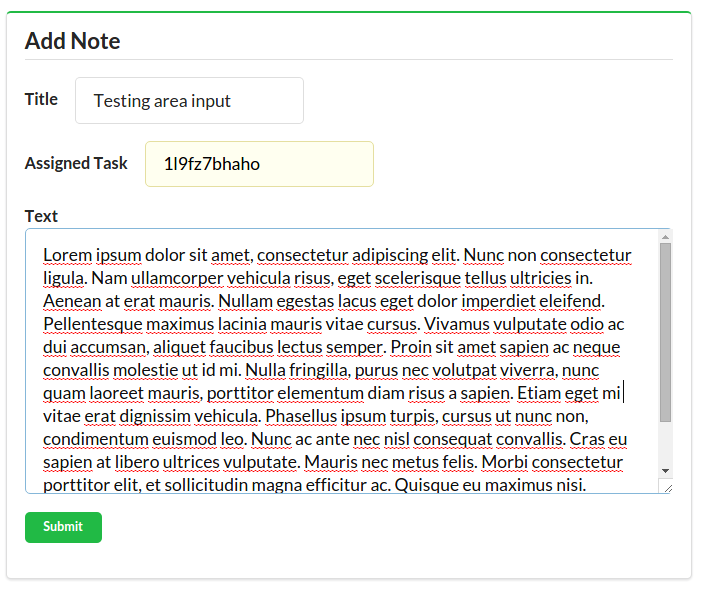

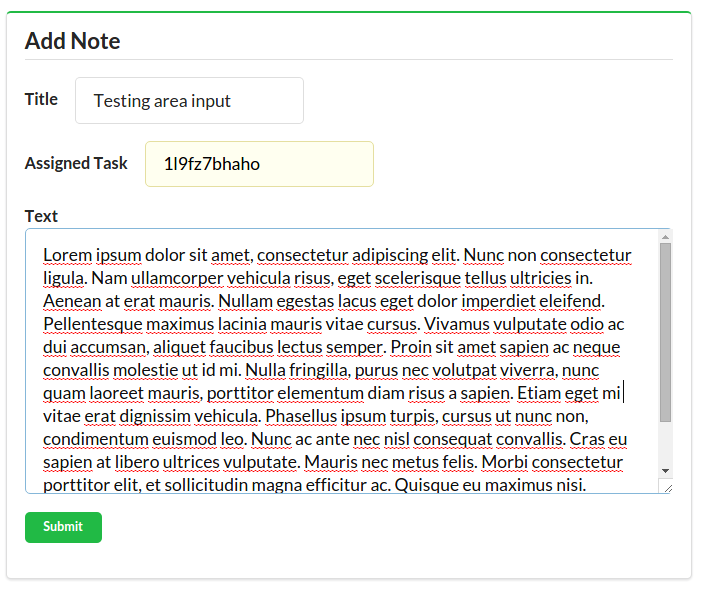

I should also give the reader a heads-up that this book provides a basic UI look-and-feel in the examples. My primary focus is on the network- and message-level techniques that allow services to provide recognizable and useful hypermedia hints to client applications. I also spend time on client-level coding best practices for parsing and activating these hypermedia signals. I leave the work of decorating these interfaces with improved visual appeal to others who are more skilled in this art.

I need to give special recognition to my long-time friend and skilled web developer, Benjamin Young. He took the time to extensively review my rudimentary UI designs and provided a coherent look-and-feel to all the client applications. Truth be told, I gave Benjamin limited room to be creative and he still produced a solid, consistent style guide for all of the apps. If they look at all pleasing, that’s a reflection of Benjamin’s talent and persistence. If they fall short in any way, that’s because I didn’t allow him the freedom he needed.

This book also does not spend much time on the value of hypermedia as an implementation style or go very far to make the case for using hypermedia in your own projects. My work on previous books, RESTful Web APIs (with Leonard Richardson) and Building Hypermedia APIs with HTML5 and Node (both from O’Reilly), are better sources for the history and value of using hypermedia for web APIs, and I encourage the reader to look there and to other sources for this kind of information.

Finally, I don’t devote any pages to the basics of programming on the Web in general, such as the topics of HTTP protocol, HTML5, JavaScript, and CSS. These are all very important subjects and well beyond the scope of this small book. There are many books on these subjects and I am confident the reader can find what they are looking for elsewhere.

There is quite a bit of related source code for this book. Almost every chapter has at least one relevant project and some have two or more. Including all the source code in the pages of this book would make it tedious to read and difficult to navigate. For that reason, only short snippets of important code blocks appear in the text.

The entire set of source code is available in a public Git repo. The repos will be updated from time to time and should be considered the most accurate source for any code that appears in the pages of this book. Readers are encouraged to clone/fork the code in the repos and create pull requests for any needed changes.

Throughout the book, I make references to external source material including other books, published papers, articles, public standards, and blog posts. In the text, I will mention the reference but not include a direct citation or footnote as that tends to interrupt the flow of the material. Instead, I will add a section at the end of each chapter that includes the name of the source and, where appropriate, online links to the cited material.

The following typographical conventions are used in this book:

Indicates new terms, URLs, email addresses, filenames, and file extensions.

Constant widthUsed for program listings, as well as within paragraphs to refer to program elements such as variable or function names, databases, data types, environment variables, statements, and keywords.

Constant width boldShows commands or other text that should be typed literally by the user.

Constant width italicShows text that should be replaced with user-supplied values or by values determined by context.

This element signifies a tip or suggestion.

This element signifies a general note.

This element indicates a warning or caution.

Safari (formerly Safari Books Online) is a membership-based training and reference platform for enterprise, government, educators, and individuals.

Members have access to thousands of books, training videos, Learning Paths, interactive tutorials, and curated playlists from over 250 publishers, including O’Reilly Media, Harvard Business Review, Prentice Hall Professional, Addison-Wesley Professional, Microsoft Press, Sams, Que, Peachpit Press, Adobe, Focal Press, Cisco Press, John Wiley & Sons, Syngress, Morgan Kaufmann, IBM Redbooks, Packt, Adobe Press, FT Press, Apress, Manning, New Riders, McGraw-Hill, Jones & Bartlett, and Course Technology, among others.

For more information, please visit http://oreilly.com/safari.

Please address comments and questions concerning this book to the publisher:

We have a web page for this book, where we list errata, examples, and any additional information. You can access this page at http://bit.ly/restful-web-clients.

To comment or ask technical questions about this book, send email to bookquestions@oreilly.com.

For more information about our books, courses, conferences, and news, see our website at http://www.oreilly.com.

Find us on Facebook: http://facebook.com/oreilly

Follow us on Twitter: http://twitter.com/oreillymedia

Watch us on YouTube: http://www.youtube.com/oreillymedia

A book like this does not happen without lots of input and assistance from several people. I am especially grateful to all those who volunteered to read and comment on early drafts of the book. In particular, I’d like to thank Todd Brackley, Carles Jove i Buxeda, Pedro Felix, Mark Foster, Toru Kawamura, Mike Kelly, Steve Klablnik, Ronnie Mitra, Erik Mogensen, Irakli Nadareishvili, Leonard Richardson, Kevin Swiber, Stefan Tilkov, Ruben Verborgh, and Jeremy Wilken for all their feedback and advice in shaping the book.

I’d also like to thank the team at O’Reilly for all their support and trust. Especially Meg Foley and Simon St.Laurent, who had the unenviable task of constantly prodding me and encouraging me through the long process of making this book a reality. Also, a big thanks to my production editor Colleen Lobner, who had to endure my endless picking at minor layout and font details while she worked to make my material presentable. And I’d especially like to thank my colleagues in the API Academy and CA Technologies for all their help and support throughout the project.

Finally, thanks to my family for once again having to deal with me as I worked through my ideas, hunkered down with headphones for long stretches, and as I stared off into space pondering details and testing scenarios in my head. They dealt with my temporary malady as best they could—with patience and humor. Thanks.

“Experience is the best way to learn.”

Shannon Kaiser, “50 Things My Future Self Wants Me To Know”

Shannon Kaiser’s quote is from a September 2014 article she wrote for MindBodyGreen.

All the chapters in this book start and end with short dialogues between two IT-department colleagues at BigCo, Inc.—a successful multinational enterprise. The two players in these dialogues are Bob and Carol. Both are experienced and well-trained software professionals. Here is a bit of background on each of them and on their employer, BigCo, Inc. to help you get acquainted.

The Bayesian International Group of Companies (BigCo) is an organization with a long history of both prosperity and generosity. Founded in 1827 in Scotland, BigCo’s motto is “The principle end of the company is the happiness of its customers and employees.” Originally focused on demography and finance based on the work of Richard Price, by the 1900s BigCo was a manufacturer of important turn-of-the-century technologies including mobile x-ray machines and hydrophones by working with inventors Frederick Jones and Reginal Fessenden, respectively. BigCo was also one of the few non-US contractors attached to the Manhattan project, providing parts for the first nuclear reactors designed by Dr. Leona Wood.

The Bayesian International Group of Companies (BigCo) is an organization with a long history of both prosperity and generosity. Founded in 1827 in Scotland, BigCo’s motto is “The principle end of the company is the happiness of its customers and employees.” Originally focused on demography and finance based on the work of Richard Price, by the 1900s BigCo was a manufacturer of important turn-of-the-century technologies including mobile x-ray machines and hydrophones by working with inventors Frederick Jones and Reginal Fessenden, respectively. BigCo was also one of the few non-US contractors attached to the Manhattan project, providing parts for the first nuclear reactors designed by Dr. Leona Wood.

During the postwar boom, BigCo opened offices in the United States and played an instrumental role in helping establish fields of study in the modern computer era, including predictive algorithms and robotics controls. Currently, BigCo products focus on “inverse probability” problems and how they can be used to help improve decisions and policy-making in both the private and public sectors.

Carol has been leading successful teams at BigCo for several years and, before that, spent over five years writing code for both desktop and web systems. She has degrees in computer science and information systems and is active in several high-profile open source communities. Carol is also an accomplished visual artist and is a member of a local art society. When she is not working on OSS projects or relaxing at home with her two cats, Carol attends area comics conventions where she sells her artwork and catches up with colleagues in the comics community.

Carol has been leading successful teams at BigCo for several years and, before that, spent over five years writing code for both desktop and web systems. She has degrees in computer science and information systems and is active in several high-profile open source communities. Carol is also an accomplished visual artist and is a member of a local art society. When she is not working on OSS projects or relaxing at home with her two cats, Carol attends area comics conventions where she sells her artwork and catches up with colleagues in the comics community.

Bob recently joined the company from a Silicon Valley startup merger. He’s built several successful web-based companies (one while still attending college) and tells people he has “the startup bug.” After selling his first company for a handsome profit, Bob took time off to complete his bachelor’s degree in computer science and then immediately went back to creating new startups. He spends what little spare time he has rock climbing and trail bike riding at nearby state parks and national forests. A couple of times a year, he heads off to the Rocky Mountains or the Cascades to do some serious Alpine-style climbing with friends.

Bob recently joined the company from a Silicon Valley startup merger. He’s built several successful web-based companies (one while still attending college) and tells people he has “the startup bug.” After selling his first company for a handsome profit, Bob took time off to complete his bachelor’s degree in computer science and then immediately went back to creating new startups. He spends what little spare time he has rock climbing and trail bike riding at nearby state parks and national forests. A couple of times a year, he heads off to the Rocky Mountains or the Cascades to do some serious Alpine-style climbing with friends.

Images and logo design by Dana Amundsen (@DanaAmundsen).

While BigCo is an imaginary company, all the people mentioned in its description (Price, Jones, Fessenden, and Woods) are real historical figures. If BigCo did exist, it is possible they would have been part of its history, too.

“What’s so fascinating and frustrating and great about life is that you’re constantly starting over, all the time, and I love that.”

Billy Crystal

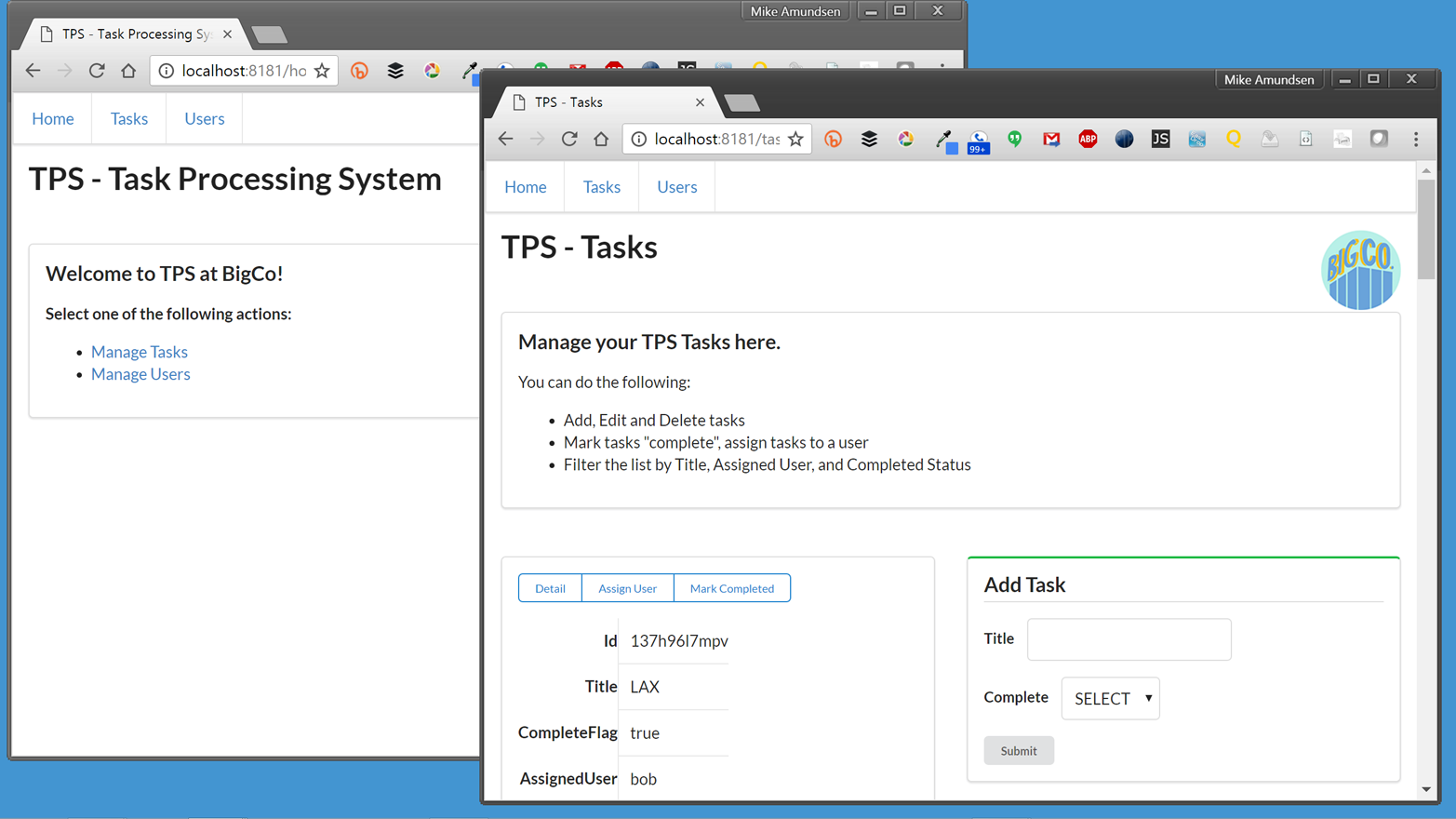

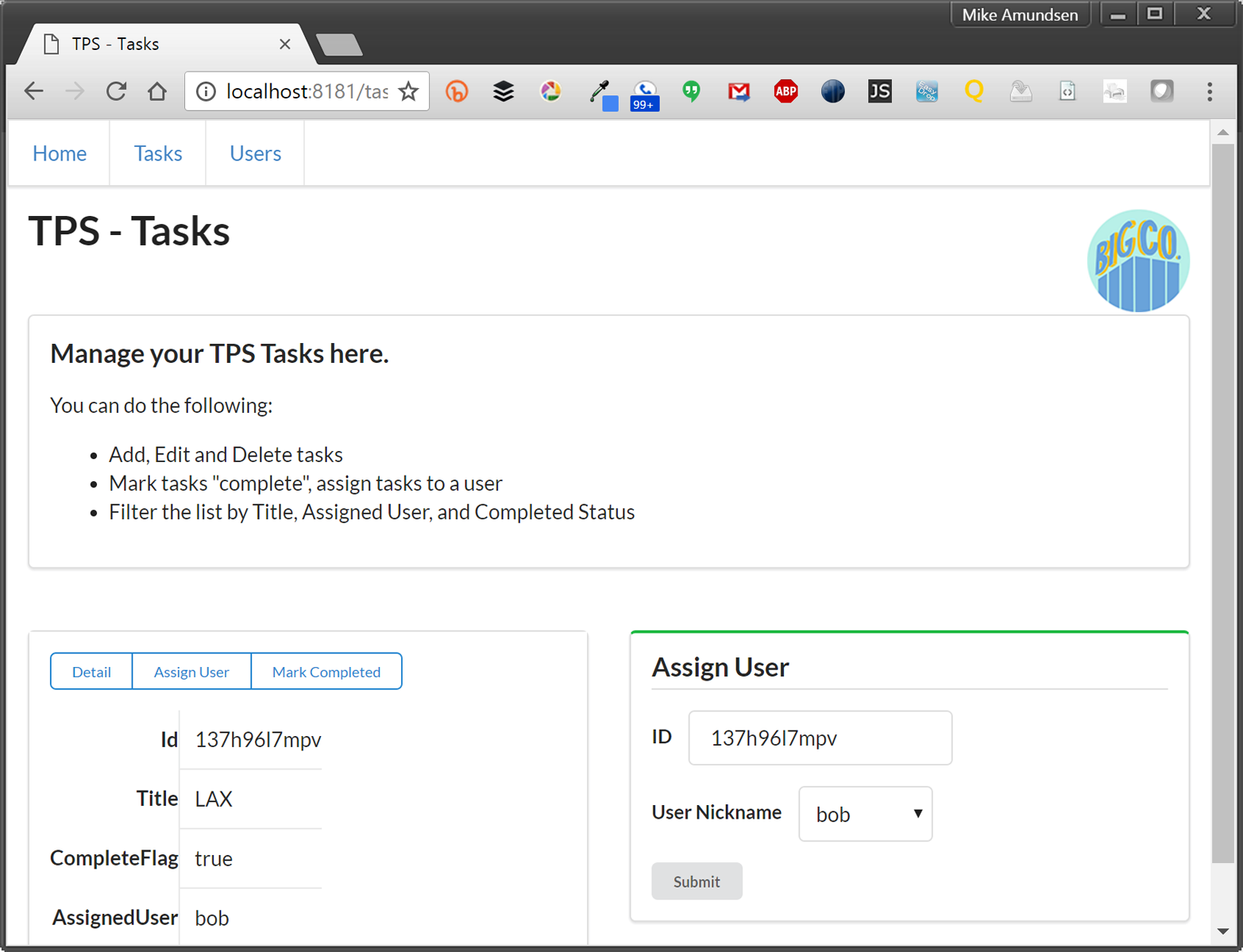

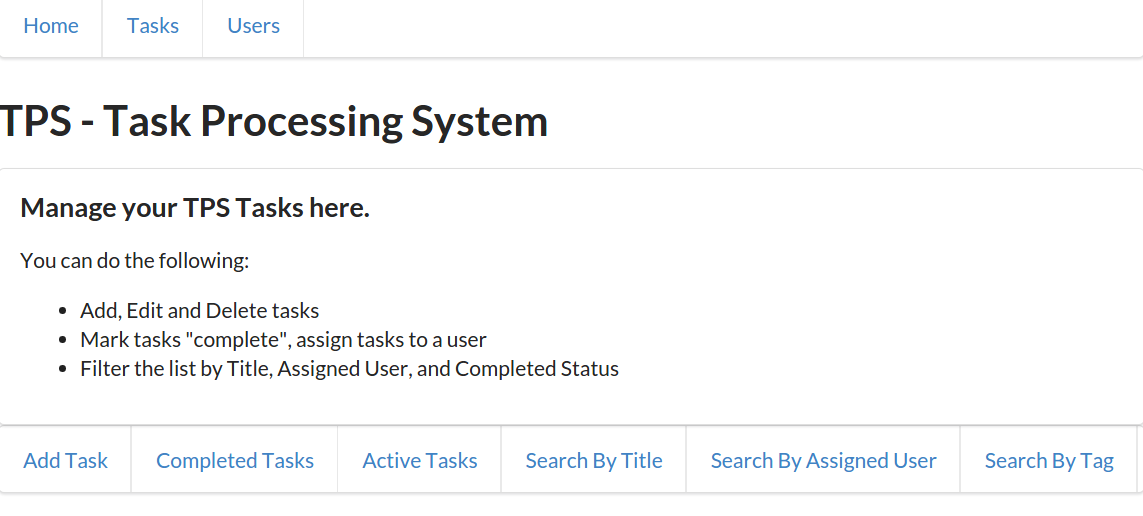

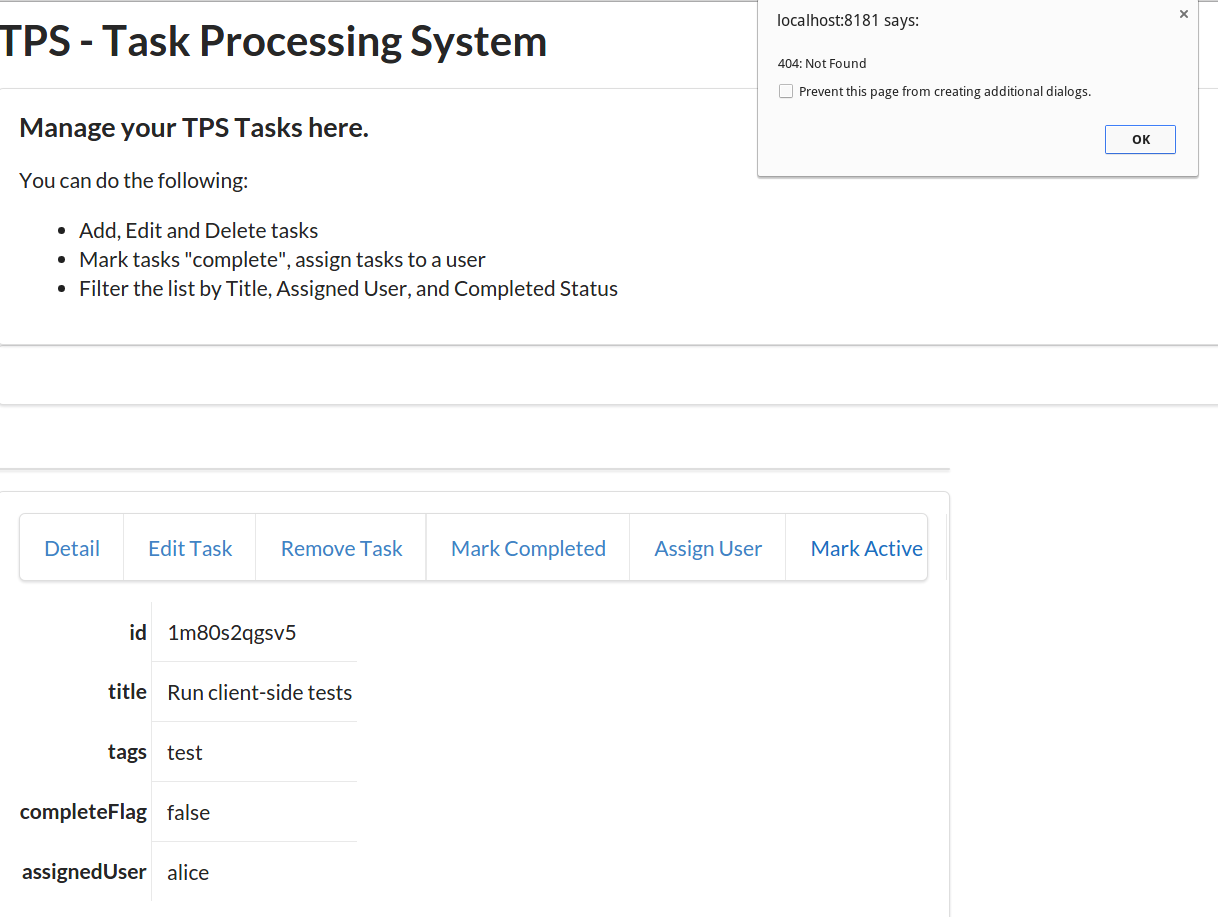

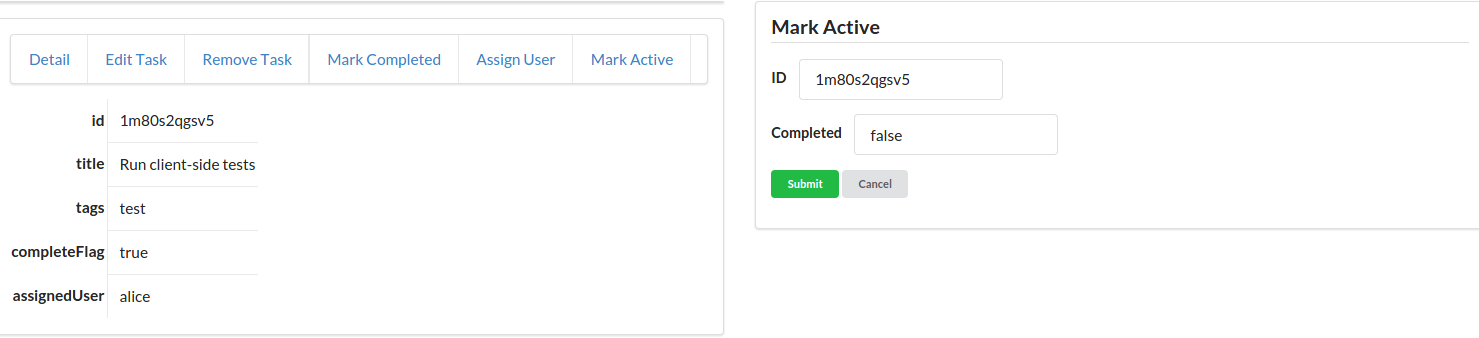

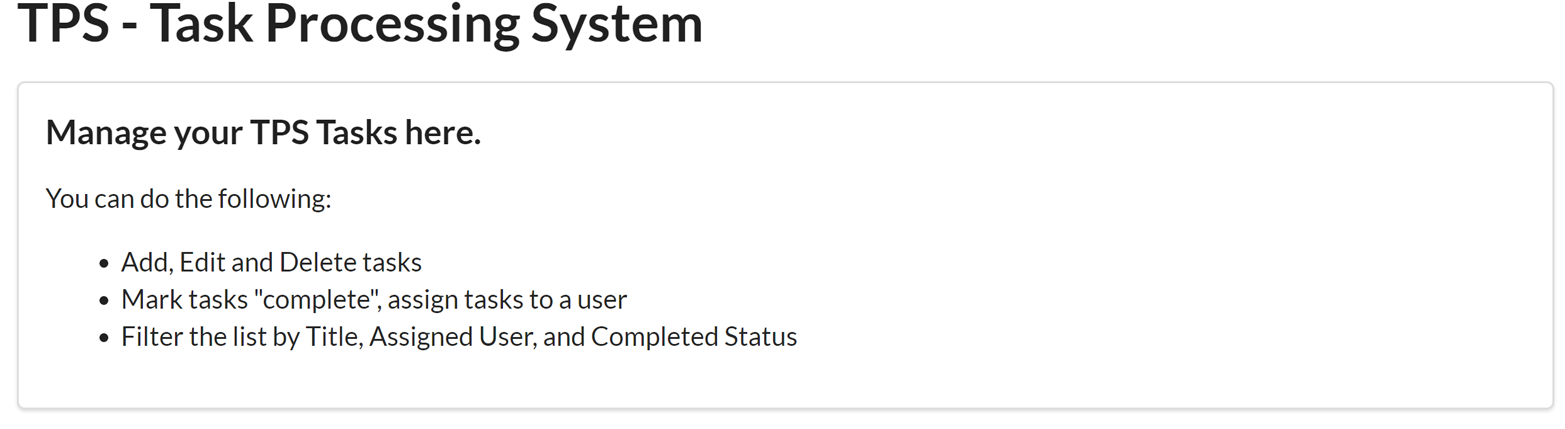

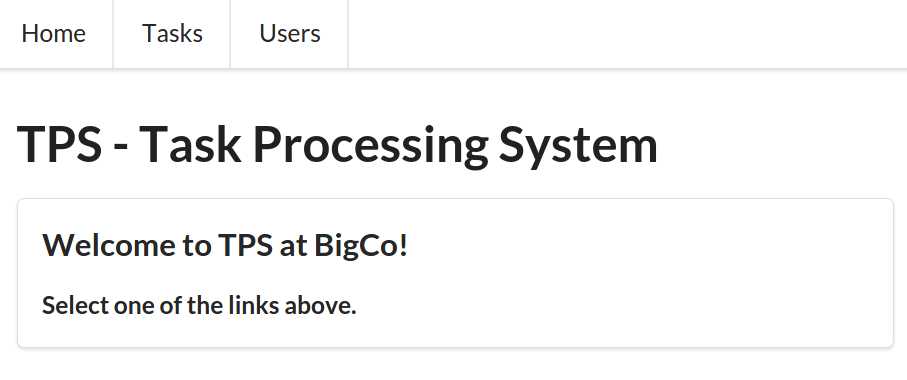

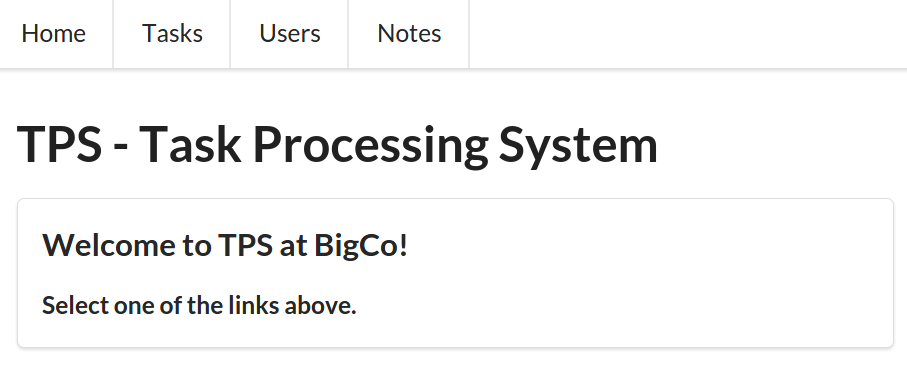

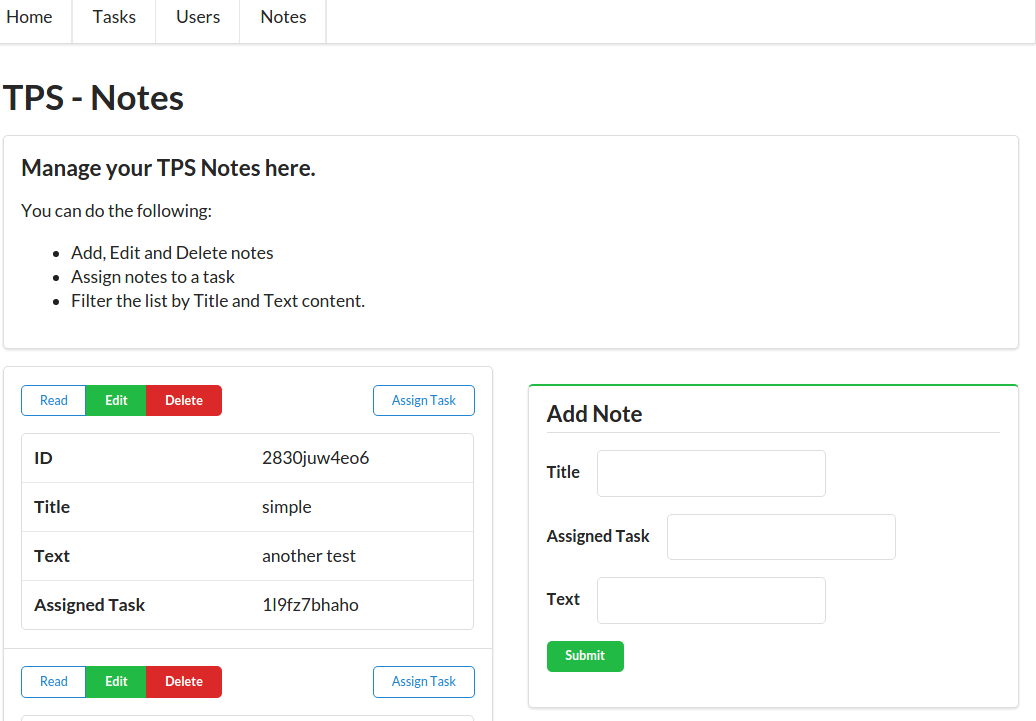

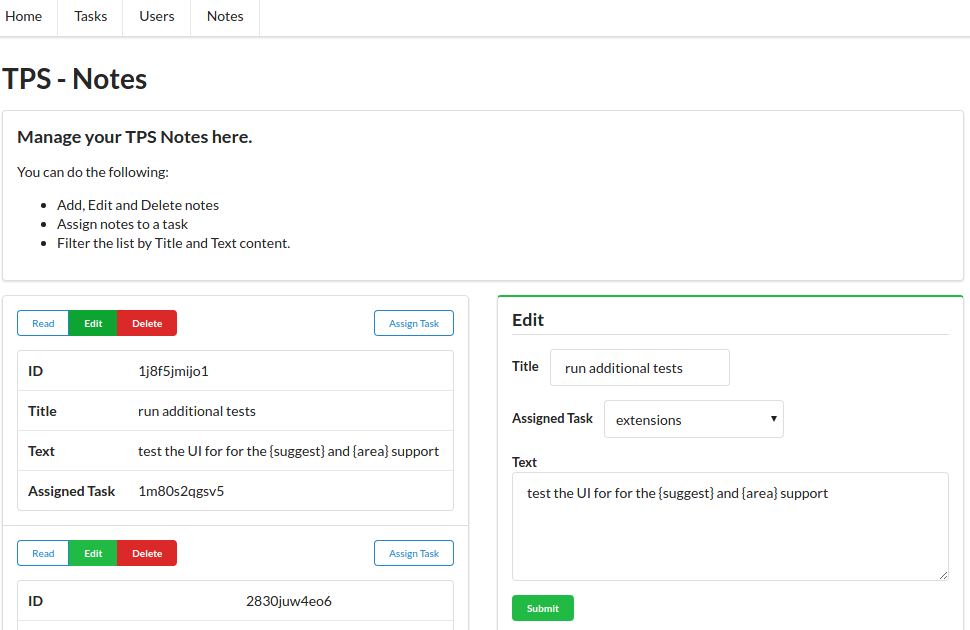

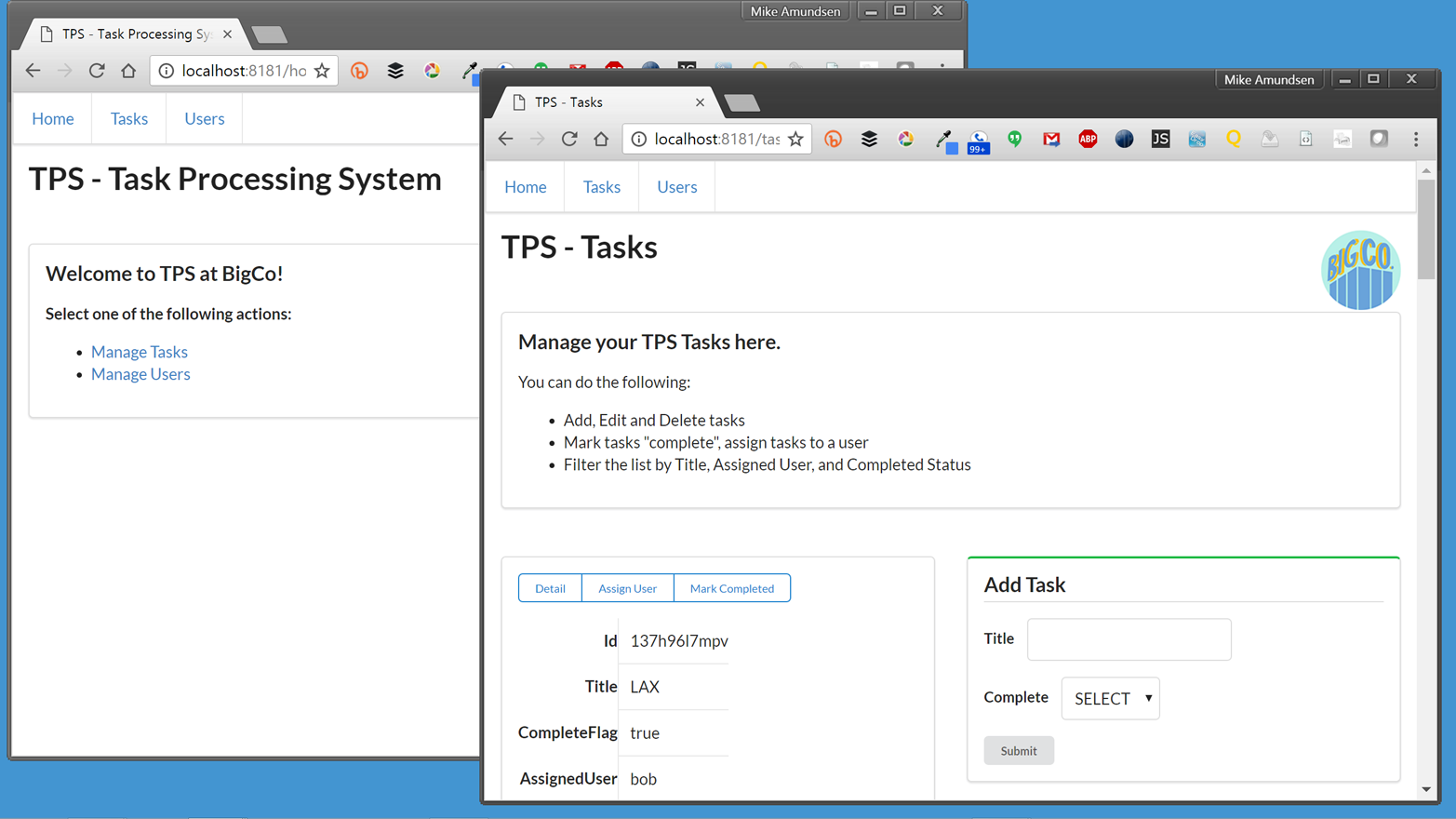

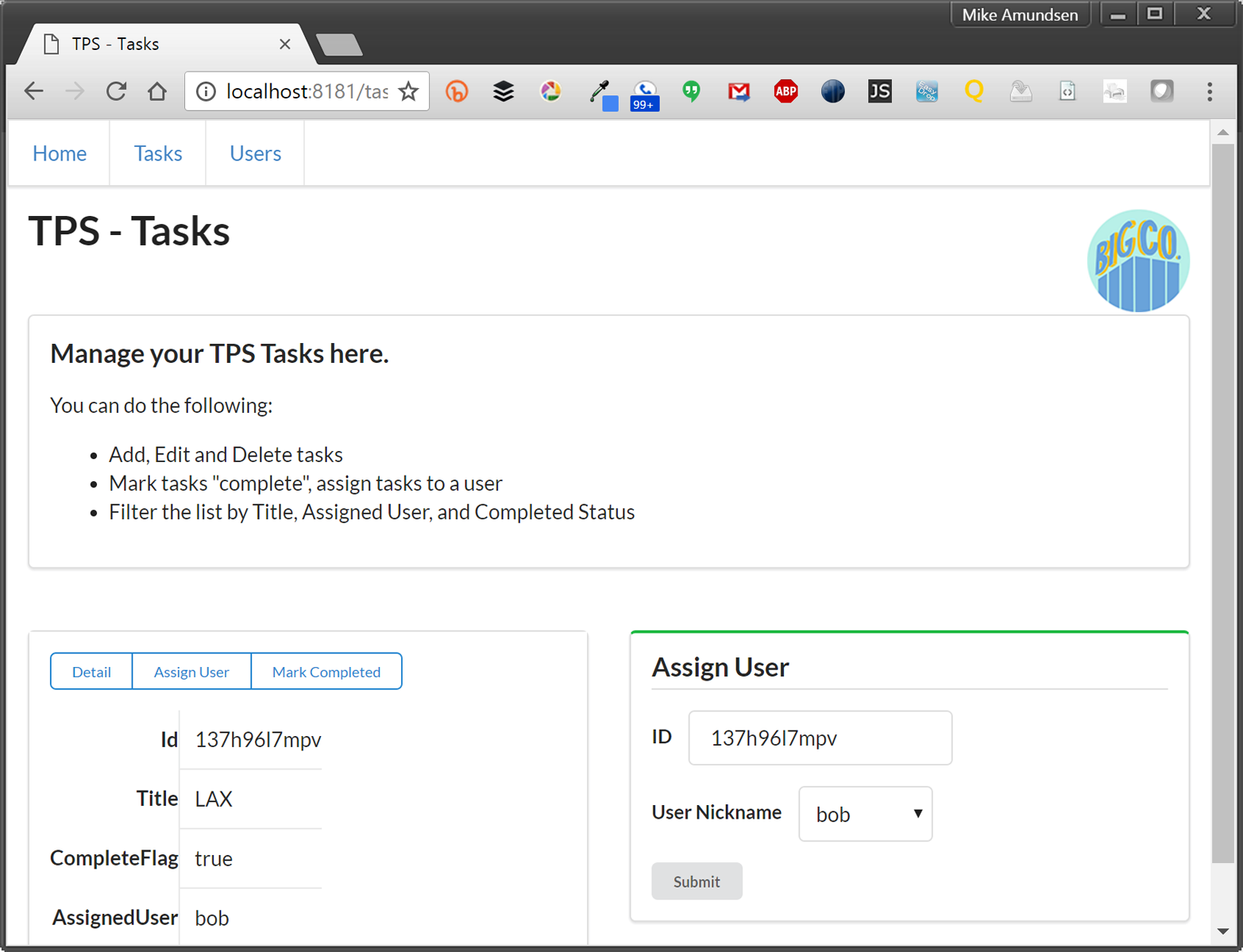

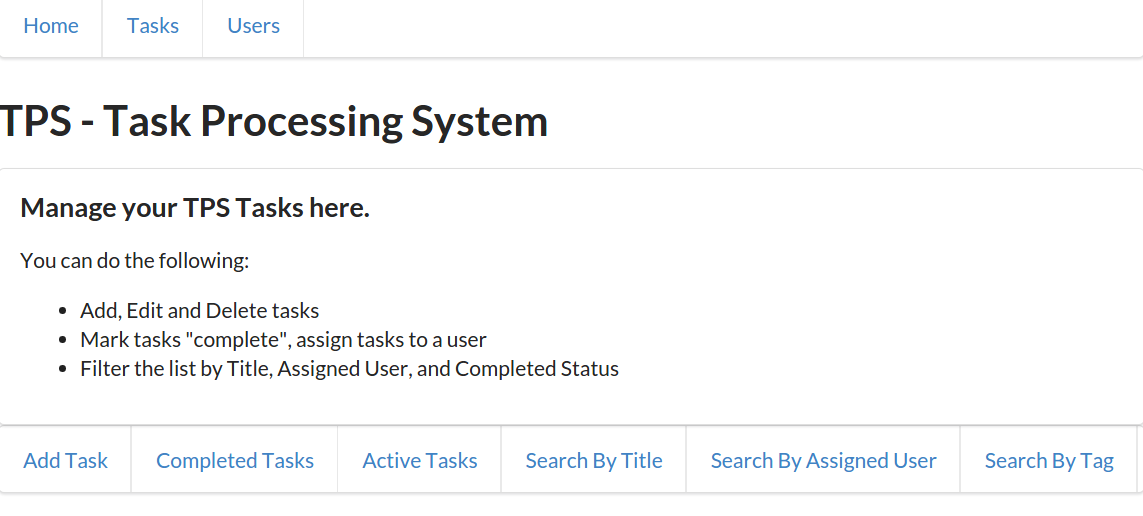

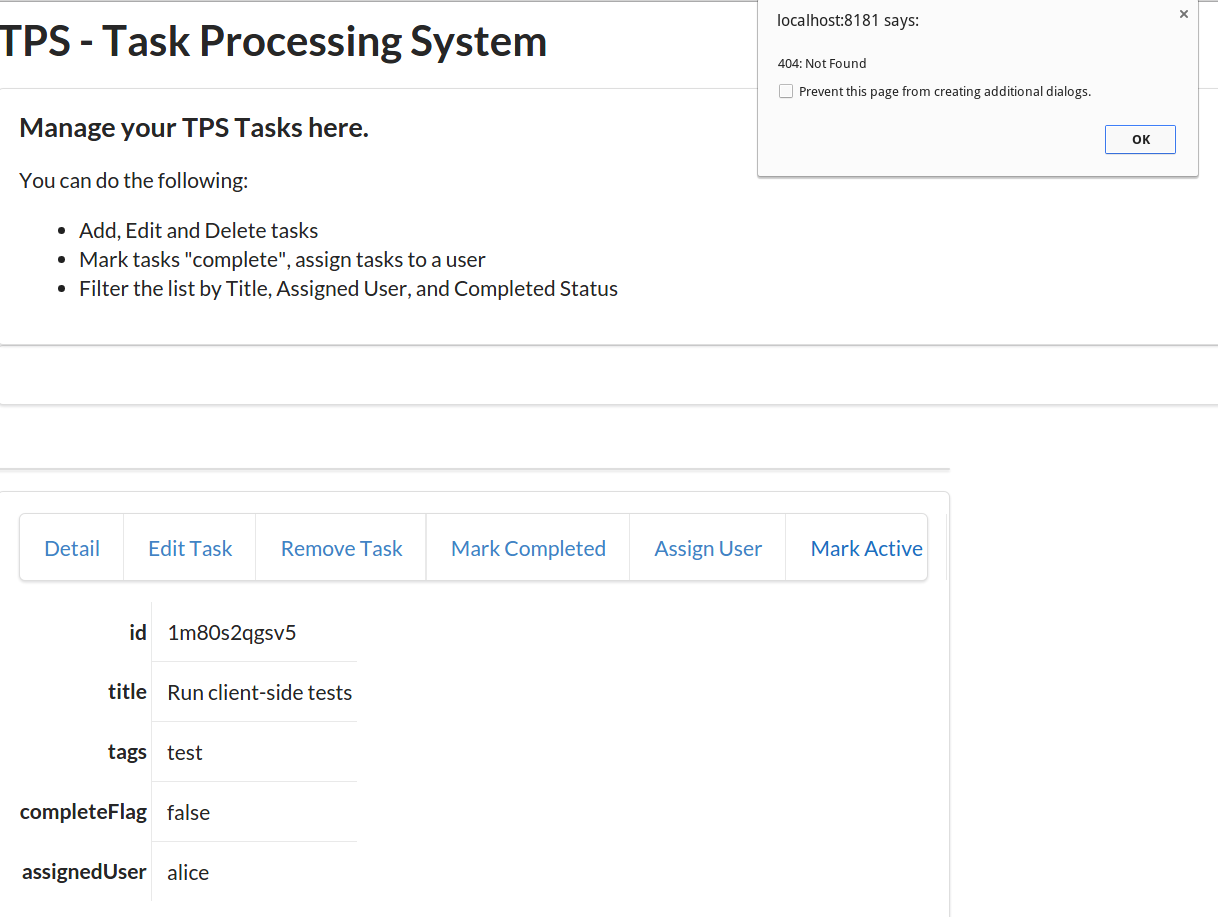

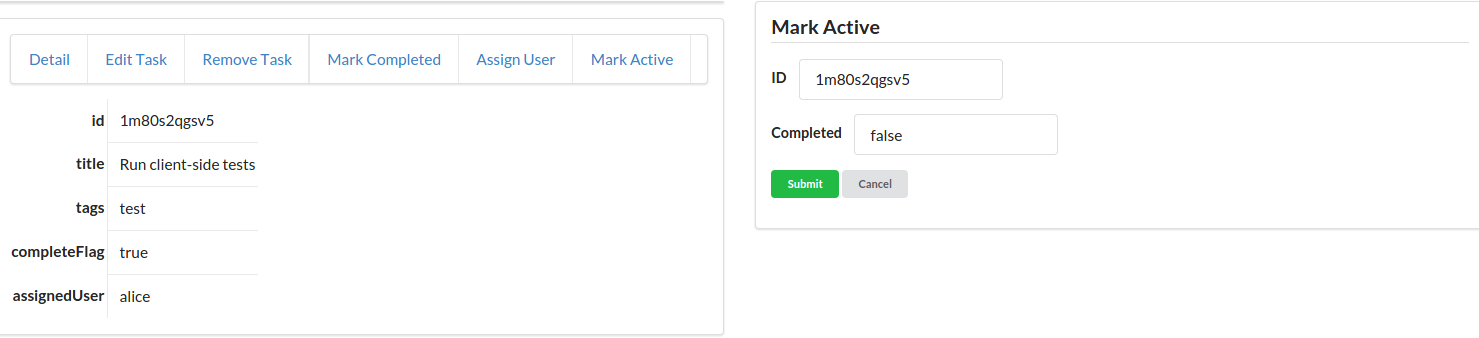

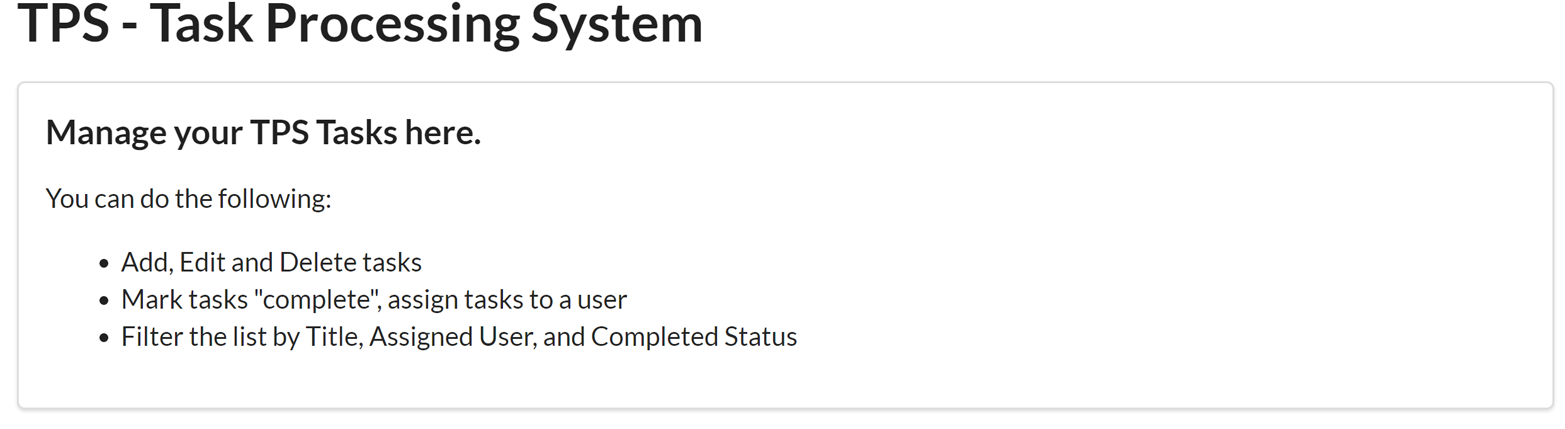

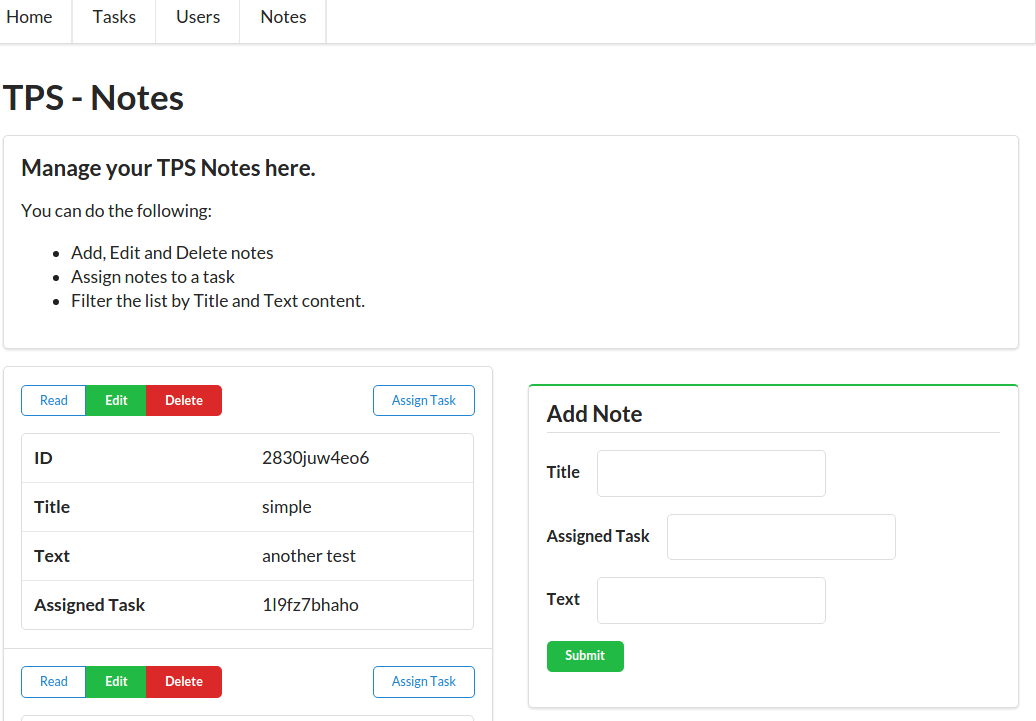

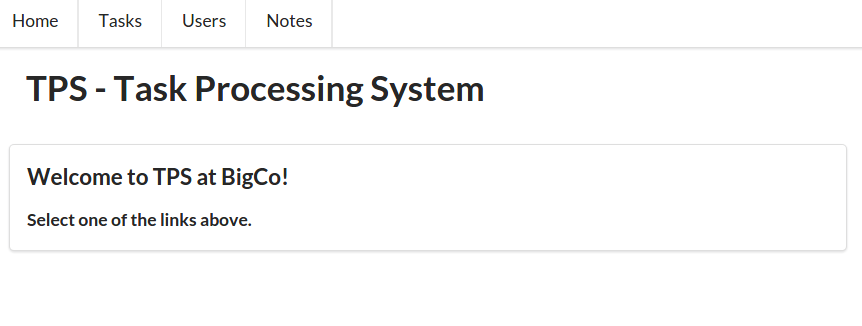

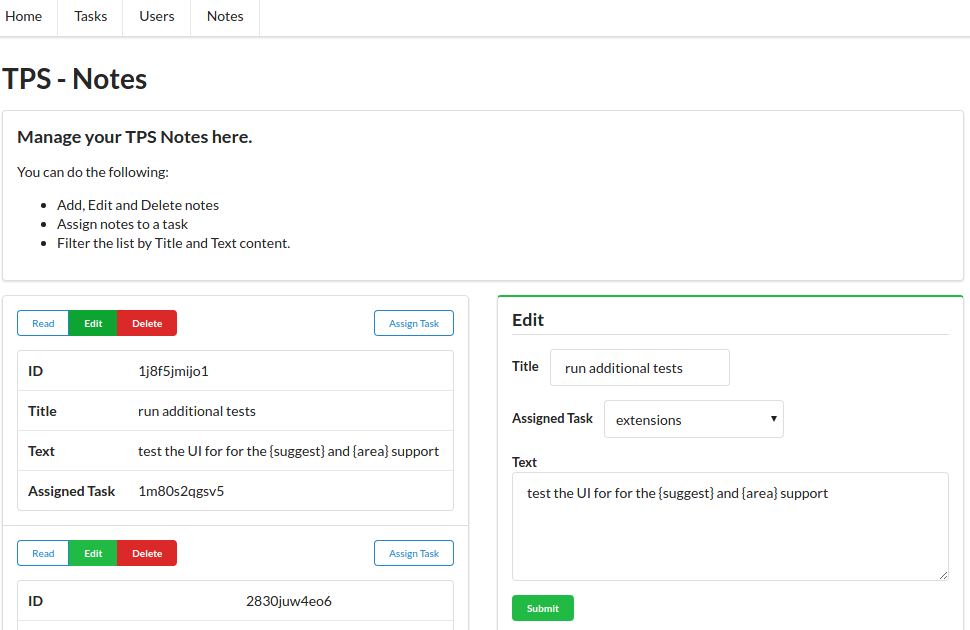

Before jumping right into the process of creating hypermedia client applications, let’s back up a bit and join the quest at the early end of the web application’s history. Many web applications began as websites—as HTML-based pages that were little more than just a list of static documents. In some cases, the initial web app was a pure HTML app. It had tables of data, forms for filtering and adding data, and lots of links to allow the user to transition from one screen to the next (Figure 1-1).

One of the things that make pure HTML applications interesting is that they are written in a very declarative style. They work without the need for imperative programming code within the client application. In fact, even the visual styling of HTML applications is handled declaratively—via Cascading Style Sheets (CSS).

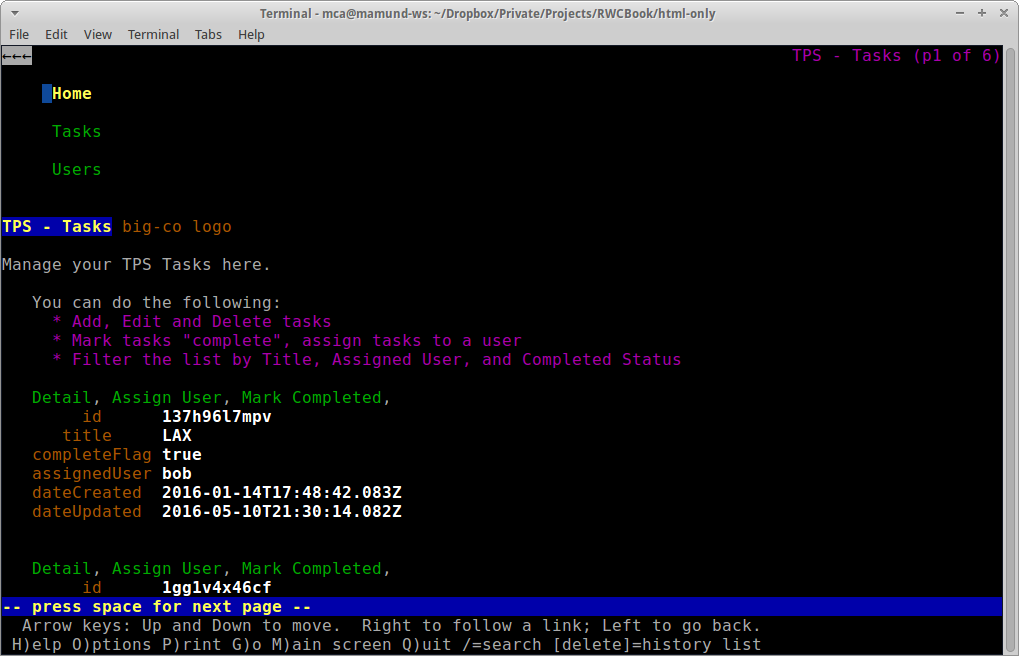

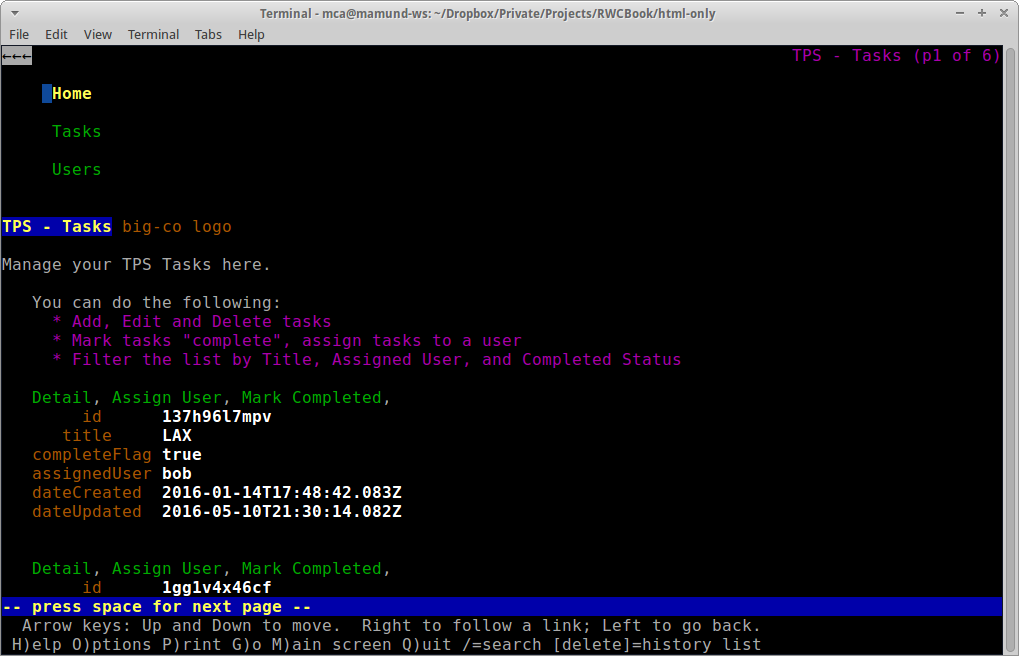

It may seem unusual to attempt to create user experiences using only declarative markup. But it should be noted that many users in the early days of the Web were already familiar with this interaction style from the mainframe world (see Figure 1-2). In many ways, early web applications looked and behaved much like the typical monochrome experiences users had with mainframe, minicomputers, and the early personal computers (see Figure 1-3). And this was seen as a “good thing.”

Typically, at some point in the life of successful HTML-based web app, someone gets the idea to convert it into a web API. There are lots of reasons this happens. Some want to “unleash the value” locked inside a single application to make the underlying functionality available to a wider audience. There might be new opportunities in other UI platforms (e.g., mobile devices, rich desktop apps, etc.) that don’t support the HTML+CSS experience. Maybe someone has a new creative idea and wants to try it out. Whatever the reasons, a web API is born.

Usually, the process is seen as a straightforward effort to expose an API that covers the internal workings of the existing web app but without the baggage of the existing HTML UI. And often the initial work is just that—taking away the UI (HTML) and exposing the data model or object model within the web server code as the web API.

Next, it is assumed, a new team can build a better user interface by consuming the server-side web API directly from a client application. Often the goal is to build a native app for smartphones or an advanced web app using one of the latest client-side frameworks. The first pass at the API is usually pretty easy to understand, and building a set of client apps can go smoothly—especially if both the client- and server-side imperative code are built by the same team or by teams that share the same deep understanding of the original web app.

And that’s the part of the journey we’ll take in this chapter—from HTML to API. That will lay the groundwork for the remainder of the book as we work through the process of building increasingly robust and adaptable client applications powered by the principles and practices of hypermedia.

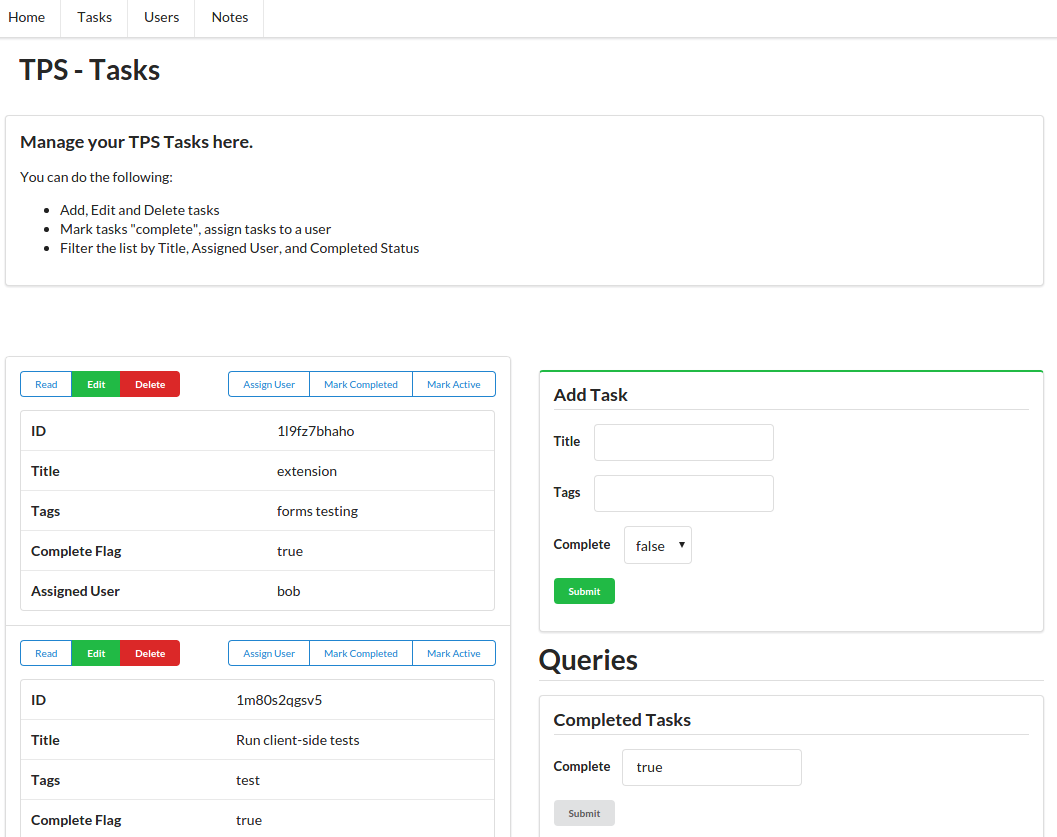

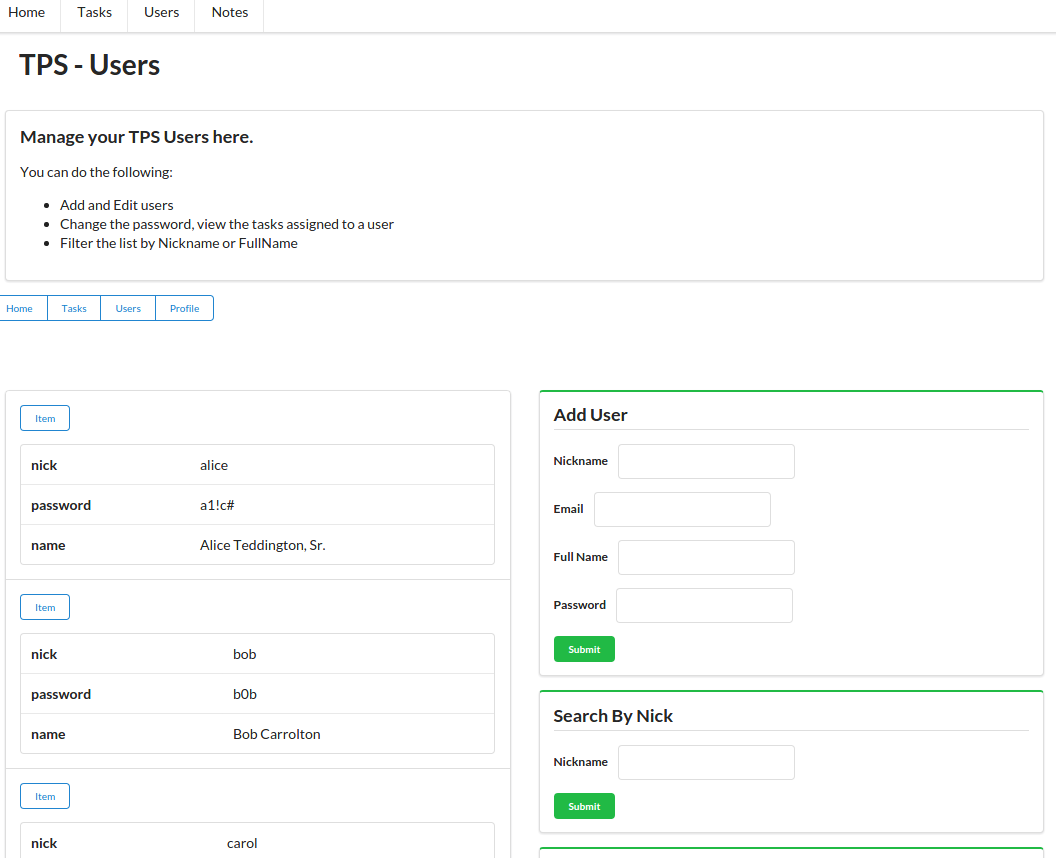

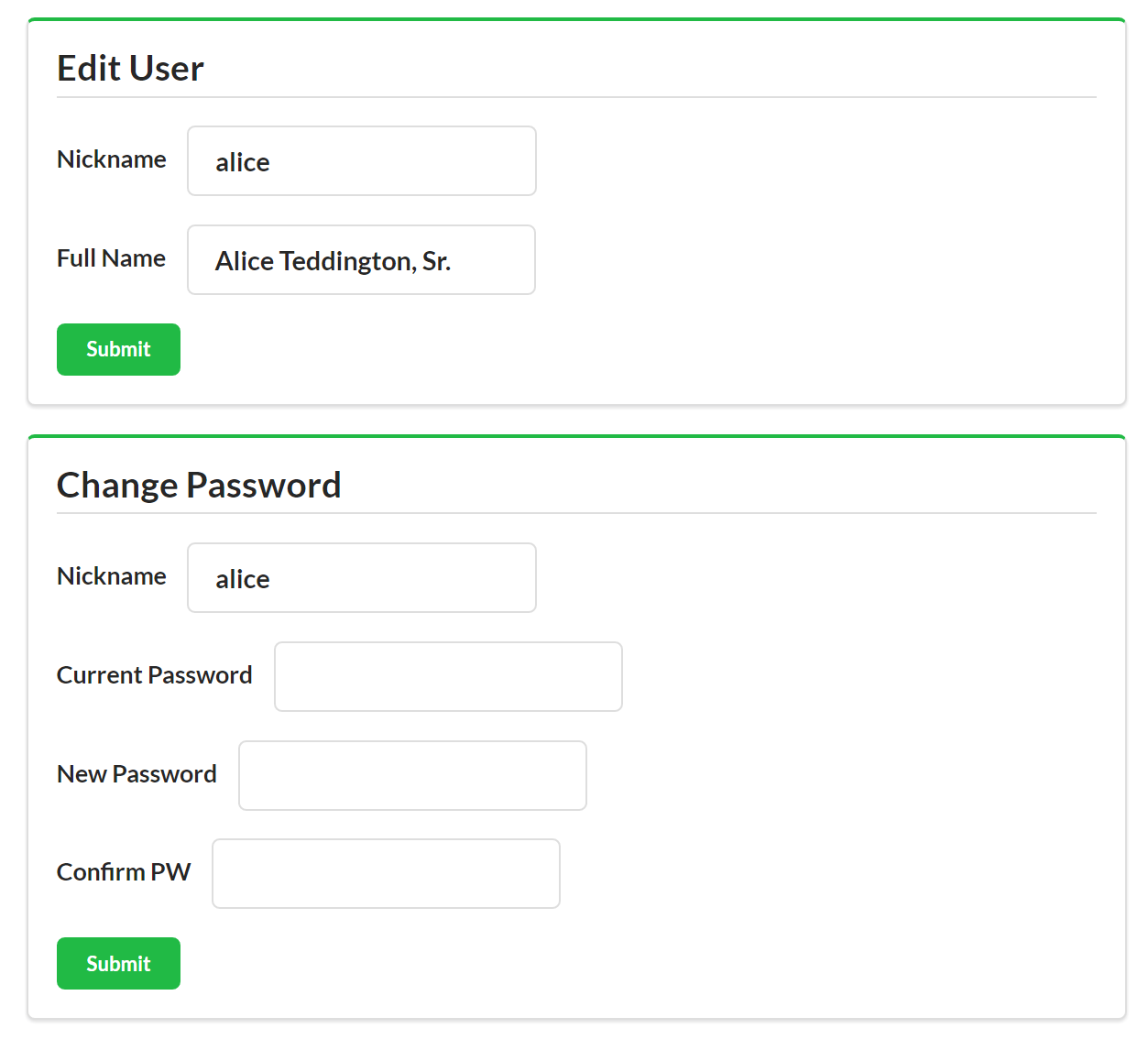

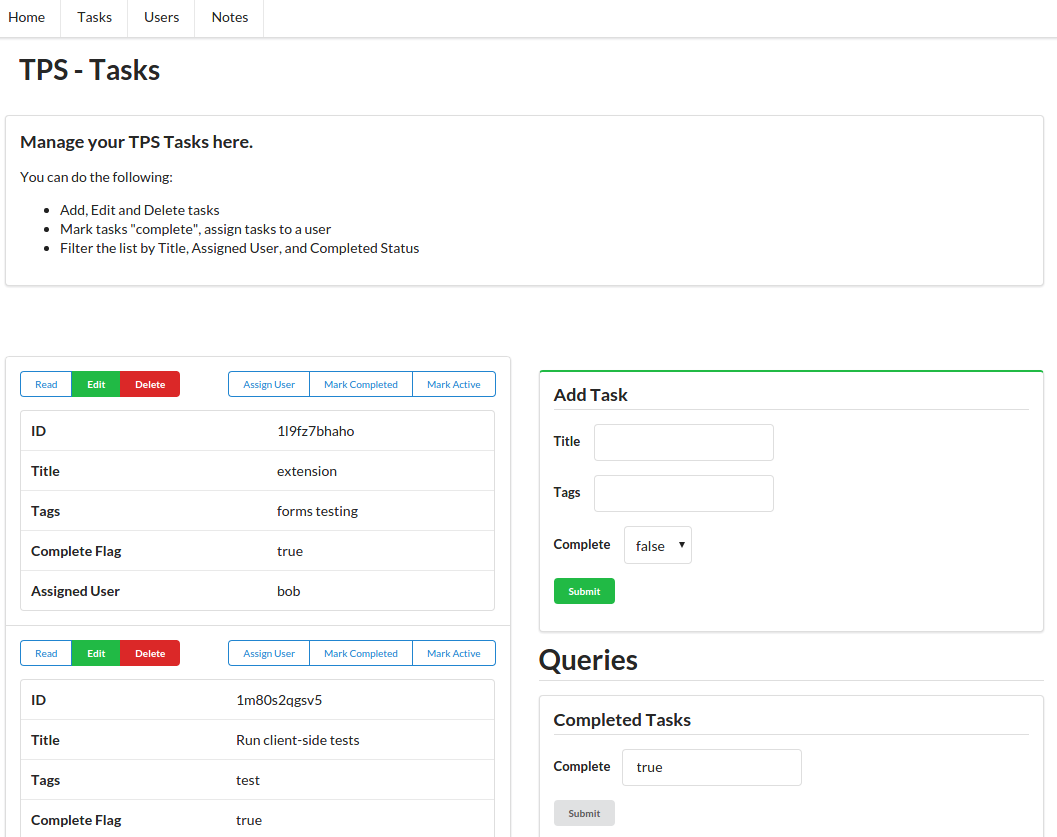

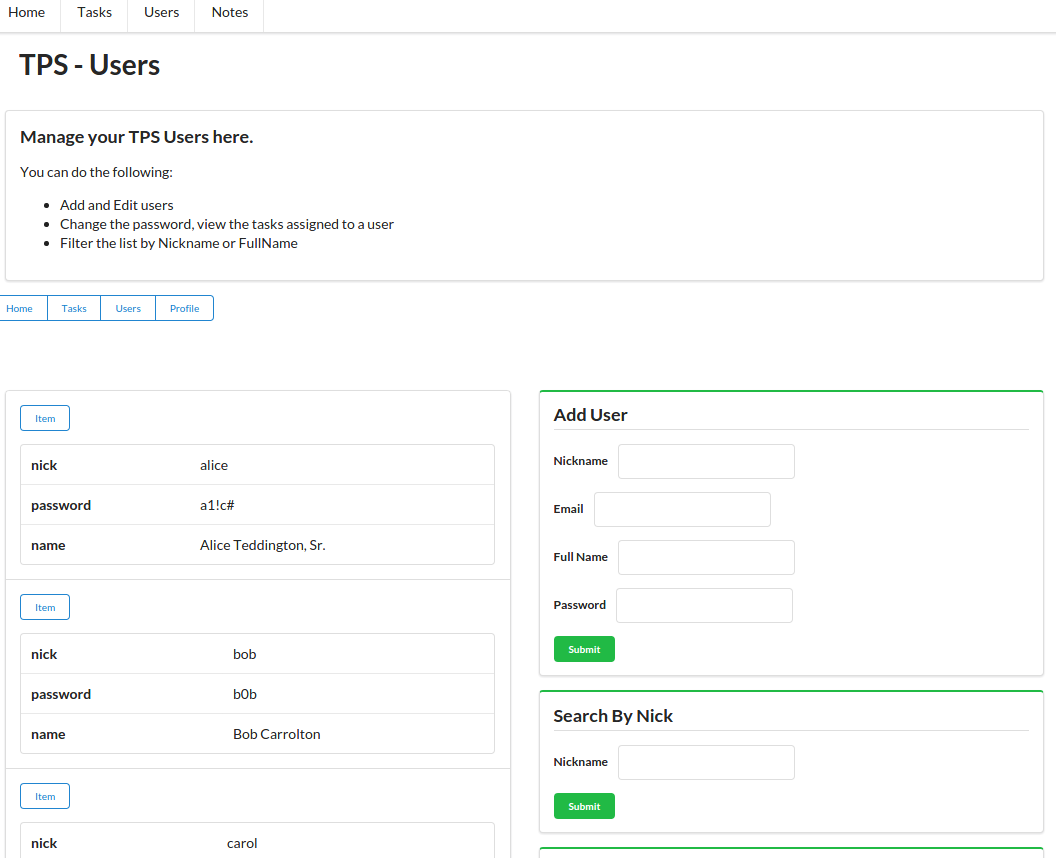

For quick review, Carol’s team built a web app that delivers HTML (and CSS) from the web server directly to common web browsers. This app works in any brand and version of browser (there are no CSS tricks, all HTML is standard, and there is no JavaScript at all). It also runs quickly and gets the job done by focusing on the key use cases originally defined when the app was first designed and implemented.

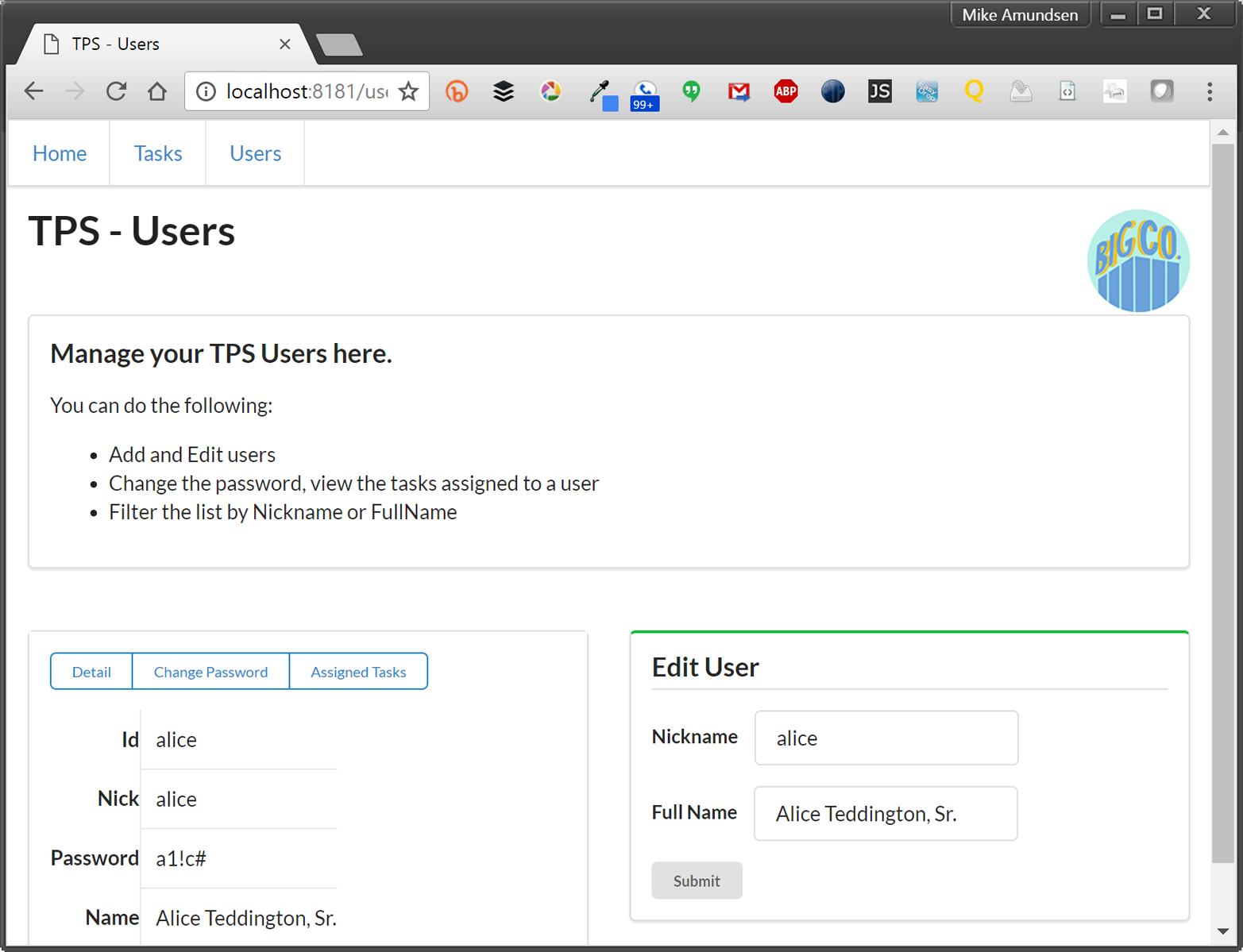

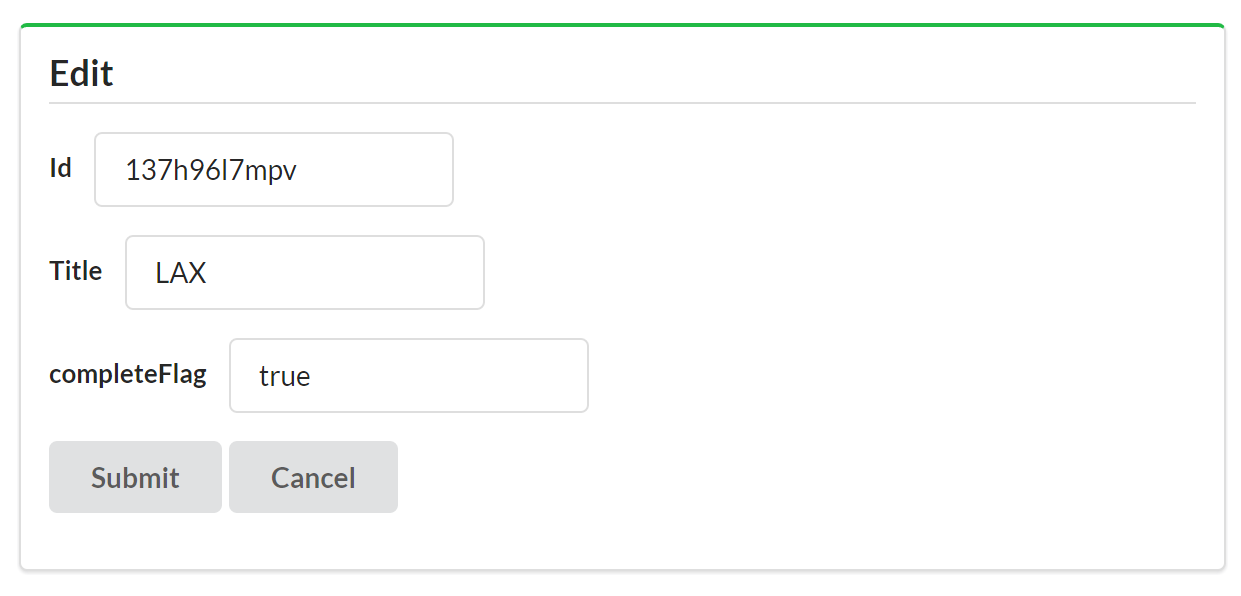

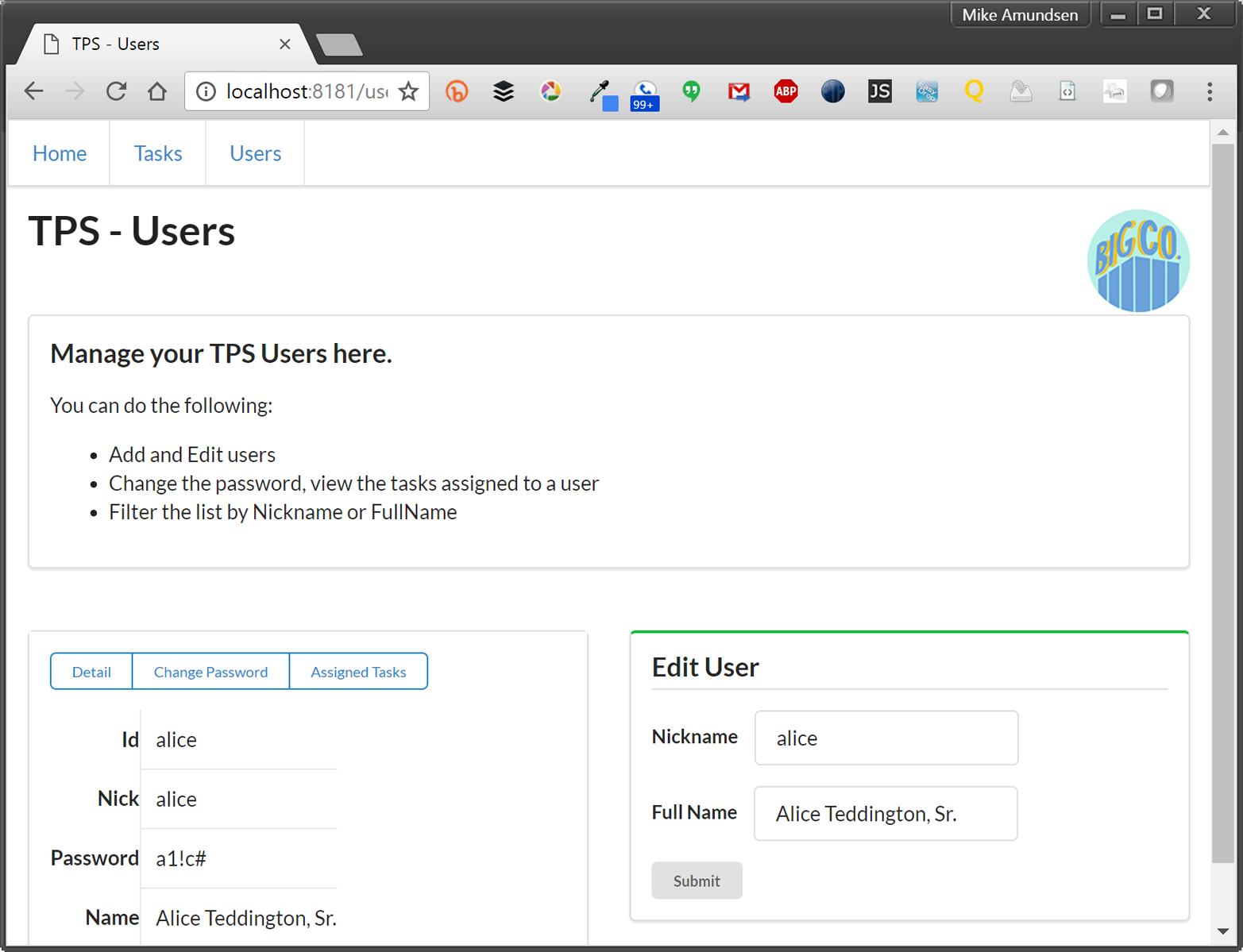

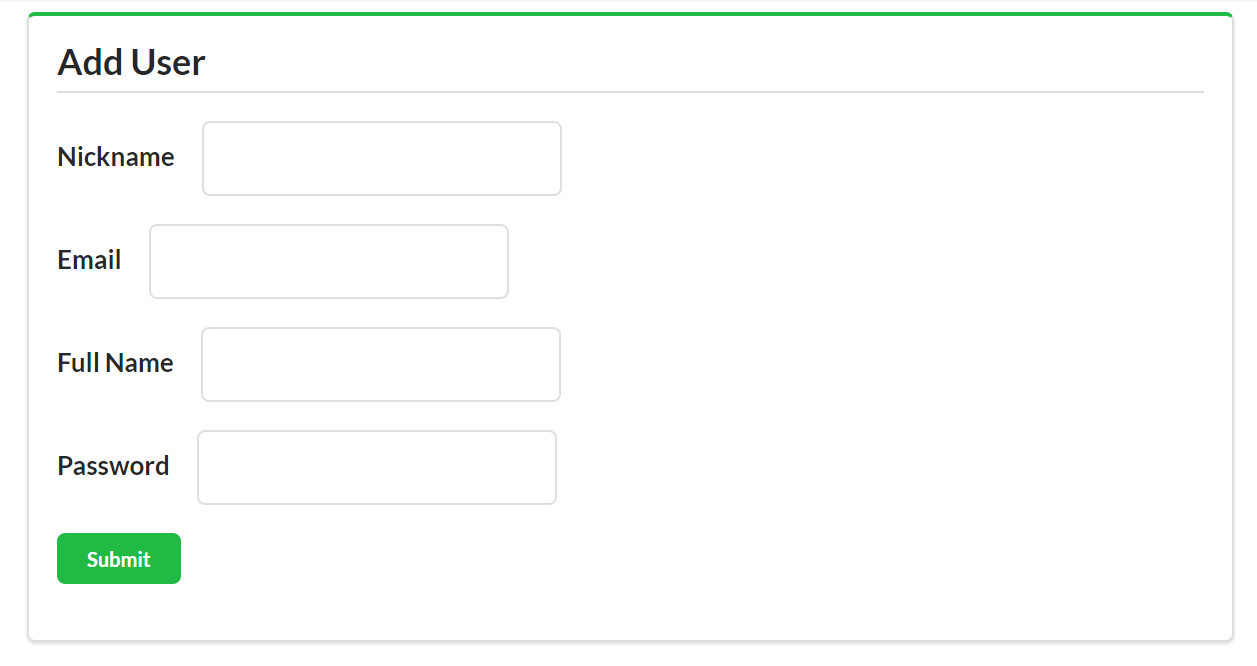

As you can see from Figure 1-4, the UI, while not likely to win any awards, is usable, practical, and reliable—all things we wish for in any application.

The source code for this version of the TPS can be found in the associated GitHub repo. A running version of the app described in this chapter can be found online.

Part of the success of the TPS app is that it is very simple. The web server delivers clean HTML that contains all the links and forms needed to accomplish the required use cases:

<ul><liclass="item"><ahref="https://rwcbook01.herokuapp.com/home/"rel="home">Home</a></li><liclass="item"><ahref="https://rwcbook01.herokuapp.com/task/"rel="collection">Tasks</a></li><liclass="item"><ahref="https://rwcbook01.herokuapp.com/user/"rel="collection">Users</a></li></ul>

For example, in the preceding code listing, you can see the HTML anchor tags (<a>…</a>) that point to related content for the current page. This set of “menu links” appear at the top of each page delivered by the TPS app:

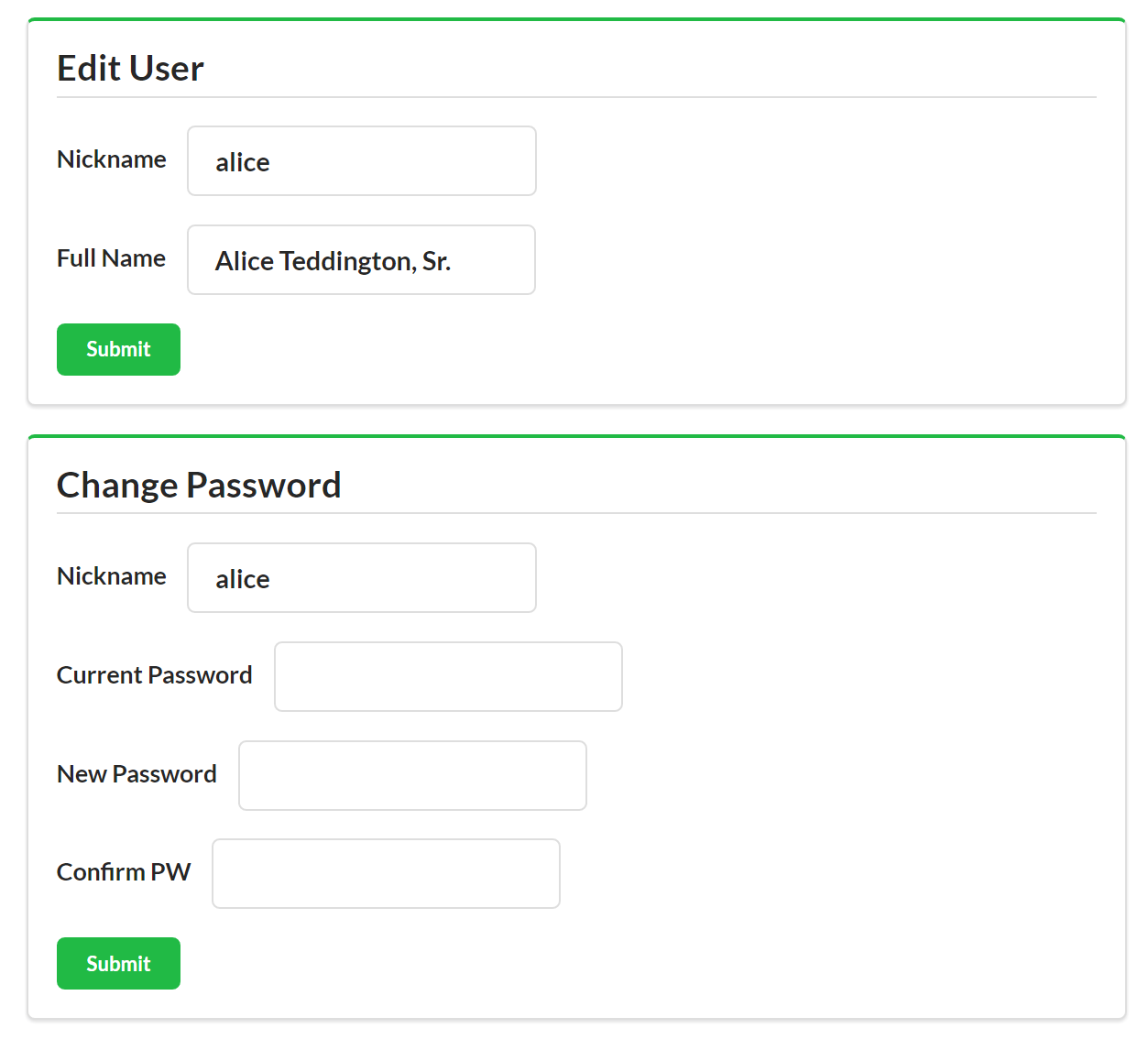

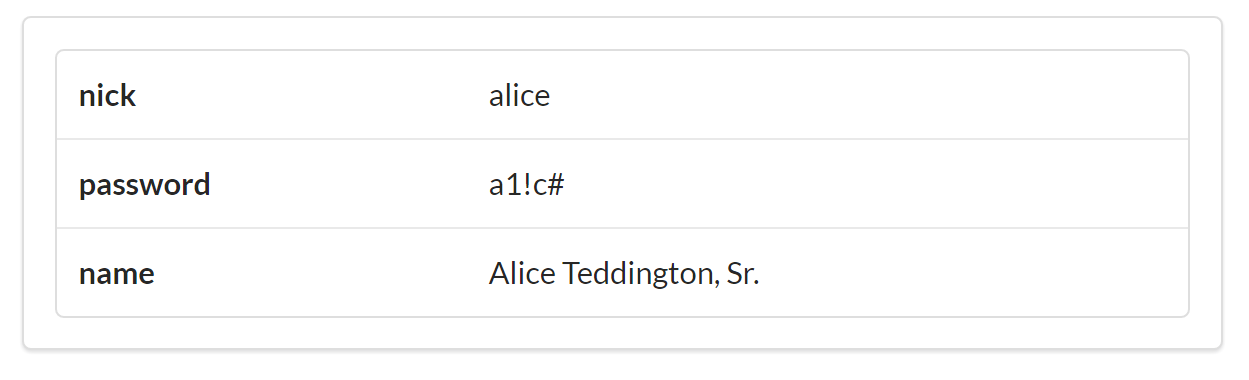

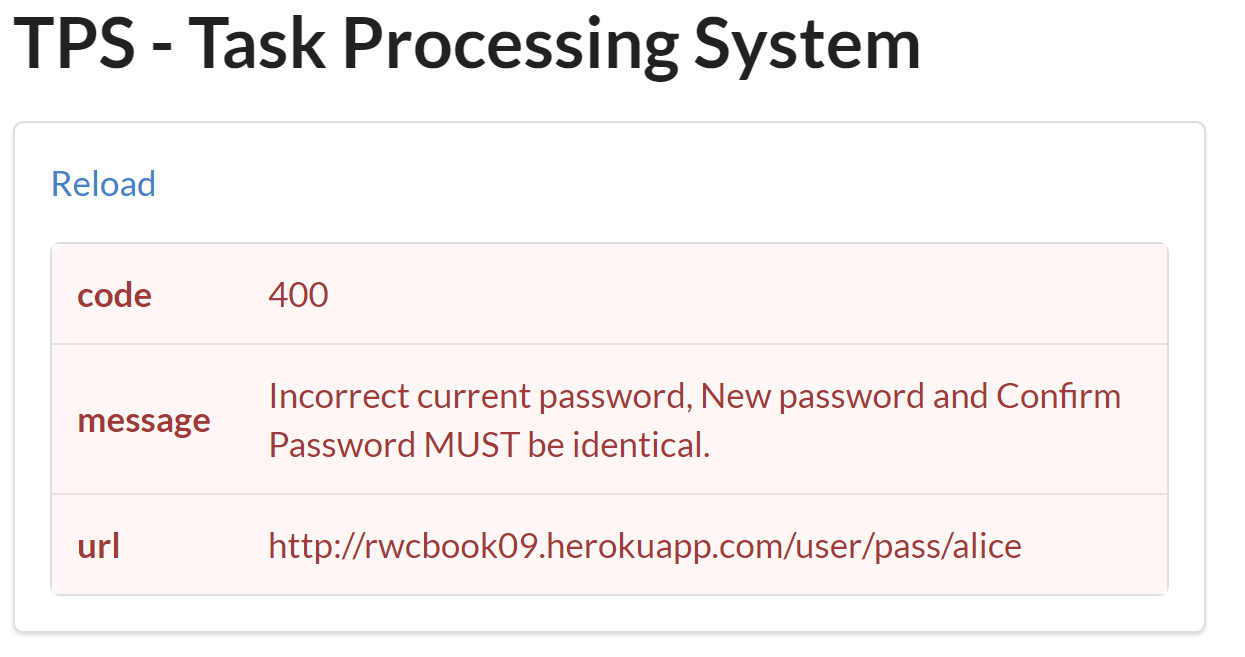

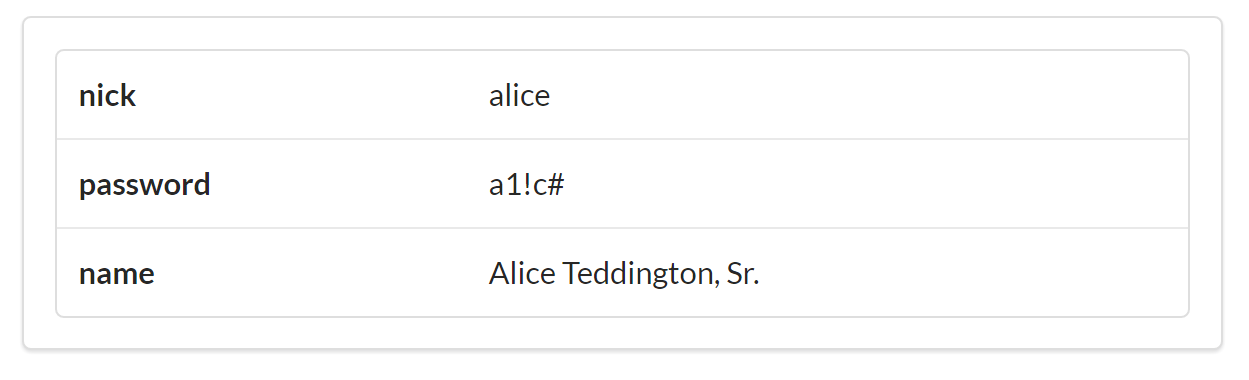

<divid="items"><div><ahref="https://rwcbook01.herokuapp.com/user/alice"rel="item"title="Detail">Detail</a><ahref="https://rwcbook01.herokuapp.com/user/pass/alice"rel="edit"title="Change Password">Change Password</a><ahref="https://rwcbook01.herokuapp.com/task/?assignedUser=alice"rel="collection"title="Assigned Tasks">Assigned Tasks</a></div><table><tr><th>id</th><td>alice</td></tr><tr><th>nick</th><td>alice</td></tr><tr><th>password</th><td>a1!c#</td></tr><tr><th>name</th><td>Alice Teddington, Sr.</td></tr></table></div>

Each user rendered in the list by the server contains a link pointing to a single user item, and that item consists of a pointer ( ), a handful of data fields (

), a handful of data fields ( ), and some links that point to other actions that can be performed for this user (

), and some links that point to other actions that can be performed for this user ( and

and  ). The links allow anyone viewing the page to initiate updates or password changes (assuming they have the rights to perform these actions):

). The links allow anyone viewing the page to initiate updates or password changes (assuming they have the rights to perform these actions):

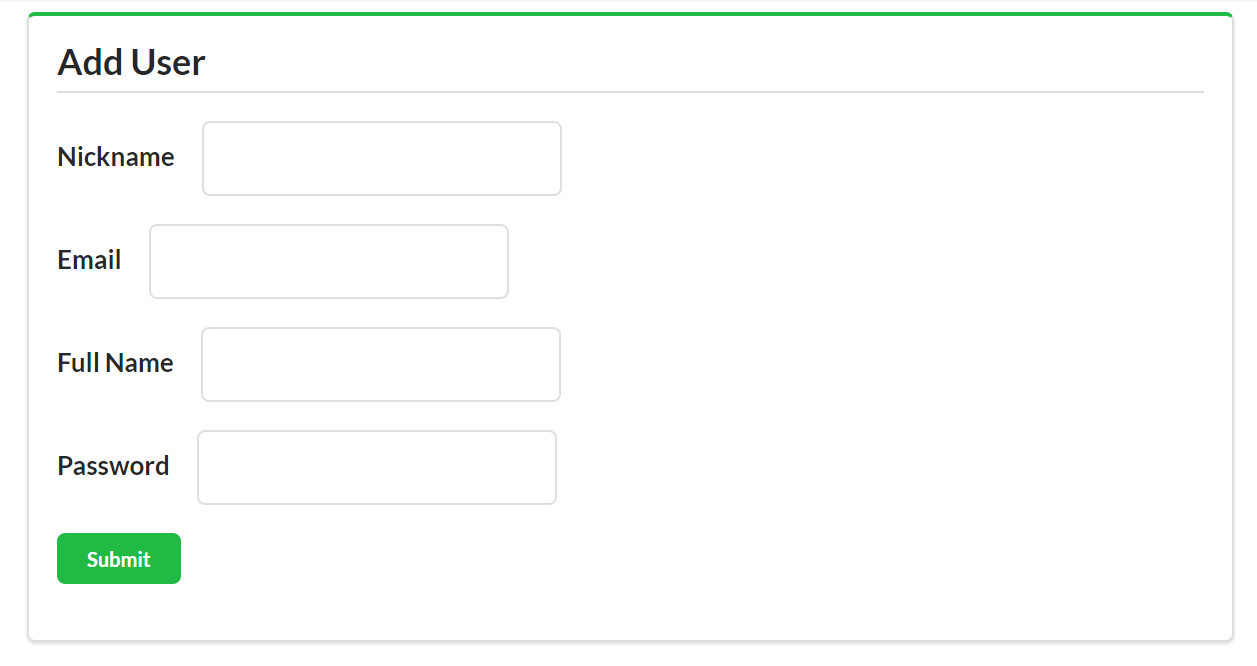

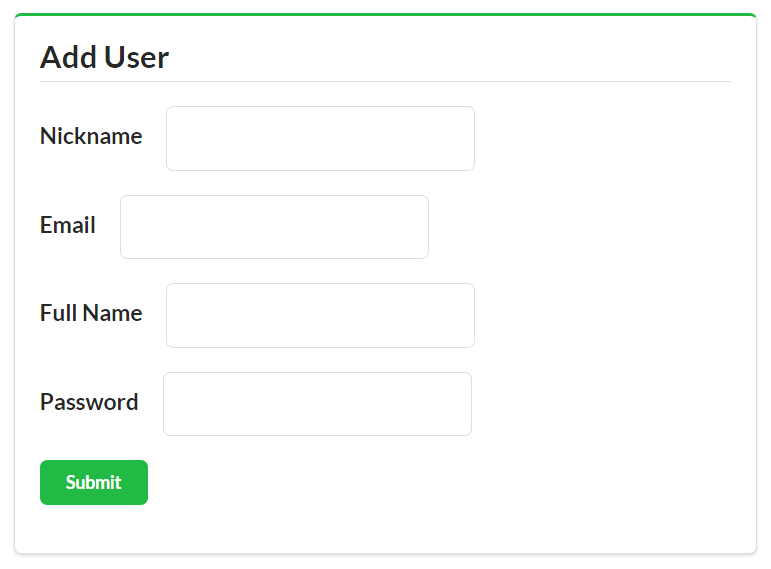

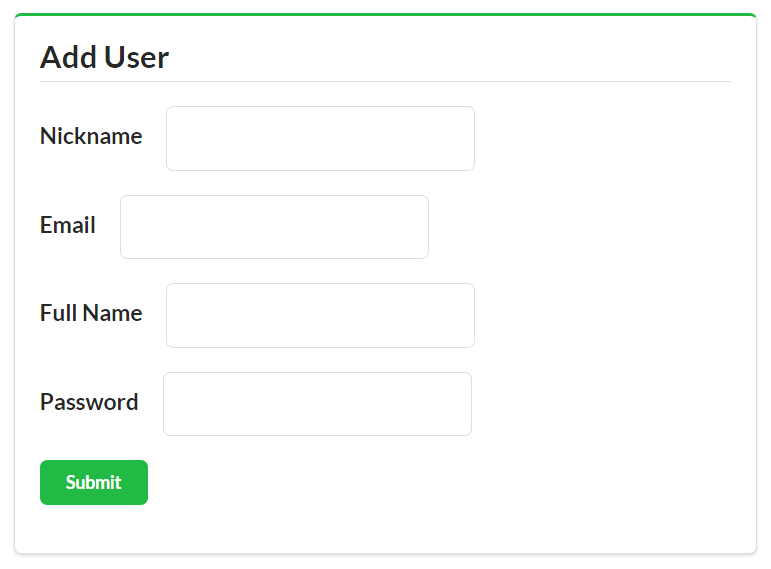

<!-- add user form --><formmethod="post"action="https://rwcbook01.herokuapp.com/user/"><div>Add User</div><p><label>Nickname</label><inputtype="text"name="nick"value=""required="true"pattern="[a-zA-Z0-9]+"/></p><p><label>Full Name</label><inputtype="text"name="name"value=""required="true"/></p><p><label>Password</label><inputtype="text"name="password"value=""required="true"pattern="[a-zA-Z0-9!@#$%^&*-]+"/></p><inputtype="submit"/></form>

The HTML for adding a user is also very simple (see the preceding HTML). It is a clean HTML <form> with associated <label> and <input> elements. In fact, all the input forms in this web app look about the same. Each <form> used for queries (safe operations) has the method property set to get, and each <form> used for writes (unsafe operations) has the method property set to post. That is the only important difference in the <form> settings for this implementation.

In HTTP, the POST method defines a nonidempotent, unsafe operation (RFC7231). Some of the actions in the TPS web app could be handled by an idempotent, unsafe operation, but HTML (still) does not support PUT or DELETE (the two idempotent, unsafe operations in HTTP). As Roy Fielding has pointed out in a 2009 blog post, it is certainly possible to get everything done on the Web with only GET and POST. But it would be a bit easier if some operations were idempotent since that makes replaying failed requests much easier to deal with. As of this writing, the several attempts to bring PUT and DELETE to HTML have been given a chilly reception.

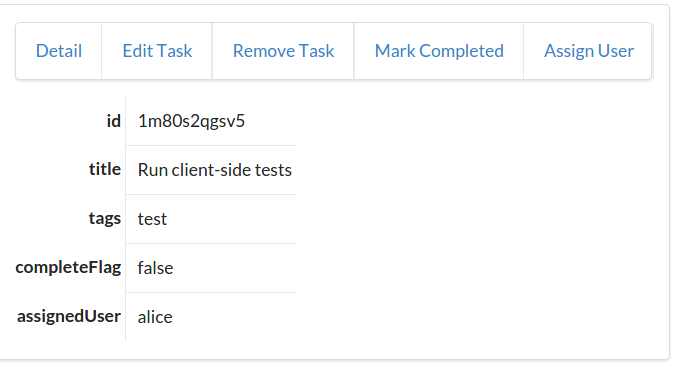

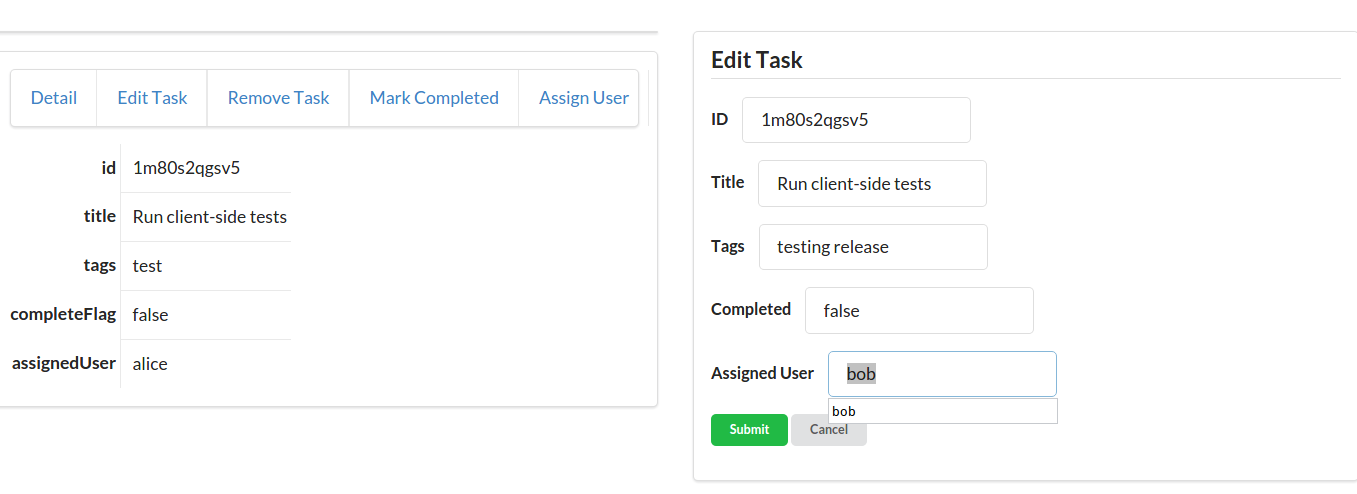

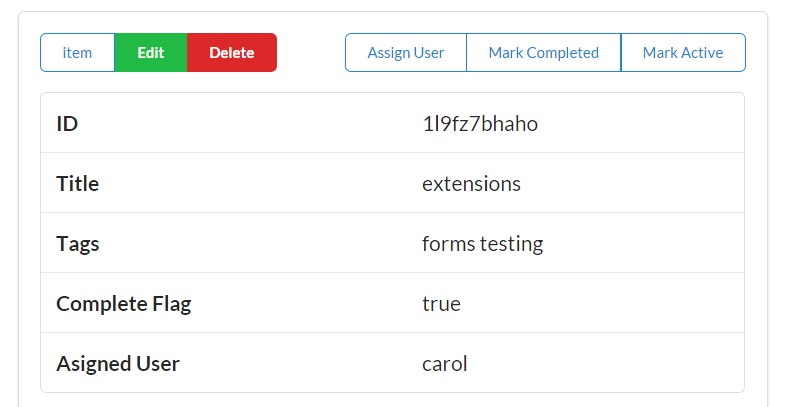

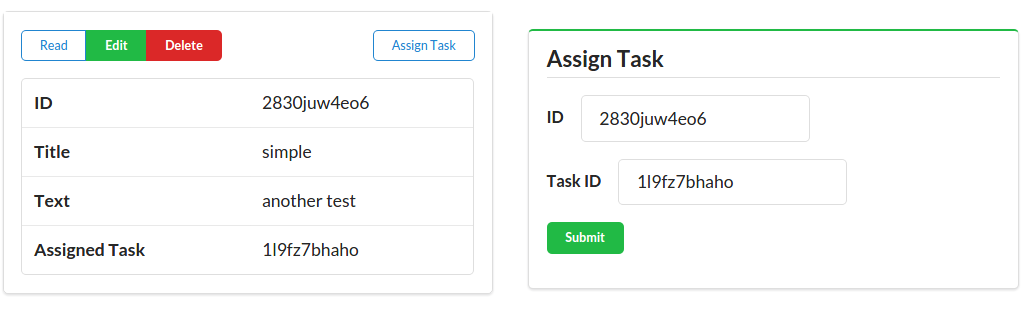

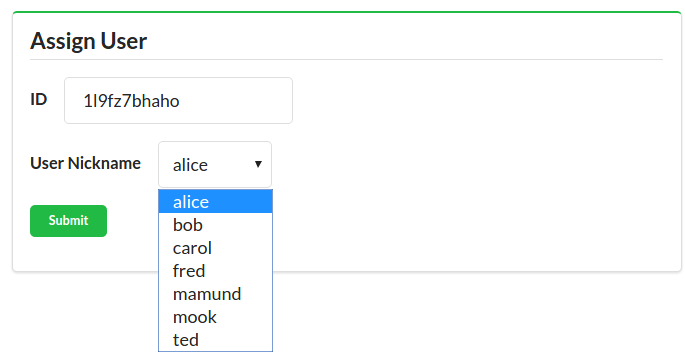

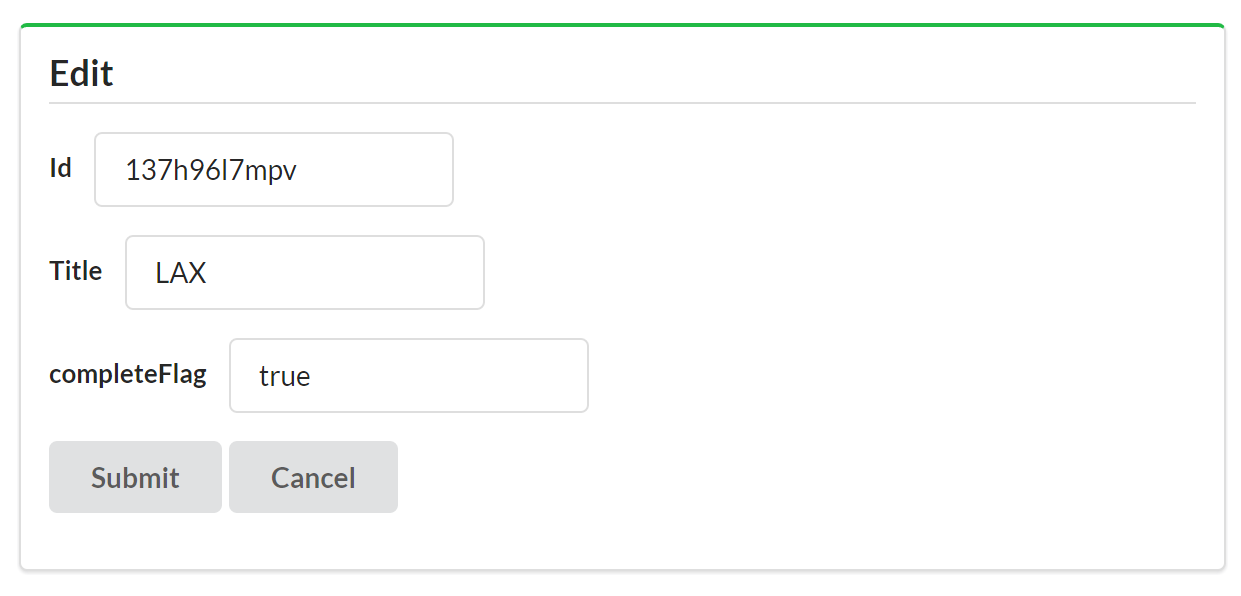

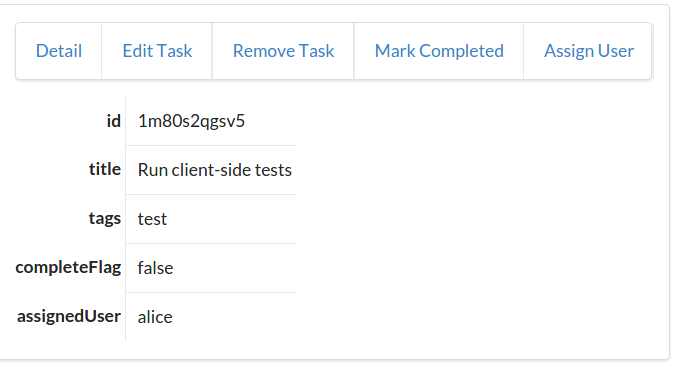

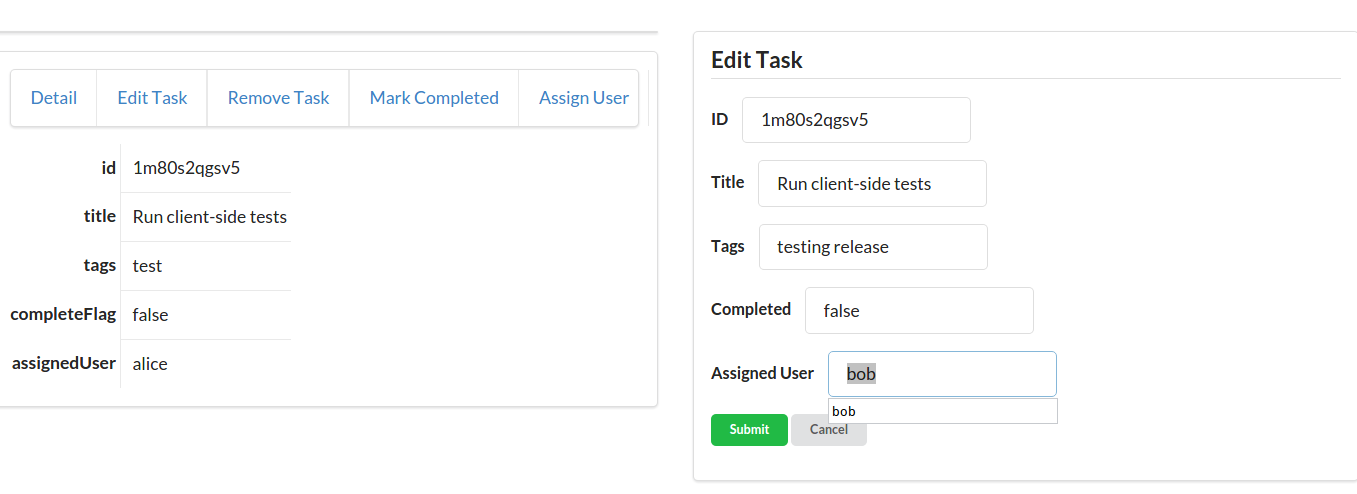

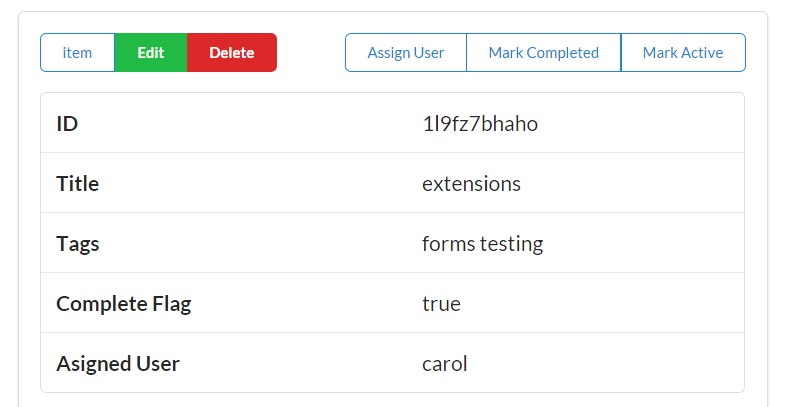

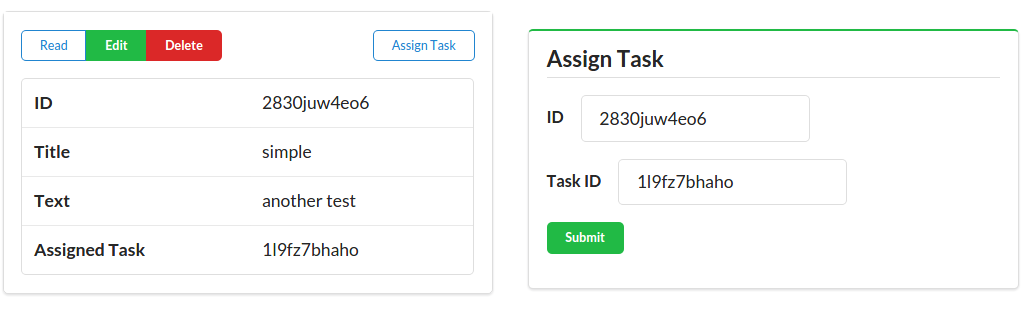

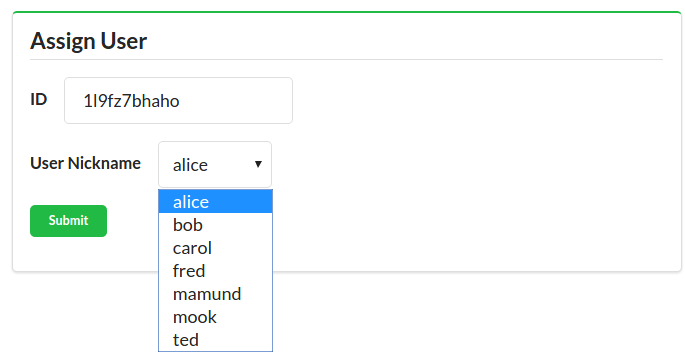

Along with the typical list, read, add, edit, and remove actions, the TPS web app includes actions like Change Password for users and Assign User for tasks. The following HTML is what drives the Assign User screen (Figure 1-5 shows the screen itself):

<!-- assign user form --><formmethod="post"action="//rwcbook01.herokuapp.com/task/assign/137h96l7mpv"><div>Assign User</div><p><label>ID</label><inputtype="text"name="id"value="137h96l7mpv"readonly="readonly"/></p><p><label>User Nickname</label><selectname="assignedUser"><optionvalue="">SELECT</option><optionvalue="alice">alice</option><optionvalue="bob"selected="selected">bob</option><optionvalue="carol">carol</option><optionvalue="mamund">mamund</option><optionvalue="ted">ted</option></select></p><inputtype="submit"/></form>

Note that this form uses the HTTP POST method. Since HTML only provides GET and POST, all unsafe actions (create, update, remove) are enabled using a POST form. We’ll have to deal with this later when we convert this HTML-only web app into a web API.

The client side of common web browser applications like this one is pretty uninteresting. First, this app has no client-side JavaScript dependencies. It runs fine without any JavaScript running locally. The app does take advantage of a handful of HTML5 user experience features such as:

HTML pattern to perform local input validations

HTML required to guide the user in filling out important fields

HTML readonly to prevent users from changing important FORM data

These, along with the use of a SELECT input control to supply users with valid input options, do a pretty good job of providing client-side interaction—all without relying on custom JavaScript. The CSS styling here is handled by a library called Semantic UI. It supports lots of UI design elements while still supporting reasonable HTML markup. Semantic UI libraries also support JavaScript-driven enhancements that may be used in future updates for this app.

It turns out that, at least for this web app, the client-side experience is pretty boring to talk about. There just isn’t much here to cover! That’s actually good news. The common web browser is designed to accept HTML markup and—based on the response links and forms—provide a solid user experience without the requirement of writing imperative JavaScript code.

Here are a few other observations:

Because there is no custom JavaScript code for this client, there are almost no bugs. It is possible that the server will emit broken HTML, of course. And a poorly implemented CSS rule can cause the UI to become unusable. But the fewer lines of code involved, the less likelihood a bug will be encountered. And this app has no imperative client code.

Because the app is limited to HTML-only responses, all data updates such as create, update, and delete, along with the domain-specific actions like assign-user and change-password, are handled using HTML POST requests. This is, strictly speaking, not a bug, but it does run counter to the way most web developers think about actions on the Web. The use of the nonidempotent POST action does introduce a slight complication in edge cases where users are not sure if a POST was successful and will attempt the action a second time. In this case, it is up to the server to prevent double-posting of adds, etc.

One of the nice things about using HTML as a response format is that it contains support for a wide range of hypermedia controls: links and forms. The TPS responses include the <a>…</a> tag to handle simple immutable links, <form method="get"> elements to handle safe searches and queries, and <form method="post"> controls to handle all the unsafe write operations. Each response contains all the details for passing arguments to the server. The <input> elements even include simple client-side validation rules to validate user inputs before the data is sent to the server. Having all this descriptive information in the server responses makes it easy for the browser to enforce specific input rules without having any custom client-side code.

Despite the reality of a “bug-free” app and fully functional write operations via POST, the user experience for this web app is still limited. This might be acceptable within a single team or small company, but if BigCo plans to release this app to a wider public—even to other teams within the company—a more responsive UX would be a good idea.

So, now that we have a baseline web app to start from, let’s take a look at how BigCo’s Bob and Carol can take this app to the next level by creating a server-side web API that can be used to power a standalone web client application.

Often the next logical step in the life of an HTML-based web application is to publish a standalone web API—or application programming interface—that can be used by client applications directly. In the dialogue at the start of this chapter, Bob has taken on the task of leading the server-side team that will design, implement, and publish the Task Processing System API while Carol’s team will build the client applications that consume that API.

The source code for the JSON-based RPC-CRUD web API version of the TPS can be found in the associated GitHub repo. A running version of the app described in this chapter can be found online.

Let’s first do a quick rundown on the design process for a typical web API server followed by a review of the changes needed to convert our existing TPS HTML-only web app into a proper JSON-based RPC-CRUD web API.

The common practice for creating web APIs is to publish a fixed set of Remote Procedure Call (RPC) endpoints expressed as URLs that allow access to the important functionality of the original application. This common practice also covers the design of those URLs, the serialized objects that are passed between server and client, and a set of guidelines on how to use HTTP methods, status codes, and headers in a consistent manner. For most web developers today, this is the state of the art for HTTP.

At this point in many discussions, someone mentions the word “REST,” and a fight (literally or actually) may break out between people who want to argue about the proper way to design URLs, which HTTP headers you should not use, why it is acceptable to ignore some HTTP status codes, and so forth. Disputes about the content and meaning of IETF documents specifying the HTTP protocol, and disagreements about the shape of URLs are all subplots to the main adventure: building solid web applications. Arguing about URLs instead of discussing which interactions are needed to solve a use case is missing the point. HTTP is just tech, and REST is just a style (like punk rock or impressionist painting, etc.). Disagreeing on what is true REST or proper HTTP is a classic cases of Parkinson’s Law of Triviality—debating the trivial points while ignoring the important issues.

It turns out that designing and implementing reliable and flexible applications that live on the Web is nontrivial. It takes a clear head, an eye for the future, and a willingness to spend time engaged in systems-level thinking. Instead of focusing on those hard problems, some get caught up in disagreements on the characters in a URL or other silliness. I plan to avoid those pitfalls and just focus on the functionality.

What follows in this section is a retelling of the common practice for HTTP-based web APIs. It is not, as I will illustrate in the ensuing chapters, the only way to implement services on the Web. Once we get beyond this particular design and implementation detail we can move on to explore additional approaches.

Essentially, we need to design the web API. Typically this means (1) defining a set of objects that will be manipulated via the API, and (2) applying a fixed set of actions on those objects. The actions are Create, Read, Update, and Delete—the CRUD operations. In the case of the TPS example, the list of published objects and actions would look something like that shown in Table 1-1.

| URL | Method | Returns Object | Accepts Object |

|---|---|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

This looks fairly simple: four endpoints and about ten operations (we’ll handle the missing one in a minute).

As you can see from the table, there are essentially two forms of the object URL: list and item. The list form of the URL contains the object name (Task or User) and supports (1) HTTP GET to return a list of objects, and (2) HTTP POST to create a new object and add it to the list. The item form of the URL contains both the object name (Task or User) and the object’s unique id value. This URL supports (1) HTTP GET to return a single object, (2) HTTP PUT to support updating the single object, and (3) HTTP DELETE to support removing that object from the collection.

However, there are some exceptions to this simple CRUD approach. Looking at the table, you’ll notice that the TPS User object does not support the DELETE operation. This is a variant to the common CRUD model, but not a big problem. We’d need to document that exception and make sure the API service rejects any DELETE request for User objects.

Also, the TPS web app offers a few specialized operations that allow clients to modify server data. These are:

TaskMarkCompletedAllow client apps to mark a single Task object with the completeFlag="true"

TaskAssignUserAllow client apps to assign a User.nick to a single Task object

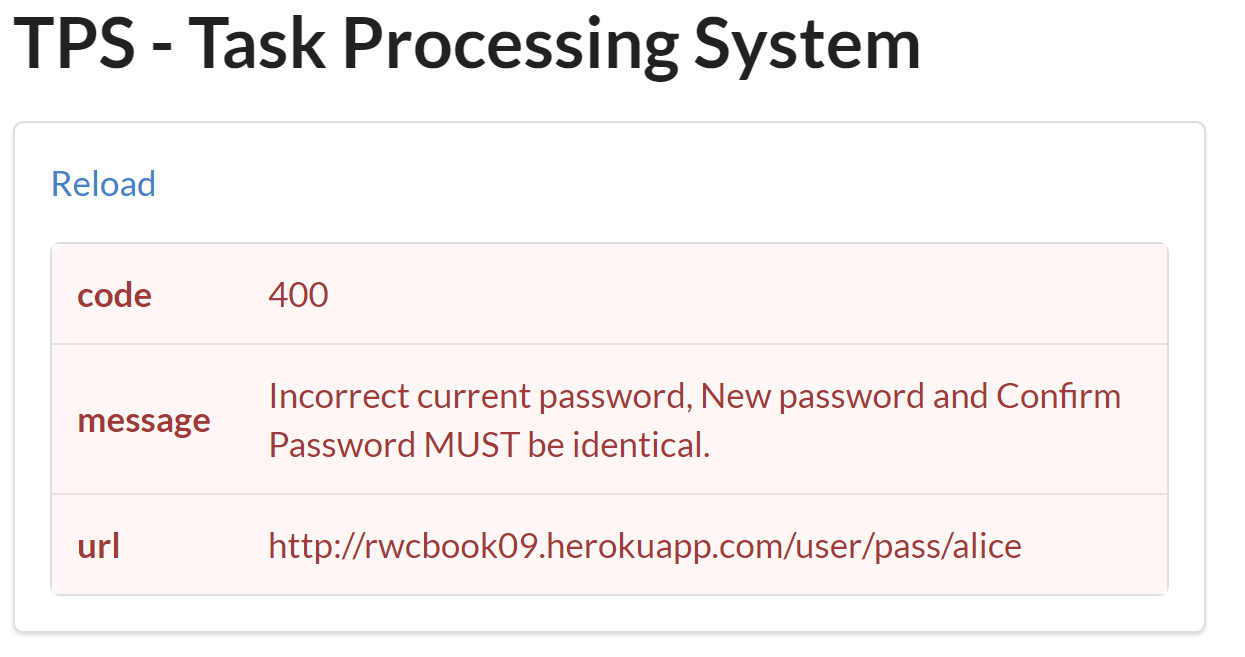

UserChangePasswordAllow client apps to change the password value of User object

None of the operations just listed falls neatly into the CRUD pattern, which happens quite often when implementing web APIs. This complicates the API design a bit. Typically, these special operations are handled by creating a unique URL (e.g., /task/assign-user or /user/change-pw/) and executing an HTTP POST request with a set of arguments to pass to the server.

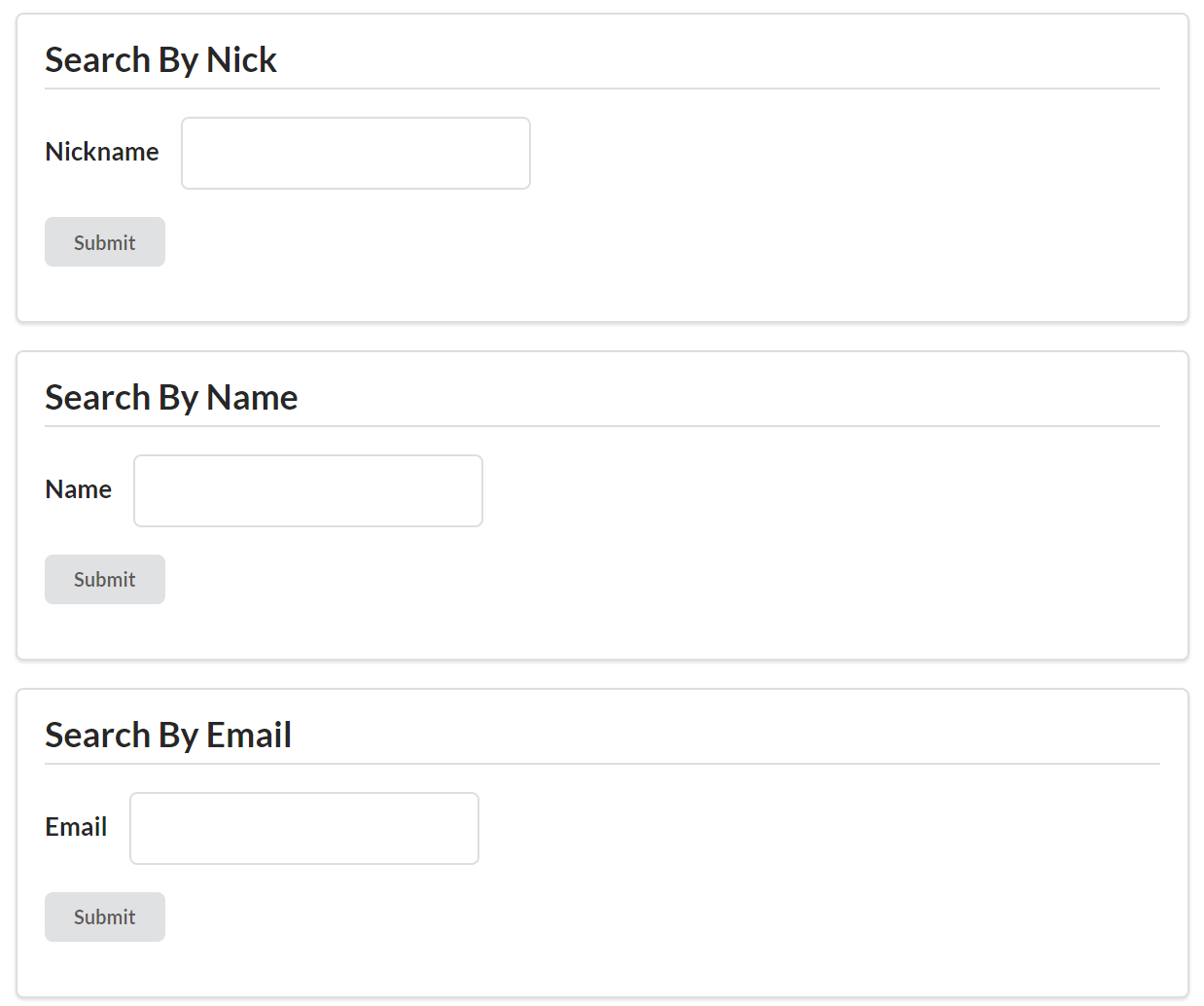

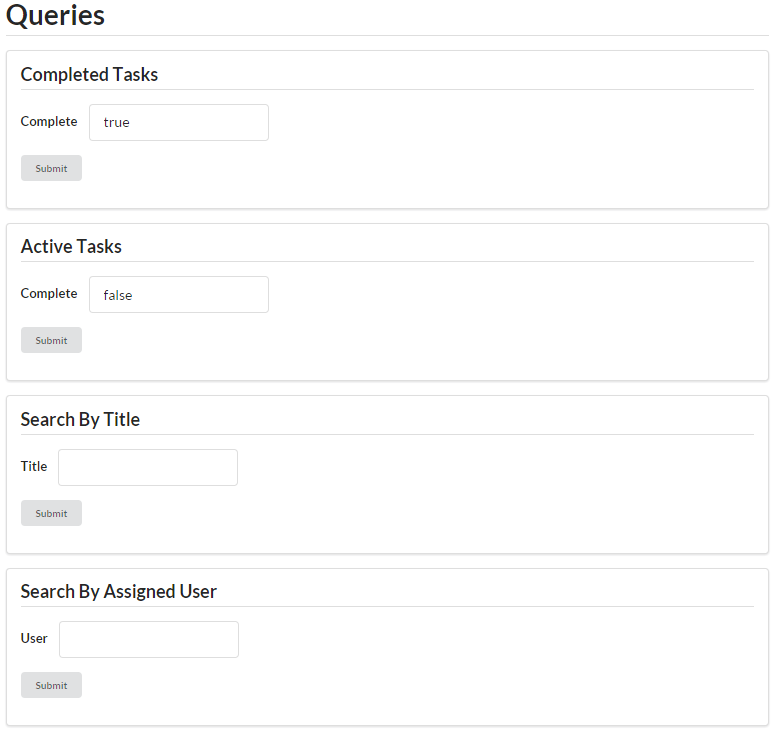

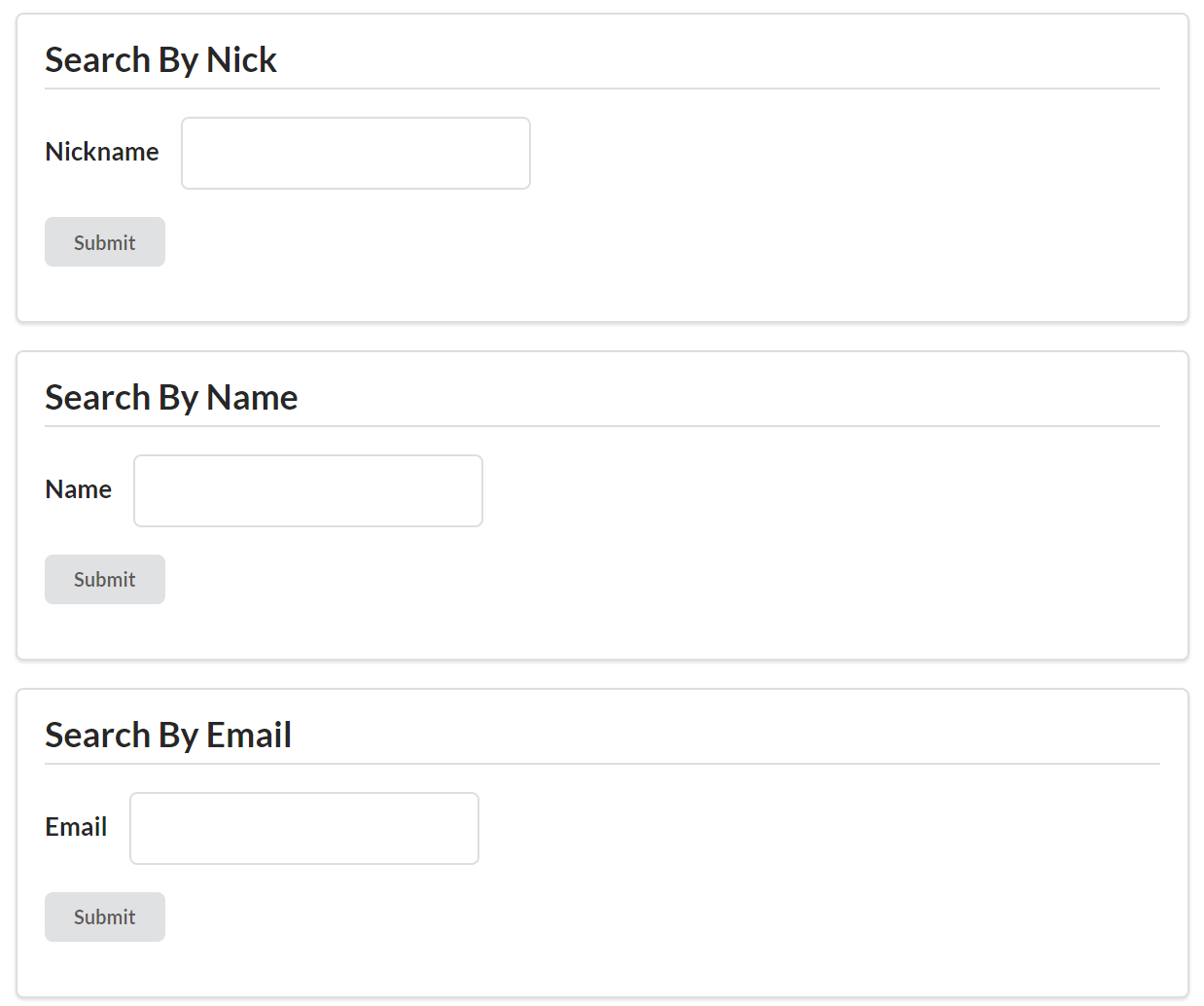

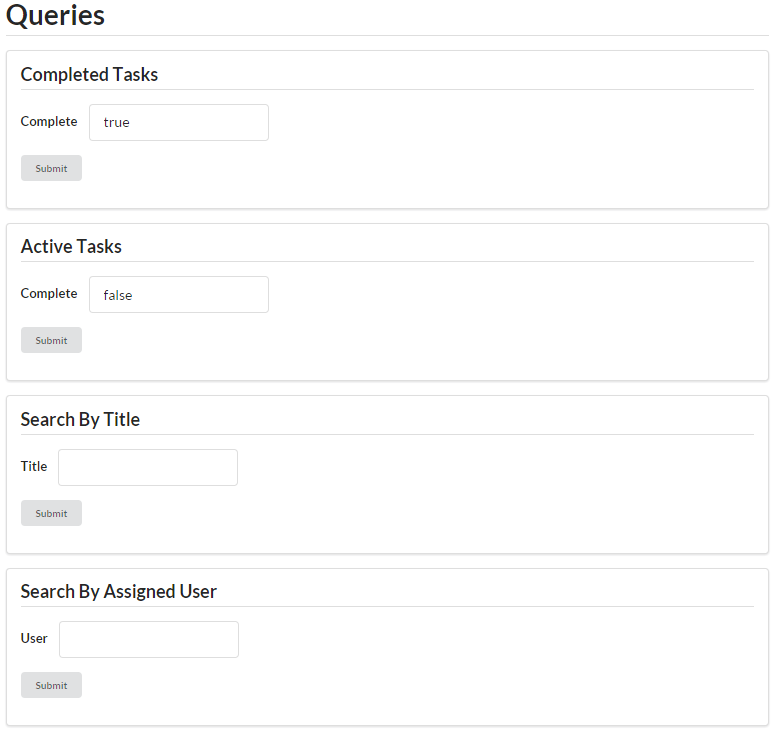

Finally, the TPS web API supports a handful of filter operations that need to be handled. They are:

TaskFilterByTitleReturn a list of Task objects whose title property contains the passed-in string value

TaskFilterByStatusReturn a list of Task objects whose completeFlag property is set to true (or set to false)

TaskFilterByUserReturn a list of Task objects whose assignedUser property is set to the passed-in User.nick value

UserFilterByNickReturn a list of User objects whose nick property contains the passed-in string value

UserFilterByNameReturn a list of User objects whose name property contains the passed-in string value

The common design approach here is to make an HTTP GET request to the object’s list URL (/task/ or /user/) and pass query arguments in the URL directly. For example, to return a list of Task objects that have their completeFlag set to true, you could use the following HTTP request: GET /task/?completeFlag=true.

So, we have the standard CRUD operations (nine in our case), plus the special operations (three), and then the filter options (five). That’s a fixed set of 17 operations to define, document, and implement.

A more complete set of API Design URLs—one that includes the arguments to pass for the write operations (POST and PUT)—would look like the one in Table 1-2.

| Operation | URL | Method | Returns | Inputs |

|---|---|---|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

The URLs in the Task System API example are just one of a number of ways to design URLs for a web API. There are several books (Allamaraju, Masse) that devote pages to the proper way to design a URL for human use. In truth, machines don’t care about the shape of the URL—they only care that it follows the standards for valid URLs (RFC3986) and that each URL contains enough information for the service to route the request to the right place for processing. In this book, you’ll find a wide range of URL designs, none of which are meant to be the single right way to design URLs.

By now, you’ve probably noticed that what we have done here is document a set of Remote Procedure Calls (RPCs). We’ve identified the actions using URLs and listed the arguments to pass for each of them. The arguments are listed in the table but it is worth calling them out separately, too. We’ll need to share these with API developers so that they know which data element to pass for each request.

| Agument Name | Operation(s) |

|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Notice that the last three arguments in the table (oldPass, newPass, and checkPass) do not belong to any TPS objects (e.g., Task or User). They only exist in order to complete the UserChangePassword operation. Usually, RPC-CRUD-style APIs restrict data-passing to arguments that belong to some defined object. But, as we’ve seen already, there are exceptions to this general rule. This is another challenge you’ll encounter when attempting to implement web APIs in the RPC-CRUD style.

Some RPC-CRUD API designs will document an additional set of objects just for passing arguments. I’ll not be covering that here, but it is an option you may encounter when working with other RPC-CRUD APIs.

It is not enough to just document which data arguments are passed with each HTTP request. It is also important to document the format used to pass arguments from the client to the service when passing HTTP bodies. There is no set standard for data-passing with JSON-based APIs, but the typical option is to pass arguments as JSON dictionary objects. For example, the TaskAdd operation in Table 1-2 lists two inputs: title and completeFlag. Using a JSON dictionary to pass this data would look like this:

POST /task/ HTTP/1.1

content-type: application/json

...

{

"title" : "This is my job",

"completeFlag" : "false"

}

Even though the most common way to pass data from client to server on the WWW is using the common HTML FORM media type (application/x-www-form-urlencoded), it is limited to sending simple name–value pairs from client to server. JSON is a bit more flexible than FORM data since it is possible to pass arbitrarily nested trees of data in a single request. However, for this implementation, we’ll use the typical JSON dictionary approach.

That covers the endpoints, arguments, and format details for sending data from client to server. But there is another important interface detail missing here—the format of the responses. We’ll pick that up in the next section.

Another important element of the RPC-CRUD style of web API practice is to identity the format and shape of the serialized objects passed from server to client and back again. In the case of the TPS web API, Bob has decided to use simple JSON-serialized objects to pass state back and forth. Some implementations will use nested object trees to pass between parties, but BigCo’s serialized objects are rather simple for the moment.

Scanning the Returns column of Table 1-2, you’ll notice there are four different return elements defined:

TaskList

Task

UserList

User

These are the return collections/objects that need to be explicitly defined for API developers. Lucky for us, the TPS web API has only two key objects as that will make our definition list rather short.

Tables 1-4 and 1-5 define the properties for the Task and User objects in our TPS web API.

| Property | Type | Status | Default |

|---|---|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| Property | Type | Status | Default |

|---|---|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

All fields are defined as "string" types. This is just to simplify the implementation of the TPS API for the book. While some APIs employ schemas or other means of “strong-typing” data passed between client and server, these add another level of complication to the implementation. We’ll talk more about this in Chapter 7. Also, the stored record layout includes dateCreated and dateUpdated fields that are not listed in our design here. These were left out of the tables for clarity.

For our TPS app, we’ll make things easy and define the TaskList and UserList return objects as simply JSON arrays of the Task and User objects respectively. Following are examples of each object:

/* TaskList */{"task":[{"id":"dr8ar791pk","title":"compost","completeFlag":false,"assignedUser":"mamund"}...moretasksappearhere...]}/* UserList */{"user":[{"nick":"lee","name":"Lee Amundsen","password":"p@ss"}...moreuserrecordsappearhere...]}

So we’ve defined the following for our TPS web API:

URLs and HTTP methods for each RPC endpoint

Arguments and format for passing data to the service

JSON objects returned to the clients

There are a few other implementation details that we’ll skip over here (handling errors, HTTP return codes, etc.). These would all appear in a complete documentation set for RPC-CRUD APIs. For now, we’ll make some assumptions and move on to some implementation details for creating the running TPS web API.

We need to make some changes to the existing TPS website/app in order to implement our JSON web API. We don’t need to start from scratch (although in some real-life cases that might be the way to go). For our example, we’ll just fork the existing HTML web implementation to create a new standalone codebase that we can alter and turn into a functioning JSON-based RPC-CRUD web API.

The source code for the JSON-based RPC-CRUD web API version of the TPS can be found in the associated GitHub repo. A running version of the app described in this chapter can be found online.

We have two important things to do here. First, we need to modify the TPS website to get it to stop emitting HTML and start emitting valid JSON responses. That won’t be too tough since the TPS server has some smart tech built in to make representing stored data in various media types relatively easy.

We’ll dig into the tech for representing responses in Chapter 3.

The second job is to add support for all the HTTP requests documented in Table 1-2. The good news is most of those operations are already supported by the TPS website app. We just need to add a few of them (three, actually) and clean up some of the server-side code to make sure we have all the operations working properly.

So, let’s get started.

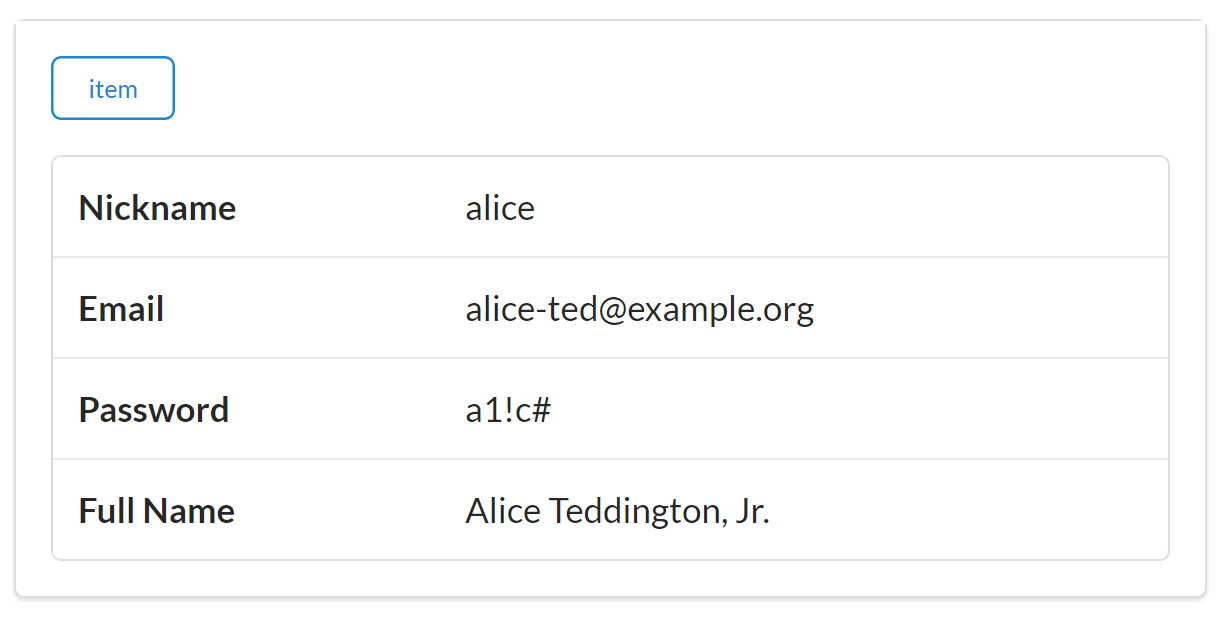

The TPS website/app emits HTML for all responses. Instead of HTML (text/html), our TPS web API will emit JSON (application/json) for all responses. Another important change we’ll make is to limit the service responses to only send the actual stored Task and User objects and properties. This will follow along with the information documented in Table 1-2 and the details in Tables 1-4 (Task Object Properties) and 1-5 (User Object Properties).

Based on that information, here is an example of the JSON output from a request to the /task/ URL:

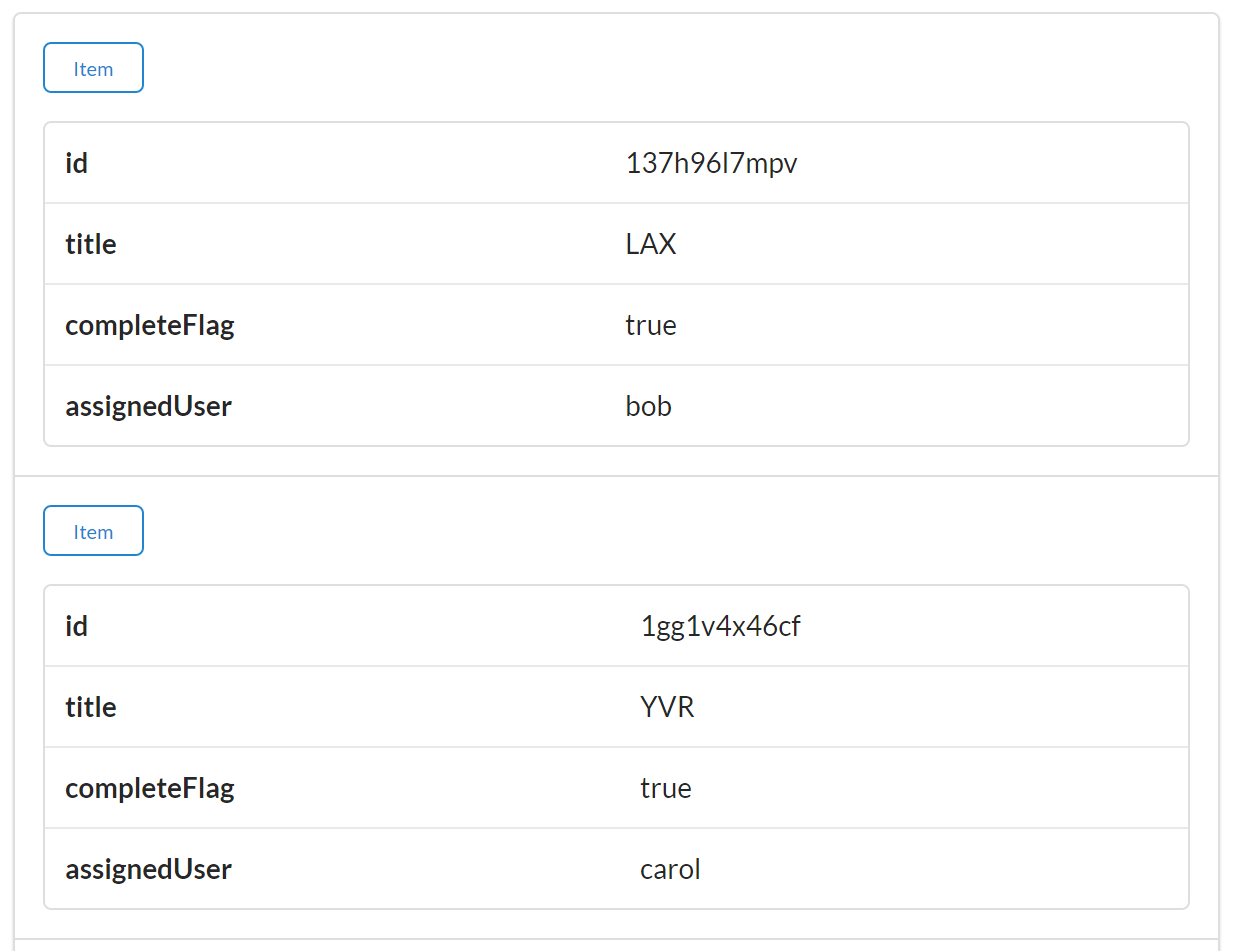

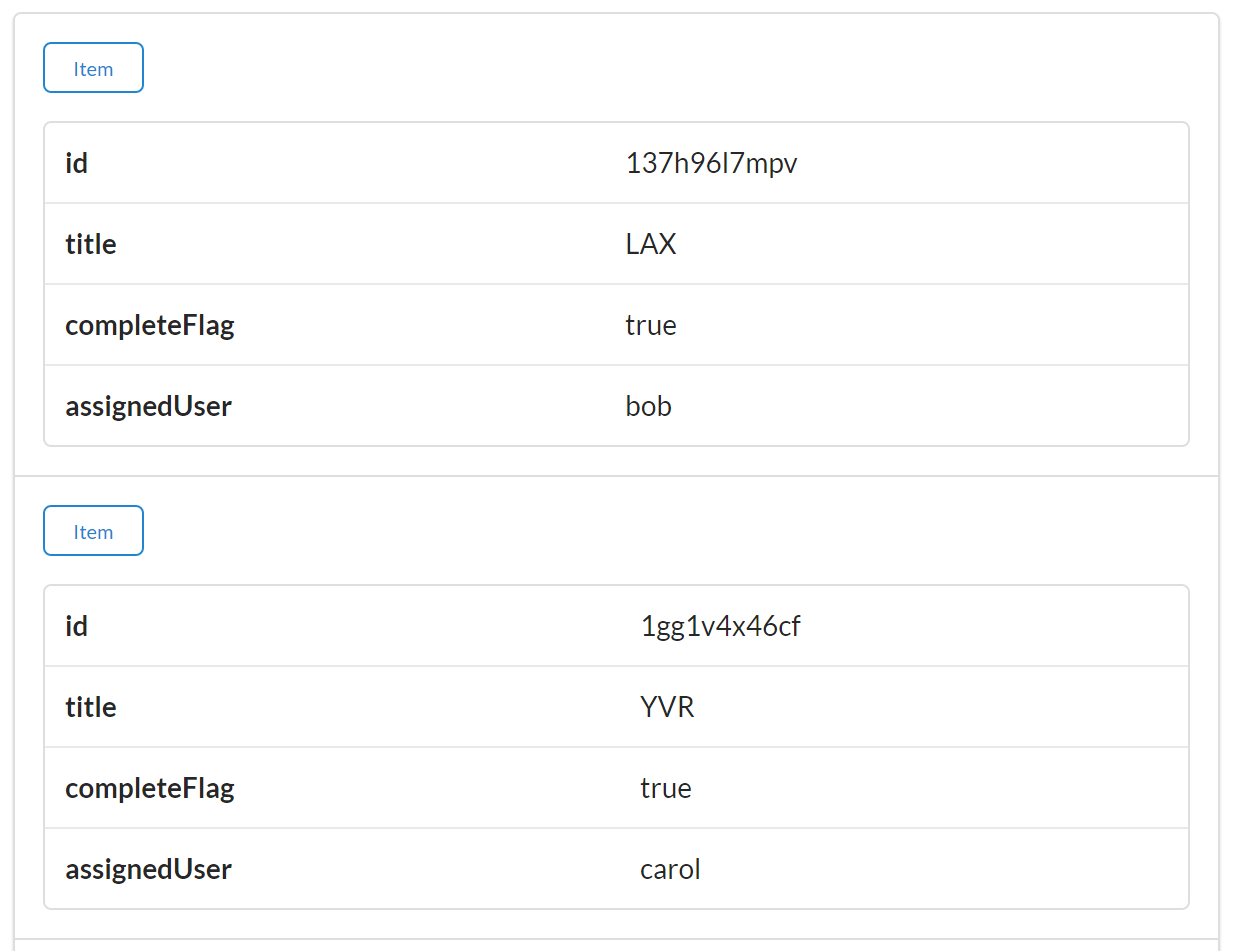

{"task":[{"id":"137h96l7mpv","title":"Update TPS Web API","completeFlag":"true","assignedUser":"bob"},{"id":"1gg1v4x46cf","title":"Review Client API","completeFlag":"false","assignedUser":"carol"},{"id":"1hs5sl6bdv1","title":"Carry Water","completeFlag":"false","assignedUser":"mamund"}...moretaskrecordshere]}

Note that there are no links or forms in the JSON responses. This is typical for RPC-CRUD style API responses. The URLs and action details are included in the human-readable documentation for this project (in GitHub) and will be hardcoded into the client application calling this API.

The human-readable documentation for this RPC-CRUD API can be found in the GitHub repository and we’ll cover the details of creating a JSON-based RPC-CRUD client based on those docs in Chapter 2, JSON Clients.

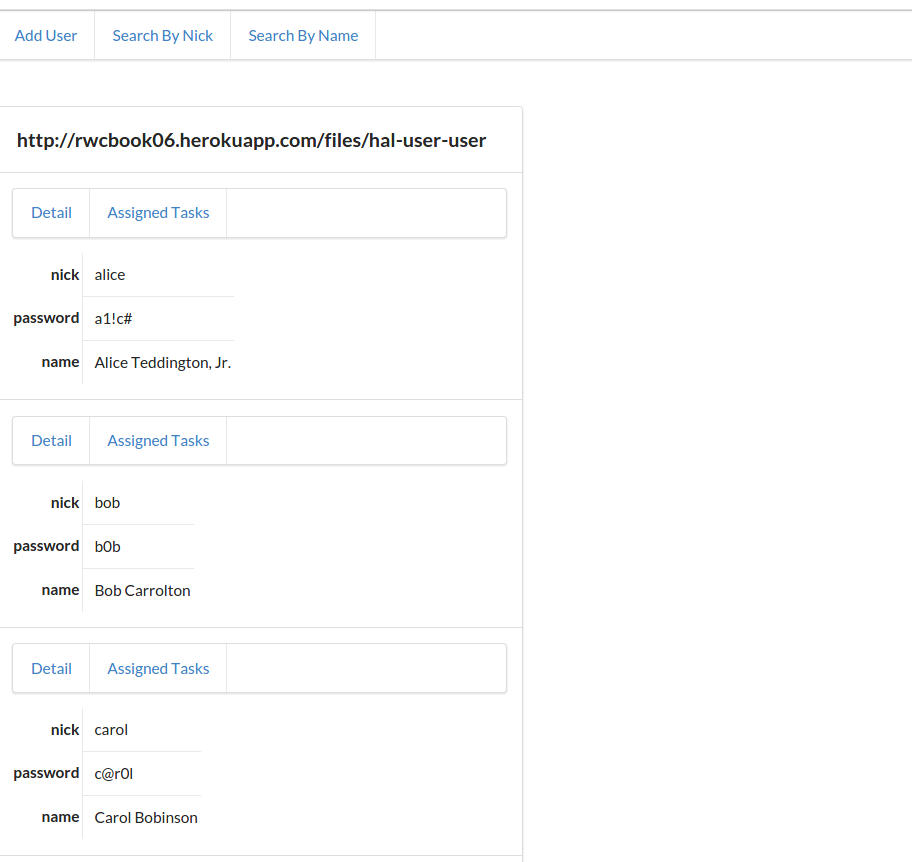

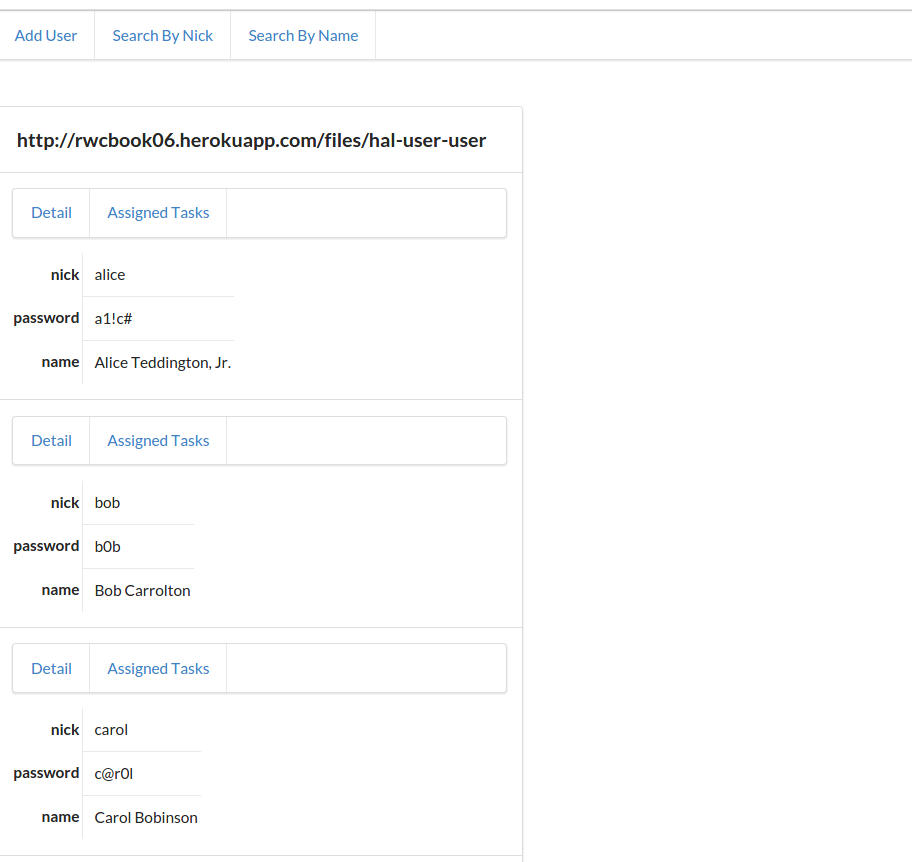

As you would expect, the responses for calls to the /user/ endpoint look similar to those from the /task/ URL:

{"user":[{"id":"alice","nick":"alice","password":"a1!c#","name":"Alice Teddington, Sr."},{"id":"bob","nick":"bob","password":"b0b","name":"Bob Carrolton"},....moreuserrecordshere]}

So, that covers the service responses. Next, we need to make sure all the actions documented in Table 1-2 are covered in the code.

The TPS HTML web app supported edit and remove operations via the HTML POST method. While this is perfectly fine from an HTML and HTTP point of view, it runs counter to the common practice that has grown up around the JSON-based RPC-CRUD pattern. In CRUD-style APIs, Edit operations are handled by the HTTP PUT method and Remove operations are handled by the HTTP DELETE operations.

To make our TPS web API compliant, we need to add two things:

Support for PUT and DELETE on /task/{id} URLs

Support for PUT on the /user/{nick} URLs

Since the TPS service already supports the actions of Update and Remove for Tasks (and Update for Users), the only thing we need to add to the server-side code is support for executing those actions via HTTP PUT and DELETE. A quick look at the code from our TPS server (with the functionality updated) is provided in Example 1-1.

...case'POST':if(parts[1]&&parts[1].indexOf('?')===-1){switch(parts[1].toLowerCase()){/* Web API no longer supports update and remove via POSTcase "update": updateTask(req, res, respond, parts[2]); break; case "remove": removeTask(req, res, respond, parts[2]); break; */case"completed":markCompleted(req,res,respond,parts[2]);break;case"assign":assignUser(req,res,respond,parts[2]);break;default:respond(req,res,utils.errorResponse(req,res,'Method Not Allowed',405));}}else{addTask(req,res,respond);}break;/* add support for update via PUT */case'PUT':if(parts[1]&&parts[1].indexOf('?')===-1){updateTask(req,res,respond,parts[1]);}else{respond(req,res,utils.errorResponse(req,res,'Method Not Allowed',405));}break;/* add support for remove via DELETE */case'DELETE':if(parts[1]&&parts[1].indexOf('?')===-1){removeTask(req,res,respond,parts[1]);}else{respond(req,res,utils.errorResponse(req,res,'Method Not Allowed',405));}break;...

Don’t worry if this isolated code snippet is hard to parse in your head. The complete source code for the JSON-based RPC-CRUD web API version of the TPS can be found in the associated GitHub repo.

As you can see from the preceding code snippet, the HTTP handler for Task data no longer supports the Update and Remove actions via POST ( ). They are now accessed via HTTP

). They are now accessed via HTTP PUT ( ) and

) and DELETE ( ). A similar change was made to support Update for

). A similar change was made to support Update for User data, too.

To be complete, the web API service should also be updated to no longer serve up the assignUser, markCompleted, and changePassword pages. These were provided by the TPS website/app to allow users to enter data via HTML standalone <form> responses. Because our web API doesn’t support <form>, we don’t need these pages anymore.

Here is the TPS web API Task handler with the assignUser and markCompleted <form> pages turned off:

....case'GET':/* Web API no longer serves up assign and completed formsif(flag===false && parts[1]==="assign" && parts[2]) {flag=true;sendAssignPage(req, res, respond, parts[2]);}if(flag===false && parts[1]==="completed" && parts[2]) {flag=true;sendCompletedPage(req, res, respond, parts[2]);}*/if(flag===false&&parts[1]&&parts[1].indexOf('?')===-1){flag=true;sendItemPage(req,res,respond,parts[1]);}if(flag===false){sendListPage(req,res,respond);}break;....

Even though we need a fully functioning JSON CRUD client (or test runner) to test all of the TPS web API, we can still do some basic testing using the curl command-line utility. This will confirm that we have set up the TPS web API correctly (per the API design just shown) and allow us to do some simple interactions with the running API service.

The following is a short curl session that shows running all the CRUD operations on the Task endpoint as well as the TaskMarkCompleted special operation:

// create a new task record

curl -X POST -H "content-type:application/json" -d '{"title":"testing"}'

http://localhost:8181/task/

// fetch the newly created task record

curl http://localhost:8181/task/1z4yb9wjwi1

// update the existing task record

curl -X PUT -H "content-type:application/json" -d '{"title":"testing again"}'

http://localhost:8181/task/1z4yb9wjwi1

// mark the record completed

curl -X POST -H "content-type:application/json"

http://localhost:8181/task/completed/1z4yb9wjwi1

// delete the task record

curl -X DELETE -H "content-type:application/json"

http://localhost:8181/task/1z4yb9wjwi1

To save space and stay within the page layout, some of the command lines are printed on two lines. If you are running these commands yourself, you’ll need to place each command on a single line.

To review, we’ve made all the implementation changes needed to get the TPS web API up and running:

Set the API responses to all emit simple JSON (application/json) arrays

Added support for PUT (Update) and DELETE (Remove) for Task objects

Removed support for POST (Update) and POST (Remove) for Task objects

Removed support for GET (assignUser) and GET (markCompleted) FORMS for Task objects

Added support for PUT (Update) for User objects

Removed support for POST (Update) for User objects

Removed support for GET (changePassword) FORMS for User objects

As you can see from the list, we actually did more to remove support in the web API than anything else. Remember that we also removed all the links and forms from all the web API responses. The description of what it takes to filter and modify data on the TPS service will now need to be documented in human-readable form and that will need to be coded into the JSON client application. We’ll see how that works in Chapter 2.

Now that we have a working TPS web API service up and running, it’s worth making a few observations on the experience.

A hallmark of web APIs today is to emit plain JSON responses—no more HTML, just JSON. The advantage is that supporting JSON in JavaScript-based browser clients is easier than dealing with XML or parsing HTML responses. Although we didn’t get to see it in our simple example, JSON responses can carry a large nested graph of data more efficiently than HTML, too.

When we were designing our web API, we spent most of the time and effort crafting URLs and deciding which methods and arguments to pass for each request. We also needed to make sure the exposed URLs map the Create-Read-Update-Delete (CRUD) semantics against important JSON objects. There were a few actions that didn’t map well to CRUD (three for our use case) and we had to create special URLs for them.

Another common feature of web APIs is the lack of links and forms in responses. Common web browsers use the links and forms in HTML responses to render a user interface for humans to scan and activate. This works because the browser already understands links and forms in HTML. Since JSON doesn’t have things like <a>…</a> and <form method="get"> or <form method="post">, the information needed to execute actions from the UI will need to be baked into the API client code.

Since most of what we did to make our TPS web app into a web API is remove features, it seems building API servers is relatively easy to do. There are certainly challenges to it—our TPS web API is pretty simple—but for the most part, we have less things to decide when creating these RPC-CRUD style APIs than when we are creating both the data responses and the connections (LINKS) and data-passing instructions (FORMS) from the standard website/app.

We found out that once you have the web API up and running, you still need to test it with some kind of client. We can’t just point a web browser at the API because browsers don’t know about our CRUD and special operations. For now, we used the curl command-line utility to execute HTTP-level requests against the API to make sure it was behaving as expected.

In this chapter, we started our journey toward hypermedia clients by first stepping back a bit and reviewing a kind of early history of typical web APIs—especially their roots in simple HTML-only websites/apps. We were introduced to BigCo’s Task Processing System (TPS) web app and learned that the HTML5 app worked just fine without any JavaScript code at all.

But we’re interested in API services and API clients. So the first task was to convert this simple HTML-only web app into a pure JSON web API service. And it was not too hard. We adopted the common RPC-CRUD design model by establishing a key URL for each API object (Task and User) and implementing the Create-Read-Update-Delete (CRUD) pattern against these objects and their URLs. We had to create a few other special URLs to support unique operations (using POST), and documented a set of filter routines against the web API’s collection URLs (/task/ and /user/). We then documented the JSON objects that were returned and established that all payloads sent from client to server should be formatted as JSON dictionary objects.

With the design completed, we needed to actually implement the API. We were able to fork the existing website app and spent most of our efforts removing functionality, simplifying the format (we dropped all the links and forms in the JSON responses), and cleaning up the web API code. Finally, we used the curl command-line utility to confirm that our API was functioning as expected.

This gives us a great start on our TPS API service. The next challenge is building a fully functional JSON CRUD client that understands the TPS web API documentation. Since we spent a lot of our time eliminating descriptive information in the web API responses, we’ll need to add that information to the API client instead. We’ll take on that challenge next.

The monochrome computer screen image is a photo of an IBM Portable PC from Hubert Berberich (HubiB) (Own work) [CC BY-SA 3.0], via Wikimedia Commons.

The Lynx browser screenshot is taken from a modern implementation of the Lynx specification. The data displayed is our own TPS web app, too.

Roy Fielding’s 2009 blog post “It is okay to use POST” points out that his dissertation never mentions CRUD and that it is fine to use GET and POST for web apps.

Two books I recommend when looking for guides in URL design are RESTful Web Services Cookbook by Subbu Allamaraju (O’Reilly, 2010) and REST API Design Rulebook by Mark Masse (O’Reilly, 2011). There are quite a few more, but these are the books I find I use often.

Parkinson’s Law of Triviality is sometimes referred to as bikeshedding and was first described by C. Northcote Parkinson in his book Parkinson’s Law and Other Studies in Administration (Houghton Mifflin Company, 1957). When referring to the case of committees working through a busy agenda, Parkinson observed, “The time spent on any item of the agenda will be in inverse proportion to the sum [of money] involved.”

“All my books started out as extravagant and ended up pure and plain.”

Annie Dillard

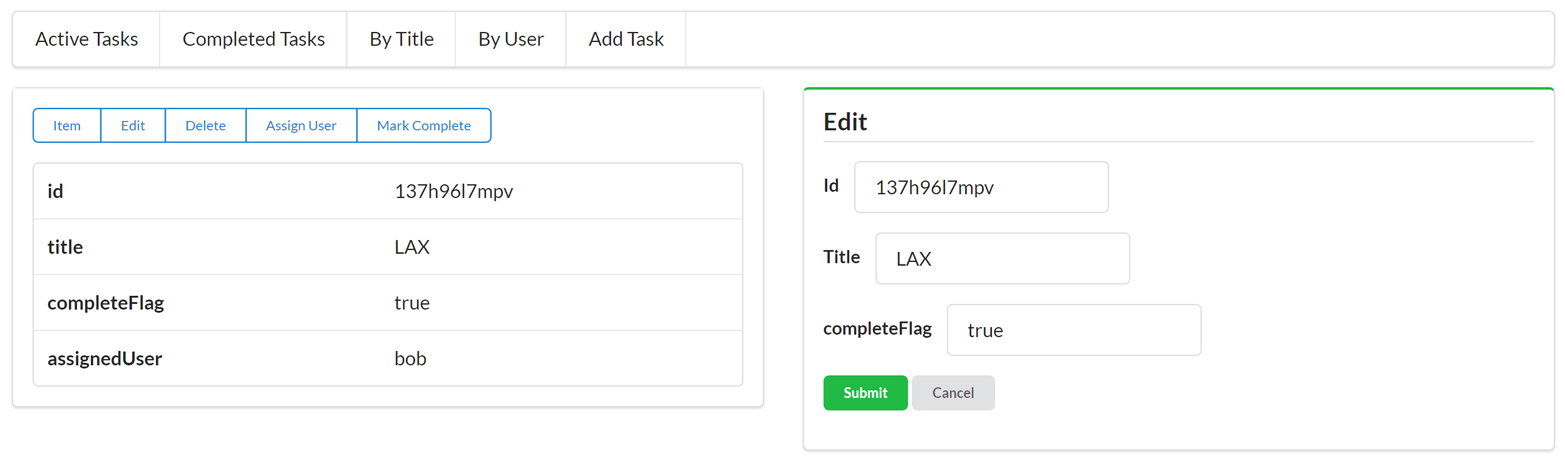

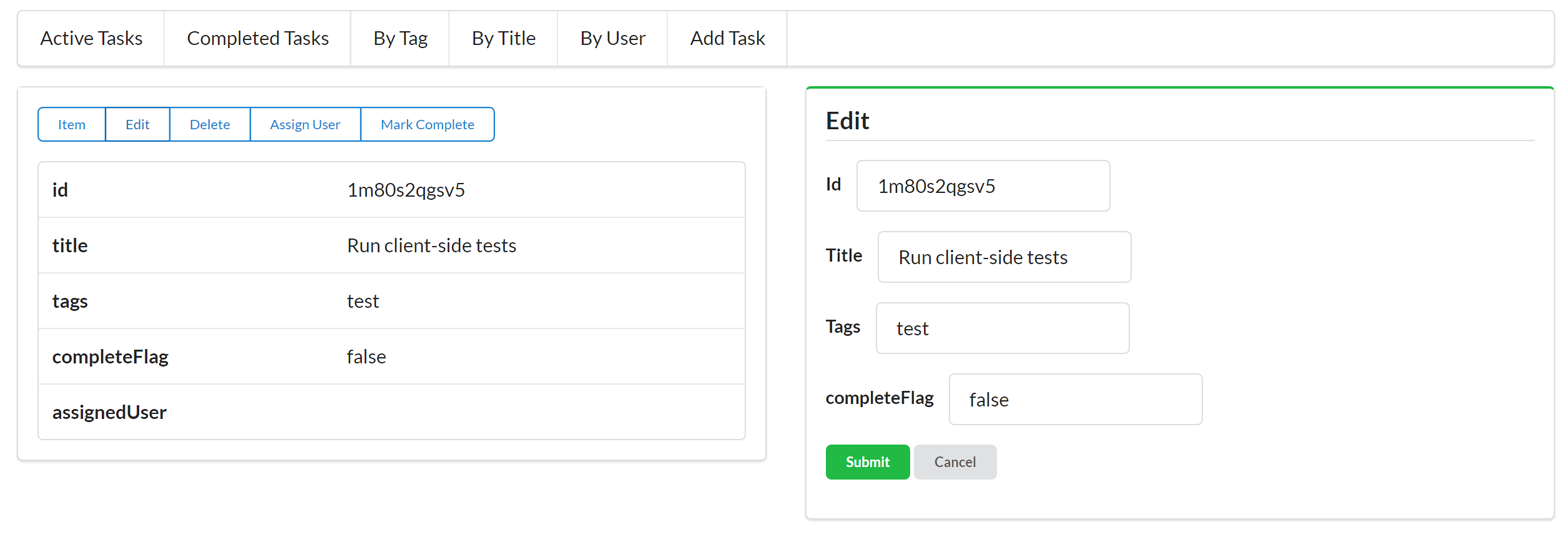

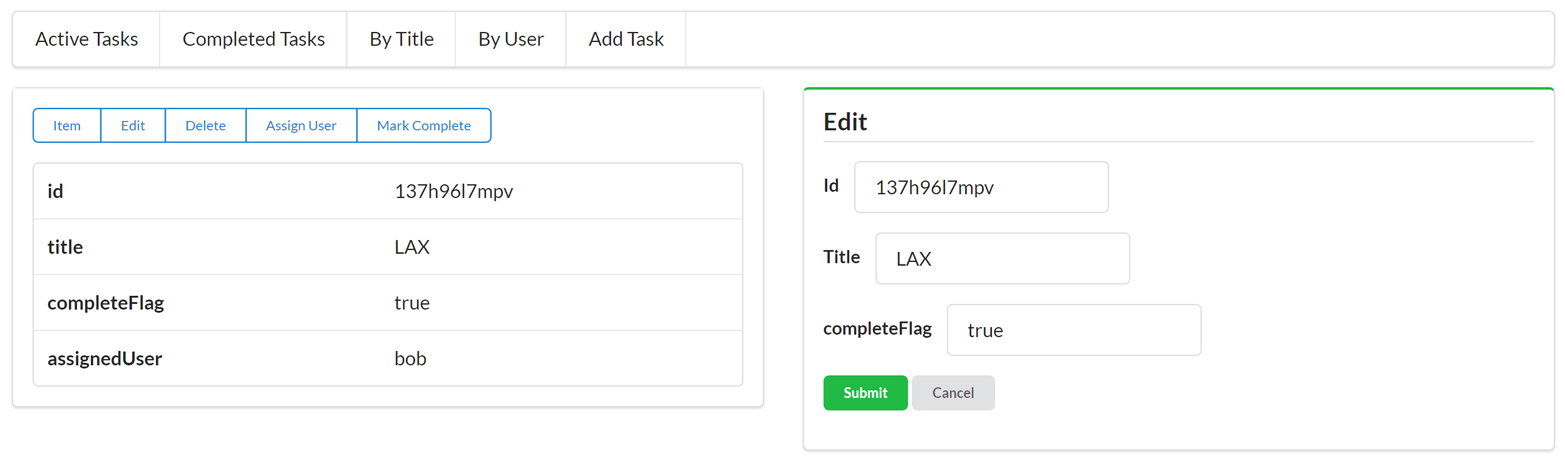

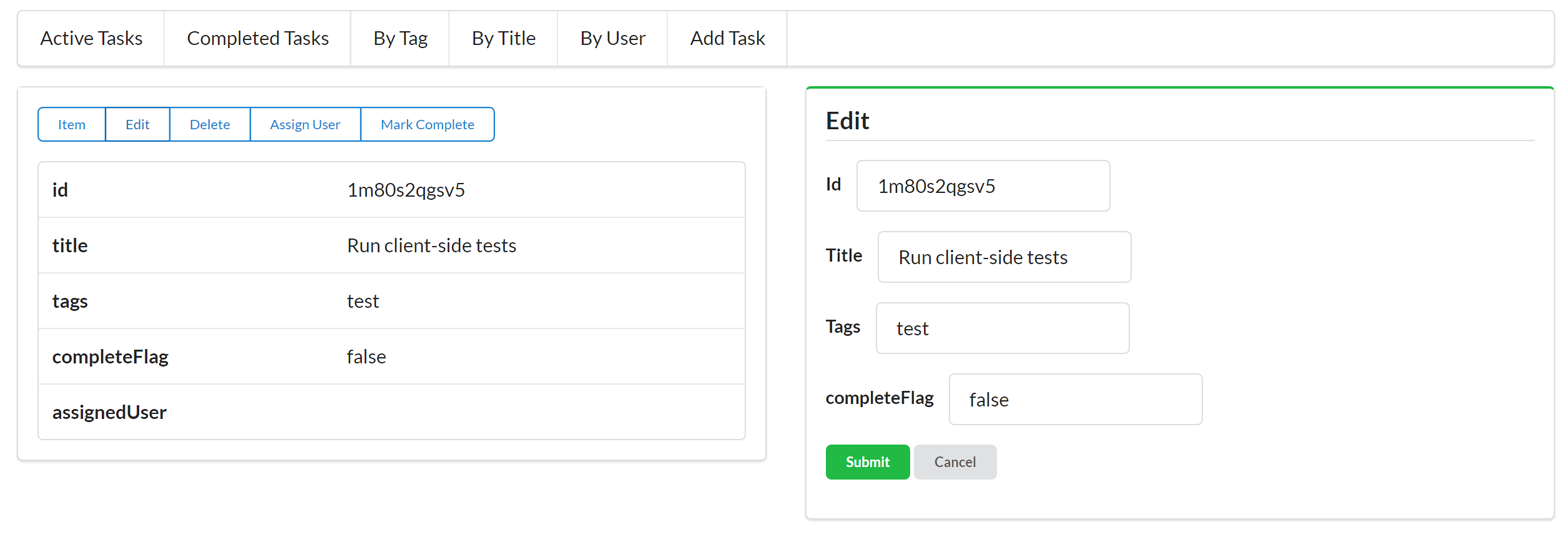

Now that we have a fully functional JSON web API (covered in Chapter 1), we’re ready to build the client app. Since the web API we’re targeting is an RPC-CRUD API, we’ll need to consult the documentation and be sure to build into the app all the rules for constructing URLs, handling unique response objects and collections, and knowing the full details on how to execute all the operations for filtering, displaying, and modifying service data (almost 20 of them in our TPS app).

Also, after building and releasing our API client, we’ll simulate some real-life scenarios and update the backend service to see how that affects our client in production. Ideally, we’d like that client to support any new features of the updated backend. But anyone who has built these RPC-CRUD clients knows that’s not very likely. At least we’d like the app to not crash when the backend changes and even that is an iffy proposition depending on how we implement the client. We’ll work through the changes needed to keep our web API client up-to-date and close the chapter with some observations before we move on to our next project.

So, let’s get started.

For many readers, the typical JSON web API client is nothing new—it’s the style that most web APIs are designed to support right now. We’ll review some of the basic elements of this client and then, after a short detour into the service code to explore JSON API output, we’ll work through the coding needed to create a fully functional JSON web API client. Along the way, we’ll learn how the JSON client needs to handle important elements such as:

Recognizing the Objects in responses

Constructing Addresses (the URLs) for interacting with the service

Handling ACTIONS such as filtering, editing, or deleting data

Let’s take a minute to review each of these three elements before starting to look at the way client apps deal with them.

Throughout the book, I’ll refer to this as the OAA Challenge (as in Objects, Addresses, and ACTIONS). We’ll see that every web API client app needs to deal with them and, especially when we start looking at the hypermedia-style clients, that there are varying ways to handle this challenge.

One of the most important things that JSON web API clients need to deal with are the JSON objects that appear in responses. Most JSON web APIs expose a unique object model via the responses. Before you can even start using the API in any meaningful way, your client app needs to understand the object model.

For example, as of this writing, the Twitter API Overview page lists five baseline objects:

Users

Tweets

Entities

Entities in Objects

Places

Many of these objects contain nested dictionary and array objects, too. And there are several complete sets of JSON objects in addition to these for related Twitter APIs such as streaming services, ad services, and others.

Lucky for us, the TPS web API has only two main objects (Task and User) and each of them are just a set of name–value pairs. This simple design makes our sample apps easy to work with and explore. However, most non-trivial production apps are likely to have several objects and tens (possibly hundreds) of properties.

As we saw in Chapter 1, the TPS web API responses are simple arrays:

{"task":[{"id":"137h96l7mpv","title":"LAX","completeFlag":"true","assignedUser":"bob","dateCreated":"2016-01-14T17:48:42.083Z","dateUpdated":"2016-01-27T22:03:02.850Z"},{"id":"1gg1v4x46cf","title":"YVR","completeFlag":"false","assignedUser":"carol","dateCreated":"2016-01-14T18:03:18.804Z","dateUpdated":"2016-01-27T17:45:46.597Z"},....moreTASKobjectshere]}

Our client app will need to recognize the "task" array name and act accordingly at runtime. One good way to do this is to use the object identifier as a context switch. When our app sees "task":[…] in the response, it will switch to “task-mode” and display the data (and possible actions) related to tasks. When the server response contains "user":[…], the app can switch to “user-mode.” Of course, our app won’t know what to do if a service response contains "note

":[…] or some other unknown context value. For now, we’ll need to ignore anything we don’t recognize.

“Ignore anything you don’t recognize” is a valuable implementation pattern for writing robust client apps. I’ll cover this and other patterns for creating resilient client apps in Chapter 7.

Just knowing the objects and their properties is not enough. Client applications also need to know how to deal with them when they show up in an API response. For example, whether to show the data item at all, and if so, which properties to display, the human prompts associated with the properties, and so on.

For example, the Task object emitted by the TPS web API looks like this:

{"id":"137h96l7mpv","title":"Review API Design","completeFlag":"false","assignedUser":"bob","dateCreated":"2016-05-14T17:48:42.083Z","dateUpdated":"2016-05-27T22:03:02.850Z"},

For our client app, we’ve decided to not display the dateCreated and dateUpdated fields. In fact, we’ll need to keep track of which fields to hide and which to show for all the TPS objects.

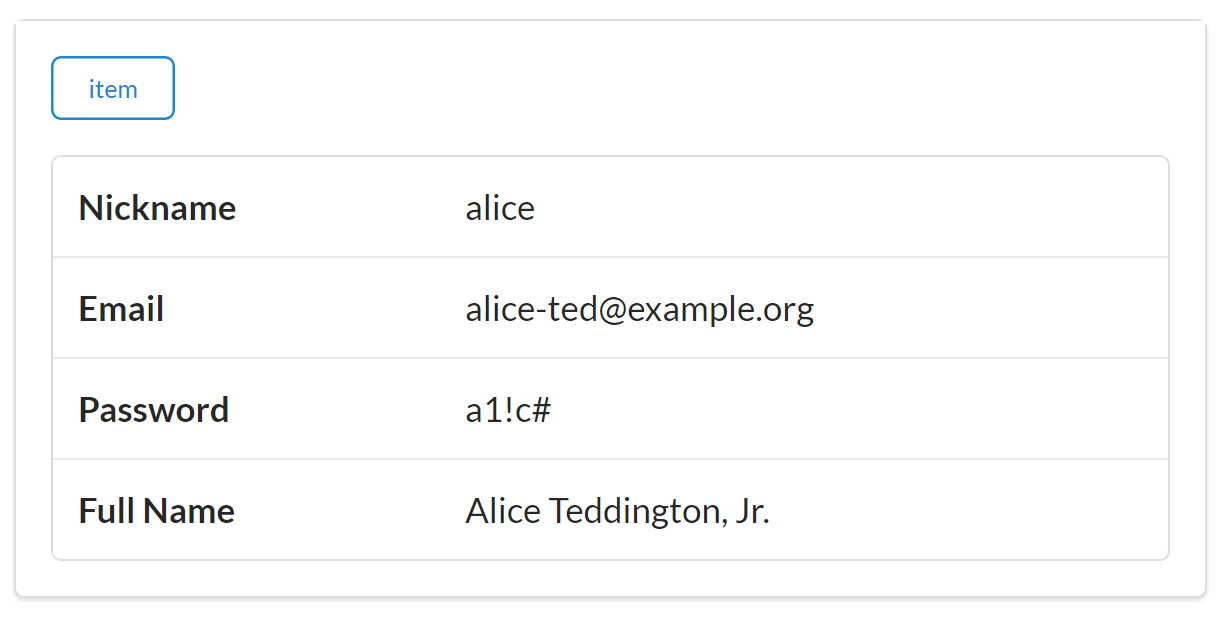

We also need to decide which prompts to display for each property in the TPS Task and User objects. Most of the time, client-side developers need to keep an internal set of prompts (possibly even tagged for more than one language) and map those prompts to the property names at runtime. For our simple app, we’ll just use a CSS trick to capitalize the property names when displaying them as prompts (see  in the code provided in Example 2-1).

in the code provided in Example 2-1).

span.prompt{display:block;width:125px;font-weight:bold;text-align:right;text-transform:capitalize;}

This works because we only need to support a single language and we’re just working with a simple demo app. Production apps will need more attention to detail on this point. We’ll see a more robust way to handle onscreen displays in Chapter 6, Siren Clients.

So, our client app will keep track of all the important JSON objects coming from the server, know how to handle each of them, and know which properties to display and what prompts are associated with them.

The next thing to deal with is the object’s Addresses—their URLs.

Most JSON RPC-CRUD API responses don’t include URLs—the addresses of the objects and arrays the client application is processing. Instead, the URL information is written up in human-readable documentation and it is up to the developer to work out the details. Often this involves hardcoding URLs (or URL templates) into the app, associating those addresses with objects and collections at runtime, and resolving any parameters in the URL templates before actually using them in the app.

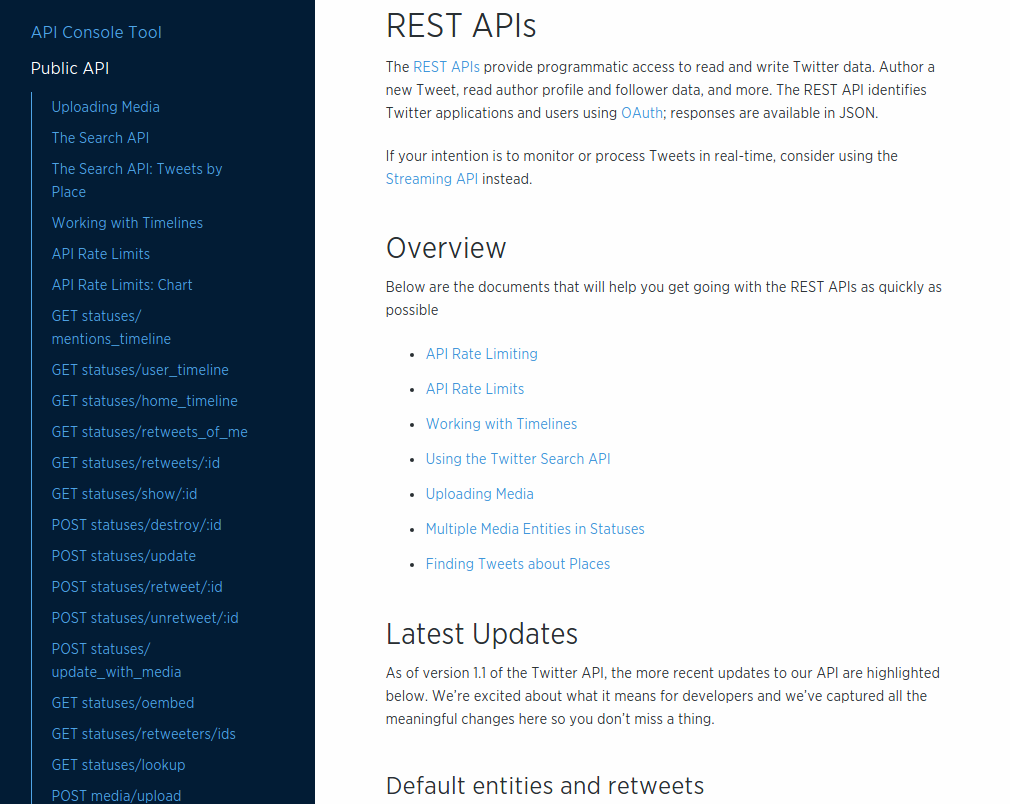

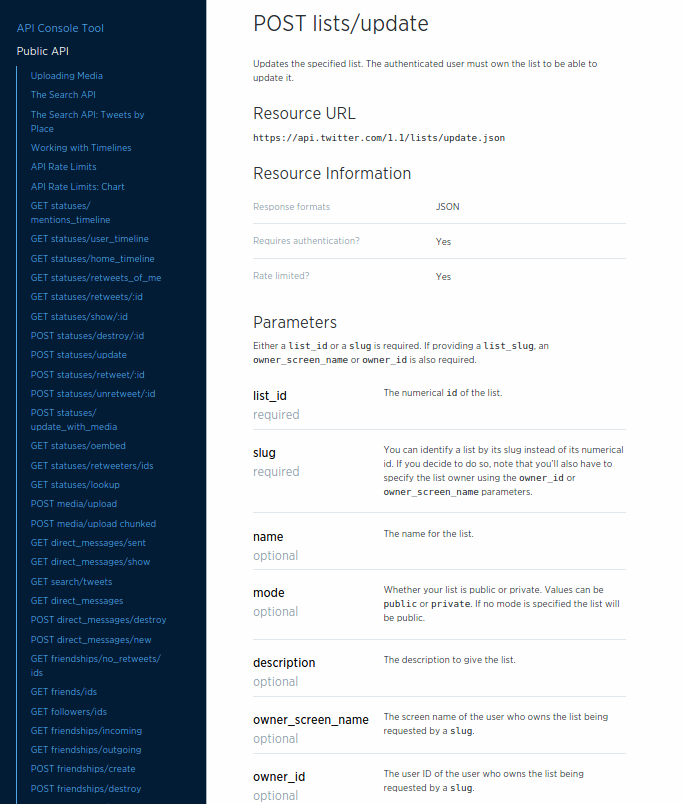

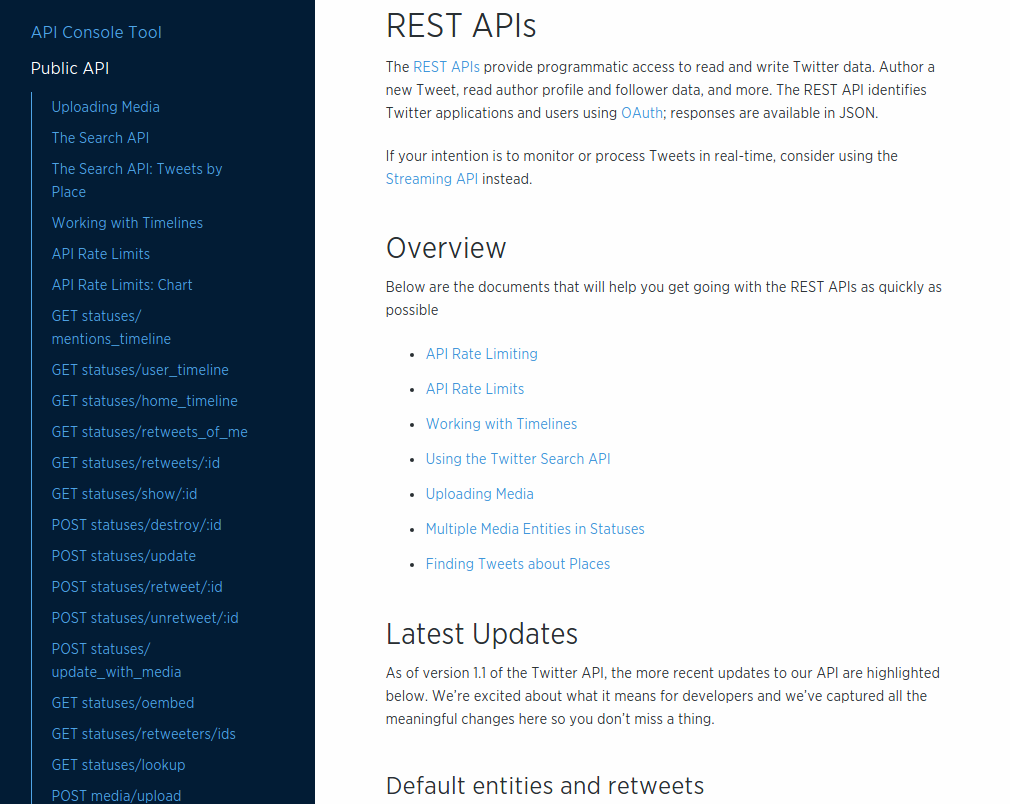

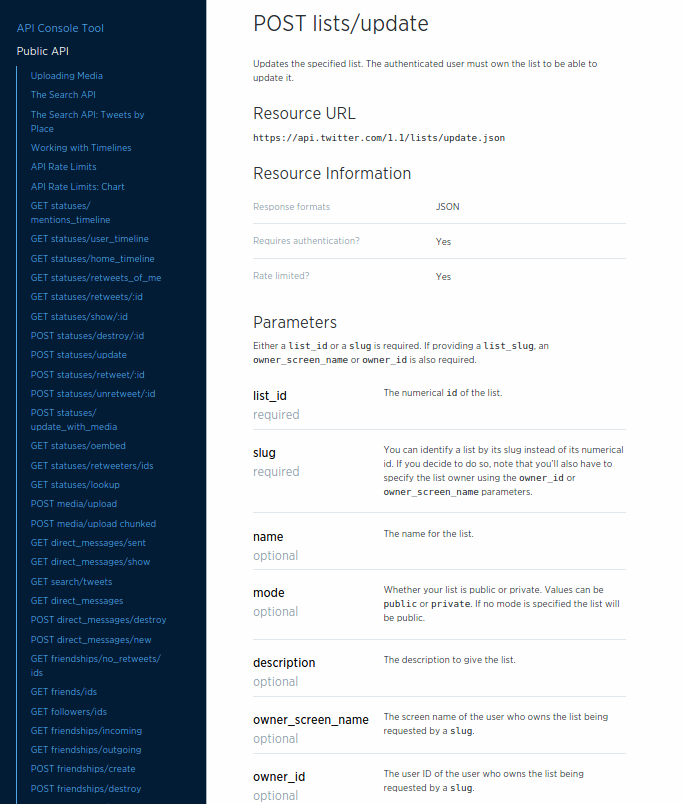

The number of URLs and templates in an API can be very large. For example, using the Twitter API (mentioned previously) as an example, there are close to 100 URL endpoints displayed on just one page of the Twitter API documentation (see Figure 2-2). While it is likely that a single API client will not need to handle all 97 of the URLs listed on that page, any useful Twitter client app will likely need to deal with dozens of them.

For the TPS web API, there are 17 URLs and templates to deal with. They’re listed in Table 1-2. We’ll need to sort out which addresses belong to each context object (Task or User) and which of them are more than simple read actions (e.g., HTTP POST, PUT, and DELETE actions).

There are many different ways of handling URLs and templates for JSON web APIs. The approach I’ll use in our sample app is to create a JSON dictionary of all the URLs for each object. For example, Example 2-2 shows how I’ll “memorize” some of the Task operations (Note that I included a prompt element for use when displaying these URLs as links in the client UI).

actions.task = {

tasks: {href:"/task/", prompt:"All Tasks"},

active: {href:"/task/?completeFlag=false", prompt:"Active Tasks"},

closed: {href:"/task/?completeFlag=true", prompt:"Completed Tasks"},

}I’ll also need to keep some information on when to display links. Should they appear on every page? Just on the pages associated with tasks? Only when a single task is displayed? Various client-side JavaScript frameworks deal with these details in different ways. Since I’m not tying my client or server to any single framework, my solution is to use an additional property of my address list, called target, which is set to values such as "all" or "list" or "single-item", etc. We’ll see that later in this chapter.

So,