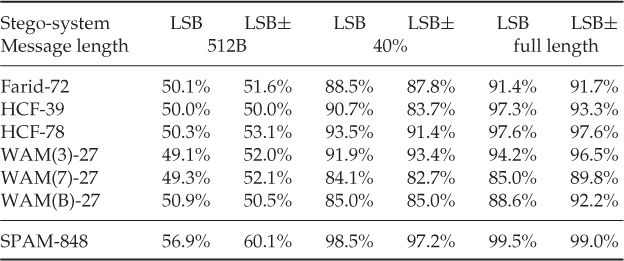

Table of Contents

1.2 Artificial Intelligence and Learning

Chapter 2: Steganography and Steganalysis

2.1 Cryptography versus Steganography

Chapter 3: Getting Started with a Classifier

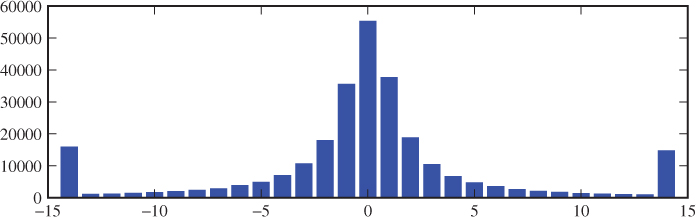

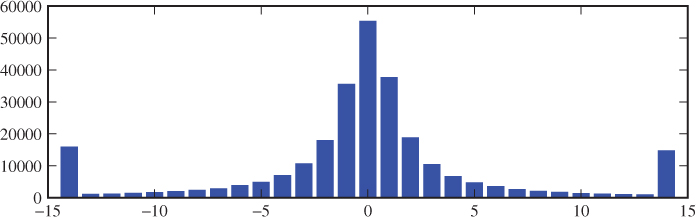

4.3 Additive Independent Noise

4.4 Multi-dimensional Histograms

5.3 Binary Similarity Measures

Chapter 6: More Spatial Domain Features

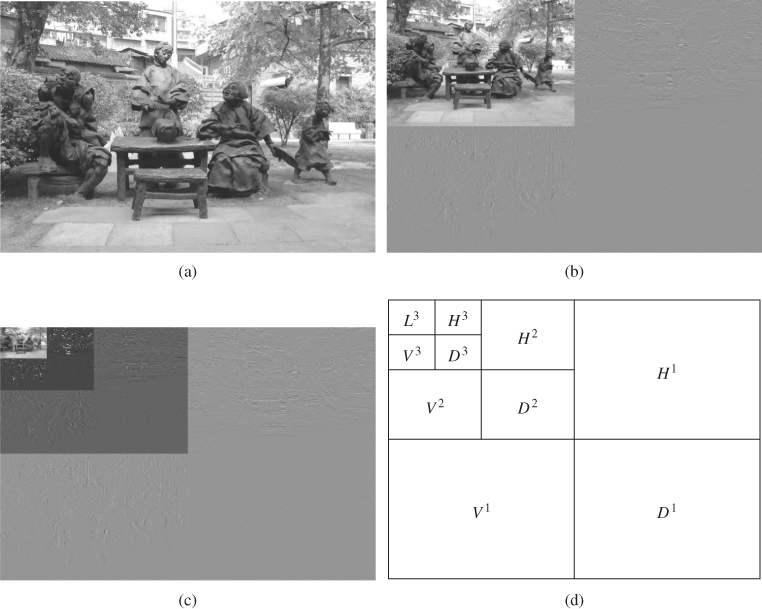

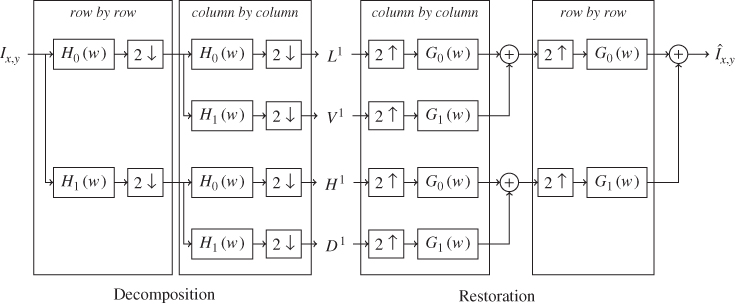

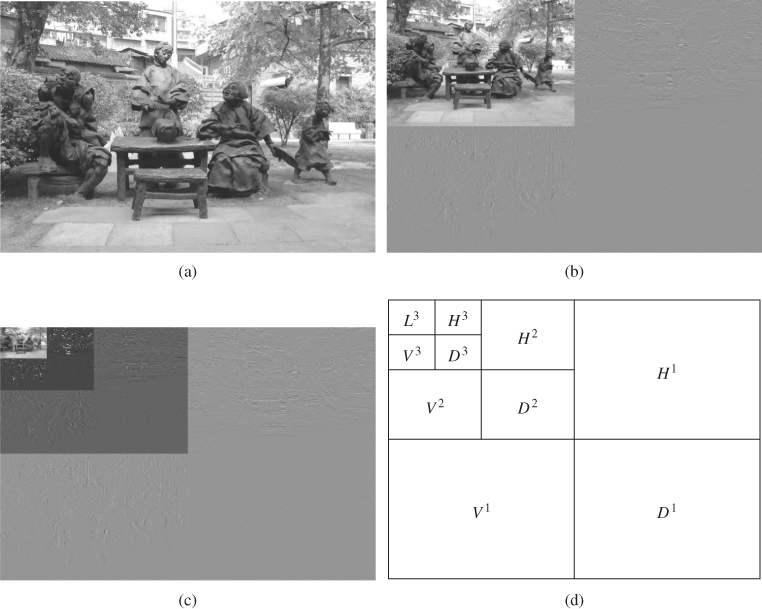

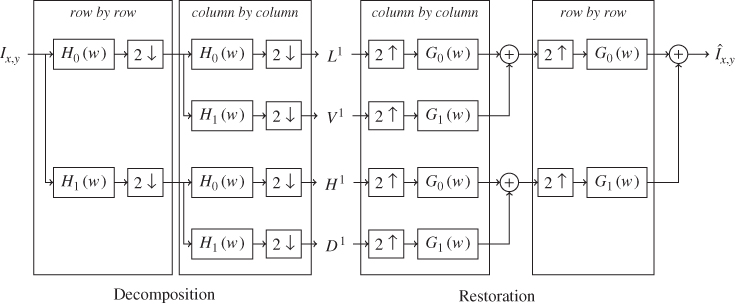

Chapter 7: The Wavelets Domain

7.5 Denoising and the WAM Features

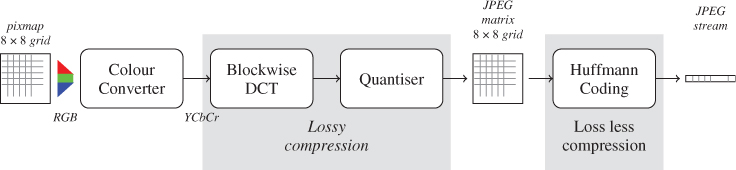

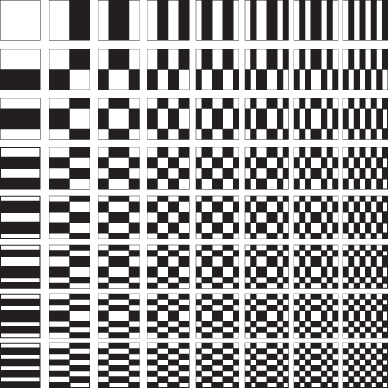

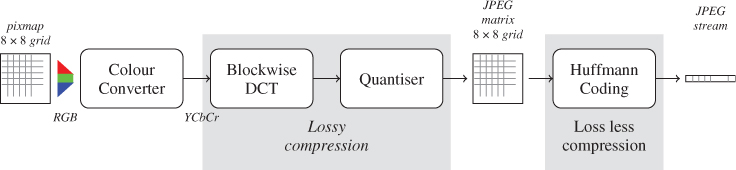

Chapter 8: Steganalysis in the JPEG Domain

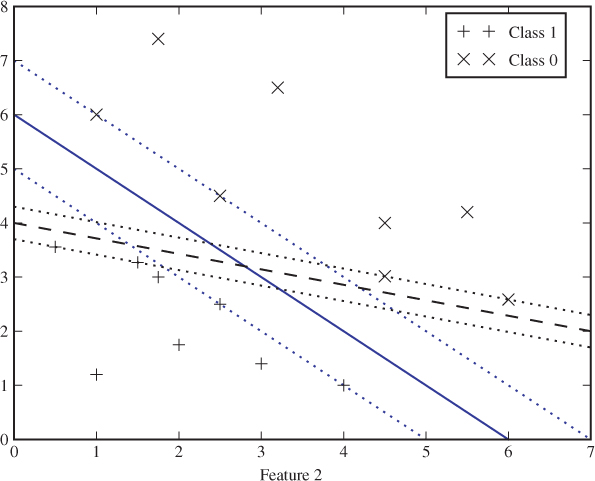

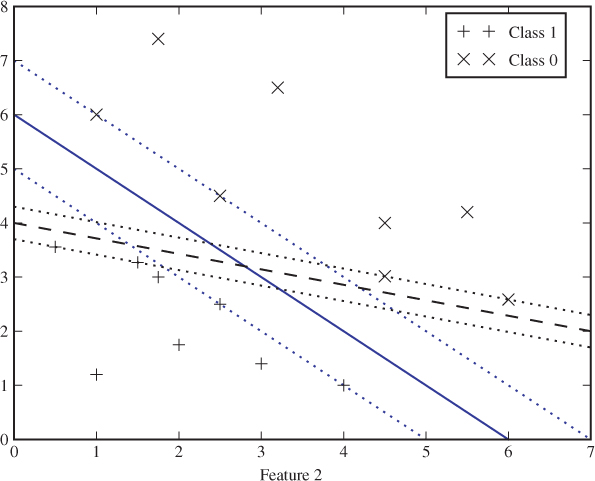

8.4 Markov Model-based Features

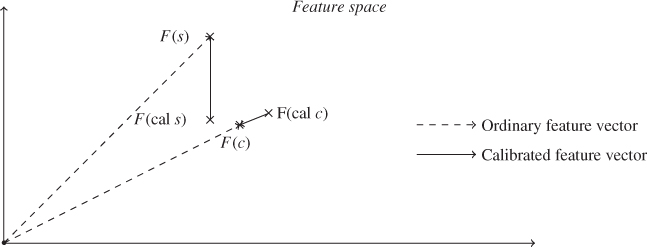

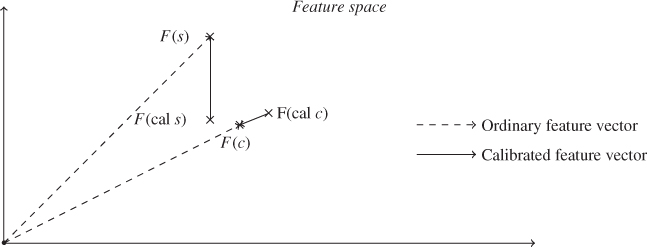

Chapter 9: Calibration Techniques

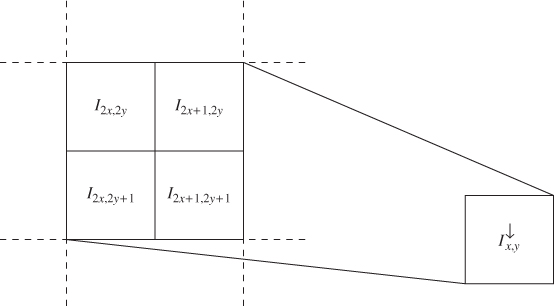

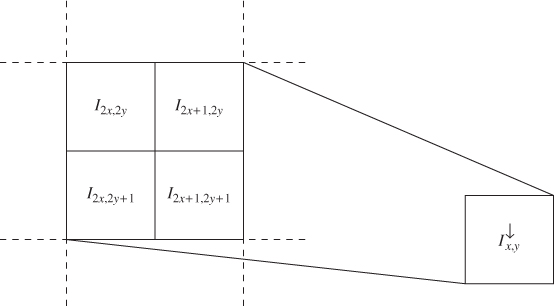

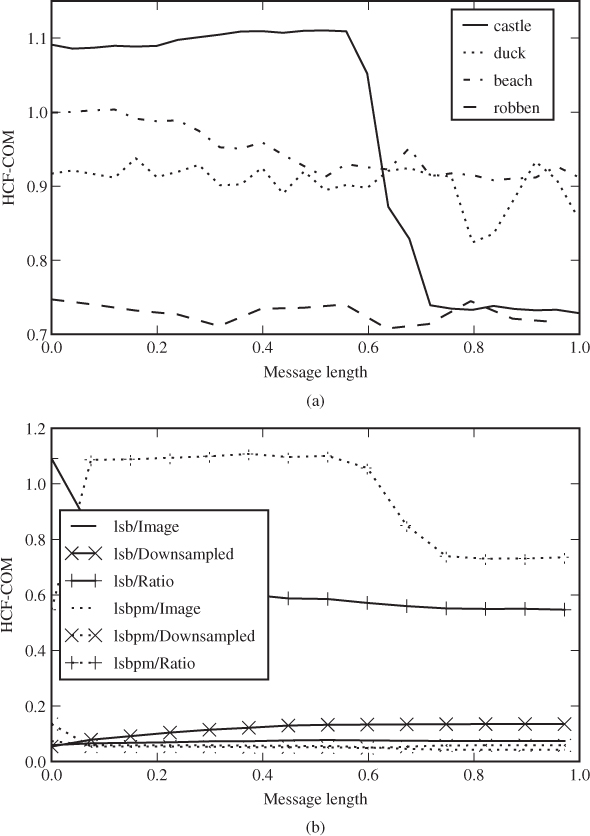

9.3 Calibration by Downsampling

Chapter 10: Simulation and Evaluation

10.1 Estimation and Simulation

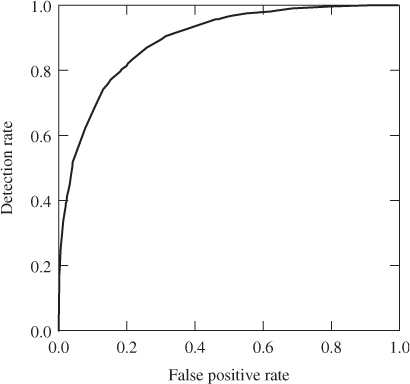

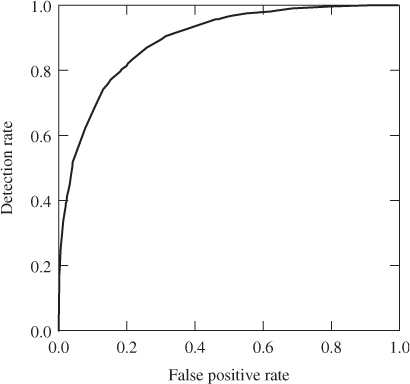

10.3 The Receiver Operating Curve

10.5 Comparison and Hypothesis Testing

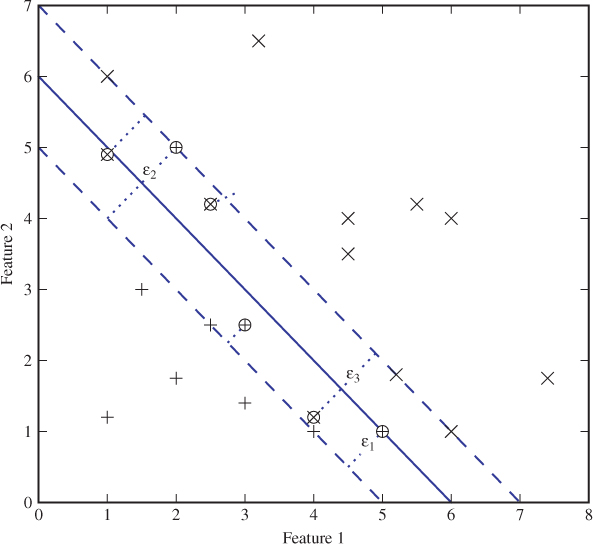

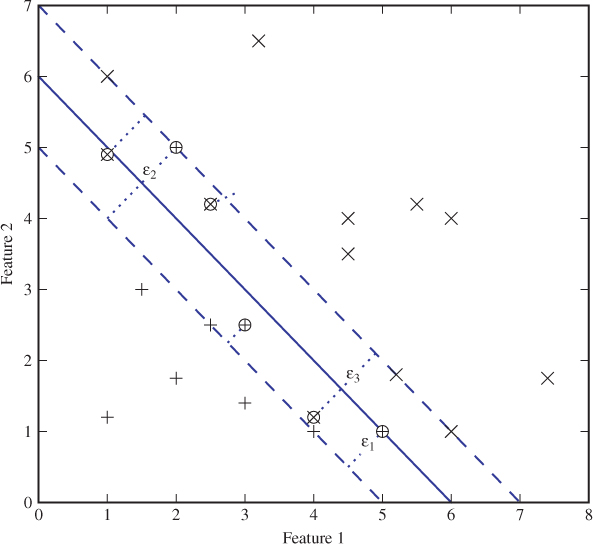

Chapter 11: Support Vector Machines

Chapter 12: Other Classification Algorithms

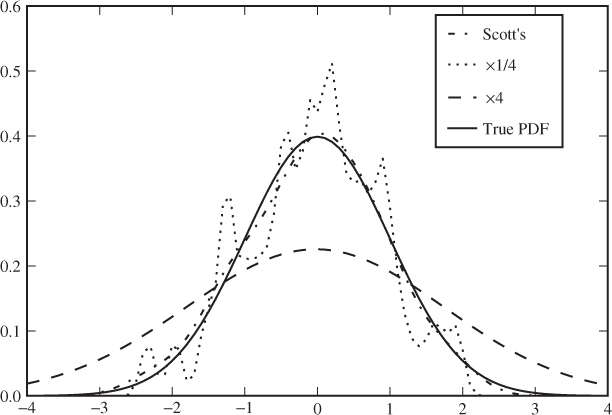

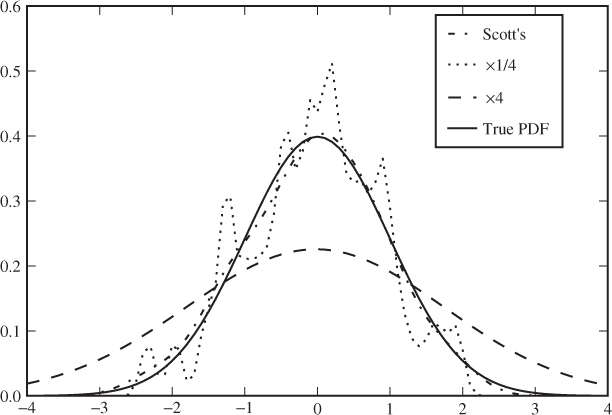

12.2 Estimating Probability Distributions

12.3 Multivariate Regression Analysis

Chapter 13: Feature Selection and Evaluation

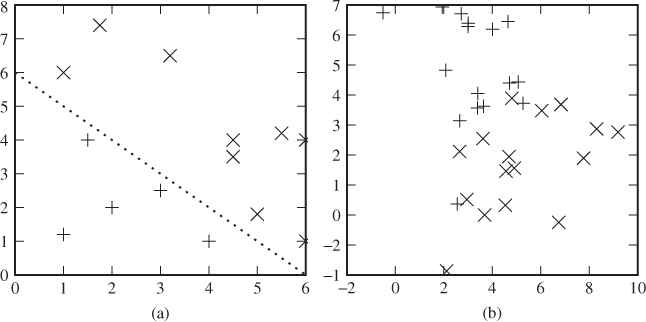

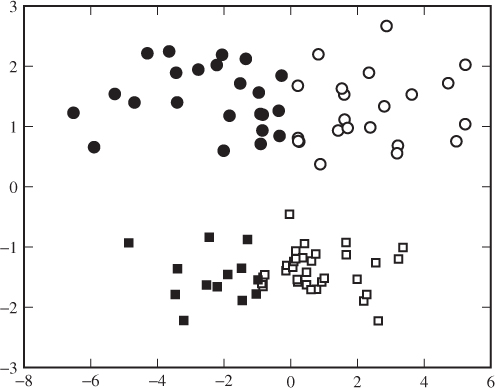

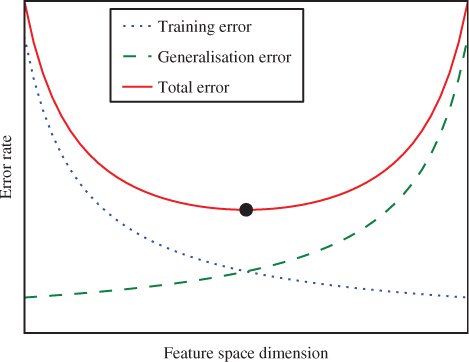

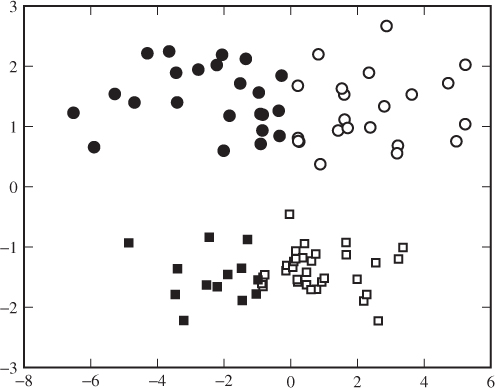

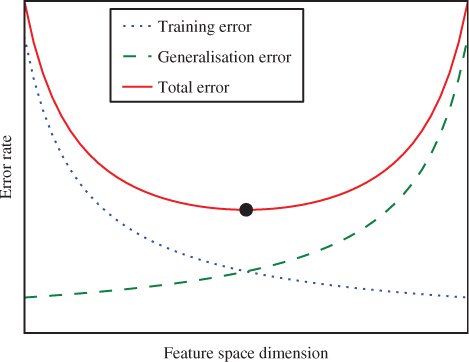

13.1 Overfitting and Underfitting

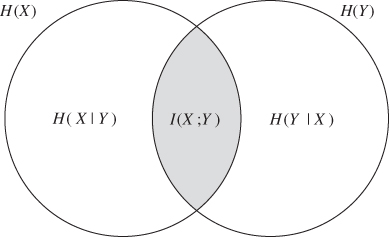

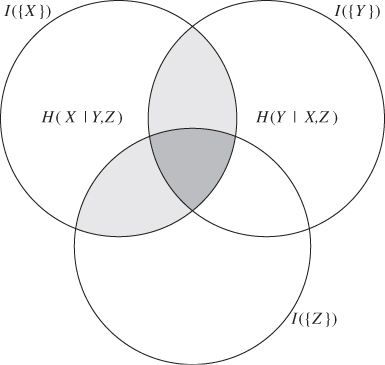

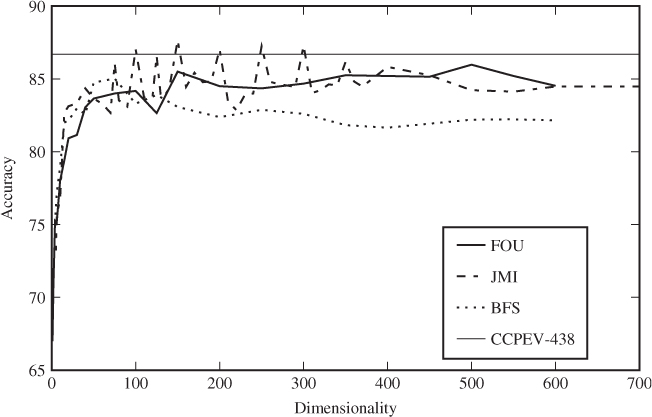

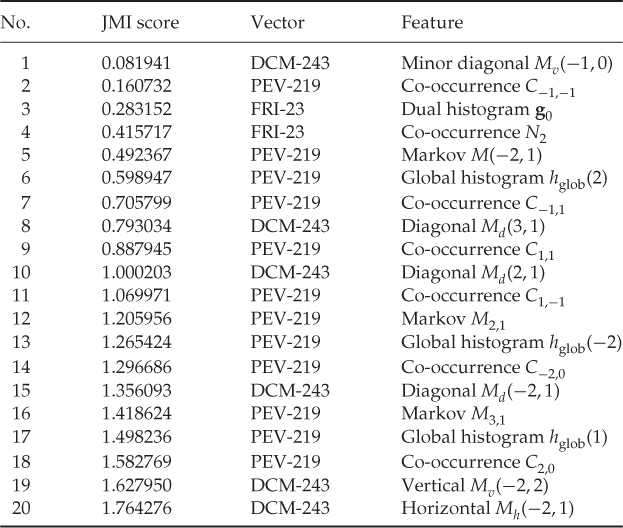

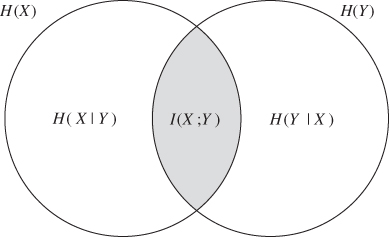

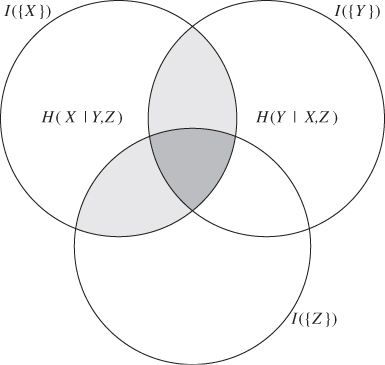

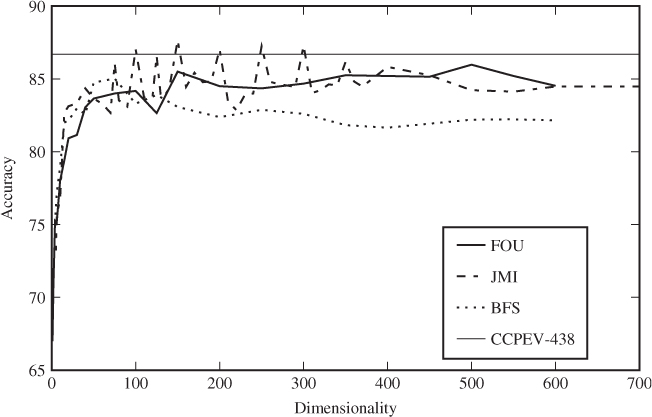

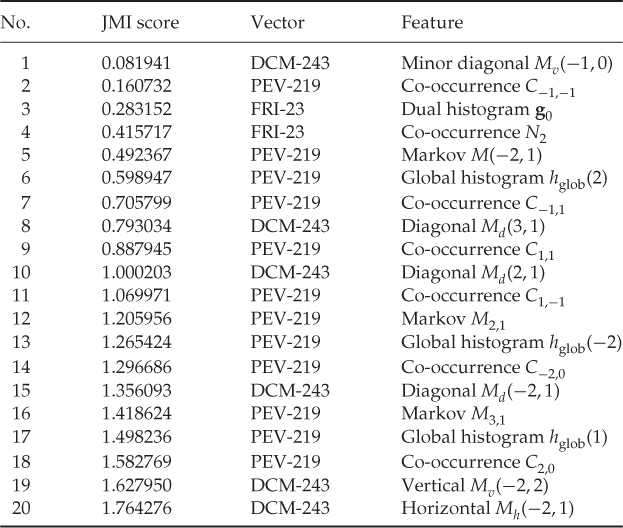

13.4 Selection Using Information Theory

13.5 Boosting Feature Selection

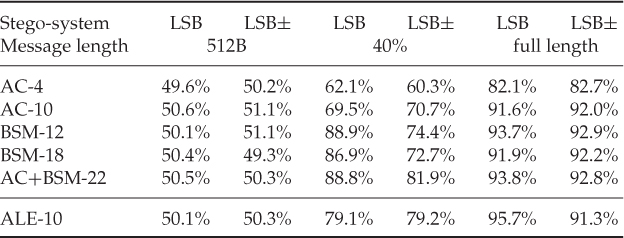

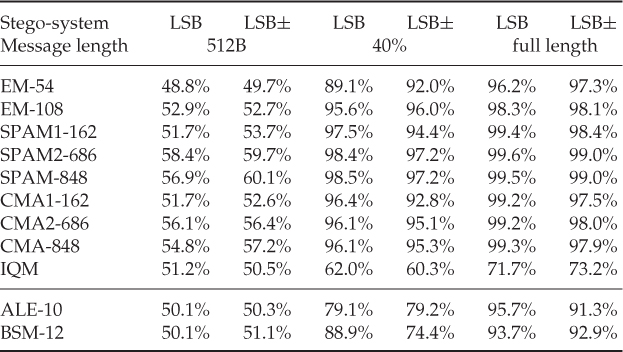

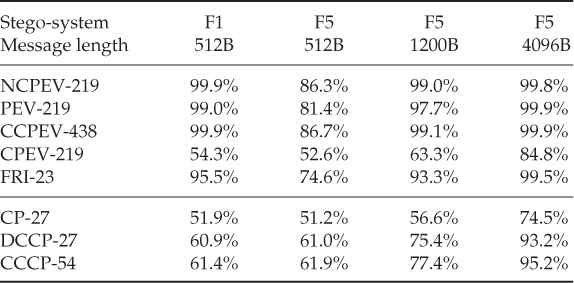

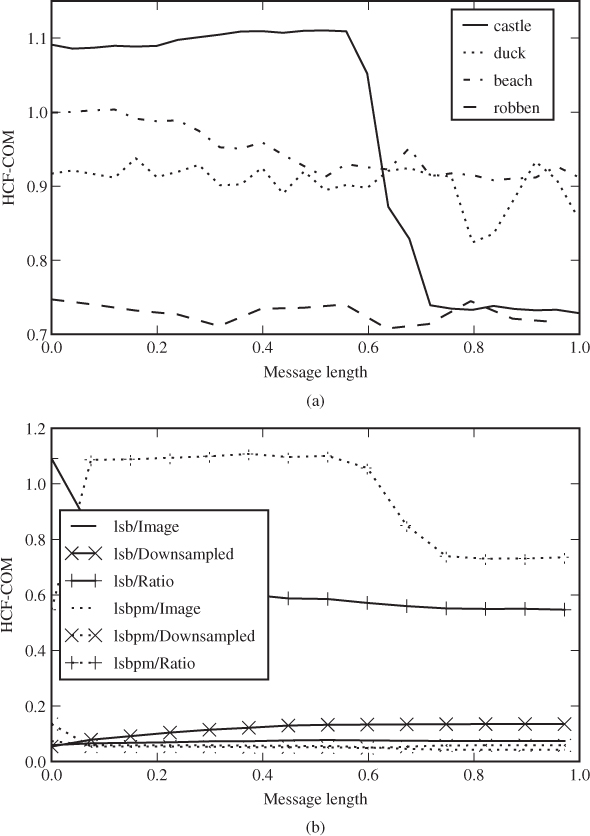

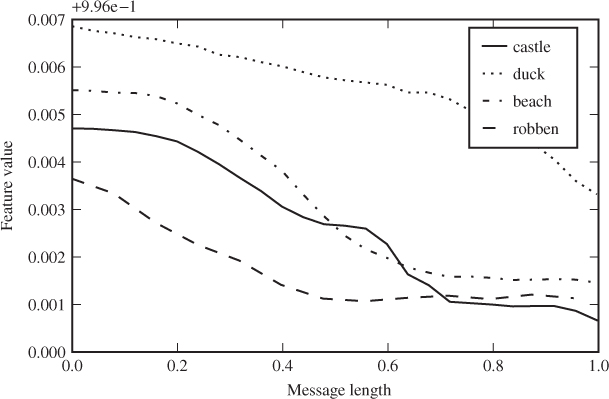

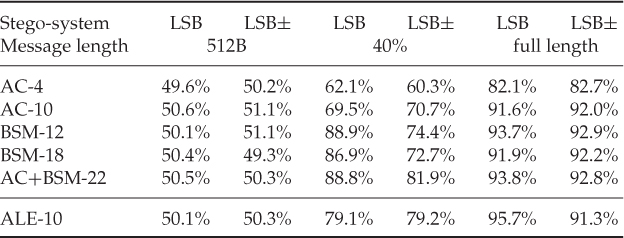

13.6 Applications in Steganalysis

Chapter 14: The Steganalysis Problem

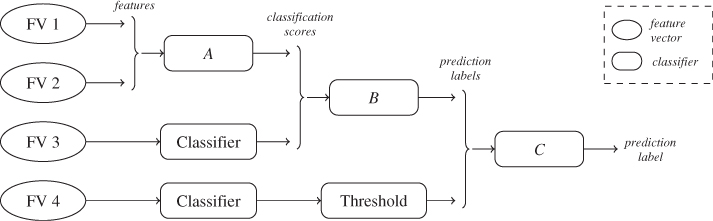

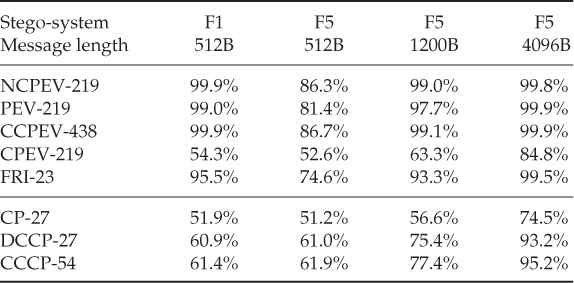

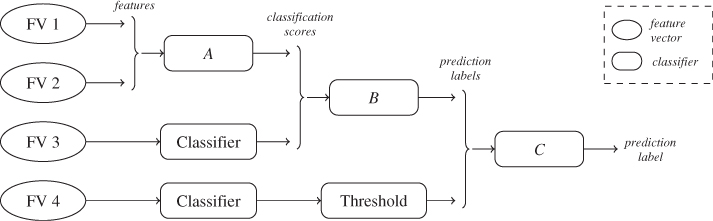

14.3 Composite Classifier Systems

This edition first published 2012

© 2012, John Wiley & Sons Ltd

Registered office

John Wiley & Sons Ltd, The Atrium, Southern Gate, Chichester, West Sussex, PO19 8SQ, United Kingdom

For details of our global editorial offices, for customer services and for information about how to apply for permission to reuse the copyright material in this book please see our website at www.wiley.com.

The right of the author to be identified as the author of this work has been asserted in accordance with the Copyright, Designs and Patents Act 1988.

All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording or otherwise, except as permitted by the UK Copyright, Designs and Patents Act 1988, without the prior permission of the publisher.

Wiley also publishes its books in a variety of electronic formats. Some content that appears in print may not be available in electronic books.

Designations used by companies to distinguish their products are often claimed as trademarks. All brand names and product names used in this book are trade names, service marks, trademarks or registered trademarks of their respective owners. The publisher is not associated with any product or vendor mentioned in this book. This publication is designed to provide accurate and authoritative information in regard to the subject matter covered. It is sold on the understanding that the publisher is not engaged in rendering professional services. If professional advice or other expert assistance is required, the services of a competent professional should be sought.

Library of Congress Cataloging-in-Publication Data

Schaathun, Hans Georg.

Machine learning in image steganalysis / Hans Georg Schaathun.

p. cm.

Includes bibliographical references and index.

ISBN 978-0-470-66305-9 (hardback)

1. Machine learning. 2. Wavelets (Mathematics) 3. Data encryption (Computer science) I. Title.

Q325.5.S285 2012

006.3′1—dc23

2012016642

A catalogue record for this book is available from the British Library.

Preface

Books are conceived with pleasure and completed with pain. Sometimes the result is not quite the book which was planned.

This is also the case for this book. I have learnt a lot from writing this book, and I have discovered a lot which I originally hoped to include, but which simply could not be learnt from the literature and will require a substantial future research effort. If the reader learns half as much as the author, the book will have been a great success.

The book was written largely as a tutorial for new researchers in the field, be they postgraduate research students or people who have earned their spurs in a different field. Therefore, brief introductions to much of the basic theory have been included. However, the book should also serve as a survey of the recent research in steganalysis. The survey is not complete, but since there has been no other book1 dedicated to steganalysis, and very few longer survey papers, I hope it will be useful for many.

I would like to take this opportunity to thank my many colleagues in the Computing Department at the University of Surrey, UK and the Engineering departments at Ålesund University College, Norway for all the inspiring discussions. Joint research with Dr Johann Briffa, Dr Stephan Wesemeyer and Mr (now Dr) Ainuddin Abdul Wahab has been essential to reach this point, and I owe them a lot. Most of all, thanks to my wife and son for their patience over the last couple of months. My wife has also been helpful with some of the proofreading and some of the sample photographs.

1 We have later learnt that two other books appeared while this was being written: Rainer Böhme: Advanced Statistical Steganalysis and Mahendra Kumar: Steganography and Steganalysis in JPEG images.

Chapter 1

Introduction

Steganography is the art of communicating a secret message, from Alice to Bob, in such a way that Alice's evil sister Eve cannot even tell that a secret message exists. This is (typically) done by hiding the secret message within a non-sensitive one, and Eve should believe that the non-sensitive message she can see is all there is. Steganalysis, on the contrary, is Eve's task of detecting the presence of a secret message when Alice and Bob employ steganography.

A frequently asked question is Who needs steganalysis? Closely related is the question of who is using steganography. Unfortunately, satisfactory answers to these questions are harder to find.

A standard claim in the literature is that terrorist organisations use steganography to plan their operations. This claim seems to be founded on a report in USA Today, by Kelley (2001), where it was claimed that Osama bin Laden was using the Internet in an ‘e-jihad’ half a year before he became world famous in September 2001. The idea of the application is simple. Steganography, potentially, makes it possible to hide detailed plans with maps and photographs of targets within images, which can be left on public sites like e-Bay or Facebook as a kind of electronic dead-drop.

The report in USA Today was based on unnamed sources in US law enforcement, and there has been no other evidence in the public domain that terrorist organisations really are using steganography to plan their activities. Goth (2005) described it as a hype, and predicted that the funding opportunities enjoyed by the steganography community in the early years of the millennium would fade. It rather seems that he was correct. At least the EU and European research councils have shown little interest in the topic.

Steganography has several problems which may make it unattractive for criminal users. Bagnall (2003) (quoted in Goth (2005)) points out that the acquisition, possession and distribution of tools and knowledge necessary to use steganography in itself establishes a traceable link which may arouse as much suspicion as an encrypted message. Establishing the infrastructure to use steganography securely, and keeping it secret during construction, is not going to be an easy exercise.

More recently, an unknown author in The Technical Mujahedin (Givner-Forbes, 2007; Unknown, 2007) has advocated the use of steganography in the jihad, giving some examples of software to avoid and approaches to evaluating algorithms for use. There is no doubt that the technology has some potential for groups with sufficient resources to use it well.

In June 2010 we heard of ten persons (alleged Russian agents) being arrested in the USA, and according to the news report the investigation turned up evidence of the use of steganography. It is too early to say if these charges will give steganalysis research a new push. Adee (2010) suggests that the spies may have been thwarted by old technology, using very old and easily detectable stego-systems. However, we do not know if the investigators first identified the use of steganography by means of steganalysis, or if they found the steganographic software used on the suspects' computers first.

So currently, as members of the general public and as academic researchers, we are unable to tell whether steganography is a significant threat or mainly a brain exercise for academics. We have no strong evidence of significant use, but then we also know that MI5 and MI6, and other secret services, who would be the first to know if such evidence existed, would hardly tell us about it. In contrast, by developing public knowledge about the technology, we make it harder for criminal elements to use it successfully for their own purposes.

Most of the current steganalysis techniques are based on machine learning in one form or another. Machine learning is an area well worth learning, because of its wide applications within medical image analysis, robotics, information retrieval, computational linguistics, forensics, automation and control, etc. The underlying idea is simple; if a task is too complex for a human being to learn, let's train a machine to do it instead. At a philosophical level it is harder. What, after all, do we really mean by learning?

Learning is an aspect of intelligence, which is often defined as the ability to learn. Machine learning thus depends on some kind of artificial intelligence (AI). As a scientific discipline machine learning is counted as a sub-area of AI, which is a more well-known idea at least for the general public. In contrast, our impression of what AI is may be shaped as much by science fiction as by science. Many of us would first think of the sentient computers and robots in the 1960s and 1970s literature, such as Isaac Asimov's famous robots who could only be kept from world domination by the three robotic laws deeply embedded in their circuitry.

As often as a dream, AI has been portrayed as a nightmare. Watching films like Terminator and The Matrix, maybe we should be glad that scientists have not yet managed to realise the dream of AI. Discussing AI as a scientific discipline today, it may be more fruitful to discuss the different sub-disciplines. The intelligent and sentient computer remains science fiction, but various AI-related properties have been realised with great success and valuable applications. Machine learning is one of these sub-disciplines.

The task in steganalysis is to take an object (communication) and classify this into one out of two classes, either the class of steganograms or the class of clean messages. This type of problem, of designing an algorithm to map objects to classes, is known as pattern recognition or classification in the literature. Once upon a time pattern recognition was primarily based on statistics, and the approach was analytic, aiming to design a statistical model to predict the class. Unfortunately, in many applications, the problem is too complex to make this approach feasible. Machine learning provides an alternative to the analytic approach.

A learning classifier builds a statistical model to solve the classification problem, by brute-force study of a large number of statistics (so-called features) from a set of objects selected for training. Thus, we say that the classifier learns from the study of the training set, and the acquired learning can later be used to classify previously unseen objects. Contrary to the analytic models of statistical approaches, the model produced by machine learning does not have to be comprehensible for human users, as it is primarily for machine processing. Thus, more complex and difficult problems can be solved more accurately.

With respect to machine learning, the primary objective of this book is to provide a tutorial to allow a reader with primary interest in steganography and steganalysis to use black box learning algorithms in steganalysis. However, we will also dig a little bit deeper into the theory, to inspire some readers to carry some of their experience into other areas of research at a later stage.

There are no ‘don't do this at home’ clauses in this book. Quite the contrary. The body of experimental data in the literature is still very limited, and we have not been able to run enough experiments to give you more than anecdotal evidence in this book. To choose the most promising methods for steganalysis, the reader will have to make his own comparisons with his own images. Therefore, the advice must be ‘don't trust me; try it yourself’. As an aid to this, the software used in this book can be found at: http://www.ifs.schaathun.net/pysteg/.

For this reason, the primary purpose of this book has been to provide a hands-on tutorial with sufficient detail to allow the reader to reproduce examples. At the same time, we aim to establish the links to theory, to make the connection to relevant areas of research as smooth as possible. In particular we spend time on statistical methods, to explain the limitations of the experimental paradigms and understand exactly how far the experimental results can be trusted.

In this first part of the book, we will give the background and context of steganalysis (Chapter 2), and a quick introduction and tutorial (Chapter 3) to provide a test platform for the next part.

Part II is devoted entirely to feature vectors for steganalysis. We have aimed for a broad survey of available features, but it is surely not complete. The best we can hope for is that it is more complete than any previous one. The primary target audience is research students and young researchers entering the area of steganalysis, but we hope that more experienced researchers will also find some topics of interest.

Part III investigates both the theory and methodology, and the context and challenges of steganalysis in more detail. More diverse than the previous parts, this will both introduce various classifier algorithms and take a critical view on the experimental methodology and applications in steganalysis. The classification algorithms introduced in Chapters 11 and 12 are intended to give an easy introduction to the wider area of machine learning. The discussions of statistics and experimental methods (Chapter 10), as well as applications and practical issues in steganalysis (Chapter 14) have been written to promote thorough and theoretically founded evaluation of steganalytic methods. With no intention of replacing any book on machine learning or statistics, we hope to inspire the reader to read more.

Chapter 2

Steganography and Steganalysis

Secret writing has fascinated mankind for several millennia, and it has been studied for many different reasons and motivations. The military and political purposes are obvious. The ability to convey secret messages to allies and own units without revealing them to the enemy is obviously of critical importance for any ruler. Equally important are the applications in mysticism. Literacy, in its infancy, was a privilege of the elite. Some cultures would hold the ability to create or understand written messages as a sacred gift. Secret writing, further obscuring the written word, would further elevate the inner-most circle of the elite. Evidence of this can be seen both in hieroglyphic texts in Egypt and Norse runes on the Scottish islands.

The term steganography was first coined by an occultist, namely Trithemius (c. 1500) (see also Fridrich (2009)). Over three volumes he discussed methods for encoding messages, occult powers and communication with spirits. The word ‘steganography’ is derived from the Greek words ganesh (steganos) for ‘roof’ or ‘covered’ and ganesh (grafein) ‘to write’. Covered, here, means that the message should be concealed in such a way that the uninitiated cannot tell that there is a secret message at all. Thus the very existence of the secret message is kept a secret, and the observer should think that only a mundane, innocent and non-confidential message is transmitted.

Use of steganography predates the term. A classic example was reported by Herodotus (440 bc, Book V). Histiæus of Miletus (late 6th century bc) wanted to send a message to his nephew, Aristagoras, to order a revolt. With all the roads being guarded, an encrypted message would surely be intercepted and blocked. Even though the encryption would keep the contents safe from enemy intelligence, it would be of no use without reaching the intended recipient. Instead, he took a trusted slave, shaved his head, and tattooed the secret message onto it. Then he let the hair regrow to cover the message before the slave was dispatched.

Throughout history, techniques for secret writing have most often been referred to as cryptography, from ganesh (cryptos) meaning ‘hidden’ and ganesh (grafein, ‘to write’). The meaning of the word has changed over time, and today, the cryptography and steganography communities would tend to use it differently.

Cryptography, as an area of research, now covers a wide range of security-related problems, including secret communications, message authentication, identification protocols, entity authentication, etc. Some of these problems tended to have very simple solutions in the pre-digital era. Cryptography has evolved to provide solutions to these problems in the digital domain. For instance, historically, signatures and seals have been placed on paper copies to guarantee their authenticity. Cryptography has given us digital signatures, providing, in principle, similar protection to digital files. From being an occult discipline in Trithemius' time, it has developed into an area of mathematics in the 20th century. Modern cryptography is always based on formal methods and formal proofs of security, giving highly trusted modules to be used in the design of secure systems.

The most well-known problem in cryptography is obviously that of encryption or ciphers, where a plain text message is transformed into incomprehensible, random-looking data, and knowledge of a secret key is necessary to reverse the transformation. This provides secret communications, in the sense that the contents of the message are kept secret. However, the communication is not ‘covered’ in the sense of steganography. Communicating random-looking data is suspicious and an adversary will assume that it is an encrypted message.

It is not uncommon to see ‘cryptography’ used to refer to encryption only. In this book, however, we will consistently use the two terms in the way they are used by the cryptography community and as described above. Most of the current research in steganography lacks the formal and mathematical foundations of cryptography, and we are not aware of any practical steganography solutions with formal and quantitative proofs of security. Yet, as we shall see below, steganography can be cast, and has indeed been cast, as a cryptographic problem. It just seems too difficult to be solved within the strict formal framework of modern cryptography.

Much has been written about steganography during the last 15–30 years. The steganography community derives largely from the signal- and image-processing community. The less frequent contributions from the cryptography and information theory communities do not always use the same terminology, and this can make it hard to see the connections between different works.

The modern interest in steganography is largely due to Simmons (1983) who defined and explained a steganographic security model as a cryptographic problem, and presented it at the Number 1 international conference on cryptography, Crypto 1983.

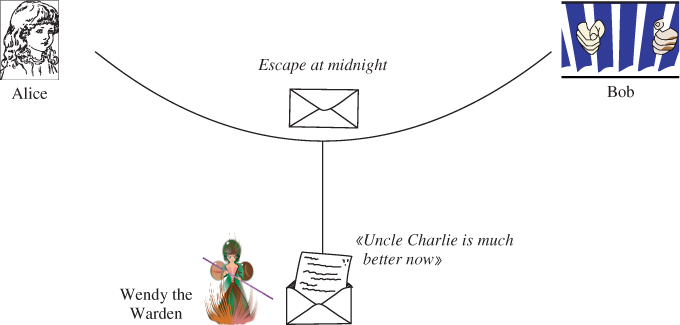

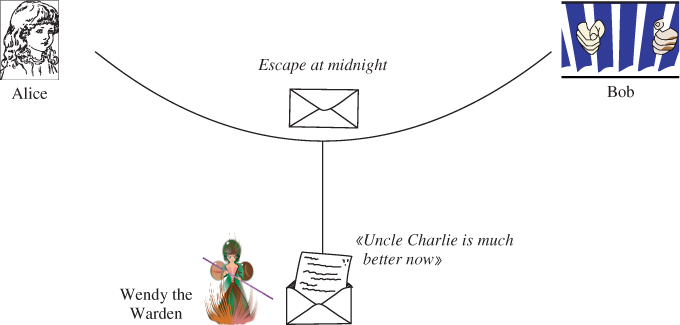

This security model is known as the prisoners' problem. Two prisoners, Alice and Bob, are allowed to exchange messages, but Wendy the Warden will monitor all correspondence. There are two variations of the problem, with an active or a passive warden (see e.g. Fridrich et al. (2003a)). The passive warden model seems to be the one most commonly assumed in the literature. When Wendy is passive, she is only allowed to review, and not to modify, the messages. The passive warden is allowed to block communications, withdraw privileges, or otherwise punish Alice and Bob if she thinks they have abused their communication link (Figure 2.1). That could mean solitary confinement or the loss of communications. If Alice sends an encrypted message to Bob, it will be obviously suspicious, and Wendy, assuming the worst, will block it.

Figure 2.1 The prisoners' problem with passive warden

The seemingly less known scenario with an active warden is actually the original focus of Simmons (1983). The active warden can modify messages between Alice and Bob, aiming either to disable the hidden message (a Denial of Service attack), or forge a message which Bob will think is a genuine message from Alice, something which could serve to lure Bob into a trap.

Let's elaborate on the security problem of Alice and Bob. The centre of any security problem is some asset which needs protection; it can be any item of value, tangible or intangible. The main asset of Alice and Bob in the prisoners' problem is the privileges they are granted only as long as they are well-behaved and either do not abuse them or keep the abuse secret from Wendy. The most obvious privilege is the privilege to communicate. With a passive warden, the only threat is detection of their abuse, leading to loss of privileges. Thus the passive warden scenario is a pure confidentiality problem, where some fact must be kept secret.

In the active warden scenario, there are three threats:

In the original work, Simmons (1983) addressed the integrity problem and suggested solutions for message authentication. The objective was to allow Bob to distinguish between genuine messages from Alice and messages forged by Wendy.

It should be noted that, in the passive warden model, the contents of the message is not a significant asset per se. Once Wendy suspects that an escape plan is being communicated, she will put Alice and/or Bob in solitary confinement, or take some other measure which makes it impossible to implement any plans they have exchanged. Thus, once the existence of the message is known, the contents have no further value, and keeping the very existence of the secret secret is paramount. Once that is lost, everything is lost.

A steganographic system (stego-system) can be seen as a special case of a cipher. This was observed by Bacon (1605), who presented three criteria for sound cipher design. Although he did not use the word steganography, his third criterion was ‘that they be without suspicion’, i.e. that the cipher text should not be recognised as one.

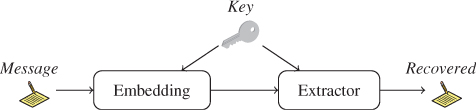

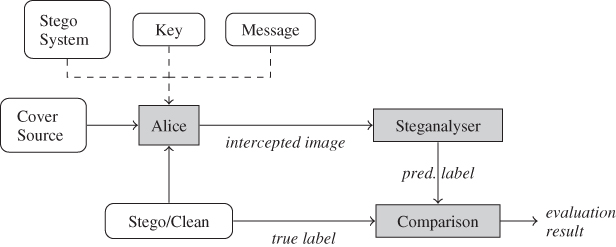

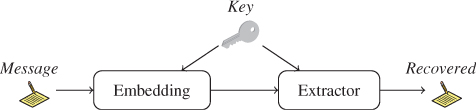

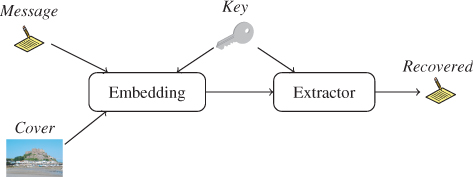

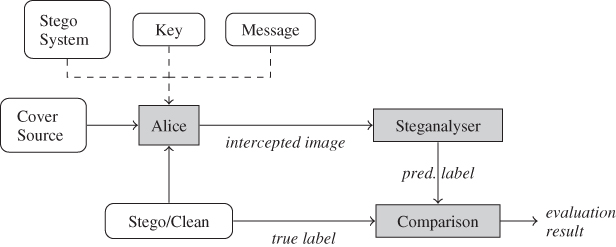

This view reappears in some modern, cryptographical studies of steganography, e.g. Backes and Cachin (2005). A stego-system is a special case of a cipher, where we require that the cipher text be indistinguishable from plain text from Wendy's point of view. This system is shown in Figure 2.2. Note that the stego-encoder (encryption algorithm) has to synthesise an innocuous file (a cover) unrelated to the message. Thus, stego-systems which work this way are known as steganography by cover synthesis (Cox et al., 2007).

Figure 2.2 A typical (secret-key) system for steganography by cover synthesis

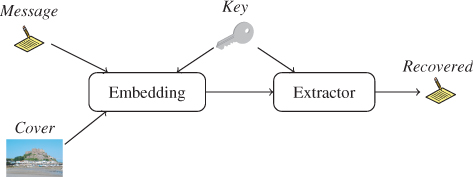

Cover synthesis is challenging, and there are few known stego-systems that efficiently apply cover synthesis. Steganography by modification avoids this challenge by taking an innocuous file, called the cover, as input to the stego-encoder in addition to the key and the message, as shown in Figure 2.3. This model is closely related to traditional communication systems, where the cover takes the role of a carrier wave. The message is then modulated by imperceptible modifications of the cover. The result is a steganogram which closely resembles the original cover. Many authors restrict the definition of steganography to cover only steganography by modification. For example, Cox et al. (2007, p. 425) ‘define steganography as the practice of undetectably altering a Work to embed a message’.

Figure 2.3 A typical (secret-key) system for steganography by modification

In theory, cover synthesis is the most flexible and powerful approach, as the synthetic cover can be adapted to the message without restriction. In practice, however, media synthesis is so difficult to automate that almost all known practical systems use modification. There are notable exceptions though, such as the Spam-Mimic system1 which imitates spam email messages. The author is not aware of any stego-system which synthesises images.

A third alternative is known as cover selection (Fridrich, 2009), which corresponds to using a code book in conventional cryptography, usually with many covers corresponding to each possible message. Each possible cover or cipher text is associated with a specific plain text, and the encoder will simply look up a cover to match the message. Cover selection is not always practical, as the code book required may be huge, but examples do exist.

Properly done, cover selection steganography is impossible to detect. The steganogram is not only indistinguishable from an unmodified cover; it is an unmodified cover. In the thumbnail example above one could argue that the steganalyst can make inferences from the order or combination of images, especially if the message is sufficiently long. For short messages, however, steganalysis by cover selection is possible and perfectly secure.

The possibility of using cover selection or synthesis highlights the role the cover has in steganography. The objective is to encode, rather than to embed, and we can choose any cover we want, synthetic or natural. Embedding is just one way to encode. The current state of the art may indicate that embedding, that is cover modification, is the most practical approach, as many simple methods are known, but there is no other reason to prefer modification. Even steganography by modification allows one to be selective about the cover. It is not necessary that a stego-system by modification be secure or even work for arbitrary covers, as long as Alice determines in advance which covers are good.

Early stego-systems depended entirely on the secrecy of the algorithm. The stego-encoder would take only the message and possibly a cover as input, and no secret key would be used. This is known as a pure stego-system. The problem with these systems is that once they are known, they become useless. It is straightforward for Wendy to try all known pure stego-decoders. Alice can only secretly send a message to Bob if Bob has a secret that Wendy does not know. If Wendy knows all that Bob knows she can decode the steganogram as he would.

The stego-systems shown in Figures 2.2 and 2.3 are secret-key stego-systems. The stego-encoder and -decoder are parameterised by a secret, known as the key shared by Alice and Bob. This key is the same concept that we know from encryption: it is any secret data which Bob has and Wendy has not, and which enables Bob to decode the message.

In cryptography one would always assume that the security depends only on the key, and that knowledge of the algorithm would not help Wendy to distinguish between a steganogram and an innocent message. This idea was very controversial when it was introduced by Kerckhoffs (1883), but is now universally accepted in the cryptography community and has been labelled Kerckhoffs' Principle. The steganography community is still debating whether and how Kerckhoffs' Principle applies to steganography.

Systems which adhere to Kerckhoffs' Principle can easily be instantiated with different secret keys and used for different applications. If one instance is compromised completely, this will not affect the other instances using different keys. This is a great advantage, because the key can easily be chosen at random, whereas algorithm design is a long and costly process. Without Kerckhoffs' Principle it would be impossible to get off-the-shelf cryptographic software, as the enemy would be able to buy the same software.

It should be noted that Kerckhoffs did not propose that all cryptographic algorithms ought to be made publicly available, and many experts in cryptography do indeed argue that, in practice, it may be wise to keep algorithms secret. He only said that encryption algorithms should be designed so that they could fall into enemy hands without inconvenience.

In reality, it has proved very hard to design stego-systems which are secure under Kerckhoffs' Principle. Excluding steganograms with extremely low payload, all known stego-systems seem to have been broken. Recent research on the square-root law of steganography (Ker et al., 2008) indicates that any stego-system will be easy to break if it is used long enough, as the total length of the steganograms has to increase proportionally to the square of the total message lengths. This is very different from encryption, where the same cipher can be used indefinitely, with varying keys. Quite possibly, steganography will have to look beyond Kerckhoffs' Principle, but the debate is not over.

In practice, it will of course often be the case that Wendy has no information about Bob and Alice's stego-system. Hence, if no Kerckhoffs-compliant stego-system can be found, it is quite possible that Alice and Bob can get away with one which is not. Surely the feasibility of Kerckhoffs' Principle in encryption must have contributed to its popularity.

Public-key stego-systems have also been proposed (see e.g. Backes and Cachin (2005)), following the principles of public-key cryptography. Instead of Alice and Bob sharing a secret key, Bob generates a key pair (ks, kp), where the secret ks is used as input to the decoder, and the public kp is used as input to the encoder. Anybody knowing kp can then send steganograms which only Bob can decode.

As far as we know, there are no practical public-key stego-systems which can handle more than very short messages. Common examples of public-key stego-systems require either extremely long covers or a perfect probability model of possible cover sources. Hopper (2004), for instance, suggests a public-key stego-system based on cover selection, where each message bit b is encoded as one so-called document D, which is simply selected from the cover source so that the decoding function matches the message bit, i.e. f(D) = m. Thus the steganogram becomes a sequence of documents, and although nothing is said about the size of each document, they must clearly be of such a length or complexity that the steganalyst cannot detect statistical anomalies in the distribution of document sequences.

One of the classic image steganography techniques is LSB embedding. It was initially applied to pixmap images, where each pixel is an integer indicating the colour intensity (whether grey scale or RGB). A small change in colour intensities is unlikely to be perceptible, and the stego-system will discard the least significant bit (LSB) of each pixel, replacing it with a message bit. The receiver can extract the message by taking the image modulo two.

There are a number of variations of LSB embedding. The basic form embeds sequentially in pixels or coefficients starting in the upper left corner, and proceeding in whichever direction is most natural for the compiler or interpreter. In Matlab and Fortran that means column by column, while in Python it is row by row by default. This approach means that the hidden message will always be found in the same locations. Steganalysis becomes harder if the message is embedded in random pixels.

def embed(X,msg,key=None):

(I,P,Sh) = im2sig( cover, key )

L = len(msg)

I[:L] −= I[:L]%2

I[:L] += msg

return sig2im(I,P,Sh)

def extract(X,key=None):

(I,P,Sh) = im2sig( cover, key )

return I % 2

def im2sig(cover,key=None):

Sh = cover.shape

if key != None:

rnd.seed(key)

P = numpy.random.permutation(S)

I = np.zeros(S,dtype=cover.dtype)

I[P] = cover.flatten()

return (I,P,Sh)

else:

return (cover.flatten(),None,Sh)

def sig2im(I,P,Sh):

if P == None: return I.reshape(Sh)

else: return I[P].reshape(Sh)

Embedding in random locations can be implemented by permuting a sequence of pixels using a pseudo-random number generator (PRNG) before embedding and inverting the permutation afterwards. The seed for the PRNG makes up the key which must be shared by Alice and Bob ahead of time, so that Bob can obtain the same permutation and know where to extract the message.

Example Python code for LSB embedding and extraction can be seen in Code Example 2.1. We shall give a brief introduction to Python in the next chapter. If key is given, it is used to seed a PRNG which generates a random permutation that is applied to the sequence of pixels before embedding. Thus the message bits are embedded in random locations. The embedding itself is done simply by subtracting the LSB (image modulo two) and then adding the message in its place.

Although LSB embedding most often refers to embedding in the spatial domain (pixmap), it can apply to any integer signal where a ± 1 change is imperceptible. In particular, it applies to JPEG images, but is then called JSteg in the key less version and Outguess 0.1 in the keyed version. The only special care JSteg takes is to ignore coefficients equal to 0 or 1; because of the great perceptual impact changes to or from 0 would cause. We will discuss JPEG steganography in further detail in Chapter 8.

Another variation of LSB, known as LSB matching, ± 1 embedding or LSB±, aims to randomise the distortion for each sample. The extraction function is the same for LSB and LSB±; taking the pixels modulo two returns the message. The embedding is different. When the pixel LSB matches the message bit to be embedded, the pixel is left unchanged as in LSB replacement. When a change is required, the embedding will add ± 1 with uniform probability to the pixel value, where LSB replacement would always add + 1 for even pixels and − 1 for odd ones.

The principles of LSB are used in a wide range of stego-systems in different variations. Few alternatives exist. More success has been achieved by applying LSB to different transforms of the image or by selectively choosing coefficients for embedding than by seeking alternatives to LSB. For instance, the recent HUGO algorithm of Pevný et al. (2010a) applies LSB± to pixels where the change is difficult to detect.

Steganography by modification, together with digital watermarking, form the area of information hiding, which in general aims to hide one piece of data (message) inside another piece of data (cover).

The difference between steganography and watermarking is subtle, but very important. Recall that Cox et al. (2007) defined steganography as undetectably altering a cover. Their definition of watermarking is

the practice of imperceptibly altering a Work to embed a message that about Work.

Two points are worth noting. Firstly, watermarking is used to embed a message about the cover work itself. In steganography the cover is immaterial, and can in fact be created to suit the message, as it is in steganography by cover synthesis. Watermarking refers to a number of different security models, but what they have in common is an adversary seeking to break the connection between the Work and the embedded message. This could mean redistributing copies of the Work without the watermarked message, or forging a new Work with the same watermark.

We should also note the subtle difference between ‘undetectably’ and ‘imperceptibly’. Imperceptibility here means that the watermarked Work should be perceptually indistinguishable from the original Work for a human observer, something which is important when the cover is the asset. With the cover being of no relevance in steganography, there is also no need for imperceptibility in this sense. What we require is that Wendy will not be able to determine whether or not there is a secret message, i.e. undetectability. In watermarking the existence of the message is not necessarily a secret. In some application scenarios, we may even allow Wendy to extract it, as long as she cannot remove it.

Watermarking has been proposed for a number of different applications. Radio commercials have been watermarked for the purpose of broadcast monitoring, i.e. an automated system to check that the commercial is actually broadcast. There have been cases where broadcasters have cheated, and charged advertisers for airings which have not taken place, and broadcast monitoring aims to detect this. Clearly, in this application, the commercial and the watermark must be linked in such a way that the broadcaster cannot air the watermark without the commercial.

Another application is image authentication and self-restoration, which aims to detect, and possibly correct, tampering with the image. This has obvious applications in forensic photography, where it is important to ensure that an image is not modified after being taken. The watermark in authentication systems contains additional information about the image, so that if some sections are modified, the inconsistency is detected. In self-restoration, modified sections can be restored by using information from the watermarks of other sections.

Robust watermarking is used for copyright protection, where the adversary may try to distribute pirate copies of the Work without the watermark. Watermarking is said to be robust if it is impossible or impractical for the adversary to remove it. This is similar to the objective in the active warden scenario, where Wendy is not so much concerned with detecting the message as disabling it or falsifying it. Still, the main distinction between watermarking and steganography applies. In watermarking, the cover is the asset and the message is just a means to protect it. In steganography it is the other way around, and the active warden does not change that. Both cover synthesis and cover selection can be used in active warden steganography.

The difference between watermarking and steganography (by modification) may look minor when one considers the operation of actual systems. In fact, abuse of terminology is widespread in the literature, and some caution is required on the reader's part to avoid confusion. Part of the confusion stems from a number of works assessing the usability of known watermarking systems for steganography. That is a good question to ask, but the conclusion so far has been that watermarking is easy to detect.

In this book, we will strictly follow the terminology and definitions which seem to have been agreed by the most recent literature and in particular by the textbooks of Cox et al. (2007) and Fridrich (2009). The distinctions may be subtle, but should nevertheless be clear by comparing the security criteria and the threats and assets of the respective security models. In steganography, the message is the asset, while the cover is the asset in watermarking. Although many different threats are considered in watermarking, they are all related to the integrity confidentiality, integrity, or availability of the cover.

Steganography, like watermarking, applies to any type of media. The steganogram must be an innocuous document, but this could be text, images, audio files, video files, or anything else that one user might want to send to another.

The vast majority of research in steganography focuses on image steganography, and the imbalance is even greater when we consider steganalysis. The imbalance can also be seen in watermarking, but not to the same extent. There are a number of noteworthy watermarking systems for speech signals (e.g. Hofbauer et al. (2009)) and video files. Part of the reason for this is no doubt that audio and video processing is somewhat more difficult to learn. Research in robust watermarking has also demonstrated that the strong statistical correlation between subsequent frames facilitates attacks which are not possible on still images, and this correlation is likely to be useful also in steganalysis.

There has been work on steganography in text documents, but this stems from other disciplines, like computational linguistics and information theory, rather than the signal- and multimedia-processing community which dominates multimedia steganalysis. Wayner (2002) gives several examples.

Steganalysis refers to any attack mounted by a passive warden in a steganography scenario. It refers to Wendy's analysing the steganogram to obtain various kinds of information about Alice and Bob's correspondence. An active warden would do more than mere analysis and this is outside the scope of this book.

In the prisoners' problem with a passive warden, Wendy's objective is to detect the presence of secret communications. Any evidence that unauthorised communications are taking place is sufficient to apply penalties and would thus cause damage to Alice and Bob. Any additional information that Wendy may obtain is outside the model. The solution to her problem is a basic steganalyser, defined as follows.

Some authors argue that the basic steganalyser is only a partial solution to the problem, and that steganalysis should aim to design message-recovery attacks which are able to output also the secret message. These attacks clearly go beyond the security model of the prisoners' problem. There may very well be situations where Alice and Bob have additional assets which are not damaged by Wendy's learning of the existence of the secret, but would be damaged if the contents became known to the adversary.

There is, however, a second reason to keep message-recovery out of the scope of steganalysis. If Alice and Bob want to protect the contents of the communications even if Wendy is able to break the stego-system, they have a very simple and highly trusted solution at hand. They can encrypt the message before it is embedded, using a standard cryptographic tool, making sure that a successful message-recover attack on the stego-system would only reveal a cipher text. If the encryption is correctly applied, it is computationally infeasible to recover the plain text based on the cipher text. There is no significant disadvantage in encrypting the message before stego-encoding, as the computational cost of encryption is negligible compared to the stego-system. Thus, established cryptographic tools leave no reason to use steganography to protect the confidentiality (or integrity) of the contents of communications, and thus little hope of steganalysis to reveal it.

Of course, this does not mean that message-recovery is not an interesting problem, at least academically, but very little research has yet been reported, so the time for inclusion in a textbook has not yet come. There has been more work on other types of extended steganalysis, aiming to get different kinds of additional information about the contents of the steganogram.

Quantitative steganalysis aims to estimate the length of the secret message. Key-recovery attacks are obviously useful, as knowledge of the key would allow detection and decoding of any subsequent communication using the same key. Extended steganalysis also covers techniques to determine other information about the secret message or the technology used, such as the stego-algorithm which was used.

Most early steganalysis algorithms were based on an analysis of individual known stego-systems, such as LSB in the spatial domain and JSteg. Such algorithms are known as targeted in that they target particular stego-systems and are not expected to work against any other stego-systems. Well-known examples include the pairs-of-values (or χ2) test (Westfeld and Pfitzmann, 2000), sample pairs analysis (Dumitrescu et al., 2002; Fridrich et al., 2001b) and RS steganalysis (Fridrich et al., 2001b). Targeted steganalysers can often be made extremely accurate against the target stego-system.

The opposite of targeted steganalysis is usually called blind steganalysis. We define blind steganalysis as those methods which can detect steganograms created by arbitrary and unknown stego-systems. Blind steganalysis can be achieved with one-class classifiers (cf. Section 11.5.1).

Most steganalysers based on machine learning are neither truly blind nor targeted. We will call these algorithms universal, because the classification algorithm applies universally to many, if not every, stego-system. However, with the exception of one-class classifiers, the classifiers have to be trained on real data including real steganograms. Thus the training process is targeted, while the underlying algorithm is in a sense blind. Some authors consider universal steganalysis to be blind, but we consider this to be misleading when Wendy has to commit to particular stego-systems during training.

Each of blind, universal and targeted steganalysers have important uses. A firm believer in Kerckhoffs' Principle may argue that targeted steganalysis is the right approach, and successful design of a targeted steganalyser renders the target stego-system useless. Indeed, targeted steganalysis is a very useful tool in the security analysis of proposed stego-systems. In practice, however, Wendy rarely knows which algorithm Alice has used, and it may be computationally infeasible for Wendy to run a targeted steganalyser for every conceivable stego-system. It would certainly make her task easier if we can design effective blind steganalysers.

The concept of targeted blind steganalysis has been used by some authors (e.g. Pevný et al., 2009b). It refers to universal steganalysers whose training has been targeted for specific use cases. This need not be limited to a particular stego-system, but could also assume a particular cover source.

Universal steganalysers are limited by the data available for training. If the training data are sufficiently comprehensive, they may, in theory, approach blind steganalysis. However, computational complexity is a serious issue as the training set grows. A serious limitation of steganalysers based on machine learning is that they depend on a range of characteristics of the training set. Taking images as an example, these characteristics include JPEG compression factors, lighting conditions, resolution, and even characteristics of the source camera (sensor noise). The more information Wendy has about possible cover images, the more accurately she can train the steganalyser.

It is useful to distinguish between a steganalytic algorithm or steganalyser, which is a fundamental and atomic technique for steganalysis, and a steganalytic system, which is a practical implementation of one or more algorithms. A common approach to achieve a degree of blindness is to combine multiple steganalytic algorithms to cover a range of stego-algorithms in a complex system. As the individual algorithms are simpler than the system, they can be evaluated more thoroughly, and mathematical theory can be designed. We will return to the implementation issues and complex steganalytic systems in Chapter 14.

Steganalysis ranges from manual scrutiny by experts to automated data analysis. We can distinguish between four known classes of image steganalysis. Ordered by the need for manual action, they are

Visual and structural steganalysis are well suited for manual inspection, and visual steganalysis completely depends on a subjective interpretation of visual data. Statistical and learning steganography, on the other hand, are well suited for automated calculation, although statistical steganalysis will usually have an analytic basis.

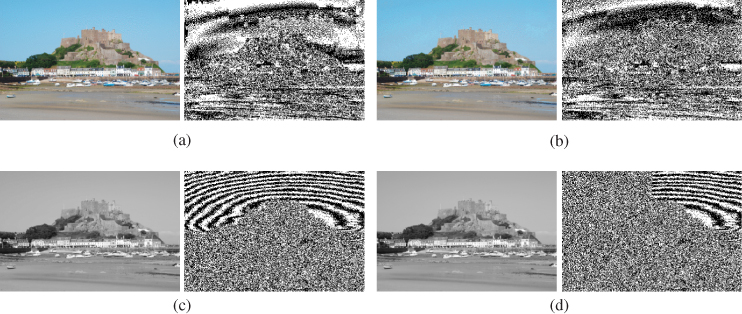

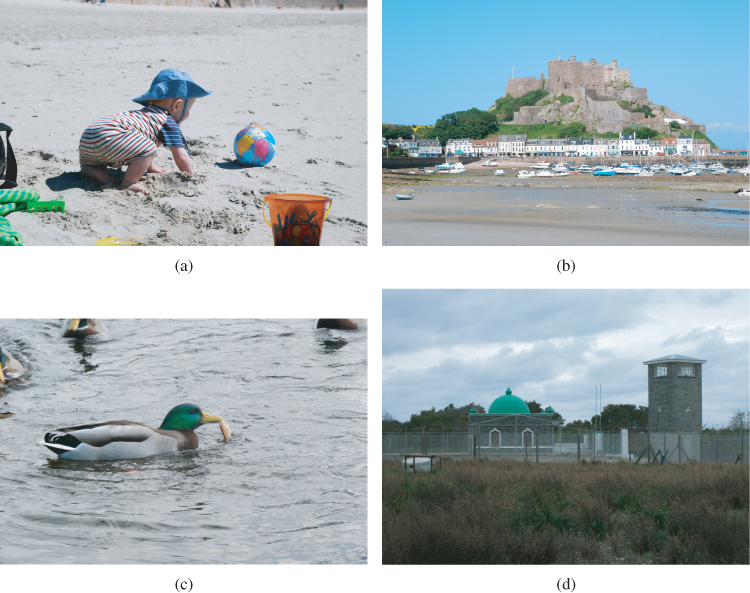

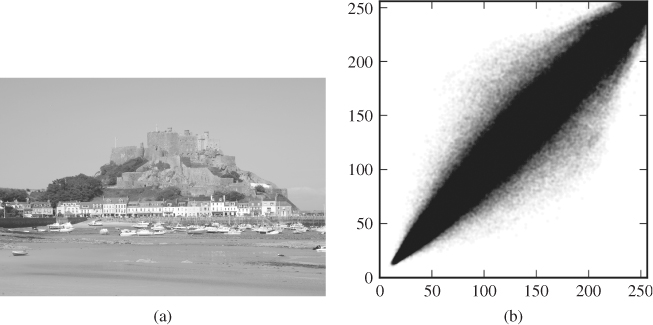

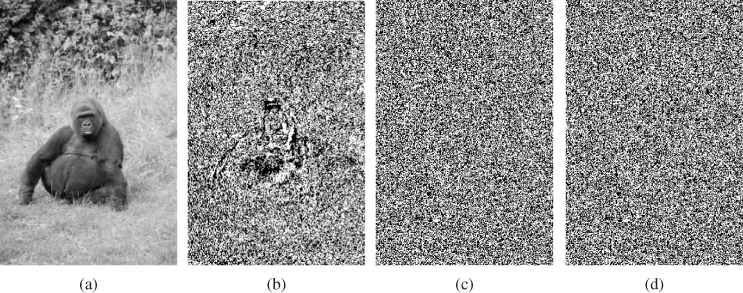

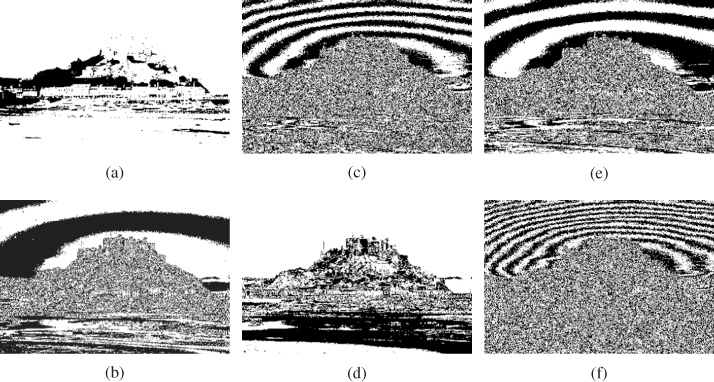

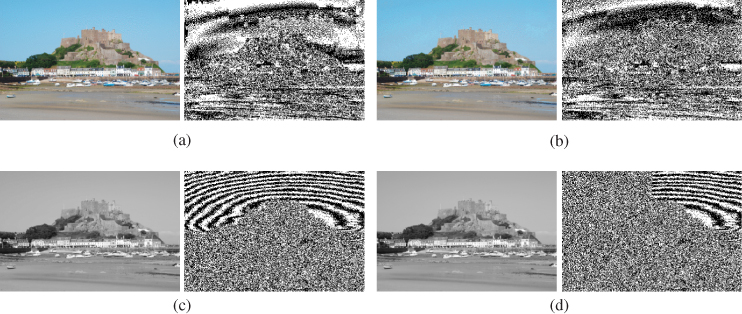

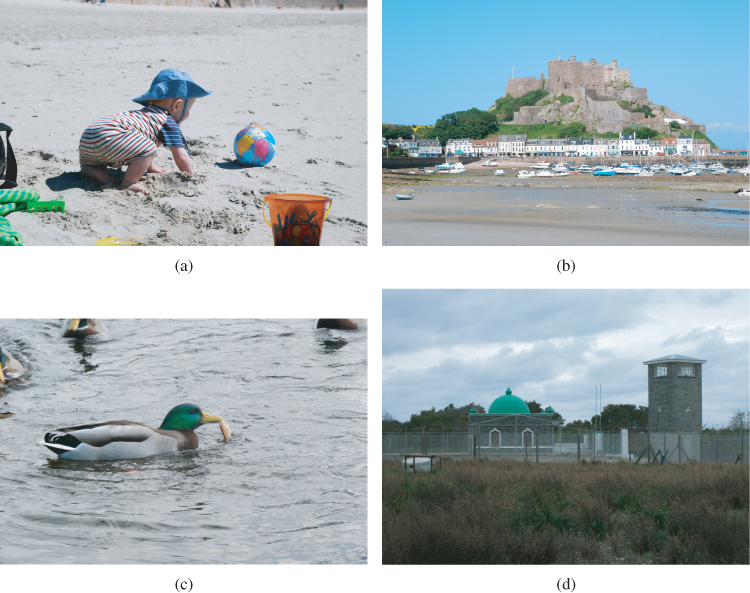

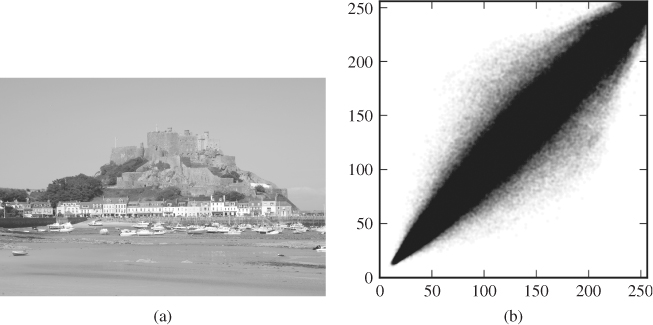

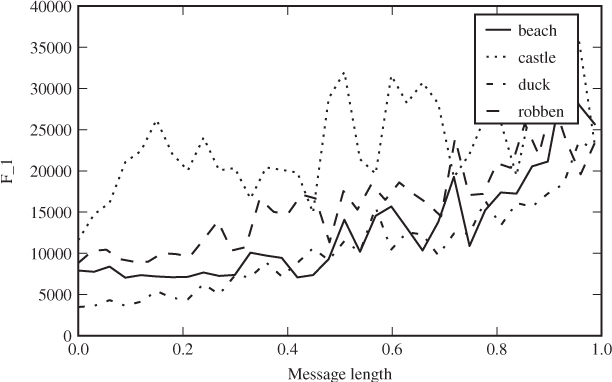

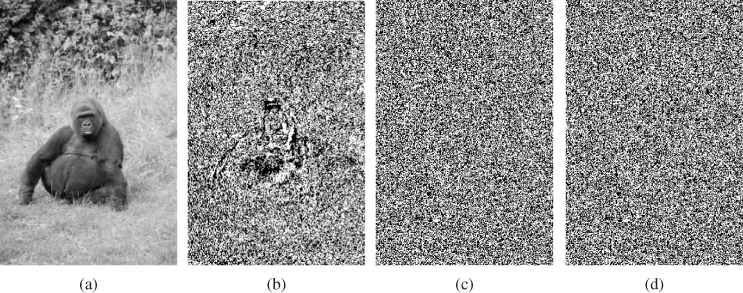

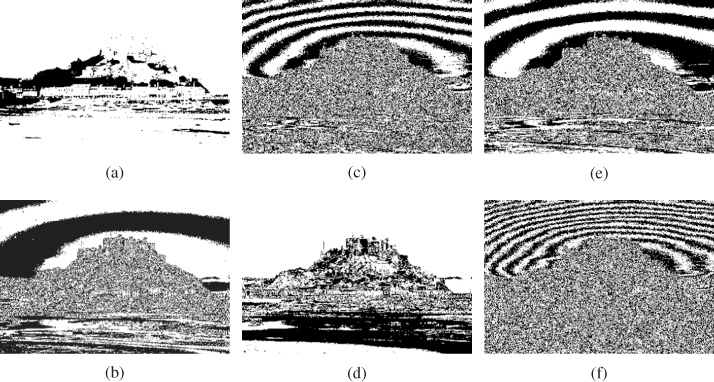

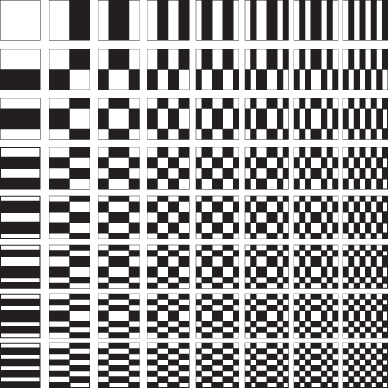

Visual steganalysis is the most manual approach, as Wendy would use her own eyes to look for visual artifacts in the image itself. In addition to inspecting the full uncompressed image, she can also examine transforms of the image. One of the most popular tools for visual inspection is the LSB plane plotted as a two-tone image, as illustrated in Figure 2.4. The source image was captured by a Nikon D80 and stored with the highest resolution and highest compression quality, albeit not in raw format. The image was downsampled by a factor of 10 and converted using ImageMagick convert. Both the 8-bit grey-scale image and the GIF image were obtained directly from the source image. The messages embedded are unbiased, random bit strings. EzStego embeds in random locations. The embedding in the grey-scale image is in consecutive images, and we can see how it clearly covers the left-hand half of the image.

Figure 2.4 Clean images and steganograms with corresponding LSB bit plane: (a) clean GIF image; (b) EzStego steganogram; (c) clean grey-scale image; (d) LSB steganogram. The steganograms have 6772 bytes embedded out of a capacity of 13 312 bytes (photograph of Mont Orgueil, Jersey)

It should be noted that the grey-scale example in Figure 2.4 is not necessarily typical. Most images we have inspected do not display as much visual structure in the LSB plane as this one. However, this structure is described as typical by other authors focusing on palette images, including Westfeld and Pfitzmann (2000) and Wayner (2002).

Visual steganalysis is obviously very flexible, as it can pick up on new and previously unknown artifacts. However, not all cover images exhibit the same visual structure, making the approach very cover-dependent.

Structural steganalysis looks for give-away signs in the media representation or file format. A classic and blatant example is some of the software packages of the 1990s, which inserted the name of the stego software in the comment field of JFIF (JPEG) files. The variations in how the standard is implemented may also give room for forensic analysis, tracing a given file back to particular software packages including stego-embedders. The variations may include both bugs and legitimate design choices.

Vulnerabilities to structural attacks include artificial constraints, like only allowing images of 320 × 480 pixels as Hide and Seek version 4.1 did (Wayner, 2002). Although this does not in itself prove anything, it gives a valuable clue as to what other artifacts may be worth investigating. The colour palette, for instance in GIF images, is a source of many other vulnerabilities. Many steganography systems reorder the palette to facilitate simple, imperceptible embedding. S-Tools is an extreme example of this, as it first creates a small, but optimal 32-colour palette, and then adds, for each colour, another seven near-identical colours differing in only one bit (Wayner, 2002). Any palette image where the colours come in such clusters of eight is quite likely to be a steganogram.

Another structural attack is the JPEG compatibility attack of Fridrich et al. (2001a). This attack targets embedding in pixmap images where the cover image has previously been JPEG compressed. Because JPEG is lossy compression, not every possible pixmap array will be a possible output of a given JPEG decompression implementation. The attack quite simply checks if the image is a possible decompression of JPEG, and if it isn't it has to be due to some kind of post-compression distortion which is assumed to be steganographic embedding.

Structural steganalysis is important because it demonstrates how vulnerable quick and dirty steganography software can be. In contrast, a steganographer can easily prevent these attacks by designing the software to avoid any artificial constraints and mimic a chosen common-place piece of software. It is possible to automate the detection of known structural artifacts, but such attacks become very targeted. An experienced analyst may be able to pick up a wider range of artifacts through manual scrutiny.

Statistical steganalysis uses methods from statistics to detect steganography, and they require a statistical model, describing the probability distribution of steganograms and/or covers theoretically. The model is usually developed analytically, at least in part, requiring some manual analysis of relevant covers and stego-systems. Once the model is defined, all the necessary calculations to analyse an actual image are determined, and the test can be automated.

A basic steganalyser would typically use statistical hypothesis testing, to determine if a suspected file is a plausible cover or a plausible steganogram. A quantitative steganalyser could use parameter estimation to estimate the length of the embedded message. Both hypothesis testing and parameter estimation are standard techniques in statistics, and the challenge is the development of good statistical models.

Statistical modelling of covers has proved to be very difficult, but it is often possible to make good statistical models of steganograms from a given stego-system, and this allows targeted steganalysers.

In practice it is very often difficult to obtain a statistical model analytically. Being the most computerised class of methods, learning steganalysis overcomes the analytic challenge by obtaining an empirical model through brute-force data analysis. The solutions come from areas known as pattern recognition, machine learning, or artificial intelligence.

Most of the recent research on steganalysis has been based on machine learning, and this is the topic of the present book. The machine is presented with a large amount of sample data called a training set, and is started to estimate a model which can later be used for classification. There is a grey area between statistical and learning techniques, as methods can combine partial analytic models with computer-estimated models of raw data. Defining our scope as learning steganalysis therefore does not exclude statistical methods. It just means that we are interested in methods where the data are too complex or too numerous to allow a transparent, analytic model. Machine learning may come in lieu of or in addition to statistics.

A pioneer in statistical steganalysis is the pairs-of-values (PoV) attack which was introduced by Westfeld and Pfitzmann (2000). It may be more commonly known as the χ2 attack, but this is somewhat ambiguous as it refers to the general statistical technique for hypothesis testing which is used in the attack. The attack was designed to detect LSB embedding, based on the image histogram.

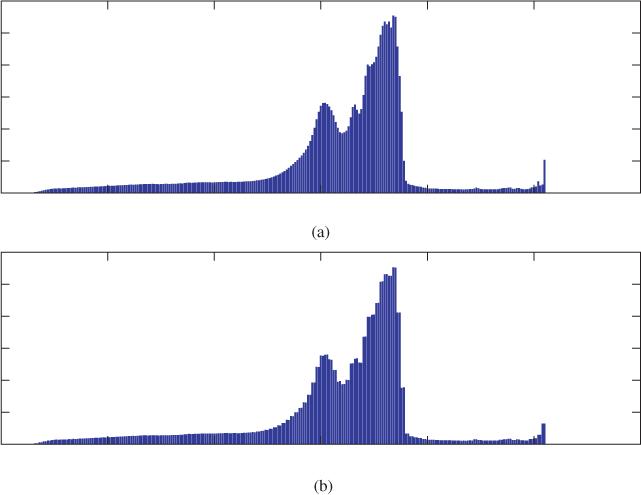

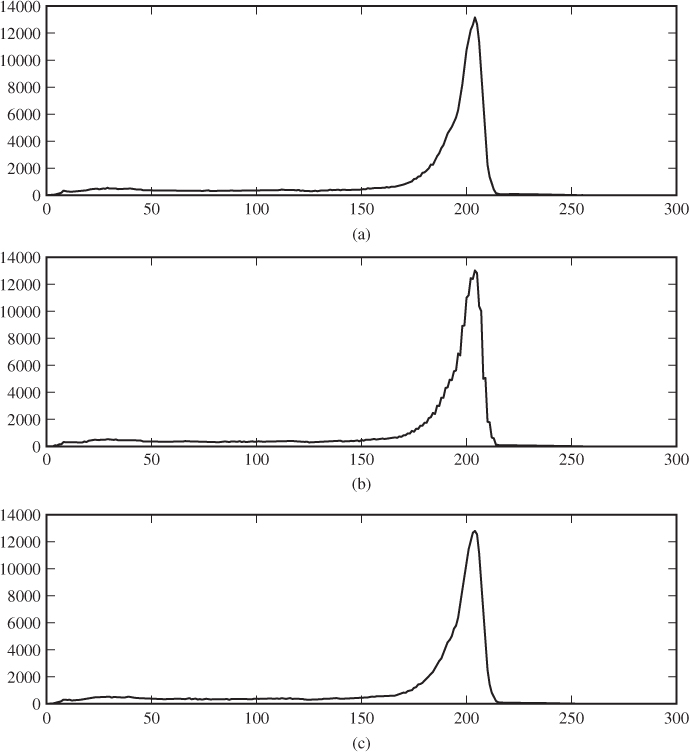

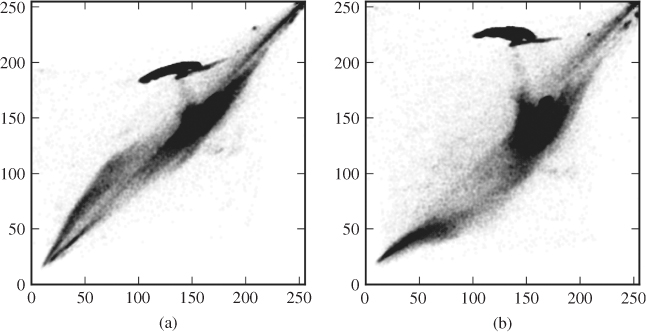

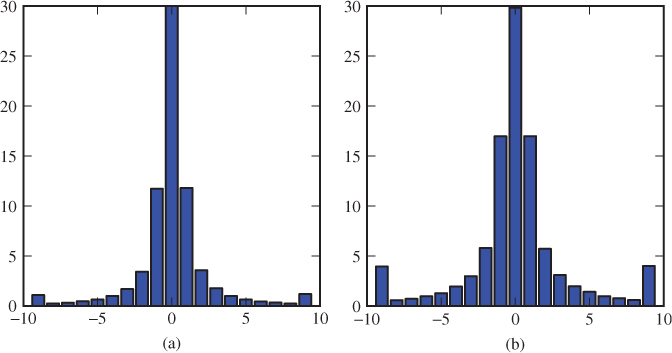

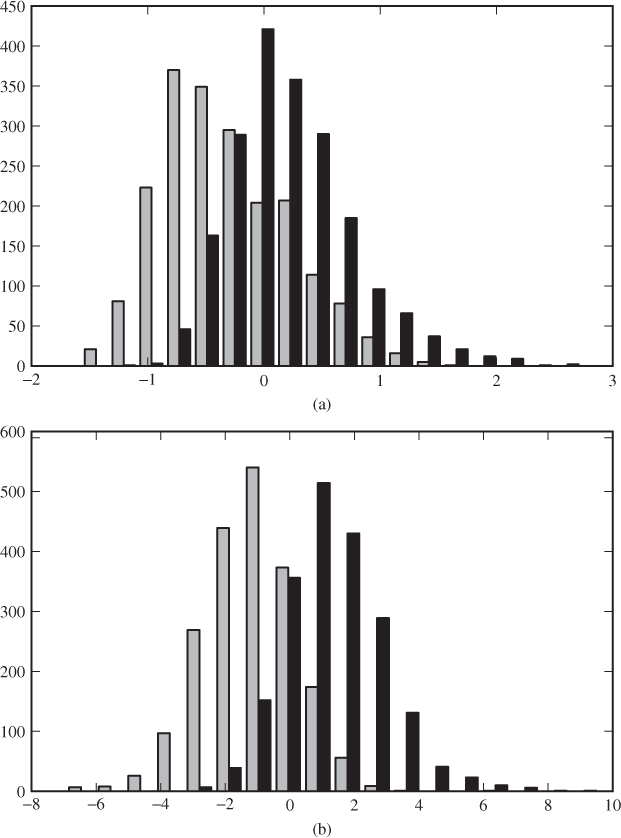

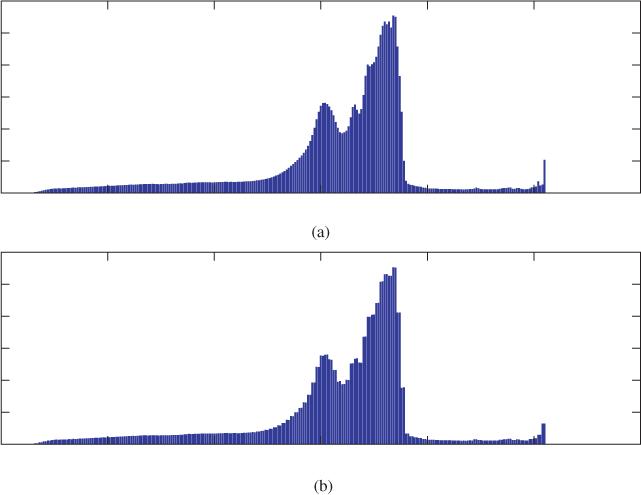

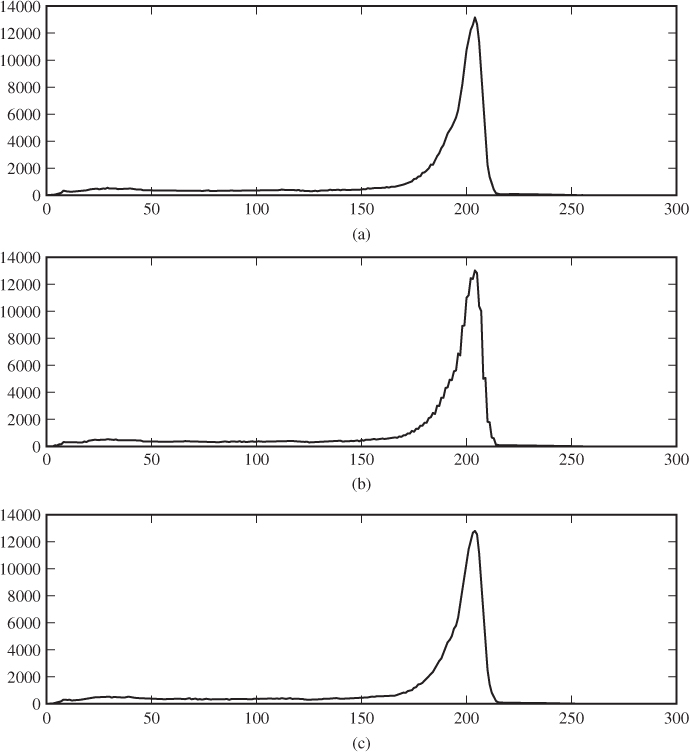

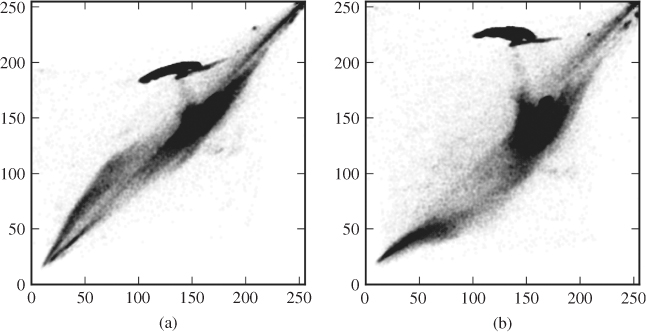

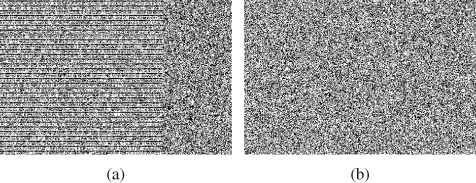

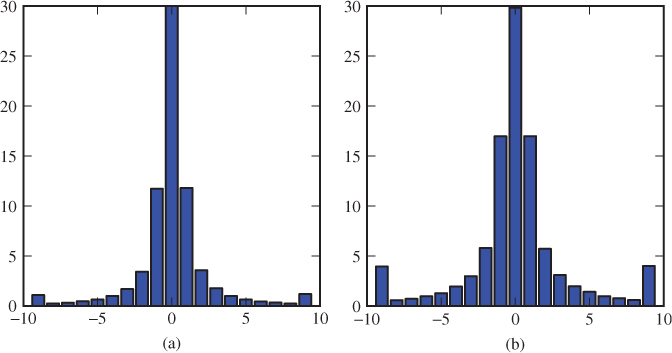

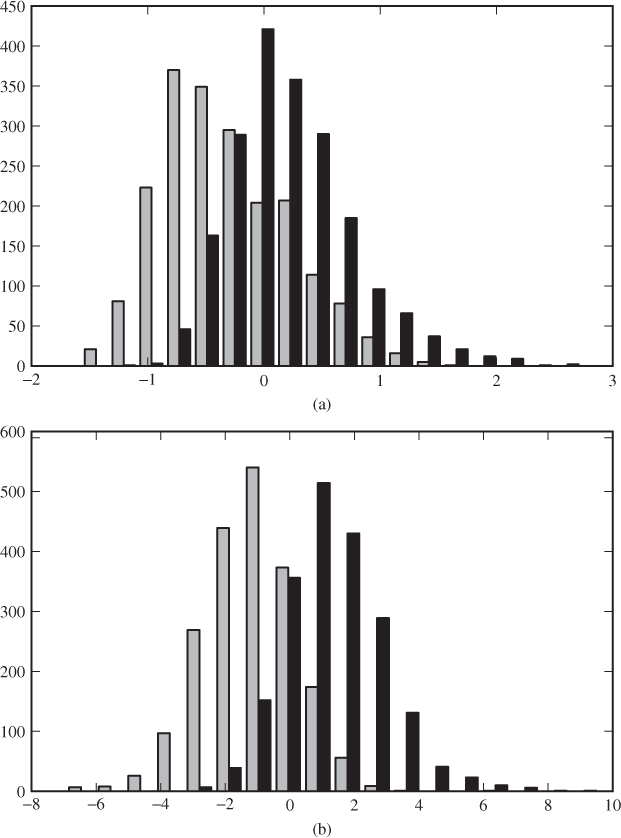

To understand pairs-of-values, it is instructive to look at the histograms of a cover image and a steganogram where the cover image has been embedded with LSB embedding at full capacity, i.e. one bit per pixel. An example, taken from 8-bit grey-scale images, is shown in Figure 2.5. The histogram counts the number of pixels for each grey-scale value. Note that the histogram of the steganogram pre-dominantly has pairs of bars at roughly equal height.

Figure 2.5 Histograms of grey-scale images: (a) clean image; (b) steganogram

If we look at what happens in LSB embedding, we can explain these pairings. A pixel with value 2i in the cover image can either remain at 2i or change to 2i + 1. A pixel of value 2i + 1 can become either 2i or 2i + 1. Thus (2i, 2i + 1) forms a pair of values for each i = 0, 1, … , 127. LSB embedding can change pixel values within a pair, but cannot change them to values from a different pair. The embedded message seems to have an even distribution of 0 and 1, which is to be expected in a random or encrypted message, and this results in an approximately even distribution of 2i and 2i + 1 for each i in the steganogram.

We can use statistical hypothesis testing to check if a suspected image is a plausible steganogram, by checking if the frequencies of 2i and 2i + 1 are plausible under an even probability distribution. The pairs-of-values attack uses the χ2 test to do this. Let hm be the number of pixels of intensity m. In a steganogram (at full capacity) we expect an even distribution between the two values in a pair, thus E(h2l) = (h2l + h2l+1)/2. Following the standard framework for a χ2 test (see e.g. Bhattacharyya and Johnson (1977)), we get the statistic

2.1

Consulting the χ2 distribution, we can calculate the corresponding p-value.

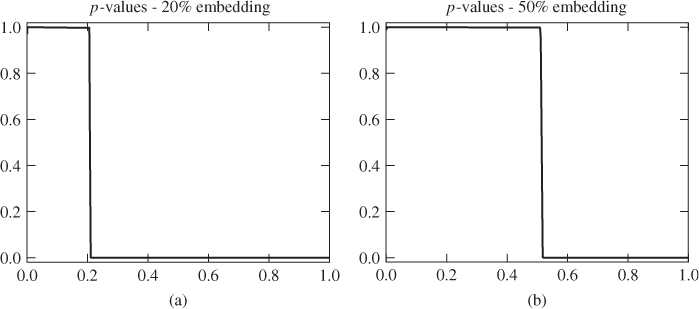

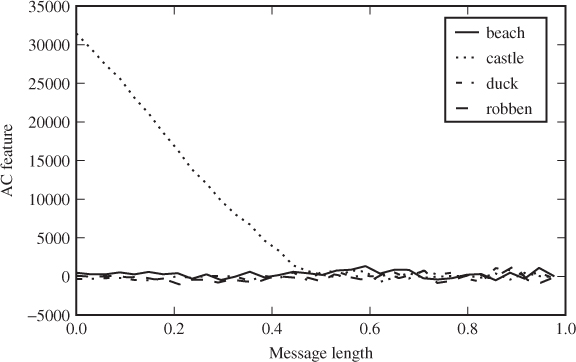

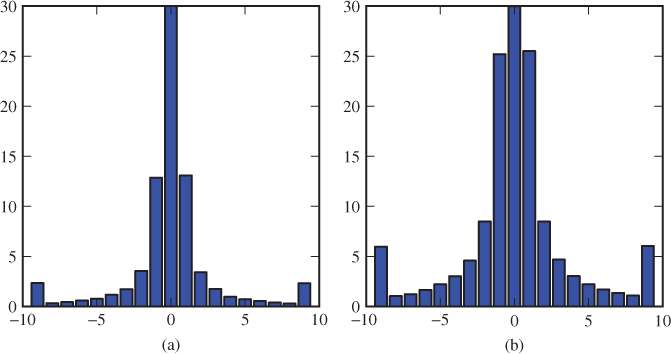

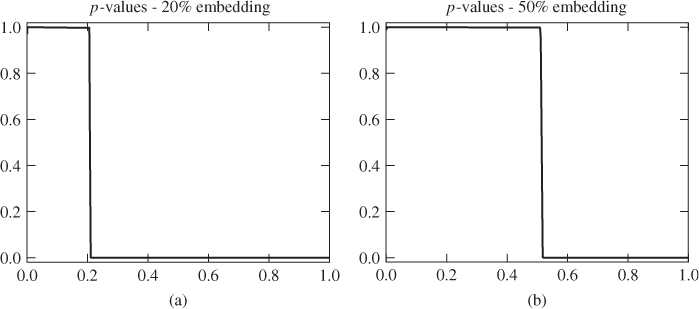

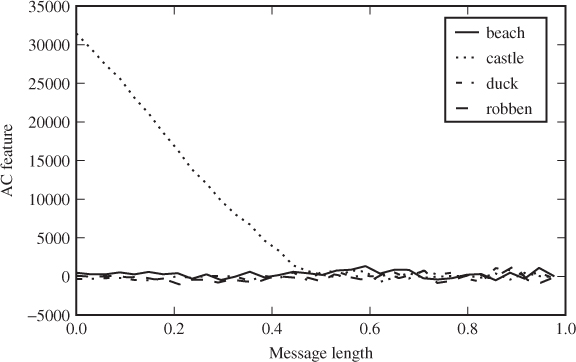

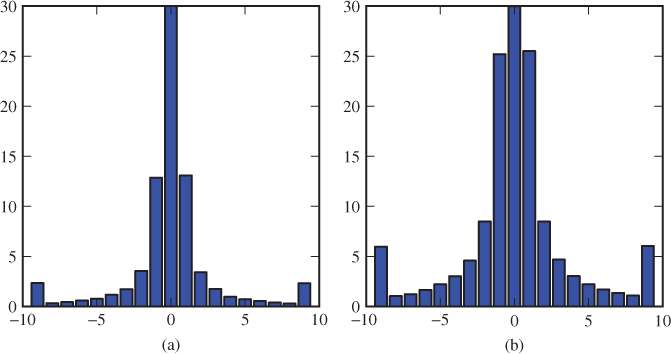

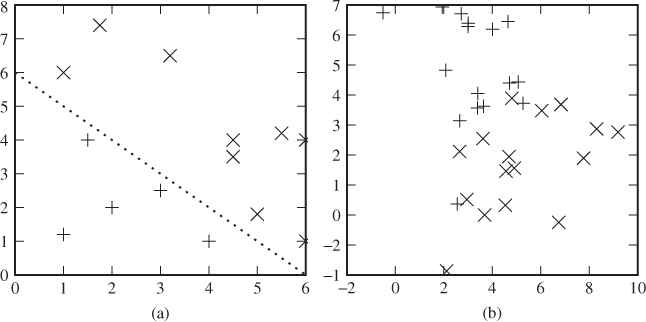

The pairs-of-values test was first designed for consecutive LSB embedding. Then we can perform the test considering only the first N pixels of the image. In Figure 2.6, we have plotted the p-value for different values of N. The p-value is the probability of seeing the observed or a larger value of  assuming that the image is a steganogram. We can easily see that when we only consider the pixels used for embedding, we get a large p-value indicating a steganogram. When we start considering more pixels than those used for embedding, the p-value drops quickly. Thus we can use the plot to estimate the message length.

assuming that the image is a steganogram. We can easily see that when we only consider the pixels used for embedding, we get a large p-value indicating a steganogram. When we start considering more pixels than those used for embedding, the p-value drops quickly. Thus we can use the plot to estimate the message length.

Figure 2.6 Plots of the p-values for a χ2 test: (a) 20% embedding; (b) 50% embedding

The basic form of the χ2 attack is only effective against LSB replacement in consecutive pixels. However, the idea has been extended several times to attack other stego-systems and their variations. Very early on, Provos and Honeyman (2001) introduced the extended χ2 attack to attack embedding in random locations. Later, Lee et al. (2006, 2007) created the category attack which is very effective against embedding in the JPEG domain.

We have summarised the wide variety of techniques and approaches in steganography and steganalysis, and it is time to define our scope. Some topics are excluded because they do not currently appear to be sufficiently mature, others have been excluded because they have been covered by other textbooks. In the rest of this book, steganography will refer to image steganography using cover modification in the passive warden model. Alice, Bob, and Wendy will remain our main characters, to personify respectively the sender, the receiver and the steganalyst.

Fridrich (2009) gives a comprehensive introduction to steganography. We will focus on steganalysis using machine learning in this steganography model only. Some statistical and visual steganalysis methods will be presented as examples, and a somewhat wider overview can be found in Fridrich (2009). For the most part we will limit the discussion to basic steganalysis. When we investigate extended steganalysis later in the book, we will use appropriately qualified terms.

Chapter 3

Getting Started with a Classifier

In Part II of the book, we will survey a wide range of feature vectors proposed for steganalysis. An in-depth study of machine learning techniques and classifiers will come in Part III. However, before we enter Part II, we need a simple framework to test the feature vectors. Therefore, this chapter starts with a quick overview of the classification problem and continues with a hands-on tutorial.

A classifier is any function or algorithm mapping objects to classes. Objects are drawn from a population which is divided into disjoint classes, identified by class labels. For example, the objects can be images, and the population of all possible images is divided into a class of steganograms and a class of clean images. The steganalytic classifier, or steganalyser, could then take images as input, and output either ‘stego’ or ‘clean’.

A class represents some property of interest in the object. Every object is a member of one (and only one) class, which we call its true class, and it is represented by the true label. An ideal classifier would return the true class for any object input. However, it is not always possible to determine the true class by observing the object, and most of the time we have to settle for something less than ideal. The classifier output is often called the predicted class (or predicted label) of the object. If the predicted class matches the true class, we have correct classification. Otherwise, we have a classification error.

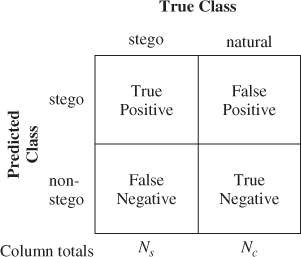

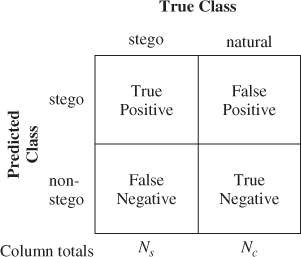

Very often, one class represents some specific property and we aim to detect the absence or presence of this property. The presence is then considered as ‘positive’ and absence as ‘negative’. It is therefore natural to speak of two different error types. A positive prediction for a negative object is called a false positive, and conversely, a negative prediction for a positive object is a false negative. Very often, one error type is more serious than the other, and we may need to assess the two error probabilities separately.

Steganalysis aims to detect the presence of a hidden message, and thus steganograms are considered positive. Falsely accusing the correspondents, Alice and Bob, of using steganography is called a false positive. Failing to detect Alice and Bob's secret communication is called a false negative. Accusing Alice and Bob when they are innocent (false positive) is typically considered to be a more serious error than a false negative.

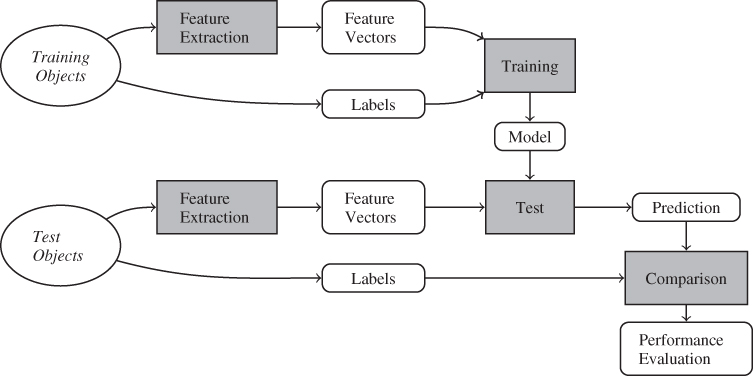

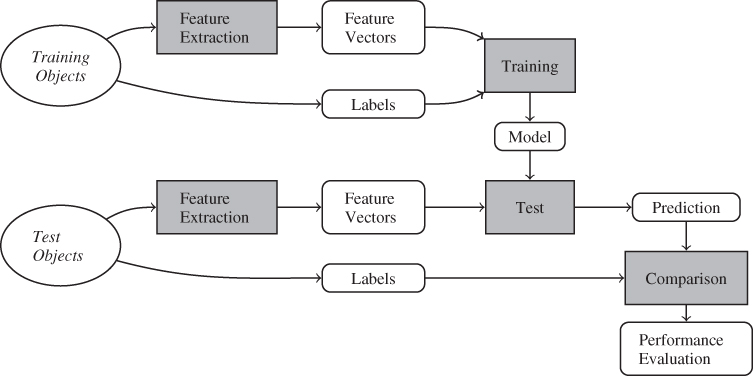

A typical classifier system is depicted in Figure 3.1. It consists of a training phase, where the machine learns, and a test phase, where the learning is practised. In the centre is the model, which represents the knowledge acquired during training. The model is output in the training phase and input in the test phase.

Figure 3.1 A learning classifier system. The grey boxes represent operations or algorithms, while the white boxes represent data

It is rarely feasible for the classifier to consider the object as a whole. Instead, a feature vector is extracted to represent the object in the classifier. Each feature is (usually) a floating point value, and it can be any kind of measure taken from the object. Selecting a good feature vector is the main challenge when machine learning is applied to real problems. Obviously, the same method of feature extraction must be used in training and in testing.

Before the classifier system can actually classify objects, it has to be trained on some training set. The figure depicts supervised learning, which means that the training algorithm receives both the feature vector and the true class label for each training object.

Testing is somewhat ambiguous. In practical use, we want to use the system to test objects in order to determine their class. In this case we have an object with unknown class, and its feature vector is input to the system, together with the model. The classifier outputs a class prediction, which we assume is its class.

Before actual use, it is necessary to evaluate the classifier to estimate how accurately it will classify objects. This evaluation is also commonly known as the test phase. When we test the classifier, we need a set of test objects with known labels, but the labels are not made available to the classifier. These objects are tested as described above and the resulting predictions are compared with the known labels, and the number of errors are counted. This allows us to calculate error rates and other performance measures.

When we review feature vectors for steganalysis in Part II, we will use one simple performance heuristic, namely the accuracy, which is defined as the rate of correct classifications during testing, or equivalently

3.1

where FP and FN are the number of false positives and false negatives in the test respectively, and TP and TN are the total number of positives and negatives. Obviously, the error rate is  .

.

Normally, we expect the accuracy to be in the range  . A classifier which randomly outputs ‘stego’ or ‘clean’ with 50/50 probability, that is a coin toss, would get

. A classifier which randomly outputs ‘stego’ or ‘clean’ with 50/50 probability, that is a coin toss, would get  on average. If we have a classifier C with

on average. If we have a classifier C with  , we can design another classifier C′ which outputs ‘stego’ whenever C says ‘clean’ and vice versa. Then C′ would get accuracy

, we can design another classifier C′ which outputs ‘stego’ whenever C says ‘clean’ and vice versa. Then C′ would get accuracy  . It is often said that a classifier with

. It is often said that a classifier with  has good information but applies it incorrectly.

has good information but applies it incorrectly.

Accuracy is not the only performance measure we could use, nor is it necessarily the best one. Accuracy has the advantages of being simple to use and popular in the steganalysis literature. A problem with accuracy is that it depends on the class skew, that is the relative size of the classes. For example, consider a classifier which always outputs a negative. Clearly, this would not be a useful algorithm, and if there is no class skew, that is TP = TN, then  . However, if the positive class is only 1% of the population, i.e. TN = 99TP, then we would suddenly get 99%accuracy with the very same classifier.

. However, if the positive class is only 1% of the population, i.e. TN = 99TP, then we would suddenly get 99%accuracy with the very same classifier.

The accuracy is worthless as a measure without knowledge of the class skew. When we quote accuracy in this book, we will assume that there is no class skew (TP = TN), unless we very explicitly say otherwise. We will explore other performance measures in Chapter 10.

The accuracy  as defined above is an empirical measure. It describes the outcome of an experiment, and not the properties of the classifier. Ideally, we are interested in the true accuracy A, which is defined as the probability of correct classification, but this, alas, would require us to test every possible image from a virtually infinite universe. Clearly impossible.

as defined above is an empirical measure. It describes the outcome of an experiment, and not the properties of the classifier. Ideally, we are interested in the true accuracy A, which is defined as the probability of correct classification, but this, alas, would require us to test every possible image from a virtually infinite universe. Clearly impossible.

The empirical accuracy  is an estimator for the true accuracy A. Simply put, it should give us a good indication of the value of A. To make a useful assessment of a classifier, however, it is not sufficient to have an estimate of the accuracy. One also needs some information about the quality of the estimate. How likely is it that the estimate is far from the true value?

is an estimator for the true accuracy A. Simply put, it should give us a good indication of the value of A. To make a useful assessment of a classifier, however, it is not sufficient to have an estimate of the accuracy. One also needs some information about the quality of the estimate. How likely is it that the estimate is far from the true value?

We will discuss this question in more depth in Chapter 10. For now, we shall establish some basic guidance to help us through Part II. An estimator  for some unknown parameter θ is a stochastic variable, with some probability distribution. It has a mean

for some unknown parameter θ is a stochastic variable, with some probability distribution. It has a mean  and a variance

and a variance  . If

. If  , we call

, we call  an unbiased estimator of θ. In most cases, it is preferable to use the unbiased estimator with the lowest possible variance.

an unbiased estimator of θ. In most cases, it is preferable to use the unbiased estimator with the lowest possible variance.

The standard deviation  is known as the standard error of the estimator, and it is used to assess the likely estimation error. We know from statistics that if

is known as the standard error of the estimator, and it is used to assess the likely estimation error. We know from statistics that if  is normally distributed, a 95.4% error bound is given as

is normally distributed, a 95.4% error bound is given as  . This means that, prior to running the experiment, we have a 95.4% chance of getting an estimation error

. This means that, prior to running the experiment, we have a 95.4% chance of getting an estimation error

In practice, σ is unknown, and we will have to estimate the standard error as well.

The test phase is a series of Bernoulli trials. The test of one image is one trial which produces either correct classification, with probability A, or a classification error, with probability 1 − A. The number of correct classifications in the test phase is binomially distributed, and we denote it as C ∼ B(A, N). Then  , and it follows that the expected value is

, and it follows that the expected value is

3.2

showing that  is an unbiased estimator for A. We know that the variance of

is an unbiased estimator for A. We know that the variance of  is A(1 − A)/N, and the customary estimator for the standard error is

is A(1 − A)/N, and the customary estimator for the standard error is

3.3

By the normal approximation of the binomial distribution, we have approximately a 95.4% chance of getting an estimation error less than ϵ prior to running the experiment, where

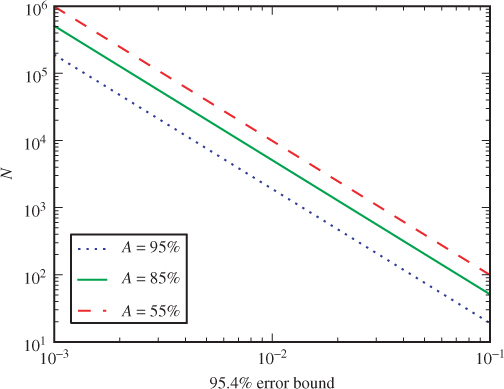

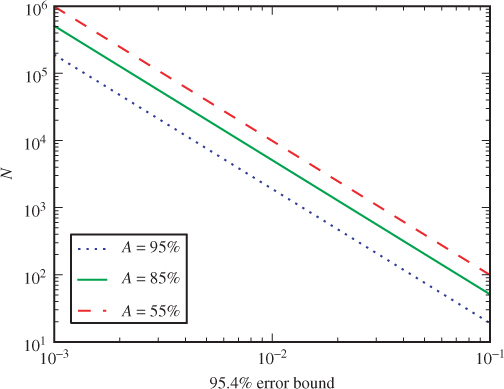

Note the dependency on the number of test images N. If we want to tighten the error bound, the obvious approach is to increase N. This dependency is illustrated in Figure 3.2.

Figure 3.2 The number of test samples required to obtain a given 95.4% error margin

It is useful to look at the plot to decide on a test set size which gives a satisfactory error bound. In Part II we have used a test set of 4000 images (2000 for each class). When estimating accuracies around 85% this gives an error bound of ± 0.011. In other words, almost 5% of the time the estimate is off by more than 1.1 percentage points. This is important when comparing feature vectors. If the estimated accuracies don't differ by more than 1.1 percentage points or so, then we should consider them approximately equal. Or if we really want to show that one method is better than the other, we could rerun the test with a larger test set, assuming that a larger test set is available.

The error bound is somewhat smaller for higher accuracies, but this is deceptive. First note that the classification error probabilities Eclass = 1 − A is as important as the accuracy A. When studying classification error probabilities of a few percent, or equivalently, accuracies close to 100%, a difference of one percentage point is much more significant than it is for accuracies less than 90%. Focusing on ϵ, the relative error bound, that is

is larger for small error probabilities.

For example, suppose you have a method with an estimated accuracy  and an error bound of 0.011. Then introduce a new method, which we think has a better accuracy. How can you make a convincing case to argue that it is better? If we were studying accuracies around 85%, it would be quite conceivable that a better method could improve the estimated accuracy by more than the error bound, but starting at 99% even 100% is within the error bound.

and an error bound of 0.011. Then introduce a new method, which we think has a better accuracy. How can you make a convincing case to argue that it is better? If we were studying accuracies around 85%, it would be quite conceivable that a better method could improve the estimated accuracy by more than the error bound, but starting at 99% even 100% is within the error bound.

It is useful to design the experiments so that the accuracies are not too close to 1. In steganalysis, this probably means that we do not want to embed very long messages, as shorter messages are harder to detect. By thus lowering the accuracy, different methods and feature vectors become more distinguishable. A common rule of thumb, in some areas of simulation and error probability estimation, is to design the experiment in such a way that we get some fixed number r of error events (classification errors in our case); r = 100 errors is common. This is motivated by the fact that the standard error on the estimate of logEclass is roughly linear in r and independent of N (MacKay, 2003, p. 463). Applying this rule of thumb, it is obvious that an increase in A requires an increase in the test set size N.

There is a second reason to be wary of accuracies close to 1. We used the normal approximation for the binomial approximation when estimating the error bound, and this approximation is only valid when A is not too close to 1 or 0, and N is sufficiently large. When the 95.4% error bound does not bound A away from 1, or in other words when  , it may well be a sign that we are moving too close to 1 for the given size of test set N. Such estimates of accuracy should be taken with a big pinch of salt.

, it may well be a sign that we are moving too close to 1 for the given size of test set N. Such estimates of accuracy should be taken with a big pinch of salt.

Support vector machines (SVM) were introduced by Boser et al. (1992) and Cortes and Vapnik (1995), and quickly became very popular as a classifier. Many implementations of SVM are available, and it is easy to get started as an SVM user. The libSVM package of Chang and Lin (2001) is a good choice, including both command line tools and APIs for most of the popular programming languages, such as C, Python and Matlab. For the initial experiments throughout Part II, we will follow the recommendations of Hsu et al. (2003).

The first task is to compile training and test sets. For each object, we have a class label l, usually l ∈ {0, 1}, and a feature vector (f1, f2, … , fn). Using libSVM on the command line, it takes plain text files as input. Each line represents one object and can look, for instance, like this

1 1:0.65 3:0.1 4:-9.2 10:2

which is interpreted as

and fi = 0 for any other i. The first number is the class label. The remaining, space-separated, elements contain the feature number, or coordinate index, before the colon and the feature itself after the colon. Zero features may be omitted, something which is useful if the data are sparse, with few non-zero features.

To get started, we must create one such file with training data and one containing test data. In the sequel, we assume they exist and are called train.txt and test.txt, respectively. In the simplest form, we can train and test libSVM using the following commands:

$ svm-train train.txt * $ svm-predict test.txt train.txt.model predict1.txt Accuracy = 50.2959% (170/338) (classification)

This uses radial basis functions (RBF) as the default kernel. We will not discuss the choice of kernel until Chapter 11; the default kernel is usually a good choice. However, as we can see from the accuracy of ≈ 50%, some choice must be bad, and we need some tricks to improve this.

A potential problem with SVM, and many other machine learning algorithms, is that they are sensitive to scaling of the features. Features with a large range will tend to dominate features with a much smaller range. Therefore, it is advisable to scale the features so that they have the same range. There is a libSVM tool to do this, as follows:

$ svm-scale -l -1 -u +1 -s range train.txt > train-scale.txt $ svm-scale -r range test.txt > test-scale.txt $ svm-train train-scale.txt * [...] $ svm-predict test-scale.txt train-scale.txt.model predict2.txt Accuracy = 54.7337% (185/338) (classification)

The first command will calculate a linear scaling function f′ = af + b for each coordinate position, and use it to scale the features to the range specified by the -l and -u options. The scaling functions are stored in the file range and the scaled features in train-scale.txt. The second command uses the parameters from the range file to scale the test set.

The last two commands are just as before, to train and test the classifier. In this case, scaling seems to improve the accuracy, but it is still far from satisfactory.

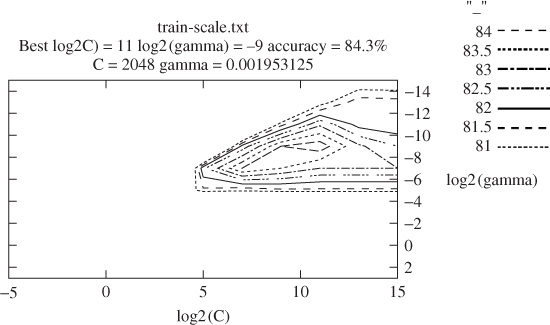

Choosing the right parameters for SVM is non-trivial, and there is no simple theory to aid that choice. Hsu et al. (2003) recommend a so-called grid search to identify the SVM parameter C and kernel parameter γ. This is done by choosing a grid of trial values for (γ, C) in the 2-D space, and testing the classifier for each and every parameter choice on this grid. Because the expected accuracy is continuous in the parameters, the trial values with the best accuracy will be close to optimal.

The grid search is part of the training process, and thus only the training set is available. In order to test the classifier on the grid points, we need to use only a part of the training set for training, reserving some objects for testing. Unless the training set is generous, this may leave us short of objects and make the search erratic. A possible solution to this problem is cross-validation.

The idea of cross-validation is to run the training/testing cycle several times, each time with a different partitioning into a training set and a test set. Averaging the accuracies from all the tests will give a larger sample and hence a more precise estimate of the true accuracy. Each object is used exactly once for testing, and when it is used for testing it is excluded from training.

In n-fold cross-validation, we split the set into n equal-sized sets and run n repetitions. For each round we reserve one set for testing and use the remaining objects for training. The cross-validation estimate of accuracy is the average of the accuracies for the n tests.

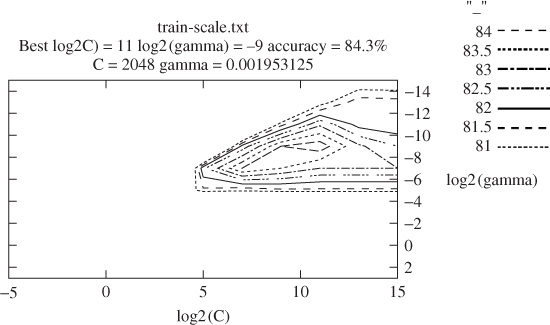

The libSVM package includes a script to perform grid search. Both C and γ are searched on a logarithmic scale, and to save time, a coarse search grid is used first to identify interesting areas before a finer grid is applied on a smaller range. The grid search may seem overly banal and expensive, but it is simple and effective. Computational time may be saved by employing more advanced search techniques, but with only two parameters (C and γ) to tune, the grid search is usually affordable. The grid search can be visualised as the contour plot in Figure 3.3, which has been generated by the grid search script. Each contour represents one achieved accuracy in the (C, y) space.

Figure 3.3 Contour plot of the cross-validation results from the grid search. The grid.py script was slightly modified to make the contour plot more legible in print

The grid search script can be used as follows:

$ grid.py train-scale.txt [local] 5 -7 82.4 (best c=32.0, g=0.0078125, rate=82.4) [...] [local] 13 -3 74.8 (best c=2048.0, g=0.001953125, rate=84.3) 2048.0 0.001953125 84.3